Deep Learning Approaches to Source Code Analysis for Optimization of Heterogeneous Systems: Recent Results, Challenges and Opportunities

Abstract

1. Introduction

- RQ1: How, historically, have learning techniques been applied to source code?

- RQ2: How have machine learning techniques been applied to cyber-physical systems and, in particular, to heterogeneous device mapping?

- RQ3: How do the machine learning methods analyse source code in heterogeneous device mapping, and what results have been obtained?

2. Research Timeline

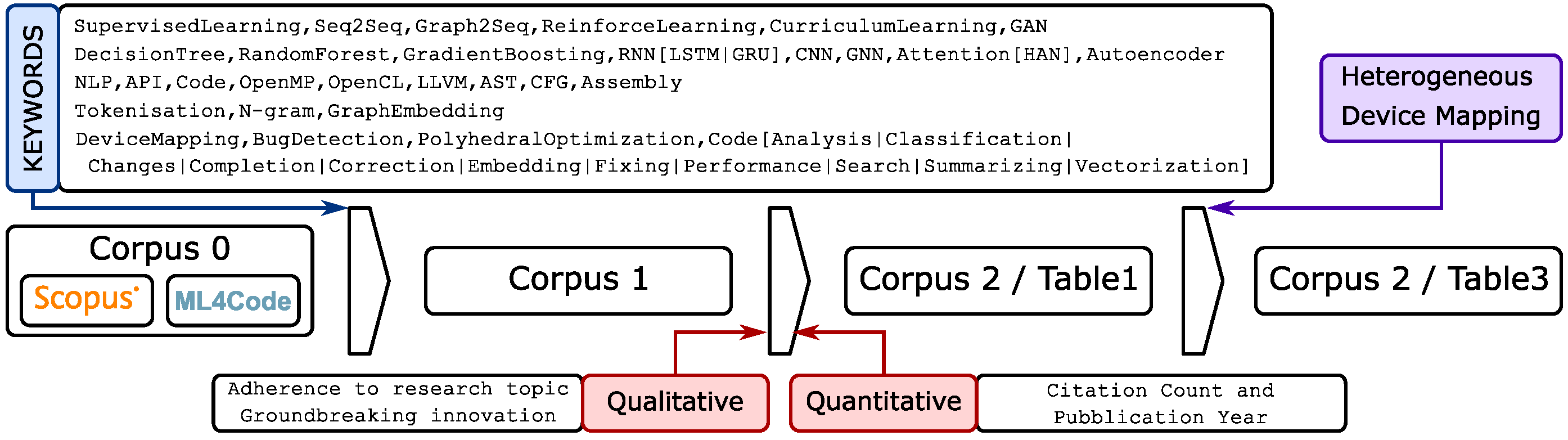

2.1. Selection Criteria

2.2. Relevant Related Surveys

Is it [source code, author’s note] driven by the “language instinct”? Do we program as we speak? Is our code largely simple, repetitive, and predictable? Is code natural? … Programming languages, in theory, are complex, flexible and powerful, but, “natural” programs, the ones that real people actually write, are mostly simple and rather repetitive; thus they have usefully predictable statistical properties that can be captured in statistical language models and leveraged for software engineering tasks [11].

Software is a form of human communication; software corpora have similar statistical properties to natural language corpora; and these properties can be exploited to build better software engineering tools [6].
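The predictability described in these quotes is usually measured with the kind of n-gram language model used in [11]. The sketch below is a minimal bigram model with add-one smoothing, not the model from [11]; the toy corpus of tokenised C loop headers and the function names `train_bigram` and `cross_entropy` are illustrative assumptions. It reports cross-entropy in bits per token, where a lower value means the model finds the sequence more predictable.

```python
import math
from collections import Counter


def train_bigram(token_seqs):
    """Count context unigrams and bigrams over sequences padded with <s> and </s>."""
    unigrams, bigrams = Counter(), Counter()
    for seq in token_seqs:
        padded = ["<s>"] + seq + ["</s>"]
        unigrams.update(padded[:-1])                  # every token that acts as a context
        bigrams.update(zip(padded[:-1], padded[1:]))  # (previous, current) pairs
    vocab = {tok for seq in token_seqs for tok in seq} | {"</s>"}
    return unigrams, bigrams, vocab


def cross_entropy(seq, unigrams, bigrams, vocab):
    """Average -log2 P(token | previous token), using add-one (Laplace) smoothing."""
    padded = ["<s>"] + seq + ["</s>"]
    v = len(vocab) + 1  # one extra slot reserves probability mass for unseen tokens
    bits = 0.0
    for prev, cur in zip(padded[:-1], padded[1:]):
        p = (bigrams[(prev, cur)] + 1) / (unigrams[prev] + v)
        bits -= math.log2(p)
    return bits / (len(padded) - 1)


# Toy training corpus of tokenised C loop headers (illustrative assumption).
corpus = [
    "for ( int i = 0 ; i < n ; i ++ )".split(),
    "for ( int j = 0 ; j < m ; j ++ )".split(),
    "for ( int i = 0 ; i < len ; i ++ )".split(),
]
model = train_bigram(corpus)

familiar = "for ( int k = 0 ; k < n ; k ++ )".split()
scrambled = ") ++ k ; n < k ; 0 = k int ( for".split()  # same tokens, shuffled order

h_familiar = cross_entropy(familiar, *model)
h_scrambled = cross_entropy(scrambled, *model)
print(f"familiar: {h_familiar:.2f} bits/token, scrambled: {h_scrambled:.2f} bits/token")
```

With this corpus, the loop header that follows the familiar idiom receives a lower cross-entropy than the same tokens in scrambled order, which is the kind of repetitiveness the quotes refer to.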

- Executability: A small change in the code can produce a large change in its meaning. A probabilistic model therefore needs formal constraints to limit the noise it introduces. Moreover, executability gives rise to two forms of code analysis: static and dynamic.

- Formality: Unlike natural languages, programming languages do not evolve slowly over centuries. They are designed as mathematical models and can change drastically over short periods of time. Moreover, formality does not eliminate the semantic ambiguity that some languages exhibit as a result of design choices such as polymorphism and weak typing.

- Cross-Channel Interaction: Source code carries two channels of information, an algorithmic one and an explanatory one. The two are sometimes fused, for example in identifiers whose names explain their purpose. A model can exploit this property to learn more robust representations.
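One common way to let a model read the explanatory channel is to split identifiers into their natural-language subwords. The following is an illustrative sketch, not a method taken from the surveyed works; the function name `split_identifier` and the splitting rules (underscores, camelCase boundaries, runs of capitals, digits) are assumptions.

```python
import re

# An uppercase run not followed by a lowercase letter (acronyms such as "HTTP"),
# an optionally capitalised lowercase word, or a run of digits.
_SUBTOKEN = re.compile(r"[A-Z]+(?![a-z])|[A-Z]?[a-z]+|\d+")


def split_identifier(name):
    """Split an identifier into lowercase subwords at underscores and case changes."""
    words = []
    for chunk in name.split("_"):
        words.extend(m.lower() for m in _SUBTOKEN.findall(chunk))
    return words


for ident in ["computeMaxValue", "parseHTTPResponse", "num_threads", "MAX_SIZE"]:
    print(ident, "->", split_identifier(ident))
```

Subwords such as `max` and `value` can then share a vocabulary with comments and documentation, which is one possible way to relate the algorithmic and explanatory channels.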

2.3. Machine Learning and Deep Learning Techniques for Source Code Analysis

2.3.1. The Beginning, from NLP to Code Analysis (2013–2015)

2.3.2. Broad Investigations: New Models, New Representations and New Applications (2016–2018)

2.3.3. Consolidation, Graph Models and Multilevel Code Analysis (2019–2021)

2.4. Final Remarks

3. Approaches for Heterogeneous Device Mapping in CPS

Final Remarks

4. Deep Learning Methods for Heterogeneous Device Mapping

4.1. Token-Based Language Modelling

4.2. Graph-Based Methodologies

4.3. Alternative Methodologies

4.4. Classification Models and Comparative Results

5. Deep Learning for CPS Programming: What Is Missing?

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AST | Abstract Syntax Tree |
| BLEU | Bilingual Evaluation Understudy |
| BoT | Bag of Trees |
| BoW | Bag of Words |
| BPE | Byte-Pair Encoding |
| CDFG | Control Data Flow Graph |
| CFG | Control Flow Graph |
| CNN | Convolutional Neural Network |
| CPS | Cyber-Physical Systems |
| CV | Computer Vision |
| DL | Deep Learning |
| DNN | Deep Neural Network |
| DT | Decision Tree |
| GGNN | Gated Graph Neural Network |
| GNN | Graph Neural Network |
| GRU | Gated Recurrent Unit (RNN) |
| HAN | Hierarchical Attention Network |
| LSTM | Long Short-Term Memory (RNN) |
| MDP | Markov Decision Process |
| ML | Machine Learning |
| MPNN | Message Passing Neural Network (GNN) |
| NLP | Natural Language Processing |
| RL | Reinforcement Learning |
| RNN | Recurrent Neural Network |
| Seq2Seq | Sequence to Sequence |
| SIMD | Single Instruction, Multiple Data |

References

1. Sztipanovits, J.; Koutsoukos, X.; Karsai, G.; Kottenstette, N.; Antsaklis, P.; Gupta, V.; Goodwine, B.; Baras, J.; Wang, S. Toward a Science of Cyber–Physical System Integration. Proc. IEEE 2012, 100, 29–44.

2. Mittal, S.; Vetter, J.S. A Survey of CPU-GPU Heterogeneous Computing Techniques. ACM Comput. Surv. 2015, 47, 1–35.

3. Fuchs, A.; Wentzlaff, D. The Accelerator Wall: Limits of Chip Specialization. In Proceedings of the 2019 IEEE International Symposium on High Performance Computer Architecture (HPCA), Washington, DC, USA, 16–20 February 2019; pp. 1–14.

4. Pouyanfar, S.; Sadiq, S.; Yan, Y.; Tian, H.; Tao, Y.; Reyes, M.P.; Shyu, M.L.; Chen, S.C.; Iyengar, S.S. A survey on deep learning: Algorithms, techniques, and applications. ACM Comput. Surv. (CSUR) 2018, 51, 1–36.

5. Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016.

6. Allamanis, M.; Barr, E.T.; Devanbu, P.; Sutton, C. A survey of machine learning for big code and naturalness. ACM Comput. Surv. (CSUR) 2018, 51, 1–37.

7. Ashouri, A.H.; Killian, W.; Cavazos, J.; Palermo, G.; Silvano, C. A survey on compiler autotuning using machine learning. ACM Comput. Surv. (CSUR) 2018, 51, 1–42.

8. Wang, Z.; O’Boyle, M. Machine learning in compiler optimization. Proc. IEEE 2018, 106, 1879–1901.

9. Barchi, F.; Urgese, G.; Macii, E.; Acquaviva, A. Code mapping in heterogeneous platforms using deep learning and llvm-ir. In Proceedings of the 2019 56th ACM/IEEE Design Automation Conference (DAC), Las Vegas, NV, USA, 2–6 June 2019; pp. 1–6.

10. Parisi, E.; Barchi, F.; Bartolini, A.; Acquaviva, A. Making the Most of Scarce Input Data in Deep Learning-based Source Code Classification for Heterogeneous Device Mapping. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2021, 41, 1636–1648.

11. Hindle, A.; Barr, E.T.; Gabel, M.; Su, Z.; Devanbu, P. On the naturalness of software. Commun. ACM 2016, 59, 122–131.

12. Allamanis, M.; Barr, E.T.; Devanbu, P.; Sutton, C. Machine Learning on Source Code. Available online: https://ml4code.github.io (accessed on 12 December 2021).

13. Grewe, D.; Wang, Z.; O’Boyle, M.F. Portable mapping of data parallel programs to OpenCL for heterogeneous systems. In Proceedings of the 2013 IEEE/ACM International Symposium on Code Generation and Optimization (CGO), Shenzhen, China, 23–27 February 2013; pp. 1–10.

14. Raychev, V.; Vechev, M.; Yahav, E. Code completion with statistical language models. In Proceedings of the 35th ACM SIGPLAN Conference on Programming Language Design and Implementation, Edinburgh, UK, 9–11 June 2014; pp. 419–428.

15. Zaremba, W.; Sutskever, I. Learning to execute. arXiv 2014, arXiv:1410.4615.

16. Iyer, S.; Konstas, I.; Cheung, A.; Zettlemoyer, L. Summarizing source code using a neural attention model. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Berlin, Germany, 7–12 August 2016; pp. 2073–2083.

17. Bhatia, S.; Singh, R. Automated correction for syntax errors in programming assignments using recurrent neural networks. arXiv 2016, arXiv:1603.06129.

18. Mou, L.; Li, G.; Zhang, L.; Wang, T.; Jin, Z. Convolutional neural networks over tree structures for programming language processing. In Proceedings of the Thirtieth AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016.

19. Gu, X.; Zhang, H.; Zhang, D.; Kim, S. Deep API learning. In Proceedings of the 2016 24th ACM SIGSOFT International Symposium on Foundations of Software Engineering, Seattle, WA, USA, 13–18 November 2016; pp. 631–642.

20. Allamanis, M.; Brockschmidt, M.; Khademi, M. Learning to represent programs with graphs. arXiv 2017, arXiv:1711.00740.

21. Gu, X.; Zhang, H.; Kim, S. Deep code search. In Proceedings of the 2018 IEEE/ACM 40th International Conference on Software Engineering (ICSE), Gothenburg, Sweden, 27 May–3 June 2018; pp. 933–944.

22. Santos, E.A.; Campbell, J.C.; Patel, D.; Hindle, A.; Amaral, J.N. Syntax and sensibility: Using language models to detect and correct syntax errors. In Proceedings of the 2018 IEEE 25th International Conference on Software Analysis, Evolution and Reengineering (SANER), Campobasso, Italy, 20–23 March 2018; pp. 311–322.

23. Bavishi, R.; Pradel, M.; Sen, K. Context2Name: A deep learning-based approach to infer natural variable names from usage contexts. arXiv 2018, arXiv:1809.05193.

24. Bui, N.D.; Jiang, L.; Yu, Y. Cross-language learning for program classification using bilateral tree-based convolutional neural networks. In Proceedings of the Workshops at the Thirty-Second AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018.

25. Alon, U.; Zilberstein, M.; Levy, O.; Yahav, E. code2vec: Learning distributed representations of code. Proc. ACM Program. Lang. 2019, 3, 1–29.

26. Alon, U.; Brody, S.; Levy, O.; Yahav, E. code2seq: Generating Sequences from Structured Representations of Code. arXiv 2019, arXiv:1808.01400.

27. Mendis, C.; Renda, A.; Amarasinghe, S.; Carbin, M. Ithemal: Accurate, portable and fast basic block throughput estimation using deep neural networks. In Proceedings of the International Conference on Machine Learning, Long Beach, CA, USA, 9–15 June 2019; pp. 4505–4515.

28. Pradel, M.; Gousios, G.; Liu, J.; Chandra, S. Typewriter: Neural type prediction with search-based validation. In Proceedings of the 28th ACM Joint Meeting on European Software Engineering Conference and Symposium on the Foundations of Software Engineering, Online, 8–13 November 2020; pp. 209–220.

29. Hoang, T.; Kang, H.J.; Lo, D.; Lawall, J. CC2vec: Distributed representations of code changes. In Proceedings of the ACM/IEEE 42nd International Conference on Software Engineering, Seoul, Korea, 6–11 July 2020; pp. 518–529.

30. Karampatsis, R.M.; Babii, H.; Robbes, R.; Sutton, C.; Janes, A. Big code != big vocabulary: Open-vocabulary models for source code. In Proceedings of the 2020 IEEE/ACM 42nd International Conference on Software Engineering (ICSE), Seoul, Korea, 6–11 July 2020; pp. 1073–1085.

31. Haj-Ali, A.; Ahmed, N.K.; Willke, T.; Shao, Y.S.; Asanovic, K.; Stoica, I. NeuroVectorizer: End-to-end vectorization with deep reinforcement learning. In Proceedings of the 18th ACM/IEEE International Symposium on Code Generation and Optimization, San Diego, CA, USA, 22–26 February 2020; pp. 242–255.

32. Brauckmann, A.; Goens, A.; Castrillon, J. A Reinforcement Learning Environment for Polyhedral Optimizations. arXiv 2021, arXiv:2104.13732.

33. Allamanis, M.; Jackson-Flux, H.; Brockschmidt, M. Self-Supervised Bug Detection and Repair. arXiv 2021, arXiv:2105.12787.

34. Stanley, K.O.; Miikkulainen, R. Evolving neural networks through augmenting topologies. Evol. Comput. 2002, 10, 99–127.

35. Cummins, C.; Petoumenos, P.; Wang, Z.; Leather, H. End-to-end deep learning of optimization heuristics. In Proceedings of the 2017 26th International Conference on Parallel Architectures and Compilation Techniques (PACT), Portland, OR, USA, 9–13 September 2017; pp. 219–232.

36. Quinlan, J.R. C4.5: Programs for Machine Learning; Elsevier: Amsterdam, The Netherlands, 2014.

37. Bailey, D.; Barszcz, E.; Barton, J.; Browning, D.; Carter, R.; Dagum, L.; Fatoohi, R.; Frederickson, P.; Lasinski, T.; Schreiber, R.; et al. The NAS Parallel Benchmarks. Int. J. High Perform. Comput. Appl. 1991, 5, 63–73.

38. Scarselli, F.; Gori, M.; Tsoi, A.C.; Hagenbuchner, M.; Monfardini, G. The graph neural network model. IEEE Trans. Neural Netw. 2008, 20, 61–80.

39. Papineni, K.; Roukos, S.; Ward, T.; Zhu, W.J. Bleu: A method for automatic evaluation of machine translation. In Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics, Philadelphia, PA, USA, 7–12 July 2002; pp. 311–318.

40. Cho, K.; Van Merriënboer, B.; Bahdanau, D.; Bengio, Y. On the properties of neural machine translation: Encoder-decoder approaches. arXiv 2014, arXiv:1409.1259.

41. Brauckmann, A.; Goens, A.; Ertel, S.; Castrillon, J. Compiler-Based Graph Representations for Deep Learning Models of Code. In Proceedings of the 29th International Conference on Compiler Construction, San Diego, CA, USA, 22–23 February 2020; Association for Computing Machinery: New York, NY, USA, 2020; pp. 201–211.

42. Ben-Nun, T.; Jakobovits, A.S.; Hoefler, T. Neural code comprehension: A learnable representation of code semantics. arXiv 2018, arXiv:1806.07336.

43. Allamanis, M.; Peng, H.; Sutton, C. A convolutional attention network for extreme summarization of source code. In Proceedings of the International Conference on Machine Learning, New York, NY, USA, 20–22 June 2016; pp. 2091–2100.

44. Tai, K.S.; Socher, R.; Manning, C.D. Improved semantic representations from tree-structured long short-term memory networks. arXiv 2015, arXiv:1503.00075.

45. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 5998–6008.

46. Di Biagio, A. llvm-mca: A Static Performance Analysis Tool. 2018. Available online: https://lists.llvm.org/pipermail/llvm-dev/2018-March/121490.html (accessed on 1 May 2022).

47. Hirsh, I.; Stupp, G. Intel Architecture Code Analyzer. 2012. Available online: https://www.intel.com/content/www/us/en/developer/articles/tool/architecture-code-analyzer.html (accessed on 1 May 2022).

48. Gage, P. A new algorithm for data compression. C Users J. 1994, 12, 23–38.

49. Grosser, T.; Groesslinger, A.; Lengauer, C. Polly–performing polyhedral optimizations on a low-level intermediate representation. Parallel Process. Lett. 2012, 22, 1250010.

50. VenkataKeerthy, S.; Aggarwal, R.; Jain, S.; Desarkar, M.S.; Upadrasta, R.; Srikant, Y. IR2Vec: LLVM IR Based Scalable Program Embeddings. ACM Trans. Archit. Code Optim. (TACO) 2020, 17, 1–27.

51. Cummins, C.; Fisches, Z.V.; Ben-Nun, T.; Hoefler, T.; Leather, H. ProGraML: Graph-based Deep Learning for Program Optimization and Analysis. arXiv 2020, arXiv:2003.10536.

52. Parisi, E.; Barchi, F.; Bartolini, A.; Tagliavini, G.; Acquaviva, A. Source Code Classification for Energy Efficiency in Parallel Ultra Low-Power Microcontrollers. In Proceedings of the 2021 Design, Automation & Test in Europe Conference & Exhibition (DATE), Grenoble, France, 1–5 February 2021; pp. 878–883.

53. Barchi, F.; Parisi, E.; Urgese, G.; Ficarra, E.; Acquaviva, A. Exploration of Convolutional Neural Network models for source code classification. Eng. Appl. Artif. Intell. 2021, 97, 104075.

54. Keerthy S, V.; Aggarwal, R.; Jain, S.; Desarkar, M.S.; Upadrasta, R. IR2Vec: A Flow Analysis based Scalable Infrastructure for Program Encodings. arXiv 2019, arXiv:1909.06228.

55. Rumelhart, D.E.; Hinton, G.E.; Williams, R.J. Learning representations by back-propagating errors. Nature 1986, 323, 533–536.

56. LeCun, Y. Generalization and network design strategies. Connect. Perspect. 1989, 19, 143–155.

57. Yin, W.; Kann, K.; Yu, M.; Schütze, H. Comparative study of cnn and rnn for natural language processing. arXiv 2017, arXiv:1702.01923.

58. Zhou, J.; Cui, G.; Hu, S.; Zhang, Z.; Yang, C.; Liu, Z.; Wang, L.; Li, C.; Sun, M. Graph neural networks: A review of methods and applications. AI Open 2020, 1, 57–81.

59. Mikolov, T.; Le, Q.V.; Sutskever, I. Exploiting similarities among languages for machine translation. arXiv 2013, arXiv:1309.4168.

60. Bordes, A.; Usunier, N.; Garcia-Duran, A.; Weston, J.; Yakhnenko, O. Translating embeddings for modeling multi-relational data. In Proceedings of the Advances in Neural Information Processing Systems, Lake Tahoe, NV, USA, 5–8 December 2013; Volume 26.

| Reference | | Year | Keywords |
|---|---|---|---|
| Key papers and Surveys | | | |
| Hindle A. et al. | [11] | 2012 * | N-gram, Code properties, Code completion |
| Allamanis M. et al. | [6] | 2018 | Survey, Code properties |
| Ashouri AH. et al. | [7] | 2018 | Survey, Compiler autotuning |
| Wang Z. et al. | [8] | 2018 | Survey, Compiler optimization |
| Innovative Models, Applications and Techniques | | | |
| Grewe D. et al. | [13] | 2013 | DT, Device mapping |
| Raychev V. et al. | [14] | 2014 | RNN, N-gram, Code completion |
| Zaremba W. et al. | [15] | 2014 | Seq2Seq, LSTM, Curriculum Learning |
| Iyer S. et al. | [16] | 2016 | LSTM, Summarizing, Attention |
| Bhatia S. et al. | [17] | 2016 | RNN, LSTM, Seq2Seq, Code fixing |
| Mou L. et al. | [18] | 2016 | Tree CNN, AST, Code classification |
| Gu X. et al. | [19] | 2016 | Seq2Seq, RNN Encoder-Decoder, NLP to code API |
| Allamanis M. et al. | [20] | 2017 | GGNN, GRU, Code analysis, Graph2Seq |
| Gu X. et al. | [21] | 2018 | RNN, Code Search, Cosine Similarity, NLP to Code Example |
| Santos EA. et al. | [22] | 2018 | LSTM, N-gram, Code Correction |
| Bavishi R. et al. | [23] | 2018 | LSTM Autoencoder, Code Analysis |
| Bui NDQ. et al. | [24] | 2018 | Tree CNN, AST, Code classification |
| Alon U. et al. | [25] | 2019 | AST Paths, Code Classification, Attention |
| Alon U. et al. | [26] | 2019 | AST Paths, Code Classification, LSTM Encoder-Decoder |
| Mendis C. et al. | [27] | 2019 | Hierarchical LSTM, Code Performance Regression, Assembly |
| Pradel M. et al. | [28] | 2020 | AST features, RNNs, Type prediction |
| Hoang T. et al. | [29] | 2020 | HAN, Bidirectional GRU, Attention, Code changes |
| Karampatsis RM. et al. | [30] | 2020 | Code embedding, BPE, LSTM |
| Haj-Ali A. et al. | [31] | 2020 | RL, Code vectorization |
| Brauckmann A. et al. | [32] | 2021 | RL, Polyhedral optimisation |
| Allamanis M. et al. | [33] | 2021 | GNN, Bug detection, GAN |

| Code Modelling | Code Manipulation | Code Optimisation |
|---|---|---|
| API exploration | Code Completion | Heuristic for compilers |
| Code Conventions | Code Synthesis | Auto-parallelisation |
| Code Semantic | Code Fixing | Bug localisation |
| Code Summarising | Comment generation | |

| Reference | | Year | Keywords |
|---|---|---|---|
| Heterogeneous Device Mapping | | | |
| Cummins C. et al. | [35] | 2017 | LSTM, OpenCL |
| Ben-Nun T. et al. | [42] | 2018 | LSTM, LLVM graph embedding |
| Barchi F. et al. | [9] | 2019 | LSTM, LLVM tokenisation |
| Venkata Keerthy S. et al. | [50] | 2020 | LLVM embedding, Gradient Boosting |
| Brauckmann A. et al. | [41] | 2020 | MPNN (GNN), LLVM graph embedding, CDFG, AST |
| Cummins C. et al. | [51] | 2020 | MPNN (GNN), LLVM graph embedding, CFG |
| Parisi E. et al. | [52] | 2021 | OpenMP, Random Forest, Energy consumption |
| Barchi F. et al. | [53] | 2021 | LLVM tokenisation, CNN, LSTM |

| Suite | Version | Benchmarks | Kernels | Samples |
|---|---|---|---|---|
| amd-sdk | 3.0 | 12 | 16 | 16 |
| npb | 3.3 | 7 | 114 | 527 |
| nvidia-sdk | 4.2 | 6 | 12 | 12 |
| parboil | 0.2 | 6 | 8 | 19 |
| polybench | 1.0 | 14 | 27 | 27 |
| rodinia | 3.1 | 14 | 31 | 31 |
| shoc | 1.1.5 | 12 | 48 | 48 |
| Total | | 71 | 256 | 680 |
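The Total row can be checked by summing each numeric column of the benchmark table. The sketch below does that and also computes the fraction of samples contributed by the npb suite, which shows how strongly a single suite dominates the sample count. The dictionary layout and the helper name `column_totals` are illustrative choices, not part of the original dataset tooling.

```python
# Rows of the benchmark table: suite -> (benchmarks, kernels, samples).
SUITES = {
    "amd-sdk": (12, 16, 16),
    "npb": (7, 114, 527),
    "nvidia-sdk": (6, 12, 12),
    "parboil": (6, 8, 19),
    "polybench": (14, 27, 27),
    "rodinia": (14, 31, 31),
    "shoc": (12, 48, 48),
}


def column_totals(rows):
    """Sum each numeric column (benchmarks, kernels, samples) across all suites."""
    return tuple(sum(col) for col in zip(*rows.values()))


totals = column_totals(SUITES)
npb_share = SUITES["npb"][2] / totals[2]  # fraction of all samples coming from npb

print("totals (benchmarks, kernels, samples):", totals)
print(f"npb share of samples: {npb_share:.1%}")
```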

| State-of-the-Art Methodologies | Ref. | AMD | NVIDIA | Mean |
|---|---|---|---|---|
| DeepTune | [35] | 0.814 | 0.805 | 0.810 |
| NCC/inst2vec | [42] | 0.802 | 0.810 | 0.806 |
| CDFG | [41] | 0.864 | 0.814 | 0.839 |
| ProGraML | [51] | 0.866 | 0.800 | 0.833 |
| DeepLLVM | [53] | 0.853 | 0.823 | 0.838 |
| Siamese | [10] | 0.917 | 0.888 | 0.903 |
| IR2vec | [50] | 0.924 | 0.870 | 0.897 |
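The Mean column appears to be the arithmetic mean of the AMD and NVIDIA columns. The sketch below checks that reading, assuming values are rounded half-up to three decimals (needed for Siamese, whose exact mean is 0.9025), and ranks the methods by the recomputed mean. The names `RESULTS` and `two_platform_mean` are illustrative.

```python
from decimal import Decimal, ROUND_HALF_UP

# (AMD, NVIDIA, reported Mean) as printed in the table; strings keep exact decimals.
RESULTS = {
    "DeepTune": ("0.814", "0.805", "0.810"),
    "NCC/inst2vec": ("0.802", "0.810", "0.806"),
    "CDFG": ("0.864", "0.814", "0.839"),
    "ProGraML": ("0.866", "0.800", "0.833"),
    "DeepLLVM": ("0.853", "0.823", "0.838"),
    "Siamese": ("0.917", "0.888", "0.903"),
    "IR2vec": ("0.924", "0.870", "0.897"),
}


def two_platform_mean(amd, nvidia):
    """Arithmetic mean of the two platform columns, rounded half-up to three decimals."""
    mean = (Decimal(amd) + Decimal(nvidia)) / 2
    return mean.quantize(Decimal("0.001"), rounding=ROUND_HALF_UP)


recomputed = {name: two_platform_mean(a, n) for name, (a, n, _) in RESULTS.items()}
mismatches = [name for name, (_, _, reported) in RESULTS.items()
              if recomputed[name] != Decimal(reported)]
ranking = sorted(recomputed, key=recomputed.get, reverse=True)

print("mismatches:", mismatches)
for name in ranking:
    print(f"{name:14s} {recomputed[name]}")
```

Using `Decimal` instead of `float` avoids binary rounding artefacts at exact halves such as 0.9025.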

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Barchi, F.; Parisi, E.; Bartolini, A.; Acquaviva, A. Deep Learning Approaches to Source Code Analysis for Optimization of Heterogeneous Systems: Recent Results, Challenges and Opportunities. J. Low Power Electron. Appl. 2022, 12, 37. https://doi.org/10.3390/jlpea12030037
