3.1. Definition of Digitalization of an Enterprise and Rules for Its Identification

To overcome the limitations identified in prior studies (see Section 2.3), this study adopts a new method that uses an interpretable AI model built on a pre-trained language model to construct indicators of enterprise digitalization. The method aims to minimize training costs, maximize accuracy, ensure generalizability, and enhance interpretability. The resulting indicator serves as the core independent variable in the subsequent regression and mechanism analyses.

However, accurately identifying digitalization in enterprises remains challenging.

Table 1 highlights four features of existing descriptions of 'enterprise digitalization' in annual reports that make such identification difficult:

(1) Lack of an appropriate subject: e.g., “First, create a new mode of intelligent procurement.”

(2) Focus on the broader digitalization landscape, not the enterprise: e.g., “Facing fierce market competition, peer companies have used digital technology to improve production processes and enhance internal production efficiency.”

(3) Industry-wide digitization descriptions: e.g., “In the future, rehabilitation medical equipment will integrate with intelligent sensors, IoT, big data, and other technologies, moving toward intelligence.”

(4) National policy outlines: e.g., “In July 2017, the State Council released the New Generation Artificial Intelligence Development Plan, aiming for global leadership in AI by 2030.”

Based on these characteristics, this study proposes guidelines for identifying statements related to enterprise digitalization:

A statement is considered to describe an enterprise’s digitalization behavior if it specifically targets the enterprise and involves the use of digital technology in any of the following ways:

(1) Developing relevant products.

(2) Improving production processes and techniques.

(3) Marketing its own IT products (for IT companies).

(4) Obtaining recognition and certification for digital behaviors.

(5) Declaring related projects.

In essence, a statement depicts enterprise digitalization when the company itself uses digital technology in product development, process improvement, IT product marketing, digital certification, or project declaration. Any one of these activities is sufficient to classify the company as undergoing digitalization.

Conversely, if the statement focuses on national policies, industry trends, non-IT products, or competitors’ digital behaviors, it does not describe enterprise digitalization.
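The identification rule above can be read as a small decision function. The sketch below is a minimal illustration that assumes each statement has already been annotated with a few hypothetical attributes (`subject_is_enterprise`, `uses_digital_technology`, `activities`, `focus`); the paper states the rule in prose and does not define these fields.

```python
from dataclasses import dataclass, field

# Hypothetical labels for the five qualifying activities in the rule.
ALLOWED_ACTIVITIES = {
    "product_development",    # (1) developing relevant products
    "process_improvement",    # (2) improving production processes and techniques
    "it_product_marketing",   # (3) marketing its own IT products (IT companies)
    "digital_certification",  # (4) recognition/certification for digital behaviors
    "project_declaration",    # (5) declaring related projects
}
# Hypothetical labels for the excluded foci named in the rule.
EXCLUDED_FOCUS = {"national_policy", "industry_trend", "non_it_product", "competitor"}


@dataclass
class Statement:
    text: str
    subject_is_enterprise: bool    # does the statement target the reporting firm itself?
    uses_digital_technology: bool
    activities: set = field(default_factory=set)
    focus: str = "enterprise"      # or one of EXCLUDED_FOCUS


def is_enterprise_digitalization(s: Statement) -> bool:
    """Apply the identification rule to one annotated statement."""
    if s.focus in EXCLUDED_FOCUS:
        return False
    if not (s.subject_is_enterprise and s.uses_digital_technology):
        return False
    # Any single qualifying activity is sufficient.
    return bool(s.activities & ALLOWED_ACTIVITIES)
```

For example, the peer-company sentence from Table 1 would carry `focus="competitor"` and be rejected, while a sentence in which the firm itself applies big data to its production line would be accepted.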

3.2. Construction of an Enterprise Digitalization Database

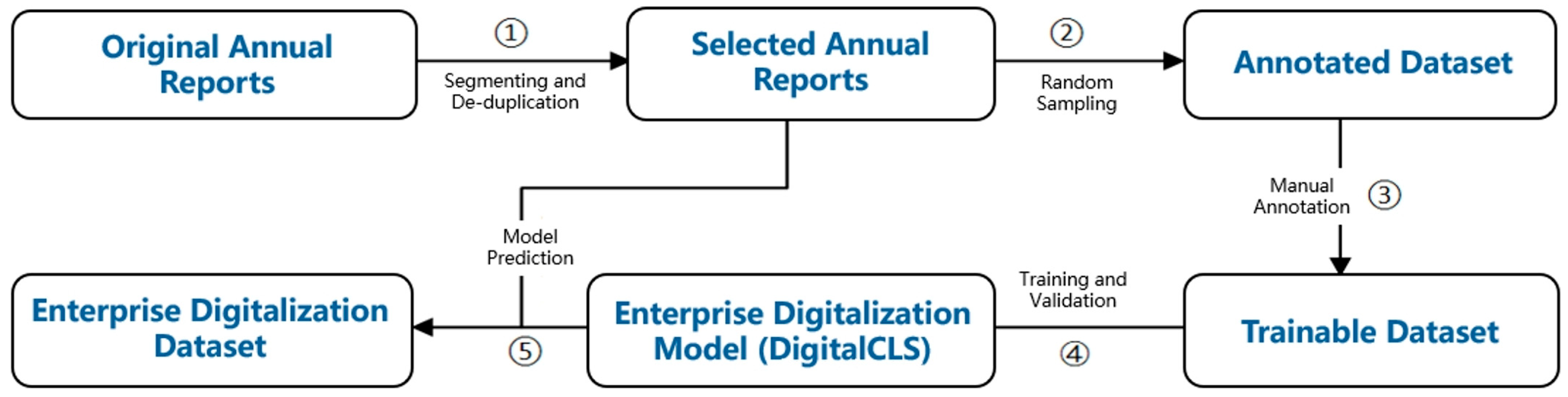

The construction of the enterprise digitalization database encompasses five primary stages: cleaning the annual reports, creating the dataset for labeling, constructing the dataset for training, building the deep learning discriminative model for ‘enterprise digitalization’, and acquiring the dataset specifically related to ‘enterprise digitalization’.

Figure 2 illustrates this methodology.

(1) Cleaning annual reports.

① Download reports: Collect annual reports for A-share listed companies in Shanghai and Shenzhen from 2013 to 2022 via the China Research Data Service Platform (CNRDS). ② Text extraction: Remove non-textual elements such as tables and extract plain text from the ‘Management Discussion and Analysis’ sections. ③ Text processing: Split the text into sentences, check for and remove repetitive statements, and compile the cleaned text.
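The text-processing step ③ can be sketched as follows. This is an illustration only: the splitting rule (Chinese terminal punctuation 。！？； and ASCII equivalents), the re-joining of lines broken during extraction, and exact-match deduplication are assumptions, since the paper does not specify its sentence splitter or its definition of a repetitive statement.

```python
import re

# Assumed sentence boundary: just after a Chinese or ASCII terminal mark.
_SENT_END = re.compile(r"(?<=[。！？；!?;])")


def split_sentences(text: str) -> list[str]:
    """Split MD&A text into sentences, dropping empty fragments."""
    return [p.strip() for p in _SENT_END.split(text) if p.strip()]


def clean_mdna(text: str) -> list[str]:
    """Join extraction line breaks, split into sentences, and drop exact repeats."""
    text = re.sub(r"\s*\n\s*", "", text)   # re-join lines broken by PDF extraction
    seen, cleaned = set(), []
    for sent in split_sentences(text):
        if sent not in seen:               # keep the first occurrence only
            seen.add(sent)
            cleaned.append(sent)
    return cleaned
```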

(2) Constructing the dataset to be labeled.

Sample selection: Pool all cleaned sentences from the 'Management Discussion and Analysis' sections and randomly draw 10,000 sentence units for labeling so that the sample is representative.
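A minimal sketch of the sampling step, assuming simple random sampling without replacement over the pooled sentences. The function name and the fixed seed are hypothetical; the seed only makes the draw reproducible.

```python
import random


def sample_for_labeling(sentences: list[str], k: int = 10_000, seed: int = 2024) -> list[str]:
    """Draw k sentences uniformly at random, without replacement."""
    if k > len(sentences):
        raise ValueError("sample size exceeds the number of pooled sentences")
    rng = random.Random(seed)   # hypothetical seed for reproducibility
    return rng.sample(sentences, k)
```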

(3) Constructing a trainable dataset.

① Training annotators: Recruit and train two research assistants on definitions and rules related to ‘enterprise digitalization.’ ② Annotation process: Assistants independently annotate the 10,000 sentences, which are then reviewed by experts to resolve discrepancies and finalize the guidelines. ③ Export dataset: Save the annotated data in JSON format.
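The paper does not report how agreement between the two assistants was measured. One common choice, shown here purely as an assumption, is Cohen's kappa on the binary labels (1 = digitalization). The sketch also serializes the adjudicated labels to JSON with a hypothetical record schema (`id`, `text`, `label`).

```python
import json


def cohens_kappa(a: list[int], b: list[int]) -> float:
    """Chance-corrected agreement between two annotators on binary labels."""
    n = len(a)
    p_o = sum(x == y for x, y in zip(a, b)) / n        # observed agreement
    p_a1, p_b1 = sum(a) / n, sum(b) / n                # each annotator's positive rate
    p_e = p_a1 * p_b1 + (1 - p_a1) * (1 - p_b1)        # agreement expected by chance
    return 1.0 if p_e == 1 else (p_o - p_e) / (1 - p_e)


def to_json(sentences: list[str], labels: list[int]) -> str:
    """Serialize final (expert-adjudicated) labels; the schema is hypothetical."""
    records = [
        {"id": i, "text": s, "label": int(y)}
        for i, (s, y) in enumerate(zip(sentences, labels))
    ]
    return json.dumps(records, ensure_ascii=False, indent=2)
```

The returned string can then be written with `open(path, "w", encoding="utf-8")`; `ensure_ascii=False` keeps the Chinese text readable in the file.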

(4) Constructing the ‘enterprise digitalization’ discriminative model.

To enhance the model’s classification capabilities, it is constructed in two stages:

Stage 1: Use a pre-trained BERT language model and add perturbations to training samples to enhance generalization.

Stage 2: Apply keyword matching to identify relevant terms and integrate them into the model through a cross-attention mechanism, yielding an end-to-end binary classification network that handles diverse sample descriptions and improves the interpretability of the discriminative model.

The specifications of the model are detailed below.

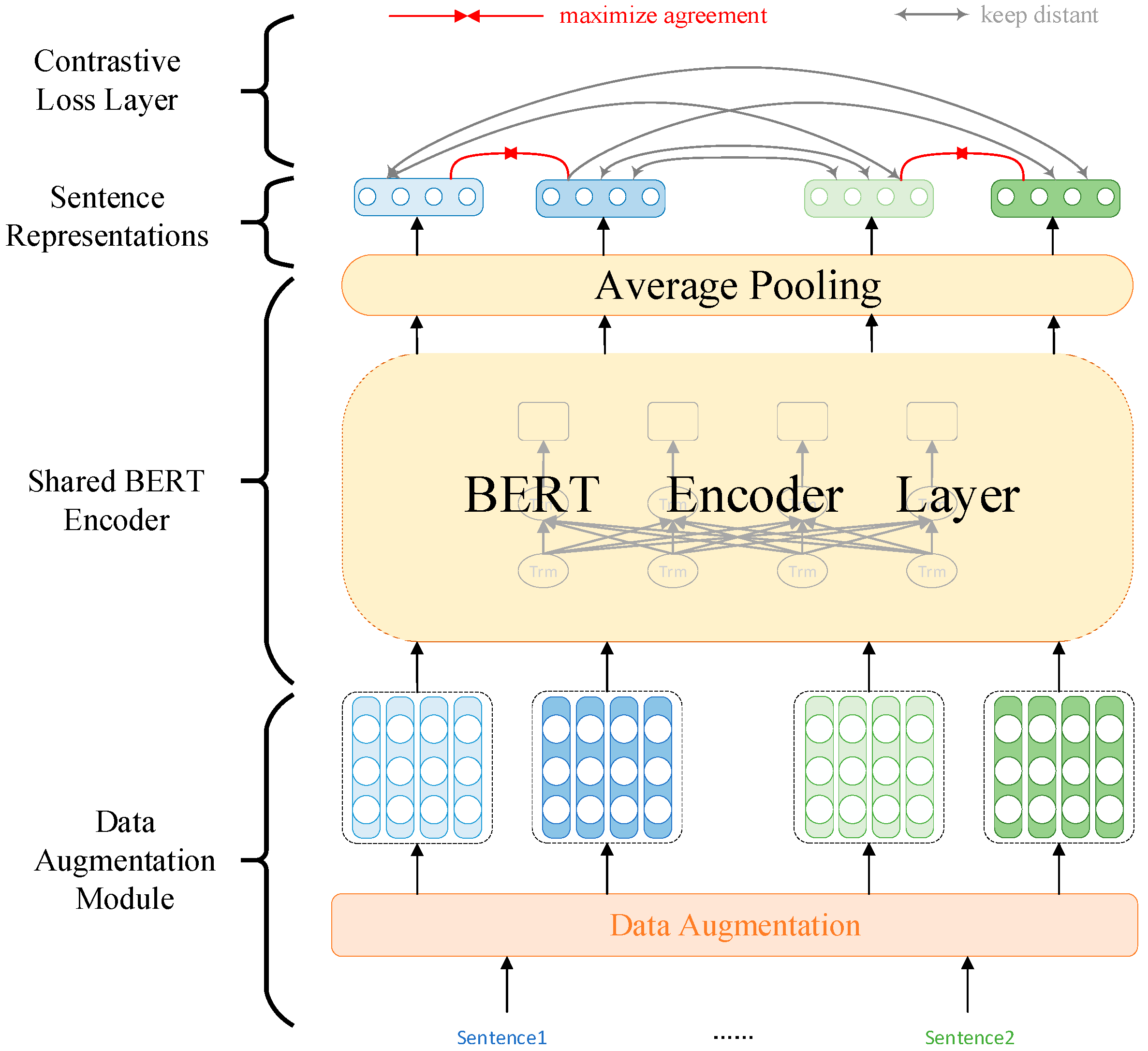

① Stage 1: BERT representation learning based on contrastive learning.

Corporate annual reports contain a large volume of text, and existing general-purpose LLMs adapt relatively poorly to such domain data: their inference is slow and the outcome of fine-tuning is hard to control. The pre-trained language model BERT is better suited to this setting for three reasons. First, it is pre-trained on a large text corpus and has strong text representation ability. Second, its parameter count is much smaller than that of general LLMs, which makes computation more efficient. Third, its fine-tuning behavior is controllable when the sample size is moderate. Therefore, this stage starts from the pre-trained BERT model and fine-tunes it on an unlabeled, task-oriented corpus D. As shown in Figure 3, its structure consists of three main parts:

Data augmentation layer: As shown in Figure 4, each input sample $x$ first enters the data augmentation layer, which applies token cutoff (randomly selecting tokens and setting their entire embedding vectors to zero). This process generates two different token embeddings $T_i$ and $T_j$, where $T_i, T_j \in \mathbb{R}^{L \times d}$, $L$ is the sequence length, $d$ is the hidden dimension, and the subscripts $i$ and $j$ denote the different cutoff methods.
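Token cutoff itself is simple to express. The sketch below operates on a plain L x d list of token embeddings for one sample; the cutoff ratio of 0.15 is a hypothetical default, since the paper does not report the rate, and in the actual model the same operation would be applied to BERT's input embedding tensor. Calling the function twice with different random states yields the two augmented views of the same sample.

```python
import random


def token_cutoff(embeddings, ratio=0.15, rng=None):
    """Return a copy of an L x d embedding matrix with randomly chosen token rows zeroed.

    ratio is a hypothetical cutoff rate; the paper does not report one.
    """
    if rng is None:
        rng = random.Random()
    L = len(embeddings)
    n_cut = max(1, int(ratio * L))             # cut at least one token
    cut = set(rng.sample(range(L), n_cut))     # token positions to drop
    return [[0.0] * len(row) if t in cut else list(row)
            for t, row in enumerate(embeddings)]


# Two independent cutoffs of one sample give the two augmented views.
sample = [[0.1 * (t + 1)] * 4 for t in range(8)]
view_a = token_cutoff(sample, rng=random.Random(1))
view_b = token_cutoff(sample, rng=random.Random(2))
```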

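Shared BERT encoding layer: The augmented embeddings $T_i$ and $T_j$ are each encoded by the multilayer Transformer blocks inside BERT, whose parameters are shared between the two views, and the sample representation vectors $r_i$ and $r_j$ are obtained by average pooling over the output token vectors.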
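Average pooling should normally skip padding positions. A minimal sketch, assuming a 0/1 attention mask marks real tokens; whether special tokens such as [CLS] and [SEP] are included in the average is not stated in the paper.

```python
def mean_pool(hidden: list[list[float]], mask: list[int]) -> list[float]:
    """Average the token vectors whose mask value is 1, ignoring padding."""
    d = len(hidden[0])
    total = [0.0] * d
    count = 0
    for vec, m in zip(hidden, mask):
        if m:
            count += 1
            for k in range(d):
                total[k] += vec[k]
    if count == 0:
        raise ValueError("mask selects no tokens")
    return [x / count for x in total]
```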

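Contrastive loss layer: Following Chen et al. (2020a) [68], this study employs the normalized temperature-scaled cross-entropy loss (NTSCL) as the loss function. At each training step, $N$ samples are randomly drawn from the dataset $D$ and each is augmented twice, yielding a batch of $2N$ sample representations. During training, each sample is pulled close to its own augmented version while being pushed away from the other $2N - 2$ samples in the vector space. For a positive pair of representations $i$ and $j$, the loss is computed as

$$\ell_{i,j} = -\log \frac{\exp\left(\mathrm{sim}(r_i, r_j)/\tau\right)}{\sum_{k=1}^{2N} \mathbb{1}_{[k \neq i]} \exp\left(\mathrm{sim}(r_i, r_k)/\tau\right)}$$

where $\mathrm{sim}(\cdot,\cdot)$ is the cosine similarity function; $r_k$ denotes the corresponding sample representation vector; $\mathbb{1}_{[k \neq i]}$ equals 1 when $k \neq i$ and 0 otherwise; and $\tau$ is the temperature hyperparameter (set to 0.1).

Training: In the training phase, the goal is to minimize the final loss function $\mathcal{L}_{\mathrm{con}}$, averaged over both orderings of every positive pair in the batch:

$$\mathcal{L}_{\mathrm{con}} = \frac{1}{2N} \sum_{k=1}^{N} \left[ \ell_{2k-1,\,2k} + \ell_{2k,\,2k-1} \right]$$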
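The contrastive objective can be checked numerically with a dependency-free sketch of the SimCLR-style NT-Xent loss. It assumes the 2N representations are ordered so that 0-based positions 2k and 2k+1 hold the two augmented views of the same sample; for every anchor it takes the negative log of the temperature-scaled softmax similarity to its partner (τ = 0.1, as stated above) over all other 2N - 1 representations, then averages over the 2N anchors. A real implementation would use batched PyTorch tensor operations instead of Python loops.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def nt_xent(reps, tau=0.1):
    """Mean NT-Xent loss over 2N representations ordered as (a1, b1, a2, b2, ...)."""
    n2 = len(reps)
    if n2 % 2:
        raise ValueError("expected an even number (2N) of representations")
    total = 0.0
    for i in range(n2):
        j = i + 1 if i % 2 == 0 else i - 1                     # positive partner of anchor i
        logits = [cosine(reps[i], reps[k]) / tau for k in range(n2) if k != i]
        pos = cosine(reps[i], reps[j]) / tau
        total += math.log(sum(math.exp(s) for s in logits)) - pos
    return total / n2
```

When the two views of each sample coincide and different samples are orthogonal, the loss is close to zero; pairing views of different samples instead makes it large.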

② Stage 2: End-to-end binary classification deep learning network based on the cross-attention mechanism.

The second-stage model consists of a keyword query layer, a token representation layer, a cross-attention layer, and a fully connected classification layer; its structure is shown in Figure 5.

Keyword query layer: For a training sample $X = (x_1, x_2, \dots, x_n)$, we first match it against the compiled keyword library $Q$, identifying the keywords present in $X$ and obtaining the keyword set $K = \{k_1, k_2, \dots, k_m\} \subseteq Q$.
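Keyword matching against the library Q can be as simple as substring search over the sentence. The sketch below assumes plain substring matching and lists longer keywords first so that a compound term such as 工业互联网 (industrial internet) appears before its component 互联网 (internet). The library contents, the matching rule, and the ordering are illustrative assumptions; the paper does not publish its keyword library.

```python
def match_keywords(sample: str, keyword_library: set[str]) -> list[str]:
    """Return the library keywords that occur in the sample, longest first."""
    hits = [kw for kw in keyword_library if kw in sample]
    return sorted(hits, key=lambda kw: (-len(kw), kw))   # deterministic order


# Hypothetical mini-library; the real keyword library Q is not published.
Q = {"大数据", "人工智能", "互联网", "工业互联网", "区块链"}
```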

Token representation layer: The training sample $X = (x_1, x_2, \dots, x_n)$ is fed into the fine-tuned BERT model and encoded into a sequence of hidden vectors $H = (h_1, h_2, \dots, h_n)$.

Moreover, the keyword set $K$ is also input into the fine-tuned BERT model for encoding, resulting in a sequence of hidden vectors for the keyword set $E = (e_1, e_2, \dots, e_m)$.

Cross-attention layer: To enhance the interpretability of model predictions and improve the recognition of non-primary objects, information from the keyword set relevant to the training sample $X$ is integrated through a cross-attention mechanism. For any hidden vector $h_i$ in the sequence of hidden vectors of the training sample and any hidden vector $e_j$ in the sequence of hidden vectors of the keyword set, the attention weight is

$$\alpha_{ij} = \frac{\exp\left(h_i^{\top} e_j\right)}{\sum_{l=1}^{m} \exp\left(h_i^{\top} e_l\right)}, \qquad i = 1, \dots, n,\; j = 1, \dots, m.$$

The attention vector for $h_i$ is therefore $a_i = \sum_{j=1}^{m} \alpha_{ij} e_j$, and the attention matrix is defined as $A = (a_1, a_2, \dots, a_n)$.
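The cross-attention step and the subsequent concatenation can be sketched without any framework. This sketch assumes unscaled dot-product scores followed by a softmax over the keywords for each sample token; the paper's exact scoring function cannot be recovered from the text. The attention weights returned alongside the attended vectors are what make the prediction inspectable: they show which matched keywords each token draws on. The sketch also assumes at least one keyword was matched; the paper does not say how sentences without matches are handled.

```python
import math


def softmax(xs):
    m = max(xs)                                   # subtract max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(H, E):
    """Attend from each sample token vector (rows of H) to the keyword vectors (rows of E).

    Returns (A, weights): weights[i][j] is the weight of keyword j for token i,
    and A[i] is the weighted sum of keyword vectors for token i.
    """
    d = len(E[0])
    A, weights = [], []
    for h in H:
        scores = [sum(x * y for x, y in zip(h, e)) for e in E]   # dot-product scores (assumed)
        alpha = softmax(scores)
        weights.append(alpha)
        A.append([sum(alpha[j] * E[j][k] for j in range(len(E))) for k in range(d)])
    return A, weights


def concat_features(H, A):
    """Row-wise concatenation of each token vector with its attention vector."""
    return [list(h) + list(a) for h, a in zip(H, A)]
```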

Fully connected classification layer: In this study, the attention matrix $A$ is concatenated with the hidden vector sequence $H$ of the training sample, and the concatenated matrix $[H; A]$ is fed into a fully connected layer to obtain the final classification prediction $\hat{y}$.

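Loss function: To train the aforementioned neural network, this study uses cross-entropy as the final loss function. Let $(X_i, y_i)$ denote the $i$-th training sample, where $X_i$ represents the content of the $i$-th sample and $y_i \in \{0, 1\}$ is its true classification label, and let $\hat{y}_i$ be the predicted probability that $X_i$ describes enterprise digitalization. With $M$ training samples, the training objective is to minimize the final loss function:

$$\mathcal{L}_{\mathrm{CE}} = -\frac{1}{M} \sum_{i=1}^{M} \left[ y_i \log \hat{y}_i + (1 - y_i) \log\left(1 - \hat{y}_i\right) \right]$$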
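For one batch, the binary cross-entropy objective reduces to a few lines. This sketch assumes the network outputs the probability of the positive class; the clipping constant `eps` is an added numerical safeguard against log(0), not something the paper specifies.

```python
import math


def binary_cross_entropy(probs: list[float], labels: list[int], eps: float = 1e-12) -> float:
    """Mean cross-entropy between predicted positive-class probabilities and 0/1 labels."""
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1 - eps)            # clip to avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(probs)
```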

Dataset description and preprocessing: This study employs the manually annotated Digital Transformation Dataset (N = 10,000 instances) introduced in Section 3.2. Following standard machine learning practice, the dataset was randomly partitioned into training (80%), validation (10%), and test (10%) sets. The training subset was used for parameter estimation, the validation subset for hyperparameter tuning, and the test subset for final performance assessment.
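A reproducible 80/10/10 split can be sketched as below. The shuffling procedure and seed are assumptions, and the paper does not say whether the split was stratified by label.

```python
import random


def split_dataset(records, seed=42, train=0.8, val=0.1):
    """Shuffle once, then cut into train/validation/test partitions."""
    rng = random.Random(seed)                    # hypothetical seed
    shuffled = list(records)
    rng.shuffle(shuffled)
    n_train = int(len(shuffled) * train)
    n_val = int(len(shuffled) * val)
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_val],
            shuffled[n_train + n_val:])          # remainder is the test set
```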

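Evaluation metrics: Because this study addresses a binary text classification problem, the quality of the classification predictions is measured by four metrics: accuracy, precision, recall, and F1-score. They are calculated as follows:

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}, \qquad \mathrm{Precision} = \frac{TP}{TP + FP}$$

$$\mathrm{Recall} = \frac{TP}{TP + FN}, \qquad F1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$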

Specifically, TP (true positive) denotes the count of actual positive instances correctly predicted; TN (true negative) represents the number of actual negative cases correctly identified; FP (false positive) indicates misclassified negative cases predicted as positive; and FN (false negative) refers to misclassified positive cases predicted as negative.
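The four metrics follow directly from the confusion-matrix counts. In the sketch below, returning 0.0 when a denominator is zero is an added convention, and the counts in the usage example are made up for illustration; they are not the values in Table 2.

```python
def classification_metrics(tp: int, tn: int, fp: int, fn: int) -> dict:
    """Accuracy, precision, recall, and F1 from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Illustrative counts only (not from Table 2).
example = classification_metrics(tp=90, tn=880, fp=10, fn=20)
```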

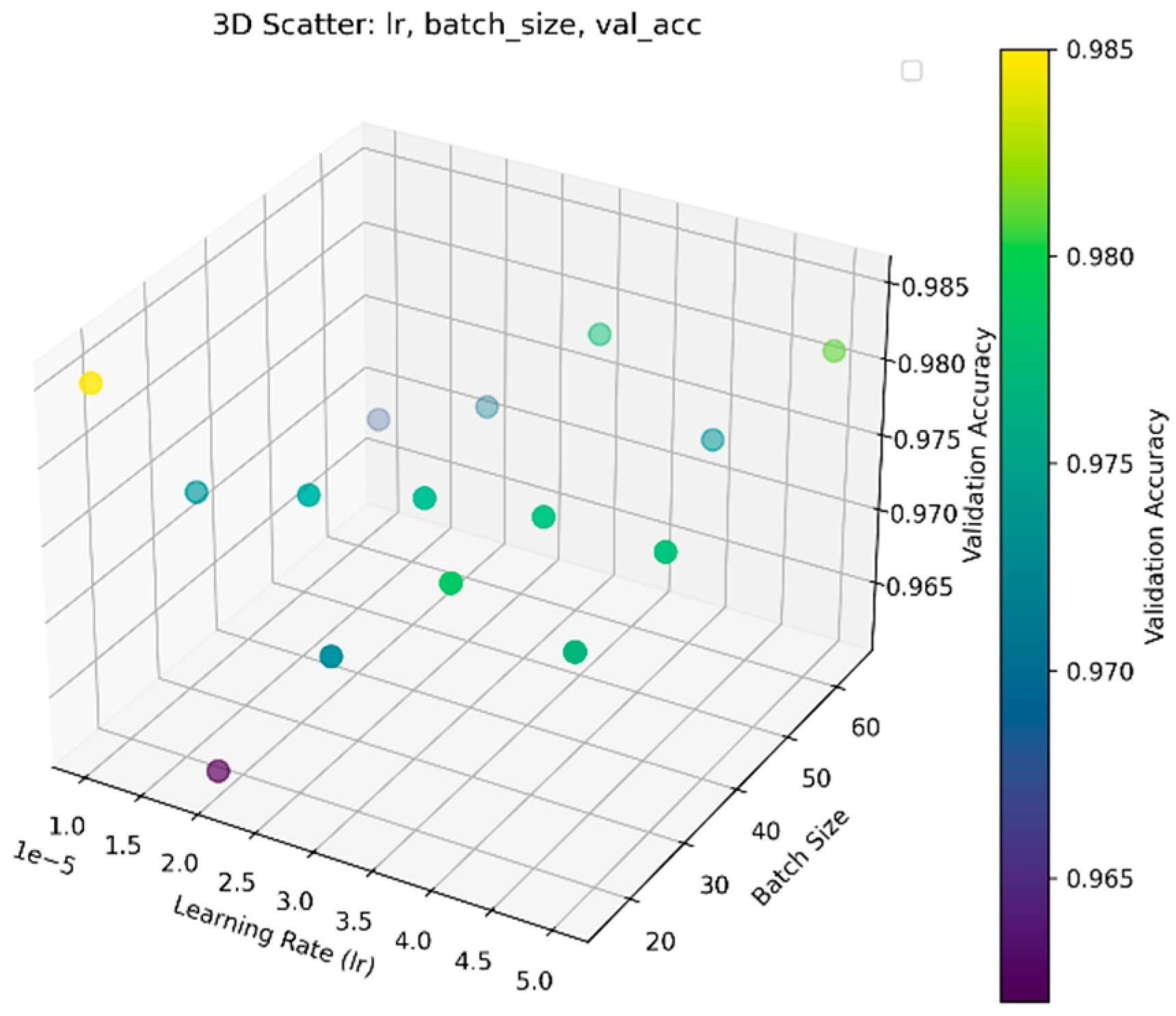

Model training details: The model was implemented in PyTorch 2.7 and trained on an NVIDIA Tesla V100 GPU. It was trained for 50 epochs, and the checkpoint with the highest validation accuracy was selected as the final model weights. For hyperparameter optimization, we followed standard BERT fine-tuning practice and performed Bayesian optimization with Optuna over learning rates $\{1 \times 10^{-5}, 2 \times 10^{-5}, 3 \times 10^{-5}, 4 \times 10^{-5}, 5 \times 10^{-5}\}$ and batch sizes $\{16, 32, 64\}$. As illustrated in Figure 6, the best performance was achieved with a learning rate of $1 \times 10^{-5}$ and a batch size of 16.
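The search space and the checkpoint-selection rule can be written down explicitly. This sketch does not reproduce Optuna's Bayesian (TPE) search; it only enumerates the same 15 learning-rate and batch-size combinations and shows how the epoch with peak validation accuracy would be picked. The accuracy list in the usage example is invented for illustration.

```python
from itertools import product

LEARNING_RATES = [1e-5, 2e-5, 3e-5, 4e-5, 5e-5]
BATCH_SIZES = [16, 32, 64]
MAX_EPOCHS = 50


def search_space():
    """All (learning rate, batch size) pairs in the reported search space."""
    return list(product(LEARNING_RATES, BATCH_SIZES))


def best_checkpoint(val_accuracies):
    """Return (1-based epoch, accuracy) of the peak; the earliest epoch wins ties."""
    best = max(range(len(val_accuracies)), key=lambda e: val_accuracies[e])
    return best + 1, val_accuracies[best]


# Invented validation curve, for illustration only.
epoch, acc = best_checkpoint([0.90, 0.95, 0.97, 0.96, 0.97])
```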

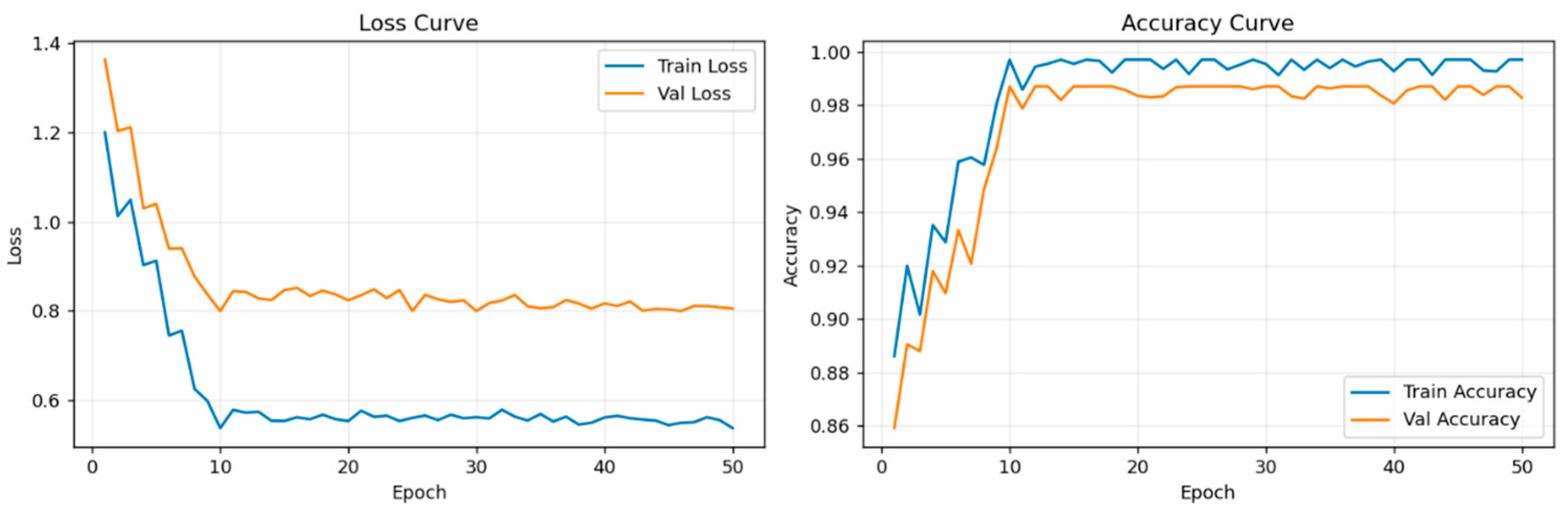

Under the optimal hyperparameter settings, the training process is depicted in Figure 7: performance gradually stabilizes around the 10th epoch. The trained model reaches nearly 100% accuracy on the training set, while validation accuracy fluctuates between 97.2% and 98.5%. The loss gap between the training and validation sets also remains small, indicating good generalization with little overfitting.

Experimental results: On the test set, the model achieves a classification accuracy of 98.5%; the detailed confusion matrix for the test set is presented in Table 2. These results indicate that the model is robust and generalizes well to unseen sentences.

Meanwhile, to benchmark the model's classification performance, we also trained and evaluated ChatGLM (6B), BERT_base_Chinese, an SVM, and the word frequency method on the same dataset. Their precision, recall, accuracy, and F1-score are reported in Table 3. As can be seen, our proposed model outperforms all of the comparison models.

(5) Obtain the ‘enterprise digitalization’ dataset.

Using the discriminative model developed in step (4), the study identifies digitalization statements in the cleaned 'Management Discussion and Analysis' text of all A-share listed companies in Shanghai and Shenzhen from 2013 to 2022, and from these statements constructs annual digitalization indicators for every enterprise.
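The paper does not spell out how sentence-level predictions become annual firm-level indicators. Purely as an illustration, the sketch aggregates predictions by firm and year into two hypothetical measures: the number of sentences classified as digitalization and their share of all MD&A sentences for that firm-year.

```python
from collections import defaultdict


def firm_year_indicators(predictions):
    """predictions: iterable of (firm_id, year, is_digital) for each MD&A sentence.

    Returns {(firm_id, year): {"digital_sentences": count, "share": count / total}}.
    Both measures are illustrative, not the paper's stated formula.
    """
    counts = defaultdict(lambda: [0, 0])      # [digital sentences, all sentences]
    for firm, year, is_digital in predictions:
        c = counts[(firm, year)]
        c[0] += int(is_digital)
        c[1] += 1
    return {key: {"digital_sentences": d, "share": d / n}
            for key, (d, n) in counts.items()}
```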