1. Introduction

Artificial intelligence (AI) is increasingly shaping education systems worldwide, offering new opportunities to personalize learning, enhance teaching efficiency, and support innovative practices. However, the successful adoption of AI in classrooms depends significantly on teachers’ ability to understand, evaluate, and apply AI technologies effectively [1]. In this context, AI literacy has emerged as a critical competency for educators, encompassing technical understanding, ethical awareness, critical appraisal, and practical application [2].

Recent literature has provided a conceptual foundation for AI literacy. Long and Magerko [3] proposed early definitions emphasizing an understanding of AI’s capabilities and limitations. These definitions have since been expanded into more comprehensive models. Chiu et al. [4], for example, proposed an AI literacy framework consisting of five interconnected dimensions (technology, impact, ethics, collaboration, and self-reflection) to support both cognitive and ethical engagement in AI education, while Pei et al. [5] emphasized the institutional and contextual factors that influence AI literacy among preservice teachers. These developments reflect a growing consensus that AI literacy is not limited to technical fluency but also involves the ability to reflect on the social and ethical dimensions of AI, assess AI tools critically, and engage with them as collaborative partners in the learning process [6,7].

In the K–12 sector, teacher preparedness has become a focal point in discussions about integrating AI into schooling. Yet empirical studies show that many teachers feel underprepared to adopt AI tools in practice, citing gaps in training, ethical uncertainty, and challenges in evaluating the appropriateness of available technologies [8,9]. This raises significant concerns for countries aiming to modernize their educational systems in alignment with global trends.

Saudi Arabia presents a compelling case in this regard. National reform strategies such as Vision 2030 and the National Strategy for Data and AI (NSDAI) have placed strong emphasis on building human capital through education and digital transformation [10,11]. These reforms prioritize equipping teachers with competencies that extend beyond general digital skills to include AI-specific literacy. Although these national priorities are clearly articulated, there is little empirical evidence on the extent to which K–12 teachers in Saudi Arabia possess these competencies. Most existing studies have concentrated on higher education settings [12,13,14], leaving a notable research gap in the K–12 context. This gap hampers efforts to tailor professional development programs, design targeted curricula, and formulate policies that address the actual needs of school educators.

To address this gap, the present study investigates Saudi K–12 teachers’ self-perceived AI literacy, with the aim of providing data-driven insights that can inform educational strategies and support the country’s broader transformation goals. Specifically, the study examines how competent Saudi K–12 teachers perceive themselves to be across four dimensions of AI literacy (awareness, ethics, evaluation, and use) and investigates the interrelationships among these competencies. It also offers a theoretical contribution by conceptualizing awareness as the foundational dimension of AI literacy, one that shapes how teachers develop ethical reasoning, critical evaluation, and practical classroom use of AI tools. The study is guided by the following two research questions:

How competent do Saudi K–12 teachers perceive themselves to be in AI literacy across the factors of awareness, ethics, evaluation, and use?

What are the interrelationships among the AI literacy factors (awareness, ethics, evaluation, and use) of Saudi K–12 teachers?

3. Conceptual Framework

This section introduces a conceptual framework for examining AI literacy among Saudi K–12 teachers. The selected dimensions (awareness, ethics, evaluation, and use) emerge from documented gaps in the Saudi context, particularly in teachers’ preparedness, ethical understanding, and critical assessment of AI tools [33,34]. These dimensions are also consistent with international models of AI literacy that emphasize the multifaceted nature of AI competence, including technical knowledge, ethical reasoning, and pedagogical application [4,22]. By integrating global insights with local educational needs, this study adopts the four-dimensional framework proposed by Wang et al. [35], comprising awareness, ethics, evaluation, and use, to guide the investigation.

While existing models often treat AI literacy dimensions as parallel constructs, this study conceptualizes awareness as a foundational competency that informs teachers’ ethical reasoning, critical evaluation, and classroom use of AI. This framing reflects both the developmental nature of professional learning and the ethical imperatives emphasized in the Saudi context, where current K–12 educational initiatives (e.g., Vision 2030, SDAIA’s AI Talent Development Program) prioritize ethical awareness, responsible use, and critical understanding of AI systems rather than advanced technical development or interdisciplinary design. Moreover, recent studies in the region [33,34] indicate that teachers often struggle with basic awareness, ethical discernment, and the evaluation of AI tools, which further supports the relevance of these four dimensions.

The following literature review supports this framework by examining the prior research that establishes the interrelationships among these constructs in educational settings.

Awareness is a foundational element of AI literacy that strongly influences educators’ adoption and integration of AI tools. Ng et al. [22] defined awareness as the ability to recognize AI systems in daily life and comprehend their role in shaping human interactions and decision-making processes. For educators, awareness includes knowledge of how AI is used in educational technologies, such as adaptive learning platforms and administrative tools, as well as an understanding of these systems’ potential benefits and risks. Studies have consistently found that educators who clearly understand how AI technologies function, along with their benefits and limitations, are more likely to use these tools effectively in their classrooms. Ng et al. [22] emphasized that awareness creates a sense of familiarity and confidence, reducing anxieties about AI’s complexities and encouraging exploration. Similarly, Zhao et al. [26] argued that awareness demystifies AI tools, empowering educators to recognize their potential for enhancing teaching and learning. For example, educators who are aware of AI’s ability to personalize learning can leverage it to support differentiated instruction.

Güneyli et al. [36] explored teachers’ awareness of AI in education through a case study conducted in Northern Cyprus, finding that teacher awareness, particularly when grounded in applied experience, is a key determinant of the effective adoption and integration of AI tools in educational settings. Chiu et al. [4] added that awareness is a critical determinant during the initial stages of AI adoption, facilitating smoother transitions from traditional methods to AI-enhanced practices. Other studies have similarly highlighted awareness as a crucial factor in AI adoption among educators [37,38]. Collectively, this body of literature underscores the pivotal role of awareness in fostering educators’ willingness and capability to integrate AI tools into their teaching strategies. Accordingly, the following hypothesis is proposed:

Hypothesis 1 (H1). Awareness of AI tools exerts a positive impact on their use among K–12 teachers.

Teachers with strong awareness of AI tools are better equipped to evaluate these tools critically. Awareness provides educators with the conceptual knowledge needed to assess the reliability, accuracy, and appropriateness of AI applications in educational contexts. Ng et al. [22] argued that awareness lays the groundwork for evaluative skills by familiarizing teachers with the operational principles and potential biases inherent in AI systems. This knowledge enables them to scrutinize AI tools more effectively and thereby ensure alignment with pedagogical objectives. Chiu et al. [4] and Alammari [39] emphasized that awareness helps educators identify the strengths and limitations of various AI tools, empowering them to make informed decisions about their use. For example, a teacher aware of data privacy concerns in AI systems is more likely to evaluate these systems critically for compliance with ethical and legal standards. Sperling et al. [27] found that teachers with heightened awareness are more discerning when selecting AI tools, focusing on their educational value and potential to address specific classroom challenges. Zhao et al. [26] also found that awareness positively affects educators’ ability to assess the scalability and long-term implications of adopting AI tools. Together, these studies suggest that awareness serves as the cognitive foundation for developing robust evaluation skills. Based on this, the following hypothesis is proposed:

Hypothesis 2 (H2). Awareness of AI tools exerts a positive impact on their evaluation among K–12 teachers.

Awareness of AI technologies significantly influences educators’ ethical decision-making, enabling them to navigate complex issues such as bias, equity, and privacy. Ng et al. [22] asserted that awareness fosters a deeper understanding of AI’s societal and ethical implications, providing educators with the tools to address these challenges responsibly. For example, a teacher who understands how AI algorithms function is better positioned to recognize potential biases and advocate for equitable practices in their application. UNESCO [40] underscored that awareness is a prerequisite for developing ethical literacy, as it equips educators with the knowledge needed to identify and mitigate the ethical risks associated with AI tools. Eguchi et al. [23] found that teachers who are aware of the potential for misuse of AI technologies are more likely to adopt preventive measures to protect student data and ensure transparency in AI-driven decision-making. Holmes and Porayska-Pomsta [15] added that awareness of ethical issues enhances educators’ ability to foster discussions among students about AI ethics, promoting a culture of accountability and social responsibility. Collectively, these studies suggest that awareness acts as a catalyst for ethical competence, guiding educators in making informed and morally sound decisions about AI integration. Therefore, the following hypothesis is proposed:

Hypothesis 3 (H3). Awareness of AI tools exerts a positive impact on AI ethics among K–12 teachers.

Ethical considerations significantly enhance teachers’ ability to evaluate AI tools, particularly for identifying biases, ensuring fairness, and maintaining transparency. Ng et al. [22] argued that an understanding of ethical principles provides educators with a moral framework for assessing AI systems critically. This includes evaluating whether AI tools align with the values of inclusivity and equity, which are essential in diverse educational contexts. Chiu et al. [4] found that ethical awareness allows educators to scrutinize AI tools for potential harm, such as discriminatory outcomes or breaches of privacy. UNESCO [40] supports this perspective, emphasizing that ethical literacy is integral to the evaluation process because it ensures that AI tools meet both educational and societal standards. Eguchi et al. [23] added that ethical considerations strengthen the evaluation process by encouraging educators to critically assess the broader societal and pedagogical implications of adopting AI in classrooms. Collectively, these studies suggest that ethics and evaluation are deeply intertwined, with ethical literacy serving as a foundation for responsible and informed assessments of AI technologies. Consequently, the following hypothesis is proposed:

Hypothesis 4 (H4). AI ethics exerts a positive impact on the evaluation of AI tools among K–12 teachers.

Ethical considerations are pivotal in shaping educators’ decisions to adopt and use AI tools responsibly. Ng et al. [22] found that teachers with a strong understanding of AI ethics are more likely to implement these technologies in ways that promote fairness, transparency, and student well-being. Ethical literacy helps ensure that educators prioritize tools that respect data privacy, avoid reinforcing biases, and support equitable learning opportunities. Holmes and Porayska-Pomsta [15] argued that ethical frameworks guide teachers in selecting AI tools that align with both societal values and educational integrity. For example, an educator aware of data privacy concerns may choose AI platforms with robust security measures to ensure that student information is protected. Alotaibi and Alshehri [34] added that ethical considerations help prevent the misuse of AI tools, fostering a culture of accountability in their application. Sperling et al. [27] emphasized that teachers with strong ethical awareness are more likely to advocate for policies and practices that support responsible AI integration, ensuring that these technologies are used for the benefit of all students. Thus, the following hypothesis is proposed:

Hypothesis 5 (H5). AI ethics exerts a positive impact on the use of AI tools among K–12 teachers.

Teachers’ ability to evaluate AI tools critically affects how effectively they use them in educational contexts. Evaluation allows educators to assess whether an AI tool aligns with their instructional goals, supports diverse learning needs, and operates reliably. Ng et al. [22] argued that evaluation skills enable teachers to select tools that are both pedagogically sound and technically robust, ensuring that their use contributes positively to student outcomes. For example, an educator with strong evaluation skills might prioritize AI tools with adaptive learning features to cater to students with varying proficiency levels. Eguchi et al. [23] emphasized that evaluation is crucial for identifying potential pitfalls in AI applications, such as biases in algorithmic decision-making or limitations in data processing. Teachers who evaluate tools critically are better positioned to mitigate these issues, thereby ensuring responsible implementation. Zhao et al. [26] found that evaluation skills not only improve the effectiveness of AI tool usage but also enhance educators’ confidence in integrating advanced technologies into their classrooms. Chung et al. [41] highlighted that evaluation is a foundational component of AI literacy, as it equips teachers with the ability to interpret diagnostic feedback and performance metrics. This evaluative capacity empowers K–12 educators to make informed instructional decisions, ultimately enhancing both the effectiveness of their AI use and their confidence in it. Together, these studies underscore evaluation’s essential role in bridging the gap between theoretical understanding and practical application. Therefore, the following hypothesis is proposed:

Hypothesis 6 (H6). Evaluation of AI tools makes a positive impact on their use among K–12 teachers.

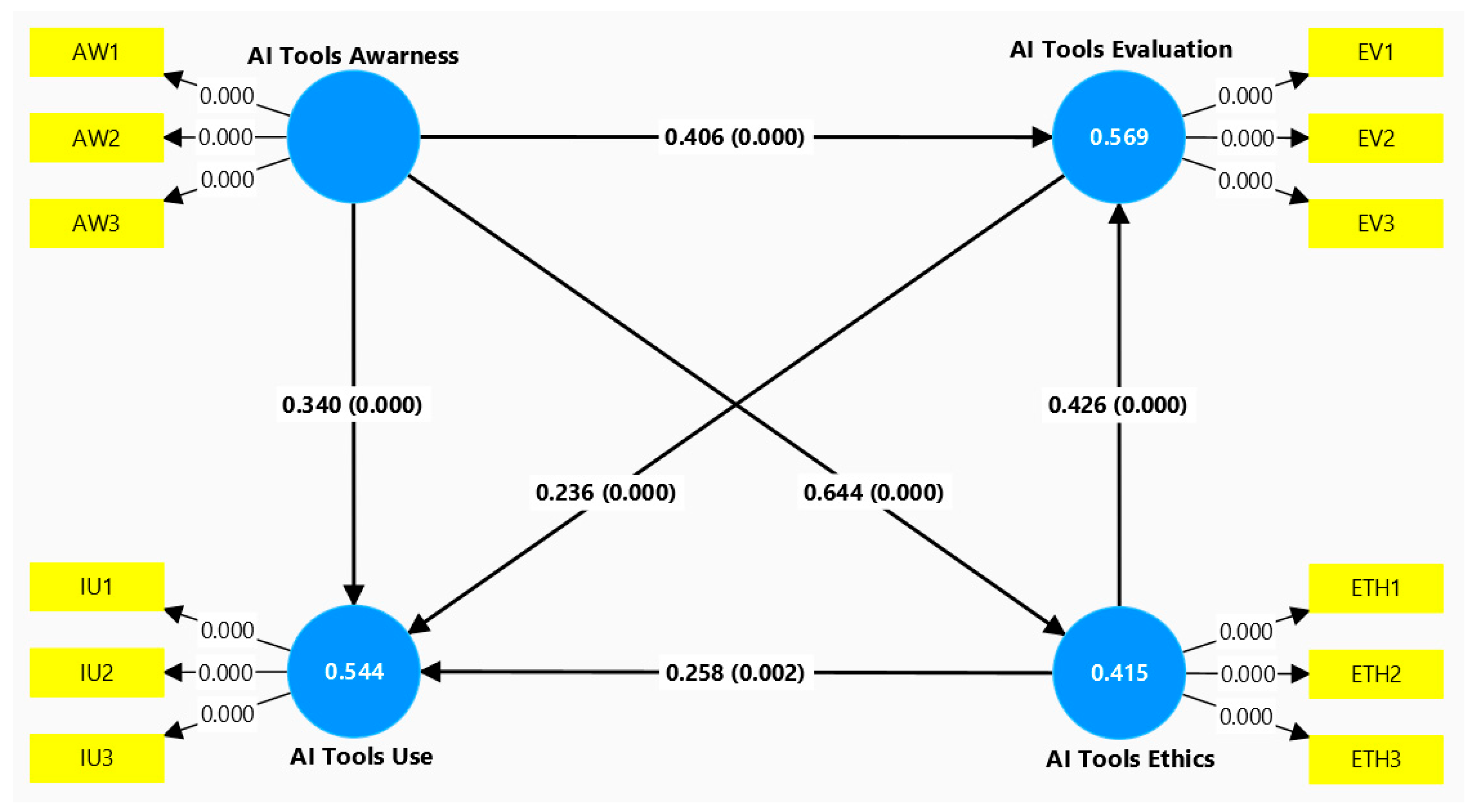

Figure 1 presents the proposed model of the interrelationships among the AI literacy competence constructs.

6. Discussion

This study investigated AI literacy levels among Saudi K–12 teachers and examined the interrelationships among four key AI literacy dimensions: awareness, ethics, evaluation, and use. The results offer valuable insights into how teachers are positioned to engage with AI technologies and how these competencies interact to support responsible human–machine cooperation in educational contexts.

In response to the first research question, the descriptive findings indicate that Saudi teachers reported moderately high levels of AI literacy across all four domains. Ethics (M = 4.11) and awareness (M = 4.04) emerged as the most developed competencies, followed closely by evaluation (M = 4.00), while use (M = 3.94) scored the lowest. This pattern suggests that while teachers possess a relatively strong conceptual understanding of AI and its ethical dimensions, their confidence and experience in using AI tools in classrooms remain somewhat limited. These results are consistent with international findings. Zhao et al. [26] reported that Chinese teachers similarly exhibited high levels of ethical awareness but lacked hands-on familiarity with AI tools. Likewise, Tenberga and Daniela [7] found that Latvian educators scored lower on practical AI use than on conceptual understanding, suggesting that the gap between awareness and implementation extends beyond the Saudi context.

In addressing the second research question, the SEM results revealed significant and theoretically coherent relationships between the AI literacy dimensions. First, awareness significantly predicted the use of AI tools (β = 0.340, p < 0.001), supporting the notion that teachers who are more informed about AI technologies are more likely to adopt them in practice. This finding aligns with the literature emphasizing awareness as a gateway to technology integration [4,5,8]. Awareness helps reduce uncertainty and builds the confidence needed to try out AI tools, thereby encouraging experimentation and adoption [27].

In addition to influencing use, awareness was also found to have a substantial effect on teachers’ ability to evaluate AI tools (β = 0.406, p < 0.001). This relationship indicates that teachers who understand AI’s operational principles and potential risks are better equipped to assess its educational value critically. This supports Sperling et al. [27], who noted that awareness forms the cognitive basis for evaluative judgement, allowing teachers to discern whether AI applications are appropriate for specific pedagogical goals. Awareness is not merely informational; it activates reflective thinking that leads to better decision-making about AI use in the classroom.

The strongest relationship identified in this study was between awareness and ethics (β = 0.644, p < 0.001), emphasizing that conceptual familiarity with AI also underpins ethical literacy. Teachers who are aware of how AI works are more likely to recognize issues of bias, data privacy, and algorithmic fairness. This finding is particularly relevant given the ethical risks posed by uncritical AI use in educational contexts. UNESCO [40] and Holmes and Porayska-Pomsta [15] both assert that awareness is essential for ethical reasoning, as it enables teachers to evaluate AI not only as a tool but also as a sociotechnical system with real-world consequences. In the Saudi context, in which formal AI policies for K–12 education are still emerging, this link between awareness and ethics underscores the importance of building foundational knowledge as a step toward responsible practice.

The results also confirm that ethics significantly influences evaluation (β = 0.426, p < 0.001). This relationship illustrates that ethical reasoning enables teachers to assess AI systems more holistically, considering not only technical functionality but also issues such as fairness, transparency, and inclusivity. These findings are consistent with Chiu et al. [4] and Eguchi et al. [23], who argued that ethical awareness enhances the quality and rigor of technology assessment. Educators with a strong ethical grounding are more likely to question the implications of AI implementation and evaluate tools in line with professional and societal values.

Furthermore, ethics was also found to predict AI use directly (β = 0.258, p = 0.002), suggesting that teachers who feel ethically competent are more confident in applying AI in their classrooms. This builds on research by Sperling et al. [27], who found that ethical literacy reduces anxiety about using emerging technologies. Teachers who understand data protection principles or bias mitigation strategies may be more comfortable integrating AI tools, knowing they can use them responsibly and safely.

Finally, this study confirmed a significant link between evaluation and use (β = 0.236, p < 0.001): teachers who can evaluate AI tools critically are more likely to use them effectively and meaningfully in practice. This aligns with Chung et al. [41], who demonstrated that critical evaluation supports intentional, informed use of AI, as opposed to trial-and-error or passive adoption. Teachers who can assess AI tools’ pedagogical and ethical suitability are better positioned to align these tools with curricular goals and student needs.

Although this study focused on self-reported competencies, the findings suggest plausible application pathways in real-world classrooms. For instance, a teacher with strong ethical literacy may critically assess the fairness of an adaptive learning system that personalizes instruction based on potentially biased data. Similarly, a teacher with high awareness might question the data handling policies of an AI-driven homework-grading tool before adopting it in class. Teachers with strong evaluative skills may avoid opaque algorithmic tools and instead choose platforms with explainable AI models that support instructional transparency.

In addition to these practical implications, the study offers a theoretical contribution by conceptualizing awareness as a foundational AI literacy competency that significantly influences ethics, evaluation, and use. This structure departs from earlier models, such as that of Ng et al. [22], which tend to treat these dimensions as parallel rather than sequential. The significant path coefficients in our model are consistent with a developmental progression: teachers first develop awareness of AI technologies, which then informs their ethical reasoning, critical evaluation, and eventual classroom use. Because the data are cross-sectional, this sequence is an interpretation rather than an observed trajectory; nonetheless, this stage-based interpretation provides a more dynamic understanding of how AI literacy may evolve in professional practice and offers a practical framework for designing targeted training programs that scaffold competencies progressively.
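The developmental reading above can be illustrated with the product-of-coefficients decomposition commonly used in path analysis, in which the total effect of one construct on another is the sum, over every directed route between them, of the products of the path coefficients along that route. The Python sketch below is illustrative only. It assumes that the reported β values are standardized coefficients from a recursive model containing exactly the six hypothesized paths (H1 to H6), and the names `PATHS`, `routes`, `route_effect`, and `decompose` are hypothetical helpers introduced here. The indirect effects it produces are point values derived from the reported coefficients, not estimates reported by the study, and they carry no standard errors or significance tests (those would require, for example, bootstrapping the original data).

```python
"""Illustrative decomposition of total effects in the hypothesized AI literacy model.

Assumption (not from the study): the reported betas are standardized
coefficients of a recursive path model containing only paths H1 to H6.
"""

# Hypothetical lookup table of the reported structural paths:
# (predictor, outcome) -> beta
PATHS = {
    ("awareness", "use"): 0.340,         # H1
    ("awareness", "evaluation"): 0.406,  # H2
    ("awareness", "ethics"): 0.644,      # H3
    ("ethics", "evaluation"): 0.426,     # H4
    ("ethics", "use"): 0.258,            # H5
    ("evaluation", "use"): 0.236,        # H6
}


def routes(source, target, paths=PATHS):
    """List every directed route from source to target.

    Recursion terminates because the hypothesized model has no cycles.
    """
    if source == target:
        return [[target]]
    found = []
    for start, end in paths:
        if start == source:
            for tail in routes(end, target, paths):
                found.append([source] + tail)
    return found


def route_effect(route, paths=PATHS):
    """Multiply the coefficients along one route (product-of-coefficients rule)."""
    effect = 1.0
    for start, end in zip(route, route[1:]):
        effect *= paths[(start, end)]
    return effect


def decompose(source, target, paths=PATHS):
    """Map each route (direct or indirect) to its effect; the values sum to the total effect."""
    return {
        " -> ".join(route): route_effect(route, paths)
        for route in routes(source, target, paths)
    }


if __name__ == "__main__":
    parts = decompose("awareness", "use")
    for name, effect in parts.items():
        print(f"{name}: {effect:.3f}")
    print(f"total: {sum(parts.values()):.3f}")
```

Under these assumptions, the four routes from awareness to use sum to about 0.667, of which the direct path (0.340) is roughly half; the remainder runs through ethics and evaluation. This is one way to express numerically the claim that awareness operates largely through the other competencies, though a formal mediation test on the original data would be needed to support it.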

6.1. Implications

This study’s findings have meaningful implications for educational practice, policy development, and future research, particularly as nations such as Saudi Arabia accelerate digital transformation under initiatives like Vision 2030. At the practical level, the results underscore the need to design and implement comprehensive, competency-based professional development programs for K–12 teachers. While teachers reported relatively high levels of awareness and ethical sensitivity, their comparatively lower scores in practical use and evaluation point to a skills gap that may hinder the effective integration of AI into teaching and learning environments. This suggests that current training models may overemphasize theoretical exposure to AI concepts while underdelivering on applied skill-building.

To address this, professional development must move beyond introductory awareness sessions to provide immersive, hands-on experiences that allow teachers to engage directly with AI tools in pedagogically meaningful contexts [5]. This includes scenario-based learning that simulates ethical dilemmas, case analysis exercises that examine the real-world implications of algorithmic bias and privacy breaches, and guided experimentation with classroom-ready AI platforms, such as intelligent tutoring systems, automated grading tools, or adaptive learning software. Such programs should be differentiated by teachers’ prior experience, subject area, and educational level to ensure relevance and engagement. Moreover, teacher training programs should be tailored to the practical use of AI in schools, draw on the knowledge of diverse experts, and be designed as ongoing initiatives with continuous mentoring, peer collaboration, and embedded feedback mechanisms within school communities [44]. Without these measures, even high levels of theoretical AI literacy may fail to translate into confident and ethical classroom application.

At the policy level, this study highlights the necessity of embedding AI literacy competencies into national teacher qualification frameworks and certification standards. The statistically significant interrelationships among awareness, ethics, evaluation, and use reinforce the idea that these are not standalone constructs but instead form an integrated skillset essential for modern teaching. Policymakers should therefore recognize that any initiative to introduce AI in education will be incomplete if it focuses solely on technological infrastructure or digital tools without equal investment in teacher readiness. These findings suggest that national AI-in-education strategies should include structured pathways for teacher development that reflect the multidimensional nature of AI literacy.

Furthermore, because ethics emerged as a key predictor of both evaluation and use, policy documents should articulate clear ethical guidelines and protocols for AI integration within schools. These include data protection policies tailored to educational contexts, frameworks for algorithmic transparency, and standards for equitable AI implementation across socioeconomic and geographic contexts. In line with this, educational authorities should consider developing national AI literacy benchmarks or certification schemes, potentially modeled on international frameworks such as UNESCO’s AI Competency Framework for Educators [45], that emphasize ethical, legal, and societal dimensions alongside technical skills.

Theoretically, this study’s results emphasize that AI literacy among teachers should be understood as a multidimensional professional competency that integrates cognitive understanding, ethical awareness, critical evaluation, and practical application. The significant interrelationships found among these constructs suggest that AI literacy cannot be developed through isolated technical training alone. Instead, it evolves through interconnected learning experiences that foster both reflective judgement and applied practice. This supports emerging theoretical perspectives that position AI literacy as part of teachers’ evolving digital competence, shaped not only by access to technology but also by values, pedagogical goals, and contextual realities within the classroom. Such a perspective encourages future research that investigates how these dimensions co-develop over time and how they can be supported through holistic professional learning models.

Moreover, this study invites further theoretical development by highlighting the interplay between conceptual understanding and ethical application. It raises questions about how AI literacy evolves over time, how contextual factors such as school culture or leadership support influence its development, and which interventions are most effective for building lasting competencies. Future research should also explore how these competencies manifest in specific classroom interactions, such as lesson planning with intelligent tutoring systems, managing algorithmic bias in adaptive tools, or making ethical decisions when using automated feedback generators. Investigating how teachers operationalize these skills will help bridge the gap between self-perceived literacy and actual pedagogical practice, aligning more closely with Vision 2030’s emphasis on practical, real-world readiness. Longitudinal designs, mixed-methods approaches, and intervention-based studies would be well suited to capturing how teachers internalize and operationalize AI literacy in real educational contexts.

6.2. Limitations and Future Directions

While this study provides valuable insights into K–12 teachers’ AI literacy competence in Saudi Arabia, several limitations should be acknowledged, each offering directions for future research. First, the use of a cross-sectional survey design limits the ability to determine causal relationships or track changes in AI literacy over time. To address this, future studies should adopt longitudinal or repeated-measures designs to monitor how teachers’ AI literacy develops through ongoing professional development, technological advancements, or policy interventions.

Second, the study relied on self-reported data, which may be subject to social desirability bias, particularly in sensitive domains such as ethical awareness and AI use. Although the instrument employed was previously validated [35], the potential for over- or underreporting remains. Moreover, the exclusive use of a quantitative survey approach limited the ability to explore the nuanced perspectives, contextual factors, and lived experiences that shape teachers’ engagement with AI. Future research could incorporate complementary methods, such as direct assessments, classroom observations, digital usage analytics, and qualitative approaches like interviews or focus groups, to provide a more comprehensive and balanced understanding of teachers’ actual competencies and perceptions.

Third, the absence of geolocation data limits the study’s ability to explore regional disparities in AI literacy across Saudi Arabia’s diverse educational environments. Teachers working in metropolitan areas may have different levels of access to AI tools and training compared to those in suburban or remote regions. Future research should collect and analyze geolocation data to assess how geographical factors influence AI integration and inform location-specific policy and training efforts.

Fourth, the demographic profile of the sample showed some homogeneity, with 77.5% of participants identifying as female and most being mid-career professionals. While this may reflect workforce trends in the local educational context, it restricts the generalizability of the findings across different teacher populations. Future studies should aim for more demographically diverse samples and explore how variables such as gender, age, and teaching experience influence AI literacy through subgroup analysis or moderation modeling.

Lastly, although the study aligns with Saudi Arabia’s Vision 2030 goal of promoting technological readiness in education, it did not explore practical implementation strategies. Future studies should partner with schools to co-design and test AI-integrated curricula, helping to bridge the gap between national policy objectives and classroom practice. Such partnerships would support more grounded, scalable, and contextually relevant applications of AI in K–12 education.

7. Conclusions

This study assessed Saudi K–12 teachers’ AI literacy competence across four key constructs: awareness, ethics, evaluation, and use. The results indicate that teachers generally report strong awareness and ethical understanding of AI technologies, but slightly less confidence in practical use and evaluation. The SEM results showed that these competencies are significantly interrelated, with awareness serving as a foundational influence on ethical reasoning, evaluative skills, and eventual classroom application. Ethics, in turn, played a key role in enhancing both critical evaluation and practical use. Evaluation also emerged as a meaningful predictor of use, reinforcing the importance of reflective thinking for effective technology integration.

Together, these findings suggest that empowering teachers with AI literacy is not only about increasing their exposure to AI tools but also about cultivating a rich blend of conceptual understanding, ethical sensitivity, and practical confidence. As Saudi Arabia continues to invest in educational transformation under Vision 2030, these insights provide timely guidance for designing professional development, teacher preparation, and policy frameworks that support responsible, informed, and impactful AI integration in classrooms. Future studies should build on this work by examining the longitudinal impact of AI training, as well as AI literacy development across different educational contexts and grade levels.