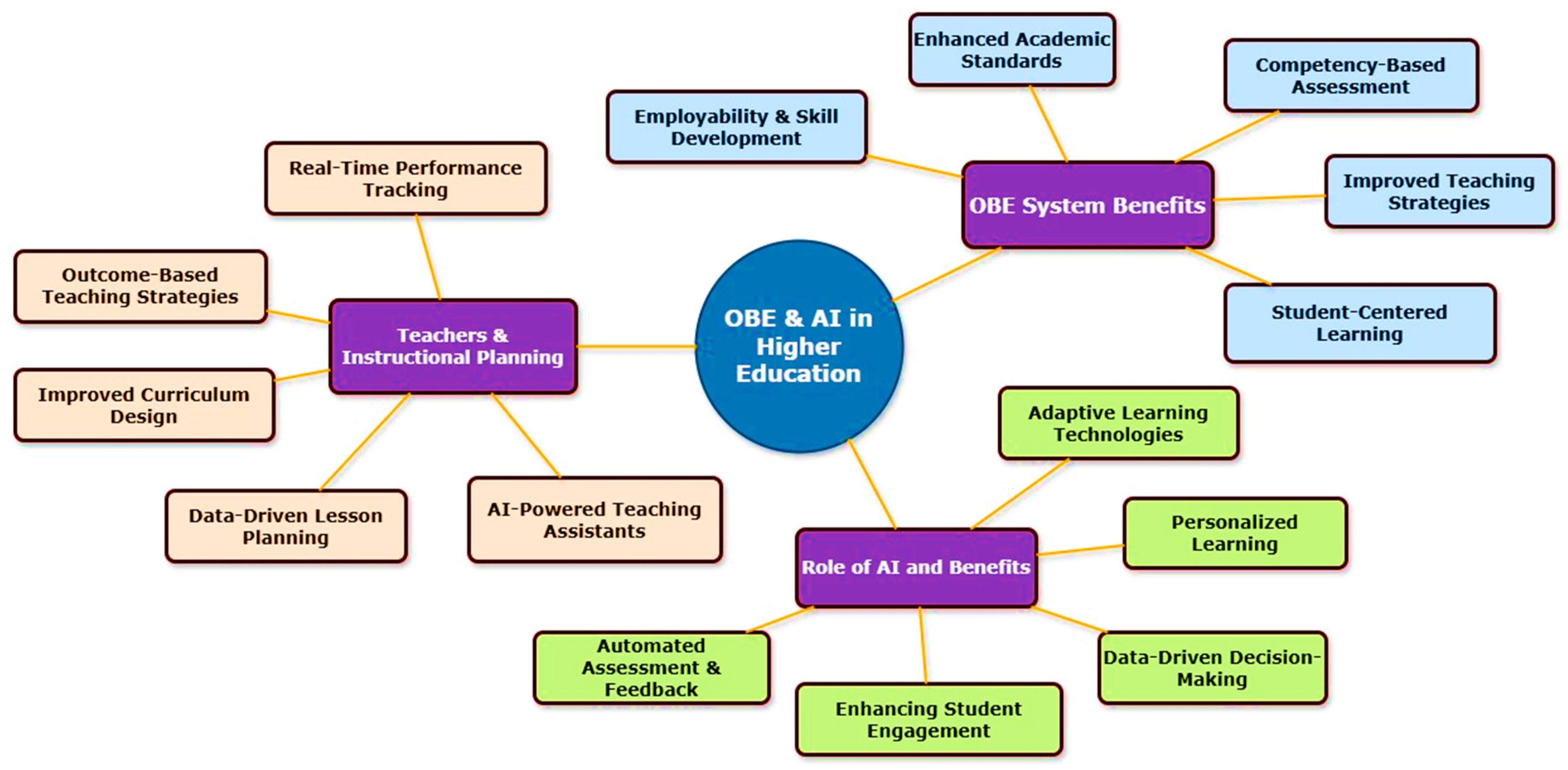

Exploring the Role of AI and Teacher Competencies on Instructional Planning and Student Performance in an Outcome-Based Education System

Abstract

1. Introduction

- To explore teachers’ perceptions of AI ChatGPT capabilities in supporting instructional planning within the OBE paradigm.

- To examine how teachers perceive the impact of their competencies on lesson planning and their ability to integrate AI tools.

- To assess the perceived direct and indirect effects of ChatGPT capabilities and teacher competencies on student achievement.

- To investigate the mediating role of instructional planning in the relationships between AI ChatGPT capabilities and teacher competencies, on the one hand, and student achievement, on the other.

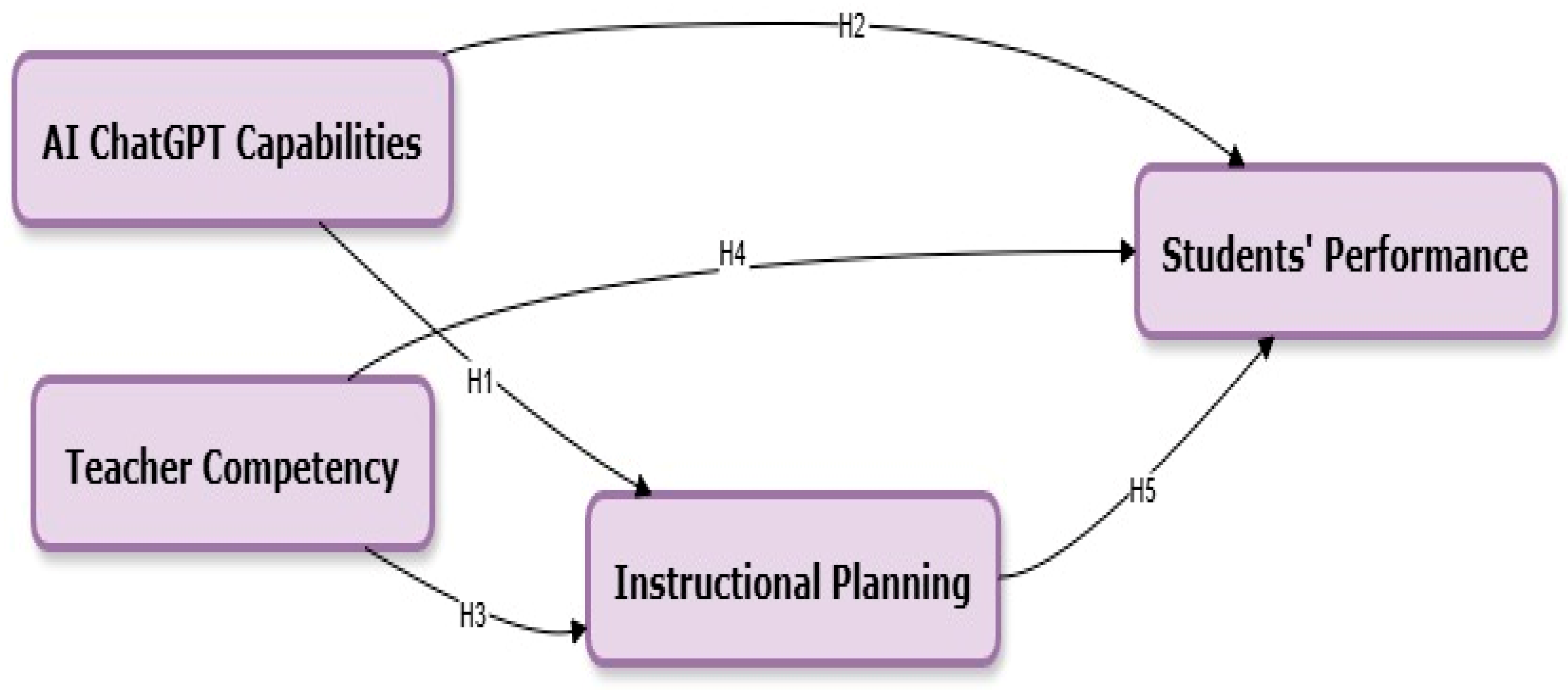

2. Theoretical Background and Hypothesis Development

2.1. AI ChatGPT Capabilities → Instructional Planning

2.2. AI ChatGPT Capabilities → Students’ Performance

2.3. Teacher Competency → Instructional Planning

2.4. Teacher Competency → Students’ Performance

2.5. Instructional Planning → Students’ Performance

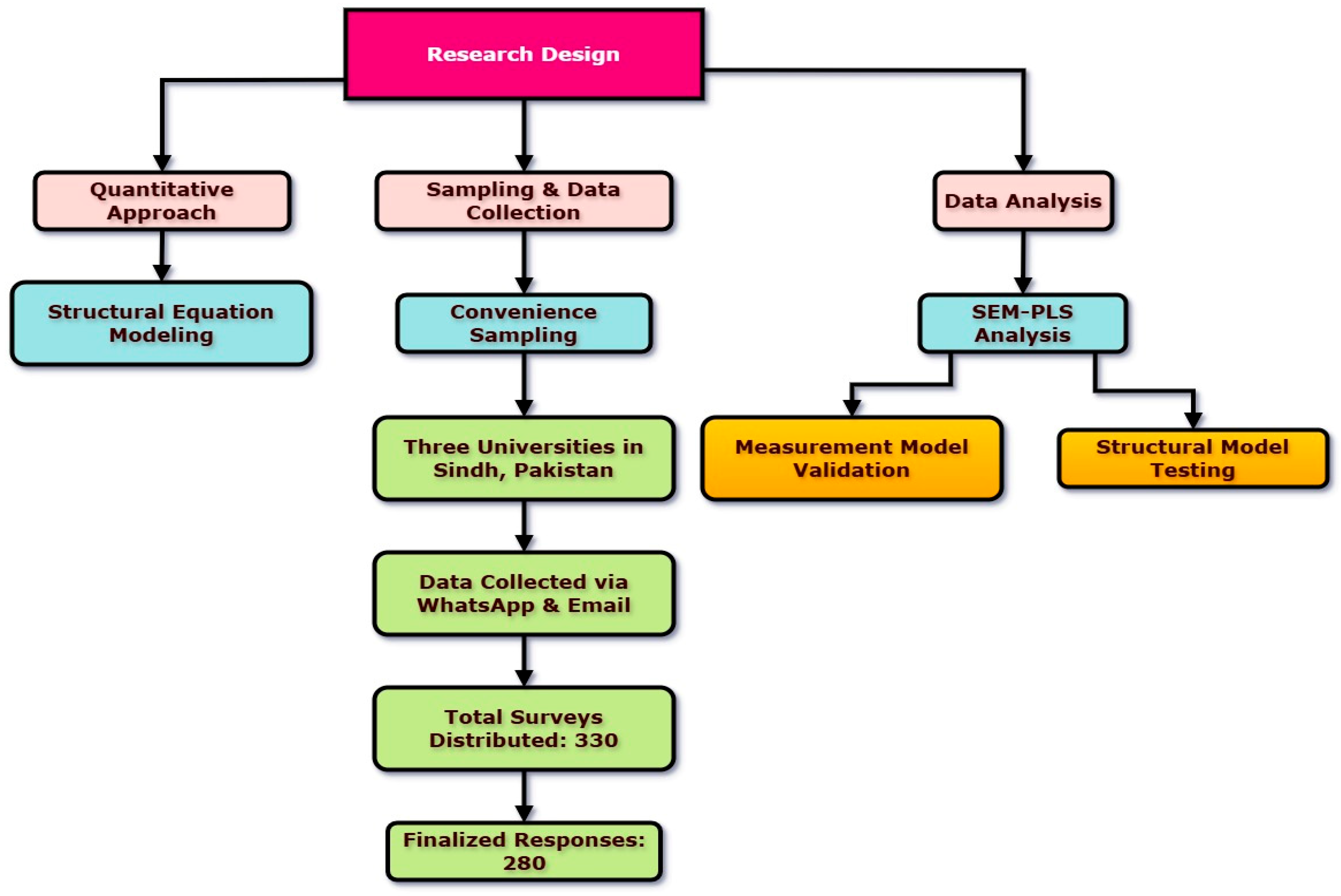

3. Methodology

3.1. Research Design

3.2. Sampling Strategy

3.3. Instrument Design and Validation

3.4. Ethical Considerations

3.5. Data Analysis Procedure

4. Findings

4.1. Survey Results

4.2. Measurement Model Analysis

4.3. Discriminant Validity Analysis

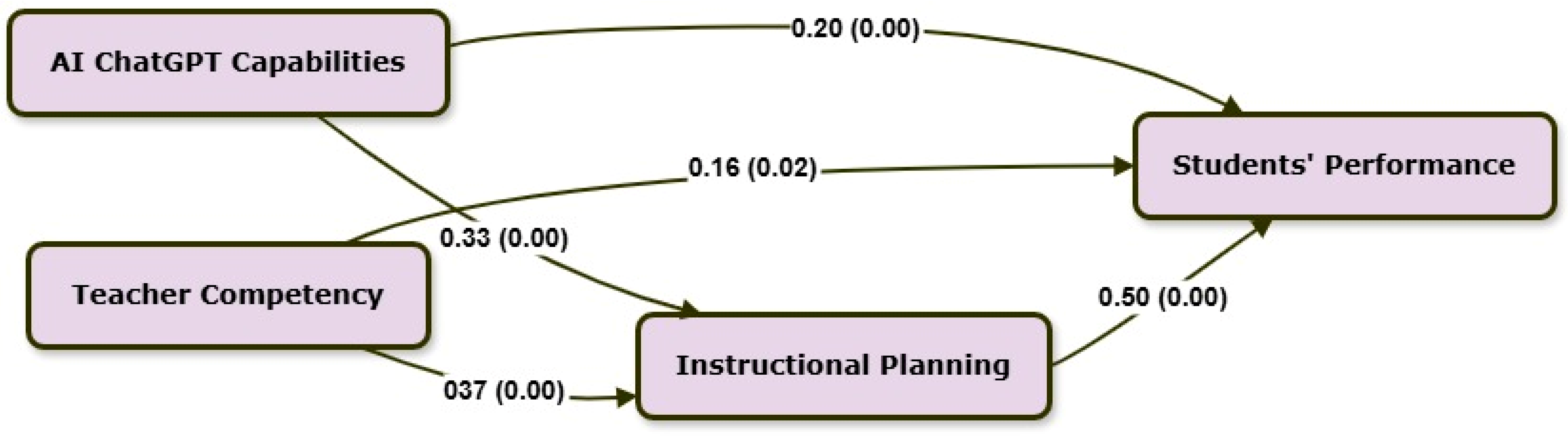

Effect Size (f²) and Coefficient of Determination (R²) Analysis

Effect size (f²) thresholds:

- Small effect: 0.02 ≤ f² < 0.15

- Moderate effect: 0.15 ≤ f² < 0.35

- Large effect: f² ≥ 0.35

Effect sizes of the structural paths:

| Path | f² | Effect |
|---|---|---|
| ACC → INP | 0.09 | Small |
| ACC → STP | 0.04 | Small |
| INP → STP | 0.33 | Moderate |
| TCO → INP | 0.11 | Small |
| TCO → STP | 0.03 | Small |

ACC = AI ChatGPT Capabilities; TCO = Teacher Competency; INP = Instructional Planning; STP = Student Performance.

Coefficient of determination (R²) thresholds:

- R² ≥ 0.75: Substantial

- 0.50 ≤ R² < 0.75: Moderate

- 0.25 ≤ R² < 0.50: Weak
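The labels above follow Cohen's f² cut-offs and the R² bands listed. As a cross-check, the short sketch below recomputes the labels from those cut-offs. It also includes the standard PLS-SEM definition of f² for one predictor, f² = (R²_included − R²_excluded) / (1 − R²_included). Only the resulting f² values are reported here, so the R² inputs in the usage line are hypothetical, and the function names are illustrative rather than part of any PLS-SEM package.

```python
"""Classify effect sizes (f²) and R² values against the cut-offs listed above."""


def f_squared(r2_included: float, r2_excluded: float) -> float:
    """f² for one predictor: the drop in R² when that predictor is removed,
    scaled by the variance the full model leaves unexplained."""
    if not 0.0 <= r2_included < 1.0:
        raise ValueError("r2_included must be in [0, 1)")
    return (r2_included - r2_excluded) / (1.0 - r2_included)


def classify_f2(f2: float) -> str:
    """Map f² to the small / moderate / large bands (0.02, 0.15, 0.35)."""
    if f2 >= 0.35:
        return "Large"
    if f2 >= 0.15:
        return "Moderate"
    if f2 >= 0.02:
        return "Small"
    return "Below small threshold"


def classify_r2(r2: float) -> str:
    """Map R² to the substantial / moderate / weak bands (0.75, 0.50, 0.25)."""
    if r2 >= 0.75:
        return "Substantial"
    if r2 >= 0.50:
        return "Moderate"
    if r2 >= 0.25:
        return "Weak"
    return "Below weak threshold"


# f² values as reported for the structural paths.
REPORTED_F2 = {
    "ACC -> INP": 0.09,
    "ACC -> STP": 0.04,
    "INP -> STP": 0.33,
    "TCO -> INP": 0.11,
    "TCO -> STP": 0.03,
}

if __name__ == "__main__":
    for path, value in REPORTED_F2.items():
        print(f"{path}: f2 = {value:.2f} ({classify_f2(value)})")
    # Hypothetical R² values for illustration only; not taken from the study.
    print(round(f_squared(0.60, 0.50), 2), classify_r2(0.60))
```

Keeping the cut-offs in one place makes it easy to confirm that every reported path falls in the stated band (for example, INP → STP at 0.33 sits just under the 0.35 boundary for a large effect).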

4.4. Structural Model Analysis

5. Discussion

5.1. Practical Implications

5.2. Theoretical Implications

6. Conclusions

Limitations and Future Research

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Data Collection Questionnaire

| S. No. | Item Statement | SD | D | N | A | SA |
|---|---|---|---|---|---|---|
| | AI ChatGPT Capabilities | | | | | |
| 1 | ChatGPT helps generate high-quality instructional content. (ACC1) | □ | □ | □ | □ | □ |
| 2 | ChatGPT provides relevant and accurate responses to teaching needs. (ACC2) | □ | □ | □ | □ | □ |
| 3 | ChatGPT enhances lesson planning and curriculum design. (ACC3) | □ | □ | □ | □ | □ |
| 4 | ChatGPT assists in providing personalized student feedback. (ACC4) | □ | □ | □ | □ | □ |
| 5 | ChatGPT improves efficiency in classroom management. (ACC5) | □ | □ | □ | □ | □ |
| | Teacher Competency | | | | | |
| 6 | I am confident in integrating AI tools like ChatGPT in teaching. (TCO1) | □ | □ | □ | □ | □ |
| 7 | I can effectively evaluate AI-generated content for instructional use. (TCO2) | □ | □ | □ | □ | □ |
| 8 | I have sufficient knowledge to incorporate ChatGPT into my teaching. (TCO3) | □ | □ | □ | □ | □ |
| 9 | I can guide students in using AI tools responsibly. (TCO4) | □ | □ | □ | □ | □ |
| 10 | I adapt my teaching strategies based on AI-generated insights. (TCO5) | □ | □ | □ | □ | □ |
| | Instructional Planning | | | | | |
| 11 | AI tools help me create structured and effective lesson plans. (INP1) | □ | □ | □ | □ | □ |
| 12 | I use ChatGPT to enhance my instructional strategies. (INP2) | □ | □ | □ | □ | □ |
| 13 | AI tools support differentiated instruction for diverse learners. (INP3) | □ | □ | □ | □ | □ |
| 14 | AI-driven insights improve my assessment strategies. (INP4) | □ | □ | □ | □ | □ |
| 15 | ChatGPT helps me align my teaching with curriculum goals. (INP5) | □ | □ | □ | □ | □ |
| 16 | AI tools enhance my ability to track student progress. (INP6) | □ | □ | □ | □ | □ |
| | Student Performance | | | | | |
| 17 | AI-driven tools improve student engagement in learning. (STP1) | □ | □ | □ | □ | □ |
| 18 | Students show a better understanding with AI-assisted learning. (STP2) | □ | □ | □ | □ | □ |
| 19 | ChatGPT helps students develop critical thinking skills. (STP3) | □ | □ | □ | □ | □ |
| 20 | AI-based instructional support enhances student motivation. (STP4) | □ | □ | □ | □ | □ |
| 21 | AI integration positively impacts students’ academic performance. (STP5) | □ | □ | □ | □ | □ |

SD = Strongly Disagree; D = Disagree; N = Neutral; A = Agree; SA = Strongly Agree.
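Multi-item constructs such as the four in this questionnaire are usually checked for internal consistency before the structural model is interpreted. The sketch below is an illustration under stated assumptions, not the authors' procedure: it assumes the five answer options are coded 1 (SD) to 5 (SA), averages each construct's items into an unweighted score, and computes Cronbach's alpha, a reliability statistic commonly reported alongside PLS-SEM measurement models. PLS-SEM itself weights indicators rather than averaging them, so the means here are only an approximation. The names `LIKERT_CODES`, `construct_means`, and `cronbach_alpha` are hypothetical.

```python
from statistics import fmean, variance

# Assumed coding of the five answer options (SD = 1 ... SA = 5).
LIKERT_CODES = {"SD": 1, "D": 2, "N": 3, "A": 4, "SA": 5}

# Item codes per construct, as listed in Appendix A (21 items in total).
CONSTRUCT_ITEMS = {
    "ACC": [f"ACC{i}" for i in range(1, 6)],
    "TCO": [f"TCO{i}" for i in range(1, 6)],
    "INP": [f"INP{i}" for i in range(1, 7)],
    "STP": [f"STP{i}" for i in range(1, 6)],
}


def construct_means(response: dict[str, str]) -> dict[str, float]:
    """Unweighted mean score per construct for one respondent.

    `response` maps item codes (e.g. "ACC1") to answers ("SD" ... "SA").
    """
    return {
        construct: fmean(LIKERT_CODES[response[item]] for item in items)
        for construct, items in CONSTRUCT_ITEMS.items()
    }


def cronbach_alpha(item_scores: list[list[float]]) -> float:
    """Cronbach's alpha for one construct.

    `item_scores[i][r]` is respondent r's coded answer to item i.
    Uses sample variances; raises ZeroDivisionError if total scores never vary.
    """
    k = len(item_scores)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    n = len(item_scores[0])
    if n < 2 or any(len(item) != n for item in item_scores):
        raise ValueError("every item needs the same number (>= 2) of responses")
    # Each respondent's total score across the construct's items.
    totals = [sum(item[r] for item in item_scores) for r in range(n)]
    sum_item_variances = sum(variance(item) for item in item_scores)
    return (k / (k - 1)) * (1 - sum_item_variances / variance(totals))


if __name__ == "__main__":
    # Hypothetical answers from four respondents to three items (not study data).
    demo_items = [[4, 5, 3, 4], [4, 4, 3, 5], [5, 5, 2, 4]]
    print(f"alpha = {cronbach_alpha(demo_items):.2f}")
```

A common rule of thumb treats alpha of about 0.70 or higher as acceptable internal consistency, though composite reliability is often reported alongside it in PLS-SEM work.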

References

- Syeed, M.M.M.; Shihavuddin, A.S.M.; Uddin, M.F.; Hasan, M.; Khan, R.H. Outcome Based Education (OBE): Defining the Process and Practice for Engineering Education. IEEE Access 2022, 10, 119170–119192.

- Li, M.; Rohayati, M.I. The Relationship between Learning Outcomes and Graduate Competences: The Chain-Mediating Roles of Project-Based Learning and Assessment Strategies. Sustainability 2024, 16, 6080.

- Harden, R.M. AMEE Guide No. 14: Outcome-Based Education: Part 1-An Introduction to Outcome-Based Education. Med. Teach. 1999, 21, 7–14.

- Alamri, H.; Lowell, V.; Watson, W.; Watson, S.L. Using Personalized Learning as an Instructional Approach to Motivate Learners in Online Higher Education: Learner Self-Determination and Intrinsic Motivation. J. Res. Technol. Educ. 2020, 52, 322–352.

- Harris, J.B.; Hofer, M.J. Technological Pedagogical Content Knowledge (TPACK) in Action: A Descriptive Study of Secondary Teachers’ Curriculum-Based, Technology-Related Instructional Planning. J. Res. Technol. Educ. 2011, 43, 211–229.

- Lim, C.P.; Chai, C.S. Rethinking Classroom-Oriented Instructional Development Models to Mediate Instructional Planning in Technology-Enhanced Learning Environments. Teach. Teach. Educ. 2008, 24, 2002–2013.

- Smith, S. An Analysis of Teachers’ Viewpoints from Public, Private, and Charter Schools on Effective Lesson Planning and Instruction. Ph.D. Thesis, Lindenwood University, St. Charles, MO, USA, 2024.

- Farhang, A.P.Q.; Hashemi, A.; Ghorianfar, A. Lesson Plan and Its Importance in Teaching Process. Int. J. Curr. Sci. Res. Rev. 2023, 6, 5901–5913.

- Bhat, B.A.; Bhat, G.J. Formative and Summative Evaluation Techniques for Improvement of Learning Process. Eur. J. Bus. Soc. Sci. 2019, 7, 776–785.

- Rodrigues, F.; Oliveira, P. A System for Formative Assessment and Monitoring of Students’ Progress. Comput. Educ. 2014, 76, 30–41.

- Looney, J.W. Integrating Formative and Summative Assessment: Progress Toward a Seamless System? OECD: Paris, France, 2011.

- Chisunum, J.I.; Nwadiokwu, C. Enhancing Student Engagement through Practical Production and Utilization of Instructional Materials in an Educational Technology Class: A Multifaceted Approach. NIU J. Educ. Res. 2024, 10, 81–89.

- Jayaraman, J.; Aane, J. The Impact of Digital Textbooks on Student Engagement in Higher Education: Highlighting the Significance of Interactive Learning Strategies Facilitated by Digital Media. In Implementing Interactive Learning Strategies in Higher Education; IGI Global: Hershey, PA, USA, 2024; pp. 301–328.

- Orlich, D.C.; Harder, R.J.; Callahan, R.C.; Trevisan, M.S.; Brown, A.H. Teaching Strategies: A Guide to Effective Instruction; Wadsworth Cengage Learning: Belmont, CA, USA, 2010.

- De Vera, J.L.; Manalo, M.; Ermeno, R.; Delos Reyes, C.; Elores, Y.D. Teachers’ Instructional Planning and Design for Learners in Difficult Circumstances. J. Pendidik. Progresif 2022, 12, 17–32.

- Gupta, P.; Kulkarni, T.; Barot, V.; Toksha, B. Applications of ICT: Pathway to Outcome-Based Education in Engineering and Technology Curriculum. In Technology and Tools in Engineering Education; CRC Press: Boca Raton, FL, USA, 2021; pp. 109–142.

- Shaheen, S. Theoretical Perspectives and Current Challenges of OBE Framework. Int. J. Eng. Educ. 2019, 1, 122–129.

- Spear-Swerling, L.; Zibulsky, J. Making Time for Literacy: Teacher Knowledge and Time Allocation in Instructional Planning. Read. Writ. 2014, 27, 1353–1378.

- Angeli, C.; Valanides, N. Technology Mapping: An Approach for Developing Technological Pedagogical Content Knowledge. J. Educ. Comput. Res. 2013, 48, 199–221.

- Chai, C.S.; Koh, J.H.L.; Teo, Y.H. Enhancing and Modeling Teachers’ Design Beliefs and Efficacy of Technological Pedagogical Content Knowledge for 21st Century Quality Learning. J. Educ. Comput. Res. 2019, 57, 360–384.

- Dahri, N.A.; Vighio, M.S.; Alismaiel, O.A.; Al-Rahmi, W.M. Assessing the Impact of Mobile-Based Training on Teachers’ Achievement and Usage Attitude. Int. J. Interact. Mob. Technol. 2022, 16, 107–129.

- Dahri, N.A.; Yahaya, N.; Al-Rahmi, W.M.; Noman, H.A.; Alblehai, F.; Kamin, Y.B.; Soomro, R.B.; Shutaleva, A.; Al-Adwan, A.S. Investigating the Motivating Factors That Influence the Adoption of Blended Learning for Teachers’ Professional Development. Heliyon 2024, 10, e34900.

- Dahri, N.A.; Yahaya, N.; Al-Rahmi, W.M.; Almogren, A.S.; Vighio, M.S. Investigating Factors Affecting Teachers’ Training through Mobile Learning: Task Technology Fit Perspective. Educ. Inf. Technol. 2024, 29, 14553–14589.

- Pirzada, G.; Gull, F. Impact of Outcome-Based Education on Teaching Performance at Higher Education Level in Pakistan. J. Res. Humanit. Soc. Sci. 2019, 2, 95–110.

- Mishra, P.; Koehler, M.J. Technological Pedagogical Content Knowledge: A Framework for Teacher Knowledge. Teach. Coll. Rec. 2006, 108, 1017–1054.

- Ren, X.; Wu, M.L. Examining Teaching Competencies and Challenges While Integrating Artificial Intelligence in Higher Education. TechTrends 2025, 69, 519–538.

- Asim, H.M.; Vaz, A.; Ahmed, A.; Sadiq, S. A Review on Outcome Based Education and Factors That Impact Student Learning Outcomes in Tertiary Education System. Int. Educ. Stud. 2021, 14, 1.

- Mouza, C. Promoting Urban Teachers’ Understanding of Technology, Content, and Pedagogy in the Context of Case Development. J. Res. Technol. Educ. 2011, 44, 1–29.

- Utari, V.T.; Maryani, I.; Hasanah, E.; Suyatno, S.; Mardati, A.; Bastian, N.; Karimi, A.; Reotutor, M.A.C. Exploring the Intersection of TPACK and Professional Competence: A Study on Differentiated Instruction Development within Indonesia’s Merdeka Curriculum. Indones. J. Learn. Adv. Educ. 2025, 7, 136–153.

- Dahri, N.A.; Yahaya, N.; Al-Rahmi, W.M.; Vighio, M.S.; Alblehai, F.; Soomro, R.B.; Shutaleva, A. Investigating AI-Based Academic Support Acceptance and Its Impact on Students’ Performance in Malaysian and Pakistani Higher Education Institutions. Educ. Inf. Technol. 2024, 29, 18695–18744.

- Holmes, W.; Miao, F. Guidance for Generative AI in Education and Research; UNESCO Publishing: Paris, France, 2023; ISBN 9231006126.

- Dahri, N.A.; Yahaya, N.; Al-Rahmi, W.M. Exploring the Influence of ChatGPT on Student Academic Success and Career Readiness. Educ. Inf. Technol. 2024, 30, 8877–8921.

- Almuhanna, M.A. Teachers’ Perspectives of Integrating AI-Powered Technologies in K-12 Education for Creating Customized Learning Materials and Resources. Educ. Inf. Technol. 2024, 30, 10343–10371.

- Vetrivel, S.C.; Vidhyapriya, P.; Arun, V.P. The Role of AI in Transforming Assessment Practices in Education. In AI Applications and Strategies in Teacher Education; IGI Global: Hershey, PA, USA, 2025; pp. 43–70.

- Strielkowski, W.; Grebennikova, V.; Lisovskiy, A.; Rakhimova, G.; Vasileva, T. AI-driven Adaptive Learning for Sustainable Educational Transformation. Sustain. Dev. 2024, 33, 1921–1947.

- Fitria, T.N. Artificial Intelligence (AI) Technology in OpenAI ChatGPT Application: A Review of ChatGPT in Writing English Essay. ELT Forum J. Engl. Lang. Teach. 2023, 12, 44–58.

- Khlaif, Z.N.; Mousa, A.; Hattab, M.K.; Itmazi, J.; Hassan, A.A.; Sanmugam, M.; Ayyoub, A. The Potential and Concerns of Using AI in Scientific Research: ChatGPT Performance Evaluation. JMIR Med. Educ. 2023, 9, e47049.

- Kooli, C.; Yusuf, N. Transforming Educational Assessment: Insights into the Use of ChatGPT and Large Language Models in Grading. Int. J. Hum.-Comput. Interact. 2024, 41, 3388–3399.

- van den Berg, G.; du Plessis, E. ChatGPT and Generative AI: Possibilities for Its Contribution to Lesson Planning, Critical Thinking and Openness in Teacher Education. Educ. Sci. 2023, 13, 998.

- Karataş, F.; Ataç, B.A. When TPACK Meets Artificial Intelligence: Analyzing TPACK and AI-TPACK Components through Structural Equation Modelling. Educ. Inf. Technol. 2024, 30, 8979–9004.

- Lo, C.K. What Is the Impact of ChatGPT on Education? A Rapid Review of the Literature. Educ. Sci. 2023, 13, 410.

- Ning, Y.; Zhang, C.; Xu, B.; Zhou, Y.; Wijaya, T.T. Teachers’ AI-TPACK: Exploring the Relationship between Knowledge Elements. Sustainability 2024, 16, 978.

- Harris, J.; Mishra, P.; Koehler, M. Teachers’ Technological Pedagogical Content Knowledge and Learning Activity Types: Curriculum-Based Technology Integration Reframed. J. Res. Technol. Educ. 2009, 41, 393–416.

- Biggs, J.; Tang, C. Constructive Alignment: An Outcomes-Based Approach to Teaching Anatomy. In Teaching Anatomy: A Practical Guide; Springer: Berlin/Heidelberg, Germany, 2014; pp. 31–38.

- Shulman, L.S. Those Who Understand: Knowledge Growth in Teaching. Educ. Res. 1986, 15, 4–14.

- Pierson, M.E. Technology Integration Practice as a Function of Pedagogical Expertise. J. Res. Comput. Educ. 2001, 33, 413–430.

- Hava, K.; Babayiğit, Ö. Exploring the Relationship between Teachers’ Competencies in AI-TPACK and Digital Proficiency. Educ. Inf. Technol. 2024, 30, 3491–3508.

- Henri, M.; Johnson, M.D.; Nepal, B. A Review of Competency-based Learning: Tools, Assessments, and Recommendations. J. Eng. Educ. 2017, 106, 607–638.

- Kennedy, M.; Birch, P. Reflecting on Outcome-Based Education for Human Services Programs in Higher Education: A Policing Degree Case Study. J. Criminol. Res. Policy Pract. 2020, 6, 111–122.

- Hussain, W.; Spady, W.G.; Khan, S.Z.; Khawaja, B.A.; Naqash, T.; Conner, L. Impact Evaluations of Engineering Programs Using ABET Student Outcomes. IEEE Access 2021, 9, 46166–46190.

- Morcke, A.M.; Dornan, T.; Eika, B. Outcome (Competency) Based Education: An Exploration of Its Origins, Theoretical Basis, and Empirical Evidence. Adv. Health Sci. Educ. 2013, 18, 851–863.

- Tan, K.; Chong, M.C.; Subramaniam, P.; Wong, L.P. The Effectiveness of Outcome Based Education on the Competencies of Nursing Students: A Systematic Review. Nurse Educ. Today 2018, 64, 180–189.

- Chen, X.; Xie, H.; Zou, D.; Hwang, G.-J. Application and Theory Gaps during the Rise of Artificial Intelligence in Education. Comput. Educ. Artif. Intell. 2020, 1, 100002.

- Jung, D.; Suh, S. Enhancing Soft Skills through Generative AI in Sustainable Fashion Textile Design Education. Sustainability 2024, 16, 6973.

- Naznin, K.; Al Mahmud, A.; Nguyen, M.T.; Chua, C. ChatGPT Integration in Higher Education for Personalized Learning, Academic Writing, and Coding Tasks: A Systematic Review. Computers 2025, 14, 53.

- Wang, H.; Dang, A.; Wu, Z.; Mac, S. Generative AI in Higher Education: Seeing ChatGPT Through Universities’ Policies, Resources, and Guidelines. Comput. Educ. Artif. Intell. 2024, 7, 100326.

- Kasneci, E.; Seßler, K.; Küchemann, S.; Bannert, M.; Dementieva, D.; Fischer, F.; Gasser, U.; Groh, G.; Günnemann, S.; Hüllermeier, E. ChatGPT for Good? On Opportunities and Challenges of Large Language Models for Education. Learn. Individ. Differ. 2023, 103, 102274.

- Hussain, F.; Anwar, M.A. Towards Informed Policy Decisions: Assessing Student Perceptions and Intentions to Use ChatGPT for Academic Performance in Higher Education. J. Asian Public Policy 2024, 1–28.

- Celik, I. Towards Intelligent-TPACK: An Empirical Study on Teachers’ Professional Knowledge to Ethically Integrate Artificial Intelligence (AI)-Based Tools into Education. Comput. Hum. Behav. 2023, 138, 107468.

- Almaiah, M.A.; Alfaisal, R.; Salloum, S.A.; Al-Otaibi, S.; Shishakly, R.; Lutfi, A.; Alrawad, M.; Mulhem, A.A.; Awad, A.B.; Al-Maroof, R.S. Integrating Teachers’ TPACK Levels and Students’ Learning Motivation, Technology Innovativeness, and Optimism in an IoT Acceptance Model. Electronics 2022, 11, 3197.

- Karaman, M.R. Are Lesson Plans Created by ChatGPT More Effective? An Experimental Study. Int. J. Technol. Educ. 2024, 7, 107–127.

- Tungpalan, K.A.; Antalan, M.F. Teachers’ Perception and Experience on Outcomes-Based Education Implementation in Isabela State University. Int. J. Eval. Res. Educ. 2021, 10, 1213–1220.

- Donker, A.S.; De Boer, H.; Kostons, D.; Van Ewijk, C.C.D.; van der Werf, M.P.C. Effectiveness of Learning Strategy Instruction on Academic Performance: A Meta-Analysis. Educ. Res. Rev. 2014, 11, 1–26.

- Egara, F.O.; Mosimege, M. Exploring the Integration of Artificial Intelligence-Based ChatGPT into Mathematics Instruction: Perceptions, Challenges, and Implications for Educators. Educ. Sci. 2024, 14, 742.

- Al-Mamary, Y.H.; Alfalah, A.A.; Shamsuddin, A.; Abubakar, A.A. Artificial Intelligence Powering Education: ChatGPT’s Impact on Students’ Academic Performance through the Lens of Technology-to-Performance Chain Theory. J. Appl. Res. High. Educ. 2024; ahead-of-print.

- Zamir, M.Z.; Abid, M.I.; Fazal, M.R.; Qazi, M.A.A.R.; Kamran, M. Switching to Outcome-Based Education (OBE) System, a Paradigm Shift in Engineering Education. IEEE Trans. Educ. 2022, 65, 695–702.

- Gopal, Y. Exploring Academic Perspectives: Sentiments and Discourse on ChatGPT Adoption in Higher Education. Master’s Thesis, Universität Koblenz, Koblenz, Germany, 2024.

- Miah, A.S.M.; Tusher, M.M.R.; Hossain, M.M.; Hossain, M.M.; Rahim, M.A.; Hamid, M.E.; Islam, M.S.; Shin, J. ChatGPT in Research and Education: Exploring Benefits and Threats. arXiv 2024, arXiv:2411.02816.

- Lee, G.-G.; Zhai, X. Using ChatGPT for Science Learning: A Study on Pre-Service Teachers’ Lesson Planning. IEEE Trans. Learn. Technol. 2024, 17, 1643–1660.

- Zou, D.; Xie, H.; Kohnke, L. Navigating the Future: Establishing a Framework for Educators’ Pedagogic Artificial Intelligence Competence. Eur. J. Educ. 2025, 60, e70117.

- Chaudhry, I.S.; Sarwary, S.A.M.; El Refae, G.A.; Chabchoub, H. Time to Revisit Existing Student’s Performance Evaluation Approach in Higher Education Sector in a New Era of ChatGPT—A Case Study. Cogent Educ. 2023, 10, 2210461.

- Lo, C.K.; Hew, K.F.; Jong, M.S. The Influence of ChatGPT on Student Engagement: A Systematic Review and Future Research Agenda. Comput. Educ. 2024, 219, 105100.

- Ye, L.; Ismail, H.H.; Aziz, A.A. Innovative Strategies for TPACK Development in Pre-Service English Teacher Education in the 21st Century: A Systematic Review. Forum Linguist. Stud. 2024, 6, 274–294.

- Rodafinos, A.; Barkoukis, V.; Tzafilkou, K.; Ourda, D.; Economides, A.A.; Perifanou, M. Exploring the Impact of Digital Competence and Technology Acceptance on Academic Performance in Physical Education and Sports Science Students. J. Inf. Technol. Educ. Res. 2024, 23, 19.

- Wang, J.; Fan, W. The Effect of ChatGPT on Students’ Learning Performance, Learning Perception, and Higher-Order Thinking: Insights from a Meta-Analysis. Humanit. Soc. Sci. Commun. 2025, 12, 621.

- ElSayary, A. An Investigation of Teachers’ Perceptions of Using ChatGPT as a Supporting Tool for Teaching and Learning in the Digital Era. J. Comput. Assist. Learn. 2024, 40, 931–945.

- Caratiquit, K.D.; Caratiquit, L.J.C. ChatGPT as an Academic Support Tool on the Academic Performance among Students: The Mediating Role of Learning Motivation. J. Soc. Humanit. Educ. 2023, 4, 21–33.

- Pantić, N.; Wubbels, T. Teacher Competencies as a Basis for Teacher Education–Views of Serbian Teachers and Teacher Educators. Teach. Teach. Educ. 2010, 26, 694–703.

- Podungge, R.; Rahayu, M.; Setiawan, M.; Sudiro, A. Teacher Competence and Student Academic Achievement. In Proceedings of the 23rd Asian Forum of Business Education (AFBE 2019), Bali, Indonesia, 12–13 December 2019; Atlantis Press: Paris, France, 2020; pp. 69–74.

- Rapanta, C.; Botturi, L.; Goodyear, P.; Guàrdia, L.; Koole, M. Online University Teaching during and after the Covid-19 Crisis: Refocusing Teacher Presence and Learning Activity. Postdigital Sci. Educ. 2020, 2, 923–945.

- Molotsi, A.; van Wyk, M. Exploring Teachers’ Use of Technological Pedagogical Knowledge in Teaching Subjects in Rural Areas. J. Inf. Technol. Educ. Res. 2024, 23, 30.

- Macayan, J.V. Implementing Outcome-Based Education (OBE) Framework: Implications for Assessment of Students’ Performance. Educ. Meas. Eval. Rev. 2017, 8, 1–10.

- Fuchs, L.S.; Fuchs, D.; Stecker, P.M. Effects of Curriculum-Based Measurement on Teachers’ Instructional Planning. J. Learn. Disabil. 1989, 22, 51–59.

- Bennett, S.; Dawson, P.; Bearman, M.; Molloy, E.; Boud, D. How Technology Shapes Assessment Design: Findings from a Study of University Teachers. Br. J. Educ. Technol. 2017, 48, 672–682.

- Hora, M.T.; Holden, J. Exploring the Role of Instructional Technology in Course Planning and Classroom Teaching: Implications for Pedagogical Reform. J. Comput. High. Educ. 2013, 25, 68–92.

- Tseng, J.-J.; Chai, C.S.; Tan, L.; Park, M. A Critical Review of Research on Technological Pedagogical and Content Knowledge (TPACK) in Language Teaching. Comput. Assist. Lang. Learn. 2022, 35, 948–971.

- Kabakci Yurdakul, I.; Çoklar, A.N. Modeling Preservice Teachers’ TPACK Competencies Based on ICT Usage. J. Comput. Assist. Learn. 2014, 30, 363–376.

- Agustini, K.; Santyasa, I.W.; Ratminingsih, N.M. Analysis of Competence on “TPACK”: 21st Century Teacher Professional Development. J. Phys. Conf. Ser. 2019, 1387, 012035.

- Nbina, J.B. Teachers’ Competence and Students’ Academic Performance in Senior Secondary Schools Chemistry: Is There Any Relationship? Glob. J. Educ. Res. 2012, 11, 15–18.

- Levy-Feldman, I. The Role of Assessment in Improving Education and Promoting Educational Equity. Educ. Sci. 2025, 15, 224.

- Tomaszewski, W.; Xiang, N.; Huang, Y.; Western, M.; McCourt, B.; McCarthy, I. The Impact of Effective Teaching Practices on Academic Achievement When Mediated by Student Engagement: Evidence from Australian High Schools. Educ. Sci. 2022, 12, 358.

- Ekmekci, A.; Serrano, D.M. The Impact of Teacher Quality on Student Motivation, Achievement, and Persistence in Science and Mathematics. Educ. Sci. 2022, 12, 649.

- Woodcock, S.; Gibbs, K.; Hitches, E.; Regan, C. Investigating Teachers’ Beliefs in Inclusive Education and Their Levels of Teacher Self-Efficacy: Are Teachers Constrained in Their Capacity to Implement Inclusive Teaching Practices? Educ. Sci. 2023, 13, 280.

- Dean, C.B.; Hubbell, E.R. Classroom Instruction That Works: Research-Based Strategies for Increasing Student Achievement; ASCD: Arlington, VA, USA, 2012; ISBN 1416613625.

- Reeves, A.R. Where Great Teaching Begins: Planning for Student Thinking and Learning; ASCD: Arlington, VA, USA, 2011; ISBN 1416614265.

- Dunlosky, J.; Rawson, K.A.; Marsh, E.J.; Nathan, M.J.; Willingham, D.T. Improving Students’ Learning with Effective Learning Techniques: Promising Directions from Cognitive and Educational Psychology. Psychol. Sci. Public Interest 2013, 14, 4–58.

- Reigeluth, C.M.; Aslan, S.; Chen, Z.; Dutta, P.; Huh, Y.; Lee, D.; Lin, C.-Y.; Lu, Y.-H.; Min, M.; Tan, V. Personalized Integrated Educational System: Technology Functions for the Learner-Centered Paradigm of Education. J. Educ. Comput. Res. 2015, 53, 459–496.

- Wei, W. Using Summative and Formative Assessments to Evaluate EFL Teachers’ Teaching Performance. Assess. Eval. High. Educ. 2015, 40, 611–623.

- Noushad, P.P. Aligning Learning Outcomes with Learning Process. In Designing and Implementing the Outcome-Based Education Framework; Springer: Berlin/Heidelberg, Germany, 2024; pp. 139–202; ISBN 9819604400.

- Macklem, G.L. Boredom in the Classroom: Addressing Student Motivation, Self-Regulation, and Engagement in Learning; Springer: Berlin/Heidelberg, Germany, 2015; Volume 1, ISBN 3319131206.

- Ritter, O.N. Integration of Educational Technology for the Purposes of Differentiated Instruction in Secondary STEM Education. Ph.D. Thesis, University of Tennessee, Knoxville, TN, USA, 2018.

- Hair, J.F., Jr.; Hult, G.T.M.; Ringle, C.M.; Sarstedt, M. A Primer on Partial Least Squares Structural Equation Modeling (PLS-SEM); Sage Publications: Thousand Oaks, CA, USA, 2021; ISBN 1544396333.

- Etikan, I.; Musa, S.A.; Alkassim, R.S. Comparison of Convenience Sampling and Purposive Sampling. Am. J. Theor. Appl. Stat. 2016, 5, 1.

- Creswell, J.W.; Creswell, J.D. Research Design: Qualitative, Quantitative, and Mixed Methods Approaches; Sage Publications: Thousand Oaks, CA, USA, 2017; ISBN 1506386717.

- Taherdoost, H. What Is the Best Response Scale for Survey and Questionnaire Design; Review of Different Lengths of Rating Scale/Attitude Scale/Likert Scale. Int. J. Acad. Res. Manag. (IJARM) 2019, 8, 1–10.

- Hair, J.F.; Sarstedt, M.; Hopkins, L.; Kuppelwieser, V.G. Partial Least Squares Structural Equation Modeling (PLS-SEM): An Emerging Tool in Business Research. Eur. Bus. Rev. 2014, 26, 106–121.

- Kline, R.B. Principles and Practice of Structural Equation Modeling; Guilford Publications: New York, NY, USA, 2015; ISBN 1462523358.

- Liu, Z.; Vobolevich, A.; Oparin, A. The Influence of AI ChatGPT on Improving Teachers’ Creative Thinking. Int. J. Learn. Teach. Educ. Res. 2023, 22, 124–139.

- Dahri, N.A.; Yahaya, N.; Al-Rahmi, W.M.; Aldraiweesh, A.; Alturki, U.; Almutairy, S.; Shutaleva, A.; Soomro, R.B. Extended TAM Based Acceptance of AI-Powered ChatGPT for Supporting Metacognitive Self-Regulated Learning in Education: A Mixed-Methods Study. Heliyon 2024, 10, e29317.

- Stronge, J.H.; Ward, T.J.; Grant, L.W. What Makes Good Teachers Good? A Cross-Case Analysis of the Connection between Teacher Effectiveness and Student Achievement. J. Teach. Educ. 2011, 62, 339–355.

- Nunnally, J.C.; Bernstein, I.H. Psychometric Theory, 3rd ed.; McGraw-Hill: New York, NY, USA, 1994.

- Bryman, A. Social Research Methods; Oxford University Press: Oxford, UK, 2016; ISBN 0199689458.

- Hair, J.F.; Risher, J.J.; Sarstedt, M.; Ringle, C.M. When to Use and How to Report the Results of PLS-SEM. Eur. Bus. Rev. 2019, 31, 2–24.

- Sarstedt, M.; Ringle, C.M.; Hair, J.F. Partial Least Squares Structural Equation Modeling. In Handbook of Market Research; Springer: Berlin/Heidelberg, Germany, 2021; pp. 587–632.

- Fornell, C.; Larcker, D.F. Structural Equation Models with Unobservable Variables and Measurement Error: Algebra and Statistics. J. Mark. Res. 1981, 18, 382–388.

- Henseler, J.; Ringle, C.M.; Sarstedt, M. A New Criterion for Assessing Discriminant Validity in Variance-Based Structural Equation Modeling. J. Acad. Mark. Sci. 2015, 43, 115–135.

- Chin, W.W. The Partial Least Squares Approach to Structural Equation Modeling. In Modern Methods for Business Research; Marcoulides, G.A., Ed.; Lawrence Erlbaum Associates: Mahwah, NJ, USA, 1998; pp. 295–336.

- Hair, J.F., Jr.; Hult, G.T.M.; Ringle, C.M.; Sarstedt, M.; Danks, N.P.; Ray, S. Partial Least Squares Structural Equation Modeling (PLS-SEM) Using R: A Workbook; Springer Nature: Berlin/Heidelberg, Germany, 2021; ISBN 3030805190.

- Fornell, C.; Larcker, D.F. Evaluating Structural Equation Models with Unobservable Variables and Measurement Error. J. Mark. Res. 1981, 18, 39–50.

- Cohen, J. Statistical Power Analysis for the Behavioral Sciences; Academic Press: Cambridge, MA, USA, 1988; ISBN 1483276481.

- Duong, C.D.; Nguyen, T.H.; Ngo, T.V.N.; Dao, V.T.; Do, N.D.; Pham, T.V. Exploring Higher Education Students’ Continuance Usage Intention of ChatGPT: Amalgamation of the Information System Success Model and the Stimulus-Organism-Response Paradigm. Int. J. Inf. Learn. Technol. 2024, 41, 556–584.

- Parker, L.; Carter, C.W.; Karakas, A.; Loper, A.J.; Sokkar, A. Artificial Intelligence in Undergraduate Assignments: An Exploration of the Effectiveness and Ethics of ChatGPT in Academic Work. In ChatGPT and Global Higher Education: Using Artificial Intelligence in Teaching and Learning; STAR Scholars Press: Baltimore, MD, USA, 2024.

- Hooda, M.; Rana, C.; Dahiya, O.; Rizwan, A.; Hossain, M.S. Artificial Intelligence for Assessment and Feedback to Enhance Student Success in Higher Education. Math. Probl. Eng. 2022, 2022, 5215722.

- Mariani, M.M.; Machado, I.; Magrelli, V.; Dwivedi, Y.K. Artificial Intelligence in Innovation Research: A Systematic Review, Conceptual Framework, and Future Research Directions. Technovation 2023, 122, 102623.

- Tenedero, E.Q.; Pacadaljen, L.M. Learning Experiences in the Emerging Outcomes-Based Education (OBE) Curriculum of Higher Education Institutions (HEI’S) on the Scope of Hammond’s Evaluation Cube. Psychol. Educ. 2021, 9, 2.

- Chiu, T.K.F.; Moorhouse, B.L.; Chai, C.S.; Ismailov, M. Teacher Support and Student Motivation to Learn with Artificial Intelligence (AI) Based Chatbot. Interact. Learn. Environ. 2024, 32, 3240–3256.

- Kim, J.; Lee, H.; Cho, Y.H. Learning Design to Support Student-AI Collaboration: Perspectives of Leading Teachers for AI in Education. Educ. Inf. Technol. 2022, 27, 6069–6104.

- Baig, M.I.; Yadegaridehkordi, E. ChatGPT in the Higher Education: A Systematic Literature Review and Research Challenges. Int. J. Educ. Res. 2024, 127, 102411.

- Holmes, W.; Tuomi, I. State of the Art and Practice in AI in Education. Eur. J. Educ. 2022, 57, 542–570.

- Fabiyi, S.D. What Can ChatGPT Not Do in Education? Evaluating Its Effectiveness in Assessing Educational Learning Outcomes. Innov. Educ. Teach. Int. 2025, 62, 484–498.

- Rani, S.; Kaur, G.; Dutta, S. Educational AI Tools: A New Revolution in Outcome-Based Education. In Explainable AI for Education: Recent Trends and Challenges; Springer: Berlin/Heidelberg, Germany, 2024; pp. 43–60.

- Seo, K.; Yoo, M.; Dodson, S.; Jin, S.-H. Augmented Teachers: K–12 Teachers’ Needs for Artificial Intelligence’s Complementary Role in Personalized Learning. J. Res. Technol. Educ. 2024, 1–18.

- Al-kfairy, M. Factors Impacting the Adoption and Acceptance of ChatGPT in Educational Settings: A Narrative Review of Empirical Studies. Appl. Syst. Innov. 2024, 7, 110.

- Katsamakas, E.; Pavlov, O.V.; Saklad, R. Artificial Intelligence and the Transformation of Higher Education Institutions: A Systems Approach. Sustainability 2024, 16, 6118.

| Category | Sub-Category | Frequency (n = 320) | Percentage (%) |
|---|---|---|---|
| University | QUEST | 192 | 60% |
| | SBBU | 96 | 30% |
| | SALU | 32 | 10% |
| Gender | Male | 218 | 68% |
| | Female | 102 | 32% |
| Teacher Designation | Lecturer | 160 | 50% |
| | Assistant Professor | 96 | 30% |
| | Associate Professor | 48 | 15% |
| | Professor | 16 | 5% |
| Educational Qualification | Master’s Degree | 128 | 40% |
| | MPhil/MS | 112 | 35% |
| | PhD | 80 | 25% |
| Teaching Experience | 1–5 years | 144 | 45% |
| | 6–10 years | 112 | 35% |
| | 11–15 years | 48 | 15% |
| | Above 15 years | 16 | 5% |
| Survey Questions | Do you believe OBE enhances student learning and academic success? | Yes: 280 | 88% |
| | Have AI tools like ChatGPT improved your instructional planning? | Yes: 240 | 75% |
| | Do you feel confident in using AI-driven tools for teaching? | Yes: 256 | 80% |
| | Does OBE help in aligning course objectives with student performance? | Yes: 272 | 85% |
| | Do you think AI-powered feedback mechanisms enhance student engagement? | Yes: 248 | 78% |
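
The frequencies and percentages above can be cross-checked with simple arithmetic. The following is a minimal sketch, assuming the percentages are each frequency divided by n = 320 and rounded half-up to a whole number (so 87.5% is shown as 88%); the function names are illustrative and not part of the study's analysis.

```python
import math

N = 320  # total respondents, as stated in the table header

# Frequencies copied from the table above, grouped by category.
groups = {
    "University": {"QUEST": 192, "SBBU": 96, "SALU": 32},
    "Gender": {"Male": 218, "Female": 102},
    "Teacher Designation": {"Lecturer": 160, "Assistant Professor": 96,
                            "Associate Professor": 48, "Professor": 16},
    "Educational Qualification": {"Master's Degree": 128, "MPhil/MS": 112, "PhD": 80},
    "Teaching Experience": {"1-5 years": 144, "6-10 years": 112,
                            "11-15 years": 48, "Above 15 years": 16},
}


def percent_half_up(count: int, n: int = N) -> int:
    """Percentage of n, rounded half-up to a whole number (87.5 -> 88)."""
    return math.floor(100 * count / n + 0.5)


def check_groups(groups: dict, n: int = N) -> dict:
    """Assert every category sums to n and return whole-number percentages."""
    result = {}
    for category, counts in groups.items():
        assert sum(counts.values()) == n, f"{category} does not sum to {n}"
        result[category] = {k: percent_half_up(v, n) for k, v in counts.items()}
    return result


table = check_groups(groups)
print(table["Gender"])  # {'Male': 68, 'Female': 32}

# Survey items report only the "Yes" count.
survey_yes = (280, 240, 256, 272, 248)
print([percent_half_up(y) for y in survey_yes])  # [88, 75, 80, 85, 78]
```

Half-up rounding is used explicitly because Python's built-in `round` rounds halves to the nearest even number, which would give different results for other .5 cases.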

| Construct | Items | Factor Loading | VIF | Cronbach’s Alpha | CR | AVE |
|---|---|---|---|---|---|---|
| AI ChatGPT Capabilities (ACC) | ACC01 | 0.79 | 1.760 | 0.83 | 0.88 | 0.60 |
| | ACC02 | 0.82 | 2.020 | | | |
| | ACC03 | 0.82 | 2.090 | | | |
| | ACC04 | 0.76 | 1.640 | | | |
| | ACC05 | 0.68 | 1.380 | | | |
| Instructional Planning (INP) | INP01 | 0.69 | 1.480 | 0.88 | 0.91 | 0.64 |
| | INP02 | 0.84 | 2.530 | | | |
| | INP03 | 0.84 | 2.600 | | | |
| | INP04 | 0.83 | 2.290 | | | |
| | INP05 | 0.81 | 2.350 | | | |
| | INP06 | 0.76 | 1.950 | | | |
| Students’ Performance (STP) | STP01 | 0.79 | 1.780 | 0.87 | 0.90 | 0.65 |
| | STP02 | 0.78 | 1.910 | | | |
| | STP03 | 0.86 | 2.480 | | | |
| | STP04 | 0.82 | 2.120 | | | |
| | STP05 | 0.77 | 1.790 | | | |
| Teacher Competency (TCO) | TCO01 | 0.74 | 1.630 | 0.86 | 0.90 | 0.64 |
| | TCO02 | 0.85 | 2.250 | | | |
| | TCO03 | 0.82 | 1.990 | | | |
| | TCO04 | 0.78 | 1.740 | | | |
| | TCO05 | 0.79 | 1.940 | | | |
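
Composite reliability (CR) and average variance extracted (AVE) follow directly from the standardized factor loadings, using the Fornell and Larcker formulas: AVE is the mean squared loading, and CR is the squared sum of loadings divided by that quantity plus the summed error variances (1 − loading²). The sketch below recomputes both from the loadings in the table; it is an illustrative check, not the software routine the authors used, and the function names are hypothetical.

```python
def ave(loadings):
    """Average variance extracted: the mean of the squared standardized loadings."""
    return sum(l ** 2 for l in loadings) / len(loadings)


def composite_reliability(loadings):
    """Composite reliability (rho_c) from standardized loadings.

    CR = (sum l)^2 / ((sum l)^2 + sum(1 - l^2)), where 1 - l^2 is taken
    as each item's error variance.
    """
    s = sum(loadings)
    error = sum(1 - l ** 2 for l in loadings)
    return s ** 2 / (s ** 2 + error)


# Factor loadings copied from the measurement model table.
loadings = {
    "ACC": [0.79, 0.82, 0.82, 0.76, 0.68],
    "INP": [0.69, 0.84, 0.84, 0.83, 0.81, 0.76],
    "STP": [0.79, 0.78, 0.86, 0.82, 0.77],
    "TCO": [0.74, 0.85, 0.82, 0.78, 0.79],
}

for name, ls in loadings.items():
    # Commonly used cutoffs: CR >= 0.70 and AVE >= 0.50.
    print(f"{name}: CR = {composite_reliability(ls):.2f}, AVE = {ave(ls):.2f}")
```

Because the loadings in the table are rounded to two decimals, the recomputed values agree with the reported CR and AVE to within about 0.01 rather than exactly.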

| HTMT | ACC | INP | STP | TCO |
|---|---|---|---|---|
| ACC | | | | |
| INP | 0.69 | | | |
| STP | 0.71 | 0.80 | | |
| TCO | 0.85 | 0.69 | 0.69 | |
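
The HTMT criterion compares each heterotrait-monotrait ratio with a fixed cutoff; values of 0.85 (conservative) and 0.90 (liberal) are the ones most often cited. The sketch below flags any construct pair whose ratio reaches the cutoff; the 0.90 default is an assumption for illustration, since the section does not state which threshold was applied.

```python
# HTMT ratios copied from the lower triangle of the table above.
htmt = {
    ("INP", "ACC"): 0.69,
    ("STP", "ACC"): 0.71,
    ("STP", "INP"): 0.80,
    ("TCO", "ACC"): 0.85,
    ("TCO", "INP"): 0.69,
    ("TCO", "STP"): 0.69,
}


def htmt_violations(ratios, threshold=0.90):
    """Return the construct pairs whose HTMT ratio is at or above the threshold."""
    return [pair for pair, value in ratios.items() if value >= threshold]


print(htmt_violations(htmt))  # []
```

Passing `threshold=0.85` instead returns `[('TCO', 'ACC')]`, because the ACC–TCO ratio equals that stricter cutoff.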

| Fornell-Larcker Criterion | ACC | INP | STP | TCO |
|---|---|---|---|---|
| ACC | 0.77 | | | |
| INP | 0.59 | 0.80 | | |
| STP | 0.60 | 0.71 | 0.81 | |
| TCO | 0.71 | 0.60 | 0.60 | 0.80 |
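
Under the Fornell–Larcker criterion, the diagonal entries are the square roots of each construct's AVE, and discriminant validity holds when each of these exceeds that construct's correlations with every other construct. A minimal sketch of the check follows, using the AVE values from the measurement model table and the off-diagonal correlations above; the function name is illustrative.

```python
import math

# AVE values from the measurement model table.
ave_values = {"ACC": 0.60, "INP": 0.64, "STP": 0.65, "TCO": 0.64}

# Inter-construct correlations (off-diagonal entries of the table above).
corr = {
    ("ACC", "INP"): 0.59,
    ("ACC", "STP"): 0.60,
    ("INP", "STP"): 0.71,
    ("ACC", "TCO"): 0.71,
    ("INP", "TCO"): 0.60,
    ("STP", "TCO"): 0.60,
}


def fornell_larcker_ok(ave_values, corr):
    """True if sqrt(AVE) of each construct exceeds all of its correlations with others."""
    root = {c: math.sqrt(v) for c, v in ave_values.items()}
    return all(r < root[a] and r < root[b] for (a, b), r in corr.items())


print({c: round(math.sqrt(v), 2) for c, v in ave_values.items()})
# {'ACC': 0.77, 'INP': 0.8, 'STP': 0.81, 'TCO': 0.8}
print(fornell_larcker_ok(ave_values, corr))  # True
```

The largest correlation (0.71) sits below the smallest square root of AVE (0.77 for ACC), so every pairwise comparison passes.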

| Path | Original Sample (β) | T-Statistic | p-Value | Decision |
|---|---|---|---|---|
| Direct Effects of Each Relationship | | | | |
| ACC → INP | 0.33 | 4.81 | 0.000 | Accepted |
| ACC → STP | 0.20 | 3.01 | 0.000 | Accepted |
| INP → STP | 0.50 | 8.51 | 0.000 | Accepted |
| TCO → INP | 0.37 | 5.29 | 0.000 | Accepted |
| TCO → STP | 0.16 | 2.33 | 0.020 | Accepted |
| Indirect Effects of Each Relationship | | | | |
| ACC → INP → STP | 0.160 | 4.400 | 0.000 | Accepted |
| TCO → INP → STP | 0.180 | 4.250 | 0.000 | Accepted |
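
In a mediation model of this kind, the indirect effect through instructional planning is the product of the path into the mediator and the path out of it (for example, ACC → INP multiplied by INP → STP), and the total effect adds the direct path. The sketch below applies this to the rounded coefficients in the table and converts each reported t-statistic into a two-tailed p-value. It assumes a standard normal approximation for the bootstrap t-statistics; the exact procedure in the authors' software may differ, and the dictionary keys are illustrative labels.

```python
import math


def two_tailed_p(t: float) -> float:
    """Two-tailed p-value for a t-statistic under a standard normal approximation."""
    # erfc(|t| / sqrt(2)) equals 2 * (1 - Phi(|t|)).
    return math.erfc(abs(t) / math.sqrt(2))


# Direct path coefficients and t-statistics as reported in the table above.
beta = {"ACC->INP": 0.33, "ACC->STP": 0.20, "INP->STP": 0.50,
        "TCO->INP": 0.37, "TCO->STP": 0.16}
t_stat = {"ACC->INP": 4.81, "ACC->STP": 3.01, "INP->STP": 8.51,
          "TCO->INP": 5.29, "TCO->STP": 2.33}

# Indirect (mediated) effect = a-path x b-path through INP.
indirect = {
    "ACC->INP->STP": beta["ACC->INP"] * beta["INP->STP"],
    "TCO->INP->STP": beta["TCO->INP"] * beta["INP->STP"],
}

# Total effect = direct effect + indirect effect.
total = {
    "ACC->STP": beta["ACC->STP"] + indirect["ACC->INP->STP"],
    "TCO->STP": beta["TCO->STP"] + indirect["TCO->INP->STP"],
}

for path, t in t_stat.items():
    print(f"{path}: p ~ {two_tailed_p(t):.3f}")
for path, value in indirect.items():
    print(f"{path}: indirect ~ {value:.3f}")
for path, value in total.items():
    print(f"{path}: total ~ {value:.3f}")
```

The products of the rounded coefficients come out at about 0.165 and 0.185, close to the reported 0.160 and 0.180. Under the normal approximation, t = 2.33 reproduces the reported p = 0.020, while t = 3.01 corresponds to p ≈ 0.003, which is still well below 0.05.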

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Alwakid, W.N.; Dahri, N.A.; Humayun, M.; Alwakid, G.N. Exploring the Role of AI and Teacher Competencies on Instructional Planning and Student Performance in an Outcome-Based Education System. Systems 2025, 13, 517. https://doi.org/10.3390/systems13070517

Alwakid WN, Dahri NA, Humayun M, Alwakid GN. Exploring the Role of AI and Teacher Competencies on Instructional Planning and Student Performance in an Outcome-Based Education System. Systems. 2025; 13(7):517. https://doi.org/10.3390/systems13070517

Chicago/Turabian Style: Alwakid, Wafa Naif, Nisar Ahmed Dahri, Mamoona Humayun, and Ghadah Naif Alwakid. 2025. "Exploring the Role of AI and Teacher Competencies on Instructional Planning and Student Performance in an Outcome-Based Education System" Systems 13, no. 7: 517. https://doi.org/10.3390/systems13070517

APA Style: Alwakid, W. N., Dahri, N. A., Humayun, M., & Alwakid, G. N. (2025). Exploring the Role of AI and Teacher Competencies on Instructional Planning and Student Performance in an Outcome-Based Education System. Systems, 13(7), 517. https://doi.org/10.3390/systems13070517