Evaluating User Engagement in Online News: A Deep Learning Approach Based on Attractiveness and Multiple Features

Abstract

1. Introduction

- (1) This study proposes an extended LDA model based on users’ click–comment behavior. The model can effectively represent the attractiveness of words in news headlines and content. To a certain extent, the attractiveness of words constitutes the news’s readability and is an essential factor in attracting users to click and comment.

- (2) This study proposes a deep learning model, DUEE, that integrates news headlines, content, meta-features, and attractiveness. More importantly, the DUEE model integrates word attraction in news headlines and content representations through attention units. The DUEE model considers various elements of news that collectively determine the ability of the news to attract clicks and engagement.

- (3) This study verifies the effectiveness of the DUEE as a whole, provides empirical evidence for news attractiveness in this task, and provides a new idea for user engagement evaluation. The proposed model and indicators can better help news platforms adjust their news release strategies to promote user engagement.
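As a rough illustration of how word attractiveness could enter an attention unit, the sketch below pools word vectors with attention weights that are shifted by the log of each word's attractiveness score. This is one possible formulation, not the DUEE architecture itself: the function name `attractiveness_attention`, the dot-product scoring, the `query` vector, and the log-shift are all assumptions made for the example.

```python
import numpy as np


def attractiveness_attention(word_vecs, attractiveness, query):
    """Attention pooling with logits biased by word attractiveness (assumed form).

    word_vecs:      (n_words, dim) word representations
    attractiveness: (n_words,) scores in (0, 1], e.g. from a topic model
    query:          (dim,) context vector (learned in a real model, fixed here)
    """
    # ASSUMPTION: dot-product relevance plus log-attractiveness as an additive bias.
    logits = word_vecs @ query + np.log(attractiveness + 1e-9)
    logits = logits - logits.max()          # subtract the max for numerical stability
    weights = np.exp(logits)
    weights = weights / weights.sum()       # softmax over words
    pooled = weights @ word_vecs            # weighted sum of word vectors
    return pooled, weights


# Toy input: four words with 3-dimensional vectors (values are made up).
toy_vecs = np.array([[0.1, 0.3, 0.0],
                     [0.5, 0.1, 0.2],
                     [0.0, 0.0, 0.4],
                     [0.2, 0.2, 0.2]])
toy_attr = np.array([0.2, 0.9, 0.1, 0.4])
pooled, weights = attractiveness_attention(toy_vecs, toy_attr, np.zeros(3))
```

With a zero query, the weights reduce to the normalized attractiveness scores, which makes the effect of the bias term easy to inspect in isolation.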

2. Related Works

2.1. News Popularity Prediction Methods

2.2. Clickbait and Fake News Detection Methods

2.3. Other News Evaluation Methods

3. Data Collection and Labelling

4. The Proposed Model (DUEE)

4.1. Attractiveness Representation

- For each topic, a word distribution is generated.

- Each topic–word pair generates a distribution over the values of user comments.

- The following steps are performed for each news article:

- (1) Generate a topic distribution for the news article.

- (2) For each word in the news content, a topic distribution and a word distribution are generated.

- (3) For each word in the news headline, a topic distribution and a word distribution are generated.

- (4) For the news article, click behavior generates a distribution of comment behavior.
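The steps above can be sketched as a small forward simulation. This is a minimal sketch under stated assumptions, not the paper's model: the Dirichlet and Beta priors, the hyperparameter values, the names `phi`, `psi`, `sample_words`, and `generate_article`, and in particular the way click and comment probabilities are derived from topic–word attractiveness in step (4) are all hypothetical choices made for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
n_topics, vocab_size = 3, 20   # toy sizes, chosen only for illustration

# One word distribution per topic (each row sums to 1).
phi = rng.dirichlet(np.full(vocab_size, 0.1), size=n_topics)
# ASSUMPTION: one attractiveness probability per topic-word pair, drawn from a Beta prior.
psi = rng.beta(2.0, 2.0, size=(n_topics, vocab_size))


def sample_words(theta, n_words):
    """Draw a topic per word position, then a word from that topic's distribution."""
    topics = rng.choice(n_topics, size=n_words, p=theta)
    words = np.array([rng.choice(vocab_size, p=phi[z]) for z in topics])
    return topics, words


def generate_article(n_headline=8, n_content=50, alpha=0.5):
    theta = rng.dirichlet(np.full(n_topics, alpha))                      # step (1)
    content_topics, content_words = sample_words(theta, n_content)       # step (2)
    headline_topics, headline_words = sample_words(theta, n_headline)    # step (3)
    # Step (4), ASSUMPTION: click probability is the mean attractiveness of the
    # headline's topic-word pairs; a comment can only follow a click.
    p_click = float(psi[headline_topics, headline_words].mean())
    p_comment = float(psi[content_topics, content_words].mean())
    clicked = bool(rng.random() < p_click)
    commented = bool(clicked and rng.random() < p_comment)
    return {
        "theta": theta,
        "headline_words": headline_words,
        "content_words": content_words,
        "p_click": p_click,
        "clicked": clicked,
        "commented": commented,
    }


article = generate_article()
```

In practice the direction is reversed: the distributions are inferred from observed words and click–comment behavior, for example by Gibbs sampling, rather than sampled forward as here.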

4.2. Explicit Information Representation

4.3. Implicit Information Representation

4.4. Attention Unit and Model Output

5. Experiment and Result Analysis

5.1. Data Pre-Processing

5.2. Experiment Setup

5.3. Complexity Analysis

5.4. Comparison Models

5.5. Evaluation Metrics

5.6. Experiment Results

5.7. Ablation Experiments

5.8. Effect of Attractiveness on the Proposed Model

5.9. Effect of Dropout on the Proposed Model

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| User Engagement Indicators | Description |
|---|---|
| High comments and low clicks | News articles close to this indicator represent news content that generates more attention, but the implicit information is less capable of triggering users to click. |
| High clicks and high comments | News articles close to this indicator show that both the implicit and explicit information have enough ability to attract users to click and comment. |
| High clicks and low comments | News articles close to this indicator show that explicit information attracts users, but their content does not. |
| Low clicks and low comments | News articles close to this indicator show that neither explicit nor implicit information effectively attracts users. |
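The four indicators partition articles by whether clicks and comments are high or low. A minimal sketch of that mapping follows; the function name `engagement_indicator`, the use of per-dataset medians as cut-offs, and the toy counts are assumptions for illustration and do not reproduce the labelling procedure used in the study.

```python
from statistics import median


def engagement_indicator(clicks, comments, click_cut, comment_cut):
    """Map raw counts to one of the four click/comment quadrants."""
    high_clicks = clicks >= click_cut
    high_comments = comments >= comment_cut
    if high_clicks and high_comments:
        return "High clicks and high comments"
    if high_clicks:
        return "High clicks and low comments"
    if high_comments:
        return "High comments and low clicks"
    return "Low clicks and low comments"


# Toy (clicks, comments) pairs; the numbers are invented for the example.
articles = [(1200, 45), (90, 60), (1500, 3), (40, 1)]
# ASSUMPTION: medians of the sample serve as the high/low cut-offs.
click_cut = median(c for c, _ in articles)
comment_cut = median(m for _, m in articles)
labels = [engagement_indicator(c, m, click_cut, comment_cut) for c, m in articles]
```

Any other threshold rule (fixed values, percentiles, per-channel normalization) could be substituted by changing how `click_cut` and `comment_cut` are computed.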

| Variable Name | Description |
|---|---|
| | The number of topics. |
| | Number of words in the dataset. |
| | Number of news articles in the dataset. |
| | Topic distribution of news articles. |
| | Topic distribution of words. |
| | The probability that a word is attractive to a particular topic. |
| | Topic distribution of words in the news headlines. |
| | Words in news headlines. |
| | Topic distribution of words in the news content. |
| | Words in news content. |
| | Probability of click–comment behavior. |
| | Number of words in news headlines. |
| | Number of words in news content. |

| Model | Time Complexity | Space Complexity |
|---|---|---|
| UCCB | | |

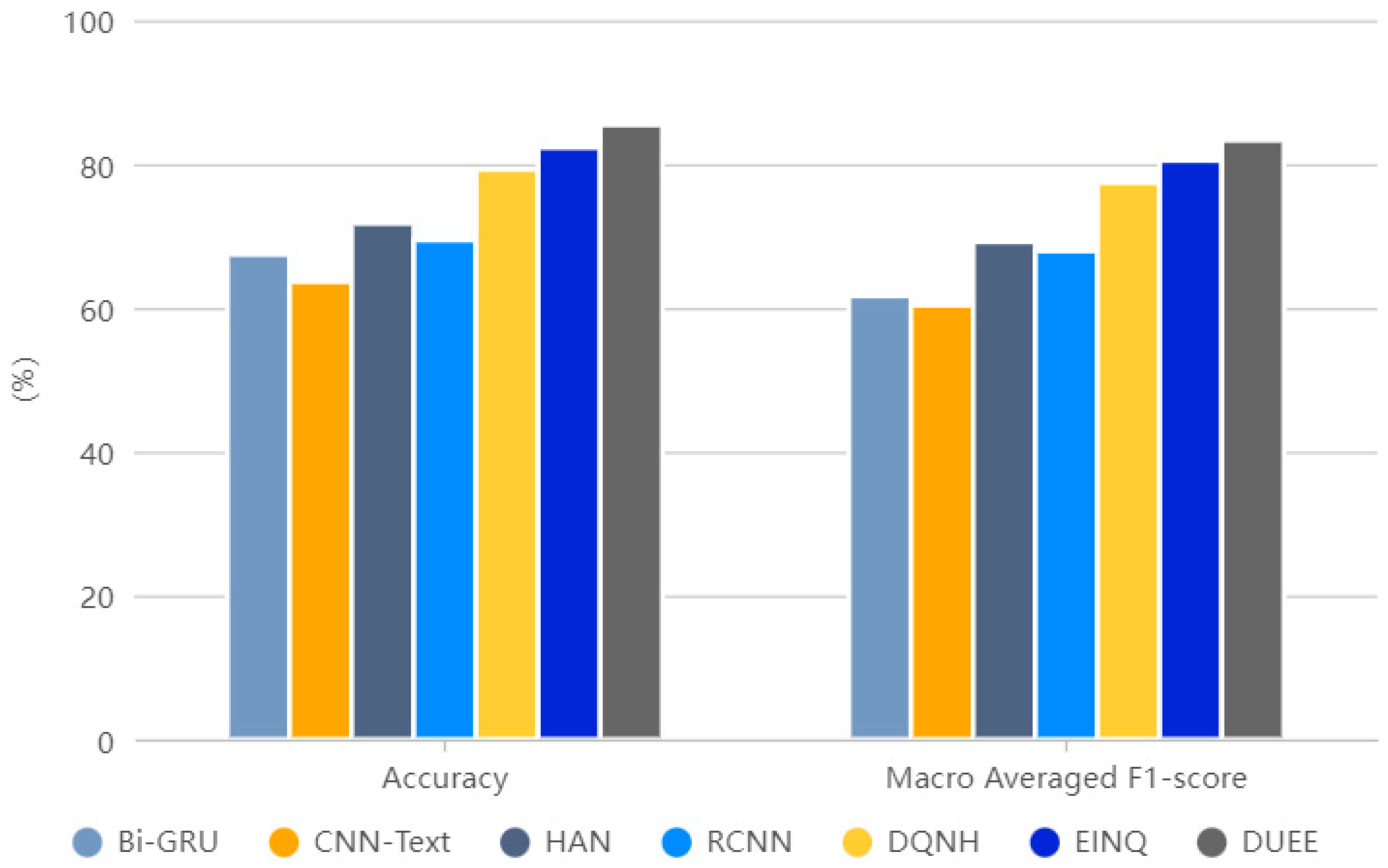

| Model | F1 Score (%) per User Engagement Indicator | | | | Accuracy (%) | Macro Averaged F1 Score (%) | Model Size (M) | Inference Time (ms) |
|---|---|---|---|---|---|---|---|---|
| Bi-GRU | 60.76 | 58.45 | 62.91 | 64.56 | 67.45 | 61.67 | 3.2 | 0.71 |
| CNN-Text | 58.79 | 59.47 | 61.24 | 62.03 | 63.65 | 60.38 | 4.8 | 0.84 |
| HAN | 65.42 | 66.89 | 73.25 | 71.18 | 71.76 | 69.19 | 5.6 | 1.07 |
| RCNN | 65.78 | 63.23 | 70.89 | 71.83 | 69.43 | 67.93 | 5.1 | 1.12 |
| DQNH | 75.61 | 77.82 | 79.44 | 76.82 | 79.29 | 77.42 | 9.1 | 1.78 |
| EINQ | 78.62 | 80.12 | 81.16 | 82.12 | 82.31 | 80.51 | 7.9 | 1.47 |
| DUEE | 82.42 | 80.41 | 86.17 | 84.32 | 85.46 | 83.33 | 8.5 | 1.67 |
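The macro-averaged F1 score is the unweighted mean of the per-class F1 scores, here the four user engagement indicators. The short check below recomputes it from the first four numeric values of each row in the table above and compares the result with the reported value in the sixth column; the dictionary layout and the rounding tolerance are choices made for this example.

```python
# Per-indicator F1 scores (%) and reported macro F1 (%), copied from the table.
rows = {
    "Bi-GRU":   ([60.76, 58.45, 62.91, 64.56], 61.67),
    "CNN-Text": ([58.79, 59.47, 61.24, 62.03], 60.38),
    "HAN":      ([65.42, 66.89, 73.25, 71.18], 69.19),
    "RCNN":     ([65.78, 63.23, 70.89, 71.83], 67.93),
    "DQNH":     ([75.61, 77.82, 79.44, 76.82], 77.42),
    "EINQ":     ([78.62, 80.12, 81.16, 82.12], 80.51),
    "DUEE":     ([82.42, 80.41, 86.17, 84.32], 83.33),
}


def macro_f1(per_class_f1):
    """Unweighted mean of per-class F1 scores."""
    return sum(per_class_f1) / len(per_class_f1)


# Allow for two-decimal rounding in the reported values.
consistent = {
    model: abs(macro_f1(f1s) - reported) < 0.006
    for model, (f1s, reported) in rows.items()
}
```

Every row agrees with the reported macro average to within rounding, which also confirms which column holds accuracy and which holds the macro F1 score.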

| Ablation Models | F1-Score (%) per User Engagement Indicator | | | | Accuracy (%) | Macro Averaged F1-Score (%) |
|---|---|---|---|---|---|---|
| DUEE-H* | 77.43 | 79.14 | 81.18 | 79.16 | 81.32 | 79.23 |
| DUEE-LDA^ | 79.49 | 80.24 | 83.54 | 82.12 | 82.11 | 81.35 |
| DUEE-HAT* | 81.28 | 79.95 | 83.51 | 83.43 | 83.24 | 82.04 |
| DUEE-CAT* | 79.94 | 80.12 | 83.14 | 83.85 | 82.17 | 81.76 |
| DUEE-AM^ | 81.68 | 79.32 | 82.47 | 83.23 | 81.47 | 81.68 |
| DUEE | 82.42 | 80.41 | 86.17 | 84.32 | 85.46 | 83.33 |
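One simple way to read the ablation results is to rank the variants by how far their macro-averaged F1 score falls below the full DUEE model. The snippet below does this using the macro F1 values from the table above; the variable names are illustrative, and the ranking says nothing about statistical significance.

```python
# Macro-averaged F1 scores (%) copied from the ablation table.
ablation_macro_f1 = {
    "DUEE-H*": 79.23,
    "DUEE-LDA^": 81.35,
    "DUEE-HAT*": 82.04,
    "DUEE-CAT*": 81.76,
    "DUEE-AM^": 81.68,
    "DUEE": 83.33,
}

full_score = ablation_macro_f1["DUEE"]
# Drop in percentage points relative to the full model, rounded to two decimals.
drops = {
    name: round(full_score - score, 2)
    for name, score in ablation_macro_f1.items()
    if name != "DUEE"
}
# Variants ordered from largest to smallest drop.
ranked = sorted(drops, key=drops.get, reverse=True)
for name in ranked:
    print(f"{name}: -{drops[name]:.2f} points")
```

The larger the drop, the more the removed component contributed to the full model's macro F1 score on this dataset.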

| Dropout Layer | F1 Score (%) per User Engagement Indicator | | | | Accuracy (%) | Macro Averaged F1 Score (%) |
|---|---|---|---|---|---|---|
| No | 78.23 | 78.68 | 83.18 | 82.54 | 83.53 | 80.66 |
| Yes | 82.42 | 80.41 | 86.17 | 84.32 | 85.46 | 83.33 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Song, G.; Wang, Y.; Chen, X.; Hu, H.; Liu, F. Evaluating User Engagement in Online News: A Deep Learning Approach Based on Attractiveness and Multiple Features. Systems 2024, 12, 274. https://doi.org/10.3390/systems12080274