Simple Summary

The diversity of fish resources is an important component of biodiversity. As one group of fish, koi benefit from variety diversity, which is conducive to improving the genetic quality of offspring, avoiding inbreeding, and improving their adaptability to the natural environment. The variety classification of koi is a necessary step to improve the diversity of koi varieties and breeding quality. The traditional manual method of classifying koi varieties suffers from high subjectivity, low efficiency, and a high misclassification rate. Therefore, we studied an intelligent method of classifying koi varieties using an artificial intelligence approach, and designed a deep learning network model, KRS-Net. Intelligent and nondestructive classification was realized for 13 varieties of koi by using the proposed model, and the accuracy rate was 97.9%, which is higher than that of the classical mainstream classification networks. This study provides a reference for intelligent classification of marine organisms, and can be extended to the screening and breeding of other species.

Abstract

As the traditional manual classification method has some shortcomings, including high subjectivity, low efficiency, and a high misclassification rate, we studied an approach for classifying koi varieties. The main contributions of this study are twofold: (1) a dataset was established for thirteen kinds of koi; (2) a classification problem for underwater animals with high similarity among varieties was posed, and a KRS-Net classification network was constructed based on deep learning, which could solve the problem of low accuracy for some varieties that are highly similar. The test experiment of KRS-Net was carried out on the established dataset, and the results were compared with those of five mainstream classification networks (AlexNet, VGG16, GoogLeNet, ResNet101, and DenseNet201). The experimental results showed that the classification test accuracy of KRS-Net reached 97.90% for koi, which is better than those of the comparison networks. The main advantages of the proposed approach include a reduced number of parameters and improved accuracy. This study provides an effective approach for the intelligent classification of koi, and it has guiding significance for the classification of other organisms with high similarity among classes. The proposed approach can be applied to some other tasks, such as screening, breeding, and grade sorting.

1. Introduction

The diversity of fish resources is an important part of biodiversity and is the basis of the stable and sustainable development of fisheries [1]. As a branch of fish resources, koi variety diversity is conducive to improving the genetic quality of offspring, avoiding inbreeding, and improving the adaptability of koi to the natural environment. To improve the diversity of ornamental fish, classifying koi varieties is a necessary step in breeding koi for biodiversity. The culture of ornamental fish has a long history. As a representative ornamental fish, koi have become increasingly popular since the 1980s. Koi are known as “living gemstones in water”, “geomantic fish”, and “lucky fish” due to their beautiful physique, bright colors, and elegant swimming posture [2]. In the traditional biological breeding process, various genes are selectively gathered to form a new variety through cross-breeding, which can increase species diversity [3]. In the same way, the varieties of koi have gradually evolved into Tancho, Hikariutsurimono, Utsurimono, Bekko, Kawarimono, Taisho Sanshoku, Showa Sanshoku, Asagi, Kohaku, Hikarimoyomono, Koromo, Kinginrin, and Ogon, for a total of thirteen varieties [4,5]. Koi may produce different varieties of offspring through cross-breeding, and each female lays about 300,000 eggs, with an average survival rate of more than 80%. Koi are generally sorted three months after hatching, which is labor-intensive [6]. Additionally, the prices of different varieties of koi greatly vary, so classifying koi is an indispensable step in koi breeding, screening, and grade sorting.

With the improvement in breeding technology and the expansion of breeding scales [7], the market value of koi is increasing. As the price and ornamental value of different koi varieties greatly vary, the screening and grade sorting of koi have become more and more important [8]. However, both variety screening and grade sorting are classification problems [9,10]. Therefore, many scholars have attached great importance to the research of classification problems for koi varieties. For example, Peng et al. [11] performed the division of Kohaku, Taisho, and Showa varieties, and proposed a four-stage classification method for koi. Song et al. [12] divided three varieties of koi from the perspectives of color, pattern, and lineage. The classification of koi varieties is still performed manually at present and depends on workers who are skilled and experienced with koi. The traditional manual classification method for koi has disadvantages, such as high subjectivity, low efficiency, and high work intensity. More importantly, classification errors often occur due to the different skills of sorting workers and the subjectivity of classification. Especially when there is high similarity between some varieties of koi, such as Taisho and Showa, misclassification often occurs. To solve the difficulties caused by traditional manual classification, it is necessary to research intelligent classification approaches for koi varieties.

With the development of computer technology, artificial intelligence, and improvements in hardware performance, deep learning technology has rapidly developed [13,14,15]. Recognition technology based on deep learning has especially received attention and been applied in agriculture [16,17], fisheries [18,19,20], medical treatment [21,22,23], and other fields [24,25,26,27]. In the field of fisheries, the existing studies have mainly focused on determining the freshness of marine animals, intelligently recognizing marine organisms, and classifying economic marine organisms. For example, Lu et al. [28] proposed a method of automatically recognizing common tuna and saury based on deep learning, and the final test accuracy of this method was 96.24%. Rauf et al. [29] proposed a fish species recognition framework based on a convolutional neural network, and the classification accuracy of cultured fish (e.g., grass carp, carp, and silver carp) was 92%. Knausgård et al. [30] proposed a temperate fish detection and classification method based on transfer learning. The classification network was trained on a public dataset for fish (Fish4Knowledge), and the species classification accuracy was 99.27%. The above research suggests that it is feasible to classify koi based on image processing and deep learning technology. The above studies and their related references all discussed methods of classifying underwater animals with high similarity among species.

To the best of our knowledge, there is currently no study on intelligent classification methods for underwater animals with high similarity between varieties. Motivated by the above discussion, we studied a classification approach for underwater animals with high similarity among varieties. The main contributions of this study are as follows: (1) A dataset was created for thirteen kinds of koi. To the best of our knowledge, no koi dataset has yet been reported. (2) A classification problem with high similarity was proposed for underwater animals, and a KRS-Net network was constructed to solve this classification problem for koi with high similarity among varieties. (3) The proposed network could extract deeper koi feature information, and could further improve the classification accuracy by fusing the advantages of support vector machine (SVM) and a fully connected layer. The superiority of this proposed approach was verified through comparison experiments with mainstream networks (AlexNet [31], VGG16 [32], GoogLeNet [33], ResNet101 [34], and DenseNet201 [35]).

2. Materials and Methods

2.1. Image Acquisition and Data Augmentation

In this study, thirteen kinds of koi were selected as the research objects, and 569 original images of koi with a resolution of 2400 × 1600 were collected using a digital camera (EOS 200D, Canon, Tokyo, Japan). Figure 1 shows representative koi images of the thirteen varieties. For the convenience of drawing and charting, the Hikariutsurimono, Taisho Sanshoku, Showa Sanshoku, and Hikarimoyomono koi varieties are abbreviated as Hikariu, Taisho, Showa, and Hikarim, respectively. The dataset in this study was taken from the actual breeding data of koi, and the method used in this study will also be applied to the actual koi breeding and production.

Figure 1. Images of thirteen kinds of koi.

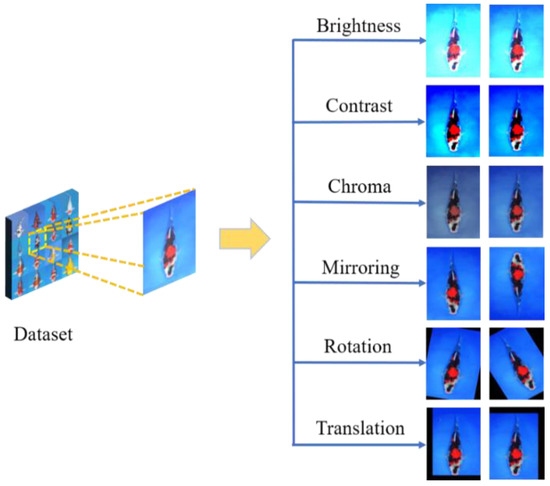

To improve the generalization ability of convolutional neural networks, we used image augmentation methods including brightness, contrast, and chroma adjustment, mirroring, rotation, and horizontal or vertical translation. Generative adversarial networks are another efficient augmentation method [36,37]. However, the images generated by the usual augmentation methods were sufficient for training our network, so a generative adversarial network was not used to expand the dataset in this study. A schematic diagram of the effect of data augmentation is shown in Figure 2.
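The augmentation operations listed above can be illustrated with a minimal, dependency-free sketch. It is not the authors' MATLAB pipeline: the image is represented as a nested list of RGB tuples, the function names and the factor and offset values are hypothetical, and only three of the listed operations (brightness, mirroring, translation) are shown. In practice an image library would be used instead.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]  # rows of RGB pixels, channel values 0..255


def adjust_brightness(img: Image, factor: float) -> Image:
    """Scale every channel by `factor`, clamping to the valid 0..255 range."""
    return [[tuple(min(255, max(0, round(c * factor))) for c in px) for px in row]
            for row in img]


def mirror_horizontal(img: Image) -> Image:
    """Flip the image left to right."""
    return [list(reversed(row)) for row in img]


def translate(img: Image, dx: int, dy: int, fill: Pixel = (0, 0, 0)) -> Image:
    """Shift the image by (dx, dy) pixels; uncovered pixels are set to `fill`."""
    h, w = len(img), len(img[0])
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            ny, nx = y + dy, x + dx
            if 0 <= ny < h and 0 <= nx < w:
                out[ny][nx] = img[y][x]
    return out


def augment(img: Image) -> List[Image]:
    """Return the original plus a few augmented variants (hypothetical settings)."""
    return [
        img,
        adjust_brightness(img, 1.2),
        mirror_horizontal(img),
        translate(img, dx=1, dy=0),
    ]
```

Each original image yields several training samples that keep the same variety label, which is how augmentation enlarges the dataset without new photographs.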

Figure 2. Effect of data augmentation.

The dataset had 1464 images after image augmentation, including 1027 images in the training set, 294 images in the verification set, and 143 images in the test set. Because the number of images of Showa and Kohaku was sufficient, we did not perform additional data augmentation processing. The detailed number of images for each variety is shown in Table 1.
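As a quick check of the split sizes quoted above, the short sketch below recomputes the total and the share of each subset. The counts come from the text; the dictionary keys are only labels chosen here.

```python
# Split sizes reported in the text above.
splits = {"training": 1027, "verification": 294, "test": 143}

total = sum(splits.values())
fractions = {name: count / total for name, count in splits.items()}

for name, count in splits.items():
    print(f"{name:>12}: {count:4d} images ({fractions[name]:.1%})")
print(f"{'total':>12}: {total:4d} images")
```

The three subsets sum to 1464 images and correspond to roughly a 70/20/10 split.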

Table 1. Numbers of images of thirteen koi varieties.

2.2. KRS-Net Classification Approach

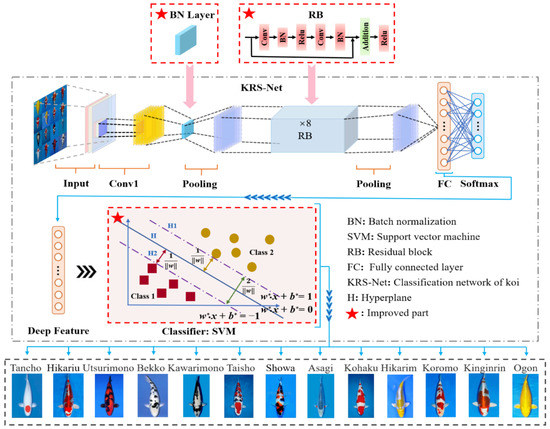

Based on the AlexNet framework, a KRS-Net classification network was designed to classify thirteen kinds of koi. KRS-Net is a classification network that is mainly composed of a residual network and SVM. The residual network is used to extract the features of the object, and SVM realizes the classification of objects. A schematic diagram of the proposed network is illustrated in Figure 3.

Figure 3. Schematic diagram of the proposed KRS-Net.

Based on the AlexNet framework, the main structural changes were as follows:

(1) Replace the original local response normalization (LRN) with batch normalization (BN). Both the LRN layer and BN layer can improve the network generalization ability and training speed, but the performance of the latter is usually superior [37]. Compared with the LRN layer, the BN layer can adapt to a larger learning rate to further improve the network training speed. At the same time, it improves the effect of the regularization strategy, reduces the dependence on the dropout layer, and improves the anti-disturbance ability.

(2) Add eight residual blocks to the network structure. The skip connection in the residual block can overcome the problem of gradient vanishing caused by increasing depth in the network. Therefore, multiple residual blocks were introduced to increase the depth of the network and extract deeper koi feature information. In addition, the difficulty of extracting more subtle koi characteristics is reduced.

(3) Fuse SVM with fully connected (FC) layer to improve accuracy. Inspired by [38], we replaced the softmax layer with SVM to achieve better generalization performance, thus improving the accuracy of koi variety classification. Specifically, an SVM fused with the FC layer was added to the network framework to perform the classification in place of the softmax layer. The fused SVM with FC transforms the nonlinear classification problem into a linear classification problem in high dimensional space by raising the dimensionality of the deep feature information extracted from the FC layer. Therefore, the processing of feature information is simplified, and the classification accuracy for koi is further improved.
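For reference, the first two changes can be written in their standard textbook form. The symbols below are introduced here for illustration and follow the usual batch normalization and residual learning formulations; they are not taken from the paper and do not describe the exact layer configuration of KRS-Net.

```latex
% Batch normalization over a mini-batch B = {x_1, ..., x_m}
\mu_B = \frac{1}{m}\sum_{i=1}^{m} x_i, \qquad
\sigma_B^2 = \frac{1}{m}\sum_{i=1}^{m} (x_i - \mu_B)^2, \qquad
\hat{x}_i = \frac{x_i - \mu_B}{\sqrt{\sigma_B^2 + \epsilon}}, \qquad
y_i = \gamma \hat{x}_i + \beta

% Residual block with an identity skip connection
y = F(x) + x
\quad\Longrightarrow\quad
\frac{\partial L}{\partial x}
  = \frac{\partial L}{\partial y}\left(\frac{\partial F}{\partial x} + I\right)
```

The identity term $I$ in the gradient is what lets the error signal reach earlier layers even when $\partial F / \partial x$ is small, which is the sense in which skip connections mitigate vanishing gradients.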

The information flow of KRS-Net is as follows: First, images of the thirteen kinds of koi were input into the network after data balancing. Second, the feature information of the koi images was extracted by convolution and pooling, and the extracted feature information was transmitted to the FC layer. The loss was reduced by a gradient descent algorithm, and the feature vectors of the FC layer were imported into SVM. Finally, the optimal classification hyperplane was obtained by a kernel function, and the parameters of the network were updated. The process of using the kernel function algorithm to explore the optimal classification hyperplane is as follows:

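We assume that the sample space of the training set is $T = \{(x_1, y_1), (x_2, y_2), \ldots, (x_N, y_N)\}$, where $x_i \in \mathbb{R}^n$ is the input sample set, $y_i \in \{-1, +1\}$ is the output sample set, and $i = 1, 2, \ldots, N$ indexes the training samples. By adopting the appropriate kernel function $K(x_i, x_j) = \varphi(x_i) \cdot \varphi(x_j)$, sample $x$ can be mapped into a high dimensional space, where $\varphi(x)$ represents the dimensional transform function of $x$. The convex optimization problem with constraints is constructed as:

$$\min_{\alpha} \; W(\alpha) = \frac{1}{2} \sum_{i=1}^{N} \sum_{j=1}^{N} \alpha_i \alpha_j y_i y_j K(x_i, x_j) - \sum_{i=1}^{N} \alpha_i \tag{1}$$

$$\text{s.t.} \quad \sum_{i=1}^{N} \alpha_i y_i = 0, \qquad 0 \le \alpha_i \le C, \quad i = 1, 2, \ldots, N \tag{2}$$

where $W(\alpha)$ is the optimized object function; $C$ is the penalty parameter; $\alpha_i$ is the Lagrange multiplier, and its optimal solution is $\alpha^* = (\alpha_1^*, \alpha_2^*, \ldots, \alpha_N^*)^T$, which can be obtained by Formulas (1) and (2). Furthermore, we construct the hyperplane for classification. The optimal classification hyperplane is defined as follows:

$$w^* \cdot \varphi(x) + b^* = 0 \tag{3}$$

where $w^* = \sum_{i=1}^{N} \alpha_i^* y_i \varphi(x_i)$ is the normal vector to the optimal hyperplane, and $b^*$ is the offset.

Then, the categories of koi varieties can be determined through the classification decision function, which is defined as:

$$f(x) = \operatorname{sign}\left( \sum_{i=1}^{N} \alpha_i^* y_i K(x_i, x) + b^* \right) \tag{4}$$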
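A kernel SVM decision function of this kind can be sketched in a few lines. The example below is a minimal illustration under stated assumptions: it uses a Gaussian (RBF) kernel, which the paper does not specify, and the support vectors, multipliers, and bias are hypothetical toy values rather than anything learned from koi features.

```python
import math
from typing import Sequence


def rbf_kernel(x: Sequence[float], z: Sequence[float], gamma: float = 0.5) -> float:
    """Gaussian (RBF) kernel K(x, z) = exp(-gamma * ||x - z||^2)."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-gamma * sq_dist)


def decision_value(x, support_vectors, labels, alphas, bias, kernel=rbf_kernel) -> float:
    """Signed score: sum_i alpha_i * y_i * K(x_i, x) + b."""
    return sum(a * y * kernel(sv, x)
               for sv, y, a in zip(support_vectors, labels, alphas)) + bias


def classify(x, support_vectors, labels, alphas, bias) -> int:
    """Binary decision, +1 or -1, taken from the sign of the decision value."""
    return 1 if decision_value(x, support_vectors, labels, alphas, bias) >= 0 else -1


# Hypothetical toy model: two support vectors, one per class.
SUPPORT_VECTORS = [(0.0, 0.0), (2.0, 2.0)]
LABELS = [-1, 1]
ALPHAS = [1.0, 1.0]   # satisfies the constraint sum(alpha_i * y_i) == 0
BIAS = 0.0
```

A point near `(2.0, 2.0)` receives the label `+1` and a point near `(0.0, 0.0)` receives `-1`. This formulation is binary; the paper does not state how it is extended to thirteen varieties, and one-vs-one or one-vs-rest schemes are the usual options.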

3. Experimental Results and Analysis

3.1. Setup of Experiment and Performance Indexes

The KRS-Net proposed in this paper was trained in MATLAB 2020a (MathWorks, Natick, MA, USA). The computer performance parameters for network training were as follows: CPU: Intel(R) Xeon(R) E5-4627 v4 @ 2.60 GHz; GPU: NVIDIA RTX 2080Ti; RAM: 64 GB; OS: Windows 10 (Lenovo, Hong Kong, China).

To unify the training environment and avoid interference from other factors, the experiments were conducted under the same conditions.

KRS-Net was trained on the established dataset of koi. The learning rate of network training was uniformly set to 0.0001 according to [39]. Because the batch size and the number of epochs affect the training effect to a certain extent, we studied their influence on network training under different hyperparameter settings and identified the setting that gave the maximum classification performance. The experimental results are shown in Table 2.
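The hyperparameter study described above amounts to a grid search over batch size and epoch count. The sketch below shows that loop in generic form. `grid_search` and `fake_evaluate` are hypothetical names, and `fake_evaluate` is only a stand-in for "train the network, then measure its accuracy"; it does not reproduce any value from Table 2.

```python
from itertools import product
from typing import Callable, Dict, Iterable, Tuple


def grid_search(
    evaluate: Callable[[int, int], float],
    batch_sizes: Iterable[int],
    epochs: Iterable[int],
) -> Tuple[Tuple[int, int], Dict[Tuple[int, int], float]]:
    """Evaluate every (batch size, epoch) pair; return the best pair and all scores."""
    scores = {(b, e): evaluate(b, e) for b, e in product(batch_sizes, epochs)}
    best = max(scores, key=scores.get)
    return best, scores


def fake_evaluate(batch_size: int, num_epochs: int) -> float:
    """Hypothetical stand-in for a real training run.

    It simply prefers small batches and few epochs so that the example
    runs without a dataset or a network.
    """
    return 1.0 / (batch_size * num_epochs)
```

Calling `grid_search(fake_evaluate, [8, 16, 32], [25, 50, 75, 100])` evaluates twelve combinations. Only the batch size of 8 and the four epoch values are mentioned in the text; the other batch sizes are illustrative.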

Table 2. Experimental test results of KRS-Net.

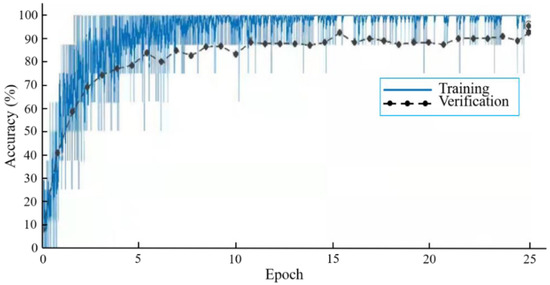

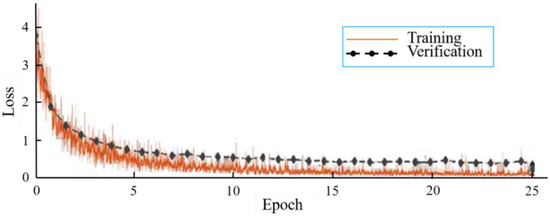

From Table 2, the best classification test accuracy of KRS-Net was 97.90% when the batch size was set to 8 and the epoch was set to 25. Figure 4 shows the training and verification accuracy curves of KRS-Net for this setting. From Figure 4, we can see that the verification curve is close to the training curve, which indicates that the network performed well. The loss curves of KRS-Net in the training and verification processes are shown in Figure 5.

Figure 4. Accuracy curves.

Figure 5. Loss curves.

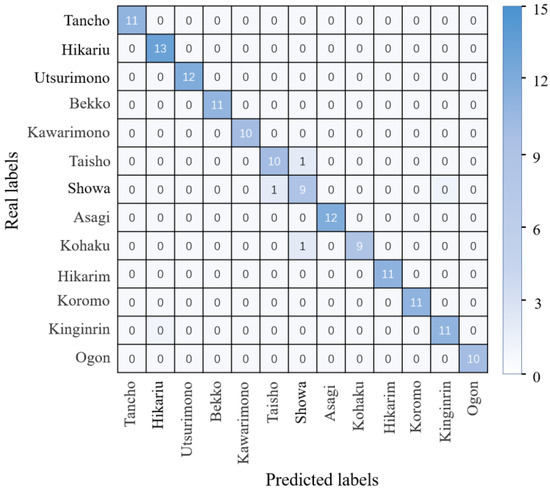

To further show the specific koi classification with the proposed approach, 143 images were used to test the trained KRS-Net. The real and predicted koi categories are summarized in the form of a matrix in Figure 6. Figure 6 shows that, in each column, the largest value lies on the diagonal, which indicates that KRS-Net had a good classification effect.

Figure 6. Confusion matrix.

3.2. Visualization of Features

We visualized the features to make the extracted features more intuitive from three aspects: different network layers, single image, and gradient-weighted class activation mapping (Grad-CAM).

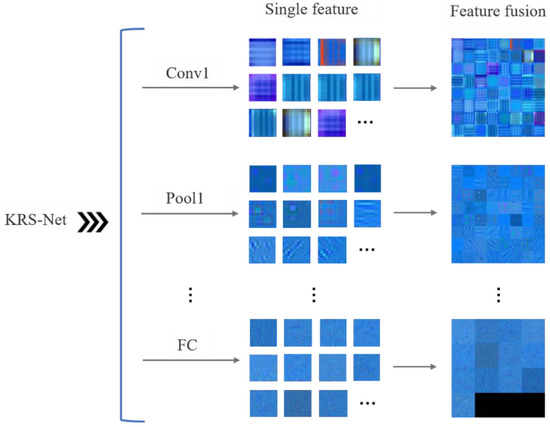

To reflect the features learned by the convolutional neural network in the training process, the single and fusion features were extracted and visualized for different network layers of KRS-Net, as shown in Figure 7.

Figure 7. Feature visualization of KRS-Net.

In Figure 7, the single feature and fusion feature are visualized for the first convolution layer (Conv1), first pooling layer (Pool1), and the FC layer of KRS-Net from the shallow to the deep layer. The fused feature is a new combined feature created on the basis of multiple single features, and the fused features reflect the correlation information among the single features. Eliminating the redundant information caused by the correlation between single features is helpful for the subsequent classification decision making of the network. From Figure 7, we can see that some low-level image feature information was extracted by the convolution layer and pooling layer at the front of the network, such as koi color; and some high-level image feature information was extracted by the FC layer at the end of the network.

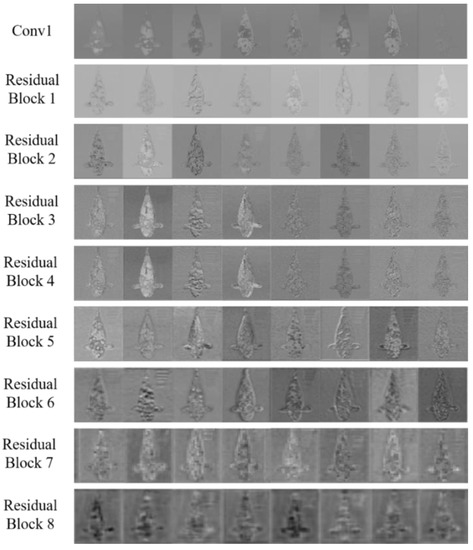

When an image is input into the convolutional neural network, different activation regions are generated at each layer because the layers differ in their ability to extract image features. Furthermore, the features learned by each layer can be intuitively seen by comparing the activation regions with the original image. To study the intermediate processing of a single image by KRS-Net, the activation regions are successively shown for the first convolution layer and eight residual blocks by considering a Showa example in Figure 8.

Figure 8. Activation regions of KRS-Net in a single image.

From Figure 8, it can be seen that the shallow network extracted the simple features of images, and the extracted features became more complex and abstract with the increase in network depth.

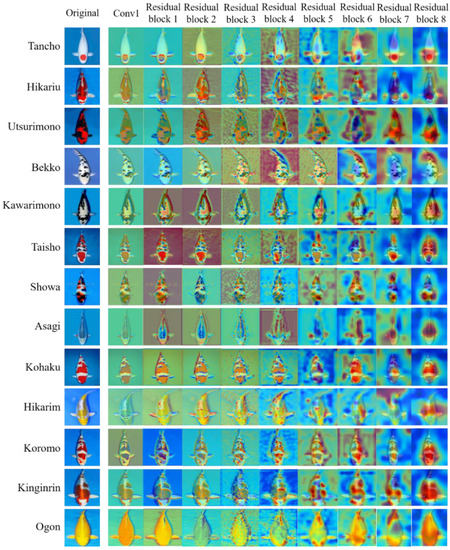

Grad-CAM [40] can visualize a region of interest of an image, which helps to understand how the convolutional neural network makes decisions on the classification of koi. Figure 9 gives the Grad-CAM visualization of the first convolution layer and eight residual blocks in KRS-Net for koi. In Figure 9, the red region of Grad-CAM marks the area that contributes most to the classification decision of the network on the input image, and the blue region is of secondary importance. With the increase in network depth, the red region gradually focuses on the special characteristics of the object. Taking the image of Tancho as an example in Figure 9, we can see that the red region of the output image of residual block 4 is relatively scattered, but the red region slowly focuses on the round spot on the head of Tancho with the deepening of the network, which is the most obvious feature that distinguishes this variety from other varieties. As can be seen from Figure 9, the network could effectively capture the characteristics of each koi variety, so that the classification task was completed well.
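For readers unfamiliar with the method, the Grad-CAM heat map is computed as follows in its standard formulation. The symbols are introduced here for illustration: $A^k$ is the $k$-th feature map of the chosen layer, $y^c$ is the score of class $c$ before the final classifier, and $Z$ is the number of spatial positions in the feature map.

```latex
% Channel weights: global-average-pooled gradients of the class score
\alpha_k^c = \frac{1}{Z} \sum_{i} \sum_{j} \frac{\partial y^c}{\partial A_{ij}^k}

% Heat map: ReLU of the weighted sum of feature maps
L_{\text{Grad-CAM}}^c = \operatorname{ReLU}\left( \sum_{k} \alpha_k^c A^k \right)
```

The ReLU keeps only the positions that push the score of class $c$ upward, so large values of the map correspond to the regions rendered in red.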

Figure 9. Grad-CAM visualization of KRS-Net.

3.3. Comparative Analysis with Other Classification Networks

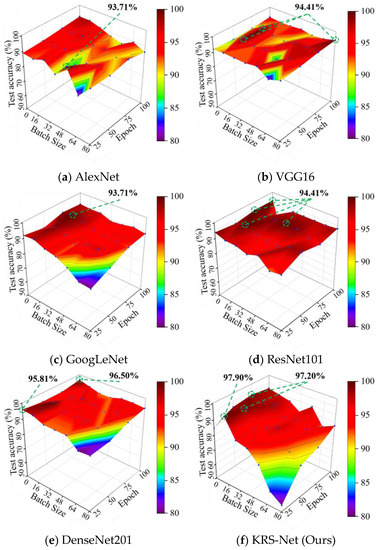

To verify the superiority of the proposed approach, the test accuracy of the KRS-Net was compared with that of some mainstream classification networks such as AlexNet, VGG16, GoogLeNet, ResNet101, and DenseNet201. To visually display the comparison results, a 3D colormap surface was used to study the influence of hyperparameters on the test accuracy of the networks. Figure 10 shows the 3D colormap surfaces of the test accuracy of KRS-Net and that of the other five classification networks.

Figure 10. Test accuracies of different networks.

As can be seen from Figure 10, the highest test accuracy of AlexNet, VGG16, GoogLeNet, ResNet101, DenseNet201, and the proposed KRS-Net was 94.41%, 94.41%, 93.71%, 93.71%, 96.50%, and 97.90%, respectively. The results of the comparative analysis showed that the test accuracy of KRS-Net was 1.40 percentage points higher than the highest test accuracy of the other five classification networks (96.50% for DenseNet201), which demonstrates the superiority of the proposed approach. Notably, the classification effect of the six networks gradually improved with the decrease in batch size. For this phenomenon, a specific analysis is provided in Section 4.

The accuracy, precision, recall, and F1 were selected as performance evaluation indexes to further analyze the koi classification performance of the networks, whose definitions are as follows [41]:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{5}$$

$$\text{Precision} = \frac{TP}{TP + FP} \tag{6}$$

$$\text{Recall} = \frac{TP}{TP + FN} \tag{7}$$

$$F1 = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{8}$$

where TP, TN, FP, and FN, respectively, represent true positive, true negative, false positive, and false negative. Accuracy is an intuitive evaluation index, representing the proportion of the number of koi samples correctly classified to the total number of koi samples. Precision represents the proportion of true positive samples among the samples predicted as positive by the network. Recall represents the proportion of true positive samples among all actual positive samples. F1 is a comprehensive evaluation index based on precision and recall. A larger F1 value indicates better network performance.
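The sketch below shows one way to compute these four indexes from a multi-class confusion matrix by treating each variety as the positive class in turn and averaging over varieties. This one-vs-rest macro averaging is an assumption about how the per-variety values are combined, and the confusion matrix is hypothetical: it assumes 11 test images per variety (13 × 11 = 143) with three errors placed arbitrarily, not the actual matrix in Figure 6.

```python
from typing import Dict, List


def macro_metrics(cm: List[List[int]]) -> Dict[str, float]:
    """Macro-averaged one-vs-rest metrics; cm[i][j] counts true class i predicted as j."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    acc = prec = rec = f1 = 0.0
    for k in range(n):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp                          # class k predicted as something else
        fp = sum(cm[i][k] for i in range(n)) - tp     # other classes predicted as k
        tn = total - tp - fn - fp
        p = tp / (tp + fp) if tp + fp else 0.0
        r = tp / (tp + fn) if tp + fn else 0.0
        acc += (tp + tn) / total
        prec += p
        rec += r
        f1 += 2 * p * r / (p + r) if p + r else 0.0
    return {"accuracy": acc / n, "precision": prec / n, "recall": rec / n, "f1": f1 / n}


# Hypothetical confusion matrix: 13 classes, 11 test images each (143 total),
# with 3 misclassified images placed arbitrarily.
cm = [[11 if i == j else 0 for j in range(13)] for i in range(13)]
for true_cls, pred_cls in [(5, 6), (6, 5), (0, 3)]:
    cm[true_cls][true_cls] -= 1
    cm[true_cls][pred_cls] += 1

metrics = macro_metrics(cm)
```

With 3 of 143 images misclassified, the averaged one-vs-rest accuracy is 1 − 6/(13 × 143) ≈ 99.68% wherever the errors fall, because each error counts once as a false negative and once as a false positive. This appears consistent with the 99.68% accuracy reported alongside the 97.90% (140/143) test accuracy. Precision, recall, and F1 do depend on where the errors fall, so the hypothetical matrix is not expected to match the reported values for those indexes.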

The comparative experimental results are shown in Table 3. The proposed KRS-Net had a better effect when the batch size was eight, as shown in Table 2. So, the batch size was set to 8 and the epoch was set to 25, 50, 75, or 100 in the experiment. The following performance evaluation indexes of each network represent the average values of thirteen koi varieties, but not the performance evaluation index of a single variety.

Table 3. Performance evaluation of networks.

It can be seen from Table 3 that when the batch size of KRS-Net was set to 8 and the epoch was set to 25, the classification accuracy, precision, recall, and F1 were 99.68%, 97.90%, 97.76%, and 97.80%, respectively, which are all higher than those of the other five classification networks. In addition, Table 3 shows that the four evaluation indices of the network all decreased as the number of epochs increased, which may have occurred because the network gradually began to overfit during the later stages of training.

Remark: There is a kind of fine-grained image classification method (subcategory image classification method), which is used to divide coarse-grained categories into more detailed subclasses according to the differences in some special parts among subclasses. However, the difference between the subclasses of koi lies not only in some special parts, but also in the shape and position of its body patterns as well as the ratio of red, white, and black. Considering the above factors, we did not adopt a fine-grained classification algorithm.

4. Discussion

4.1. Factors Influencing Test Accuracy

Although the test accuracy of the proposed KRS-Net reached 97.90%, there were still some koi varieties that were misclassified. The reasons for the misclassification may include the following aspects: (1) Some images were not clear enough because koi swim quickly. Additionally, the part of the image containing the typical characteristics may have been incomplete due to the differences in shooting angle during the data acquisition, which may have affected the training effect of the network. (2) The water ripples generated by the swimming of koi may have affected the image definition, which resulted in the blurring and distortion of images, as well as other problems [42]. (3) The cross-breeding of koi reduces the differences between varieties, resulting in offspring that fully resemble neither the mother nor the father, which poses difficulties for classification.

4.2. Influence of Batch Size on Classification Performance

From the 3D colormap surface in Figure 10, we can see that the batch size in the convolutional neural network had a considerable influence on the classification performance of the network, which was also reported in [43]. The test accuracies of the six classification networks all decreased with the increase in batch size. A similar phenomenon was shown in [44]. This phenomenon may be caused by a larger batch size of training data being less conducive to parameter updating and optimization. In contrast, a smaller batch size may better avoid the cancellation of positive and negative gradient update values caused by the differences in sampling.
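One simple contributing factor, offered here as an illustration rather than as the authors' analysis, is that a smaller batch size yields more parameter updates for the same number of epochs. The sketch below counts the updates for the 1027 training images and 25 epochs used in this study. Only the batch size of 8 is confirmed by the text; the other batch sizes are illustrative, and whether the last incomplete batch is kept depends on the training framework.

```python
import math


def updates(num_train: int, batch_size: int, num_epochs: int, keep_last: bool = True) -> int:
    """Number of parameter updates performed over a full training run."""
    if keep_last:
        per_epoch = math.ceil(num_train / batch_size)   # last partial batch is used
    else:
        per_epoch = num_train // batch_size             # last partial batch is dropped
    return per_epoch * num_epochs


NUM_TRAIN = 1027   # training images reported in Section 2.1
NUM_EPOCHS = 25

for batch_size in (8, 16, 32, 64):   # only 8 is confirmed by the text
    print(batch_size, updates(NUM_TRAIN, batch_size, NUM_EPOCHS))
```

With a batch size of 8 the network receives several times as many updates as with a batch size of 64 over the same 25 epochs, so comparisons at a fixed epoch count tend to favor smaller batches.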

4.3. Advantages of KRS-Net in Structure

The test accuracy of the proposed KRS-Net was higher than that of AlexNet, VGG16, GoogLeNet, ResNet101, and DenseNet201 in this study, which can be attributed to the advantages of the KRS-Net structure. First, the original LRN of the AlexNet architecture is replaced by BN, which reduces the complexity of the network and improves the convergence of the network. Second, the addition of residual blocks deepens the network, which can extract deeper information and effectively overcome the gradient vanishing problem. Third, the fusion of SVM with an FC layer replaces the softmax classifier in AlexNet, which transforms the original nonlinear classification problem into a linear classification problem in high-dimensional space so that the test accuracy is further improved.

4.4. Influence of Structure on Training Time and Parameters

To identify the factors affecting the network training time and parameter quantity, we studied the training time and parameters of the six networks when the batch size was 8 and the epoch was 25, as shown in Table 4. It can be seen from Table 4 that the training times of KRS-Net and the lightweight network AlexNet were similar, but the test accuracy of the former was 4.19% higher than that of the latter. This may be because the addition of the BN layer improves the training speed and convergence of the network. In addition, KRS-Net has more network layers and connections but has a smaller network size and fewer parameters than VGG16 and AlexNet. This may be because the skip connection structure of the residual network not only overcomes the gradient vanishing problem but also reduces the network size and parameters.

Table 4. Parameters and training times of six classification networks.

4.5. Future Work

The evolution of koi has become more and more complex through many years of breeding and screening. To date, koi can be divided into thirteen categories in a narrow sense, but more than 100 subcategories have broadly been bred. If more than 100 varieties of koi can effectively be classified, the time cost and labor force required for koi breeding will be further reduced to a certain extent. Therefore, a multi-variety and lightweight classification network will be studied with a high accuracy rate and rapid speed in future work to lay the foundation for the research and development of multi-variety classification equipment.

The actual situation for the classification of koi varieties may be complex. Therefore, a multi-objective situation may occur, and some factors (such as posture change of koi, object occlusion, and illumination change) affect the classification accuracy of koi varieties. Our future work will focus on solving the problems of classifying koi varieties in complex situations.

5. Conclusions

Koi variety classification was studied to solve the problems caused by the high similarity among some varieties. In this study, a dataset including thirteen kinds of koi was established, and a koi variety classification network KRS-Net was proposed based on residual network and SVM. Compared with five other mainstream networks, the performance superiority of the proposed KRS-Net was demonstrated. This study provides a new solution for the classification of koi varieties, which can be extended to breeding, aquaculture, grade sorting, and other marine fields.

Author Contributions

Conceptualization, J.L.; methodology, J.L.; software, Y.Z.; validation, J.L. and L.D.; formal analysis, F.W.; investigation, Y.Z. and Q.L.; resources, F.W.; data curation, F.W.; writing—original draft preparation, Y.Z.; writing—review and editing, J.L.; visualization, W.X.; supervision, J.L. and L.D.; project administration, J.L.; funding acquisition, J.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research work was financially supported by the project of the National Natural Science Foundation of China (32073029), the key project of the Shandong Provincial Natural Science Foundation (ZR2020KC027), the postgraduate education quality improvement project of Shandong Province (SDYJG19134), the project of the China Scholarship Council (201908370048), and the Open Program of Key Laboratory of Cultivation and High-value Utilization of Marine Organisms in Fujian Province (2021fjscq08).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

With the consent of the authors, the data to this article can be found online at: http://33596lk211.qicp.vip/dataweb/index.html, accessed on 21 May 2022.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Pinkey, S. Study of the fresh water fish diversity of Koshi river of Nepal. Int. J. Fauna Biol. Stud. 2016, 3, 78–81. [Google Scholar]

- Nuwansi, K.; Verma, A.; Chandrakant, M.; Prabhath, G.; Peter, R. Optimization of stocking density of koi carp (Cyprinus carpio var. koi) with gotukola (Centella asiatica) in an aquaponic system using phytoremediated aquaculture wastewater. Aquaculture 2021, 532, 735993. [Google Scholar] [CrossRef]

- Wang, K.L.; Chen, S.N.; Huo, H.J.; Nie, P. Identification and expression analysis of sixteen Toll-like receptor genes, TLR1, TLR2a, TLR2b, TLR3, TLR5M, TLR5S, TLR7-9, TLR13a-c, TLR14, TLR21-23 in mandarin fish Siniperca chuatsi. Dev. Comp. Immunol. 2021, 121, 104100. [Google Scholar] [CrossRef]

- De Kock, S.; Gomelsky, B. Japanese Ornamental Koi Carp: Origin, Variation and Genetics; Biology and Ecology of Carp; Informa UK Limited: Boca Raton, FL, USA, 2015; pp. 27–53. [Google Scholar]

- Sun, X.; Chang, Y.; Ye, Y.; Ma, Z.; Liang, Y.; Li, T.; Jiang, N.; Xing, W.; Luo, L. The effect of dietary pigments on the coloration of Japanese ornamental carp (koi, Cyprinus carpio L.). Aquaculture 2012, 342, 62–68. [Google Scholar] [CrossRef]

- Bairwa, M.K.; Saharan, N.; Rawat, K.D.; Tiwari, V.K.; Prasad, K.P. Effect of LED light spectra on reproductive performance of Koi carp (Cyprinus carpio). Indian J. Anim. Res. 2017, 51, 1012–1018. [Google Scholar] [CrossRef]

- Xie, Z.; Wang, D.; Jiang, S.; Peng, C.; Wang, Q.; Huang, C.; Li, S.; Lin, H.; Zhang, Y. Chromosome-Level Genome Assembly and Transcriptome Comparison Analysis of Cephalopholis sonnerati and Its Related Grouper Species. Biology 2022, 11, 1053. [Google Scholar] [CrossRef]

- Nica, A.; Mogodan, A.; Simionov, I.-A.; Petrea, S.-M.; Cristea, V. The influence of stocking density on growth performance of juvenile Japanese ornamental carp (Koi, Cyprinus carpio L.). Sci. Pap. Ser. D Anim. Sci. 2020, 63, 483–488. [Google Scholar]

- Kim, J.-I.; Baek, J.-W.; Kim, C.-B. Image Classification of Amazon Parrots by Deep Learning: A Potentially Useful Tool for Wildlife Conservation. Biology 2022, 11, 1303. [Google Scholar] [CrossRef]

- Tian, X.; Pang, X.; Wang, L.; Li, M.; Dong, C.; Ma, X.; Wang, L.; Song, D.; Feng, J.; Xu, P. Dynamic regulation of mRNA and miRNA associated with the developmental stages of skin pigmentation in Japanese ornamental carp. Gene 2018, 666, 32–43. [Google Scholar] [CrossRef]

- Peng, F.; Chen, K.; Zhong, W. Classification and appreciation of three species of koi. Sci. Fish Farming 2018, 8, 82–83. [Google Scholar]

- Song, S.; Duan, P. Koi and its variety classification. Shandong Fish. 2009, 26, 53–54. [Google Scholar]

- Garland, J.; Hu, M.; Kesha, K.; Glenn, C.; Duffy, M.; Morrow, P.; Stables, S.; Ondruschka, B.; Da Broi, U.; Tse, R. An overview of artificial intelligence/deep learning. Pathology 2021, 53, S6. [Google Scholar] [CrossRef]

- Talib, M.A.; Majzoub, S.; Nasir, Q.; Jamal, D. A systematic literature review on hardware implementation of artificial intelligence algorithms. J. Supercomput. 2021, 77, 1897–1938.

- Liu, Y.; Yang, C.; Gao, Z.; Yao, Y. Ensemble deep kernel learning with application to quality prediction in industrial polymerization processes. Chemom. Intell. Lab. Syst. 2018, 174, 15–21.

- Wang, T.; Zhao, Y.; Sun, Y.; Yang, R.; Han, Z.; Li, J. Recognition approach based on data-balanced faster R CNN for winter jujube with different levels of maturity. Trans. Chin. Soc. Agric. Mach. 2020, 51, 457–463.

- Xu, W.; Zhao, L.; Li, J.; Shang, S.; Ding, X.; Wang, T. Detection and classification of tea buds based on deep learning. Comput. Electron. Agric. 2022, 192, 106547.

- Li, J.; Xu, C.; Jiang, L.; Xiao, Y.; Deng, L.; Han, Z. Detection and analysis of behavior trajectory for sea cucumbers based on deep learning. IEEE Access 2019, 8, 18832–18840.

- Xu, W.; Zhu, Z.; Ge, F.; Han, Z.; Li, J. Analysis of behavior trajectory based on deep learning in ammonia environment for fish. Sensors 2020, 20, 4425.

- Li, J.; Xu, W.; Deng, L.; Xiao, Y.; Han, Z.; Zheng, H. Deep Learning for Visual Recognition and Detection of Aquatic Animals: A Review. Rev. Aquac. 2022, 1–25.

- Xu, Y.; Shen, H. Review of Research on Biomedical Image Processing Based on Pattern Recognition. J. Electron. Inf. Technol. 2020, 42, 201–213.

- Sarica, A.; Vaccaro, M.G.; Quattrone, A.; Quattrone, A. A Novel Approach for Cognitive Clustering of Parkinsonisms through Affinity Propagation. Algorithms 2021, 14, 49.

- Liu, W.; Wang, Z.; Zeng, N.; Alsaadi, F.E.; Liu, X. A PSO-based deep learning approach to classifying patients from emergency departments. Int. J. Mach. Learn. Cybern. 2021, 12, 1939–1948.

- Han, Z.; Wan, J.; Deng, L.; Liu, K. Oil Adulteration identification by hyperspectral imaging using QHM and ICA. PLoS ONE 2016, 11, e0146547.

- Liu, W.; Wang, Z.; Liu, X.; Zeng, N.; Liu, Y.; Alsaadi, F.E. A survey of deep neural network architectures and their applications. Neurocomputing 2017, 234, 11–26.

- Eerola, T.; Kraft, K.; Grönberg, O.; Lensu, L.; Suikkanen, S.; Seppälä, J.; Tamminen, T.; Kälviäinen, H.; Haario, H. Towards operational phytoplankton recognition with automated high-throughput imaging and compact convolutional neural networks. Ocean Sci. Discuss. 2020, 62, 1–20.

- Zhu, D.; Qi, R.; Hu, P.; Su, Q.; Qin, X.; Li, Z. YOLO-Rip: A modified lightweight network for Rip Currents detection. Front. Mar. Sci. 2022, 9, 930478.

- Lu, Y.; Tung, C.; Kuo, Y. Identifying the species of harvested tuna and billfish using deep convolutional neural networks. ICES J. Mar. Sci. 2020, 77, 1318–1329.

- Rauf, H.T.; Lali, M.I.U.; Zahoor, S.; Shah, S.Z.H.; Rehman, A.U.; Bukhari, S.A.C. Visual features based automated identification of fish species using deep convolutional neural networks. Comput. Electron. Agric. 2019, 167, 105075.

- Knausgård, K.M.; Wiklund, A.; Sørdalen, T.K.; Halvorsen, K.T.; Kleiven, A.R.; Jiao, L.; Goodwin, M. Temperate fish detection and classification: A deep learning based approach. Appl. Intell. 2022, 52, 6988–7001.

- Ju, Z.; Xue, Y. Fish species recognition using an improved AlexNet model. Optik 2020, 223, 165499.

- Hridayami, P.; Putra, I.K.G.D.; Wibawa, K.S. Fish species recognition using VGG16 deep convolutional neural network. J. Comput. Sci. Eng. 2019, 13, 124–130.

- Huang, X.; Chen, W.; Yang, W. Improved Algorithm Based on the Deep Integration of Googlenet and Residual Neural Network; IOP Publishing: Bristol, UK, 2021; p. 012069.

- Xu, X.; Li, W.; Duan, Q. Transfer learning and SE-ResNet152 networks-based for small-scale unbalanced fish species identification. Comput. Electron. Agric. 2021, 180, 105878.

- Zhang, K.; Guo, Y.; Wang, X.; Yuan, J.; Ding, Q. Multiple feature reweight densenet for image classification. IEEE Access 2019, 7, 9872–9880.

- Gao, S.; Dai, Y.; Li, Y.; Jiang, Y.; Liu, Y. Augmented flame image soft sensor for combustion oxygen content prediction. Meas. Sci. Technol. 2022, 34, 015401.

- Liu, K.; Li, Y.; Yang, J.; Liu, Y.; Yao, Y. Generative principal component thermography for enhanced defect detection and analysis. IEEE Trans. Instrum. Meas. 2020, 69, 8261–8269.

- Zhu, H.; Yang, L.; Fei, J.; Zhao, L.; Han, Z. Recognition of carrot appearance quality based on deep feature and support vector machine. Comput. Electron. Agric. 2021, 186, 106185.

- Hemke, R.; Buckless, C.G.; Tsao, A.; Wang, B.; Torriani, M. Deep learning for automated segmentation of pelvic muscles, fat, and bone from CT studies for body composition assessment. Skelet. Radiol. 2020, 49, 387–395.

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual Explanations from Deep Networks via Gradient-based Localization. Int. J. Comput. Vis. 2020, 128, 336–359.

- Taheri-Garavand, A.; Ahmadi, H.; Omid, M.; Mohtasebi, S.S.; Carlomagno, G.M. An intelligent approach for cooling radiator fault diagnosis based on infrared thermal image processing technique. Appl. Therm. Eng. 2015, 87, 434–443.

- Zhang, Z.; Tang, Y.-G.; Yang, K. A two-stage restoration of distorted underwater images using compressive sensing and image registration. Adv. Manuf. 2021, 9, 273–285.

- Burçak, K.C.; Baykan, Ö.K.; Uğuz, H. A new deep convolutional neural network model for classifying breast cancer histopathological images and the hyperparameter optimisation of the proposed model. J. Supercomput. 2021, 77, 973–989.

- Goyal, P.; Dollár, P.; Girshick, R.; Noordhuis, P.; Wesolowski, L.; Kyrola, A.; Tulloch, A.; Jia, Y.; He, K. Accurate, large minibatch SGD: Training ImageNet in 1 hour. arXiv 2017, arXiv:1706.02677.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).