Simple Summary

Coronavirus disease 2019 is a worldwide pandemic that poses significant health risks. Medical imaging can serve as a supporting diagnostic test for coronavirus disease because it relies on available medical technologies and clinical findings. Classifying coronavirus disease from computed tomography chest images requires large-scale data collection and innovative artificial intelligence-based models. In this study, we explore the application of computer vision and an ensemble of deep learning models to automated coronavirus disease detection. To demonstrate the performance of the proposed model against recently developed deep learning models, an extensive comparative analysis is carried out, and the results show the superior performance of the proposed model on benchmark test images. Therefore, the proposed model has potential as an automated, accurate, and rapid tool for supporting the detection and classification of coronavirus disease.

Abstract

Coronavirus disease 2019 (COVID-19) has spread worldwide, and medical resources have become inadequate in several regions. Computed tomography (CT) scans can achieve precise and rapid COVID-19 diagnosis compared with the RT-PCR test. At the same time, artificial intelligence (AI) techniques, including machine learning (ML) and deep learning (DL), are useful for designing COVID-19 diagnosis models based on chest CT scans. Accordingly, this study focuses on the design of an artificial intelligence-based ensemble model for the detection and classification (AIEM-DC) of COVID-19. The AIEM-DC technique aims to accurately detect and classify COVID-19 using an ensemble of DL models. In addition, a Gaussian filtering (GF)-based preprocessing technique is applied to remove noise and improve image quality. Moreover, an ensemble of DL models, namely recurrent neural networks (RNN), long short-term memory (LSTM), and gated recurrent unit (GRU), whose hyperparameters are tuned by a shark optimization algorithm (SOA), is employed for feature extraction. Furthermore, an improved bat algorithm with a multiclass support vector machine (IBA-MSVM) model is applied to classify the CT scans. The novelty of the work lies in the design of the ensemble model together with the optimal parameter tuning of the MSVM model for COVID-19 classification. The effectiveness of the AIEM-DC technique is evaluated on a benchmark CT image data set, and the results show promising classification performance compared with recent state-of-the-art approaches.

1. Introduction

In December 2019, a new coronavirus disease 2019 (COVID-19) appeared in Wuhan, China, and rapidly became a global healthcare emergency [1]. Because of the high infection rate, fast diagnosis and optimized allocation of medical resources are crucial in pandemic regions. Fast and accurate diagnosis of COVID-19 helps isolate infected persons and can slow the spread of the disease. However, in pandemic regions, inadequate healthcare resources have become a major problem [2]. Hence, identifying high-risk patients with the worst prognoses, so that they receive early special care and medical resources, is critical in COVID-19 treatment. Currently, reverse transcription polymerase chain reaction (RT-PCR) is used as the gold standard for diagnosing COVID-19. However, the shortage of testing kits and the limited sensitivity of RT-PCR in pandemic areas increase the screening burden, and some infected people are not isolated immediately [3]. This accelerates the spread of COVID-19. In addition, owing to the lack of healthcare resources, some patients cannot receive prompt treatment. In such situations, detecting high-risk patients with the worst prognoses for early prevention and treatment is important. Consequently, fast diagnosis and the detection of high-risk patients with the worst prognoses are highly useful for the management and control of COVID-19.

To alleviate the shortage and inefficiency of current COVID-19 tests, considerable effort has been devoted to finding alternative testing systems [4]. Various studies using statistical models, machine learning (ML), and deep learning (DL) models have demonstrated that computed tomography (CT) scans show clear radiological findings in COVID-19 patients and serve as a more accessible and efficient testing method, because CT devices are widely available and can produce results rapidly [5,6,7]. Furthermore, to reduce the burden on healthcare experts of reading CT scans, several studies have proposed DL methods that interpret CT images automatically and predict whether a CT scan is positive for COVID-19 [5]. Although these works have shown promising results, they have two limitations. First, the CT data sets they employ cannot be shared publicly because of privacy concerns. As a result, these CT images cannot be reused by others to train models for diagnosing COVID-19 [6]. In addition, the limited availability of open-source annotated COVID-19 CT data sets considerably hinders research on, and development of, more innovative AI methods for accurate CT-based testing of COVID-19. Second, these works require a large number of CT scans during model training to reach performance that meets clinical standards. Such requirements are demanding in practice and may not be met by many hospitals, particularly when healthcare experts are heavily occupied caring for COVID-19 patients and are unlikely to have time to collect and annotate large numbers of COVID-19 CT scans.

As an artificial intelligence (AI) approach, DL has shown promising results in assisting lung disease analysis through CT images [7]. Benefiting from its strong feature learning capability, DL can automatically mine features associated with medical outcomes from CT images. Features learned by DL methods can reflect high-dimensional abstract mappings that are difficult for humans to perceive, yet they are highly related to the medical outcome [8]. Transfer learning (TL) aims to leverage data-rich source tasks to assist learning in data-deficient target tasks (here, CT-based diagnosis of COVID-19). A frequently used approach is to learn a strong deep feature extraction network by pre-training it on a large data set in the source task and then adapting the pretrained network to the target task by fine-tuning its weights on the small target data set [9]. However, TL can be sub-optimal because the source data may differ considerably from the target data in the visual appearance of the images and in the class labels, which biases the feature extraction network toward the source data and makes it generalize worse on the target data.

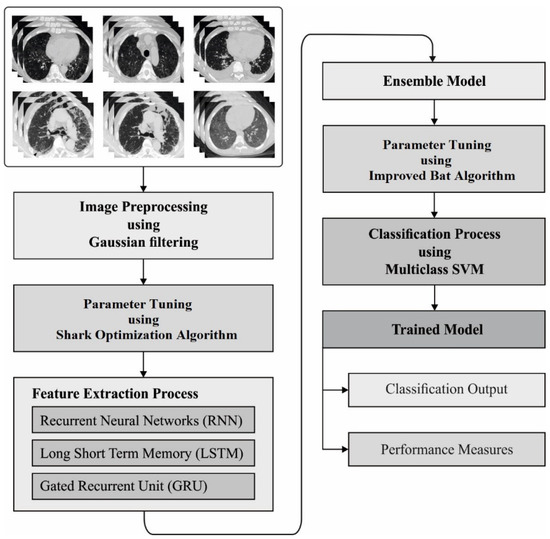

This study proposes an artificial intelligence-based ensemble model for the detection and classification (AIEM-DC) of COVID-19. First, a Gaussian filtering (GF)-based preprocessing technique is applied to remove noise and improve image quality. Next, an ensemble of DL models, namely recurrent neural networks (RNN), long short-term memory (LSTM), and gated recurrent unit (GRU), is employed for feature extraction, with its hyperparameters tuned by a shark optimization algorithm (SOA). Finally, an improved bat algorithm with a multiclass support vector machine (IBA-MSVM) model is used as the classifier. The AIEM-DC technique is validated on a benchmark CT image data set, and the results show promising classification performance compared with recent state-of-the-art approaches.

2. Literature Review

This section offers a brief review of existing COVID-19 diagnosis and classification models. Serte and Demirel [10] proposed an AI method for classifying COVID-19 and normal CT volumes. The model uses a ResNet-50 DL model to predict COVID-19 on each CT image of a three-dimensional CT scan and then fuses the image-level predictions to detect COVID-19 on the three-dimensional CT volume. In Li et al. [11], an AI scheme was proposed to automatically segment and quantify COVID-19-infected lung regions on thick-section chest CT images. A total of 531 CT scans from 204 COVID-19 patients were collected from designated COVID-19 hospitals. The automatically segmented lung abnormalities were compared with the manual segmentations of two experienced radiologists using the Dice coefficient on a randomly selected subset of 30 CT scans. Two imaging biomarkers, POI and iHU, were computed automatically to assess disease severity and progression.

Alshazly et al. [12] explore how a deep learning model trained on chest CT images can detect COVID-19-infected persons quickly and automatically. They adapted several deep network architectures and presented a TL strategy with custom-sized inputs tailored to each architecture to achieve better results. Yousefzadeh et al. [13] present ai-corona, a radiologist-assistant DL framework for COVID-19 diagnosis from chest CT scans. The framework incorporates an effective EfficientNetB3-based feature extractor. They used three data sets: the CC-CCII set, MosMedData, and the MDH cohort. In total, these data sets comprise 7184 scans from 5693 subjects and include the normal, COVID-19, NCA, CP, and non-pneumonia classes. Hasan et al. [14] combine DL-extracted features with hand-crafted Q-deformed entropy features to discriminate among healthy, COVID-19, and pneumonia CT lung images. In this work, preprocessing is used to reduce the effect of intensity variations between CT slices. Next, histogram thresholding is used to isolate the background of the CT lung scans. Features are then extracted from each CT lung scan using Q-deformed entropy and DL algorithms, and the resulting features are classified with an LSTM neural network classifier.

In Shah et al. [15], the DL methods employed are based on CNN models. The work focuses on distinguishing COVID-19 from non-COVID-19 CT images with different DL methods. A self-developed model, CTnet-10, designed for COVID-19 diagnosis, achieved an accuracy of 82.1%. The other models tested were VGG-16, DenseNet-169, VGG-19, ResNet-50, and InceptionV3. In Zheng et al. [16], a weakly supervised DL-based software system that uses three-dimensional CT volumes to detect COVID-19 was proposed. For each patient, the lung region is segmented using a pretrained UNet; the segmented three-dimensional lung region is then fed into a three-dimensional DNN to predict the probability of COVID-19 infection.

Shalbaf and Vafaeezadeh [17] introduce an automated method based on an ensemble of deep TL models for the diagnosis of COVID-19. In total, 15 pretrained CNN architectures (NasNetLarge, EfficientNet B0-B5, InceptionV3, NasNetMobile, SeResnet50, ResNet-50, Xception, Inception_resnet_v2, ResNext50, and DenseNet121) are employed and then fine-tuned on the target task. An ensemble is then constructed by majority voting over the best combination of deep TL outputs to further improve detection accuracy. Wu et al. [18] present a weakly supervised deep active learning model named COVID-AL for diagnosing COVID-19 from CT scans with patient-level labels. COVID-AL consists of lung region segmentation with a two-dimensional UNet and COVID-19 diagnosis with a new hybrid active learning strategy that simultaneously considers predicted loss and sample diversity.

3. The Proposed Model

In this study, a new AIEM-DC technique is proposed for the detection and classification of COVID-19 using chest CT scans. The AIEM-DC technique aims to accurately detect and classify COVID-19 using an ensemble of DL models. It involves GF-based preprocessing, ensemble DL-based feature extraction, SOA-based hyperparameter tuning, MSVM-based classification, and IBA-based parameter tuning. Figure 1 shows the overall block diagram of the AIEM-DC model. These processes are described in the following sections.

Figure 1.

The process flow of the proposed model.

3.1. Stage 1: Gaussian Filtering (GF)-Based Preprocessing

In the first stage, GF is applied to remove noise and improve the quality of the CT scans. The two-dimensional GF is widely used for noise removal and smoothing. It can demand considerable processing resources, and executing it efficiently remains an active research topic. The convolution kernel is the Gaussian operator, and Gaussian smoothing is performed by convolving the image with it. The 1D Gaussian operator is given by:

$$G_{1D}(x) = \frac{1}{\sqrt{2\pi}\,\sigma} \exp\left(-\frac{x^2}{2\sigma^2}\right)$$

The optimal smoothing filter for an image is localized in both the spatial and frequency domains, satisfying the uncertainty relation [19]:

$$\Delta x \, \Delta \omega \ge \frac{1}{2}$$

The 2D Gaussian operator is:

$$G_{2D}(x, y) = \frac{1}{2\pi\sigma^2} \exp\left(-\frac{x^2 + y^2}{2\sigma^2}\right)$$

where $\sigma$ is the standard deviation (SD) of the Gaussian operator; the larger $\sigma$ is, the stronger the smoothing. $(x, y)$ are the Cartesian coordinates of the image.
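A minimal pure-Python sketch of the GF step, for illustration only: it samples a 2D Gaussian kernel, normalizes it, and smooths a grayscale image with it. The kernel radius of about 3σ, the replicated-border handling, and the function names `gaussian_kernel_2d` and `gaussian_filter` are assumptions, since the section does not state them; a production pipeline would normally use an optimized library filter instead.

```python
import math


def gaussian_kernel_2d(sigma, radius=None):
    """Sampled 2D Gaussian, normalized to sum to 1 (radius ~3*sigma assumed)."""
    if radius is None:
        radius = max(1, int(math.ceil(3 * sigma)))
    kernel = [
        [math.exp(-(x * x + y * y) / (2 * sigma * sigma))
         for x in range(-radius, radius + 1)]
        for y in range(-radius, radius + 1)
    ]
    total = sum(sum(row) for row in kernel)
    return [[v / total for v in row] for row in kernel]


def gaussian_filter(image, sigma):
    """Smooth a 2D grayscale image (list of lists); borders are replicated."""
    k = gaussian_kernel_2d(sigma)
    r = len(k) // 2
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for di in range(-r, r + 1):
                for dj in range(-r, r + 1):
                    # clamp indices so edge pixels are reused (assumed border rule)
                    ii = min(max(i + di, 0), h - 1)
                    jj = min(max(j + dj, 0), w - 1)
                    # kernel is symmetric, so correlation equals convolution here
                    acc += k[di + r][dj + r] * image[ii][jj]
            out[i][j] = acc
    return out


# Smooth a tiny synthetic "slice" containing one bright pixel
spike = [[0.0] * 5 for _ in range(5)]
spike[2][2] = 1.0
smoothed = gaussian_filter(spike, sigma=0.8)
```

Normalizing the sampled kernel numerically, rather than relying on the analytic $1/(2\pi\sigma^2)$ factor, keeps the mean intensity unchanged on a truncated grid, so a constant image passes through the filter unchanged.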

3.2. Stage 2: Ensemble Feature Extraction

During feature extraction, the preprocessed CT scans are fed into the three DL models, each of which produces a feature vector; these outputs are then combined by the ensemble process. The SOA is then applied to tune the hyperparameters of the DL models.

3.2.1. RNN Model

RNNs have recently become a preferred choice, particularly for sequential data [20]. In a classic RNN, each node at a given time step also receives input from the preceding time step through a feedback loop. Each node computes the current hidden state and output from the current input and the preceding hidden state as:

$$h_t = f\left(W_{xh} x_t + W_{hh} h_{t-1} + b_h\right)$$

$$y_t = f\left(W_{hy} h_t + b_y\right)$$

where $h_t$ is the hidden state at time step $t$, $x_t$ and $y_t$ are the input and output at time step $t$, $W_{hh}$ are the weights of the recurrent connection into the hidden layer, $W_{xh}$ and $W_{hy}$ are the input-to-hidden and hidden-to-output weights, $b_h$ and $b_y$ are the biases of the hidden state and the output, and $f$ is the activation function applied at every node in the network.
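The recurrence $h_t = f(W_{xh} x_t + W_{hh} h_{t-1} + b_h)$, $y_t = f(W_{hy} h_t + b_y)$ can be sketched in plain Python as follows. The nested-list matrices, the tanh default for $f$, the zero initial state $h_0$, and the idea of using the last hidden state as the feature vector passed to the ensemble are all assumptions for illustration; the section does not specify how a CT scan is turned into a sequence.

```python
import math


def matvec(W, v):
    """Multiply a nested-list matrix W by a vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def rnn_forward(xs, W_xh, W_hh, W_hy, b_h, b_y, f=math.tanh):
    """Run a simple RNN over the sequence xs and return all h_t and y_t."""
    h = [0.0] * len(b_h)  # h_0 initialized to zeros (assumption)
    hs, ys = [], []
    for x in xs:
        # h_t = f(W_xh x_t + W_hh h_{t-1} + b_h)
        pre_h = [a + c + b for a, c, b in zip(matvec(W_xh, x), matvec(W_hh, h), b_h)]
        h = [f(z) for z in pre_h]
        # y_t = f(W_hy h_t + b_y)
        y = [f(a + b) for a, b in zip(matvec(W_hy, h), b_y)]
        hs.append(h)
        ys.append(y)
    return hs, ys


# Hypothetical 1-unit example over a two-step sequence
hs, ys = rnn_forward([[1.0], [0.0]], W_xh=[[1.0]], W_hh=[[0.5]],
                     W_hy=[[1.0]], b_h=[0.0], b_y=[0.0])
features = hs[-1]  # last hidden state used as a feature vector (assumption)
```

In the second step the input is zero, so the hidden state is driven only by the recurrent term $W_{hh} h_{t-1}$, which is the feedback loop described above.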

3.2.2. LSTM Model

The major drawback of the conventional RNN is that, as the number of time steps grows, the network fails to capture context from states many time steps earlier; this is known as the long-term dependency problem. Because of the depth of the unrolled network and the recurrent structure of the classic RNN, exploding and vanishing gradients are also encountered frequently. To address these issues, LSTM networks use memory cells with several gates in the hidden layers [20,21]. A hidden-layer block of LSTM cells has three gate controllers:

- The forget gate $f_t$ determines which parts of the long-term state $c_{t-1}$ should be erased;

- The input gate $i_t$ controls which parts of the candidate $g_t$ should be added to the long-term state;

- The output gate $o_t$ determines which parts of the long-term state $c_t$ should be read and output to $h_t$ and $y_t$.

The following equations give the long-term state, the short-term state, and the output of the cell at each time step $t$:

$$i_t = \sigma\left(W_{xi} x_t + W_{hi} h_{t-1} + b_i\right)$$

$$f_t = \sigma\left(W_{xf} x_t + W_{hf} h_{t-1} + b_f\right)$$

$$o_t = \sigma\left(W_{xo} x_t + W_{ho} h_{t-1} + b_o\right)$$

$$g_t = \tanh\left(W_{xg} x_t + W_{hg} h_{t-1} + b_g\right)$$

$$c_t = f_t \odot c_{t-1} + i_t \odot g_t$$

$$y_t = h_t = o_t \odot \tanh\left(c_t\right)$$

where $W_{xi}$, $W_{xf}$, $W_{xo}$, and $W_{xg}$ are the weight matrices for the input vector $x_t$; $W_{hi}$, $W_{hf}$, $W_{ho}$, and $W_{hg}$ are the weight matrices for the short-term state of the previous time step $h_{t-1}$; $b_i$, $b_f$, $b_o$, and $b_g$ are biases; $\sigma$ is the sigmoid function; and $\odot$ denotes element-wise multiplication.
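A minimal pure-Python sketch of one LSTM time step, following the standard gate updates $c_t = f_t \odot c_{t-1} + i_t \odot g_t$ and $h_t = o_t \odot \tanh(c_t)$. The dictionary layout of the parameters, the helper names `affine` and `lstm_step`, and the weight values in the example are hypothetical; a real model would use a DL framework's LSTM layer.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def affine(W_x, W_h, b, x, h):
    """Compute W_x x + W_h h + b for nested-list matrices."""
    return [
        sum(w * xi for w, xi in zip(row_x, x))
        + sum(w * hi for w, hi in zip(row_h, h))
        + bi
        for row_x, row_h, bi in zip(W_x, W_h, b)
    ]


def lstm_step(x, h_prev, c_prev, params):
    """One LSTM time step; params maps 'i', 'f', 'o', 'g' to (W_x, W_h, b)."""
    i = [sigmoid(z) for z in affine(*params["i"], x, h_prev)]    # input gate
    f = [sigmoid(z) for z in affine(*params["f"], x, h_prev)]    # forget gate
    o = [sigmoid(z) for z in affine(*params["o"], x, h_prev)]    # output gate
    g = [math.tanh(z) for z in affine(*params["g"], x, h_prev)]  # candidate
    # long-term state: keep part of c_{t-1}, add part of g_t
    c = [fi * ci + ii * gi for fi, ci, ii, gi in zip(f, c_prev, i, g)]
    # short-term state, which is also the output y_t
    h = [oi * math.tanh(ci) for oi, ci in zip(o, c)]
    return h, c


# Hypothetical 1-unit cell: forget gate saturated open, input gate closed
params = {
    "i": ([[0.0]], [[0.0]], [-20.0]),
    "f": ([[0.0]], [[0.0]], [20.0]),
    "o": ([[0.0]], [[0.0]], [0.0]),
    "g": ([[1.0]], [[0.0]], [0.0]),
}
h, c = lstm_step([1.0], [0.0], [1.0], params)  # c stays close to 1.0
```

The example illustrates why LSTMs ease long-term dependencies: with the forget gate open and the input gate closed, the cell state is carried forward almost unchanged.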

3.2.3. GRU Model

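In GRU cells [22], the two state vectors of the LSTM cell are merged into a single vector $h_t$. A single gate controller $z_t$ controls both the forget gate and the input gate. If $z_t$ outputs 1, the forget gate is open and the input gate is closed; if it outputs 0, the forget gate is closed and the input gate is open. In other words, whenever a new input is stored at a time step, the earlier memory at that location is erased first. Since the GRU has no output gate, the full state vector is output at every time step, so the GRU transfers and combines information with fewer gates than the LSTM. Intuitively, the reset gate $r_t$ determines how the new input is combined with the previous memory, and the update gate $z_t$ determines how much of the previous memory is retained when computing the new state. The GRU equations, which differ from those of the LSTM as described above, are:

$$z_t = \sigma\left(W_{xz} x_t + W_{hz} h_{t-1} + b_z\right)$$

$$r_t = \sigma\left(W_{xr} x_t + W_{hr} h_{t-1} + b_r\right)$$

$$g_t = \tanh\left(W_{xg} x_t + W_{hg}\left(r_t \odot h_{t-1}\right) + b_g\right)$$

$$h_t = z_t \odot h_{t-1} + \left(1 - z_t\right) \odot g_t$$

where $W_{xz}$, $W_{xr}$, and $W_{xg}$ are the weight matrices for the input vector $x_t$; $W_{hz}$, $W_{hr}$, and $W_{hg}$ are the weight matrices for the state of the previous time step $h_{t-1}$; $b_z$, $b_r$, and $b_g$ are biases; $\sigma$ is the sigmoid function; and $\odot$ denotes element-wise multiplication.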
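A minimal pure-Python sketch of one GRU time step using the standard update $h_t = z_t \odot h_{t-1} + (1 - z_t) \odot g_t$, where the reset gate $r_t$ scales $h_{t-1}$ before it enters the candidate $g_t$. The parameter layout and the values in the example are hypothetical and chosen only to show the two extreme behaviors of the update gate.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def affine(W_x, W_h, b, x, h):
    """Compute W_x x + W_h h + b for nested-list matrices."""
    return [
        sum(w * xi for w, xi in zip(row_x, x))
        + sum(w * hi for w, hi in zip(row_h, h))
        + bi
        for row_x, row_h, bi in zip(W_x, W_h, b)
    ]


def gru_step(x, h_prev, params):
    """One GRU time step; params maps 'z', 'r', 'g' to (W_x, W_h, b)."""
    z = [sigmoid(v) for v in affine(*params["z"], x, h_prev)]  # update gate
    r = [sigmoid(v) for v in affine(*params["r"], x, h_prev)]  # reset gate
    # the reset gate decides how much of h_{t-1} feeds the candidate
    rh = [ri * hi for ri, hi in zip(r, h_prev)]
    g = [math.tanh(v) for v in affine(*params["g"], x, rh)]    # candidate state
    # z -> 1 keeps the old memory, z -> 0 replaces it with the candidate
    return [zi * hi + (1.0 - zi) * gi for zi, hi, gi in zip(z, h_prev, g)]


# Hypothetical 1-unit cells: update gate saturated at 1 (keep) and at 0 (replace)
keep = {"z": ([[0.0]], [[0.0]], [20.0]),
        "r": ([[0.0]], [[0.0]], [0.0]),
        "g": ([[1.0]], [[0.0]], [0.0])}
swap = dict(keep, z=([[0.0]], [[0.0]], [-20.0]))
h_keep = gru_step([0.3], [0.8], keep)  # close to [0.8]: old memory kept
h_swap = gru_step([0.3], [0.8], swap)  # close to [tanh(0.3)]: memory replaced
```

Because one gate plays the role of both the forget and input gates, keeping the old memory and admitting new input are mutually exclusive at the extremes, as the text describes.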

3.2.4. Ensemble Modeling

The AIEM-DC technique uses RNN, LSTM, and GRU models for feature extraction. To aggregate the outputs of these three DL models, each model is trained on the individual feature vectors, and 10-fold cross-validation is used as the fitness function. Consider a data set of $N$ images with class labels (COVID-19 and non-COVID-19), defined as:

$$I = \{I_1, I_2, \dots, I_N\}$$

Let $M$ denote the set of DL models, in this case the three DL models:

$$M = \{M_1, M_2, M_3\} = \{\text{RNN}, \text{LSTM}, \text{GRU}\}$$

The images are fed into the DL models to generate the set CN, as expressed in Equation (18).

Each DL model $M_j$ makes a classification decision $d_{ij}$ for image $I_i$, where 1 denotes non-COVID and the other label denotes COVID. The decisions can be represented as in Equation (19) [21]:

$$D = \begin{bmatrix} d_{11} & d_{12} & d_{13} \\ \vdots & \vdots & \vdots \\ d_{N1} & d_{N2} & d_{N3} \end{bmatrix}$$

Note that each element $d_{ij}$ of matrix $D$ is the output of model $M_j$ for image $I_i$, according to its position in the matrix. Moreover, a score $s_{ij}$ is associated with each decision and represents the posterior probability that image $I_i$ belongs to the predicted class. The set of scores is defined as:

$$S = \left[s_{ij}\right], \qquad i = 1, \dots, N, \quad j = 1, 2, 3$$

Here, each element $s_{ij}$ of matrix $S$ is the posterior probability output by model $M_j$ for image $I_i$, according to its position in the matrix.
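The section defines a decision matrix and a score matrix for the three models but does not spell out the fusion rule itself. The sketch below therefore shows two common options side by side, under stated assumptions: hard majority voting over the decisions, and averaging the per-class posterior probabilities (soft voting). Storing a full probability vector per model and image, the class names, and all numbers are hypothetical.

```python
from collections import Counter


def majority_vote(decisions):
    """Fuse an N x M decision matrix (row i: labels from the M models)."""
    return [Counter(row).most_common(1)[0][0] for row in decisions]


def mean_posterior_vote(scores, classes):
    """Fuse an N x M x K score array by averaging each model's class
    posteriors for an image and returning the class with the highest mean."""
    fused = []
    for per_model in scores:
        mean = [sum(p[c] for p in per_model) / len(per_model)
                for c in range(len(classes))]
        fused.append(classes[max(range(len(classes)), key=mean.__getitem__)])
    return fused


CLASSES = ["non-COVID", "COVID"]
# Hypothetical outputs of (RNN, LSTM, GRU) for three images
D = [["COVID", "COVID", "non-COVID"],
     ["non-COVID", "non-COVID", "COVID"],
     ["COVID", "non-COVID", "COVID"]]
S = [[[0.2, 0.8], [0.4, 0.6], [0.7, 0.3]],
     [[0.9, 0.1], [0.6, 0.4], [0.3, 0.7]],
     [[0.1, 0.9], [0.55, 0.45], [0.2, 0.8]]]
print(majority_vote(D))                # ['COVID', 'non-COVID', 'COVID']
print(mean_posterior_vote(S, CLASSES)) # ['COVID', 'non-COVID', 'COVID']
```

With three models and two classes, majority voting can never tie; soft voting additionally lets a confident model outweigh two uncertain ones, which is one reason the posterior scores are kept alongside the decisions.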

3.2.5. Hyperparameter Tuning

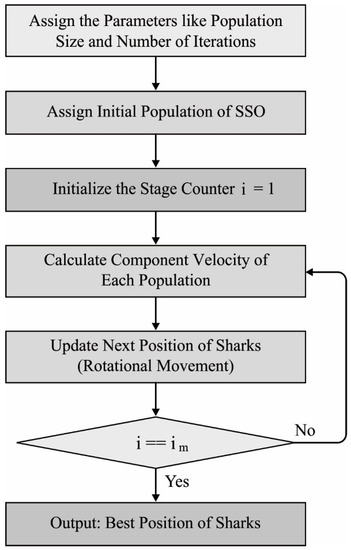

To tune the hyperparameters of the three DL models (such as batch size, time step, number of layers, learning rate, weight decay, and number of epochs), the SOA is employed so as to maximize classification performance. SOA is an effective bio-inspired optimization algorithm [23] that has been applied in many settings [24,25,26], such as cloud job scheduling, solving mathematical functions, training ANNs, load forecasting, medical image processing, and optimal reservoir operation. SOA is inspired by the hunting behavior of sharks. The rotational movement of the sharks is an important operator in SOA for escaping local optima. Figure 2 illustrates the flowchart of SOA. SOA rests on the following assumptions:

Figure 2.

Flowchart of SOA.

- (1) Injured fish are considered the shark's prey;

- (2) The shark tries to find the injured fish by sensing the blood particles released from the injured fish's body;

- (3) The velocity of the injured fish is negligible compared with the shark's velocity.

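In SOA, the position of each shark is a candidate solution of the optimization problem [22]:

$$X_j^1 = \left[x_{j,1}^1, x_{j,2}^1, \dots, x_{j,ND}^1\right]$$

where $X_j^1$ is the initial location of the $j$th shark, $x_{j,k}^1$ is the $k$th dimension of the shark's location, and $ND$ is the number of decision variables. As a shark gets closer to the injured fish, it senses stronger odor particles and therefore increases its velocity. Each shark updates its velocity by:

$$v_{j,k}^{m} = \eta_m R_1 \left.\frac{\partial OF}{\partial x_k}\right|_{x_{j,k}^{m}} + \alpha_m R_2 \, v_{j,k}^{m-1}, \qquad \left|v_{j,k}^{m}\right| \le \beta_m \left|v_{j,k}^{m-1}\right|$$

where $v_{j,k}^{m}$ is the $k$th dimension of the $j$th shark's velocity, $\beta_m$ is the velocity limiter, $\alpha_m$ is the inertia coefficient, $m$ is the stage number, $\eta_m$ is a coefficient in $[0, 1]$, $\partial OF / \partial x_k$ is the gradient of the objective function, and $R_1$ and $R_2$ are random numbers in $[0, 1]$. The shark then performs a forward movement (FM) based on its previous location and velocity:

$$Y_j^{m+1} = X_j^{m} + V_j^{m} \, \Delta t_m$$

where $Y_j^{m+1}$ is the new location of the $j$th shark after the forward movement, $X_j^{m}$ is its current location, $V_j^{m}$ is its velocity vector, and $\Delta t_m$ is the time interval. The shark also performs a rotational movement to escape from local optima:

$$Z_j^{m+1,l} = Y_j^{m+1} + R_3 \, Y_j^{m+1}, \qquad l = 1, 2, \dots, L$$

where $Z_j^{m+1,l}$ is the location of the shark after the rotational movement, $R_3$ is a random number in $[-1, 1]$, and $L$ is the number of points in the local search.

For maximization problems, the final position of the shark is chosen as:

$$X_j^{m+1} = \arg\max\left\{OF\left(Y_j^{m+1}\right), OF\left(Z_j^{m+1,1}\right), \dots, OF\left(Z_j^{m+1,L}\right)\right\}$$

The shark positions are initialized randomly. Next, the objective function is evaluated for every agent, and the shark with the best objective value is identified. The velocities and locations of the sharks are then updated.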
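A minimal sketch of the SOA loop on a toy maximization problem: an odor-gradient velocity update with an inertia term and a velocity limiter, a forward movement, a rotational local search around the new position, and greedy selection of the best candidate. Several details are assumptions rather than statements from the text: the gradient of the objective is estimated by central differences, positions are clipped to a box, the coefficients η, α, β, Δt and the number of rotation points are constant illustrative values, and the quadratic objective merely stands in for the cross-validated classification performance over DL hyperparameters.

```python
import random


def numerical_gradient(f, x, h=1e-6):
    """Central-difference estimate of the gradient of f at x (assumption)."""
    grad = []
    for k in range(len(x)):
        xp, xm = list(x), list(x)
        xp[k] += h
        xm[k] -= h
        grad.append((f(xp) - f(xm)) / (2 * h))
    return grad


def soa_maximize(f, dim, bounds, n_sharks=10, n_stages=50, n_rot=5,
                 eta=0.9, alpha=0.1, beta=4.0, dt=1.0, seed=0):
    """Maximize f over a box with shark-smell-style updates."""
    rng = random.Random(seed)
    lo, hi = bounds
    clip = lambda z: min(max(z, lo), hi)
    X = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_sharks)]
    V = [[0.0] * dim for _ in range(n_sharks)]
    for _ in range(n_stages):
        for j in range(n_sharks):
            grad = numerical_gradient(f, X[j])
            for k in range(dim):
                # odor-gradient term plus inertia term
                v = eta * rng.random() * grad[k] + alpha * rng.random() * V[j][k]
                # velocity limiter: |v^m| <= beta * |v^(m-1)|
                if V[j][k] != 0.0:
                    limit = beta * abs(V[j][k])
                    v = max(-limit, min(limit, v))
                V[j][k] = v
            # forward movement: Y = X + V * dt
            Y = [clip(X[j][k] + V[j][k] * dt) for k in range(dim)]
            # rotational movement: Z = Y + R3 * Y with R3 in [-1, 1]
            candidates = [Y]
            for _ in range(n_rot):
                r3 = rng.uniform(-1.0, 1.0)
                candidates.append([clip(y + r3 * y) for y in Y])
            # keep the candidate with the largest objective value
            X[j] = max(candidates, key=f)
    best = max(X, key=f)
    return best, f(best)


# Toy objective standing in for validation performance (hypothetical)
objective = lambda p: -((p[0] - 1.0) ** 2 + (p[1] + 2.0) ** 2)
best, value = soa_maximize(objective, dim=2, bounds=(-5.0, 5.0))
```

For real hyperparameters such as the number of layers or the batch size, the continuous positions would additionally have to be rounded or mapped to valid discrete values before each evaluation.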

3.3. Stage 3: IBA-MSVM-Based Classification

In the final stage, the IBA-MSVM model receives the feature vectors as input and assigns class labels to them. MSVM classification is based on the Vapnik–Chervonenkis (VC) dimension theory of statistical learning. The key idea of MSVM is to map the non-linearly inseparable input data (here, the extracted feature vectors) into a high-dimensional feature space, in which they become linearly separable, using a mapping $\phi(\cdot)$, and then to find the optimal hyperplane $w \cdot \phi(x) + b = 0$ by solving the following convex optimization problem (the soft-margin problem):

$$\min_{w, b, \xi} \ \frac{1}{2}\left\|w\right\|^2 + C \sum_{i=1}^{n} \xi_i$$

subject to

$$y_i\left(w \cdot \phi(x_i) + b\right) \ge 1 - \xi_i, \qquad \xi_i \ge 0, \qquad i = 1, \dots, n$$

where $w$ is the coefficient vector of the hyperplane in the feature space, $b$ is the threshold of the hyperplane, $\xi_i$ are the slack variables introduced for classification errors, and $C$ is the penalty factor for errors [27]. The parameter $C$ controls the penalty on misclassified samples, and its value is usually chosen by cross-validation. Larger values of $C$ generally lead to a smaller margin with fewer training errors, whereas smaller values of $C$ produce a wider margin at the cost of more misclassifications.

The feature space is very high-dimensional, so computing in it directly leads to the “curse of dimensionality.” However, because $w = \sum_{i} \alpha_i y_i \phi(x_i)$, where $\alpha_i$ are the Lagrange multipliers, every MSVM operation in the feature space reduces to dot products [28]. Kernel functions [29], $K(x_i, x_j) = \phi(x_i) \cdot \phi(x_j)$, compute these dot products efficiently and can therefore be introduced into the SVM. As a result, the explicit mapping $\phi$ from the original input space to the feature space never needs to be known. The choice of kernel and its coefficients is therefore essential to the computational performance and accuracy of MSVM classification.

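Commonly used kernel functions include the following. The linear kernel is defined as:

$$K(x_i, x_j) = x_i \cdot x_j$$

The polynomial kernel is given by Equation (28):

$$K(x_i, x_j) = \left(\gamma \, x_i \cdot x_j + r\right)^{d}$$

where $\gamma > 0$, $r \ge 0$, and $d$ is the degree of the polynomial.

The Gaussian kernel is:

$$K(x_i, x_j) = \exp\left(-\gamma \left\|x_i - x_j\right\|^2\right)$$

where $\gamma > 0$.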

MSVM kernel functions can be broadly divided into local and global kernels. For global kernels, samples that are far apart still have a large influence on the kernel value, whereas for local kernels, only samples close to each other significantly affect the kernel value. The linear and polynomial kernels are typical global kernels, whereas the Gaussian radial basis function (RBF) kernel is a local kernel.
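The three kernels and the local/global distinction can be checked numerically with a short sketch. The parameterizations $(\gamma\, x_i \cdot x_j + r)^d$ and $\exp(-\gamma \lVert x_i - x_j \rVert^2)$ and the default values $\gamma$, $r$, $d$ are assumptions (they match a widely used convention), and `gram_matrix` is a hypothetical helper showing the kernel matrix an SVM solver would consume.

```python
import math


def linear_kernel(a, b):
    return sum(x * y for x, y in zip(a, b))


def polynomial_kernel(a, b, gamma=1.0, r=1.0, d=2):
    return (gamma * linear_kernel(a, b) + r) ** d


def rbf_kernel(a, b, gamma=0.5):
    sq_dist = sum((x - y) ** 2 for x, y in zip(a, b))
    return math.exp(-gamma * sq_dist)


def gram_matrix(X, kernel, **params):
    """Kernel (Gram) matrix K[i][j] = kernel(X[i], X[j])."""
    return [[kernel(a, b, **params) for b in X] for a in X]


origin, near, far = [0.0, 0.0], [0.1, 0.0], [10.0, 0.0]
# Local kernel: the value collapses toward 0 as the points move apart
print(rbf_kernel(origin, near), rbf_kernel(origin, far))
# Global kernel: a distant point still produces a large kernel value
print(polynomial_kernel([1.0, 1.0], near), polynomial_kernel([1.0, 1.0], far))
```

Since the RBF value depends only on distance, its width parameter $\gamma$ is usually tuned together with $C$, which is the kind of two-parameter search the IBA is used for.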

Finally, the parameters of the MSVM are tuned using an improved bat algorithm (IBA). BA is a powerful optimization method that has been widely used in applications such as image processing, parameter extraction of photovoltaic models, satellite formation, training ELM models, optimal control of power systems, and feature selection (FS) [30,31,32]. The advantages of BA include fast convergence and strong exploration and exploitation. Bats use their echolocation ability to sense distance and to distinguish between food and obstacles. The loudness and pulse emission rate of the bats are updated in every iteration. First, a random population of bats is initialized, and each bat's position is treated as a vector of decision variables. The frequency, velocity, and location of each bat are updated by:

$$f_i = f_{\min} + \left(f_{\max} - f_{\min}\right)\beta$$

$$v_i^{t} = v_i^{t-1} + \left(x_i^{t-1} - x_*\right) f_i$$

$$x_i^{t} = x_i^{t-1} + v_i^{t}$$

where $\beta \in [0, 1]$ is a random number, $f_{\min}$ and $f_{\max}$ are the minimum and maximum frequencies, $x_*$ is the current best solution, $v_i^t$ and $x_i^t$ are the velocity and location of the $i$th bat at iteration $t$, and $f_i$ is the frequency of the $i$th bat. Each bat also uses a random walk as a local search:

$$x_{new} = x_{old} + \varepsilon A^{t}$$

where $x_{new}$ is the new location of the bat, $x_{old}$ is its old location, $A^t$ is the average loudness at iteration $t$, and $\varepsilon \in [-1, 1]$ is a random number. The loudness and pulse rate of each bat are updated by:

$$A_i^{t+1} = \alpha A_i^{t}, \qquad r_i^{t+1} = r_i^{0}\left[1 - \exp(-\gamma t)\right]$$

where $\alpha$ and $\gamma$ are constants, $r_i$ is the pulse rate of the $i$th bat, and $A_i^t$ is its loudness at iteration $t$. First, the initial population and random parameter values are set [24]. Next, the objective function is evaluated for every bat to measure the quality of its solution. Finally, the bat with the best objective value is identified, and the velocities and positions of the bats are updated.
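A minimal sketch of the standard bat algorithm loop (frequency, velocity, and position updates; a loudness-scaled random walk around the best solution; loudness decay and pulse-rate growth on acceptance) on a toy minimization problem. The initial loudness of 1, the initial pulse rate of 0.5, the `walk_scale` factor, the box clipping, and the sphere objective are assumptions; in AIEM-DC the objective would instead be something like the cross-validated MSVM error as a function of its parameters.

```python
import math
import random


def bat_algorithm(f, dim, bounds, n_bats=20, n_iter=300, f_min=0.0, f_max=2.0,
                  alpha=0.9, gamma=0.9, walk_scale=0.2, seed=1):
    """Minimize f over a box with standard bat algorithm updates."""
    rng = random.Random(seed)
    lo, hi = bounds
    clip = lambda z: min(max(z, lo), hi)
    x = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_bats)]
    v = [[0.0] * dim for _ in range(n_bats)]
    A = [1.0] * n_bats    # loudness A_i (assumed initial value)
    r0 = [0.5] * n_bats   # initial pulse rate r_i^0 (assumed)
    r = [0.0] * n_bats    # current pulse rate r_i
    fit = [f(xi) for xi in x]
    i_best = min(range(n_bats), key=lambda i: fit[i])
    best, best_fit = list(x[i_best]), fit[i_best]
    for t in range(1, n_iter + 1):
        mean_A = sum(A) / n_bats
        for i in range(n_bats):
            # frequency, velocity and location updates
            freq = f_min + (f_max - f_min) * rng.random()
            v[i] = [v[i][k] + (x[i][k] - best[k]) * freq for k in range(dim)]
            cand = [clip(x[i][k] + v[i][k]) for k in range(dim)]
            # local random walk x_new = x_old + eps * A around the best solution
            # (walk_scale is an assumed step-size factor)
            if rng.random() > r[i]:
                cand = [clip(best[k] + walk_scale * rng.uniform(-1.0, 1.0) * mean_A)
                        for k in range(dim)]
            f_new = f(cand)
            # accept if not worse and the bat is still "loud" enough
            if f_new <= fit[i] and rng.random() < A[i]:
                x[i], fit[i] = cand, f_new
                A[i] *= alpha                                # A_i^{t+1} = alpha * A_i^t
                r[i] = r0[i] * (1.0 - math.exp(-gamma * t))  # pulse rate grows
            if f_new <= best_fit:
                best, best_fit = list(cand), f_new
    return best, best_fit


sphere = lambda p: sum(c * c for c in p)
best, best_fit = bat_algorithm(sphere, dim=2, bounds=(-5.0, 5.0))
```

As the loudness shrinks, the random walk around the best solution gets finer, which is how BA shifts from exploration to exploitation over the iterations.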

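The IBA is obtained by incorporating Lévy flight (LF) into BA to further alleviate premature convergence, the main drawback of BA. LF [33] provides a random walk that improves the management of the local search. An LF step is computed as:

$$LF = s \, \frac{u}{|v|^{1/\lambda}}, \qquad u \sim N\left(0, \sigma_u^2\right), \quad v \sim N\left(0, \sigma_v^2\right)$$

$$\sigma_u = \left[\frac{\Gamma(1 + \lambda) \, \sin(\pi \lambda / 2)}{\Gamma\left(\frac{1 + \lambda}{2}\right) \lambda \, 2^{(\lambda - 1)/2}}\right]^{1/\lambda}, \qquad \sigma_v = 1$$

where $\Gamma$ is the Gamma function, $s$ is the step size, $\lambda$ is the Lévy index, and $u$ and $v$ are samples drawn from Gaussian distributions with zero mean and variances $\sigma_u^2$ and $\sigma_v^2$, respectively. Based on this process, a new enhancement step is added to update the BA solutions, producing a new position $X_i^{new}$ for each search agent. To retain the best candidate solution, the fitter of the old and new agents is kept:

$$X_i^{t+1} = \begin{cases} X_i^{new}, & \text{if } X_i^{new} \text{ is fitter than } X_i^{t} \\ X_i^{t}, & \text{otherwise} \end{cases}$$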
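A minimal sketch of a Lévy-flight refinement step using Mantegna's algorithm, $s \cdot u / |v|^{1/\lambda}$ with $u \sim N(0, \sigma_u^2)$ and $v \sim N(0, 1)$, followed by greedy selection of the fitter point. How exactly IBA adds the Lévy step to a bat's position is not given in the text, so the sketch assumes the simplest form, $X^{new} = X + LF$; the step size 0.01, the index 1.5, and the function names are likewise illustrative assumptions.

```python
import math
import random


def mantegna_sigma(levy_index):
    """sigma_u for Mantegna's algorithm (sigma_v = 1)."""
    lam = levy_index
    num = math.gamma(1 + lam) * math.sin(math.pi * lam / 2)
    den = math.gamma((1 + lam) / 2) * lam * 2 ** ((lam - 1) / 2)
    return (num / den) ** (1 / lam)


def levy_step(dim, levy_index=1.5, step_size=0.01, rng=random):
    """Draw a dim-dimensional Levy-flight step s * u / |v|^(1/lambda)."""
    sigma_u = mantegna_sigma(levy_index)
    step = []
    for _ in range(dim):
        u = rng.gauss(0.0, sigma_u)
        v = rng.gauss(0.0, 1.0)
        # guard against division by an (extremely unlikely) zero sample
        step.append(step_size * u / max(abs(v), 1e-12) ** (1 / levy_index))
    return step


def levy_refine(x, f, rng=random, levy_index=1.5):
    """Perturb x by a Levy step (assumed additive) and keep the fitter point."""
    cand = [xi + si for xi, si in zip(x, levy_step(len(x), levy_index, rng=rng))]
    return cand if f(cand) < f(x) else x  # minimization assumed


rng = random.Random(0)
sphere = lambda p: sum(c * c for c in p)
x = [1.0, -1.0]
for _ in range(200):
    x = levy_refine(x, sphere, rng=rng)
```

Lévy steps are mostly small with occasional long jumps, so the greedy selection keeps refining locally while still giving the search a chance to leave a premature optimum.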

4. Experimental Validation

4.1. Data Set Details

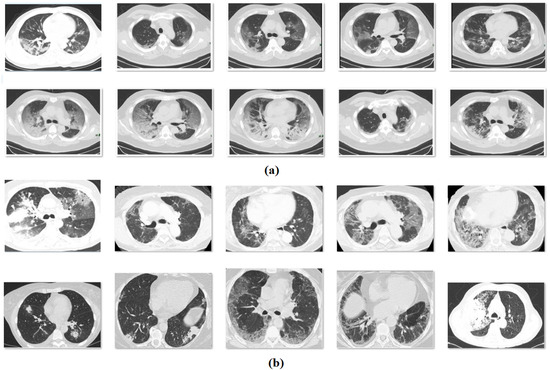

This section assesses the performance of the proposed model on the benchmark COVID-CT-data set [34], which includes 349 CT images with clinical findings of COVID-19 from 216 patients. The images were collected from COVID-19-related papers in medRxiv, bioRxiv, NEJM, JAMA, Lancet, etc. CT images containing COVID-19 abnormalities were selected by reading the figure captions in the papers. Figure 3 shows sample test images. We used 10-fold cross-validation to split the data set into training and testing parts.
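A short sketch of how a 10-fold split can be generated. The text only states that 10-fold cross-validation was used; stratifying the folds by class, the shuffling seed, and the synthetic labels in the example are assumptions made for illustration.

```python
import random


def stratified_k_fold(labels, k=10, seed=0):
    """Yield (train_idx, test_idx) pairs; each class is spread evenly over folds."""
    rng = random.Random(seed)
    folds = [[] for _ in range(k)]
    for cls in sorted(set(labels)):
        idx = [i for i, y in enumerate(labels) if y == cls]
        rng.shuffle(idx)
        # deal the shuffled indices of this class round-robin into the folds
        for n, i in enumerate(idx):
            folds[n % k].append(i)
    for f in range(k):
        test = sorted(folds[f])
        train = sorted(i for g in range(k) if g != f for i in folds[g])
        yield train, test


# Synthetic labels only, not the real data set composition
labels = ["COVID"] * 35 + ["non-COVID"] * 40
splits = list(stratified_k_fold(labels, k=10))
```

Every image appears in exactly one test fold, so per-fold predictions can be pooled into a single confusion matrix over the whole data set.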

Figure 3.

(a) COVID, (b) non-COVID: sample images.

4.2. Results and Discussion

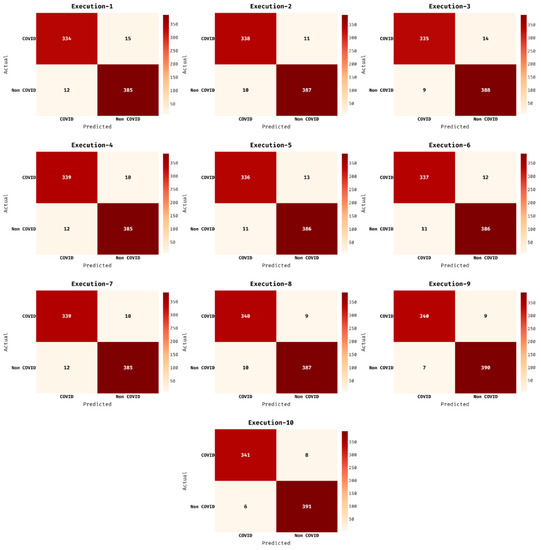

Figure 4 shows the confusion matrices of the AIEM-DC technique over 10 executions. The results show that the AIEM-DC technique performed well in every execution. For instance, in execution-2, the AIEM-DC technique correctly classified 338 images as COVID and 391 images as non-COVID. In execution-4, it correctly classified 339 images as COVID and 385 images as non-COVID. In execution-6, it correctly classified 337 images as COVID and 386 images as non-COVID. In execution-8, it correctly classified 340 images as COVID and 387 images as non-COVID. Finally, in execution-10, it correctly classified 341 images as COVID and 391 images as non-COVID.

Figure 4.

Confusion matrix of AIEM-DC technique under ten executions.

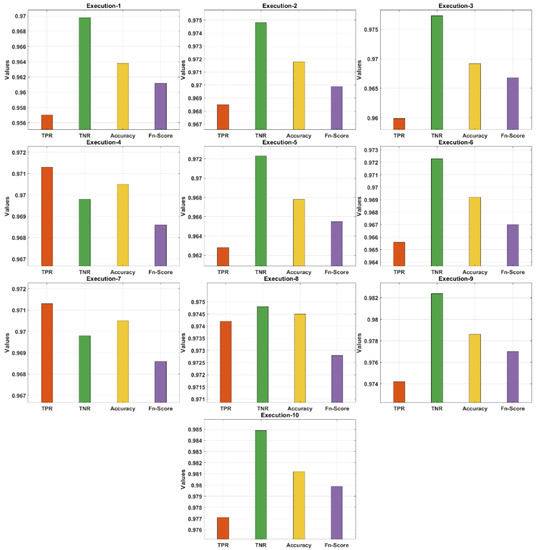

Table 1 and Figure 5 present the classification measures of the AIEM-DC technique over the 10 execution rounds. The results show that the AIEM-DC technique performs well in every round. For instance, in execution-1, the AIEM-DC technique obtained a TPR of 0.9570, TNR of 0.9698, accuracy of 0.9638, and F-score of 0.9612. In execution-4, it obtained a TPR of 0.9713, TNR of 0.9698, accuracy of 0.9705, and F-score of 0.9686. In execution-6, it reached a TPR of 0.9656, TNR of 0.9723, accuracy of 0.9692, and F-score of 0.9670. In execution-8, it achieved a TPR of 0.9742, TNR of 0.9748, accuracy of 0.9745, and F-score of 0.9728. Finally, in execution-10, it obtained a TPR of 0.9771, TNR of 0.9849, accuracy of 0.9812, and F-score of 0.9799.
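The four measures follow directly from the confusion-matrix counts, with COVID as the positive class and the F-score taken as F1. The counts below are an assumption inferred by back-solving the reported values: 341/349 reproduces the execution-10 TPR, and 391/397 reproduces its TNR, which implies 397 non-COVID test images and 6 false positives. With those counts, the sketch reproduces the execution-10 row.

```python
def metrics(tp, fn, tn, fp):
    """TPR, TNR, accuracy and F1-score from binary confusion-matrix counts."""
    tpr = tp / (tp + fn)                     # sensitivity / recall
    tnr = tn / (tn + fp)                     # specificity
    acc = (tp + tn) / (tp + fn + tn + fp)
    precision = tp / (tp + fp)
    f1 = 2 * precision * tpr / (precision + tpr)
    return {"TPR": tpr, "TNR": tnr, "accuracy": acc, "F-score": f1}


# Execution-10 counts inferred from the reported TPR/TNR (assumption):
# 341 of 349 COVID and 391 of 397 non-COVID images correctly classified
m = metrics(tp=341, fn=8, tn=391, fp=6)
print({name: round(value, 4) for name, value in m.items()})
# {'TPR': 0.9771, 'TNR': 0.9849, 'accuracy': 0.9812, 'F-score': 0.9799}
```

The same counting also reproduces the execution-1 row (334 and 385 correct), which supports the reading that the numbers in Figure 4 are correctly classified images per class.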

Table 1.

Result analysis of the proposed AIEM-DC model in terms of various measures.

Figure 5.

Result analysis of the AIEM-DC model with different measures.

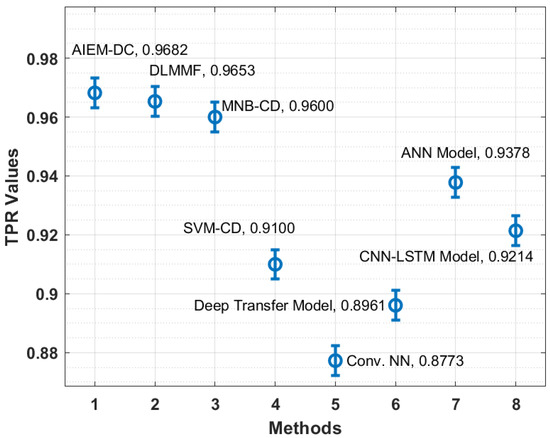

To demonstrate the performance of the AIEM-DC technique, Table 2 compares it in detail with recent techniques [35]. Figure 6 compares the TPR of the AIEM-DC technique with that of existing techniques. The Conv. NN and deep transfer models obtained the lowest TPRs, 0.8773 and 0.8961, respectively. The SVM-CD and CNN-LSTM techniques attained slightly higher TPRs of 0.9100 and 0.9214, respectively, followed by the ANN and MNB-CD techniques with reasonable TPRs of 0.9378 and 0.9600, respectively. The DLMMF technique came close to the best result, with a TPR of 0.9653. However, the proposed technique achieved the highest TPR, 0.9682.

Table 2.

Comparative analysis of the proposed AIEM-DC method with recent methods [35].

Figure 6.

TPR analysis of the AIEM-DC model with existing approaches.

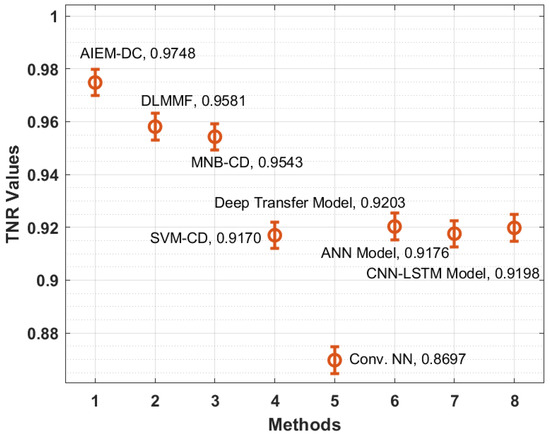

Figure 7 compares the TNR of the AIEM-DC approach with that of recent methods. The Conv. NN and SVM-CD methods attained the lowest TNRs, 0.8697 and 0.9170, respectively. The ANN and CNN-LSTM methods reached slightly higher TNRs of 0.9176 and 0.9198, respectively, and the deep transfer and MNB-CD techniques showed reasonable TNRs of 0.9203 and 0.9543, respectively. The DLMMF method came close to the best result, with a TNR of 0.9581. However, the proposed method achieved the highest TNR, 0.9748.

Figure 7.

TNR analysis of the AIEM-DC model with existing approaches.

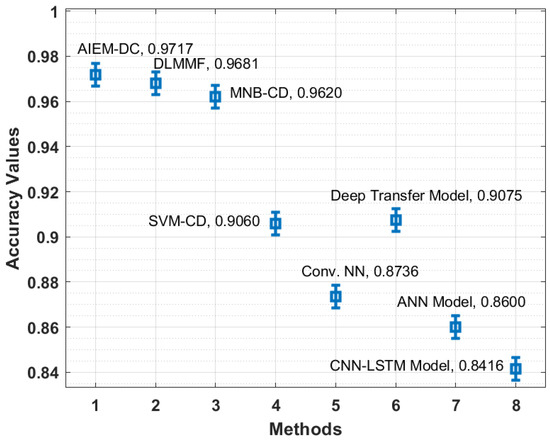

Figure 8 compares the accuracy of the AIEM-DC method with that of state-of-the-art algorithms. The CNN-LSTM and ANN methods obtained the lowest accuracies, 0.8416 and 0.8600, respectively. The Conv. NN and SVM-CD methods achieved slightly higher accuracies of 0.8736 and 0.9060, respectively, while the deep transfer and MNB-CD techniques showed reasonable accuracies of 0.9075 and 0.9620, respectively. The DLMMF algorithm came close to the best result, with an accuracy of 0.9681. Finally, the presented technique achieved the highest accuracy, 0.9717.

Figure 8.

Accuracy analysis of the AIEM-DC model with existing approaches.

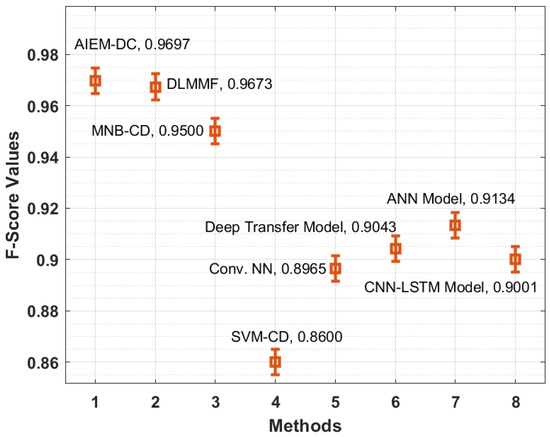

Figure 9 compares the F-score of the AIEM-DC method with that of existing methods. The SVM-CD and Conv. NN methods obtained the lowest F-scores, 0.8600 and 0.8965, respectively. The CNN-LSTM and deep transfer techniques reached slightly higher F-scores of 0.9001 and 0.9043, respectively, and the ANN and MNB-CD approaches achieved reasonable F-scores of 0.9134 and 0.9500, respectively. The DLMMF approach came close to the best result, with an F-score of 0.9673. Finally, the proposed method achieved the highest F-score, 0.9697.

Figure 9.

F-score analysis of the AIEM-DC model with existing approaches.

5. Conclusions

In this study, a new AIEM-DC technique is proposed for the detection and classification of COVID-19 using chest CT scans. The AIEM-DC technique aims to accurately detect and classify COVID-19 using an ensemble of DL models. It involves GF-based preprocessing, ensemble DL-based feature extraction, SOA-based hyperparameter tuning, MSVM-based classification, and IBA-based parameter tuning. Incorporating the SOA and IBA techniques improves the overall classification performance of the AIEM-DC technique. The AIEM-DC technique was validated on a benchmark CT image data set, and the results show promising classification performance compared with recent state-of-the-art approaches. In future work, hybrid DL architectures could be designed to further boost the classification performance of the AIEM-DC technique.

Author Contributions

Conceptualization, Writing—original draft, M.R.; Data curation, Formal analysis, K.E.; Funding acquisition, Investigation, N.A.A.; Methodology, Project administration, H.A.A.; Resources, Software, A.A.B.; Validation, S.M.A.-D.; Validation, Visualization, E.M.K. All authors have read and agreed to the published version of the manuscript.

Funding

This project was financially supported by Institution Fund projects under grant no. IFPRC-214-166-2020.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data sharing is not applicable to this article, as no data sets were generated during the current study.

Acknowledgments

The authors extend their appreciation to the Deputyship for Research & Innovation, Ministry of Education in Saudi Arabia, for funding this research work through project number IFPRC-214-166-2020, and to King Abdulaziz University, DSR, Jeddah, Saudi Arabia.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Wang, S.; Zha, Y.; Li, W.; Wu, Q.; Li, X.; Niu, M.; Wang, M.; Qiu, X.; Li, H.; Yu, H.; et al. A fully automatic deep learning system for COVID-19 diagnostic and prognostic analysis. Eur. Respir. J. 2020, 56, 2000775.

- Wang, D.; Hu, B.; Hu, C.; Zhu, F.; Liu, X.; Zhang, J.; Wang, B.; Xiang, H.; Cheng, Z.; Xiong, Y.; et al. Clinical Characteristics of 138 Hospitalized Patients With 2019 Novel Coronavirus–Infected Pneumonia in Wuhan, China. JAMA 2020, 323, 1061–1069.

- Yang, X.; Yu, Y.; Xu, J.; Shu, H.; Liu, H.; Wu, Y.; Zhang, L.; Yu, Z.; Fang, M.; Yu, T.; et al. Clinical course and outcomes of critically ill patients with SARS-CoV-2 pneumonia in Wuhan, China: A single-centered, retrospective, observational study. Lancet Respir. Med. 2020, 8, 475–481.

- Mukherjee, H.; Ghosh, S.; Dhar, A.; Obaidullah, S.M.; Santosh, K.C.; Roy, K. Deep neural network to detect COVID-19: One architecture for both CT Scans and Chest X-rays. Appl. Intell. 2020, 51, 2777–2789.

- Santosh, K.; Ghosh, S. COVID-19 Imaging Tools: How Big Data is Big? J. Med. Syst. 2021, 45, 71.

- Zhai, P.; Ding, Y.; Wu, X.; Long, J.; Zhong, Y.; Li, Y. The epidemiology, diagnosis and treatment of COVID-19. Int. J. Antimicrob. Agents 2020, 55, 105955.

- Wang, S.; Shi, J.; Ye, Z.; Dong, D.; Yu, D.; Zhou, M.; Liu, Y.; Gevaert, O.; Wang, K.; Zhu, Y.; et al. Predicting EGFR mutation status in lung adenocarcinoma on computed tomography image using deep learning. Eur. Respir. J. 2019, 53, 1800986.

- Angelini, E.; Dahan, S.; Shah, A. Unravelling machine learning: Insights in respiratory medicine. Eur. Respir. J. 2019, 54, 1901216.

- Wang, S.; Zhou, M.; Liu, Z.; Liu, Z.; Gu, D.; Zang, Y.; Dong, D.; Gevaert, O.; Tian, J. Central focused convolutional neural networks: Developing a data-driven model for lung nodule segmentation. Med. Image Anal. 2017, 40, 172–183.

- Serte, S.; Demirel, H. Deep learning for diagnosis of COVID-19 using 3D CT scans. Comput. Biol. Med. 2021, 132, 104306.

- Li, Z.; Zhong, Z.; Li, Y.; Zhang, T.; Gao, L.; Jin, D.; Sun, Y.; Ye, X.; Yu, L.; Hu, Z.; et al. From community-acquired pneumonia to COVID-19: A deep learning–based method for quantitative analysis of COVID-19 on thick-section CT scans. Eur. Radiol. 2020, 30, 6828–6837.

- Alshazly, H.; Linse, C.; Barth, E.; Martinetz, T. Explainable COVID-19 Detection Using Chest CT Scans and Deep Learning. Sensors 2021, 21, 455.

- Yousefzadeh, M.; Esfahanian, P.; Movahed, S.M.S.; Gorgin, S.; Rahmati, D.; Abedini, A.; Nadji, S.A.; Haseli, S.; Bakhshayesh Karam, M.; Kiani, A.; et al. ai-corona: Radiologist-assistant deep learning framework for COVID-19 diagnosis in chest CT scans. PLoS ONE 2021, 16, e0250952.

- Hasan, A.M.; Al-Jawad, M.M.; Jalab, H.A.; Shaiba, H.; Ibrahim, R.W.; AL-Shamasneh, A.A.R. Classification of COVID-19 coronavirus, pneumonia and healthy lungs in CT scans using Q-deformed entropy and deep learning features. Entropy 2020, 22, 517.

- Shah, V.; Keniya, R.; Shridharani, A.; Punjabi, M.; Shah, J.; Mehendale, N. Diagnosis of COVID-19 using CT scan images and deep learning techniques. Emerg. Radiol. 2021, 28, 497–505.

- Zheng, C.; Deng, X.; Fu, Q.; Zhou, Q.; Feng, J.; Ma, H.; Liu, W.; Wang, X. Deep learning-based detection for COVID-19 from chest CT using weak label. MedRxiv 2020.

- Shalbaf, A.; Vafaeezadeh, M. Automated detection of COVID-19 using ensemble of transfer learning with deep convolutional neural network based on CT scans. Int. J. Comput. Assist. Radiol. Surg. 2021, 16, 115–123.

- Wu, X.; Chen, C.; Zhong, M.; Wang, J.; Shi, J. COVID-AL: The diagnosis of COVID-19 with deep active learning. Med. Image Anal. 2021, 68, 101913.

- Särkkä, S.; Sarmavuori, J. Gaussian filtering and smoothing for continuous-discrete dynamic systems. Signal Process. 2013, 93, 500–510.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Lynn, H.M.; Pan, S.B.; Kim, P. A deep bidirectional GRU network model for biometric electrocardiogram classification based on recurrent neural networks. IEEE Access 2019, 7, 145395–145405.

- Dey, R.; Salem, F.M. Gate-variants of gated recurrent unit (GRU) neural networks. In Proceedings of the 2017 IEEE 60th International Midwest Symposium on Circuits and Systems (MWSCAS), Boston, MA, USA, 6–9 August 2017; pp. 1597–1600.

- Abedinia, O.; Amjady, N.; Ghasemi, A. A new metaheuristic algorithm based on shark smell optimization. Complexity 2016, 21, 97–116.

- Mohammadi, M.; Talebpour, F.; Safaee, E.; Ghadimi, N.; Abedinia, O. Small-Scale Building Load Forecast based on Hybrid Forecast Engine. Neural Process. Lett. 2018, 48, 329–351.

- Zhou, Y.; Ye, J.; Du, Y.; Sheykhahmad, F.R. New improved optimized method for medical image enhancement based on modified shark smell optimization algorithm. Sens. Imaging 2020, 21, 20.

- Seifi, A.; Ehteram, M.; Soroush, F. Uncertainties of instantaneous influent flow predictions by intelligence models hybridized with multi-objective shark smell optimization algorithm. J. Hydrol. 2020, 587, 124977.

- Manzo, M.; Pellino, S. Fighting Together against the Pandemic: Learning Multiple Models on Tomography Images for COVID-19 Diagnosis. AI 2021, 2, 261–273.

- Segera, D.; Mbuthia, M.; Nyete, A. Particle Swarm Optimized Hybrid Kernel-Based Multiclass Support Vector Machine for Microarray Cancer Data Analysis. BioMed Res. Int. 2019, 2019, 4085725.

- Aizerman, M.A. Theoretical foundations of the potential function method in pattern recognition learning. Autom. Remote Control. 1964, 25, 821–837.

- Rodrigues, D.; Pereira, L.A.; Nakamura, R.Y.; Costa, K.A.; Yang, X.S.; Souza, A.N.; Papa, J.P. A wrapper approach for feature selection based on bat algorithm and optimum-path forest. Expert Syst. Appl. 2014, 41, 2250–2258.

- Mansour, R.F.; Aljehane, N.O. An optimal segmentation with deep learning based inception network model for intracranial hemorrhage diagnosis. Neural Comput. Appl. 2021, 33, 13831–13843.

- Li, L.; Sun, L.; Xue, Y.; Li, S.; Huang, X.; Mansour, R.F. Fuzzy Multilevel Image Thresholding Based on Improved Coyote Optimization Algorithm. IEEE Access 2021, 9, 33595–33607.

- Haklı, H.; Uğuz, H. A novel particle swarm optimization algorithm with Levy flight. Appl. Soft Comput. 2014, 23, 333–345.

- Zhao, J.; Zhang, Y.; He, X.; Xie, P. COVID-CT-Dataset: A CT Scan Dataset about COVID-19. arXiv 2020, arXiv:2003.13865. Available online: https://github.com/UCSD-AI4H/COVID-CT (accessed on 18 October 2021).

- Subhalakshmi, R.T.; Balamurugan, S.A.A.; Sasikala, S. Deep learning based fusion model for COVID-19 diagnosis and classification using computed tomography images. Concurr. Eng. 2021, 1–12.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).