ARMIA: A Sensorized Arm Wearable for Motor Rehabilitation

Abstract

1. Introduction

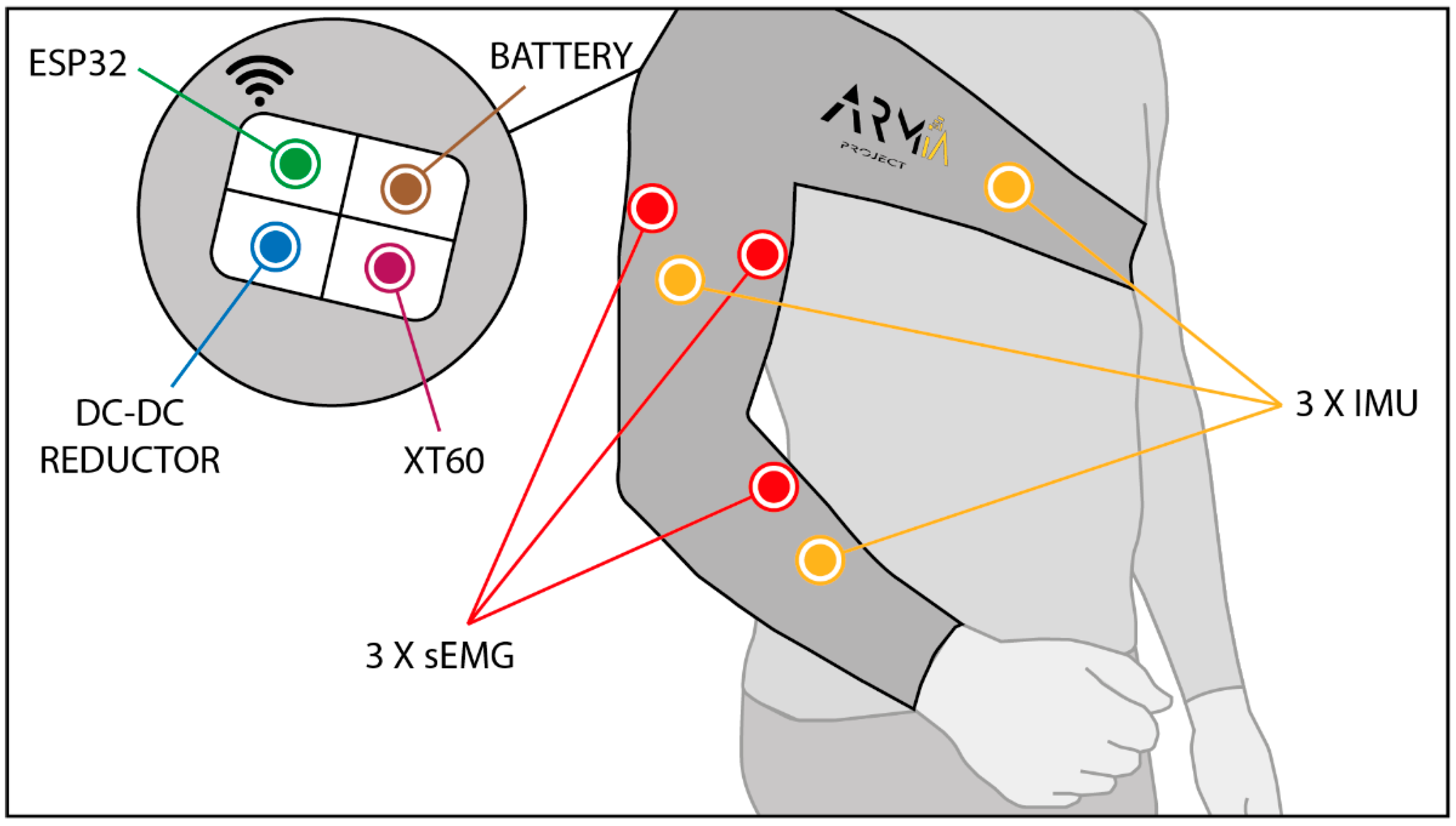

2. Hardware

2.1. Microcontroller

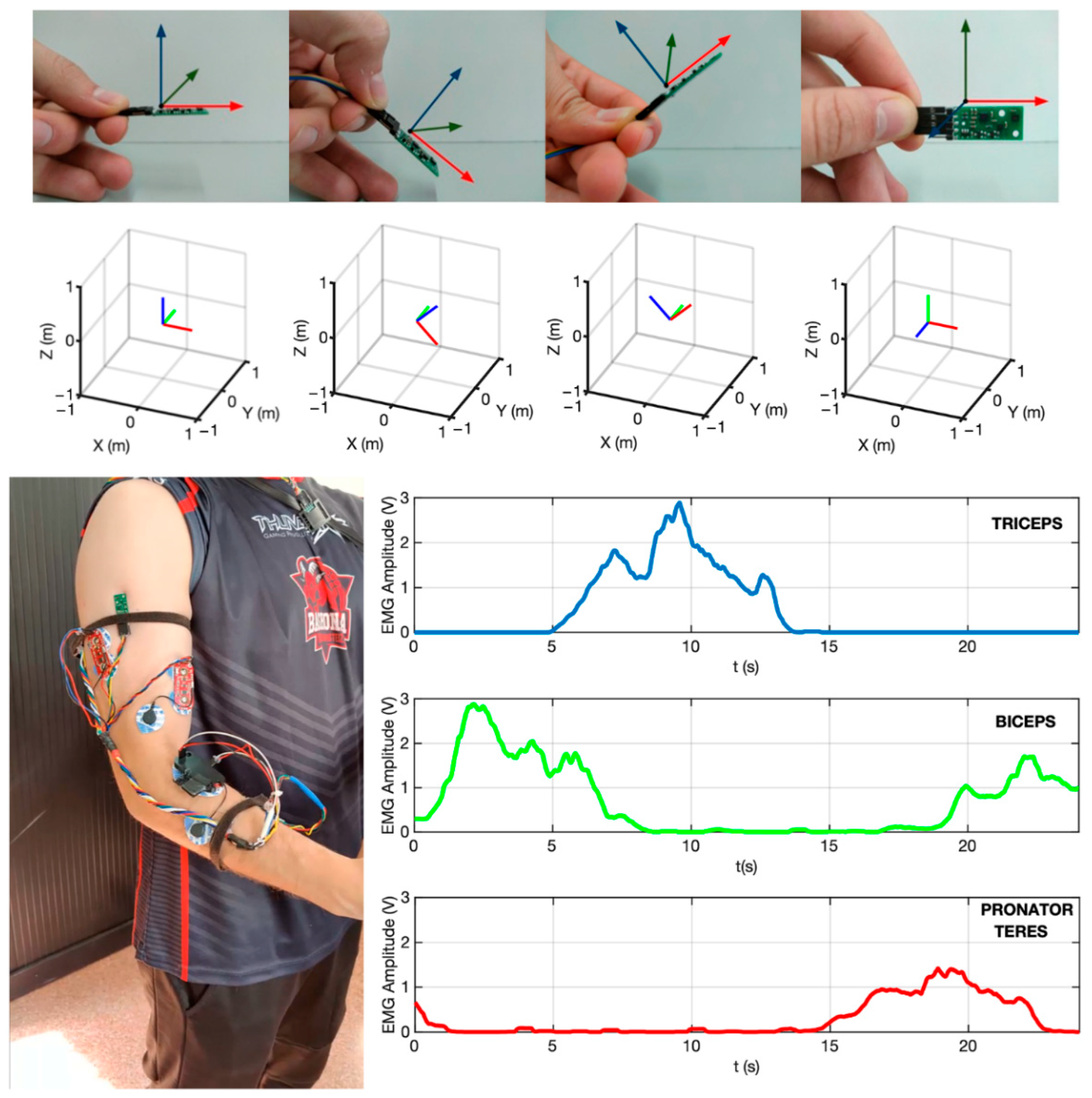

2.2. Sensors

2.3. Battery

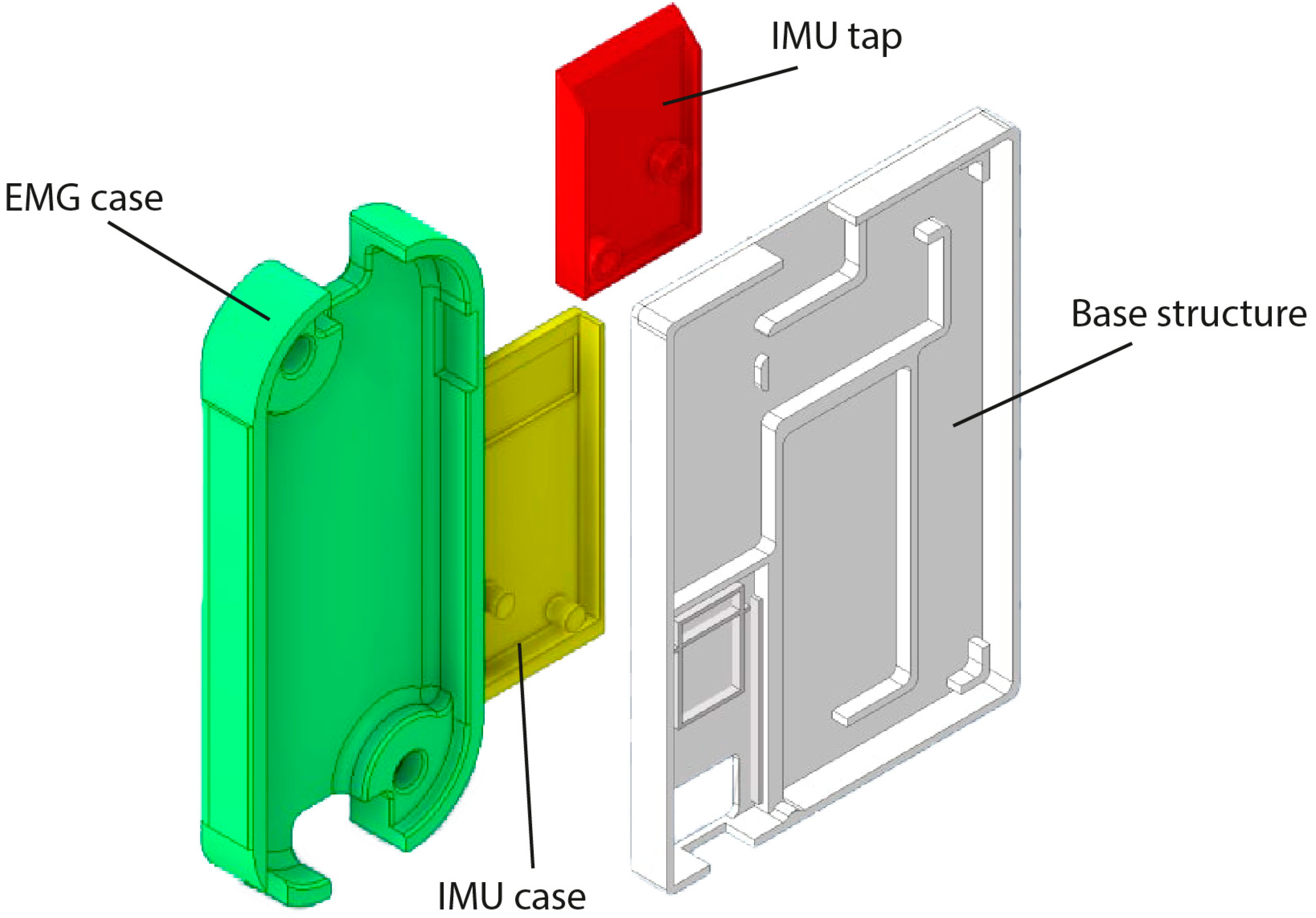

2.4. Structural Elements

2.5. Garment

3. Software

3.1. Communication Layer

3.2. Acquisition Layer

3.3. Processing and Visualization Layer

4. System Validation

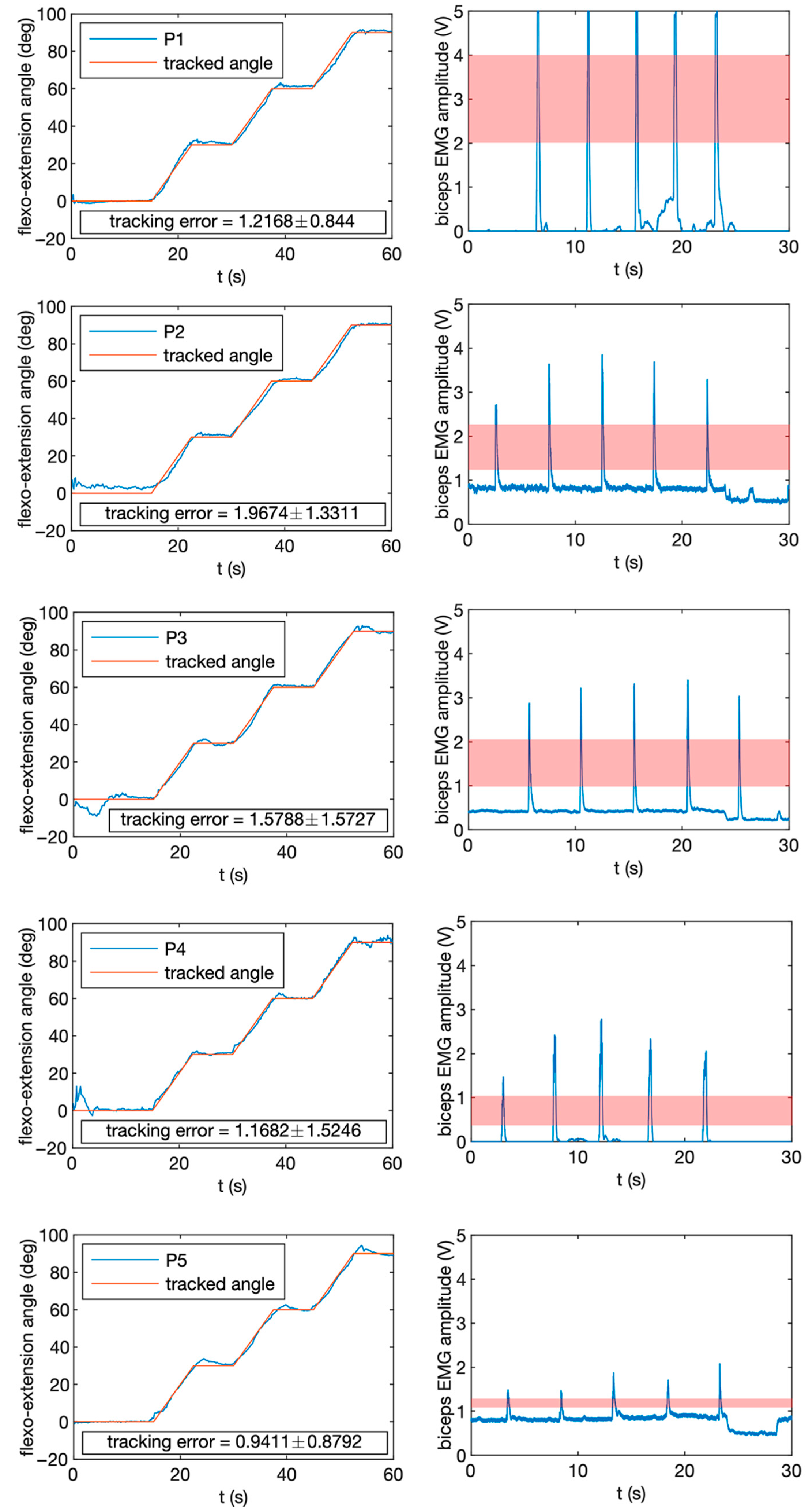

4.1. Experiments

- Experiment 1 consisted of tracking a specific angle profile by performing arm flexion movements. The duration of the run was 60 s. Four different angle levels were evaluated: 0° (full extension), 30°, 60° and 90° (arm flexed). Transitions between levels were interpolated to maintain the continuity of the movement;

- Experiment 2 consisted of performing five short contractions of the biceps muscle at full capacity during a recording of 40 s. Participants were given timing feedback and asked to perform the contractions after the first 5 s. For evaluation purposes, the first and last 5 s were removed.
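The two protocols above can be sketched in a few lines of Python. This is only an illustrative sketch, not the authors' implementation: the sampling rate, the hold and ramp durations, the ascending order of the levels and the function names `angle_profile` and `trim_edges` are all assumptions chosen so that four levels fit into a 60 s run with linearly interpolated transitions.

```python
def angle_profile(levels_deg, hold_s, ramp_s, fs):
    """Build a reference angle profile: hold each level, then ramp linearly to the next.

    All parameters are hypothetical; the text only fixes the levels and the 60 s duration.
    Assumes hold_s > 0 and ramp_s > 0.
    """
    # Breakpoints (time, angle): start and end of every hold phase.
    points = []
    t = 0.0
    for level in levels_deg:
        points.append((t, level))
        points.append((t + hold_s, level))
        t += hold_s + ramp_s
    total_s = points[-1][0]          # the profile ends with the last hold
    n = int(round(total_s * fs))

    profile = []
    seg = 0
    for i in range(n):
        ti = i / fs
        # Advance to the segment that contains the current time.
        while seg < len(points) - 2 and ti >= points[seg + 1][0]:
            seg += 1
        (t0, a0), (t1, a1) = points[seg], points[seg + 1]
        # Linear interpolation keeps the movement continuous between levels.
        profile.append(a0 + (a1 - a0) * (ti - t0) / (t1 - t0))
    return profile


def trim_edges(samples, fs, edge_s=5.0):
    """Drop the first and last edge_s seconds of a recording (Experiment 2)."""
    k = int(round(edge_s * fs))
    return samples[k:len(samples) - k]


# Assumed timing: 4 holds of 12 s plus 3 ramps of 4 s = 60 s, sampled at 100 Hz.
reference = angle_profile([0, 30, 60, 90], hold_s=12.0, ramp_s=4.0, fs=100)
# A 40 s recording at the same assumed rate keeps its central 30 s.
kept = trim_edges(list(range(4000)), fs=100)
```

With these assumed timings, the reference passes through 15° halfway along the first transition, and the trimmed recording retains the 30 s window in which the contractions are evaluated.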

4.2. Results

5. Discussion

5.1. Comparison with Current and Previous Technology

5.2. Clinical Scope

- People who have suffered recent brain damage due to stroke, trauma or any other condition;

- People with neurodegenerative or neuromuscular diseases of any kind who need regular physical therapy;

- Elderly people with mobility problems in the upper limb and in need of maintaining physical activity;

- People affected by post-traumatic or post-surgical complications in arms or hands that require rehabilitation.

5.3. Current and Future Developments

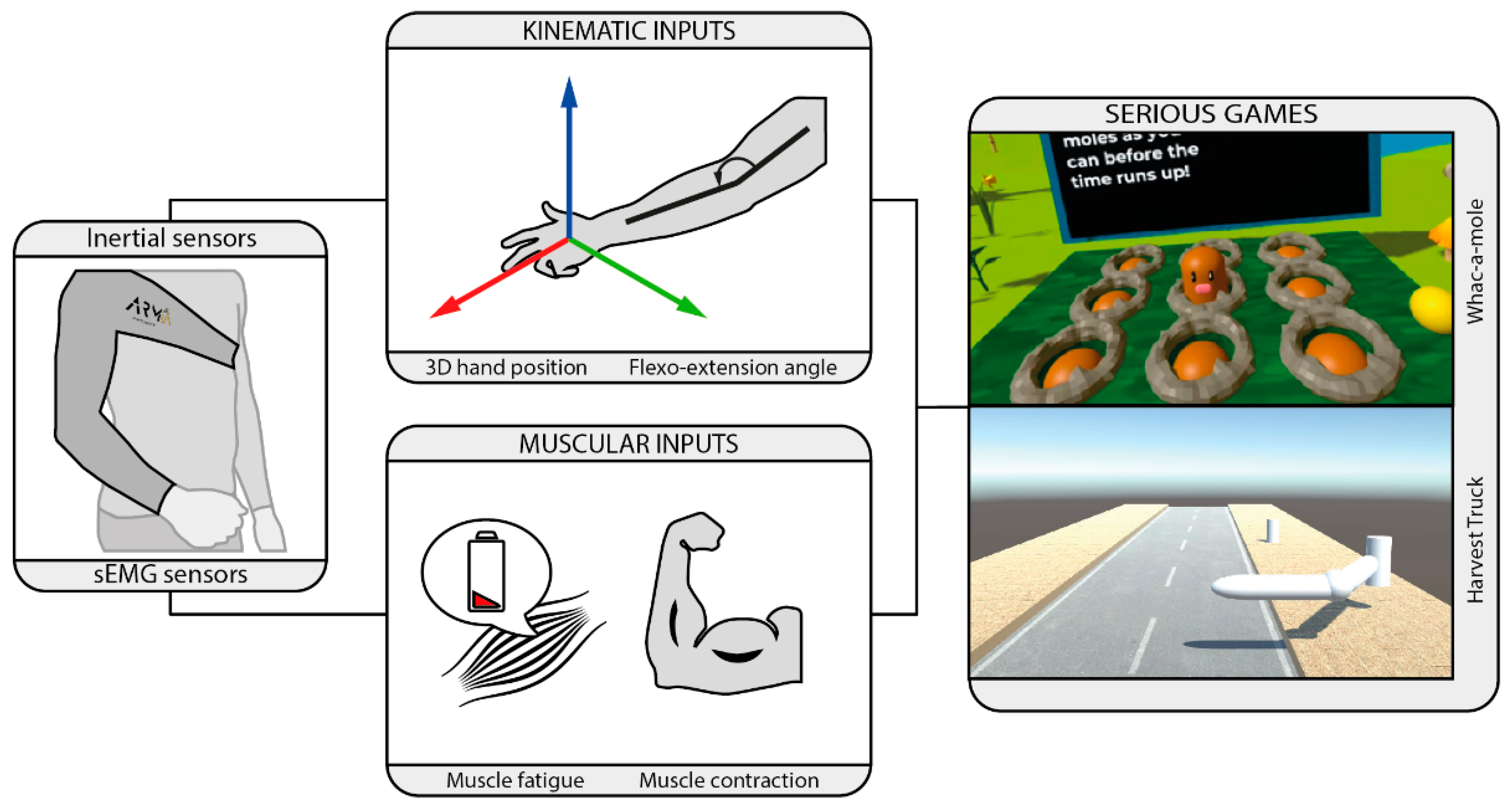

- Whac-a-Mole: this game focuses on the rehabilitation of reaching tasks in the transverse plane. Players grab a virtual ball and smash the moles that appear on the screen by reaching their position and hitting them. The score increases with each correct hit. The hitting action can be adapted to the level of impairment and the pathology: players can press an external input such as a button, or use arm co-contraction to hit the moles at the precise moment. If players have severe movement limitations of the hand, the hit can be performed with a so-called dwell click, which triggers the action automatically when the ball stops moving for a certain amount of time within a certain range of the target. The game will have different difficulty levels that change dynamically depending on players’ performance or that can be customized by therapists. Difficulty can be raised by increasing the number of moles that show up per minute, decreasing the time they are visible, increasing the score required to advance to the next level or including cognitive challenges such as hitting a mole with a particular appearance to obtain extra points.

- Harvest Truck: this game takes only two control inputs from the wearable: the flexion and extension angle, and an action input corresponding to the contraction force level measured on one of the recorded muscles. Players ride on the back of a harvest truck traveling along a rural road. On the side of the road, vegetable boxes of different weights are waiting for pickup. Players must extend the arm to grab the boxes and apply a different amount of contraction force depending on the weight or size of each box in order to move it. In this game, difficulty levels change by adding or removing boxes, increasing box weight or changing the distance to the harvest truck. The score will be computed from the number and weight of the boxes collected on each level.
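The dwell click described for Whac-a-Mole can be illustrated with a small state machine. The sketch below is an assumption about how such a trigger might work, not the game's actual code: the class name `DwellClick`, its parameters (`target_range`, `dwell_s`, `still_tol`) and the normalized 2D screen coordinates are hypothetical.

```python
import math


class DwellClick:
    """Fire a hit when the ball stays still, close to the target, for dwell_s seconds.

    Hypothetical parameters: target_range is the maximum distance to the target,
    still_tol is how far the ball may drift and still count as "stopped".
    """

    def __init__(self, target_range, dwell_s, still_tol):
        self.target_range = target_range
        self.dwell_s = dwell_s
        self.still_tol = still_tol
        self._anchor = None   # ball position where the current dwell started
        self._since = None    # time at which the current dwell started

    def update(self, t, ball, target):
        """Feed one (time, ball position, target position) sample; return True on a hit."""
        near = math.dist(ball, target) <= self.target_range
        still = (self._anchor is not None
                 and math.dist(ball, self._anchor) <= self.still_tol)
        if not (near and still):
            # Ball moved or left the target range: restart the dwell timer.
            self._anchor = ball if near else None
            self._since = t if near else None
            return False
        if t - self._since >= self.dwell_s:
            # Dwell completed: fire once and require a fresh dwell for the next hit.
            self._anchor, self._since = None, None
            return True
        return False


dwell = DwellClick(target_range=0.05, dwell_s=1.0, still_tol=0.01)
mole = (0.5, 0.5)
samples = [(0.0, (0.2, 0.2)), (0.5, (0.5, 0.51)), (1.0, (0.5, 0.51)),
           (1.5, (0.5, 0.51)), (1.6, (0.5, 0.51))]
hits = [dwell.update(t, ball, mole) for t, ball in samples]
```

In this example the ball reaches the mole at 0.5 s and stays put, so the hit fires at 1.5 s and the timer then restarts. A therapist-facing configuration could expose the dwell time and range as the adjustable difficulty parameters mentioned above.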

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Garcia, G.J.; Alepuz, A.; Balastegui, G.; Bernat, L.; Mortes, J.; Sanchez, S.; Vera, E.; Jara, C.A.; Morell, V.; Pomares, J.; Ramon, J.L.; Ubeda, A. ARMIA: A Sensorized Arm Wearable for Motor Rehabilitation. Biosensors 2022, 12, 469. https://doi.org/10.3390/bios12070469