1. Introduction

Fluorescent materials have become foundational across numerous advanced technological domains, including biomedical imaging, optical sensing, display technologies, and solid-state lighting. For instance, fluorescent probes have transformed biomedical imaging by enabling high-resolution, real-time visualization of cellular and molecular processes [1,2,3]. Likewise, quantum dot-based displays and organic light-emitting diodes (OLEDs) represent major advances in consumer electronics, improving color purity, display efficiency, and energy consumption [4,5]. Additionally, fluorescence-based sensors offer high sensitivity and selectivity in environmental monitoring, providing robust analytical solutions for detecting contaminants and pollutants [6,7,8,9]. Despite substantial progress, the demand for new fluorescent materials with higher emission efficiency, greater spectral tunability, and better stability under diverse operating conditions continues to drive intensive research at the intersection of materials science, photonics, and biotechnology [10,11,12]. The economic significance of these applications is underscored by substantial market valuations and strong growth projections. According to QYResearch data published in 2025, the global market for OLED blue fluorescent materials, which are crucial for displays, was valued at USD 404 million in 2023 and is expected to reach USD 851 million by 2030, with a projected compound annual growth rate of 10.8%. The China National Chemical Information Center projects that by 2025 the Chinese fluorescent materials market, which dominates the supply chain, will reach an overall size of CNY 35 billion (approximately USD 4.8 billion), driven by the LED lighting sector (58%), and that it will expand to CNY 65 billion (approximately USD 9 billion) by 2030. In addition, according to 2024 data from MarketsandMarkets, the global biomarker market, in which fluorescent materials account for over 30%, is expected to reach USD 7.8 billion by 2025, driven by advances in medical diagnosis and imaging. This substantial and growing economic potential further incentivizes the development of high-performance fluorescent materials.

Traditional approaches for discovering and optimizing fluorescent materials rely primarily on empirical trial-and-error experiments and first-principles computational simulations, particularly density functional theory (DFT) [13,14,15]. Experimental techniques, although valuable, are often constrained by high costs, labor-intensive processes, and lengthy timelines, which limit their scalability, efficiency, and reproducibility [16,17]. Similarly, theoretical approaches such as DFT and time-dependent DFT (TD-DFT) are adept at elucidating electronic and excited-state properties. However, they demand considerable computational resources, which makes exhaustive exploration of large molecular design spaces impractical [13,15,18]. Moreover, these conventional methods struggle to capture the complex, nonlinear relationships among molecular structure, synthesis conditions, and fluorescence properties. This impedes the systematic and rapid identification of high-performance materials [13,16]. There is thus a pressing need for alternative methodologies that can explore vast chemical spaces efficiently and systematically.

Recently, machine learning (ML) has emerged as a powerful alternative. It addresses the intrinsic limitations of conventional approaches by using large datasets to predict fluorescent properties rapidly and accurately [19,20,21]. ML methods such as neural networks, support vector machines, and ensemble learning algorithms extract intricate, nonlinear relationships between structural descriptors and fluorescence performance directly from data. In doing so, they bypass explicit quantum mechanical modeling and substantially reduce computational cost and time [20,21,22]. Recent applications have demonstrated ML's ability to predict essential photophysical properties, including emission wavelength, quantum yield, and operational stability. These predictions span diverse fluorescent systems such as aggregation-induced emission (AIE) luminogens, thermally activated delayed fluorescence (TADF) emitters, quantum dots, and perovskite-based materials [23,24,25,26]. Nonetheless, the literature still lacks a systematic, comprehensive treatment that integrates ML methodologies, comparative analyses, and applications across different categories of fluorescent materials.

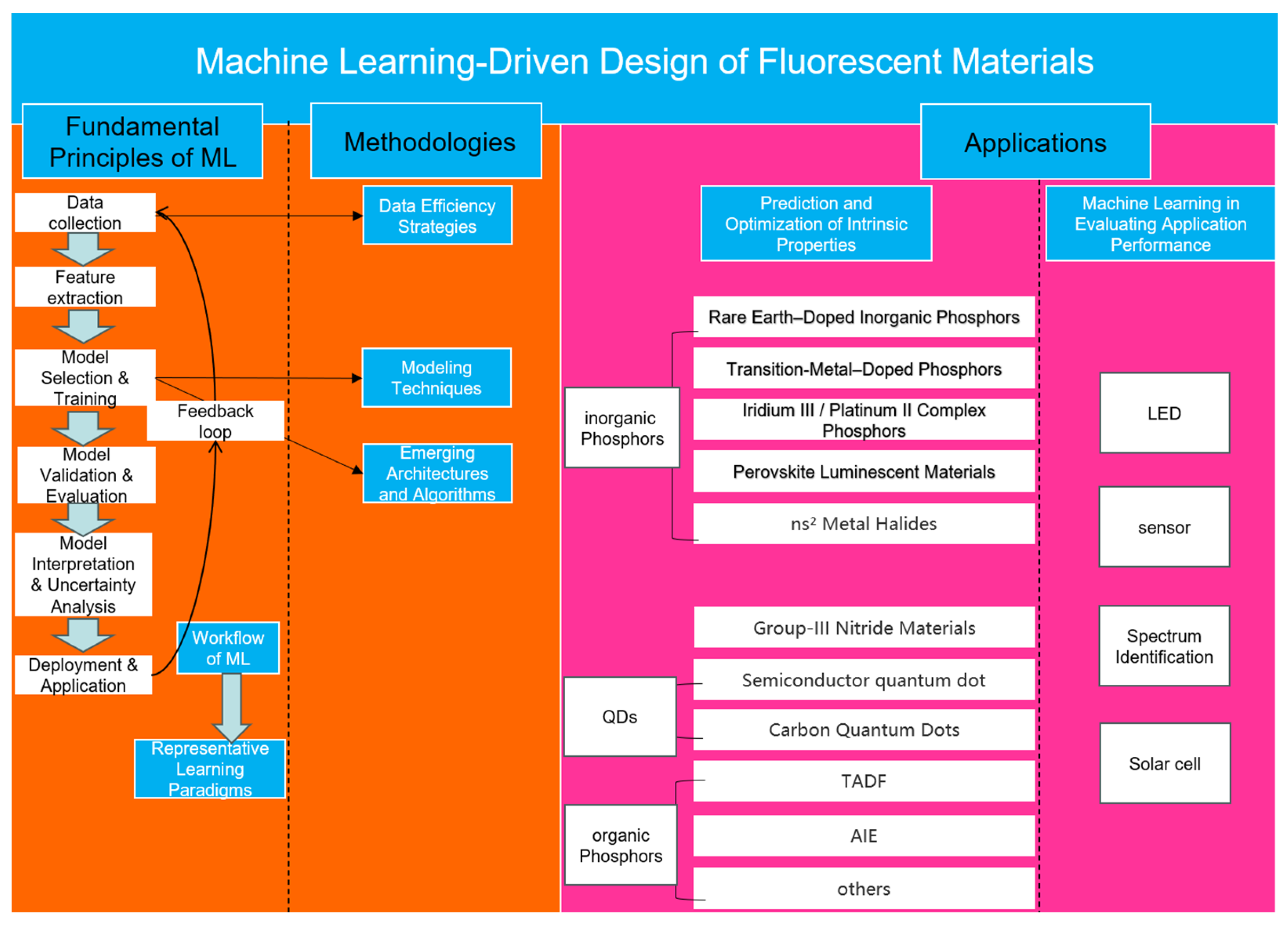

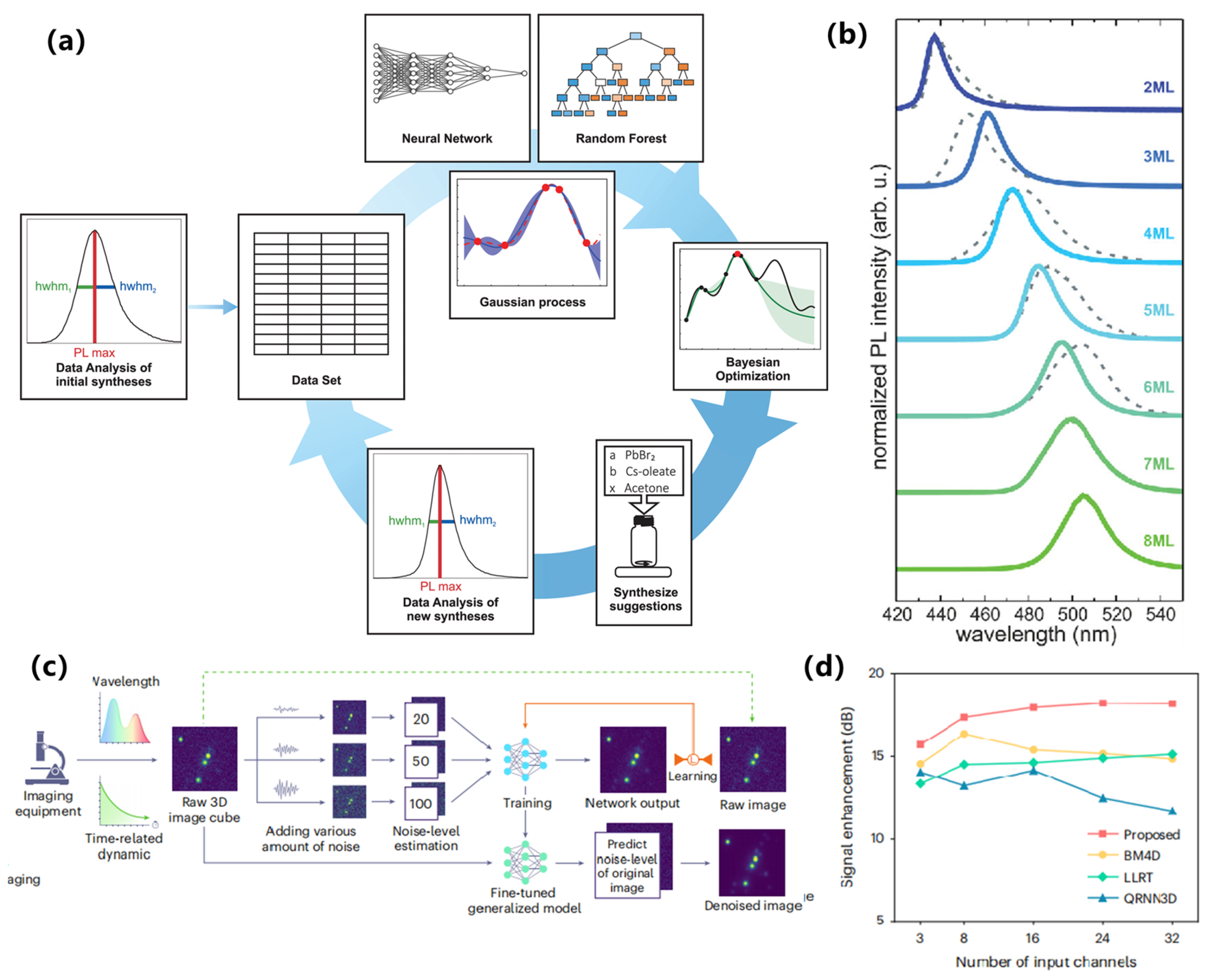

To address this gap, the present review systematically summarizes the foundational methods, fundamental principles, and advanced applications of ML in fluorescent material research, as shown in Figure 1. We first detail the core ML-driven property prediction workflow, including data acquisition, feature engineering, model development, and validation. We then analyze essential methodological frameworks, distinguishing purely data-driven strategies from physics-informed approaches, and highlight advanced ML techniques such as active learning and transfer learning. Next, we critically survey representative ML applications across multiple classes of fluorescent materials: AIE compounds, TADF emitters, quantum dots, carbon dots, metal-halide perovskites, and rare-earth-doped phosphors. For each category, we highlight its specific challenges and solutions. Finally, we synthesize comparative insights across material categories and identify prevailing research limitations such as data scarcity, interpretability challenges, and limited generalizability [20,27]. We then propose strategic directions for future research. These include integrating domain-specific physical knowledge and developing robust, multimodal ML frameworks to advance fluorescent material design and discovery [19,28].

This review differs from previous reviews in three key aspects. First, it provides a comprehensive overview covering a wide range of fluorescent materials, from organic to inorganic. Second, it introduces state-of-the-art methodologies from cutting-edge fields, such as data mining with large language models and physics-informed neural networks. Finally, it offers an interdisciplinary and systematic comparative perspective that links fundamental ML principles to specific applied case studies. Together, these features provide timely and comprehensive guidance to the field and help researchers quickly grasp the current state of cutting-edge developments.

2. Fundamental Principles of Machine Learning in Fluorescent Materials

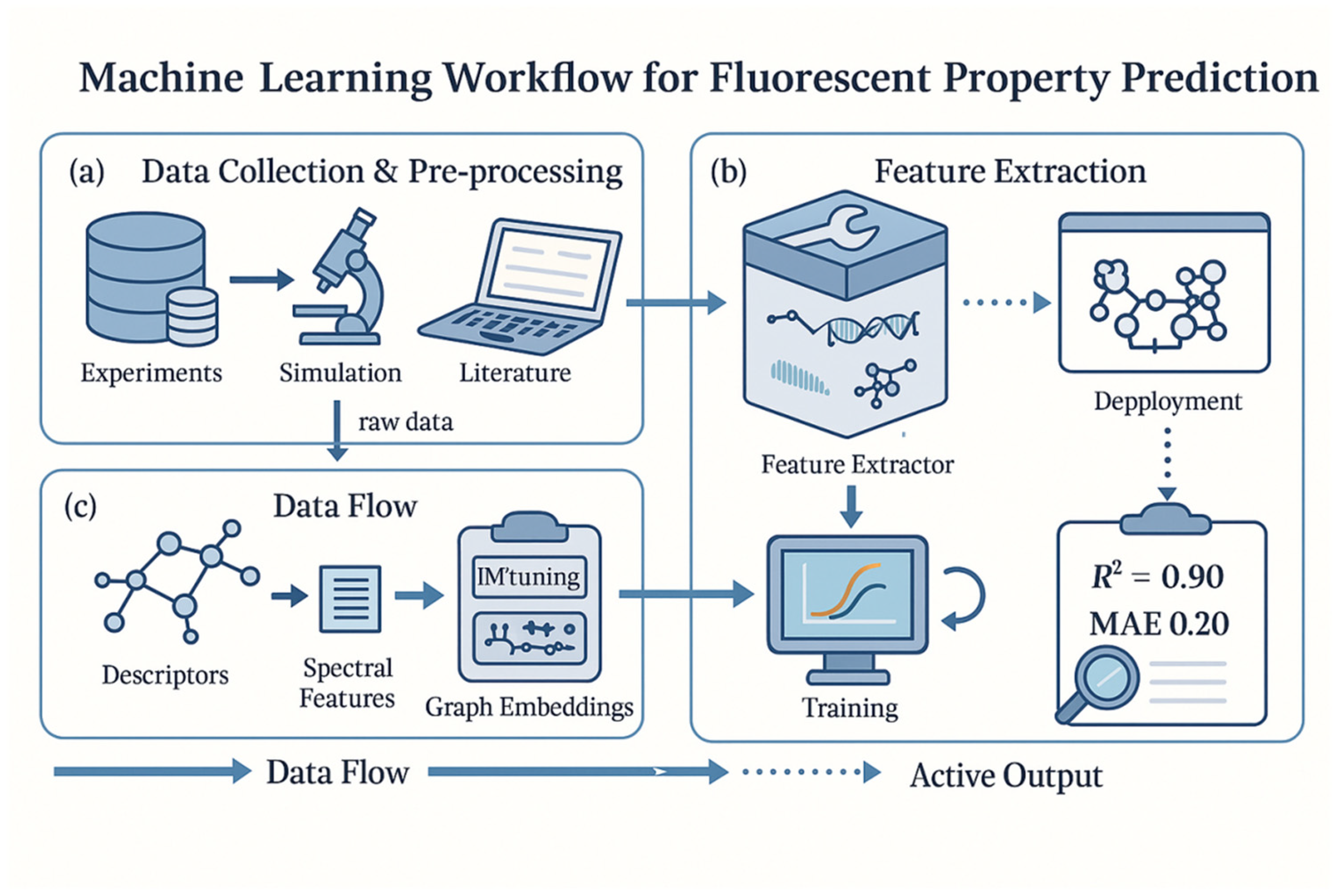

2.1. Workflow of ML for Fluorescent Property Prediction

A typical ML workflow for predicting fluorescent material properties involves several interrelated stages, each of which influences the accuracy and robustness of the final model. These stages generally include data collection, feature extraction, model development, model validation, and deployment for property prediction. In the data collection phase, researchers assemble datasets of fluorescence properties (e.g., emission wavelengths, photoluminescence quantum yields, lifetimes, and stability metrics) from experiments, simulations, or databases. These data must be of high quality and representative, because errors or biases introduced at this stage can mislead the ML algorithms. Raw data often need preprocessing (for example, handling missing or inconsistent entries) to improve reliability. Given the growing availability of public fluorescent materials datasets, careful curation and error-checking are essential to avoid propagating experimental noise into model training.
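The curation step can be illustrated with a minimal Python sketch. It assumes a hypothetical record format of (molecule ID, solvent, emission wavelength in nm). It also uses an assumed 20 nm tolerance for disagreeing replicates. Both are illustrative choices, not community standards. The function drops missing labels and normalizes solvent names. It averages duplicate measurements only when they agree.

```python
from collections import defaultdict
from statistics import mean

def curate(records, max_spread_nm=20.0):
    """Merge duplicate entries and drop unreliable ones (hypothetical helper).

    records: iterable of (molecule_id, solvent, emission_nm) tuples, where
    emission_nm may be None for a missing measurement.
    Returns a dict mapping (molecule_id, solvent) -> averaged emission (nm).
    """
    grouped = defaultdict(list)
    for mol_id, solvent, value in records:
        if value is None:  # missing label: unusable for supervised training
            continue
        key = (mol_id.strip(), solvent.strip().lower())  # normalize inconsistent spellings
        grouped[key].append(float(value))

    curated = {}
    for key, values in grouped.items():
        # Replicates that disagree by more than the assumed tolerance are treated
        # as inconsistent and excluded rather than averaged.
        if max(values) - min(values) > max_spread_nm:
            continue
        curated[key] = mean(values)
    return curated
```

In this example, two toluene measurements of 450 nm and 454 nm for the same dye would merge into a single 452 nm entry. Replicates that differ by 60 nm would be discarded as inconsistent.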

After data collection, the next step is feature extraction (or featurization), in which raw inputs are translated into structured descriptors that capture the underlying chemistry or physics of fluorescence. Depending on the problem, features may include physicochemical properties, structural fingerprints (such as molecular graphs or fragment-based descriptors), or spectral characteristics. For example, predicting emission wavelength might involve features related to molecular conjugation length or electronic transition energies, whereas quantum yield prediction might use descriptors reflecting molecular rigidity or excited-state dynamics. The choice of representation strongly affects model performance: appropriate features make it easier for the algorithm to discover meaningful patterns. Domain knowledge can guide feature design (e.g., using known photophysical parameters), although modern deep learning models can also learn abstract representations directly from data, bypassing manual feature engineering.
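To make featurization concrete, the sketch below derives a few crude descriptors directly from a SMILES string. It is a deliberately simplified, hypothetical stand-in for a cheminformatics toolkit such as RDKit. The regular-expression tokenizer handles only common organic-subset atoms and simple bracket atoms. Treating the aromatic-atom fraction as a rough proxy for conjugation is an assumption made for illustration.

```python
import re

# Simplified tokenizer (assumption): bracket atoms, two-letter halogens, then
# organic-subset atoms (uppercase = aliphatic, lowercase = aromatic).
ATOM_PATTERN = re.compile(r"\[[^\]]+\]|Br|Cl|[BCNOPSFI]|[bcnops]")
# Element symbol inside a bracket atom, after an optional isotope number.
BRACKET_ELEMENT = re.compile(r"\d*([A-Z][a-z]?|[a-z])")

def element_of(token):
    """Return (element symbol, is_aromatic) for one atom token."""
    symbol = BRACKET_ELEMENT.match(token[1:]).group(1) if token.startswith("[") else token
    return symbol.capitalize(), symbol[0].islower()

def crude_descriptors(smiles):
    """Hypothetical toy descriptors; not a replacement for a real toolkit."""
    heavy = aromatic = hetero = 0
    for token in ATOM_PATTERN.findall(smiles):
        element, is_aromatic = element_of(token)
        if element == "H":
            continue  # explicit hydrogens are not heavy atoms
        heavy += 1
        aromatic += is_aromatic
        hetero += element not in ("C", "H")
    return {
        "heavy_atoms": heavy,
        "aromatic_atoms": aromatic,
        "heteroatoms": hetero,
        "double_bonds": smiles.count("="),
        "triple_bonds": smiles.count("#"),
        "aromatic_fraction": aromatic / heavy if heavy else 0.0,  # crude conjugation proxy
    }

print(crude_descriptors("c1ccc2[nH]ccc2c1"))  # indole
```

In practice, descriptors of this kind would be concatenated into a fixed-length vector per molecule before model training.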

The growing importance of data-driven research has spurred the development of several publicly available databases and resources. These repositories are invaluable for benchmarking ML models, pre-training, and discovering new fluorescent materials. Table 1 summarizes a selection of key resources relevant to the community.

In the model development stage, an ML algorithm (or a combination of algorithms) is selected and trained on the prepared features to learn the mapping from molecular descriptors to fluorescent properties. Common supervised learning models for fluorescent property prediction include ensemble tree methods (such as random forests or gradient-boosting algorithms like XGBoost) and neural networks (including fully connected neural networks, convolutional neural networks, or graph neural networks). Simpler models like linear regression or decision trees might be chosen for small datasets, whereas deep neural architectures are favored for larger or more complex datasets. The choice often hinges on a trade-off between interpretability and predictive power. For instance, tree-based ensembles offer built-in feature importance metrics that help interpret which molecular features influence fluorescence, while neural networks can capture highly nonlinear relationships and often achieve higher accuracy at the cost of being “black boxes” with limited interpretability. In practice, the model architecture and type must be tailored to the nature of the available data (whether inputs are vectorized descriptors, spectra, molecular graphs, etc.) and the specific prediction task. It is also common to evaluate multiple candidate models or even use an ensemble of different learners to improve robustness and performance.
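Combining heterogeneous learners can be sketched in plain Python. The example below is a toy built on several assumptions. It uses a single hypothetical descriptor (for instance, a conjugation-length proxy). It averages an interpretable least-squares line with a k-nearest-neighbor regressor. Real studies would typically use library implementations (e.g., scikit-learn or XGBoost) and many descriptors.

```python
from statistics import mean

def fit_linear_1d(xs, ys):
    """Ordinary least squares for y = a * x + b (an interpretable baseline)."""
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    a = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    b = my - a * mx
    return lambda x: a * x + b

def fit_knn_1d(xs, ys, k=3):
    """k-nearest-neighbor regressor: averages the k closest training targets."""
    data = list(zip(xs, ys))
    def predict(x):
        nearest = sorted(data, key=lambda p: abs(p[0] - x))[:k]
        return mean(y for _, y in nearest)
    return predict

def fit_ensemble(xs, ys, k=3):
    """Average the predictions of two different learners with equal weights."""
    members = [fit_linear_1d(xs, ys), fit_knn_1d(xs, ys, k)]
    return lambda x: mean(m(x) for m in members)

# Illustrative, made-up data: descriptor value -> emission wavelength (nm).
model = fit_ensemble([1, 2, 3, 4], [430, 460, 490, 520], k=1)
print(model(2.4))
```

In this design, the linear member contributes a global trend and the nearest-neighbor member captures local deviations. Weighting members by their validation error is a common alternative to equal weights.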

After training, rigorous model validation is performed to assess predictive performance and generalization ability. Typically, techniques such as k-fold cross-validation or hold-out test sets are employed to ensure that the model performs well on unseen data. Key regression metrics include the coefficient of determination (R²), mean absolute error (MAE), and root mean square error (RMSE), whereas classification tasks may use metrics such as accuracy, precision/recall, and ROC-AUC. High performance on training data must be balanced with checks against overfitting. For example, if data are limited, one might favor simpler models or apply regularization to prevent the model from simply memorizing the training set. By examining validation results, researchers can tune hyperparameters or revisit feature selection to further refine the model before deployment.
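The following sketch shows how k-fold cross-validation produces out-of-fold predictions and how MAE, RMSE, and R² are computed from them. The least-squares line stands in for any model under evaluation, which is an assumption for brevity. In practice, scikit-learn's `KFold`, `cross_val_predict`, `mean_absolute_error`, and `r2_score` provide the same functionality.

```python
import math
import random
from statistics import mean

def fit_line(xs, ys):
    """Least-squares line used here as the model under evaluation (assumption)."""
    mx, my = mean(xs), mean(ys)
    a = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return lambda x: my + a * (x - mx)

def regression_metrics(y_true, y_pred):
    """MAE, RMSE, and R^2 = 1 - SS_res / SS_tot."""
    errors = [t - p for t, p in zip(y_true, y_pred)]
    ss_res = sum(e * e for e in errors)
    y_bar = mean(y_true)
    ss_tot = sum((t - y_bar) ** 2 for t in y_true)
    return {
        "MAE": mean(abs(e) for e in errors),
        "RMSE": math.sqrt(ss_res / len(errors)),
        "R2": 1.0 - ss_res / ss_tot if ss_tot else float("nan"),
    }

def k_fold_predictions(xs, ys, fit, k=5, seed=0):
    """Out-of-fold predictions: each sample is predicted by a model that never saw it."""
    idx = list(range(len(xs)))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    preds = [None] * len(xs)
    for fold in folds:
        held_out = set(fold)
        train = [i for i in idx if i not in held_out]
        model = fit([xs[i] for i in train], [ys[i] for i in train])
        for i in fold:
            preds[i] = model(xs[i])
    return preds
```

A large gap between training-set metrics and these out-of-fold metrics is the usual symptom of overfitting.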

Finally, the validated model is deployed for property prediction or high-throughput screening of candidate materials. In fluorescent materials research, deployment could mean using the model to predict the emission wavelengths or quantum yields of candidate molecules before synthesis. It could also mean scanning a virtual library of molecular structures to find promising luminophores (i.e., high-throughput virtual screening) [29,30,31]. In some cases, multi-task learning is employed, in which a single model simultaneously predicts multiple related fluorescent properties (for example, both emission energy and quantum yield) to exploit correlations between them. For the model's predictions to be practically useful, the model must be not only accurate but also generalizable: it should reliably predict properties for novel molecules or material compositions that were not represented in the training data. Throughout this workflow, iterative refinement is often necessary. As the scope of fluorescent materials under study expands, researchers periodically update the dataset with new experimental results, adjust feature sets, or retrain models to maintain and improve predictive performance. Increasingly, active learning strategies are integrated into this refinement loop. In these strategies, the model suggests the most informative new experiments or data points to acquire, which improves it with minimal experimental effort [30,32,33,34,35,36].
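The active learning loop described above can be sketched as query-by-committee. The sketch fits a committee of least-squares lines on leave-one-out subsets of the labeled data. It then queries the pool candidate on which the committee disagrees most. The `oracle` function is a hypothetical stand-in for a new experiment or simulation. The one-descriptor setup is an assumption for brevity. Other acquisition strategies, such as expected improvement with a Gaussian-process surrogate, are also common.

```python
from statistics import mean, pstdev

def fit_line(xs, ys):
    """Least-squares line; degenerates to a constant if x has no spread."""
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    a = 0.0 if sxx == 0 else sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    return lambda x: my + a * (x - mx)

def jackknife_committee(labeled):
    """One model per leave-one-out subset; their spread approximates uncertainty."""
    items = list(labeled.items())
    models = []
    for skip in range(len(items)):
        subset = [p for i, p in enumerate(items) if i != skip]
        models.append(fit_line([x for x, _ in subset], [y for _, y in subset]))
    return models

def active_learning(labeled, pool, oracle, rounds=3):
    """Repeatedly label the candidate with the largest committee disagreement."""
    labeled, pool, queries = dict(labeled), list(pool), []
    for _ in range(min(rounds, len(pool))):
        models = jackknife_committee(labeled)
        query = max(pool, key=lambda x: pstdev([m(x) for m in models]))
        labeled[query] = oracle(query)  # stands in for a new measurement
        pool.remove(query)
        queries.append(query)
    return queries, labeled
```

The committee disagrees most far from the labeled data. As a result, the loop tends to explore poorly covered regions of descriptor space first.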

In summary, the ML workflow for fluorescent property prediction mirrors a standard data-driven modeling pipeline, but it must be executed with special attention to the nuances of photophysical data. Data quality and feature relevance set the upper limit on achievable model performance, while thoughtful model selection, hyperparameter tuning, and validation ensure that the resulting model can be trusted to guide fluorescent material discovery. This foundational workflow underpins the more advanced machine learning strategies discussed in subsequent sections. As shown in Figure 2, the overall ML workflow spans data collection, feature engineering, model training, and iterative validation for fluorescence prediction.

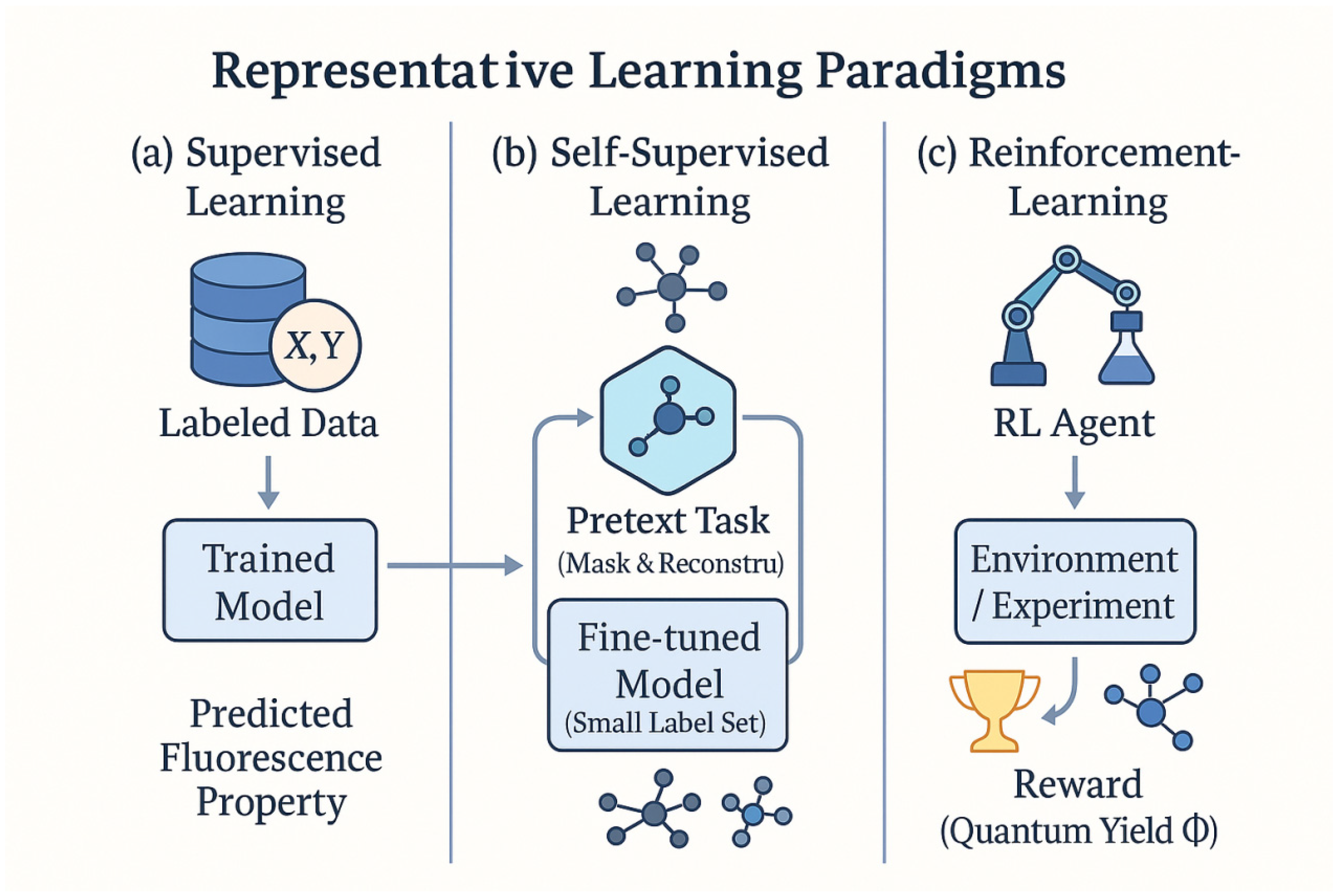

2.2. Representative Learning Paradigms: Supervised, Self-Supervised, and Reinforcement Learning

Machine learning methods can be categorized by how they learn from data. The most widely used paradigm in materials informatics is supervised learning, in which models are trained on examples paired with known output labels (here, measured fluorescent properties). The goal of supervised learning is to learn an accurate mapping from input features (e.g., molecular descriptors) to target outputs (e.g., emission wavelength). Supervised learning has underpinned most ML studies on fluorescent materials to date, because experimental or simulated fluorescence data are available as labels for many known compounds. For instance, a supervised regression model might be trained on a database of molecules with their measured absorption and emission peaks and then used to predict the photoluminescence wavelength of new molecules. Owing to its maturity and effectiveness, supervised learning remains the workhorse for most predictive tasks in this field, such as quantitative structure–property relationship (QSPR) modeling. However, supervised approaches require substantial labeled datasets, and their performance can degrade when data are scarce or biased toward certain regions of chemical space.

By contrast, self-supervised learning leverages unlabeled data to pre-train models via surrogate tasks. This provides a way to exploit large pools of unannotated information, such as uncharacterized molecular structures or unassigned spectra. In self-supervised schemes, the model learns intrinsic patterns or representations from the data itself, for example by predicting masked parts of an input or by distinguishing altered inputs from original ones. The model can then be fine-tuned on the actual prediction task using only a small amount of labeled data. This paradigm is valuable for fluorescent materials research because experimental fluorescence labels are costly and time-consuming to obtain, whereas uncharacterized molecules and spectra are relatively plentiful. As shown in Figure 3, supervised learning relies on labeled structure–property pairs, while self-supervised learning leverages abundant unlabeled molecular or spectral data. One representative approach is to devise a “pretext” task on molecular structures, such as predicting masked fragments of a molecule or generating one data modality from another [37,38,39,40,41]. For example, Xie et al. introduced a chemistry-aware fragmentation strategy in which a model is trained to reconstruct missing substructures of a molecule from the remaining parts [42]. This self-supervised pre-training approach (termed CAFE-MPP) forces the model to learn chemically meaningful features of molecules, which in turn improves its performance on downstream fluorescent property prediction tasks [42]. More generally, self-supervised learning can significantly improve predictive accuracy and robustness by pre-training on large unlabeled chemical libraries and then fine-tuning on smaller labeled fluorescent-material datasets. It effectively acts as a form of knowledge transfer. The model extracts general chemical representations (for example, molecular motifs relevant to electronic transitions) from vast unlabeled data. These representations help it predict fluorescence behavior even when only limited training examples are available for the target task. Self-supervised paradigms are still emerging in this field, but they hold great potential to alleviate data scarcity and to uncover subtle structure–property relationships that purely supervised models might miss.
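The sketch below shows how a masked-token pretext task turns unlabeled SMILES strings into training pairs. It is a generic masking scheme in the style of masked language models, applied to a simplified, assumed tokenizer. It is not the fragment-level CAFE-MPP procedure. The 15% mask rate and the `<mask>` symbol are illustrative choices. The model that would learn to fill in the masks is omitted.

```python
import random
import re

# Simplified SMILES tokenizer (assumption): bracket atoms, two-letter halogens,
# single letters, ring-closure digits, and common bond/branch symbols.
TOKEN_PATTERN = re.compile(r"\[[^\]]+\]|Br|Cl|[A-Za-z]|\d|[=#()+\-@/\\%.]")
MASK = "<mask>"

def make_masked_examples(smiles_list, mask_rate=0.15, seed=0):
    """Turn unlabeled SMILES into (masked tokens, {position: original token}) pairs."""
    rng = random.Random(seed)
    examples = []
    for smiles in smiles_list:
        tokens = TOKEN_PATTERN.findall(smiles)
        # Only atom tokens are masked; bonds, branches, and ring digits stay visible.
        atom_positions = [i for i, t in enumerate(tokens) if t[0].isalpha() or t[0] == "["]
        if not atom_positions:
            continue
        n_mask = max(1, round(mask_rate * len(atom_positions)))
        chosen = rng.sample(atom_positions, n_mask)
        masked = list(tokens)
        for i in chosen:
            masked[i] = MASK
        # The targets are the hidden tokens: labels come "for free" from the data.
        examples.append((masked, {i: tokens[i] for i in chosen}))
    return examples

for masked, targets in make_masked_examples(["c1ccccc1O", "CC(=O)Nc1ccc(O)cc1"]):
    print("".join(masked), targets)
```

A network pre-trained to recover the hidden atoms must learn local valence and aromaticity patterns. Fine-tuning can then reuse this knowledge for property prediction.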

Another promising paradigm is reinforcement learning (RL), which differs fundamentally from both supervised and self-supervised approaches. In RL, an agent learns to make a sequence of decisions through trial-and-error interaction with an environment. It is guided by a reward signal rather than by labeled examples. Instead of training on fixed input–output pairs, the RL agent actively explores different actions, for example proposing a new molecular structure or adjusting a synthesis parameter. It receives higher rewards when those actions yield a desirable outcome, such as a high predicted quantum yield. This approach is well suited to optimization and design problems in fluorescent materials science. For instance, researchers have applied RL to the inverse design of fluorophores, with the goal of discovering novel fluorescent molecules whose properties surpass those present in the training set [43]. One notable strategy uses RL to navigate chemical space by assembling a molecule step by step. The agent adds chemical fragments one at a time, treating each addition as an action, and is rewarded for constructing molecules that meet target fluorescence criteria. Kim et al. demonstrated an RL-guided molecular generator capable of finding emissive compounds with extreme target properties that conventional models, which are bound to the training data distribution, failed to discover [44]. This result highlights RL's strength in extrapolation. RL can search beyond the domain of known examples by prioritizing high-reward (high-performance) candidates rather than strictly reproducing patterns seen in the training data. Moreover, domain knowledge can be incorporated into RL by shaping the reward function, for example by penalizing structures that violate chemical stability rules. RL also naturally handles multi-step decision processes such as multi-stage syntheses or sequential screening experiments. In the context of fluorescent materials, one can envision RL-driven systems that suggest which molecule to synthesize next or how to tune processing conditions to maximize emission intensity. Early studies have even combined RL with generative models, for example using an RL policy to guide a generative adversarial network in molecule creation. These studies reported promising results in creating unique candidate fluorophores [44,45]. Overall, RL provides a powerful tool for active discovery and optimization in fluorescent material research. It complements passive predictive models with goal-directed search through vast chemical and process parameter spaces.
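The fragment-by-fragment strategy can be illustrated with tabular Q-learning. This minimal sketch is not the method of the cited studies. The fragment vocabulary consists of abstract labels. The reward is a hypothetical hand-written surrogate that stands in for a trained property predictor. The agent builds a three-fragment "molecule", receives a reward only when construction is complete, and propagates that reward back to earlier choices through temporal-difference updates.

```python
import random

# Hypothetical fragment vocabulary; real systems use chemically explicit fragments.
FRAGMENTS = ["donor", "acceptor", "pi-bridge", "heavy-atom"]

def toy_reward(molecule):
    """Assumed surrogate score standing in for a trained property predictor."""
    score = 0.0
    if "donor" in molecule and "acceptor" in molecule:
        score += 1.0                             # reward a donor-acceptor pair
    score += 0.3 * molecule.count("pi-bridge")   # modest bonus for extended conjugation
    score -= 1.0 * molecule.count("heavy-atom")  # penalty mimicking heavy-atom quenching
    return score

def greedy_action(q, state):
    return max(FRAGMENTS, key=lambda a: q.get((state, a), 0.0))

def q_learning(n_steps=3, episodes=10000, alpha=0.5, epsilon=0.3, seed=0):
    """Tabular Q-learning; each episode appends n_steps fragments, reward at the end."""
    rng = random.Random(seed)
    q = {}  # (state, action) -> estimated return
    for _ in range(episodes):
        state = ()
        while len(state) < n_steps:
            # Epsilon-greedy exploration over the fragment vocabulary.
            action = rng.choice(FRAGMENTS) if rng.random() < epsilon else greedy_action(q, state)
            next_state = state + (action,)
            done = len(next_state) == n_steps
            reward = toy_reward(next_state) if done else 0.0
            future = 0.0 if done else q.get((next_state, greedy_action(q, next_state)), 0.0)
            old = q.get((state, action), 0.0)
            q[(state, action)] = old + alpha * (reward + future - old)  # undiscounted TD update
            state = next_state
    return q

def greedy_molecule(q, n_steps=3):
    """Assemble a molecule by always choosing the highest-valued fragment."""
    state = ()
    while len(state) < n_steps:
        state += (greedy_action(q, state),)
    return state

print(greedy_molecule(q_learning()))
```

Because the reward is defined by the designer, chemical constraints can be added as extra penalty terms without changing the learning algorithm. This mirrors the reward-shaping idea described above.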

2.3. Key Modeling Techniques: Attention, Multimodal Learning, Transfer, and Interpretability

To further improve the performance and usefulness of ML models for fluorescent materials, researchers have adopted several key modeling techniques in recent years. One such advance is the attention mechanism, which allows a model to weight the importance of different parts of the input dynamically. Attention was popularized in natural language processing (e.g., by the Transformer architecture) and has since been adapted to chemical representations and even spectral data. By learning where to “focus”, an attention-enabled model can highlight which atoms or bonds in a molecule most influence its fluorescence. In sequence-based models that operate on linear representations such as SMILES strings, self-attention captures long-range dependencies more effectively than traditional recurrent neural networks. An example of such a dependency is a distant substituent that affects a molecule's emissive properties. Similarly, in graph-based models, the graph attention network (GAT) extends a standard graph neural network by assigning learnable weights to neighboring nodes in a molecular graph. The model can therefore pay more attention to specific atomic interactions that strongly affect excited-state behavior, such as a particular conjugated bond or an electron-donating group. At the same time, it down-weights less relevant parts of the structure. Attention not only tends to improve prediction accuracy but also provides a measure of interpretability: the learned attention weights can be visualized as an “importance map” over the molecule or input features. For example, an attentive model might learn that the donor–acceptor pair in a TADF emitter deserves high attention because of its effect on the singlet–triplet energy gap, thereby implicitly identifying a key substructure. In crystalline materials, one study introduced a crystal GAT model that improved the prediction of new stable compounds by highlighting critical local chemical environments [46]. Overall, attention mechanisms make ML models for fluorescent materials more flexible in modeling complex relationships and more transparent about which features drive their predictions.
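The computation behind one graph-attention step is short. This sketch follows the general form of the original GAT layer: a LeakyReLU score on concatenated projected features, normalized by a softmax over neighbors. It makes several simplifying assumptions. The features are already multiplied by the shared weight matrix. It uses a single attention head and omits the final nonlinearity. The example numbers are made up.

```python
import math

def leaky_relu(x, slope=0.2):
    return x if x > 0 else slope * x

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def gat_attention(h_center, neighbors, attn_vec):
    """Attention coefficients of one atom over its neighbors, GAT-style.

    h_center and each neighbors[j] are projected feature vectors of length d
    (assumed already multiplied by the shared weight matrix W); attn_vec is the
    attention vector of length 2 * d. Include the atom itself in `neighbors`
    if a self-loop is wanted.
    """
    scores = [leaky_relu(sum(a * v for a, v in zip(attn_vec, h_center + h_j)))
              for h_j in neighbors]
    alphas = softmax(scores)  # normalized importance of each neighbor
    d = len(h_center)
    updated = [sum(alpha * h_j[k] for alpha, h_j in zip(alphas, neighbors)) for k in range(d)]
    return alphas, updated

alphas, updated = gat_attention([1.0, 0.0], [[0.0, 1.0], [2.0, 0.0]], [0.0, 0.0, 1.0, 0.0])
print(alphas, updated)  # the second neighbor receives most of the attention
```

The returned `alphas` are exactly the quantities that would be rendered as an importance map over a molecule's bonds.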

Another important approach is multimodal learning, which integrates multiple forms of data or representations into a single predictive model. Fluorescent material properties are often governed by a combination of factors that are captured in different data modalities. For instance, a molecule's fluorescence quantum yield may depend both on its molecular structure and on its excitation/emission spectrum or environment. Rather than building separate models for each factor, multimodal ML techniques learn a joint representation from all available data types to better predict outcomes. In practice, this could mean combining chemical structural descriptors with optical spectra, images, or even textual information about experimental conditions in one unified framework. Such integration has proven powerful in recent studies. For example, the modeling of aggregation-induced emission (AIE) luminogens was significantly improved by hybrid descriptors that include both molecular structural features and aggregate-state spectral features [47]. By training on these multimodal inputs, the model captures how structural factors and spectral behavior together influence emission efficiency. This strategy is especially valuable when single-modality data are insufficient to account for complex photophysical phenomena. “Cross-modal” approaches are also emerging, in which a model translates an experimental absorption spectrum into the most likely molecular structure, or vice versa, much like a translation task between modalities. Incorporating multiple data sources also tends to make models more robust. A multimodal model is less likely to overfit to the quirks of one particular data type, and it can generalize better by finding patterns that are consistent across modalities. As public datasets for fluorescent materials continue to grow (including spectral libraries and molecular databases), multimodal learning is expected to become increasingly prominent, enabling richer predictive insights than any single data stream alone.
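The simplest way to combine modalities is early fusion: standardize each modality's features and concatenate them into one vector per sample. The sketch below illustrates that strategy only. It is not the specific method of the cited AIE study. The column meanings and the `spectral_weight` knob are hypothetical. In a real pipeline, the standardization statistics would be computed on the training split only.

```python
from statistics import mean, pstdev

def zscore_columns(rows):
    """Standardize each column; constant columns are centered with std treated as 1."""
    columns = list(zip(*rows))
    stats = [(mean(col), pstdev(col) or 1.0) for col in columns]
    return [[(v - m) / s for v, (m, s) in zip(row, stats)] for row in rows]

def early_fusion(structural, spectral, spectral_weight=1.0):
    """Concatenate standardized structural and spectral features per sample."""
    if len(structural) != len(spectral):
        raise ValueError("each sample needs both modalities")
    s_feats = zscore_columns(structural)
    p_feats = zscore_columns(spectral)
    return [s + [spectral_weight * v for v in p] for s, p in zip(s_feats, p_feats)]

# Hypothetical columns: [conjugation proxy, rotatable bonds] and [aggregate emission peak, nm].
fused = early_fusion([[1, 10], [3, 30]], [[500], [520]])
print(fused)
```

Standardizing each modality first keeps a feature measured in nanometers from dominating one measured in bond counts. Late fusion, which trains one model per modality and combines their predictions, is a common alternative.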

Transfer learning is another technique that has become crucial in this domain, because it helps address the limited data available for specific classes of fluorescent materials. In transfer learning, knowledge gained from one task or dataset is transferred to improve learning on a related task or dataset. A common scenario in fluorescent materials research is abundant data for one type of system or property but far less for another. For example, there may be thousands of measurements for quantum dots but only a few dozen for a new class of organic dyes. Rather than training a model from scratch on the small dataset, one can first pre-train a model on the larger, related dataset and then fine-tune it on the target task with the smaller dataset. This approach has been shown to significantly boost performance in fluorescence property prediction. For instance, Jeong et al. demonstrated a deep learning model that was initially trained on a large corpus of optical spectra. The model was then adapted via transfer learning to predict the orbital energy levels (HOMO/LUMO) of organic fluorophores [48]. The transfer-learned model achieved substantially higher accuracy than a model trained directly on the small fluorophore dataset [48,49,50]. Transfer learning succeeds in such contexts because many photophysical patterns and mechanisms are shared across different material systems. A neural network may already have learned general spectral or molecular feature patterns from one dataset (e.g., inorganic phosphors or quantum dots). It can then reuse those representations to learn the behavior of another dataset (e.g., organic emitters) more efficiently. In essence, the model starts with a “head start” in recognizing which molecular characteristics correlate with fluorescence properties. Transfer learning is especially powerful when combined with careful fine-tuning, in which the model's parameters are adjusted gradually on the new data to avoid catastrophic forgetting of earlier knowledge. This technique reduces the amount of experimental data required in the target domain and accelerates model development. It also enables cross-domain transfer. For example, a model pre-trained on computationally generated spectra (from simulations) can help interpret experimental spectra, which transfers knowledge from a simulated domain to an experimental one. As the fluorescent materials field diversifies with new emitters and operating conditions, transfer learning provides a practical way to leverage data-rich domains and jump-start modeling in emerging areas.
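The pre-train/fine-tune pattern can be demonstrated on a deliberately small synthetic problem. In this sketch, a linear model trained by gradient descent stands in for a neural network. The "source" and "target" tasks are assumed functions that share their slopes but differ in offset. The source task is data-rich (25 points), and the target task has only three labels. With the same small fine-tuning budget, starting from the pre-trained weights gives a lower test error than starting from scratch.

```python
def predict(w, b, x):
    return sum(wk * xk for wk, xk in zip(w, x)) + b

def train(data, w, b, lr=0.1, epochs=200):
    """Full-batch gradient descent on mean squared error, starting from (w, b)."""
    w = list(w)
    n = len(data)
    for _ in range(epochs):
        grad_w = [0.0] * len(w)
        grad_b = 0.0
        for x, y in data:
            err = predict(w, b, x) - y
            for k, xk in enumerate(x):
                grad_w[k] += 2.0 * err * xk / n
            grad_b += 2.0 * err / n
        w = [wk - lr * gk for wk, gk in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b

def mse(data, w, b):
    return sum((predict(w, b, x) - y) ** 2 for x, y in data) / len(data)

# Synthetic, assumed tasks: shared slopes, different offsets.
def source_fn(x):
    return 2.0 * x[0] - 1.0 * x[1] + 0.5

def target_fn(x):
    return 2.0 * x[0] - 1.0 * x[1] + 1.0

grid = [(i / 4, j / 4) for i in range(5) for j in range(5)]
source_data = [(x, source_fn(x)) for x in grid]                       # data-rich domain
target_train = [(x, target_fn(x)) for x in [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]]
target_test = [(x, target_fn(x)) for x in grid]

w_src, b_src = train(source_data, [0.0, 0.0], 0.0, epochs=2000)  # pre-training
w_ft, b_ft = train(target_train, w_src, b_src, epochs=20)         # fine-tuning
w_sc, b_sc = train(target_train, [0.0, 0.0], 0.0, epochs=20)      # from scratch
mse_finetuned = mse(target_test, w_ft, b_ft)
mse_scratch = mse(target_test, w_sc, b_sc)
print(mse_finetuned, mse_scratch)
```

The benefit comes entirely from the shared structure between the tasks. If the source and target relationships were unrelated, the pre-trained starting point would offer no advantage.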

Finally, ensuring model interpretability is a key concern, because scientific understanding and trust are as important as raw predictive accuracy in materials research. Interpretability techniques aim to explain or rationalize the predictions of complex models, helping researchers gain insight into the structure–property relationships the model has learned. There are several ways to achieve this. One straightforward method is to use inherently interpretable models (such as decision trees or sparse linear models) or to examine feature importance scores in ensemble methods. For example, random forest and XGBoost models can rank which input descriptors (e.g., molecular weight, band gap, or conjugation length) most strongly influence the fluorescence output. This yields human-understandable insights such as “conjugation length and donor strength were the top predictors of emission wavelength”. For more complex models such as deep neural networks, however, post hoc interpretability tools are required. Techniques such as Shapley value analysis and saliency maps have recently been applied to chemical ML problems. For instance, MolSHAP was developed to compute Shapley additive explanations at the level of molecular substructures, quantifying how much each functional group contributes to a model's predicted property [51]. Applied to a model that predicts quantum yield, MolSHAP might reveal that a heavy-atom substituent lowers the predicted yield (owing to enhanced intersystem crossing). It might also show that a rigid planar core raises the yield (by reducing non-radiative decay). Such explanations align well with chemical intuition, so they can validate the model's reasoning or, conversely, reveal when the model relies on spurious correlations. Other interpretability techniques use the model's internal attention weights or perform counterfactual analyses, for example examining how small, targeted modifications to a molecule's structure would change the model's prediction. Recent work in explainable AI for chemistry has even begun to integrate physical knowledge into the interpretability process. For example, explainable graph neural networks can be constrained to attend to chemically meaningful features such as aromatic rings or charge-transfer pathways [52]. When ML models are more transparent, researchers can trust and act on their predictions more readily, which is crucial for adopting ML-guided design of new fluorescent materials. Moreover, improved interpretability often leads to new scientific hypotheses. If a model consistently points to a particular molecular motif as crucial for high brightness or stability, that insight can guide the rational design of next-generation luminophores and prompt targeted experiments to verify the model's suggestions.
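Shapley values can be computed exactly for a model with only a few inputs by enumerating every coalition of features. This sketch works at the level of individual descriptors, not molecular substructures as MolSHAP does. It defines a coalition's value by replacing absent features with a baseline, which is one common convention among several. The cost grows exponentially with the number of features. This is why practical tools such as the `shap` Python package rely on approximations.

```python
from itertools import combinations
from math import factorial

def shapley_values(model, x, baseline):
    """Exact Shapley values; features outside a coalition take their baseline value."""
    n = len(x)

    def value(coalition):
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            for subset in combinations(others, size):
                s = set(subset)
                # Standard Shapley weight |S|! (n - |S| - 1)! / n!
                weight = factorial(size) * factorial(n - size - 1) / factorial(n)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi

# Hypothetical quantum-yield model over [rigidity, heavy_atom, conjugation].
def toy_qy_model(z):
    return 0.3 * z[0] - 0.2 * z[1] + 0.1 * z[2] + 0.1 * z[0] * z[2]

phi = shapley_values(toy_qy_model, [1.0, 1.0, 1.0], [0.0, 0.0, 0.0])
print(phi)  # positive for rigidity and conjugation, negative for the heavy atom
```

By construction, the attributions sum to the difference between the prediction for `x` and the prediction for the baseline. An interaction term such as rigidity times conjugation is split equally between the two features involved.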

In summary, attention mechanisms, multimodal data integration, transfer learning, and interpretability methods are pivotal techniques. They enable ML models to predict fluorescent material properties more accurately and to do so in a way that yields scientific insight and practical trust. Together, these techniques help bridge the gap between black-box predictions and actionable understanding, making data-driven models more useful and credible in real-world fluorescent materials research. As Figure 4 illustrates, attention mechanisms, multimodal fusion, and physics-informed models enhance interpretability and robustness.

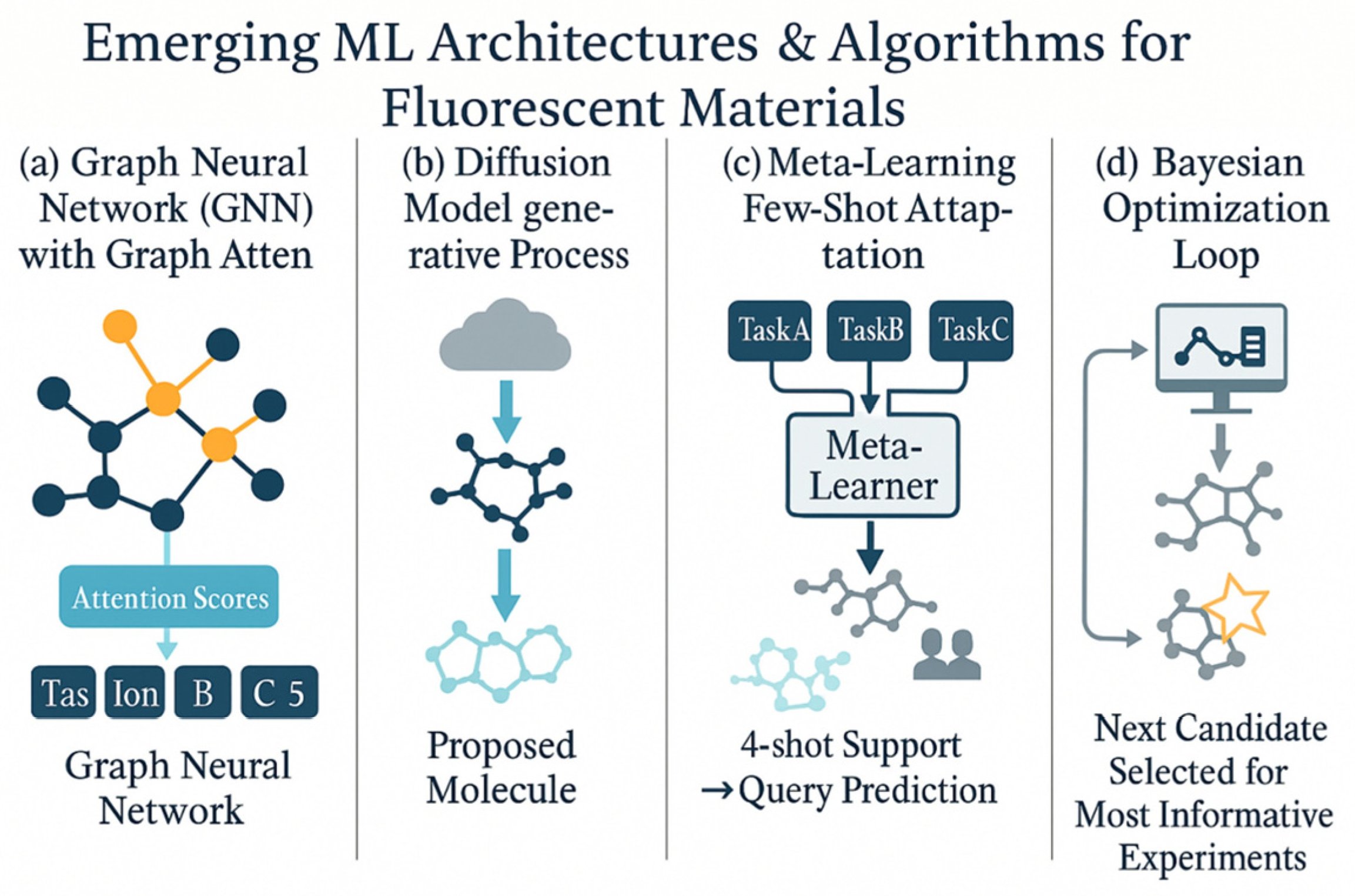

2.4. Emerging Architectures and Algorithms: GNNs, GATs, Diffusion Models, Meta-Learning, and Bayesian Optimization

As the field progresses, several advanced ML architectures and algorithms have emerged that show great promise for fluorescent materials research. One major development is the rise of graph neural networks (GNNs). Unlike traditional neural networks that require fixed-length vector features, GNNs operate directly on graph-structured data. Here that means molecular graphs or crystal lattices, in which nodes represent atoms and edges represent bonds or interactions. GNN models (including message-passing neural networks) naturally capture the connectivity and local chemical environments of a molecule, which makes them well suited to predicting properties that fundamentally derive from molecular structure. In fluorescent materials, GNN-based models have been used to predict outcomes such as emission wavelength and stability by training on large datasets of molecular structures. These models often outperform traditional descriptor-based approaches because they do not rely on human-defined features. Instead, the network learns its own relevant features during training (for example, recognizing subgraph patterns that correspond to chromophores or quenchers). An important extension of the GNN is the graph attention network (GAT), which incorporates attention mechanisms into the graph model. GATs allow the network to weight different bonds or neighboring atoms unequally, effectively learning which parts of the molecular graph matter most for the property of interest. This benefits both accuracy and interpretability. For example, a GAT model might focus attention on the π-conjugated core of a molecule when predicting its fluorescence color, consistent with the chemical intuition that the conjugated system dictates emission wavelength. Indeed, a study on crystalline materials introduced a crystal GAT model that improved the prediction of new stable compounds by highlighting critical local chemical environments [22]. For luminescent molecular crystals or metal–organic frameworks, such graph-attention models could similarly identify key structural motifs (like specific ligand–metal interactions or packing features) that influence photoluminescence. As research pushes into more complex material systems and larger molecular datasets, GNNs and GATs provide scalable and insightful modeling tools that directly leverage the structural formula or crystallographic data of fluorescent materials.
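The attention idea can be made concrete with a single-head graph-attention layer in NumPy. The layer follows the formulation of the original GAT paper (Veličković et al., 2018): a shared projection, a LeakyReLU attention score, and a softmax over each atom's bonded neighbors. It is not the crystal GAT model of [22]. The four-atom chain, the feature sizes, and the random untrained weights are hypothetical stand-ins.

```python
import numpy as np

def gat_layer(h, adj, W, a, negative_slope=0.2):
    """Single-head graph-attention layer (GAT-style) on one molecular graph.

    h: (n_atoms, f_in) atom features; adj: (n_atoms, n_atoms) 0/1 bond matrix;
    W: (f_in, f_out) shared projection; a: (2 * f_out,) attention vector.
    Returns aggregated features (n_atoms, f_out) and attention weights alpha.
    """
    z = h @ W                                            # project every atom
    f_out = z.shape[1]
    # e_ij = LeakyReLU(a^T [z_i || z_j]), computed for all atom pairs at once
    e = (z @ a[:f_out])[:, None] + (z @ a[f_out:])[None, :]
    e = np.where(e > 0, e, negative_slope * e)
    mask = (adj + np.eye(len(adj))) > 0                  # bonded neighbours plus a self-loop
    e = np.where(mask, e, -np.inf)                       # non-neighbours get zero weight
    e = e - e.max(axis=1, keepdims=True)                 # numerically stable softmax
    alpha = np.exp(e)
    alpha = alpha / alpha.sum(axis=1, keepdims=True)     # each atom's weights sum to 1
    return alpha @ z, alpha

# Hypothetical 4-atom chain with random features and untrained weights
rng = np.random.default_rng(0)
adj = np.array([[0, 1, 0, 0], [1, 0, 1, 0], [0, 1, 0, 1], [0, 0, 1, 0]])
h = rng.normal(size=(4, 3))
W = rng.normal(size=(3, 2))
a = rng.normal(size=4)
out, alpha = gat_layer(h, adj, W, a)
```

In a trained model, `W` and `a` are learned, several heads are usually concatenated, and a nonlinearity is applied after aggregation. Inspecting `alpha` is what gives GATs their interpretability.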

Another cutting-edge development is the advent of diffusion models for generative tasks. Diffusion models are a class of deep generative networks that have recently proven highly effective in domains like image synthesis, and they are now being adapted for molecule generation and materials design. The basic idea is that a diffusion model learns to invert a gradual noising process: it starts from random noise and iteratively “denoises” it to produce a structured output such as a molecular graph. In the context of fluorescent materials, diffusion models enable a new approach to de novo molecular design. By appropriately guiding the diffusion process, one can generate novel molecular structures with desired fluorescent properties. A powerful example is the Guided Diffusion for Inverse Molecular Design (GaUDI) framework. In a recent study, GaUDI was trained on a large dataset of polycyclic aromatic compounds (many of which are classic organic fluorophores) and then used to generate new molecular structures optimized for multiple target properties (such as specific HOMO–LUMO energy gaps and thermal stabilities) [53]. By incorporating a property prediction module into the generative loop, the diffusion model was biased toward producing candidates with the desired photophysical characteristics. Remarkably, this diffusion-based approach proposed chemically valid, diverse molecules that went beyond the training set distribution, achieving nearly 100% validity and uniqueness in the generated candidates [53]. This result indicates a strong potential for discovering truly novel emitters that conventional generative techniques or human intuition might miss.
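The noising process that a diffusion model learns to invert can be written in closed form. The following NumPy sketch assumes a DDPM-style linear noise schedule. GaUDI and other molecular diffusion models use their own schedules and denoising networks, so this schedule is an assumption. A small random array stands in for atom coordinates. The sketch shows that once the added noise is known, the clean sample is recovered exactly. In practice, a trained neural network estimates that noise.

```python
import numpy as np

T = 1000
betas = np.linspace(1e-4, 0.02, T)        # assumed linear noise schedule
alpha_bar = np.cumprod(1.0 - betas)       # cumulative signal-retention factor

def noise(x0, t, eps):
    """Forward process: x_t = sqrt(abar_t) * x0 + sqrt(1 - abar_t) * eps."""
    return np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * eps

def denoise_estimate(xt, t, eps_hat):
    """Estimate x0 from x_t given a noise estimate eps_hat (the job of a trained network)."""
    return (xt - np.sqrt(1.0 - alpha_bar[t]) * eps_hat) / np.sqrt(alpha_bar[t])

rng = np.random.default_rng(0)
x0 = rng.normal(size=(5, 3))              # stand-in for 3D coordinates of a 5-atom fragment
eps = rng.normal(size=x0.shape)
xt = noise(x0, 500, eps)
x0_hat = denoise_estimate(xt, 500, eps)   # exact here only because the true noise is supplied
```

With this schedule, `alpha_bar[-1]` falls below 1e-4, so the final state is essentially pure noise. Generation runs the learned inversion step by step from t = T down to 0.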

Compared to earlier generative techniques like variational autoencoders (VAEs) or generative adversarial networks (GANs), diffusion models tend to provide more stable training and higher-quality outputs, and they can be more readily conditioned on complex design objectives. For fluorescent materials design, one can imagine using diffusion models to simultaneously optimize a molecule’s structure for a set of criteria (for example, maximizing quantum yield and photostability while also hitting a target emission color) by formulating those objectives into the diffusion model’s guidance or reward function. This capability aligns well with the multi-objective nature of materials discovery. As these models mature, diffusion-based generative design could become a mainstream approach to propose new fluorescent compounds (or even device structures) that meet stringent performance requirements before any lab synthesis is attempted. This generative capability essentially enables an inverse design strategy: rather than predicting properties from a given structure (the traditional forward modeling approach), the ML model works backward from desired target properties to suggest new molecular structures that are likely to exhibit those properties [53,54].
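Guidance toward several design objectives can be sketched as adding weighted property gradients to the model's score during sampling. The toy below is not GaUDI's procedure and rests on several assumptions. A two-dimensional latent space with a standard normal prior replaces a trained diffusion model. Two hypothetical linear property predictors stand in for "quantum yield" and "emission color". With this setup, the guided Langevin sampler should concentrate around the weighted sum of the objective directions.

```python
import numpy as np

def guided_langevin(weights, directions, n_samples=2000, n_steps=500, step=0.05, seed=0):
    """Unadjusted Langevin sampling of exp(log p(z) + sum_k w_k * f_k(z)).

    Prior p(z) = N(0, I), so its score is -z. Each hypothetical property predictor
    is linear, f_k(z) = d_k . z, so its gradient is the constant vector d_k.
    """
    rng = np.random.default_rng(seed)
    dim = len(directions[0])
    z = rng.normal(size=(n_samples, dim))
    guidance = sum(w * np.asarray(d, dtype=float) for w, d in zip(weights, directions))
    for _ in range(n_steps):
        score = -z + guidance                      # prior score + weighted property gradients
        z = z + step * score + np.sqrt(2 * step) * rng.normal(size=z.shape)
    return z

# Two hypothetical objectives in a 2D latent space: "quantum yield" and "emission color"
samples = guided_langevin(weights=[1.5, 0.5], directions=[[1.0, 0.0], [0.0, 1.0]])
```

Changing the weights trades one objective off against the other, which is the multi-objective control described above. In a real diffusion model, the score would come from the trained denoiser, and the gradients would come from differentiable property predictors.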

In parallel, the concept of meta-learning (or “learning to learn”) has gained traction as a way to tackle the small-data problem that often plagues specialized fluorescent material studies. Meta-learning algorithms improve their learning efficiency by leveraging experience from multiple learning tasks. In practical terms, a meta-learning approach might train a model on a variety of related tasks (each with limited data) to develop an adaptable model that can quickly be fine-tuned to a new task with only a few examples. This is highly relevant when, for example, a researcher has several small datasets (perhaps fluorescence data under different environmental conditions or for different families of luminophores) and wants a model that can handle a brand-new case with minimal additional data. Meta-learning is closely tied to few-shot learning, which aims to achieve reasonable performance from just a handful of training samples by relying on knowledge distilled from previous tasks. In the fluorescent materials domain, few-shot meta-learning could work as follows. During training, the model is exposed to many different fluorescence-prediction tasks (e.g., predicting emission peaks for coumarins, BODIPY dyes, quantum dots, etc.), and through this process it learns a general representation of “what generally controls fluorescence”. When faced with a new task, such as predicting properties for a novel class of lanthanide-doped phosphors with only a few measured data points, the meta-trained model can adapt quickly, often requiring only a handful of additional examples to reach good accuracy.
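The few-shot idea can be illustrated on toy data with Reptile (Nichol et al., 2018), one of the simplest meta-learning algorithms. The sketch assumes a hypothetical family of tasks. Each “dye family” follows its own linear structure–property trend and has five labeled points. This is not the method of [55]. Meta-training moves a shared initialization toward the weights reached after a few gradient steps on each task, so that a new task starts from a better point.

```python
import numpy as np

rng = np.random.default_rng(0)

def sample_task():
    """Hypothetical task: one dye family with its own linear descriptor-property trend."""
    slope, intercept = rng.normal(2.0, 0.3), rng.normal(-1.0, 0.3)
    return lambda x: slope * x + intercept

def adapt(theta, x, y, lr=0.1, steps=20):
    """A few gradient-descent steps on mean-squared error for y ~ theta[0] * x + theta[1]."""
    theta = theta.copy()
    for _ in range(steps):
        err = theta[0] * x + theta[1] - y
        theta -= lr * np.array([np.mean(2 * err * x), np.mean(2 * err)])
    return theta

def reptile(n_iters=2000, k_shot=5, meta_lr=0.1):
    """Reptile: nudge the shared initialization toward each task's adapted weights."""
    theta = np.zeros(2)
    for _ in range(n_iters):
        task = sample_task()
        x = rng.uniform(-1.0, 1.0, size=k_shot)
        theta += meta_lr * (adapt(theta, x, task(x)) - theta)
    return theta

theta_meta = reptile()

# Few-shot adaptation to a new, unseen family with five points
x_new = np.linspace(-1.0, 1.0, 5)
y_new = 2.3 * x_new - 0.8
theta_from_meta = adapt(theta_meta, x_new, y_new)
theta_from_zero = adapt(np.zeros(2), x_new, y_new)
```

With the same five points and the same number of steps, starting from the meta-learned initialization lands closer to the new family's true trend than starting from scratch.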

Recent studies have started to demonstrate the power of meta-learning in materials science. For instance, Allen et al. showed that a meta-learning approach could fit interatomic potential models across multiple levels of theory, resulting in models that adapted to new atomic systems with significantly reduced error using very little training data [55]. Analogously, for fluorescence problems, a meta-learned model could be quickly calibrated to a new measurement setup or a new molecular scaffold with minimal data, leveraging prior knowledge gained from related fluorescence datasets. Another benefit of meta-learning is its ability to handle noisy or inconsistent data. Meta-learning algorithms have shown resilience in scenarios with high label noise or varying data quality, which is useful because experimental fluorescence data often come from different labs or methods and can vary in reliability [56,57]. While still a developing area, meta-learning holds promise for producing more generalizable and adaptable ML models in fluorescent materials research. It effectively serves as a route toward “foundation models” for this field: large-scale models that capture general patterns of fluorescence behavior and can then be specialized to myriad specific prediction tasks with minimal effort.

Beyond model architectures and learning paradigms, advanced approaches in closed-loop optimization are transforming how researchers search for optimal fluorescent materials or experimental conditions. One such approach is Bayesian optimization (BO), which has emerged as a powerful algorithm for guiding both experimental design and hyperparameter tuning in materials science. BO is a sequential optimization strategy particularly well suited to expensive-to-evaluate problems, such as actually synthesizing a new material or running a costly high-accuracy simulation. It works by building a probabilistic surrogate model (often a Gaussian process) of the target objective function and then selecting the next query point by balancing exploration and exploitation (typically via an acquisition function). In fluorescent materials discovery, for example, one might use BO to suggest the next material composition or molecular structure to test in order to maximize a target metric like fluorescence quantum yield or color purity. The BO algorithm uses past observations (e.g., which materials have been tried and their measured properties) to model the objective surface and intelligently propose new candidates that are predicted either to be very high-performing or to significantly reduce uncertainty. One notable advantage of BO is its principled way of incorporating uncertainty: it naturally gravitates toward experiments that are predicted to be highly promising (high reward) or those that would be very informative (high uncertainty and thus high potential information gain). This is ideal in domains where experiments are costly or time-consuming and one cannot brute-force through thousands of candidates.
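The BO loop described above can be sketched with a Gaussian-process surrogate and the expected-improvement acquisition function. Everything about the target here is an assumption: a hypothetical one-dimensional "quantum yield versus dopant fraction" curve, a fixed RBF kernel length scale, and three initial experiments. Real campaigns would fit the kernel hyperparameters and typically rely on a library such as scikit-learn, GPyTorch, or BoTorch.

```python
import numpy as np
from scipy.stats import norm

def rbf(a, b, length=0.15):
    """Squared-exponential kernel with unit prior variance."""
    return np.exp(-0.5 * (a[:, None] - b[None, :]) ** 2 / length**2)

def gp_posterior(x_train, y_train, x_query, jitter=1e-6):
    """Zero-mean GP posterior mean and standard deviation at x_query."""
    K = rbf(x_train, x_train) + jitter * np.eye(len(x_train))
    Ks = rbf(x_train, x_query)
    L = np.linalg.cholesky(K)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_train))
    mu = Ks.T @ alpha
    v = np.linalg.solve(L, Ks)
    var = np.clip(1.0 - np.sum(v**2, axis=0), 1e-12, None)
    return mu, np.sqrt(var)

def expected_improvement(mu, sd, best, xi=0.01):
    """EI for maximization; xi adds a small exploration margin."""
    z = (mu - best - xi) / sd
    return (mu - best - xi) * norm.cdf(z) + sd * norm.pdf(z)

def measure(x):
    """Hypothetical experiment: quantum yield vs. dopant fraction, optimum at x = 0.62."""
    return np.exp(-((x - 0.62) ** 2) / 0.02)

candidates = np.linspace(0.0, 1.0, 501)
x_obs = np.array([0.05, 0.5, 0.95])   # three initial experiments
y_obs = measure(x_obs)
for _ in range(15):                   # 15 BO-selected experiments
    mu, sd = gp_posterior(x_obs, y_obs, candidates)
    x_next = candidates[np.argmax(expected_improvement(mu, sd, y_obs.max()))]
    x_obs = np.append(x_obs, x_next)
    y_obs = np.append(y_obs, measure(x_next))

best_x = x_obs[np.argmax(y_obs)]
```

The `sd * norm.pdf(z)` term rewards uncertain regions, and the `(mu - best) * norm.cdf(z)` term rewards predicted gains. Together they give the exploration–exploitation balance described in the text.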

Researchers have applied BO in fluorescent materials research to navigate large search spaces efficiently with minimal experiments. For instance, BO has been used to optimize the synthesis conditions for phosphors based on two-dimensional materials, improving photoluminescence by efficiently searching the space of growth parameters [58]. Similarly, in an autonomous laboratory setting, BO has helped identify optimal compositional blends of OLED emitters. It discovered formulations that yield long device lifetimes and high efficiency in far fewer experimental iterations than a grid or random search would require. Beyond purely data-driven optimization, physics-guided variants of BO further enhance its power by integrating known physical relationships or constraints into the model. For example, a physics-informed BO approach was demonstrated for materials discovery in which the model was constrained by Vegard’s law (a rule describing how lattice constants vary with composition), enabling more reliable extrapolation beyond measured composition ranges [59]. By embedding such prior knowledge, BO can avoid suggesting implausible or unphysical candidates and focus on the most promising regions of material space that respect known scientific rules.
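One way to embed Vegard’s law into a Gaussian-process surrogate is to use it as the prior mean function, so that the GP only models deviations from the linear composition trend. This sketches the general idea and is not necessarily the formulation used in [59]. The end-member lattice constants and the small synthetic deviation are hypothetical. Far from the measured compositions, the physics-informed model falls back to Vegard’s law, whereas a zero-mean GP falls back to zero.

```python
import numpy as np

A_END, B_END = 5.43, 5.66   # hypothetical end-member lattice constants (angstrom)

def vegard(x):
    """Vegard's law: lattice constant varies linearly with composition x."""
    return (1.0 - x) * A_END + x * B_END

def rbf(a, b, length=0.1):
    return np.exp(-0.5 * (a[:, None] - b[None, :]) ** 2 / length**2)

def gp_mean(x_train, y_train, x_query, prior_mean, jitter=1e-6):
    """GP posterior mean = prior mean + kernel-weighted correction of the residuals."""
    K = rbf(x_train, x_train) + jitter * np.eye(len(x_train))
    residual = y_train - prior_mean(x_train)
    return prior_mean(x_query) + rbf(x_query, x_train) @ np.linalg.solve(K, residual)

x_meas = np.array([0.0, 0.1, 0.2, 0.3])                 # measured compositions cover only x <= 0.3
y_meas = vegard(x_meas) + 0.01 * np.sin(20 * x_meas)    # synthetic small deviation from linearity
x_far = np.array([0.9])
pred_physics = gp_mean(x_meas, y_meas, x_far, vegard)
pred_plain = gp_mean(x_meas, y_meas, x_far, np.zeros_like)
```

An acquisition function built on `pred_physics` would therefore not propose compositions whose predicted lattice constant is unphysical merely because they lie outside the measured range.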

In summary, Bayesian optimization offers a data-efficient strategy to optimize fluorescent materials and their processing, effectively closing the loop by connecting model predictions with experimental decision-making. It is often used in tandem with complementary techniques like reinforcement learning or active learning to guide the selection of subsequent experiments [58]. For example, an active learning approach might analyze a model’s uncertainty to choose the next material candidate whose experimental evaluation would maximally improve the model [60,61]. Together, these emerging architectures (GNNs, GATs, diffusion models) and algorithms (meta-learning, BO, and related strategies) are rapidly expanding the researcher’s toolkit. They enable faster and smarter exploration of the vast design space of fluorescent materials and facilitate the inverse design of new luminophores with desired properties. As illustrated in Figure 5, GNNs, diffusion models, and generative frameworks empower the inverse design and discovery of novel emitters.
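An uncertainty-driven active learning step can be sketched with a bootstrap ensemble. Disagreement among ensemble members serves as the uncertainty estimate, and the candidate with the largest disagreement is measured next. The quadratic models, synthetic training data, and candidate pool below are assumptions chosen only for illustration.

```python
import numpy as np

def fit_bootstrap_ensemble(x, y, n_models=20, degree=2, seed=0):
    """Fit polynomial models on bootstrap resamples of the labeled data."""
    rng = np.random.default_rng(seed)
    coefs = []
    for _ in range(n_models):
        idx = rng.integers(0, len(x), size=len(x))     # resample with replacement
        coefs.append(np.polyfit(x[idx], y[idx], degree))
    return coefs

def most_uncertain(coefs, pool):
    """Index of the pool candidate on which the ensemble disagrees most."""
    preds = np.array([np.polyval(c, pool) for c in coefs])
    return int(np.argmax(preds.std(axis=0)))

rng = np.random.default_rng(1)
x_lab = np.linspace(0.0, 0.5, 8)                       # compositions already measured
y_lab = np.sin(3 * x_lab) + rng.normal(0, 0.05, 8)     # synthetic property values
pool = np.linspace(0.0, 1.0, 101)                      # unmeasured candidates
next_idx = most_uncertain(fit_bootstrap_ensemble(x_lab, y_lab), pool)
```

As expected, the selected candidate lies in the unexplored part of composition space, where a new measurement would be most informative for the model.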

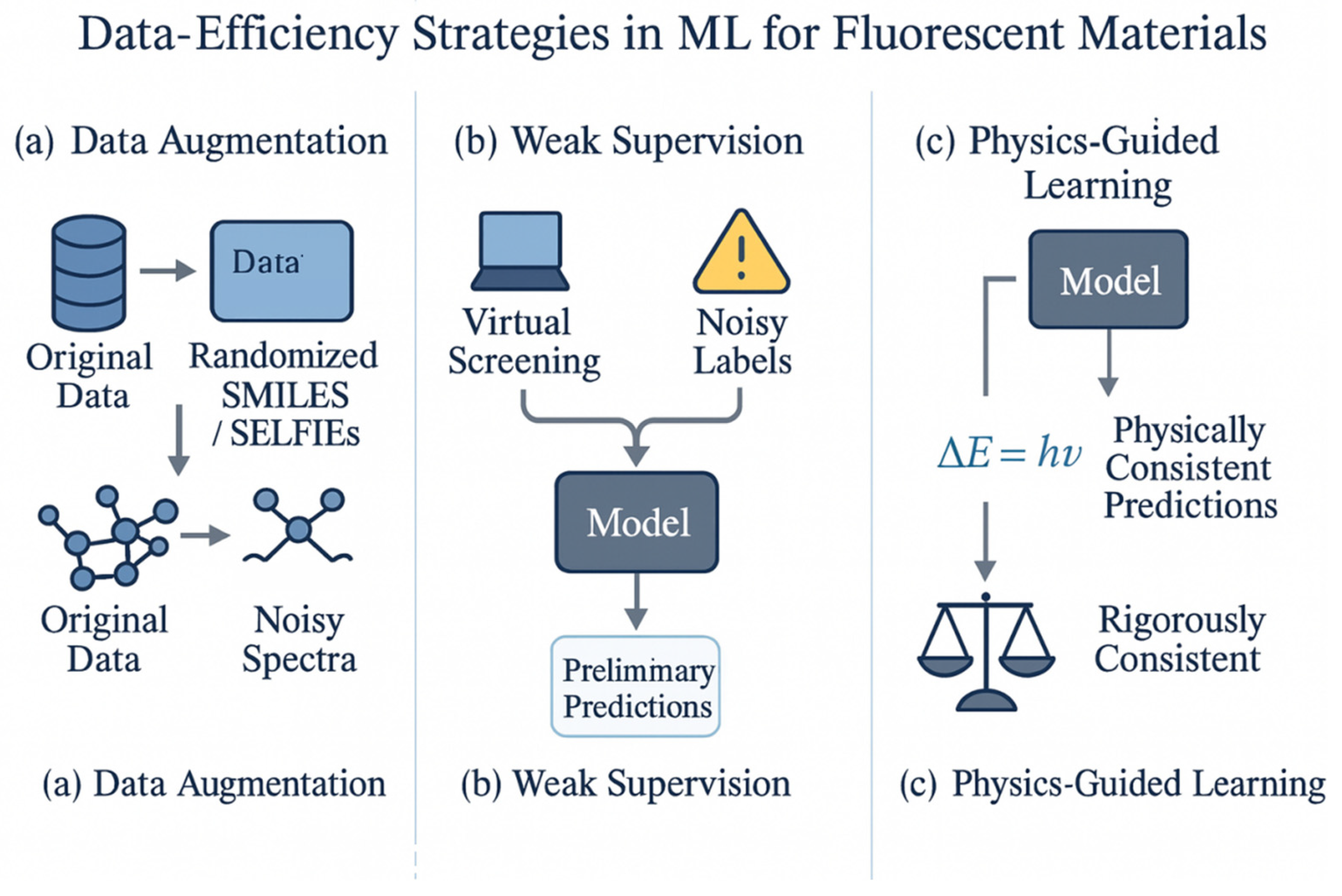

2.5. Data Efficiency Strategies: Augmentation, Weak Supervision, and Physics-Guided Learning

One of the central challenges in applying ML to fluorescent materials is the limited quantity and diversity of high-quality data available for training models. To address this, a number of data-centric strategies have been developed to improve model generalization without requiring massive new experimental datasets. Data augmentation is a first-line approach, wherein the training dataset is artificially expanded by transforming existing data or generating synthetic data that preserves the essential information. In image-based ML, augmentation techniques (like rotating or cropping images) are commonly used. Analogously, for molecular and spectral data, one can create variations that maintain the underlying labels. For example, a popular technique for molecules is SMILES augmentation. A single molecule can be represented by many different but equivalent SMILES strings, because the order in which atoms are traversed when writing the string is arbitrary. By generating multiple randomized SMILES strings for each molecule, one can effectively multiply the dataset size without collecting new experimental data. This practice has been shown to improve model robustness, as the model becomes less sensitive to any particular token ordering and instead learns more general chemical features [62]. Another augmentation method for molecular strings uses SELFIES (Self-Referencing Embedded Strings), an alternative text representation in which any sequence of symbols decodes to a valid molecule. Augmenting training data with slight random mutations of SELFIES strings can introduce novel but still chemically valid samples, which is particularly helpful for preventing overfitting when the original dataset is very small [63,64].
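SMILES enumeration is straightforward with RDKit, assuming it is installed. `Chem.MolToSmiles` accepts a `doRandom=True` flag that starts the atom traversal at a random position. The sketch below uses coumarin as an example. It checks that every randomized string still canonicalizes to the same molecule, which is what makes this augmentation label-preserving.

```python
from rdkit import Chem

def randomized_smiles(smiles, n_variants=5, max_tries=200):
    """Return up to n_variants distinct random (non-canonical) SMILES for one molecule."""
    mol = Chem.MolFromSmiles(smiles)
    if mol is None:
        raise ValueError(f"unparsable SMILES: {smiles}")
    variants = set()
    for _ in range(max_tries):
        variants.add(Chem.MolToSmiles(mol, canonical=False, doRandom=True))
        if len(variants) >= n_variants:
            break
    return sorted(variants)

coumarin = "O=C1C=Cc2ccccc2O1"
augmented = randomized_smiles(coumarin)
# Every variant must map back to the same canonical SMILES, so the label is unchanged
canonical_forms = {Chem.MolToSmiles(Chem.MolFromSmiles(s)) for s in augmented}
```

Each variant would be paired with the original molecule's measured property during training.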

Beyond molecular structure representations, spectral augmentation is also valuable when dealing with optical data. Techniques such as adding random noise to spectra, shifting peak positions, or even mixing parts of different spectra have been employed to simulate measurement variability and broaden the coverage of the training data. For instance, creating several noisy versions of an experimental fluorescence spectrum can teach a model to ignore minor instrumental fluctuations and focus on the true spectral features, thereby improving its robustness in real-world prediction scenarios. Studies have reported that models trained with augmented spectral and structural data yield higher accuracy and better generalization, especially for tasks like predicting photoluminescence quantum yields or near-infrared emission peaks where the initial training data might be very limited [65]. In summary, data augmentation leverages known invariances and noise patterns in the data to supply the model with a richer variety of training examples. This ultimately reduces overfitting and makes the model more trustworthy when predicting fluorescence properties for new materials.
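The following is a minimal spectral-augmentation sketch. It uses a synthetic Gaussian emission band on an assumed 1 nm grid. The noise level, shift range, and intensity-scaling range are hypothetical. One design point matters: when the target is a peak position, a wavelength shift changes the label. The function therefore returns the shifted label alongside the augmented spectrum instead of reusing the original one.

```python
import numpy as np

wl = np.arange(400.0, 701.0, 1.0)                       # assumed 1 nm grid, 400-700 nm
peak_nm = 520.0
spectrum = np.exp(-0.5 * ((wl - peak_nm) / 15.0) ** 2)  # synthetic emission band

def augment_spectrum(spec, label_nm, rng, step_nm=1.0, noise=0.01, max_shift=3, scale=(0.9, 1.1)):
    """Random intensity scaling, small wavelength shift and additive noise.

    np.roll wraps values around the edges, which is harmless only when the
    spectrum is close to zero at both ends (true for this synthetic band).
    """
    k = int(rng.integers(-max_shift, max_shift + 1))
    shifted = np.roll(spec, k)
    augmented = rng.uniform(*scale) * shifted + rng.normal(0.0, noise, size=spec.shape)
    return augmented, label_nm + k * step_nm   # the peak label moves with the shift

rng = np.random.default_rng(0)
augmented = [augment_spectrum(spectrum, peak_nm, rng) for _ in range(5)]
```

Noise and intensity scaling leave a peak-position label unchanged. For an intensity-type label such as a quantum yield, however, the scaling step would itself need to be reconsidered.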

In cases where obtaining perfectly accurate ground-truth labels is particularly difficult, weak supervision offers a way to use imperfect or proxy data to train ML models. Weak supervision is an umbrella term for methods that learn from noisy, partially correct, or indirectly obtained labels instead of relying solely on small amounts of pristine data. In the context of fluorescent materials, this could mean using theoretical or semi-empirical calculations as provisional labels for training, or mining the scientific literature for reported fluorescence values that carry some uncertainty. For example, one strategy is to generate labels computationally for high-throughput virtual screening. One could calculate thousands of approximate excitation energies with DFT-based methods such as time-dependent DFT (TD-DFT) and use them as substitutes for experimental absorption maxima [66]. While individual calculated values might have systematic errors, collectively they can provide a useful learning signal across a much larger number of compounds than could be measured experimentally. Another form of weak supervision is distant supervision from published text. Algorithms can scan journal papers or databases to extract mentions of molecules and their fluorescence properties, essentially constructing a rough dataset from the literature (with the understanding that these extracted labels may not be perfectly reliable) [67]. These noisy or proxy labels can then be used to train a model, ideally with techniques that account for label uncertainty. For instance, the model might be trained to predict a distribution of possible property values rather than a single point estimate, reflecting the uncertainty in the training labels.
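A model can predict a distribution instead of a point by outputting a mean and a log-variance and training on the Gaussian negative log-likelihood. The NumPy sketch below uses synthetic noisy proxy labels (hypothetical values in eV). It checks that the closed-form maximum-likelihood mean and variance minimize the loss. A neural network would instead output `mu` and `log_var` for each molecule.

```python
import numpy as np

def gaussian_nll(y, mu, log_var):
    """Mean Gaussian negative log-likelihood of labels y under N(mu, exp(log_var))."""
    return np.mean(0.5 * (np.log(2 * np.pi) + log_var + (y - mu) ** 2 / np.exp(log_var)))

rng = np.random.default_rng(0)
labels = 2.5 + rng.normal(0.0, 0.15, size=200)   # synthetic noisy proxy labels (eV)
mu_hat = labels.mean()                           # maximum-likelihood mean
log_var_hat = np.log(labels.var())               # maximum-likelihood variance (ddof=0)
```

Because the log-variance term is learned, the loss lets the model report larger uncertainty on molecules whose labels come from noisier sources, rather than forcing an overconfident fit.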

Research in related fields has shown that models trained on large, weakly labeled datasets can achieve performance close to that of models trained on a smaller set of clean, high-accuracy labels [68]. A key trick is often to combine multiple weak signals so that they can compensate for one another’s errors. For example, one might combine a simple heuristic based on chemical intuition with a rough predictor; the model is then encouraged to learn the underlying fluorescence property that is consistent with both sources. Semi-supervised learning is another approach in this vein. One can train an initial model on the small set of labeled data, use that model to predict labels for a larger pool of unlabeled data, and iteratively retrain. This process (sometimes called self-training) allows the model’s own predictions on unlabeled fluorescent materials to become additional training data, gradually expanding the effective dataset. Overall, weak supervision methods broaden the training pool by accepting that not all labels are fully accurate. In fluorescent materials discovery, where experiments can be slow and expensive, these methods allow researchers to incorporate cheaper information sources (computational simulations, expert chemical knowledge, existing databases with approximate values) to mitigate data scarcity. The outcome is often a model that, while not as precise as one trained on an enormous perfectly labeled dataset, still captures the general trends and can effectively rank or filter candidate materials for further investigation.
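When weak sources can be treated as independent and unbiased, a simple way to combine them is inverse-variance weighting. That independence and unbiasedness is an assumption: a systematic offset, such as a known DFT bias, would need to be calibrated out first. The two estimates and their uncertainties below are hypothetical.

```python
import numpy as np

def fuse_labels(estimates, variances):
    """Inverse-variance weighted mean of independent, unbiased estimates and its variance."""
    w = 1.0 / np.asarray(variances, dtype=float)
    est = np.asarray(estimates, dtype=float)
    return float(np.sum(w * est) / np.sum(w)), float(1.0 / np.sum(w))

# Hypothetical weak signals for one molecule's emission energy (eV):
# a heuristic estimate (sigma = 0.2 eV) and a bias-corrected TD-DFT value (sigma = 0.1 eV)
fused_value, fused_var = fuse_labels([2.40, 2.55], [0.2**2, 0.1**2])
```

The fused value (2.52 eV) leans toward the more reliable source. Its variance (1/125 = 0.008 eV²) is smaller than either input variance, which is the formal sense in which combined weak signals compensate for one another’s errors.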

A complementary strategy to purely data-driven approaches is physics-guided learning, which integrates domain knowledge from physics or chemistry into the ML model to make more efficient use of limited data. Instead of treating the model as a completely black-box predictor, physics-guided methods introduce known scientific constraints or biases into the model’s structure or training objective so that it respects established principles of fluorescence. This can significantly reduce the amount of data needed, because the model does not have to rediscover fundamental relationships that are already well known to human experts; those relationships are built in from the start. One way to implement this is by adding physical constraint terms to the model’s loss function during training. For example, one could enforce that a model’s predicted emission energy and absorption energy for a molecule never violate the typical range of Stokes shifts observed in practice, or ensure that the model’s predictions obey known selection rules (e.g., assigning lower scores to forbidden transitions that should have negligible oscillator strength). Another approach is to incorporate physics awareness into the feature set or model architecture. For instance, providing the model with physically meaningful input features (such as an estimate of an excited-state lifetime or a quantum yield computed from a simple theoretical formula) can guide it toward the correct relationships more quickly. A clear illustration of physics-guided ML in materials science is the development of physics-informed neural networks for material stability prediction. There, embedding thermodynamic constraints (like phase stability conditions or defect formation energy limits) into the model prevented nonsensical outputs and even enabled the model to extrapolate sensibly into regimes with sparse data [68].
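A physical constraint can be added to a training loss as a soft penalty. The sketch below is written in NumPy for readability; in practice, it would be implemented in an automatic-differentiation framework. It penalizes predicted Stokes shifts outside an assumed window of 0 to 1 eV. The window and the weight `lam` are hypothetical choices. Materials with anti-Stokes emission, such as upconversion phosphors, would need different bounds.

```python
import numpy as np

def physics_guided_loss(e_abs_pred, e_em_pred, e_abs_true, e_em_true, lam=10.0, max_stokes=1.0):
    """MSE on absorption and emission energies plus a Stokes-shift penalty (energies in eV).

    The penalty is zero when 0 <= E_abs - E_em <= max_stokes and grows quadratically
    with the size of the violation otherwise.
    """
    mse = np.mean((e_abs_pred - e_abs_true) ** 2 + (e_em_pred - e_em_true) ** 2)
    stokes = e_abs_pred - e_em_pred
    violation = np.maximum(0.0, -stokes) + np.maximum(0.0, stokes - max_stokes)
    return mse + lam * np.mean(violation**2)

# A physically consistent prediction versus one that emits above its absorption energy
loss_ok = physics_guided_loss(np.array([3.0]), np.array([2.5]), np.array([3.0]), np.array([2.5]))
loss_bad = physics_guided_loss(np.array([3.0]), np.array([3.2]), np.array([3.0]), np.array([2.5]))
```

The second prediction is charged both its ordinary error and an extra penalty for the negative Stokes shift. This extra signal steers the model toward physically consistent outputs even where labels are scarce.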

In fluorescent materials research, investigators have shown the value of physics-guided models for improving predictions under data-scarce conditions. For example, Chen et al. demonstrated that incorporating known radiative and non-radiative decay rate formulas into an ML model for fluorescence quantum yield led to markedly improved accuracy on novel compounds, even when training data were limited. (Notably, the physics-based constraints ensured that the model could never predict a physically impossible quantum yield above 100% or below 0%, since those limits were hard-coded into the model’s allowable outputs.) Another example is hybrid modeling. Here, a few steps of a physics-based simulation (such as a short molecular dynamics run to assess aggregation behavior) are performed for each candidate, and the resulting physical insights (e.g., a metric of aggregation propensity) are fed into the ML predictor. In this way, the ML model benefits from physics-based hints about the system. Physics guidance is also applied in Bayesian optimization and active learning. For instance, incorporating Vegard’s law as a constraint when searching a compositional space of mixed crystals ensured that the ML-guided search only proposed candidates following known composition–property trends [68,69]. The net effect of physics-guided learning is a model that is more data-efficient and often more interpretable. By aligning the ML model with known scientific truths (for example, penalizing it if it violates energy conservation or known monotonic relationships), we effectively narrow the hypothesis space that the model must explore. This means fewer experimental data points are needed for the model to converge on a realistic solution that fits the observations. In fluorescent materials research, where we often juggle sparse data and complex phenomena, physics-guided ML serves as a vital bridge between the predictive power of data-driven algorithms and the reliability of established photophysical theory. It helps ensure that our models not only fit the data we have but also make sense in light of decades of accumulated knowledge in fluorescence science, a crucial factor for the acceptance and success of ML-guided approaches in fluorescent material innovation. As Figure 6 shows, strategies such as transfer learning, data augmentation, and weak supervision effectively mitigate data scarcity.
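The hard 0–100% bound on quantum yield can be enforced by construction. If the model predicts log-rates and a fixed physics layer converts them, the output obeys Φ = k_r/(k_r + k_nr) automatically. This is a generic sketch, not the architecture of the Chen et al. study mentioned above. The function names and the rate values in the usage are hypothetical.

```python
import numpy as np

def quantum_yield(log_kr, log_knr):
    """Phi = k_r / (k_r + k_nr), written as a logistic function of log-rates.

    Any real-valued network outputs give 0 <= Phi <= 1, so yields above 100% or
    below 0% cannot be produced.
    """
    return 1.0 / (1.0 + np.exp(np.asarray(log_knr) - np.asarray(log_kr)))

def lifetime(log_kr, log_knr):
    """Excited-state lifetime tau = 1 / (k_r + k_nr), from the same two outputs."""
    return 1.0 / (np.exp(log_kr) + np.exp(log_knr))

# Hypothetical outputs: k_r = k_nr = 1e8 s^-1
phi = quantum_yield(np.log(1e8), np.log(1e8))   # 0.5
tau = lifetime(np.log(1e8), np.log(1e8))        # about 5 ns
```

A useful side effect of this design is that the same two outputs also yield a lifetime. Measured lifetimes can therefore serve as extra training targets, tying the model to the underlying photophysics.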

In conclusion, data-efficiency strategies such as augmentation, weak (or semi-) supervision, and physics-guided learning are enabling machine learning models to thrive even in the data-constrained scenarios typical of fluorescent materials research. These approaches extend and enrich the available information, mitigate overfitting, and enforce scientific consistency, thereby significantly enhancing the reliability and applicability of ML predictions for luminescent materials. Through the smart use of such strategies, researchers can extract maximal value from every experimental data point and confidently apply ML models to discover and optimize the next generation of fluorescent materials.

3. Machine Learning for Fluorescent Materials Across Systems

3.1. AIE Luminogens

Aggregation-induced emission (AIE) luminogens have emerged as a pivotal class of fluorescent materials due to their unique restriction of intramolecular motion (RIM) mechanism, which enables strong solid-state emission and has broad applications in bioimaging, sensing, and optoelectronics [70]. The application of machine learning (ML) in this field not only accelerates the discovery of novel AIE-active molecules but also provides mechanistic insights into emission processes that are challenging to capture by conventional trial-and-error or quantum chemical approaches.

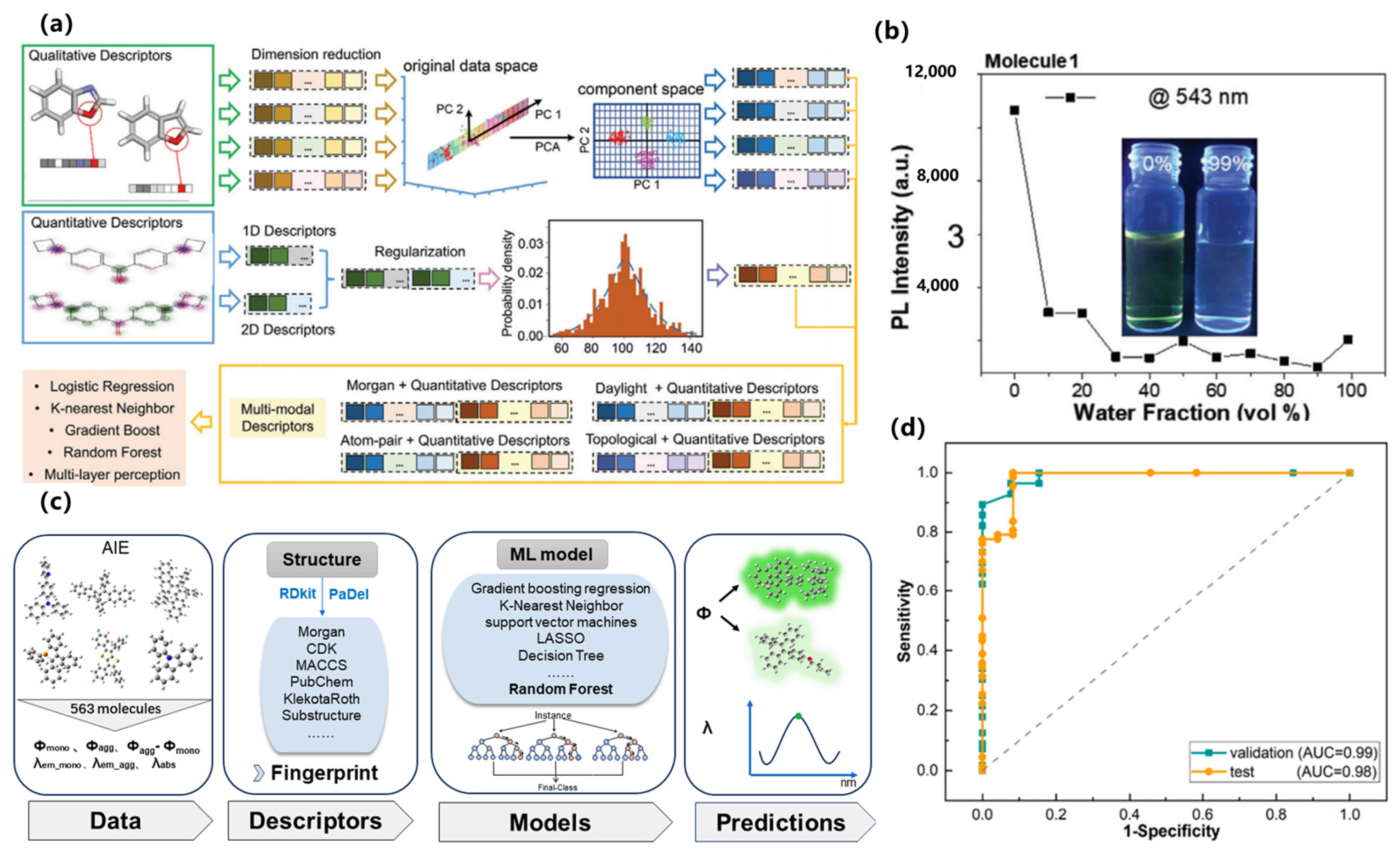

In terms of key modeling techniques, supervised learning and attention-based models have been widely applied to predict quantum yields, emission wavelengths, and mechanistic features of AIE luminogens. For example, Qiu et al. developed one of the earliest QM-ML hybrid approaches to distinguish AIE from aggregation-caused quenching (ACQ) molecules, successfully identifying RIM-related descriptors as critical predictive features [70]. Xu et al. subsequently introduced ML-assisted modeling to predict molecular optical properties upon aggregation, combining experimental and DFT-derived descriptors to enhance predictive accuracy [71], as shown in Figure 7a,b. More recently, Bi et al. employed advanced regression techniques to quantitatively predict both quantum yields and emission wavelengths, offering systematic guidelines for molecular design, as shown in Figure 7c,d [72].

With respect to emerging architectures, novel ML paradigms such as graph neural networks (GNNs) and photodynamics-informed frameworks have been adopted to address AIE complexity. Wang et al. applied ML to photodynamics simulations, uncovering non-radiative pathways blocked by aggregation that drive high luminescence and providing an interpretable link between structure and emission performance [73]. Peng et al. reported a ground-state descriptor–based virtual screening framework that leveraged interpretable ML to identify mechanofluorochromic AIE molecules, demonstrating the power of descriptor engineering in high-throughput discovery [74]. In addition, Zhang et al. extended ML models to AIE-active metal–organic frameworks (MOFs), revealing how ligand-level features control ensemble emission [75].

Regarding data efficiency strategies, multiple approaches have been explored to overcome the challenge of limited labeled AIE datasets. Zhao et al. demonstrated a weak-supervision strategy by integrating literature-mined data with curated experimental datasets to develop robust ML predictors for organic AIE materials [76]. Dave et al. highlighted multimodal learning by combining synthetic design features, photophysical measurements, and biological performance metrics to guide AIE molecular discovery in biomedical contexts [77]. Zhang et al. further showed that ML-assisted screening can significantly reduce the experimental burden by prioritizing candidates with strong fluorescence properties from large molecular pools [78]. Taken together, machine learning has enabled AIE research to transition from empirical optimization toward knowledge-driven discovery. The integration of supervised prediction, graph-based architectures, and data-efficient learning strategies has not only accelerated screening but also provided mechanistic interpretability, underscoring its role as a cornerstone for the rational design of next-generation AIE luminogens.

3.2. Thermally Activated Delayed Fluorescence (TADF) Emitters

Thermally activated delayed fluorescence (TADF) materials have attracted extensive attention as next-generation emitters for organic light-emitting diodes (OLEDs), owing to their capability of harvesting both singlet and triplet excitons through reverse intersystem crossing (RISC), thereby achieving near-unity internal quantum efficiency [79,80]. The design of efficient TADF molecules, however, involves balancing frontier orbital separation, singlet–triplet energy gaps (ΔE_ST), and charge-transfer character, which renders conventional quantum chemical screening both computationally intensive and limited in scope. Machine learning (ML) has thus emerged as a transformative approach to accelerate the rational design and optimization of TADF emitters [81,82].

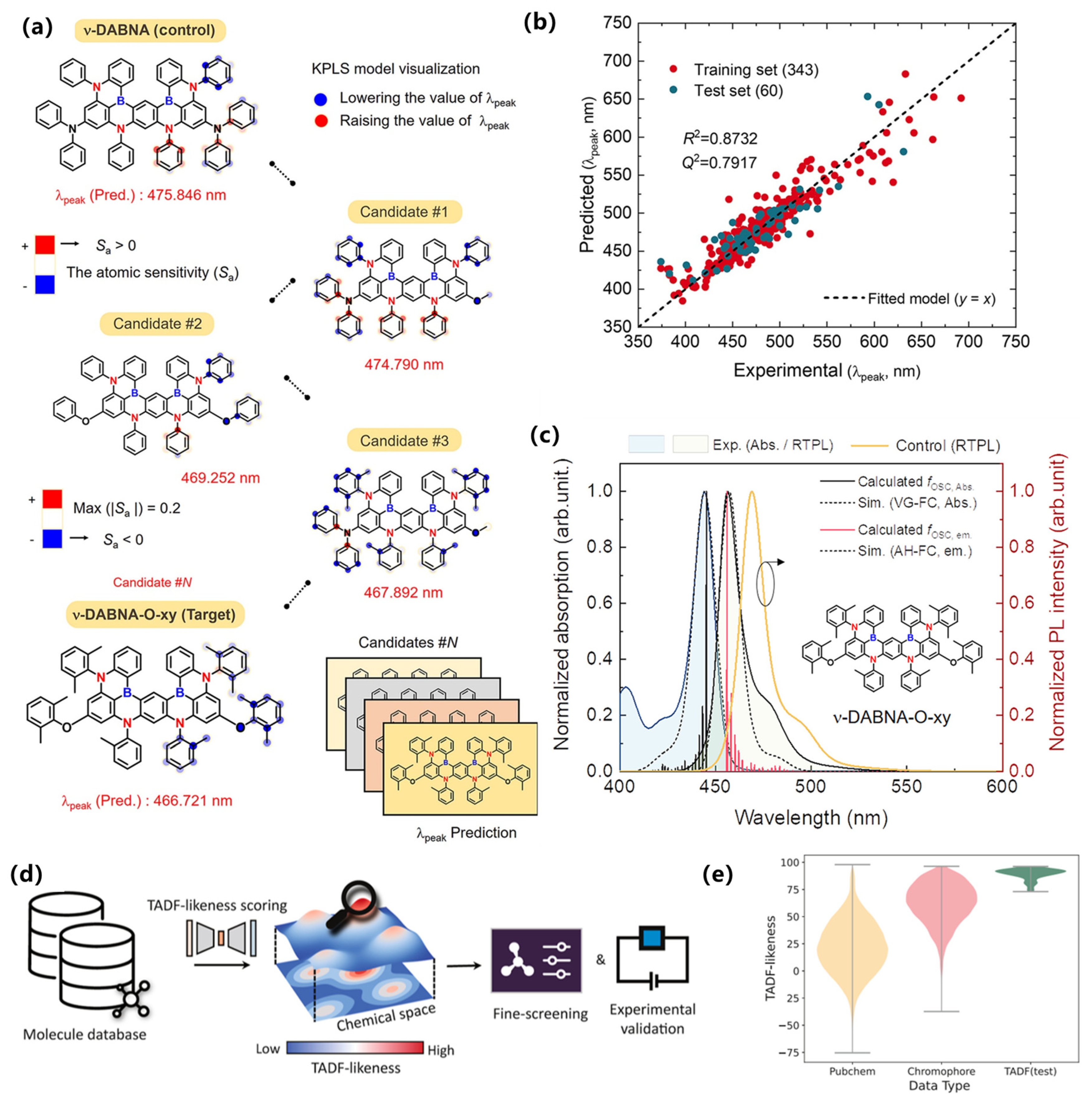

In terms of key modeling techniques, early ML efforts have largely employed supervised regression or classification using handcrafted features in TADF materials. For example, Shi et al. (2022) compiled a ~300-point database of TADF OLEDs with descriptors including photoluminescence quantum yield (PLQY), singlet–triplet gap (ΔE_ST), emission wavelength, host polarity, etc., and applied multiple algorithms (linear regression, random forest, neural nets, XGBoost) to predict external quantum efficiency (EQE) [

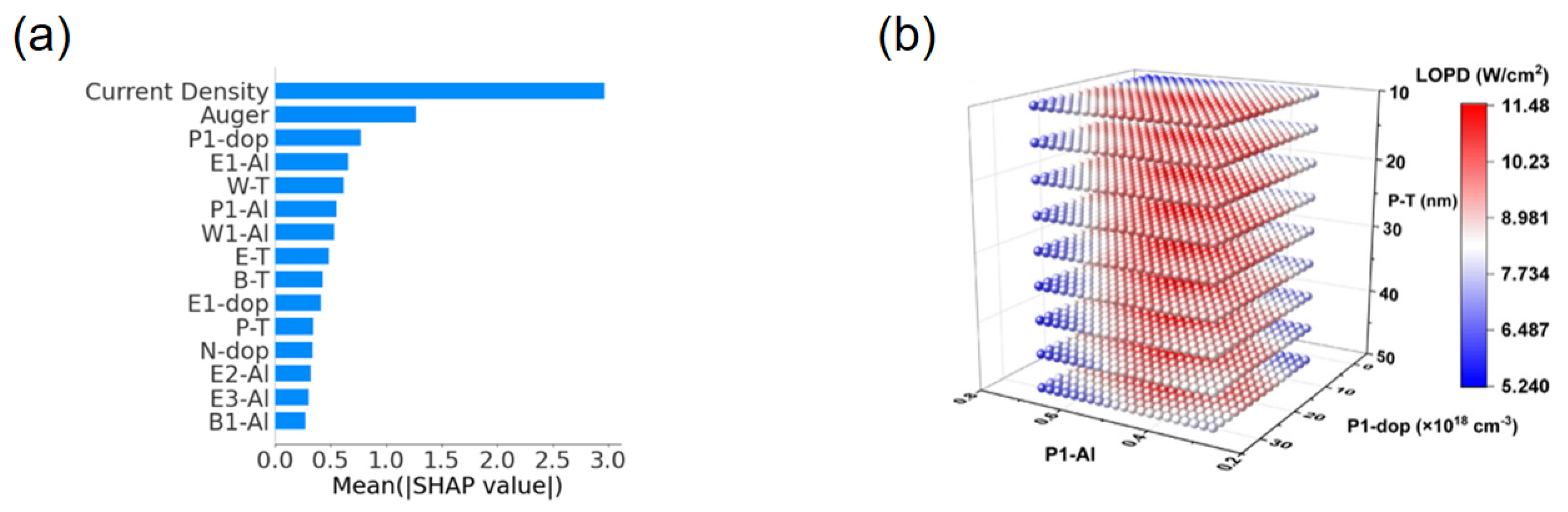

83]. They found PLQY, emission wavelength and ΔE_ST to be the most important features governing EQE, as revealed by feature-importance analysis (e.g., RF/XGBoost). Similarly, Bu and Peng (2023) built an ML–QM high-throughput screening workflow for TADF (combining DFT calculations with an ML model) to flag promising emitters [

29]. Complementing these approaches, an ML-QSPR workflow targeting multiresonant deep-blue systems nominated ν-DABNA-O-xy; subsequent synthesis and device testing confirmed narrowband emission and high efficiency, validating the in silico selection, as shown in

Figure 8a–c [

84].

Beyond mere prediction, ML has successfully guided the experimental discovery of novel TADF emitters. For instance, Bu and Peng employed ML-assisted high-throughput virtual screening to identify 384 promising candidates from over 44,000 molecules, with subsequent quantum chemical validation confirming excellent TADF properties [85]. Going further, Shi et al. carried the pipeline through to experimental synthesis and characterization. They designed a TADF molecule with an integrated ML workflow that was successfully synthesized and exhibited high performance in devices [86]. These cases exemplify a complete ML-guided pipeline, from in silico prediction to experimental validation, that dramatically accelerates the discovery cycle. In parallel, a deep-learning chemical-similarity metric (“TADF-likeness”) has been introduced to pre-filter very large libraries and enrich downstream QSAR modeling, improving virtual-screening hit rates, as shown in Figure 8d,e [87]. Likewise, Bu and Peng (2023) used ML-assisted virtual screening to rapidly rank candidate TADF emitters, accelerating discovery by focusing on a narrowed chemical space [29]. Graph neural networks (GNNs) and generative models have been used for related luminescent materials, but the TADF literature remains dominated by tree-based regressors and conventional neural networks. Multi-task learning (e.g., jointly predicting lifetime and efficiency) or Bayesian optimization could be applied to TADF design in future work.

Data-efficiency strategies have been less explored for TADF to date. The ML models above typically rely on published datasets and DFT-computed features, but only a few hundred data points exist. For example, Shi et al. note that experimental TADF data are sparse and non-IID, so they employ regularized models (ridge, Lasso) to mitigate overfitting [88]. In principle, active learning or physics-informed constraints (e.g., embedding rate equations for RISC) could reduce data needs, as has been done in other luminescent systems, although specific TADF cases are not yet reported. Overall, ML-guided TADF design has shown promise in targeting key photophysical descriptors, but more sophisticated data-efficient schemes (active learning, multi-fidelity ML) remain a future opportunity.
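RISC is thermally activated, which is one piece of physics that could be embedded in a TADF model. In a simple Arrhenius-type picture, its rate scales as exp(−ΔE_ST/k_BT). The sketch below computes this relative factor. Using it as an input feature or as a monotonicity constraint in a TADF model is a hypothetical suggestion. More complete treatments, such as Marcus-type rate expressions that include spin–orbit coupling, would replace this single factor.

```python
import numpy as np

K_B_EV = 8.617333262e-5  # Boltzmann constant in eV/K

def risc_boltzmann_factor(delta_est_ev, temperature_k=300.0):
    """Relative RISC rate factor exp(-dE_ST / k_B T) in a simple Arrhenius-type picture."""
    return np.exp(-np.asarray(delta_est_ev, dtype=float) / (K_B_EV * temperature_k))

# Hypothetical singlet-triplet gaps (eV) spanning small to large values
factors = risc_boltzmann_factor([0.0, 0.05, 0.1, 0.3])
```

At 300 K, k_BT is about 0.026 eV, so shrinking ΔE_ST from 0.3 eV to 0.05 eV raises this factor by several orders of magnitude. This steep dependence is why ΔE_ST appears among the dominant descriptors in the studies above.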

Figure 8.

(a) Interpretable model visualization from the radial-based KPLS regression model for λ_peak prediction. (b) Scatter plot of the continuous model results, showing training/test-set λ_peak predictions. Reproduced from Ref. [84] with permission from the American Association for the Advancement of Science. (c) Absorption spectrum of ν-DABNA-O-xy and fluorescence spectra of the target and control molecules in toluene (concentration, 0.05 mM). (d) Overview of using the TADF-likeness score as a prefilter for high-throughput virtual screening. (e) Violin plots of TADF-likeness scores on various datasets. Reproduced from Ref. [87] with permission from the American Chemical Society.


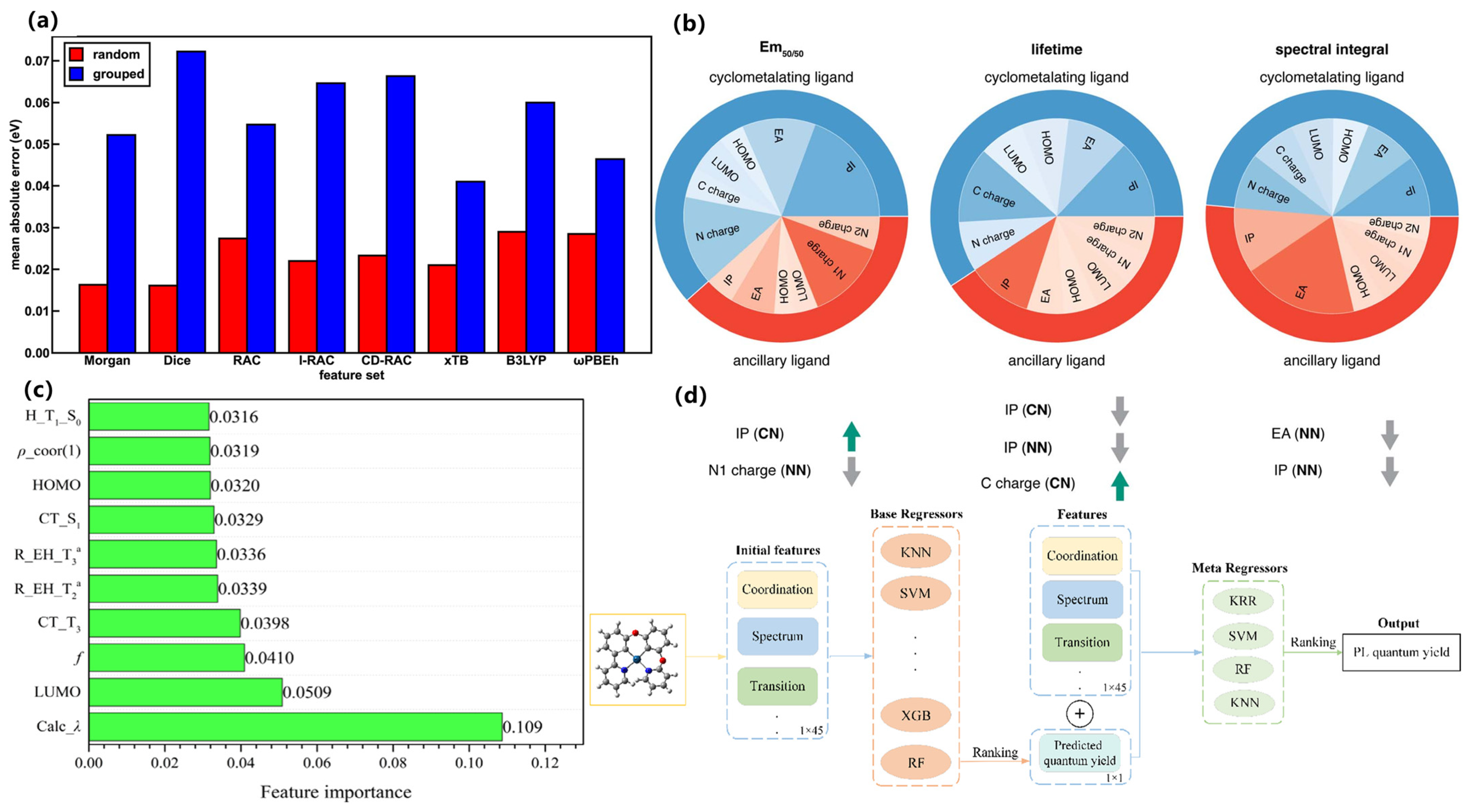

3.3. Rare Earth–Doped Inorganic Phosphors (e.g., Ce/Eu3+)

Rare earth–doped inorganic phosphors, particularly those activated by Ce³⁺ and Eu³⁺ ions, are indispensable in solid-state lighting and display applications due to their high quantum efficiency, spectral tunability, and chemical stability [89].

89]. However, optimizing luminescent properties such as emission wavelength, energy transfer efficiency, and thermal quenching typically requires laborious experimental synthesis and characterization. Machine learning (ML) methods have thus been introduced to accelerate the discovery and rational design of high-performance phosphors by efficiently mapping the complex relationships between host lattices, dopant environments, and photophysical outcomes.

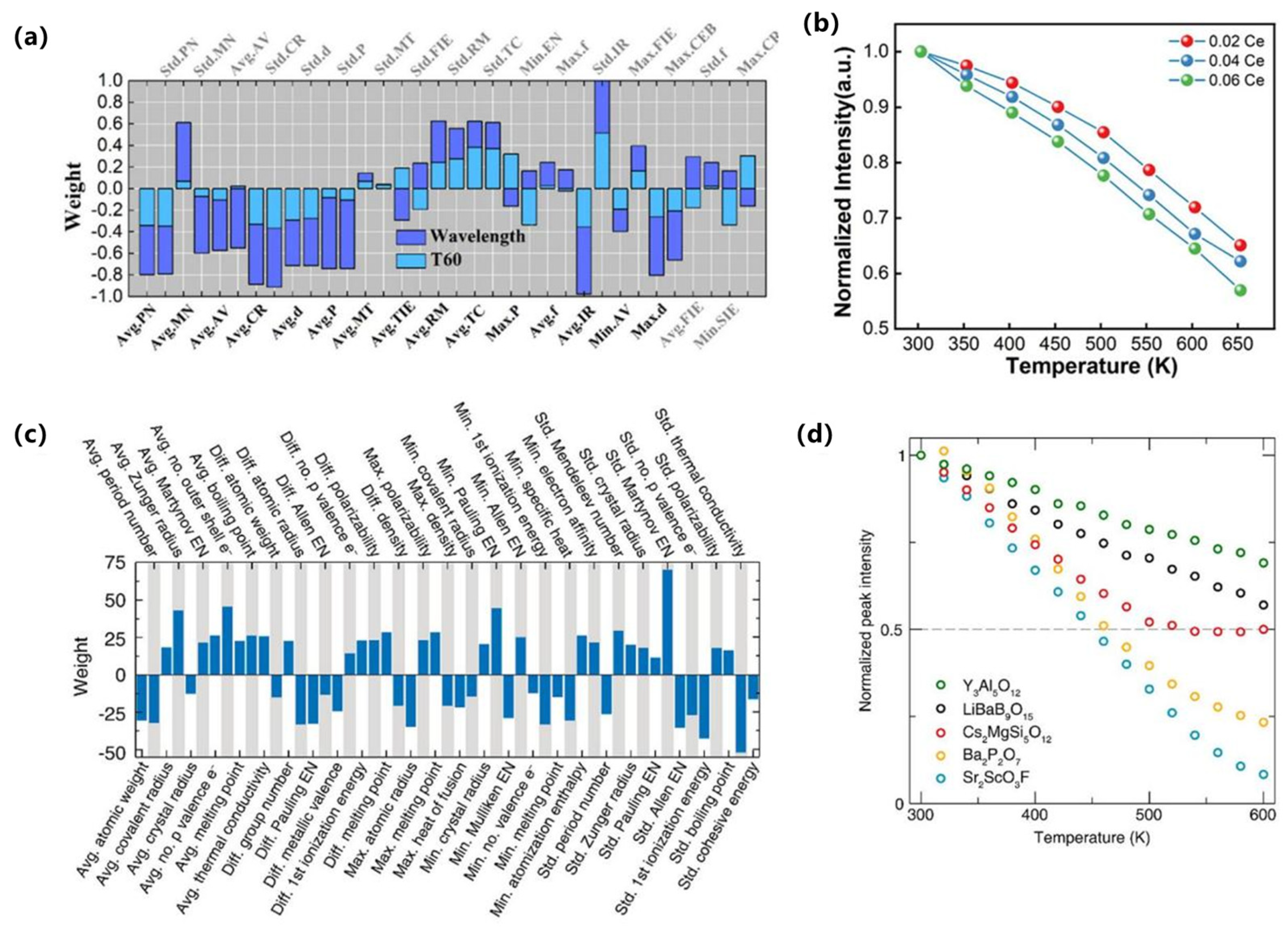

Among supervised learning approaches, regression and classification models have been widely employed to predict luminescence intensity, color coordinates, and thermal stability. For example, Park et al. reported a data-driven platform for Eu2+ phosphors that predicts band gap, excitation, and emission energies from 29 host descriptors; on these modestly sized datasets, regularized ridge/lasso regressors outperformed unregularized regressors and shallow artificial neural networks (ANNs) [90]. Otsuka et al. reported a model for Eu3+-activated perovskites that predicts the intensity ratio $\Lambda = I(^{5}D_{0}\rightarrow{}^{7}F_{2})/I(^{5}D_{0}\rightarrow{}^{7}F_{1})$ from chemical and structural features (Otsuka, 2024) [91]. Because the electric-dipole $^{5}D_{0}\rightarrow{}^{7}F_{2}$ transition is hypersensitive to the local site symmetry, whereas the magnetic-dipole $^{5}D_{0}\rightarrow{}^{7}F_{1}$ transition is largely insensitive to it, predicting $\Lambda$ provides a handle for controlling the emission hue via site asymmetry. For Ce3+ systems, Zhuo et al. reported that crystal-field–motivated descriptors (ionic radii and charges) accurately estimate the 5d-level centroid shift $\varepsilon_c$ across hosts (Zhuo, 2020) [92]. In addition, Zhuo et al. quantified feature–target correlations and temperature-resilience benchmarks in Ce3+-doped garnets, providing a quantitative baseline for ML-guided stability optimization, as shown in Figure 9a,b [93].
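To make the ratio concrete, the sketch below computes $\Lambda$ from an emission spectrum by integrating the two bands. It is a minimal illustration, not Otsuka et al.'s pipeline: the integration windows (585–600 nm for $^{5}D_{0}\rightarrow{}^{7}F_{1}$ and 605–630 nm for $^{5}D_{0}\rightarrow{}^{7}F_{2}$) are assumed typical values that should be adjusted to the host, and the helper names `band_area`, `asymmetry_ratio`, and `gaussian_band` are hypothetical.

```python
import math

def band_area(wavelengths, intensities, lo, hi):
    """Trapezoidal area of the spectrum between lo and hi (nm), using only samples inside the window."""
    pts = [(w, i) for w, i in zip(wavelengths, intensities) if lo <= w <= hi]
    return sum(0.5 * (i0 + i1) * (w1 - w0) for (w0, i0), (w1, i1) in zip(pts, pts[1:]))

def asymmetry_ratio(wavelengths, intensities,
                    ed_window=(605.0, 630.0),   # assumed 5D0 -> 7F2 (electric-dipole) window
                    md_window=(585.0, 600.0)):  # assumed 5D0 -> 7F1 (magnetic-dipole) window
    """Lambda = I(5D0->7F2) / I(5D0->7F1), computed from integrated band areas."""
    md = band_area(wavelengths, intensities, *md_window)
    if md <= 0:
        raise ValueError("no 5D0->7F1 intensity in the chosen window")
    return band_area(wavelengths, intensities, *ed_window) / md

def gaussian_band(wavelengths, center, amplitude, sigma):
    """Synthetic Gaussian band, used here only to build a test spectrum."""
    return [amplitude * math.exp(-0.5 * ((w - center) / sigma) ** 2) for w in wavelengths]

if __name__ == "__main__":
    wl = [570.0 + 0.5 * k for k in range(161)]  # 570-650 nm grid, 0.5 nm step
    spectrum = [a + b for a, b in zip(gaussian_band(wl, 592.0, 1.0, 2.0),
                                      gaussian_band(wl, 614.0, 3.0, 2.0))]
    print(round(asymmetry_ratio(wl, spectrum), 2))
```

A value of $\Lambda$ well above 1 indicates a low-symmetry Eu3+ site dominated by the redder electric-dipole emission, whereas values below 1 point to a near-centrosymmetric site. A model that predicts $\Lambda$ from host features reduces this measurement to a validation step.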

Regarding emerging architectures, deep or graph-based models remain less common than classical regressors for Ce/Eu phosphors; nevertheless, Otsuka et al. further reported spectrum-level agreement between predicted and measured profiles, evidencing end-to-end fidelity from descriptors to optical response (Otsuka, 2024) [91]. Ding et al. reported hierarchical clustering that delineates host families conducive to targeted emission profiles, offering a data-driven map for navigating composition space, as shown in Figure 9c,d [94]. These advances complement supervised baselines by adding spectral validation and unsupervised structure discovery, respectively.
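Hierarchical clustering of host descriptors can be sketched in a few lines. The example below assumes that each host is already encoded as a pre-scaled numeric descriptor vector (the two-dimensional coordinates and host names are hypothetical) and uses average linkage with Euclidean distance; the linkage, metric, and descriptors used by Ding et al. may differ.

```python
import math
from itertools import combinations

def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def average_linkage(points, n_clusters):
    """Agglomerative clustering with average linkage; returns clusters as sorted index lists."""
    clusters = [[i] for i in range(len(points))]
    while len(clusters) > n_clusters:
        best = None
        for (ia, a), (ib, b) in combinations(enumerate(clusters), 2):
            # Average linkage: mean pairwise distance between members of the two clusters.
            d = sum(euclidean(points[i], points[j]) for i in a for j in b) / (len(a) * len(b))
            if best is None or d < best[0]:
                best = (d, ia, ib)
        _, ia, ib = best
        merged = clusters[ia] + clusters[ib]
        clusters = [c for k, c in enumerate(clusters) if k not in (ia, ib)] + [merged]
    return sorted(sorted(c) for c in clusters)

if __name__ == "__main__":
    # Hypothetical, pre-scaled host descriptors (e.g., mean bond length, coordination number).
    hosts = {"host_A": (0.10, 0.20), "host_B": (0.12, 0.18),
             "host_C": (0.85, 0.90), "host_D": (0.80, 0.95)}
    names = list(hosts)
    groups = average_linkage([hosts[n] for n in names], n_clusters=2)
    print([[names[i] for i in g] for g in groups])
```

Cutting the merge sequence at different cluster counts yields coarser or finer host families; labeling each family with its measured emission range turns the clustering into the kind of composition-space map described above.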

To address data scarcity and poor generalization on non-IID datasets, Park et al. used explicit regularization (ridge/lasso) to stabilize inference and extract robust trends (Park, 2021) [90]. Koyama et al. reported a practical ML–experiment loop in which a classifier first predicts the Eu oxidation state (+2 vs. +3) before targeted synthesis, yielding twelve Eu2+ phosphors from thirteen trials (Koyama, 2024) [95]. Zhuo et al. reported physics-guided feature construction that embeds crystal-field intuition into Ce3+ predictors, improving interpretability and sample efficiency (Zhuo, 2020) [92]. Zhang et al. used GPT-4 to extract Eu2+ phosphor data from 274 papers and trained a crystal graph convolutional neural network (CGCNN) on the extracted data to predict emission wavelengths (test R2 = 0.77). This illustrates a workflow in which ML itself assembles the training dataset used for subsequent discovery, drastically reducing manual curation effort [96]. Collectively, these strategies move the field beyond black-box regression toward interpretable, feedback-rich pipelines that shorten the path from compositional hypotheses to verified Ce/Eu emitters, as shown in Figure 9a–d [94].
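The stabilizing effect of ridge regularization can be illustrated with the closed-form estimate $w = (X^\top X + \lambda I)^{-1} X^\top y$. This pure-Python sketch assumes centered descriptors and targets (so no intercept is fitted) and covers only the ridge (L2) case; lasso needs an iterative solver such as coordinate descent and is not shown. It is not Park et al.'s implementation, and the demo data are hypothetical.

```python
def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [[float(v) for v in row] + [float(b[i])] for i, row in enumerate(A)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[piv][col]) < 1e-12:
            raise ValueError("matrix is singular or nearly singular")
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x

def ridge_fit(X, y, lam):
    """Ridge weights w = (X^T X + lam*I)^-1 X^T y; assumes centered features and target."""
    p = len(X[0])
    XtX = [[sum(row[i] * row[j] for row in X) + (lam if i == j else 0.0) for j in range(p)]
           for i in range(p)]
    Xty = [sum(row[i] * yi for row, yi in zip(X, y)) for i in range(p)]
    return solve(XtX, Xty)

if __name__ == "__main__":
    # Two nearly collinear (hypothetical) descriptors measured on four hosts; columns and y are centered.
    X = [[-1.5, -1.49], [-0.5, -0.52], [0.5, 0.51], [1.5, 1.50]]
    y = [-1.4, -0.6, 0.55, 1.45]
    for lam in (0.0, 0.1, 1.0):
        print(lam, [round(w, 3) for w in ridge_fit(X, y, lam)])
```

Increasing `lam` shrinks the weight vector. With nearly collinear host descriptors, which are common when many structural features are computed for few compounds, this keeps the coefficients from taking large, opposite-signed values that merely fit noise.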

3.4. Transition-Metal–Doped Phosphors (e.g., Mn4+/Cr3+/Fe3+)

Transition-metal–doped phosphors, typically activated by Mn4+, Cr3+, or Fe3+ ions, have attracted significant attention for applications in wide-color-gamut displays, plant lighting, and near-infrared bioimaging [97,98,99]. Compared with the shielded 4f–4f transitions of trivalent rare-earth ions such as Eu3+, the d–d transitions of transition metals involve unshielded 3d electrons and are therefore highly sensitive to local coordination environments and lattice distortions. Depending on the ion and the crystal-field strength, the emission ranges from narrow spin-forbidden lines (e.g., the $^{2}E\rightarrow{}^{4}A_{2}$ emission of Mn4+) to broad spin-allowed bands (e.g., the $^{4}T_{2}\rightarrow{}^{4}A_{2}$ emission of Cr3+ in weak crystal fields). This sensitivity, while beneficial for tunable emission, presents major challenges for rational design and performance optimization. Machine learning (ML) has therefore emerged as a powerful approach for unraveling structure–property relationships and guiding the targeted synthesis of high-efficiency transition-metal phosphors.

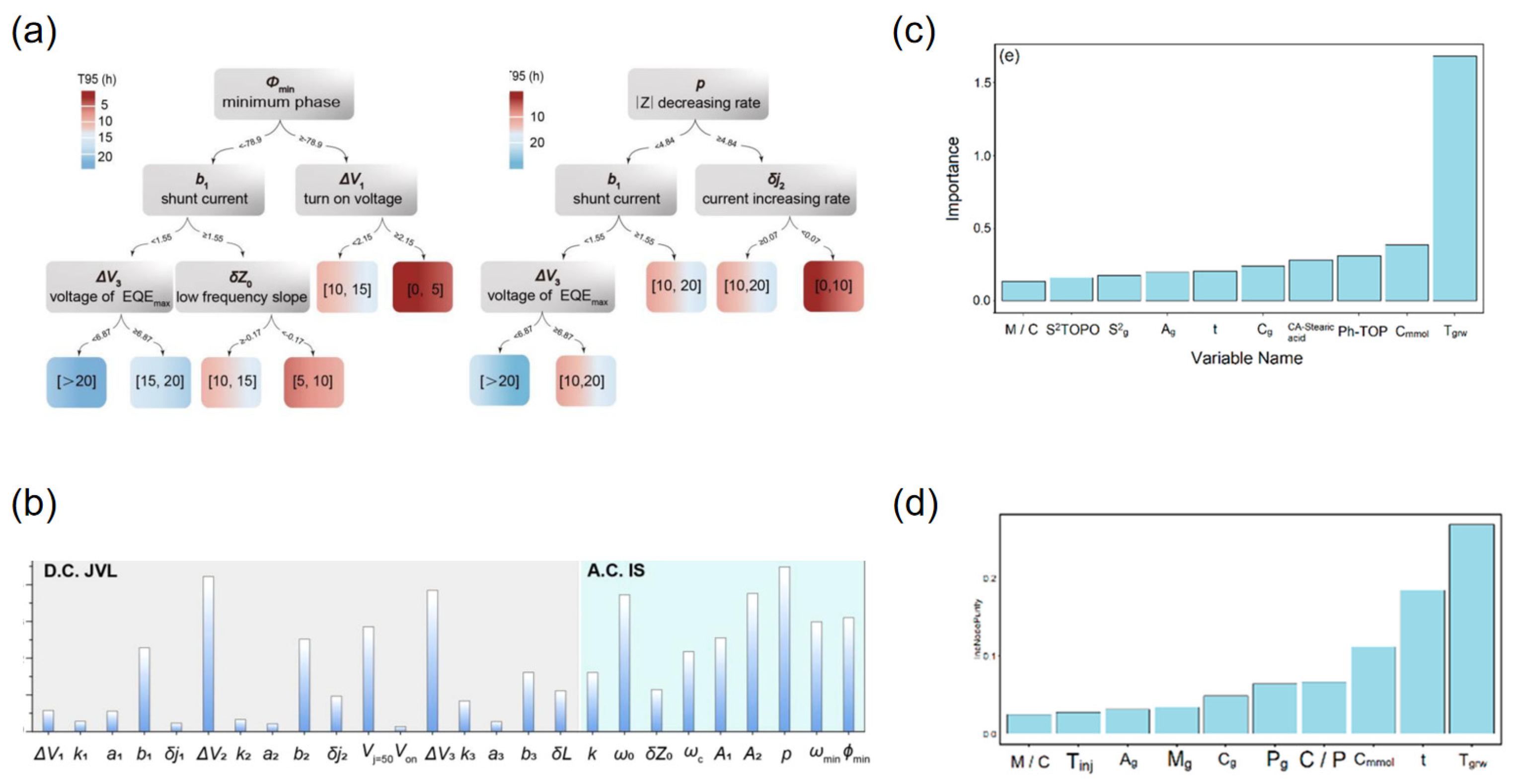

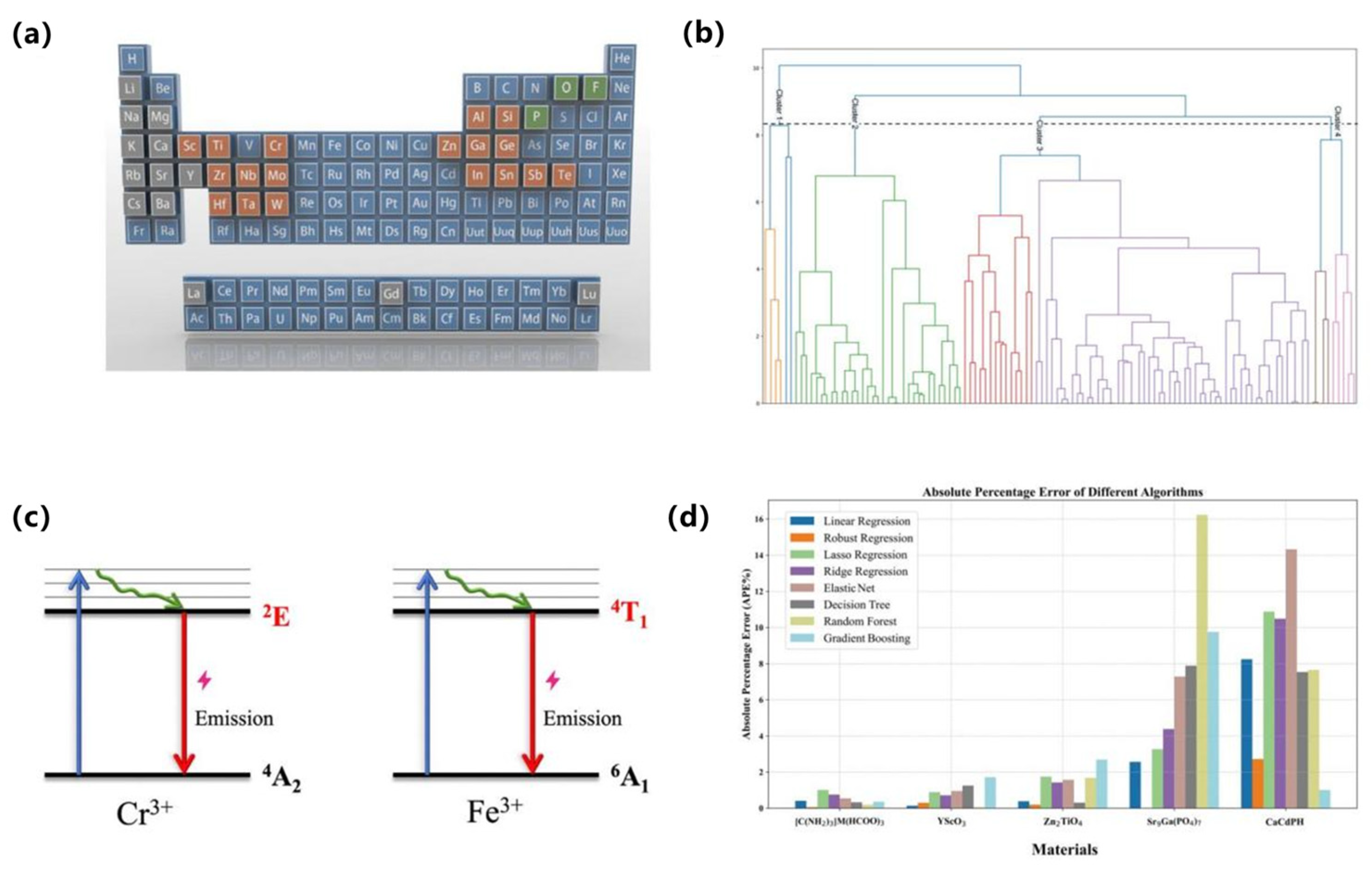

In terms of key modeling techniques, Ding et al. developed supervised models for Mn4+ hosts and, using recursive feature elimination over 32 structural descriptors, built a k-nearest-neighbor (KNN) regressor that accurately predicts the peak emission wavelength from sparse literature data, as shown in Figure 10a,b [100]. For Cr3+/Fe3+ systems, Li et al. reported a regression workflow that learns the $^{2}E$ and $^{4}T_{1}$ level positions across oxide hosts with ≈1% error, enabling host–dopant tuning of NIR/red output, as shown in Figure 10c,d [101]. Together, these studies show that carefully engineered crystal-field and lattice descriptors enable reliable forward prediction across Mn/Cr/Fe activators.
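A minimal sketch of KNN regression combined with wrapper-style feature elimination follows. Because a KNN regressor exposes no coefficients, this version greedily removes the descriptor whose deletion does not increase the leave-one-out mean absolute error; this is an assumed variant of recursive feature elimination, not necessarily the procedure of Ding et al. Descriptors are assumed to be pre-scaled, and the demo data are synthetic.

```python
import math

def knn_predict(train_X, train_y, x, k, feats):
    """Mean target of the k nearest training rows, using only the selected feature indices."""
    dists = sorted(
        (math.sqrt(sum((row[f] - x[f]) ** 2 for f in feats)), yi)
        for row, yi in zip(train_X, train_y)
    )
    nearest = dists[:k]
    return sum(yi for _, yi in nearest) / len(nearest)

def loo_mae(X, y, k, feats):
    """Leave-one-out mean absolute error of the KNN regressor."""
    total = 0.0
    for i in range(len(X)):
        pred = knn_predict(X[:i] + X[i + 1:], y[:i] + y[i + 1:], X[i], k, feats)
        total += abs(pred - y[i])
    return total / len(X)

def backward_elimination(X, y, k=3, min_feats=1):
    """Drop features one at a time while the LOO error does not increase; stop when every removal hurts."""
    feats = list(range(len(X[0])))
    best_err = loo_mae(X, y, k, feats)
    while len(feats) > min_feats:
        err, drop = min(
            (loo_mae(X, y, k, [f for f in feats if f != d]), d) for d in feats
        )
        if err > best_err:
            break
        best_err = err
        feats.remove(drop)
    return feats, best_err

if __name__ == "__main__":
    # Synthetic data: feature 0 drives the emission peak (nm), feature 1 is an irrelevant descriptor.
    X = [[float(i), float(((i * 7) % 10) * 3)] for i in range(12)]
    y = [600.0 + 5.0 * i for i in range(12)]
    print(backward_elimination(X, y, k=3))
```

Once a descriptor subset is fixed, the same `knn_predict` can score an unlabeled candidate list, which is then sorted by the distance of each predicted wavelength from a target so that only the top few candidates are synthesized.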

With respect to emerging architectures and algorithms, Ding et al. reported a model-comparison suite (KNN, support vector regression (SVR), random forest (RF), etc.), highlighting nonparametric and ensemble learners as robust baselines for small, heterogeneous datasets [100]. Complementing this, Wang et al. reported a random-forest pipeline trained on known Mn4+ fluorides; feature-importance analysis guided the synthesis of Cs2NaAlF6:Mn4+ with ultra-narrow 628 nm emission and 99.7% color purity, validating ML-informed design experimentally [102].

Regarding data-efficiency strategies, Ding et al. mitigated data scarcity by screening 278 ICSD-derived candidates with their KNN model and then synthesizing only the six top predictions, all of which were confirmed experimentally, thereby maximizing the information gained per experiment [100]. Li et al. achieved robustness to noisy, limited labels by favoring regularized/robust regressors that preserved sub-1% errors in the predicted spectral levels [101]. Wang et al. reported an ML-triaged synthesis loop in which RF-ranked hosts were prioritized for fabrication, shortening the path from hypothesis to validated Mn4+ emitters [102]. Collectively, supervised feature engineering (Ding; Li), resilient learners, and selective validation (Wang) constitute a practical playbook for accelerating Mn4+/Cr3+/Fe3+ phosphor discovery, as shown in Figure 10a–d.

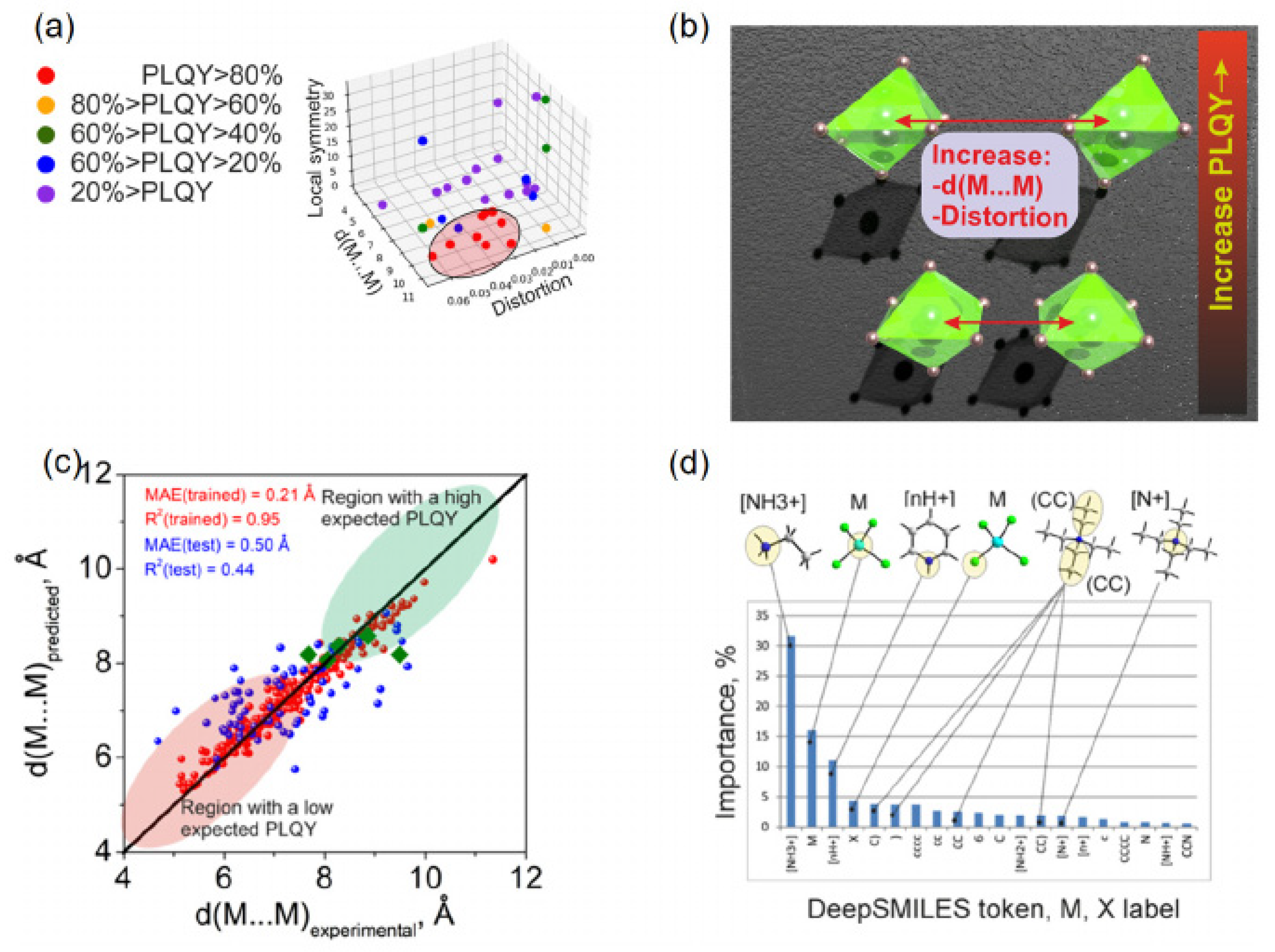

3.5. Perovskite Luminescent Materials

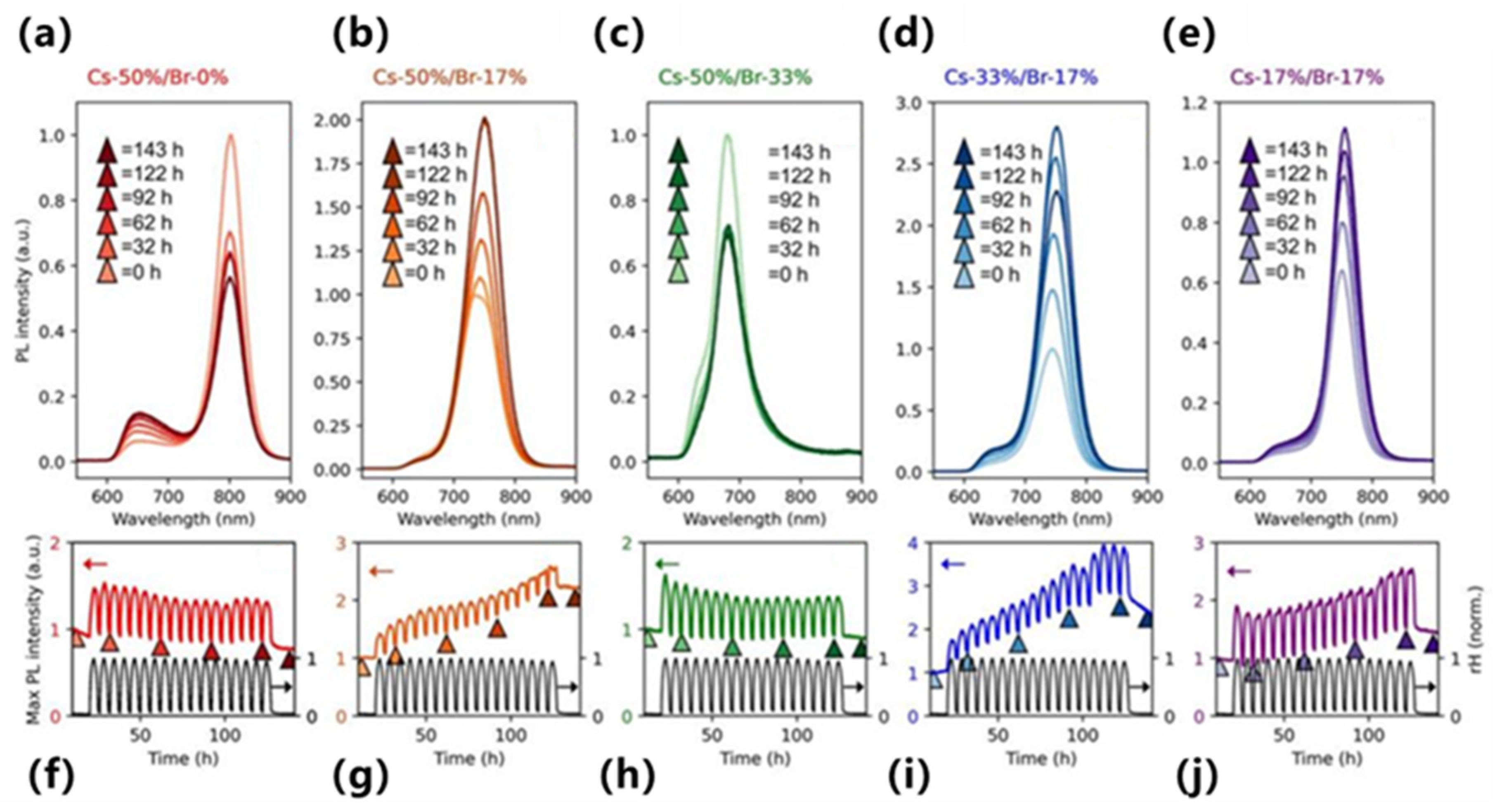

Perovskite emitters, including colloidal CsPbX3 (X = Cl/Br/I) nanocrystals and layered hybrids, offer high photoluminescence quantum yield (PLQY) and a tunable band gap but are sensitive to composition and processing. ML is increasingly used to map composition, processing, and structure to emission color, yield, and stability in a data-efficient manner that supports rational design.

In terms of key modeling techniques, Cakan et al. reported an interpretable workflow that links time-evolving photoluminescence (PL) and spectral descriptors of triple-halide films to durability and implements a regression–Bayesian predictor under light/heat stress [103]. Wu et al. introduced a data-driven band-gap resource to analyze halide segregation and trained predictors that generalize across compositions, enabling band-gap/emission trend forecasting from heterogeneous literature and computed data [104]. A physics-guided structured Gaussian-process (GP) surrogate with chemically informed mean functions was reported to improve band-gap targeting with calibrated uncertainty, directly informing emission-color design [105].
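The role of a chemically informed mean function can be shown with a one-dimensional Gaussian-process sketch. The GP models only the residual $y - m(x)$, so its posterior mean is $m(x_*) + k_*^\top (K + \sigma_n^2 I)^{-1}(y - m(X))$ and reverts to $m(x_*)$, rather than to zero, away from the data. The linear (Vegard-like) prior between two end-member band gaps, the kernel hyperparameters, and the composition variable are all illustrative assumptions, not the parameterization of the cited work.

```python
import math

def rbf(a, b, length=0.2, variance=0.05):
    """Squared-exponential kernel; hyperparameters are fixed illustrative values, not fitted."""
    return variance * math.exp(-0.5 * ((a - b) / length) ** 2)

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [[float(v) for v in row] + [float(b[i])] for i, row in enumerate(A)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x

def vegard_mean(x, eg_a=2.30, eg_b=1.70):
    """Hypothetical prior mean: linear interpolation between end-member band gaps (eV)."""
    return (1.0 - x) * eg_a + x * eg_b

def gp_predict(X, y, x_star, mean_fn, noise=1e-4):
    """Posterior mean and variance at x_star for a GP with a non-zero prior mean."""
    K = [[rbf(a, b) + (noise if i == j else 0.0) for j, b in enumerate(X)] for i, a in enumerate(X)]
    resid = [yi - mean_fn(xi) for xi, yi in zip(X, y)]  # GP models only the deviation from the prior
    alpha = solve(K, resid)
    k_star = [rbf(x_star, xi) for xi in X]
    mu = mean_fn(x_star) + sum(k * a for k, a in zip(k_star, alpha))
    v = solve(K, k_star)
    var = rbf(x_star, x_star) - sum(k * vi for k, vi in zip(k_star, v))
    return mu, max(var, 0.0)

if __name__ == "__main__":
    X = [0.0, 0.5, 1.0]    # hypothetical halide fraction
    y = [2.30, 1.90, 1.70]  # hypothetical measured band gaps (eV), with bowing at x = 0.5
    for xs in (0.25, 0.5, 0.75):
        print(xs, gp_predict(X, y, xs, vegard_mean))
```

Kernel hyperparameters are fixed here for brevity; in practice they would be fitted by maximizing the marginal likelihood, and the predictive variance is what supplies the calibrated uncertainty mentioned above.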

With respect to emerging architectures and algorithms, Lampe et al. combined supervised models with Bayesian optimization to steer CsPbBr3 nanoplatelet syntheses from precursor-ratio inputs, tuning the PL maximum toward cyan–green emission, as shown in Figure 11a,b [106]. Gu et al. introduced a graph neural network (GNN)-based synthesizability classifier that combines positive-unlabeled (PU) learning with transfer learning and achieves high out-of-sample true-positive rates, enabling high-throughput triage of feasible halide perovskites prior to optical screening [107]. At the platform scale, Omidvar et al. coupled ML screening with robotic synthesis and high-throughput characterization to accelerate exploration of solid-solution spaces in a closed loop from proposals to measured properties, as shown in Figure 11c,d [108].
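A common way to turn a positive-unlabeled classifier into calibrated probabilities is the Elkan–Noto correction, sketched below. It assumes that the labeled positives (known synthesized compounds) are selected completely at random from all synthesizable ones, and that some classifier already provides scores $g(x) = P(\text{labeled} \mid x)$; the function names and candidate labels are hypothetical, and Gu et al.'s GNN-based formulation may differ.

```python
def label_frequency(heldout_positive_scores):
    """Estimate c = P(labeled | truly positive) as the mean score on held-out labeled positives."""
    if not heldout_positive_scores:
        raise ValueError("need at least one held-out labeled positive")
    return sum(heldout_positive_scores) / len(heldout_positive_scores)

def pu_probability(score, c):
    """Convert a labeled-vs-unlabeled score g(x) into P(y=1 | x) = g(x) / c, clipped to 1."""
    return min(score / c, 1.0)

def triage(candidates, c, threshold=0.5):
    """Keep candidates whose calibrated synthesizability probability reaches the threshold, best first."""
    ranked = sorted(((pu_probability(s, c), name) for name, s in candidates.items()), reverse=True)
    return [(name, p) for p, name in ranked if p >= threshold]

if __name__ == "__main__":
    c = label_frequency([0.4, 0.5, 0.6])  # classifier scores on held-out known perovskites
    scores = {"cand_A": 0.35, "cand_B": 0.10, "cand_C": 0.45}  # hypothetical unlabeled candidates
    print(triage(scores, c))
```

Dividing by $c$ compensates for the fact that the classifier was trained to recognize "labeled", not "synthesizable"; without it, every unreported but feasible composition would be systematically underscored.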

Regarding data-efficiency strategies, a microfluidic auto-meta-learner (AMML) with coiled-flow reactors was used to synthesize cesium lead halide nanocrystals at room temperature, leveraging meta-learning to reach target emission with few experiments [109]. An active meta-learning study in J. Chem. Phys. combined uncertainty-aware learners with autonomous selection to prioritize informative candidates, reducing the simulation and experimental effort needed for band-gap ranking (and, by extension, emission color) [110]. Physics-driven GP surrogates with crystal-chemistry-aware priors demonstrated data-efficient band-gap optimization with calibrated uncertainty; practical stability mapping under humidity cycling is shown in Figure 12 [111]. Beyond guiding emitter synthesis, ML models also excel at predicting fundamental structural properties that underpin optical performance. Alfares et al. employed Gaussian process regression (GPR) to predict the lattice constants of ABX3 perovskites with high accuracy (R2 > 0.99) from basic elemental descriptors such as ionic radii and electronegativity [112]. This capability allows researchers to virtually screen and down-select promising perovskite compositions with desired structural parameters before undertaking resource-intensive experimental synthesis or DFT calculations, accelerating the discovery pipeline for novel optoelectronic materials.

In summary, interpretable/physics-guided surrogates, GNN-aided feasibility screening, and Bayesian/active/meta-learning are converging into closed-loop workflows that predict and optimize PL color and yield with minimal measurements, enabling inverse-design strategies for perovskite emitters.
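Uncertainty-driven candidate selection, the core of the active-learning loops described above, can be sketched with a bootstrap ensemble: the next experiment goes to the candidate on which the ensemble members disagree most. This is plain uncertainty sampling with an assumed one-dimensional linear model, not the meta-learning or GP-based procedures of the cited studies; all names and data are hypothetical.

```python
import random
import statistics

def fit_line(xs, ys):
    """Ordinary least-squares line (intercept, slope); None if all x values coincide."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    if sxx == 0:
        return None
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    return (my - b * mx, b)

def bootstrap_ensemble(xs, ys, n_models=200, seed=0):
    """Fit one line per bootstrap resample; resamples with a single distinct x are redrawn."""
    if len(set(xs)) < 2:
        raise ValueError("need at least two distinct x values")
    rng = random.Random(seed)
    models = []
    while len(models) < n_models:
        idx = [rng.randrange(len(xs)) for _ in xs]
        fit = fit_line([xs[i] for i in idx], [ys[i] for i in idx])
        if fit is not None:
            models.append(fit)
    return models

def pick_most_uncertain(models, pool):
    """Return the pool candidate with the largest ensemble standard deviation."""
    def spread(x):
        return statistics.pstdev([a + b * x for a, b in models])
    return max(pool, key=spread)

if __name__ == "__main__":
    xs = [0.0, 0.25, 0.5, 0.75, 1.0]  # hypothetical composition variable
    ys = [2 * x + e for x, e in zip(xs, [0.1, -0.2, 0.15, -0.05, 0.1])]
    models = bootstrap_ensemble(xs, ys)
    print(pick_most_uncertain(models, [0.5, 1.5, 3.0]))
```

After the selected candidate is measured, it is appended to `xs` and `ys`, the ensemble is refitted, and the loop repeats until the experimental budget is exhausted.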

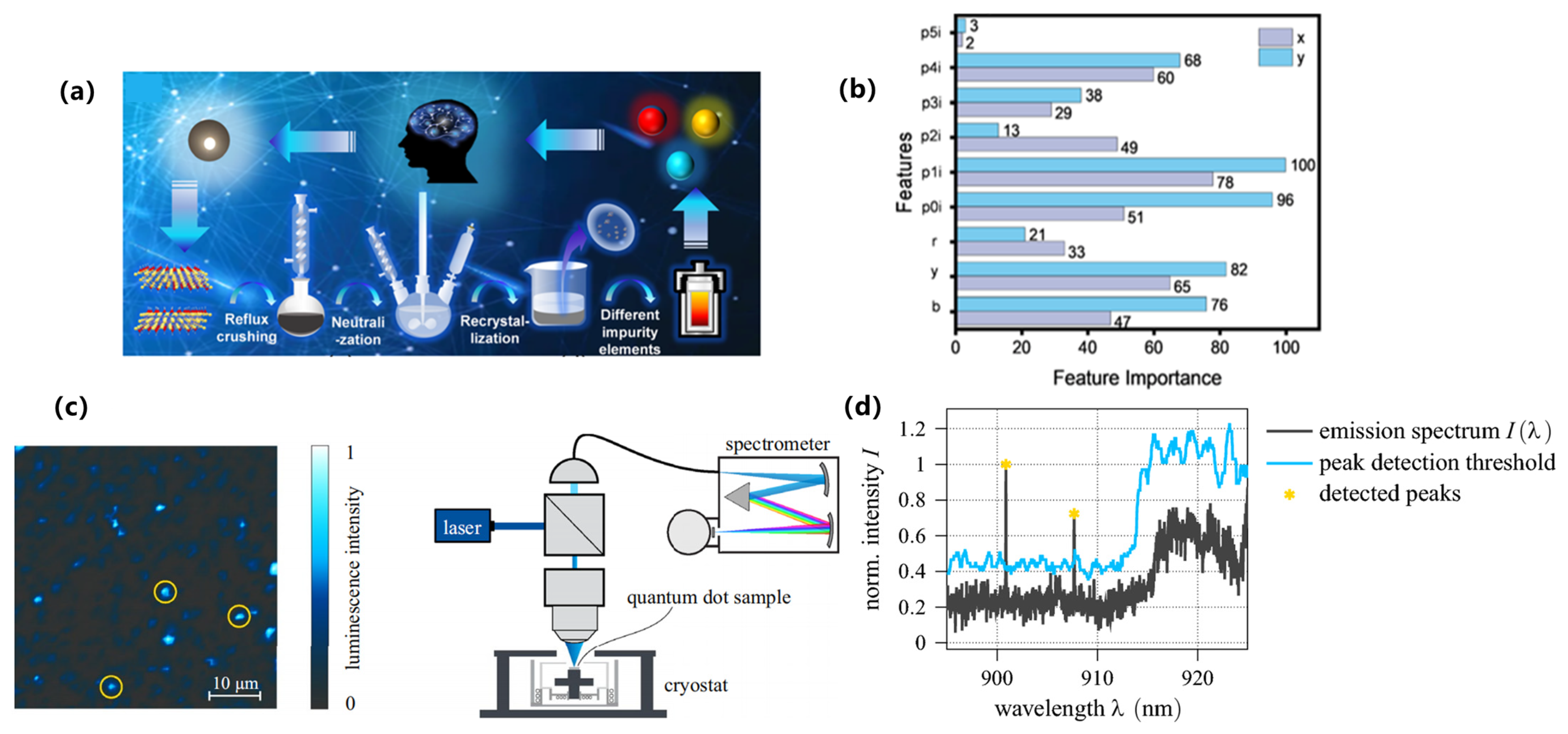

3.6. Other Organic/Small-Molecule Fluorescent Materials

Small-molecule fluorophores underpin sensing, bioimaging, and display applications, yet diverse backbones, conformational ensembles, and solvent/polarity effects complicate rational optimization of emission color, quantum yield, and Stokes shift. Machine learning (ML) provides data-driven mappings from molecular structure and environment to spectral outputs, enabling faster triage and design.

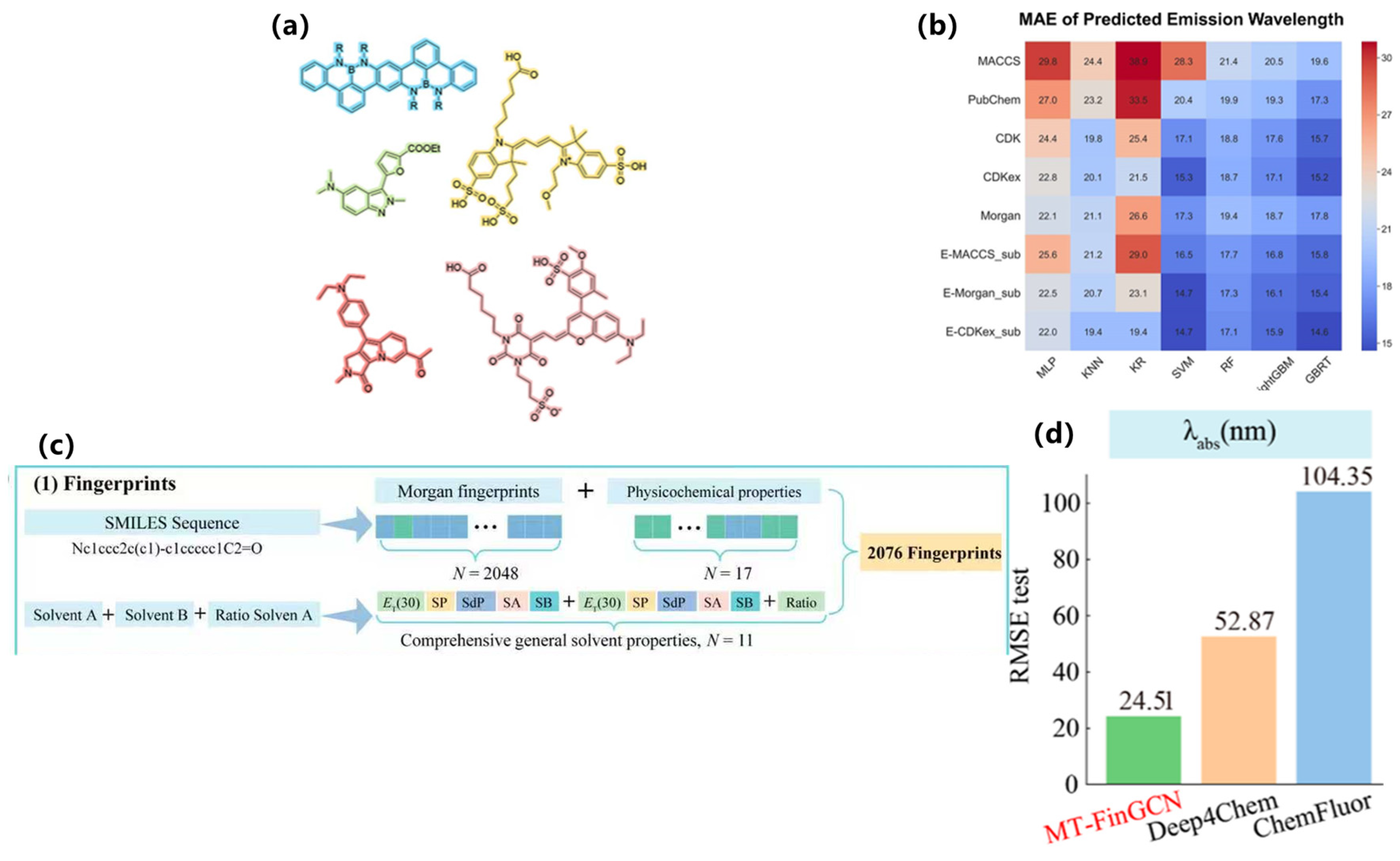

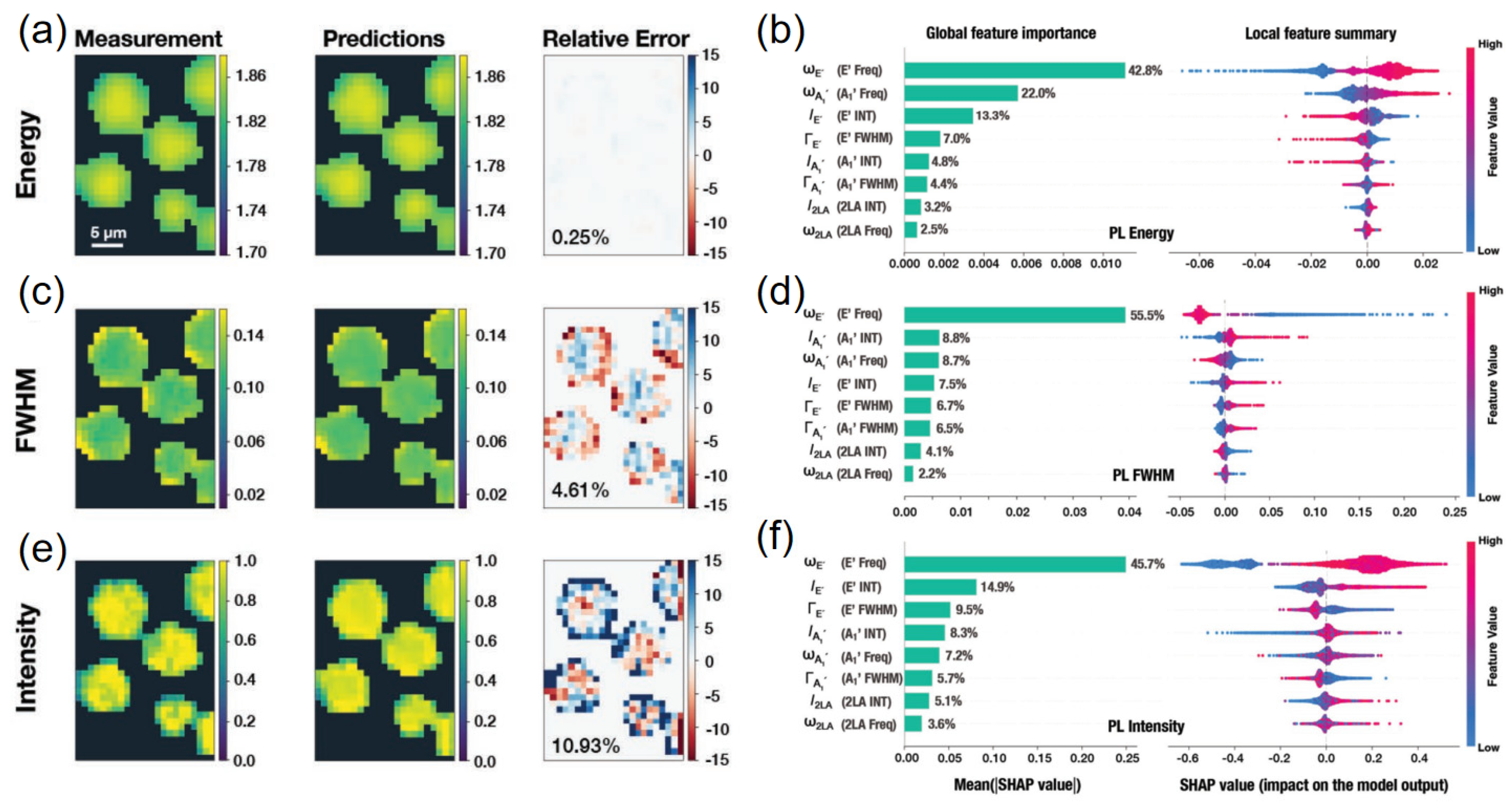

In terms of key modeling techniques, supervised and interpretable pipelines now predict fluorescence metrics directly from structure and solvent descriptors. Souza et al. trained models on the Deep4Chem corpus (20,236 molecule–solvent combinations) to jointly predict emission wavelengths and quantum yields (QYs), showing robust generalization across chemotypes and media [113]. Mahato et al. developed hybrid ensemble regressors over 3066 organic dyes to estimate absorption/emission wavelengths and QY in a single workflow, illustrating how model stacking improves accuracy for multi-property prediction [114]. For mechanistic transparency, Chebotaev et al. applied QSPR/ML to BODIPY photosensitizers to predict the ratio of fluorescence to singlet-oxygen generation and ranked the key descriptors (e.g., electronic and topological terms) that govern the competition between radiative and photochemical channels [115]. As shown in Figure 13a,b, model–descriptor combinations for organic dyes yield accurate emission-wavelength predictions across the curated dye database [65].
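Model stacking of the kind described for multi-property dye prediction reduces to one idea: out-of-fold predictions from base learners are combined by a meta-learner. In this sketch the base learners (a straight-line fit and a constant-mean baseline), the single scalar descriptor, and the least-squares blending weights without intercept are assumptions chosen for brevity; Mahato et al.'s hybrid ensemble is not reproduced here.

```python
def fit_linear(xs, ys):
    """Base learner 1: least-squares straight line."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    a = my - b * mx
    return lambda x: a + b * x

def fit_mean(xs, ys):
    """Base learner 2: constant prediction equal to the training mean."""
    m = sum(ys) / len(ys)
    return lambda x: m

def out_of_fold(xs, ys, fitter, n_folds):
    """Predict each point with a model trained on the other folds (index modulo n_folds)."""
    preds = [0.0] * len(xs)
    for f in range(n_folds):
        train = [i for i in range(len(xs)) if i % n_folds != f]
        model = fitter([xs[i] for i in train], [ys[i] for i in train])
        for i in range(len(xs)):
            if i % n_folds == f:
                preds[i] = model(xs[i])
    return preds

def stack_weights(p1, p2, ys):
    """Meta-learner: solve the 2x2 normal equations for y ~ w1*p1 + w2*p2."""
    a11 = sum(u * u for u in p1)
    a12 = sum(u * v for u, v in zip(p1, p2))
    a22 = sum(v * v for v in p2)
    b1 = sum(u * y for u, y in zip(p1, ys))
    b2 = sum(v * y for v, y in zip(p2, ys))
    det = a11 * a22 - a12 * a12
    return (b1 * a22 - a12 * b2) / det, (a11 * b2 - a12 * b1) / det

def fit_stack(xs, ys, n_folds=5):
    """Learn blending weights on out-of-fold predictions, then refit base models on all data."""
    w_lin, w_mean = stack_weights(out_of_fold(xs, ys, fit_linear, n_folds),
                                  out_of_fold(xs, ys, fit_mean, n_folds), ys)
    lin, mean = fit_linear(xs, ys), fit_mean(xs, ys)
    return (w_lin, w_mean), (lambda x: w_lin * lin(x) + w_mean * mean(x))

if __name__ == "__main__":
    xs = [float(i) for i in range(10)]   # hypothetical descriptor
    ys = [3.0 * x + 1.0 for x in xs]     # hypothetical property
    weights, predict = fit_stack(xs, ys)
    print(weights, predict(20.0))
```

Using out-of-fold rather than in-sample predictions prevents the meta-learner from simply rewarding whichever base model overfits most; here the weight correctly moves almost entirely onto the linear learner.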

With respect to emerging architectures and algorithms, new model classes support inverse design and richer structure–property learning. Han et al. introduced a generative deep-learning framework to design small organic fluorophores with target optical properties, demonstrating constrained generation guided by learned structure–optics rules [110]. Jung et al. combined deep residual convolutional neural networks (CNNs) with solvent encoding to predict peak optical absorption from SMILES, offering an architecture readily extensible to fluorescence endpoints and solvent effects [116]. At the probe level, Xiang et al. applied ML-assisted design to xanthene-type Si-rhodamine systems, quantitatively linking substituent patterns to pH responsiveness and in situ imaging signal-to-noise ratio (SNR), and used the model to guide the synthesis of a high-performance probe [117]. As shown in Figure 13c,d, Wang et al. demonstrated that the NiRFluor multitask FinGCN platform markedly improves multi-endpoint prediction for NIR small-molecule fluorophores [118].
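Solvent-aware models need the solvent to enter the input alongside the molecule. The sketch below concatenates a molecular bit vector with a one-hot solvent code. The hashed character n-gram fingerprint is a toy stand-in for a proper cheminformatics fingerprint or for the learned SMILES encoding of a residual CNN, and the solvent vocabulary and function names are hypothetical.

```python
import hashlib

SOLVENTS = ["toluene", "dichloromethane", "acetonitrile", "ethanol", "water"]  # hypothetical vocabulary

def ngram_fingerprint(smiles, n_bits=64, n=3):
    """Toy bit vector: set one bit per hashed character n-gram of the padded SMILES string."""
    bits = [0] * n_bits
    padded = f"^{smiles}$"
    for i in range(len(padded) - n + 1):
        gram = padded[i:i + n]
        bits[int(hashlib.sha256(gram.encode()).hexdigest(), 16) % n_bits] = 1
    return bits

def solvent_one_hot(solvent):
    """One-hot solvent code with an extra final slot for solvents outside the vocabulary."""
    vec = [0] * (len(SOLVENTS) + 1)
    vec[SOLVENTS.index(solvent) if solvent in SOLVENTS else len(SOLVENTS)] = 1
    return vec

def encode_pair(smiles, solvent, n_bits=64):
    """Model input for one molecule-solvent pair: molecular bits followed by the solvent code."""
    return ngram_fingerprint(smiles, n_bits) + solvent_one_hot(solvent.lower())

if __name__ == "__main__":
    for solv in ("toluene", "water", "hexane"):
        vec = encode_pair("c1ccccc1", solv)
        print(solv, len(vec), vec[64:])
```

The extra "unknown" slot lets the encoder accept solvents outside the vocabulary without failing, and a stable hash (SHA-256) is used because Python's built-in `hash` for strings is randomized between runs.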

Regarding data-efficiency strategies, groups counter sparse, heterogeneous labels by exploiting literature-mined corpora, curated benchmark sets, and physics-guided targets. Zhu et al. built a modular AI framework that taps text-mined optical data (e.g., the ChemFluor database, >4300 solvated fluorophores) to pretrain predictors before task-specific fine-tuning, improving data efficiency for fluorophore discovery [119]. Shao et al. released SMFluo1 (1181 solvated fluorophores) and trained deep models to predict λ_max, establishing solvent-aware baselines that transfer to related fluorescence tasks [120]. Complementing purely statistical learners, Ravasco et al. used physics-grounded multilinear free-energy relationships (mLFER) as a compact surrogate to discover a new BASHY dye with targeted emission, showing how mechanistic priors reduce data needs while preserving designability [121]. Altogether, for general organic fluorophores, key modeling techniques (multi-property supervised learning with interpretable features) deliver reliable, explainable predictions; emerging architectures (generative models, solvent-aware deep networks) enable inverse design across chemotypes; and data-efficiency tactics (literature-mined corpora, curated solvent datasets, physics-guided surrogates) mitigate label scarcity. Together, these advances convert empirical dye tuning into principled, ML-assisted design workflows with clear routes from molecular blueprint to emission performance.
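A multilinear free-energy relationship can be fitted by ordinary least squares. This sketch assumes a Kamlet–Taft-type form, $E_{\mathrm{em}} = E_0 + a\,\alpha + b\,\beta + s\,\pi^*$, with solvent hydrogen-bond-donor ($\alpha$), hydrogen-bond-acceptor ($\beta$), and dipolarity/polarizability ($\pi^*$) parameters. The descriptor values in the demo are placeholders rather than tabulated solvent parameters, and the descriptors used by Ravasco et al. may differ.

```python
def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [[float(v) for v in row] + [float(b[i])] for i, row in enumerate(A)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[piv][col]) < 1e-12:
            raise ValueError("descriptors are linearly dependent")
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x

def fit_mlfer(descriptors, energies):
    """Least-squares fit of E = E0 + a*alpha + b*beta + s*pi_star via the normal equations."""
    rows = [[1.0] + list(d) for d in descriptors]
    p = len(rows[0])
    AtA = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    Atb = [sum(r[i] * e for r, e in zip(rows, energies)) for i in range(p)]
    return solve(AtA, Atb)  # [E0, a, b, s]

def predict_mlfer(coefs, d):
    """Emission energy for one solvent descriptor tuple (alpha, beta, pi_star)."""
    return coefs[0] + sum(c * x for c, x in zip(coefs[1:], d))

if __name__ == "__main__":
    # Placeholder (alpha, beta, pi_star) values for six hypothetical solvents.
    D = [(0.0, 0.0, 0.0), (0.0, 0.1, 0.5), (0.0, 0.7, 1.0),
         (1.0, 0.6, 0.6), (0.2, 0.4, 0.75), (0.8, 0.8, 0.5)]
    E = [2.41, 2.34, 2.19, 2.06, 2.20, 2.11]  # hypothetical emission energies (eV)
    coefs = fit_mlfer(D, E)
    print([round(c, 3) for c in coefs], round(predict_mlfer(coefs, (0.5, 0.5, 0.5)), 3))
```

With only four coefficients, a handful of solvents suffices to fit the model, which is why such physics-grounded surrogates are attractive when labeled data are scarce; the fitted signs and magnitudes also remain chemically interpretable.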

The aforementioned data-driven approaches are widely applicable to various fluorescent materials, among which benzimidazole-based fluorescent probes exhibit strong binding affinity for heavy metal ions and thus can be employed for water quality testing and environmental monitoring [