Neuromorphic Photonics Circuits: Contemporary Review

Abstract

1. Introduction

2. Neuromorphic Computing Market Size

3. Neuromorphic Photonic Integrated Circuits

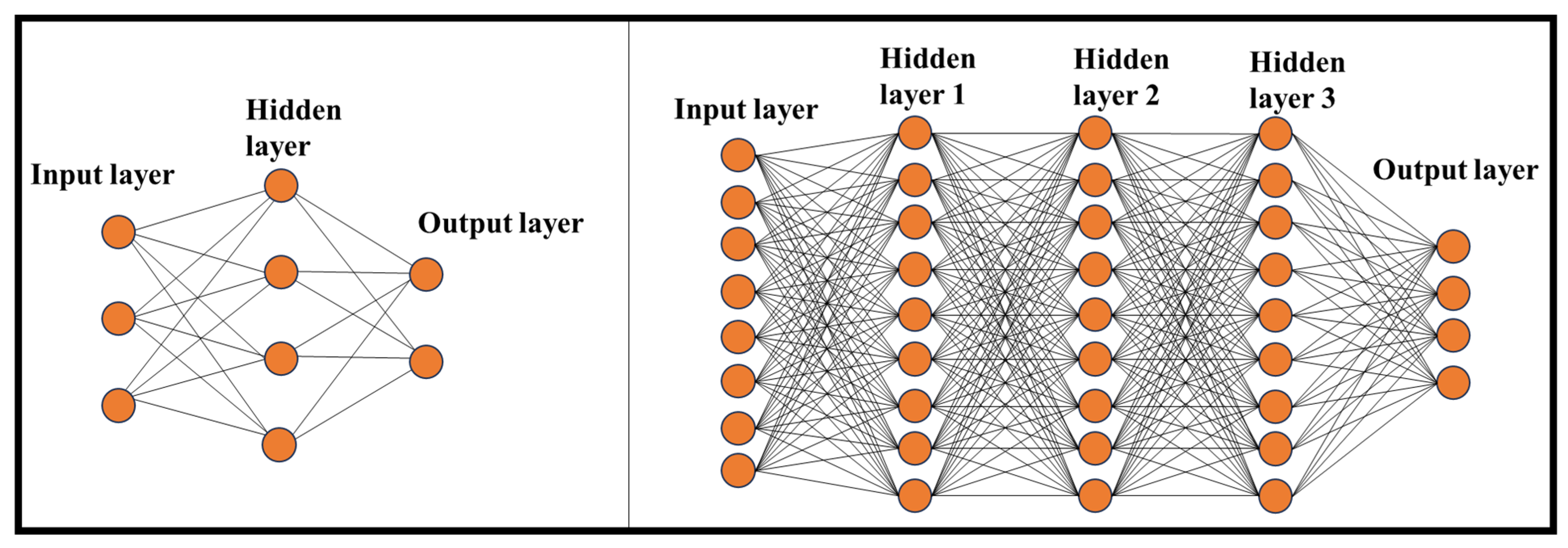

3.1. Deep DNNs

- (A) Traditional NNs: Typically composed of 2 or 3 hidden layers.

- (B) DL Networks: These can contain up to 150 hidden layers, making them significantly more complex.

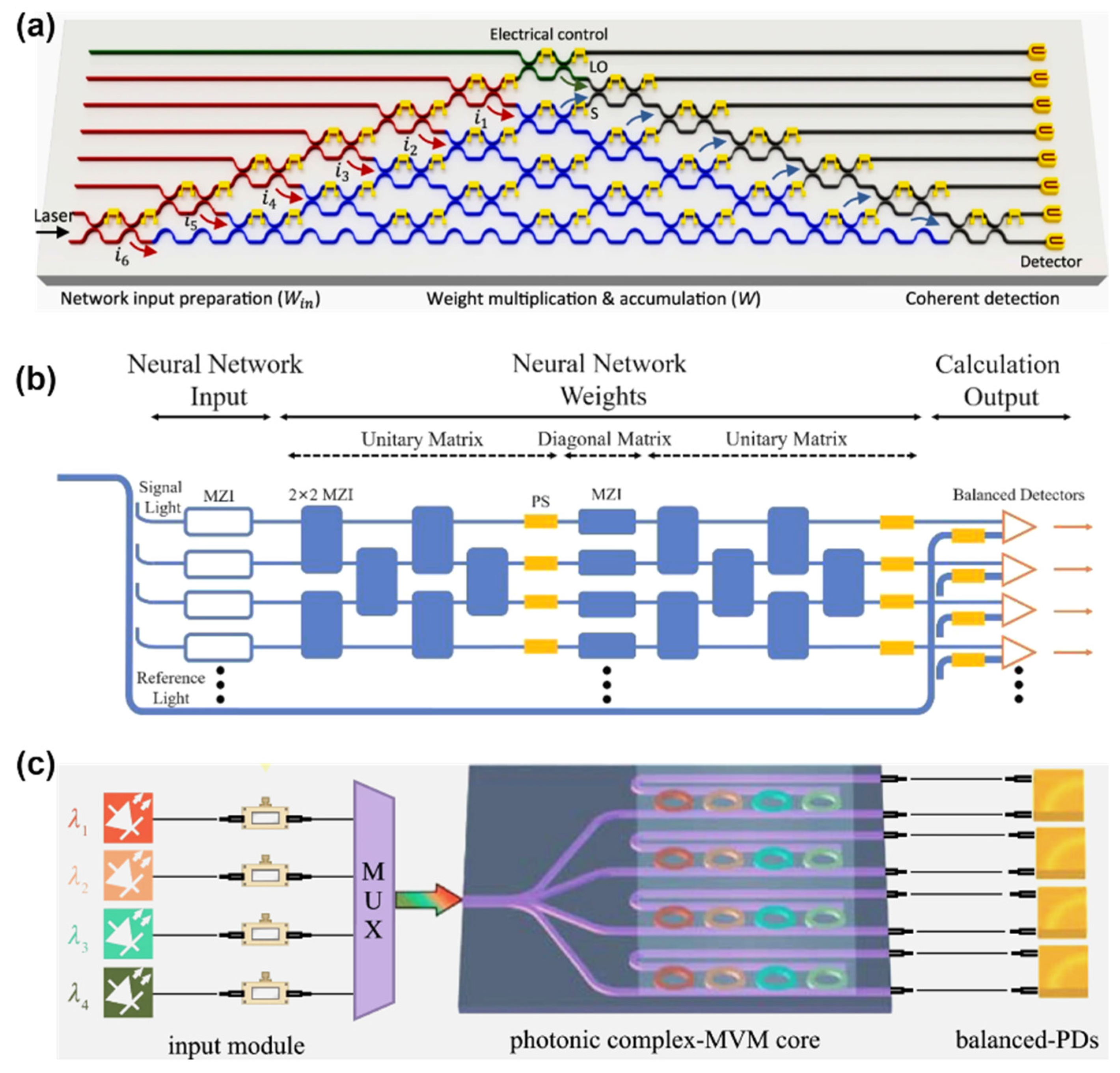

3.2. NNs with Complex Arithmetic Calculations

3.3. Spike NNs

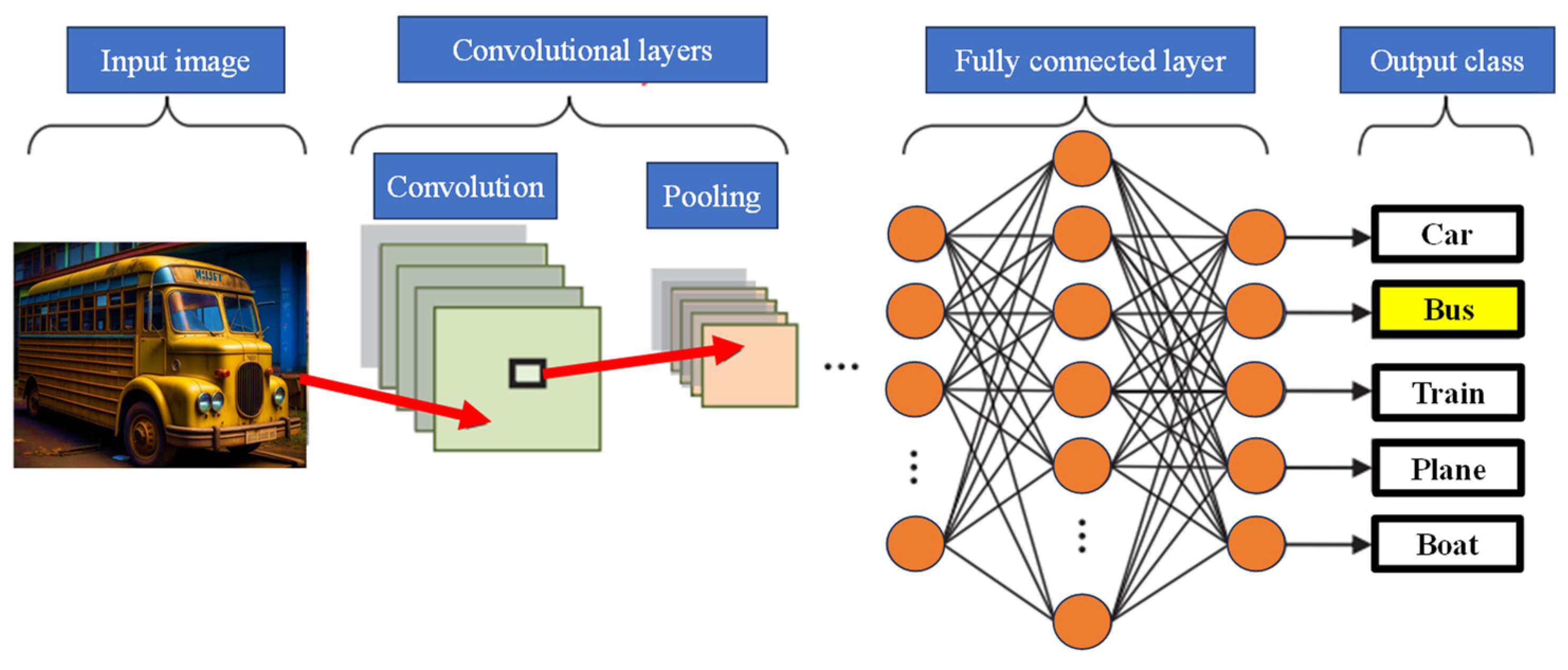

3.4. Convolutional Neural Networks (CNNs)

3.5. Methods for Implementing the Activation Functions in Optical Neural Networks

3.6. Programmable PNNs

4. Applications of Neuromorphic Photonics

4.1. Neuromorphic Computing Based on PICs

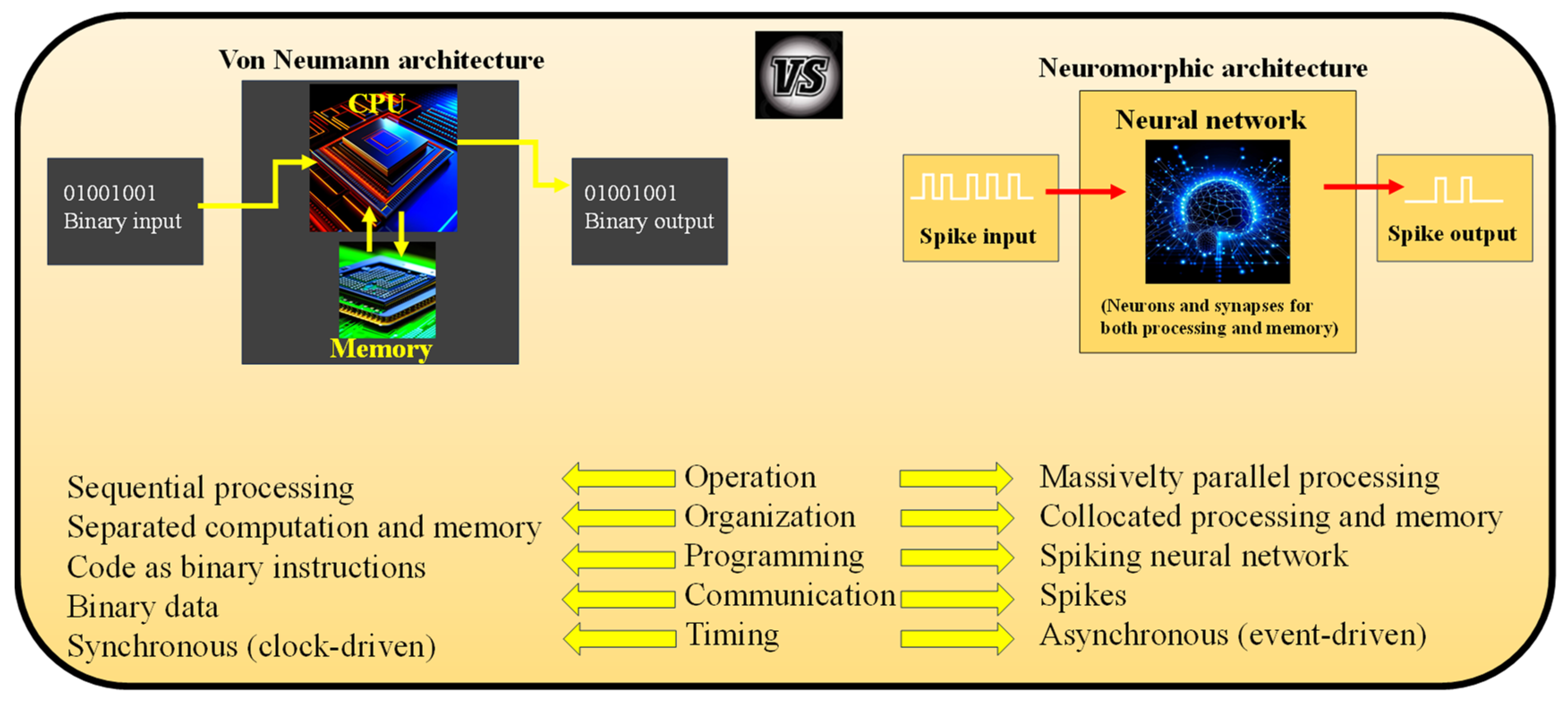

- (I) Highly Parallel Operation: Neuromorphic computers are inherently parallel: all neurons and synapses can potentially operate simultaneously. However, the computation performed by each element is relatively simple compared with the processing units of parallelized von Neumann systems.

- (II) Collocated Processing and Memory: In neuromorphic hardware, there is no separation between processing and memory. Neurons and synapses both process and store information, which mitigates the von Neumann bottleneck. This collocation improves efficiency by avoiding data accesses to main memory, which reduces energy consumption.

- (III) Inherent Scalability: Neuromorphic computers are designed to be inherently scalable. Adding more neuromorphic chips increases the number of neurons and synapses. Multiple physical neuromorphic chips can be combined to create larger implementations, as has been demonstrated in large-scale neuromorphic hardware systems such as SpiNNaker and Loihi.

- (IV) Event-Driven Computation: Neuromorphic computers employ event-driven computation, meaning they compute only when data is available. Neurons and synapses operate only when spikes (data) are present, and spikes are typically sparse in time within the network's operation. This temporal sparsity allows for highly efficient computation.

- (V) Stochasticity: Neuromorphic computers can incorporate randomness, such as in the firing of neurons, allowing for noise in the system.
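Three of the properties listed above (collocated state, event-driven computation, and stochasticity) can be illustrated with a minimal software sketch of a leaky integrate-and-fire neuron. This is only an illustration under stated assumptions, not a model of any specific neuromorphic chip or photonic device: the class name `LIFNeuron`, the helper `run`, and the parameter values (`tau`, `threshold`, `noise`) are hypothetical choices made for this example.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class LIFNeuron:
    """Leaky integrate-and-fire neuron (illustrative; all values are assumptions).

    The membrane potential is stored inside the neuron object, mimicking
    collocated processing and memory.
    """
    tau: float = 20.0        # membrane time constant in ms (assumed value)
    threshold: float = 1.0   # firing threshold (assumed value)
    noise: float = 0.0       # std. dev. of Gaussian noise added per input spike
    v: float = 0.0           # membrane potential (local state)
    last_t: float = 0.0      # time of the last processed event in ms

    def on_spike(self, t: float, weight: float, rng: random.Random) -> bool:
        """Process one input spike at time t; return True if the neuron fires."""
        # Event-driven: the leak is applied lazily for the idle interval,
        # so no work is done between input spikes.
        self.v *= math.exp(-(t - self.last_t) / self.tau)
        self.last_t = t
        # Stochasticity: optional Gaussian noise on the integrated input.
        self.v += weight + rng.gauss(0.0, self.noise)
        if self.v >= self.threshold:
            self.v = 0.0  # reset after firing
            return True
        return False


def run(events, neuron, seed=0):
    """Feed (time_ms, weight) events in time order; return output spike times."""
    rng = random.Random(seed)
    return [t for t, w in sorted(events) if neuron.on_spike(t, w, rng)]


if __name__ == "__main__":
    # Two closely spaced spikes push the neuron over threshold; isolated ones do not.
    events = [(1.0, 0.6), (2.0, 0.6), (50.0, 0.6), (51.0, 0.6)]
    print(run(events, LIFNeuron()))
```

In this sketch the neuron is updated only when an input spike arrives, so the cost scales with the number of events rather than with elapsed time. Setting `noise` above zero makes firing near the threshold probabilistic. Parallel operation and multi-chip scalability are not captured by this single-neuron example.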

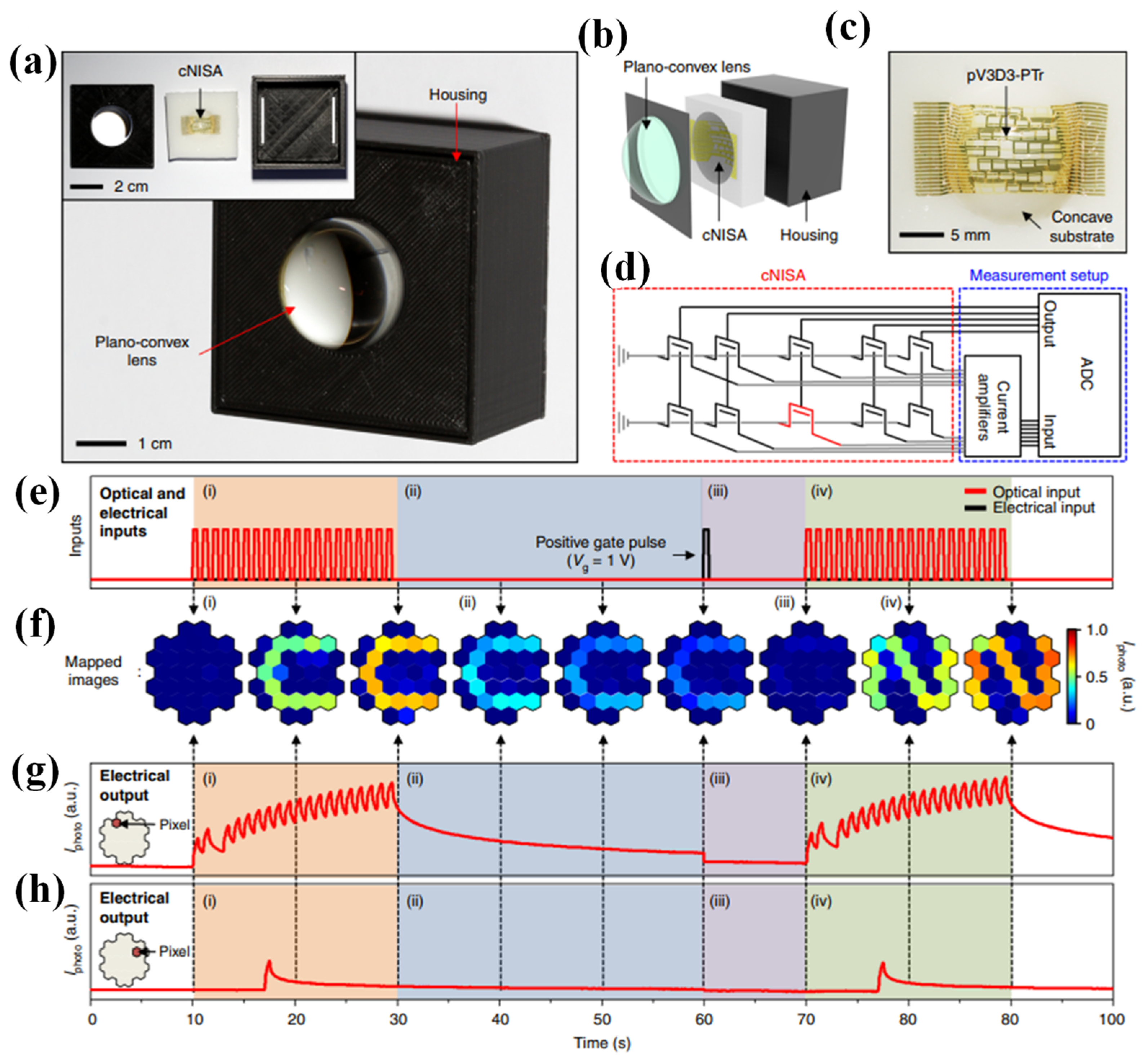

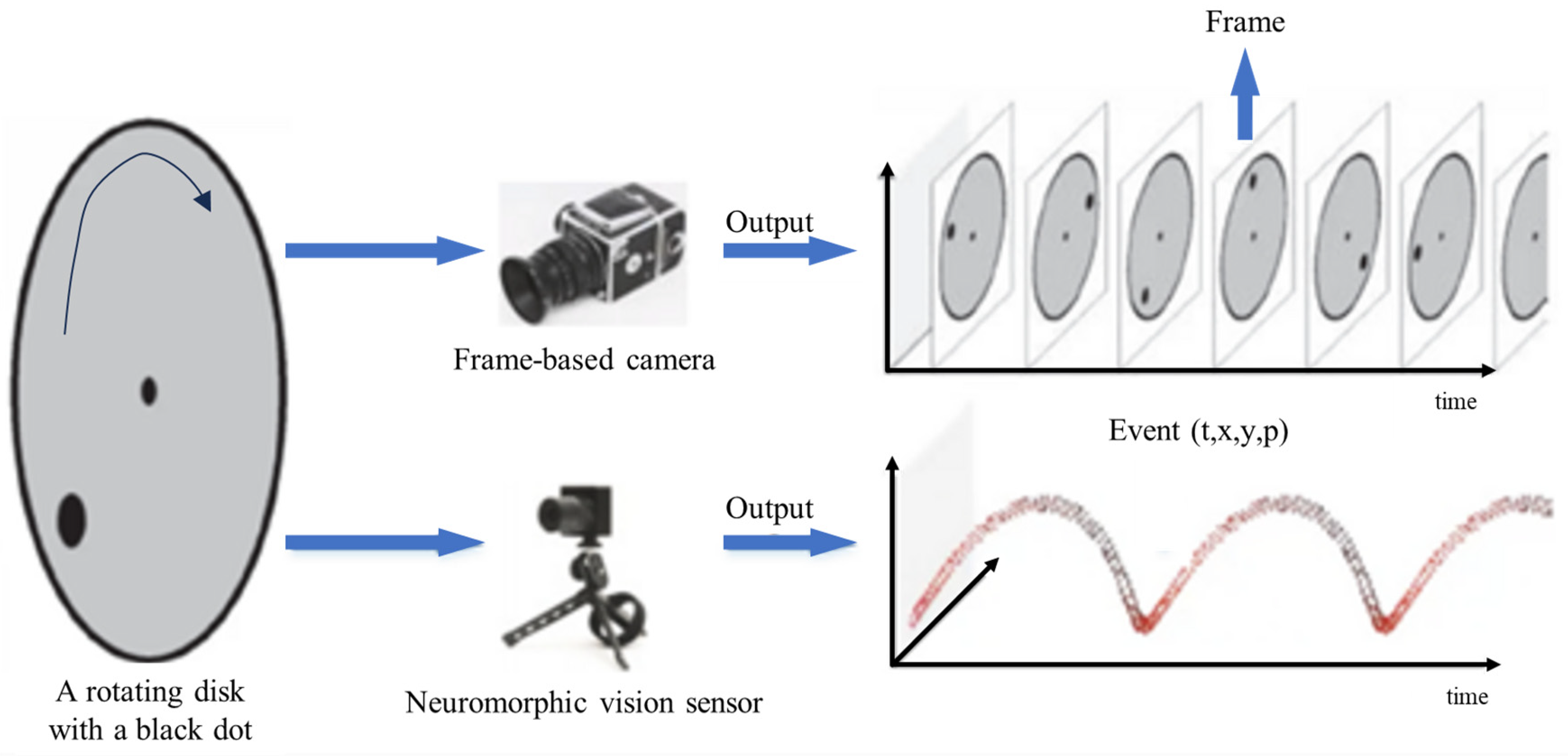

4.2. Neuromorphic Imaging

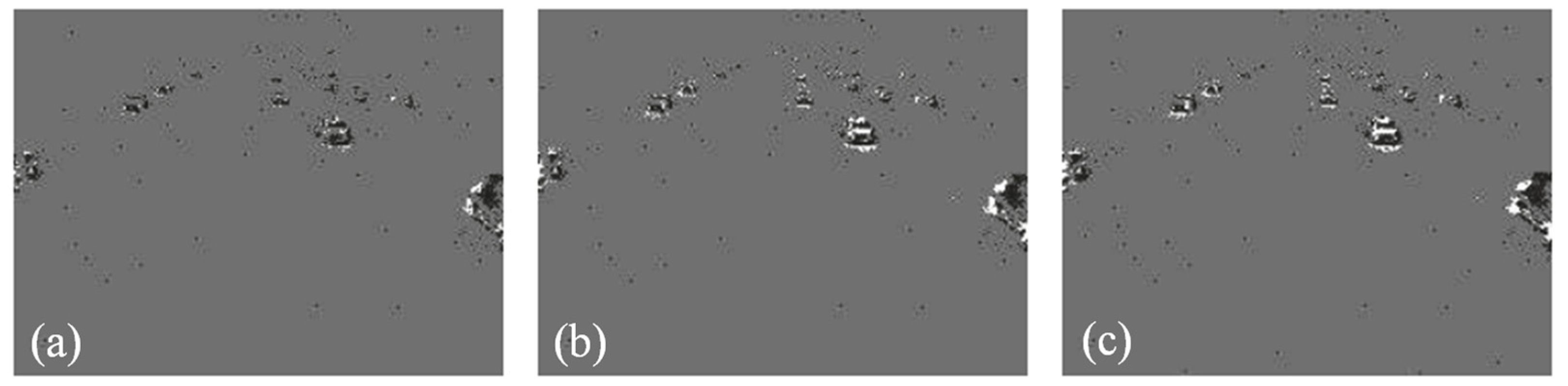

4.3. Image Processing by Neuromorphic Structures

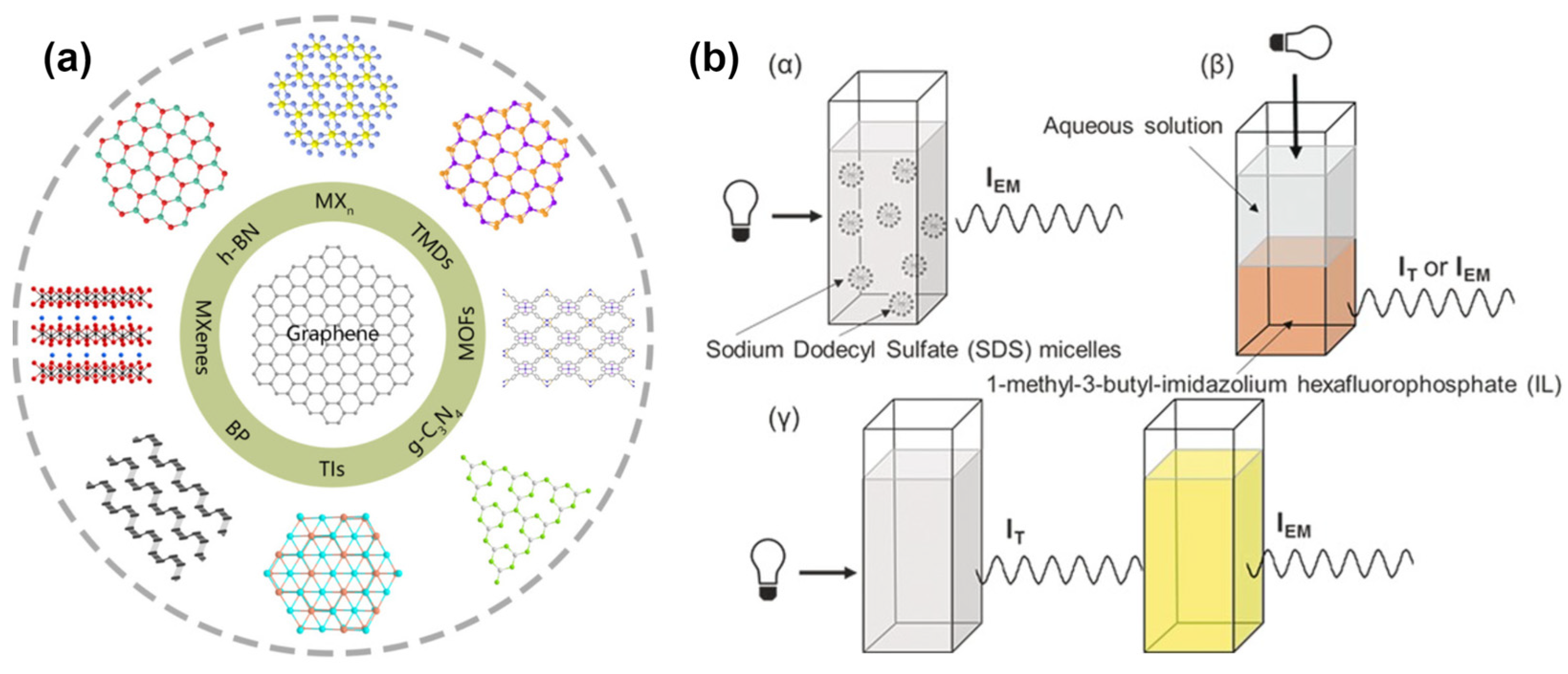

5. Materials Used for Implementation of Photonic Neuromorphic Computing

6. Current Challenges

7. Concluding Remarks

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

References

- Schuman, C.D.; Kulkarni, S.R.; Parsa, M.; Mitchell, J.P.; Date, P.; Kay, B. Opportunities for Neuromorphic Computing Algorithms and Applications|Nature Computational Science. Nat. Comput. Sci. 2022, 2, 10–19. Available online: https://www.nature.com/articles/s43588-021-00184-y (accessed on 22 October 2023). [CrossRef]

- van de Burgt, Y.; Santoro, F.; Tee, B.; Alibart, F. Editorial: Focus on organic materials, bio-interfacing and processing in neuromorphic computing and artificial sensory applications. Neuromorphic Comput. Eng. 2023, 3, 040202. [Google Scholar] [CrossRef]

- Alagappan, G.; Ong, J.R.; Yang, Z.; Ang, T.Y.L.; Zhao, W.; Jiang, Y.; Zhang, W.; Png, C.E. Leveraging AI in Photonics and Beyond. Photonics 2022, 9, 75. Available online: https://www.mdpi.com/2304-6732/9/2/75 (accessed on 13 November 2023). [CrossRef]

- Alzubaidi, L.; Zhang, J.; Humaidi, A.J.; Al-Dujaili, A.; Duan, Y.; Al-Shamma, O.; Santamaría, J.; Fadhel, M.A.; Al-Amidie, M.; Farhan, L. Review of Deep Learning: Concepts, CNN Architectures, Challenges, Applications, Future Directions. J. Big Data 2021, 8, 1–74. Available online: https://journalofbigdata.springeropen.com/articles/10.1186/s40537-021-00444-8 (accessed on 25 October 2023). [CrossRef] [PubMed]

- Zhou, J.; Cui, G.; Hu, S.; Zhang, Z.; Yang, C.; Liu, Z.; Wang, L.; Li, C.; Sun, M. Graph neural networks: A review of methods and applications. AI Open 2020, 1, 57–81. [Google Scholar] [CrossRef]

- Das, R.R.; Reghuvaran, C.; James, A. Graphene-based RRAM devices for neural computing. Front. Neurosci. 2023, 17, 1253075. [Google Scholar] [CrossRef]

- Nassehi, A.; Zhong, R.Y.; Li, X.; Epureanu, B.I. Chapter 11-Review of machine learning technologies and artificial intelligence in modern manufacturing systems. In Design and Operation of Production Networks for Mass Personalization in the Era of Cloud Technology; Mourtzis, D., Ed.; Elsevier: Amsterdam, The Netherlands, 2022; pp. 317–348. [Google Scholar] [CrossRef]

- Mukhamediev, R.I.; Popova, Y.; Kuchin, Y.; Zaitseva, E.; Kalimoldayev, A.; Symagulov, A.; Levashenko, V.; Abdoldina, F.; Gopejenko, V.; Yakunin, K.; et al. Review of Artificial Intelligence and Machine Learning Technologies: Classification, Restrictions, Opportunities and Challenges. Mathematics 2022, 10, 2552. Available online: https://www.mdpi.com/2227-7390/10/15/2552 (accessed on 13 November 2023). [CrossRef]

- Le, Q.V. Building high-level features using large scale unsupervised learning. In Proceedings of the 2013 IEEE International Conference on Acoustics, Speech and Signal Processing, Vancouver, BC, Canada, 26–31 May 2013; pp. 8595–8598. [Google Scholar] [CrossRef]

- Ríos, C.; Youngblood, N.; Cheng, Z.; Le Gallo, M.; Pernice, W.H.P.; Wright, C.D.; Sebastian, A.; Bhaskaran, H. In-memory computing on a photonic platform. Sci. Adv. 2023, 5, eaau5759. [Google Scholar] [CrossRef]

- Zhang, D.; Tan, Z. A Review of Optical Neural Networks. Appl. Sci. 2022, 12, 5338. [Google Scholar] [CrossRef]

- Zhang, J.; Ma, B.; Zhao, Y.; Zou, W. A Large-Scale Photonic CNN Based on Spike Coding and Temporal Integration. IEEE J. Sel. Top. Quantum Electron. 2023, 29, 7600910. [Google Scholar] [CrossRef]

- Huang, C.; de Lima, T.F.; Tait, A.N.; Marquez, B.A.; Shastri, B.J.; Prucnal, P.R. Neuromorphic Photonics for Intelligent Signal Processing. In Proceedings of the 2021 IEEE Photonics Conference (IPC), Vancouver, BC, Canada, 18–21 October 2021; pp. 1–2. [Google Scholar] [CrossRef]

- Neuromorphic Computing Market Size & Share Report. 2030. Available online: https://www.grandviewresearch.com/industry-analysis/neuromorphic-computing-market (accessed on 24 October 2023).

- Polyn Technology|Polyn Technology. Available online: https://polyn.ai/ (accessed on 24 October 2023).

- Edge Impulse. Available online: https://edgeimpulse.com/ (accessed on 24 October 2023).

- Peng, H.-T.; Nahmias, M.A.; de Lima, T.F.; Tait, A.N.; Shastri, B.J.; Prucnal, P. Neuromorphic Photonic Integrated Circuits. IEEE J. Sel. Top. Quantum Electron. 2018, 24, 1–15. [Google Scholar] [CrossRef]

- Du, W.; Wang, T.; Chu, H.-S.; Nijhuis, C.A. Highly efficient on-chip direct electronic–plasmonic transducers. Nat. Photonics 2017, 11, 623–627. [Google Scholar] [CrossRef]

- Prucnal, P.R.; Shastri, B.J.; Teich, M.C. (Eds.) Neuromorphic Photonics; CRC Press: Boca Raton, FL, USA, 2017. [Google Scholar] [CrossRef]

- Shen, Y.; Harris, N.C.; Skirlo, S.; Prabhu, M.; Baehr-Jones, T.; Hochberg, M.; Sun, X.; Zhao, S.; Larochelle, H.; Englund, D.; et al. Deep learning with coherent nanophotonic circuits. Nat. Photonics 2017, 11, 441–446. [Google Scholar] [CrossRef]

- Accelerating Systems with Real-Time AI Solutions-Groq. Available online: https://groq.com/ (accessed on 22 October 2023).

- Teich, P. Tearing Apart Google’s TPU 3.0 AI Coprocessor-The Next Platform. Available online: https://www.nextplatform.com/2018/05/10/tearing-apart-googles-tpu-3-0-ai-coprocessor/ (accessed on 22 October 2023).

- Smith, R. NVIDIA Volta Unveiled: GV100 GPU and Tesla V100 Accelerator Announced. Available online: https://www.anandtech.com/show/11367/nvidia-volta-unveiled-gv100-gpu-and-tesla-v100-accelerator-announced (accessed on 22 October 2023).

- Wijesinghe, P.; Ankit, A.; Sengupta, A.; Roy, K. An All-Memristor Deep Spiking Neural Computing System: A Step Toward Realizing the Low-Power Stochastic Brain. IEEE Trans. Emerg. Top. Comput. Intell. 2018, 2, 345–358. Available online: https://ieeexplore.ieee.org/document/8471280?denied= (accessed on 22 October 2023). [CrossRef]

- Timurdogan, E.; Sorace-Agaskar, C.M.; Sun, J.; Shah Hosseini, E.; Biberman, A.; Watts, M.R. An Ultralow Power Athermal Silicon Modulator. Nat. Commun. 2014, 5, 1–11. Available online: https://www.nature.com/articles/ncomms5008 (accessed on 22 October 2023). [CrossRef] [PubMed]

- Shastri, B.J.; Huang, C.; Tait, A.N.; de Lima, T.F.; Prucnal, P.R. Silicon Photonics for Neuromorphic Computing and Artificial Intelligence: Applications and Roadmap. In Proceedings of the 2022 Photonics & Electromagnetics Research Symposium (PIERS), Hangzhou, China, 25–29 April 2022; pp. 18–26. [Google Scholar] [CrossRef]

- Zhou, Z.; Chen, R.; Li, X.; Li, T. Development trends in silicon photonics for data centers. Opt. Fiber Technol. 2018, 44, 13–23. [Google Scholar] [CrossRef]

- Tait, A.N.; De Lima, T.F.; Nahmias, M.A.; Shastri, B.J.; Prucnal, P.R. Continuous Calibration of Microring Weights for Analog Optical Networks. IEEE Photonics Technol. Lett. 2016, 28, 887–890. Available online: https://ieeexplore.ieee.org/document/7377037 (accessed on 21 October 2023). [CrossRef]

- Tait, A.N.; De Lima, T.F.; Nahmias, M.A.; Miller, H.B.; Peng, H.T.; Shastri, B.J.; Prucnal, P.R. Silicon Photonic Modulator Neuron. Phys. Rev. Appl. 2019, 11, 064043. Available online: https://journals.aps.org/prapplied/abstract/10.1103/PhysRevApplied.11.064043 (accessed on 21 October 2023). [CrossRef]

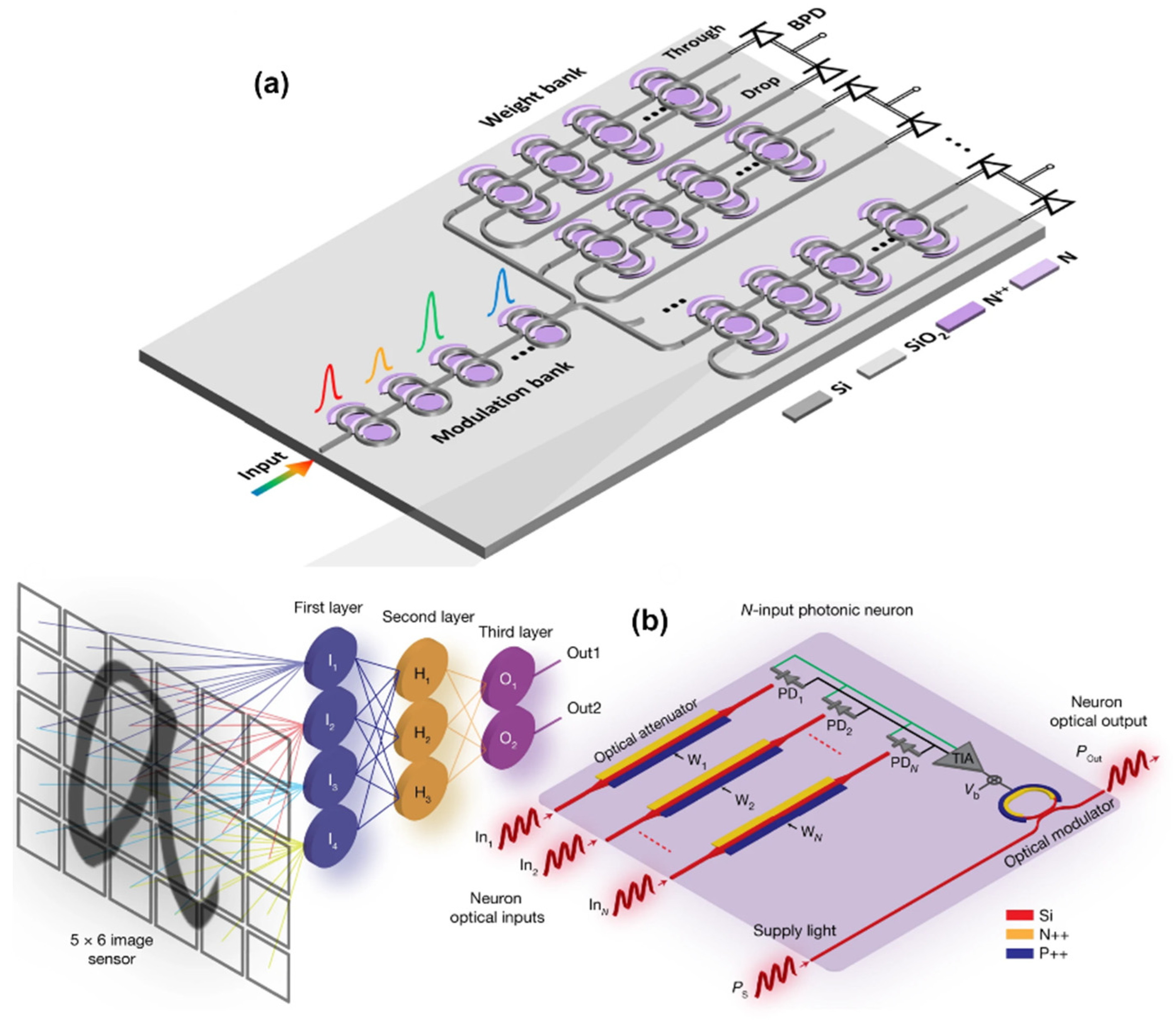

- Tait, A.N.; de Lima, T.F.; Zhou, E.; Wu, A.X.; Nahmias, M.A.; Shastri, B.J.; Prucnal, P.R. Neuromorphic photonic networks using silicon photonic weight banks. Sci. Rep. 2017, 7, 7430. [Google Scholar] [CrossRef]

- de Lima, T.F.; Tait, A.N.; Mehrabian, A.; Nahmias, M.A.; Huang, C.; Peng, H.-T.; Marquez, B.A.; Miscuglio, M.; El-Ghazawi, T.; Sorger, V.J.; et al. Primer on silicon neuromorphic photonic processors: Architecture and compiler. Nanophotonics 2020, 9, 4055–4073. [Google Scholar] [CrossRef]

- Marquez, B.A.; Huang, C.; Prucnal, P.R.; Shastri, B.J. Neuromorphic Silicon Photonics for Artificial Intelligence. In Silicon Photonics IV: Innovative Frontiers; Lockwood, D.J., Pavesi, L., Eds.; Topics in Applied Physics; Springer International Publishing: Cham, Switzerland, 2021; pp. 417–447. [Google Scholar] [CrossRef]

- Xu, L.; de Lima, T.F.; Peng, H.-T.; Bilodeau, S.; Tait, A.; Shastri, B.J.; Prucnal, P.R. Scalable Networks of Neuromorphic Photonic Integrated Circuits. IIEEE J. Sel. Top. Quantum Electron. 2022, 28, 1–9. [Google Scholar] [CrossRef]

- Peng, S.; Zeng, R.; Liu, H.; Cao, L.; Wang, G.; Xie, J. Deep Broad Learning for Emotion Classification in Textual Conversations. Tsinghua Sci. Technol. 2024, 29, 481–491. [Google Scholar] [CrossRef]

- Taouktsis, X.; Zikopoulos, C. A decision-making tool for the determination of the distribution center location in a humanitarian logistics network. Expert Syst. Appl. 2024, 238, 122010. [Google Scholar] [CrossRef]

- Wu, H.; Lei, R.; Peng, Y.; Gao, L. AAGNet: A graph neural network towards multi-task machining feature recognition. Robot. Comput. Manuf. 2024, 86, 102661. [Google Scholar] [CrossRef]

- Choi, C.Q. 7 Revealing Ways AIs Fail: Neural Networks can be Disastrously Brittle, Forgetful, and Surprisingly Bad at Math. IEEE Spectr. 2021, 58, 42–47. Available online: https://ieeexplore.ieee.org/document/9563958 (accessed on 26 October 2023). [CrossRef]

- Searle, J.R. Minds, Brains, and Programs. Behav. Brain Sci. 1980, 3, 417–424. Available online: https://www.cambridge.org/core/journals/behavioral-and-brain-sciences/article/abs/minds-brains-and-programs/DC644B47A4299C637C89772FACC2706A (accessed on 26 October 2023). [CrossRef]

- Marcus, G. Deep Learning: A Critical Appraisal. arXiv 2018, arXiv:1801.00631. [Google Scholar]

- Santoro, A.; Lampinen, A.; Mathewson, K.; Lillicrap, T.; Raposo, D. Symbolic Behaviour in Artificial Intelligence. arXiv 2022, arXiv:2102.03406. [Google Scholar] [CrossRef]

- Trask, A.; Hill, F.; Reed, S.E.; Rae, J.; Dyer, C.; Blunsom, P. Neural Arithmetic Logic Units. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: Nice, France, 2018; Available online: https://proceedings.neurips.cc/paper_files/paper/2018/hash/0e64a7b00c83e3d22ce6b3acf2c582b6-Abstract.html (accessed on 26 October 2023).

- Lample, G.; Charton, F. Deep Learning for Symbolic Mathematics. arXiv 2019, arXiv:1912.01412. [Google Scholar] [CrossRef]

- Lee, D.; Szegedy, C.; Rabe, M.N.; Loos, S.M.; Bansal, K. Mathematical Reasoning in Latent Space. arXiv 2019, arXiv:1909.11851. [Google Scholar] [CrossRef]

- Davies, A.; Veličković, P.; Buesing, L.; Blackwell, S.; Zheng, D.; Tomašev, N.; Tanburn, R.; Battaglia, P.; Blundell, C.; Juhász, A.; et al. Advancing Mathematics by Guiding Human Intuition with AI. Nature 2021, 600, 70–74. Available online: https://www.nature.com/articles/s41586-021-04086-x (accessed on 26 October 2023). [CrossRef] [PubMed]

- Fawzi, A.; Balog, M.; Huang, A.; Hubert, T.; Romera-Paredes, B.; Barekatain, M.; Novikov, A.; RRuiz, F.J.; Schrittwieser, J.; Swirszcz, G.; et al. Discovering Faster Matrix Multiplication Algorithms with Reinforcement Learning. Nature 2022, 610, 47–53. Available online: https://www.nature.com/articles/s41586-022-05172-4 (accessed on 26 October 2023). [CrossRef] [PubMed]

- Drori, I.; Zhang, S.; Shuttleworth, R.; Tang, L.; Lu, A.; Ke, E.; Liu, K.; Chen, L.; Tran, S.; Cheng, N.; et al. A Neural Network Solves, Explains, and Generates University Math Problems by Program Synthesis and Few-Shot Learning at Human Level. Proc. Natl. Acad. Sci. USA 2022, 119, e2123433119. Available online: https://www.pnas.org/doi/10.1073/pnas.2123433119 (accessed on 26 October 2023). [CrossRef] [PubMed]

- Jordan, N.C.; Kaplan, D.; Ramineni, C.; Locuniak, M.N. Early math matters: Kindergarten number competence and later mathematics outcomes. Dev. Psychol. 2009, 45, 850–867. [Google Scholar] [CrossRef] [PubMed]

- Liao, K.; Li, C.; Dai, T.; Zhong, C.; Lin, H.; Hu, X.; Gong, Q. Matrix eigenvalue solver based on reconfigurable photonic neural network. Nanophotonics 2022, 11, 4089–4099. [Google Scholar] [CrossRef]

- Wang, R.; Wang, P.; Lyu, C.; Luo, G.; Yu, H.; Zhou, X.; Zhang, Y.; Pan, J. Multicore Photonic Complex-Valued Neural Network with Transformation Layer. Photonics 2022, 9, 384. [Google Scholar] [CrossRef]

- Zhang, H.; Gu, M.; Jiang, X.D.; Thompson, J.; Cai, H.; Paesani, S.; Santagati, R.; Laing, A.; Zhang, Y.; Yung, M.H.; et al. An optical neural chip for implementing complex-valued neural network. Nat. Commun. 2021, 12, 457. [Google Scholar] [CrossRef]

- Cheng, J.; Zhao, Y.; Zhang, W.; Zhou, H.; Huang, D.; Zhu, Q.; Guo, Y.; Xu, B.; Dong, J.; Zhang, X. A small microring array that performs large complex-valued matrix-vector multiplication. Front. Optoelectron. 2022, 15, 15. [Google Scholar] [CrossRef]

- Goel, A.; Goel, A.K.; Kumar, A. The Role of Artificial Neural Network and Machine Learning in Utilizing Spatial Information. Spat. Inf. Res. 2023, 31, 275–285. Available online: https://link.springer.com/article/10.1007/s41324-022-00494-x (accessed on 25 October 2023). [CrossRef]

- Yamazaki, K.; Vo-Ho, V.-K.; Bulsara, D.; Le, N. Spiking Neural Networks and Their Applications: A Review. Brain Sci. 2022, 12, 863. [Google Scholar] [CrossRef]

- Zhang, D.; Yang, J.; Ye, D.; Hua, G. LQ-Nets: Learned Quantization for Highly Accurate and Compact Deep Neural Networks. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 365–382. Available online: https://openaccess.thecvf.com/content_ECCV_2018/html/Dongqing_Zhang_Optimized_Quantization_for_ECCV_2018_paper.html (accessed on 25 October 2023).

- Li, G.; Qian, C.; Jiang, C.; Lu, X.; Tang, K. Optimization based Layer-wise Magnitude-based Pruning for DNN Compression. In Proceedings of the Twenty-Seventh International Joint Conference on Artificial Intelligence, Stockholm, Sweden, 13 July 2018; International Joint Conferences on Artificial Intelligence Organization: San Francisco, CA, USA, 2018; pp. 2383–2389. [Google Scholar] [CrossRef]

- Jin, X.; Peng, B.; Wu, Y.; Liu, Y.; Liu, J.; Liang, D.; Yan, J.; Hu, X. Knowledge Distillation via Route Constrained Optimization. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, October 27–November 2 2019; pp. 1345–1354. Available online: https://openaccess.thecvf.com/content_ICCV_2019/html/Jin_Knowledge_Distillation_via_Route_Constrained_Optimization_ICCV_2019_paper.html (accessed on 25 October 2023).

- Merolla, P.A.; Arthur, J.V.; Alvarez-Icaza, R.; Cassidy, A.S.; Sawada, J.; Akopyan, F.; Jackson, B.L.; Imam, N.; Guo, C.; Nakamura, Y.; et al. A million spiking-neuron integrated circuit with a scalable communication network and interface. Science 2014, 345, 668–673. [Google Scholar] [CrossRef] [PubMed]

- Davies, M.; Srinivasa, N.; Lin, T.-H.; Chinya, G.; Cao, Y.; Choday, S.H.; Dimou, G.; Joshi, P.; Imam, N.; Jain, S.; et al. Loihi: A Neuromorphic Manycore Processor with On-Chip Learning. IEEE Micro 2018, 38, 82–99. [Google Scholar] [CrossRef]

- Furber, S.B.; Galluppi, F.; Temple, S.; Plana, L.A. The SpiNNaker Project. Proc. IEEE 2014, 102, 652–665. [Google Scholar] [CrossRef]

- Benjamin, B.V.; Gao, P.; McQuinn, E.; Choudhary, S.; Chandrasekaran, A.R.; Bussat, J.-M.; Alvarez-Icaza, R.; Arthur, J.V.; Merolla, P.A.; Boahen, K. Neurogrid: A Mixed-Analog-Digital Multichip System for Large-Scale Neural Simulations. Proc. IEEE 2014, 102, 699–716. [Google Scholar] [CrossRef]

- Kasabov, N.K. Time-Space, Spiking Neural Networks and Brain-Inspired Artificial Intelligence; Springer: Berlin/Heidelberg, Germany, 2019; Available online: https://link.springer.com/book/10.1007/978-3-662-57715-8 (accessed on 25 October 2023).

- Hubel, D.H.; Wiesel, T.N. Receptive fields of single neurones in the cat’s striate cortex. J. Physiol. 1959, 148, 574–591. [Google Scholar] [CrossRef] [PubMed]

- Hubel, D.H.; Wiesel, T.N. Receptive fields, binocular interaction and functional architecture in the cat’s visual cortex. J. Physiol. 1962, 160, 106–154.2. [Google Scholar] [CrossRef] [PubMed]

- Mo, W.; Luo, X.; Zhong, Y.; Jiang, W. Image recognition using convolutional neural network combined with ensemble learning algorithm. J. Phys. Conf. Ser. 2019, 1237, 022026. [Google Scholar] [CrossRef]

- Rawat, W.; Wang, Z. Deep Convolutional Neural Networks for Image Classification: A Comprehensive Review. Neural Comput. 2017, 29, 2352–2449. Available online: https://pubmed.ncbi.nlm.nih.gov/28599112/ (accessed on 25 October 2023). [CrossRef]

- He, Y.; Zhao, C.; Zhou, X.; Shen, W. MJAR: A novel joint generalization-based diagnosis method for industrial robots with compound faults. Robot. Comput. Manuf. 2024, 86, 102668. [Google Scholar] [CrossRef]

- Daidone, M.; Ferrantelli, S.; Tuttolomondo, A. Machine learning applications in stroke medicine: Advancements, challenges, and future prospectives. Neural Regen. Res. 2024, 19, 769–773. [Google Scholar] [CrossRef]

- Pacal, I. Enhancing crop productivity and sustainability through disease identification in maize leaves: Exploiting a large dataset with an advanced vision transformer model. Expert Syst. Appl. 2024, 238, 122099. [Google Scholar] [CrossRef]

- Stoica, I.; Song, D.; Popa, R.A.; Patterson, D.; Mahoney, M.W.; Katz, R.; Joseph, A.D.; Jordan, M.; Hellerstein, J.M.; Gonzalez, J.E.; et al. A Berkeley View of Systems Challenges for AI. arXiv 2017, arXiv:1712.05855. Available online: https://www2.eecs.berkeley.edu/Pubs/TechRpts/2017/EECS-2017-159.html (accessed on 24 October 2023).

- Chen, Y.H.; Krishna, T.; Emer, J.S.; Sze, V. Eyeriss: An Energy-Efficient Reconfigurable Accelerator for Deep Convolutional Neural Networks. IEEE J. Mag. 2016, 52, 127–138. Available online: https://ieeexplore.ieee.org/document/7738524 (accessed on 24 October 2023).

- De Lima, T.F.; Peng, H.T.; Tait, A.N.; Nahmias, M.A.; Miller, H.B.; Shastri, B.J.; Prucnal, P.R. Machine Learning with Neuromorphic Photonics. IEEE J. Mag. 2019, 37, 1515–1534. Available online: https://ieeexplore.ieee.org/document/8662590 (accessed on 21 October 2023). [CrossRef]

- Bai, B.; Shu, H.; Wang, X.; Zou, W. Towards Silicon Photonic Neural Networks for Artificial Intelligence. Sci. China Inf. Sci. 2020, 63, 1–14. Available online: https://link.springer.com/article/10.1007/s11432-020-2872-3 (accessed on 24 October 2023). [CrossRef]

- Sacher, W.D.; Poon, J.K. Dynamics of Microring Resonator Modulators. Opt. Express 2008, 16, 15741–15753. Available online: https://opg.optica.org/oe/fulltext.cfm?uri=oe-16-20-15741&id=172148 (accessed on 24 October 2023). [CrossRef] [PubMed]

- Hassanien, A.E.; Ghoname, A.O.; Chow, E.; Goddard, L.L.; Gong, S. Compact MZI Modulators on Thin Film Z-Cut Lithium Niobate. Opt. Express 2022, 30, 4543–4552. Available online: https://opg.optica.org/oe/fulltext.cfm?uri=oe-30-3-4543&id=468841 (accessed on 24 October 2023). [CrossRef]

- Hughes, T.W.; Minkov, M.; Shi, Y.; Fan, S. Training of Photonic Neural Networks through in Situ Backpropagation and Gradient Measurement. Optica 2018, 5, 864–871. Available online: https://opg.optica.org/optica/fulltext.cfm?uri=optica-5-7-864&id=395466 (accessed on 24 October 2023). [CrossRef]

- Wu, B.; Li, H.; Tong, W.; Dong, J.; Zhang, X. Low-threshold all-optical nonlinear activation function based on a Ge/Si hybrid structure in a microring resonator. Opt. Mater. Express 2022, 12, 970–980. [Google Scholar] [CrossRef]

- Jha, A.; Huang, C.; Prucnal, P.R. Reconfigurable all-optical nonlinear activation functions for neuromorphic photonics. Opt. Lett. 2020, 45, 4819–4822. [Google Scholar] [CrossRef]

- Tait, A.N.; Shastri, B.J.; Fok, M.P.; Nahmias, M.A.; Prucnal, P.R. The DREAM: An Integrated Photonic Thresholder. J. Light. Technol. 2013, 31, 1263–1272. [Google Scholar] [CrossRef]

- Feldmann, J.; Youngblood, N.; Wright, C.D.; Bhaskaran, H.; Pernice, W.H.P. All-optical spiking neurosynaptic networks with self-learning capabilities. Nature 2019, 569, 208–214. [Google Scholar] [CrossRef]

- Xu, Z.; Tang, B.; Zhang, X.; Leong, J.F.; Pan, J.; Hooda, S.; Zamburg, E.; Thean, A.V.-Y. Reconfigurable nonlinear photonic activation function for photonic neural network based on non-volatile opto-resistive RAM switch. Light. Sci. Appl. 2022, 11, 288. [Google Scholar] [CrossRef]

- Hamerly, R.; Bernstein, L.; Sludds, A.; Soljačić, M.; Englund, D. Large-Scale Optical Neural Networks Based on Photoelectric Multiplication. Phys. Rev. X 2019, 9, 021032. Available online: https://journals.aps.org/prx/abstract/10.1103/PhysRevX.9.021032 (accessed on 24 October 2023). [CrossRef]

- Sludds, A.; Bandyopadhyay, S.; Chen, Z.; Zhong, Z.; Cochrane, J.; Bernstein, L.; Bunandar, D.; Dixon, P.B.; Hamilton, S.A.; Streshinsky, M.; et al. Delocalized Photonic Deep Learning on the Internet’s Edge. Science 2022, 378, 270–276. Available online: https://www.science.org/doi/10.1126/science.abq8271 (accessed on 24 October 2023). [CrossRef]

- Zuo, Y.; Li, B.; Zhao, Y.; Jiang, Y.; Chen, Y.-C.; Chen, P.; Jo, G.-B.; Liu, J.; Du, S. All-optical neural network with nonlinear activation functions. Optica 2019, 6, 1132–1137. [Google Scholar] [CrossRef]

- Bonifacio, R.; Lugiato, L.A.; Gronchi, M. Theory of Optical Bistability; Springer: Berlin/Heidelberg, Germany, 1979; Available online: https://link.springer.com/chapter/10.1007/978-3-540-38950-7_45 (accessed on 24 October 2023).

- Zhang, P.; Chung, T.F.; Li, Q.; Wang, S.; Wang, Q.; Huey, W.L.; Yang, S.; Goldberger, J.E.; Yao, J.; Zhang, X. All-Optical Switching of Magnetization in Atomically Thin CrI3. Nat. Mater. 2022, 21, 1373–1378. Available online: https://www.nature.com/articles/s41563-022-01354-7 (accessed on 24 October 2023). [CrossRef]

- Li, Q.; Liu, S.; Zhao, Y.; Wang, W.; Tian, Y.; Feng, J.; Guo, J. Optical Nonlinear Activation Functions Based on MZI-Structure for Optical Neural Networks. In Proceedings of the 2020 Asia Communications and Photonics Conference (ACP) and International Conference on Information Photonics and Optical Communications (IPOC), Beijing, China, 24–27 October 2020; Available online: https://ieeexplore.ieee.org/document/9365615 (accessed on 24 October 2023).

- Lin, Q.; Ma, J.; Yin, Z.; Yuan, P.; Wang, J.; Xie, G.; Qian, L. Optical modification of nonlinear crystals for quasi-parametric chirped-pulse amplification. Fundam. Res. 2022. [Google Scholar] [CrossRef]

- All Optical Nonlinear Activation Function Based on Ge/Si Hybrid Micro-Ring Resonator. Available online: https://opg.optica.org/abstract.cfm?uri=ACPC-2021-M5I.6 (accessed on 24 October 2023).

- Feldmann, J.; Youngblood, N.; Karpov, M.; Gehring, H.; Li, X.; Stappers, M.; Le Gallo, M.; Fu, X.; Lukashchuk, A.; Raja, A.S.; et al. Parallel Convolutional Processing Using an Integrated Photonic Tensor Core. Nature 2021, 598, 52–58. Available online: https://www.nature.com/articles/s41586-020-03070-1 (accessed on 24 October 2023). [CrossRef]

- Porte, X.; Skalli, A.; Haghighi, N.; Reitzenstein, S.; Lott, J.A.; Brunner, D. A Complete, Parallel and Autonomous Photonic Neural Network in a Semiconductor Multimode Laser. J. Phys. Photonics 2021, 3, 024017. Available online: https://iopscience.iop.org/article/10.1088/2515-7647/abf6bd (accessed on 24 October 2023). [CrossRef]

- Totovic, A.R.; Dabos, G.; Passalis, N.; Tefas, A.; Pleros, N. Femtojoule per MAC Neuromorphic Photonics: An Energy and Technology Roadmap. IEEE J. Sel. Top. Quantum Electron. 2020, 26, 1–15. [Google Scholar] [CrossRef]

- Nahmias, M.A.; de Lima, T.F.; Tait, A.N.; Peng, H.-T.; Shastri, B.J.; Prucnal, P.R. Photonic Multiply-Accumulate Operations for Neural Networks. IEEE J. Sel. Top. Quantum Electron. 2019, 26, 1–18. [Google Scholar] [CrossRef]

- Pai, S.; Williamson, I.A.; Hughes, T.W.; Minkov, M.; Solgaard, O.; Fan, S.; Miller, D.A. Parallel Programming of an Arbitrary Feedforward Photonic Network. IEEE J. Mag. 2020, 26, 6100813. Available online: https://ieeexplore.ieee.org/document/9103211 (accessed on 24 October 2023). [CrossRef]

- Lu, T.; Wu, S.; Xu, X.; Yu, F.T.S. Two-dimensional programmable optical neural network. Appl. Opt. 1989, 28, 4908–4913. [Google Scholar] [CrossRef] [PubMed]

- Huang, C.; Jha, A.; De Lima, T.F.; Tait, A.N.; Shastri, B.J.; Prucnal, P.R. On-Chip Programmable Nonlinear Optical Signal Processor and Its Applications. IEEE J. Mag. 2020, 27, 6100211. Available online: https://ieeexplore.ieee.org/document/9104002 (accessed on 24 October 2023). [CrossRef]

- Xu, X.; Tan, M.; Corcoran, B.; Wu, J.; Boes, A.; Nguyen, T.G.; Chu, S.T.; Little, B.E.; Hicks, D.G.; Morandotti, R.; et al. 11 TOPS Photonic Convolutional Accelerator for Optical Neural Networks. Nature 2021, 598, 44–51. Available online: https://www.nature.com/articles/s41586-020-03063-0 (accessed on 24 October 2023). [CrossRef] [PubMed]

- Totovic, A.; Giamougiannis, G.; Tsakyridis, A.; Lazovsky, D.; Pleros, N. Programmable photonic neural networks combining WDM with coherent linear optics. Sci. Rep. 2022, 12, 5605. [Google Scholar] [CrossRef] [PubMed]

- Mourgias-Alexandris, G.; Totovic, A.; Tsakyridis, A.; Passalis, N.; Vyrsokinos, K.; Tefas, A.; Pleros, N. Neuromorphic Photonics With Coherent Linear Neurons Using Dual-IQ Modulation Cells. J. Light. Technol. 2020, 38, 811–819. [Google Scholar] [CrossRef]

- Giamougiannis, G.; Tsakyridis, A.; Mourgias-Alexandris, G.; Moralis-Pegios, M.; Totovic, A.; Dabos, G.; Passalis, N.; Kirtas, M.; Bamiedakis, N.; Tefas, A.; et al. Silicon-integrated coherent neurons with 32GMAC/sec/axon compute line-rates using EAM-based input and weighting cells. In Proceedings of the 2021 European Conference on Optical Communication (ECOC), Bordeaux, France, 13–16 September 2021; IEEE: Piscataway Township, NJ, USA, 2021; pp. 1–4. [Google Scholar] [CrossRef]

- Reck, M.; Zeilinger, A.; Bernstein, H.J.; Bertani, P. Experimental realization of any discrete unitary operator. Phys. Rev. Lett. 1994, 73, 58–61. [Google Scholar] [CrossRef]

- Optimal Design for Universal Multiport Interferometers. Available online: https://opg.optica.org/optica/fulltext.cfm?uri=optica-3-12-1460&id=355743 (accessed on 24 October 2023).

- Fard, M.M.P.; Williamson, I.A.; Edwards, M.; Liu, K.; Pai, S.; Bartlett, B.; Minkov, M.; Hughes, T.W.; Fan, S.; Nguyen, T.A. Experimental Realization of Arbitrary Activation Functions for Optical Neural Networks. Opt. Express 2020, 28, 12138–12148. Available online: https://opg.optica.org/oe/fulltext.cfm?uri=oe-28-8-12138&id=429881 (accessed on 24 October 2023). [CrossRef]

- Liang, G.; Huang, H.; Mohanty, A.; Shin, M.C.; Ji, X.; Carter, M.J.; Shrestha, S.; Lipson, M.; Yu, N. Robust, Efficient, Micrometre-Scale Phase Modulators at Visible Wavelengths. Nat. Photonics 2021, 15, 908–913. Available online: https://www.nature.com/articles/s41566-021-00891-y (accessed on 24 October 2023). [CrossRef]

- Tait, A.N.; Wu, A.X.; De Lima, T.F.; Zhou, E.; Shastri, B.J.; Nahmias, M.A.; Prucnal, P.R. Microring Weight Banks. IEEE J. Mag. 2016, 22, 312–325. Available online: https://ieeexplore.ieee.org/document/7479545/ (accessed on 24 October 2023). [CrossRef]

- Miscuglio, M.; Sorger, V.J. Photonic Tensor Cores for Machine Learning. Appl. Phys. Rev. 2020, 7, 031404. Available online: https://pubs.aip.org/aip/apr/article/7/3/031404/998338/Photonic-tensor-cores-for-machine-learning (accessed on 24 October 2023). [CrossRef]

- Wang, J.; Rodrigues, S.P.; Dede, E.M.; Fan, S. Microring-based programmable coherent optical neural networks. Opt. Express 2023, 31, 18871. [Google Scholar] [CrossRef]

- Sato, T.; Enokihara, A. Ultrasmall design of a universal linear circuit based on microring resonators. Opt. Express 2019, 27, 33005–33010. [Google Scholar] [CrossRef] [PubMed]

- Deng, L. The MNIST Database of Handwritten Digit Images for Machine Learning Research [Best of the Web]. IEEE J. Mag. 2012, 18, 141–142. Available online: https://ieeexplore.ieee.org/document/6296535 (accessed on 24 October 2023). [CrossRef]

- Shastri, B.J.; Tait, A.N.; de Lima, T.F.; Pernice, W.H.; Bhaskaran, H.; Wright, C.D.; Prucnal, P.R. Photonics for Artificial Intelligence and Neuromorphic Computing. Nat. Photonics 2021, 15, 102–114. Available online: https://www.nature.com/articles/s41566-020-00754-y (accessed on 21 October 2023). [CrossRef]

- Argyris, A. Photonic Neuromorphic Technologies in Optical Communications. Nanophotonics 2022, 11, 897–916. Available online: https://www.degruyter.com/document/doi/10.1515/nanoph-2021-0578/html (accessed on 21 October 2023). [CrossRef]

- Li, T.; Li, Y.; Wang, Y.; Liu, Y.; Liu, Y.; Wang, Z.; Miao, R.; Han, D.; Hui, Z.; Li, W. Neuromorphic Photonics Based on Phase Change Materials. Nanomaterials 2023, 13, 1756. [Google Scholar] [CrossRef]

- Robertson, J.; Kirkland, P.; Alanis, J.A.; Hejda, M.; Bueno, J.; Di Caterina, G.; Hurtado, A. Ultrafast neuromorphic photonic image processing with a VCSEL neuron. Sci. Rep. 2022, 12, 4874. [Google Scholar] [CrossRef]

- Moore’s Law, Intel. Available online: https://www.intel.com/content/www/us/en/newsroom/resources/moores-law.html (accessed on 14 October 2023).

- Bohr, M. A 30 Year Retrospective on Dennard’s MOSFET Scaling Paper. IEEE Solid-State Circuits Soc. Newsl. 2007, 12, 11–13.

- Mead, C. How We Created Neuromorphic Engineering. Nat. Electron. 2020, 3, 434–435. Available online: https://www.nature.com/articles/s41928-020-0448-2 (accessed on 22 October 2023).

- Mead, C. Neuromorphic electronic systems. Proc. IEEE 1990, 78, 1629–1636.

- Indiveri, G. Neuromorphic analog VLSI sensor for visual tracking: Circuits and application examples. IEEE Trans. Circuits Syst. II Analog. Digit. Signal Process. 1999, 46, 1337–1347.

- Gutierrez-Galan, D.; Dominguez-Morales, J.P.; Perez-Peña, F.; Jimenez-Fernandez, A.; Linares-Barranco, A. Neuropod: A real-time neuromorphic spiking CPG applied to robotics. Neurocomputing 2019, 381, 10–19.

- Zhao, J.; Risi, N.; Monforte, M.; Bartolozzi, C.; Indiveri, G.; Donati, E. Closed-Loop Spiking Control on a Neuromorphic Processor Implemented on the iCub. IEEE J. Emerg. Sel. Top. Circuits Syst. 2020, 10, 546–556.

- Klos, C.; Kossio, Y.F.K.; Goedeke, S.; Gilra, A.; Memmesheimer, R.-M. Dynamical Learning of Dynamics. Phys. Rev. Lett. 2020, 125, 088103.

- Illing, B.; Gerstner, W.; Brea, J. Biologically plausible deep learning—But how far can we go with shallow networks? Neural Netw. 2019, 118, 90–101.

- Kaiser, J.; Mostafa, H.; Neftci, E. Synaptic Plasticity Dynamics for Deep Continuous Local Learning (DECOLLE). Front. Neurosci. 2020, 14, 424.

- Christensen, D.V.; Dittmann, R.; Linares-Barranco, B.; Sebastian, A.; Le Gallo, M.; Redaelli, A.; Slesazeck, S.; Mikolajick, T.; Spiga, S.; Menzel, S.; et al. 2022 roadmap on neuromorphic computing and engineering. Neuromorphic Comput. Eng. 2022, 2, 022501.

- Enoka, R.M. Morphological Features and Activation Patterns of Motor Units. J. Clin. Neurophysiol. 1995, 12, 538–559.

- Rodriguez-Tapia, B.; Soto, I.; Martinez, D.M.; Arballo, N.C. Myoelectric Interfaces and Related Applications: Current State of EMG Signal Processing–A Systematic Review. IEEE Access 2020, 8, 7792–7805.

- Park, K.-H.; Lee, S.-W. Movement intention decoding based on deep learning for multiuser myoelectric interfaces. In Proceedings of the 2016 4th International Winter Conference on Brain-Computer Interface (BCI), Gangwon, Republic of Korea, 22–24 February 2016; pp. 1–2.

- Del Vecchio, A.; Germer, C.M.; Elias, L.A.; Fu, Q.; Fine, J.; Santello, M.; Farina, D. The Human Central Nervous System Transmits Common Synaptic Inputs to Distinct Motor Neuron Pools during Non-synergistic Digit Actions. J. Physiol. 2019, 597, 5935–5948. Available online: https://physoc.onlinelibrary.wiley.com/doi/full/10.1113/JP278623 (accessed on 22 October 2023).

- Han, X.; Zhao, X.; Zeng, T.; Yang, Y.; Yu, H.; Zhang, C.; Wang, B.; Liu, X.; Zhang, T.; Sun, J.; et al. Multimodal-Synergistic-Modulation Neuromorphic Imaging Systems for Simulating Dry Eye Imaging. Small 2023, 19, e2206181.

- Zhang, C.; Xu, F.; Zhao, X.; Zhang, M.; Han, W.; Yu, H.; Wang, S.; Yang, Y.; Tong, Y.; Tang, Q.; et al. Natural polyelectrolyte-based ultraflexible photoelectric synaptic transistors for hemispherical high-sensitive neuromorphic imaging system. Nano Energy 2022, 95, 107001.

- Choi, C.; Leem, J.; Kim, M.S.; Taqieddin, A.; Cho, C.; Cho, K.W.; Lee, G.J.; Seung, H.; Bae, H.J.; Song, Y.M.; et al. Curved neuromorphic image sensor array using a MoS2-organic heterostructure inspired by the human visual recognition system. Nat. Commun. 2020, 11, 5934.

- Mangalwedhekar, R.; Singh, N.; Thakur, C.S.; Seelamantula, C.S.; Jose, M.; Nair, D. Achieving nanoscale precision using neuromorphic localization microscopy. Nat. Nanotechnol. 2023, 18, 380–389.

- Mueggler, E.; Bartolozzi, C.; Scaramuzza, D. Fast Event-based Corner Detection. In Proceedings of the British Machine Vision Conference, London, UK, 4–7 September 2017; British Machine Vision Association: Durham, UK, 2017; p. 33.

- Datondji, S.R.E.; Dupuis, Y.; Subirats, P.; Vasseur, P. A Survey of Vision-Based Traffic Monitoring of Road Intersections. IEEE Trans. Intell. Transp. Syst. 2016, 17, 2681–2698. Available online: https://ieeexplore.ieee.org/document/7458203 (accessed on 23 October 2023).

- Rubio, J.C.; Serrat, J.; Lopez, A.M.; Ponsa, D. Multiple-Target Tracking for Intelligent Headlights Control. IEEE Trans. Intell. Transp. Syst. 2012, 13, 594–605.

- Zhang, X.; Hu, S.; Zhang, H.; Hu, X. A Real-Time Multiple Vehicle Tracking Method for Traffic Congestion Identification. KSII Trans. Internet Inf. Syst. 2016, 10. Available online: https://itiis.org/digital-library/21122 (accessed on 23 October 2023).

- Sivaraman, S.; Trivedi, M.M. Looking at Vehicles on the Road: A Survey of Vision-Based Vehicle Detection, Tracking, and Behavior Analysis. IEEE Trans. Intell. Transp. Syst. 2013, 14, 1773–1795.

- Cucchiara, R.; Piccardi, M.; Mello, P. Image analysis and rule-based reasoning for a traffic monitoring system. IEEE Trans. Intell. Transp. Syst. 2000, 1, 119–130.

- Huang, M.-C.; Yen, S.-H. A real-time and color-based computer vision for traffic monitoring system. In Proceedings of the 2004 IEEE International Conference on Multimedia and Expo (ICME) (IEEE Cat. No.04TH8763), Taipei, Taiwan, 27–30 June 2004; Volume 3, pp. 2119–2122.

- Lin, S.-P.; Chen, Y.-H.; Wu, B.-F. A Real-Time Multiple-Vehicle Detection and Tracking System with Prior Occlusion Detection and Resolution, and Prior Queue Detection and Resolution. In Proceedings of the 18th International Conference on Pattern Recognition (ICPR’06), Hong Kong, China, 20–24 August 2006; pp. 828–831.

- Betke, M.; Haritaoglu, E.; Davis, L.S. Real-time multiple vehicle detection and tracking from a moving vehicle. Mach. Vis. Appl. 2000, 12, 69–83.

- Aytekin, B.; Altuğ, E. Increasing Driving Safety with a Multiple Vehicle Detection and Tracking System Using Ongoing Vehicle Shadow Information. In Proceedings of the 2010 IEEE International Conference on Systems, Man and Cybernetics, Istanbul, Turkey, 10–13 October 2010; Available online: https://ieeexplore.ieee.org/abstract/document/5641879 (accessed on 23 October 2023).

- Geiger, A.; Lenz, P.; Urtasun, R. Are We Ready for Autonomous Driving? The Kitti Vision Benchmark Suite. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; Available online: https://ieeexplore.ieee.org/document/6248074 (accessed on 23 October 2023).

- Wen, L.; Du, D.; Cai, Z.; Lei, Z.; Chang, M.C.; Qi, H.; Lim, J.; Yang, M.H.; Lyu, S. UA-DETRAC: A new benchmark and protocol for multi-object detection and tracking. Comput. Vis. Image Underst. 2020, 193, 102907.

- Blum, H.; Dietmüller, A.; Milde, M.; Conradt, J.; Indiveri, G.; Sandamirskaya, Y. A neuromorphic controller for a robotic vehicle equipped with a dynamic vision sensor. In Robotics: Science and Systems XIII; Robotics Science and Systems Foundation: Cambridge, UK, 2017.

- Litzenberger, M.; Kohn, B.; Belbachir, A.; Donath, N.; Gritsch, G.; Garn, H.; Posch, C.; Schraml, S. Estimation of Vehicle Speed Based on Asynchronous Data from a Silicon Retina Optical Sensor. In Proceedings of the 2006 IEEE Intelligent Transportation Systems Conference, Toronto, ON, Canada, 17–20 September 2006; pp. 653–658.

- Hu, Y.; Liu, H.; Pfeiffer, M.; Delbruck, T. DVS Benchmark Datasets for Object Tracking, Action Recognition, and Object Recognition. Front. Neurosci. 2016, 10, 405.

- Tedaldi, D.; Gallego, G.; Mueggler, E.; Scaramuzza, D. Feature detection and tracking with the dynamic and active-pixel vision sensor (DAVIS). In Proceedings of the 2016 Second International Conference on Event-Based Control, Communication, and Signal Processing (EBCCSP), Krakow, Poland, 13–15 June 2016; pp. 1–7.

- Ni, Z.; Pacoret, C.; Benosman, R.; Ieng, S.; Régnier, S. Asynchronous event-based high speed vision for microparticle tracking. J. Microsc. 2012, 245, 236–244.

- Chen, G.; Cao, H.; Aafaque, M.; Chen, J.; Ye, C.; Röhrbein, F.; Conradt, J.; Chen, K.; Bing, Z.; Liu, X.; et al. Neuromorphic Vision Based Multivehicle Detection and Tracking for Intelligent Transportation System. J. Adv. Transp. 2018, 2018, e4815383.

- Hinz, G.; Chen, G.; Aafaque, M.; Röhrbein, F.; Conradt, J.; Bing, Z.; Qu, Z.; Stechele, W.; Knoll, A. Online Multi-object Tracking-by-Clustering for Intelligent Transportation System with Neuromorphic Vision Sensor. In Proceedings of the KI 2017: Advances in Artificial Intelligence, Dortmund, Germany, 25–29 September 2017; Kern-Isberner, G., Fürnkranz, J., Thimm, M., Eds.; Lecture Notes in Computer Science. Springer International Publishing: Cham, Switzerland, 2017; pp. 142–154.

- Luan, E.; Yu, S.; Salmani, M.; Nezami, M.S.; Shastri, B.J.; Chrostowski, L.; Eshaghi, A. Towards a high-density photonic tensor core enabled by intensity-modulated microrings and photonic wire bonding. Sci. Rep. 2023, 13, 1260.

- Moralis-Pegios, M.; Mourgias-Alexandris, G.; Tsakyridis, A.; Giamougiannis, G.; Totovic, A.R.; Dabos, G.; Passalis, N.; Kirtas, M.; Rutirawut, T.; Gardes, F.Y.; et al. Neuromorphic Silicon Photonics and Hardware-Aware Deep Learning for High-Speed Inference. J. Light. Technol. 2022, 40, 3243–3254.

- Totovic, A.; Pappas, C.; Kirtas, M.; Tsakyridis, A.; Giamougiannis, G.; Passalis, N.; Moralis-Pegios, M.; Tefas, A.; Pleros, N. WDM equipped universal linear optics for programmable neuromorphic photonic processors. Neuromorphic Comput. Eng. 2022, 2, 024010.

- Ashtiani, F.; Geers, A.J.; Aflatouni, F. An on-chip photonic deep neural network for image classification. Nature 2022, 606, 501–506.

- Zhang, Y.; Robertson, J.; Xiang, S.; Hejda, M.; Bueno, J.; Hurtado, A. All-optical neuromorphic binary convolution with a spiking VCSEL neuron for image gradient magnitudes. Photon. Res. 2021, 9, B201–B209.

- Wang, S.; Chen, C.; Yu, Z.; He, Y.; Chen, X.; Wan, Q.; Shi, Y.; Zhang, D.W.; Zhou, H.; Wang, X.; et al. A MoS2/PTCDA Hybrid Heterojunction Synapse with Efficient Photoelectric Dual Modulation and Versatility. Adv. Mater. 2019, 31, e1806227.

- Guo, B.; Xiao, Q.; Wang, S.; Zhang, H. 2D Layered Materials: Synthesis, Nonlinear Optical Properties, and Device Applications. Laser Photon. Rev. 2019, 13, 1800327.

- Li, H.; Jiang, X.; Ye, W.; Zhang, H.; Zhou, L.; Zhang, F.; She, D.; Zhou, Y.; Han, S.-T. Fully photon modulated heterostructure for neuromorphic computing. Nano Energy 2019, 65, 104000.

- Cho, S.W.; Kwon, S.M.; Lee, M.; Jo, J.-W.; Heo, J.S.; Kim, Y.-H.; Cho, H.K.; Park, S.K. Multi-spectral gate-triggered heterogeneous photonic neuro-transistors for power-efficient brain-inspired neuromorphic computing. Nano Energy 2019, 66, 104097.

- Wang, Y.; Yin, L.; Huang, W.; Li, Y.; Huang, S.; Zhu, Y.; Yang, D.; Pi, X. Optoelectronic Synaptic Devices for Neuromorphic Computing. Adv. Intell. Syst. 2020, 3, 2000099.

- Zhang, J.; Dai, S.; Zhao, Y.; Zhang, J.; Huang, J. Recent Progress in Photonic Synapses for Neuromorphic Systems. Adv. Intell. Syst. 2020, 2, 1900136.

- Gentili, P.L.; Giubila, M.S.; Germani, R.; Romani, A.; Nicoziani, A.; Spalletti, A.; Heron, B.M. Optical Communication among Oscillatory Reactions and Photo-Excitable Systems: UV and Visible Radiation Can Synchronize Artificial Neuron Models. Angew. Chem. Int. Ed. 2017, 56, 7535–7540.

- Gentili, P.L. Photochromic and luminescent materials for the development of Chemical Artificial Intelligence. Dye. Pigment. 2022, 205, 110547.

- Proskurkin, I.S.; Smelov, P.S.; Vanag, V.K. Experimental verification of an opto-chemical “neurocomputer”. Phys. Chem. Chem. Phys. 2020, 22, 19359–19367.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kutluyarov, R.V.; Zakoyan, A.G.; Voronkov, G.S.; Grakhova, E.P.; Butt, M.A. Neuromorphic Photonics Circuits: Contemporary Review. Nanomaterials 2023, 13, 3139. https://doi.org/10.3390/nano13243139