4.2.1. Model Fit Testing

Selecting an appropriate cognitive diagnostic model is a prerequisite to accurately assessing students’ cognitive states. As highlighted by Tatsuoka (1983) and Templin and Henson (2010), cognitive diagnostic models are a class of statistical models that integrate cognitive variables to link test performance with mastery of underlying cognitive attributes. Model selection must consider theoretical assumptions and algorithmic characteristics to determine suitability for specific research contexts.

However, cognitive diagnostic models are numerous and relatively complex, making it difficult to thoroughly analyze the principles of each model and manually select the optimal one. Therefore, in practice, researchers often adopt a data-driven approach. This method analyzes and compares actual data to select the model from existing ones that provides the best fit and most accurately reflects the characteristics of the data. To obtain a model with better fit, this study utilizes the CDM and GDINA R packages to evaluate the parameters of several commonly used cognitive diagnostic models. These models include DINA (Deterministic Input, Noisy “And” Gate), DINO (Deterministic Input, Noisy “Or” Gate), RRUM (Reduced Reparameterized Unified Model), ACDM (Additive Cognitive Diagnostic Model), LCDM (Log-linear Cognitive Diagnostic Model), LLM (Linear Logistic Model), G-DINA (Generalized DINA), and Mixed Model. By comparing the parameters of these models (as shown in Table 4), the goal is to identify the cognitive diagnostic model that best fits the current high school English reading comprehension test data, thereby providing the most reliable foundation for subsequent cognitive diagnosis.

In cognitive diagnostic assessments, selecting the model that best represents the data is of paramount importance. Model fit statistics are generally divided into two levels: absolute fit statistics and relative fit statistics. The former evaluates the degree to which the model aligns with the observed data, serving as a prerequisite for all subsequent analyses by ensuring that the model can adequately explain the data in an absolute sense. The latter adopts a comparative perspective to identify the optimal model among a set of candidate models.

In this study, the absolute fit was assessed using the Root Mean Square Error of Approximation (RMSEA), a widely applied fit index in structural equation modeling and cognitive diagnostic modeling. RMSEA adjusts for model complexity by incorporating degrees of freedom and reflects how well the model reproduces the population covariance structure. According to the criteria proposed by Browne and Cudeck (1992), an RMSEA value below 0.05 indicates excellent fit; values between 0.05 and 0.08 indicate acceptable fit; and values above 0.10 suggest poor fit. Based on the results presented in Table 4, it can be concluded that all evaluated cognitive models demonstrate satisfactory absolute fit, thus providing a sound basis for subsequent model comparisons.

The process of model comparison is essentially a relative fit evaluation aiming to select the best model from among those demonstrating good absolute fit. During this process, the Akaike Information Criterion (AIC) and the Bayesian Information Criterion (BIC) are primarily considered, alongside the total number of estimated parameters and the deviance statistic. The number of parameters reflects model complexity: models with more parameters are more flexible but may overfit the training data and perform poorly on new data. Therefore, under similar fit quality, models with fewer parameters are preferred, consistent with Occam’s Razor principle, which advocates for parsimony (Jefferys and Berger 1992).

In selecting cognitive diagnostic models, AIC and BIC are the primary criteria. As noted by Vrieze (2012), models with lower AIC and BIC values demonstrate better fit because these metrics simultaneously consider goodness-of-fit and penalize complexity, thereby mitigating overfitting. Results from Table 4 clearly show that the Mixed Model yields the smallest AIC, BIC, and deviance values among the candidate models (highlighted in red). This strongly supports the superior fit of the Mixed Model relative to other models. Consequently, the Mixed Model was adopted for further analyses to ensure accurate diagnostic outcomes and robust modeling.
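To make the relative fit comparison concrete, the following minimal Python sketch computes AIC and BIC from a deviance (−2 log-likelihood) and a parameter count, then picks the model with the smallest value. The analyses in this study were run with the CDM and GDINA R packages; this sketch only illustrates the arithmetic behind the two criteria. The function name, deviances, parameter counts, and sample size are hypothetical and are not taken from Table 4.

```python
import math

def information_criteria(deviance, n_params, n_students):
    """Return (AIC, BIC) given the deviance (-2 log-likelihood)."""
    aic = deviance + 2 * n_params                      # penalty: 2 per parameter
    bic = deviance + n_params * math.log(n_students)   # penalty grows with sample size
    return aic, bic

# Hypothetical (deviance, number of parameters) pairs, for illustration only.
candidates = {
    "DINA": (1200.0, 40),
    "G-DINA": (940.0, 90),
    "Mixed": (945.0, 60),
}
n_students = 500  # hypothetical sample size

table = {name: information_criteria(dev, k, n_students)
         for name, (dev, k) in candidates.items()}

best_by_aic = min(table, key=lambda name: table[name][0])
best_by_bic = min(table, key=lambda name: table[name][1])
print(best_by_aic, best_by_bic)
```

Because BIC multiplies the parameter count by the log of the sample size, it penalizes complex models more heavily than AIC whenever the sample has more than about seven respondents, which is why the two criteria are usually reported together.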

4.2.2. Model Selection for Multi-Attribute Items

In the field of cognitive diagnosis, striking a balance between model fit and parsimony is critical. To this end, De La Torre and Lee (2013) proposed a model selection method based on the Wald test, specifically designed to identify the most appropriate parsimonious model for multi-attribute items while preserving adequate model-data fit. Multi-attribute items refer to those that assess two or more cognitive attributes. Parsimonious models, characterized by fewer parameters and more intuitive interpretability, are essential for enhancing both the practicality and comprehensibility of diagnostic outcomes. This model selection approach is commonly referred to as the Mixed Model strategy.

The core idea of this approach is as follows: for items measuring only a single attribute, the saturated Generalized Deterministic Inputs, Noisy “And” gate (G-DINA) model is applied directly. In contrast, for items involving multiple attributes, a data-driven selection process is employed to determine the most suitable parsimonious model. In this study, each of the 14 multi-attribute items was evaluated individually with the Wald test, and the resulting item-level choices make up the final mixed model. The item-level selection outcomes are presented in Table 5. For example, for Item 11, the LLM was selected (p = 0.2442), while for Item 7, no parsimonious model fit as well as the saturated G-DINA model, so the G-DINA model was retained. This hybrid model combines the strengths of saturated and parsimonious models: a parsimonious model is adopted only when the Wald test indicates that it does not significantly worsen the model fit (p > 0.05). This approach balances model fit and interpretability, providing a robust foundation for subsequent diagnostic estimations.

In this study, the GDINA R package was used to construct the mixed model framework. The Wald test was then applied to perform model selection for multi-attribute items. The Wald test is a statistical hypothesis test used to determine whether a parameter significantly differs from zero, or to evaluate whether imposing constraints on a model leads to a statistically significant deterioration in fit (Agresti and Hitchcock 2005). The decision rules for model selection in the mixed framework are as follows:

Fit Difference Test: This is the first step. If a parsimonious model does not significantly differ from the G-DINA model in terms of fit (i.e., p > 0.05), the parsimonious model is considered acceptable, as it achieves a simpler structure without a meaningful loss in explanatory power.

Competitive Selection Among Parsimonious Models: When multiple parsimonious models pass the fit difference test, the one with the highest p-value is preferred. A higher p-value indicates that the constraints imposed by the model are most compatible with the data, thereby maximizing information retention while simplifying the model.

Parsimony Preference: In practical scenarios requiring more straightforward interpretability, the most parsimonious model (such as DINA or DINO) may be prioritized, even if multiple models are statistically acceptable. However, it is important to note that when three or more attributes are involved, sufficient sample size is necessary (typically n > 1000) to avoid inflated Type I errors. This highlights the crucial role of sample size in ensuring the reliability of statistical inference (Templin and Henson 2010).

Fallback to Saturated Model: If all parsimonious models are rejected by the Wald test, it indicates that none can simplify the G-DINA model without a significant loss in fit. In such cases, the saturated model is retained as the final choice to ensure that the model captures the complexity inherent in the data.

Following these rigorous rules, each multi-attribute item was evaluated individually, resulting in the final construction of the mixed model. The item-level selection outcomes are presented in Table 5. These results provide the foundation for generating precise diagnostic feedback and support the subsequent construction of learning trajectories.
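The four decision rules above (fit difference test, competitive selection, parsimony preference, and fallback to the saturated model) can be sketched as a small Python function. This is an illustrative reading of the rules, not the GDINA package's implementation. The function name and the `prefer` argument are hypothetical, and every p-value in the example is invented except the LLM value for Item 11 (p = 0.2442), which is quoted from the text.

```python
def select_item_model(wald_p_values, alpha=0.05, prefer=None):
    """Choose a model for one multi-attribute item.

    wald_p_values: dict mapping a reduced model name to the p-value of its
                   Wald test against the saturated G-DINA model.
    prefer: optional ordered list of models favoured for interpretability.
    """
    # Fit difference test: keep reduced models that are not rejected.
    acceptable = {m: p for m, p in wald_p_values.items() if p > alpha}

    # Fallback: if every reduced model is rejected, retain G-DINA.
    if not acceptable:
        return "G-DINA"

    # Parsimony preference: take the first preferred model that passed.
    for model in (prefer or []):
        if model in acceptable:
            return model

    # Competitive selection: otherwise take the largest p-value.
    return max(acceptable, key=acceptable.get)


# Item 11: only the LLM p-value comes from the text; the rest are hypothetical.
item_11 = {"DINA": 0.001, "DINO": 0.0004, "ACDM": 0.08, "LLM": 0.2442, "RRUM": 0.03}
print(select_item_model(item_11))

# A hypothetical item for which every reduced model is rejected.
all_rejected = {"DINA": 0.001, "DINO": 0.002, "ACDM": 0.01, "LLM": 0.03, "RRUM": 0.04}
print(select_item_model(all_rejected))
```

Keeping the parsimony preference as an optional argument reflects the text: it is a judgment made for interpretability, applied only among models that have already passed the fit difference test.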

4.2.3. Construction of Learning Pathways

The core objective of cognitive diagnostic assessment is to provide a fine-grained evaluation of each student’s mastery across various cognitive attributes based on their response data. Specifically, each attribute is assessed as either mastered (1) or not mastered (0), thereby shifting diagnostic emphasis from a single aggregate score to a multidimensional cognitive profile. Each student’s mastery status across attributes can thus be represented as a binary vector, typically denoted as $\boldsymbol{\alpha} = (\alpha_1, \alpha_2, \ldots, \alpha_m)$, where $m$ is the number of cognitive attributes and $\alpha_k \in \{0, 1\}$ indicates the mastery of attribute $k$. As Mislevy (1994) noted, this knowledge state vector is a key conceptual tool in cognitive diagnosis theory, enabling precise identification of individual learning needs and supporting the implementation of personalized instruction.

A student’s knowledge state is not determined by a simple count of correct answers but is a latent classification estimated by the cognitive diagnostic model. The model takes the student’s entire vector of item responses (the pattern of correct/incorrect answers across all 20 items) as input. Using a statistical estimation method (in this study, Maximum A Posteriori estimation), the model calculates the probability of the student belonging to each of the $2^7 = 128$ possible knowledge states. The student is then classified into the single knowledge state that has the highest posterior probability. This probabilistic approach provides a robust classification that considers the complex interplay between the student’s unique response pattern and the cognitive attributes required by each item as defined in the Q-matrix.
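The classification step can be illustrated with a small Python sketch that enumerates every knowledge state, computes its posterior probability for one response pattern, and returns the most probable state. For simplicity the sketch assumes a DINA item model with a common guessing and slipping parameter and a uniform prior; the study itself used the Mixed Model fitted in R, so the Q-matrix, parameter values, and function names below are all hypothetical.

```python
from itertools import product

def dina_prob_correct(alpha, q, guess, slip):
    """DINA: P(correct) is 1 - slip if all required attributes are mastered, else guess."""
    has_all = all(a >= r for a, r in zip(alpha, q))
    return 1.0 - slip if has_all else guess

def classify_map(responses, q_matrix, guess=0.2, slip=0.1, prior=None):
    """Return (most probable knowledge state, posterior over all states)."""
    n_attr = len(q_matrix[0])
    states = list(product((0, 1), repeat=n_attr))   # 2**n_attr knowledge states
    if prior is None:
        prior = {s: 1.0 / len(states) for s in states}  # uniform prior (assumption)

    unnormalized = {}
    for s in states:
        likelihood = 1.0
        for x, q in zip(responses, q_matrix):
            p = dina_prob_correct(s, q, guess, slip)
            likelihood *= p if x == 1 else 1.0 - p
        unnormalized[s] = prior[s] * likelihood

    total = sum(unnormalized.values())
    posterior = {s: v / total for s, v in unnormalized.items()}
    best = max(posterior, key=posterior.get)
    return best, posterior

# Hypothetical 4-item, 3-attribute Q-matrix and one response pattern.
q_matrix = [(1, 0, 0), (0, 1, 0), (0, 0, 1), (1, 1, 0)]
best, posterior = classify_map([1, 1, 0, 1], q_matrix)
print(best, round(posterior[best], 3))
```

With seven attributes the same enumeration covers all 128 states; only the Q-matrix and the item parameters change.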

The construction of learning pathways is grounded in a fundamental assumption: human cognition progresses in a sequential order from basic to advanced skills. That is, students are more likely to master foundational attributes before acquiring more complex ones. This hierarchical progression is reflected in the knowledge states, where the presence or absence of each attribute follows a developmental sequence. More specifically, the order among elements of the knowledge state vector $\boldsymbol{\alpha}$ implies a nested structure among different knowledge states. This concept is closely aligned with Piaget’s theory of cognitive development and Gagné’s hierarchy of learning, both of which emphasize the necessity of acquiring foundational knowledge before progressing to higher-order competencies (Gagné 1985; Piaget 1970).

The construction procedure includes the following steps:

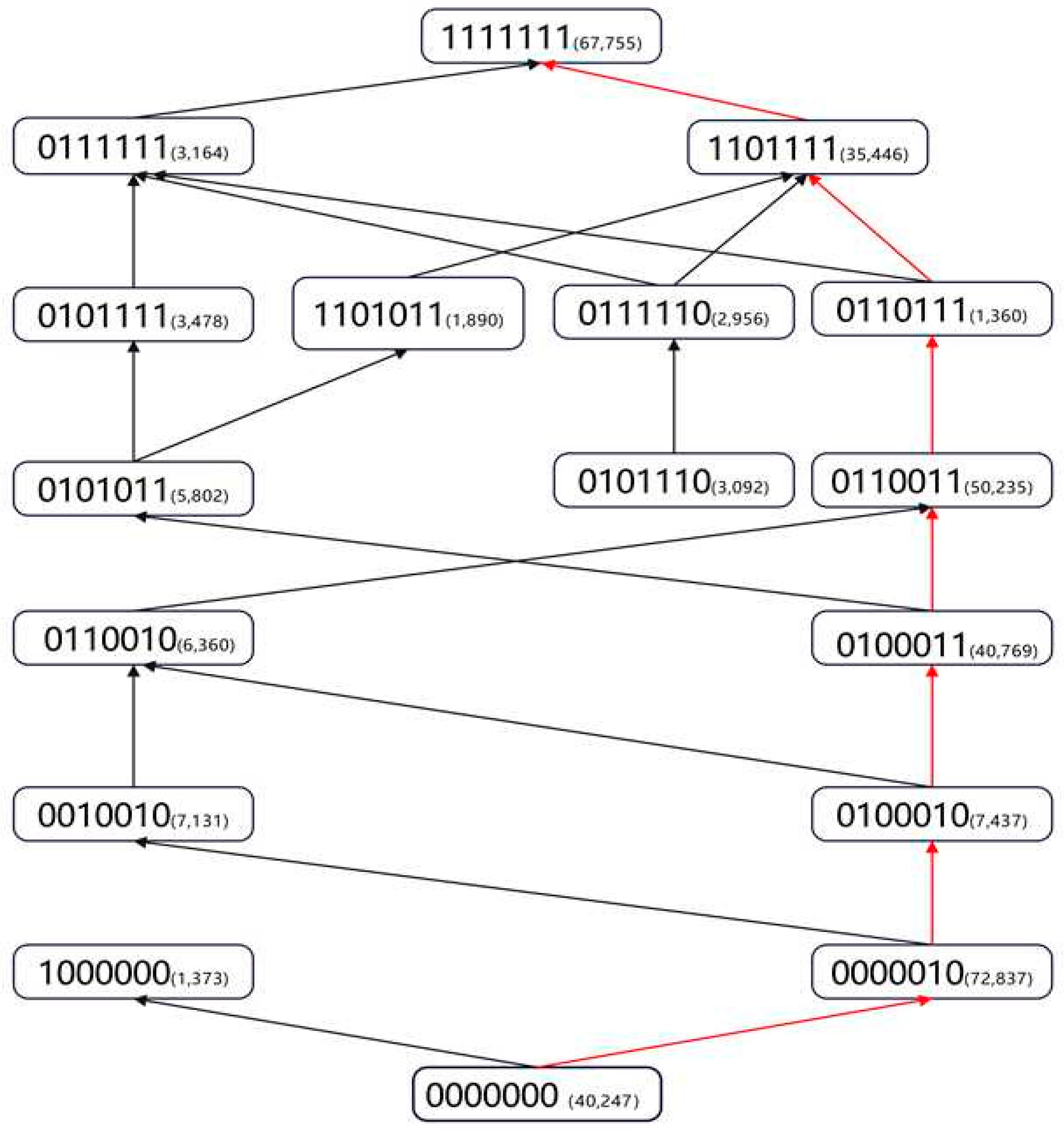

Knowledge State Stratification: First, students’ estimated knowledge states are categorized and stratified based on the number of mastered attributes. For example, a state with no attributes mastered (e.g., 0000000) is designated as Level 0; states with one mastered attribute (e.g., 1000000, 0000010) are Level 1, and so forth, resulting in eight levels from 0 to 7. This stratification provides a clear representation of students’ cognitive development stages.

Generation of Pathway Sequences: Given the complexity of learning trajectories, this study focuses on first-order transitions, that is, paths between adjacent levels that differ by the mastery of a single attribute. A path is established when one knowledge state is a subset of another. For instance, the state (0110011) is a subset of (0110111) because the latter includes all attributes of the former plus one additional attribute (the fifth). This subset-based identification mirrors the modeling logic of attribute hierarchy models and reveals the likely order in which students acquire knowledge (Leighton and Gierl 2007b). For example, in the domain of English reading comprehension among high school students, a representative learning pathway identified in this study is:

In theory, given seven attributes, there are $2^7 = 128$ possible knowledge states. However, only 79 unique states were observed in the current dataset. To enhance analytical clarity and focus on the most prevalent developmental patterns, the subsequent pathway construction was based on the 17 most frequent knowledge states. This subset has a high degree of sample representativeness, as these 17 states collectively account for 97% (n = 351,332) of the total student population (N = 361,967). The remaining 3% of students (n = 10,635), who were distributed across 62 less frequent knowledge states, were excluded from the pathway visualization to avoid visual clutter. By linking all valid transitions between these states, a comprehensive learning pathway map for English reading comprehension was constructed. Full details of the learning pathway structure are illustrated in Figure 1. It is crucial to interpret this map as a network of possible transitions rather than a single, fixed sequence for all learners. For example, the presence of both state (0000010) (mastery of A6 only) and state (1000000) (mastery of A1 only) at Level 1 indicates that these are two distinct, alternative starting points for learners progressing from the novice state (0000000). The diagram does not imply a direct, illogical transition between these two Level 1 states where one skill is ‘lost’ and another is ‘gained.’ Rather, they represent parallel branches in the overall learning network, demonstrating that different students may begin their skill acquisition journey by mastering different foundational attributes.

Identifying the Dominant Learning Path: To better inform instructional practices, this study defines the most frequently observed learning path among students as the dominant learning trajectory. As illustrated by the red-marked sequence in the diagram, (0000000) → (0000010) → (0100010) → (0100011) → (0110011) → (0110111) → (0111111) → (1111111), this dominant path is the most common trajectory found within the full sample. Specifically, a key divergence in learning occurs after the mastery of A7, where students tend to master either A3 or A4 next. Our analysis of the full dataset revealed that the path segment involving the mastery of A3 before A4, (0110011) → (0110111) → (0111111), was followed by 54,759 students. In contrast, the alternative path segment involving the mastery of A4 before A3, (0101011) → (0101111) → (1101111), was followed by 44,726 students. Because the former path is more populous, it was identified as the dominant trajectory. These findings offer clear pedagogical implications for educators and textbook developers by aligning instruction with the natural progression of students’ cognitive development.
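The stratification and first-order transition rules described in the steps above can be sketched in a few lines of Python. Knowledge states are written as seven-character strings, a state's level is its count of mastered attributes, and an edge is drawn when the upper state contains every attribute of the lower state plus exactly one more. The function names are hypothetical, and the example uses only the states quoted in the text, not the full set of 17 frequent states.

```python
def level(state):
    """Level of a knowledge state = number of mastered attributes."""
    return state.count("1")

def is_first_order_transition(lower, upper):
    """True if `upper` keeps every attribute of `lower` and adds exactly one."""
    is_subset = all(u == "1" for l, u in zip(lower, upper) if l == "1")
    return is_subset and level(upper) - level(lower) == 1

def build_pathway_edges(states):
    """All valid first-order transitions among the given states."""
    return [(a, b) for a in states for b in states
            if is_first_order_transition(a, b)]

# States named in the text: the dominant path plus the alternative Level 1 state.
states = ["0000000", "0000010", "1000000", "0100010", "0100011",
          "0110011", "0110111", "0111111", "1111111"]

for lower, upper in build_pathway_edges(states):
    print(f"Level {level(lower)} ({lower}) -> Level {level(upper)} ({upper})")
```

Note that no edge connects (1000000) and (0000010): both sit at Level 1, so neither is a subset of the other, which matches the interpretation of the two states as parallel starting points.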

It is important to note that the path diagram does not cover the entire student population. Several factors may explain why some students fall outside it. First, data noise may have distorted the excluded students’ knowledge state estimates, potentially due to inattention, guessing, or other irregularities during the assessment. Second, in the absence of data contamination, it is possible that these students followed highly idiosyncratic learning trajectories. Since this study considers only first-order transitions (i.e., adjacent layers differing by one attribute), the framework may have overlooked more nonlinear or nonsequential learning patterns. For instance, certain students may exhibit “jumping learning,” skipping intermediate attributes and acquiring more advanced ones directly, likely due to alternative learning experiences or individual aptitude.

4.2.4. Constructing the Learning Progression

While the learning path depicts the micro-level cognitive sequence based on the inclusion relations among individual knowledge states, the learning progression offers a macro-level categorization of student learning levels. By stratifying learners into hierarchical stages, the learning progression supports differentiated instruction across academic levels. To achieve this, we bridge the multidimensional, categorical output of the CDM (the knowledge states) with a unidimensional, continuous scale using Item Response Theory (IRT). It is critical to clarify the rationale for this step. The purpose of applying a unidimensional IRT model is not to argue that reading comprehension is a single latent trait, which would contradict the multidimensional premise of CDM. Rather, it is a pragmatic step to create a macro-level summary of overall proficiency. This allows us to project the complex, multi-pathway cognitive structure onto a single, interpretable scale that can be aligned with existing educational frameworks, such as the national curriculum standards, which are typically presented as a single, hierarchical progression. The construction procedure includes the following steps:

Integration of Idiosyncratic Knowledge States: The top 17 knowledge states covered 97% of students (n = 351,332). The remaining 3% (n = 10,635) exhibited 62 low-frequency states. To incorporate these into the progression framework, K-means clustering assigned each of these 10,635 students to existing clusters defined by the dominant 17 states. This ensured full sample inclusion while prioritizing analytical clarity over cluster validation (Chen et al. 2017; Wu et al. 2020, 2021, 2022a, 2022b). Final progression tiers derived their validity from IRT ability estimates and alignment with national curriculum standards.

Ability Estimation: After clustering, the study estimates students’ abilities using the two-parameter logistic (2PL) model within the Item Response Theory (IRT) framework, implemented via the mirt R package. The 2PL model, known for its balance between complexity, stability, and interpretability, provides estimates for both item difficulty and discrimination parameters, offering a precise assessment of student ability (Hambleton et al. 1991; De Ayala 2013). The average θ value for students in each knowledge state is then used to represent the ability level associated with that state.

According to

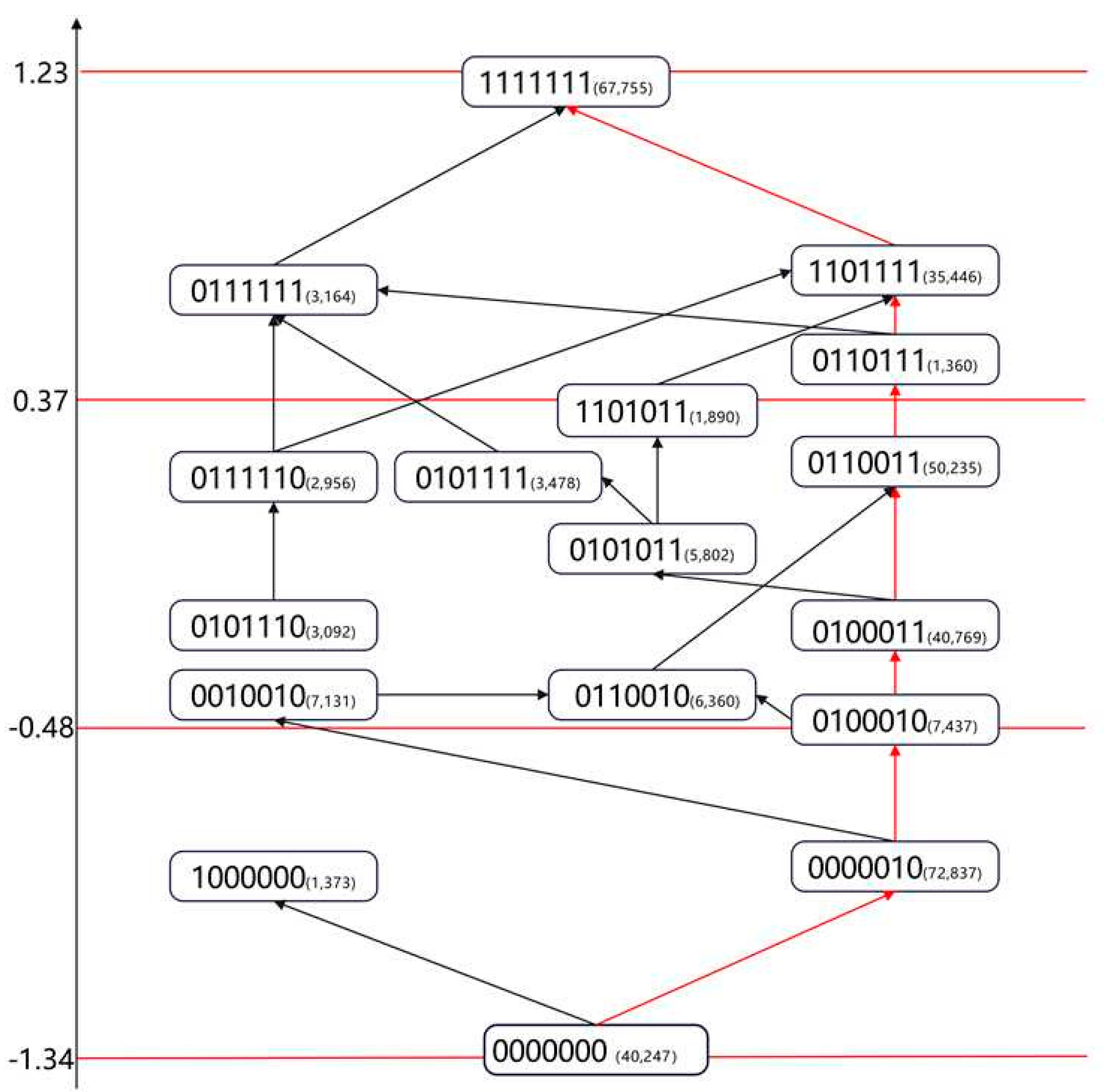

Table 6, the lowest average ability score (−1.34) corresponds to the knowledge state (0000000), while the highest score (1.23) belongs to students who mastered all cognitive attributes (1111111). These estimated values provide a quantitative foundation for the stratification of the learning progression.

A noteworthy and counter-intuitive finding emerges from Table 6: the mean ability estimates (θ) for knowledge states (1000000) and (0000010) are nearly identical. This proximity is particularly striking when contrasted with the population-level mastery probabilities presented in Table 8, which identify Attribute A1 (‘Difficult Vocabulary’) as the most difficult attribute (33.3% mastery) and Attribute A6 (‘Information Matching’) as the easiest (85.8% mastery). This apparent paradox is resolved by recognizing that the θ value for a knowledge state represents the average functional ability of the students within that group, not the intrinsic difficulty of the attribute itself. The (0000010) group represents typical low-ability learners on the dominant learning path who have acquired only the most foundational skill. In contrast, the (1000000) group likely comprises a small, atypical subset of students who possess isolated vocabulary knowledge but lack the integrated comprehension strategies necessary for overall success on the assessment. The IRT model correctly assigns this atypical group a low overall ability score, as their unbalanced cognitive profile does not translate to functional reading proficiency. This finding demonstrates the model’s sensitivity in distinguishing between different types of low-performing cognitive profiles.

Stratification of the Learning Progression: Academic quality standards serve as an overall description of students’ academic achievement and define the key competencies and their specific proficiency levels that students are expected to attain upon completion of different educational stages. These standards provide an authoritative reference for educational assessment. The orientation and construction of academic quality standards share strong similarities with those of learning progressions, as both aim to describe student abilities in a hierarchical manner. Therefore, the establishment of academic quality standards can draw upon the procedures used for constructing learning progressions (Xin et al. 2015). Accordingly, we aligned students’ knowledge states with the three academic quality levels defined in the national curriculum standards, classifying them into three tiers based on increasing ability values, as illustrated by the red lines in Figure 2. This approach is conceptually similar to level setting, which maps continuous ability scores onto discrete levels for better interpretability and practical application (Cizek et al. 2004). Each knowledge state was then positioned along the ability continuum according to its corresponding ability value, thereby completing the construction of the learning progression. This framework provides a clear roadmap for teachers to identify students’ current learning stages and to offer targeted instructional support accordingly.
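The three construction steps above can be approximated in a short Python sketch. It makes several simplifying assumptions that are not taken from the study: the K-means step is reduced to its assignment rule with fixed centroids (each rare state goes to the nearest frequent state, where squared Euclidean distance on 0/1 vectors equals the Hamming distance), the θ values are treated as already estimated by a 2PL model, and the tier cut points are invented. The function names, the individual θ values, and the cut points are all hypothetical; only the state means −1.34 and 1.23 echo Table 6.

```python
from collections import defaultdict

def nearest_state(state, frequent_states):
    """Assign a rare state to the closest frequent state (Hamming distance).

    Ties are broken by the order of `frequent_states` (an arbitrary choice).
    """
    def distance(a, b):
        return sum(x != y for x, y in zip(a, b))
    return min(frequent_states, key=lambda c: distance(state, c))

def mean_theta_by_state(records):
    """records: iterable of (state, theta) pairs -> {state: mean theta}."""
    sums, counts = defaultdict(float), defaultdict(int)
    for state, theta in records:
        sums[state] += theta
        counts[state] += 1
    return {s: sums[s] / counts[s] for s in sums}

def tier(theta, cuts=(-0.5, 0.5)):
    """Map a mean ability to tier 1, 2, or 3; the cut points are hypothetical."""
    return 1 + sum(theta >= c for c in cuts)

frequent = ["0000000", "0000010", "0100011", "1111111"]
assigned = nearest_state("0000110", frequent)

# Hypothetical student-level theta values for two states.
records = [("0000000", -1.50), ("0000000", -1.18),
           ("1111111", 1.10), ("1111111", 1.36)]
means = mean_theta_by_state(records)
tiers = {state: tier(theta) for state, theta in means.items()}
print(assigned, means, tiers)
```

In the study the tier boundaries were set by aligning the ability continuum with the three academic quality levels of the national curriculum standards, so the fixed numeric cut points here only stand in for that alignment.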

By linking the previously identified dominant learning path, (0000000) → (0000010) → (0100010) → (0100011) → (0110011) → (0110111) → (0111111) → (1111111), to the corresponding ability estimates, a clear trend emerges: students who master a greater number of attributes tend to exhibit progressively higher ability levels. This pattern aligns not only with established cognitive development theories but also with intuitive expectations. More importantly, this pathway represents the most common sequence of attribute acquisition among students in this study, further validating its status as the dominant learning path in the domain of English reading comprehension.

Based on the categorization of learning progression levels illustrated in the figure above, we summarized the attribute patterns within each identified level. Combined with descriptors from national curriculum standards regarding the three levels of academic achievement, we established a detailed classification of the learning progression, as shown in Table 7.

The results in Table 7 provide meaningful insights for refining the definitions of academic quality standards. For instance, Level 2 includes descriptors such as “the ability to understand conceptual vocabulary or terms using contextual clues” and “understanding the connotation and denotation of words in context.” These abilities align with attribute A1 (which involves understanding of difficult words in questions, options, or target sentences), yet our data suggest that this attribute may be more difficult than previously thought and fits better within Level 3. Similarly, Level 1 contains the descriptor “making inferences, comparisons, analyses, and generalizations based on reading or visual materials,” which was originally associated with attribute A5 (which involves drawing inferences based on textual information, context, and background knowledge; inferring word meanings; deducing author’s purpose, intention, and strategies). However, our analysis indicates this attribute is also more cognitively demanding and should be classified under Level 2.

These findings were further corroborated by students’ overall mastery probabilities for each cognitive attribute (see Table 8). The data show that students demonstrated the highest level of mastery for Attribute A6 (Information Matching), with a probability of 85.8%, followed by Attribute A7 (Complex Sentence Comprehension) at 58.7%. In contrast, students showed the lowest levels of mastery for Attribute A5 (Inference) and Attribute A1 (Difficult Vocabulary), with probabilities of 36.3% and 33.3%, respectively. These results clearly indicate that A5 and A1 are the most cognitively demanding attributes in senior high school English reading comprehension and should be classified at higher levels within the academic quality standards.
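One simple way to obtain attribute-level mastery rates of this kind is to aggregate over the classified knowledge states, as in the Python sketch below. This is an assumption about the computation: the values in Table 8 may instead be marginal posterior probabilities reported by the fitted model. The function name and the state counts are hypothetical.

```python
def attribute_mastery_rates(state_counts):
    """Share of students whose classified state has each attribute mastered.

    state_counts: dict mapping a knowledge state string to its student count.
    """
    total = sum(state_counts.values())
    n_attr = len(next(iter(state_counts)))
    rates = []
    for k in range(n_attr):
        mastered = sum(n for state, n in state_counts.items() if state[k] == "1")
        rates.append(mastered / total)
    return rates

# Hypothetical counts for four knowledge states (100 students in total).
state_counts = {"0000000": 10, "0000010": 50, "0100010": 30, "1111111": 10}
rates = attribute_mastery_rates(state_counts)
for k, rate in enumerate(rates, start=1):
    print(f"A{k}: {rate:.1%}")
```

In this toy example A6 has the highest rate because it appears in almost every non-empty state, mirroring how an attribute acquired early on the dominant path ends up with the highest population-level mastery.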

These data-driven insights provide important implications for future revisions of curriculum standards, the development of instructional materials, and the refinement of teaching strategies. They contribute to a more precise alignment between academic quality standards and students’ actual cognitive levels.

4.2.5. Personalized Analysis

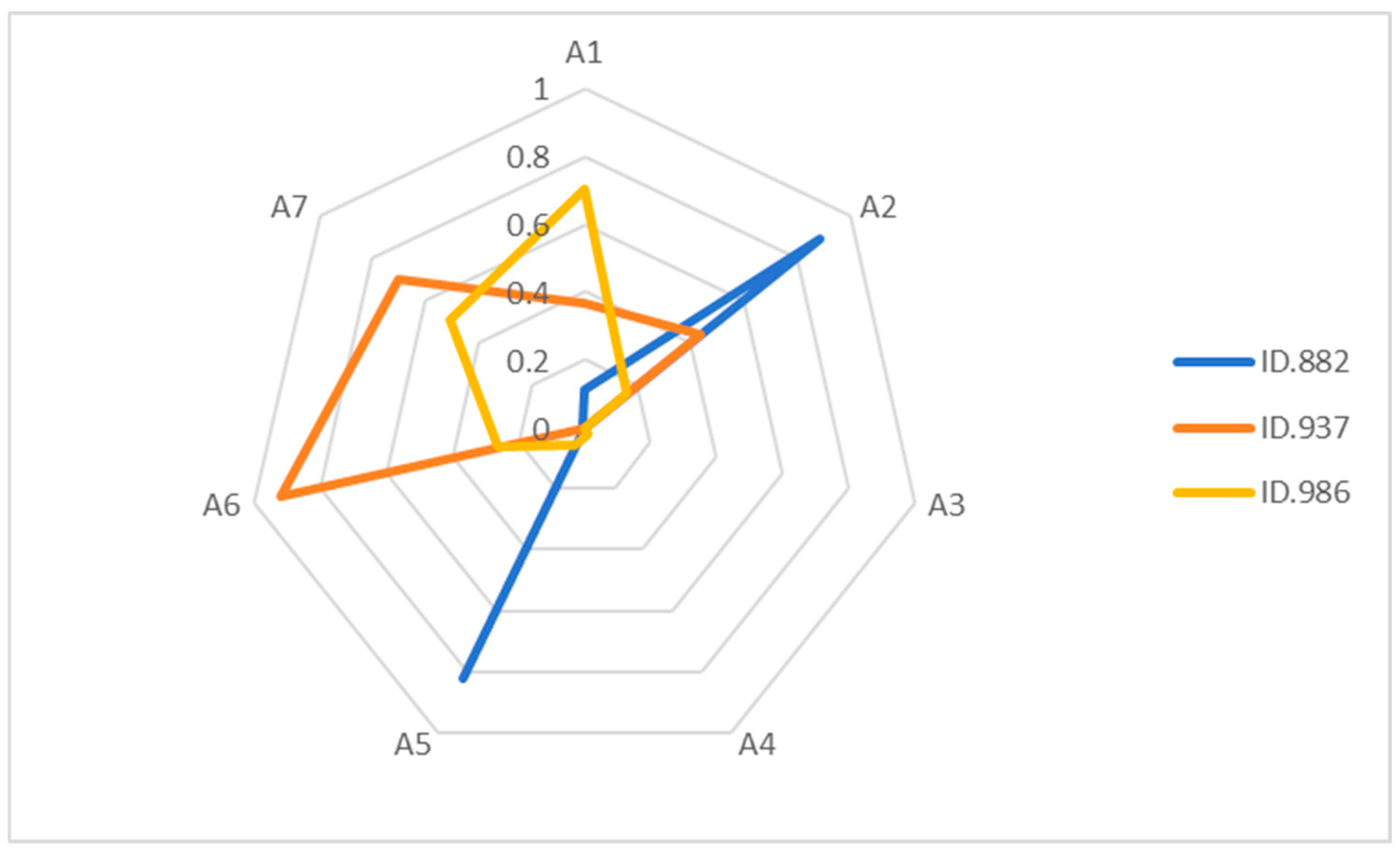

A key advantage of Cognitive Diagnostic Assessment lies in its ability to generate individualized knowledge profiles for each student. To demonstrate the granularity and diagnostic power of this method, we conducted an in-depth analysis of three representative students, identified as IDs 882, 937, and 986. Their radar charts (see Figure 3) vividly illustrate their distinct strengths and weaknesses across the seven cognitive attributes, offering clear guidance for personalized instruction.

As illustrated in the radar plots, the three students presented in this figure achieved identical total scores. Traditional assessment methods would consider them to be at the same proficiency level. However, from the perspective of cognitive diagnostic assessment, their mastery of cognitive attributes varies significantly, reaffirming the well-documented phenomenon of “homogeneous scores, heterogeneous cognition” (Templin and Henson 2010; De La Torre and Ma 2016).

A detailed breakdown is as follows:

Student 882 demonstrates mastery primarily in Attribute A2 and Attribute A5.

Student 937 shows strong performance in Attribute A6 and Attribute A7.

Student 986 exhibits mastery in Attribute A1 and Attribute A7.

All three students share relatively low mastery in Attribute A3, Attribute A4, and Attribute A5, which aligns with the overall pattern of these attributes being the most challenging as shown in Table 8. These findings indicate substantial differences not only in the types of attributes mastered but also in the degree of mastery. Such fine-grained diagnostic information enables truly personalized learning plans. Rather than relying solely on total scores, teachers can design tailored instructional strategies to address each student’s specific weaknesses (De La Torre 2011; De La Torre and Ma 2016; Tatsuoka 2009).