FLUX (Fluid Intelligence Luxembourg): Development and Validation of a Fair Tablet-Based Test of Cognitive Ability in Multicultural and Multilingual Children

Abstract

1. Introduction

1.1. Development of Cognitive Abilities in Children

1.2. Rationale for Developing a New Test for Multicultural and Multilingual Children

1.3. Adapting the CHC Model to a Culture and Language-Fair Assessment Context

1.4. Research Questions of the Present Study

- (1) To determine whether FLUX measures what it is designed to measure (a child’s general fluid cognitive ability). This involves (a) investigating whether its hypothesised factorial structure is supported by empirical data (by applying confirmatory factor analysis; CFA), and (b) determining its concurrent and criterion-related validity by correlating it with a test measuring cognitive ability (same construct) and with educational achievement measures: mathematics for convergent validity (related constructs), and German reading and listening for divergent validity (unrelated constructs).

- (2) To explore the reliability of FLUX by investigating its internal consistency (applying McDonald’s omega, ω, and split-half reliability), that is, by examining whether a group of items reflects the same underlying construct.

- (3) To determine whether FLUX assesses the Gf of third-grade children fairly, independent of their background characteristics (SES, language spoken at home, and gender), by applying differential item functioning (DIF) analysis to test for measurement invariance at the item level.
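Two of the analyses named in these research questions can be sketched in a few lines. The example below is only an illustration under stated assumptions, not the authors' analysis code: it assumes dichotomously scored items (0/1), an odd-even split with the Spearman-Brown correction for split-half reliability, and the Mantel-Haenszel common odds ratio as one common DIF statistic. The function names and the simulated data are hypothetical.

```python
import numpy as np


def split_half_reliability(scores):
    """Odd-even split-half reliability with Spearman-Brown correction.

    scores: 2-D array-like, rows = children, columns = items scored 0/1.
    The odd-even split is an assumption; other splits are possible.
    """
    scores = np.asarray(scores, dtype=float)
    odd_half = scores[:, 0::2].sum(axis=1)   # items 1, 3, 5, ...
    even_half = scores[:, 1::2].sum(axis=1)  # items 2, 4, 6, ...
    r_halves = np.corrcoef(odd_half, even_half)[0, 1]
    return 2 * r_halves / (1 + r_halves)     # Spearman-Brown step-up


def mantel_haenszel_odds_ratio(item, matching_score, focal):
    """Mantel-Haenszel common odds ratio for a single item.

    item: 0/1 responses; matching_score: score used to stratify children;
    focal: True for focal-group members, False for the reference group.
    Values near 1 suggest no uniform DIF on this item.
    """
    item = np.asarray(item)
    matching_score = np.asarray(matching_score)
    focal = np.asarray(focal, dtype=bool)
    numerator = 0.0
    denominator = 0.0
    for level in np.unique(matching_score):
        stratum = matching_score == level
        n = stratum.sum()
        ref_correct = np.sum(stratum & ~focal & (item == 1))
        ref_wrong = np.sum(stratum & ~focal & (item == 0))
        foc_correct = np.sum(stratum & focal & (item == 1))
        foc_wrong = np.sum(stratum & focal & (item == 0))
        numerator += ref_correct * foc_wrong / n
        denominator += ref_wrong * foc_correct / n
    return numerator / denominator if denominator > 0 else float("nan")


if __name__ == "__main__":
    # Hypothetical data: 500 children, 12 items, no DIF built in.
    rng = np.random.default_rng(0)
    ability = rng.normal(size=500)
    difficulty = np.linspace(-1.5, 1.5, 12)
    p_correct = 1 / (1 + np.exp(-(ability[:, None] - difficulty)))
    responses = (rng.random(p_correct.shape) < p_correct).astype(int)
    group = rng.random(500) < 0.5

    print("split-half:", split_half_reliability(responses))
    print("MH odds ratio, item 1:",
          mantel_haenszel_odds_ratio(responses[:, 0],
                                     responses.sum(axis=1), group))
```

Stratifying on the total score is the usual matching choice for the Mantel-Haenszel procedure; with short subtests, sparse strata can make the estimate unstable, so adjacent score levels are sometimes pooled.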

2. Materials and Methods

2.1. Test Development: Item and Instruction Development

2.1.1. Item Development

2.1.2. Instruction Development

2.2. Participants

2.3. Procedure

2.4. Measures

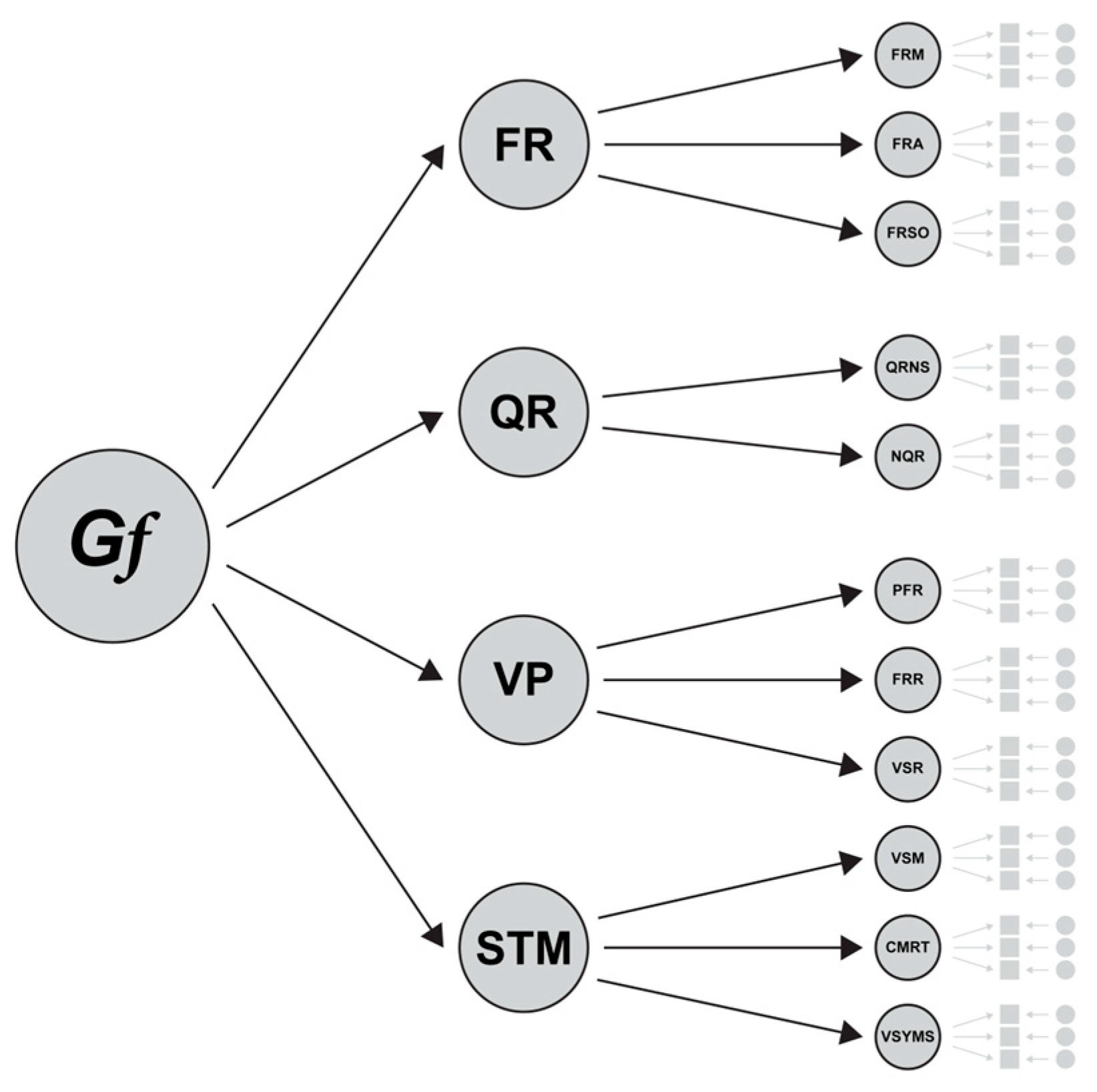

2.4.1. FLUX

- Figural Reasoning—Matrices (FRM). Children had to work out the rule connecting four to nine abstract figures, which were related per row, per column, diagonally, or in several directions, and then find the missing figure by selecting the correct answer from four possible solutions.

- Figural Reasoning—Analogies (FRA). The task involved two rows of abstract figures positioned in relation to each other. Children had to identify the rule based on the first row (e.g., big becomes small) to complete the equation on the second row, where the figure on the right side was always missing, by selecting the correct answer from four possible solutions.

- Figural Reasoning—Sequential Order (FRSO). Children had to correctly complete the respective sequences of four abstract figures. Starting from the initial figure, they had to find out what happened to it (e.g., the figure becomes smaller and smaller) and then complete the sequence by selecting the correct one from four possible solutions.

- Quantitative Reasoning—Numerical Series (QRNS). Children were presented with sequences of five to six numbers with one number (the second last) missing in each sequence. By identifying the operation involved (i.e., addition, subtraction, multiplication, or division), they could infer the rule governing the series (e.g., +1, +1 or −2, −1), deduce the missing number, and select it from four possible answers.

- Non-Symbolic Quantitative Reasoning (NQR). Children were shown a 3 × 3 grid with dots or bars in each cell except one, which was empty. By first inferring that two identical colours represent addition (e.g., white-white or black-black) and two different colours (e.g., black-white) represent subtraction, children were able to determine the quantitative relationship between figures in each row and column by applying the right operation and choosing the right answer from four possible answers.

- Paper Folding Reasoning (PFR). A drawing at the top of the screen showed a sheet of paper that had been folded either once (from top to bottom) or twice (from top to bottom and right to left). Additionally, each paper had one or more holes cut out of it. The task required children to visualise the paper being unfolded and predict its appearance (by selecting one of four answers) while accounting for the holes.

- Figural Rotation Reasoning (FRR). Children were presented with a figure at the top of the screen and had to find the same figure in rotated form among four options below using mental rotation. The upper figure was not to be imagined as a mirror image, and children were not allowed to rotate the tablet manually while solving the task.

- Visual Spatial Reasoning (VSR). This task required children to mentally connect three puzzle pieces, rotating them if needed, to create the figure shown at the top. To respond, children had to select three out of six possible answers.

- Visual-Spatial Memory (VSM). In a 4 × 4 grid, a sequence of three to seven apples appeared simultaneously in their respective cells. Children were asked to memorise the position of each apple and reproduce it by selecting the corresponding cells in an empty grid once the apples disappeared. They could only move on to the next step once they had reproduced the quantity of apples shown previously (if two apples were projected, children had to select two cells to be able to move to the next item).

- Counting-Memory-Recall Task (CMRT). A sequence of yellow squares appeared on the screen; each square displayed a small number of green dots (minimum one dot, maximum five dots). Because of an innate ability to subitise up to about four dots without counting, children can determine the number of dots on each square quickly and accurately even without counting knowledge (Davis and Pérusse 1988; Kaufmann et al. 1949). The task started with sequences of three squares of dots and progressed to sequences of six squares of dots. During each presentation, children were required to memorise the respective sequence. As soon as it disappeared, they had to drag and drop the squares of dots from the lower part of the screen into empty sequenced boxes in the answer format on the upper part of the screen to reproduce the sequence in the correct order.

- Visual Symbolic Memory Span (VSYMS). Abstract figures were presented to the children in a sequence (from two to four). Each figure (trapezoid, circle, triangle) was either yellow or blue and pointed upwards or downwards. The correct sequence of each figure had to be memorised based on its shape, colour, direction, and place. Immediately after the presentation ended, an answer format appeared, and children were asked to reproduce the recently shown sequence by dragging and dropping the figures into empty sequenced boxes.
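To make the structure of a Numerical Series (QRNS) item concrete, the sketch below builds a series from a start value and a repeating pattern of steps, then hides the second-last number. It is a hypothetical illustration, not the item generator used for FLUX: it covers only additive and subtractive steps, it reads rules such as "+1, +1" or "−2, −1" as repeating step patterns (an assumption), and the function name `build_series_item` is invented for this example.

```python
from itertools import cycle, islice


def build_series_item(start, steps, length):
    """Return (shown, answer) for a number series item.

    start: first number of the series.
    steps: repeating pattern of additive steps, e.g. [-2, -1].
    length: total length of the series (five or six in the task description).
    The second-last number is replaced by None in `shown`.
    """
    series = [start]
    for step in islice(cycle(steps), length - 1):
        series.append(series[-1] + step)
    missing = length - 2  # index of the second-last number
    shown = [None if i == missing else value for i, value in enumerate(series)]
    return shown, series[missing]


# Series 10, 8, 7, 5, ?, 2 built from the alternating rule -2, -1.
shown, answer = build_series_item(10, [-2, -1], 6)
print(shown, answer)
```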

2.4.2. Measures for Validation

2.4.3. Data Analysis

3. Results

3.1. Descriptive Statistics

3.2. Construct and Concurrent Validity

3.3. Reliability

3.4. Test Fairness

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Levels | Scale | N (Listwise) | Number of Test Items | Mean | SD | Variance | McDonald’s ω | rtt | Skewness (SE) | Kurtosis (SE) |
|---|---|---|---|---|---|---|---|---|---|---|
| Subtest level | FRM | 703 | 13 | 7.53 | 2.87 | 8.26 | 0.71 | 0.70 | −0.43 (0.09) | −0.44 (0.18) |
| Subtest level | FRA | 703 | 13 | 6.90 | 2.69 | 7.25 | 0.70 | 0.70 | 0.03 (0.09) | −0.65 (0.18) |
| Subtest level | FRSO | 672 | 13 | 8.12 | 2.82 | 7.92 | 0.73 | 0.75 | −0.26 (0.09) | −0.72 (0.19) |
| Subtest level | QRNS | 702 | 11 | 5.71 | 2.56 | 6.54 | 0.66 | 0.70 | 0.08 (0.09) | −0.66 (0.18) |
| Subtest level | NQR | 701 | 15 | 8.68 | 3.39 | 11.51 | 0.75 | 0.79 | −0.03 (0.09) | −0.90 (0.18) |
| Subtest level | FRR | 672 | 12 | 5.57 | 3.12 | 9.73 | 0.77 | 0.80 | 0.42 (0.09) | −0.80 (0.19) |
| Subtest level | VSR | 659 | 10 | 4.51 | 2.34 | 5.48 | 0.75 | 0.76 | 0.33 (0.10) | −0.58 (0.19) |
| Subtest level | PFR | 670 | 13 | 6.77 | 2.97 | 8.85 | 0.72 | 0.73 | 0.24 (0.09) | −0.71 (0.19) |
| Subtest level | CMRT | 702 | 10 | 5.08 | 2.42 | 5.86 | 0.75 | 0.77 | −0.16 (0.09) | −0.61 (0.18) |
| Subtest level | VSYMS | 672 | 10 | 5.00 | 1.96 | 3.82 | 0.55 | 0.55 | −0.08 (0.09) | −0.44 (0.19) |
| Subtest level | VSM | 701 | 12 | 5.74 | 2.76 | 7.64 | 0.75 | 0.73 | 0.23 (0.09) | −0.72 (0.18) |
| Domain level | FR | 672 | 39 | 22.57 | 6.93 | 47.99 | 0.85 | 0.85 | −0.13 (0.09) | −0.70 (0.19) |
| Domain level | QR | 700 | 26 | 14.39 | 4.98 | 24.83 | 0.79 | 0.84 | 0.07 (0.09) | −0.65 (0.19) |
| Domain level | VP | 653 | 35 | 16.91 | 7.01 | 49.07 | 0.87 | 0.90 | 0.49 (0.10) | −0.52 (0.19) |
| Domain level | STM | 669 | 32 | 15.79 | 5.24 | 27.45 | 0.79 | 0.82 | −0.02 (0.09) | −0.45 (0.19) |
| Full-scale | FLUX | 648 | 132 | 69.83 | 19.90 | 395.64 | 0.94 | 0.95 | 0.19 (0.10) | −0.65 (0.19) |
| | RAVEN-Short | 702 | 15 | 7.76 | 3.36 | 11.31 | 0.78 | 0.80 | −0.17 (0.09) | −0.65 (0.18) |
| Educational Achievement | EA-MA | 655 | - | 477.89 | 115.29 | 13292.18 | - | - | 0.24 (0.10) | 0.90 (0.19) |
| Educational Achievement | EA-GL | 631 | - | 469.91 | 105.04 | 11033.20 | - | - | 0.27 (0.10) | −0.47 (0.19) |
| Educational Achievement | EA-GR | 630 | - | 474.02 | 127.01 | 16131.44 | - | - | 0.21 (0.10) | −0.36 (0.19) |
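The standard errors shown in parentheses for skewness and kurtosis depend only on the listwise N. As a rough check, and assuming they follow the conventional formulas for the standard error of sample skewness and excess kurtosis (the text does not state which formulas were used), the sketch below computes both values for a given N. With N = 703 it gives about 0.09 and 0.18, in line with the FRM row.

```python
import math


def se_skewness(n):
    """Conventional standard error of sample skewness for sample size n."""
    return math.sqrt(6 * n * (n - 1) / ((n - 2) * (n + 1) * (n + 3)))


def se_kurtosis(n):
    """Conventional standard error of sample excess kurtosis for sample size n."""
    return 2 * se_skewness(n) * math.sqrt((n * n - 1) / ((n - 3) * (n + 5)))


# Listwise N values taken from the table above.
for n in (703, 672, 659, 648):
    print(n, round(se_skewness(n), 2), round(se_kurtosis(n), 2))
```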

References

- Alfonso, Vincent C., Dawn P. Flanagan, and Suzan Radwan. 2005. The Impact of the Cattell-Horn-Carroll Theory on Test Development and Interpretation of Cognitive and Academic Abilities. In Contemporary Intellectual Assessment: Theories, Tests, and Issues. Edited by Dawn P. Flanagan and Patti L. Harrison. New York: The Guilford Press, pp. 185–202.

- American Educational Research Association, American Psychological Association, and National Council on Measurement in Education. 2014. Standards for Educational and Psychological Testing. Washington, DC: American Psychological Association.

- Archer, Dane. 1997. Unspoken diversity: Cultural differences in gestures. Qualitative Sociology 20: 79–105.

- Baddeley, Alan. 2000. The episodic buffer: A new component of working memory? Trends in Cognitive Sciences 4: 417–23.

- Banerjee, Jayanti, and Spiros Papageorgiou. 2016. What’s in a topic? Exploring the interaction between test-taker age and item content in high-stakes testing. International Journal of Listening 30: 8–24.

- Baudson, Tanja Gabriele, and Franzis Preckel. 2013. Development and validation of the German Test for (Highly) Intelligent Kids—T(H)INK. European Journal of Psychological Assessment 29: 171–81.

- Binet, Alfred, and Théodore Simon. 1905. Méthodes nouvelles pour le diagnostic du niveau intellectuel des anormaux [A new method for the diagnosis of the intellectual level of abnormal persons]. L’Année Psychologique 11: 191–244.

- Boateng, Godfred O., Torsten B. Neilands, Edward A. Frongillo, Hugo R. Melgar-Quiñónez, and Sera L. Young. 2018. Best practices for developing and validating scales for health, social, and behavioral research: A primer. Frontiers in Public Health 6: 149.

- Bracken, Bruce A., and R. Steve McCallum. 1998. Universal Nonverbal Intelligence Test. Chicago: PRO-ED.

- Bracken, Bruce A., and R. Steve McCallum. 2016. Universal Nonverbal Intelligence Test, 2nd ed. Rolling Meadows: Riverside.

- Bradley, Robert H., and Robert F. Corwyn. 2002. Socioeconomic status and child development. Annual Review of Psychology 53: 371–99.

- Buckley, Jeffrey, Niall Seery, Donal Canty, and Lena Gumaelius. 2018. Visualization, inductive reasoning, and memory span as components of fluid intelligence: Implications for technology education. International Journal of Educational Research 90: 64–77.

- Burgess, Gregory C., Jeremy R. Gray, Andrew R. A. Conway, and Todd S. Braver. 2011. Neural mechanisms of interference control underlie the relationship between fluid intelligence and working memory span. Journal of Experimental Psychology: General 140: 674–92.

- Calvin, Catherine M., Cres Fernandes, Pauline Smith, Peter M. Visscher, and Ian J. Deary. 2010. Sex, intelligence and educational achievement in a national cohort of over 175,000 11-year-old schoolchildren in England. Intelligence 38: 424–32.

- Camarata, Stephen, and Richard Woodcock. 2006. Sex differences in processing speed: Developmental effects in males and females. Intelligence 34: 231–52.

- Campbell, Hannah Cruickshank, Christopher J. Wilson, and Nicki Joshua. 2021. The performance of children with intellectual giftedness and intellectual disability on the WPPSI-IV A&NZ. The Educational and Developmental Psychologist 38: 88–98.

- Canivez, Gary L., and Eric A. Youngstrom. 2019. Challenges to the Cattell-Horn-Carroll theory: Empirical, clinical, and policy implications. Applied Measurement in Education 32: 232–48.

- Carroll, John B. 1993. Human Cognitive Abilities: A Survey of Factor-Analytic Studies. Cambridge: Cambridge University Press.

- Cattell, Raymond B. 1949. Culture Free Intelligence Test. Scale 1. Savoy: Institute of Personality and Ability Testing.

- Cattell, Raymond B. 1963. Theory of fluid and crystallized intelligence: A critical experiment. Journal of Educational Psychology 54: 1–22.

- Cattell, Raymond B. 1987. Intelligence: Its Structure, Growth and Action. Haarlem: North-Holland.

- Chen, Feinian, Patrick J. Curran, Kenneth A. Bollen, James B. Kirby, and Pamela Paxton. 2008. An empirical evaluation of the use of fixed cutoff points in RMSEA test statistic in structural equation models. Sociological Methods & Research 36: 462–94.

- Coleman, Maggie, Alicia Paredes Scribner, Susan Johnsen, and Margaret Kohel Evans. 1993. A comparison between the Wechsler Adult Intelligence Scale—Revised and the Test of Nonverbal Intelligence-2 with Mexican-American secondary students. Journal of Psychoeducational Assessment 11: 250–58.

- Daseking, Monika, and Franz Petermann. 2015. Nonverbale Intelligenzdiagnostik: Sprachfreie Erhebung kognitiver Fähigkeiten und Prävention von Entwicklungsrisiken. Gesundheitswesen 77: 791–92.

- Davis, Hank, and Rachelle Pérusse. 1988. Numerical competence in animals: Definitional issues, current evidence, and a new research agenda. Behavioral and Brain Sciences 11: 561–79.

- De Ayala, Rafael Jaime. 2009. The Theory and Practice of Item Response Theory. New York: Guilford Press.

- DeThorne, Laura S., and Barbara A. Schaefer. 2004. A guide to child nonverbal IQ measures. American Journal of Speech-Language Pathology 13: 275–90.

- Dilling, Horst, Werner Mombour, Martin H. Schmidt, and Weltgesundheitsorganisation, eds. 2015. Internationale Klassifikation Psychischer Störungen: ICD-10 Kapitel V (F); Klinisch-Diagnostische Leitlinien, 10th ed. Göttingen: Hogrefe.

- Dings, Alexander, and Frank M. Spinath. 2021. Motivational and personality variables distinguish academic underachievers from high achievers, low achievers, and overachievers. Social Psychology of Education 24: 1461–85.

- Duncan, John, Rüdiger J. Seitz, Jonathan Kolodny, Daniel Bor, Hans Herzog, Ayesha Ahmed, Fiona N. Newell, and Hazel Emslie. 2000. A neural basis for general intelligence. Science 289: 457–60.

- Evers, Arne, Carmen Hagemeister, Andreas Høstmælingen, Patricia Lindley, José Muñiz, and Anders Sjöberg. 2013. EFPA Review Model for the Description and Evaluation of Psychological and Educational Tests (Version 4.2.6). Brussels: Board of Assessment of EFPA.

- Finn, Amy S., Matthew A. Kraft, Martin R. West, Julia A. Leonard, Crystal E. Bish, Rebecca E. Martin, Margaret A. Sheridan, Christopher F. O. Gabrieli, and John D. E. Gabrieli. 2014. Cognitive skills, student achievement tests, and schools. Psychological Science 25: 736–44.

- Flanagan, Dawn P., and Shauna G. Dixon. 2014. The Cattell-Horn-Carroll theory of cognitive abilities. In Encyclopedia of Special Education: A Reference for the Education of Children, Adolescents, and Adults with Disabilities and Other Exceptional Individuals. Edited by Cecil R. Reynolds, Kimberly J. Vannest and Elaine Fletcher-Janzen. Hoboken: Wiley, pp. 1–13.

- Fleishman, John A., William D. Spector, and Barbara M. Altman. 2002. Impact of differential item functioning on age and gender differences in functional disability. The Journals of Gerontology Series B: Psychological Sciences and Social Sciences 57: S275–S284.

- Ford, Laurie, and V. Susan Dahinten. 2005. Use of intelligence tests in the assessment of preschoolers. In Contemporary Intellectual Assessment: Theories, Tests, and Issues. Edited by Dawn P. Flanagan and Patti L. Harrison. New York: The Guilford Press, pp. 487–503.

- Franklin, Trish. 2017. Best practices in multicultural assessment of cognition. In Handbook of Nonverbal Assessment, 2nd ed. Edited by R. Steve McCallum. Cham: Springer, pp. 39–46.

- Gana, Kamel, and Guillaume Broc. 2019. Structural Equation Modeling with Lavaan. Hoboken: John Wiley & Sons.

- Ganzeboom, Harry B. G., Paul M. De Graaf, and Donald J. Treiman. 1992. A standard international socio-economic index of occupational status. Social Science Research 21: 1–56.

- Geary, David C. 2005. The Origin of Mind: Evolution of Brain, Cognition, and General Intelligence. Washington: American Psychological Association.

- Giofrè, David, Ernesto Stoppa, Paolo Ferioli, Lina Pezzuti, and Cesare Cornoldi. 2016. Forward and backward digit span difficulties in children with specific learning disorder. Journal of Clinical and Experimental Neuropsychology 38: 478–86.

- Giofrè, David, Katie Allen, Enrico Toffalini, and Sara Caviola. 2022. The impasse on gender differences in intelligence: A meta-analysis on WISC batteries. Educational Psychology Review 34: 2543–68.

- Goldbeck, Lutz, Monika Daseking, Susanne Hellwig-Brida, Hans C. Waldmann, and Franz Petermann. 2010. Sex differences on the German Wechsler Intelligence Test for Children (WISC-IV). Journal of Individual Differences 31: 22–28.

- Gottfredson, Linda S. 1997. Why g matters: The complexity of everyday life. Intelligence 24: 79–132.

- Gray, Jeremy R., Christopher F. Chabris, and Todd S. Braver. 2003. Neural mechanisms of general fluid intelligence. Nature Neuroscience 6: 316–22.

- Greisen, Max, Caroline Hornung, Tanja G. Baudson, Claire Muller, Romain Martin, and Christine Schiltz. 2018. Taking language out of the equation: The assessment of basic math competence without language. Frontiers in Psychology 9: 1076.

- Greisen, Max, Carrie Georges, Caroline Hornung, Philipp Sonnleitner, and Christine Schiltz. 2021. Learning mathematics with shackles: How lower reading comprehension in the language of mathematics instruction accounts for lower mathematics achievement in speakers of different home languages. Acta Psychologica 221: 103456.

- Guttman, Louis. 1965. A faceted definition of intelligence. In Studies in Psychology, Scripta Hierosolymitana. Edited by R. Eiferman. Jerusalem: The Hebrew University, vol. 14, pp. 166–81.

- Haier, Richard J. 2017. The Neuroscience of Intelligence. Cambridge: Cambridge University Press.

- Hamhuis, Eva, Cees Glas, and Martina Meelissen. 2020. Tablet assessment in primary education: Are there performance differences between TIMSS’ paper-and-pencil test and tablet test among Dutch grade-four students? British Journal of Educational Technology 51: 2340–58.

- Hammill, Donald D., Nils A. Pearson, and J. Lee Wiederholt. 2009. Comprehensive Test of Nonverbal Intelligence–Second Edition (CTONI-2). Chicago: PRO-ED.

- Hassett, Natalie R., R. Steve McCallum, and Bruce A. Bracken. 2024. Nonverbal assessment of intelligence and related constructs. In Desk Reference in School Psychology. Edited by Lea A. Theodore, Bruce A. Bracken and Melissa A. Bray. Oxford: Oxford University Press, pp. 45–62.

- Härnqvist, Kjell. 1997. Gender and grade differences in latent ability variables. Scandinavian Journal of Psychology 38: 55–62.

- Heitz, Richard P., Nash Unsworth, and Randall W. Engle. 2004. Working memory capacity, attention control, and fluid intelligence. In Handbook of Understanding and Measuring Intelligence. Edited by Oliver Wilhelm and Randall W. Engle. Newcastle upon Tyne: Sage, pp. 61–77.

- Hirschfeld, Gerrit, and Ruth von Brachel. 2014. Multiple-group confirmatory factor analysis in R: A tutorial in measurement invariance with continuous and ordinal indicators. Practical Assessment, Research & Evaluation 19: 1–12.

- Holland, Paul W., and Dorothy T. Thayer. 1988. Differential item performance and the Mantel–Haenszel procedure. In Test Validity. Edited by Howard Wainer and Henry I. Braun. Mahwah: Lawrence Erlbaum, pp. 129–45.

- Hopkins, Shelley, Alex A. Black, Sonia White, and Joanne M. Wood. 2019. Visual information processing skills are associated with academic performance in grade 2 school children. Acta Ophthalmologica 97: 779–87.

- Hopkins, Will G. 2002. New View of Statistics: A Scale of Magnitudes for Effect Statistics. Sportsci.org. Available online: http://www.sportsci.org/resource/stats/effectmag.html (accessed on 27 May 2024).

- Horn, John L., and Jennie Noll. 1997. Human cognitive capabilities: Gf–Gc theory. In Contemporary Intellectual Assessment: Theories, Tests, and Issues. Edited by Dawn P. Flanagan, Judy L. Genshaft and Patti L. Harrison. New York: The Guilford Press, pp. 53–91.

- Horn, John L., and Nayena Blankson. 2005. Foundations for better understanding of cognitive abilities. In Contemporary Intellectual Assessment: Theories, Tests, and Issues, 3rd ed. Edited by Dawn P. Flanagan and Patti L. Harrison. New York: The Guilford Press, pp. 41–68.

- Hornung, Caroline, Christine Schiltz, Martin Brunner, and Romain Martin. 2014. Predicting first-grade mathematics achievement: The contributions of domain-general cognitive abilities, nonverbal number sense, and early number competence. Frontiers in Psychology 5: 1–18.

- Hornung, Caroline, Lena Maria Kaufmann, Martha Ottenbacher, Constanze Weth, Rachel Wollschläger, Sonja Ugen, and Antoine Fischbach. 2023. Early Childhood Education and Care in Luxembourg. Attendance and Associations with Early Learning Performance. Luxembourg: Luxembourg Center of Educational Testing (LUCET). Available online: https://hdl.handle.net/10993/54926 (accessed on 27 May 2024).

- Hornung, Caroline, Martin Brunner, Robert A. P. Reuter, and Romain Martin. 2011. Children’s working memory: Its structure and relationship to fluid intelligence. Intelligence 39: 210–21.

- Höffler, Tim N., and Detlev Leutner. 2007. Instructional animation versus static pictures: A meta-analysis. Learning and Instruction 17: 722–38.

- Hu, Li-tze, and Peter M. Bentler. 1999. Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Structural Equation Modeling: A Multidisciplinary Journal 6: 1–55.

- Jensen, Arthur Robert. 1980. Bias in Mental Testing. New York: Free Press.

- Jensen, Arthur Robert. 1998. The G Factor: The Science of Mental Ability. Elmwood Park: Praeger.

- Joél, Torsten. 2018. Intelligenzdiagnostik mit geflüchteten Kindern und Jugendlichen. Zeitschrift für Heilpädagogik 69: 196–206.

- Johnsen, Susan K. 2017. Test of Nonverbal Intelligence: A language-free measure of cognitive ability. In Handbook of Nonverbal Assessment, 2nd ed. Edited by R. Steve McCallum. Cham: Springer, pp. 59–76.

- Kane, Michael J., and Jeremy R. Gray. 2005. Fluid intelligence. In Encyclopedia of Human Development. Edited by Neil J. Salkind. Newcastle upon Tyne: Sage, vol. 3, pp. 528–29.

- Kaufman, Alan S., and Nadeen L. Kaufman. 1983. Kaufman Assessment Battery for Children. Las Vegas: American Guidance Service.

- Kaufman, Alan S., Cheryl K. Johnson, and Xin Liu. 2008. A CHC theory-based analysis of age differences on cognitive abilities and academic skills at ages 22 to 90 years. Journal of Psychoeducational Assessment 26: 350–81.

- Kaufman, Alan S., Timothy A. Salthouse, Caroline Scheiber, and Hsin-Yi Chen. 2016. Age differences and educational attainment across the life span on three generations of Wechsler adult scales. Journal of Psychoeducational Assessment 34: 421–41.

- Kaufmann, E. L., M. W. Lord, T. W. Reese, and J. Volkmann. 1949. The discrimination of visual number. American Journal of Psychology 62: 498–525.

- Keith, Timothy Z., Matthew R. Reynolds, Lisa G. Roberts, Amanda L. Winter, and Cynthia A. Austin. 2011. Sex differences in latent cognitive abilities ages 5 to 17: Evidence from the Differential Ability Scales—Second Edition. Intelligence 39: 389–404.

- Kim, Kyung Hee, and Darya L. Zabelina. 2015. Cultural bias in assessment: Can creativity assessment help? The International Journal of Critical Pedagogy 6: 129–44.

- Kline, Rex B. 2011. Principles and Practice of Structural Equation Modeling, 3rd ed. New York: The Guilford Press.

- Kocevar, Gabriel, Ilaria Suprano, Claudio Stamile, Salem Hannoun, Pierre Fourneret, Olivier Revol, Fanny Nusbaum, and Dominique Sappey-Marinier. 2019. Brain structural connectivity correlates with fluid intelligence in children: A DTI graph analysis. Intelligence 72: 67–75.

- Kuncel, Nathan R., Sarah A. Hezlett, and Deniz S. Ones. 2004. Academic performance, career potential, creativity, and job performance: Can one construct predict them all? Journal of Personality and Social Psychology 86: 148–61.

- Kyllonen, Patrick, and Harrison Kell. 2017. What is fluid intelligence? Can it be improved? In Cognitive Abilities and Educational Outcomes: A Festschrift in Honour of Jan-Eric Gustafsson. Edited by Monica Rosén, Kajsa Yang Hansen and Ulrika Wolff. Cham: Springer International Publishing, pp. 15–37.

- Lakin, Joni Marie. 2010. Comparison of Test Directions for Ability Tests: Impact on Young English Language Learner and Non-ELL Students. Unpublished doctoral dissertation, University of Iowa, Iowa City, IA, USA. Available online: http://ir.uiowa.edu/etd/536 (accessed on 27 May 2024).

- Langener, Anna M., Anne-Will Kramer, Wouter Van Den Bos, and Hilde M. Huizenga. 2021. A shortened version of Raven’s standard progressive matrices for children and adolescents. British Journal of Developmental Psychology 40: 35–45.

- Lavergne, Catherine, and François Vigneau. 1997. Response speed on aptitude tests as an index of intellectual performance: A developmental perspective. Personality and Individual Differences 23: 283–90.

- Liu, Xiaowen, and H. Jane Rogers. 2022. Treatments of differential item functioning: A comparison of four methods. Educational and Psychological Measurement 82: 225–53.

- Lohman, David F. 1993. Spatial Ability and G. Paper presented at the First Spearman Seminar, University of Plymouth, Plymouth, UK, July 21.

- Marshalek, Brachia, David F. Lohman, and Richard E. Snow. 1983. The complexity continuum in the radix and hierarchical models of intelligence. Intelligence 7: 107–27.

- Martin, Romain, Sonja Ugen, and Antoine Fischbach, eds. 2015. Épreuves Standardisées: Bildungsmonitoring für Luxemburg. Nationaler Bericht 2011 bis 2013. Luxembourg: University of Luxembourg, LUCET.

- Martini, Sophie Frédérique. 2021. The Influence of Language on Mathematics in a Multilingual Educational Setting. Doctoral dissertation, University of Luxembourg, Luxembourg, March 25.

- McCallum, R. Steve. 2017. Context for nonverbal assessment of intelligence and related abilities. In Handbook of Nonverbal Assessment, 2nd ed. Cham: Springer International Publishing AG, pp. 3–19.

- McCallum, R. Steve, and Bruce A. Bracken. 2018. The Universal Nonverbal Intelligence Test—Second Edition: A multidimensional nonverbal alternative for cognitive assessment. In Contemporary Intellectual Assessment: Theories, Tests, and Issues. Edited by Dawn P. Flanagan and Erin M. McDonough. New York: The Guilford Press, pp. 567–83.

- McGrew, Kevin S. 2005. The Cattell-Horn-Carroll theory of cognitive abilities: Past, present, and future. In Contemporary Intellectual Assessment: Theories, Tests, and Issues. Edited by Dawn P. Flanagan and Patti L. Harrison. New York: The Guilford Press, pp. 136–81.

- McNeish, Daniel. 2018. Thanks coefficient alpha, we’ll take it from here. Psychological Methods 23: 412–33.

- MENFP, ed. 2011. Plan d’études. École fondamentale. Available online: https://men.public.lu/fr/publications/courriers-education-nationale/numeros-speciaux/plan-etudes-ecoles-fondamentale.html (accessed on 27 May 2024).

- MENJE. 2020. The Luxembourg Education System. Available online: www.men.lu (accessed on 27 May 2024).

- MENJE, and SCRIPT. 2022. Education System in Luxembourg. Luxembourg: Key Figures. Available online: https://www.edustat.lu (accessed on 27 May 2024).

- Messick, Samuel. 1989. Validity. In Educational Measurement, 3rd ed. Edited by R. L. Linn. Washington, DC: American Council on Education and Macmillan, pp. 13–104.

- Méndez, Lucía I., Carol Scheffner Hammer, Lisa M. López, and Clancy Blair. 2019. Examining language and early numeracy skills in young Latino dual language learners. Early Childhood Research Quarterly 46: 252–61.

- Moosbrugger, Helfried, and Augustin Kelava. 2020. Testtheorie und Fragebogenkonstruktion, 3rd ed. Cham: Springer.

- Naglieri, Jack. 1996. Naglieri Nonverbal Ability Test. San Diego: Harcourt Brace.

- Neisser, Ulric, Gwyneth Boodoo, Thomas J. Bouchard, Jr., A. Wade Boykin, Nathan Brody, Stephen J. Ceci, Diane Halpern, John C. Loehlin, Robert Perloff, Robert J. Sternberg, et al. 1996. Intelligence: Knowns and unknowns. American Psychologist 51: 77–101.

- Oakland, Thomas. 2009. How universal are test development and use? In Multicultural Psychoeducational Assessment. Edited by Elena L. Grigorenko. New York: Springer, pp. 1–40.

- OECD. 2018. Education at a Glance 2018: OECD Indicators. Paris: OECD Publishing.

- Ortiz, Samuel O., and Agnieszka M. Dynda. 2005. Use of intelligence tests with culturally and linguistically diverse populations. In Contemporary Intellectual Assessment: Theories, Tests, and Issues. Edited by Dawn P. Flanagan and Patti L. Harrison. New York: The Guilford Press, pp. 545–56. [Google Scholar]

- Paek, Insu, and Mark Wilson. 2011. Formulating the Rasch Differential Item Functioning Model Under the Marginal Maximum Likelihood Estimation Context and Its Comparison with Mantel–Haenszel Procedure in Short Test and Small Sample Conditions. Educational and Psychological Measurement 71: 1023–46. [Google Scholar] [CrossRef]

- Parveen, Amina, Mohammad Amin Dar, Insha Rasool, and Shazia Jan. 2022. Challenges in the multilingual classroom across the curriculum. In Advances in Educational Technologies and Instructional Design Book Series. Hershey: IGI Global, pp. 1–12. [Google Scholar] [CrossRef]

- Peng, Peng, and Douglas Fuchs. 2014. A meta-analysis of working memory deficits in children with learning difficulties. Journal of Learning Disabilities 49: 3–20.

- Pezzuti, Linda, and Arturo Orsini. 2016. Are there sex differences in the Wechsler Intelligence Scale for Children—Forth Edition? Learning and Individual Differences 45: 307–12.

- Pitchford, Nicola, and Laura A. Outhwaite. 2016. Can Touch Screen Tablets be Used to Assess Cognitive and Motor Skills in Early Years Primary School Children? A Cross-Cultural Study. Frontiers in Psychology 7: 1666.

- Postlethwaite, Bennett Eugene. 2011. Fluid Ability, Crystallized Ability, and Performance Across Multiple Domains: A Meta-Analysis. Doctoral dissertation, University of Iowa, Iowa City, IA, USA. Available online: https://iro.uiowa.edu/esploro/outputs/doctoral/Fluid-ability-crystallized-ability-and-performance/9983777196602771 (accessed on 27 May 2024).

- Primi, Ricardo. 2002. Complexity of geometric inductive reasoning tasks. Intelligence 30: 41–70.

- Raju, Nambury S., Larry J. Laffitte, and Barbara M. Byrne. 2002. Measurement equivalence: A comparison of methods based on confirmatory factor analysis and item response theory. Journal of Applied Psychology 87: 517–29.

- R Core Team. 2023. R: A Language and Environment for Statistical Computing. Vienna: R Foundation for Statistical Computing. Available online: https://www.R-project.org/ (accessed on 27 May 2024).

- Reise, Steven P., Keith F. Widaman, and Robin H. Pugh. 1993. Confirmatory factor analysis and item response theory: Two approaches for exploring measurement invariance. Psychological Bulletin 114: 552–66.

- Reynolds, Cecil R., and Lisa A. Suzuki. 2013. Bias in psychological assessment: An empirical review and recommendations. In Handbook of Psychology: Vol. 10. Assessment Psychology, 2nd ed. Edited by John R. Graham, Jack A. Naglieri and Irving B. Weiner. Hoboken: John Wiley & Sons, Inc., pp. 82–113.

- Reynolds, Cecil R., Kimberly J. Vannest, and Elaine Fletcher-Janzen. 2014. Encyclopedia of Special Education: A Reference for the Education of Children, Adolescents, and Adults with Disabilities and Other Exceptional Individuals, 4th ed. Hoboken: Wiley.

- Reynolds, Matthew R., Timothy Z. Keith, Kristen P. Ridley, and Puja Patel. 2008. Sex differences in latent general and broad cognitive abilities for children and youth: Evidence from higher-order MG-MACS and MIMIC models. Intelligence 36: 236–60.

- Robitzsch, Alexander, Thomas Kiefer, and Margaret Wu. 2022. TAM: Test Analysis Modules (Version 4.1-4) [R package]. Comprehensive R Archive Network (CRAN). Available online: https://CRAN.R-project.org/package=TAM (accessed on 27 May 2024).

- Rose, L. Todd, and Kurt W. Fischer. 2011. Intelligence in childhood. In The Cambridge Handbook of Intelligence. Edited by Robert Sternberg and Scott Barry Kaufman. Cambridge: Cambridge University Press, pp. 144–73.

- Rosenthal, James A. 1996. Qualitative descriptors of strength of association and effect size. Journal of Social Service Research 21: 37–59.

- Rosseel, Yves. 2012. lavaan: An R package for structural equation modeling. Journal of Statistical Software 48: 1–36.

- Roth, Bettina, Nicolas Becker, Sara Romeyke, Sarah Schäfer, Florian Domnick, and Frank M. Spinath. 2015. Intelligence and school grades: A meta-analysis. Intelligence 53: 118–37.

- Salthouse, Timothy A. 1998. Independence of age-related influences on cognitive abilities across the life span. Developmental Psychology 34: 851–64.

- Salthouse, Timothy A. 2005. Effects of Aging on Reasoning. In The Cambridge Handbook of Thinking and Reasoning. Edited by Keith J. Holyoak and Robert G. Morrison. Cambridge: Cambridge University Press, pp. 589–605.

- Salvia, John, James Ysseldyke, and Sara Witmer. 2012. Assessment: In Special and Inclusive Education, 11th ed. Boston: Cengage Learning.

- Satorra, Albert. 2000. Scaled and adjusted restricted tests in multi-sample analysis of moment structures. In Innovations in Multivariate Statistical Analysis: A Festschrift for Heinz Neudecker. Edited by Risto D. H. Heijmans, D. Stephen G. Pollock and Albert Satorra. Cham: Springer, pp. 233–47.

- Schaap, Peter. 2011. The differential item functioning and structural equivalence of a nonverbal cognitive ability test for five language groups. SA Journal of Industrial Psychology 37: 881.

- Schmidt-Atzert, Lothar, and Manfred Amelang. 2012. Psychologische Diagnostik, 5th ed. Edited by Thomas Fydrich and Helfried Moosbrugger. Berlin/Heidelberg: Springer.

- Schneider, W. Joel, and Kevin S. McGrew. 2012. The Cattell-Horn-Carroll model of intelligence. In Contemporary Intellectual Assessment: Theories, Tests, and Issues, 3rd ed. Edited by Dawn P. Flanagan and Patti L. Harrison. New York: The Guilford Press, pp. 99–144.

- Schneider, W. Joel, and Kevin S. McGrew. 2018. The Cattell–Horn–Carroll theory of cognitive abilities. In Contemporary Intellectual Assessment: Theories, Tests, and Issues, 4th ed. Edited by Dawn P. Flanagan and Erin M. McDonough. New York: The Guilford Press, pp. 73–163.

- Schoon, Ingrid, Elizabeth Jones, Helen Cheng, and Barbara Maughan. 2012. Family hardship, family instability, and cognitive development. Journal of Epidemiology and Community Health 66: 716–22.

- Schumacker, Randall E., and Richard G. Lomax. 2004. A Beginner’s Guide to Structural Equation Modeling, 2nd ed. Mahwah: Lawrence Erlbaum Associates.

- Schweizer, Karl, and Wolfgang Koch. 2002. A revision of Cattell’s investment theory. Learning and Individual Differences 13: 57–82.

- Semmelmann, Kilian, Marisa Nordt, Katharina Sommer, Rebecka Röhnke, Luzie Mount, Helen Prüfer, Sophia Terwiel, Tobias W. Meissner, Kami Koldewyn, and Sarah Weigelt. 2016. U can touch this: How tablets can be used to study cognitive development. Frontiers in Psychology 7: 1021.

- Sievertsen, Hans Henrik, Francesca Gino, and Marco Piovesan. 2016. Cognitive fatigue influences students’ performance on standardized tests. Proceedings of the National Academy of Sciences 113: 2621–24.

- Sijtsma, Klaas. 2009. On the use, the misuse, and the very limited usefulness of Cronbach’s alpha. Psychometrika 74: 107–20.

- Sonnleitner, Philipp, Steve Bernard, and Sonja Ugen. 2024. Emerging Trends in Computer-Based Testing: Insights from OASYS and the Impact of Generative Item Models. In E-Testing and Computer-Based Assessment: CIDREE Yearbook 2024. Edited by Branislav Ranđelović, Elizabeta Karalić, Katarina Aleksić and Danijela Đukić. Belgrade: Institute for Education Quality and Evaluation, pp. 82–99.

- Spearman, Charles. 1904. ‘General intelligence,’ objectively determined and measured. The American Journal of Psychology 15: 201–93.

- Strenze, Tarmo. 2007. Intelligence and socioeconomic success: A meta-analytic review of longitudinal research. Intelligence 35: 401–26.

- Swanson, H. Lee, Olga Jerman, and Xinhua Zheng. 2008. Growth in working memory and mathematical problem solving in children at risk and not at risk for serious math difficulties. Journal of Educational Psychology 100: 343–79.

- Taber, Keith S. 2017. The use of Cronbach’s Alpha when developing and reporting research instruments in science education. Research in Science Education 48: 1273–96.

- Tamnes, Christian K., Ylva Østby, Kristine B. Walhovd, Lars T. Westlye, Paulina Due-Tønnessen, and Anders M. Fjell. 2010. Intellectual abilities and white matter microstructure in development: A diffusion tensor imaging study. Human Brain Mapping 31: 1609–25.

- Tellegen, Peter J., Jacob A. Laros, and Franz Petermann. 2018. SON-R 2-8. Non-Verbaler Intelligenztest 2-8—Revision. Göttingen: Hogrefe.

- Terman, Lewis M. 1916. The Measurement of Intelligence: An Explanation of and a Complete Guide for the Use of the Stanford Revision and Extension of the Binet–Simon Intelligence Scale. Boston: Houghton Mifflin.

- Thorndike, Robert L. 1994. g. Intelligence 19: 145–55.

- Tucker-Drob, Elliot M., and Timothy A. Salthouse. 2009. Methods and measures: Confirmatory factor analysis and multidimensional scaling for construct validation of cognitive abilities. International Journal of Behavioral Development 33: 277–85.

- Ugen, Sonja, Christine Schiltz, Antoine Fischbach, and Ineke M. Pit-ten Cate. 2021. Lernstörungen im multilingualen Kontext—Eine Herausforderung [Learning disorders in a multilingual context—A challenge]. In Lernstörungen im Multilingualen Kontext. Diagnose und Hilfestellungen. Luxembourg: Melusina Press.

- Wechsler, David, and Jack A. Naglieri. 2006. Wechsler Nonverbal Scale of Ability (WNV). San Antonio: Pearson Assessment.

- Wilhoit, Brian. 2017. Best Practices in cross-battery assessment of nonverbal cognitive ability. In Handbook of Nonverbal Assessment, 2nd ed. Edited by R. Steve McCallum. Cham: Springer, pp. 59–76.

- Wu, Margaret, Hak Ping Tam, and Tsung-Hau Jen. 2016. Educational Measurement for Applied Researchers. Cham: Springer.

| | N | Percentage | Mean | SD |
|---|---|---|---|---|
| Age (years) | - | - | 8.85 | 0.66 |
| Gender | 703 | 100 | - | - |
| Female | 343 | 48.8 | - | - |
| Male | 359 | 51.1 | - | - |
| No Information | 1 | 0.1 | - | - |
| Language | 703 | 100 | - | - |
| Native speakers | 279 | 39.7 | - | - |
| Non-native speakers | 423 | 60.2 | - | - |
| No Information | 1 | 0.1 | - | - |
| SES (HISEI) | 568 | 100 | 48.76 | 16.87 |
| High | 286 | 50.4 | 63.91 | 5.89 |
| Low | 282 | 49.6 | 33.39 | 8.26 |

| First-Order Models | χ2 | df | χ2/df | CFI | TLI | RMSEA | SRMR |
|---|---|---|---|---|---|---|---|
| FRM | 146.45 | 65 | 2.25 | 0.953 | 0.944 | 0.042 | 0.066 |
| FRA | 140.46 | 65 | 2.16 | 0.942 | 0.930 | 0.041 | 0.079 |
| FRSO | 121.58 | 65 | 1.87 | 0.966 | 0.959 | 0.036 | 0.066 |
| QRNS | 204.67 | 44 | 4.65 | 0.848 | 0.810 | 0.072 | 0.089 |
| NQR | 228.45 | 90 | 2.54 | 0.934 | 0.923 | 0.047 | 0.069 |
| FRR | 110.63 | 54 | 2.05 | 0.971 | 0.965 | 0.040 | 0.057 |
| VSR | 41.74 | 35 | 1.19 | 0.995 | 0.994 | 0.017 | 0.059 |
| PFR | 168.20 | 65 | 2.59 | 0.930 | 0.916 | 0.049 | 0.072 |
| CMRT | 74.31 | 35 | 2.12 | 0.976 | 0.970 | 0.040 | 0.065 |
| VSYM | 53.83 | 35 | 1.54 | 0.951 | 0.937 | 0.028 | 0.064 |
| VSM | 63.02 | 54 | 1.17 | 0.995 | 0.994 | 0.015 | 0.050 |
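
The χ2/df column in the table above is the ratio of each model's χ2 statistic to its degrees of freedom. As a minimal sketch, the ratio can be recomputed from the tabulated χ2 and df values for a few of the subtests. The names `FIT` and `chi2_df_ratio` are illustrative helpers and are not taken from the authors' analysis scripts.

```python
# Recompute the chi-square/df ratio from values reported in the table.
# FIT and chi2_df_ratio are hypothetical names used only for illustration.
FIT = {
    "FRM": (146.45, 65),
    "QRNS": (204.67, 44),
    "VSR": (41.74, 35),
    "VSM": (63.02, 54),
}


def chi2_df_ratio(chi2: float, df: int) -> float:
    """Return chi-square divided by degrees of freedom, rounded to two decimals."""
    return round(chi2 / df, 2)


ratios = {name: chi2_df_ratio(chi2, df) for name, (chi2, df) in FIT.items()}

for name, ratio in ratios.items():
    print(f"{name}: {ratio:.2f}")
```

The recomputed ratios agree with the tabulated column for these four rows, with QRNS showing the largest ratio.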

| Models | χ2 | df | χ2/df | CFI | TLI | RMSEA (90% CI) | SRMR |
|---|---|---|---|---|---|---|---|
| Model 1 | 9202.06 | 8503 | 1.08 | 0.962 | 0.962 | 0.011 (0.009–0.013) | 0.069 |
| Model 2 | 9068.05 | 8497 | 1.07 | 0.969 | 0.969 | 0.010 (0.008–0.012) | 0.067 |
| Model 3 | 9081.33 | 8499 | 1.07 | 0.968 | 0.968 | 0.010 (0.008–0.012) | 0.067 |

| Model | df | χ2 | Δχ2 | Δdf | CFI | TLI | RMSEA (90% CI) | SRMR |
|---|---|---|---|---|---|---|---|---|
| Model 2 vs. | 8497 | 8889.6 | - | - | 0.995 | 0.995 | 0.008 (0.006–0.011) | 0.067 |
| Model 1 | 8503 | 9322.2 | 88.023 *** | 6 | 0.990 | 0.989 | 0.012 (0.010–0.013) | 0.069 |
| Model 3 | 8499 | 8935.9 | 9.6971 ** | 2 | 0.994 | 0.994 | 0.009 (0.006–0.011) | 0.067 |
| Model 3 vs. | 8499 | 8935.9 | - | - | 0.994 | 0.994 | 0.009 (0.006–0.011) | 0.067 |
| Model 1 | 8503 | 9322.2 | 79.339 *** | 4 | 0.990 | 0.989 | 0.012 (0.010–0.013) | 0.069 |
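
The Δdf column above is the difference in degrees of freedom between the two compared models, and the asterisks on Δχ2 mark significance. The sketch below rests on two assumptions that the table itself does not state: the reported Δχ2 values are used as given (they are not the plain difference of the χ2 column, so a scaled difference test may have been applied), and the asterisks are read with the common convention ** p < .01 and *** p < .001. The helper names are illustrative. Because every Δdf here is even, the χ2 upper-tail probability has a closed form and no statistics library is needed.

```python
import math


def delta_df(df_restricted: int, df_reference: int) -> int:
    """Difference in degrees of freedom between two nested models."""
    return df_restricted - df_reference


def chi2_sf_even_df(x: float, df: int) -> float:
    """Upper-tail probability of a chi-square variate (closed form, even df only)."""
    if df <= 0 or df % 2 != 0:
        raise ValueError("closed form requires a positive, even df")
    half = x / 2.0
    terms = sum(half**k / math.factorial(k) for k in range(df // 2))
    return math.exp(-half) * terms


# (label, df of restricted model, df of reference model, reported delta chi-square)
comparisons = [
    ("Model 1 vs. Model 2", 8503, 8497, 88.023),
    ("Model 3 vs. Model 2", 8499, 8497, 9.6971),
    ("Model 1 vs. Model 3", 8503, 8499, 79.339),
]

results = {}
for label, df_r, df_ref, d_chi2 in comparisons:
    d_df = delta_df(df_r, df_ref)
    results[label] = (d_df, chi2_sf_even_df(d_chi2, d_df))
    print(f"{label}: delta df = {d_df}, p = {results[label][1]:.2g}")
```

Under these assumptions the recomputed Δdf values (6, 2, and 4) agree with the table, and the p-values fall in the ranges the asterisks would indicate.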

N (listwise) = 586. EA-MA, EA-GL, and EA-GR are the educational achievement measures.

| Level | Measure | EA-MA | EA-GL | EA-GR |
|---|---|---|---|---|
| Domain level | FR | 0.546 ** | 0.220 ** | 0.318 ** |
| Domain level | QR | 0.487 ** | 0.141 ** | 0.246 ** |
| Domain level | VP | 0.527 ** | 0.228 ** | 0.303 ** |
| Domain level | STM | 0.449 ** | 0.103 * | 0.247 ** |
| Full-scale | FLUX | 0.617 ** | 0.220 ** | 0.345 ** |
| Full-scale | RAVEN-Short | 0.496 ** | 0.194 ** | 0.278 ** |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kijamet, D.; Wollschläger, R.; Keller, U.; Ugen, S. FLUX (Fluid Intelligence Luxembourg): Development and Validation of a Fair Tablet-Based Test of Cognitive Ability in Multicultural and Multilingual Children. J. Intell. 2025, 13, 139. https://doi.org/10.3390/jintelligence13110139
