A Novel Local Dimming Approach by Controlling LCD Backlight Modules via Deep Learning

Abstract

1. Introduction

- (1) A method will be developed to directly generate an HDR image from a single LDR image. Traditional methods, which rely on expert experience or heuristics, often fail to fully handle the poorly exposed pixels in an image, resulting in artifacts. This study therefore aims to achieve higher image reproduction performance through deep learning models.

- (2) Most existing BLD (Backlight Local Dimming) algorithms are not designed for HDR images. These algorithms will therefore be optimized to better suit the display requirements of HDR images.

- (3) The best balance between image quality and power consumption will be sought, reducing image distortion, improving contrast, and lowering overall power consumption.

- (4) Taking the key factors of local dimming techniques into account, an LCD-LED software simulation platform will be developed to quickly evaluate contrast improvements without modifying the actual hardware.

- (5) A set of deep-learning-based local dimming algorithms will be designed to generate backlight images (LED brightness distributions) and LCD pixel images more quickly and effectively.

- (1)

- Develop a software simulator for a backlight module to reduce the cost of hardware modifications.

- (2)

- Propose an algorithm to directly generate HDR images from a single LDR image to overcome the limitation that multiple exposure images cannot be obtained.

- (3)

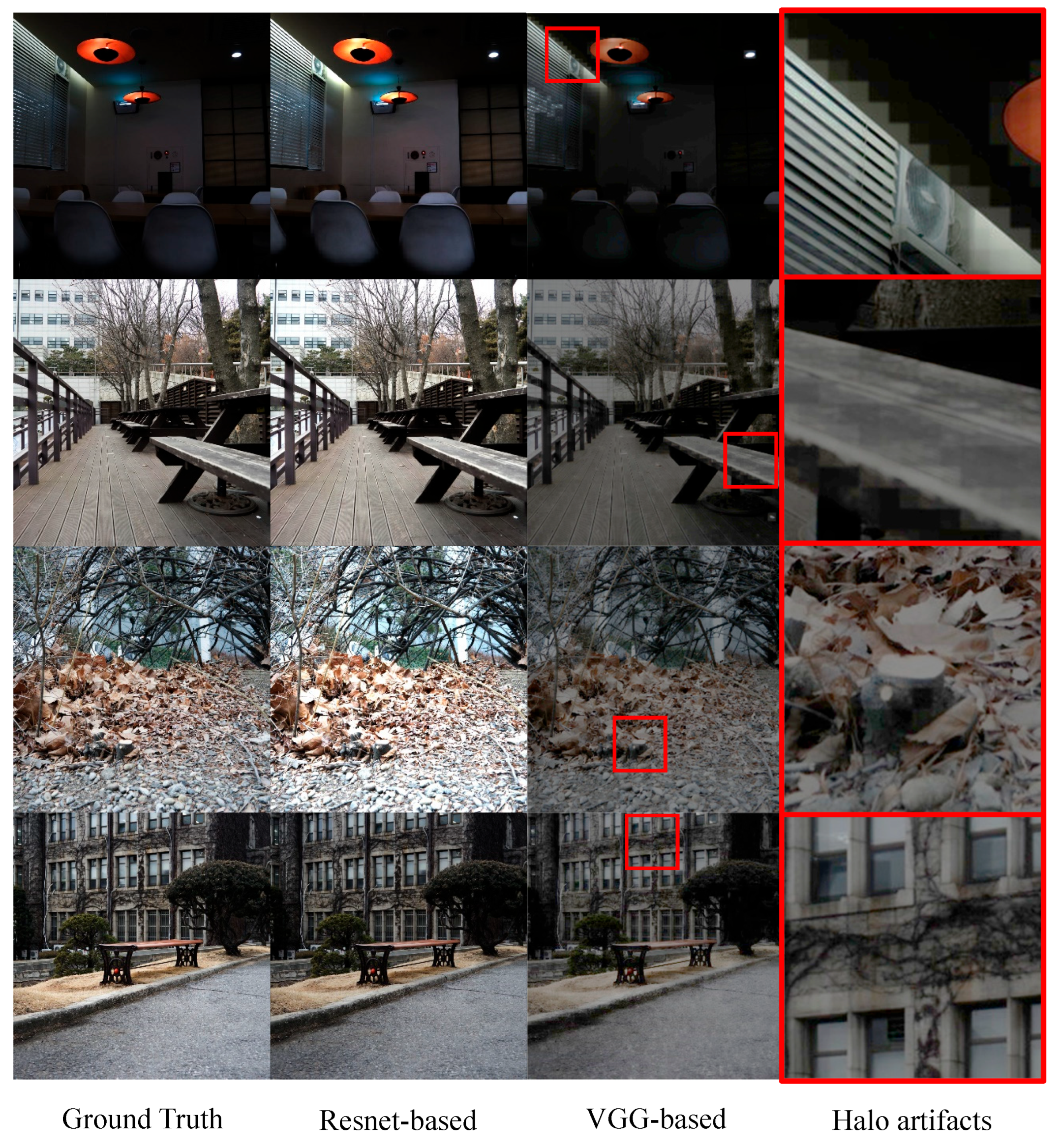

- Use a deep learning method to generate backlight module images and LCD panel images, and halo artifacts are optimized.

2. Related Work

3. Proposed Scheme

3.1. HDR Image Reconstruction

3.1.1. Principle of Image Reconstruction

- (1) There may be no direct correlation between successive LDR image inputs, so HDR reconstruction must rely entirely on a single LDR image. Our technique focuses on recovering the information lost in the saturated regions of the LDR image in order to reconstruct the corresponding HDR image.

- (2) Because traditional convolutional filters are applied in the same way to well-exposed and saturated pixels, they may introduce ambiguity during training, resulting in checkerboard or halo-like artifacts. To solve this problem, this study adopts the feature masking method of Santos et al. [43] to reduce the effect of features generated from saturated regions.

- (3)

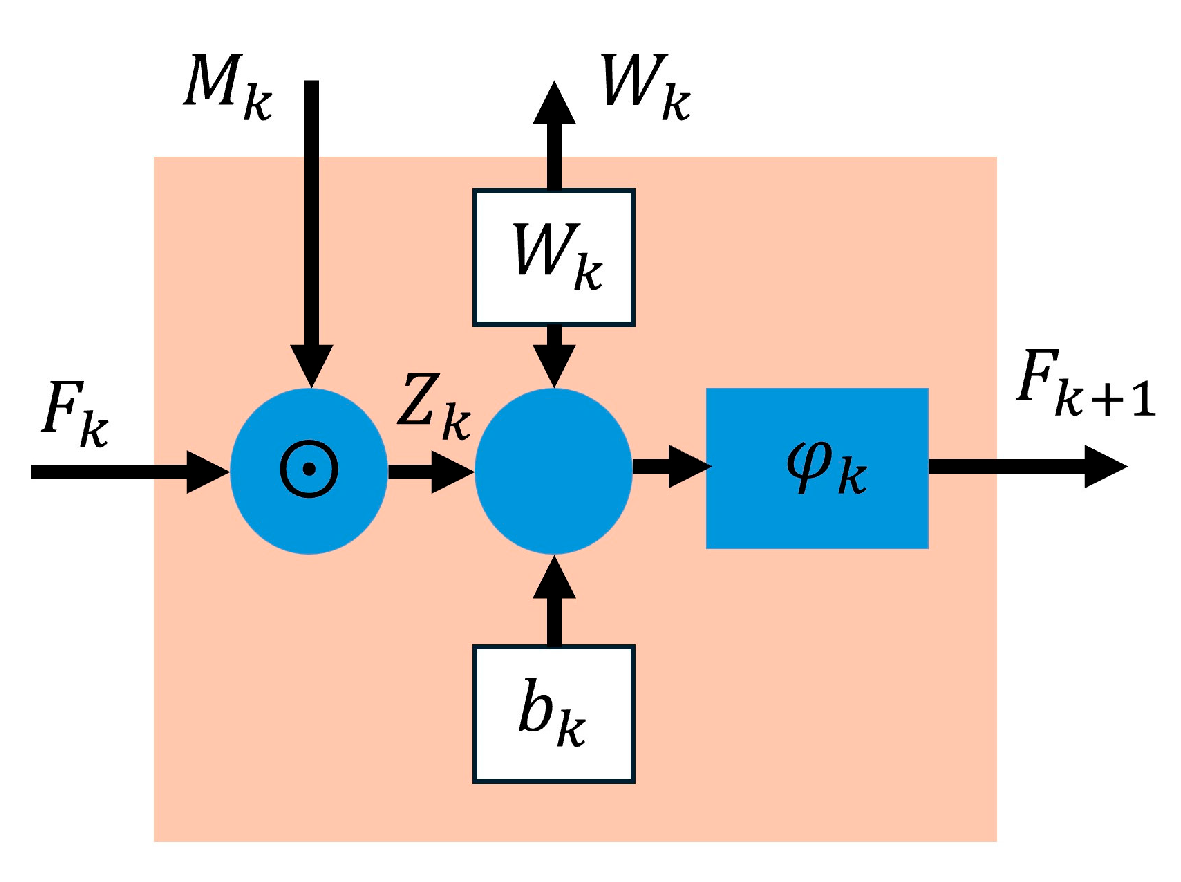

- Inspired by image restoration techniques, this study considered using the perceived loss function proposed by Gatys et al. [44] and adjusting this function to meet the requirements of HDR image reconstruction. By minimizing this perceptual loss during the training phase, the deep learning model can synthesize visually realistic textures in saturated regions.Based on the concept of gamma correction, the input LDR image L (color range defined at [0, 1]) can be converted to adjust the brightness of the pixels. The masking weight M obtained from the deep learning network (the numerical range is defined at [0, 1]) can be used to effectively suppress the influence of saturated pixels in the image, so the reconstructed HDR image can be represented as follows:where is the input LDR image (normalized to [0, 1]), γ is the gamma correction factor (γ > 1), ⊙ represents the pixel-wise product (Hadamard product), M is the feature mask generated by the deep learning network with values in [0, 1], and is the predicted HDR image reconstructed by the deep learning network. The first term and the second term on the right side of Equation (1) define the contribution from the normal exposure area and the saturated area, respectively.
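The blending in Equation (1) can be illustrated with a minimal NumPy sketch. It assumes images stored as float arrays of shape (H, W, 3) and an assumed γ = 2.2. In the paper, $M$ comes from the network; the helper `soft_saturation_mask` below is a hypothetical stand-in that ramps linearly from 1 to 0 above the threshold t = 0.8 listed in the hyperparameter table, used here only so the example runs without a trained model.

```python
import numpy as np


def soft_saturation_mask(ldr: np.ndarray, t: float = 0.8) -> np.ndarray:
    """Hypothetical stand-in for the network mask M (values in [0, 1]).

    Returns 1 for well-exposed pixels and ramps linearly down to 0 as the
    brightest channel rises from the threshold t to 1 (full saturation).
    """
    peak = ldr.max(axis=-1, keepdims=True)                 # shape (H, W, 1)
    saturation = np.clip((peak - t) / (1.0 - t), 0.0, 1.0)
    return 1.0 - saturation


def reconstruct_hdr(ldr: np.ndarray, mask: np.ndarray,
                    y_hat: np.ndarray, gamma: float = 2.2) -> np.ndarray:
    """Blend per Equation (1): H = M * L**gamma + (1 - M) * Y_hat."""
    linearized = np.power(ldr, gamma)                      # gamma-expanded LDR input
    return mask * linearized + (1.0 - mask) * y_hat        # mask broadcasts over channels
```

With this form, a well-exposed pixel (M = 1) keeps its gamma-expanded LDR value, while a fully saturated pixel (M = 0) takes the network prediction entirely.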

3.1.2. Deep Learning Model

3.1.3. Loss Function

- (a) Loss function $\mathcal{L}_{VGG}$: This term evaluates how well the features extracted from the reconstructed image match those extracted from the real image, inducing the model to generate textures that resemble the real image at the perceptual level. The loss term is defined as follows:

$$\mathcal{L}_{VGG} = \sum_{k} \left\lVert F_k\big(R(\tilde{H})\big) - F_k\big(R(H)\big) \right\rVert_1$$

where $F_k$ represents the k-th layer feature map of the VGG-19 network, $H$ is the real HDR image, and $R(\cdot)$ is a range compression function that maps each value to [0, 1]. Moreover, the image $\tilde{H}$ combines the content of the well-exposed areas of the real image with the saturated areas of the network output $\hat{Y}$, weighted by the feature mask $M$:

$$\tilde{H} = M \odot H + (1 - M) \odot \hat{Y}$$

- (b) Style loss $\mathcal{L}_S$: To capture the color style and texture of the whole image, the features are compared through Gram matrices [45], as follows:

$$\mathcal{L}_S = \sum_{k} \left\lVert G_k\big(R(\tilde{H})\big) - G_k\big(R(H)\big) \right\rVert_1$$

in which $G_k$ is the Gram matrix of the k-th layer features of the VGG-19 network, defined by the following:

$$G_k(X) = \frac{1}{N_k} F_k(X)^{\top} F_k(X)$$

where $N_k = C_k H_k W_k$ is the normalization constant of the feature map $F_k$. The feature map $F_k$ is treated as an array of size $(H_k W_k) \times C_k$, so $G_k$ has size $C_k \times C_k$.
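The two loss terms above can be sketched in NumPy as follows. This is an illustration under stated assumptions, not the authors' training code: the range compression $R(\cdot)$ is assumed to be a μ-law curve with μ = 5000, the norms are assumed to be ℓ1, and VGG-19 is replaced by a caller-supplied `extract_features` function (hypothetical) that returns a list of (H_k, W_k, C_k) feature maps, so the example needs no pretrained weights.

```python
import numpy as np


def mu_law(x: np.ndarray, mu: float = 5000.0) -> np.ndarray:
    """Assumed range compression R(.): maps values clipped to [0, 1] into [0, 1]."""
    return np.log1p(mu * np.clip(x, 0.0, 1.0)) / np.log1p(mu)


def masked_target(h_true: np.ndarray, y_hat: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """H_tilde = M * H + (1 - M) * Y_hat: ground truth where well exposed."""
    return mask * h_true + (1.0 - mask) * y_hat


def gram_matrix(feat: np.ndarray) -> np.ndarray:
    """G_k = F_k^T F_k / (C_k H_k W_k) for a feature map of shape (H_k, W_k, C_k)."""
    h, w, c = feat.shape
    f = feat.reshape(h * w, c)                 # (H_k W_k) x C_k
    return f.T @ f / (c * h * w)               # C_k x C_k


def perceptual_and_style_losses(h_true, y_hat, mask, extract_features):
    """Return (L_VGG, L_S) as l1 distances summed over feature layers.

    extract_features is a hypothetical stand-in for VGG-19: it maps an
    (H, W, 3) image to a list of (H_k, W_k, C_k) feature maps.
    """
    feats_pred = extract_features(mu_law(masked_target(h_true, y_hat, mask)))
    feats_true = extract_features(mu_law(h_true))
    l_vgg = sum(np.abs(fp - ft).sum() for fp, ft in zip(feats_pred, feats_true))
    l_style = sum(np.abs(gram_matrix(fp) - gram_matrix(ft)).sum()
                  for fp, ft in zip(feats_pred, feats_true))
    return float(l_vgg), float(l_style)
```

Because $\tilde{H}$ copies the ground truth wherever $M = 1$, both losses are driven only by the saturated regions, which is where the network has to hallucinate texture.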

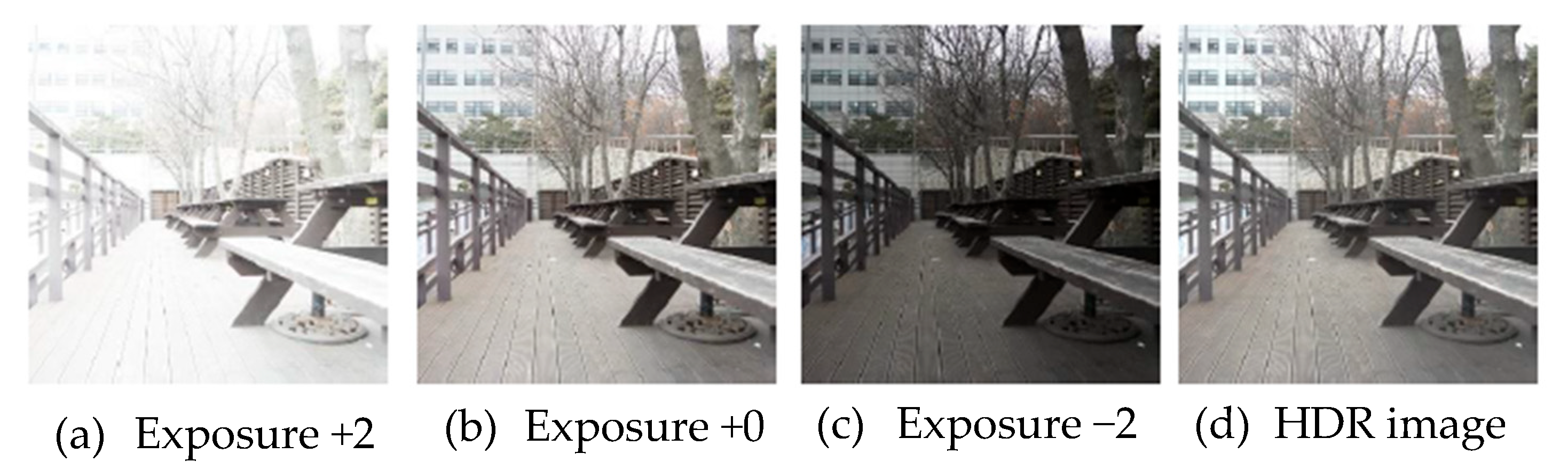

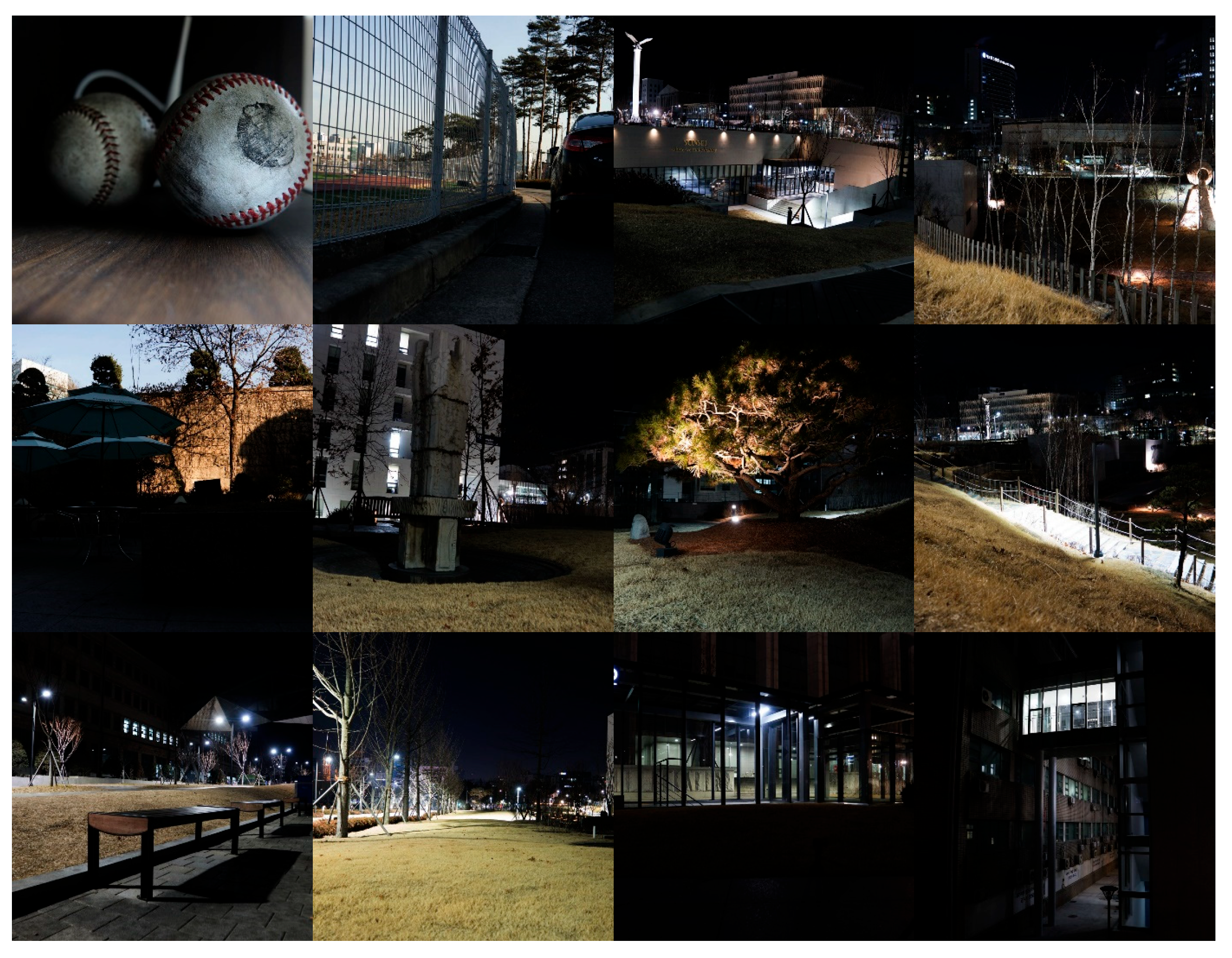

3.1.4. Training Dataset

3.2. Local Dimming

3.2.1. Principle of Local Dimming

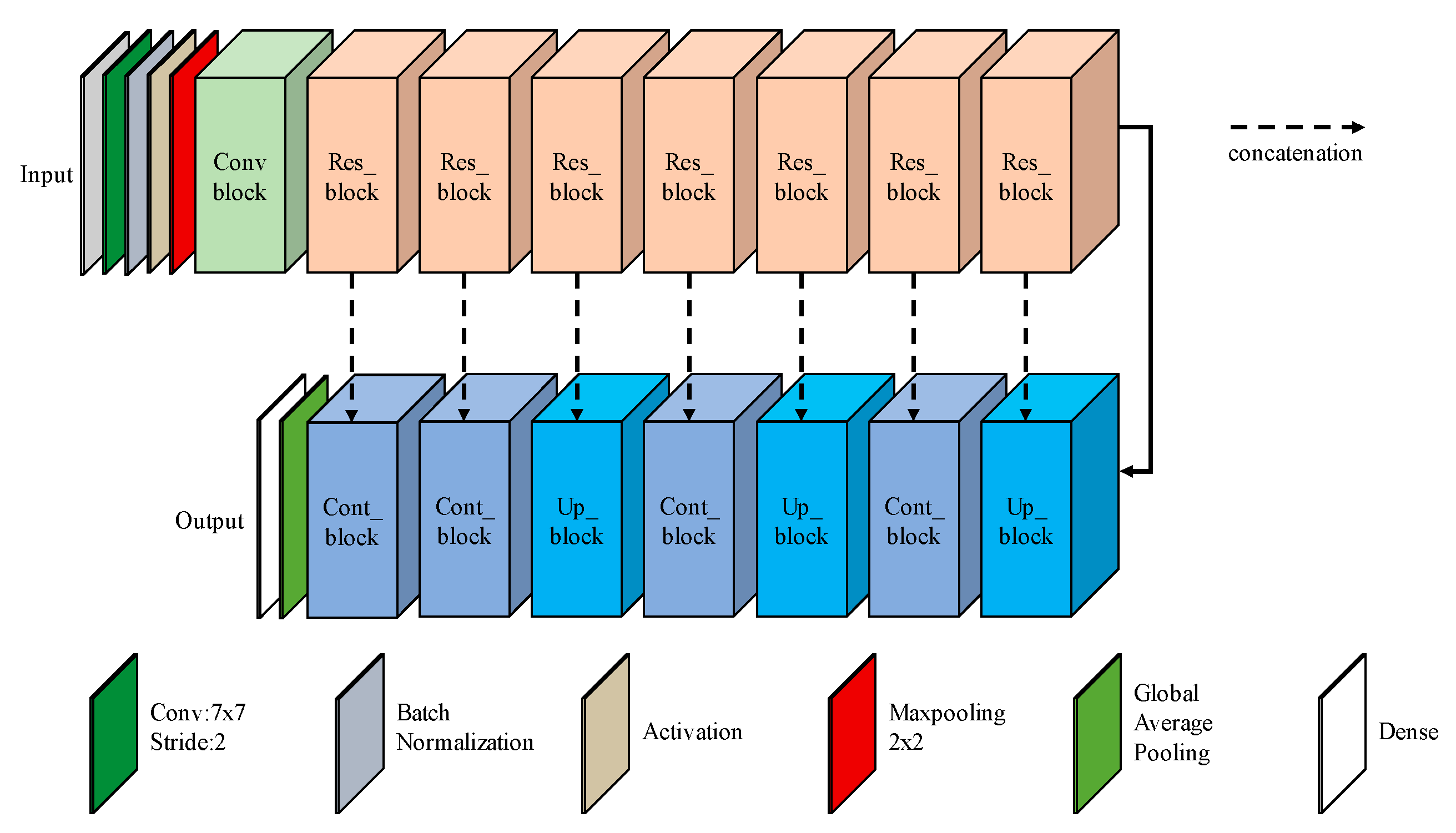

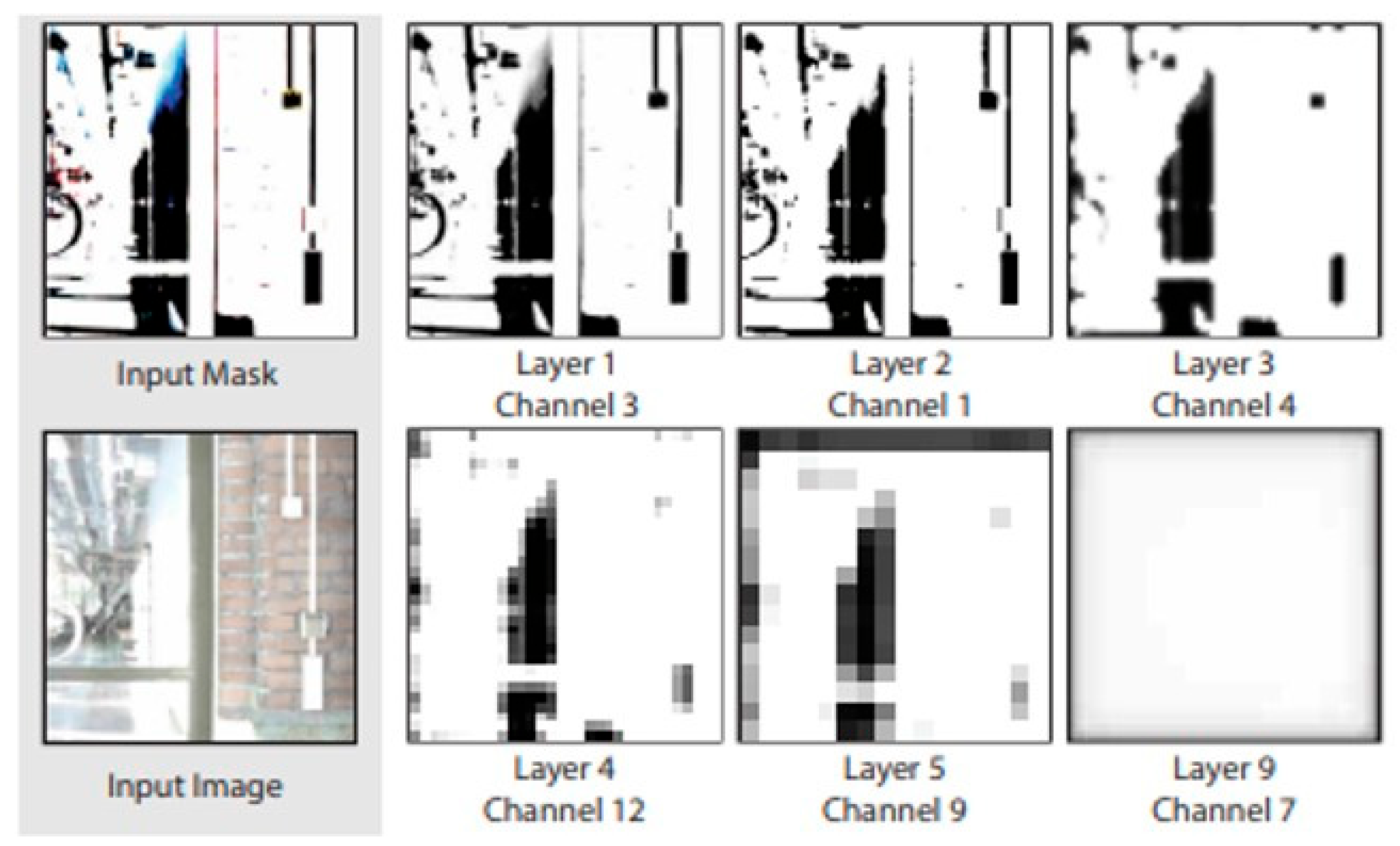

3.2.2. Deep Learning Model

- (1)

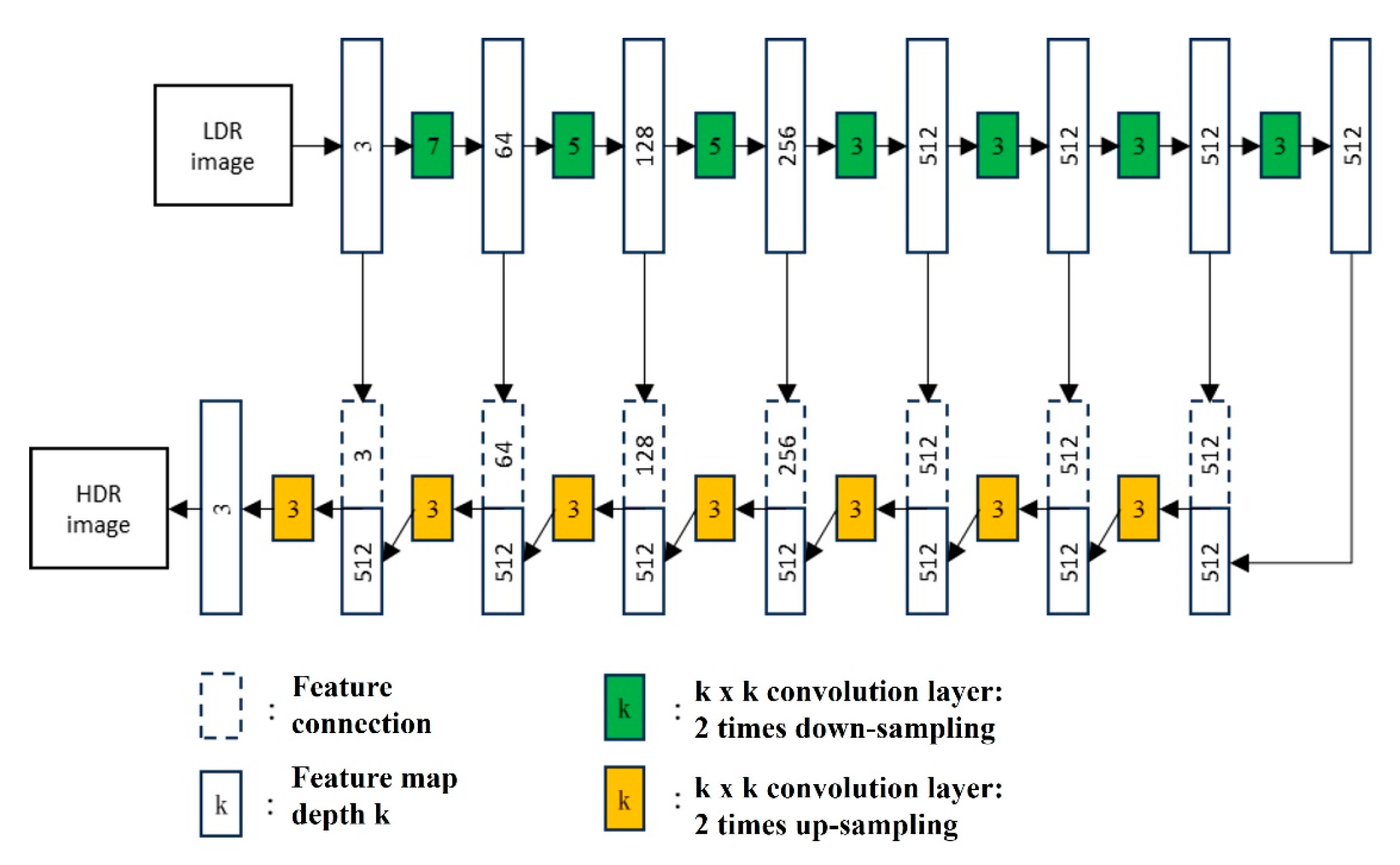

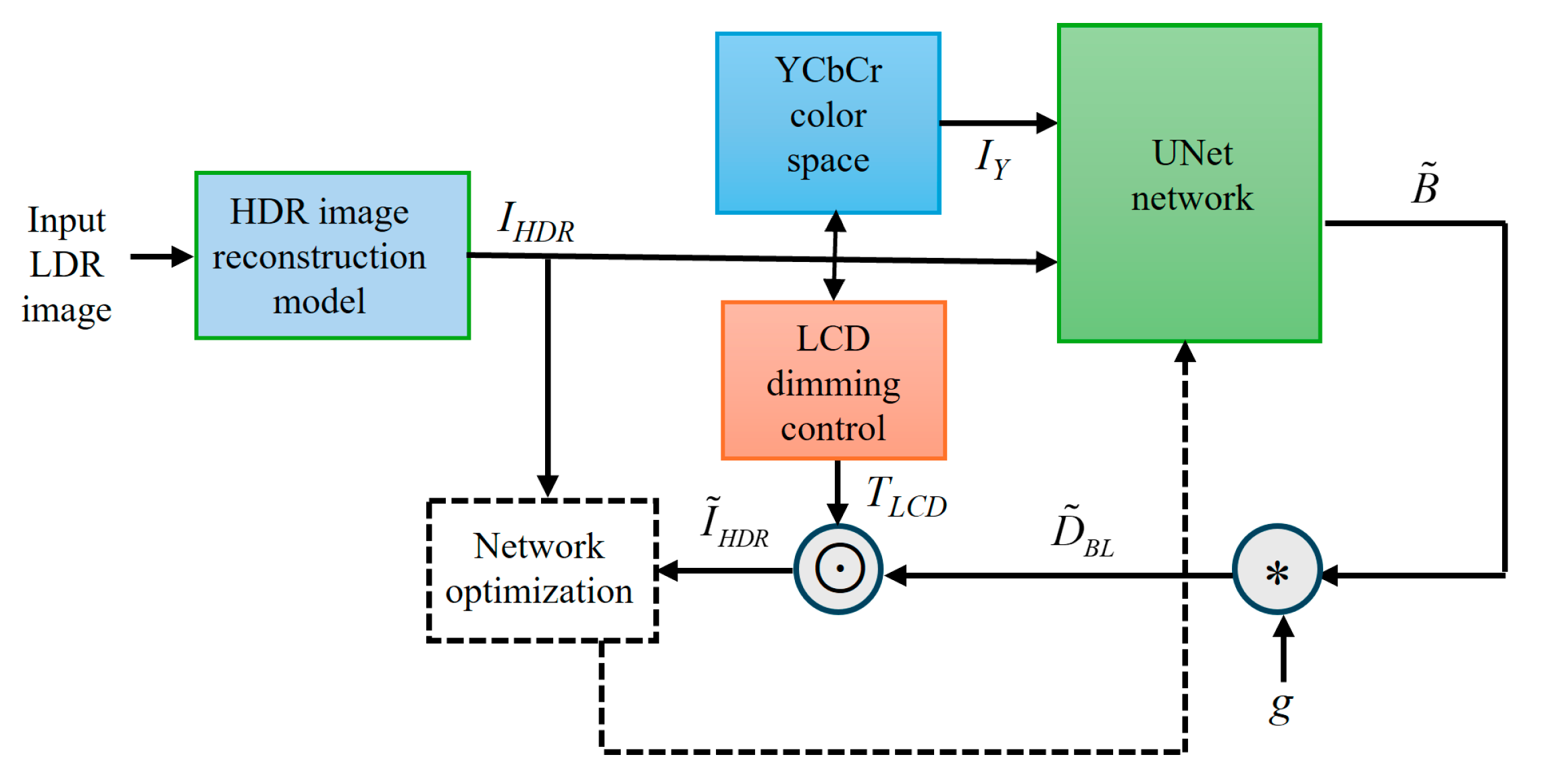

- UNet networkAs shown in Figure 9, the purpose of UNet is to combine and optimize the BLD features of HDR images by minimizing the loss function. The UNet architecture consists of two main parts, an encoder and a decoder, both of which are made up of multiple convolutional layers. The encoder down-samples features stage by stage until a low-resolution threshold is reached, and then the decoder starts upsampling the features stage by stage. Under each resolution, features from the encoder are propagated directly to the decoder, which can effectively combine image information at multiple scales and accelerate the convergence of optimization. UNet can process image information at multiple scales; therefore, a large field of perception can be obtained. At the same time, due to the use of downsampling, most of the computation can be performed under lower resolution. Therefore, only lower computing power is in need of being compared to other architectures.The encoder adopted in this study is a residual network with 18 convolutional layers [49]. The residual network consists of residual blocks in which the computational output of each block is added to its inputs, allowing for better gradient flow and improved training of deeper networks. Apart from the first layer with a convolutional kernel size of 7 × 7 and stride = 1 for residual-connection convolutions, all other convolutional layers use a convolutional kernel of 3 × 3. The decoder includes five upsampling layers that use bilinear upsampling followed by the process of three deconvolution kernels, normalization, and activation functions. At the same time, the features spanned from the encoder are matched and connected on each layer. ReLU activation is used for encoders and decoders, as well as normalization to help optimize convergence.The network input is composed of three RGB channels and one brightness channel of the HDR image, and the color brightness I of all channels is in the range of [0, 1]. 
The network output is a full-resolution single-channel image of backlight prediction ∈ [0, 1], which is the result of the sigmoid function after the final convolution operation. Since the training of the depth model only relies on the input HDR images, the HDR image pairs of the NTIRE 2021 and LDR-HDR-pair datasets can be directly used.

- (2)

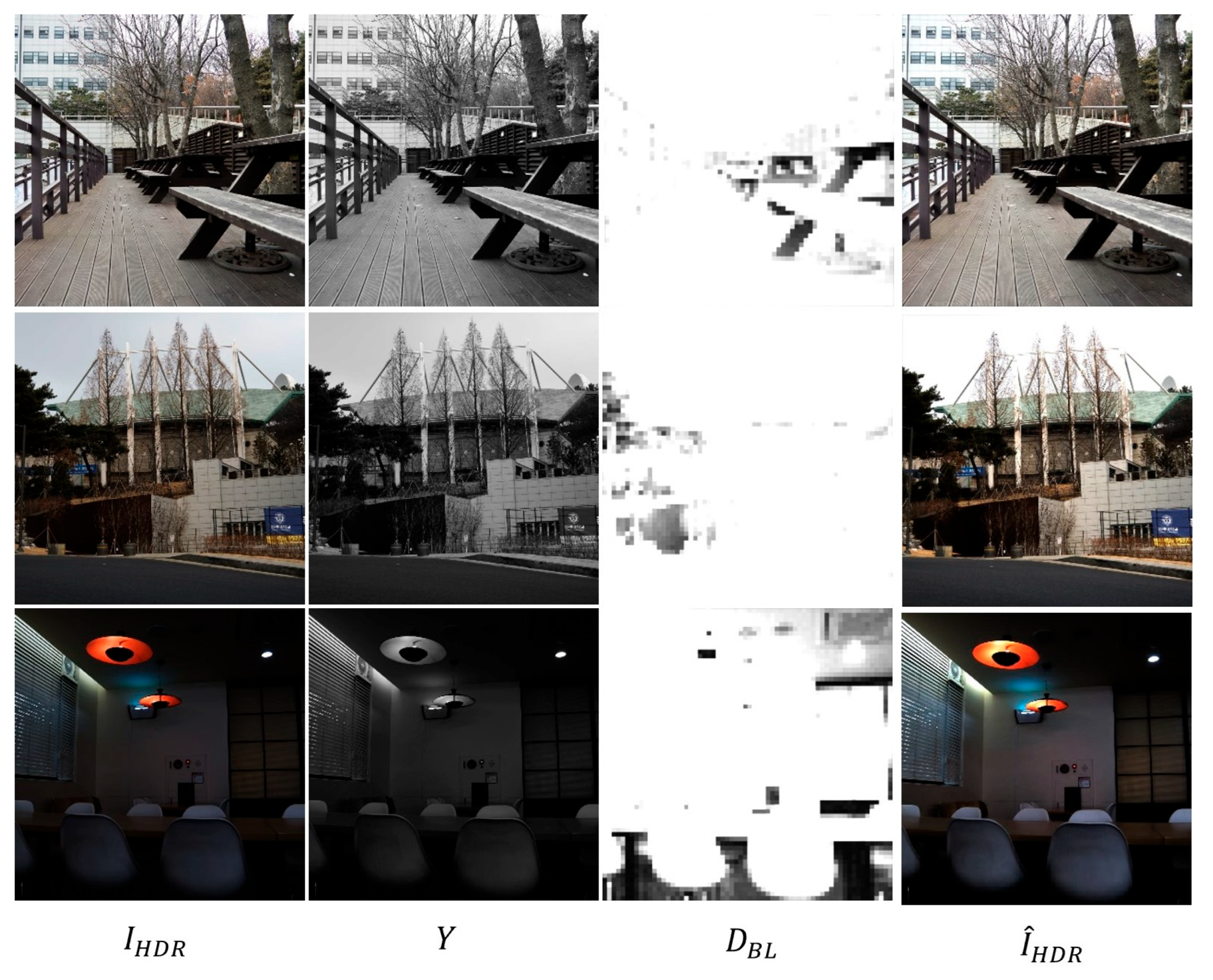

- BLD image reconstructionBased on the backlight value of the backlight image , the simulated backlight intensity of the diffuser can be further estimated. The effect of diffusion panel g is defined using the Point Spread Function (PSF), and the BLD image can be written as follows:where Wg and Hg are the width and height of the PSF filter, respectively. The final backlight prediction needs to match the number of LEDs in the backlight module of the target LCD. The backlight image is evenly divided into many rectangular blocks according to the number and position of LEDs, and the pixel brightness in each block is replaced by an average value.

- (3) HDR image reconstruction: The purpose of this step is to reconstruct the HDR image to be displayed from the backlight image and the LCD panel image. Theoretically, the image displayed by the LCD can be defined as follows:

$$I_{\mathrm{display}} = \mathrm{BLD} \odot T_{\mathrm{LCD}}$$

in which $\mathrm{BLD}$ is the BLD image, $\odot$ denotes the pixel-wise product, and $T_{\mathrm{LCD}}$ is the light transmittance of the LCD panel, driven by the grayscale value of each pixel in each color channel of the LCD panel image.
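The three steps above (block averaging to LED zones, PSF diffusion, and modulation by LCD transmittance) can be sketched as a small simulation pipeline. This is a minimal sketch under assumptions not stated in the paper: the PSF is taken to be a normalized Gaussian (σ = 4 is hypothetical; only the 15 × 15 size comes from the hyperparameter table), the image dimensions are assumed divisible by the LED grid, and borders are handled by edge padding.

```python
import numpy as np


def block_average(backlight: np.ndarray, rows: int, cols: int):
    """Replace each LED zone by its mean; returns (LED levels, full-resolution image)."""
    h, w = backlight.shape
    if h % rows or w % cols:
        raise ValueError("image size must be divisible by the LED grid (assumption)")
    zones = backlight.reshape(rows, h // rows, cols, w // cols)
    levels = zones.mean(axis=(1, 3))                        # (rows, cols) LED drive levels
    full = np.repeat(np.repeat(levels, h // rows, axis=0), w // cols, axis=1)
    return levels, full


def gaussian_psf(size: int = 15, sigma: float = 4.0) -> np.ndarray:
    """Assumed diffuser PSF g: a normalized size x size Gaussian."""
    ax = np.arange(size) - (size - 1) / 2.0
    g = np.exp(-(ax[:, None] ** 2 + ax[None, :] ** 2) / (2.0 * sigma ** 2))
    return g / g.sum()


def convolve2d_same(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """'Same'-size filtering with edge padding (equals convolution for symmetric kernels)."""
    kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    padded = np.pad(image, ((ph, ph), (pw, pw)), mode="edge")
    out = np.zeros(image.shape, dtype=float)
    for i in range(kh):
        for j in range(kw):
            out += kernel[i, j] * padded[i:i + image.shape[0], j:j + image.shape[1]]
    return out


def simulate_display(backlight, t_lcd, rows, cols, psf):
    """LED levels -> diffused BLD image -> displayed image BLD * T_LCD (per channel)."""
    levels, full = block_average(backlight, rows, cols)
    bld = convolve2d_same(full, psf)
    return levels, bld, bld[..., None] * t_lcd
```

For example, `simulate_display(b_hat, t_lcd, 4, 6, gaussian_psf(15))` would model a hypothetical 4 × 6 LED array; a real panel would use its measured PSF and LED layout instead.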

3.2.3. Loss Function

4. Experiments and Evaluation

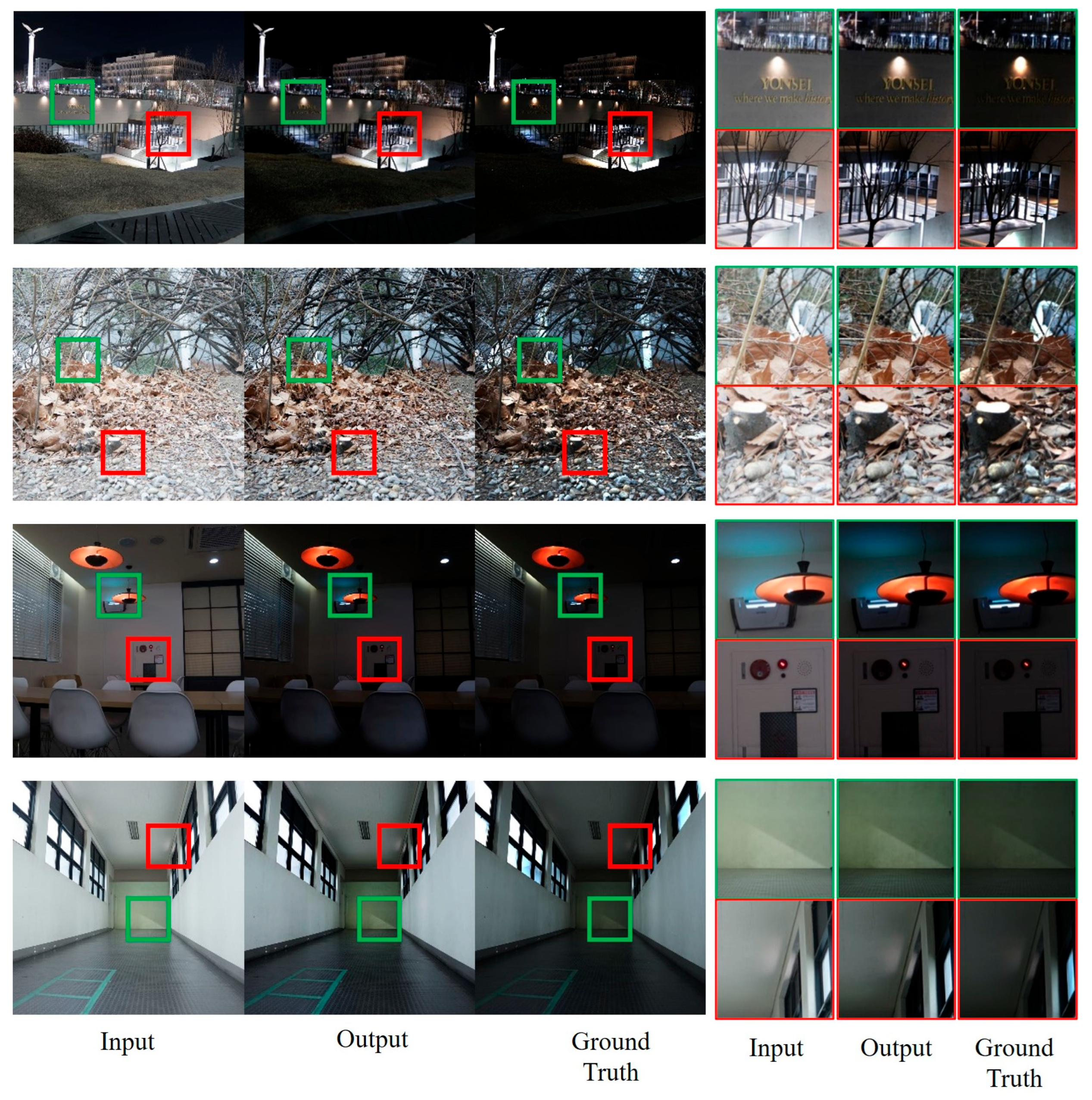

4.1. HDR Image Reconstruction

4.1.1. Preserving Image Display Details with HDR Quality

4.1.2. The Credibility of the Reconstructed HDR Image

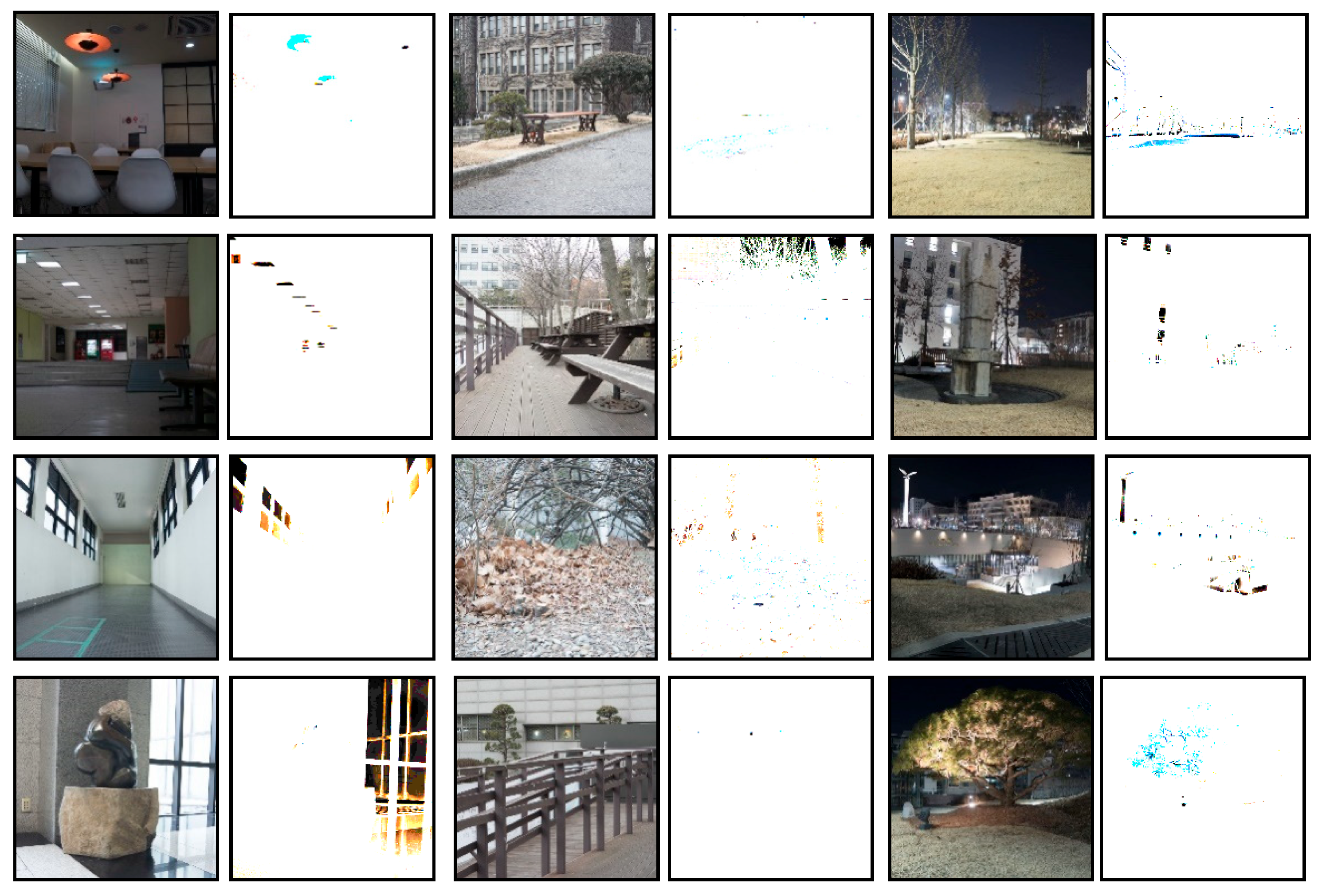

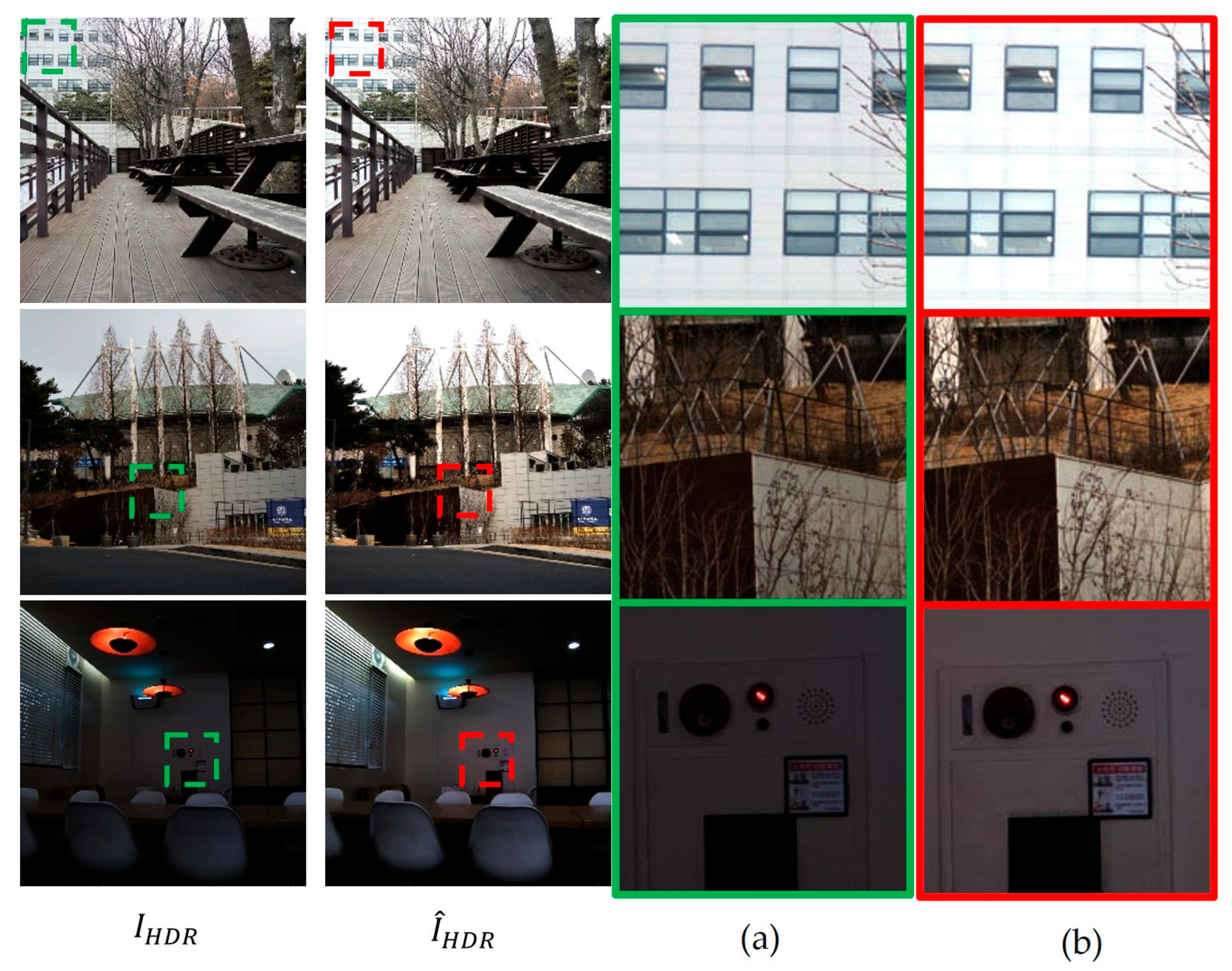

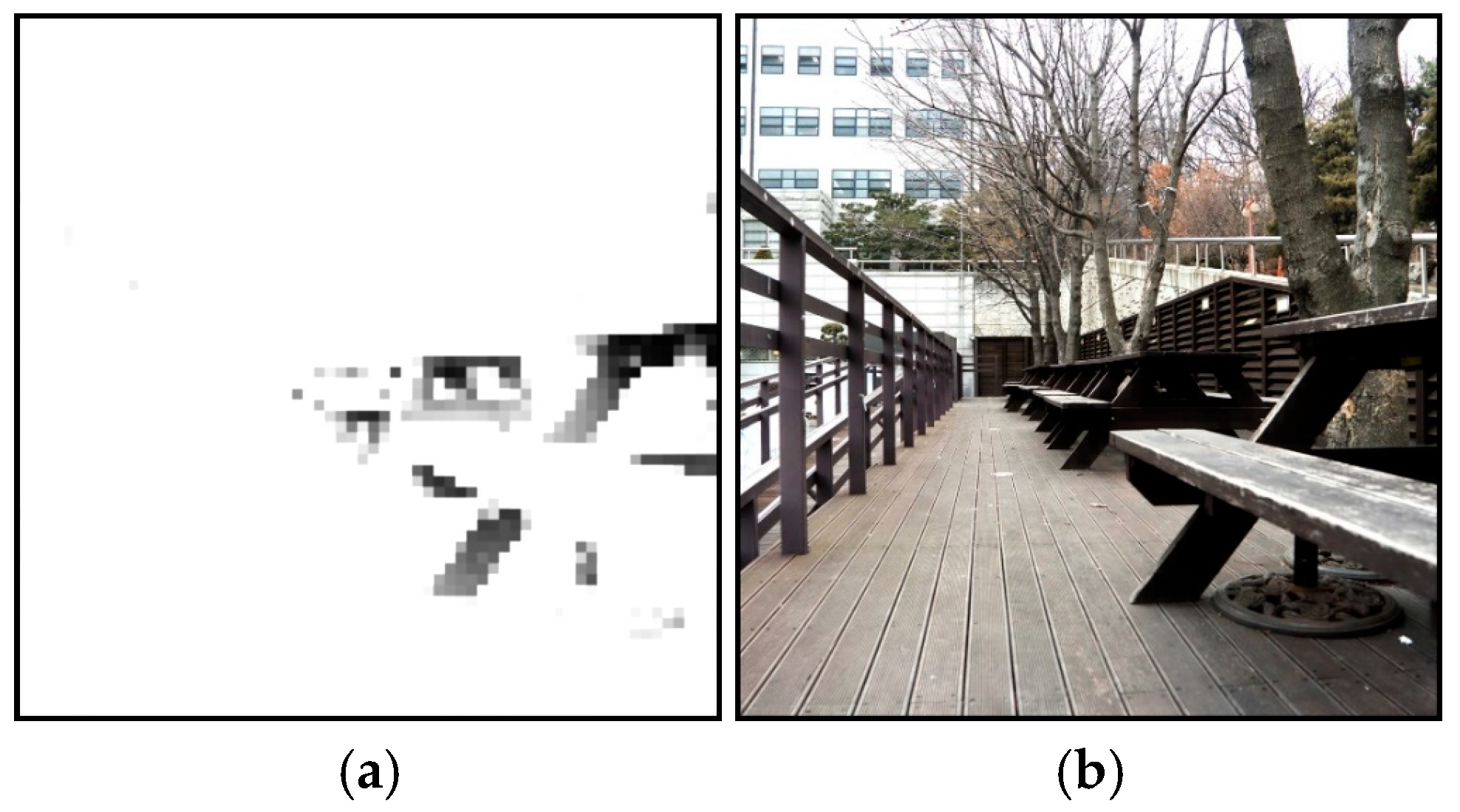

4.2. Backlight Local Dimming

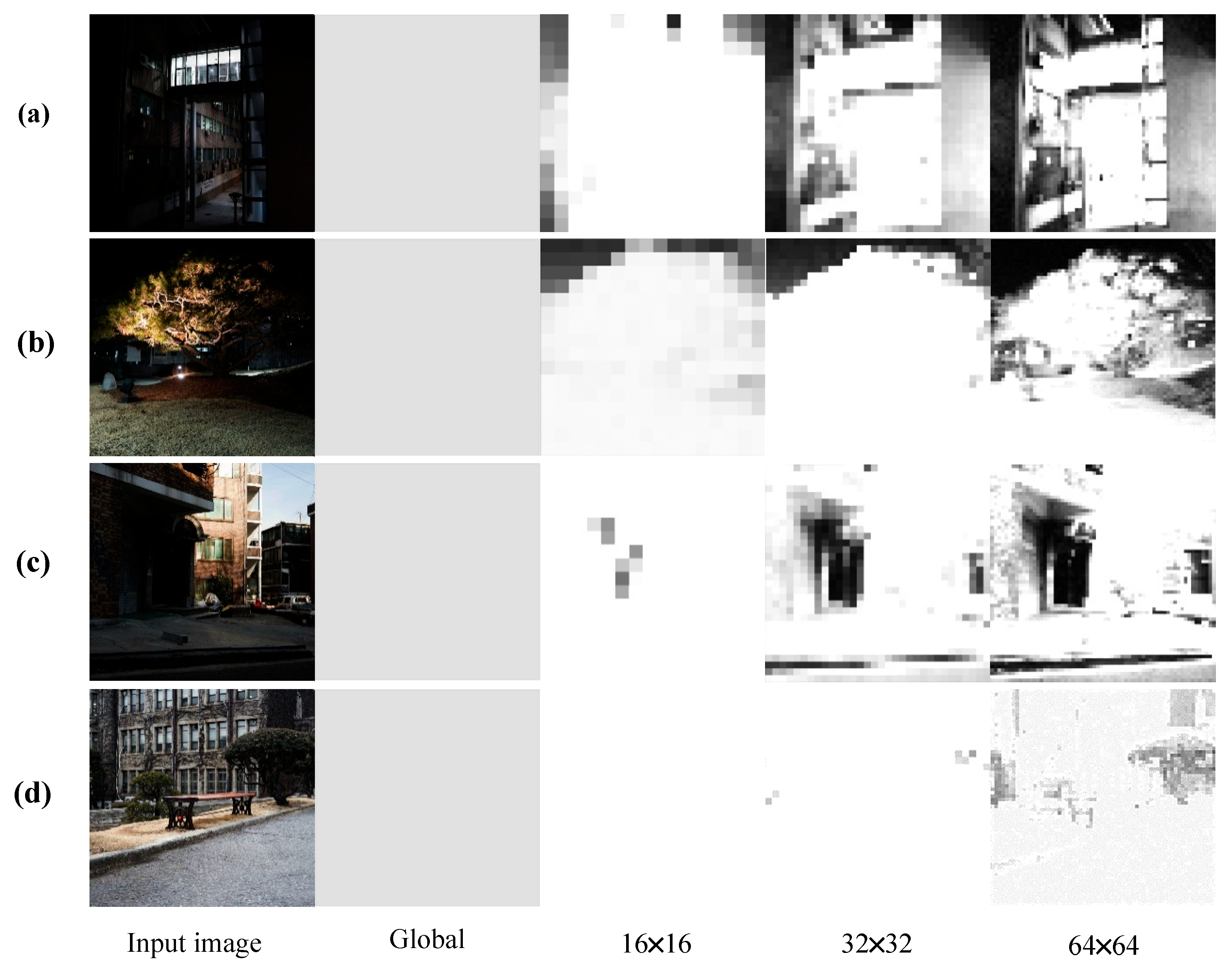

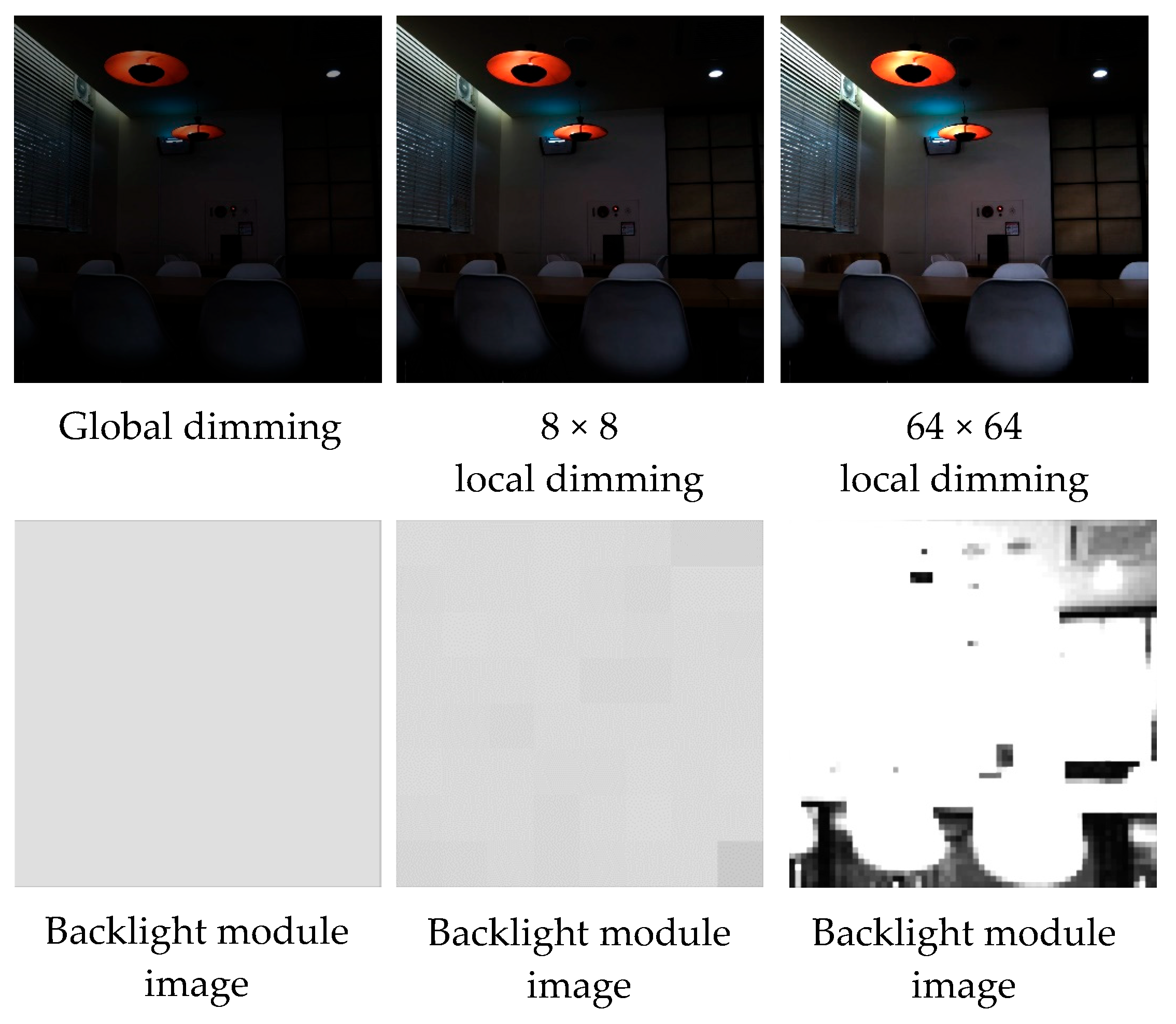

4.2.1. Simulation of Local Dimming of Backlight Module

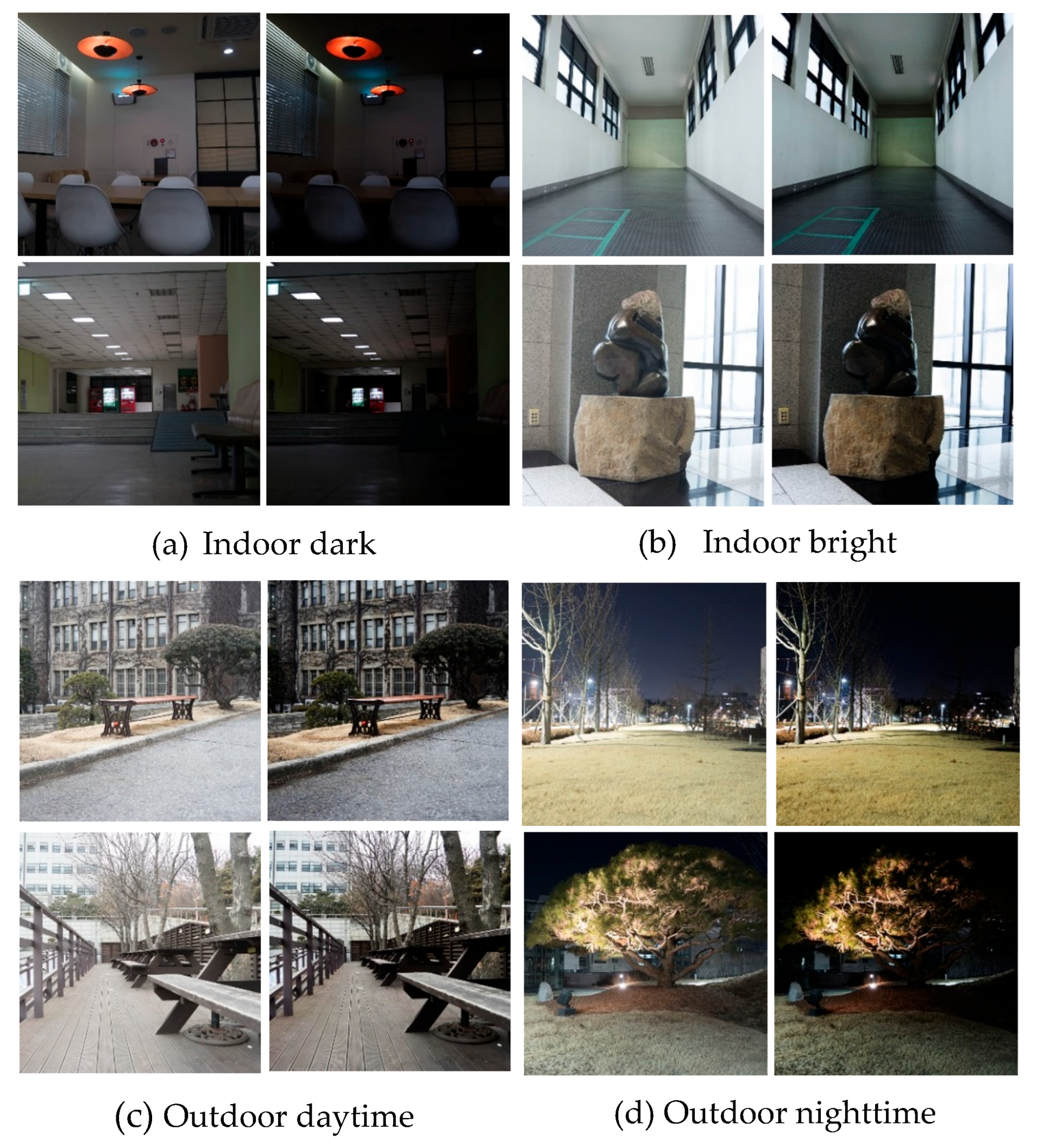

4.2.2. Local Dimming Function

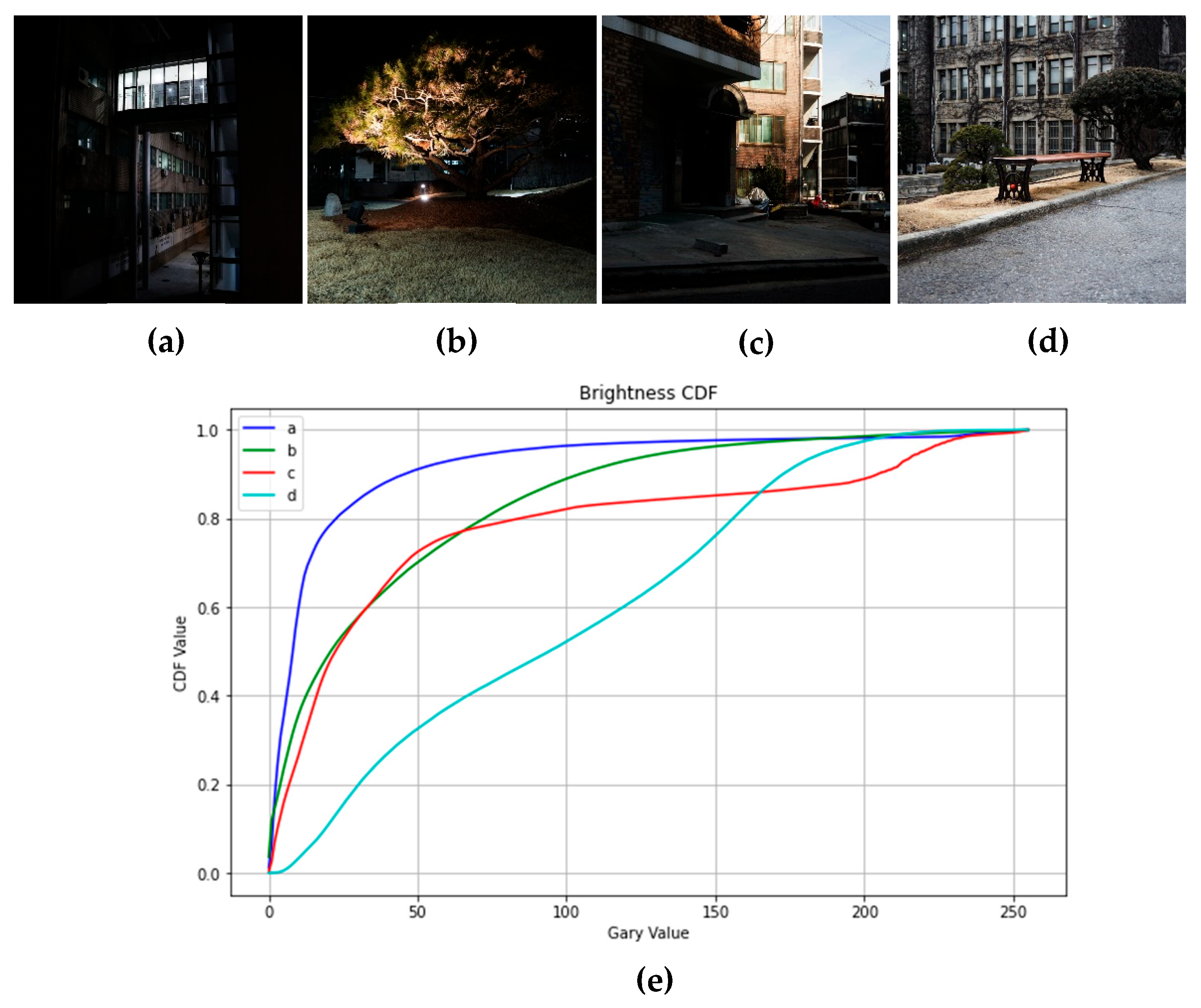

- (1) The CDF of the image with a dark background (Figure 17a) may show that most pixels are concentrated at low luminance values, with a section that rises rapidly to 1, indicating a small number of high-brightness pixels.

- (2) The CDF of the low-luminance image (Figure 17b) may also be concentrated in the low-luminance region, but its rise to 1 is smoother than that of the dark-background image.

- (3) The CDF of the high-contrast image (Figure 17c) may show one or more distinct jumps, indicating significant contrast in the image.

- (4) The CDF of the image with a uniform brightness distribution (Figure 17d) may rise gently and steadily, indicating that its brightness values are evenly distributed.
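The luminance CDFs described above can be computed with a short NumPy sketch. It assumes RGB values normalized to [0, 1] and uses the Rec. 709 luma weights (0.2126, 0.7152, 0.0722), which are an assumption since the paper does not state how luminance is derived; the 256-bin resolution is likewise a hypothetical choice.

```python
import numpy as np

# Assumed Rec. 709 luma weights; the paper does not specify its luminance formula.
REC709 = np.array([0.2126, 0.7152, 0.0722])


def luminance(rgb: np.ndarray) -> np.ndarray:
    """Weighted sum of the R, G, B channels of an (H, W, 3) image."""
    return rgb @ REC709


def luminance_cdf(rgb: np.ndarray, bins: int = 256):
    """Empirical CDF of luminance: returns (upper bin edges, cumulative fraction)."""
    y = np.clip(luminance(rgb), 0.0, 1.0).ravel()
    hist, edges = np.histogram(y, bins=bins, range=(0.0, 1.0))
    cdf = np.cumsum(hist) / y.size                  # reaches 1.0 at the last bin
    return edges[1:], cdf
```

A dark-background image yields a CDF that reaches 1 within the first few tens of bins, whereas an image with uniformly distributed brightness yields a nearly linear curve.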

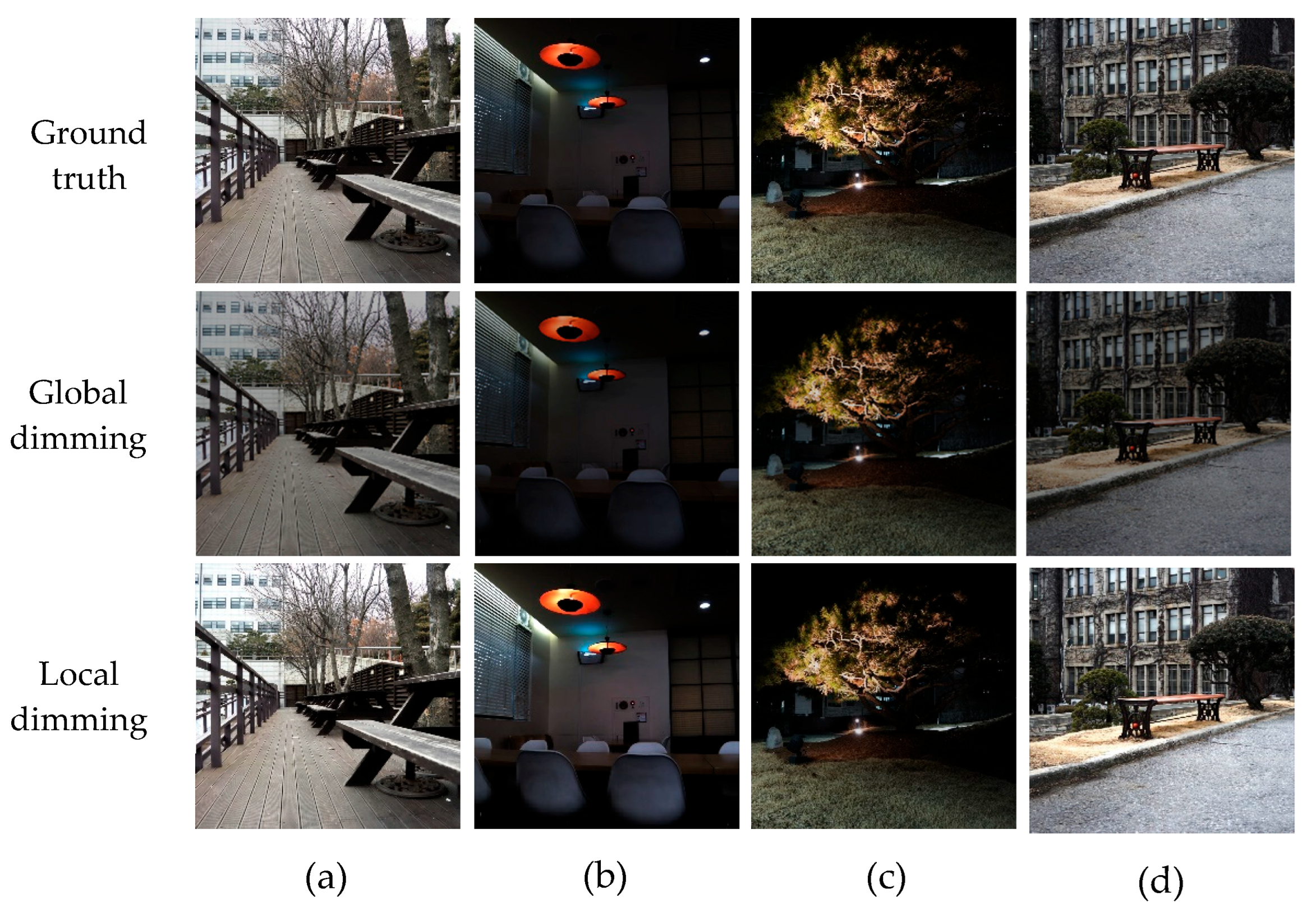

4.2.3. Performance Evaluation Results

- (1) The higher the PSNR value, the lower the distortion and the better the image quality. For three of the four image types, local dimming yields a higher PSNR than global dimming; only for image (b) does global dimming achieve a higher PSNR. However, local dimming preserves more detail than global dimming in image (b), which is an advantage for HDR images.

- (2) SSIM is an index that measures the similarity of two images, used especially to assess distortion introduced by image compression or other image processing. Unlike PSNR, SSIM accounts for characteristics of the human visual system and better reflects the subjective perception of image quality. Here, local dimming yields SSIM values closer to 1 than global dimming for images (a), (b), and (d), and the same value (0.86) for image (c).

- (3) CD measures the difference between two colors and is usually calculated in a specific color space, such as CIELAB. The smaller the color difference, the more similar the two colors. Here, averaged over the four image types, the CD values of local dimming are slightly higher than those of global dimming. This is because local dimming increases the contrast of the HDR image, which shifts the colors.

- (4) BLU power refers to the power consumption of the backlight unit; the higher the value, the greater the power consumption. Here, local dimming yields lower BLU values than global dimming for all four image types.
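Three of the four metrics can be sketched in a few lines of NumPy. The sketch assumes images scaled to [0, 1] for PSNR and CIELAB inputs for the color difference (CIE76 is used, matching the metric named in the model-comparison table; the RGB-to-Lab conversion is omitted). The BLU power function is a hypothetical proxy (mean normalized LED drive level), since the paper does not give its power formula. SSIM is omitted for brevity; an established implementation such as `skimage.metrics.structural_similarity` can be used instead.

```python
import numpy as np


def psnr(reference: np.ndarray, test: np.ndarray, peak: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB for images scaled to [0, peak]."""
    mse = np.mean((np.asarray(reference, float) - np.asarray(test, float)) ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(peak ** 2 / mse))


def cie76(lab_ref: np.ndarray, lab_test: np.ndarray) -> float:
    """Mean CIE76 color difference: Euclidean distance in CIELAB, averaged over pixels."""
    return float(np.mean(np.linalg.norm(lab_ref - lab_test, axis=-1)))


def blu_power_proxy(led_levels: np.ndarray) -> float:
    """Hypothetical BLU power proxy: mean normalized LED drive level (0 = off, 1 = full)."""
    return float(np.mean(led_levels))
```

Under this proxy, global dimming drives every LED at the same level, so any zone that local dimming can turn down lowers the mean and hence the estimated backlight power.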

4.3. Discussion: Dataset Size and Generalization

4.4. Algorithm Complexity Analysis

5. Conclusions

- (1) A software simulator for backlight modules is developed to reduce the cost of hardware modifications.

- (2) An algorithm is proposed to directly generate a corresponding HDR image from a single LDR image, overcoming the limitation when multiple-exposure images cannot be obtained.

- (3) A deep learning method is used to generate the backlight module image and the LCD panel image, and halo artifacts are mitigated.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Kang, S.J. Image-quality-based power control technique for organic light emitting diode displays. J. Disp. Technol. 2015, 11, 104–109.

- Wang, X.; Jang, C. Backlight scaled contrast enhancement for liquid crystal displays using image key-based compression. In Proceedings of the IEEE International Conference on Visual Communications and Image Processing (VCIP), Chengdu, China, 27–30 November 2016; pp. 1–4.

- Zhang, T.; Wang, H.; Du, W.; Li, M. Deep CNN-based local dimming technology. Appl. Intell. 2022, 52, 903–915.

- Liao, L.Y.; Chen, C.W.; Huang, Y.P. Local blinking HDR LCD systems for fast MPRT with high brightness LCDs. J. Disp. Technol. 2010, 6, 178–183.

- Tan, G.; Huang, Y.; Li, M.C.; Lee, S.L.; Wu, S.T. High dynamic range liquid crystal displays with a mini-LED backlight. Opt. Express 2018, 26, 16572–16584.

- Cho, H.; Kwon, O.K. A backlight dimming algorithm for low power and high image quality LCD applications. IEEE Trans. Consum. Electron. 2009, 55, 839–844.

- Zhang, T.; Qi, W.; Zhao, X.; Yan, Y.; Cao, Y. A local dimming method based on improved multi-objective evolutionary algorithm. Expert Syst. Appl. 2022, 204, 117468.

- Rahman, M.A.; You, J. Human visual sensitivity based optimal local backlight dimming methodologies under different viewing conditions. Displays 2023, 76, 102338.

- Duan, L.; Marnerides, D.; Chalmers, A.; Lei, Z.; Debattista, K. Deep controllable backlight dimming. arXiv 2020, arXiv:2008.08352.

- Chen, N.J.; Bai, Z.; Wang, Z.; Ji, H.; Liu, R.; Cao, C.; Wang, H.; Jiang, F.; Zhong, H. Low cost perovskite quantum dots film based wide color gamut backlight unit for LCD TVs. SID Symp. Dig. Tech. Pap. 2018, 49, 1657–1659.

- Kunkel, T.; Spears, S.; Atkins, R.; Pruitt, T.; Daly, S. Characterizing high dynamic range display system properties in the context of today’s flexible ecosystems. SID Symp. Dig. Tech. Pap. 2016, 47, 880–883.

- Zhang, T.; Wang, H.; Chen, Y.Z.; Liu, Q.; Li, M.; Lei, Z.C. A rapid local backlight dimming method for interlaced scanning video. J. Soc. Inf. Disp. 2018, 26, 438–446.

- Huang, Y.; Hsiang, E.L.; Deng, M.Y.; Wu, S.T. Mini-LED, Micro-LED and OLED displays: Present status and future perspectives. Light Sci. Appl. 2020, 9, 105.

- Zerman, E.; Valenzise, G.; Dufaux, F. A dual modulation algorithm for accurate reproduction of high dynamic range video. In Proceedings of the 2016 IEEE 12th Image, Video, and Multidimensional Signal Processing Workshop, Bordeaux, France, 11–12 July 2016; pp. 1–5.

- Lin, F.C.; Huang, Y.P.; Liao, L.Y. Dynamic backlight gamma on high dynamic range LCD TVs. J. Disp. Technol. 2008, 4, 139–146.

- Chen, J. Dynamic backlight signal extraction algorithm based on threshold of image CDF for LCD-TV and its hardware implementation. Chin. J. Liq. Cryst. Disp. 2010, 25, 449–453.

- Jo, J.; Soh, J.W.; Park, J.S.; Cho, N.I. Local backlight dimming for liquid crystal displays via convolutional neural network. In Proceedings of the Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), Auckland, New Zealand, 7–10 December 2020; pp. 1067–1074.

- Hatchett, J.; Toffoli, D.; Melo, M.; Bessa, M.; Debattista, K.; Chalmers, A. Displaying detail in bright environments: A 10,000 nit display and its evaluation. Signal Process. Image Commun. 2019, 76, 125–134.

- Gao, Z.; Ning, H.; Yao, R.; Xu, W.; Zou, W.; Guo, C.; Luo, D.; Xu, H.; Xiao, J. Mini-LED backlight technology progress for liquid crystal display. Crystals 2022, 12, 313.

- Guha, A.; Nyboer, A.; Tiller, D.K. A review of illuminance mapping practices from HDR images and suggestions for exterior measurements. J. Illum. Eng. Soc. 2022, 19, 210–220.

- Wang, L.; Yoon, K.J. Deep learning for HDR imaging: State-of-the-art and future trends. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 8874–8895.

- Sun, N.; Mansour, H.; Ward, R. HDR image construction from multi-exposed stereo LDR images. In Proceedings of the 2010 IEEE International Conference on Image Processing, Hong Kong, China, 26–29 September 2010; pp. 2973–2976.

- Park, W.J.; Ji, S.W.; Kang, S.J.; Jung, S.W.; Ko, S.J. Stereo vision-based high dynamic range imaging using differently-exposed image pair. Sensors 2017, 17, 1473.

- Gupta, S.S.; Hossain, S.; Kim, K.-D. HDR-like image from pseudo-exposure image fusion: A genetic algorithm approach. IEEE Trans. Consum. Electron. 2021, 67, 119–128.

- Luzardo, G.; Kumcu, A.; Aelterman, J.; Luong, H.; Ochoa, D.; Philips, W. A display-adaptive pipeline for dynamic range expansion of standard dynamic range video content. Appl. Sci. 2024, 14, 4081.

- Niu, Y.; Wu, J.; Liu, W.; Guo, W.; Lau, R.W. HDR-GAN: HDR image reconstruction from multi-exposed LDR images with large motions. IEEE Trans. Image Process. 2021, 30, 3885–3896.

- Chen, Y.; Jiang, G.; Yu, M.; Yang, Y.; Ho, Y.S. Learning stereo high dynamic range imaging from a pair of cameras with different exposure parameters. IEEE Trans. Comput. Imaging 2020, 6, 1044–1058.

- Yan, Q.; Gong, D.; Shi, Q.; Hengel, A.V.D.; Shen, C.; Reid, I.; Zhang, Y. Attention-guided network for ghost-free high dynamic range imaging. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 1751–1760.

- Kalantari, N.K.; Ramamoorthi, R. Deep high dynamic range imaging of dynamic scenes. ACM Trans. Graph. 2017, 36, 144.

- Singh, K.; Pandey, A.; Agarwal, A.; Agarwal, M.K.; Shankar, A.; Parihar, A.S. FRN: Fusion and recalibration network for low-light image enhancement. Multimed. Tools Appl. 2024, 83, 12235–12252.

- Didyk, P.; Mantiuk, R.; Hein, M.; Seidel, H.P. Enhancement of bright video features for HDR displays. Comput. Graph. Forum 2008, 27, 1265–1274.

- Huo, Y.; Yang, F.; Dong, L.; Brost, V. Physiological inverse tone mapping based on retina response. Vis. Comput. 2014, 30, 507–517.

- Wang, T.H.; Chiu, C.W.; Wu, W.C.; Wang, J.W.; Lin, C.Y.; Chiu, C.T.; Liou, J.J. Pseudo-multiple-exposure-based tone fusion with local region adjustment. IEEE Trans. Multimed. 2015, 17, 470–484.

- Lu, K.; Zhang, L. TBEFN: A two-branch exposure-fusion network for low-light image enhancement. IEEE Trans. Multimed. 2020, 23, 4093–4105.

- Li, R.; Wang, C.; Wang, J.; Liu, G.; Zhang, H.Y.; Zeng, B.; Liu, S. UPHDR-GAN: Generative adversarial network for high dynamic range imaging with unpaired data. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 7532–7546.

- Liu, Y.L.; Lai, W.S.; Chen, Y.S.; Kao, Y.L.; Yang, M.H.; Chuang, Y.Y.; Huang, J.B. Single-image HDR reconstruction by learning to reverse the camera pipeline. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 1651–1660.

- Chen, X.; Liu, Y.; Zhang, Z.; Qiao, Y.; Dong, C. HDRUNet: Single image HDR reconstruction with denoising and dequantization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2021; pp. 354–363.

- Sharif, S.M.; Naqvi, R.A.; Biswas, M.; Kim, S. A two-stage deep network for high dynamic range image reconstruction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2021; pp. 550–559.

- Wu, G.; Song, R.; Zhang, M.; Li, X.; Rosin, P.L. LiTMNet: A deep CNN for efficient HDR image reconstruction from a single LDR image. Pattern Recognit. 2022, 127, 108620.

- Lecouat, B.; Eboli, T.; Ponce, J.; Mairal, J. High dynamic range and super-resolution from raw image bursts. ACM Trans. Graph. 2022, 41, 1–21.

- Lee, S.; Jo, S.Y.; An, G.H.; Kang, S.J. Learning to generate multi-exposure stacks with cycle consistency for high dynamic range imaging. IEEE Trans. Multimed. 2020, 23, 2561–2574.

- de Paiva, J.F.; Mafalda, S.M.; Leher, Q.O.; Alvarez, A.B. DDPM-Based Inpainting for Ghosting Artifact Removal in High Dynamic Range Image Reconstruction. In Proceedings of the 9th International Conference on Image, Vision and Computing (ICIVC), Suzhou, China, 15–17 July 2024; pp. 28–33.

- Santos, M.S.; Ren, T.I.; Kalantari, N.K. Single image HDR reconstruction using a CNN with masked features and perceptual loss. ACM Trans. Graph. 2020, 39, 80.

- Gatys, L.A.; Ecker, A.S.; Bethge, M. Image style transfer using convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2414–2423.

- Sreeram, V.; Agathoklis, P. On the properties of Gram matrix. IEEE Trans. Circuits Syst. I Fundam. Theory Appl. 1994, 41, 234–237.

- Trending Papers of Hugging Face. Available online: https://huggingface.co/papers/trending (accessed on 31 August 2025).

- Jang, H.; Bang, K.; Jang, J.; Hwang, D. Dynamic range expansion using cumulative histogram learning for high dynamic range image generation. IEEE Access 2020, 8, 38554–38567.

- Duan, L.; Marnerides, D.; Chalmers, A.; Lei, Z.; Debattista, K. Deep controllable backlight dimming for HDR displays. IEEE Trans. Consum. Electron. 2022, 68, 191–199.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Song, S.; Kim, Y.I.; Bae, J.; Nam, H. Deep-learning-based pixel compensation algorithm for local dimming liquid crystal displays of quantum-dot backlights. Opt. Express 2019, 27, 15907–15917.

| | Non-Learning-Based Method | Learning-Based Method |
|---|---|---|
| Multiple LDR Images | [22] (2010), [23] (2017), [24] (2021), [25] (2024) | [26] (2021), [27] (2020), [28] (2019), [29] (2017), [30] (2024) |
| Single LDR Image | [31] (2008), [32] (2014), [33] (2015), [34] (2020) | [35] (2022), [36] (2020), [37] (2021), [38] (2021), [39] (2022), [40] (2022), [41] (2020), [42] (2024) |

| Hyperparameter Name | Value Settings |
|---|---|
| Optimizer | Adam, initial learning rate 1 × 10⁻⁴, decay factor 0.5 every 20 epochs |
| Batch size | 16 |
| Epochs | 200 |
| Network channels | [64, 128, 256, 512, 1024] in encoder, symmetric decoder |
| Feature mask threshold | t = 0.8 |
| Loss weights | α1 = 1.0, α2 = 0.1, α3 = 0.2, α4 = 0.05 |
| Luminance regularization | β = 0.01 |
| PSF kernel size | Wg = 15, Hg = 15 |
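The optimizer row of the hyperparameter table describes a step-decay schedule. Read literally (halve the rate every 20 epochs), it can be sketched as below, assuming epochs are counted from 0 so that the first decay happens at epoch 20. In PyTorch the same schedule corresponds to `torch.optim.lr_scheduler.StepLR` with `step_size=20` and `gamma=0.5`, stepped once per epoch.

```python
def learning_rate(epoch: int, base_lr: float = 1e-4,
                  decay: float = 0.5, step: int = 20) -> float:
    """Step decay: multiply base_lr by `decay` once every `step` epochs (epochs from 0)."""
    return base_lr * decay ** (epoch // step)


if __name__ == "__main__":
    # Learning rate at a few representative epochs of the 200-epoch run.
    for epoch in (0, 19, 20, 40, 199):
        print(epoch, learning_rate(epoch))
```

Over 200 epochs the rate is halved nine times, ending at 1 × 10⁻⁴ / 512.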

| Method (Mask + Pre-Training) | MSE | HDR-VDP-2 |
|---|---|---|
| SConv + HDR pre-training | 0.0402 | 58.43 |
| SConv + Inp. pre-training | 0.0374 | 60.03 |
| GConv + HDR pre-training | 0.0398 | 53.32 |
| GConv + Inp. pre-training | 0.1017 | 43.13 |
| IMask + HDR pre-training | 0.0398 | 58.39 |
| IMask + Inp. pre-training | 0.0369 | 61.27 |
| FMask + HDR pre-training | 0.0393 | 58.81 |
| FMask + Inp. pre-training (Our method) | 0.0356 | 63.18 |

| Image | Method | PSNR (dB) | SSIM | Color Distortion | BLU |
|---|---|---|---|---|---|
| (a) | Global dimming | 27.94 | 0.81 | 0.08 | 41.54 |
| | Local dimming | 29.44 | 0.91 | 0.17 | 20.09 |
| (b) | Global dimming | 32.38 | 0.88 | 0.07 | 119.24 |
| | Local dimming | 29.43 | 0.96 | 0.07 | 78.93 |
| (c) | Global dimming | 30.56 | 0.86 | 0.13 | 113.36 |
| | Local dimming | 30.74 | 0.86 | 0.16 | 56.75 |
| (d) | Global dimming | 27.89 | 0.77 | 0.10 | 32.15 |
| | Local dimming | 28.62 | 0.94 | 0.12 | 28.67 |
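The BLU column of the global versus local dimming table can be turned into relative power savings with simple arithmetic. The sketch below copies the BLU values from that table and computes (global − local) / global for each image type; it adds no new measurements.

```python
# BLU values (global dimming, local dimming) copied from the comparison table.
BLU = {
    "a": (41.54, 20.09),
    "b": (119.24, 78.93),
    "c": (113.36, 56.75),
    "d": (32.15, 28.67),
}


def relative_saving(global_blu: float, local_blu: float) -> float:
    """Fraction of backlight power saved by local dimming relative to global dimming."""
    return (global_blu - local_blu) / global_blu


savings = {name: relative_saving(g, l) for name, (g, l) in BLU.items()}
mean_saving = sum(savings.values()) / len(savings)

if __name__ == "__main__":
    for name, s in savings.items():
        print(f"image ({name}): {s:.1%} lower BLU with local dimming")
    print(f"mean saving: {mean_saving:.1%}")
```

For the four images this gives savings of about 51.6%, 33.8%, 49.9%, and 10.8%, so the benefit is smallest for image (d), whose brightness is spread evenly and leaves few zones that can be dimmed.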

| Model | Average PSNR | Average SSIM | Average Color Distortion (CIE76) |
|---|---|---|---|
| ResNet | 29.50 dB | 0.87 | 7.53 |
| VGG | 28.36 dB | 0.28 | 9.39 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chia, T.-L.; Syu, Y.-Y.; Huang, P.-S. A Novel Local Dimming Approach by Controlling LCD Backlight Modules via Deep Learning. Information 2025, 16, 815. https://doi.org/10.3390/info16090815