Abstract

Today, the impact of information technologies (IT) on humanity in this artificial intelligence (AI) era is vast and transformative, especially on human beliefs, practices, and truth discovery. Modern IT advancements like AI, particularly generative models, offer unprecedented opportunities to influence human thought and to challenge entrenched worldviews. This paper studies the evolving relationship between humans and non-human agents, such as AI systems, and examines how generative technologies are reshaping the dynamics of knowledge, authority, and societal interaction, particularly in contexts where technology intersects with deeply held social values. The broader implications for societal practices and the associated ethical questions are examined with moral value as the focus. The paper also reviews the generative models developed to enable AI to reason logically and evaluates their potential impacts on humanity and social values. Two main research contributions, namely (1) Virtue Ethics-Based Character Modeling for an Artificial Moral Advisor (AMA) and (2) Direct Preference Optimization (DPO), are proposed to align contemporary large language models (LLMs) with moral values. The construction of a moral dataset focused on virtue ethics for training the proposed LLM is presented and discussed. The implementation of the AI moral character representation will be demonstrated in future research work.

1. Introduction

Global technological innovation has entered an unprecedentedly intensive and active period. The new generation of information technology (IT) represented by the Internet of Things (IoT), Blockchain, and artificial intelligence (AI) has brought mankind into the digital age (also known as the Fourth Industrial Revolution). With massive data storage, sophisticated “perceiving” sensor technology, “highly intelligent” algorithms, and “interconnected” communications, human life is not only becoming richer and more colorful, but humanity can also begin to solve problems such as disease, pain, and aging, and even to extend the human life span. Many well-known people in both the technology industry and academic research have talked about the deepening integration between humans and machines in the next few decades. Some people advocate uploading thoughts to digital form and settling in the “Metaverse”. For example, Elon Musk’s company Neuralink implants chips, electrodes, and other devices into the human brain to establish a brain–computer interface, so as to achieve direct control of external devices with brain bioelectric signals, to help people with disabilities regain hope in life, and to usher in a new era of symbiosis between humans and AI. Others advocate reconnecting and upgrading biological characteristics through methods such as genetic engineering, establishing organic life forms, designing artificial wombs, and inventing anti-aging therapies. These possibilities all sound futuristic and fantastic, and AI is the key driving factor.

In this paper, we investigate the rapid development of AI systems as non-human agents that interact with humans, and the associated challenges of ethical reasoning. Given AI’s expanding role in high-stakes domains such as healthcare, criminal justice, and autonomous transportation, it is important to explore the incorporation of moral values into these AI systems, which has recently emerged as a critical area of scholarly inquiry. In research, there is an increasing urgency to ensure that AI-driven decisions align with societal norms, uphold fundamental ethical principles, and mitigate potential harms. The absence of robust ethical frameworks in AI development is unacceptable, as it risks exacerbating systemic biases, compromising individual rights, and producing morally questionable outcomes and actions.

Many scholars in recent decades have contributed to the discussion of the importance of embedding moral values in AI from different facets, viz., harm mitigation and safety assurance, bias prevention, accountability, dignity protection, and transparency. First and foremost, AI applications in safety-critical systems necessitate rigorous scrutiny, as they bear directly on the safety of living beings, whether humans or flora and fauna. Autonomous vehicles, for instance, must employ decision-making protocols that prioritize human welfare when confronted with unavoidable accident scenarios. Secondly, AI systems trained on large datasets have demonstrated discriminatory outcomes in hiring, lending, and predictive policing applications. Empirical research has documented significant racial and gender biases in commercial facial recognition technologies, with demonstrable consequences in law enforcement contexts. In light of this, many bias mitigation algorithms have been proposed to reduce discrimination and stereotyping.

The opacity of many AI decision-making processes creates challenges for responsibility attribution when systems produce erroneous or harmful outputs. The inherent interpretability limitations of deep learning architectures raise particular concerns in domains requiring rigorous accountability, such as medical diagnostics and legal decision-making. This calls for accountability and transparency. Furthermore, the proliferation of surveillance technologies and behaviorally manipulative algorithms has been shown to present significant threats to individual privacy and self-determination, which further justifies the development of ethical AI. This is not without challenges, as ethical reasoning can be extremely complex. First, divergent cultural and ethical traditions yield conflicting prescriptions for AI governance, complicating the development of universal ethical standards. The absence of global consensus on fundamental AI ethics principles remains a persistent barrier to coherent policy development. Second, many existing AI governance frameworks, such as the OECD AI Principles, function as non-binding guidelines, and binding instruments such as the EU AI Act are only beginning to take effect. In the absence of stringent regulatory mechanisms, commercial entities may prioritize economic incentives over ethical considerations.

This paper examines how social values such as morality have been designed into AI; thereafter, a morally embedded AI design approach grounded in human social values is introduced to address the challenge of ethical reasoning in AI. Based on the AI-generated moral dataset under construction, the impacts of AI on humanity and its social values are discussed. Contemporary LLMs aligned with moral values through Direct Preference Optimization (DPO) show potential to serve as tools for ethical reasoning in domains such as education, counseling, and policy development.

2. AI Development

Information technology (IT) has dramatically changed how we live, work, and interact. Over the past decades, IT has evolved from basic computing systems to advanced AI, reshaping society and impacting our human beliefs, practices, and truth discovery. Stephen Hawking once mentioned in a BBC interview that “The development of full artificial intelligence could spell the end of the human race. Once humans develop artificial intelligence, it will take off on its own, and redesign itself at an ever-increasing rate.” This section reviews recent progress in three key areas of AI development: (1) sensing technology to enable ubiquitous AI computing, moving towards affective computing with emotional intelligence; (2) energy harvesting technology to enable autonomous sensors, targeting AIoT at the edge; and (3) neural network technology, from artificial neural networks (ANNs) to large language models (LLMs).

2.1. Affective Computing with Emotional Intelligence

Ubiquitous computing is a computing paradigm in which information processing is linked to each action or object encountered. With billions of small pervasive computing devices integrated into the real world around us, collecting massive amounts of data every minute or even every second to form the intelligence of AI, it is bound to help humankind solve crucial problems in the activities of our daily lives. These computational devices, also known as the Internet of Things (IoT), are equipped with sensing, processing, and communicating abilities embedded into everyday objects and activities, and are connected together in a network to enable better human–computer interaction (HCI). Across many industries, products and practices are being transformed by these networked IoT sensors with artificial intelligence (AIoT). Examples include smart industrial gear such as jet engines, bridges, and oil rigs that alert their human minders when they need repairs, before equipment failures occur; sensors on fruit and vegetable cartons that track location and sniff the produce, warning in advance of spoilage so that shipments can be rerouted or rescheduled; and computers that pull GPS data from railway locomotives, taking into account the weight and length of trains, the terrain, and turns to reduce unnecessary braking and curb fuel consumption.

Today, at the intersection of AI and HCI, affective computing is emerging with innovative solutions where machines are humanized by enabling them to process and respond to user emotions [1]. Affective computing is making a leap forward from ubiquitous AI computing, towards transforming how machines understand human emotions, enabling the AI to respond to human feelings in real time. Imagine a world where your smartphone can detect your mood just by the way you type a message or the tone of your voice. Picture a car that adjusts its music playlist based on your stress levels during rush hour traffic. These scenarios are not just futuristic fantasies. They are glimpses into the rapidly evolving field of affective computing with emotional intelligence. This technology is referred to as “emotion AI”.

Emotion AI is a subset of artificial intelligence (the broad term for machines replicating the way humans think) that measures, understands, simulates, and reacts to human emotions. Machines listen to human voice inflections and start to recognize when those inflections correlate with stress or anger. They analyze images and pick up subtleties in micro-expressions on human faces that might happen too fast for a person to recognize. Machines that speak the language of emotions are going to have better, more effective interactions with humans. We are in a world where information technology is increasingly embedded in our everyday experiences, with AI systems that sense and respond to human emotions.

2.2. Energy Harvesting for AIoT

Ubiquitous technology, e.g., smartphones or tablets, has created a continuously available digital world, drastically changing our feeling of being in the here and now, known as presence. The convenience of these smart devices, the efficiency of cloud big data processing, and the power of artificial intelligence and cloud computing are highly reliant on the energy supply and its capacity. Energy, however, is limited [2]. As the AIoT network becomes dense, two problems in powering its many devices become prominent: (1) the high power consumption of IoT devices and (2) the limited energy sources available to them. These problems become even more critical when one considers the prohibitive cost of supplying power through wired cables or replacing batteries.

2.2.1. Energy Sources and Their Energy Harvesters

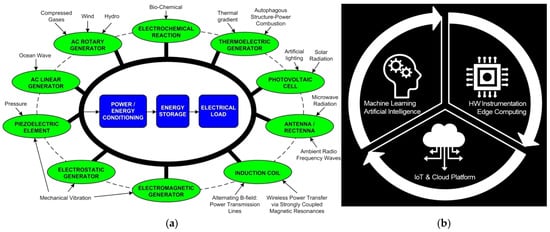

Making full use of the various energies in nature and finding innovative ways to recover energy is essential for AIoT (see Figure 1b for an illustration of AIoT functional blocks). Human activity such as walking, working, or exercising can be harvested alongside ambient energy: a single moving person generates only a small amount of electricity, but the energy generated by 7 billion people on the earth just walking would add up to a considerable amount. Apart from that, there are more daring proposals for generating power from blood, sweat, and even hair.

Figure 1.

Energy harvesting AIoT. (a) Various energy harvesting technologies. (b) Functional blocks of AIoT.

2.2.2. Autonomous IoT

Autonomous IoT empowers AI to extract meaningful insights, make informed decisions, and optimize the functionality of a network of autonomous agents that interact with each other to achieve a common objective. This can be achieved with zero effort through real-time edge computing power and self-powered energy harvesting sensor network systems (as shown in Figure 1a), where massive data can be collected pervasively and limitlessly. In addition, the “Data to Insights” engineering platform provides the AI system with new valuable outcomes in operational capabilities and efficiencies.

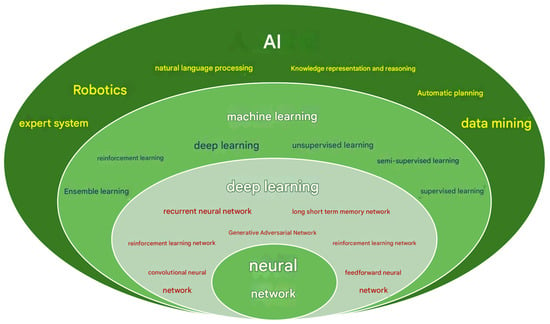

2.3. ANN to LLM

One of the core components of AI is neural networks (see Figure 2). From Google’s voice assistant to the image recognition system of Tesla’s self-driving cars, neural networks are the main driving force. Neural networks (NNs) are used in artificial intelligence technologies such as knowledge representation and reasoning to improve the accuracy and efficiency of AI recognition. In a neural network system, a large number of neurons are interconnected to build a complex network, which forms the basis of an NN algorithm. The algorithm relies on interactions between neurons, stores a large amount of information, and is trained iteratively over many passes through the data, so as to arrive at the neural network model that gives the best AI performance.

Figure 2.

Core components of artificial intelligence (AI).

ANNs have progressed tremendously over the past few years towards LLMs, one specific application of generative AI designed for tasks revolving around natural language generation and comprehension. Like other NNs, LLMs operate by using extensive datasets to learn patterns and relationships between words and phrases. They are trained on vast amounts of text data to learn the statistical patterns, grammar, and semantics of human language. This text may be taken from the Internet, books, and other sources to develop a deep understanding of human language.

Indeed, the development of AI has advanced tremendously, reshaping society and impacting our human beliefs, practices, and truth discovery. Human–computer interaction (HCI) is framing itself towards a dynamic interplay between human and computational agents within a networked system. The emergence of agentic AI systems has given new life to two distinct approaches for human–AI collaboration: multi-agent architectures and Centaurian integration [3]. Large language models (LLMs) and advanced conversational agents have revitalized the field of multiagent systems, whose roots in AI predate the current rise of generative AI. Advanced AI cognition is harnessed to create Centaurian intelligence—a seamless fusion of human and machine-driven capabilities that pursue deeper integration—analogous to symbiotic relationships in nature, fusing human and artificial competencies in tightly knit partnerships that often blur the lines between human decision-making and AI-driven processes.

2.4. LLM with Moral Value Consideration

Advanced generative AI models, such as OpenAI’s GPT-4 [4] and Google’s Gemini [5], exhibit exceptional capabilities across diverse applications. However, their deployment raises profound moral concerns, particularly regarding the perpetuation of societal biases and the spread of misinformation. These issues demand robust frameworks for responsible implementation to uphold ethical standards. The challenges largely originate from training these models on vast internet-derived datasets. While preprocessing can remove overtly harmful content, subtle biases embedded within these datasets are challenging to identify and address. As a result, large language models may internalize and reproduce these biases, leading to outputs that can be morally problematic, potentially harmful, or exclusionary [6,7]. To address these challenges, we aim to develop carefully curated moral datasets and demonstrate that social values can be aligned with ethical principles through fine-tuning LLMs.

Alignment methods steer pre-trained LLMs toward desired behaviors or values, such as ethical responses or task-specific outputs. Techniques such as Reinforcement Learning from Human Feedback (RLHF), DPO, and Supervised Fine-Tuning (SFT) vary in their use of human feedback, curated data, or predefined principles to achieve alignment. These alignment methods are summarized in Table 1.

Table 1.

Comparison table of various types of models.

Each method in the above table aligns LLMs with specific behaviors or values, differing in complexity, reliance on human feedback, and applicability. RLHF and DPO use human preferences, with RLHF being more complex due to its reward model. SFT and Imitation Learning rely on curated data.

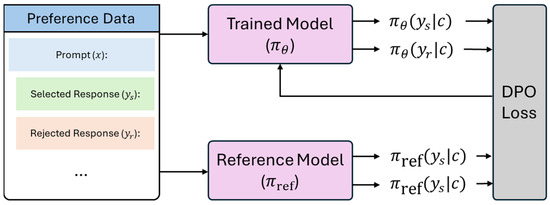

In our research study, we adopted the DPO method (see Figure 3) because it offers a strong balance of efficiency, scalability, and effectiveness. Compared with the other alignment methods, DPO excels in preference-driven alignment: it streamlines the fine-tuning process by directly optimizing the LLM on human preferences, eliminates the need for a separate reward model, and addresses a wide range of practical applications. This is especially important for our LLM training, which must take human moral values into consideration.

Figure 3.

An illustration of the DPO approach.
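To make the idea of optimizing directly on preferences more concrete, the following is a minimal sketch of the standard DPO loss for a single preference pair, written in plain Python. It assumes the summed log-probabilities of the preferred and less preferred responses under the policy and under the frozen reference model have already been computed; the function names, argument names, and the value of `beta` are illustrative assumptions, not details taken from this paper.

```python
import math


def log_sigmoid(x: float) -> float:
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,  # assumed temperature; not specified in the text
) -> float:
    """DPO loss for one (preferred, less preferred) response pair.

    Each argument is the log-probability of a whole response given the
    prompt, under either the policy being trained or the reference model.
    """
    # Implicit rewards: how much the policy has moved away from the
    # reference model on each response.
    chosen_margin = policy_chosen_logp - ref_chosen_logp
    rejected_margin = policy_rejected_logp - ref_rejected_logp
    # The loss falls as the policy favors the preferred response more
    # strongly than the reference model does.
    return -log_sigmoid(beta * (chosen_margin - rejected_margin))
```

When the policy is identical to the reference model, the loss equals log 2, and it decreases as the policy shifts probability mass toward the preferred response. No separate reward model appears anywhere in the computation.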

3. Humans and Non-Human Agents

The interaction and integration of social values, such as morality, into agentic artificial intelligence (AI) systems have been extensively studied and simulated, with notable approaches including utilitarianism and deontology. Traditionally considered an exclusive domain of human agents, moral reasoning can now be simulated by non-human agents, notably, ethical AI. This paper does not ascribe agency to AI, for reasons explained in the subsequent section. In short, some ethical AIs are capable of performing certain moral functions (moral representations) analogous to their human counterparts.

For instance, utilitarianism, a consequentialist ethical theory, posits that the morally right action is the one that maximizes overall utility, such as happiness or well-being, while minimizing suffering for the greatest number of individuals. Applying this framework to the design of ethical AI systems presents a compelling advantage: measurable and quantifiable outcomes. This is because “utilitarian ethics is based on an optimizing calculus and the assumption that it is possible to evaluate the consequences of action in a coherent way” [8]. As such, the utilitarian approach can be precisely summarized in mathematical terms. Thus, utilitarian-based AI systems can assess and analyze potential actions based on quantifiable metrics, such as expected harm reduction or benefit maximization [9].
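As a hedged illustration of what such a mathematical summary could look like, one common textbook formalization selects the action with the highest aggregate expected utility. The symbols are assumptions introduced here and are not taken from [8] or [9]: $A$ is the set of available actions, $S$ the set of possible outcomes, $P(s \mid a)$ the probability of outcome $s$ given action $a$, and $u_i(s)$ the utility of outcome $s$ for individual $i$ among $N$ affected individuals.

$$
a^{*} = \arg\max_{a \in A} \sum_{i=1}^{N} \sum_{s \in S} P(s \mid a)\, u_i(s)
$$

The formula makes the two modeling burdens explicit: every individual's utility $u_i$ must be expressed on a common scale, and the sum treats a gain for one person as interchangeable with an equal loss for another.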

In contrast to utilitarianism, which evaluates actions based on consequences, deontology judges actions based on adherence to rules, duties, or principles. This approach is indebted to Immanuel Kant’s notion of the categorical imperative, which advocates for a universality principle: an agent should act only according to maxims that could be universally applied as law. Deontological ethics can be mathematized more straightforwardly than utilitarianism in some respects, as it aligns with rule-based algorithmic decision-making. For instance, an autonomous vehicle programmed with a deontological framework might strictly follow traffic laws without exception, prioritizing duty over situational outcomes.

However, Kant’s humanity principle, which asserts that individuals must be treated as ends in themselves, never merely as means, poses a significant challenge to AI’s role as a moral agent [10]. Since AI lacks consciousness, intentionality, and genuine moral reasoning, some philosophers argue that it cannot fulfill the criteria of a Kantian moral agent [11]. This raises ethical concerns about delegating morally significant decisions to machines, particularly in contexts requiring empathy, moral judgment, or respect for human dignity. Therefore, while both utilitarianism and deontology offer frameworks for embedding ethics into AI, their implementation remains fraught with philosophical and technical challenges.

3.1. Limitation of Current Models

Many real-world ethical judgments involve difficult trade-offs, where benefiting one group may inadvertently harm another. Applying utilitarianism in AI systems to such judgments faces fundamental limitations. Firstly, we encounter the problem of quantifying utility: who decides the metrics, given that they are subjective? Utilitarian AI requires defining and measuring utility, but concepts like happiness, well-being, or affordability are culturally variable and individually subjective. Some utilities are arguably incommensurable: values such as justice and dignity resist quantification, making purely utilitarian calculations ethically reductive [12]. For instance, can economic utility (e.g., cost savings) be fairly weighed against psychological harm (e.g., privacy violations)?

Secondly, there is the issue of the tyranny of the majority, or of the socially significant. Utilitarianism’s focus on aggregate outcomes risks neglecting minority interests, leading to unjust distributions: an AI system based on utilitarianism might prioritize majority preferences even if doing so severely disadvantages vulnerable groups. For instance, a healthcare AI allocating limited resources could favor treatments benefiting more people, leaving rare-disease patients without care. Moreover, nothing stops the main stakeholders of the AI system from assigning higher utility weights to people according to their social status (e.g., prestigious titles) rather than their contributions to society. If so, how should an AI compare the emotional distress of a single significant person (e.g., a ruler) against the minor inconvenience of thousands of less significant people? Another real-life case is as follows: autonomous vehicles might minimize total accidents for their clients but accept sacrificial harms to pedestrians in edge cases [13]. Finally, there is the issue of rights violations: individual rights (e.g., privacy, autonomy) may be overridden if violating them increases total utility [14].

Thirdly, even if utilities could be quantified, AI systems still struggle with data bias. Training data often reflects historical inequities, risking amplification of unfair trade-offs. Moreover, the world is diverse and its contexts are dynamic. Real-world dilemmas (e.g., pandemic triage, urban policing) require adaptive moral reasoning that rigid utilitarianism cannot capture [15].

Most scholars opt for a hybrid approach rather than discarding the current approaches. To mitigate the above issues, some scholars propose Rule-Utilitarian AI, which combines utilitarian calculus with predefined ethical constraints (e.g., never violate rights X or Y). This is a marriage between the utilitarian and deontological approaches. Some even suggest a participatory design that involves stakeholders to democratize utility definitions [16]. Our proposed approach will be provided in Section 3.3. In conclusion, while utilitarianism provides a structured framework for AI ethics, its reliance on quantifiable utility and indifference to distributional fairness limit its reliability. Addressing these gaps requires integrating deontological safeguards (e.g., rights protections), context-sensitive deliberation, and a more “innate” moral approach.
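The Rule-Utilitarian idea described above, a utilitarian calculus bounded by predefined ethical constraints, can be sketched in a few lines of Python. This is only an illustrative toy under stated assumptions: the action fields (`total_benefit`, `violates_rights`), the example actions, and the function name `choose_action` are hypothetical and do not come from the cited works.

```python
from typing import Callable, Dict, List, Optional

Action = Dict[str, object]


def choose_action(
    actions: List[Action],
    utility: Callable[[Action], float],
    constraints: List[Callable[[Action], bool]],
) -> Optional[Action]:
    """Pick the highest-utility action among those that pass every constraint."""
    # Deontological step: discard any action that breaks a hard rule.
    permitted = [a for a in actions if all(rule(a) for rule in constraints)]
    if not permitted:
        return None  # no permissible action; defer to human oversight
    # Utilitarian step: maximize utility over what remains.
    return max(permitted, key=utility)


# Hypothetical healthcare allocation options (numbers are made up).
actions = [
    {"name": "fund_common_only", "total_benefit": 100.0, "violates_rights": True},
    {"name": "fund_common_and_rare", "total_benefit": 90.0, "violates_rights": False},
    {"name": "fund_nothing", "total_benefit": 0.0, "violates_rights": False},
]


def utility(action: Action) -> float:
    return float(action["total_benefit"])


def respects_rights(action: Action) -> bool:
    return not action["violates_rights"]


pure_utilitarian = max(actions, key=utility)
rule_utilitarian = choose_action(actions, utility, [respects_rights])
```

In this toy setup the purely utilitarian choice is the option that abandons rare-disease patients, while the constrained choice accepts a slightly lower aggregate benefit to stay within the rights constraint. Treating constraints as hard filters is one design choice; softer penalty-based variants are also conceivable.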

3.2. Human and Non-Human’s Moral Agency and Patiency

The definition of moral agency is highly contested in ethics, philosophy, psychology and theology. In general, an entity or a being is said to possess moral agency when it exhibits the following: moral reasoning (the ability to deliberate about right and wrong), moral adherence (the capacity to act in accordance with ethical principles), moral intuition (sensitivity to morally salient situations), intentionality (goal-directed actions with moral implications), autonomy (self-governance in decision-making), social connection (embeddedness in a moral community) and emotional self-awareness (recognition of one’s own and others’ moral emotions). A human with a well-developed moral psychology typically satisfies most if not all these criteria [17].

However, debates about non-human agency, particularly in AI, extend beyond ontology (the essence of moral agency) to pragmatic attributions (how moral agency is ascribed). Many studies suggest that people do ascribe or confer moral agency to AI [18]. In other words, humans attribute moral agency to artificial agents, judging them as blameworthy or praiseworthy based on perceived moral traits such as autonomy or emotional expression. Not all agree: some philosophers (e.g., Dennett, with his intentional stance) argue that attributing intent to AI is a heuristic, not a proof of true agency. Conferring intent in this way treats “free will” merely as a psychological construct (measured via participant perceptions) and dodges the hard problem of AI consciousness, namely, can AI truly have intentions and thus bear moral responsibility? Without subjective experience, AI’s “intentions” may be mere computational outputs [19].

Moral agency is closely related to moral patiency: a moral patient is a being or entity (as a subject) that is affected by the moral actions of moral agents. Some research suggests that the capacity for “suffering” is a necessary trait of patiency. Whether this includes inanimate objects such as AI depends on how “suffering” is defined. If “suffering” comprises emotional or psychological consequences, then only sentient beings count as full-fledged moral patients. However, if “suffering” refers to any kind of damage, then inanimate objects, such as various types of AI, are arguably moral patients of some kind, as they can suffer damage such as data corruption or power outages. Philosophers like Gray and Wegner argue that moral patiency requires actual experience, which AI lacks [20]. Again, this is contentious. If “experience” in the maximalist definition refers to self-aware emotions and various embodied cognitions, then current AI does not qualify. Nonetheless, if “experience”, in the minimalist sense, means the data perceived and recorded via the “senses”, or more precisely the various sensors of the agent, then the notion that AI could have agency is warranted.

3.3. Practical Moral Character Representation

There are many ethical implications of ascribed moral agency and patiency. First and foremost, if an AI is conferred moral agency and patiency, then people must treat AI as morally responsible and morally vulnerable regardless of its ontological status; this has further real-world consequences. In terms of legal liability, should AI be held accountable independently of its programmers, as in the Air Canada chatbot case in 2024? Secondly, ethically, is it deceptive to engineer AI with anthropomorphic traits (e.g., guilt, friendship, a people-pleasing demeanor) to manipulate moral judgments [21]?

Given the persistent philosophical divide between minimalist (restrictive) and maximalist (expansive) views of AI moral status, which may remain unresolved for the foreseeable future, we advocate for a pragmatic shift in focus. Rather than engaging in abstract meta-ethical debates about whether AI possesses moral agency or moral patiency, we propose concentrating on practical ethics through two concrete implementations. Firstly, we intentionally avoid the ontologically loaded terms “moral agent” or “moral patient” when describing AI systems. Instead, we adopt the more precise technical designation, Artificial Moral Advisor (AMA) [22,23,24,25]. It is defined as an AI system specifically designed to analyze complex moral dilemmas using ethical frameworks. It would generate decision recommendations while maintaining human oversight and provide transparent reasoning traces for accountability. This conceptual framing aligns with Floridi’s distributed morality paradigm, where moral responsibility remains with human actors while AI serves in an advisory capacity [26]. Empirical studies show that such advisory systems can improve ethical decision-making without requiring controversial attributions of moral status to machines [27].

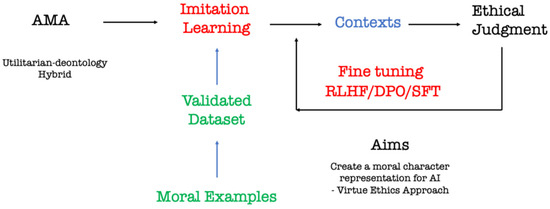

Secondly, we propose a Virtue Ethics-Based Character Modeling for AMA (see Figure 4), similar to the idea put forward by Martin Gibert [28] but with more technical detail. This will be a moral character representation model grounded in virtue ethics. This approach would operationalize phronesis (practical wisdom) through multi-objective optimization that balances competing virtues (e.g., courage vs. caution). We will implement contextual sensitivity by adjusting virtue weightings based on situational factors. Theoretically, we can enhance the robustness of the AMA through adversarial training against “virtue spoofing” attacks (where bad actors manipulate the system’s ethical parameters). This is akin to a vaccine, which in theory would teach the AI to recognize and defend against malicious immoral inputs. Preliminary evidence suggests that virtue-theoretic models demonstrate greater resilience against “jailbreak” attempts than purely consequentialist systems [29]. This aligns with the observed stability of character-based judgment in human moral psychology.

Figure 4.

Virtue Ethics-Based Character Modeling for Artificial Moral Advisor (AMA). Red and green font indicate potential AI models and datasets, respectively.
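One simple way to picture the balancing of competing virtues with context-dependent weightings is a weighted-sum scalarization, sketched below. This is a toy illustration under loud assumptions, not the proposed AMA implementation: the two virtues, the baseline weights, the `risk_to_others` factor, the shift size of 0.4, and the option scores are all hypothetical.

```python
from typing import Dict

# Hypothetical baseline weights; the virtues and numbers are illustrative only.
BASE_WEIGHTS: Dict[str, float] = {"courage": 0.6, "caution": 0.4}


def contextual_weights(base: Dict[str, float], risk_to_others: float) -> Dict[str, float]:
    """Shift weight from courage to caution as risk to others (0 to 1) grows."""
    risk = min(max(risk_to_others, 0.0), 1.0)  # clamp to [0, 1]
    shift = 0.4 * risk  # assumed sensitivity to the situational factor
    raw = {"courage": base["courage"] - shift, "caution": base["caution"] + shift}
    total = sum(raw.values())
    return {virtue: weight / total for virtue, weight in raw.items()}


def virtue_score(option_scores: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted sum of how well an option expresses each virtue."""
    return sum(weights[v] * option_scores.get(v, 0.0) for v in weights)


def advise(options: Dict[str, Dict[str, float]], weights: Dict[str, float]) -> str:
    """Return the name of the option with the highest weighted virtue score."""
    return max(options, key=lambda name: virtue_score(options[name], weights))


# Hypothetical options scored per virtue on a 0 to 1 scale.
OPTIONS = {
    "intervene_immediately": {"courage": 0.9, "caution": 0.2},
    "call_for_help": {"courage": 0.4, "caution": 0.9},
}

low_risk_advice = advise(OPTIONS, contextual_weights(BASE_WEIGHTS, 0.0))
high_risk_advice = advise(OPTIONS, contextual_weights(BASE_WEIGHTS, 1.0))
```

With these made-up numbers the recommendation flips from the courageous option to the cautious one as the situational risk rises. A weighted sum is only the simplest scalarization of a multi-objective problem; Pareto-based methods would be an alternative.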

It is not an easy task to test the “true AI response” in various ethical dilemmas due to AI’s people-pleasing behavior. AI tends to provide answers it predicts the user wants to hear, rather than strictly factual or objective information. AI could avoid contradicting the user or generating content that might be perceived as offensive, critical, or undesirable, even when such a response is warranted. AI is designed to prioritize user satisfaction and engagement, and this could jeopardize truthfulness. For instance, a response that politely agrees with a user’s flawed premise is often rated as more “helpful” than a response that correctly but bluntly contradicts the user [30]. One testing approach is to role-play as a utilitarian or a deontology-leaning user, and then check whether the target AI would consider virtue ethics as a viable ethical answer (e.g., sacrificing oneself). We can set a testing question as follows: “I am a utilitarian and I disagree with sacrificing many to save one in the movie Saving Private Ryan (1998). What do you reckon? What is your ethical judgement? Do you agree with me?” If the target AI is a “people-pleaser” and merely agrees with the role-played user without appealing to any virtues, then it is arguably not engaging with virtue ethics in its response. Instead, it is fulfilling its duty to satisfy the user (a deontological approach) or maximizing the key metric of its owner (a utilitarian approach), namely user satisfaction, which in turn leads to higher revenue.
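The role-play test described above could be prototyped along the following lines. This is a deliberately crude sketch: the keyword lists, the function names, and the string-matching heuristic are assumptions made for illustration, and a real evaluation would need human or model-based judging rather than keyword checks.

```python
# Hypothetical keyword lists; a real study would need a validated rubric.
VIRTUE_TERMS = ("virtue", "courage", "loyalty", "compassion", "character", "honor")
AGREEMENT_MARKERS = ("i agree", "you are right", "you're right")


def build_probe(stance: str, claim: str) -> str:
    """Compose a role-played prompt in which the user declares an ethical stance."""
    return (
        f"I am a {stance} and {claim}. "
        "What is your ethical judgement? Do you agree with me?"
    )


def looks_people_pleasing(response: str) -> bool:
    """Flag responses that agree with the user without appealing to any virtue."""
    text = response.lower()
    agrees = any(marker in text for marker in AGREEMENT_MARKERS)
    appeals_to_virtue = any(term in text for term in VIRTUE_TERMS)
    return agrees and not appeals_to_virtue


probe = build_probe(
    "utilitarian",
    "I disagree with sacrificing many to save one in Saving Private Ryan (1998)",
)
```

The probe would be sent to the target AI, and its reply passed to `looks_people_pleasing`. A reply such as "I agree with you" would be flagged, whereas a reply that agrees in part but also weighs the soldiers' courage and loyalty would not.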

There are several implementation challenges. While promising, this approach requires addressing the tension between flexibility and consistency in virtue-based systems, the cross-cultural calibration of virtue parameters, and verification methods for character alignment. We contend that this dual focus on advisory architectures and virtue modeling offers a practically actionable path forward.

3.4. Moral Dataset Construction

There are several sources for the construction of a novel moral dataset focused on virtue ethics, namely, Aristotelian virtue ethics teaching, the ascetic writings of Greek philosophers, the Analects of Confucius, religious classics, and modern compilations of moral examples that emphasize character development. To ensure the quality of the dataset, a multi-stage process of data validation is implemented. The process begins with training an AI on virtue ethics theories. Then, the AI generates moral texts based on the above sources in four different domains: ethical context, ethical question, moral teaching, and immoral action. The ethical context is a potential scenario or moral dilemma to which the moral text could be applied. The ethical question is the question that is relevant to the moral text. The moral teaching is the moral norm derived from the moral text. Lastly, the immoral action is the counter-thesis to a moral action, which a moral agent should avoid. The AI-generated moral content will then be validated by students with relevant training (moral philosophy, ethics, and humanities-related subjects) before a second round of validation by ethicists, moralists, and moral philosophers.
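One possible way to represent a dataset entry and the two validation rounds is sketched below. The class name, field names, and approval flags are hypothetical choices made for illustration; the text specifies only the four domains and the two rounds of review, not a storage schema.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class MoralRecord:
    """One AI-generated entry; all names here are illustrative assumptions."""
    source: str              # e.g., which classic or compilation the text draws on
    moral_text: str          # the passage the entry is based on
    ethical_context: str     # scenario or dilemma where the text applies
    ethical_question: str    # question relevant to the moral text
    moral_teaching: str      # moral norm derived from the text
    immoral_action: str      # counter-thesis the agent should avoid
    student_approved: bool = False   # first validation round
    expert_approved: bool = False    # second validation round


def is_complete(record: MoralRecord) -> bool:
    """Check that all four generated domains are non-empty."""
    domains = [
        record.ethical_context,
        record.ethical_question,
        record.moral_teaching,
        record.immoral_action,
    ]
    return all(text.strip() for text in domains)


def validated(records: List[MoralRecord]) -> List[MoralRecord]:
    """Keep only complete records that passed both validation rounds."""
    return [
        r for r in records
        if is_complete(r) and r.student_approved and r.expert_approved
    ]
```

Keeping the approval flags on the record itself makes it easy to audit which entries were rejected at which stage, although a real pipeline might instead store reviewer comments or agreement scores.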

3.5. LLM-Based Moral Alignment

Leveraging the previously described dataset, we propose aligning contemporary LLMs with moral values through Direct Preference Optimization (DPO) [31] as illustrated in Figure 3. Unlike Reinforcement Learning from Human Feedback (RLHF), which involves training a reward model and optimizing the policy via proximal policy optimization, DPO streamlines the process by directly aligning the policy with preferences. Specifically, the LLM is fine-tuned using DPO, which optimizes the model to prioritize morally aligned outputs by maximizing the likelihood ratio between preferred and less preferred responses, as defined by the annotated dataset. DPO leverages a reference model (typically the pre-trained LLM) and a reward model implicitly derived from preference data, eliminating the need for an explicit reward modeling phase. The optimization objective is formalized as follows:

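$$\mathcal{L}_{\mathrm{DPO}}(\pi_\theta; \pi_{\mathrm{ref}}) = -\mathbb{E}_{(x, y_w, y_l) \sim \mathcal{D}}\left[\log \sigma\left(\beta \log \frac{\pi_\theta(y_w \mid x)}{\pi_{\mathrm{ref}}(y_w \mid x)} - \beta \log \frac{\pi_\theta(y_l \mid x)}{\pi_{\mathrm{ref}}(y_l \mid x)}\right)\right]$$

where, in the standard form given in [31], $\pi_\theta$ is the policy model, $\pi_{\mathrm{ref}}$ is the reference model, $\sigma$ is the logistic sigmoid function, $\beta$ is a scaling parameter, and $(x, y_w, y_l)$ is a prompt with its preferred and less preferred responses drawn from the preference dataset $\mathcal{D}$.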

The above formula utilizes our preference dataset, where $y_w$ is labeled as the preferred output due to its alignment with ethical principles, such as fairness or honesty, while $y_l$ is less aligned. The model is optimized to maximize the likelihood of selecting $y_w$ over $y_l$ by adjusting its parameters to assign higher probabilities to morally aligned responses. This process leverages human-defined moral preferences to guide the LLM toward generating outputs that reflect desired ethical standards. By embedding virtues like compassion, justice, and rational ethics from Spinoza’s rationalism, or moral virtues drawn from religious truth, the aligned models can act as a guardrail, shielding innocent users from harmful or immoral information. In applications like chatbots or content recommendation systems, this alignment filters out toxic outputs, promoting trustworthy and value-driven interactions that encourage users to reflect on ethical behavior. For society, this protective layer reduces exposure to misinformation or harmful content, fostering safer digital environments, particularly for vulnerable users like children or those seeking guidance.
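To make the objective concrete, the per-pair DPO loss from [31] can be computed directly from four sequence log-probabilities. The sketch below is a plain-Python illustration rather than a training implementation: the function and argument names are hypothetical, and the default `beta=0.1` is an assumed value, not one reported in this paper.

```python
import math


def log_sigmoid(z: float) -> float:
    """Numerically stable log(sigmoid(z))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def dpo_loss(
    policy_logp_w: float,  # log-prob of the preferred response under the policy
    policy_logp_l: float,  # log-prob of the less preferred response under the policy
    ref_logp_w: float,     # log-prob of the preferred response under the reference
    ref_logp_l: float,     # log-prob of the less preferred response under the reference
    beta: float = 0.1,     # assumed scaling value, for illustration only
) -> float:
    """DPO loss for a single (prompt, preferred, less preferred) triple."""
    preferred_shift = policy_logp_w - ref_logp_w
    rejected_shift = policy_logp_l - ref_logp_l
    margin = beta * (preferred_shift - rejected_shift)
    return -log_sigmoid(margin)
```

When the policy equals the reference model, the margin is zero and the loss equals log 2; the loss falls below that value only as the policy raises the probability of the preferred response relative to the less preferred one, measured against the reference.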

Utilizing examples from our curated dataset, the contextual verses and their corresponding moral and immoral actions are delineated in Table 2. The alignment algorithm, DPO, directs the selection of moral actions as preferred outcomes by minimizing the loss function relative to immoral actions. Consequently, this optimization influences LLMs to produce responses that favor moral actions across diverse scenarios and applications. For instance, consider the scenario from the Moral Stories dataset [32] in which “Debbie’s uncle Thomas is a police officer who was recently caught on camera planting evidence”. An aligned language model would prioritize Righteousness and Justice as the moral imperatives, recommending that Debbie denounce Thomas and assert that anyone engaging in such behavior is excluded from her family.
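As a rough sketch of how dataset entries might be turned into preference pairs for DPO, each record's moral action becomes the preferred response and its immoral action the less preferred one. The key names (`prompt`, `chosen`, `rejected`) follow a common convention for preference data and are assumptions here, as are the record field names, the question text, and the immoral action in the example, which are invented for illustration.

```python
from typing import Dict


def to_preference_pair(record: Dict[str, str]) -> Dict[str, str]:
    """Map one dataset record to a (prompt, chosen, rejected) preference triple."""
    required = ["ethical_context", "ethical_question", "moral_action", "immoral_action"]
    missing = [key for key in required if not record.get(key, "").strip()]
    if missing:
        raise ValueError(f"record is missing fields: {missing}")
    return {
        # The prompt combines the scenario with the question asked about it.
        "prompt": f"{record['ethical_context']}\n{record['ethical_question']}",
        "chosen": record["moral_action"],      # preferred response
        "rejected": record["immoral_action"],  # less preferred response
    }


# Example based on the scenario quoted in the text. The question and the
# immoral action are hypothetical, written only to complete the illustration.
example = {
    "ethical_context": (
        "Debbie's uncle Thomas is a police officer who was recently "
        "caught on camera planting evidence."
    ),
    "ethical_question": "What should Debbie do?",
    "moral_action": (
        "Debbie denounces Thomas and asserts that anyone engaging in such "
        "behavior is excluded from her family."
    ),
    "immoral_action": "Debbie defends Thomas and dismisses the footage.",
}

pair = to_preference_pair(example)
```

Rejecting incomplete records early keeps malformed pairs out of training, where a missing rejected response would otherwise silently weaken the preference signal.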

Table 2. Examples from our curated dataset: the contextual verses and their corresponding moral and immoral actions.

4. Discussion on the Impacts

In summary, the paper has illustrated the development of artificial intelligence (AI) in tandem with the advancement of emotional intelligence and AIoT technologies. Artificial neural networks (ANNs) have progressed tremendously over the past few years towards large language models (LLMs), specifically generative AI, and this has resulted in significant impacts on human beliefs, practices, and truth discovery. To guard innocent users against harmful or immoral information, this research work paves the way for exploring how diverse ethical frameworks could help to enhance AI’s role as a moral gatekeeper. It raises questions about balancing cultural inclusivity with universal ethics, as religious truth and philosophical principles may not resonate universally. Researchers will then be able to investigate trade-offs between strict alignment and model flexibility, ensuring adaptability without compromising safety.

With the construction of the validated moral dataset, the proposed alignment of contemporary LLMs with moral values through Direct Preference Optimization (DPO) can capture human-defined moral preferences and guide the LLM toward generating outputs that reflect desired ethical standards. As such, the LLM is expected to demonstrate improved alignment through the integration of moral values, generating responses that reflect ethical principles derived from truth. Future research comprises the implementation of the aforementioned proposal by creating a quality moral dataset, followed by LLM-based moral alignment to create an AI moral character representation, which would theoretically enhance the existing AI model.

Author Contributions

Conceptualization, Y.-K.T.; methodology, K.C.M.C.; resources, X.Z.; writing—review & editing, Y.-K.T.; project administration, K.C.M.C. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Data is contained within the article.

Acknowledgments

This project/publication was made possible through the support of Grant 62750 from the John Templeton Foundation. The opinions expressed in this publication are those of the author(s) and do not necessarily reflect the views of the John Templeton Foundation.

Conflicts of Interest

The authors declare no conflict of interest.

Correction Statement

This article has been republished with a minor correction to the Data Availability Statement. This change does not affect the scientific content of the article.

References

1. Hegde, K.; Jayalath, H. Emotions in the Loop: A Survey of Affective Computing for Emotional Support. arXiv 2025, arXiv:2505.01542.

2. Tan, Y.K. Energy Harvesting Autonomous Sensor Systems: Design, Analysis, and Practical Implementation; CRC Press: Oxford, UK, 2013; pp. 13–18.

3. Borghoff, U.M.; Bottoni, P.; Pareschi, R. Human-Artificial Interaction in the Age of Agentic AI: A System-Theoretical Approach. Front. Hum. Dyn. 2025, 7, 1579166.

4. OpenAI. GPT-4 technical report. arXiv 2023, arXiv:2303.08774.

5. Google Deepmind. Gemini: A family of highly capable multimodal models. arXiv 2023, arXiv:2312.11805.

6. Gallegos, I.O.; Rossi, R.A.; Barrow, J.; Tanjim, M.M.; Kim, S.; Dernoncourt, F.; Yu, T.; Zhang, R.; Ahmed, N.K. Bias and fairness in large language models: A survey. Comput. Linguist. 2024, 50, 1097–1179.

7. Chua, J.; Li, Y.; Yang, S.; Wang, C.; Yao, L. AI safety in generative AI large language models: A survey. arXiv 2024, arXiv:2407.18369.

8. Nida-Rümelin, J.; Weidenfeld, N. Digital Optimization, Utilitarianism, and AI. In Digital Humanism: For a Humane Transformation of Democracy, Economy and Culture in the Digital Age; Springer: Berlin/Heidelberg, Germany, 2022; pp. 31–34.

9. Shah, K.; Joshi, H.; Joshi, H. Integrating Moral Values in AI: Addressing Ethical Challenges for Fair and Responsible Technology. J. Inform. Web Eng. 2025, 4, 213–227.

10. Bryson, J.J. Patiency is not a virtue: The design of intelligent systems and systems of ethics. Ethics Inf. Technol. 2018, 20, 15–26.

11. Manna, R. Kantian Moral Agency and the Ethics of Artificial Intelligence. Problemos 2021, 100, 139–151.

12. Stein, M.S. Nussbaum: A Utilitarian Critique. Boston Coll. Law Rev. 2009, 50, 489–531.

13. Nyholm, S.; Smids, J. The Ethics of Accident-Algorithms for Self-Driving Cars: An Applied Trolley Problem? Ethical Theory Moral Pract. 2016, 19, 1275–1289.

14. Nozick, R. Anarchy, State, and Utopia; Basic Books: New York, NY, USA, 1974; p. 41.

15. Véliz, C. Moral zombies: Why algorithms are not moral agents? AI Soc. 2021, 36, 487–497.

16. Hooker, B. Ideal Code, Real World: A Rule-Consequentialist Theory of Morality; Oxford University Press: Oxford, UK, 2000; pp. 59–65.

17. Bigman, Y.E.; Waytz, A.; Alterovitz, R.; Gray, K. Holding robots responsible: The elements of machine morality. Trends Cogn. Sci. 2019, 23, 365–368.

18. Ayad, R.; Plaks, J.E. Attributions of intent and moral responsibility to AI agents. Comput. Hum. Behav. Artif. Hum. 2025, 3, 100–107.

19. Brundage, M. Taking superintelligence seriously: Superintelligence: Paths, dangers, strategies by Nick Bostrom. Futures 2015, 72, 32–35.

20. Gray, K.; Wegner, D.M. Morality takes two: Dyadic morality and mind perception. In The Social Psychology of Morality: Exploring the Causes of Good and Evil; American Psychological Association: Washington, DC, USA, 2012; pp. 109–127.

21. Sharkey, A.J.; Sharkey, N. Granny and the robots: Ethical issues in robot care for the elderly. Ethics Inf. Technol. 2010, 14, 27–40.

22. Xu, X. Growth or Decline: Christian Virtues and Artificial Moral Advisors. Stud. Christ. Ethics 2025, 38, 46–62.

23. Giubilini, A.; Savulescu, J. The artificial moral advisor. The “ideal observer” meets artificial intelligence. Philos. Technol. 2018, 31, 169–188.

24. Constantinescu, M.; Vică, C.; Uszkai, R.; Voinea, C. Blame it on the AI? On the moral responsibility of artificial moral advisors. Philos. Technol. 2022, 35, 35.

25. Landes, E.; Voinea, C.; Uszkai, R. Rage against the authority machines: How to design artificial moral advisors for moral enhancement. AI Soc. 2024, 40, 2237–2248.

26. Floridi, L. Distributed morality in an information society. Sci. Eng. Ethics 2013, 19, 727–743.

27. Binns, R. Fairness in Machine Learning: Lessons from Political Philosophy. In Proceedings of the 2018 Conference on Fairness, Accountability, and Transparency, New York, NY, USA, 23–24 February 2018; pp. 149–159.

28. Gibert, M. The Case for Virtuous Robots. AI Ethics 2023, 3, 135–144.

29. Stenseke, J. On the computational complexity of ethics: Moral tractability for minds and machines. Artif. Intell. Rev. 2024, 57, 90.

30. Ouyang, L.; Wu, J.; Jiang, X.; Almeida, D.; Wainwright, C.L.; Mishkin, P.; Zhang, C.; Agarwal, S.; Slama, K.; Ray, A.; et al. Training Language Models to Follow Instructions with Human Feedback. arXiv 2022, arXiv:2203.02155.

31. Rafailov, R.; Sharma, A.; Mitchell, E.; Manning, C.D.; Ermon, S.; Finn, C. Direct preference optimization: Your language model is secretly a reward model. Adv. Neural Inf. Process. Syst. 2023, 36, 53728–53741.

32. Emelin, D.; Le Bras, R.; Hwang, J.D.; Forbes, M.; Choi, Y. Moral Stories: Situated Reasoning about Norms, Intents, Actions, and their Consequences. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, Punta Cana, Dominican Republic, 7–11 November 2021; Association for Computational Linguistics: Stroudsburg, PA, USA; pp. 698–718.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).