2. Related Works

Deep learning approaches have shown strong performance in static sign recognition tasks. For instance, recent work by Barbhuiya et al. applied modified CNN architectures based on AlexNet and VGG16 for recognizing ASL hand gestures, including both alphabets and numerals. Their system achieved a high accuracy of 99.82% using a multiclass SVM classifier for final prediction [3]. Importantly, the model was trained and evaluated on real data, using both leave-one-subject-out and random split cross-validation, and demonstrated competitive performance even on low-resource hardware setups. While their study highlights the effectiveness of CNNs for hand gesture recognition, it relies entirely on manually collected and annotated real-world datasets.

Previous research on American Sign Language recognition has explored both classical machine learning techniques and deep learning architectures. In one such study, Jain et al. investigated the use of Support Vector Machines (SVM) with various kernels and CNNs with different filter sizes and depths for recognizing ASL signs [4]. Their experiments demonstrated that a double-layer CNN with an optimal filter size of 8 × 8 achieved a recognition accuracy of 98.58%, outperforming SVM-based models. The results also highlighted the importance of architectural tuning and hyperparameter optimization in improving model performance.

Previous research has explored the use of AI-generated images to augment datasets for SLR. In our earlier work, we proposed that artificially generated images can serve as a scalable solution for training SLR models, particularly in scenarios where data collection is difficult or where underrepresented sign languages lack sufficient annotated examples. This approach has the potential to enrich existing datasets, improve model generalization, and increase inclusivity in SLR systems by simulating a broader variety of gestures and signing styles. A similar use of GenAI to address dataset incompleteness was proposed by Lan et al., where a GenAI-based Data Completeness Augmentation Algorithm was applied in the healthcare domain to improve training data through a “Quest → Estimate → Tune-up” process [5]. While their work focused on structured data in smart healthcare applications, our approach leverages generative video synthesis to augment image datasets in SLR tasks, addressing similar challenges of limited and imbalanced data.

Recent work in other domains has similarly explored the potential of GenAI to overcome data scarcity. For instance, Kasimalla et al. [6] investigated the use of GANs and GenAI to generate synthetic fault data for microgrid protection systems, a domain where transient fault data is both rare and privacy-sensitive. Their findings demonstrated that synthetic data, when statistically validated and labeled, can significantly improve model robustness and accuracy. While the application domain differs, the underlying challenge (limited access to diverse, labeled data) is shared, and their methodology reinforces the viability of synthetic data generation for high-stakes machine learning tasks, including SLR.

GenAI techniques have gained traction across various domains facing data scarcity. For example, the challenge of limited annotated X-ray datasets was addressed by introducing synthetic defects into real radiographs using a Scalable Conditional Wasserstein GAN. By strategically injecting synthetic defects based on noise and location constraints, the authors improved defect detection performance by 17% over baselines trained on real data alone. This supports the broader applicability of GenAI-generated synthetic datasets in enhancing machine learning models in data-constrained environments, including gesture recognition and SLR [7].

Other recent studies have explored the use of deep learning methods, particularly CNNs, for recognizing static ASL gestures. One such work developed a CNN-based application capable of detecting ASL alphabets in real time using webcam input, achieving an accuracy of around 98%. The system utilized a dataset of static hand gesture images that were preprocessed and fed into the model, enabling live prediction of individual letters. While the study focused on static alphabets rather than continuous sign sequences, it demonstrates the effectiveness of CNN architectures for sign recognition tasks and highlights the potential of computer vision techniques for real-time applications in accessibility technologies [8].

Many other recent SLR studies, covering both continuous and static recognition, have reported accuracies above 95%, demonstrating the advances in the field. For instance, Al Ahmadi et al. proposed a hybrid CNN–TCN model evaluated on British and American sign datasets, achieving around 95.31% accuracy [9]. Another study employed five state-of-the-art deep learning models (ResNet-50, EfficientNet, ConvNeXt, AlexNet, and Vision Transformer) to recognize the ASL alphabet using a large dataset of over 87,000 images. The best-performing model, ResNet-50, achieved 99.98% accuracy, while EfficientNet and ConvNeXt also surpassed 99.5% [10]. In Indian Sign Language recognition, a two-stream Graph Convolutional Network, fusing joint and bone data, achieved 98.08% Top-1 accuracy on the CSL-500 dataset [11]. Moreover, a deep hybrid model using CNN and hybrid optimizers (HO-based CNNSa-LSTM) obtained 98.7% accuracy, significantly outperforming conventional CNN, RNN, and LSTM baselines [12].

4. Research Methodology

To establish a fair baseline for evaluation, we began by training the SLR model using the original dataset, without any enhancements or synthetic data. The dataset was partitioned in the following way: 87.5% of the total data was allocated for training, while the remaining 12.5% was reserved for testing and evaluation purposes. For each letter in the ASL alphabet included in the dataset, a total of 2000 images were available. Of these, 1750 images per letter were used for training the model, and 250 images per letter were used to test the model’s performance. The purpose of this initial training phase was to obtain a reference point or baseline performance of the model using only the original dataset. These baseline results were essential for comparative analysis, allowing us to later evaluate the impact of augmenting the dataset with additional generated frames.
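The per-letter partition described above can be sketched as follows. This is a minimal illustration, not the script used in the study: the function name `split_per_letter`, the file names, and the seeded random shuffle are all assumptions, since the text does not state how the 1750 training images were selected.

```python
import random

def split_per_letter(images_by_letter, n_train=1750, seed=0):
    """Split each letter's images into train/test lists.

    Assumption: images are shuffled with a fixed seed before splitting;
    the selection rule actually used in the study is not stated.
    """
    rng = random.Random(seed)
    train, test = {}, {}
    for letter, paths in images_by_letter.items():
        shuffled = list(paths)
        rng.shuffle(shuffled)
        train[letter] = shuffled[:n_train]   # 1750 of 2000 = 87.5%
        test[letter] = shuffled[n_train:]    # remaining 250 = 12.5%
    return train, test

# Hypothetical file names, 2000 images per letter as in the dataset description.
images = {letter: [f"{letter}_{i:04d}.jpg" for i in range(2000)] for letter in "ABC"}
train, test = split_per_letter(images)
print(len(train["A"]), len(test["A"]))
```

Splitting letter by letter keeps the class balance identical in both partitions, which matches the fixed per-letter counts reported above.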

After that, we used Adobe Firefly to generate numerous videos of hand gestures that represent letters in ASL. We verified each video ourselves, and when the result was not good enough, we generated further videos by altering the prompt or the seed. Once a generated video was good enough, we extracted frames from it and edited them to resemble the images in the real dataset. This process is described in Figure 4.

The following tools and libraries were utilized throughout the study to implement, train, and evaluate the sign language recognition pipeline:

Python 3.11.8: Served as the primary programming language for scripting, data manipulation, and integration of various components in the workflow.

TensorFlow 2.19.0 and Keras 3.10.0: Used for building, training, and evaluating the CNN model. Keras, as a high-level API, enabled rapid prototyping, while TensorFlow provided the backend computational support. The evaluation (accuracy, loss, validation accuracy, and validation loss) was provided through Keras’s terminal output.

Matplotlib 3.10.3 and SciPy 1.15.3: Used for visualization and statistical analysis. Matplotlib helped plot accuracy and loss curves to monitor training progress, while SciPy supported data processing and performance evaluation tasks.

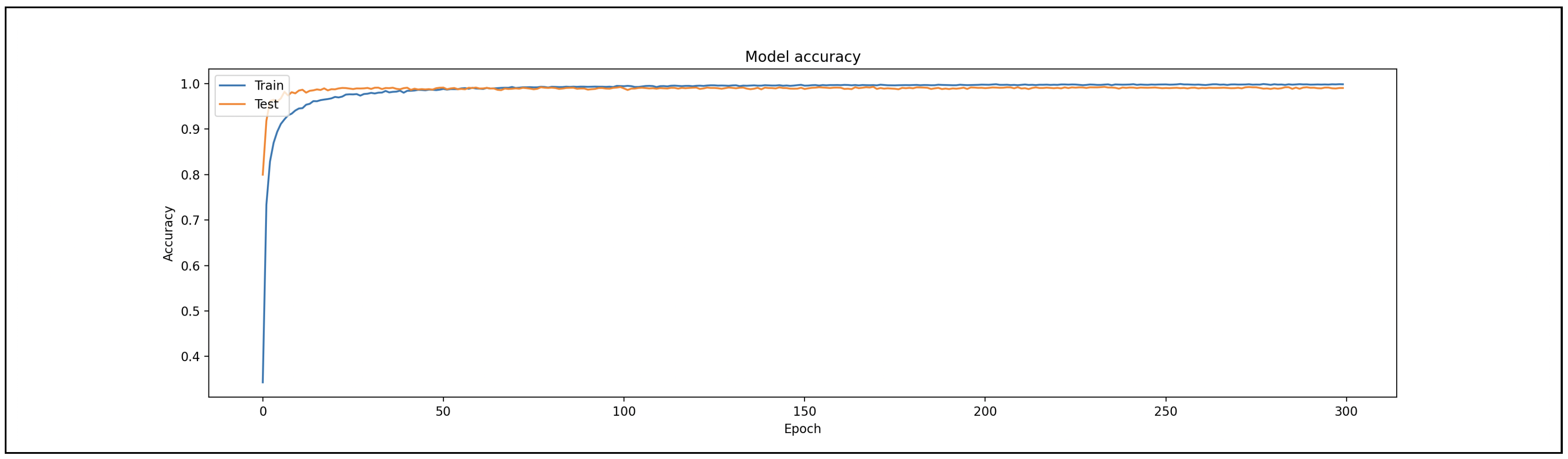

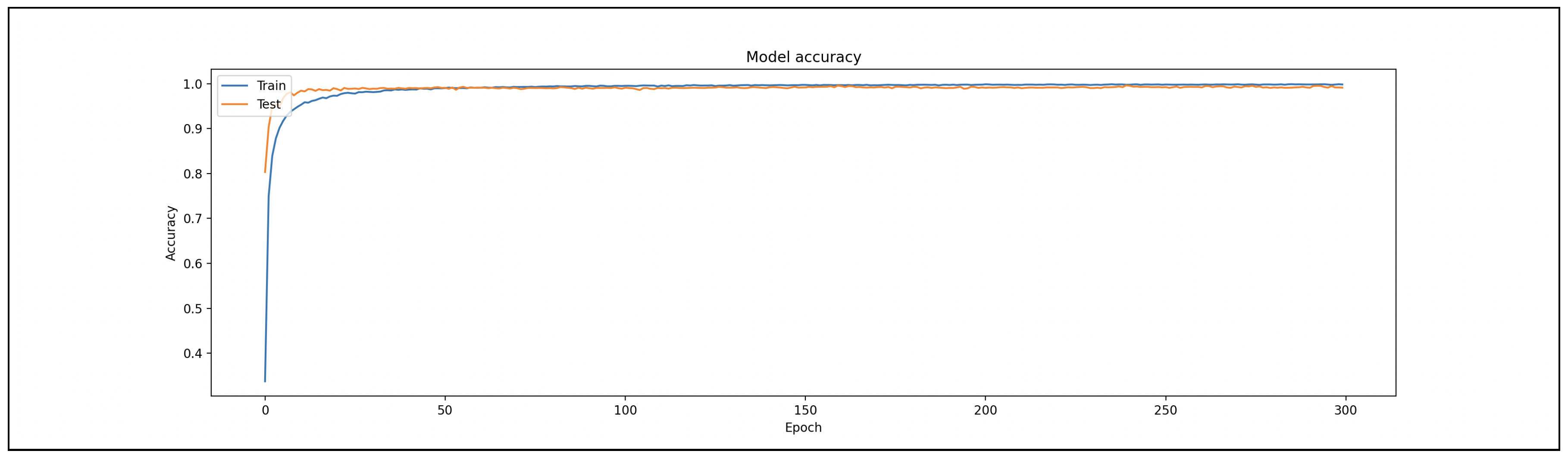

To generate the data presented in the tables, the model was trained five times, using two different training durations: 25 and 35 epochs, respectively. This approach allowed us to observe the model’s behavior and performance consistency across multiple runs. During each training session, the script recorded key performance metrics after every epoch, including training accuracy, validation accuracy, training loss, and validation loss. However, for the purpose of analysis and comparison, we extracted and reported only the metrics from the final epoch of each run. Moreover, to check whether the model overfits, we trained it for 300 epochs in each experiment and inspected the resulting Matplotlib plots.
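Extracting the final-epoch metrics from several runs can be sketched as below. The sketch assumes each run's metrics are stored as a dictionary mapping a metric name to a list of per-epoch values, which is the layout of the `history` attribute returned by Keras `Model.fit`. The helper names and the numeric values are purely illustrative and are not results from the study; averaging across runs is an added convenience, not something the text reports.

```python
from statistics import mean

def final_epoch_metrics(history):
    """Return the last recorded value of every metric in one run."""
    return {name: values[-1] for name, values in history.items()}

def mean_final_metrics(histories):
    """Average the final-epoch metrics over several runs (an assumption;
    the text only states that final-epoch values were reported)."""
    finals = [final_epoch_metrics(h) for h in histories]
    return {name: mean(f[name] for f in finals) for name in finals[0]}

# Toy placeholder values for two runs of two epochs each (not real results).
runs = [
    {"accuracy": [0.50, 0.75], "val_accuracy": [0.25, 0.50],
     "loss": [1.0, 0.5], "val_loss": [2.0, 1.0]},
    {"accuracy": [0.50, 0.25], "val_accuracy": [0.25, 0.50],
     "loss": [1.0, 1.5], "val_loss": [2.0, 2.0]},
]
print(final_epoch_metrics(runs[0]))
print(mean_final_metrics(runs))
```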

4.4. Dataset Augmentation

To enhance the variability and representation of the dataset for selected ASL letters, we incorporated the synthetic frames into the original data splits. From the total of 300 frames extracted per letter, 87.5% were allocated to the training set and the remaining 12.5% were assigned to the validation set. This distribution mirrored the split used in the original dataset, ensuring consistency across experiments.
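Because 87.5% of 300 is not a whole number, the split of the synthetic frames needs a rounding rule. The short sketch below assumes the training share is rounded up, which reproduces both the 1750/250 split of the original data and the 263/37 split of the synthetic frames. The function name `split_counts` is hypothetical.

```python
import math

def split_counts(total, train_fraction=0.875):
    """Return (train, held_out) counts, rounding the training share up.

    Assumption: rounding up is inferred from the reported counts,
    not stated explicitly in the text.
    """
    n_train = math.ceil(total * train_fraction)
    return n_train, total - n_train

print(split_counts(2000))  # (1750, 250): original images per letter
print(split_counts(300))   # (263, 37): synthetic frames per letter
```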

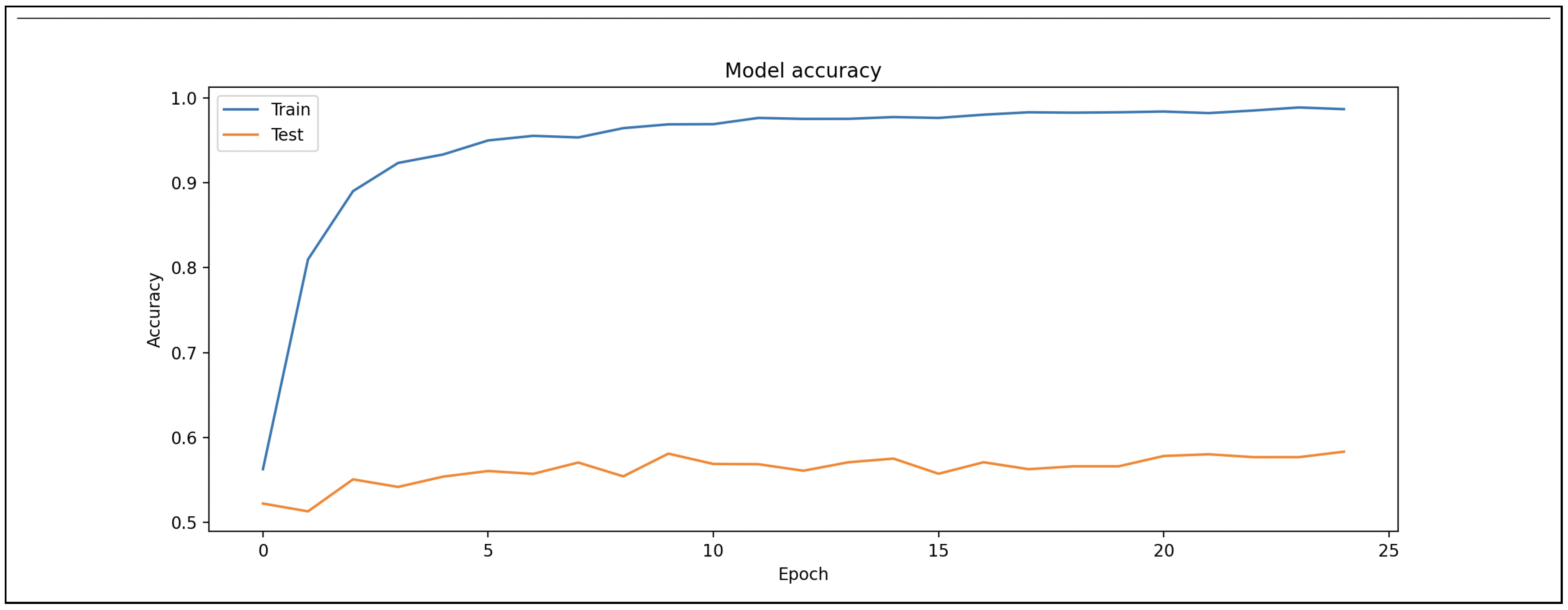

In the initial experiment, we evaluated the impact of replacing the training data for the 13 selected letters (A, B, C, G, K, M, O, P, Q, R, S, U, V) entirely with the newly generated synthetic frames, while keeping the original test set unchanged. The goal was to observe whether synthetic training data alone, without modifying the test set, could lead to improved model performance. However, this approach resulted in poor generalization during validation, as indicated by the following metrics:

The results in Figure 11 and Figure 12 suggest that the synthetic training data, although realistic in appearance, introduced a domain gap that the model could not effectively bridge when evaluated on real data.

To address this performance degradation, we adopted a data grafting strategy, in which a portion of the original dataset was retained and combined with the generated content. Specifically, for each of the 13 letters, 263 images (87.5%) in the training set were replaced with synthetic images; 37 images (12.5%) in the test set were also replaced with synthetic ones. This hybrid approach aimed to preserve the natural data distribution while introducing synthetic variability to improve model robustness. We then conducted two sub-experiments:

- Sub-experiment A: Used the augmented training set (with synthetic images) while keeping the original test set unchanged.

- Sub-experiment B: Used both the augmented training and test sets, incorporating the synthetic frames into both splits.

These experiments allowed us to analyze the impact of synthetic data under both isolated and combined augmentation conditions.
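The grafting step and the two sub-experiments can be sketched for a single letter as follows. This is an illustration under stated assumptions, not the study's implementation: the function name `graft`, the file names, and the random choice of which real images are replaced are hypothetical, since the text specifies only how many images are swapped.

```python
import random

def graft(real_paths, synthetic_paths, seed=0):
    """Replace len(synthetic_paths) real images with the synthetic ones.

    Assumption: the real images to drop are chosen at random with a fixed
    seed; the set size stays the same because images are replaced, not added.
    """
    n_replace = len(synthetic_paths)
    if n_replace > len(real_paths):
        raise ValueError("more synthetic images than real images to replace")
    rng = random.Random(seed)
    kept = rng.sample(real_paths, len(real_paths) - n_replace)
    return kept + list(synthetic_paths)

# Hypothetical file names for one letter.
real_train = [f"real_train_{i}.jpg" for i in range(1750)]
real_test = [f"real_test_{i}.jpg" for i in range(250)]
synthetic = [f"synth_{i}.jpg" for i in range(300)]

grafted_train = graft(real_train, synthetic[:263])  # 263 synthetic training images
grafted_test = graft(real_test, synthetic[263:])    # 37 synthetic test images

# Sub-experiment A: grafted training set, original test set.
sub_experiment_a = (grafted_train, real_test)
# Sub-experiment B: grafted training set and grafted test set.
sub_experiment_b = (grafted_train, grafted_test)
print(len(grafted_train), len(grafted_test))
```

Using disjoint slices of the synthetic frames for the two splits keeps any generated frame from appearing in both training and test data.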