Intelligent Assessment of Scientific Creativity by Integrating Data Augmentation and Pseudo-Labeling

Abstract

1. Introduction

2. Related Work

2.1. Scientific Creativity and Its Measurement and Assessment

2.2. Study of Data Augmentation

2.3. Semi-Supervised Learning and Pseudo-Labeling

2.4. Machine Learning Research

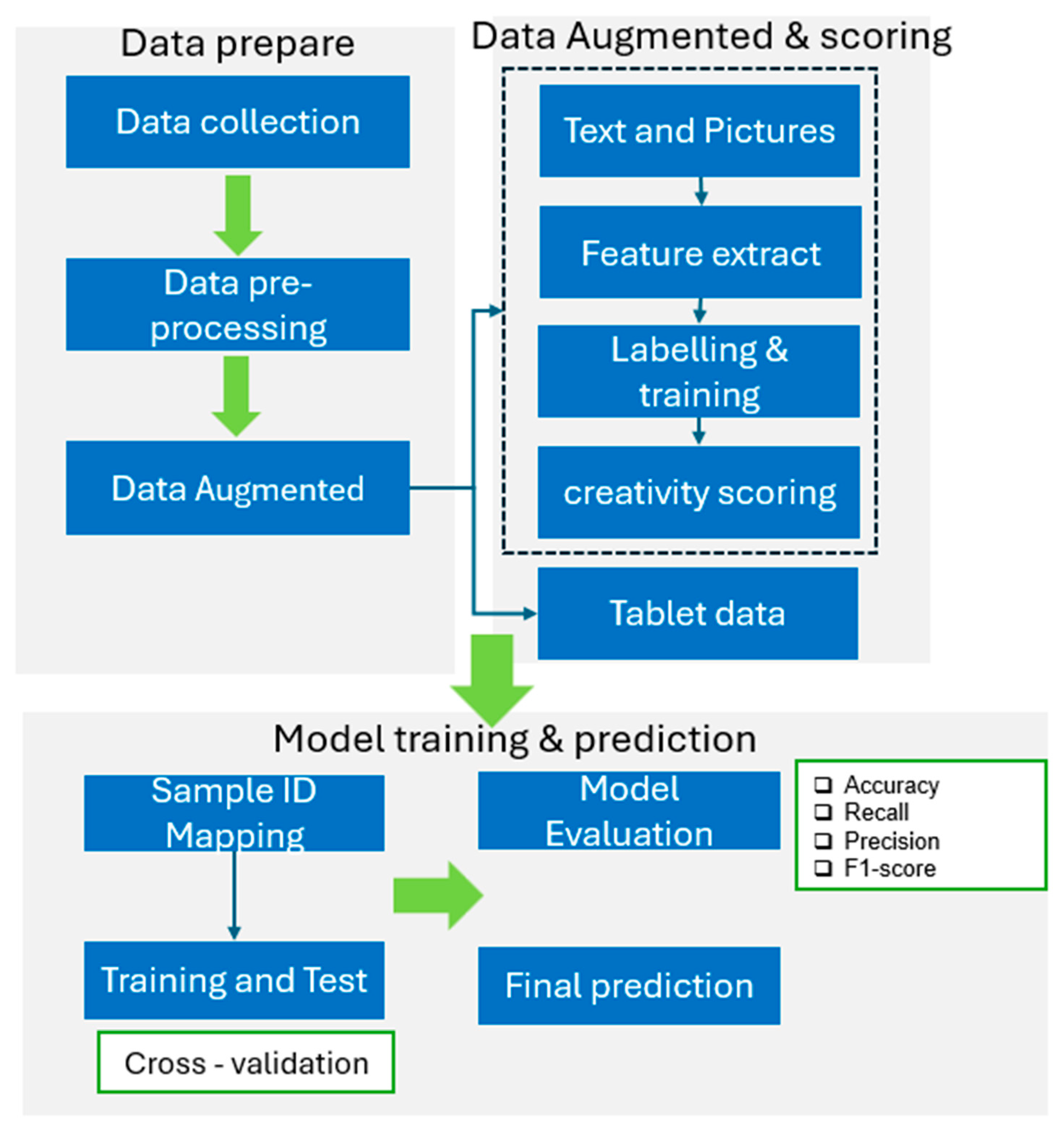

3. Methodology

3.1. Data Preparation

3.2. Feature Engineering

3.3. Data Augmentation Strategies

3.3.1. Data Augmentation for Structured Data

3.3.2. Data Augmentation for Image and Text

- Definition of original data: Starting from a bicycle outline drawn in lines, students could draw on it or add text annotations, producing original samples that mix graphics and text.

- Graphic–text linkage augmentation strategies: For image augmentation, the OpenCV (v4.10.0) and Albumentations (v2.0.8) libraries were used to apply geometric transformations (e.g., rotation, scaling, translation), brightness adjustment, and noise addition, generating diversified image samples.

- Each augmented image was assigned a unique image augmentation ID (e.g., rotate_15, scale_1.1) to track the transformation applied. PaddleOCR was used to extract the handwritten text from the images. Because Chinese handwritten characters are difficult to recognize reliably, text augmentation strategies were introduced, including synonym replacement (e.g., “fire-spouting port” → “jet port”), sentence pattern rewriting, and simulation of OCR recognition errors (typos). Each augmented text was assigned a unique text augmentation ID (e.g., synonym_1, ocrerror_1), which was paired with the image augmentation ID to keep graphics and text consistent.

- A random sample of the augmented data was manually reviewed to verify its plausibility and validity, ensuring that the data quality was adequate for subsequent model training.

- To ensure the one-to-one correspondence of augmented graphic–text data, facilitate model input, and enable subsequent analysis, a hierarchical numbering system was constructed. The numbering format is as follows: Original ID_Image Augmentation ID_Text Augmentation ID, for example, Bike_001_rotate_15_synonym_1. In addition, a structured table was established to record the numbering mapping relationship of each sample, with fields including the following: Number, Original ID, Image Path, Text Content, Image Augmentation Parameters, and Text Augmentation Method (see Table 1).
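The text-side augmentation and the hierarchical numbering scheme can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' pipeline: the synonym lexicon and OCR confusion table are hypothetical English stand-ins (the study works with Chinese handwriting, where confusions would be between visually similar characters), the `./images/<number>.png` layout is assumed from the example paths in Table 1, and all function and class names are hypothetical. Image augmentation itself (OpenCV/Albumentations) is omitted.

```python
import random
from dataclasses import dataclass

# Hypothetical resources: the paper does not publish its synonym lexicon or
# OCR confusion table, so these stand-ins are for illustration only.
SYNONYMS = {"fire-spouting port": ["jet port"]}
OCR_CONFUSIONS = {"o": "0", "l": "1", "S": "5"}


def synonym_replace(text, synonyms, rng):
    """Synonym replacement: swap each known phrase for a randomly chosen synonym."""
    for phrase, options in synonyms.items():
        if phrase in text:
            text = text.replace(phrase, rng.choice(options))
    return text


def simulate_ocr_errors(text, confusions, rate, rng):
    """OCR error simulation: replace confusable characters with probability `rate`."""
    return "".join(
        confusions[ch] if ch in confusions and rng.random() < rate else ch
        for ch in text
    )


def sample_number(original_id, image_aug_id, text_aug_id):
    """Hierarchical number: Original ID_Image Augmentation ID_Text Augmentation ID."""
    return f"{original_id}_{image_aug_id}_{text_aug_id}"


@dataclass
class MappingRow:
    """One row of the numbering mapping table (fields follow the list above)."""
    number: str
    original_id: str
    image_path: str
    text_content: str
    image_aug_params: str
    text_aug_method: str


def make_row(original_id, image_aug_id, text_aug_id, text, image_aug_params, text_aug_method):
    number = sample_number(original_id, image_aug_id, text_aug_id)
    # Assumed file layout: one augmented image file per hierarchical number.
    return MappingRow(number, original_id, f"./images/{number}.png", text,
                      image_aug_params, text_aug_method)


rng = random.Random(0)
text = synonym_replace("add a fire-spouting port at the rear", SYNONYMS, rng)
row = make_row("Bike_001", "rotate_15", "synonym_1", text, "angle=15", "synonym replacement")
print(row.number)        # Bike_001_rotate_15_synonym_1
print(row.text_content)  # add a jet port at the rear
```

Because the image and text augmentation IDs are embedded in the sample number, the same key links the image file, the augmented text, and the mapping-table row, which is what keeps each graphic–text pair one-to-one.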

3.4. Semi-Supervised Learning: Pseudo-Label Generation and Model Training Process

3.4.1. Initial Manual Labels


- Image pseudo-labels: YOLOv8 is used to train a component detection model, which is combined with a classifier trained on the initially labeled data to predict the unlabeled images. Predictions whose class confidence score exceeds the threshold θ (set to 0.5) are selected and assigned as pseudo-labels.


- Text pseudo-labels: First, a BERT classifier is trained using manually labeled texts, which is then used to predict unlabeled samples. Results with a confidence score higher than the threshold θ (set to 0.6) are included in the pseudo-label set and, together with the original labeled data, form a new training set to update the model parameters. The thresholds were determined by a grid search on the validation set (θ ∈ {0.3, 0.4, …, 0.8}). The values θ_image = 0.5 and θ_text = 0.6 yielded the highest F1-scores for the image and text tasks, respectively.
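The confidence filter and the threshold grid search described above can be sketched as follows. This is a minimal sketch under assumptions: `validation_f1` is a hypothetical callback standing in for the paper's unspecified procedure (for instance, retraining with the pseudo-labels kept at a given θ and returning the F1-score on the validation set), and the sample IDs and labels in the usage line are invented for illustration.

```python
# Candidate thresholds θ ∈ {0.3, 0.4, ..., 0.8}; rounding avoids float drift
# such as 0.6000000000000001.
THETA_GRID = [round(0.3 + 0.1 * i, 1) for i in range(6)]


def select_pseudo_labels(predictions, theta):
    """Keep (sample_id, label) pairs whose confidence strictly exceeds theta."""
    return [(sid, label) for sid, label, conf in predictions if conf > theta]


def grid_search_threshold(thetas, validation_f1):
    """Return (theta, F1) with the highest validation F1; the first one wins ties."""
    best_theta, best_f1 = thetas[0], float("-inf")
    for theta in thetas:
        f1 = validation_f1(theta)  # hypothetical: retrain at theta, score on validation set
        if f1 > best_f1:
            best_theta, best_f1 = theta, f1
    return best_theta, best_f1


preds = [("s1", "wheel", 0.92), ("s2", "pedal", 0.55), ("s3", "seat", 0.41)]
print(select_pseudo_labels(preds, theta=0.5))  # [('s1', 'wheel'), ('s2', 'pedal')]
```

Running `grid_search_threshold` separately for the image and text tasks yields one threshold per modality, which matches how the text reports θ_image and θ_text independently.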

3.4.2. Pseudo-Label

Algorithm 1: Pseudo-label generation for semi-supervised learning

```text
Data:   D_l = labeled dataset (image/text)
        D_u = unlabeled dataset (image/text)
        θ   = confidence threshold          # θ_image = 0.5, θ_text = 0.6
        T   = task-specific module (image/text)
Result: D_l = final enlarged training set
        M   = trained model

Initialize model M
terminate = false
while not terminate do
    M = Train(T, D_l)                       # train model using task-specific module
    P = Inference(T, M, D_u)                # generate pseudo-labels and confidences
    for x in D_u do
        if conf(x, P) > θ then
            D_l = D_l ∪ {(x, P(x))}         # add pseudo-labeled sample to training set
            D_u = D_u \ {x}                 # remove it so it is not added again
        end if
    end for
    terminate = CheckConvergence(M)
end while
return D_l, M
```
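Algorithm 1 can be sketched in runnable form as below. This is a toy illustration under explicit assumptions, not the study's implementation: a one-dimensional nearest-centroid classifier stands in for the task-specific module T (the paper uses YOLOv8 plus a classifier for images and BERT for text), confidence is a softmax over negative squared distances to the class centroids, and `CheckConvergence` is approximated by stopping when a round adds no new pseudo-labels or after `max_rounds`. All names and data are hypothetical.

```python
import math


def train(labeled):
    """Train(T, D_l): fit one centroid per class on 1-D features (toy module T)."""
    sums, counts = {}, {}
    for x, y in labeled:
        sums[y] = sums.get(y, 0.0) + x
        counts[y] = counts.get(y, 0) + 1
    return {y: sums[y] / counts[y] for y in sums}


def infer(model, x):
    """Inference(T, M, x): return (predicted label, softmax confidence)."""
    scores = {y: -(x - c) ** 2 for y, c in model.items()}
    top = max(scores.values())
    weights = {y: math.exp(s - top) for y, s in scores.items()}
    label = max(weights, key=weights.get)
    return label, weights[label] / sum(weights.values())


def self_train(labeled, unlabeled, theta, max_rounds=10):
    """Grow D_l with confident pseudo-labels taken from D_u."""
    labeled, unlabeled = list(labeled), list(unlabeled)
    for _ in range(max_rounds):
        model = train(labeled)
        remaining, added = [], 0
        for x in unlabeled:
            label, conf = infer(model, x)
            if conf > theta:
                labeled.append((x, label))  # D_l = D_l ∪ {(x, P(x))}
                added += 1
            else:
                remaining.append(x)         # stays in D_u for the next round
        unlabeled = remaining
        if added == 0:                      # stand-in for CheckConvergence(M)
            break
    return labeled, train(labeled), unlabeled


D_l = [(0.0, 0), (0.2, 0), (5.0, 1), (5.2, 1)]
D_u = [0.5, 4.8, 2.6]
final_labeled, M, leftover = self_train(D_l, D_u, theta=0.6)
print(len(final_labeled), leftover)  # 6 [2.6]
```

In this toy run, the points near each centroid are pseudo-labeled in the first round, while the ambiguous point 2.6 never clears θ = 0.6 and is left unlabeled. Removing accepted samples from the unlabeled pool prevents the same sample from being appended on every iteration.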

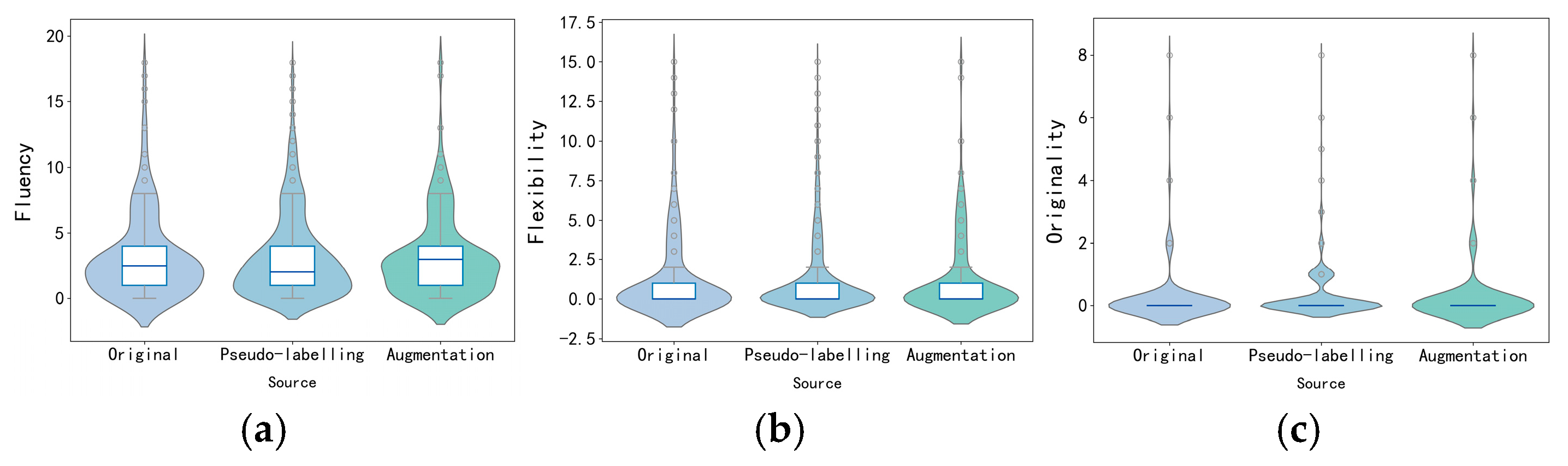

3.5. Semi-Supervised Automated Calculation of Scientific Creativity

- Label extraction and deduplication: Conceptual labels from both the images and the texts in each student’s response are extracted and merged into a label set $L_s = \{t_1, t_2, \ldots, t_n\}$. If the same label appears in both the image and the text, only one instance is retained to avoid redundant counting.

- Semantic clustering and dimensional score calculation:

- Fluency is measured by the total number of labels. It is defined as follows:

  $$\text{Fluency}_s = |L_s| = n$$

- Flexibility is measured by the number of label categories, and its calculation method is as follows:

  $$\text{Flexibility}_s = \left|\{\, c(t) : t \in L_s \,\}\right|$$

  where $c(t)$ denotes the semantic category (cluster) to which label $t$ is assigned.

- Originality is scored based on the frequency $f(t)$ with which label $t$ appears across all samples: a label with $f(t) < 5\%$ receives 2 points, a label with $5\% \le f(t) < 10\%$ receives 1 point, and all other labels receive 0 points. The originality score of each sample is the sum of the scores of all labels in its label set:

  $$\text{Originality}_s = \sum_{t \in L_s} o(t), \qquad o(t) = \begin{cases} 2, & f(t) < 0.05 \\ 1, & 0.05 \le f(t) < 0.10 \\ 0, & \text{otherwise} \end{cases}$$
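The three dimension scores described above can be computed from deduplicated label sets as in the sketch below. Assumptions, labeled plainly: the label-to-category mapping is taken as given (the paper's semantic clustering step is not specified), label frequency is interpreted as the share of samples whose label set contains the label, and the toy cohort and category names are invented for illustration.

```python
from collections import Counter


def label_set(image_labels, text_labels):
    """L_s: union of image and text labels; a label found in both is kept once."""
    return set(image_labels) | set(text_labels)


def fluency(labels):
    """Total number of distinct labels."""
    return len(labels)


def flexibility(labels, category_of):
    """Number of distinct semantic categories c(t) among the labels."""
    return len({category_of[t] for t in labels})


def label_frequencies(all_label_sets):
    """f(t): share of samples whose (already deduplicated) label set contains t."""
    counts = Counter(t for labels in all_label_sets for t in labels)
    n = len(all_label_sets)
    return {t: c / n for t, c in counts.items()}


def originality(labels, freq):
    """Sum of o(t): 2 if f(t) < 5%, 1 if 5% <= f(t) < 10%, otherwise 0."""
    score = 0
    for t in labels:
        if freq[t] < 0.05:
            score += 2
        elif freq[t] < 0.10:
            score += 1
    return score


# Toy cohort of 25 responses (illustrative, not the study's data).
student = label_set(["wheel", "jet port"], ["jet port", "solar panel"])
others = [{"wheel"} for _ in range(23)] + [{"wheel", "solar panel"}]
freq = label_frequencies([student] + others)
category_of = {"wheel": "structure", "jet port": "power", "solar panel": "power"}  # hypothetical clusters
print(fluency(student), flexibility(student, category_of), originality(student, freq))  # 3 2 3
```

Here "jet port" appears in 1 of 25 responses (4%, 2 points), "solar panel" in 2 of 25 (8%, 1 point), and "wheel" in all of them (0 points), giving an originality score of 3.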

3.6. Predictive Models of Scientific Creativity

3.6.1. Machine Learning Models

3.6.2. Model Training Procedure

3.6.3. Performance Metrics of Machine Learning Models

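- Accuracy: The proportion of all samples that are classified correctly. It is a reliable metric when the class distribution is balanced but may be misleading under class imbalance. The calculation formula is as follows:

  $$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

  where $TP$, $TN$, $FP$, and $FN$ denote the numbers of true positives, true negatives, false positives, and false negatives, respectively.

- Precision: The proportion of samples predicted as positive that are actually positive. A high precision indicates that the model rarely misclassifies negative samples as positive, but it does not reflect the model’s ability to identify all positive samples. The calculation method is as follows:

  $$\text{Precision} = \frac{TP}{TP + FP}$$

- Recall: The proportion of actual positive samples that the model correctly identifies. The calculation formula is as follows:

  $$\text{Recall} = \frac{TP}{TP + FN}$$

- F1-score: The harmonic mean of precision and recall, calculated as follows:

  $$F_1 = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$$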
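The four metrics defined above can be computed from confusion-matrix counts as in this minimal sketch. It assumes a binary task with a designated positive class (for example, high versus low scientific creativity); whether the study reports binary or class-averaged metrics is not stated here. Returning 0.0 when a denominator is zero is a common convention, chosen here as an assumption.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count TP, TN, FP, FN for the given positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    return tp, tn, fp, fn


def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall, and F1 from the confusion counts."""
    tp, tn, fp, fn = confusion_counts(y_true, y_pred, positive)
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


y_true = [1, 1, 1, 1, 0, 0]
y_pred = [1, 1, 0, 0, 0, 0]
print(classification_metrics(y_true, y_pred))
```

In this example the model never raises a false alarm (precision 1.0) but finds only half of the positives (recall 0.5), so F1 falls to about 0.667. This is the kind of precision/recall trade-off that accuracy alone can hide.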

4. Results

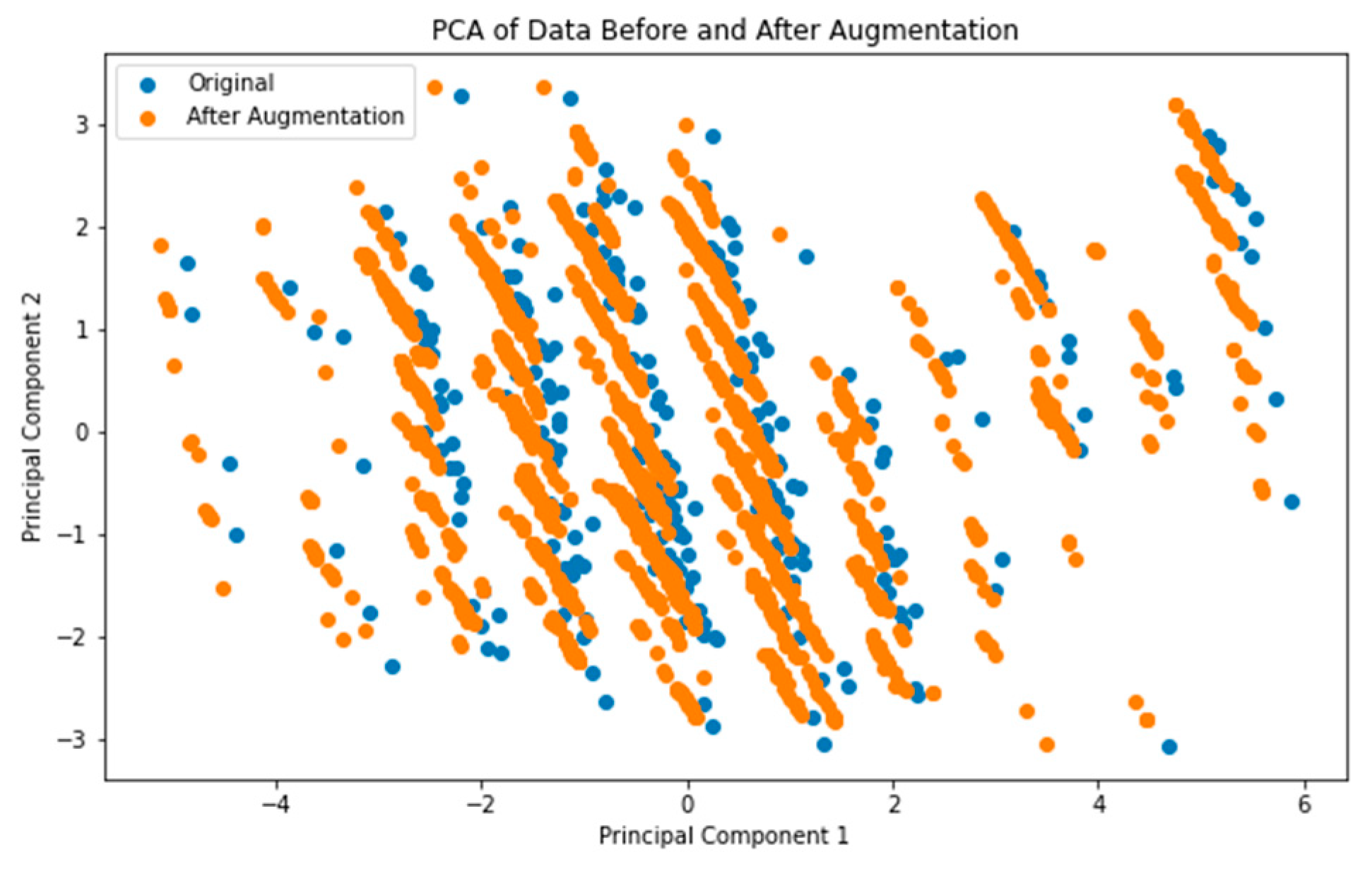

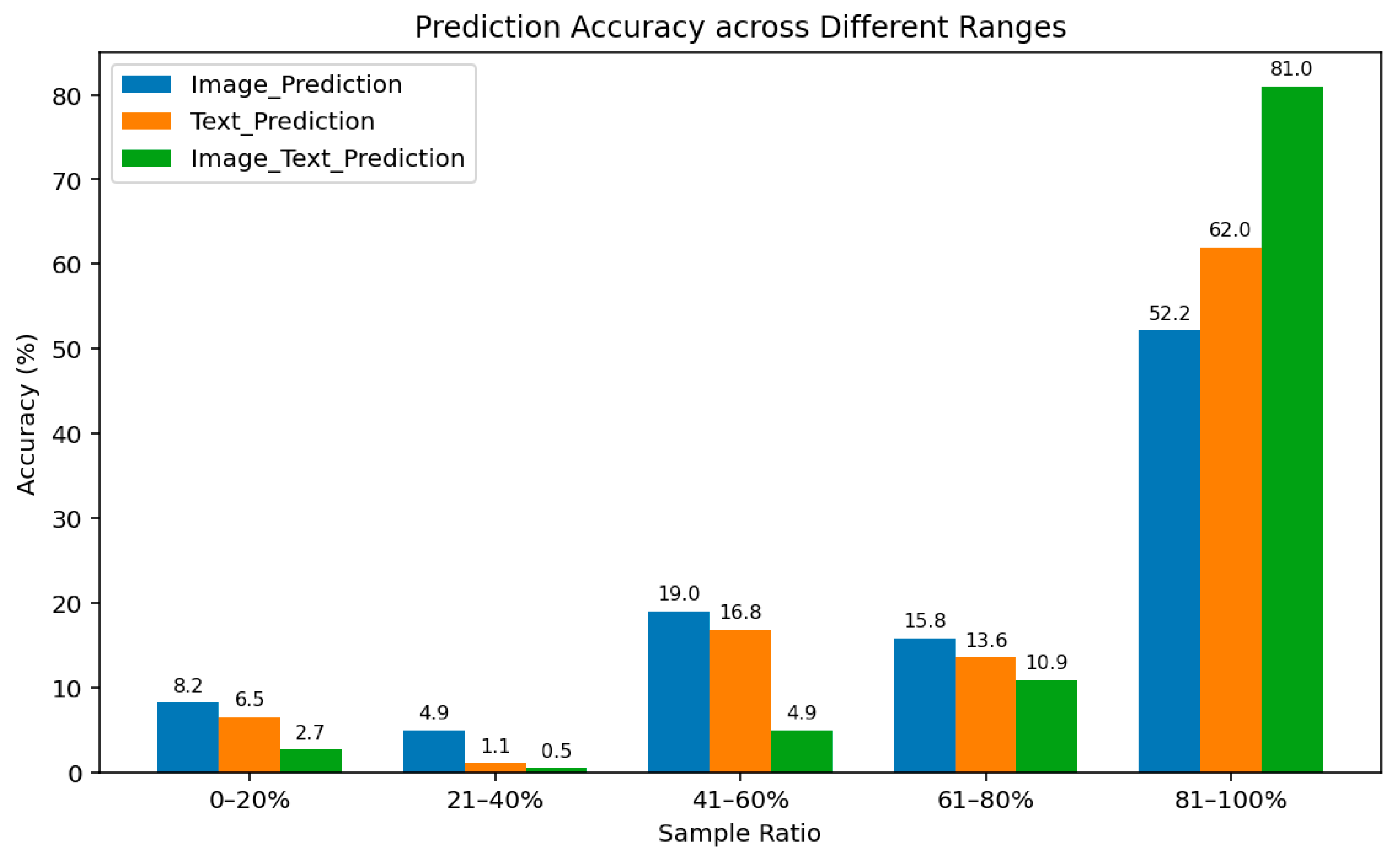

4.1. Data Augmentation

4.2. Pseudo-Labels

4.3. Data Augmentation and Pseudo-Labels

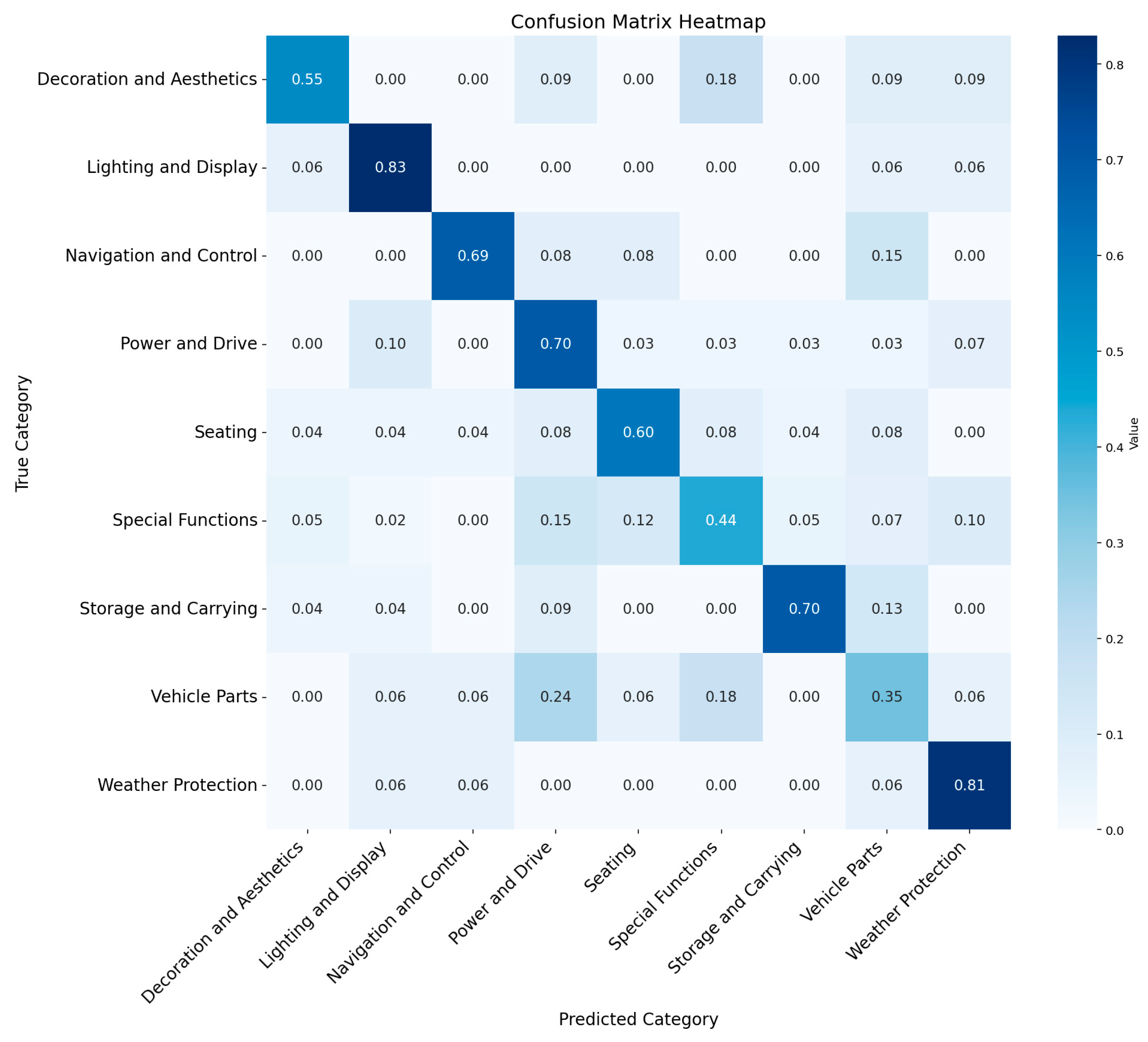

4.4. Machine Learning

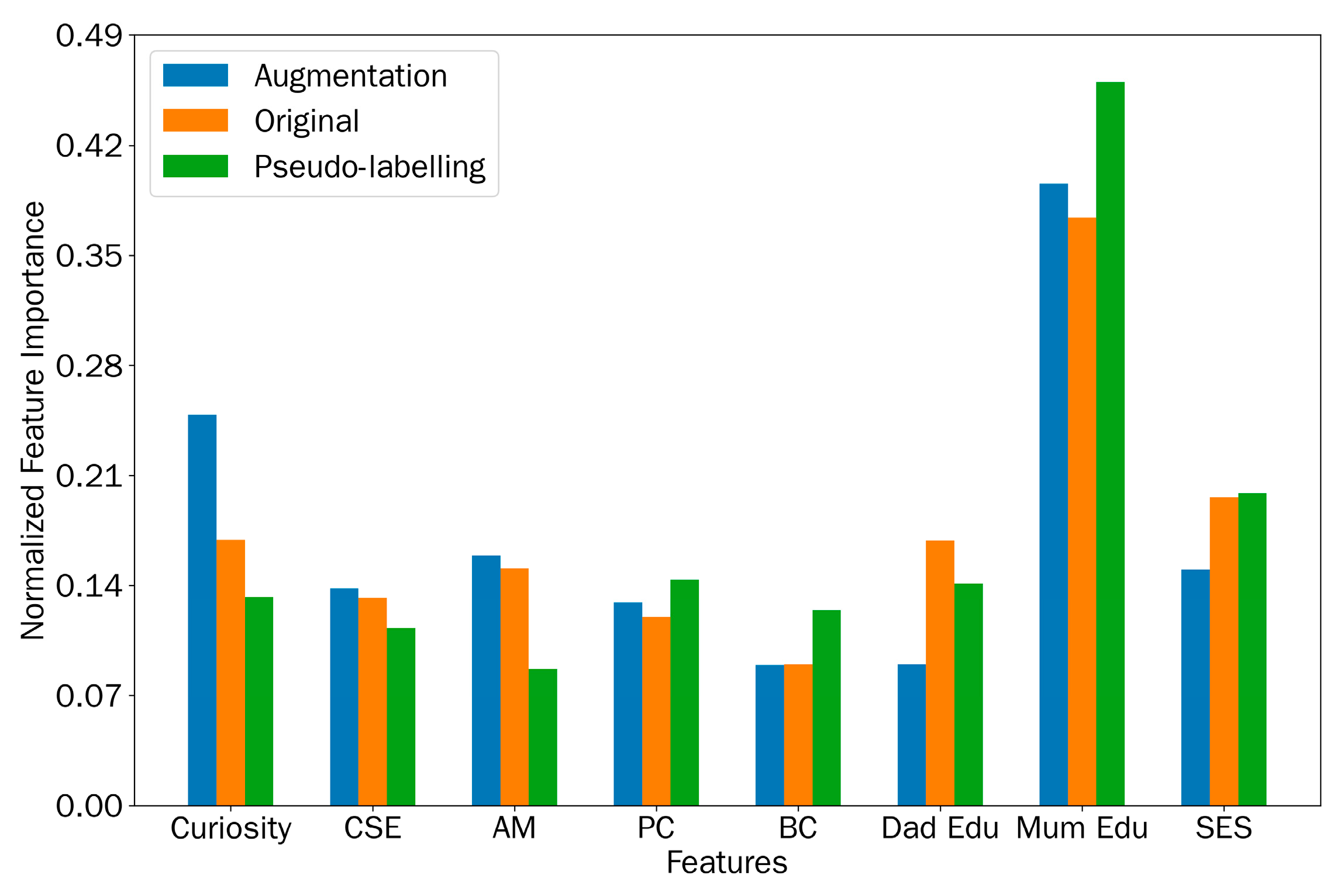

4.5. Importance of Model Features

4.6. Analysis of the Relationship Between Questionnaire Dimensions and Creativity Classifications

5. Discussion

5.1. Data Augmentation and Pseudo-Label

5.2. Performance Analysis of the Scientific Creativity Model

5.3. Feature Importance

5.4. Performance of High and Low Scientific Creativity

6. Conclusions

- SMOTE and EDA techniques effectively expand both questionnaire and image–text datasets, while the image–text alignment mechanism outperforms single modalities, confirming the advantages of multimodal fusion.

- Consistent performance gains—particularly in precision and recall—were observed across four mainstream prediction models, suggesting that the proposed method has good adaptability in predicting scientific creativity.

- Family environment, parental education level, and individual curiosity are identified as important predictors of scientific creativity, highlighting the interaction between internal and external factors.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AM | Autonomy support |
| PC | Psychological control |
| BC | Behavioral control |
| CSE | Creative self-efficacy |
| SES | Socioeconomic status |
| SMOTE | Synthetic Minority Oversampling Technique |
| EDA | Easy Data Augmentation |
| TTCT | Torrance Tests of Creative Thinking |
| C-SAT | Creative Scientific Ability Test |
| FSCT | Figural Scientific Creativity Test |
| TCT-DP | Test of Creative Thinking–Drawing Production |

Appendix A

| Model | Tuned Parameters (Grid) |
|---|---|
| Random Forest | n_estimators ∈ {100, 200}; max_depth ∈ {5, 10, None} |
| MLP | hidden_layer_sizes ∈ {(64,), (64, 32)}; learning_rate_init ∈ {0.001, 0.01}; early_stopping=True |
| XGBoost | n_estimators ∈ {100, 200}; learning_rate ∈ {0.05, 0.1}; subsample ∈ {0.8, 1.0} |
| LightGBM | n_estimators ∈ {100, 200}; learning_rate ∈ {0.05, 0.1}; subsample ∈ {0.8, 1.0} |
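The search spaces in the grid table above can be written as dictionaries mapping each parameter name to its candidate values, the same shape that scikit-learn's `GridSearchCV` accepts as `param_grid`. The sketch below is illustrative only: the appendix does not state how the grids were passed to each library or how many cross-validation folds were used, and `PARAM_GRIDS` and `expand_grid` are hypothetical names. It enumerates the candidate configurations with `itertools.product`, mirroring what an exhaustive grid search (or scikit-learn's `ParameterGrid`) iterates over.

```python
from itertools import product

# Search spaces transcribed from the grid table (parameter name -> candidates).
PARAM_GRIDS = {
    "Random Forest": {"n_estimators": [100, 200], "max_depth": [5, 10, None]},
    "MLP": {
        "hidden_layer_sizes": [(64,), (64, 32)],
        "learning_rate_init": [0.001, 0.01],
        "early_stopping": [True],
    },
    "XGBoost": {"n_estimators": [100, 200], "learning_rate": [0.05, 0.1], "subsample": [0.8, 1.0]},
    "LightGBM": {"n_estimators": [100, 200], "learning_rate": [0.05, 0.1], "subsample": [0.8, 1.0]},
}


def expand_grid(grid):
    """Enumerate every candidate configuration, as an exhaustive grid search would."""
    names = list(grid)
    return [dict(zip(names, values)) for values in product(*(grid[n] for n in names))]


for model_name, grid in PARAM_GRIDS.items():
    print(model_name, len(expand_grid(grid)))
```

The grids are small (6, 4, 8, and 8 candidates, respectively), so the total number of fits is dominated by the cross-validation fold count, which multiplies each of these figures.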

| Model | Core Hyperparameters | Final Values |
|---|---|---|
| Random Forest | n_estimators (number of trees) | 200 |
| | max_depth (tree depth) | 10 |
| | min_samples_split (min. samples to split) | 5 |
| Multilayer Perceptron | hidden_layer_sizes (hidden layer structure) | (64, 32) |
| | learning_rate_init (initial learning rate) | 0.01 |
| | batch_size | 32 |
| | max_iter (max iterations) | 300 |
| XGBoost | n_estimators (number of weak learners) | 200 |
| | max_depth | 5 |
| | learning_rate | 0.05 |
| | subsample (sampling rate) | 0.8 |
| LightGBM | n_estimators | 200 |
| | num_leaves | 63 |
| | learning_rate | 0.1 |
| | subsample | 0.8 |


Table 1. Numbering mapping of augmented graphic–text samples.

| ID | Original ID | Image–Text Path | Image Augmentation | Text Augmentation |
|---|---|---|---|---|
| Bike_001_rotate_15_synonym_1 | Bike_001 | ./images/Bike_001_rotate_15_synonym_1.png | Rotation | Synonym replacement |
| Bike_002_scale_1.1_rephrase_1 | Bike_002 | ./images/Bike_002_scale_1.1_rephrase_1.png | Scaling | Sentence pattern rewriting |
| Bike_003_noise_0.02_ocrerror_1 | Bike_003 | ./images/Bike_003_noise_0.02_ocrerror_1.png | Noise simulation | OCR error simulation |

| Type | Initial Dataset | Dataset Afterward |
|---|---|---|
| Original | 260 | 0 |
| Augmentation | 0 | 300 |
| Augmentation + Pseudo-labeling | 0 | 2000 |

| Model | Method | Accuracy | Precision | Recall | F1-Score | AUC-ROC |
|---|---|---|---|---|---|---|
| Random Forest | Original | 0.74 | 0.85 | 0.81 | 0.83 | 0.82 |
| | Augmentation | 0.88 | 0.95 | 0.89 | 0.92 | 0.93 |
| | Pseudo-labeling | 0.85 | 0.88 | 0.98 | 0.94 | 0.86 |
| MLP | Original | 0.72 | 0.81 | 0.83 | 0.82 | 0.75 |
| | Augmentation | 0.88 | 0.90 | 0.96 | 0.93 | 0.81 |
| | Pseudo-labeling | 0.80 | 0.81 | 0.92 | 0.87 | 0.80 |
| XGBoost | Original | 0.77 | 0.86 | 0.86 | 0.86 | 0.82 |
| | Augmentation | 0.85 | 0.93 | 0.87 | 0.90 | 0.89 |
| | Pseudo-labeling | 0.86 | 0.86 | 0.95 | 0.90 | 0.84 |
| LightGBM | Original | 0.74 | 0.85 | 0.81 | 0.83 | 0.79 |
| | Augmentation | 0.85 | 0.96 | 0.94 | 0.92 | 0.91 |
| | Pseudo-labeling | 0.87 | 0.86 | 0.97 | 0.91 | 0.85 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Weng, W.; Liu, C.; Zhao, G.; Song, L.; Zhang, X. Intelligent Assessment of Scientific Creativity by Integrating Data Augmentation and Pseudo-Labeling. Information 2025, 16, 785. https://doi.org/10.3390/info16090785
