Analysis of Large Language Models for Company Annual Reports Based on Retrieval-Augmented Generation

Abstract

1. Introduction

1.1. Problem Statement

1.2. Research Questions

- RQ1: Awareness: How accurately does RAG provide qualitative information in response to specific financial data queries?

- RQ2: Suggestion: What impact do different prompts have on the correctness (accuracy), relevance, and verifiability of financial information retrieved by RAG, and how effective is RAG in general for correct and verifiable financial data retrieval?

- RQ3: Development: How could a suitable RAG approach be designed to enhance the accuracy, relevance, and verifiability of LLMs in analyzing financial reports?

- RQ4: Evaluation: How effectively does RAG convey the source and context of financial data, such as referencing specific documents or indicating data sources?

2. Literature Review

2.1. Background and Models

2.2. Applications and Use Cases

2.3. Recent Advances and State-of-the-Art Techniques

3. Methodology

- Single Regulatory Market (US): Our selection process prioritizes companies operating within a single regulatory environment, specifically focusing on the United States. This criterion streamlines our research scope, enabling a deeper understanding of the regulatory landscape and its implications for the selected companies.

- NASDAQ Listing: We have exclusively chosen companies listed on the NASDAQ stock exchange. NASDAQ-listed companies often represent dynamic, growth-oriented firms with a strong emphasis on innovation and technology.

- Exclusion of Financial Sector: To maintain consistency and comparability across our research cohort, we excluded companies operating within the financial sector. Financial institutions often employ accounting practices and regulatory frameworks distinct from those of other industries, which could skew our analysis. Excluding this sector yields a more homogeneous group of companies.

- Focus on the Technology Sector: Our research targets companies within the technology sector, characterized by their asset-light business models and emphasis on innovation-driven growth. Unlike traditional industries, technology firms typically rely less on physical assets and more on intellectual property, talent, and digital infrastructure. This strategic focus allows us to explore the dynamics of disruptive technologies, market trends, and competitive strategies within this high-growth sector.

- Revenue Range of USD 5 to USD 7.3 Billion: Within the technology sector, we focused on companies with annual revenues between USD 5 billion and USD 7.3 billion. This limits the variance in the complexity of the reports published by the companies.
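The selection criteria above amount to a simple filter over candidate companies. The following is a minimal sketch in Python, not the procedure used in the study. The field names (`country`, `exchange`, `sector`, `revenue_usd_bn`) and the three candidates `HYPO1` to `HYPO3` are hypothetical and only serve to show rejections; the PAYX and EA revenues are taken from the company table in this article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Company:
    symbol: str
    country: str          # regulatory market (assumed field name)
    exchange: str         # listing venue (assumed field name)
    sector: str           # sector classification (assumed field name)
    revenue_usd_bn: float


def meets_criteria(c: Company, min_rev: float = 5.0, max_rev: float = 7.3) -> bool:
    """Apply the criteria: US market, NASDAQ, technology sector, revenue band.

    Requiring the technology sector also excludes the financial sector.
    """
    return (
        c.country == "US"
        and c.exchange == "NASDAQ"
        and c.sector == "Technology Services"
        and min_rev <= c.revenue_usd_bn <= max_rev
    )


candidates = [
    Company("PAYX", "US", "NASDAQ", "Technology Services", 5.007),
    Company("EA", "US", "NASDAQ", "Technology Services", 7.241),
    Company("HYPO1", "US", "NASDAQ", "Finance", 6.0),               # hypothetical
    Company("HYPO2", "US", "NASDAQ", "Technology Services", 12.0),  # hypothetical
    Company("HYPO3", "US", "NYSE", "Technology Services", 6.0),     # hypothetical
]

selected = [c.symbol for c in candidates if meets_criteria(c)]
print(selected)
```

Only the two real entries pass; each hypothetical candidate fails exactly one criterion (sector, revenue band, and exchange, respectively).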

4. Results

4.1. Accuracy of Qualitative Information

4.2. Impact of Different Prompts

4.3. Suitable RAG Approach

5. Discussion

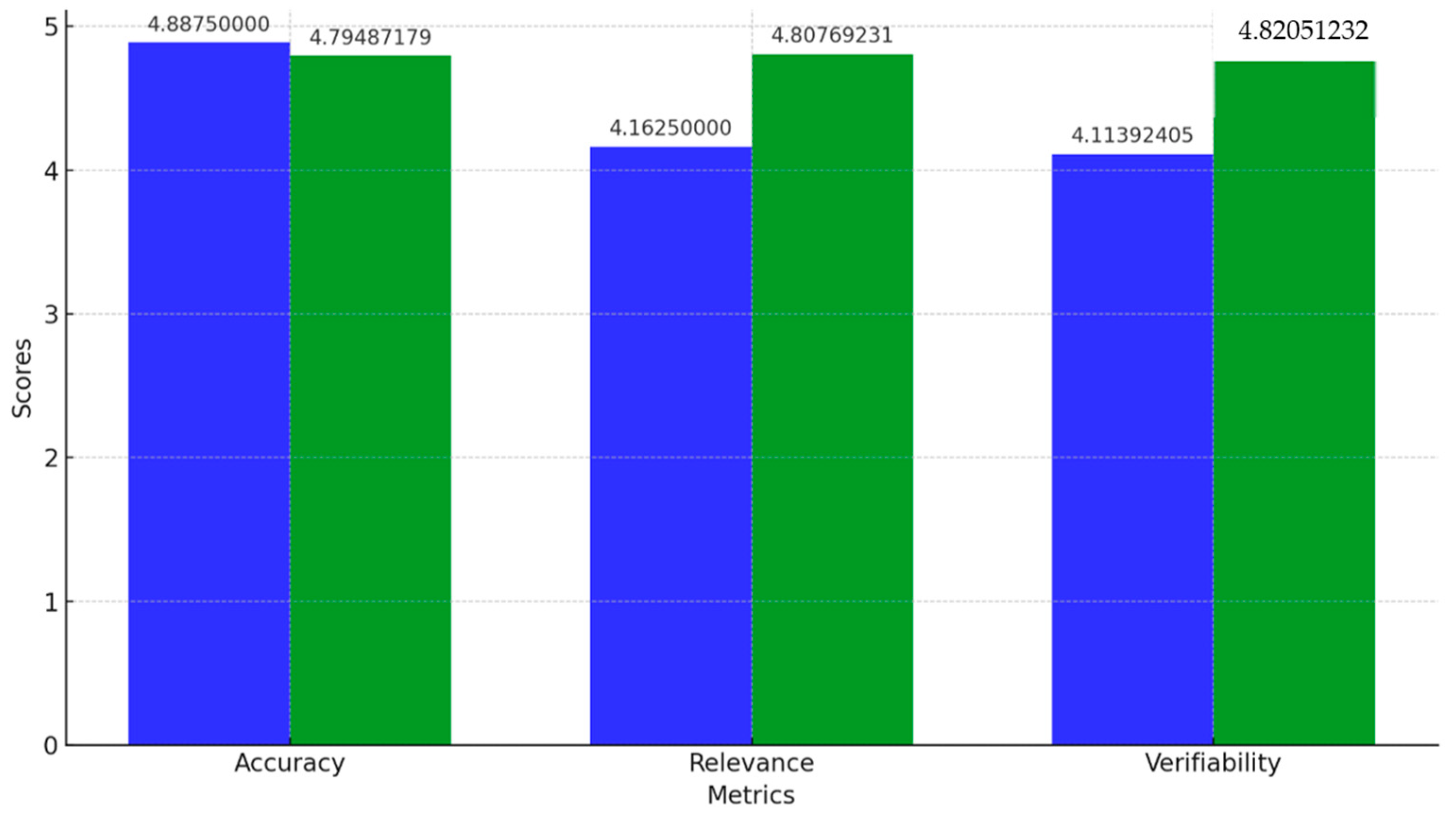

- Enhanced accuracy and relevance: Integrating RAG with LLMs improved the accuracy of qualitative responses and their alignment with current financial contexts, indicating the potential of RAG in refining the performance of LLMs in domain-specific tasks. These findings confirm those of other studies such as [24,26].

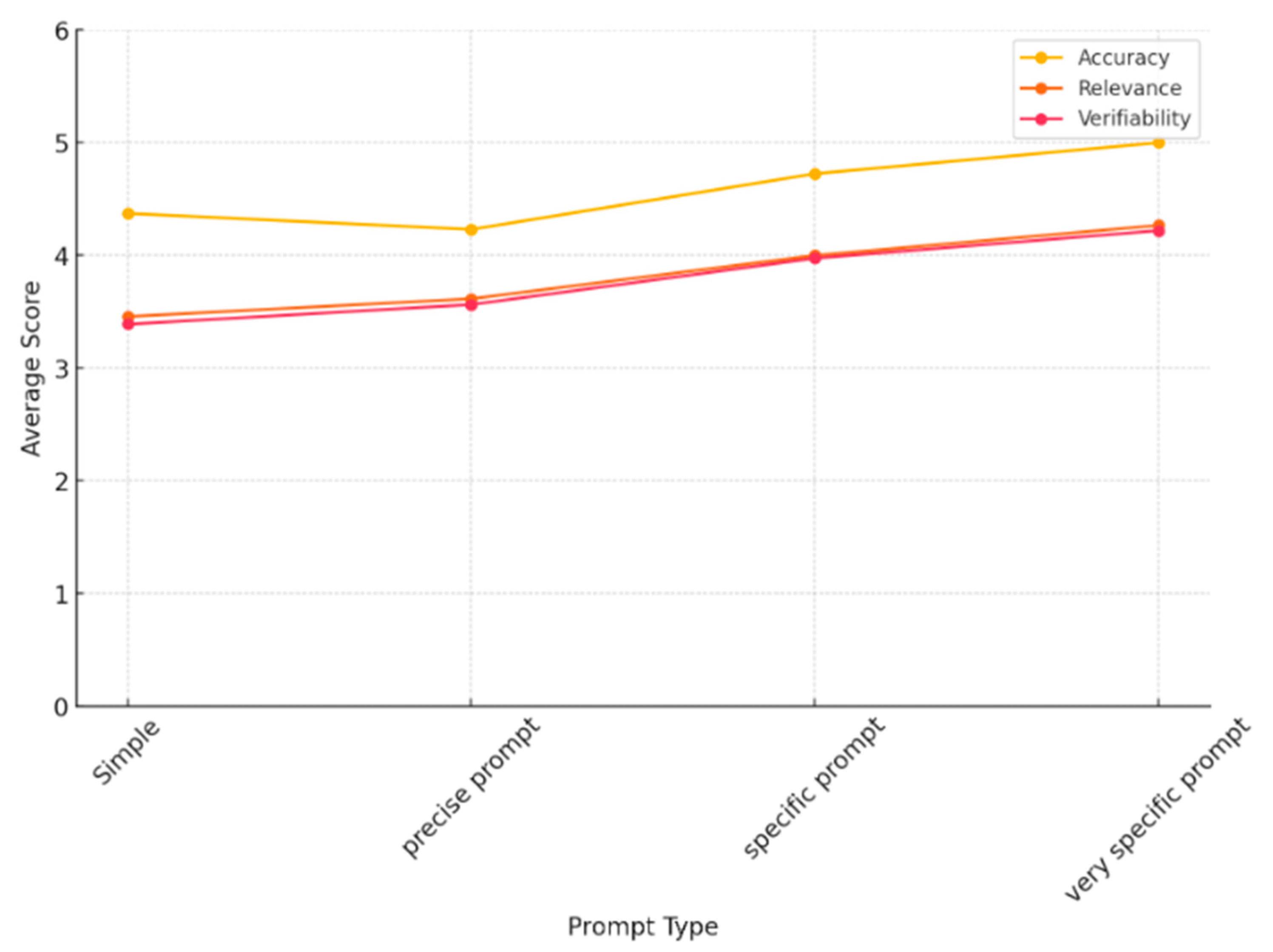

- Impact of prompt specificity: The level of prompt specificity significantly affects the quality of LLM responses, with detailed prompts resulting in higher accuracy, relevance, and verifiability. This is also in accordance with the findings of other studies such as [32].

- Reduction in hallucinations: By using RAG, the incidence of hallucinations or irrelevant responses was reduced by 15.55%, highlighting the role of RAG in enhancing the reliability of LLM outputs. Nevertheless, hallucinations cannot be fully avoided, so this risk needs to be considered in any practical application of such a solution.

- Efficiency in data retrieval: RAG demonstrated efficiency in retrieving and presenting verifiable financial data, particularly when prompts explicitly requested source references and provided further context.

- It is interesting to note that, for accuracy, the solution without RAG performed similarly to the solution with RAG (which is clearly better regarding relevance and verifiability). We assume that this is because the questions mostly asked for general and qualitative information. In other studies focusing on specific quantitative information (such as from financial tables) [30], we found a clear advantage of a RAG-based solution.
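To illustrate what prompt specificity can mean in practice, the sketch below contrasts a generic prompt with a detailed one that adds context and explicitly requests source references. The wording of both templates, the function names, and the fiscal-year placeholder are assumptions made for illustration; they are not the prompts used in the study.

```python
def generic_prompt(question: str) -> str:
    """Pass the question through unchanged (low specificity)."""
    return question


def detailed_prompt(question: str, company: str, fiscal_year: str) -> str:
    """Add report context and an explicit request for source references."""
    return (
        f"You are analyzing the {fiscal_year} annual report of {company}.\n"
        f"Question: {question}\n"
        "Answer only from the report. Name the section and page that support "
        "the answer, and say so explicitly if the report does not contain it."
    )


question = "Does the company have a stock repurchase program? If yes, what is it?"
print(generic_prompt(question))
# "FY20XX" is a placeholder, not a fiscal year stated in the article.
print(detailed_prompt(question, "Workday, Inc.", "FY20XX"))
```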

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Documentation of the RAG Chatbot

- Importing Libraries: The script imports the necessary libraries for PDF text extraction (pdfminer.six), embedding generation (transformers, torch), nearest neighbor search (sklearn), and response generation (openai).

- Setting Up Directory and Extracting Text from PDFs: The directory containing the PDF files is specified. Text is extracted from each PDF using the pdfminer.six library. Extracted texts are saved to a file to avoid redundant processing in future runs.

- Generating Embeddings: A pre-trained BERT model from Hugging Face is used to tokenize and encode the extracted texts. Embeddings are generated from the encoded texts using the BERT model and saved to a file.

- Nearest Neighbors Model: A nearest neighbors model from sklearn is created and fitted with the document embeddings. This model is used to retrieve the most relevant documents for a given query.

- Retrieving Relevant Documents: The retrieve_documents function encodes a query and finds the most relevant documents using the nearest neighbors model.

- Truncating Context: The truncate_context function limits the size of the context to a specified number of tokens to ensure that the input stays within the token limit for OpenAI’s API.

- Generating Response with GPT-4: The generate_response_with_gpt4 function generates a response using OpenAI’s GPT-4 model. It constructs the prompt using the retrieved documents and the query, ensuring that the context is truncated to fit within the token limit.

- Chat-like Interaction: The chat function handles user input in a loop, allowing for a chat-like interaction. It retrieves relevant documents for each query, generates a response using GPT-4, and prints the response.
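The retrieval, truncation, and prompt-construction steps described above can be sketched as follows. To keep the example self-contained and runnable offline, it swaps in simpler techniques: a term-count vector stands in for the BERT embeddings, a brute-force cosine ranking stands in for the sklearn nearest neighbors model, and whitespace-separated words stand in for model tokens. The function names `retrieve_documents` and `truncate_context` follow the appendix; `embed`, `cosine`, `build_prompt`, all signatures, and the sample passages are assumptions made for illustration. The call to GPT-4 is omitted.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Term-count vector; a stand-in for the BERT embedding (assumption)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items() if term in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve_documents(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k passages most similar to the query (brute-force search)."""
    query_vec = embed(query)
    ranked = sorted(docs, key=lambda d: cosine(query_vec, embed(d)), reverse=True)
    return ranked[:k]


def truncate_context(context: str, max_tokens: int) -> str:
    """Keep the first max_tokens whitespace-separated words (token proxy)."""
    return " ".join(context.split()[:max_tokens])


def build_prompt(query: str, passages: list[str], max_tokens: int = 200) -> str:
    """Assemble the prompt that would be sent to the chat model."""
    context = truncate_context(" ".join(passages), max_tokens)
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer from the context only."


# Hypothetical passages, not quotations from any annual report.
passages = [
    "The company recognizes revenue when control of the promised services "
    "is transferred to customers.",
    "The board authorized a stock repurchase program for common shares.",
    "The auditors expressed an unqualified opinion on the consolidated "
    "financial statements.",
]

question = "What is the policy for revenue recognition?"
top = retrieve_documents(question, passages, k=1)
print(build_prompt(question, top, max_tokens=5))
```

In a full implementation, the string returned by `build_prompt` would be passed to the chat model inside the interaction loop, and the word-based truncation would be replaced by a count from the model's own tokenizer.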

References

1. OpenAI; Achiam, J.; Adler, S.; Agarwal, S.; Ahmad, L.; Akkaya, I.; Aleman, F.L.; Almeida, D.; Altenschmidt, J.; Altman, S.; et al. GPT-4 technical report. arXiv 2024, arXiv:2303.08774v6.

2. Gemini Team; Anil, R.; Borgeaud, S.; Wu, Y.; Alayrac, J.-B.; Yu, J.; Soricut, R.; Schalkwyk, J.; Dai, A.M.; Hauth, A.; et al. Gemini: A family of highly capable multimodal models. arXiv 2023, arXiv:2312.11805.

3. Lyu, Y.; Li, Z.; Niu, S.; Xiong, F.; Tang, B.; Wang, W.; Wu, H.; Liu, H.; Xu, T.; Chen, E.; et al. CRUD-RAG: A comprehensive Chinese benchmark for retrieval-augmented generation of large language models. arXiv 2024, arXiv:2401.17043.

4. Gao, Y.; Xiong, Y.; Gao, X.; Jia, K.; Pan, J.; Bi, Y.; Dai, Y.; Sun, J.; Guo, Q.; Wang, M.; et al. Retrieval-augmented generation for large language models: A survey. arXiv 2024, arXiv:2312.10997v5.

5. Huang, L.; Yu, W.; Ma, W.; Zhong, W.; Feng, Z.; Wang, H.; Chen, Q.; Peng, W.; Feng, X.; Qin, B.; et al. A survey on hallucination in large language models: Principles, taxonomy, challenges, and open questions. arXiv 2023, arXiv:2311.05232.

6. McKenna, N.; Li, T.; Cheng, L.; Hosseini, M.J.; Johnson, M.; Steedman, M. Sources of hallucination by large language models on inference tasks. arXiv 2023, arXiv:2305.14552.

7. Siriwardhana, S.; Weerasekera, R.; Wen, E.; Kaluarachchi, T.; Rana, R.; Nanayakkara, S. Improving the domain adaptation of retrieval augmented generation (RAG) models for open domain question answering. Trans. Assoc. Comput. Linguist. 2023, 11, 1–17.

8. Loukas, L.; Stogiannidis, I.; Diamantopoulos, O.; Malakasiotis, P.; Vassos, S. Making LLMs worth every penny: Resource-limited text classification in banking. In Proceedings of the ICAIF ’23: 4th ACM International Conference on AI in Finance, Brooklyn, NY, USA, 27–29 November 2023; pp. 392–400.

9. Bommasani, R.; Hudson, D.A.; Adeli, E.; Altman, R.; Arora, S.; von Arx, S.; Bernstein, M.S.; Bohg, J.; Bosselut, A.; Brunskill, E.; et al. On the Opportunities and Risks of Foundation Models. arXiv 2021, arXiv:2108.07258.

10. Zhou, C.; Li, Q.; Li, C.; Yu, J.; Liu, Y.; Wang, G.; Zhang, K.; Ji, C.; Yan, Q.; He, L.; et al. A comprehensive survey on pretrained foundation models: A history from BERT to ChatGPT. arXiv 2023, arXiv:2302.09419.

11. Min, B.; Ross, H.; Sulem, E.; Veyseh, A.P.B.; Nguyen, T.H.; Sainz, O.; Agirre, E.; Heintz, I.; Roth, D. Recent advances in natural language processing via large pre-trained language models: A survey. ACM Comput. Surv. 2024, 56, 1–40.

12. Naveed, H.; Khan, A.U.; Qiu, S.; Saqib, M.; Anwar, S.; Usman, M.; Akhtar, N.; Barnes, N.; Mian, A. A comprehensive overview of large language models. arXiv 2024, arXiv:2307.06435.

13. Hamza, M.; Awan, W.N. Understanding the Landscape of Generative AI: A Computational Literature Review. 2025. Available online: https://ssrn.com/abstract=5327156 (accessed on 29 July 2025).

14. Chen, J.; Lin, H.; Han, X.; Sun, L. Benchmarking large language models in retrieval-augmented generation. Proc. AAAI Conf. Artif. Intell. 2023, 38, 17754–17762.

15. Zhang, B.; Yang, H.; Zhou, T.; Ali Babar, M.; Liu, X.-Y. Enhancing financial sentiment analysis via retrieval augmented large language models. In Proceedings of the ICAIF ’23: 4th ACM International Conference on AI in Finance, Brooklyn, NY, USA, 27–29 November 2023; Association for Computing Machinery: New York, NY, USA, 2023; pp. 349–356.

16. Ryu, C.; Lee, S.; Pang, S.; Choi, C.; Choi, H.; Min, M.; Sohn, J.-Y. Retrieval-based evaluation for LLMs: A case study in Korean legal QA. In Proceedings of the Natural Legal Language Processing Workshop 2023; Association for Computational Linguistics: Singapore, 2023; pp. 132–137.

17. Finardi, P.; Avila, L.; Castaldoni, R.; Gengo, P.; Larcher, C.; Piau, M.; Costa, P.; Caridá, V. The chronicles of RAG: The retriever, the chunk and the generator. arXiv 2024, arXiv:2401.07883.

18. Ren, Y.; Cao, Y.; Guo, P.; Fang, F.; Ma, W.; Lin, Z. Retrieve-and-sample: Document-level event argument extraction via hybrid retrieval augmentation. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Toronto, ON, Canada, 9–14 July 2023; Rogers, A., Boyd-Graber, J., Okazaki, N., Eds.; Association for Computational Linguistics: Kerrville, TX, USA, 2023; pp. 293–306.

19. Wang, R.; Bao, J.; Mi, F.; Chen, Y.; Wang, H.; Wang, Y.; Li, Y.; Shang, L.; Wong, K.-F.; Xu, R. Retrieval-free knowledge injection through multi-document traversal for dialogue models. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Toronto, ON, Canada, 9–14 July 2023; Rogers, A., Boyd-Graber, J., Okazaki, N., Eds.; Association for Computational Linguistics: Kerrville, TX, USA, 2023; pp. 6608–6619.

20. Wu, S.; Irsoy, O.; Lu, S.; Dabravolski, V.; Dredze, M.; Gehrmann, S.; Kambadur, P.; Rosenberg, D.; Mann, G. BloombergGPT: A large language model for finance. arXiv 2023, arXiv:2303.17564.

21. Su, W.; Tang, Y.; Ai, Q.; Wu, Z.; Liu, Y. DRAGIN: Dynamic retrieval augmented generation based on the real-time information needs of large language models. In Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Bangkok, Thailand, 11–16 August 2024; pp. 12991–13013.

22. Choi, S.; Fang, T.; Wang, Z.; Song, Y. KCTS: Knowledge-constrained tree search decoding with token-level hallucination detection. In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing, Singapore, 6–10 December 2023; Association for Computational Linguistics: Kerrville, TX, USA, 2023; pp. 14035–14053.

23. Yu, W.; Zhang, H.; Pan, X.; Ma, K.; Wang, H.; Yu, D. Chain-of-note: Enhancing robustness in retrieval-augmented language models. arXiv 2023, arXiv:2311.09210.

24. Wang, J.; Ding, W.; Zhu, X. Financial analysis: Intelligent financial data analysis system based on llm-rag. arXiv 2025, arXiv:2504.06279.

25. Sælemyr, J.; Femdal, H.T. Chunk Smarter, Retrieve Better: Enhancing LLMS in Finance: An Empirical Comparison of Chunking Techniques in Retrieval Augmented Generation for Financial Reports. Master’s Thesis, Norwegian School of Economics, Bergen, Norway, 2024.

26. Gondhalekar, C.; Patel, U.; Yeh, F.C. MultiFinRAG: An Optimized Multimodal Retrieval-Augmented Generation (RAG) Framework for Financial Question Answering. arXiv 2025, arXiv:2506.20821.

27. Chinaksorn, N.; Wanvarie, D. LLM-RAG for Financial Question Answering: A Case Study from SET50. In Proceedings of the 2025 International Conference on Artificial Intelligence in Information and Communication (ICAIIC), Fukuoka, Japan, 18–21 February 2025; pp. 0952–0957.

28. Balsiger, D.; Dimmler, H.R.; Egger-Horstmann, S.; Hanne, T. Assessing large language models used for extracting table information from annual financial reports. Computers 2024, 13, 257.

29. Bas, H.; Christen, M.; Radović, D.; Hanne, T. Large Language Models for Table Content Extraction from Annual Reports. In Congress on Intelligent Systems; Springer Nature: Singapore, 2024; pp. 61–79.

30. Iaroshev, I.; Pillai, R.; Vaglietti, L.; Hanne, T. Evaluating Retrieval-Augmented Generation Models for Financial Report Question and Answering. Appl. Sci. 2024, 14, 9318.

31. Martineau, K. What Is Retrieval-Augmented Generation? IBM Research Blog, 1 May 2024. Available online: https://research.ibm.com/blog/retrieval-augmented-generation-RAG?ref=robkerr.ai (accessed on 29 July 2025).

32. Rosa, S. Large Language Models for Requirements Engineering. Ph.D. Dissertation, Politecnico di Torino, Turin, Italy, 2025.

| Sr. No. | Symbol | Company | Revenue (USD Billion) | Market Cap (USD Billion) | Sector Classification |
|---|---|---|---|---|---|
| 1 | PAYX | Paychex, Inc., Rochester, NY, USA | 5.007 | 118.45 | Technology Services |
| 2 | FTNT | Fortinet, Inc., Sunnyvale, CA, USA | 5.305 | 65.2 | Technology Services |
| 3 | TTWO | Take-Two Interactive Software, Inc., New York, NY, USA | 5.35 | 143.07 | Technology Services |
| 4 | ADSK | Autodesk, Inc. | 5.497 | 209.95 | Technology Services |
| 5 | SSNC | SS&C Technologies Holdings, Inc., San Francisco, CA, USA | 5.503 | 61.52 | Technology Services |
| 6 | SNPS | Synopsys, Inc., Sunnyvale, CA, USA | 5.853 | 523.38 | Technology Services |
| 7 | ROP | Roper Technologies, Inc., Sarasota, FL, USA | 6.178 | 510.82 | Technology Services |
| 8 | PANW | Palo Alto Networks, Inc., Santa Clara, CA, USA | 6.893 | 295.32 | Technology Services |
| 9 | WDAY | Workday, Inc., Pleasanton, CA, USA | 7.197 | 250.85 | Technology Services |
| 10 | EA | Electronic Arts Inc., Redwood City, CA, USA | 7.241 | 128.5 | Technology Services |

| No. | Question | Category |
|---|---|---|
| 1 | What is the net revenue for the fiscal year and its breakdown? | Quantitative |
| 2 | What is the cost of revenue for the fiscal year and its breakdown? | Quantitative |
| 3 | What are the operating expenses for the fiscal year and their breakdown? | Quantitative |
| 4 | What is the current ratio as per the balance sheet? | Quantitative (calculated) |
| 5 | What is the debt-to-equity ratio as per the balance sheet? | Quantitative (calculated) |
| 6 | What is the quick ratio as per the balance sheet? | Quantitative (calculated) |
| 7 | Have the auditors expressed a qualified or unqualified opinion about the financial statements? | Qualitative |
| 8 | Have there been any changes in the accounting standards or practices adopted by the company for the fiscal year? | Qualitative |
| 9 | What is the company’s policy for revenue recognition? | Qualitative |
| 10 | Does the company have a stock repurchase program? If yes, what is it? | Qualitative |
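Questions 4 to 6 ask for ratios that have to be calculated from balance sheet items rather than read off directly. The sketch below uses common textbook definitions, which is an assumption: the article does not state which variants were applied (for example, the debt-to-equity ratio is sometimes computed from total debt instead of total liabilities). The function names and all figures are hypothetical.

```python
def current_ratio(current_assets: float, current_liabilities: float) -> float:
    """Current assets divided by current liabilities."""
    return current_assets / current_liabilities


def quick_ratio(current_assets: float, inventory: float,
                current_liabilities: float) -> float:
    """Current assets excluding inventory, divided by current liabilities."""
    return (current_assets - inventory) / current_liabilities


def debt_to_equity(total_liabilities: float, shareholders_equity: float) -> float:
    """Total liabilities divided by shareholders' equity (one common variant)."""
    return total_liabilities / shareholders_equity


# Hypothetical balance sheet figures in USD million.
print(current_ratio(1200.0, 800.0))       # 1.5
print(quick_ratio(1200.0, 200.0, 800.0))  # 1.25
print(debt_to_equity(1500.0, 1000.0))     # 1.5
```

Reference values computed this way can serve as a check on the figures an LLM returns for the calculated questions.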

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Mokashi, A.; Puthuparambil, B.; Daniel, C.; Hanne, T. Analysis of Large Language Models for Company Annual Reports Based on Retrieval-Augmented Generation. Information 2025, 16, 786. https://doi.org/10.3390/info16090786
