Graph Convolutional Network with Agent Attention for Recognizing Digital Ink Chinese Characters Written by International Students

Abstract

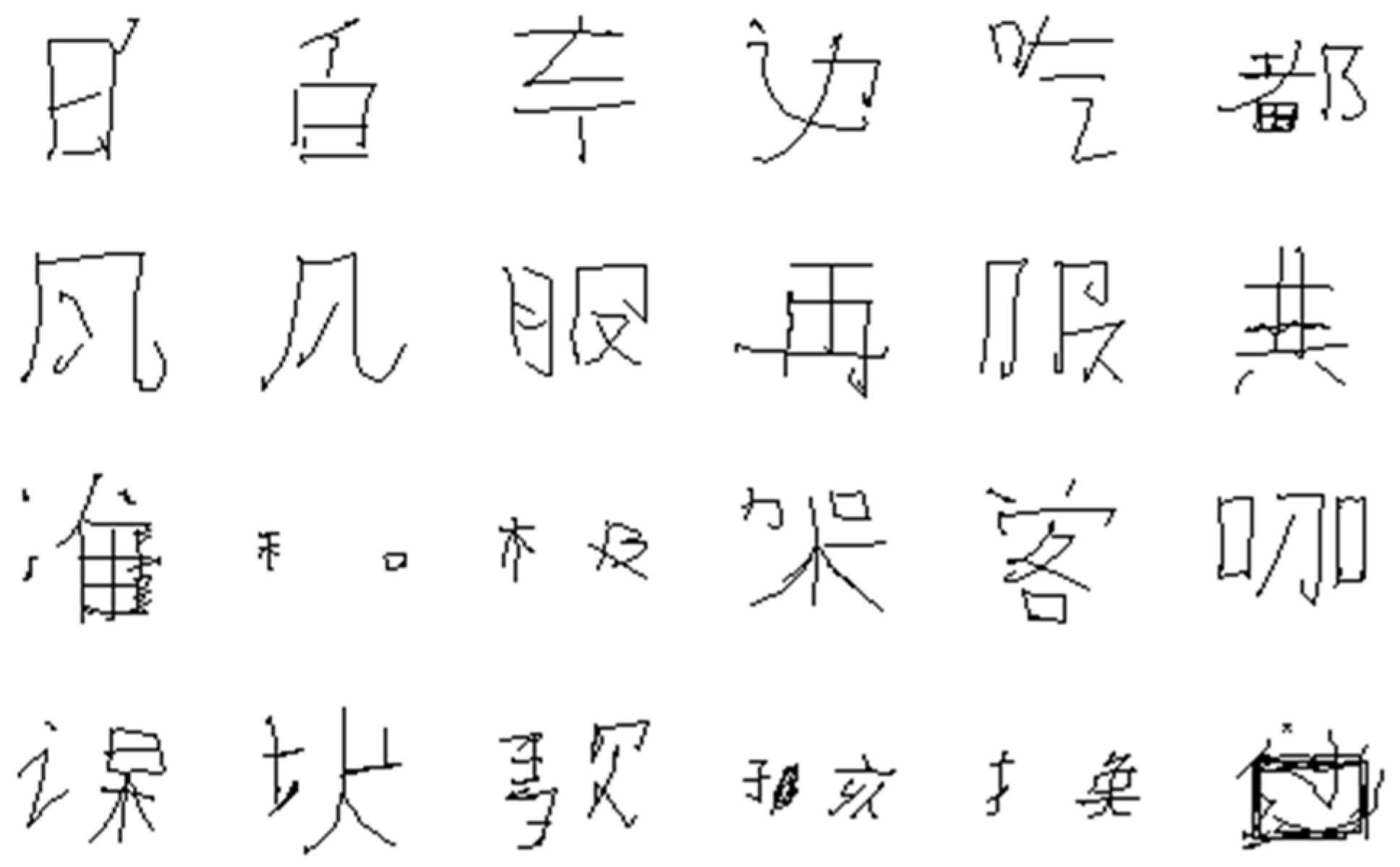

1. Introduction

- (1) We propose representing the DICCs of international students as graphs, which model the geometric structure of Chinese characters and also retain the temporal and spatial information of the sampling points.

- (2) GCNAA is proposed, for the first time, for DICCR of international students. It has low computational complexity, fast inference, and a high recognition rate on DICCs that contain writing errors and non-standard forms.

- (3) Prior studies have used GCNs to recognize the 3755 first-level Chinese characters, but the effectiveness of GCNs has not been validated on larger category sets, such as the 6763 first-level and second-level Chinese characters. This paper experimentally verifies the generalization performance of GCNAA on 6763-category datasets using the publicly available CASIA-OLHWDB1.0-1.2, SCUT-COUCH2009 GB1&GB2, and HIT-OR3C-ONLINE datasets.

2. Related Work

2.1. DICCR

- (1) Image-based 2D-CNN method. The 2D-CNN method converts DICCs into images and treats Chinese character recognition as image classification [3,27], which loses the temporal information contained in DICCs. Yang et al. [2] and Zhang et al. [1] used domain-specific knowledge to extract feature images (such as eight-directional feature maps and path signatures) that exploit both spatial and temporal information, significantly improving recognition performance. However, this approach requires complex domain-specific knowledge and increases computational cost and storage requirements.

- (2) Sequence-based RNN and 1D-CNN methods. The recurrent neural network (RNN) method processes DICCs directly as sequences, which makes better use of their temporal and spatial information and requires no domain knowledge to extract feature images. It has achieved good results in DICCR. Zhang et al. [5] used LSTM and GRU networks to model temporal dependencies and achieved the best recognition rate at the time on the ICDAR-2013 dataset. However, because RNNs compute serially, they process long sequences slowly. Gan et al. [28] and Xu et al. [29] proposed one-dimensional convolutional neural network (1D-CNN) methods, which are faster than RNNs and can process long sequences in parallel. All of these methods, however, rely on temporal information and are therefore not stroke-order free.

- (3) Graph-based GNN method. A graph can fully represent the two-dimensional structure of Chinese characters. Traditional graph-based methods describe DICCs as relational data structures, such as attributed relational graphs. Zheng et al. [30] proposed a fuzzy attributed relational graph (FARG) to describe DICCs and achieved good results. However, the graph similarity measure defined on attributed relational graphs lacks a statistical basis, and the graph-matching algorithm is complex, slow, sensitive to interference, and difficult to train. With the rapid development of graph neural networks [8,9,10], Gan et al. [11] represented Chinese characters as skeleton graphs and combined Transformers with GCNs in the PyGT architecture for Chinese character recognition, achieving good results. Although the Transformer's global attention mechanism is highly expressive, its high computational cost leads to long inference times in PyGT.

2.2. Attention Mechanism

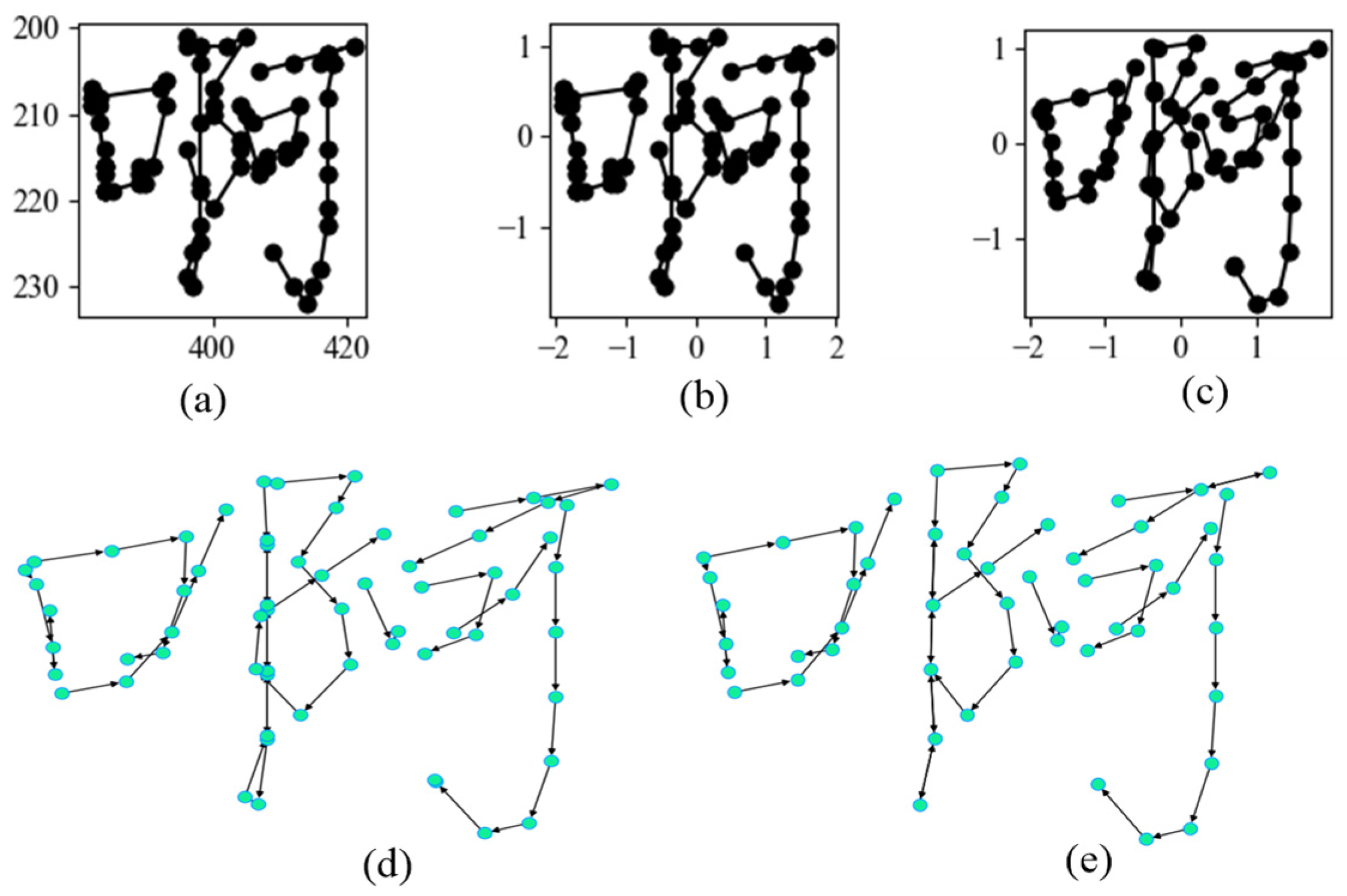

3. Constructing Skeleton Graphs of DICCs

- (1) Extract coordinates from the original data to obtain a sequence of length $N$, $[(x_1, y_1, s_1), (x_2, y_2, s_2), \ldots, (x_N, y_N, s_N)]$, in which $x_i$ and $y_i$ represent the $x$ and $y$ coordinates of sampling point $i$, and $s_i$ represents the stroke to which sampling point $i$ belongs.

- (2) Normalize coordinates. While keeping the original aspect ratio, the coordinates of the sampling points are normalized into a standard coordinate system [5].

- (3) Resample. The original trajectory sequence is resampled to generate a new sequence of equidistant points along the path. The coordinates (x, y) of each new point are calculated by linear interpolation, and its stroke marker is inherited directly from the previous point.

- (4) Build skeleton graphs. The skeleton graph is created by traversing the sampling points, taking the sampling points of a Chinese character as the vertices of the graph and the line connecting adjacent sampling points as its edges.

- (5) Merge nearby graph vertices. Merging reduces the number of vertices in the new skeleton graph while each vertex carries more information; this keeps the original structure of the Chinese character intact and lowers computational demands. The procedure for merging nearby graph vertices is as follows.

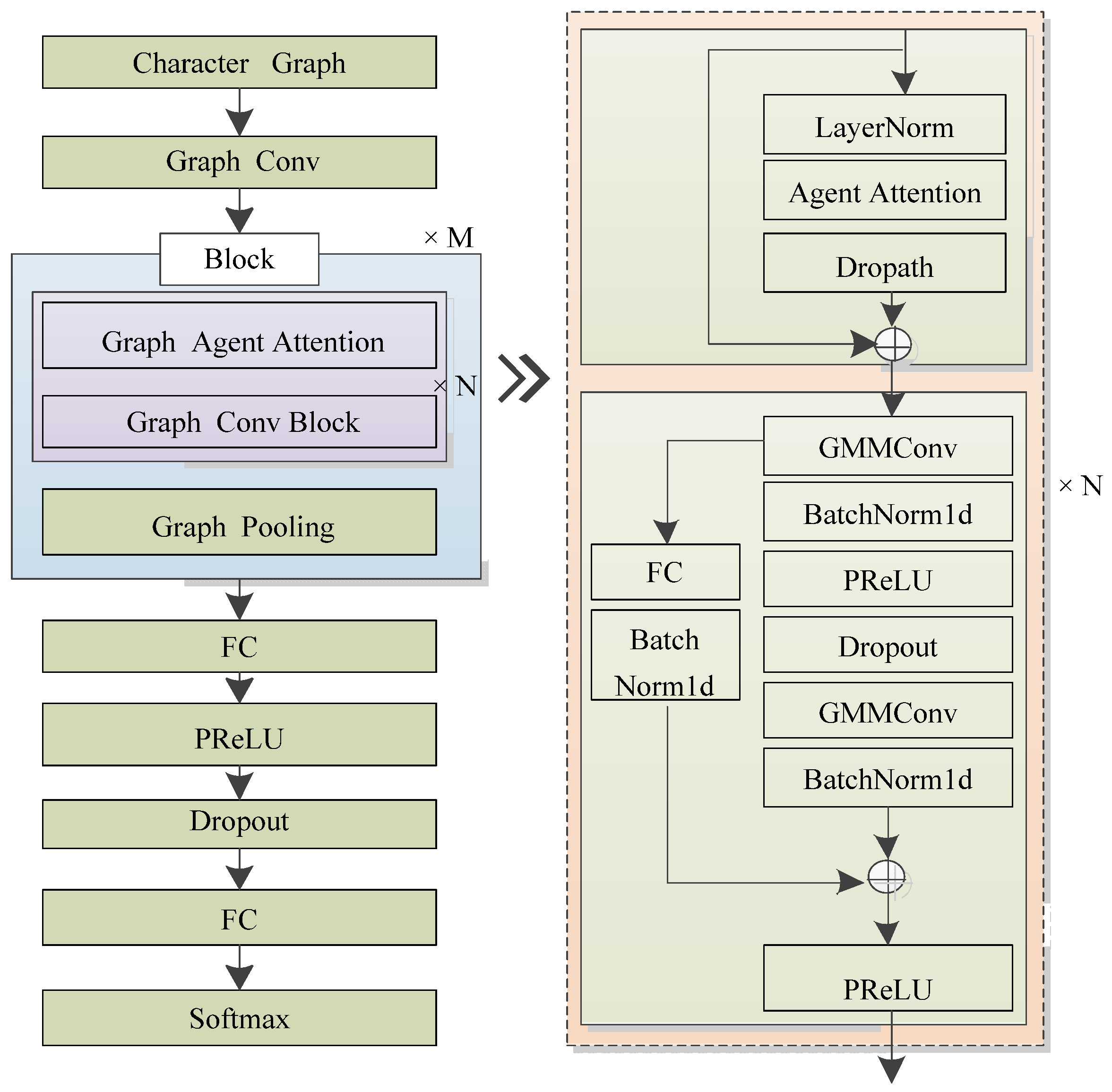
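Steps (2) to (4) above can be illustrated with a minimal pure-Python sketch. It is not the authors' implementation: the function names, the unit-box target coordinate system, the `step` spacing, and the choice to connect only consecutive points within the same stroke are all assumptions made for illustration. The vertex-merging procedure of step (5) is not reproduced here.

```python
import math

def normalize(points, size=1.0):
    """Scale (x, y, stroke) points into a [0, size] box, keeping the aspect ratio."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    min_x, min_y = min(xs), min(ys)
    # One shared scale factor for both axes preserves the aspect ratio.
    span = max(max(xs) - min_x, max(ys) - min_y) or 1.0
    scale = size / span
    return [((x - min_x) * scale, (y - min_y) * scale, s) for x, y, s in points]

def resample_stroke(stroke, step):
    """Return points spaced `step` apart along one stroke's polyline."""
    out = [stroke[0]]
    carried = 0.0  # path length travelled since the last emitted point
    for (x0, y0), (x1, y1) in zip(stroke, stroke[1:]):
        seg = math.hypot(x1 - x0, y1 - y0)
        if seg == 0.0:
            continue
        d = step - carried  # offset of the next new point inside this segment
        while d <= seg:
            t = d / seg  # linear interpolation parameter
            out.append((x0 + t * (x1 - x0), y0 + t * (y1 - y0)))
            d += step
        carried = seg - (d - step)
    return out

def build_skeleton_graph(points, step=0.05):
    """Vertices are resampled points; edges join consecutive points of a stroke.

    Assumption: no edge is created between the end of one stroke and the
    start of the next.
    """
    strokes = {}
    for x, y, s in normalize(points):
        strokes.setdefault(s, []).append((x, y))
    vertices, edges = [], []
    for s, stroke in strokes.items():
        pts = resample_stroke(stroke, step)
        base = len(vertices)
        # Each new point inherits the stroke marker of the stroke it lies on.
        vertices.extend((x, y, s) for x, y in pts)
        edges.extend((base + i, base + i + 1) for i in range(len(pts) - 1))
    return vertices, edges

# Hypothetical two-stroke trajectory: (x, y, stroke index)
raw = [(0, 0, 0), (10, 0, 0), (0, 5, 1), (0, 10, 1)]
vertices, edges = build_skeleton_graph(raw, step=0.25)
```

Resampling per stroke keeps the spacing uniform along the pen path, so the number of vertices depends on stroke length rather than on the sampling rate of the input device.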

4. Graph Convolutional Network with Agent Attention

4.1. Graph Agent Attention

4.2. Graph Convolution

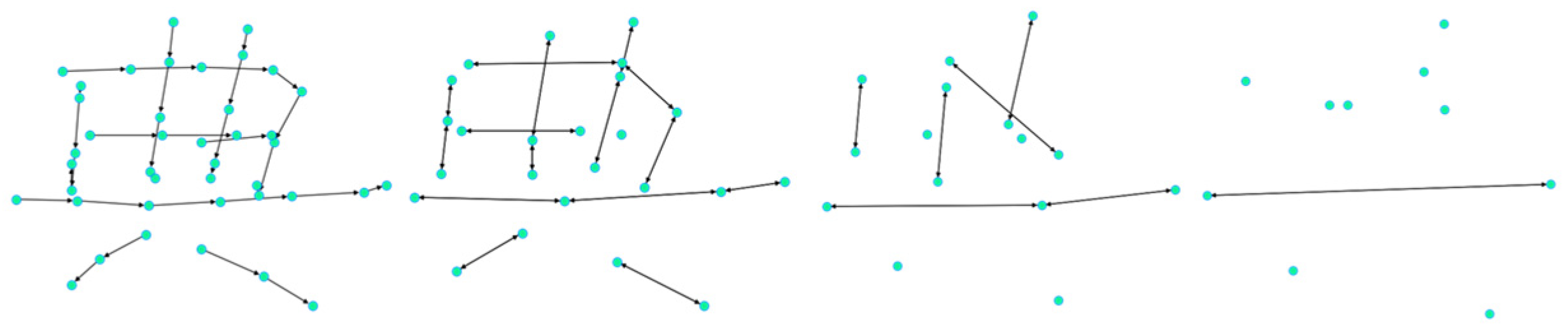

4.3. Graph Pooling

5. Experiments

5.1. Datasets

5.2. Implementation

5.3. Experimental Results

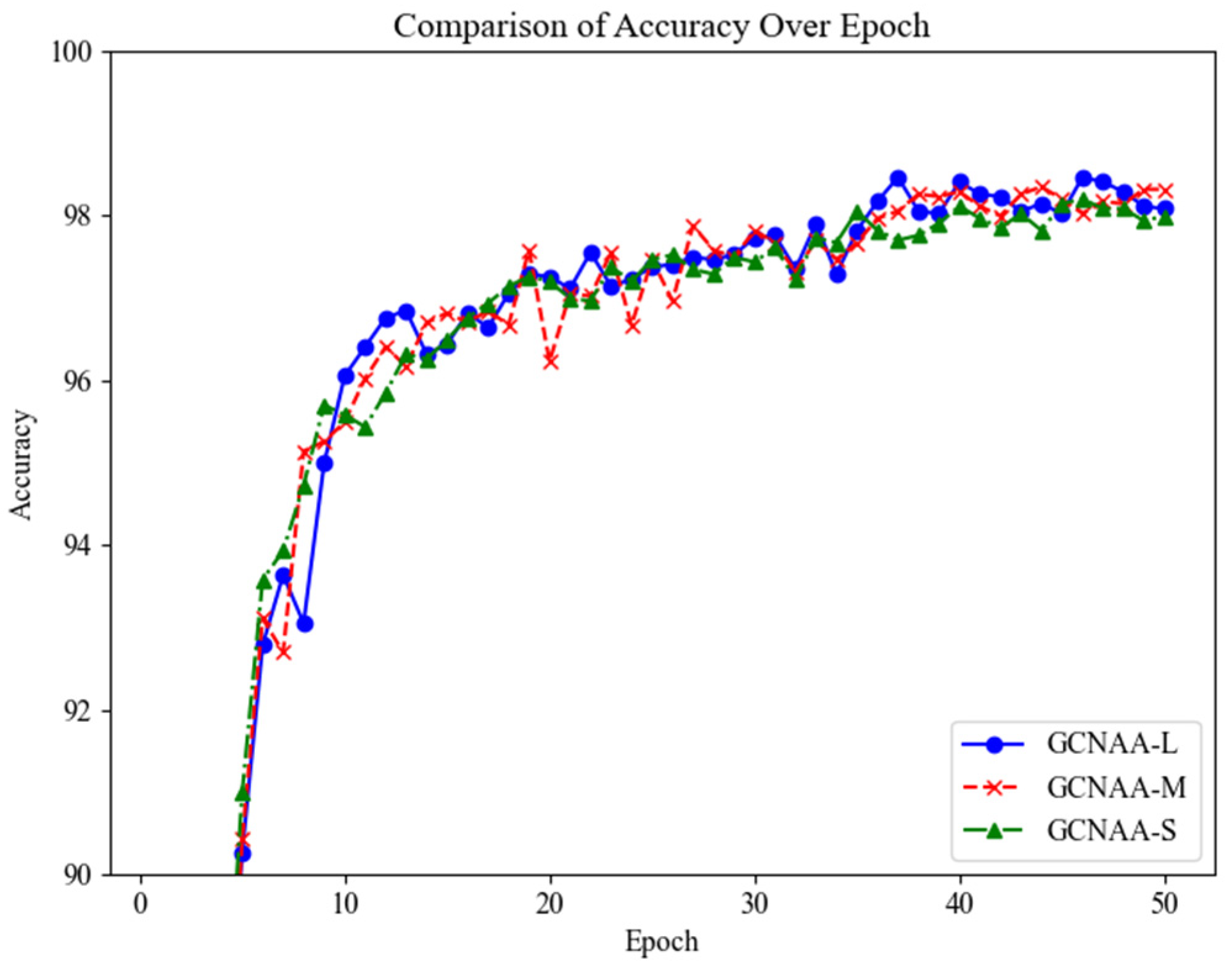

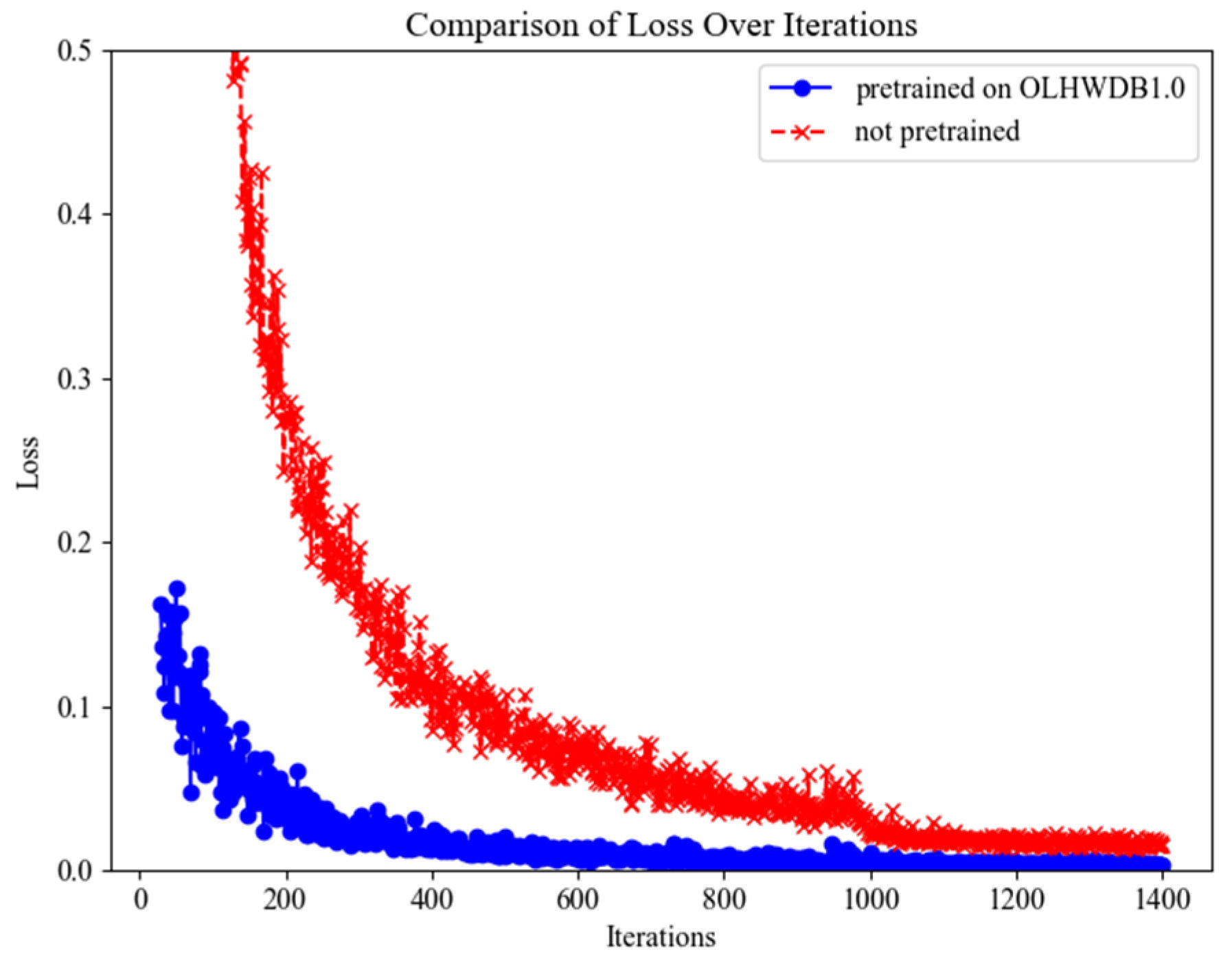

5.3.1. The Classification Effect of GCNAA on DICCs for International Students

5.3.2. The Classification Effect of GCNAA on Super-Large-Category Datasets

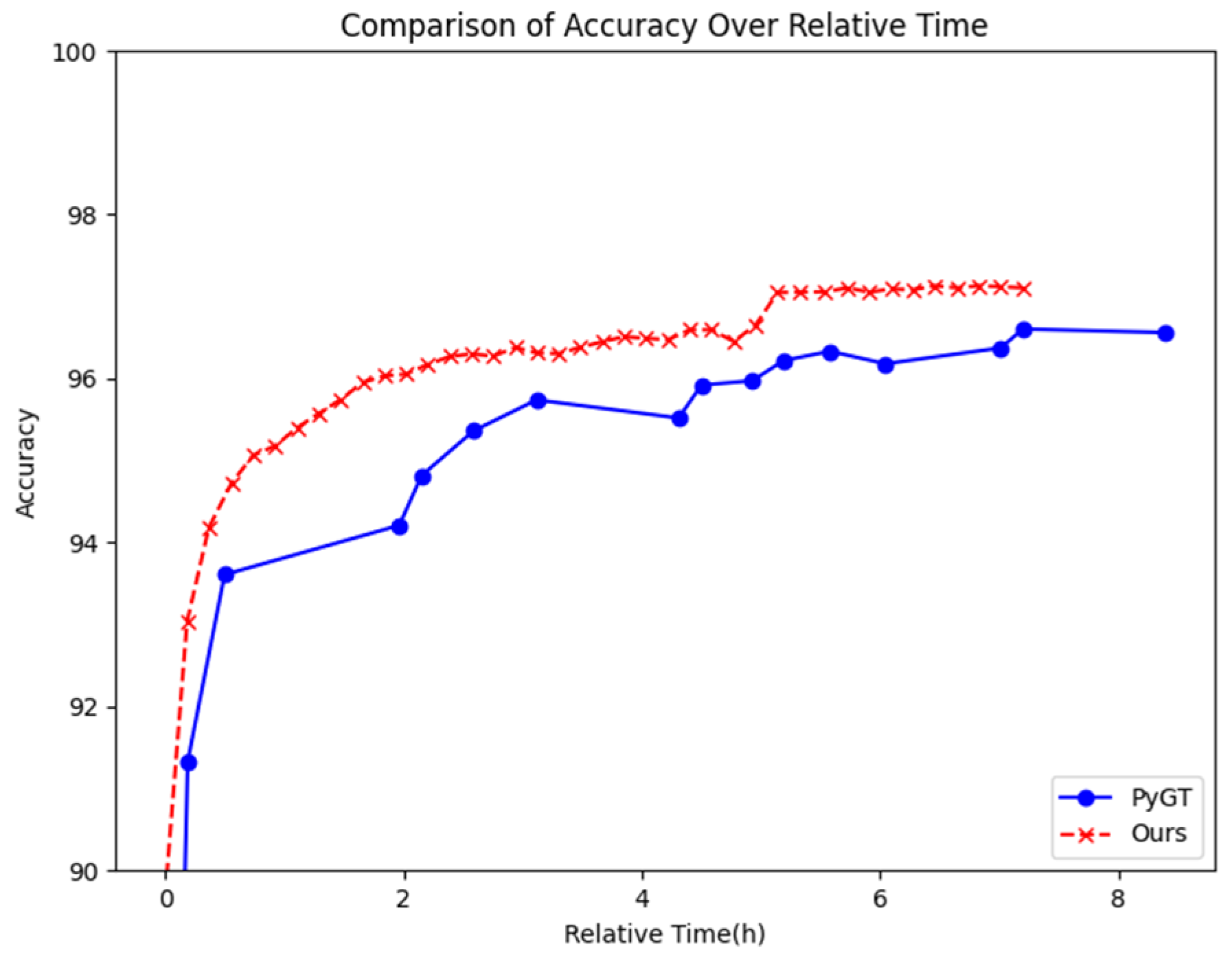

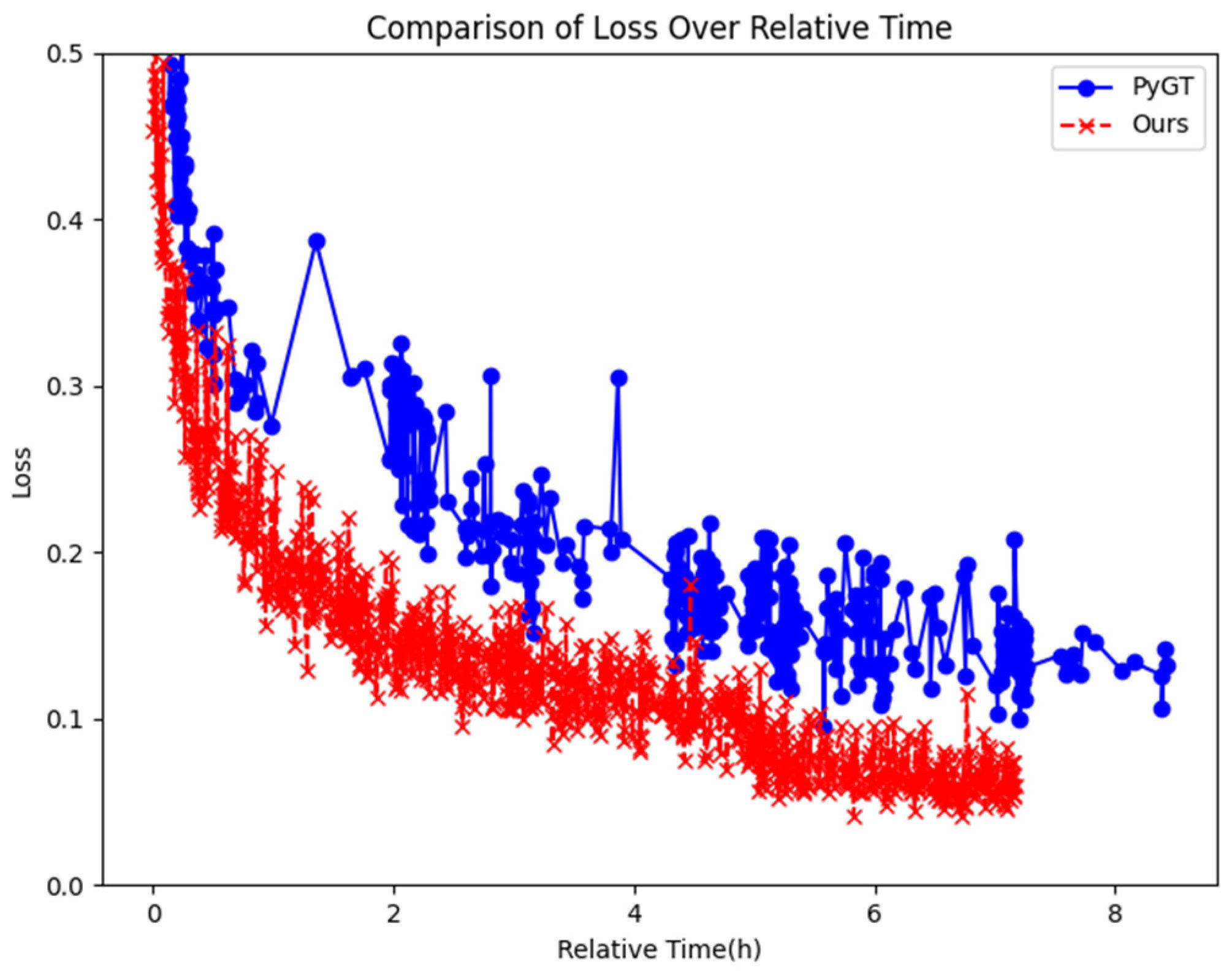

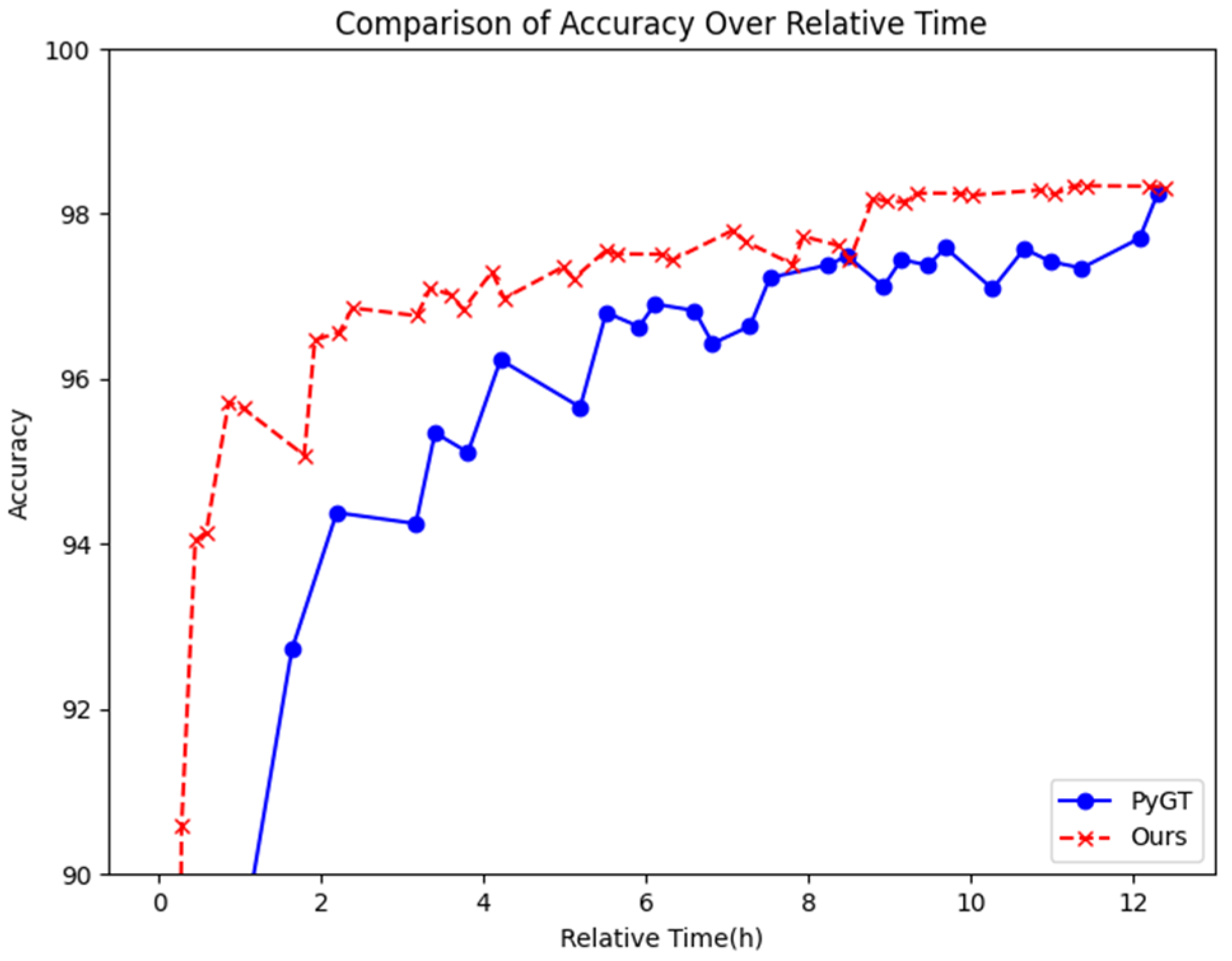

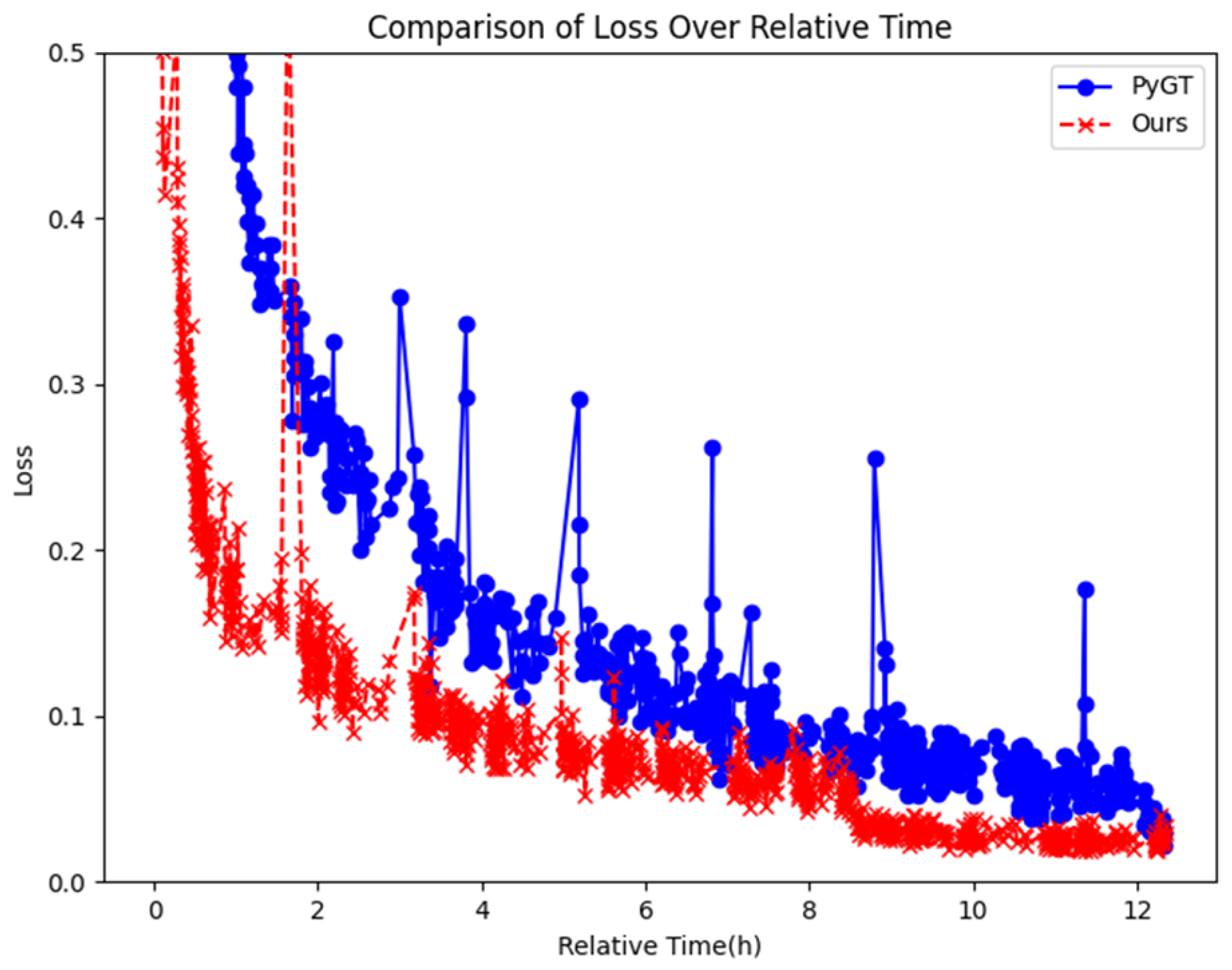

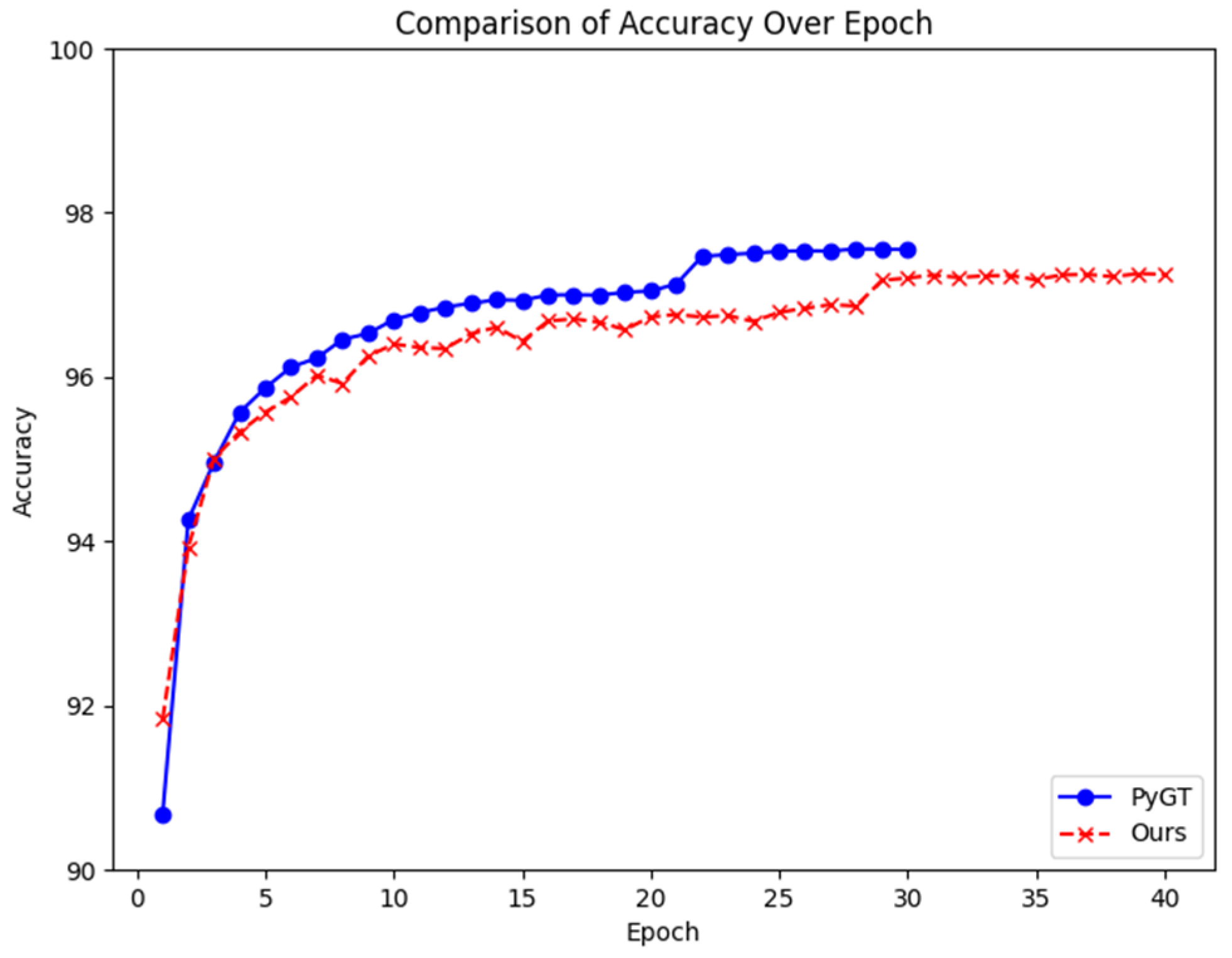

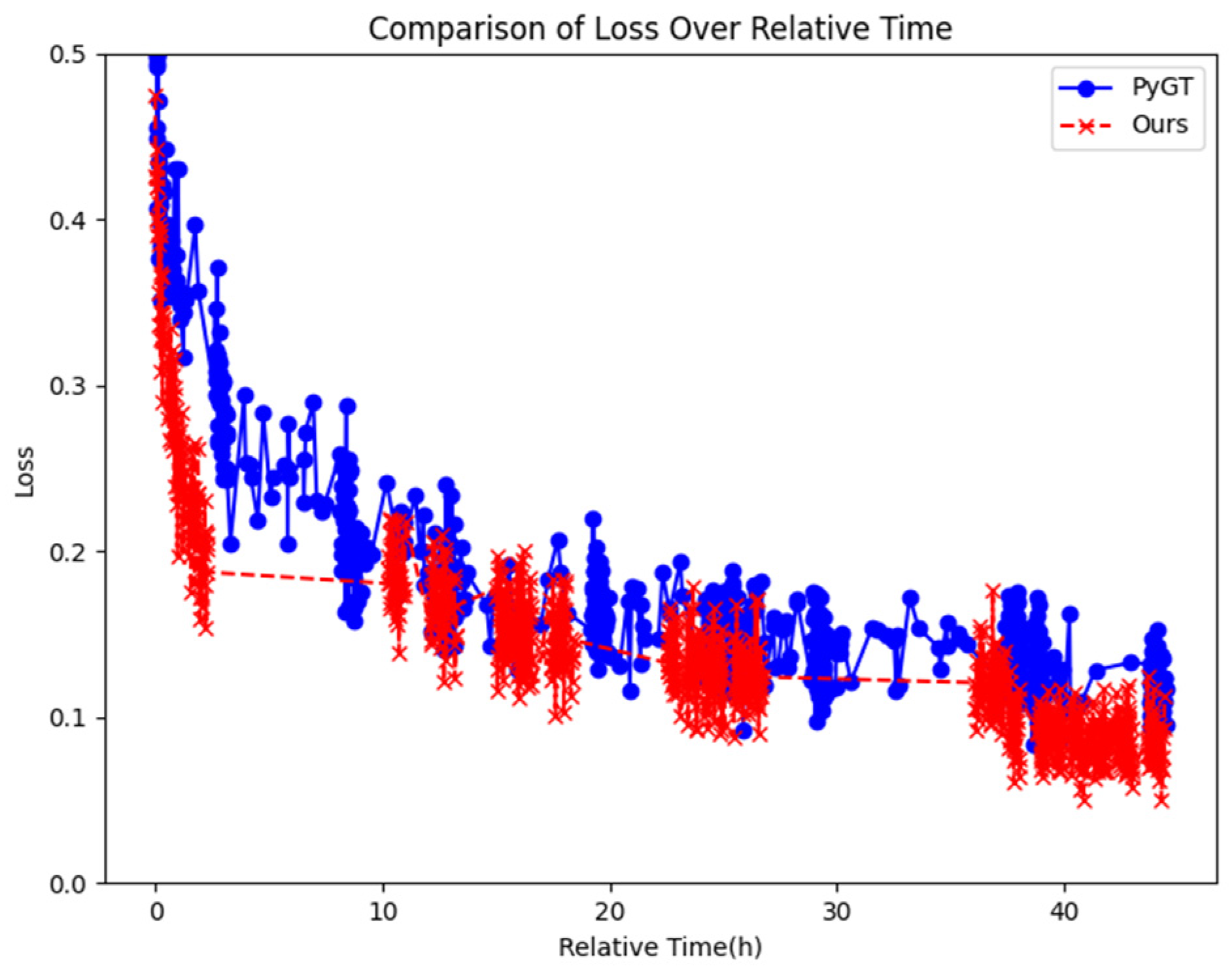

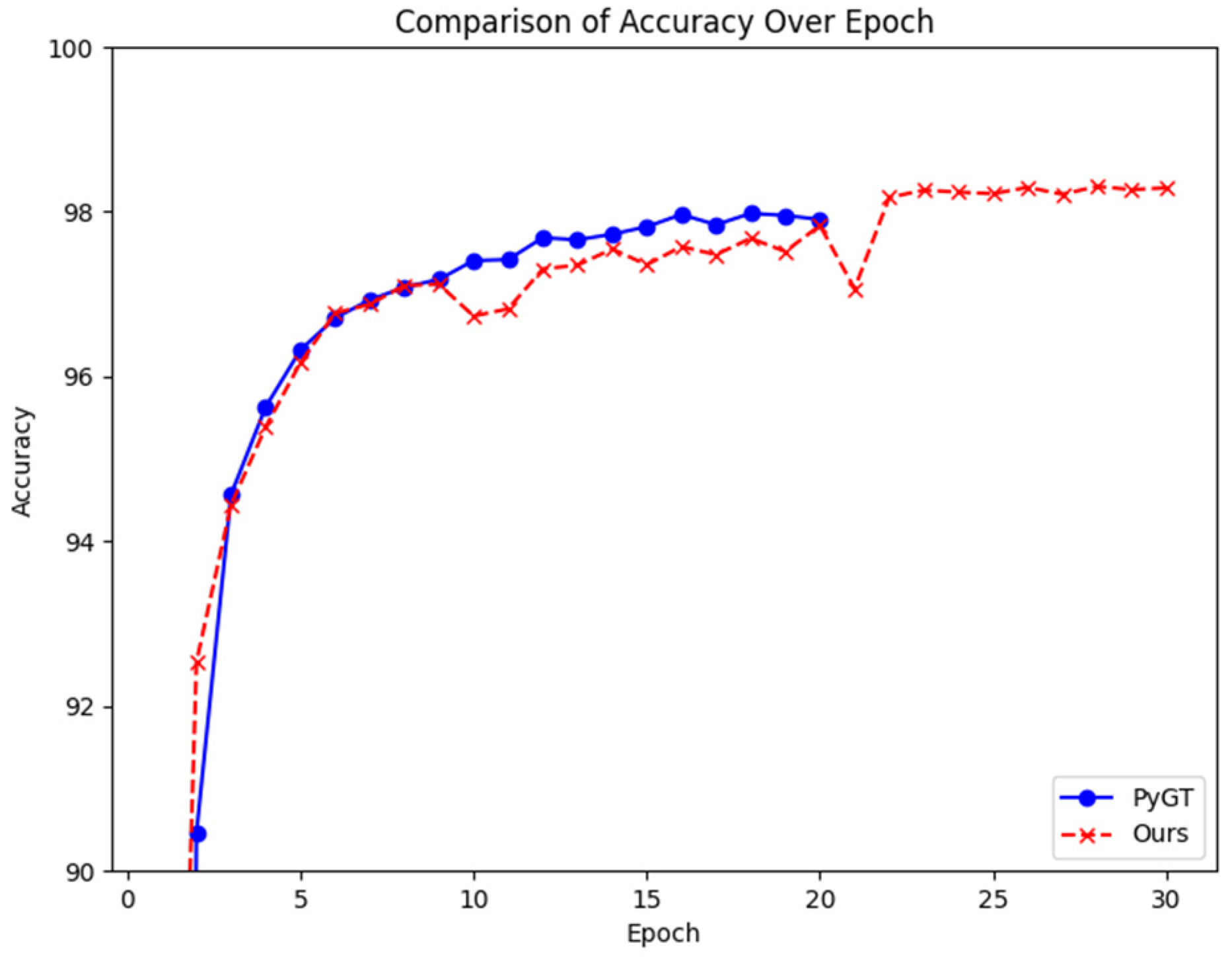

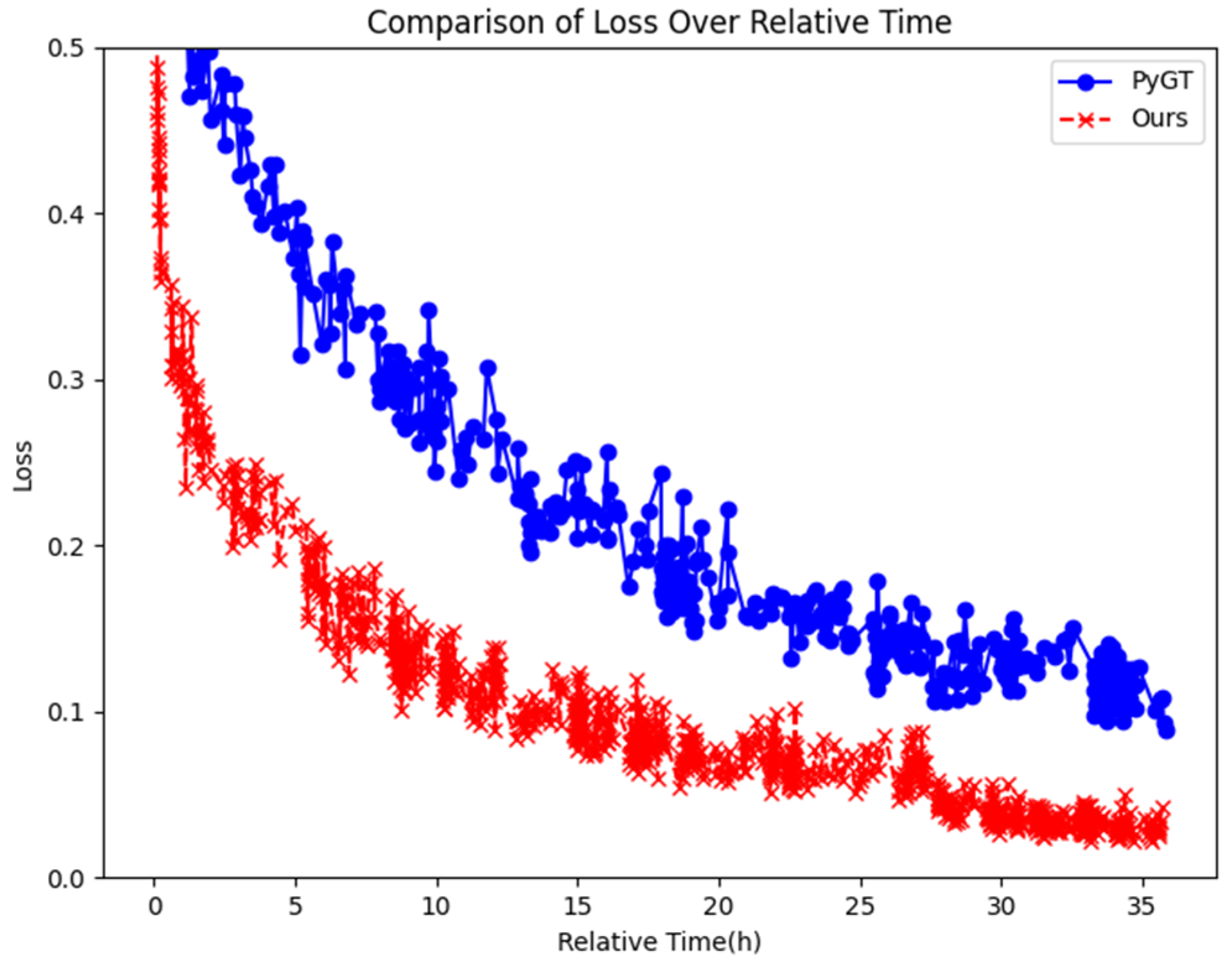

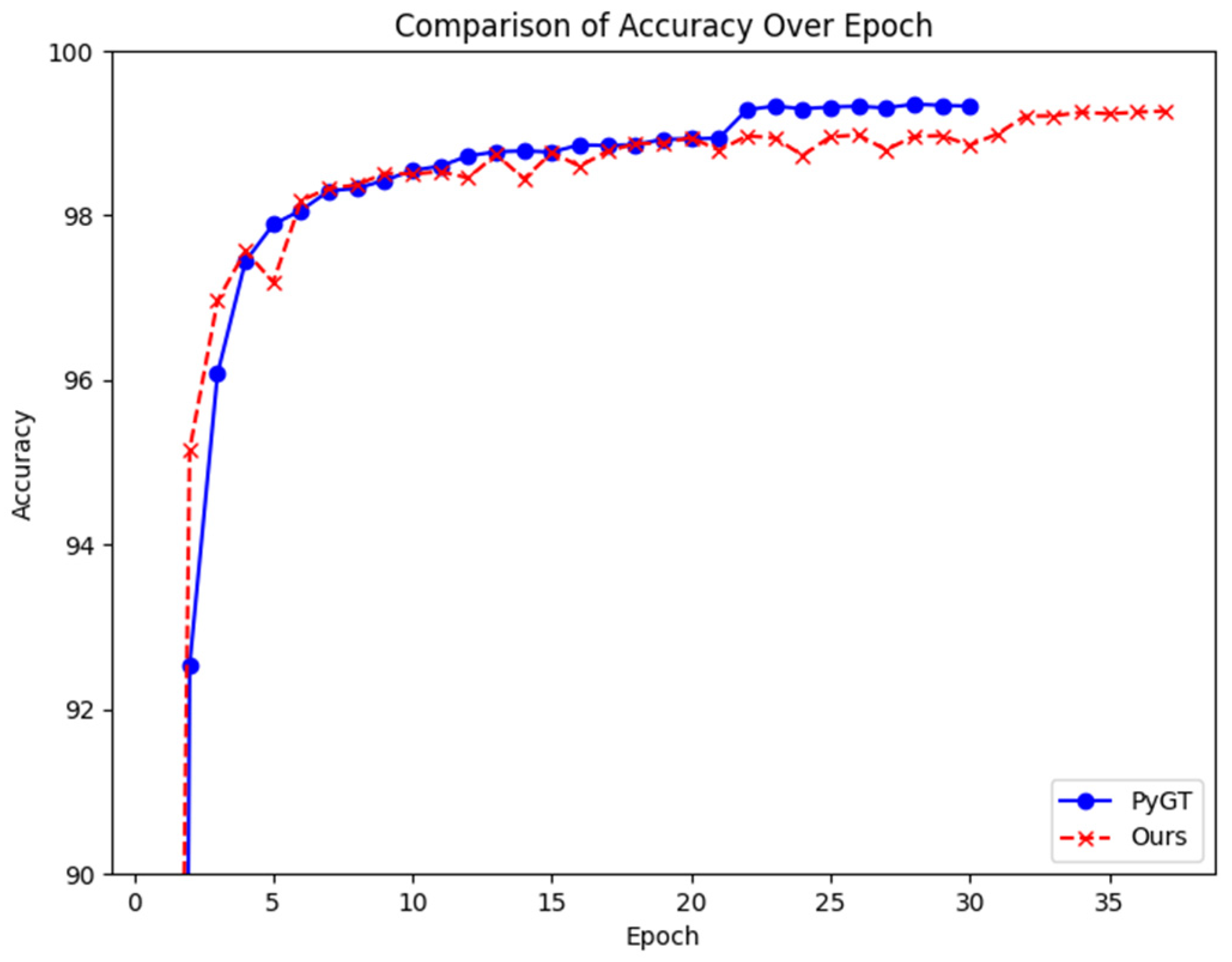

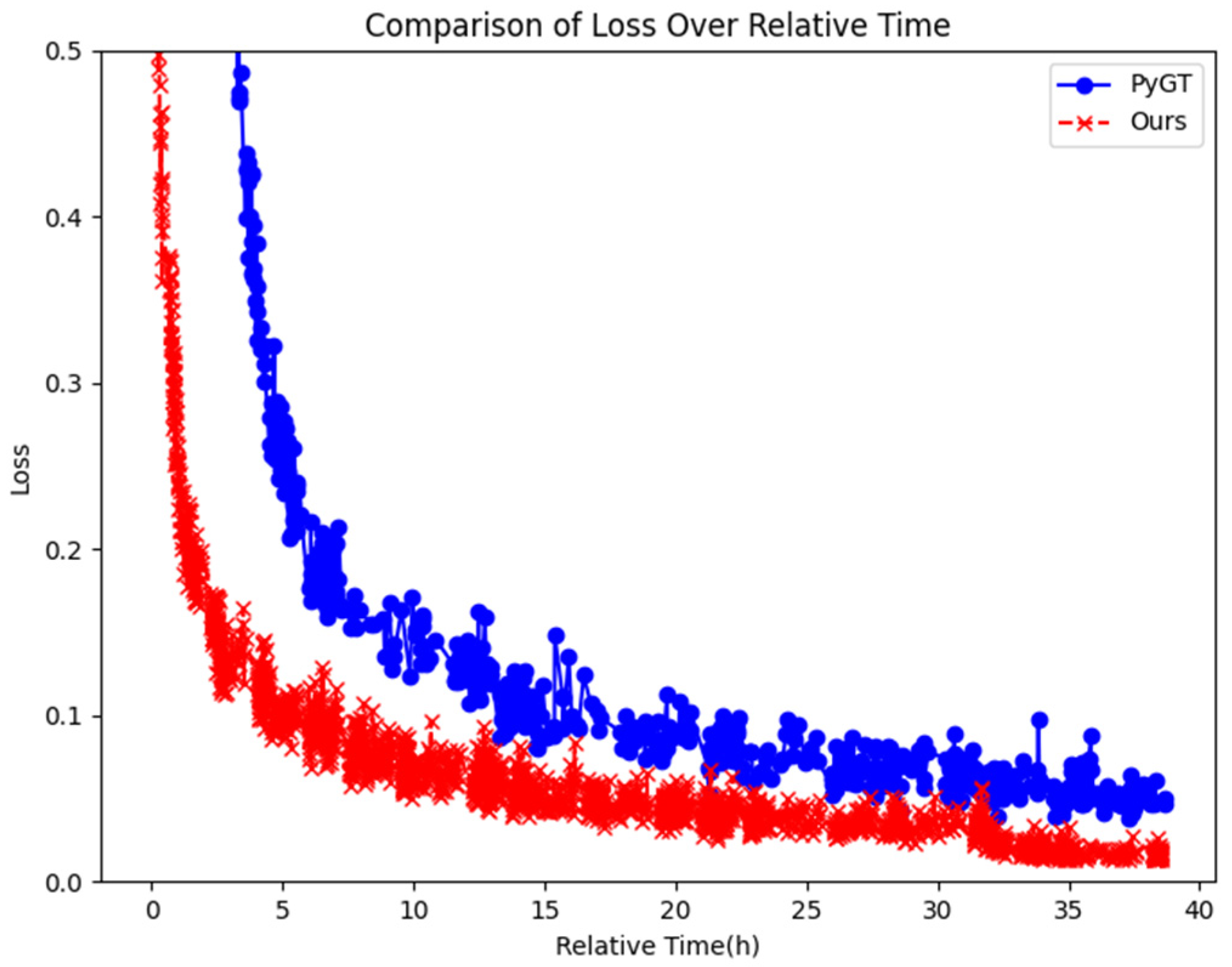

5.3.3. The Comparison of GCNAA and PyGT

- (1) High computational efficiency. GCNAA adopts agent attention. Compared with conventional Softmax attention, agent attention is a generalized form of linear attention, which reduces computation and improves processing speed. Results are therefore obtained faster during both training and inference, especially on large-scale datasets.

- (2) Global context modeling. Although the computation is simplified, agent attention still captures the global context of the input. This is important for modeling global dependencies among graph nodes and for recognizing complex patterns.

- (3) Reduced computational complexity. By design, GCNAA lowers the computational complexity of the overall model, which reduces the memory and processor time required, so the model can be deployed on a wider range of hardware, including devices with limited computing power.

- (4) Shorter training time. Because of the higher computational efficiency and lower computational complexity, a model using agent attention completes training in less time. This helps with fast iterative model development and with responding to new data or changing requirements.

- (5) Faster inference. Beyond its advantages during training, agent attention also speeds up inference, so predictions can be made faster in practical applications and the real-time performance of the system improves.

- (6) Better balance. GCNAA also achieves higher validation accuracy and lower training loss, which shows that agent attention strikes a better balance between efficiency and accuracy. It is not only fast but also accurate, which is especially important for applications that demand high performance.
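The efficiency argument above can be made concrete with a small NumPy sketch that contrasts Softmax attention with the two-step agent attention of Han et al. [35]. This is an illustrative simplification, not the GCNAA layer: it is single-head, it obtains agent tokens by mean-pooling the queries over contiguous groups (an assumption), and it omits the bias terms and the extra convolution branch of the original formulation. The function names are hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax along one axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def softmax_attention(Q, K, V):
    """Standard attention: builds an N x N score matrix, O(N^2 d) work."""
    d = Q.shape[-1]
    return softmax(Q @ K.T / np.sqrt(d)) @ V

def agent_attention(Q, K, V, n_agents):
    """Agent attention with n agent tokens: O(N n d) work, requires N >= n."""
    N, d = Q.shape
    # Assumption: agent tokens are the means of n contiguous groups of queries.
    groups = np.array_split(np.arange(N), n_agents)
    A = np.stack([Q[g].mean(axis=0) for g in groups])        # n x d
    # Agent aggregation: agents act as queries and summarize all values.
    V_agent = softmax(A @ K.T / np.sqrt(d)) @ V              # n x d
    # Agent broadcast: each node queries the agents instead of all nodes.
    return softmax(Q @ A.T / np.sqrt(d)) @ V_agent           # N x d

# Hypothetical sizes: 64 graph nodes, 16 features, 8 agent tokens
rng = np.random.default_rng(0)
Q, K, V = (rng.normal(size=(64, 16)) for _ in range(3))
full = softmax_attention(Q, K, V)
fast = agent_attention(Q, K, V, n_agents=8)
```

Both score matrices in `agent_attention` have shape n × N or N × n, so the cost grows linearly in the number of nodes N for a fixed number of agents n, while every node still receives information from all other nodes through the agents.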

5.3.4. Prediction Result Analysis

- (1)

- Labeling errors, such as cases where the handwritten character is ‘水’ but incorrectly labeled as ‘云’, make correct recognition inherently impossible for such samples.

- (2)

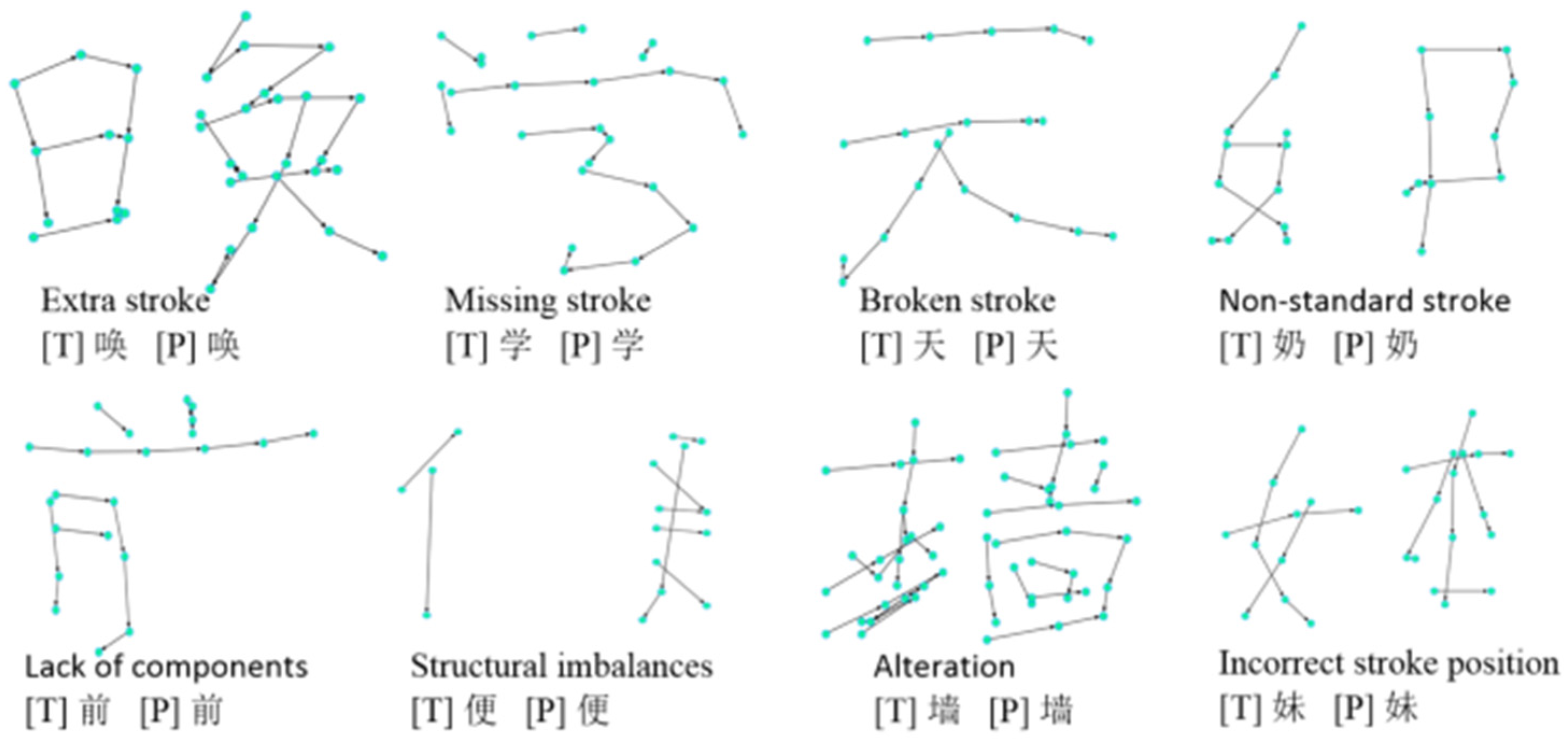

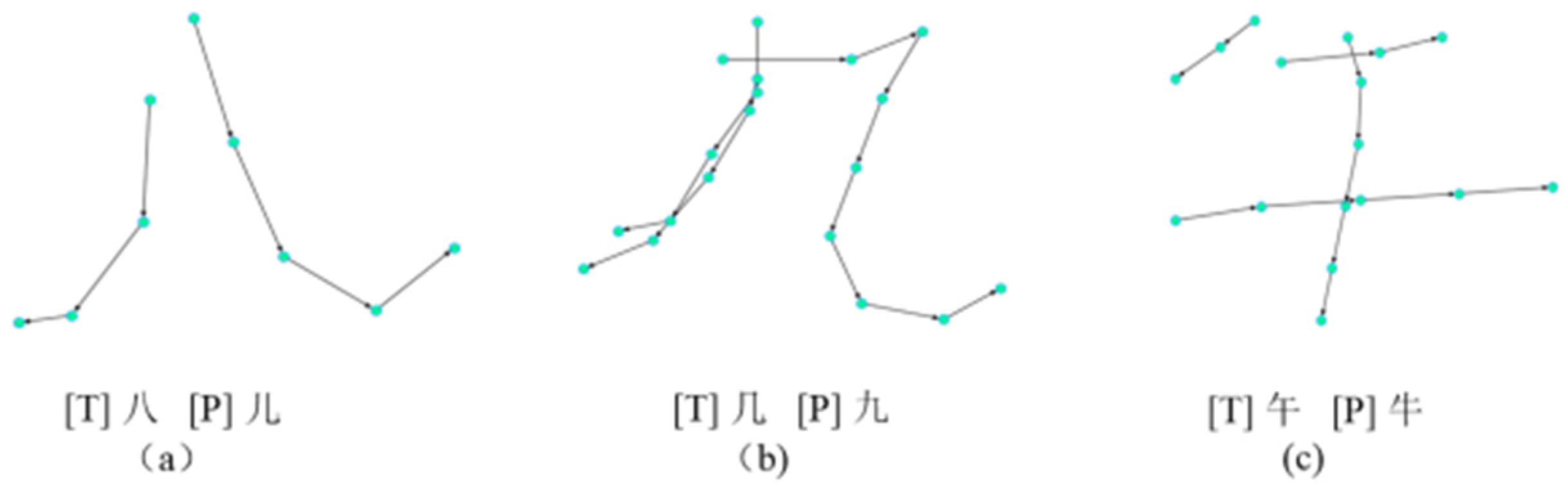

- Classification errors occur when non-standard strokes, incorrect stroke position relationship, missing strokes and so on cause handwritten characters to exhibit greater morphological similarity to other standard characters.For example, the character “八” may resemble “儿” when its “㇏” is written irregularly, as shown in Figure 25a.In “几”, the intersection point between the “丿” and the “㇈” should ideally meet at the starting points of both strokes. However, if the stroke “丿” crosses through the horizontal segment of the stroke “㇈”, the character becomes visually similar to “九”, as shown in Figure 25b.When the stroke “丨” in “午” protrudes excessively at the top, the character morphology approaches that of “牛”, as shown in Figure 25c.

- (3)

- The inherent similarity among Chinese characters. Chinese characters possess intrinsic visual similarities that pose unique challenges for recognition systems. For example, “夏” and “复”.

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Zhang, X.-Y.; Bengio, Y.; Liu, C.-L. Online and offline handwritten Chinese character recognition: A comprehensive study and new benchmark. Pattern Recognit. 2017, 61, 348–360.

- Yang, W.; Jin, L.; Tao, D.; Xie, Z.; Feng, Z. DropSample: A new training method to enhance deep convolutional neural networks for large-scale unconstrained handwritten Chinese character recognition. Pattern Recognit. 2016, 58, 190–203.

- Xu, H.; Zhang, X. A Residual Network with Multi-Scale Dilated Convolutions for Enhanced Recognition of Digital Ink Chinese Characters by Non-Native Writers. Int. J. Knowl. Innov. Stud. 2024, 2, 130–146.

- Zhang, J.; Zhu, Y.; Du, J.; Dai, L. Trajectory-based Radical Analysis Network for Online Handwritten Chinese Character Recognition. In Proceedings of the 2018 24th International Conference on Pattern Recognition (ICPR), Beijing, China, 20–24 August 2018; IEEE: New York, NY, USA, 2018; pp. 3681–3686.

- Zhang, X.-Y.; Yin, F.; Zhang, Y.-M.; Liu, C.-L.; Bengio, Y. Drawing and Recognizing Chinese Characters with Recurrent Neural Network. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 849–862.

- Ren, H.; Wang, W.; Liu, C. Recognizing online handwritten Chinese characters using RNNs with new computing architectures. Pattern Recognit. 2019, 93, 179–192.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Monti, F.; Boscaini, D.; Masci, J.; Rodola, E.; Svoboda, J.; Bronstein, M.M. Geometric Deep Learning on Graphs and Manifolds Using Mixture Model CNNs. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; IEEE: New York, NY, USA, 2017; pp. 5425–5434.

- Fey, M.; Lenssen, J.E.; Weichert, F.; Muller, H. SplineCNN: Fast Geometric Deep Learning with Continuous B-Spline Kernels. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; IEEE: New York, NY, USA, 2018; pp. 869–877.

- Dwivedi, V.P.; Joshi, C.K.; Luu, A.T.; Laurent, T.; Bengio, Y.; Bresson, X. Benchmarking Graph Neural Networks. J. Mach. Learn. Res. 2022, 23, 1–48.

- Gan, J.; Chen, Y.; Hu, B.; Leng, J.; Wang, W.; Gao, X. Characters as graphs: Interpretable handwritten Chinese character recognition via Pyramid Graph Transformer. Pattern Recognit. 2023, 137, 109317.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is All you Need. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017.

- Liu, C.-L.; Marukawa, K. Pseudo two-dimensional shape normalization methods for handwritten Chinese character recognition. Pattern Recognit. 2005, 38, 2242–2255.

- Ding, K.; Deng, G.; Jin, L. An Investigation of Imaginary Stroke Techinique for Cursive Online Handwriting Chinese Character Recognition. In Proceedings of the 2009 10th International Conference on Document Analysis and Recognition, Barcelona, Spain, 26–29 July 2009; IEEE: New York, NY, USA, 2009; pp. 531–535.

- Graham, B. Sparse arrays of signatures for online character recognition. arXiv 2013, arXiv:1308.0371.

- Bai, Z.; Huo, Q. A study on the use of 8-directional features for online handwritten Chinese character recognition. In Proceedings of the Eighth International Conference on Document Analysis and Recognition (ICDAR’05), Seoul, Republic of Korea, 31 August–1 September 2005; IEEE: New York, NY, USA, 2005; Volume 1, pp. 262–266.

- Bai, H.; Zhang, X. Recognizing Chinese Characters in Digital Ink from Non-Native Language Writers Using Hierarchical Models; Jiang, X., Arai, M., Chen, G., Eds.; Second International Workshop on Pattern Recognition: Singapore, 2017; p. 104430A.

- Bai, H.; Zhang, X.-W. Improved hierarchical models for non-native Chinese handwriting recognition using hidden conditional random fields. In Proceedings of the Fifth International Workshop on Pattern Recognition, Chengdu, China, 5–7 June 2020; Jiang, X., Zhang, C., Song, Y., Eds.; SPIE: Bellingham, WA, USA, 2020; p. 9.

- Long, T.; Jin, L. Building compact MQDF classifier for large character set recognition by subspace distribution sharing. Pattern Recognit. 2008, 41, 2916–2925.

- Kimura, F.; Takashina, K.; Tsuruoka, S.; Miyake, Y. Modified quadratic discriminant functions and the application to Chinese character recognition. IEEE Trans. Pattern Anal. Mach. Intell. 1987, 9, 149–153.

- Kim, H.J.; Kim, K.H.; Kim, S.K.; Lee, J.K. On-line recognition of handwritten Chinese characters based on hidden Markov models. Pattern Recognit. 1997, 30, 1489–1500.

- Liu, C.-L.; Jaeger, S.; Nakagawa, M. Online recognition of Chinese characters: The state-of-the-art. IEEE Trans. Pattern Anal. Mach. Intell. 2004, 26, 198–213.

- Fujisawa, H. Forty years of research in character and document recognition—An industrial perspective. Pattern Recognit. 2008, 41, 2435–2446.

- Jin, L.; Gao, Y.; Liu, G.; Li, Y.; Ding, K. SCUT-COUCH2009—A comprehensive online unconstrained Chinese handwriting database and benchmark evaluation. Int. J. Doc. Anal. Recognit. (IJDAR) 2011, 14, 53–64.

- Liu, C.-L.; Yin, F.; Wang, D.-H.; Wang, Q.-F. Online and offline handwritten Chinese character recognition: Benchmarking on new databases. Pattern Recognit. 2013, 46, 155–162.

- Qu, X.; Wang, W.; Lu, K.; Zhou, J. In-air handwritten Chinese character recognition with locality-sensitive sparse representation toward optimized prototype classifier. Pattern Recognit. 2018, 78, 267–276.

- Ciresan, D.; Meier, U.; Schmidhuber, J. Multi-column deep neural networks for image classification. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; IEEE: New York, NY, USA, 2012; pp. 3642–3649.

- Gan, J.; Wang, W.; Lu, K. A new perspective: Recognizing online handwritten Chinese characters via 1-dimensional CNN. Inf. Sci. 2019, 478, 375–390.

- Xu, H.; Zhang, X. Recognizing Digital Ink Chinese Characters Written by International Students Using a Residual Network with 1-Dimensional Dilated Convolution. Information 2024, 15, 531.

- Zheng, J.; Ding, X.; Wu, Y. Recognizing on-line handwritten Chinese character via FARG matching. In Proceedings of the Fourth International Conference on Document Analysis and Recognition, Ulm, Germany, 18–20 August 1997; IEEE Computer Soc: Washington, DC, USA, 1997; Volume 2, pp. 621–624.

- Touvron, H.; Cord, M.; Douze, M.; Massa, F.; Sablayrolles, A.; Jégou, H. Training data-efficient image transformers & distillation through attention. arXiv 2021.

- Wang, W.; Xie, E.; Li, X.; Fan, D.-P.; Song, K.; Liang, D.; Lu, T.; Luo, P.; Shao, L. Pyramid Vision Transformer: A Versatile Backbone for Dense Prediction without Convolutions. arXiv 2021.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer using Shifted Windows. arXiv 2021.

- Xia, Z.; Pan, X.; Song, S.; Li, L.E.; Huang, G. Vision Transformer with Deformable Attention. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 4794–4803.

- Han, D.; Ye, T.; Han, Y.; Xia, Z.; Pan, S.; Wan, P.; Song, S.; Huang, G. Agent Attention: On the Integration of Softmax and Linear Attention. arXiv 2024, arXiv:2312.08874.

- Dhillon, I.S.; Guan, Y.; Kulis, B. Weighted Graph Cuts without Eigenvectors A Multilevel Approach. IEEE Trans. Pattern Anal. Mach. Intell. 2007, 29, 1944–1957.

- Bai, H. Study on Digital Ink Characters Stroke Error Extraction by Beginning Learners of Chinese As a Foreign Language. Ph.D. Thesis, Beijing Language and Culture University, Beijing, China, 2018.

- Liu, C.-L.; Yin, F.; Wang, D.-H.; Wang, Q.-F. CASIA Online and Offline Chinese Handwriting Databases. In Proceedings of the 2011 International Conference on Document Analysis and Recognition, Beijing, China, 18–21 September 2011; IEEE: New York, NY, USA, 2011; pp. 37–41.

- GB 2312-80; Information Technology—Chinese Character Coded Character Set for Information Interchange—Basic Set. Standardization Administration of China (SAC): Beijing, China, 1980.

- Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2017, arXiv:1412.6980.

- Guo, Y.; Wang, H.; Hu, Q.; Liu, H.; Liu, L.; Bennamoun, M. Deep Learning for 3D Point Clouds: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 4338–4364.

| Dataset | Total Writers | Total Classes | Total Samples | Chinese Character Classes | Chinese Character Samples |
|---|---|---|---|---|---|
| OLHWDB1.0 | 420 | 4037 | 1,694,741 | 3866 | 1,622,935 |
| OLHWDB1.1 | 300 | 3926 | 1,174,364 | 3755 | 1,123,132 |
| OLHWDB1.2 | 300 | 3490 | 1,042,912 | 3319 | 991,731 |

| Block | GCNAA-S | GCNAA-M | GCNAA-L |
|---|---|---|---|
| Block1 | [D = 48, N = 2] × 2 | [D = 64, N = 2] × 2 | [D = 72, N = 2] × 2 |
| Block2 | [D = 72, N = 4] × 2 | [D = 96, N = 4] × 2 | [D = 108, N = 4] × 3 |
| Block3 | [D = 108, N = 6] × 2 | [D = 144, N = 6] × 3 | [D = 168, N = 6] × 3 |
| Block4 | [D = 160, N = 8] × 2 | [D = 200, N = 8] × 2 | [D = 256, N = 8] × 2 |

| Method | Acc. (%) |
|---|---|
| Traditional approach of MQDF [25] | 95.28 |
| MCDNN [27] | 94.39 |
| DropSample-DCNN [2] | 96.93 |
| 1-D ResNetDC [29] | 96.2 |
| GCNAA-L (ours) | 97.47 |

| Network | Extra Data | Test Data | Acc. (%) |
|---|---|---|---|
| GCNAA-L | × | DICCs written by international students | 97.82 |
| GCNAA-L | +CASIA OLHWDB1.0 | DICCs written by international students | 98.53 |
| GCNAA-L | +SCUT-COUCH 2009 GB1 | DICCs written by international students | 98.59 |
| GCNAA-L | +CASIA OLHWDB1.0-1.2 | DICCs written by international students | 98.70 |
| GCNAA-L | +HIT-OR3C | DICCs written by international students | 98.59 |
| GCNAA-L | +SCUT-COUCH 2009 GB1-GB2 | DICCs written by international students | 98.67 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xu, H.; Zhang, X. Graph Convolutional Network with Agent Attention for Recognizing Digital Ink Chinese Characters Written by International Students. Information 2025, 16, 729. https://doi.org/10.3390/info16090729
