1. Introduction

In recent years, there has been an explosive increase in the amount of available data. The widespread use of digital technologies and the internet, together with the growth of social media, mobile devices and the Internet of Things (IoT), has contributed to this explosion [1]. The availability of these data has enabled the development and improvement of machine learning algorithms [2]. Machine learning algorithms generally require large datasets in order to learn from the available data and train effectively [3]. In addition, with big data, deep learning models, a subset of machine learning models, are becoming capable of recognizing and learning patterns in images, speech, text and other types of unstructured data [4,5,6,7]. As a result, it has become easier to develop reliable algorithms for handling diverse and complex problems and datasets [8,9,10].

Nevertheless, in some cases an excessive amount of data can be detrimental, reducing the efficiency and therefore the reliability of the algorithms [11]. Specifically, this may occur when the dataset contains noise or irrelevant and redundant features, which can result in overfitting and degrade the model’s accuracy and generalization [12]. It can also lead to computational challenges and scalability issues, especially for deep learning algorithms, which demand significant computational resources [13]. Various techniques have been developed to avoid such issues; one of them is feature selection [14].

Feature selection plays an especially critical role in domains such as natural language processing (NLP), where datasets often involve high-dimensional and often sparse representations such as term frequency-inverse document frequency (TF-IDF), n-grams or word embeddings [15]. In such settings, a large proportion of the features may be irrelevant or redundant, contributing little to the prediction task and increasing the risk of overfitting [16]. Filter-based feature selection methods, such as mRMR [17], are well suited for these contexts because they evaluate features based on intrinsic statistical properties rather than relying on specific classifiers [18]. This allows for dimensionality reduction before model training, improving computational efficiency and enabling better generalization, especially when only a limited number of labeled instances are available [19,20].

Compared to wrapper and embedded methods, which can be computationally expensive or tightly coupled with specific models, mRMR offers a lightweight and model-agnostic solution [21]. While modern deep learning models often include built-in mechanisms for feature weighting or selection, such approaches require large amounts of data and computational resources and may still suffer from interpretability challenges [22]. Furthermore, post hoc explainability techniques such as SHAP or LRP are useful for model interpretation, but they cannot replace robust feature filtering before training [23]. The mRMR method therefore remains valuable at this preprocessing stage [24], particularly when combined with effective estimators of mutual information that better capture complex, potentially nonlinear dependencies between variables [25,26].

Feature selection is frequently employed as a preparatory stage for, or in conjunction with, machine learning [27]. Feature selection algorithms aim to construct a subset of the initial feature set by selecting the features most relevant to the class variable and removing irrelevant and redundant ones. This procedure may produce a substantially smaller dataset that includes only the most relevant features [28]. Feature selection methods fall into three main categories, and numerous methods have been proposed in each: wrapper methods [29,30,31,32], filter methods [33,34] and embedded methods [35,36,37]. In this paper, the filter method known as mRMR is studied [17].

The minimum Redundancy Maximum Relevance (mRMR) feature selection method aims to create a subset of features that have maximum relevance to the target variable while having minimum redundancy with each other [17]. mRMR iteratively adds features to the selected subset until a predefined number of features is reached; at each step, it selects the feature that combines the highest dependency with the class variable with the lowest dependency on the previously selected features. Various statistical measures can be used to quantify the dependency between variables, and consequently relevance and redundancy. A widespread measure, and an important component of mRMR, is mutual information, which quantifies the amount of information that one variable contains about another and can therefore be used to measure both relevance and redundancy [38]. Unlike Pearson’s correlation, which captures only linear association, mutual information can detect both linear and nonlinear dependencies.
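The greedy procedure described above can be sketched in a few lines of Python. This is a minimal sketch, not the authors' implementation. It assumes the quotient form of the criterion, in which a candidate's relevance is divided by its mean mutual information with the already selected features plus a constant `s`. The names `mrmr_select`, `relevance`, `redundancy` and `s` are hypothetical. Mutual information values are passed in precomputed, so any estimator can be plugged in.

```python
from typing import Callable, List, Sequence

def mrmr_select(
    relevance: Sequence[float],
    redundancy: Callable[[int, int], float],
    n_select: int,
    s: float = 0.0,
) -> List[int]:
    """Greedy mRMR in an assumed quotient form:
    score(j) = I(f_j; y) / (s + mean_{i in S} I(f_j; f_i)).

    relevance[j]     -- estimated MI between feature j and the class variable
    redundancy(i, j) -- estimated MI between features i and j
    s                -- constant added to the denominator (s = 0: plain quotient)
    """
    n_features = len(relevance)
    # The first feature is simply the one most relevant to the class variable.
    selected = [max(range(n_features), key=lambda j: relevance[j])]
    while len(selected) < min(n_select, n_features):
        best_j, best_score = -1, float("-inf")
        for j in range(n_features):
            if j in selected:
                continue
            # Mean redundancy of candidate j with the features chosen so far.
            mean_red = sum(redundancy(j, i) for i in selected) / len(selected)
            # Guard against a zero or negative denominator (an assumption of this sketch).
            denom = max(s + mean_red, 1e-12)
            score = relevance[j] / denom
            if score > best_score:
                best_j, best_score = j, score
        selected.append(best_j)
    return selected


# Toy example with hypothetical MI values for three features.
rel = [0.9, 0.8, 0.3]
pair_mi = {frozenset({0, 1}): 0.7, frozenset({0, 2}): 0.05, frozenset({1, 2}): 0.1}

def red(i: int, j: int) -> float:
    return pair_mi[frozenset({i, j})]

print(mrmr_select(rel, red, n_select=2, s=0.0))  # [0, 2]
print(mrmr_select(rel, red, n_select=2, s=1.0))  # [0, 1]
```

With these toy numbers, `s = 0` favours feature 2 because its very small redundancy dominates the quotient. With `s = 1`, the more relevant feature 1 wins instead.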

Despite these advantages, reliable estimation of mutual information is challenging for real-world datasets, most of which involve continuous variables. In such cases, the joint probability distribution is not known and must be approximated from finite samples, which often introduces bias or instability in the estimates. To address this problem, numerous estimation methods have been proposed. These include non-parametric density estimation approaches such as k-nearest neighbors [39] and B-splines [40], as well as discretization techniques such as equidistant binning, equiprobable binning and adaptive partitioning [41]. Discretization techniques generally yield less accurate estimates of mutual information than the non-parametric ones [42,43].
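To make the discretization approach concrete, the sketch below computes the plug-in mutual information estimate from an equidistant (equal-width) partition, in which each variable's range is divided into k bins of equal width. It is a minimal pure-Python sketch under that assumption. The function names and the choice k = 8 are illustrative and are not taken from the paper.

```python
import math
import random
from collections import Counter
from typing import List, Sequence

def equidistant_bins(x: Sequence[float], k: int) -> List[int]:
    """Assign each value to one of k equal-width bins over [min(x), max(x)]."""
    lo, hi = min(x), max(x)
    width = (hi - lo) / k
    if width == 0:  # constant variable: everything falls into one bin
        return [0] * len(x)
    # min(..., k - 1) keeps the maximum value in the last bin instead of bin k.
    return [min(int((v - lo) / width), k - 1) for v in x]

def mi_binned(x: Sequence[float], y: Sequence[float], k: int) -> float:
    """Plug-in MI estimate (in nats) from a k x k equidistant partition."""
    n = len(x)
    bx, by = equidistant_bins(x, k), equidistant_bins(y, k)
    joint = Counter(zip(bx, by))
    px, py = Counter(bx), Counter(by)
    # Sum over occupied cells of p(i,j) * log(p(i,j) / (p(i) * p(j))), using counts.
    return sum((c / n) * math.log(c * n / (px[i] * py[j])) for (i, j), c in joint.items())


rng = random.Random(0)
x = [rng.gauss(0, 1) for _ in range(1024)]
y_dep = [v + 0.5 * rng.gauss(0, 1) for v in x]   # depends on x
y_ind = [rng.gauss(0, 1) for _ in range(1024)]   # independent of x
print(mi_binned(x, y_dep, 8), mi_binned(x, y_ind, 8))
```

Even for the independent pair, the estimate is slightly positive. This systematic bias of the plug-in estimator is what motivates corrections such as the one examined in this paper.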

Numerous recent studies have focused on improving feature selection techniques, addressing different aspects of the process. One line of work has enhanced mutual information–based feature selection by incorporating unique relevance criteria [44]. Other contributions have introduced conditional mutual information strategies for dynamic feature selection [45], as well as ensemble evaluation functions that combine linear measures, such as the Pearson correlation coefficient, with nonlinear measures, such as normalized mutual information, in order to capture complex feature–class relationships while maintaining low redundancy [46]. Further research has emphasized the benchmarking and evaluation of mutual information estimators in high-dimensional and complex settings [47]. Additionally, methods have been proposed to address the numerical instability of k-nearest-neighbor-based normalized mutual information estimators in high-dimensional spaces [48]. Feature reduction has also been investigated in the context of IoT intrusion detection, where efficient selection methods are essential for real-time attack classification [49].

Although these approaches highlight significant advances in the broader field of feature selection, it remains the case that the choice of mutual information estimator, despite being a central component of many methods, often receives comparatively less attention. As a result, distinguishing the impact of the estimator from that of the selection strategy itself continues to be a challenge in the literature.

Indeed, many well-known and widely applied mutual information estimators exist, yet many studies focus primarily on the choice of the feature selection method. The estimator, although widely recognized as a critical component, is often treated as a secondary design choice [50,51,52,53,54]. It is not uncommon to find comparisons between feature selection methods that rely on different mutual information estimators, which makes it difficult to disentangle the effect of the selection strategy from that of the estimator [33].

The goal of the present work is not to propose the most advanced or bias-free estimator, since more sophisticated and reliable methods already exist, but rather to demonstrate how even a very small correction to a widely used discretization-based estimator, under otherwise identical conditions, can lead to noticeably different outcomes. This correction is derived directly from the same estimator itself, by subtracting the average mutual information obtained from surrogate samples from the original estimate, which ensures that the estimator is not fundamentally altered but is adjusted in a simple and controlled way. By doing so, we aim to draw further attention within the research community to the importance of carefully considering the choice of mutual information estimator, in addition to the choice of the feature selection method.
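The correction described above can be sketched as follows. First compute the binned estimate on the original pair, then compute it on surrogate pairs in which the dependence has been destroyed, and finally subtract the mean surrogate value. This is a simplified sketch under stated assumptions. Surrogates are generated here by randomly permuting one variable, since this paragraph does not specify the surrogate scheme. The number of surrogates is arbitrary. The extrapolation to a larger sample size that the paper's procedure uses is omitted. The binned estimator is repeated so that the block runs on its own, and all names (`mi_corrected`, `n_surrogates`) are hypothetical.

```python
import math
import random
from collections import Counter
from typing import List, Sequence

def equidistant_bins(x: Sequence[float], k: int) -> List[int]:
    """Assign each value to one of k equal-width bins over [min(x), max(x)]."""
    lo, hi = min(x), max(x)
    width = (hi - lo) / k
    if width == 0:
        return [0] * len(x)
    return [min(int((v - lo) / width), k - 1) for v in x]

def mi_binned(x: Sequence[float], y: Sequence[float], k: int) -> float:
    """Plug-in MI estimate (in nats) from a k x k equidistant partition."""
    n = len(x)
    bx, by = equidistant_bins(x, k), equidistant_bins(y, k)
    joint = Counter(zip(bx, by))
    px, py = Counter(bx), Counter(by)
    return sum((c / n) * math.log(c * n / (px[i] * py[j])) for (i, j), c in joint.items())

def mi_corrected(x: Sequence[float], y: Sequence[float], k: int,
                 n_surrogates: int = 20, seed: int = 0) -> float:
    """Binned MI minus the mean binned MI over shuffled surrogates (hypothetical helper)."""
    rng = random.Random(seed)
    surrogate_mean = 0.0
    for _ in range(n_surrogates):
        xs = list(x)
        # Shuffling x breaks its link with y but keeps both marginal distributions.
        rng.shuffle(xs)
        surrogate_mean += mi_binned(xs, y, k) / n_surrogates
    # The result can be slightly negative when x and y are independent.
    return mi_binned(x, y, k) - surrogate_mean


rng = random.Random(1)
x = [rng.gauss(0, 1) for _ in range(1024)]
y_ind = [rng.gauss(0, 1) for _ in range(1024)]
print(mi_binned(x, y_ind, 8), mi_corrected(x, y_ind, 8))
```

For independent data, the raw estimate reflects only the estimator's bias, while the corrected value fluctuates around zero. This is also why a corrected redundancy term can become negative.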

To accomplish this, we evaluate the impact of different mutual information estimators on the performance of the mRMR feature selection method. Three variants of mRMR, differing in the mutual information estimator, are compared. The first uses the Parzen window estimate of mutual information, the second is based on equidistant binning using the cells method, and the third incorporates a bias-corrected version of the same discretization-based estimator. A regularization term is optionally added to the mRMR denominator to enhance numerical stability. The comparison is performed through an extensive simulation study involving synthetic datasets with both linear and nonlinear dependencies. In addition, a case study using real-world datasets is included to assess the applicability of the methods in practice. The rest of this paper is organized as follows. Section 2 presents the methodology and the materials used. Section 3 describes a simulation study on several systems and presents its results. Section 4 presents a case study using real-world datasets to evaluate the practical applicability of the proposed methods. Section 5 discusses the results, and Section 6 draws the conclusions.

3. Simulation Study

In this section, a simulation study is conducted to evaluate the performance of the mRMR feature selection algorithm. The simulated datasets are constructed so that the features relevant to the class variable are known in advance, which allows a direct assessment of the algorithm’s ability to identify informative features. The systems range progressively from very simple to more complex, in order to examine the performance of mRMR in different scenarios. In the following examples, the variable y gives the classes after discretization, and the features are random normal variables. Some of them are predictors of the class variable y, while others are functionally related to the predictors. Beyond the features that are directly or indirectly related to the class variable, irrelevant random variables were added until each dataset contained a total of thirty features.

For discretization, the number of classes k is chosen to satisfy the relation , where n is the number of samples. This setting follows the same rationale as in the study where the corrected mutual information estimator was originally introduced. Each system was simulated by generating 20 datasets of 1024 samples. However, for the corrected mutual information estimator, which requires extrapolation to a larger sample size, the estimation was performed by projecting to a length of 2048, following the procedure described in Section 2.3.2. The results reported in Section 3.2 correspond to the average performance across these 20 datasets.

To assess the effect of the mutual information estimator and the mRMR configuration, five variations of the mRMR algorithm were compared:

Original-mRMR (s = 0): Uses the original mutual information estimator and applies Equation (1), without modification to the denominator.

Original-mRMR (s = 1): Same estimator, but with one unit added to the denominator (Equation (2)).

Corr-mRMR (s = 1): Uses the proposed correction of mutual information with one unit added to the denominator.

Parzen-mRMR (s = 0): Uses the Parzen (KDE-based) estimator without adjustment in the denominator.

Parzen-mRMR (s = 1): Uses the Parzen estimator with one unit added to the denominator.

In addition to the original and corrected mutual information estimators, we also included the Parzen-based estimator used in the original mRMR study [17], in order to gain a more complete picture of the mRMR framework’s performance under different estimation strategies. This setup allows us to systematically compare how the choice of mutual information estimator, as well as the presence or absence of a stabilizing constant in the denominator, affects the reliability and effectiveness of mRMR across diverse systems.

3.1. Systems

,

, with additional features correlated to and ,

, where ,

, where ,

, where and ,

, where , , , , , and .

System 1 is the simplest of all, as only two features are functionally related to the class variable y, the features and , which are moderately correlated with the class variable (both coefficients = 0.5). The noise term e is a standard normal random variable. As mentioned above, in all cases the dataset consists of a total of 30 features, so the remaining 28 are irrelevant features generated from a standard normal distribution.
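A System 1 dataset could be generated along the following lines. This sketch rests on explicit assumptions. The class variable is taken to be y = 0.5·x1 + 0.5·x2 + e before discretization, which is consistent with the coefficients and noise term described above, although the exact equation is not reproduced here. y is discretized into k equal-width classes, and k = 8 is a placeholder rather than the value given by the paper's rule for k. The function name is hypothetical.

```python
import random
from typing import List, Tuple

def simulate_system1(n: int = 1024, k: int = 8, n_features: int = 30,
                     seed: int = 0) -> Tuple[List[List[float]], List[int]]:
    """Return (features, classes); features[j] holds the n values of feature j.

    Assumed model: y = 0.5 * x1 + 0.5 * x2 + e, with x1, x2 and e standard normal.
    Features 0 and 1 play the roles of x1 and x2; the other 28 are irrelevant noise.
    """
    rng = random.Random(seed)
    features = [[rng.gauss(0.0, 1.0) for _ in range(n)] for _ in range(n_features)]
    y_cont = [0.5 * features[0][t] + 0.5 * features[1][t] + rng.gauss(0.0, 1.0)
              for t in range(n)]
    # Discretize y into k equal-width classes (the binning scheme is an assumption).
    lo, hi = min(y_cont), max(y_cont)
    width = (hi - lo) / k
    classes = [min(int((v - lo) / width), k - 1) for v in y_cont]
    return features, classes


features, classes = simulate_system1()
print(len(features), len(classes), sorted(set(classes)))
```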

System 2 is identical to System 1 in terms of the functionally related features to the class variable. In addition to these two features, there are two groups of two features each. The features in the first group are strongly correlated with (), while the features in the second group are weakly correlated with (). Similar to System 1, the remaining 24 features are irrelevant features generated from a standard normal distribution.

In System 3 there are three features functionally related to the class variable y, namely the features , and . In this case, the third feature is correlated with the first two. The remaining 27 features are irrelevant. Here, the optimal subset should contain any two of the three functionally related features. In mRMR, the number of features that the selected subset should contain before the algorithm stops is user-defined. In this case, we set the algorithm to stop when the subset contains 3 features, in order to examine whether the feature selection algorithm selects the features , and , or prefers to add an irrelevant feature once it has already selected two of the functionally related features.

System 4 also has three features functionally related to the class variable, specifically the features, , and . However, unlike in System 3, feature in System 4 contains information about another random variable, , in addition to being correlated with and . The remaining 27 features are irrelevant random variables. In this case, the optimal subset should contain all three functionally related features. The objective is to investigate whether the feature selection algorithm chooses them or an irrelevant feature instead, given that contains information about the predictors of y, namely and .

System 5 has four features functionally related to the class variable, namely , , and . In this case the functionally related features do not affect the class variable equally, since their coefficients differ. Specifically, and are strongly correlated with the class variable y (coefficients = 1), while and are weakly correlated, with coefficients equal to 0.2 and 0.3, respectively. Here, the size of the optimal subset is set to four. We consider it more appropriate for the feature selection algorithm to select a feature that contains the same information as other selected predictors (e.g., ) than an irrelevant feature (a standard normal random variable).

In System 6, there is a group of six predictors of the class variable y, namely ,…,, that are functionally related to the independent standard random variables , . The are set as , i = 1,…,6, where is the standard deviation of , so that all features contribute equally to y. The size of the optimal subset was set to six, for the same reason given in the description of System 5. In addition to these six features, there are two groups of six features each. The features of one group are strongly correlated with the corresponding features of the first group (), while the six features of the other group are weakly correlated with the corresponding features of the first group (). The remaining 12 features are irrelevant.

To provide a concise overview of the experimental setup, we summarize the main characteristics of all six systems in Table 1. This table highlights the functional relationships, the relevant and redundant features, as well as the number of irrelevant features in each case, allowing the reader to more easily interpret and compare the different scenarios.

3.2. Results

In this section, the results are presented. The feature selection algorithm was set to stop when the selected subset contained as many features as there are functionally related features, as described in Section 3.1. A selection is considered correct if the selected subset contains all of the features that are functionally related to the class variable. The success rate is the percentage of the 20 generated datasets of each system for which the selection is correct.
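The success criterion defined above amounts to a subset test for each dataset, followed by an average over the 20 datasets of a system. A minimal sketch, in which the function name and feature indices are hypothetical:

```python
from typing import Iterable, Sequence, Set

def success_rate(selections: Sequence[Iterable[int]], relevant: Set[int]) -> float:
    """Fraction of datasets whose selected subset contains every relevant feature."""
    hits = sum(1 for chosen in selections if relevant <= set(chosen))
    return hits / len(selections)


# 20 hypothetical runs: 19 recover features {0, 1, 2}; one swaps feature 2 for noise feature 17.
runs = [[0, 1, 2]] * 19 + [[0, 1, 17]]
print(success_rate(runs, {0, 1, 2}))  # 0.95
```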

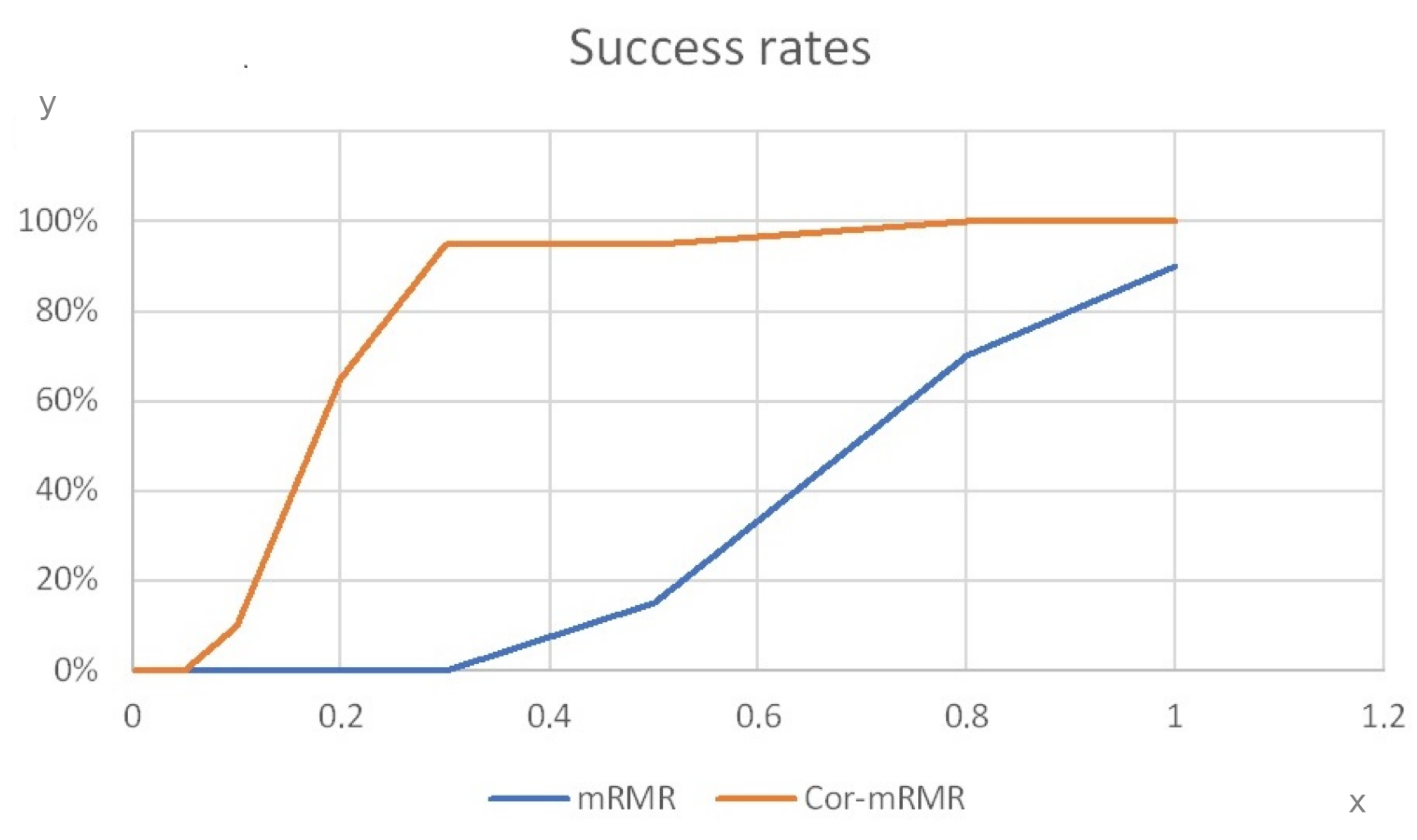

First, Figure 2 and Figure 3 are presented for Systems 5 and 6, respectively. These figures depict the success rate of mRMR using two different mutual information estimators across various values of s, where s is the number added to the denominator of mRMR to prevent negative values. The algorithm that uses the original estimator is referred to as mRMR, while the one that uses the proposed mutual information correction is referred to as Corr-mRMR.

Figure 2 shows that both examined feature selection methods achieve their highest success rate when s = 1. Corr-mRMR achieves a success rate above 80% when s is 0.3 or higher, while simple mRMR does so only when s = 1. Additionally, for this system the success rate of Corr-mRMR is always equal to or better than that of simple mRMR, regardless of the choice of s.

Figure 3 differs slightly from Figure 2. For s equal to 0, 0.05 and 0.1, the Original-mRMR method appears to be more efficient than the corrected one. However, at s = 0.2 the success rate of Corr-mRMR rises sharply to 65%, while that of Original-mRMR is only 40%, and Corr-mRMR appears to perform better for all values of s greater than 0.2. For the value selected in this study, s = 1, both methods have a success rate below the maximum achievable. Nevertheless, the gap between these success rates and the optimal ones is small, and in the other systems s = 1 was found to be the optimal choice.

The results presented in Table 2 indicate that, in general, mRMR performs better when the corrected mutual information estimator is used.

In System 1, both predictors are moderately correlated with the class variable y (coefficients = 0.5). For this system, all variants of mRMR performed equally well without error. All of them included in the selected subset the features and , which are the only features functionally related to the class variable, while the remaining 28 features are irrelevant.

In System 2, both Parzen variants and Corr-mRMR achieve 100% success, whereas the original estimator gives only 80% for s = 0 and 90% for s = 1. In other words, the corrected and KDE-based estimators demonstrate better resilience to redundancy. Systems 1 and 2 are similar, except that, beyond the two features functionally related to the class variable, System 2 has four additional features that are correlated with the predictors of y, whereas in System 1 the remaining 28 features are irrelevant. Corr-mRMR has no difficulty identifying the actually relevant features, while Original-mRMR (s = 0) and Original-mRMR (s = 1) make a few mistakes.

System 3 introduces nonlinearity through the feature , creating a higher-order dependency. In this case, the Parzen-based methods again achieve perfect performance (100%), while both Original-mRMR (s = 1) and Corr-mRMR (s = 1) fail to fully recover the true subset. By contrast, Original-mRMR (s = 0), which uses neither the corrected estimator nor the denominator constant, fails completely (0%). It is worth noting, however, that this 0% success rate reflects only the strict definition of success, namely recovering all three relevant features. If the selected subset were restricted to only the two strongest predictors ( and ), its performance would be nearly equivalent to that of the s = 1 variant. The failure occurs in the final selection step. Instead of selecting the third relevant (though redundant) feature , Original-mRMR (s = 0) tends to include a completely unrelated feature, ignoring the advantage that a partially redundant but relevant feature has over irrelevant variables. Corr-mRMR, which uses the adjusted mutual information estimator and s = 1, performs similarly to Original-mRMR (s = 1) in this example, also achieving 95%.
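The failure mode described above is consistent with how a small denominator behaves in the quotient form of the criterion. The toy calculation below uses hypothetical mutual information values, which are not measured in the experiments, and assumes the quotient form relevance / (s + redundancy). With s = 0, an unrelated feature whose estimated redundancy is very small can outscore a relevant but partially redundant one. With s = 1, the denominator is dominated by the constant and the ranking follows relevance again. This illustrates one plausible mechanism, not the exact values behind the reported results.

```python
def mrmr_quotient(relevance: float, redundancy: float, s: float) -> float:
    """Assumed quotient-form mRMR score with a constant s added to the denominator."""
    return relevance / (s + redundancy)


# Hypothetical (relevance, mean redundancy) estimates for two candidate features.
relevant_redundant = (0.10, 0.05)
unrelated = (0.01, 0.002)

for s in (0.0, 1.0):
    a = mrmr_quotient(*relevant_redundant, s)
    b = mrmr_quotient(*unrelated, s)
    winner = "relevant" if a > b else "unrelated"
    print(f"s = {s}: relevant={a:.4f}, unrelated={b:.4f} -> picks {winner}")
```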

System 4 is similar to System 3, except that in System 3 the three features were functions of only two independent predictors, whereas in System 4 the features , and are functions of three independent predictors, since . In this system the optimal subset should contain all three features (, and ). The increased complexity affects the classical estimators more severely. Original-mRMR with s = 0 fails completely (0%), as it strongly penalizes redundancy and fails to recognize the added informational contribution of . Increasing the denominator constant to s = 1 improves the performance to 80%, indicating a more balanced treatment of relevance and redundancy, although some misranking still occurs. Corr-mRMR, which incorporates the proposed mutual information adjustment and also uses s = 1, achieves a success rate of 95%. This performance comes very close to that of the Parzen-based methods (both at 100%), suggesting that the correction substantially improves reliability in more complex scenarios where redundancy and relevance coexist.

In System 5 there are four features functionally related to the class variable but only three independent predictors. The Parzen-mRMR methods still succeed in identifying all relevant features. In contrast, Original-mRMR fails completely for s = 0 (0%) and performs better with s = 1 (85%), while the corrected estimator improves further to 95%. The difference between the latter two lies in the selection of . Across all generated datasets, both of them correctly selected the features , and , but the simple mRMR occasionally added an irrelevant feature to the selected subset instead of , which is a function of the features and . Unlike in System 4, the complete failure of mRMR for s = 0 in this system stems from the addition of a completely irrelevant feature instead of the weakly informative , which is a nonlinear function of and . If the selected subset had been limited to three features, namely , and , the performance of Original-mRMR with s = 0 would have been comparable to that with s = 1. Both Original-mRMR (s = 1) and Corr-mRMR handle the balance between relevance and redundancy more effectively, with the latter approaching the Parzen benchmark.

System 6 is the most complex of the examined systems. It contains six features functionally related to the class variable, five of which are independent predictors, while the sixth () is the product of and . Once again, Original-mRMR fails when s = 0 (0%) and performs modestly when s = 1 (55%). Corr-mRMR performs better (70%), but only the Parzen-based methods were able to identify all the relevant features (100%). Notably, even when the stopping criterion is set to five features instead of six, Corr-mRMR fails to recover the complete set of relevant features in only 5% of the cases, whereas Original-mRMR does so in 15%.

4. Case Study

To assess the practical applicability of the examined mRMR variants, we conducted a case study on real-world classification tasks. Specifically, we employed a diverse set of benchmark datasets widely used in feature selection and classification research. The collection includes financial datasets (bankrupt, credit), bioinformatics datasets (qsar), software defect prediction datasets (kc1, pc1) and text or signal classification datasets (spambase, magic).

To ensure consistency with the experimental design of the simulation study, we randomly selected a subset of 1140 instances from each dataset. With 10-fold cross-validation, each training fold then contains 1140 × 9/10 = 1026 observations, closely matching the sample size of 1024 used for the corrected mutual information estimation in the previous sections.

Each method was configured to select the top 5 features. Using these subsets, we then trained and evaluated four standard classifiers: Logistic Regression, Decision Tree, Random Forest and K-Nearest Neighbors (KNN). Classification performance was assessed using 10-fold cross-validation and we report the mean accuracy and standard deviation across folds.
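The fold arithmetic behind the 1140-instance subsample can be checked with a small index splitter. This sketch assumes plain (non-stratified) shuffled folds and summarizes per-fold accuracies with the sample standard deviation. The paper does not state either detail, and the function names are hypothetical.

```python
import random
import statistics
from typing import List, Sequence, Tuple

def kfold_indices(n: int, n_folds: int = 10, seed: int = 0) -> List[Tuple[List[int], List[int]]]:
    """Shuffle 0..n-1 and return n_folds (train, test) index pairs."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    folds = [idx[f::n_folds] for f in range(n_folds)]  # round-robin assignment to folds
    return [([i for g, fold in enumerate(folds) if g != f for i in fold], folds[f])
            for f in range(n_folds)]

def summarize(accuracies: Sequence[float]) -> Tuple[float, float]:
    """Mean and sample standard deviation of per-fold accuracies."""
    return statistics.mean(accuracies), statistics.stdev(accuracies)


splits = kfold_indices(1140)
print(sorted({len(train) for train, _ in splits}))  # [1026]
```

Because 1140 is divisible by 10, every test fold holds 114 instances and every training fold holds 1026.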

The following tables (Table 3, Table 4, Table 5 and Table 6) summarize the classification accuracies for each classifier across all datasets. For each dataset, the best-performing method is highlighted in bold to emphasize its relative performance.

The results presented in Table 3 demonstrate how different mutual information estimators and regularization settings influence the performance of the mRMR feature selection algorithm when combined with Logistic Regression. Across the seven benchmark datasets, Corr-mRMR achieved the highest accuracy in four cases, while the Original-mRMR (s = 1), the Original-mRMR (s = 0) and Parzen-mRMR (s = 0) methods performed best in one dataset each. These results indicate that Corr-mRMR provided a measurable advantage over both the uncorrected mRMR variants and the Parzen-based estimators in the majority of cases.

In several datasets, such as bankrupt, magic, kc1, pc1 and credit, the differences between Corr-mRMR and Original-mRMR (s = 1) were minimal, with accuracies differing by less than 0.01. For instance, in bankrupt, Corr-mRMR achieved 0.9649, compared with 0.9588 obtained by Original-mRMR (s = 1). In kc1, the corresponding values were 0.8518 and 0.8500, while in pc1, all three methods, including the Parzen variants, produced nearly identical results in the range 0.8851–0.8860. Similarly, in credit, the performance of the examined methods was nearly identical, with only marginal differences between them.

By contrast, notable performance differences were observed in the qsar and spambase datasets. In qsar, Corr-mRMR achieved an accuracy of 0.8010, clearly higher than Original-mRMR (s = 1) (0.7469) and the best Parzen-based result (0.7631). An even larger improvement was observed in spambase, where Corr-mRMR reached 0.8482, substantially outperforming Original-mRMR (s = 1) (0.6833) as well as the Parzen methods, the best of which achieved 0.7588. In these two datasets, Corr-mRMR and Original-mRMR (s = 1) differ only in the use of the bias-corrected mutual information estimator, with all other parameters identical. Their direct comparison therefore shows that even a seemingly minor adjustment to the estimator can result in a substantial performance gain.

Overall, the choice of mutual information estimator appears to have a substantial impact on the performance of feature selection methods in certain cases. Among the examined approaches, the mRMR variant that employs the corrected mutual information estimator (Corr-mRMR) exhibited the most stable performance across all datasets, achieving results that were either nearly identical to or better than those of the alternative methods.

The results presented in Table 4 show the performance of the examined mRMR variations combined with Decision Tree classifiers across seven benchmark datasets. In this setting, Corr-mRMR achieved the highest accuracy in four datasets (qsar, spambase, kc1 and pc1), while Original-mRMR (s = 0) performed best in bankrupt and magic, and Parzen-mRMR (s = 0) slightly outperformed the others in credit.

In most datasets, the performance differences between methods were modest, typically within 0.01–0.02. For example, in pc1, Corr-mRMR achieved 0.8518, closely followed by Parzen-mRMR (s = 0) with 0.8465 and Original-mRMR (s = 0) with 0.8456. Similarly, in credit, Parzen-mRMR (s = 0) achieved the highest accuracy (0.7702), differing by less than 0.01 from Corr-mRMR (0.7684) and Original-mRMR (s = 1) (0.7658).

However, clearer advantages for Corr-mRMR were observed in the qsar and spambase datasets, where it outperformed the other examined methods by approximately 0.025 and 0.021, respectively.

Notably, when compared directly with the mRMR variant employing the Parzen estimator (Parzen-mRMR (s = 0)), which is the configuration used in the study where mRMR was originally introduced, Corr-mRMR showed clear advantages in most of the datasets. In qsar, Corr-mRMR achieved 0.7514, compared with 0.7120 for Parzen-mRMR (s = 0), while in spambase the respective values were 0.7671 and 0.7167. Similarly, in magic, the corrected variant reached 0.7904, outperforming Parzen-mRMR (s = 0) at 0.7719, and in kc1 it obtained 0.8228 compared with 0.7991.

The results in Table 5 summarize the performance of the examined mRMR variants with Random Forest classifiers. Overall, the differences between methods are relatively modest, with accuracies generally within a 0.01–0.03 range. Corr-mRMR achieved the best performance in qsar, spambase and pc1, reaching 0.8351, 0.8395 and 0.8904, respectively, each representing an improvement of about 0.02 compared with the best performing of the other examined methods. In contrast, Original-mRMR (s = 0) was slightly superior in bankrupt (0.9596) and magic (0.8447), while Parzen-mRMR (s = 0) was the most accurate in the kc1 (0.8561) and credit (0.8088) datasets.

These findings suggest that no single method dominates across all datasets, though Corr-mRMR provided some measurable gains, particularly in qsar and spambase.

It is worth noting that in this setting, all the examined methods performed particularly well, as nearly all achieved accuracies equal to or above 0.80 across the benchmark datasets, with only minimal deviations observed for Original-mRMR (s = 0).

The results presented in Table 6 summarize the performance of the examined mRMR variations combined with K-Nearest Neighbors classifiers. Corr-mRMR attained the highest accuracy in four datasets (qsar, spambase, kc1 and pc1), while Original-mRMR (s = 0) performed best in bankrupt and magic, and Parzen-mRMR (s = 0) achieved the highest accuracy in credit.

Across most datasets, the observed differences were modest, typically below 0.015 in absolute accuracy. For example, in pc1, Corr-mRMR reached 0.8728, only slightly higher than Original-mRMR (s = 0) (0.8675), Parzen-mRMR (s = 1) (0.8684) and Original-mRMR (s = 1), which, at 0.8649, yielded the lowest accuracy among the examined methods. Similarly, in kc1, Corr-mRMR obtained 0.8421, compared with 0.8342 for Parzen-mRMR (s = 1).

More pronounced differences appeared in qsar and spambase. Specifically, Corr-mRMR achieved 0.7816 in qsar, compared with 0.7544 for Parzen-mRMR (s = 0), and 0.7930 in spambase, compared with 0.7667. These results indicate that adopting the corrected mutual information estimator can yield measurable gains, even when the improvements are numerically moderate.

Overall, these findings indicate that incorporating the corrected mutual information estimator into the mRMR framework is effective not only in controlled synthetic settings but also across a broad range of real-world applications. The results suggest that the observed benefits generalize beyond a specific domain or dataset.

4.1. Comparison with State-of-the-Art Feature Selection Methods

To further evaluate the Corr-mRMR method, we compared it against two well-established mutual information-based feature selection algorithms, CONMI_FS [46] and MIFS-ND [51].

All three methods were applied to the same benchmark datasets described in Section 4. In this case, the number of selected features was not fixed a priori. Instead, we adopted the intrinsic stopping criterion of CONMI_FS, as defined in its original description. Because CONMI_FS is the only one of the three methods that specifies a stopping criterion, we used the subset size it selected on each dataset as the target cardinality. MIFS-ND and Corr-mRMR were then constrained to return exactly that number of features. This ensured that all methods were compared using feature subsets of equal cardinality.
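The fixed-cardinality protocol can be illustrated with a minimal sketch of greedy mRMR in its common difference form (relevance minus mean redundancy), which simply stops once the requested number of features has been selected. This is an illustration under stated assumptions, not the study's implementation: the helper names `plugin_mi` and `mrmr_select` are hypothetical, the estimator is a plain histogram (plug-in) estimate on already discrete data, and the score is the textbook difference criterion rather than the study's own formulation.

```python
import math
from collections import Counter


def plugin_mi(x, y):
    """Plug-in (histogram) estimate of I(X; Y) in nats for two discrete sequences."""
    n = len(x)
    px, py, pxy = Counter(x), Counter(y), Counter(zip(x, y))
    return sum(
        (c / n) * math.log((c / n) / ((px[a] / n) * (py[b] / n)))
        for (a, b), c in pxy.items()
    )


def mrmr_select(features, target, k, mi=plugin_mi):
    """Hypothetical greedy mRMR (difference form); returns exactly k indices if available.

    Score of candidate j: I(f_j; C) minus its mean MI with the already selected features.
    Ties are broken in favor of the smallest index.
    """
    relevance = [mi(f, target) for f in features]
    selected, remaining = [], set(range(len(features)))

    def score(j):
        if not selected:  # first step: relevance only
            return relevance[j]
        redundancy = sum(mi(features[j], features[s]) for s in selected) / len(selected)
        return relevance[j] - redundancy

    while len(selected) < k and remaining:
        best = max(sorted(remaining), key=score)
        selected.append(best)
        remaining.remove(best)
    return selected


y = [0, 0, 0, 0, 1, 1, 1, 1]
f0 = [0, 0, 0, 1, 1, 1, 1, 1]   # informative about y
f1 = list(f0)                   # exact duplicate of f0 (redundant)
f2 = [0, 1, 0, 1, 0, 1, 0, 1]   # independent of y in this sample
print(mrmr_select([f0, f1, f2], y, k=2))  # [0, 2]
```

The duplicate `f1` is exactly as relevant as `f0`, but once `f0` is selected its redundancy penalty equals the full entropy of `f0`, so the weaker but non-redundant `f2` is preferred as the second feature.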

For CONMI_FS and MIFS-ND, algorithm parameters were set according to the values reported in their respective original studies, corresponding to configurations that achieved the best empirical performance. In particular, for CONMI_FS, the hyperparameter was fixed at , as this value yielded the best classification results in preliminary testing.

Classification performance was assessed using four classifiers: Logistic Regression (LR), Decision Tree (DT), Random Forest (RF) and K-Nearest Neighbors (KNN), each evaluated with 10-fold cross-validation.

The classification results obtained for all datasets and classifiers are summarized in Table 7, Table 8, Table 9 and Table 10. For each dataset, the mean accuracy and standard deviation are reported, with the highest value in each row highlighted in bold.
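Reporting a mean accuracy and standard deviation per dataset corresponds to aggregating the per-fold accuracies of 10-fold cross-validation. The sketch below shows that aggregation with a small pure-Python k-nearest-neighbors classifier. The shuffled (non-stratified) fold assignment, `k=3`, the use of the sample standard deviation and the function names `knn_predict` and `cross_validate` are all assumptions, since the text does not specify them.

```python
import random
import statistics
from collections import Counter


def knn_predict(train_X, train_y, x, k=3):
    """Majority vote among the k training points closest to x (squared Euclidean distance)."""
    nearest = sorted(
        zip((sum((a - b) ** 2 for a, b in zip(row, x)) for row in train_X), train_y),
        key=lambda pair: pair[0],
    )[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


def cross_validate(X, y, n_folds=10, seed=0, predict=knn_predict):
    """Shuffled k-fold cross-validation; returns (mean, sample std) of per-fold accuracy."""
    idx = list(range(len(X)))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::n_folds] for i in range(n_folds)]  # assumes len(X) >= n_folds
    accuracies = []
    for test_idx in folds:
        held_out = set(test_idx)
        train = [i for i in idx if i not in held_out]
        train_X, train_y = [X[i] for i in train], [y[i] for i in train]
        correct = sum(predict(train_X, train_y, X[i]) == y[i] for i in test_idx)
        accuracies.append(correct / len(test_idx))
    return statistics.mean(accuracies), statistics.stdev(accuracies)


# Two well-separated clusters of 20 points each.
X = [(i * 0.01, 0.0) for i in range(20)] + [(10 + i * 0.01, 10.0) for i in range(20)]
y = [0] * 20 + [1] * 20
print(cross_validate(X, y))  # (1.0, 0.0)
```

Any function with the same signature can be passed as `predict`. In a leakage-free setup, feature selection would likewise be performed on the training folds only.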

Only four out of the seven datasets examined in the case study are reported here. In the remaining three datasets (kc1, pc1 and credit), the stopping criterion of CONMI_FS halted the feature selection process when the subset contained a single feature. In these cases, all three methods selected the same feature, resulting in identical classification performance. Therefore, the corresponding results are omitted for brevity.

The results in Table 7 present the classification performance of CONMI_FS, MIFS-ND and Corr-mRMR when combined with Logistic Regression. Among the four datasets considered, Corr-mRMR achieved the highest accuracy in two cases (bankrupt and magic) and CONMI_FS in two cases (qsar and spambase), while MIFS-ND did not achieve the highest accuracy in any dataset.

In bankrupt, Corr-mRMR obtained an accuracy of 0.9640, slightly higher than MIFS-ND (0.9588) and CONMI_FS (0.9509). In magic, Corr-mRMR again outperformed the other two methods, achieving 0.7991 compared with 0.7658 for both CONMI_FS and MIFS-ND. In qsar, CONMI_FS led with 0.8113, marginally surpassing MIFS-ND (0.8076) and showing a clearer advantage over Corr-mRMR (0.7754). For spambase, all three methods achieved relatively high performance, with CONMI_FS obtaining the highest accuracy (0.9123), exceeding MIFS-ND (0.9088) by 0.0035 and Corr-mRMR (0.8807) by 0.0316.

The results in Table 8 show the classification performance of CONMI_FS, MIFS-ND and Corr-mRMR when combined with a Decision Tree classifier. As with Logistic Regression, the methods performed relatively closely across all datasets.

CONMI_FS achieved the highest accuracy in three out of the four datasets (bankrupt, qsar and spambase), while Corr-mRMR led in one case (magic). In bankrupt, CONMI_FS obtained 0.9456, exceeding MIFS-ND (0.9421) by 0.0035 and Corr-mRMR (0.9316) by 0.0140. In qsar, CONMI_FS reached 0.7735, which was 0.0161 higher than MIFS-ND and 0.0427 higher than Corr-mRMR. In spambase, CONMI_FS scored 0.8904, ahead of MIFS-ND (0.8798) and Corr-mRMR (0.8561). The magic dataset was the only case where Corr-mRMR achieved the highest performance (0.7553), outperforming CONMI_FS (0.7246) by 0.0307 and MIFS-ND (0.7298) by 0.0255.

The results in Table 9 show the classification performance of CONMI_FS, MIFS-ND and Corr-mRMR when combined with a Random Forest classifier. In bankrupt, all three methods achieved very similar performance, with MIFS-ND obtaining the highest accuracy (0.9570), marginally surpassing CONMI_FS (0.9561) by 0.0009 and Corr-mRMR (0.9491) by 0.0079. A similar pattern was observed in spambase, where Corr-mRMR reached 0.9053, compared with 0.9193 for MIFS-ND and 0.9246 for CONMI_FS; the maximum difference between methods in this dataset was 0.0193.

In contrast, more pronounced differences were observed in qsar and magic. In qsar, MIFS-ND achieved 0.8179, outperforming CONMI_FS (0.7838) by 0.0341 and Corr-mRMR (0.7536) by 0.0643. In magic, Corr-mRMR obtained the highest accuracy (0.8070), exceeding CONMI_FS (0.7570) by 0.0500 and MIFS-ND (0.7509) by 0.0561.

The results in Table 10 indicate that, for the K-Nearest Neighbors classifier, Corr-mRMR achieved the highest accuracy in two of the four examined datasets (bankrupt and magic), while CONMI_FS led in the remaining two (qsar and spambase). In bankrupt, the differences among the three methods were minimal, with all accuracies within 0.005 of each other. Similarly, in spambase, CONMI_FS outperformed the other two methods by small margins of less than 0.021. More pronounced differences occurred in qsar and magic. In qsar, CONMI_FS reached 0.8218, outperforming MIFS-ND and Corr-mRMR by 0.0105 and 0.0606, respectively. The magic dataset presented the largest difference observed across all experiments in this study, with Corr-mRMR achieving 0.8500 and surpassing both CONMI_FS and MIFS-ND (0.7667 each) by 0.0833.

Overall, no single feature selection method achieved the highest accuracy across all classifiers and datasets. In some cases, the differences were minimal; in bankrupt, for example, each of the examined methods achieved the best performance with at least one classifier. In spambase, CONMI_FS consistently achieved the highest accuracy, but only by small margins over the other methods. For qsar, the best-performing method varied between MIFS-ND and CONMI_FS depending on the classifier, while in magic, Corr-mRMR consistently produced the highest accuracy, with the largest margins observed in the study. These findings indicate that the proposed correction to the mutual information estimator enables Corr-mRMR to remain competitive with, and on some datasets exceed, strong and well-established feature selection approaches.

4.2. Impact of the Corrected Mutual Information Estimator on CONMI_FS

To further evaluate the impact of the mutual information estimator on feature selection performance, we extended our analysis to the CONMI_FS algorithm. Because CONMI_FS employs the same mutual information estimator as the one originally used in mRMR, this provided an opportunity to examine whether substituting it with the bias-corrected version would yield notable changes in the results. In CONMI_FS, the data are discretized prior to feature selection because the normalized mutual information term in its criterion requires discrete inputs. Specifically, the Equal-Width discretization method is used to divide the range of each continuous feature into equal intervals, as described in the original study.
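Equal-width discretization, as used before the mutual information terms are computed, can be sketched in a few lines. The number of bins used by CONMI_FS is not given here, so `n_bins` and the function name `equal_width_bins` are hypothetical; the clamp ensures that the maximum value falls into the last bin rather than creating an extra one.

```python
def equal_width_bins(values, n_bins):
    """Assign each value an integer bin in [0, n_bins - 1] using equal-width intervals."""
    lo, hi = min(values), max(values)
    if hi == lo:  # constant feature: everything falls in a single bin
        return [0] * len(values)
    width = (hi - lo) / n_bins
    # Clamp so that the maximum value lands in the last bin rather than in bin n_bins.
    return [min(int((v - lo) / width), n_bins - 1) for v in values]


print(equal_width_bins([0.0, 0.5, 2.5, 5.0, 9.9, 10.0], 4))  # [0, 0, 1, 2, 3, 3]
print(equal_width_bins([1, 2, 3, 4, 100], 4))                # [0, 0, 0, 0, 3]
```

The second call shows a known weakness of equal-width binning: a single outlier stretches the range so that the remaining values collapse into one bin, which directly changes any mutual information estimated from the binned data.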

Although CONMI_FS integrates Pearson’s correlation coefficient with mutual information in its criterion, which may limit the influence of the mutual information estimator, we considered it relevant to assess whether the corrected estimator could still have a measurable effect. For this comparison, we evaluated the original CONMI_FS alongside a variant (CONMI_Cormut) in which the corrected estimator of mutual information was applied, using the same datasets as in the previous experiments. In this case, however, both methods were allowed to operate with their intrinsic stopping criteria, determining the number of selected features independently. Since the CONMI_FS scoring formula subtracts redundancy from relevance and relies on normalized rather than unnormalized mutual information, the expected impact of estimation bias is somewhat smaller than in the mRMR setting.

Table 11 summarizes, for each dataset, the number of features selected by CONMI_FS and CONMI_Cormut, together with the corresponding classification accuracy obtained using the Random Forest classifier.

The results in Table 11 indicate that the bias-corrected variant of CONMI_FS achieved higher accuracy in bankrupt, magic and kc1, with the largest improvement observed for kc1 despite both methods selecting a single feature. In contrast, the original method performed better in qsar, spambase, pc1 and credit, with the largest difference occurring in credit, where CONMI_Cormut selected six features compared to a single feature for CONMI_FS.

Differences in the number of selected features varied considerably across datasets. For example, in bankrupt and spambase, CONMI_Cormut selected more than twice as many features as CONMI_FS without a proportional gain in accuracy, whereas in magic and kc1 the subset size was identical for both methods, suggesting that the observed accuracy differences in these cases were most likely attributable to the estimator change.

As noted earlier, mutual information carries less weight in the CONMI_FS formulation, because it is normalized, combined with Pearson correlation and used in a criterion that subtracts redundancy from relevance; this reduces the expected effect of replacing the estimator. The present results are consistent with this expectation, while also showing that such a modification can still produce measurable changes in certain scenarios.

Since CONMI_FS also relies primarily on the same mutual information estimator used in our mRMR variants, we found it worthwhile to investigate whether the bias-corrected version could lead to different outcomes in this context. This additional analysis was intended not only to assess its effect here, but also to encourage further examination of such adjustments in other feature selection methods, where the impact might be more pronounced.

5. Discussion

The primary objective of this study was not to identify the most accurate version of the mRMR algorithm, but rather to investigate how the choice of mutual information estimator affects the performance of this widely used feature selection method. To this end, we evaluated five mRMR variants on both synthetic and real data: four based on two different estimators (a discretization-based estimator and a Parzen KDE-based estimator), each with or without a denominator correction (s = 0 or s = 1), and one based on the corrected mutual information estimator (Corr-mRMR).

Although the original mutual information estimator used in this study is not considered optimal compared with certain non-parametric alternatives, such as k-nearest neighbors or B-spline-based approaches (see Introduction, Section 1), it was chosen because of biases observed in prior work and the existence of a proposed correction. The corrected estimator was integrated into the mRMR framework to evaluate whether mitigating this bias improves selection outcomes.

Overall, the results from the simulation study indicate that the correction has a clear positive effect, especially in more complex systems involving nonlinear dependencies or redundant features. In simpler systems, where the functionally related features were strongly or moderately correlated with the class variable, all methods achieved near-perfect performance. These results were also supported by the use of a slightly modified mRMR formulation (Equation (2)) that places more emphasis on relevance than redundancy (see Section 2.4).

For systems such as System 3, we deliberately set the size of the optimal feature subset to include all functionally related features, even though only two were sufficient to capture the underlying information, in order to test whether the methods would prioritize redundant-but-relevant features over completely irrelevant ones. This choice reflects practical use cases in which the user predefines the number of features, so that how a method behaves in such situations directly determines the selected subset.

An interesting observation is that the Parzen-based variants performed consistently well across all systems, even in the presence of redundancy and nonlinearity. This suggests that density-based mutual information estimation may offer greater robustness than discretization-based techniques, particularly in more realistic data scenarios. Corr-mRMR, which employs the corrected estimator and in the denominator, approached the performance of the Parzen-based methods in most cases, often surpassing both uncorrected versions (s = 0 and s = 1) and offering a strong compromise between computational simplicity and selection accuracy.
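To make the contrast with discretization concrete, the following is a minimal sketch of one common Parzen-window formulation for a continuous feature and a discrete class, I(X; C) = H(C) - H(C | X), where the conditional class probabilities at each sample are ratios of Gaussian kernel sums. It is not necessarily the estimator used in this study; the function name `parzen_mi` and the Silverman rule-of-thumb bandwidth are assumptions.

```python
import math
from collections import Counter


def parzen_mi(x, c, h=None):
    """Parzen-window estimate (nats) of I(X; C) for continuous x and discrete labels c.

    Uses I(X; C) = H(C) - H(C | X), where p(c | x_j) is a ratio of Gaussian kernel sums.
    """
    n = len(x)
    if h is None:
        # Silverman's rule of thumb (an assumed default, not a setting from the study)
        mean = sum(x) / n
        sd = math.sqrt(sum((v - mean) ** 2 for v in x) / (n - 1))
        h = 1.06 * sd * n ** (-0.2) if sd > 0 else 1.0
    counts = Counter(c)
    h_c = -sum((m / n) * math.log(m / n) for m in counts.values())
    h_c_given_x = 0.0
    for xj in x:
        # Kernel normalizing constants cancel in the ratio below, so they are omitted.
        w = [math.exp(-((xj - xi) ** 2) / (2 * h * h)) for xi in x]
        total = sum(w)  # >= 1, because x_j is its own neighbor
        for label in counts:
            p = sum(wi for wi, ci in zip(w, c) if ci == label) / total
            if p > 0:
                h_c_given_x -= p * math.log(p) / n
    return h_c - h_c_given_x


x = [0.0, 0.1, 0.2, 0.3, 5.0, 5.1, 5.2, 5.3]
print(parzen_mi(x, [0, 0, 0, 0, 1, 1, 1, 1]))  # large: classes separated along x
print(parzen_mi(x, [0, 1, 0, 1, 0, 1, 0, 1]))  # near zero: labels alternate
```

No bins are involved, so the estimate does not depend on where bin edges fall; the price is a bandwidth choice and an O(n²) kernel evaluation per feature.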

It should also be noted that, in simulations with the aforementioned setup, the uncorrected mRMR appeared to be more accurate than the corrected version for systems in which some of the predictors were only weakly correlated with the class variable (e.g., ), and also when the coefficients of the functionally related features did not all share the same sign and their sum tended to zero.

It should also be noted that the corrected estimator is substantially slower than the original discretization-based version, since it requires computing the mutual information once on the original data and then repeatedly (in our case, 100 times) on surrogate datasets, that is, 101 estimator evaluations for each mutual information value instead of one. This leads to a considerably higher runtime than the original method. However, the main aim of this study was not to propose the fastest estimator, but rather to show how even a small correction to the mutual information estimation process can lead to markedly different mRMR outcomes. The computational overhead of the corrected estimator should therefore be viewed as secondary to this conceptual point.
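The cost structure described above (one estimate on the data plus one per surrogate) can be illustrated with a minimal sketch. It assumes a common surrogate-based form of the correction, namely the plug-in estimate minus its mean over surrogates in which one variable is randomly permuted; the exact correction used in the study may differ, and the names `plugin_mi` and `surrogate_corrected_mi` are hypothetical.

```python
import math
import random
from collections import Counter


def plugin_mi(x, y):
    """Plug-in (histogram) estimate of I(X; Y) in nats for two discrete sequences."""
    n = len(x)
    px, py, pxy = Counter(x), Counter(y), Counter(zip(x, y))
    return sum(
        (c / n) * math.log((c / n) / ((px[a] / n) * (py[b] / n)))
        for (a, b), c in pxy.items()
    )


def surrogate_corrected_mi(x, y, n_surrogates=100, seed=0):
    """Plug-in MI minus its mean over shuffled surrogates (assumed correction form).

    Costs 1 + n_surrogates estimator calls, versus a single call for the plug-in estimate.
    """
    rng = random.Random(seed)
    observed = plugin_mi(x, y)
    shuffled = list(x)
    surrogate_sum = 0.0
    for _ in range(n_surrogates):
        rng.shuffle(shuffled)  # destroys x-y dependence while keeping both marginals
        surrogate_sum += plugin_mi(shuffled, y)
    return observed - surrogate_sum / n_surrogates


dependent = [0, 1, 2, 3] * 10
print(plugin_mi(dependent, dependent))  # ln 4, about 1.386
print(surrogate_corrected_mi(dependent, dependent))
independent_x, independent_y = [0, 1] * 20, [0, 0, 1, 1] * 10
print(plugin_mi(independent_x, independent_y))  # 0.0: exactly independent sample
print(surrogate_corrected_mi(independent_x, independent_y))  # negative
```

Permuting only one variable keeps both marginal distributions intact, so the surrogate mean approximates the positive bias the plug-in estimator shows under independence. As the last call shows, the corrected value can fall below zero when the observed dependence is weaker than that bias.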

In addition to the effect of sample size, which was discussed in Section 2.3.2, dimensionality is another important factor that may influence the performance of mutual information estimators. In very high-dimensional feature spaces, sparsity can reduce the reliability of probability estimates, potentially affecting the stability of mRMR outcomes. A more systematic investigation of this aspect could be pursued in future work.
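The sparsity argument can be made more concrete with the standard leading-order bias approximation for plug-in (histogram) estimates, which is general and not specific to the estimators compared here. With N samples, the plug-in entropy of a variable occupying B cells is biased downward by roughly (B - 1)/(2N); combining the three entropy terms of mutual information gives an upward bias that grows with the number of joint cells. The numerical example uses hypothetical values (B = 10 bins per feature, two classes, N = 1000).

```latex
\hat{I}(X;Y) = \hat{H}(X) + \hat{H}(Y) - \hat{H}(X,Y), \qquad
\mathbb{E}\big[\hat{H}\big] - H \approx -\frac{B - 1}{2N}

\Rightarrow\;
\mathbb{E}\big[\hat{I}(X;Y)\big] - I(X;Y) \approx \frac{B_{XY} - B_X - B_Y + 1}{2N}
= \frac{(B_X - 1)(B_Y - 1)}{2N} \quad \text{when all } B_X B_Y \text{ joint cells are occupied}

% A joint variable of d features, each discretized into B bins, has B_X = B^d.
% B = 10, B_Y = 2, N = 1000:
%   d = 1:  (10 - 1)(2 - 1)/2000   = 0.0045 nats
%   d = 3:  (1000 - 1)(2 - 1)/2000 = 0.4995 nats
```

For d = 3 the number of joint cells already exceeds N, so the approximation itself is no longer reliable; this breakdown is precisely the sparsity regime in which probability estimates become unstable.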

Finally, the results from the case study further support the simulation findings, showing that the mRMR variant employing the corrected mutual information estimator consistently provided competitive and often superior classification performance across diverse real-world datasets. While the Parzen-based and Original-mRMR variants also performed strongly, Corr-mRMR frequently achieved the highest accuracies, particularly in datasets such as qsar and spambase, where its gains were largest. These results reinforce the conclusion that the choice of mutual information estimator, and even relatively small adjustments to its formulation, can have a measurable impact on feature selection outcomes, especially in scenarios involving complex feature dependencies.

From a practical perspective, the findings of this study highlight that the choice of mutual information estimator can substantially influence the outcome of mRMR-based feature selection, even when all other parameters are kept constant. It is therefore important to consider not only the feature selection framework itself but also the underlying estimator used to quantify dependencies.

Overall, the results suggest that aligning the choice of estimator with the characteristics of the dataset and the resource constraints of the task can lead to more effective and reliable feature selection in practice.