Abstract

Historically regarded as one of the most challenging tasks on the path to complete artificial intelligence (AI), machine translation (MT) has received sustained research attention over the past decade, resulting in cutting-edge architectures for modeling sequential information. While most statistical models traditionally relied on learning from parallel translation examples, recent research on self-supervised and multi-task learning methods has extended the capabilities of MT models, eventually allowing the creation of general-purpose large language models (LLMs). In addition to their versatility in providing translations across languages and domains, LLMs can in principle perform any natural language processing (NLP) task given a sufficient number of task-specific examples. While LLMs have now reached a point where they can both replace and augment traditional MT models, the extent of their advantages and the ways in which they leverage translation capabilities across multilingual NLP tasks remain a wide area for exploration. In this literature survey, we introduce the current state of MT research with a historical look at different modeling approaches to MT, how these might be advantageous for solving particular problems, and which problems have been solved or remain open in light of recent developments. We also discuss how MT models led to the development of prominent LLM architectures, how they continue to support LLM performance across different tasks by providing a means for cross-lingual knowledge transfer, and how the task is being redefined by the possibilities that LLM technology brings.

1. Introduction

Machine translation (MT) is the task of automatically translating text or speech in one language to another, and it has an extensive range of applications in business localization, diplomatic communications or content creation for media and educational resources. Having established itself as one of the most challenging tasks in artificial intelligence (AI) [1], MT has been the primary application that has driven research on architecture and model development [2,3,4,5,6,7,8,9,10,11,12,13,14,15,16,17,18,19,20], ultimately allowing the emergence of large-scale generally applicable generative language models, also known as large language models (LLMs). These models, with their ability to understand and generate human-like text, have revolutionized various domains, from automated customer service and content creation to complex problem-solving and interactive applications, reshaping the landscape of AI and its applications across industries.

Although they enable a wide range of new applications, prominent LLMs build on the self-attention model [5], which was originally designed for the task of translation. In this novel setting, MT between a pair of languages is only one of many tasks that an LLM is trained to solve. This raises interesting new questions about how knowledge might be represented and shared across languages, domains and even modalities in solving various problems, and about how LLMs can help redefine the task of MT.

In this paper, we aim to reposition the study of MT in relation to state-of-the-art advances in language modeling and present various approaches for building MT systems in historical context, with a focus on how different hypotheses about the translation task shaped different modeling approaches, which led to both solutions and new problems. We discuss how early work in MT grappled with the foundational challenge of modeling language as a deeply structured, hierarchical system, seeking models that could capture latent syntactic and semantic abstractions for robust generalization. We then trace the rise of statistical methods, which shifted the focus toward empirical performance, often dismissing linguistic theory, and led to an era that questioned the necessity of explicit linguistic knowledge and favored data-driven correlations over structured representations. With neural models, and especially LLMs, new research questions have emerged: how does a model’s capacity to interpret prompts influence translation performance, is genuine language understanding even necessary for effective translation, and can task competence emerge from in-context or self-supervised learning alone? These transitions mark a shift from hand-crafted rules, to statistical patterns, to implicit learning, each phase reframing the core hypotheses about what successful translation demands.

After three decades, we have reached an important milestone where MT can finally serve as a human-aided translation tool due to recent advances. We discuss the remaining challenges of using ad hoc methodologies in building and evaluating translation systems for real-life scenarios. Our survey presents a comprehensive collection of problems and limitations across different historical approaches to MT. We identify key issues that remain to be solved and future research directions. These include applicability across languages, domain adaptation, biases, and system evaluation. We also explore theoretical and practical considerations for integrating LLMs into MT systems, focusing on new applications such as fine-grained evaluation and stylistic personalization of translations.

2. Machine Translation

2.1. Historical Approaches

Traditional attempts to solve the MT task have generally adopted a two-fold process: first analyzing the source language to extract its meaning, and then synthesizing its semantic equivalent in the target language. The transfer of source meaning into the target language has been modeled using one of three major approaches that are quite distinct [21] and that, in terms of linguistic and structural comprehensiveness, could in many ways be considered complementary to each other.

The most widely adopted approach, which also enabled the success of statistical models, is the direct transfer approach. It assumes that translation can be solved through lexical mapping, or alignment, of a set of words between a pair of sentences in two different languages. The direct model regards sentences as sequences of words, treating words as atomic units, and implements translation as a joint analysis of the source sentence and synthesis of the target sentence, relying on a set of lexical translation and reordering rules. In early rule-based systems [22], such rules would be derived from expert-annotated dictionaries and grammars, which may broadly represent syntactic, semantic, or idiomatic reordering rules for translating a sentence between a pair of languages. However, initial attempts at implementing MT with the direct transfer approach mainly succeeded in revealing the inherent complexity of language. The main limitation of rule-based direct translation methods was the intractability of the annotations required to build refined dictionaries and reordering rules, especially considering the theoretically infinite capacity of humans to construct phrases and utterances in any given language [23], in addition to the complexity that arises from neglecting morphology, that is, subword-level transformations and their effect on syntax. Nevertheless, as preliminary approaches, they produced initial results and drew more interest and attention to MT research.

As a more computationally efficient alternative, subsequent studies proposed extracting syntactic structures during source and target language analysis and hierarchically aligning the two languages during translation. The syntactic transfer model treats a sentence as a structure rather than as a linear string of words, as in the direct model, and finds a structure in the target language that can represent the same meaning. Accordingly, the cost of annotating and searching for sentences in the source language and their translations in the target language is reduced to a finite number of equivalent structures in the two languages. The syntactic model offers a high level of flexibility by not restricting the structures in the two sentences to be exactly symmetric; instead, language-specific properties and variations in expressivity across languages are also taken into account, making this approach in principle capable of achieving syntactic generalization or rephrasing during translation. On the other hand, developing and maintaining a framework for accurately and coherently representing structural dependencies for many languages proved to be quite challenging.

The third approach, interlingua, or translation by transfer in the universal meaning space, maps source sentences to an intermediate layer of language-agnostic semantic-syntactic representations. These representations comprise predefined symbols designed to express any pragmatic, cultural, and syntactic concepts that enable translation across different languages. The interlingual model was developed to further offer an economical way for developing translation systems that support more than a single translation direction [24]. While the difficulty of designing a comprehensive interlingua is evident, the concept would be revisited in the development of multilingual MT models [25].

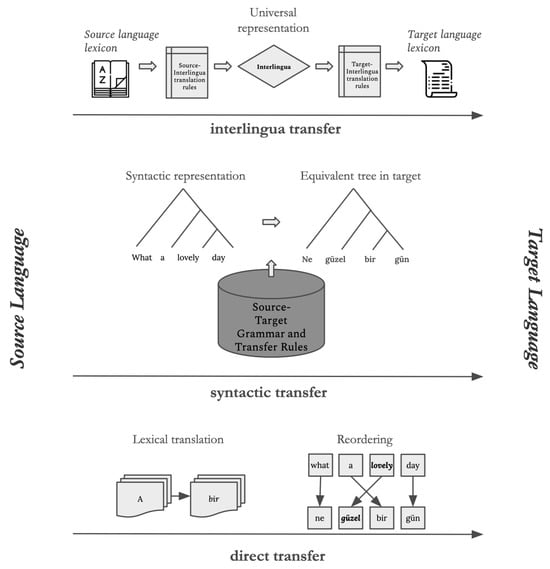

As the first substantial attempt at the non-numerical use of computers, MT received great attention and raised high expectations of the technology. As can be seen in Figure 1, the three main methods for modeling the MT task are quite distinct, and they have been found to have different advantages and disadvantages depending on the type of language and the availability of resources. Many under-resourced languages continue to rely on rule-based systems for MT because sufficient training resources are lacking [26,27,28,29,30,31,32,33,34,35,36]. Still, the large amount of human labor required to prepare expert-annotated dictionaries and grammatical rules for rule-based systems motivates the paradigm shift toward statistical methods for learning translation, which in the ideal case could yield MT models that extract the rules of language from examples and generalize them to translate previously unseen lexical or grammatical forms. On the other hand, achieving true generalization may also require a more comprehensive framework for modeling language and its often irregular grammatical structures, which may not be possible relying solely on statistical methods.

Figure 1. Translation of the English sentence "What a lovely day." into Turkish ("Ne güzel bir gün.") using the three main approaches to machine translation.

Language is far more than a collection of lexical entries or syntactic rules; it is a deeply structured, hierarchical system shaped by layers of meaning, context, and transformation. Despite significant advances in machine translation, current approaches continue to reveal the complexity of language as a cognitive and communicative phenomenon. Many of its fundamental properties remain elusive, particularly when it comes to modeling the abstractions and structures that underlie linguistic generalization. This underscores a central challenge at the core of early MT research: how can we design models that not only process language data, but also learn its latent, hierarchical organization in a way that enables robust and generalizable translation?

2.2. Word-Based Statistical Machine Translation

As with the statistical modeling of any task, the most important constraint is the availability of task-specific examples to guide the learning process. In the case of MT, the most useful and predominantly used resource has always been parallel data: collections of translations between a pair of source and target languages aligned at the sentence level. Modeling translation directly at the sentence level naturally encouraged statistical models to adopt the direct transfer approach.

While the direct transfer method may not have been ideal for rule-based MT, it was an attractive basis for a first statistical MT method: since it makes no inherent linguistic assumptions, it could in principle be applied to any language.

The IBM statistical word-based machine translation approach [37] was a groundbreaking development in machine translation that viewed translation as a probabilistic process. By using parallel text corpora (texts in two languages), the system learns to map words from a source language to a target language based on statistical patterns and probabilities. At its core, the approach relies on word alignment, which establishes connections between words in the source and target languages by calculating the likelihood that they are translations of each other. These alignments are learned through an iterative process using the expectation–maximization (EM) algorithm [38], which progressively refines the alignment probabilities to find the most likely word pairs across languages. The system combines three key components: a translation model that determines word correspondences between languages, a language model that ensures grammatical correctness in the output, and an alignment model that handles word reordering to account for different word order patterns between languages. While relatively simple by today’s standards, as it focuses on word-to-word translations rather than handling more complex linguistic structures, this approach laid the mathematical and conceptual foundation for modern machine translation systems [39].
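The EM-based estimation of word translation probabilities described above can be illustrated with a minimal sketch in the spirit of the simplest IBM model. It is not the implementation of [37]: the function name `train_ibm_model1`, the toy corpus, the absence of a NULL word and the uniform initialisation are all assumptions made for brevity, and the language model and reordering components are omitted.

```python
from collections import defaultdict


def train_ibm_model1(corpus, iterations=10):
    """Estimate lexical translation probabilities t(target | source) with EM.

    corpus: list of (source_tokens, target_tokens) sentence pairs.
    Returns a dict mapping (target_word, source_word) -> probability.
    Simplifying assumptions: no NULL word, all alignments equally likely a priori.
    """
    src_vocab = {s for src, _ in corpus for s in src}
    tgt_vocab = {t for _, tgt in corpus for t in tgt}
    # Uniform initialisation over the target vocabulary.
    t = {(tw, sw): 1.0 / len(tgt_vocab) for tw in tgt_vocab for sw in src_vocab}

    for _ in range(iterations):
        count = defaultdict(float)  # expected count of (target, source) links
        total = defaultdict(float)  # expected count of links per source word
        # E-step: distribute each target word over the source words of its sentence.
        for src, tgt in corpus:
            for tw in tgt:
                norm = sum(t[(tw, sw)] for sw in src)
                for sw in src:
                    frac = t[(tw, sw)] / norm
                    count[(tw, sw)] += frac
                    total[sw] += frac
        # M-step: re-normalise the expected counts into probabilities.
        for (tw, sw) in t:
            t[(tw, sw)] = count[(tw, sw)] / total[sw]
    return t


# Hypothetical three-sentence German-English corpus.
toy_corpus = [
    ("das haus".split(), "the house".split()),
    ("das buch".split(), "the book".split()),
    ("ein buch".split(), "a book".split()),
]
probs = train_ibm_model1(toy_corpus, iterations=10)
print(round(probs[("the", "das")], 3), round(probs[("house", "das")], 3))
```

On this toy corpus, the probability mass for "das" gradually concentrates on "the", since "the" is the only target word that co-occurs with "das" in both of its sentences.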

2.3. Phrase-Based Machine Translation

Phrase-based machine translation (PBMT) [40,41,42,43] extends word-based MT by leveraging word alignments to learn larger translation units. While it starts with basic word-level alignments between source and target sentences, PBMT extracts consistent phrases (word sequences) from these alignments to build a phrase translation table that captures many-to-many word relationships and common expressions. This approach is enhanced by a distortion model that handles phrase reordering in the target language, typically using distance-based scoring to penalize large positional jumps [44,45,46,47,48,49,50,51]. This evolution from word-based MT offers several advantages: better handling of local context, improved translation of idiomatic expressions, and more natural phrase ordering in the output, while still maintaining the fundamental concepts of alignment and probabilistic translation modeling.
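As a rough illustration of how phrase pairs can be read off a word alignment, the sketch below extracts all phrase pairs that are consistent with a given alignment, meaning that no word inside the pair is aligned to a word outside it. It is a simplified variant under stated assumptions: the names `extract_phrases` and `distortion_penalty`, the hand-written alignment for the sentence in Figure 1, and the exponential penalty with base `alpha` are illustrative choices, and the extension of phrases over unaligned words is left out.

```python
def extract_phrases(src, tgt, alignment, max_len=4):
    """Collect phrase pairs consistent with a word alignment.

    alignment: set of (source_index, target_index) links.
    A pair is kept only if every link touching its target span
    starts inside its source span.
    """
    phrases = set()
    for s_start in range(len(src)):
        for s_end in range(s_start, min(s_start + max_len, len(src))):
            # Target positions linked to the current source span.
            tgt_points = [j for (i, j) in alignment if s_start <= i <= s_end]
            if not tgt_points:
                continue  # skip unaligned source spans in this simplified version
            t_start, t_end = min(tgt_points), max(tgt_points)
            if t_end - t_start >= max_len:
                continue  # target side would exceed max_len words
            consistent = all(
                s_start <= i <= s_end
                for (i, j) in alignment
                if t_start <= j <= t_end
            )
            if consistent:
                phrases.add((" ".join(src[s_start:s_end + 1]),
                             " ".join(tgt[t_start:t_end + 1])))
    return phrases


def distortion_penalty(prev_end, next_start, alpha=0.5):
    """Distance-based reordering score: 1.0 for monotone order, smaller for jumps."""
    return alpha ** abs(next_start - prev_end - 1)


src = "what a lovely day".split()
tgt = "ne güzel bir gün".split()
# Hypothetical manual alignment: what-ne, a-bir, lovely-güzel, day-gün.
alignment = {(0, 0), (1, 2), (2, 1), (3, 3)}
for pair in sorted(extract_phrases(src, tgt, alignment)):
    print(pair)
```

Because "a" and "lovely" swap places in Turkish, the pair ("a lovely", "güzel bir") is extracted as a unit, while "lovely day" has no consistent counterpart; this is how phrase tables absorb local reordering without an explicit rule.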

Although it was a modest step towards more linguistically coherent processing of language, PBMT still lacked a general grammatical conceptualization: it disregarded the wider context of the source sentence and therefore could not capture long-range dependencies. This led to some of the well-known problems with PBMT, such as mistranslation of infrequent idiomatic expressions, word-order errors between distant languages, and difficulty in handling syntactic and semantic nuances [52].

2.4. Neural Machine Translation

With the advent of sequence-to-sequence learning models, modeling the translation probability of entire sentences became tractable [2,53]. The principle of translation in the neural MT (NMT) model is to learn representations of words and phrases in a vector space, using neural networks (NNs) to compute the probability of generating specific translation sequences based on these learned representations. In NMT, each word from the source sentence is first converted into a one-hot vector based on its unique vocabulary ID, which is then transformed into a dense vector representation (embedding) in a continuous space. The encoder, implemented as a recurrent neural network (RNN), processes these embeddings sequentially to create a comprehensive representation of the entire source sentence. Subsequently, another RNN, known as the decoder, generates the target language translation by considering both the encoded source sentence representation and the previously generated target words, effectively learning the relationships between the source and target languages. The overall network is trained to maximize the log-likelihood of a parallel training corpus via stochastic gradient descent [54] and the back-propagation through time algorithm [55]. Initially, NMT relied on a fixed context vector, which was a single compressed representation of the entire source sentence learned by the encoder RNN. This context vector was used to initialize the decoder for generating the complete translation. However, this approach proved limiting, as it forced the model to compress all source sentence information into a single fixed-length vector. A significant advancement came with the introduction of the attention mechanism, which dynamically computes a different context vector at each decoding step.
This attention-based approach allows the decoder to selectively focus on different parts of the source sentence that are most relevant for generating each target word, effectively creating a more flexible and context-aware translation process [3,6]. Despite the overwhelming success of attention-based NMT, training recurrent models was quite costly, which created a major obstacle to scaling to larger and thus better models. Vaswani et al. [5] revolutionized NMT with the Transformer architecture, replacing the sequential processing of recurrent neural networks with parallel computation through self-attention mechanisms. Unlike RNNs that process words sequentially, the Transformer can model relationships between all words simultaneously, while maintaining word order information through positional embeddings. This design enables both more efficient parallel processing and better handling of long-distance dependencies.
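To make the idea of a per-step context vector concrete, the following sketch computes scaled dot-product attention in plain Python. It is a simplified illustration rather than the full Transformer layer of [5]: the learned query, key and value projections, multiple heads and positional information are omitted, and the helper names and the toy input vectors are assumptions.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    norm = sum(exps)
    return [e / norm for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention.

    Each query yields a weight distribution over all keys and a context
    vector that is the weighted average of the corresponding values.
    """
    d_k = len(keys[0])
    contexts, weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        w = softmax(scores)
        context = [sum(wj * v[c] for wj, v in zip(w, values))
                   for c in range(len(values[0]))]
        contexts.append(context)
        weights.append(w)
    return contexts, weights


def self_attention(embeddings):
    """Self-attention without learned projections (an assumption for brevity)."""
    return attention(embeddings, embeddings, embeddings)


# Three hypothetical 2-dimensional token embeddings.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
contexts, weights = self_attention(tokens)
for row in weights:
    print([round(x, 3) for x in row])
```

Every row of `weights` is computed independently of the others, which is what allows all positions to be processed in parallel instead of step by step as in an RNN.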

While early statistical MT approaches debated whether to model translation at the level of words or phrases, NMT pointed in the opposite direction: subword units. This was mainly due to the fundamental design of the model, which requires learning embeddings for a fixed-size vocabulary of words. In addition to controlling model complexity, this limitation is related to the difficulty of learning accurate word representations, which requires the model to observe words being used in various contexts. Under conditions of high data sparsity, this creates an important bottleneck in translating rare or unseen words that were excluded from the model vocabulary. Some studies proposed overcoming this limitation using optimization methods such as segmenting the model memory devoted to computing embeddings [56,57]. Among these, the particular approach of subword segmentation [58,59,60,61] achieved competitive results in the translation of rare words such as loan words and numbers. However, lacking any linguistic notion, purely statistical segmentation would often lead to morphological errors [62,63,64] and cause information loss. To provide more generic and widely applicable solutions, self-supervised learning of morphological structure was proposed through hierarchical character/word-based architectures [57,65,66,67,68]. Some large-scale studies also showed that neural models can learn morphological information to some degree with fully character-level models; however, matching the performance of subword-based models was found to require much larger network capacity [69,70,71,72,73,74,75].
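One widely used form of statistical subword segmentation is byte-pair encoding (BPE), which repeatedly merges the most frequent pair of adjacent symbols. The sketch below is a minimal illustration of that idea and is not claimed to match any of the cited systems: the function names, the `</w>` end-of-word marker, the tie-breaking rule and the toy word frequencies are assumptions.

```python
from collections import Counter


def merge_pair(symbols, pair):
    """Replace every adjacent occurrence of `pair` in `symbols` with its concatenation."""
    merged, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            merged.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            merged.append(symbols[i])
            i += 1
    return tuple(merged)


def learn_bpe(word_freqs, num_merges):
    """Learn an ordered list of merges from a word -> frequency dictionary."""
    vocab = {tuple(word) + ("</w>",): freq for word, freq in word_freqs.items()}
    merges = []
    for _ in range(num_merges):
        pairs = Counter()
        for symbols, freq in vocab.items():
            for a, b in zip(symbols, symbols[1:]):
                pairs[(a, b)] += freq
        if not pairs:
            break
        # Most frequent pair; ties are broken by the pair itself (an arbitrary choice).
        best = max(pairs, key=lambda p: (pairs[p], p))
        merges.append(best)
        new_vocab = {}
        for symbols, freq in vocab.items():
            key = merge_pair(symbols, best)
            new_vocab[key] = new_vocab.get(key, 0) + freq
        vocab = new_vocab
    return merges, vocab


def segment(word, merges):
    """Segment a (possibly unseen) word by replaying the learned merges in order."""
    symbols = tuple(word) + ("</w>",)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return list(symbols)


# Hypothetical word frequencies.
freqs = {"low": 5, "lower": 2, "newest": 6, "widest": 3}
merges, vocab = learn_bpe(freqs, num_merges=3)
print(merges)
print(segment("lowest", merges))
```

The unseen word "lowest" is still representable because it decomposes into characters and a learned suffix unit. The segmentation is driven purely by frequency, however, which is why such splits need not coincide with morpheme boundaries.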

2.5. Multilingual Machine Translation and Generative Language Modeling

Deep learning-based methods accelerated machine learning research at a rate that had not been anticipated or observed in decades, and they seemed to promise endless improvement in return for only one thing: scaling [76]. This would, of course, come at the cost of more and more training data. Unfortunately, parallel corpora are not among the easiest resources to prepare and collect, and this was a major limitation in scaling NMT models. Earlier PBMT models alleviated this problem by incorporating monolingual resources into the target language model, but this approach did not prove as successful in NMT [77,78]. A more promising approach that yielded useful results was back-translation [79,80,81,82], a data augmentation technique in which a baseline model trained on parallel corpora translates additional monolingual sentences collected in the target language back into the source language. The resulting synthetic source sentences, paired with the authentic target sentences, could then be used to further train the translation model and help larger models reach better performance. Improvements gained by back-translation, however, were found to be proportional to the quality of the synthetic translations obtained with the base model [83,84,85,86], which, ironically, also depends on the availability of resources, making this method only applicable to high-resourced language pairs [87,88].
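The data flow of back-translation can be sketched as follows, assuming the standard setup in which a reverse (target-to-source) model produces the synthetic source side. The function names are hypothetical, and the word-by-word dictionary used here is only a stand-in for a trained reverse model.

```python
def back_translate(monolingual_target, reverse_model):
    """Pair each authentic target sentence with a synthetic source sentence."""
    return [(reverse_model(tgt), tgt) for tgt in monolingual_target]


def augment(parallel, monolingual_target, reverse_model):
    """Return the authentic parallel data followed by the synthetic pairs."""
    return list(parallel) + back_translate(monolingual_target, reverse_model)


# Toy stand-in for a trained Turkish-to-English model (an assumption).
TOY_LEXICON = {"ne": "what", "güzel": "lovely", "bir": "a", "gün": "day"}


def toy_reverse_model(sentence):
    return " ".join(TOY_LEXICON.get(word, word) for word in sentence.split())


parallel = [("hello", "merhaba")]
monolingual_tr = ["güzel bir gün"]
for src, tgt in augment(parallel, monolingual_tr, toy_reverse_model):
    print(src, "->", tgt)
```

The synthetic source side inherits the errors of the reverse model (here, the wrong word order "lovely a day"), while the target side remains authentic text. This is consistent with the observation above that the gains depend on the quality of the base model.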

Inspired by the distributional hypothesis and the universal properties of languages, studies that built statistical language models over multiple languages found strong evidence that such models can naturally align distributed representations of multiple languages in a joint semantic space [89,90,91,92,93,94]. Revisiting the idea of interlingua, subsequent studies built on these findings to develop unsupervised MT models, which learn translation relying solely on monolingual resources in two languages [7,95,96,97,98]. These models explicitly aim to align representations across languages using a reconstruction objective, such as that of a denoising autoencoder, and an adversarial refinement procedure inspired by online back-translation, allowing the learned representations to gradually move closer to each other in vector space. While having the potential to overcome the scarcity of parallel data for many language pairs, unsupervised MT methods still face challenges in achieving high translation quality, especially for languages with significant linguistic differences or limited resources [99]. In such cases, using a pivot language to align languages in a common or pivot space has been a well-established alternative direction [100,101,102,103,104,105,106]. In pivot-based MT systems, the choice of the pivot language and the system set-up are crucial in determining translation quality, which is bounded by the quality of the best translation from the selected pivot in the system. Any translation errors inevitably propagate to the translations in other directions, degrading the overall translation quality.

Successful research on learning representations in a shared distributed space also inspired further studies on how network parameters could be shared across languages under different settings. These led to the development of multilingual MT approaches, in which a single shared encoder and decoder are used to translate in multiple translation directions. Multilingual MT models are trained using collections of parallel corpora across languages, where the translation examples do not necessarily have to cover all possible many-to-many translation directions [25,107,108,109,110]. While enabling the novel application of zero-shot translation, multilingual language models were found to ultimately suffer from the curse of multilinguality [111], which states that the performance of a multilingual model with a fixed capacity tends to decline beyond a certain point as the number of languages in the training data increases. Thus, adding more languages may hinder further improvement in low-resource languages, most likely due to incompatibility between the linguistic structures of different languages [112], and in most cases bilingual systems were able to produce higher-quality translations for both the high- and low-resourced languages supported by the model [113]. Therefore, specialized and finely tuned procedures for building multilingual MT systems were developed, including how to share vocabulary units and how much data to sample from each language [107], choices that many studies suggest affect the quality of transfer to low-resourced or zero-shot language directions. To build more efficient multilingual MT models, one major line of work proposed taking a step back and refining which model components should be shared and which should be specialized to store information individually for each language [114,115,116,117,118].
A general conclusion was that, to build efficient multilingual MT systems that actually benefit low-resource language directions, languages that have distinct scripts or morphosyntactic properties should be allocated language-specific vocabularies and network parameters. As scalability challenges arose, particularly for low-resource languages, subsequent large-scale initiatives such as the No Language Left Behind (NLLB) project [119] extended NMT to over 200 languages by employing strategies like data mining, back-translation, and sparsely-gated mixture-of-experts (MoE) models, exemplified by their 54.5B parameter model that maintained a FLOP footprint comparable to a dense 3.3B model. Similarly, JDExplore’s [120] 4.7B parameter Transformer model demonstrated competitive performance at WMT22. Despite these advances, the field continues to face the difficulty of extreme data imbalance, as most of the 1220 evaluated language pairs still fall under the low-resource category. These developments established the foundation for exploring whether further scaling or architectural shifts could address translation challenges beyond the traditional parallel-data paradigm.
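The question of how much data to sample from each language is often handled with temperature-based sampling, where the probability of drawing a training example from a language is proportional to its share of the data raised to the power 1/T. The sketch below assumes this heuristic for illustration; the text above does not specify which scheme the cited works use, and the function name and corpus sizes are hypothetical.

```python
def sampling_probabilities(sizes, temperature=1.0):
    """Temperature-based sampling over languages.

    sizes: dict mapping language code -> number of training sentences.
    temperature=1 reproduces the raw data proportions; larger values
    flatten the distribution toward uniform, up-sampling small languages.
    """
    total = sum(sizes.values())
    weights = {lang: (n / total) ** (1.0 / temperature)
               for lang, n in sizes.items()}
    norm = sum(weights.values())
    return {lang: w / norm for lang, w in weights.items()}


# Hypothetical corpus sizes for a high-, mid- and low-resource language.
corpus_sizes = {"fr": 1_000_000, "tr": 100_000, "gd": 1_000}
for T in (1.0, 5.0):
    probs = sampling_probabilities(corpus_sizes, temperature=T)
    print(T, {lang: round(p, 3) for lang, p in probs.items()})
```

Raising the temperature exposes the model to low-resource languages more often, at the cost of repeating their limited data and under-sampling the high-resource ones, which is one facet of the capacity trade-off discussed above.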

Although Transformer-based NMT systems made substantial progress by leveraging parallel corpora, their dependence on task-specific supervision and susceptibility to data scarcity remained limiting factors. To address these challenges, the Pretrain–Finetune (PF) paradigm emerged, wherein models are first pretrained on general multilingual objectives before fine-tuning for specific translation tasks. A prominent example is mBART [121], which applies multilingual denoising pretraining—combining word-span masking and sentence permutation within a Transformer encoder-decoder architecture augmented with language ID tokens. Pretraining on data from 25 languages enabled mBART to generalize across diverse translation directions, with fine-tuning specializing the model to particular language pairs using supervised parallel data. Large-scale pretraining was realized with 256 V100 GPUs, Adam optimization, and scheduled learning rate decay. While the PF paradigm substantially advanced translation capabilities, its continued reliance on supervised adaptation highlighted the need for more universally adaptable approaches.
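The denoising objective can be illustrated with a simplified noising function that permutes sentences, replaces word spans with a single mask token and appends a language ID token; the model would then be trained to reconstruct the original text from this input. The sketch is loosely modeled on the description above and is not the mBART implementation: the fixed span length, the mask ratio, the `<mask>` symbol, the `en_XX` tag and the placement of the language ID at the end are all assumptions.

```python
import random


def add_noise(sentences, lang_id, mask_ratio=0.35, span_len=3, seed=0):
    """Build a noised encoder input from a list of sentences.

    1. Sentence permutation: shuffle the order of the sentences.
    2. Span masking: replace runs of up to `span_len` words with one <mask>.
    3. Append the language ID token.
    """
    rng = random.Random(seed)
    order = list(sentences)
    rng.shuffle(order)
    tokens = [tok for sent in order for tok in sent.split()]

    budget = int(len(tokens) * mask_ratio)  # maximum number of words to hide
    noisy, i, masked = [], 0, 0
    while i < len(tokens):
        if masked < budget and rng.random() < mask_ratio:
            span = min(span_len, budget - masked, len(tokens) - i)
            noisy.append("<mask>")  # the whole span collapses to one token
            i += span
            masked += span
        else:
            noisy.append(tokens[i])
            i += 1
    return noisy + [lang_id]


document = ["What a lovely day .", "We went for a walk .", "The sun was out ."]
print(" ".join(add_noise(document, "en_XX")))
```

Because a span collapses into a single mask token, the model must also infer how many words are missing, which makes the reconstruction task harder than filling in one word per mask.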

Despite this reliance, the PF paradigm significantly advanced multilingual generalization and established methodological foundations that deepened awareness of language diversity. These developments not only improved translation across a wider range of languages but also laid the groundwork for building language models capable of broader cross-lingual and cross-domain applicability, ultimately motivating the shift toward pretraining strategies that require minimal task-specific supervision.

2.6. Few-Shot Learning with Language Models

The outstanding performance of neural methods in natural language processing (NLP) tasks encouraged investment in exploring the limits of scaling, steering the field toward a continuous search for optimal deep learning architectures for modeling language. This resulted in models whose language representations contain linguistic features found to be useful in various downstream NLP tasks [8,122,123], making language models a foundational component of most NLP systems. The need for task-specific data, however, limited the development of generally applicable large-scale models; therefore, many studies investigated how data from different languages, domains and eventually tasks could be combined to build large stand-alone models applicable across different settings. Eventually, the work in [124] proposed building efficient multi-task language models that could be easily fine-tuned to perform various NLP tasks.

Early language models were encoder-only architectures, designed primarily for understanding and processing input text [8]. These models focused on contextual prediction objectives, such as masked language modeling and next-sentence prediction, which made them highly effective for classification tasks. To develop more general-purpose generative models, auto-regressive [125] and decoder-only [126] architectures employing causal language modeling were introduced. These approaches proved to be highly efficient and representative, particularly when trained on large, multilingual, and multi-task datasets [127,128,129,130].

An important innovation in scaling Transformer-based models was the adoption of byte-level vocabulary units, which allowed the handling of non-Unicode symbols included in the vast amounts of training data [111]. A surprising outcome of training these models on unfiltered, multilingual datasets, without explicitly defining language identities, was their ability to process and generate text in multiple languages. While this approach was not entirely optimal, it demonstrated the potential for models to learn cross-lingual representations and inspired further research into building systems that generalize across languages and tasks without explicit input specifications. An important and highly relevant application was indeed MT: especially for low-resourced languages, using pretrained representations obtained from encoder-only language models could significantly boost performance when sufficient parallel data was not available, providing a new state-of-the-art baseline for MT, which now also included combinations of multi-task and multilingual models [131,132]. The success of pretrained language models for MT depends primarily on the quality of their representations, in the sense of how multilingual information is learned and shared across languages. In particular, models that deploy translation as an auxiliary objective, either by being trained on both masked language modeling and parallel data [111] or with unsupervised reconstruction objectives [133], have proven to be more applicable.

With the possibility of training models with longer context windows and larger amounts of diverse training data collected across various tasks, language models have naturally evolved to support prompting, a technique that enables users to guide a model’s output by providing specific input instructions. Early progress in this area was marked by the development of decoder-only models like GPT [126], which utilized auto-regressive decoding to predict the next token in a sequence, making them naturally suited for tasks requiring dynamic generation based on user input. The introduction of in-context learning [134], made possible through training on large and diverse datasets, empowered models to interpret prompts as implicit instructions, effectively eliminating the need for task-specific fine-tuning. This feature showed particular benefit for zero-shot and few-shot learning scenarios, where the model could adapt to new tasks using only examples provided within the prompt.

Following the limitations of supervised fine-tuning, the pretrain–prompt paradigm emerged as a scalable alternative, leveraging large decoder-only language models pretrained on massive corpora to perform translation via prompting without explicit task-specific updates. In this framework, exemplified by models such as GPT-3 [134] and GLM-130B [135], translation is reformulated as a language modeling problem, where carefully crafted prompts guide the model to generate target translations. Architecturally, these models discard the encoder-decoder structure in favor of autoregressive Transformer decoders trained with next-token prediction or denoising objectives. GPT-3, with 175 billion parameters and primarily English-centric data (93%), demonstrated strong zero-shot and few-shot translation capabilities but exhibited notable gaps for non-English languages. To enhance translation performance, prompting strategies have been developed, including template engineering [9], in-context learning with selected demonstrations, and cross-lingual prompting, where examples from different language pairs guide translation. The quality of LLM-based translation, however, has been found to depend heavily on the quality of the prompt, which should be carefully designed with attention to the clarity and phrasing of the instructions and to the amount of preliminary information provided, such as translation examples in the given language pair and, if possible, task constraints, which may include domain-specific terminology or stylistic preferences [9]. The study in [9] showed that simple prompts explicitly specifying the source and target languages yield superior zero-shot results, while the number and quality of prompt examples, measured through metrics such as semantic similarity (SemScore) [136] and model likelihood (LMScore) [137], substantially influence few-shot performance.
Nevertheless, challenges persist: LLM translations tend to prioritize fluency over faithfulness, often leading to phenomena such as dropped content, prompt copying, hallucinations, and translation errors in non-English-centric directions [9,138].
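To make the prompting setup concrete, the following is a minimal sketch of how a zero-shot or few-shot translation prompt could be assembled. The template, which simply labels each line with the language name, and the function name `build_translation_prompt` are illustrative assumptions, not the exact templates evaluated in [9].

```python
from typing import Sequence, Tuple


def build_translation_prompt(
    source_text: str,
    src_lang: str,
    tgt_lang: str,
    examples: Sequence[Tuple[str, str]] = (),
) -> str:
    """Build a zero-shot (no examples) or few-shot translation prompt.

    The "<language>: <text>" layout is a hypothetical template chosen for
    illustration; it names the source and target languages explicitly.
    """
    blocks = []
    # Each in-context demonstration is a (source, target) sentence pair.
    for src, tgt in examples:
        blocks.append(f"{src_lang}: {src}\n{tgt_lang}: {tgt}")
    # The final block leaves the target side empty for the model to complete.
    blocks.append(f"{src_lang}: {source_text}\n{tgt_lang}:")
    return "\n\n".join(blocks)


if __name__ == "__main__":
    demos = [("Hallo.", "Hello.")]
    print(build_translation_prompt("Danke.", "German", "English", demos))
```

Passing an empty `examples` sequence yields the zero-shot case; the resulting string would then be sent to whichever LLM is being evaluated.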

While specialized MT systems continue to provide the highest translation quality for specific language pairs [139], the use of LLMs for translation represents a promising direction for optimizing traditional NLP pipelines, particularly in industrial applications where a more generalized solution is often required to address a wide range of tasks. Within the LLM-based translation framework, fine-tuning can be performed on selected parallel datasets; alternatively, fine-tuning on monolingual data in the target language followed by task-specific fine-tuning on translation data offers another way to enhance performance [140]. In the context of LLM-based MT, a critical research question emerges: how does language understanding, and more specifically, the model’s capacity to interpret prompts, influence success in translation tasks? For instance, LLAMA-2 [130], a multilingual model explicitly fine-tuned on parallel datasets, achieves performance comparable to GPT-2 [127], a model originally designed for English but trained with inadvertent inclusion of multilingual data. Despite these advancements, the relationship between prompt clarity and output quality remains elusive, with no well-defined functional framework to measure how various linguistic and stylistic dimensions of translation impact performance. Furthermore, as LLMs scale in size, progress in language understanding and generation does not inherently follow, underscoring the persistent challenge of fostering efficient learning. Addressing these complexities calls for extensive research, including systematic investigations into how variations in prompting influence translation quality and broader model behavior. An extensive evaluation of LLMs in the MT task [141] shows that while GPT-4 surpasses the strong supervised baseline NLLB in 40% of directions, LLMs still lag behind commercial systems like Google Translate, especially for low-resource languages.
Many findings suggest that LLMs can learn translation efficiently even for unseen languages, that instruction semantics can be ignored when in-context examples are given, and that cross-lingual exemplars can outperform same-language examples for low-resource translation. At the same time, in-context learning templates, exemplar quality, and prompt ordering significantly affect translation outcomes. Empirical analyses reveal that pivoting through English improves non-English to non-English translation quality, reflecting the English-centric training bias inherent to many LLMs [9].

Considering the remaining 60% of directions that are still better translated with specialized models, one can raise a pivotal inquiry that is not limited to LLMs but applies to neural language modeling methods in general: does a model need to genuinely “understand” the meaning of the strings it generates to perform tasks effectively? If so, can such learning take place with in-context or other learning methods? Resolving these research questions is crucial to developing universally applicable models and lies at the heart of ongoing debates about the fundamental nature and limitations of these models in understanding the grammar and structural patterns required to generalize to unseen word forms and sentences. Before we discuss this and related problems in the section on Current and Emerging Problems (Section 5), we would like to introduce historical methods for addressing irregular or infrequent linguistic paradigms or low-resourced languages by developing specialized hybrid MT methods.

2.7. Inductive Learning and Hybrid Models

The question of whether large language models truly “understand” language remains a contentious topic in the research community, especially as these models continue to achieve unprecedented performance across numerous sophisticated benchmarks and linguistic tasks. However, skepticism toward the necessity of linguistic knowledge in MT is far from a novel phenomenon. This sentiment is epitomized by Jelinek’s oft-quoted remark, “Every time I fire a linguist, my scores improve,” [142] reflecting a long-standing belief among early proponents of statistical machine translation (SMT) that linguistic insights did little to enhance performance. Similarly, the current era of rapid progress in connectionist models [143,144,145,146,147], computational frameworks in which cognitive processes are represented as emergent patterns of activation across networks of interconnected units that learn from experience, invites renewed scrutiny: do these models truly acquire and internalize the structure and semantics of language, or are they merely sophisticated pattern-matching systems? Additionally, a perhaps less popular but more fundamental question follows: what do improved scores in the context of generative models truly represent? These questions underscore the enduring tension between the role of linguistic theory and empirical performance in advancing MT and language modeling.

Humans acquire systematic generalization through the compositionality inherent in natural languages, where structural mechanisms operate over sets of primitive finite concepts [148]. In contrast, transductive learning methods, particularly ones relying on neural networks, have been found suboptimal for achieving systematic generalization [149,150,151,152,153,154,155], and they have yet to demonstrate human-like language understanding [156]. This limitation arises primarily from their reliance on extensive data to observe linguistic paradigms repeatedly, enabling accurate modeling of their meaning and usage. However, the inherent complexity of natural languages often results in paradigms that are both too intricate and too sparse to be adequately represented in any collected dataset [157,158,159,160,161,162,163].

To address these challenges, early methods in machine translation (MT) proposed hybrid models that combined statistical approaches with rule-based systems, leveraging the strengths of both methodologies [164,165]. In the realm of statistical MT, translation between typologically distant languages proved particularly problematic due to the sparse and complex nature of linguistic data. To mitigate this issue, structural alignment mechanisms adopting the syntactic transfer approach, also referred to as syntactic MT, were introduced [166,167]. Similarly, extensions to channel operations, such as those proposed in [168], enriched reordering patterns to accommodate more complex linguistic phenomena, including those that cross syntactic brackets [169]. Syntax-based models in MT provided a more linguistically coherent approach that still incorporates statistical learning. They also allowed a more comprehensive evaluation of how well statistical methods capture the different types of hierarchical structures observed across languages with varying typology. The three syntax-based models, Synchronous Context-Free Grammar (SCFG), Inversion Transduction Grammar (ITG), and Synchronous Tree Substitution Grammar (STSG), differ in their use of syntactic information and structural representation. SCFG extends traditional context-free grammars by incorporating two related right-hand sides, one for the source language and one for the target, making it suitable for capturing phrase-level correspondences without relying on explicit linguistic syntax. The hierarchical phrase-based model proposed by [167] is a notable SCFG-based approach. In contrast, ITG, introduced by [166], constructs synchronous parse trees for source and target sentences, explicitly modeling structural correspondences and reordering patterns through permutations, yet it similarly avoids real linguistic parse trees.
STSG, on the other hand, leverages actual linguistic parse trees, with productions represented as pairs of elementary trees linked at non-terminal leaf nodes, similar to synchronous CFG, providing a linguistically grounded framework for translation. While SCFG and ITG typically operate as string-to-string models with minimal syntactic reliance, STSG emphasizes detailed syntactic representation for more robust linguistic alignment. More generally, syntax-based models can be grouped into four categories according to where linguistic syntax is used. The first category, string-to-string, does not utilize linguistic syntax, as seen in SCFG-based and ITG-based models. The second, string-to-tree, applies linguistic syntax only to the target side, allowing the source to remain as a linear string [169,170]. The third, tree-to-string, uses syntactic structures exclusively on the source side to guide translation into a linear target representation [171,172,173]. Finally, tree-to-tree models apply linguistic syntax to both source and target languages, offering the most detailed syntactic alignment [174,175,176,177]. These categories reflect increasing levels of syntactic integration, with tree-to-tree models providing the most linguistically rich framework for translation.
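The idea of an SCFG rule with two linked right-hand sides can be illustrated with a small sketch. The rule encoding below (integers as indices of linked non-terminals), the rule names, and the tiny romanized Chinese-to-English grammar are assumptions made for illustration; this is a toy derivation expander, not the decoder of any system cited above.

```python
from typing import Dict, List, Tuple, Union

# A right-hand-side symbol is either a terminal word (str) or the index (int)
# of a linked non-terminal; the same index on both sides marks linked slots.
Symbol = Union[str, int]
Rule = Tuple[List[Symbol], List[Symbol]]      # (source RHS, target RHS)
Derivation = Tuple[str, List["Derivation"]]   # (rule name, child derivations)

# Hypothetical toy grammar: the first rule reorders its two sub-phrases.
RULES: Dict[str, Rule] = {
    "r_have": (["yu", 0, "you", 1], ["have", 1, "with", 0]),
    "r_nk": (["Beihan"], ["North", "Korea"]),
    "r_rel": (["bangjiao"], ["diplomatic", "relations"]),
}


def expand(derivation: Derivation, side: int) -> List[str]:
    """Expand one side (0 = source, 1 = target) of a synchronous derivation."""
    rule_name, children = derivation
    words: List[str] = []
    for sym in RULES[rule_name][side]:
        if isinstance(sym, int):
            # Linked non-terminal: recurse into the shared child derivation.
            words.extend(expand(children[sym], side))
        else:
            words.append(sym)
    return words


def realize(derivation: Derivation) -> Tuple[str, str]:
    """Return the (source, target) string pair licensed by the derivation."""
    return " ".join(expand(derivation, 0)), " ".join(expand(derivation, 1))


if __name__ == "__main__":
    deriv: Derivation = ("r_have", [("r_nk", []), ("r_rel", [])])
    print(realize(deriv))
```

Because both sides are generated from the same derivation, the reordering of the two sub-phrases is captured by the rule itself rather than by a separate distortion model.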

An important limitation of CFG-based approaches is their neglect of morphology and its relationship to syntax. In fact, morphology and syntax are part of a more complex mechanism in which they jointly determine sentence meaning. A word’s syntactic role affects not only the overall construction of the sentence but also the morphological inflections of that word and of the other words in the sentence that depend on it. Such relationships are difficult to integrate into modern computational models, leaving applicability to languages with rich morphology an open question. Another important consequence of statistical modeling in morphologically-rich languages is the high level of lexical sparsity observed in any amount of collected data, due to the exponentially growing number of potential surface forms a single lemma can have. This makes it particularly challenging for MT systems to understand as well as generate rare or unseen word forms. In the context of statistical MT, a commonly used approach was to decrease sparsity by finding a more frequent and meaningful set of shared intermediate units, such as morphemes or morphological features shared across words [178,179,180], ultimately helping to better estimate the probability distribution of alignments between lexical units and supporting systematic generalization [181]. This approach also aids in obtaining a more homogeneous word alignment between distant languages. For instance, a Turkish word can often translate to a phrase of many words in English or another analytic, morphologically less sparse language; in such cases, segmenting words into morphemes before training machine translation systems can help improve translation quality.

Tackling translation into morphologically-rich languages was another line of work addressed by hybrid word–character alignment models [182]. In NMT, Sánchez-Cartagena and Toral [183] suggested using the Finnish morphological segmentation tool Omorfi [184] to separate words in the training corpus into their bases and inflectional suffixes to perform vocabulary reduction in English-to-Finnish neural machine translation. Ataman proposed an unsupervised morphologically-motivated vocabulary reduction method [63] as an extension of the Morfessor algorithm [185]. Similarly, Huck et al. [186] and Tamchyna et al. [187] applied morphological analysis to split words into sequences of lemma and syntactic feature sets in English-to-German and English-to-Czech neural machine translation. However, linguistic tools did not provide generally applicable solutions to open-vocabulary NMT, since morphological analysis tools are typically not available across languages. Statistical subword segmentation methods therefore proved more useful [58,59], although such statistical techniques are themselves only useful for certain typologies [188]. Since words in subword-based models are generated by predicting multiple subword units in sequence, generalizing to unseen word forms becomes more difficult, as some of the subword units needed to reconstruct a word may be unlikely in the given context. To alleviate the sub-optimal effects of explicit segmentation and generalize better to new morphological forms, recent studies explored modeling translation directly at the level of characters [74]. These studies, in turn, showed that character-level models require comparably deeper networks, as the network then needs to learn longer-distance grammatical dependencies [189], which increases the computational complexity and the demand on training resources to an unrealistic level. Hybrid word–character models could allow for better efficiency [57,190].
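As an illustration of statistical subword segmentation, the sketch below learns merge operations in the style of byte-pair encoding, a widely used method of this kind; whether [58,59] refer to exactly this algorithm is an assumption here. The word frequencies, the `</w>` end-of-word marker, and the tie-breaking rule are likewise illustrative choices.

```python
from collections import Counter
from typing import Dict, List, Tuple

Pair = Tuple[str, str]


def merge_pair(symbols: Tuple[str, ...], pair: Pair) -> Tuple[str, ...]:
    """Replace every adjacent occurrence of `pair` with one merged symbol."""
    out: List[str] = []
    i = 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            out.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            out.append(symbols[i])
            i += 1
    return tuple(out)


def learn_bpe(word_freqs: Dict[str, int], num_merges: int) -> List[Pair]:
    """Learn up to `num_merges` merges from a word-frequency dictionary."""
    # Each word starts as a sequence of characters plus an end-of-word marker.
    vocab = {tuple(w) + ("</w>",): f for w, f in word_freqs.items()}
    merges: List[Pair] = []
    for _ in range(num_merges):
        pair_counts: Counter = Counter()
        for symbols, freq in vocab.items():
            for pair in zip(symbols, symbols[1:]):
                pair_counts[pair] += freq
        if not pair_counts:
            break
        # Most frequent pair; ties are broken by the pair itself (assumption).
        best = max(pair_counts, key=lambda p: (pair_counts[p], p))
        merges.append(best)
        vocab = {merge_pair(s, best): f for s, f in vocab.items()}
    return merges


def segment(word: str, merges: List[Pair]) -> List[str]:
    """Segment a (possibly unseen) word by replaying the learned merges."""
    symbols = tuple(word) + ("</w>",)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return list(symbols)


if __name__ == "__main__":
    freqs = {"low": 5, "lower": 2, "newest": 6, "widest": 3}
    merges = learn_bpe(freqs, num_merges=10)
    print(segment("lowest", merges))  # an unseen word built from known units
```

An unseen form such as "lowest" is never out of vocabulary, but it is produced as several units, which is the source of the generalization difficulty described above.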

The question of whether the improvements gained with inductive learning methods are significant has been widely discussed in the history of MT. For instance, Koehn et al. [40] argued that syntax-based approaches not only fail to improve performance but can actively degrade translation accuracy. Their experiments demonstrated that the removal of non-constituent yet frequently used phrases, such as “there is,” negatively impacted translation quality, leading them to the conclusion that syntax does not play a critical role in SMT. This claim challenges the assumption that incorporating linguistic structure inherently benefits translation systems, suggesting instead that vast amounts of data can compensate for the lack of explicit syntactic modeling. In high-resource scenarios, the effects of inductive learning appear to diminish, raising the question of whether evaluation metrics truly capture the role of linguistic generalization. Since automatic evaluation methods, such as BLEU [191], primarily measure lexical and phrasal similarity rather than structural generalization, they may not adequately reflect whether a system has internalized deeper syntactic or semantic relationships. This highlights a potential misalignment between how models are trained and how they are assessed, further complicating the debate on whether structured linguistic knowledge is necessary for effective MT. The details of methods used in the evaluation of MT systems are discussed in the next section.

3. Evaluation

While a thorough and accurate evaluation of any translation system should eventually involve human assessment, time and cost considerations mean that system development typically relies on automatic heuristics, which provide low-cost feedback on the sufficiency or efficacy of the model settings or resources used. Such evaluations employ specific metrics to determine the quality of translations, checking that they not only retain the meaning of the original content but also adhere to the linguistic and grammatical norms of the target language.

Automatic evaluation metrics generally follow one of two main approaches. The first, traditional approach was better suited to earlier rule-based systems, which were designed to be integrated into Computer-Assisted Translation (CAT) tools. These metrics perform a simple comparison that finds lexical mistakes in the output relative to the reference. A straightforward method for this is measuring the number of edits needed to transform a system-generated translation into a reference translation, also defined as the Translation Edit Rate (TER) [192]. TER calculates the percentage of words that need to be inserted, deleted, substituted, or reordered to match the reference. In error-based metrics, a lower score indicates a higher-quality translation. This way, the translator using the translation memory can assess the MT output for post-editing in the most efficient and productive way.
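The edit-based idea can be sketched as a word-level edit distance normalized by the reference length. This is a deliberate simplification: it counts insertions, deletions, and substitutions only and omits the reordering (shift) operation that TER [192] also allows, so a reordered phrase is charged as several edits. The function name `word_edit_rate` is hypothetical.

```python
def word_edit_rate(hypothesis: str, reference: str) -> float:
    """Word-level Levenshtein distance divided by the reference length.

    Simplified relative to TER: block shifts are not modeled.
    """
    hyp, ref = hypothesis.split(), reference.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] = edits needed to turn the hypothesis prefix seen so far
    # into the first j reference words.
    prev = list(range(len(ref) + 1))
    for i, h in enumerate(hyp, start=1):
        curr = [i]
        for j, r in enumerate(ref, start=1):
            cost = 0 if h == r else 1
            curr.append(min(prev[j] + 1,          # delete a hypothesis word
                            curr[j - 1] + 1,      # insert a reference word
                            prev[j - 1] + cost))  # match or substitute
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    print(word_edit_rate("the cat sat", "the cat sat down"))
```

As with TER, lower values indicate output that needs less post-editing to match the reference.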

The development of SMT models has led to the integration of statistical analysis in the automatic evaluation of MT systems. The first notable evaluation metric, BLEU [191], was introduced by the IBM research group, followed by NIST, developed by the National Institute for Standards and Technology [193]. Both metrics have been employed by (D)ARPA in assessing MT projects funded by U.S. research programs. As the usage of MT shifted toward new applications, these metrics also sought to maximize the similarity of system output to a gold-standard utterance serving as an example of accurate system output. In this case, the quality is evaluated based on its intended purpose, categorized into different levels such as dissemination (publishable-quality translations), assimilation (acceptable at a lower quality threshold), interchange (communication or unscripted presentations) or information access (non-grammatical multilingual information retrieval and extraction purposes) [194]. While metrics like BLEU provided fast and relatively reliable feedback on system performance for earlier PBMT systems, matching-based metrics started to fall short significantly on real data as systems became more competitive, especially with NMT technology. For instance, word-level metrics are known to fail to produce accurate evaluations when the output is a valid rephrasing due to stylistic or syntactic variations in the generative process, or in many morphologically-rich languages, where variation arises not only at the subword level through inflectional or derivational transformations but also at the sentence level due to free word order [195,196,197,198]. Alternatively, ref. [199] proposed n-gram matching at the character level, which has been more appropriate for evaluation in morphologically-rich languages.
However, matching-based approaches still might miss semantic nuances in the generated language. Recent studies proposed the alternative approach to use vector similarity in distributed representations [200]. This method provides a better semantic notion over simple word matching heuristics, yet there are still issues regarding the robustness of pretrained language representations and how well they can capture non-linear functions that can represent different types of semantic or lexical accuracy, grammatical mistakes, or many other complex features for assessing translation quality.
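To show why character-level matching is more forgiving of morphological variation than word matching, here is a minimal sketch of a character n-gram F-score in the spirit of the metric proposed in [199]. The defaults (`max_n=6`, `beta=2.0`), the removal of whitespace, and the plain averaging over n-gram orders are assumptions of this sketch rather than a faithful reimplementation.

```python
from collections import Counter


def char_ngrams(text: str, n: int) -> Counter:
    """Count character n-grams, ignoring spaces (a simplifying assumption)."""
    chars = text.replace(" ", "")
    return Counter(chars[i:i + n] for i in range(len(chars) - n + 1))


def chrf_like(hypothesis: str, reference: str,
              max_n: int = 6, beta: float = 2.0) -> float:
    """F-beta score over character n-gram precision and recall."""
    precisions, recalls = [], []
    for n in range(1, max_n + 1):
        hyp, ref = char_ngrams(hypothesis, n), char_ngrams(reference, n)
        if not hyp or not ref:
            continue  # skip orders longer than either string
        overlap = sum((hyp & ref).values())  # clipped n-gram matches
        precisions.append(overlap / sum(hyp.values()))
        recalls.append(overlap / sum(ref.values()))
    if not precisions:
        return 0.0
    p = sum(precisions) / len(precisions)
    r = sum(recalls) / len(recalls)
    if p + r == 0:
        return 0.0
    return (1 + beta ** 2) * p * r / (beta ** 2 * p + r)


if __name__ == "__main__":
    # Two inflected forms of the same Turkish stem share most character
    # n-grams even though they do not match as whole words.
    print(chrf_like("evlerde", "evlerden"))
```

A word-level exact match would give these two forms no credit at all, whereas the character-level score rewards the shared stem and suffixes.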

By the nature of their design, some metrics may capture certain typological forms and patterns better than others, and thus be better suited to languages with those features. While language models have been found to learn some generalizable patterns on grammar [201,202,203], these findings have not been shown to hold across various languages [204]. To overcome the limitations of pretrained language models in translation evaluation, a more recent trend is to fine-tune models on translations paired with human evaluation scores. This is accomplished using competition datasets spanning news or specific domain translations in a set of languages from recent years. In addition to having very limited applicability across languages, this solution is ill-defined in the sense that example annotations are too ambiguous as learning signals to inform a system whether a translation is accurate, a task that even humans often struggle to accomplish.

While quality assessment appraises the goodness of a translation, quantifying how applicable or useful the translation is for deployment, error analysis judges a translation from the opposite perspective, i.e., measuring its badness, ultimately estimating the amount of work required to correct raw MT output to a standard considered acceptable as a translation [194]. In either case, metrics were ultimately designed for measuring the feasibility, potential and limitations of a system by examining the contexts in which it is most likely to be effective or prone to failure, so that its results are meaningful and interpretable to interested parties such as system developers and users. With the advent of multi-task models, MT is only one of many applications, and the way users employ MT in new contexts raises the question of whether it is time to reconsider evaluation not merely as a process of maximizing specific metrics but as a return to its original definition: a multidimensional approach that integrates multiple factors to ensure more comprehensive and reliable assessments, considering that translations may be used across various contexts and domains.

4. Data Curation Methods Used for Building MT Systems

In traditional statistical and early NMT systems, models were trained separately for each language pair using curated bilingual corpora. These corpora were typically sourced from high-quality institutional data such as Europarl [205] or the UN Parallel Corpus [206], and often required extensive pre-processing steps including correction of sentence misalignments, tokenization, truecasing, and domain-specific filtering. Domain mismatch and data sparsity, especially for specialized or under-resourced language pairs, were common challenges in building MT systems, addressed through data selection techniques like cross-entropy difference scoring [207], perplexity-based ranking [208] and filtering based on alignment confidence [209]. These practices ensured domain consistency and minimized noise in statistical MT pipelines but required high effort and bespoke resources for each pair, limiting scalability. As research shifted toward multilingual MT, these foundational methods informed the development of more generalized and scalable data preparation workflows.
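Cross-entropy difference scoring can be sketched as follows: each candidate sentence is scored by its per-word cross-entropy under an in-domain language model minus its cross-entropy under a general-domain model, and sentences with the lowest scores are selected. The add-one-smoothed unigram models and the toy corpora below are assumptions made to keep the sketch self-contained; the method in [207] may use different language models and details.

```python
import math
from collections import Counter
from typing import Dict, List, Set, Tuple


def train_unigram(sentences: List[str], vocab: Set[str]) -> Dict[str, float]:
    """Add-one-smoothed unigram language model over a fixed vocabulary."""
    counts = Counter(w for s in sentences for w in s.split())
    total = sum(counts[w] for w in vocab) + len(vocab)
    return {w: (counts[w] + 1) / total for w in vocab}


def cross_entropy(sentence: str, lm: Dict[str, float]) -> float:
    """Per-word cross-entropy in bits; unknown words map to <unk>."""
    words = sentence.split()
    if not words:
        return 0.0
    return -sum(math.log2(lm.get(w, lm["<unk>"])) for w in words) / len(words)


def ce_difference_scores(candidates: List[str], in_domain: List[str],
                         general: List[str]) -> List[Tuple[float, str]]:
    """Score = H_in(s) - H_general(s); lower means more in-domain-like."""
    vocab = {w for s in in_domain + general for w in s.split()} | {"<unk>"}
    lm_in = train_unigram(in_domain, vocab)
    lm_gen = train_unigram(general, vocab)
    return [(cross_entropy(s, lm_in) - cross_entropy(s, lm_gen), s)
            for s in candidates]


if __name__ == "__main__":
    in_domain = ["the patient received the dose", "the dose was reduced"]
    general = ["the match ended in a draw", "the team won the match"]
    pool = ["the patient dose", "the team match"]
    for score, sent in sorted(ce_difference_scores(pool, in_domain, general)):
        print(f"{score:+.3f}  {sent}")
```

Sorting by score and keeping the lowest-scoring fraction of the pool gives a subsample that resembles the in-domain data while being unlike the general data.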

Modern multilingual MT systems rely on large, heterogeneous datasets spanning many languages, domains, and data qualities. Curation begins with the acquisition of both parallel and monolingual data from multilingual web sources (e.g., Common Crawl [210], ParaCrawl [211], and CCMatrix [212]), institutional repositories, or crowd-sourced translation efforts. Parallel sentence alignment is typically performed using multilingual encoders such as LASER [213], LaBSE [214], or newer models like LASER3 [215]. Monolingual corpora are utilized through back-translation [82], self-training [216], and forward-translation [217], especially in low-resource contexts. However, these raw corpora often contain noise such as duplicate or misaligned sentences, hallucinated content, and domain inconsistencies. Data cleaning techniques include deduplication as well as length-based and language-based filtering. In addition, data selection techniques continue to play a role, in particular for separating the training data into generic and domain-specific training sets with methods like cross-entropy filtering [218] and classifier-based relevance ranking [219], which can be used to subsample corpora for adapting multilingual models to a specific language or application domain.
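A minimal sketch of such a cleaning pass over parallel sentence pairs is shown below, combining deduplication, length filtering, and a length-ratio check. The thresholds and the `is_expected_language` hook are illustrative assumptions; in practice the hook would wrap a language identification model, which is not included here.

```python
from typing import Callable, Iterable, List, Tuple

Pair = Tuple[str, str]


def clean_parallel(
    pairs: Iterable[Pair],
    min_len: int = 1,
    max_len: int = 200,
    max_ratio: float = 2.5,
    is_expected_language: Callable[[str, str], bool] = lambda s, t: True,
) -> List[Pair]:
    """Deduplicate and filter (source, target) pairs with simple heuristics.

    `is_expected_language` is a hypothetical stand-in for a language-ID
    check; the default accepts every pair.
    """
    seen = set()
    kept: List[Pair] = []
    for src, tgt in pairs:
        # Normalize whitespace so trivially different copies are merged.
        src, tgt = " ".join(src.split()), " ".join(tgt.split())
        key = (src.lower(), tgt.lower())
        if key in seen:
            continue  # case-insensitive exact duplicate
        seen.add(key)
        n_src, n_tgt = len(src.split()), len(tgt.split())
        if not (min_len <= n_src <= max_len and min_len <= n_tgt <= max_len):
            continue  # empty or overly long segment
        if max(n_src, n_tgt) / max(min(n_src, n_tgt), 1) > max_ratio:
            continue  # implausible length ratio, likely misaligned
        if not is_expected_language(src, tgt):
            continue
        kept.append((src, tgt))
    return kept


if __name__ == "__main__":
    raw = [
        ("Hello world", "Hallo Welt"),
        ("Hello  world", "hallo welt"),                 # duplicate
        ("", "leer"),                                   # empty source
        ("Yes", "Ja das ist wirklich sehr sehr lang"),  # bad length ratio
    ]
    print(clean_parallel(raw))
```

Rule-based passes like this are typically cheap first stages, applied before more expensive encoder-based alignment scoring.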

A major challenge in multilingual MT is the highly skewed distribution of data across languages. High-resource languages like English or French may be over-represented by orders of magnitude compared to low-resource languages. Naïvely sampling training examples uniformly from the pooled data, that is, in proportion to corpus size, disproportionately benefits these dominant languages and can hinder generalization elsewhere. To address this, several multilingual sampling strategies have been proposed. Temperature-based sampling [220] reweights data distributions to upsample low-resource languages, while Target Conditioned Sampling [221] learns language-specific weights to minimize downstream task loss. More recent approaches incorporate adaptive sampling based on learning dynamics [222] or hierarchical clustering to group languages by typological or semantic proximity [223]. These strategies are often combined with curriculum learning and dynamic data selection to better balance training signals across languages.
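Temperature-based sampling is commonly formulated as raising each language's share of the data to the power 1/T and renormalizing. The sketch below follows that formulation; the exact form used in [220], the temperature value, and the corpus sizes are assumptions for illustration.

```python
from typing import Dict


def temperature_sampling_probs(sizes: Dict[str, int],
                               temperature: float = 5.0) -> Dict[str, float]:
    """Sampling probability p_i proportional to (n_i / N) ** (1 / T).

    T = 1 reproduces size-proportional sampling; larger T flattens the
    distribution toward uniform, upsampling low-resource languages.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    total = sum(sizes.values())
    weights = {lang: (n / total) ** (1.0 / temperature)
               for lang, n in sizes.items()}
    norm = sum(weights.values())
    return {lang: w / norm for lang, w in weights.items()}


if __name__ == "__main__":
    corpus_sizes = {"en-fr": 900_000, "en-sw": 100_000}  # toy numbers
    for t in (1.0, 5.0, 100.0):
        print(t, temperature_sampling_probs(corpus_sizes, t))
```

The temperature thus acts as a single knob trading off fidelity to the original data distribution against balance across languages.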

Recent large-scale MMT systems, such as M2M-100 [222], mT5 [224], and NLLB [215], highlight the central role of curated and filtered data pipelines. These models rely on massive web-scale corpora filtered with multilingual encoders and quality classifiers, often using multiple passes of noisy alignment removal, language verification, and domain scoring. Data quality is further enhanced through knowledge distillation [225], where teacher models generate translations used to train smaller or multilingual student models. Online back-translation [84] and uncertainty-based sampling [226] are increasingly adopted to generate dynamic and informative training data. Benchmark datasets such as FLORES-101 [227] and OPUS-100 [228] emphasize the need for balanced multilingual evaluation, reinforcing the importance of principled data selection and curation strategies. As the field advances, the intersection of data curation, sampling, and filtering continues to define the scalability, fairness, and robustness of multilingual MT systems.

5. Current and Emerging Problems

5.1. Applicability Across Languages

Although LLMs are now widely used for MT, research has demonstrated a significant disparity in their performance between English and other languages [229]. While GPT-4 [127] approaches the performance of state-of-the-art fine-tuned models, it often fails to surpass them, particularly in languages that utilize non-Latin scripts and in low-resource languages. Empirical studies indicate that high-resource languages exhibit robustness in few-shot translation settings, whereas in-context learning benefits low-resource languages more consistently [230].

The extension of NMT models to multilingual training offered significant efficiency gains across languages, yet a single model covering hundreds of languages is unlikely to provide optimal performance across all language families. Instead, fine-tuning models on language-specific or family-specific subsets has been proposed as a more effective strategy [231]. In multilingual models, a key challenge is parameter allocation across languages. For instance, in a model trained on English and Chinese, parameter updates for English may not necessarily transfer to Chinese, highlighting the difficulty of cross-lingual knowledge transfer [232], with some linguistic features requiring substantial model capacity for effective representation.

A key challenge in multilingual evaluation is the scarcity of comprehensive benchmarks, which hampers thorough assessment and increases the risk of test data contamination. Although initiatives provide translation benchmarks for low-resourced language families and dialects [233,234,235], the overall availability of low-resource evaluation datasets remains limited. Large-scale evaluation efforts [236,237] have invested significantly in developing multilingual test sets encompassing many underrepresented languages. However, these remain constrained by the high cost of human translation. Evaluation campaigns such as the International Workshop on Spoken Language Translation (IWSLT) and the Conference on Machine Translation (WMT) have invested significant effort in building high-quality evaluation data for assessing the translation quality of prominent models over the years, either in large-scale multilingual evaluation [238,239] or with a focus on typologically diverse language families [236,240,241,242,243,244,245,246,247,248] or spoken dialects [249].

While efforts to build more comprehensive evaluation benchmarks are ongoing, a very recent exploration of LLM translation capability in very low-resource languages used the AmericasNLP [250] shared task to evaluate LLMs on indigenous American languages. The findings showed that continued pretraining and instruction tuning strategies, including mixing MT and synthetic tasks [140,251], significantly improved translation for indigenous American languages such as Hñähñu and Wixarika. Base models like Mistral 7B and MALA-500 showed that lighter LLMs, when appropriately adapted, could achieve substantial gains in low-resource translation without needing extreme computational power. As such results point to the wider applicability of LLMs in more efficient settings, it is important to continue investing in more inclusive representation in evaluation benchmarks in order to truly assess the capability of models to generalize to the translation of real spoken language.

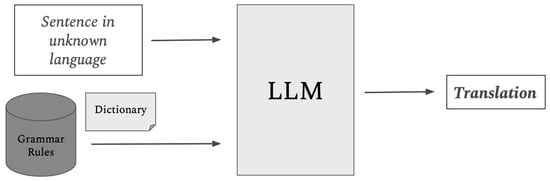

An earlier evaluation of LLMs in zero-shot MT is another important contribution to understanding the nature and limitations of LLMs. A main limitation in the applicability of MT models based on Transformer architectures is the reliance on statistical learning, which has historically minimized the role of linguistic insights, raising fundamental questions about how to determine whether models truly understand language. Studies evaluating the capability of LLMs in performing zero-shot translation, such as for Kalamang [252] (as in the setting depicted in Figure 2), highlight that LLMs primarily generate text with basic to mid-level grammatical complexity. This limitation prompts a broader inquiry into whether these models can achieve structural generalization, which urges one to reconsider the distinction between feed-forward and recurrent architectures [253], the role of inductive learning methods, and the inherent challenges of acquiring grammar through data-driven approaches, all of which underscore the complexity of modeling linguistic competence [254]. Recent results on in-context learning with linguistic explanations alongside examples have led researchers to argue that a return to hybrid rule-based methods for LLM-based MT, potentially through expert-driven interventions, may be necessary to improve linguistic accuracy [255].

Figure 2. Machine translation of a new language using only an LLM, a grammar book in the target language, and a bilingual dictionary, as proposed in [252].

Data scarcity remains a fundamental constraint for NMT and LLMs, particularly as models scale. While LLMs can leverage vast training corpora, they ultimately face saturation points where additional data no longer yields significant improvements. This raises critical questions: What determines the upper bound of translation accuracy for NMT? Can these models generate linguistically valid outputs beyond their training data? How should evaluation frameworks be designed to incentivize true generalization rather than memorization? Additionally, as models grow larger, an open question remains as to whether or not translation quality can be improved without simply increasing model size. Addressing these challenges will be crucial in determining the future trajectory of multilingual machine translation in the era of LLMs.

5.2. Evaluation

Automatic evaluation metrics have played a pivotal role in the development of machine translation (MT) systems, particularly in the era of neural machine translation (NMT). Among these, BLEU has been instrumental as a fast and computationally efficient verification tool, significantly influencing large-scale NMT development and shaping the trajectory of MT research. However, the widespread focus on maximizing BLEU scores—often at the expense of qualitative progress—has led to systemic neglect of languages and linguistic phenomena where existing metrics fail. This emphasis on score improvement, rather than meaningful evaluation, has particularly disadvantaged low-resource and morphologically complex languages, where the applicability of standard evaluation metrics remains limited.

On the other hand, many existing evaluation metrics, particularly those designed for statistical models, struggle to capture structural generalization. These models often fail to generate new words or reorder phrasal structures in a linguistically coherent way, particularly for languages with rich morphology or free word order. Given that most widely used evaluation metrics, including BLEU, favor memorization over true generalization, they inadvertently discourage models from learning meaningful linguistic structures. Furthermore, evaluation scores tend to penalize lexical choices and alternative phrasal reorderings [197] that do not exactly match the reference, even when such variations may be equally valid or more contextually appropriate.
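The sensitivity of n-gram matching to reordering and lexical choice can be seen in a small single-reference, sentence-level sketch of BLEU [191]: clipped n-gram precisions up to order four, combined by a geometric mean and multiplied by a brevity penalty. It is unsmoothed, so any order with no match yields zero, and the example sentences are invented for illustration; standard toolkits differ in tokenization and smoothing.

```python
import math
from collections import Counter
from typing import List


def ngrams(tokens: List[str], n: int) -> Counter:
    """Count word n-grams of order n."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(hypothesis: str, reference: str, max_n: int = 4) -> float:
    """Unsmoothed single-reference sentence-level BLEU (illustrative only)."""
    hyp, ref = hypothesis.split(), reference.split()
    if not hyp:
        return 0.0
    log_precisions = []
    for n in range(1, max_n + 1):
        hyp_counts, ref_counts = ngrams(hyp, n), ngrams(ref, n)
        total = sum(hyp_counts.values())
        matched = sum((hyp_counts & ref_counts).values())  # clipped counts
        if total == 0 or matched == 0:
            return 0.0  # no smoothing: one empty order zeroes the score
        log_precisions.append(math.log(matched / total))
    # Brevity penalty: penalize hypotheses shorter than the reference.
    bp = 1.0 if len(hyp) > len(ref) else math.exp(1 - len(ref) / len(hyp))
    return bp * math.exp(sum(log_precisions) / max_n)


if __name__ == "__main__":
    reference = "the committee approved the proposal yesterday"
    reordered = "yesterday the committee approved the proposal"
    reworded = "the panel accepted the plan yesterday"
    print(sentence_bleu(reference, reference))
    print(sentence_bleu(reordered, reference))
    print(sentence_bleu(reworded, reference))
```

In this toy case, an acceptable reordering loses part of its score and an acceptable rewording with synonyms receives none, even though both could be valid translations.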

The limitations of traditional evaluation metrics have prompted longstanding debates regarding their validity. Notably, the claim that “every time I fire a linguist, my scores improve”—attributed to Jelinek—reflects early SMT researchers’ skepticism regarding the role of linguistic theory in MT performance [142]. Yet, the unresolved discrepancies between purely statistical and linguistically informed approaches raise an important question: Are we truly measuring what we should be measuring? Current single-score evaluation methods often provide misleading insights, as even human evaluators frequently disagree on translation quality assessments. Multi-level scoring frameworks, such as MQM (Multidimensional Quality Metrics) [256], have revealed that translations deemed “high-quality” by automatic metrics often contain significant grammatical errors.

In response to these shortcomings, recent years have seen a shift away from heuristic-based metrics like BLEU [257], with increased investment in pretrained evaluation models such as COMET and BLEURT. However, these newer metrics remain in early stages of development and exhibit critical flaws. For instance, pretrained metrics may be prone to anomalies or overfitting to certain language pairs, raising concerns about their ability to provide reliable and generally applicable cross-lingual evaluation [258,259]. Additionally, different evaluation methodologies lead to inconsistencies in optimization: Maximum Likelihood Estimation (MLE) favors high recall, Reinforcement Learning for MT (RL + MT) prioritizes high precision, and BLEURT-based RL methods fail to penalize repetitive translations [260,261]. The challenge remains in integrating multiple evaluation criteria into a single, interpretable metric.

A promising direction for evaluation involves leveraging LLMs to enhance translation assessment. Recent studies have proposed using LLMs to label translation errors [262], yet LLMs currently lack the capability to consistently rank good and bad translations or sentences. Evaluating long-tail errors, which occur infrequently but may be critical in specific domains (e.g., named entity errors), presents another major challenge. Future research should explore metrics that are more sensitive to different types of errors, ensuring that high-impact mistakes receive appropriate weight.
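To illustrate how high-impact mistakes could receive more weight than frequent minor ones, the following is a minimal sketch of a severity-weighted error penalty in the spirit of analytic frameworks such as MQM. The weight values, the `ErrorAnnotation` type, and the per-100-words normalization are hypothetical choices made for illustration only; they are not taken from the cited works.

```python
from dataclasses import dataclass

# Hypothetical severity weights, chosen only for illustration.
SEVERITY_WEIGHTS = {"minor": 1.0, "major": 5.0, "critical": 10.0}


@dataclass
class ErrorAnnotation:
    category: str  # e.g. "named_entity", "grammar"
    severity: str  # must be a key of SEVERITY_WEIGHTS


def weighted_penalty(errors, num_source_words, per_words=100):
    """Sum the severity weights and normalize per `per_words` source words."""
    if num_source_words <= 0:
        raise ValueError("num_source_words must be positive")
    total = sum(SEVERITY_WEIGHTS[e.severity] for e in errors)
    return total * per_words / num_source_words


# Three minor grammar slips versus a single critical named-entity error.
errors_a = [ErrorAnnotation("grammar", "minor")] * 3
errors_b = [ErrorAnnotation("named_entity", "critical")]

print(weighted_penalty(errors_a, num_source_words=50))
print(weighted_penalty(errors_b, num_source_words=50))
```

Under these assumed weights, a single critical named-entity error is penalized more heavily than three minor errors, whereas a simple error count would rank the two translations the other way around.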

LLMs also hold potential for improving analytic scoring by identifying systematic translation errors and assessing contextual appropriateness. One open question is whether evaluation metrics should penalize lexical variation. For instance, BLEU assigns a perfect score (1.0) only to an exact lexical match with the reference and lowers the score for any divergent wording, whereas COMET scores semantic similarity on a continuous scale and may assign little or no penalty to a valid paraphrase. This raises the need for an intermediate approach that balances lexical precision with semantic flexibility.
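The lexical penalty discussed above can be made concrete with a small sketch of clipped n-gram precision, the core component of BLEU. This is not full BLEU (it omits the brevity penalty and the geometric mean over n-gram orders), and the function name and example sentences are assumptions made for illustration.

```python
from collections import Counter


def ngram_precision(hypothesis, reference, n=1):
    """Clipped n-gram precision of a hypothesis against a single reference."""
    hyp = hypothesis.lower().split()
    ref = reference.lower().split()
    hyp_ngrams = Counter(tuple(hyp[i:i + n]) for i in range(len(hyp) - n + 1))
    ref_ngrams = Counter(tuple(ref[i:i + n]) for i in range(len(ref) - n + 1))
    total = sum(hyp_ngrams.values())
    if total == 0:
        return 0.0
    # Each hypothesis n-gram is credited at most as often as it occurs in the reference.
    matched = sum(min(count, ref_ngrams[gram]) for gram, count in hyp_ngrams.items())
    return matched / total


reference = "the doctor examined the patient"
exact = "the doctor examined the patient"
paraphrase = "the physician checked the patient"

print(ngram_precision(exact, reference, n=1))       # 1.0
print(ngram_precision(paraphrase, reference, n=1))  # 0.6
print(ngram_precision(paraphrase, reference, n=2))  # 0.25
```

The paraphrase keeps the meaning of the reference, yet it loses 40% of its unigram matches and 75% of its bigram matches. A purely lexical metric therefore treats it as a markedly worse translation.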

Ultimately, the goal of MT evaluation should not be to maximize arbitrary scores but to develop robust methodologies that accurately reflect translation quality across diverse languages and domains. Future research should prioritize evaluation frameworks that go beyond single-score metrics, incorporate human-like linguistic reasoning, and address the nuanced challenges posed by multilingual and domain-specific translation tasks.

5.3. Biases and Hallucinations

Hallucinations in MT systems are outputs that may be fluent but are semantically unfaithful to the source text. They take various forms, including content fabrication (adding information that is not in the source), omission of key elements, semantic drift, where the meaning subtly changes, and improper substitutions. Other common cases include domain mismatch hallucinations, where the model inserts domain-specific content irrelevant to the input; memorization-based outputs that arise from noisy or low-resource inputs; and, in multilingual MT systems, translations that are in the wrong language or that diverge significantly from the source meaning. Additionally, small perturbations in the input can trigger disproportionately erroneous or hallucinated translations, reflecting the model’s sensitivity and its limited generalization.

Several factors contribute to hallucinations. Imbalance in the distribution of data collected from resources of varying quality amplifies the noise and irregularities in the underlying distribution and makes generalizable patterns harder to learn [263]. The supervised learning setting, and exposure bias in particular, is also a key contributor, especially under domain shift, where a model is tested on data from a domain different from the one it was trained on [264]. Exposure bias arises from a discrepancy between the training and inference phases: models trained using Maximum Likelihood Estimation (MLE) condition on gold-standard reference prefixes during training, but at inference time they must condition on their own, possibly erroneous, predictions, and they struggle as a result in real-world scenarios.

The study in [265] categorizes hallucinations into two main types: those induced by perturbations, where small changes to the input drastically alter the translation, and natural hallucinations, which occur even when the source text remains unchanged. It connects hallucinations to the long-tail theory of deep learning, showing that memorized samples (data points learned verbatim by the model) are more susceptible to hallucination under perturbation. It further demonstrates that specific patterns of corpus-level noise, such as repeated erroneous source-target pairs, lead to different types of hallucinations. Detached hallucinations are fluent but semantically incorrect translations, while oscillatory hallucinations contain repetitive or nonsensical phrases. A critical finding of this work is that hallucinations can be amplified through widely used data augmentation or training techniques such as back-translation or knowledge distillation, which introduce noise that reinforces hallucination patterns in downstream models. This amplification effect suggests that careful data curation is necessary to prevent learned hallucinations from propagating across translation systems.
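As an illustration of how the oscillatory hallucinations described above might be flagged automatically, the following is a minimal heuristic sketch based on repeated n-grams. It is not the detection method of [265]; the function names, the whitespace tokenization, and the threshold of three repetitions are hypothetical choices. The heuristic cannot detect detached hallucinations, which are fluent and non-repetitive.

```python
from collections import Counter


def max_ngram_repeats(text, n=2):
    """Return the highest count of any single n-gram in the text."""
    tokens = text.split()
    ngrams = [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]
    if not ngrams:
        return 0
    return max(Counter(ngrams).values())


def looks_oscillatory(text, n=2, threshold=3):
    """Flag a translation if some n-gram repeats at least `threshold` times.

    The threshold is an illustrative assumption, not a tuned value.
    """
    return max_ngram_repeats(text, n) >= threshold


repetitive = "I went to the to the to the to the market"
normal = "I went to the market yesterday"

print(looks_oscillatory(repetitive))  # True
print(looks_oscillatory(normal))      # False
```

A filter of this kind could be applied to back-translated or distilled training data as one inexpensive curation step, although longer legitimate texts would likely need a length-aware threshold.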

While LLMs show stronger generalization and tend toward more creative generation, with higher fluency, increased translation non-monotonicity (greater paraphrasing and non-literalness), and insertion or deletion of content, they also suffer from occasional hallucination, particularly when prompt templates are poorly handled (prompt traps) [9]. LLMs also sometimes compromise faithfulness to the source sentence, suggesting limitations for high-stakes translation tasks requiring strict accuracy. Work on both massively multilingual NMT models and LLMs [266] shows that hallucinations occur more frequently in low-resource language pairs, particularly when translating from English into other languages. In these cases, models sometimes generate content that is entirely fabricated, misleading, or even toxic, reflecting biases present in the training data. The same work also finds that the nature of hallucinations differs between model architectures, indicating that NMT models and LLMs exhibit distinct error patterns due to their differing underlying mechanisms. It further finds that simply scaling up model size does not effectively reduce hallucinations when training data remains unchanged. However, diversifying training data and model training methodologies can improve translation accuracy and reduce the likelihood of such errors.

Unlike phrase-based machine translation (PBMT), which allowed for direct analysis of model predictions, NMT models lack such transparency and offer no comparable access to their subcomponents for interpretability, making it difficult to diagnose and address hallucinations effectively. This lack of explainability raises fundamental questions about how hallucinations occur and what interpretability methods can be developed to analyze and predict these failures. Addressing these issues requires refining training objectives beyond MLE, improving data quality and diversity, and developing more sophisticated evaluation frameworks that go beyond surface-level fluency. Future research should focus on enhancing model explainability, improving robustness to domain shifts, and designing better detection and mitigation strategies to ensure that multilingual translation systems remain accurate, trustworthy, and applicable across diverse linguistic contexts.

6. LLMs and MT Together

With their impressive performance across numerous languages, NMT models have transformed the landscape of MT from a back-end tool supporting human translators in computer-assisted translation (CAT) tools [267] into fully automated stand-alone services capable of handling translation tasks across diverse domains [4,268]. However, the emergence of LLMs introduces new possibilities, challenges, and shifts in how MT is approached.

One of the central questions in the era of LLMs is whether they can fully replace traditional NMT systems or whether they serve a complementary role. While LLMs can perform translation tasks with little to no task-specific training, dedicated bilingual or multilingual NMT systems still offer advantages in terms of efficiency, domain adaptation, and terminology control [269]. The distinction lies in their design: whereas NMT models are specifically optimized for translation and can be fine-tuned for domain-specific accuracy, LLMs approach translation as part of a broader multilingual understanding, excelling in generalization but often lacking consistency in terminology and alignment.

Fluency, once a significant challenge in machine translation, is largely solved by LLMs [270], which produce highly natural translations. However, this fluency can be deceptive: translations may sound impeccable without being accurate. Evaluating translation quality in this context requires shifting from traditional metrics that measure improvement to methods that systematically identify and analyze failure cases. Research suggests that LLM-generated translations often require human intervention, and assessing the effort needed for post-editing could serve as a more reliable measure of quality. Some evaluation frameworks, such as assessing the ability of native speakers to distinguish between human and machine translations, could also provide insights into the authenticity of generated text. Further investigations could measure the amount of revision required to post-edit a translation or ask raters to judge the perceived authenticity of generated text [271]. Investigating methods to learn from these corrections or from other types of human feedback also remains a promising research direction [272].
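One simple way to quantify post-editing effort is the number of token-level edits separating the raw MT output from its human-corrected version. The following is a minimal sketch under stated assumptions: it uses plain Levenshtein distance over whitespace tokens, normalized by the length of the post-edited text. Established measures such as HTER additionally account for block shifts, which this sketch omits. The function names and example sentences are illustrative.

```python
def token_edit_distance(a, b):
    """Levenshtein distance between two token lists (insert, delete, substitute)."""
    prev = list(range(len(b) + 1))
    for i, tok_a in enumerate(a, start=1):
        curr = [i]
        for j, tok_b in enumerate(b, start=1):
            cost = 0 if tok_a == tok_b else 1
            curr.append(min(prev[j] + 1,          # delete tok_a
                            curr[j - 1] + 1,      # insert tok_b
                            prev[j - 1] + cost))  # substitute or match
        prev = curr
    return prev[-1]


def post_edit_rate(mt_output, post_edited):
    """Edits per post-edited token; 0.0 means no correction was needed."""
    mt = mt_output.split()
    pe = post_edited.split()
    if not pe:
        raise ValueError("post_edited must contain at least one token")
    return token_edit_distance(mt, pe) / len(pe)


mt_output = "the cat sit on mat"
post_edited = "the cat sat on the mat"

# One substitution (sit -> sat) and one insertion (the): 2 edits over 6 tokens.
print(post_edit_rate(mt_output, post_edited))
```

A rate near zero indicates that the translation was usable almost as produced, while higher rates indicate heavier human intervention, regardless of how fluent the raw output appeared.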

Unlike earlier MT models, which offered limited user control and focused primarily on sentence-level translation, large language models (LLMs) introduce a new paradigm in which translation becomes an interactive and customizable process. Through prompt engineering, users can influence aspects such as tone, verbosity, register, and even stylistic coherence, allowing translations to be adapted to specific communicative contexts or target audiences. This flexibility is particularly relevant for discourse-level translation, where maintaining cohesion, coreference, and pragmatic appropriateness across sentences is crucial [273,274]. Unlike conventional MT systems, which typically operate in isolation at the sentence level, LLMs can leverage extended context windows to model inter-sentential relationships, although their performance on such phenomena remains variable and highly prompt-dependent [275]. Rather than replacing traditional translation workflows, this shift redefines the role of translation professionals: their expertise is increasingly critical not only for post-editing and quality control, but also for crafting effective prompts and ensuring that discourse-level features—such as anaphora, ellipsis, and stylistic continuity—are preserved in model outputs.

However, LLMs also introduce new challenges. One is the supervision problem: unlike traditional supervised MT systems trained on explicitly paired source–target examples, LLMs often rely on in-context learning or few-shot prompting, which provides weaker, transient forms of supervision. As a result, they can be prone to inconsistent or unpredictable behavior, especially when prompts are ambiguous or under-specified. While LLMs excel at adapting outputs to user-specified preferences, maintaining strict control over output fidelity and consistency remains difficult without robust prompt engineering or external constraints.
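The transient nature of this supervision can be seen in how a few-shot translation prompt is assembled: the demonstrations exist only as text in the prompt and leave the model parameters untouched. The following is a minimal sketch, assuming a hypothetical prompt template and function name. It is not the format of any particular model or API, and real systems may respond differently to different templates.

```python
def build_translation_prompt(source_text, src_lang, tgt_lang,
                             examples=(), register=None):
    """Assemble a few-shot translation prompt as plain text.

    `examples` is a sequence of (source, target) pairs that serve as
    in-context demonstrations. The template itself is a hypothetical choice.
    """
    lines = [f"Translate the following text from {src_lang} to {tgt_lang}."]
    if register:
        lines.append(f"Use a {register} register.")
    for src, tgt in examples:
        lines.append(f"{src_lang}: {src}")
        lines.append(f"{tgt_lang}: {tgt}")
    # The final source line is left without a target for the model to complete.
    lines.append(f"{src_lang}: {source_text}")
    lines.append(f"{tgt_lang}:")
    return "\n".join(lines)


prompt = build_translation_prompt(
    "Where is the train station?",
    src_lang="English",
    tgt_lang="German",
    examples=[("Good morning.", "Guten Morgen.")],
    register="formal",
)
print(prompt)
```

Because the demonstrations and the register instruction are the only task-specific signal, changing or omitting them may change the output, which is one source of the inconsistency noted above.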

Moreover, the scalability and cost of LLMs raise concerns. Dedicated neural MT models, though smaller in size, often outperform LLMs on narrow translation tasks, particularly when trained on high-quality parallel corpora for specific language pairs or domains [275,276]. These systems offer strong performance with lower inference costs and more stable output distributions. By contrast, the general-purpose nature of LLMs entails a trade-off: while they offer greater flexibility and adaptability—useful for user-guided or context-sensitive translation—they often do so at the expense of deterministic control and efficiency. This tension underscores the need to evaluate the role of LLMs in MT not as replacements for traditional systems, but as complementary tools with distinct strengths and limitations.