An Approximate Algorithm for Sparse Distributionally Robust Optimization

Abstract

1. Introduction

2. The Sparse DRO with CVaR (SDRPC) Model

3. The Discretization Scheme

4. The Approximate Discretization Algorithm

Algorithm 1. The approximate discretization (AD) algorithm for DRO

5. Applications and Numerical Results

5.1. Applications

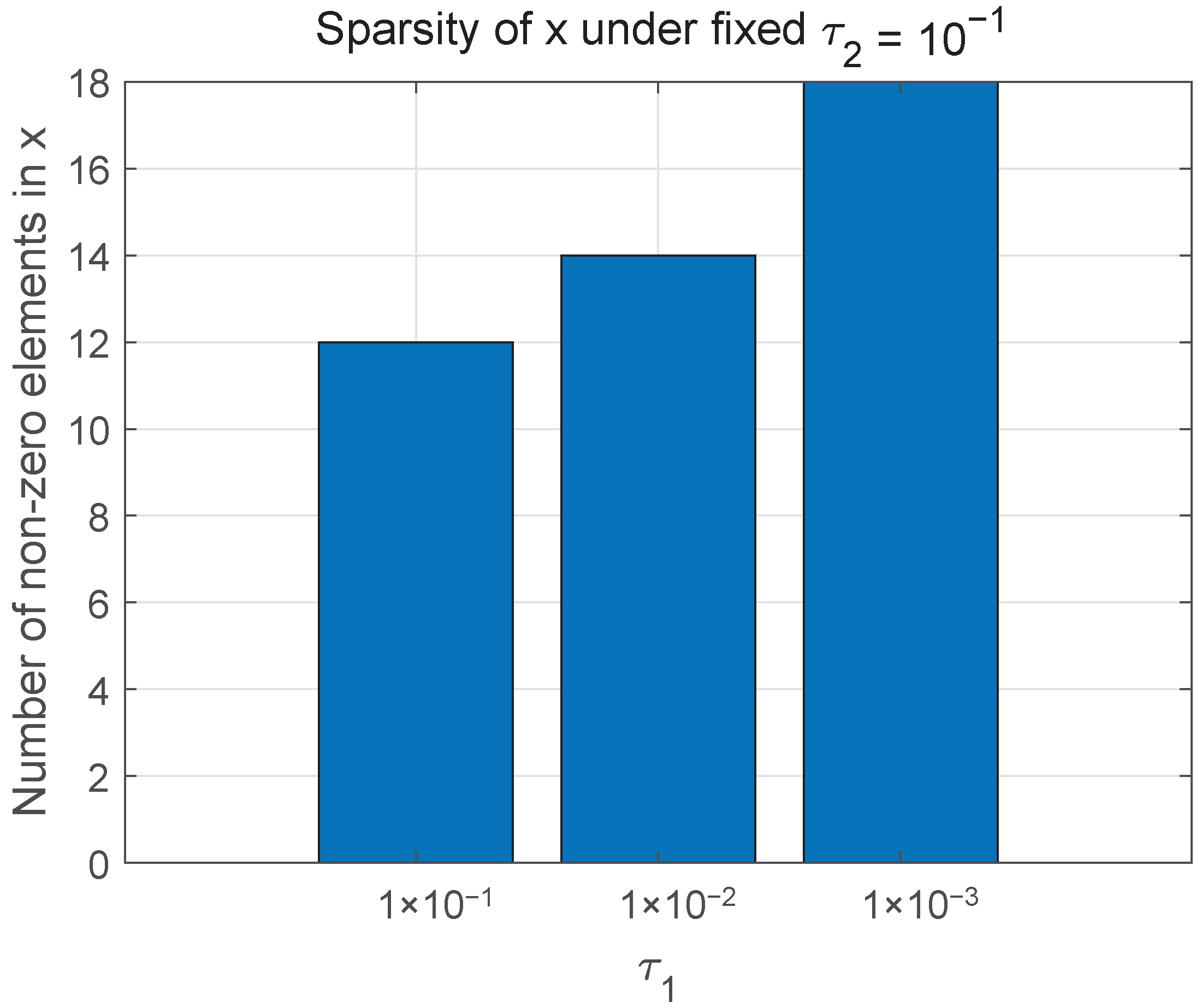

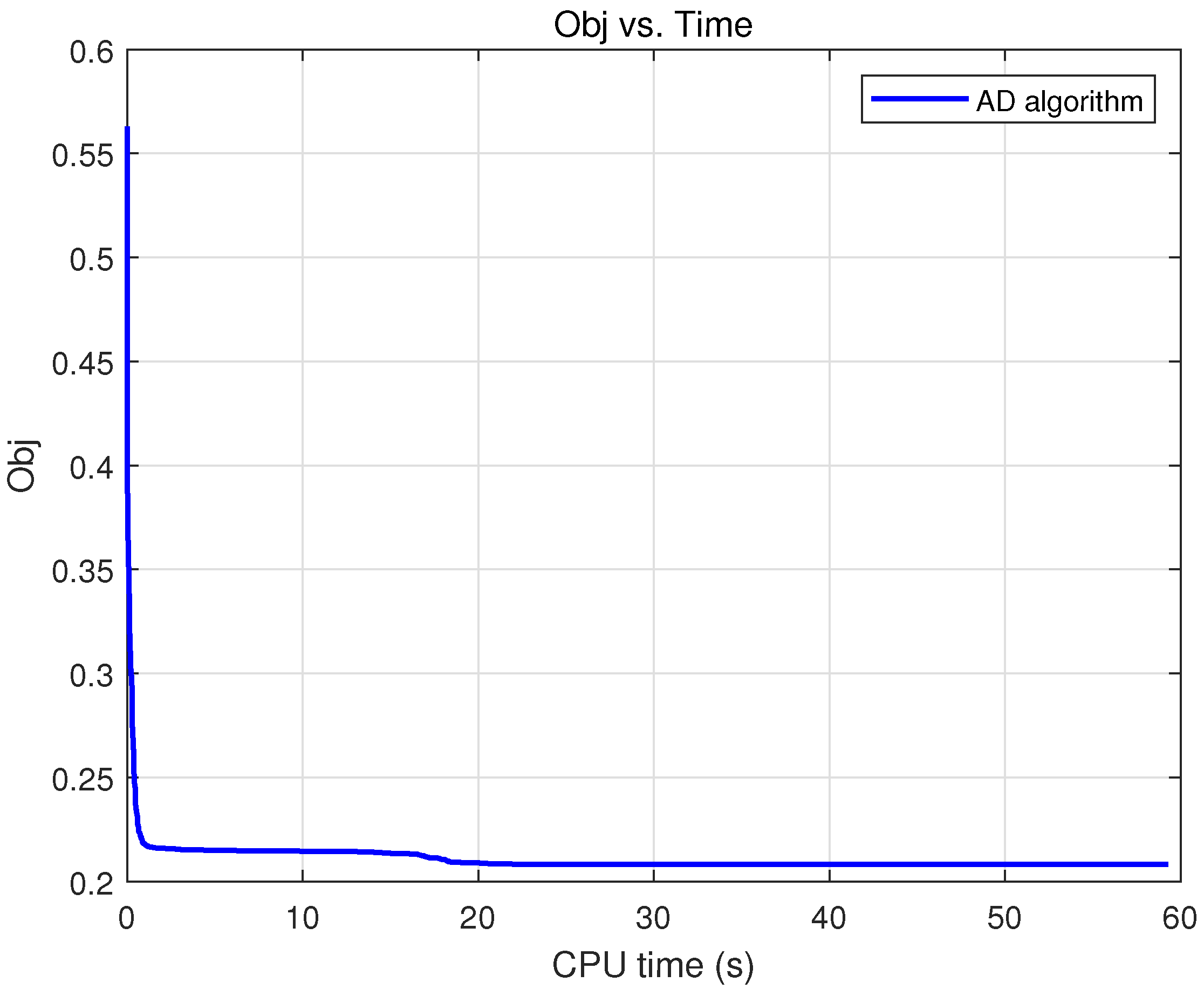

5.2. Numerical Results

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

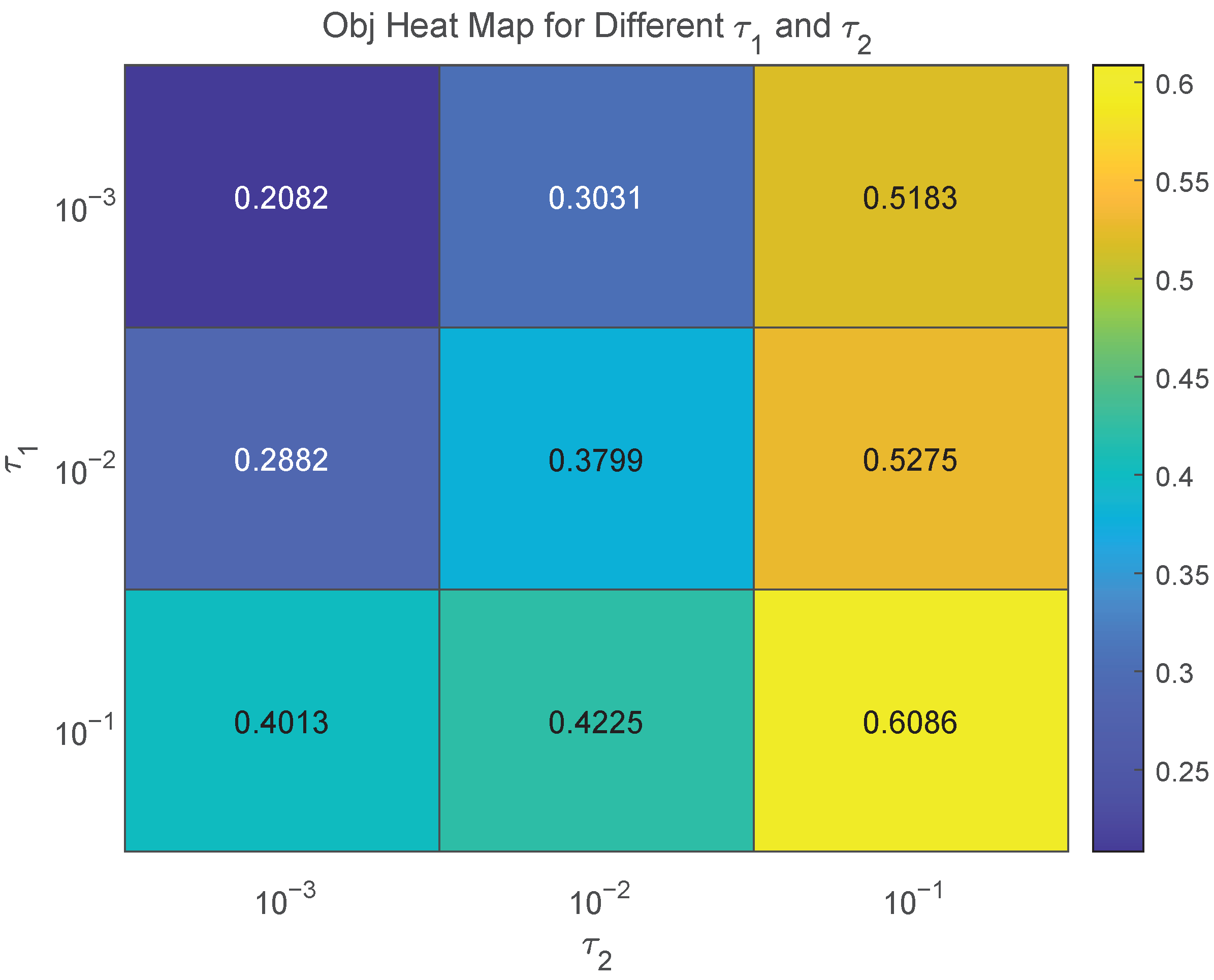

| Obj | CPU Time |
|---|---|
| 0.2082 | 59.9984 |
| 0.3031 | 74.0531 |
| 0.5183 | 78.4453 |
| 0.2882 | 68.1875 |
| 0.3799 | 76.6968 |
| 0.5275 | 79.2125 |
| 0.4013 | 69.6593 |
| 0.4225 | 77.2062 |
| 0.6086 | 80.0687 |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, R.; Hu, Y.; Liu, C.; Gao, Q. An Approximate Algorithm for Sparse Distributionally Robust Optimization. Information 2025, 16, 676. https://doi.org/10.3390/info16080676
