Mobile Platform for Continuous Screening of Clear Water Quality Using Colorimetric Plasmonic Sensing

Abstract

1. Introduction

2. Materials and Methods

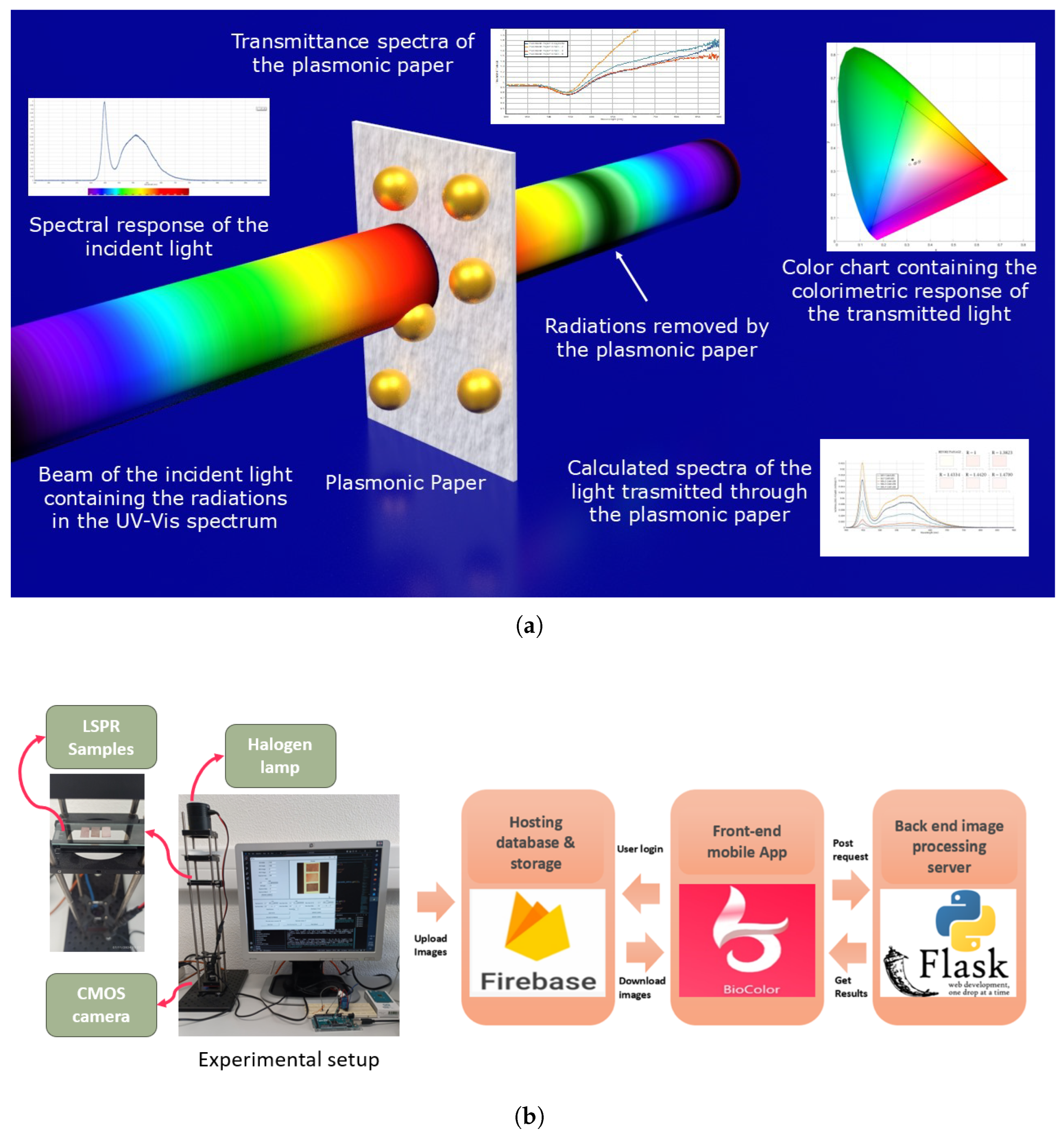

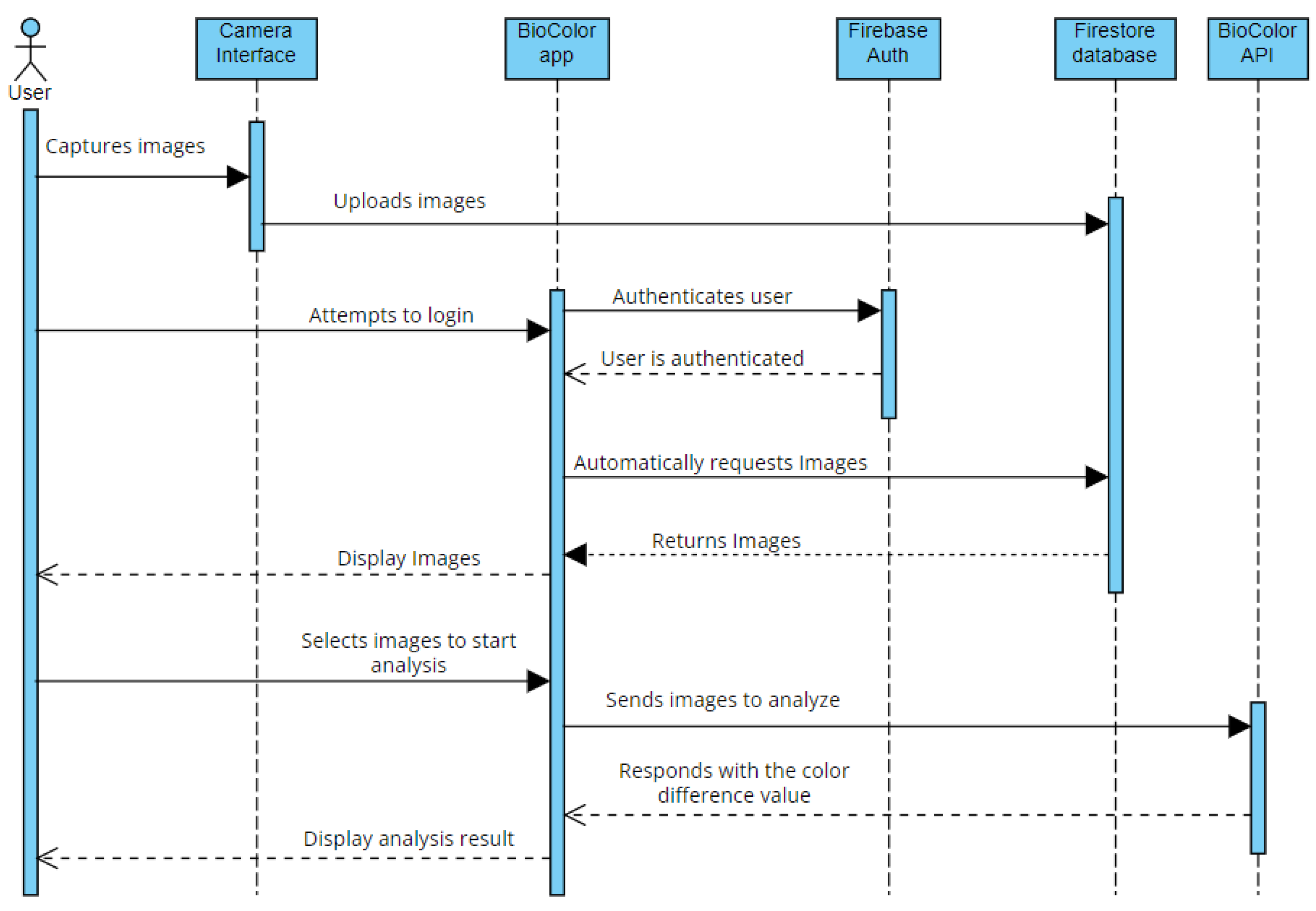

2.1. System Architecture

- Experimental setup: LSPR samples made from gold nanoparticle (AuNP) plasmonic paper, a halogen lamp positioned directly above the samples, and a CMOS camera placed below them to capture the transmitted light. This top-down illumination and bottom-up imaging configuration minimizes the influence of external light variability.

- Hosted database: securely stores the captured images using the Firebase cloud service.

- Mobile application: displays the sample images together with the corresponding sensing results.

- API server: runs the image processing algorithms.

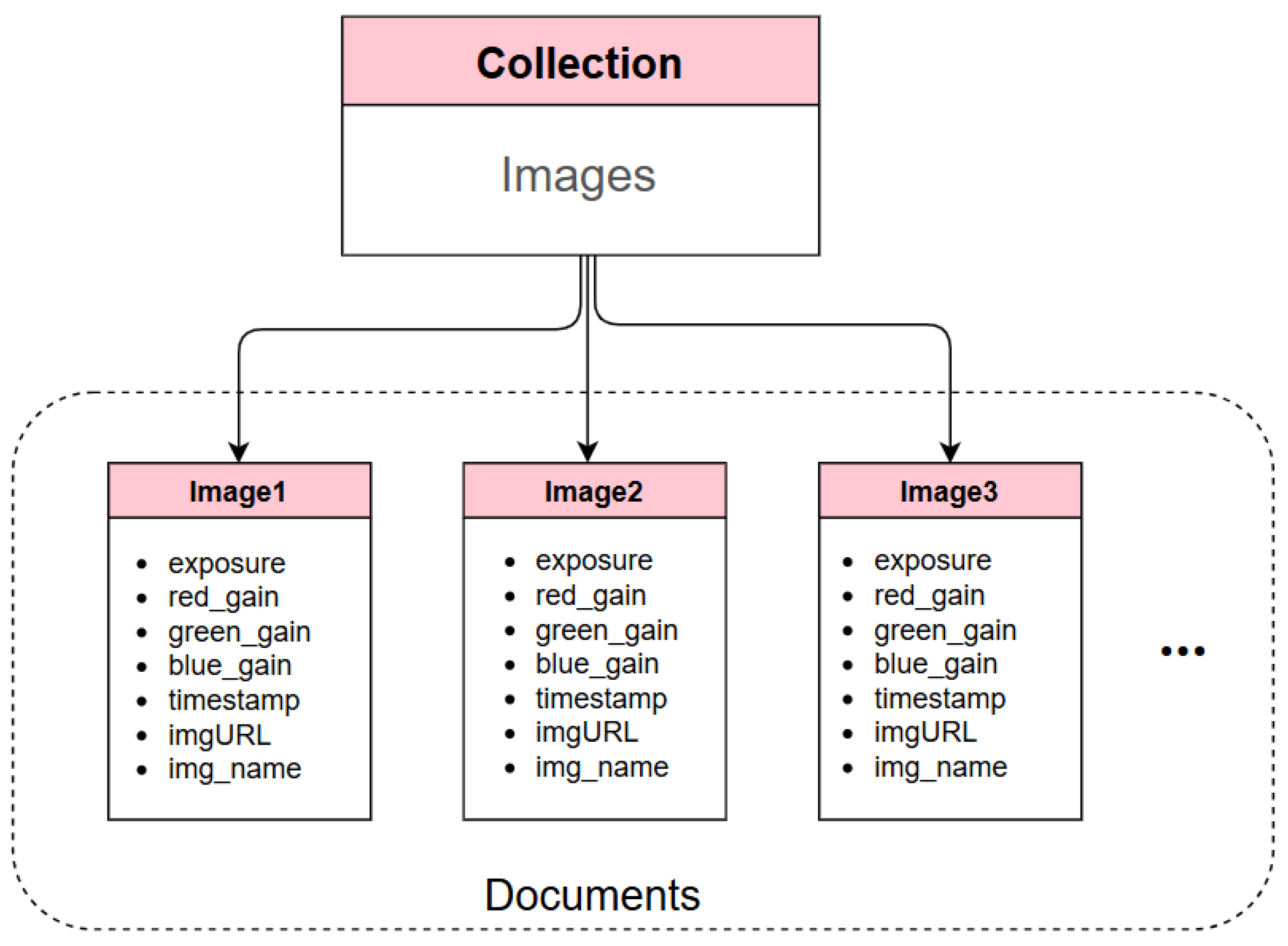

2.2. Firebase Database

2.3. Mobile App

Flutter BLoC State Management

- The User Interface (UI) layer contains all widget files.

- The Business Logic Component layer contains all BLoCs.

- The Repository layer (or data layer) handles the communication with external databases and APIs.

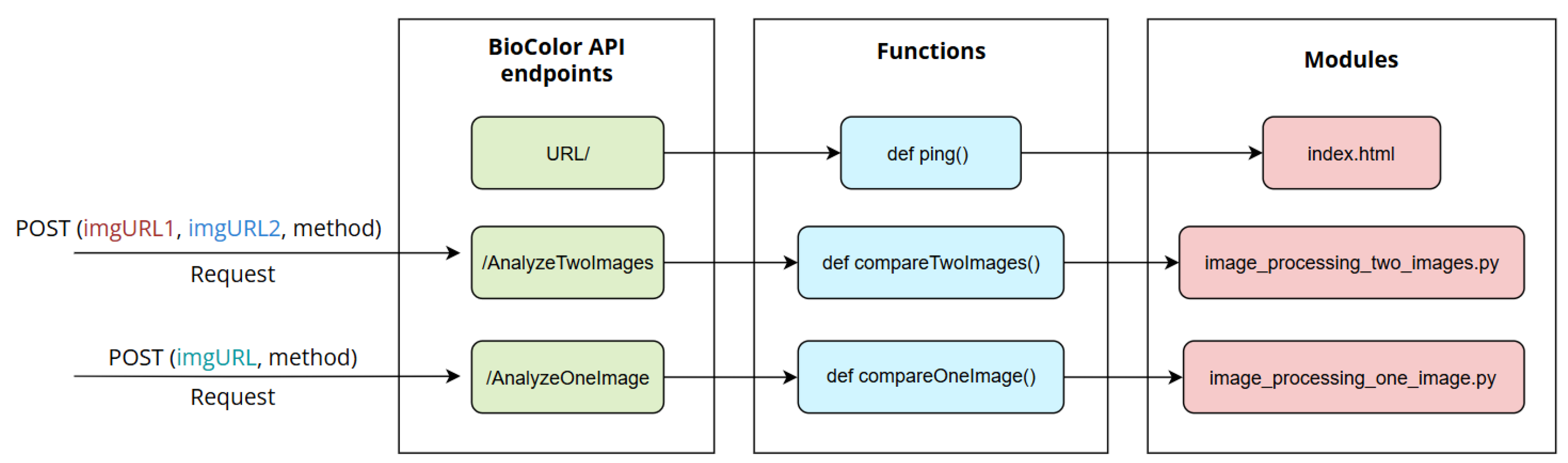

2.4. BioColor Flask API

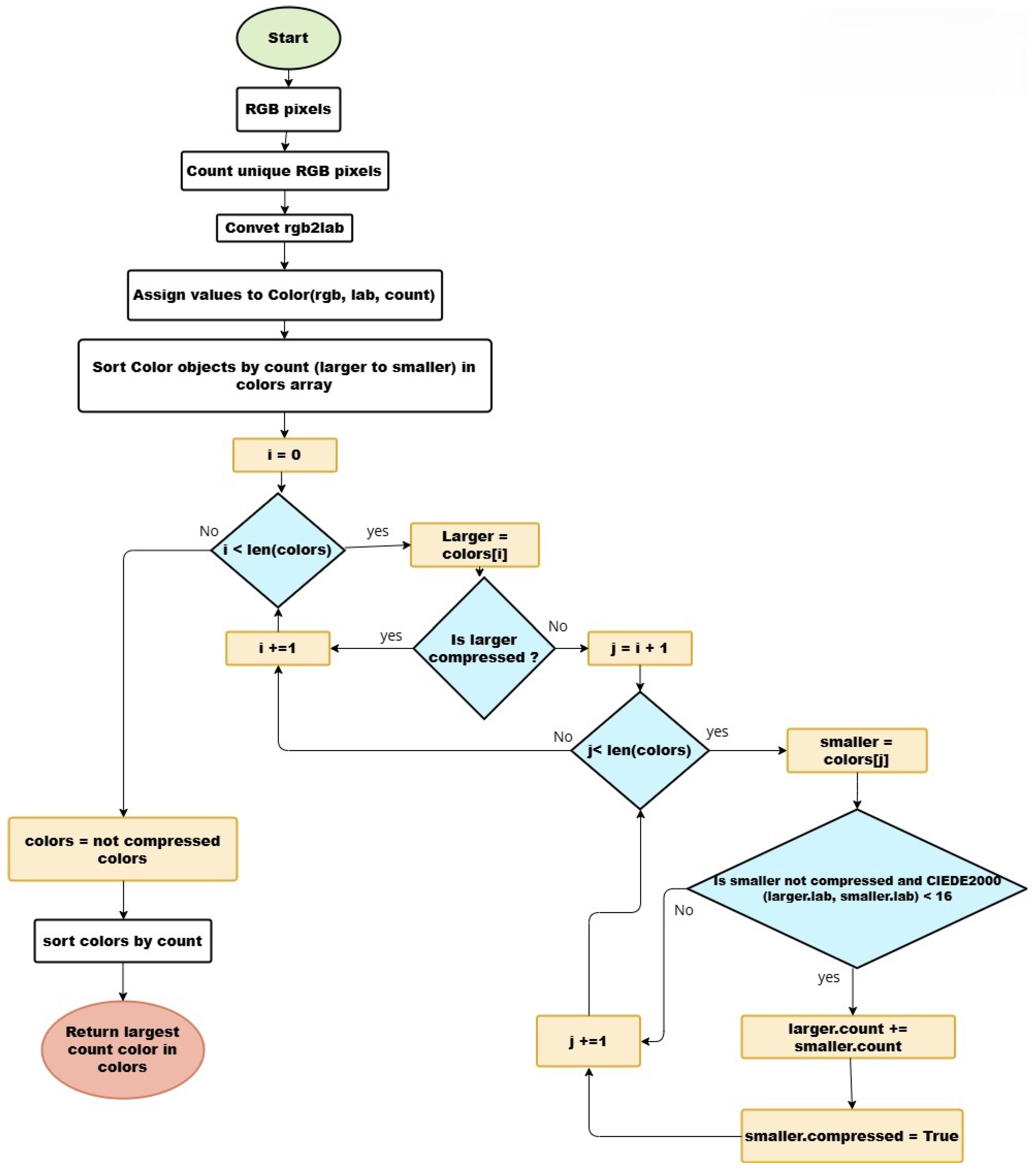

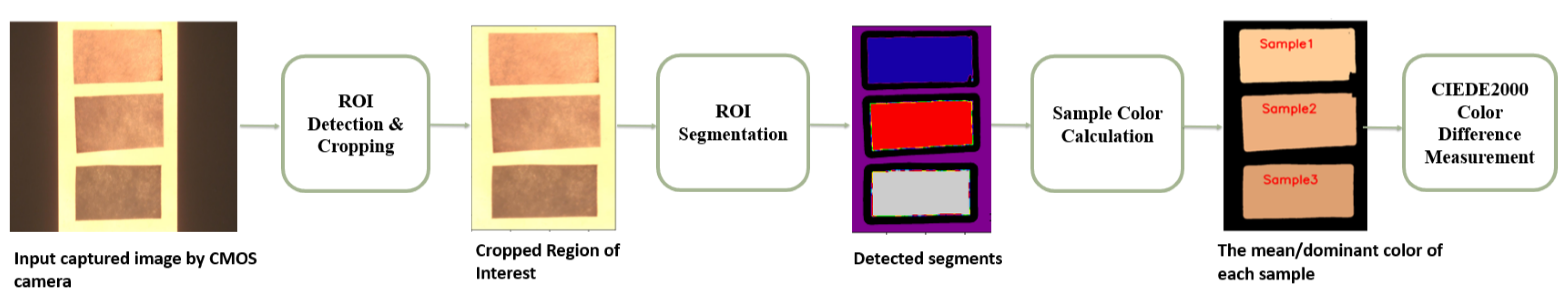

2.5. Image Processing and Color Analysis

3. Results

3.1. Image Processing Evaluation

- A dry substrate, surrounded by air (refractive index 1.0).

- A substrate moistened with water (refractive index 1.33).

- A substrate moistened with a mixture of glycerol and ethanol, referred to as sol.4 (refractive index 1.44).

- A substrate moistened with glycerol (refractive index 1.47).

3.2. User Experience Evaluation

- User registration process using email and password;

- Understanding the types of captured sample images;

- Viewing the camera settings of an image;

- Performing color comparison (three samples displayed in one image);

- Understanding analysis results (three samples displayed in one image);

- Performing color comparison (one sample image compared with another);

- Understanding analysis results (one sample image compared with another).

3.3. Participant Information

- 25% (3) of participants were under 21 years old;

- 33.3% (4) were between 22 and 31 years;

- 8.3% (1) were between 32 and 41 years;

- 16.7% (2) were between 42 and 51 years;

- 16.7% (2) were between 52 and 61 years.
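As a quick arithmetic check, the percentages above follow directly from the counts, out of 12 participants in total (3 + 4 + 1 + 2 + 2). The age-band keys below simply mirror the list.

```python
# Participant counts per age band, as listed above.
age_counts = {
    "under 21": 3,
    "22-31": 4,
    "32-41": 1,
    "42-51": 2,
    "52-61": 2,
}

total = sum(age_counts.values())
# Share of participants in each band, rounded to one decimal place as in the text.
percentages = {band: round(100 * n / total, 1) for band, n in age_counts.items()}

print(total)  # 12
print(percentages)
```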

3.4. Task Testing

- Mean: Indicates whether users, on average, found a task easy or difficult. For example, a mean of 4.2 suggests that the task was generally easy.

- Standard Deviation: Shows how much the responses varied. For instance, a value of 0.34 indicates similar opinions, whereas a value of 1.10 indicates mixed opinions, with some users finding the task easy and others finding it difficult.

- Mode: Represents the most frequently chosen response.
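The three statistics can be computed with Python's standard `statistics` module. This is a minimal sketch: the ratings are hypothetical (not the study's raw data), and because the text does not say whether a population or a sample standard deviation was reported, both are shown.

```python
from statistics import mean, multimode, pstdev, stdev


def summarize_likert(responses: list[int]) -> dict:
    """Summarize 1-5 Likert ratings for one task (or one SUS question)."""
    return {
        "mean": round(mean(responses), 2),
        # Population SD divides by n; sample SD divides by n - 1.
        "sd_population": round(pstdev(responses), 2),
        "sd_sample": round(stdev(responses), 2),
        # multimode returns every most frequent rating, so a tie such as
        # the "1 & 2" reported for SUS question 10 comes back as two values.
        "mode": multimode(responses),
    }


# Hypothetical ratings from 12 participants (not the study's raw data).
ratings = [5] * 11 + [4]
print(summarize_likert(ratings))
```

With these hypothetical ratings the population SD is 0.28 and the sample SD is 0.29: at n = 12 the two conventions can differ in the second decimal place.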

3.5. System Usability Scale (SUS) Responses

- I think that I would like to use this system frequently.

- I found the system unnecessarily complex.

- I thought the system was easy to use.

- I think that I would need technical support to use this system.

- I found the various functions in this system well integrated.

- I thought there was too much inconsistency in this system.

- I would imagine that most people would learn to use this system quickly.

- I found the system very cumbersome to use.

- I felt very confident using the system.

- I needed to learn a lot of things before I could start using the system.
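The ten statements above are the standard SUS items, which alternate between positive (odd) and negative (even) wording. A minimal sketch of Brooke's standard scoring rule for one respondent follows; the example answers are hypothetical.

```python
def sus_score(responses: list[int]) -> float:
    """Convert one respondent's ten 1-5 SUS answers into a 0-100 score.

    Standard scoring (Brooke, 1996): odd-numbered, positively worded items
    contribute (response - 1); even-numbered, negatively worded items
    contribute (5 - response); the sum (0-40) is multiplied by 2.5.
    """
    if len(responses) != 10:
        raise ValueError("SUS needs exactly 10 responses")
    if any(not 1 <= r <= 5 for r in responses):
        raise ValueError("SUS responses must be between 1 and 5")
    total = 0
    for item, r in enumerate(responses, start=1):
        total += (r - 1) if item % 2 == 1 else (5 - r)
    return total * 2.5


# Hypothetical answers from a single respondent to the ten statements above.
print(sus_score([4, 2, 4, 1, 4, 2, 5, 2, 4, 2]))  # 80.0
```

Because the score is linear in the item responses, the mean SUS score across participants equals the score obtained by applying the same transformation to the per-question means.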

4. Discussion

4.1. Analysis of Task Testing and Usability Results

4.2. Limitations and Future Considerations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| PPB | Parts Per Billion |
| DIC | Digital Image Colorimetry |
| LSPR | Localized Surface Plasmon Resonance |
| AuNPs | Gold Nanoparticles |
| API | Application Programming Interface |
| CMOS | Complementary Metal-Oxide-Semiconductor |
| URL | Uniform Resource Locator |
| NoSQL | Not Only SQL (non-relational database) |
| SDK | Software Development Kit |
| BLoC | Business Logic Component |
| UI | User Interface |
| RESTful | Conforming to Representational State Transfer (REST) |
| HTTP | Hypertext Transfer Protocol |

Appendix A. Image Processing Techniques

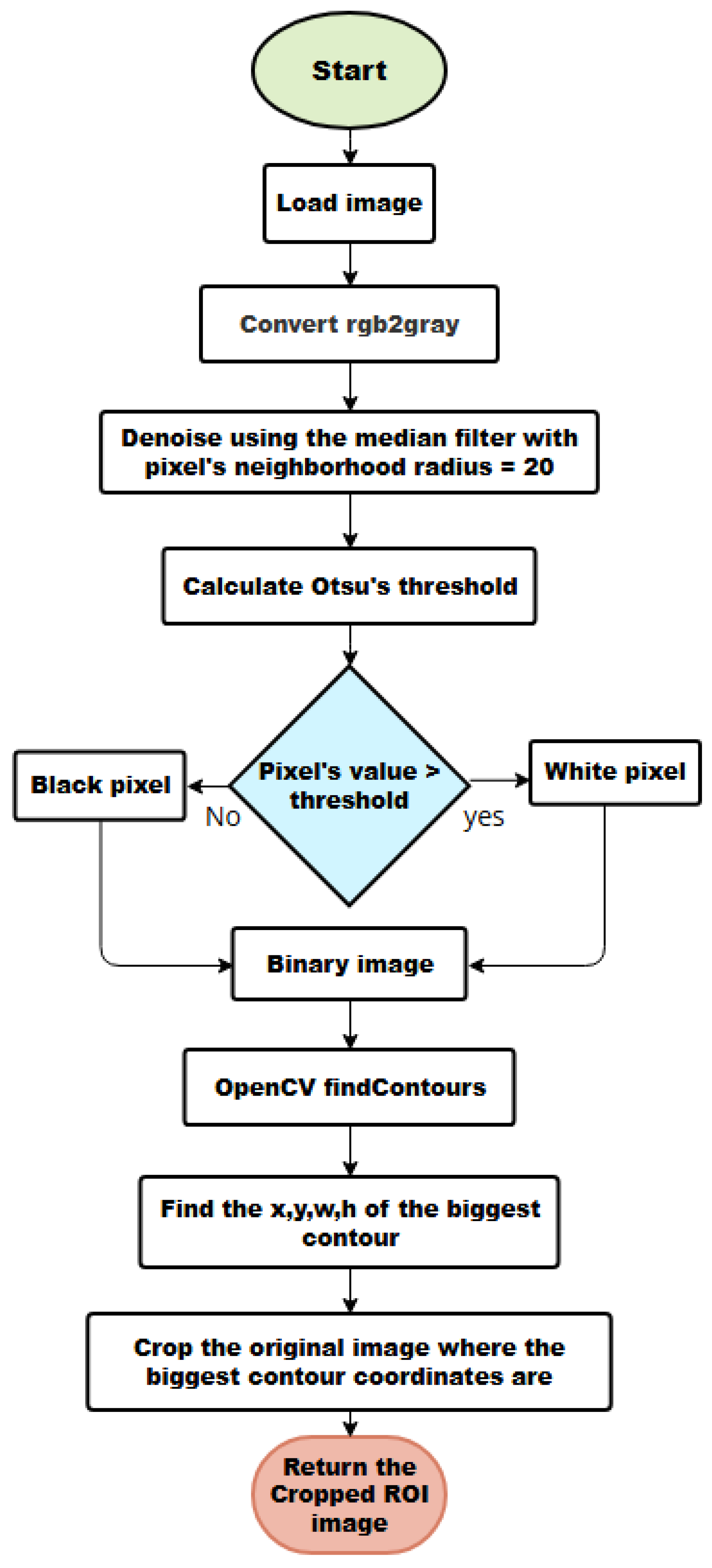

Appendix A.1. ROI Detection and Cropping

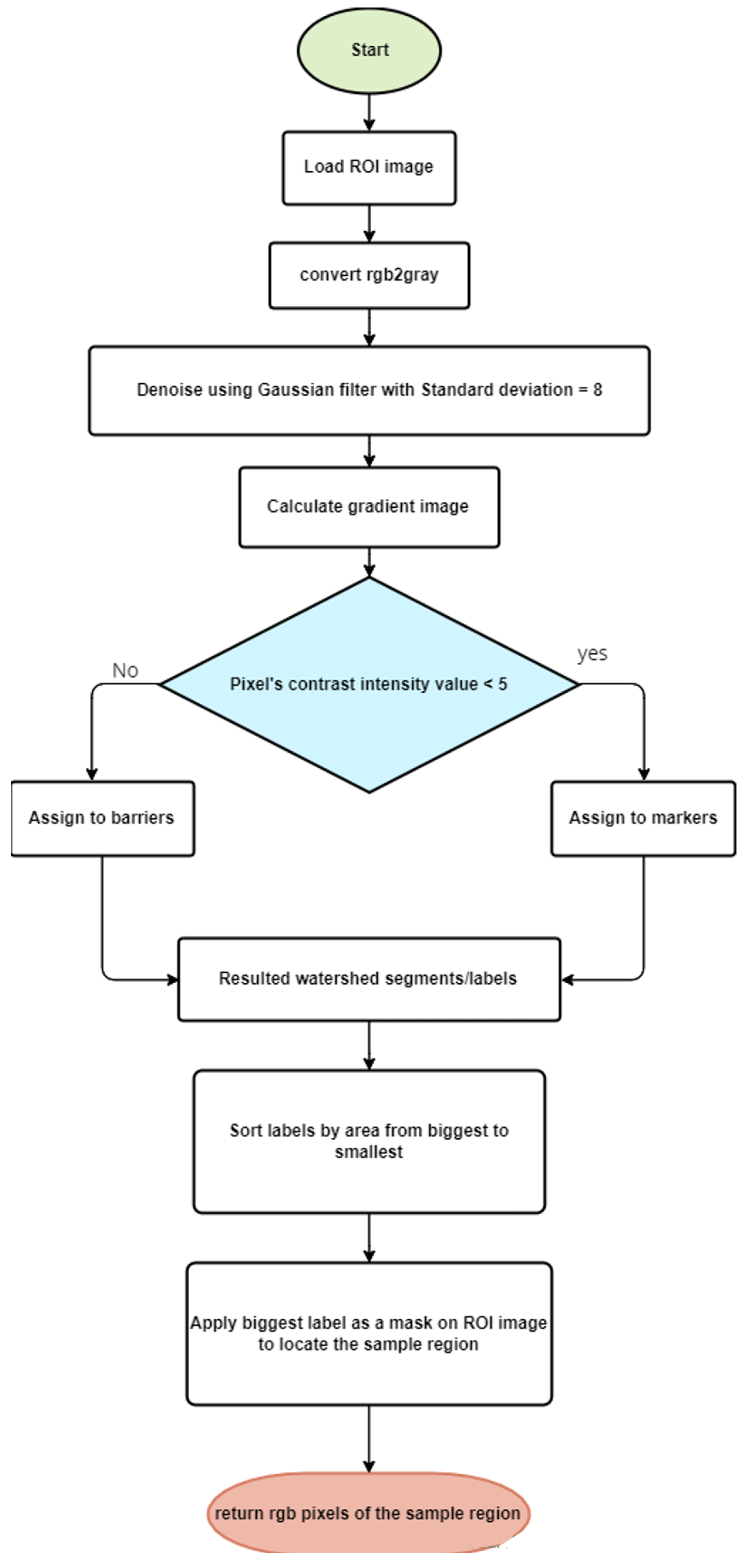
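The details of this step are shown only in the figure. The following is a minimal sketch, not the authors' detector, assuming that the illuminated sample region is brighter than the surrounding holder and that a fixed brightness threshold (the hypothetical value 60 on a 0-255 scale) separates the two. Images are plain nested lists of RGB tuples to keep the sketch dependency-free.

```python
Pixel = tuple[int, int, int]
Image = list[list[Pixel]]


def brightness(p: Pixel) -> float:
    """Simple average of the R, G and B channels (0-255)."""
    return sum(p) / 3


def crop_to_roi(image: Image, threshold: float = 60.0) -> Image:
    """Crop to the bounding box of all pixels brighter than `threshold`.

    Returns an empty list when no pixel passes the threshold.
    """
    if not image:
        return []
    rows = [y for y, row in enumerate(image)
            if any(brightness(p) > threshold for p in row)]
    cols = [x for x in range(len(image[0]))
            if any(brightness(row[x]) > threshold for row in image)]
    if not rows or not cols:
        return []
    top, bottom, left, right = rows[0], rows[-1], cols[0], cols[-1]
    return [row[left:right + 1] for row in image[top:bottom + 1]]


# Usage: a 5x5 dark frame with a bright 2x3 patch at rows 1-2, columns 1-3.
dark, bright = (10, 10, 10), (200, 150, 90)
frame = [[dark] * 5 for _ in range(5)]
for y in (1, 2):
    for x in (1, 2, 3):
        frame[y][x] = bright
roi = crop_to_roi(frame)
print(len(roi), len(roi[0]))  # 2 3
```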

Appendix A.2. ROI Segmentation

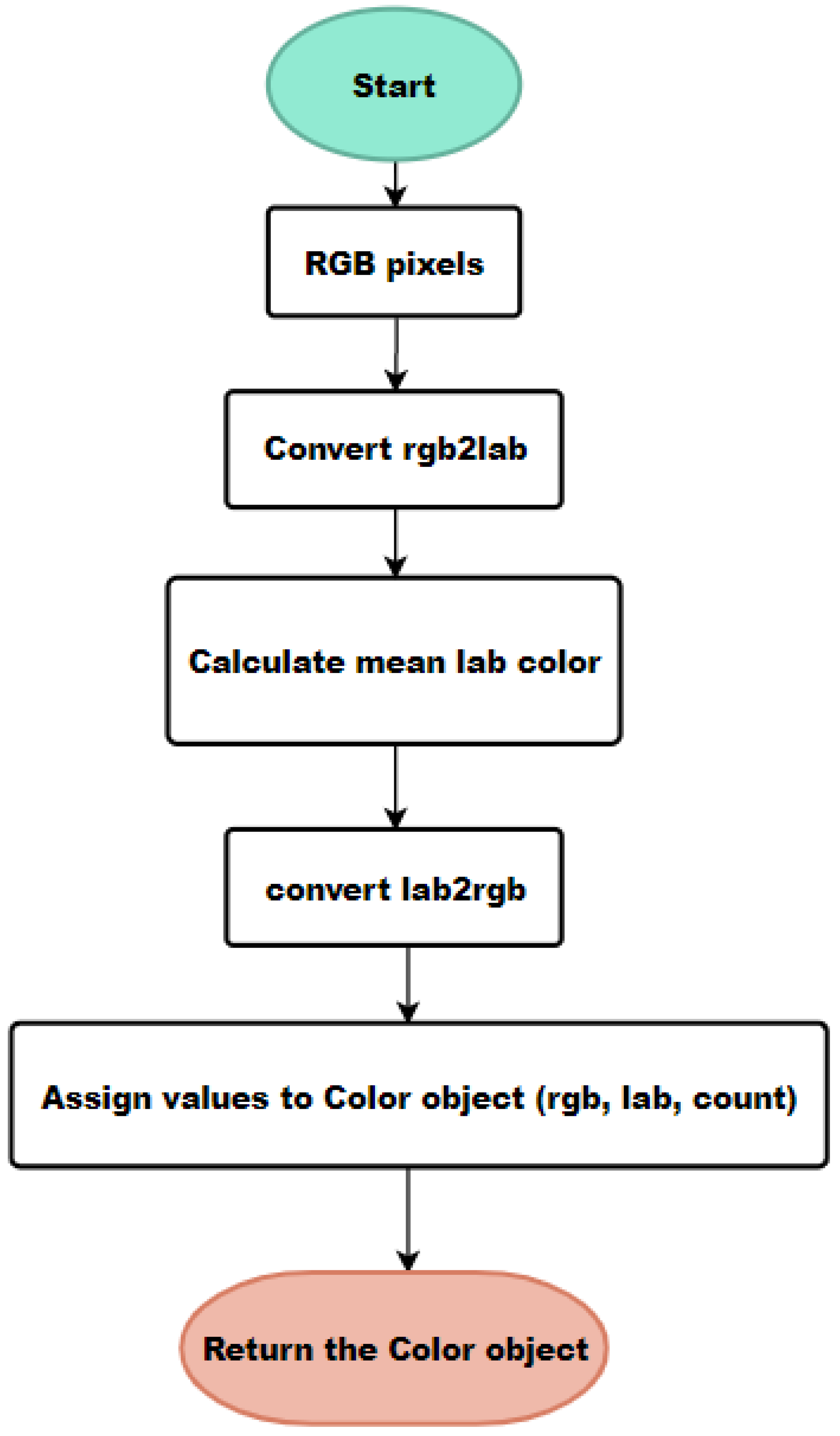
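The reference list cites the watershed algorithm of Vincent and Soille, which suggests that watershed segmentation may be used here. The sketch below substitutes a simpler technique: 4-connected component labeling of a binary mask (for example, the thresholded ROI). This is sufficient only when the sample spots do not touch; touching spots are the case where watershed helps. In scikit-image, `skimage.measure.label` and `skimage.segmentation.watershed` provide both operations.

```python
from collections import deque


def label_components(mask: list[list[bool]]) -> tuple[list[list[int]], int]:
    """Label 4-connected foreground regions of a binary mask.

    Returns (labels, count): labels[y][x] is 0 for background and 1..count
    for each region, numbered in raster-scan order of their first pixel.
    """
    height = len(mask)
    width = len(mask[0]) if height else 0
    labels = [[0] * width for _ in range(height)]
    count = 0
    for y in range(height):
        for x in range(width):
            if not mask[y][x] or labels[y][x]:
                continue
            count += 1  # start a new region and flood-fill it breadth-first
            labels[y][x] = count
            queue = deque([(y, x)])
            while queue:
                cy, cx = queue.popleft()
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if 0 <= ny < height and 0 <= nx < width and mask[ny][nx] and not labels[ny][nx]:
                        labels[ny][nx] = count
                        queue.append((ny, nx))
    return labels, count


# Usage: three separated spots in one row, as with three samples in one image.
row = [True, True, False, True, False, True, True]
labels, n = label_components([[False] * 7, row, [False] * 7])
print(n)          # 3
print(labels[1])  # [1, 1, 0, 2, 0, 3, 3]
```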

Appendix A.3. Sample Color Calculation
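The Analysis page offers a "mean" or a "dominant" color for each sample. The mean color is the per-channel average over the sample's pixels. The text does not define "dominant", so the sketch below makes an assumption: it takes the most frequent color after quantizing each channel into bins of a hypothetical width of 16 levels (k-means clustering is another common choice). Before colors are compared, they would typically be converted to CIELAB, for example with `skimage.color.rgb2lab`.

```python
from collections import Counter

Pixel = tuple[int, int, int]


def mean_color(pixels: list[Pixel]) -> tuple[float, float, float]:
    """Per-channel arithmetic mean of the RGB values inside a sample region."""
    if not pixels:
        raise ValueError("region contains no pixels")
    n = len(pixels)
    r = sum(p[0] for p in pixels) / n
    g = sum(p[1] for p in pixels) / n
    b = sum(p[2] for p in pixels) / n
    return (r, g, b)


def dominant_color(pixels: list[Pixel], bin_size: int = 16) -> Pixel:
    """Most frequent color after quantizing each channel into `bin_size`-wide bins.

    Returns the center of the winning bin; ties go to the bin seen first.
    """
    if not pixels:
        raise ValueError("region contains no pixels")
    counts = Counter(tuple(channel // bin_size for channel in p) for p in pixels)
    winning_bin, _ = counts.most_common(1)[0]
    return tuple(b * bin_size + bin_size // 2 for b in winning_bin)


# Usage: three orange-ish pixels plus one dark outlier (e.g. dust on the paper).
region = [(200, 100, 50)] * 3 + [(10, 10, 10)]
print(mean_color(region))      # (152.5, 77.5, 40.0)
print(dominant_color(region))  # (200, 104, 56)
```

The example shows the design trade-off: a single outlier pulls the mean color noticeably, while the dominant color is unaffected by it but is limited to the resolution of the bins.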

References

- Bline, A.P.; DeWitt, J.C.; Kwiatkowski, C.F.; Pelch, K.E.; Reade, A.; Varshavsky, J.R. Public Health Risks of PFAS-Related Immunotoxicity Are Real. Curr. Environ. Health Rep. 2024, 11, 118.

- Reeve, R.N. Introduction. In Introduction to Environmental Analysis; John Wiley and Sons Ltd.: Chichester, UK, 2002; pp. 2–4.

- Alberti, G.; Zanoni, C.; Magnaghi, L.R.; Biesuz, R. Disposable and Low-Cost Colorimetric Sensors for Environmental Analysis. Int. J. Environ. Res. Public Health 2020, 17, 8331.

- Chen, J.; Chen, S.; Fu, R.; Li, D.; Jiang, H.; Wang, C.; Peng, Y.; Jia, K.; Hicks, B.J. Remote Sensing Big Data for Water Environment Monitoring: Current Status, Challenges, and Future Prospects. Earth’s Future 2022, 10, e2021EF002289.

- Dharshani, J.; Annamalai, S. Cloud-Based Effective Environmental Monitoring of Temperature, Humidity and Air Quality Using IoT Sensors. In Proceedings of the 5th International Conference on Information Management & Machine Intelligence (ICIMMI 2023), New York, NY, USA, 23–25 November 2023; Association for Computing Machinery: New York, NY, USA, 2024; pp. 1–6.

- Dharshani, J.; Annamalai, S. Data Collection and Analysis Using the Mobile Application for Environmental Monitoring. Procedia Comput. Sci. 2015, 56, 532–537.

- Zhang, Y.; Wang, X.; Li, J.; Liu, Y.; Zhang, Z.; Li, L.; Li, Y.; Wang, Y. Recent Advancements of Smartphone-Based Sensing Technology for Environmental Monitoring. Trends Environ. Anal. Chem. 2024, 31, e00136.

- Feng, L.; You, Y.; Liao, W.; Pang, J.; Hu, R.; Feng, L. Multi-Scale Change Monitoring of Water Environment Using Cloud Computing in Optimal Resolution Remote Sensing Images. Energy Rep. 2022, 8, 13610–13620.

- Velasco, A.; Ferrero, R.; Gandino, F.; Montrucchio, B.; Rebaudengo, M. A Mobile and Low-Cost System for Environmental Monitoring: A Case Study. Sensors 2016, 16, 710.

- Adjovu, G.E.; Stephen, H.; James, D.; Ahmad, S. Overview of the Application of Remote Sensing in Effective Monitoring of Water Quality Parameters. Remote Sens. 2023, 15, 1938.

- Fan, Y.; Li, J.; Guo, Y.; Xie, L.; Zhang, G. Digital Image Colorimetry on Smartphone for Chemical Analysis: A Review. Measurement 2021, 171, 108829.

- Polat, F. Simple, Accurate, and Precise Detection of Total Nitrogen in Surface Waters Using a Smartphone-Based Analytical Method. Anal. Sci. 2025, 41, 281–287.

- Zhang, H.-Y.; Zhang, Y.; Lai, D.; Chen, L.-Q.; Zhou, L.-Q.; Tao, C.-L.; Fang, Z.; Zhu, R.-R.; Long, W.-Q.; Liu, J.-W.; et al. Real-Time Smartphone-Based Multi-Parameter Detection of Nitrite, Ammonia Nitrogen, and Total Phosphorus in Water. Anal. Methods 2025, 17, 5683–5696.

- Zamora, E. Using Image Processing Techniques to Estimate the Air Quality. McNair J. 2012, 5, 1–10. Available online: https://oasis.library.unlv.edu/mcnair_posters/20/ (accessed on 9 August 2025).

- Sarikonda, A.; Rafi, R.; Schuessler, C.; Mouchtouris, N.; Bray, D.P.; Farrell, C.J.; Evans, J.J. Smartphone Applications for Remote Monitoring of Patients After Transsphenoidal Pituitary Surgery: A Narrative Review of Emerging Technologies. World Neurosurg. 2024, 191, 213–224.

- Kanchi, S.; Sabela, M.I.; Mdluli, P.S.; Inamuddin; Bisetty, K. Smartphone-Based Bioanalytical and Diagnosis Applications: A Review. Biosens. Bioelectron. 2018, 102, 136–149.

- Piriya, V.S.A.; Joseph, P.; Daniel, S.C.G.K.; Lakshmanan, S.; Kinoshita, T.; Muthusamy, S. Colorimetric Sensors for Rapid Detection of Various Analytes. Mater. Sci. Eng. C Mater. Biol. Appl. 2017, 78, 1231–1245.

- Khurana, K.; Jaggi, N. Localized Surface Plasmonic Properties of Au and Ag Nanoparticles for Sensors: A Review. Plasmonics 2021, 16, 981–999.

- Csáki, A.; Stranik, O.; Fritzsche, W. Localized Surface Plasmon Resonance Based Biosensing. Expert Rev. Mol. Diagn. 2018, 18, 279–296.

- Alberti, G.; Zanoni, C.; Magnaghi, L.R.; Biesuz, R. Gold and Silver Nanoparticle-Based Colorimetric Sensors: New Trends and Applications. Chemosensors 2021, 9, 305.

- Serafinelli, C.; Fantoni, A.; Alegria, E.C.; Vieira, M. Hybrid Nanocomposites of Plasmonic Metal Nanostructures and 2D Nanomaterials for Improved Colorimetric Detection. Chemosensors 2022, 10, 237.

- Wang, L.; Hasanzadeh Kafshgari, M.; Meunier, M. Optical Properties and Applications of Plasmonic-Metal Nanoparticles. Adv. Funct. Mater. 2020, 30, 2005400.

- Serafinelli, C.; Fantoni, A.; Alegria, E.; Vieira, M. Recent Progresses in Plasmonic Biosensors for Point-of-Care (POC) Devices: A Critical Review. Chemosensors 2023, 11, 303.

- Ramirez-Priego, P.; Mauriz, E.; Giarola, J.F.; Lechuga, L.M. Overcoming Challenges in Plasmonic Biosensors Deployment for Clinical and Biomedical Applications: A Systematic Review and Meta-Analysis. Sens. Bio-Sens. Res. 2024, 46, 100717.

- Priyadarshini, E.; Pradhan, N. Gold Nanoparticles as Efficient Sensors in Colorimetric Detection of Toxic Metal Ions: A Review. Sens. Actuators B Chem. 2017, 238, 888–902.

- Bendicho, C.; Lavilla, I.; La Calle, I.D.; Romero, V. Paper-Based Analytical Devices for Colorimetric and Luminescent Detection of Mercury in Waters: An Overview. Sensors 2021, 21, 7571.

- Firdaus, M.L.; Aprian, A.; Meileza, N.; Hitsmi, M.; Elvia, R.; Rahmidar, L.; Khaydarov, R. Smartphone Coupled with a Paper-Based Colorimetric Device for Sensitive and Portable Mercury Ion Sensing. Chemosensors 2019, 7, 25.

- Aqillah, F.; Permana, M.D.; Eddy, D.R.; Firdaus, M.L.; Takei, T.; Rahayu, I. Detection and Quantification of Cu²⁺ Ion Using Gold Nanoparticles via Smartphone-Based Digital Imaging Colorimetry Technique. Results Chem. 2024, 7, 101418.

- Gan, Y.; Liang, T.; Hu, Q.; Zhong, L.; Wang, X.; Wan, H.; Wang, P. In-Situ Detection of Cadmium with Aptamer Functionalized Gold Nanoparticles Based on Smartphone-Based Colorimetric System. Talanta 2020, 208, 120231.

- Mekonnen, M.L.; Workie, Y.A.; Su, W.; Hwang, B.J. Plasmonic Paper Substrates for Point-of-Need Applications: Recent Developments and Fabrication Methods. Sens. Actuators B Chem. 2021, 345, 130401.

- Serafinelli, C.; Fantoni, A.; Alegria, E.C.B.A.; Vieira, M. Colorimetric Analysis of Transmitted Light Through Plasmonic Paper for Next-Generation Point-of-Care (PoC) Devices. Biosensors 2025, 15, 144.

- Mansour, R.; Stojkovic, V.; Vygranenko, Y.; Lourenço, P.; Jesus, R.; Fantoni, A. Colour and Image Processing for Output Extraction of a LSPR Sensor. In Proceedings of the Optics and Biophotonics in Low-Resource Settings VIII, San Francisco, CA, USA, 22 January–28 February 2022; Volume 35.

- Mansour, R.; Serafinelli, C.; Fantoni, A.; Jesus, R. An Affordable Optical Detection Scheme for LSPR Sensors. EPJ Web Conf. 2024, 305, 00025.

- Firebase. Available online: https://firebase.google.com/ (accessed on 12 February 2025).

- Flutter. Available online: https://flutter.dev/ (accessed on 12 February 2025).

- BLoC Library Documentation. Available online: https://bloclibrary.dev/why-bloc/ (accessed on 12 February 2025).

- Flask Documentation. Available online: https://flask.palletsprojects.com/en/stable/ (accessed on 12 February 2025).

- PythonAnywhere. Available online: https://www.pythonanywhere.com/ (accessed on 12 February 2025).

- van der Walt, S.; Schönberger, J.L.; Nunez-Iglesias, J.; Boulogne, F.; Warner, J.D.; Yager, N.; Gouillart, E.; Yu, T.; The scikit-image contributors. scikit-image: Image Processing in Python. PeerJ 2014, 2, e453.

- Ratnarathorn, N.; Chailapakul, O.; Henry, C.S.; Dungchai, W. Simple Silver Nanoparticle Colorimetric Sensing for Copper by Paper-Based Devices. Talanta 2012, 99, 552–557.

- Apilux, A.; Siangproh, W.; Praphairaksit, N.; Chailapakul, O. Simple and Rapid Colorimetric Detection of Hg(II) by a Paper-Based Device Using Silver Nanoplates. Talanta 2012, 97, 388–394.

- Nielsen, J. Why You Only Need to Test with 5 Users. Nielsen Norman Group. 18 March 2000. Available online: https://www.nngroup.com/articles/why-you-only-need-to-test-with-5-users/ (accessed on 29 July 2025).

- Brooke, J. SUS—A Quick and Dirty Usability Scale. Usability Eval. Ind. 1996, 189, 4–7.

- MeasuringU. System Usability Scale (SUS). Available online: https://measuringu.com/sus/ (accessed on 12 February 2025).

- Vincent, L.; Soille, P. Watersheds in Digital Spaces: An Efficient Algorithm Based on Immersion Simulations. IEEE Trans. Pattern Anal. Mach. Intell. 1991, 13, 583–598.

- Sharma, G.; Wu, W.; Dalal, E.N. The CIEDE2000 Color-Difference Formula: Implementation Notes, Supplementary Test Data, and Mathematical Observations. Color Res. Appl. 2005, 30, 21–30.

| Page | Description |
|---|---|
| Signup | Registers users in the Firestore database using email and password (Figure 5a). |
| Login | Authenticates users with their email and password (Figure 5b). |
| Home | Shows the images captured by the camera. Tapping an image opens the Sample page (Figure 5c). |
| Sample | Displays image details and camera settings, and allows the image to be added to the Analysis page (Figure 5d). |
| Analysis | Allows users to select and remove images for analysis. Two analysis modes are provided: three samples in a single image (Figure 5e), or one sample image compared with another sample image (Figure 5g). Users choose a comparison method (mean or dominant color) and start the analysis. |
| Result | Shows the color comparison results. For a single image containing three samples, the output shows the original image on the left, the mean or dominant color of each sample on the right, and a color difference graph below (Figure 5f). For one-sample images, each sample is shown alongside its mean or dominant color and the corresponding color difference value (Figure 5h). |

| BLoC | Responsibility |
|---|---|
| Sample | Receives a list of Sample objects from the Sample Repository and updates the Home page to display the images. |
| Analysis | Adds, removes, and selects (via checkboxes) images on the Analysis page, and sets the color comparison method used to start the analysis. |
| Result | Receives the selected images and the comparison method from the Analysis page and passes them to the Result Repository, which posts them to the API. Receives the Result object from the Result Repository and updates the Result page with the analysis results. |
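The app itself implements these BLoCs in Dart with Flutter. To keep new examples in a single language, the sketch below is a Python analogue of the Analysis BLoC's core idea: each UI event maps to a new immutable state. The event names (`add`, `remove`, `toggle`, `set_method`) and the `AnalysisState` fields are hypothetical and do not come from the app's source.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class AnalysisState:
    """Immutable state of the Analysis page (hypothetical fields)."""
    images: tuple[str, ...] = ()             # image IDs added to the page
    selected: frozenset[str] = frozenset()   # IDs ticked via checkbox
    method: str = "mean"                     # "mean" or "dominant"


def on_event(state: AnalysisState, event: str, value: str) -> AnalysisState:
    """Map one UI event to a new state, as a BLoC maps events to states."""
    if event == "add" and value not in state.images:
        return replace(state, images=state.images + (value,))
    if event == "remove":
        return replace(
            state,
            images=tuple(i for i in state.images if i != value),
            selected=state.selected - {value},
        )
    if event == "toggle" and value in state.images:
        # Symmetric difference flips the checkbox for this one image.
        return replace(state, selected=state.selected ^ {value})
    if event == "set_method":
        if value not in ("mean", "dominant"):
            raise ValueError(f"unknown comparison method: {value}")
        return replace(state, method=value)
    return state  # unknown or no-op events leave the state unchanged


# Usage: the UI layer dispatches events; the BLoC emits new states.
state = AnalysisState()
for event, value in [("add", "img1"), ("add", "img2"), ("toggle", "img1"),
                     ("set_method", "dominant")]:
    state = on_event(state, event, value)
print(state.selected, state.method)
```

Keeping the state immutable means the UI only ever redraws from a complete, consistent snapshot, which is the property the BLoC pattern relies on.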

| Repository | Responsibility |
|---|---|
| Sample | Fetches sample images from the Firestore collection (‘images/’) as a Stream. The Sample BLoC subscribes to this stream so that it is updated whenever new images are added to the collection. |
| Result | Sends the analysis data as an HTTP POST request to the API endpoint and delivers the returned analysis results to the Result BLoC. |
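The Result Repository's job of posting analysis data to the API can be illustrated with Python's standard `urllib.request` module. This is a sketch only: the real repository is Dart code, and the endpoint URL, the JSON field names (`images`, `method`) and the image paths used below are hypothetical. The request is built but not sent, so the example runs offline.

```python
import json
import urllib.request

# Hypothetical endpoint; the real BioColor API URL and route are not given here.
API_URL = "https://example.com/analyze"


def build_analysis_request(image_urls: list[str], method: str,
                           url: str = API_URL) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request for one analysis run."""
    if method not in ("mean", "dominant"):
        raise ValueError(f"unknown comparison method: {method}")
    body = json.dumps({"images": image_urls, "method": method}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical image paths for two selected samples.
request = build_analysis_request(["images/sample_1.png", "images/sample_2.png"], "mean")
print(request.get_method())  # POST
# Sending it would be: urllib.request.urlopen(request), then json.load(response).
```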

| Substrate pair | Color difference (mean color) | Color difference (dominant color) |
|---|---|---|
| Dry vs. glycerol | 17.58 | 18.98 |
| Dry vs. sol.4 | 17.44 | 20.81 |
| Dry vs. water | 11.92 | 11.07 |
| Water vs. glycerol | 5.18 | 2.39 |
| Water vs. sol.4 | 5.37 | 4.3 |
| Sol.4 vs. glycerol | 0.73 | 4.08 |
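The values above are color differences between substrate pairs. Because the reference list cites Sharma et al.'s implementation notes for CIEDE2000, ΔE00 is a plausible metric, although the table itself does not name it. The following pure-Python sketch of CIEDE2000 follows those notes; it takes CIELAB triples as input (converting from RGB first, for example with `skimage.color.rgb2lab`). scikit-image also provides a ready-made `skimage.color.deltaE_ciede2000`.

```python
import math


def ciede2000(lab1, lab2, kL=1.0, kC=1.0, kH=1.0):
    """CIEDE2000 color difference between two CIELAB colors (L*, a*, b*)."""
    L1, a1, b1 = lab1
    L2, a2, b2 = lab2

    # Rescale a* so that near-neutral colors are handled more uniformly.
    c_bar = (math.hypot(a1, b1) + math.hypot(a2, b2)) / 2
    g = 0.5 * (1 - math.sqrt(c_bar**7 / (c_bar**7 + 25**7)))
    a1p, a2p = (1 + g) * a1, (1 + g) * a2
    c1p, c2p = math.hypot(a1p, b1), math.hypot(a2p, b2)
    h1p = math.degrees(math.atan2(b1, a1p)) % 360 if c1p else 0.0
    h2p = math.degrees(math.atan2(b2, a2p)) % 360 if c2p else 0.0

    # Differences in lightness, chroma and hue.
    d_lp = L2 - L1
    d_cp = c2p - c1p
    if c1p * c2p == 0:
        d_hp = 0.0
    else:
        d_hp = h2p - h1p
        if d_hp > 180:
            d_hp -= 360
        elif d_hp < -180:
            d_hp += 360
    d_hp_big = 2 * math.sqrt(c1p * c2p) * math.sin(math.radians(d_hp / 2))

    # Mean lightness, chroma and hue of the pair.
    l_bar = (L1 + L2) / 2
    cp_bar = (c1p + c2p) / 2
    if c1p * c2p == 0:
        hp_bar = h1p + h2p
    elif abs(h1p - h2p) <= 180:
        hp_bar = (h1p + h2p) / 2
    elif h1p + h2p < 360:
        hp_bar = (h1p + h2p + 360) / 2
    else:
        hp_bar = (h1p + h2p - 360) / 2

    # Weighting functions and the blue-region rotation term.
    t = (1 - 0.17 * math.cos(math.radians(hp_bar - 30))
         + 0.24 * math.cos(math.radians(2 * hp_bar))
         + 0.32 * math.cos(math.radians(3 * hp_bar + 6))
         - 0.20 * math.cos(math.radians(4 * hp_bar - 63)))
    d_theta = 30 * math.exp(-(((hp_bar - 275) / 25) ** 2))
    r_c = 2 * math.sqrt(cp_bar**7 / (cp_bar**7 + 25**7))
    s_l = 1 + 0.015 * (l_bar - 50) ** 2 / math.sqrt(20 + (l_bar - 50) ** 2)
    s_c = 1 + 0.045 * cp_bar
    s_h = 1 + 0.015 * cp_bar * t
    r_t = -math.sin(math.radians(2 * d_theta)) * r_c

    dl = d_lp / (kL * s_l)
    dc = d_cp / (kC * s_c)
    dh = d_hp_big / (kH * s_h)
    return math.sqrt(dl**2 + dc**2 + dh**2 + r_t * dc * dh)


# First test pair from Sharma et al. (2005); the published difference is 2.0425.
print(round(ciede2000((50.0, 2.6772, -79.7751), (50.0, 0.0, -82.7485)), 4))
```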

| Task Name | Mean | Standard Deviation | Mode |
|---|---|---|---|
| 1. BioColor App Registration Ease | 4.83 | 0.37 | 5 |
| 2. Understanding Sample Images on Home Page | 4.33 | 1.11 | 5 |
| 3. Checking Image Details Ease | 4.92 | 0.28 | 5 |
| 4. Performing Color Comparison (three samples in one image) | 4.67 | 0.62 | 5 |
| 5. Understanding Analysis Results (three samples displayed in one image) | 3.92 | 1.04 | 4 |
| 6. Performing Color Comparison (one sample image compared to another) | 4.08 | 1.05 | 5 |
| 7. Understanding Analysis Results (one sample image compared to another) | 4.17 | 0.99 | 5 |

| Question | Mean | Standard Deviation | Mode |
|---|---|---|---|
| 1 | 3.5 | 1.35 | 4 |
| 2 | 1.67 | 0.62 | 2 |
| 3 | 4.33 | 0.62 | 4 |
| 4 | 2 | 1.22 | 1 |
| 5 | 4.08 | 0.51 | 4 |
| 6 | 2.33 | 1.10 | 2 |
| 7 | 4.42 | 0.85 | 5 |
| 8 | 1.67 | 0.63 | 2 |
| 9 | 4.08 | 0.88 | 4 |
| 10 | 1.75 | 0.72 | 1 & 2 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Mansour, R.; Serafinelli, C.; Jesus, R.; Fantoni, A. Mobile Platform for Continuous Screening of Clear Water Quality Using Colorimetric Plasmonic Sensing. Information 2025, 16, 683. https://doi.org/10.3390/info16080683

Mansour R, Serafinelli C, Jesus R, Fantoni A. Mobile Platform for Continuous Screening of Clear Water Quality Using Colorimetric Plasmonic Sensing. Information. 2025; 16(8):683. https://doi.org/10.3390/info16080683

Chicago/Turabian Style: Mansour, Rima, Caterina Serafinelli, Rui Jesus, and Alessandro Fantoni. 2025. "Mobile Platform for Continuous Screening of Clear Water Quality Using Colorimetric Plasmonic Sensing" Information 16, no. 8: 683. https://doi.org/10.3390/info16080683

APA Style: Mansour, R., Serafinelli, C., Jesus, R., & Fantoni, A. (2025). Mobile Platform for Continuous Screening of Clear Water Quality Using Colorimetric Plasmonic Sensing. Information, 16(8), 683. https://doi.org/10.3390/info16080683