Interaction, Artificial Intelligence, and Motivation in Children’s Speech Learning and Rehabilitation Through Digital Games: A Systematic Literature Review

Abstract

1. Introduction

2. Research Methods

2.1. Person (and Problem)

2.2. Environments

2.3. Stakeholders

- Children with speech disabilities: They are one of the target user groups in the solutions and published research papers.

- Caregivers or parents: Most projects in speech rehabilitation involve supervision by parents and caregivers. Thus, they are major stakeholders.

- Therapists: They are end users in many speech rehabilitation games for multiple purposes, such as setting up the game or reviewing feedback.

- Developers and Computer Scientists: This paper aims to summarise the common recommendations made by research on digital speech rehabilitation games.

- Researchers (Sociologists, Psychologists, Medical Professionals): This paper addresses some of the areas speech rehabilitation games target, as well as the areas for future research recommended by the researchers and this review of the state of the art. These could benefit researchers in related areas.

2.4. Intervention

2.5. Comparison

- Degree to which children play rehabilitation games independently.

- Speech recognition libraries in digital games.

- Interaction and feedback implemented in different games.

2.6. Outcome

- Engagement and motivation.

- Greater independence and less intervention from carers or therapists.

- Enhanced pronunciation.

2.7. Research Questions

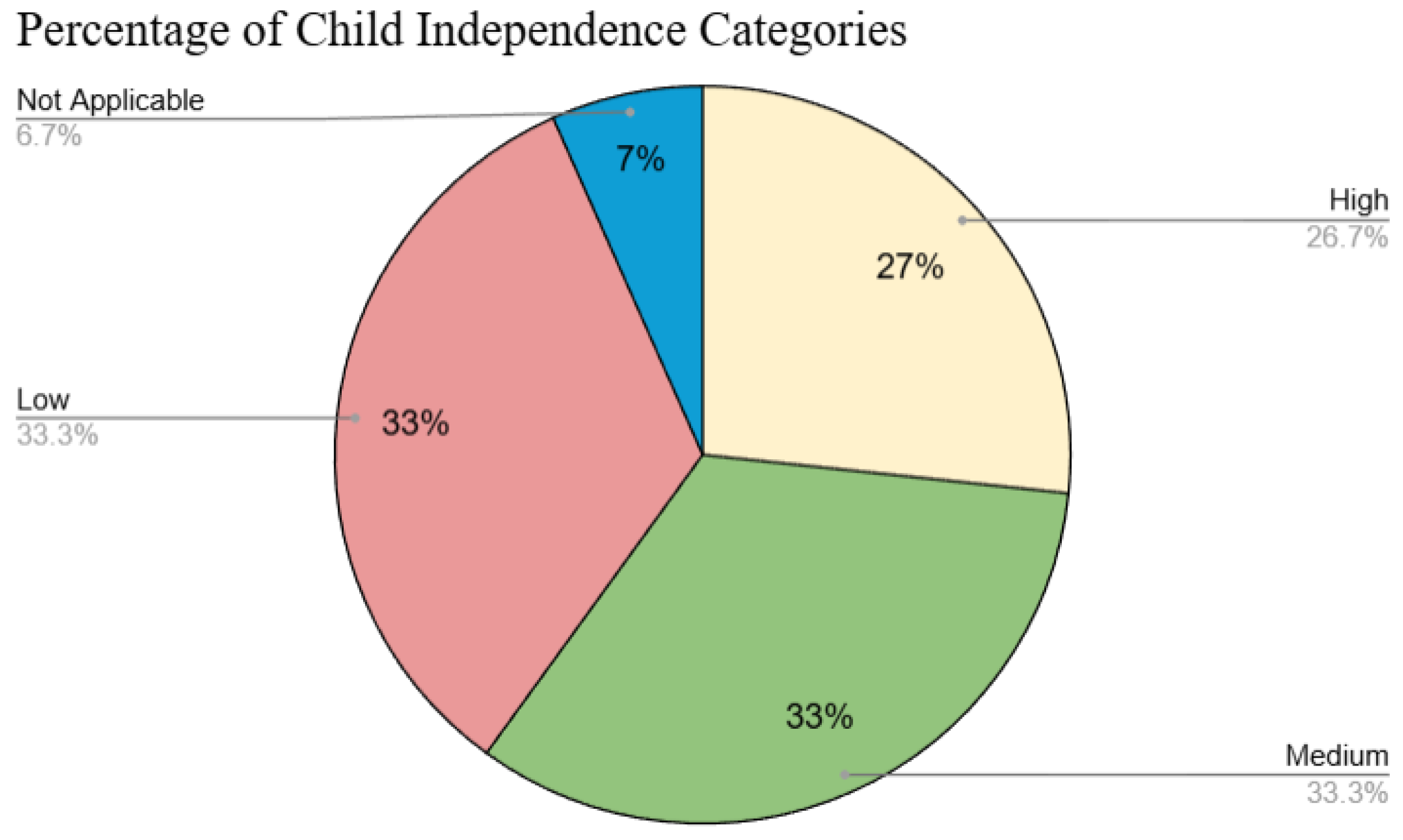

- RQ1: What is the degree of independence of children playing current speech rehabilitation or learning games?

- RQ2: What interaction has been found to be effective in rehabilitating children with speech disabilities?

- RQ3: What is the role of Artificial Intelligence, such as Natural Language Processing, within digital games for children’s speech rehabilitation?

- RQ4: What is the impact of using games for rehabilitation exercises on the motivation and engagement of children with speech disabilities?

2.8. Related Databases

- ACM Digital Library: Provides research articles related to technological and computer science aspects, such as software and hardware.

- IEEE Xplore: Provides the technological aspects of the search.

2.9. Search Terms

- ACM Digital Library: (Title:(speak* OR speech OR voice) AND (Title: (rehab* OR therapy OR serious AND NOT autis* AND NOT dyslex*)) AND Title:(gam*) AND Title:(child*)) OR (Keyword:((speech OR speak* OR voice) AND (rehab OR therapy) AND (gam*)))

- IEEE Xplore: ((“Document Title”:speech OR speak* OR voice) AND (“Document Title”:rehab* OR therapy OR serious) AND (“Document Title”:gam*) AND (“Document Title”:child*)) OR ((“Author Keywords”:speech OR speak* OR voice) AND (“Author Keywords”:rehab* OR therapy) AND (“Author Keywords”:gam*)) NOT (“Document Title”:autis* OR “Document Title”:dyslex*)

2.10. Scope

2.11. Selection Process

2.11.1. Inclusion Criteria

- Studies published between 2011 and 2023 inclusive. The start year reflects the launch of Siri in 2011, when commercially available, ubiquitous speech-recognition interaction began to influence both users and technology development.

- Studies must target children up to secondary-school age. This ensures that most of the target audience falls within the age range we include (2–12).

- Studies shall involve children who currently have no, or almost no, speech ability but have the potential to improve their speech.

- Studies shall target rehabilitation or serious games in order to enhance the users’ speech.

- We only consider papers written in the English language.

- Studies shall be published rather than in-press.

- Studies considered in the backward and forward searching can be published anywhere as long as they are relevant and specific to the topic.

2.11.2. Exclusion Criteria

- Dyslexia: There is a fine line between speech disabilities and dyslexia, and we want to ensure that the papers target children with speech disabilities rather than difficulties with reading, writing, and spelling.

- Case reports, narrative reviews, and opinion pieces shall be excluded.

- Studies shall not be in the peer-reviewing stage.

- Studies focusing on languages other than English shall be excluded.
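
The inclusion and exclusion criteria above can be read as a screening checklist. The following is a minimal sketch of how such a checklist might be encoded, not the procedure the authors actually used: the `Study` record, its field names, and the `screen` function are all hypothetical, and the sketch models only the criteria that reduce to simple yes/no checks.

```python
from dataclasses import dataclass

# Hypothetical record; the review does not define a data schema.
@dataclass
class Study:
    year: int
    written_in_english: bool
    targets_children: bool               # children up to secondary-school age
    speech_rehab_or_serious_game: bool   # aims to enhance the users' speech
    published: bool                      # False if in press or under peer review
    study_type: str                      # e.g. "empirical", "case report"
    dyslexia_focused: bool = False

# Study types named in the exclusion criteria.
EXCLUDED_TYPES = {"case report", "narrative review", "opinion piece"}

def screen(study: Study) -> list[str]:
    """Return the reasons a study fails screening; an empty list means it passes."""
    reasons = []
    if not 2011 <= study.year <= 2023:
        reasons.append("published outside 2011-2023")
    if not study.written_in_english:
        reasons.append("not written in English")
    if not study.targets_children:
        reasons.append("does not target children")
    if not study.speech_rehab_or_serious_game:
        reasons.append("not a speech rehabilitation or serious game")
    if not study.published:
        reasons.append("not yet published")
    if study.study_type in EXCLUDED_TYPES:
        reasons.append(f"excluded study type: {study.study_type}")
    if study.dyslexia_focused:
        reasons.append("dyslexia-focused")
    return reasons

# Usage: a candidate that satisfies every modelled criterion.
candidate = Study(2017, True, True, True, True, "empirical")
print(screen(candidate) or "include")
```

Returning the list of failed criteria, rather than a single boolean, keeps a record of why each paper was excluded, which is the kind of information a PRISMA-style flow diagram reports.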

3. Results

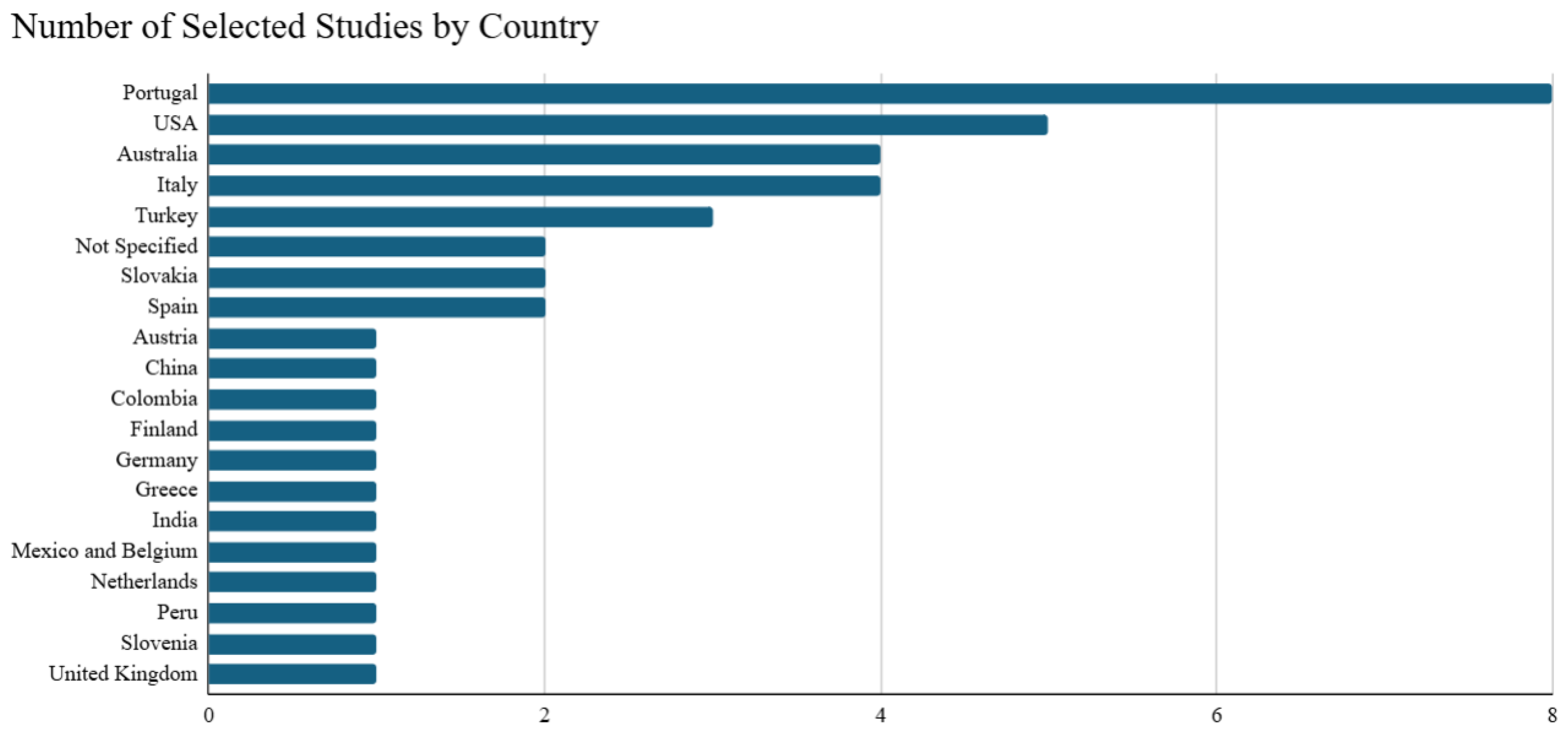

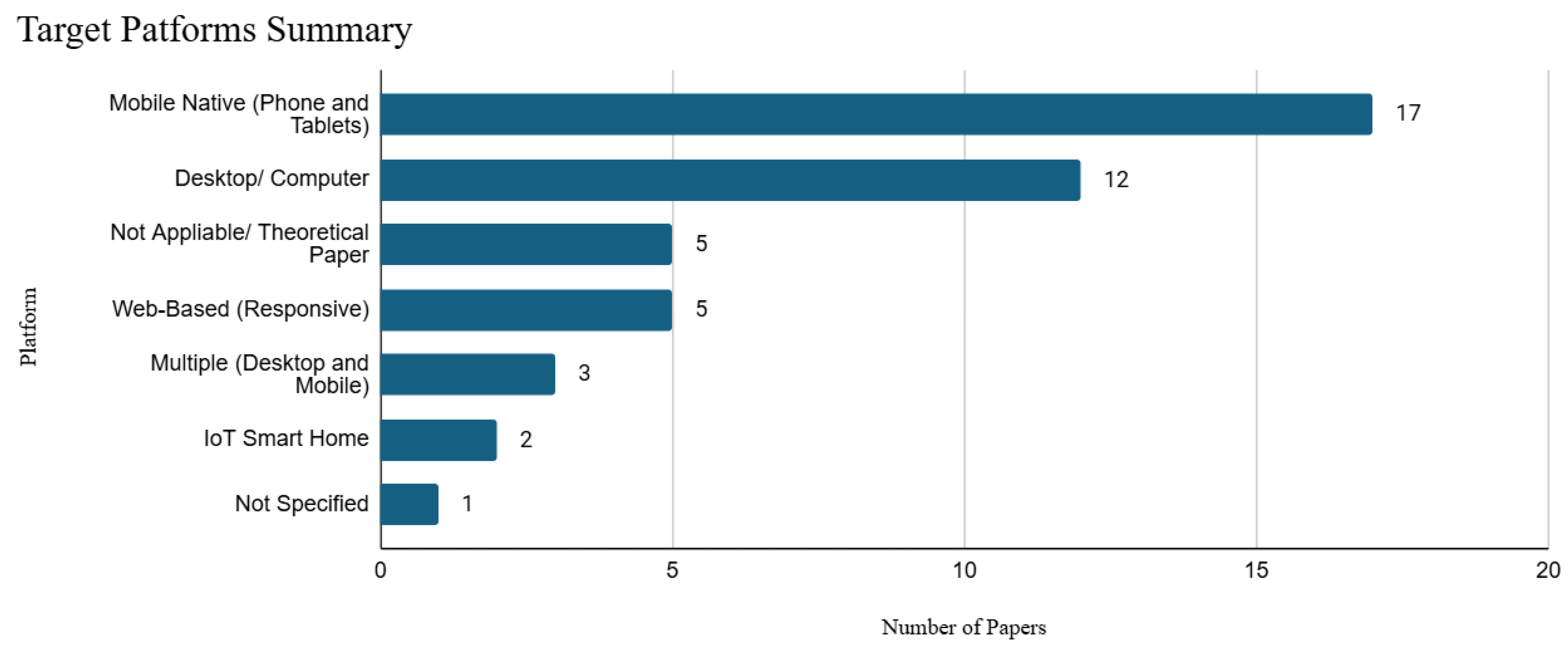

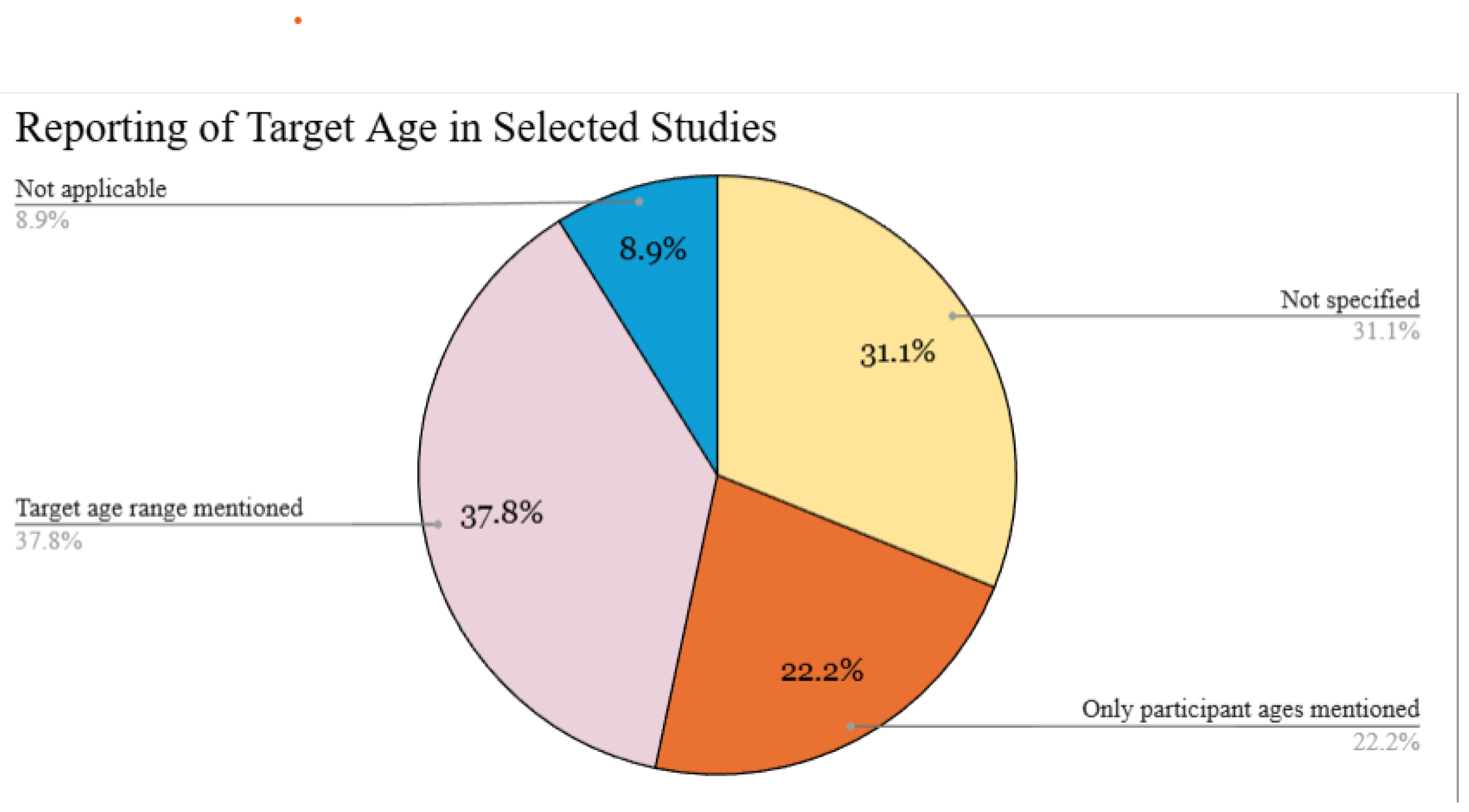

3.1. Conducting the Review

3.2. Summary of Results

3.3. RQ1: What Is the Degree of Independence of Children Playing Current Speech Rehabilitation or Learning Games?

3.4. RQ2: What Interaction Has Been Found to Be Effective in Rehabilitating Children with Speech Disabilities?

3.5. RQ3: What Is the Role of Artificial Intelligence (AI), Such as Natural Language Processing (NLP), Within Digital Games for Children’s Speech Rehabilitation?

3.6. RQ4: What Is the Impact of Using Games for Rehabilitation Exercises on the Motivation and Engagement of Children with Speech Disabilities?

4. Discussions and Future Work

Limitations of the Study

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. ASR Technologies Used in Children’s Speech Rehabilitation Games

| Reference | Technology Category | Accuracy | ASR Technology Conclusion | Details |

|---|---|---|---|---|

| [35] | Template-Based | Template Matching (TM): 72% F1, Goodness of Pronunciation: 69% F1 | Template Matching outperformed GOP; TM was better at identifying mispronunciations. Children improved with both. | Template Matching (TM), GOP algorithm using Kaldi acoustic models trained on Librispeech corpus (960 h of adult speech) |

| [52] | Rule-Based/Hybrid | Not mentioned | Speech scoring system validated in small studies; storybook format found most motivating. Offline ASR effective. | Offline ASR, custom speech scoring |

| [34] | Rule-Based/Hybrid | Not mentioned | No direct ASR accuracy reported; focuses on integration with Alexa and making therapy more engaging through automation. | Alexa voice assistant, AI scoring |

| [11] | Other/Unspecified | 70% threshold for good pronunciation | Children could control the game via voice; 70% score considered a good result. Emphasises motivation and independence. | Word Detection Package, Windows UDP Voice Recognition Server |

| [44] | Data Collection/Preprocessing | Not mentioned (conceptual framework) | No ASR tested; proposes a crowd-sourced dataset creation and validation model to improve ASR performance in therapy. | Collaborative dataset with therapist validation |

| [9] | Rule-Based/Hybrid | Not mentioned | Game offers visual feedback for /s/, /z/, etc.; real-time processing used; accuracy and scalability not evaluated. | Custom audio analysis tool for sibilant sounds |

| [45] | Other/Unspecified | Not mentioned | Supports parent-guided home use with simple detection logic; emphasises usability more than recognition precision. | Sound detection and interactive speech interface |

| [17] | Other/Unspecified | Therapist-rated as accurate enough for dysphonic voices | System filters ambient noise and tracks pitch; FFT preferred over auto-correlation due to sensitivity needs. | Pitch and loudness detection via Fast Fourier Transform (FFT), Kinect mic array |

| [18] | Other/Unspecified | Not mentioned | Focus is on structured language interaction and logging; limited use of automated speech recognition. | Therapist-guided interaction; voice and scenario logging |

| [26] | Machine Learning/AI-Based | 86.1% (cross-validation) | Promising accuracy for phoneme-level classification, not real-time but useful for therapeutic monitoring. | Deep Neural Network (DNN)-based classifier for sibilant consonant detection |

| [28] | Other/Unspecified | Not quantified | Prototype shows motivational benefit despite ASR limitations, aims for future precision improvements. | Conversational speech recognition (prototype) |

| [2] | Other/Unspecified | Not consistent across tools | ASR adoption remains fragmented; real-time, child-friendly ASR is still underdeveloped; environmental noise challenges. | Varied across reviewed studies |

| [36] | Machine Learning/AI-Based | Not evaluated (manual marking used) | ASR integration planned; manual system ensures evaluation consistency but limits scalability. | Wizard of Oz (manual) with plans for PocketSphinx |

| [23] | Other/Unspecified | Not mentioned | Focuses on autonomous engagement; does not evaluate ASR. | Not specified |

| [30] | Other/Unspecified | Not quantified | ASR used for independent vocabulary evaluation; supports scalable remote therapy. | Integrated speech recognition in mobile app (ASR library unspecified) |

| [22] | Other/Unspecified | Not applicable | Focus on ICTs and their general benefit; no use of ASR. | Not implemented (focuses on ICT tools) |

| [20] | Other/Unspecified | Not applicable | Describes future potential of ASR and IoT for home-based therapy; not yet implemented. | Planned conversational interfaces |

| [31] | Rule-Based/Hybrid | Not quantified | Indicates speech quality improvement tracking but lacks model-specific detail. | Custom scoring algorithm based on speech input and pronunciation comparison |

| [32] | Other/Unspecified | Not quantified; adjusted with calibration and thresholding | Highlights the value of lightweight real-time vowel recognition over full ASR for engagement and responsiveness. | Formant-based vowel detection using LPC and FFT; implemented in C++ with OpenFrameworks |

| [47] | Other/Unspecified | High robustness claimed, no specific number | Strong emphasis on robustness to background noise for practical settings. | Robust HMM-based phoneme recogniser with noise-robust features |

| [27] | Other/Unspecified | 73.98% to 85.93% | Demonstrates reliable classification for different voice exercises with low false negatives. | SVM-based classifier for sustained vowels and pitch variation using MFCCs and F0 features |

| [10] | Other/Unspecified | Not applicable | Conceptual metamodel; no empirical results but supports integration of speech recognition as part of user modeling. | Proposed framework includes phoneme recognition; no specific implementation |

| [24] | Machine Learning/AI-Based | Not quantified, but describes ‘robust detection’ and ‘usable feedback’ | Feasible and acceptable in resource-constrained settings over the long term. | Specific engine not stated |

| [40] | Other/Unspecified | Moderate; poor recognition for children’s accents observed | Popular gameplay can boost motivation, but recognition issues must be addressed. | Microsoft Speech SDK with Kinect microphone array |

| [21] | Machine Learning/AI-Based | High (95% overall, 97.5% phoneme accuracy in pilot) | Highly promising; customisable for cleft-specific errors and works on mobile. | PocketSphinx via OpenEars speech recognition API for iOS |

| [53] | Machine Learning/AI-Based | Not quantified in this paper. | Users reported positive engagement; ASR viable with offline use cases. | Not stated |

| [14] | Machine Learning/AI-Based | Perceived as neutral-to-good by users (Likert average 3.2–4.2) | Effective and offline-friendly; supports critical listening and user-specific adaptation. | PocketSphinx via OpenEars and RapidEars (offline) |

| [38] | Other/Unspecified | Not mentioned | Pitch-based voice input is viable for therapy reinforcement but lacks full ASR functionality. | Pitch detection (not specific) |

| [46] | Other/Unspecified | Not mentioned | Highlights motivational value of games but lacks discussion on ASR tools or outcomes. | Not specified |

| [49] | Other/Unspecified | Not quantified | Focus is on UX methodology and assessment; ASR is minimal and used for basic voice response. | Microphone input with pitch/timbre evaluation |

| [15] | Other/Unspecified | Not mentioned | Game supports phonological therapy via simplified input; ASR engine details not specified. | Concept-matching and speech stimulus response |

| [13] | Machine Learning/AI-Based | Not measured; assumed platform-native | Smart assistants enhance motivation and scheduling; effectiveness tied to ecosystem, not custom ASR. | Voice assistant platforms (Amazon Alexa, Google Home) |

| [41] | Other/Unspecified | Not mentioned (focus on feasibility and motivation) | Promising for generating child speech datasets but lacks immediate feedback or therapeutic use. | Gamified speech data collection interface with ASR analysis post hoc |

| [12] | Other/Unspecified | Not evaluated directly | ASR integrated indirectly; system focuses on therapist-aided assessment rather than full automation. | Embedded phonetic-phonological processing via therapist dashboard |

| [48] | Other/Unspecified | Not reported; proposal-focused paper | Highlights potential of adaptive speech interfaces; lacks empirical ASR results. | Exploratory use of speech recognition and voice features |

| [29] | Other/Unspecified | Not applicable | Focus is on structured audio input and feedback rather than ASR analysis. | Tablet-based phoneme training app; audio playback, no ASR |

| [39] | Machine Learning/AI-Based | Acknowledges ASR accuracy challenges for disordered speech | Commercial VAs show potential but current ASR not robust enough for speech disorder needs without retraining. | Voice assistants, ASR |

| [43] | Other/Unspecified | Not mentioned | No full ASR used; pitch-based sound input replaces speech recognition for engagement and tracking. | Pitch detection via Audio Input Handler using whistles |

| [16] | Other/Unspecified | Not specified | Game focuses on practice rather than advanced speech analysis. | Basic voice analysis tools |

| [42] | Rule-Based/Hybrid | Word Error Rate (WER) reduced to 24.8% using augmented data | Custom-trained models significantly improved child speech recognition and user engagement. | Custom ASR model trained on Slovak children’s speech using wav2vec2 |

| [25] | Machine Learning/AI-Based | Not quantified; challenges with phoneme detection noted | Whisper-Local shows promise but struggles with precise phonetic level recognition for therapy. | Whisper-Local used for speech-to-text |

| [5] | Rule-Based/Hybrid | Confidence-based matching (specific rates not disclosed) | Custom ASR models support word articulation scoring; confidence levels drive game feedback and progression. | Pre-trained and custom-trained speech recognition models using Raspberry Pi |

| [54] | Machine Learning/AI-Based | Not quantified, but personalised to individual phonetic inventories | Voiceitt shows strong potential for severe impairments by enabling real-time, individualised Augmentative and Alternative Communication (AAC) support using AI-driven speech recognition. | Voiceitt®-AI-based non-standard speech recognition system using deep learning and pattern classification |
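
Several entries in the table report accuracy as a Word Error Rate (WER), for example the 24.8% figure for [42]. As a reminder of what that metric measures, the sketch below computes WER in its standard form: the word-level edit distance (substitutions, deletions, and insertions) between a reference transcript and the recogniser output, divided by the number of reference words. The function name is illustrative, and the cited studies may apply additional text normalisation that is not modelled here.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance between the two transcripts, divided by the reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # prev[j] holds the edit distance between the reference words processed
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1       # reference word missing from hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)

# Usage: one substituted word out of four reference words.
print(word_error_rate("say the red ball", "say the bed ball"))
```

Because insertions count as errors, WER can exceed 1.0 when the hypothesis is much longer than the reference, so it is an error rate rather than a percentage of words recognised.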

Appendix B. Interaction Modalities

| Reference | AI Techniques | Interaction Modalities | Target Age Group/Participant Age Group |

|---|---|---|---|

| [35] | Template Matching, GOP, Automatic Mispronunciation Detection | Touch (tablet joystick/buttons), voice input, audio feedback | Children aged 5–12 |

| [52] | Offline Speech Recognition, Dynamic Curriculum | Voice input, audio feedback, storybook-style navigation | Not explicitly stated |

| [34] | AI-based correction, Alexa Skill integration | Voice input (via Alexa), smart home automation | Children aged 4–8 |

| [11] | Voice Command Detection, Speech Scoring via UDP Server | Voice input, game avatar control, visual and audio guidance | Children aged 2–6 |

| [44] | Collaborative dataset generation, Gamified data collection | Voice recording, therapist validation | Not mentioned |

| [9] | Custom audio analysis (sibilant energy extraction) | Visual waveform display, voice input | Children aged 6–10 |

| [45] | Sound detection, Therapist-defined interactive feedback | Voice input, parental and therapist interface | Not explicitly stated; intended for home-based use by children |

| [37] | Phonation time analysis, Intensity threshold detection | Voice input only, audio feedback | Children with articulation challenges (not specifically aged) |

| [17] | FFT-based pitch and loudness estimation, Kinect microphone array | Voice input (pitch-based control), visual feedback | Adolescents and adults with dysphonia (generalisable elements for older children) |

| [18] | Therapist-guided recording and object interaction logging | Voice input, 3D object interaction, therapist-controlled environment | Children aged 5 (tested), supports various therapy scenarios |

| [26] | Deep Neural Network (sibilant classification) | Voice input, visual feedback | Not specified; children with sibilant errors |

| [28] | Conversational agent prototype, visual/audio reinforcement | Voice input, visual prompts, adaptive feedback | Not explicitly stated |

| [2] | Analysis of AI-based games (ASR, NLP, Feedback) | Varies; includes voice, touch, gesture | Primarily 4–12 years (based on included studies) |

| [36] | Planned PocketSphinx ASR, manual SLP scoring | Voice input, touch control, custom prompts | Children aged 2–14 |

| [23] | Autonomous task triggering, Interaction tracking | Touch input, visual/audio prompts | Children and young adults with Down Syndrome |

| [30] | ASR for vocabulary recognition, Manual review for other stages | Voice input, Video/audio uploads, virtual pet | Children post-cleft lip surgery (unspecified age) |

| [22] | Overview of ICT tools (not specific to AI) | Software-based, therapist-controlled tools | Not explicitly mentioned |

| [20] | Smart home orchestration via EUD, Proposed ASR integration | Voice input, tablet control, IoT devices (lights, TV) | Children in home therapy (not explicitly stated) |

| [31] | Speech quality analysis and task-specific scoring | Voice input, visual feedback through game UI | Not explicitly stated |

| [32] | Formant tracking via Linear Predictive Coding (LPC)/FFT for vowel detection | Voice input, real-time retro game interface | Not explicitly stated |

| [47] | Naive Bayes (NB), Support Vector Machines (SVM), and Kernel Density Estimation (KDE) compared; best results obtained with flat KDE with Silverman’s bandwidth using MFCCs | Voice input, speech playback, visual feedback | Not explicitly stated |

| [27] | SVM classifiers for sustained vowel and pitch variation | Voice input, visual feedback via screen interface | Not explicitly stated |

| [10] | Model-driven design incorporating phoneme recognition and user profiling | Voice input, audio-visual interaction modules (conceptual) | Framework for various user types, including children with hearing loss |

| [24] | Specific technology not stated; multilingual model support | Voice input, visual feedback via game characters | Not explicitly stated |

| [40] | Speech analysis using Microsoft SDK, loudness-based input | Voice input, Kinect gestures, visual rewards | Children aged 7 and 10 |

| [21] | Speech pattern matching using PocketSphinx with cleft-specific adaptation | Voice input, touch screen interaction, storybook format | Children aged 2–3 |

| [53] | PocketSphinx, phoneme scoring | Voice input only, Visual/audio game feedback | Not explicitly stated |

| [14] | Custom phoneme scoring, offline ASR via OpenEars | Voice input, audio prompts, tablet interaction | Target children, tested on adults 24–31 |

| [38] | Pitch analysis (signal processing only, no full ASR) | Voice input (sustained vowel) | Not explicitly stated |

| [46] | Not specified (exploratory discussion) | Potentially touch and voice (not evaluated) | Not explicitly stated |

| [49] | UX framework development with voice-based input consideration | Voice input, therapist-led observation, touch | Children with cochlear implants (early to mid-childhood) |

| [15] | Speech stimulus and concept-response (simplified speech processing) | Voice input, game-based touch interface | Children aged 3–6 with phonological disorders |

| [13] | Voice assistant orchestration (Alexa, Google Home) | Voice input, smart device interaction (lights) | Children in home-based therapy (general use case) |

| [41] | Speech data collection with planned ASR analysis | Voice input, touch interface, animated characters | Children aged 5–8 with dysarthria |

| [12] | Therapist-controlled phonological data processing | Voice input, tablet game interface, therapist dashboard | Not explicitly stated |

| [48] | Proposal of adaptive ASR-based interfaces for therapy | Voice input (planned), adaptive feedback | Children with speech disabilities (ages unspecified) |

| [29] | Structured phoneme training, No ASR | Touch input, audio playback, visual rewards | Study 1: mean age of 6 years and 6 months; Study 2: mean age of 7 years 9 months |

| [39] | Commercial ASR and NLP | Voice input, smart assistant responses, screen prompts | Children with speech impairments (general home use) |

| [43] | Pitch detection and audio monitoring (no ASR) | Voice input (whistle/pitch), visual mobile interface | Children aged 4–10 with orofacial myofunctional disorders |

| [16] | Simple voice analysis tools (unspecified) | Voice input, basic visual/audio prompts | Children in early speech therapy (ages not specified) |

| [42] | Wav2Vec2 model fine-tuned on child speech | Voice input, movement-based game interface | Children speaking Slovak (age not specified) |

| [25] | Whisper-Local model for real-time speech recognition | Voice input, visual/audio feedback, directional movement | Children with speech impairments (ages not specified) |

| [5] | Custom-trained language models for speech articulation scoring | Voice input, mouse (in shooter), keyboard (optional), visual/audio feedback | Children aged 6–10 |

| [54] | Deep learning, pattern clustering, voice donor output, speaker-dependent ASR | Voice input, real-time AI interpretation, voice output | Children and adults with speech disabilities, such as cerebral palsy, dysarthria, and autism |
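
Some of the systems listed above rely on signal processing rather than full ASR, such as the FFT-based pitch and loudness estimation noted for [17]. The sketch below illustrates that general idea only and is not the cited system's implementation: it uses a small pure-Python radix-2 FFT, takes the strongest frequency bin as the pitch estimate, and uses root-mean-square amplitude as a loudness proxy. The function names are hypothetical, the input length is assumed to be a power of two, and real voice input would need windowing, noise filtering, and harmonic handling that are omitted here.

```python
import cmath
import math

def fft(samples):
    """Recursive radix-2 FFT; len(samples) is assumed to be a power of two."""
    n = len(samples)
    if n == 1:
        return [complex(samples[0])]
    even = fft(samples[0::2])
    odd = fft(samples[1::2])
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(-2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out

def estimate_pitch_and_loudness(samples, sample_rate):
    """Return (frequency of the strongest bin in Hz, RMS amplitude)."""
    n = len(samples)
    spectrum = fft(samples)
    # Skip the DC bin and keep only frequencies below the Nyquist limit.
    magnitudes = [abs(value) for value in spectrum[1 : n // 2]]
    peak_bin = 1 + max(range(len(magnitudes)), key=magnitudes.__getitem__)
    pitch_hz = peak_bin * sample_rate / n
    rms = math.sqrt(sum(s * s for s in samples) / n)
    return pitch_hz, rms

# Usage: a synthetic 440 Hz tone stands in for microphone input.
sample_rate = 8192
tone = [0.5 * math.sin(2 * math.pi * 440 * i / sample_rate) for i in range(1024)]
pitch, loudness = estimate_pitch_and_loudness(tone, sample_rate)
print(pitch, loudness)
```

With 1024 samples at 8192 Hz, the frequency resolution is 8 Hz per bin, so a game using this approach would trade frame length (latency) against pitch precision.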

Appendix C. Details of Gamification Tactics Mentioned in the Selected Papers

| Reference | Gamification Element Category | Gamification Element Details | Engagement Over Time |

|---|---|---|---|

| [35] | Avatar and Customisation, Rewards and Progression | Avatars, coins, in-game store, power-ups, progression through levels | Daily session cap, personalisation, story-based progression, high user enjoyment reported |

| [52] | Feedback and Difficulty Scaling, Narrative and Story | Narrative storybook format, characters, adaptive difficulty | User-centred design, sustained motivation reported across studies |

| [34] | Feedback and Difficulty Scaling | Smart reminders, voice assistant interaction | Not emphasised; focus on automation and convenience |

| [11] | Avatar and Customisation, Control and Autonomy | Avatar control, voice-controlled game actions, object collection | Use of guidance arrow, repetitive play encouraged, no negative feedback |

| [44] | Rewards and Progression | Gamified data collection (star ratings, score-based validation) | Conceptual only; no long-term play evaluation conducted |

| [9] | Feedback and Difficulty Scaling, Rewards and Progression | Visual waveform feedback, score thresholds, progress bar | Session-based progression, configurable thresholds by therapist |

| [45] | Rewards and Progression | Task completion tracking, rewards | Designed for routine home use; long-term engagement monitored by caregivers |

| [37] | Avatar and Customisation, Feedback and Difficulty Scaling | Avatar animation (flying bird), real-time voice control, feedback via game success/failure | Adaptive difficulty, multiple intensity levels; plans for scenario expansion |

| [17] | Feedback and Difficulty Scaling | Pitch-controlled visual objects, real-time feedback, points system | Designed for continuous repetition; therapist adjustable goals |

| [18] | Narrative and Story | Explorable 3D environment, object manipulation, scenario-based storytelling | Customisable scenarios, therapist-led exploration, voice logs for follow-up |

| [26] | Feedback and Difficulty Scaling | Feedback animations, task scoring | Emphasised pronunciation monitoring; designed for iterative use |

| [28] | Narrative and Story | Narrative, empathetic characters, procedural content generation, visual/audio rewards | Replayability via PCG; immersion driven by plot and character empathy; volume-based input challenges |

| [2] | Avatar and Customisation, Feedback and Difficulty Scaling | Variable; includes points, character-based feedback, customisable environments | Highlights sustained use challenges; low self-esteem after several failures; reviews importance of meaningful rewards and personalisation |

| [36] | Avatar and Customisation, Rewards and Progression | Avatars, level progression, coin and star collection, rewards | Therapist-driven content adjustment; motivational elements include store, upgrades, and interactive feedback |

| [23] | Feedback and Difficulty Scaling, Rewards and Progression | Autonomous play mode, feedback animations, score keeping | Increased play duration and independence; tested on users with Down Syndrome to assess motivation |

| [30] | Session Design | Virtual pet feeding, candy collection, session rewards | Motivation sustained through pet care dynamics; repeated sessions encouraged by daily decay of pet health |

| [22] | Not Applicable | Not applicable (review of technologies) | Mentions importance of user motivation but does not analyse tactics directly |

| [20] | Narrative and Story, Control and Autonomy | Smart home fantasy scenarios, character response via devices | Focus on emotional reinforcement and parental configurability; plans for immersive future development |

| [31] | Feedback and Difficulty Scaling | Game-like scoring, immediate feedback, audio rewards | Tracks improvement over sessions; encourages continued effort with task-based incentives |

| [32] | Feedback and Difficulty Scaling, Control and Autonomy | Retro game mechanics, vowel-triggered character control, visual reaction | Sustained engagement through nostalgia-style play and real-time voice response |

| [47] | Rewards and Progression | Progress bar (ice cream) and reward (virtual button) | Motivates accurate phoneme production using score and repetition logic |

| [27] | Rewards and Progression | Progress indicators, visual rewards, real-time correctness display | Encourages voice control improvement by minimising false negatives; increases confidence |

| [10] | Feedback and Difficulty Scaling | Metamodel supports points, feedback loops, challenge levels | Design-driven personalisation aims to retain users by adapting difficulty and reward schemes |

| [24] | Feedback and Difficulty Scaling, Rewards and Progression | Character feedback, level-based rewards, visual progress tracking | Long-term classroom deployment; increased confidence and repeat play observed |

| [40] | Avatar and Customisation | Pac-Man style gameplay, voice-triggered avatar, power-up mechanics | Popular game structure increases motivation, though ASR accuracy limits usability |

| [21] | Feedback and Difficulty Scaling, Narrative and Story, Rewards and Progression | Story-driven progression, pop-the-balloon mini-games, auditory feedback | Motivates cleft speech repetition with scenario advancement after repeated attempts |

| [53] | Rewards and Progression | Score system, character animation, word repetition tasks | Children enjoyed progress tracking and character response; found voice interaction intuitive |

| [14] | Feedback and Difficulty Scaling, Rewards and Progression, Session Design | Point scoring, session progression, patient-specific challenges | Critical listening emphasised over pure game reward; motivates goal achievement with therapist-set plans |

| [38] | Rewards and Progression | Visual progression | Visual reinforcement encourages vocal control; suitable for short, repetitive sessions |

| [46] | Not Applicable | Games as a motivational wrapper (discussion only) | Proposes using game elements to overcome training anxiety and boost participation |

| [49] | Feedback and Difficulty Scaling, Narrative and Story | Narrative elements, multi-sensory feedback | Focus on personalisation and sensory accessibility for engagement measurement |

| [15] | Feedback and Difficulty Scaling | Colourful animation, audio response to correct/incorrect input | Designed to provide entertaining structure to speech sound exercises, aligned with therapy goals |

| [13] | Session Design | Daily challenges, verbal praise, smart home cues (lights) | Integrates therapy into household routines; supports emotional motivation via device responses |

| [41] | Feedback and Difficulty Scaling | Character animations | Children showed high participation and motivation during speech recording sessions |

| [12] | Feedback and Difficulty Scaling | Game-like assessment interface, animated feedback | Game structure improves cooperation and enjoyment during assessment; therapists report better child focus |

| [48] | Narrative and Story | Proposed use of audio-visual storytelling, point rewards | Planned use of emotionally engaging interfaces to motivate therapy adherence |

| [29] | Feedback and Difficulty Scaling, Rewards and Progression | Progress charts, colourful feedback, sound playback | Focus on therapist-defined targets; game elements used to maintain attention in early learners |

| [39] | Feedback and Difficulty Scaling, Session Design | Conversational prompts, daily therapy reminders, praise | Encourages routine formation through smart assistant dialogue and friendly voice interactions |

| [43] | Rewards and Progression | Animation, Whistle-driven progress, visual rewards | Reinforces participation through sound-controlled progression; motivates daily practice with app interaction |

| [16] | Feedback and Difficulty Scaling | Colourful prompts, word repetition scoring | Encourages pronunciation through repetition and animated feedback; suitable for early intervention |

| [42] | Rewards and Progression | Game character movement tied to ASR output, score display | Speech quality influences in-game control, increasing repetition and goal-oriented speaking |

| [25] | Rewards and Progression | Level-based progression, visual/audio cues, score system | Voice triggers movement and progression; integrates usability testing for continued motivation |

| [5] | Feedback and Difficulty Scaling | Vertical shooter and adventure platformer, word-triggered obstacles, adaptive difficulty, audio-visual rewards | Confidence-based gameplay promotes repetition and motivation; two distinct styles of gameplay suit varied interaction abilities |

| [54] | Not Applicable | None (not game-based) | Motivation derived from restored communication ability; app promotes inclusion, autonomy, and real-world interactions |

Appendix D. Resources Used to Answer the Research Questions

| Research Question 1 | Research Question 2 | Research Question 3 | Research Question 4 |

|---|---|---|---|

| [10] | [9] | [34] | [34] |

| [9] | [26] | [30] | [49] |

| [13] | [27] | [27] | [10] |

| [10] | [28] | [45] | [47] |

| [14] | [47] | [19] | [22] |

| [19] | [18] | [48] | [23] |

| [17] | [31] | [21] | [35] |

| [15] | [11] | [2] | [47] |

| [18] | [21] | [40] | [35] |

| [24] | [19] | [39] | [23] |

| [11] | [21] | [25] | [32] |

| [21] | [24] | [16] | [33] |

| [12] | [12] | [42] | [11] |

| [25] | [25] | [16] | |

| [16] | [42] | [43] | |

| [43] | [43] | | |

| [5] | | | |

References

- Fitch, J. Pediatric Speech Disorder Diagnoses More than Doubled Amid COVID-19 Pandemic. 2023. Available online: https://www.contemporarypediatrics.com/view/pediatric-speech-disorder-diagnoses-more-than-doubled-amid-covid-19-pandemic (accessed on 21 January 2025).

- Saeedi, S.; Bouraghi, H.; Seifpanahi, M.S.; Ghazisaeedi, M. Application of digital games for speech therapy in children: A systematic review of features and challenges. J. Healthc. Eng. 2022, 2022, 4814945.

- Larson, K. Serious games and gamification in the corporate training environment: A literature review. TechTrends 2020, 64, 319–328.

- Jaddoh, A.; Loizides, F.; Rana, O. Interaction between people with dysarthria and speech recognition systems: A review. Assist. Technol. 2023, 35, 330–338.

- Nicolaou, K.; Carlton, R.; Jaddoh, A.; Syed, Y.; James, J.; Abdoulqadir, C.; Loizides, F. Game based learning rehabilitation for children with speech disabilities: Presenting two bespoke video games. In BCS HCI ’23, Proceedings of the 36th International BCS Human-Computer Interaction Conference, York, UK, 28–29 August 2023; BCS Learning & Development Ltd.: Swindon, UK, 2023.

- Moher, D.; Shamseer, L.; Clarke, M.; Ghersi, D.; Liberati, A.; Petticrew, M.; Shekelle, P.; Stewart, L.A.; Group, P.P. Preferred reporting items for systematic review and meta-analysis protocols (PRISMA-P) 2015 statement. Syst. Rev. 2015, 4, 1–9.

- Schlosser, R.W.; O’Neil-Pirozzi, T.M. Problem Formulation in Evidence-based Practice and Systematic Reviews. Contemp. Issues Commun. Sci. Disord. 2006, 33, 5–10.

- Parentctrhub. Categories of Disability Under Part B of IDEA—Center for Parent Information and Resources. 2024. Available online: https://www.parentcenterhub.org/713categories/#speech (accessed on 3 January 2025).

- Anjos, I.; Grilo, M.; Ascensão, M.; Guimarães, I.; Magalhães, J.; Cavaco, S. A serious mobile game with visual feedback for training sibilant consonants. In Proceedings of the International Conference on Advances in Computer Entertainment, London, UK, 14–16 December 2017; pp. 430–450.

- Céspedes-Hernández, D.; Pérez-Medina, J.L.; González-Calleros, J.M.; Rodríguez, F.J.Á.; Muñoz-Arteaga, J. Sega-arm: A metamodel for the design of serious games to support auditory rehabilitation. In Proceedings of the XVI International Conference on Human Computer Interaction, Vilanova i la Geltrú, Spain, 7–9 September 2015; pp. 1–8.

- Nasiri, N.; Shirmohammadi, S.; Rashed, A. A serious game for children with speech disorders and hearing problems. In Proceedings of the 2017 IEEE 5th International Conference on Serious Games and Applications for Health (SeGAH), Perth, Australia, 2–4 April 2017; pp. 1–7.

- Antunes, I.; Antunes, A.; Madeira, R.N. Designing Serious Games with Pervasive Therapist Interface for Phonetic-Phonological Assessment of Children. In Proceedings of the 22nd International Conference on Mobile and Ubiquitous Multimedia, Vienna, Austria, 3–6 December 2023; pp. 532–534.

- Cassano, F.; Pagano, A.; Piccinno, A. Supporting speech therapies at (smart) home through voice assistance. In Proceedings of the International Symposium on Ambient Intelligence, Salamanca, Spain, 6–8 October 2021; pp. 105–113.

- Duval, J.; Rubin, Z.; Segura, E.M.; Friedman, N.; Zlatanov, M.; Yang, L.; Kurniawan, S. SpokeIt: Building a mobile speech therapy experience. In Proceedings of the 20th International Conference on Human-Computer Interaction with Mobile Devices and Services, Barcelona, Spain, 3–6 September 2018; pp. 1–12.

- Madeira, R.N.; Macedo, P.; Reis, S.; Ferreira, J. Super-fon: Mobile entertainment to combat phonological disorders in children. In Proceedings of the 11th Conference on Advances in Computer Entertainment Technology, Madeira, Portugal, 11–14 November 2014; pp. 1–4.

- Haluška, R.; Pleva, M.; Reňák, A. Development of Support Speech Therapy Game. In Proceedings of the 2024 International Conference on Emerging eLearning Technologies and Applications (ICETA), Stary Smokovec, Slovakia, 24–25 October 2024; pp. 174–179.

- Lv, Z.; Esteve, C.; Chirivella, J.; Gagliardo, P. A game based assistive tool for rehabilitation of dysphonic patients. In Proceedings of the 2015 3rd IEEE VR International Workshop on Virtual and Augmented Assistive Technology (VAAT), Arles, France, 23 March 2015; pp. 9–14.

- Cagatay, M.; Ege, P.; Tokdemir, G.; Cagiltay, N.E. A serious game for speech disorder children therapy. In Proceedings of the 2012 7th International Symposium on Health Informatics and Bioinformatics, Nevsehir, Turkey, 19–22 April 2012; pp. 18–23.

- Lan, T.; Aryal, S.; Ahmed, B.; Ballard, K.; Gutierrez-Osuna, R. Flappy voice: An interactive game for childhood apraxia of speech therapy. In Proceedings of the First ACM SIGCHI Annual Symposium on Computer-Human Interaction in Play, Toronto, ON, Canada, 19–21 October 2014; pp. 429–430.

- Cassano, F.; Piccinno, A.; Regina, P. End-user development in speech therapies: A scenario in the smart home domain. In Proceedings of the End-User Development: 7th International Symposium, IS-EUD 2019, Hatfield, UK, 10–12 July 2019; Proceedings 7; pp. 158–165.

- Rubin, Z.; Kurniawan, S. Speech adventure: Using speech recognition for cleft speech therapy. In Proceedings of the 6th International Conference on PErvasive Technologies Related to Assistive Environments, Rhodes, Greece, 29–31 May 2013; pp. 1–4.

- Drosos, K.; Voniati, L.; Christopoulou, M.; Kosma, E.I.; Chronopoulos, S.K.; Tafiadis, D.; Peppas, K.P.; Toki, E.I.; Ziavra, N. Information and Communication Technologies in Speech and Language Therapy towards enhancing phonological performance. In Proceedings of the 2021 5th International Symposium on Multidisciplinary Studies and Innovative Technologies (ISMSIT), Ankara, Turkey, 21–23 October 2021; pp. 187–192.

- Escudero-Mancebo, D.; Corrales-Astorgano, M.; Cardeñoso-Payo, V.; González-Ferreras, C. Evaluating the impact of an autonomous playing mode in a learning game to train oral skills of users with down syndrome. IEEE Access 2021, 9, 93480–93496.

- Nanavati, A.; Dias, M.B.; Steinfeld, A. Speak up: A multi-year deployment of games to motivate speech therapy in India. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, Montreal, Canada, 21–26 April 2018; pp. 1–12.

- Baranyi, R.; Weber, L.; Aigner, C.; Hohenegger, V.; Winkler, S.; Grechenig, T. Voice-Controlled Serious Game: Design Insights for a Speech Therapy Application. In Proceedings of the 2024 E-Health and Bioengineering Conference (EHB), Iasi, Romania, 14–15 November 2024; pp. 1–4.

- Costa, W.; Cavaco, S.; Marques, N. Deploying a Speech Therapy Game Using a Deep Neural Network Sibilant Consonants Classifier. In Proceedings of the Progress in Artificial Intelligence: 20th EPIA Conference on Artificial Intelligence, EPIA 2021, Virtual Event, 7–9 September 2021; Proceedings 20; pp. 596–608.

- Diogo, M.; Eskenazi, M.; Magalhaes, J.; Cavaco, S. Robust scoring of voice exercises in computer-based speech therapy systems. In Proceedings of the 2016 24th European Signal Processing Conference (EUSIPCO), Budapest, Hungary, 29 August–2 September 2016; pp. 393–397.

- Duval, J.; Rubin, Z.; Goldman, E.; Antrilli, N.; Zhang, Y.; Wang, S.H.; Kurniawan, S. Designing towards maximum motivation and engagement in an interactive speech therapy game. In Proceedings of the 2017 Conference on Interaction Design and Children, Stanford, CA, USA, 27–31 June 2017; pp. 589–594.

- Gačnik, M.; Starčič, A.I.; Zaletelj, J.; Zajc, M. User-centred app design for speech sound disorders interventions with tablet computers. Univers. Access Inf. Soc. 2018, 17, 821–832.

- Garay, A.P.A.; Benites, V.S.V.; Padilla, A.B.; Galvez, M.E.C. Implementation of a solution for the remote management of speech therapy in postoperative cleft lip patients using speech recognition and gamification. In Proceedings of the 2021 IEEE Engineering International Research Conference (EIRCON), Lima, Peru, 27–29 October 2021; pp. 1–4.

- Nasiri, N.; Shirmohammadi, S. Measuring performance of children with speech and language disorders using a serious game. In Proceedings of the 2017 IEEE International Symposium on Medical Measurements and Applications (MeMeA), Rome, Italy, 11–13 June 2017; pp. 15–20.

- Tan, C.T.; Johnston, A.; Bluff, A.; Ferguson, S.; Ballard, K.J. Retrogaming as visual feedback for speech therapy. In Proceedings of the SIGGRAPH Asia 2014 Mobile Graphics and Interactive Applications, Shenzhen, China, 3–6 December 2014; pp. 1–5.

- Tori, A.A.; Tori, R.; dos Santos Nunes, F.D.L. Serious game design in health education: A systematic review. IEEE Trans. Learn. Technol. 2022, 15, 827–846.

- Barletta, V.; Calvano, M.; Curci, A.; Piccinno, A. A New Interactive Paradigm for Speech Therapy. In Proceedings of the IFIP Conference on Human-Computer Interaction, York, UK, 28 August–1 September 2023; pp. 380–385.

- Hair, A.; Ballard, K.J.; Markoulli, C.; Monroe, P.; Mckechnie, J.; Ahmed, B.; Gutierrez-Osuna, R. A longitudinal evaluation of tablet-based child speech therapy with Apraxia World. ACM Trans. Access. Comput. (TACCESS) 2021, 14, 1–26.

- Hair, A.; Monroe, P.; Ahmed, B.; Ballard, K.J.; Gutierrez-Osuna, R. Apraxia world: A speech therapy game for children with speech sound disorders. In Proceedings of the 17th ACM Conference on Interaction Design and Children, Trondheim, Norway, 19–22 June 2018; pp. 119–131.

- Lopes, M.; Magalhães, J.; Cavaco, S. A voice-controlled serious game for the sustained vowel exercise. In Proceedings of the 13th International Conference on Advances in Computer Entertainment Technology, Osaka, Japan, 9–12 November 2016; pp. 1–6.

- Lopes, V.; Magalhães, J.; Cavaco, S. Sustained Vowel Game: A Computer Therapy Game for Children with Dysphonia. In Proceedings of the INTERSPEECH, Graz, Austria, 15–19 September 2019; pp. 26–30.

- Qiu, L.; Abdullah, S. Voice assistants for speech therapy. In Proceedings of the Adjunct 2021 ACM International Joint Conference on Pervasive and Ubiquitous Computing and Proceedings of the 2021 ACM International Symposium on Wearable Computers, Virtual Event, 21–26 September 2021; pp. 211–214.

- Tan, C.T.; Johnston, A.; Ballard, K.; Ferguson, S.; Perera-Schulz, D. sPeAK-MAN: Towards popular gameplay for speech therapy. In Proceedings of the 9th Australasian Conference on Interactive Entertainment: Matters of Life and Death, Melbourne, Australia, 30 September–1 October 2013; pp. 1–4.

- Liu, N.; Barakova, E.; Han, T. A Novel Gamified Approach for Collecting Speech Data from Young Children with Dysarthria: Feasibility and Positive Engagement Evaluation. In Proceedings of the 2024 17th International Convention on Rehabilitation Engineering and Assistive Technology (i-CREATe), Shanghai, China, 23–26 August 2024; pp. 1–5.

- Ondáš, S.; Staš, J.; Ševc, R. Speech recognition as a supportive tool in the speech therapy game. In Proceedings of the 2024 34th International Conference Radioelektronika (RADIOELEKTRONIKA), Zilina, Slovakia, 17–18 April 2024; pp. 1–4.

- Karthan, M.; Hieber, D.; Pryss, R.; Schobel, J. Developing a gamification-based mhealth platform to support orofacial myofunctional therapy for children. In Proceedings of the 2023 IEEE 36th International Symposium on Computer-Based Medical Systems (CBMS), L’Aquila, Italy, 22–24 June 2023; pp. 169–172.

- Barletta, V.; Cassano, F.; Pagano, A.; Piccinno, A. A collaborative ai dataset creation for speech therapies. In Proceedings of the CoPDA2022–Sixth International Workshop on Cultures of Participation in the Digital Age: AI for Humans or Humans for AI? Frascati, Italy, 7 June 2022; pp. 81–85.

- Desolda, G.; Lanzilotti, R.; Piccinno, A.; Rossano, V. A system to support children in speech therapies at home. In Proceedings of the 14th Biannual Conference of the Italian SIGCHI Chapter, Bolzano, Italy, 27–29 June 2021; pp. 1–5.

- Elo, C.; Inkinen, M.; Autio, E.; Vihriälä, T.; Virkki, J. The role of games in overcoming the barriers to paediatric speech therapy training. In Proceedings of the International Conference on Games and Learning Alliance, Tampere, Finland, 30 November–2 December 2022; pp. 181–192.

- Grossinho, A.; Guimaraes, I.; Magalhaes, J.; Cavaco, S. Robust phoneme recognition for a speech therapy environment. In Proceedings of the 2016 IEEE International Conference on Serious Games and Applications for Health (SeGAH), Orlando, FL, USA, 11–13 May 2016; pp. 1–7.

- Nayar, R. Towards designing speech technology based assistive interfaces for children’s speech therapy. In Proceedings of the 19th ACM International Conference on Multimodal Interaction, Glasgow, UK, 13–17 November 2017; pp. 609–613.

- Cano, S.; Collazos, C.A.; Aristizábal, L.F.; Gonzalez, C.S.; Moreira, F. Towards a methodology for user experience assessment of serious games with children with cochlear implants. Telemat. Inform. 2018, 35, 993–1004.

- Abdoulqadir, C.; Loizides, F.; Hoyos, S. Enhancing mobile game accessibility: Guidelines for users with visual and dexterity dual impairments. In Proceedings of the International Conference on Human-Centred Software Engineering, Reykjavik, Iceland, 8–10 July 2024; pp. 255–263.

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71.

- Duval, J. A mobile game system for improving the speech therapy experience. In Proceedings of the 19th International Conference on Human-Computer Interaction with Mobile Devices and Services, Vancouver, BC, Canada, 9–14 July 2017; pp. 1–3.

- Ahmed, B.; Monroe, P.; Hair, A.; Tan, C.T.; Gutierrez-Osuna, R.; Ballard, K.J. Speech-driven mobile games for speech therapy: User experiences and feasibility. Int. J. Speech-Lang. Pathol. 2018, 20, 644–658.

- Murero, M.; Vita, S.; Mennitto, A.; D’Ancona, G. Artificial intelligence for severe speech impairment: Innovative approaches to AAC and communication. In Proceedings of the PSYCHOBIT, Naples, Italy, 4–5 October 2020.

| References | Level of Independence | Notes |

|---|---|---|

| [9,11,14,23,26,27,28,29,30,31,32,33] | High | Child plays independently without therapist supervision (therapist supervision is optional if available). |

| [14,15,16,21,28,34,35,36,37,38,39,40,41,42,43] | Medium | Therapist prepares the game, but the child plays autonomously. |

| [10,12,13,17,18,20,22,23,24,34,44,45,46,47,48] | Low | Requires supervision and reinforcement by therapists. |

| Design Recommendation | Supporting Studies |

|---|---|

| Real-time feedback | [12,16,17,25,26,27,28,31,32,36,37,41,42,45,47] |

| Customisation and adaptation | [12,16,20,21,25,26,35,36,37,42,45] |

| Type of Rehabilitation Exercise | Supporting Studies |

|---|---|

| Loudness | [17,38] |

| Vowel Exercises | [9,25,27,32,37,38,47] |

| Consonant Words | [9,12,16,25,26,41,42,47] |

| Pitch | [17,24,38,45] |

| Volume | [24,28,45] |

| Emotional Expression and Engagement | [20,23,43] |

| Customisation and adaptation | [12,16,20,21,35,36,37,41,45] |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Abdoulqadir, C.; Loizides, F. Interaction, Artificial Intelligence, and Motivation in Children’s Speech Learning and Rehabilitation Through Digital Games: A Systematic Literature Review. Information 2025, 16, 599. https://doi.org/10.3390/info16070599
