Trajectories of Digital Teaching Competence: A Multidimensional PLS-SEM Study in University Contexts

Abstract

1. Introduction

1.1. The DigCompEdu Framework and Its Impact on Teacher Digital Competence

1.2. Structural Equation Modeling (SEM) in Digital Competence Analysis

2. Materials and Methods

2.1. Participants

2.2. Instruments

2.3. Procedure

2.4. Data Analysis

3. Results

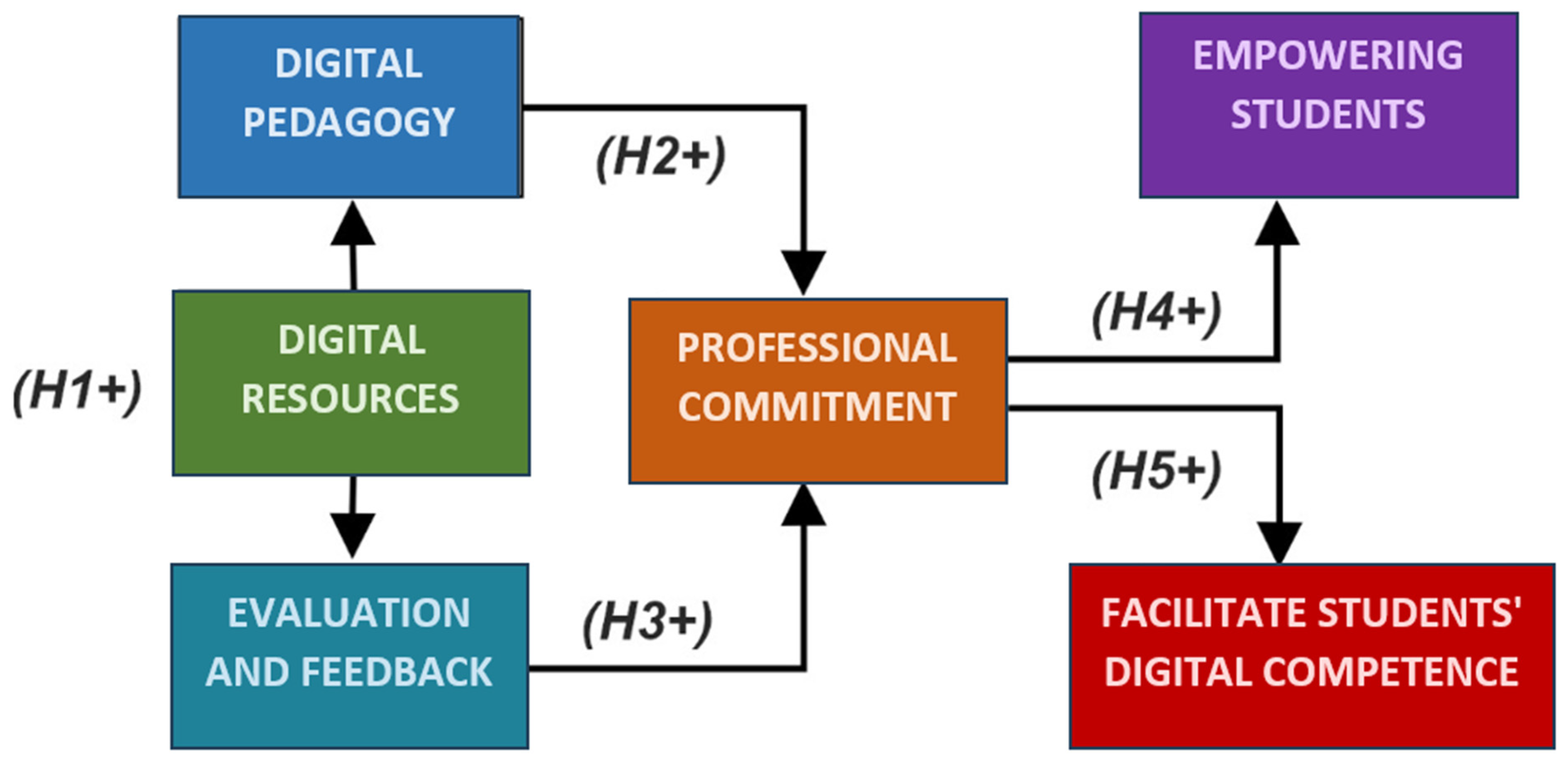

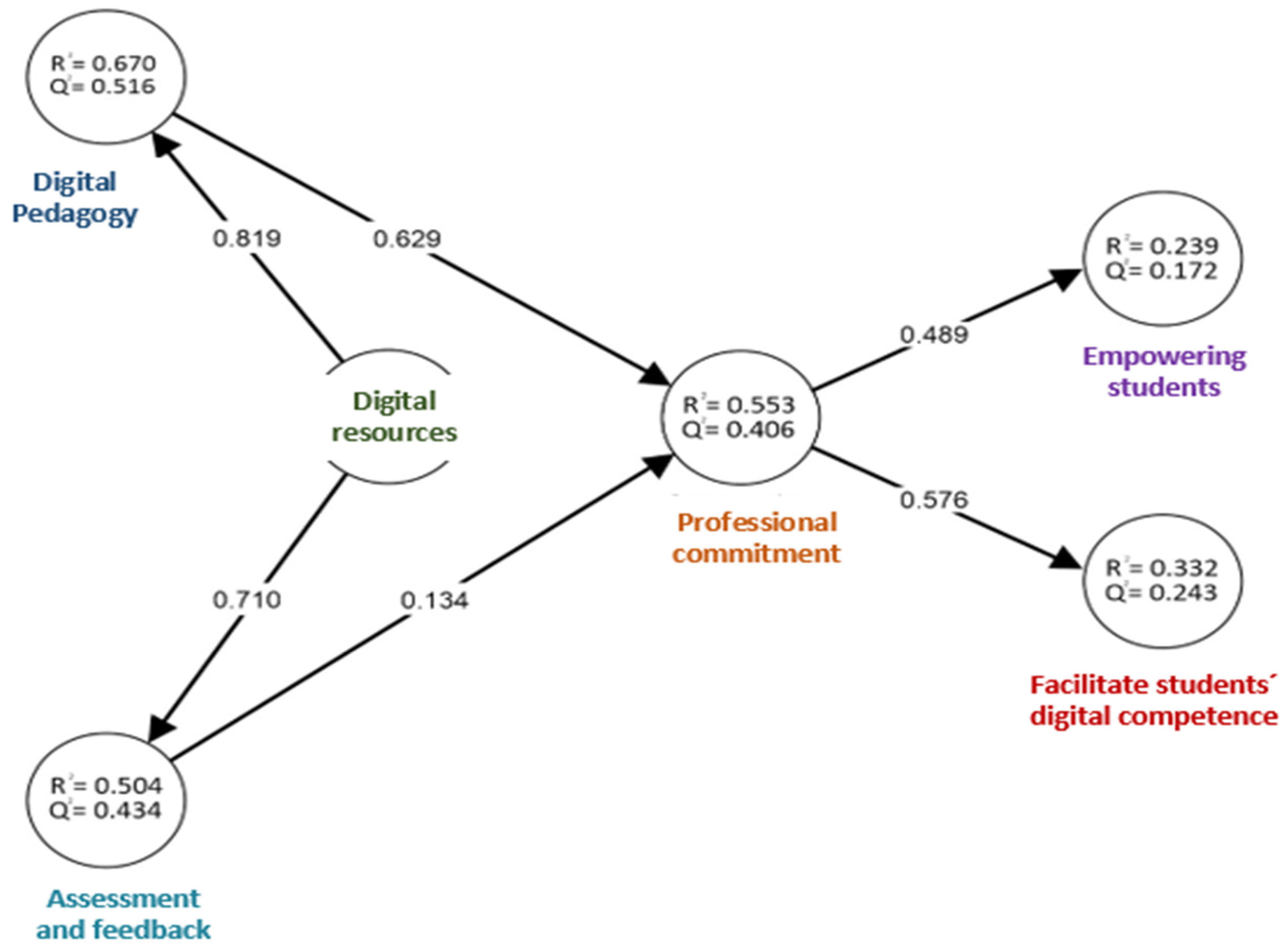

PLS Path Model

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Factor | Indicator | Estimate | SE | Z | p | β |
|---|---|---|---|---|---|---|
| Professional commitment | item 1 | 0.713 | 0.0138 | 51.6 | <0.001 | 0.794 |
| | item 2 | 0.605 | 0.0129 | 47.0 | <0.001 | 0.746 |
| | item 3 | 0.926 | 0.0178 | 52.0 | <0.001 | 0.805 |
| Digital resources | item 1 | 0.650 | 0.0143 | 45.3 | <0.001 | 0.725 |
| | item 2 | 0.936 | 0.0204 | 45.9 | <0.001 | 0.733 |
| Digital pedagogy | item 1 | 0.941 | 0.0169 | 55.8 | <0.001 | 0.808 |
| | item 2 | 1.153 | 0.0177 | 65.2 | <0.001 | 0.891 |
| | item 3 | 0.678 | 0.0126 | 53.9 | <0.001 | 0.789 |
| | item 4 | 1.058 | 0.0160 | 65.9 | <0.001 | 0.898 |
| Assessment and feedback | item 1 | 0.797 | 0.0126 | 63.1 | <0.001 | 0.891 |
| | item 3 | 0.804 | 0.0136 | 58.9 | <0.001 | 0.850 |
| Empowering students | item 1 | 0.754 | 0.0230 | 32.8 | <0.001 | 0.619 |
| | item 2 | 0.884 | 0.0221 | 40.0 | <0.001 | 0.668 |
| | item 3 | 0.647 | 0.0175 | 37.1 | <0.001 | 0.652 |
| | item 4 | 0.819 | 0.0187 | 43.8 | <0.001 | 0.710 |
| Facilitating students’ digital competence | item 5 | 1.076 | 0.0172 | 62.4 | <0.001 | 0.876 |
| | item 6 | 1.142 | 0.0204 | 56.1 | <0.001 | 0.818 |
| | item 7 | 0.942 | 0.0170 | 55.3 | <0.001 | 0.811 |
| | item 8 | 1.059 | 0.0172 | 61.7 | <0.001 | 0.870 |
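
The Z column in the factor-loading table appears to be the ratio of each unstandardized estimate to its standard error. The sketch below checks that reading on the first five rows. The function names are illustrative, and the interpretation of Z as estimate divided by SE is an assumption that the article does not state explicitly.

```python
# Rows copied from the factor-loading table:
# (factor, indicator, estimate, SE, reported Z).
rows = [
    ("Professional commitment", "item 1", 0.713, 0.0138, 51.6),
    ("Professional commitment", "item 2", 0.605, 0.0129, 47.0),
    ("Professional commitment", "item 3", 0.926, 0.0178, 52.0),
    ("Digital resources", "item 1", 0.650, 0.0143, 45.3),
    ("Digital resources", "item 2", 0.936, 0.0204, 45.9),
]


def z_statistic(estimate, se):
    """Assumed definition: unstandardized estimate divided by its SE."""
    return estimate / se


def max_relative_gap(rows):
    """Largest relative difference between recomputed and reported Z."""
    return max(
        abs(z_statistic(est, se) - z) / z for _, _, est, se, z in rows
    )


if __name__ == "__main__":
    for factor, item, est, se, z in rows:
        print(f"{factor}, {item}: recomputed {z_statistic(est, se):.1f}, reported {z}")
```

Small gaps between recomputed and reported values are expected, because the published estimates and standard errors are rounded.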

| Construct | Cronbach’s α | Composite Reliability (Rho A) | Composite Reliability | Average Variance Extracted (AVE) |
|---|---|---|---|---|
| Professional commitment | 0.826 | 0.832 | 0.896 | 0.741 |
| Empowering students | 0.811 | 0.821 | 0.888 | 0.726 |
| Facilitating students’ digital competence | 0.922 | 0.935 | 0.941 | 0.761 |
| Assessment and feedback | 0.862 | 0.893 | 0.935 | 0.877 |
| Digital pedagogy | 0.903 | 0.907 | 0.933 | 0.776 |
| Digital resources | 0.794 | 0.796 | 0.867 | 0.765 |

| Fornell–Larcker Criterion | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|
| 1. Professional commitment | 0.861 | | | | | |
| 2. Empowering students | 0.489 | 0.852 | | | | |
| 3. Facilitating students’ digital competence | 0.576 | 0.510 | 0.872 | | | |
| 4. Assessment and feedback | 0.658 | 0.755 | 0.637 | 0.937 | | |
| 5. Digital pedagogy | 0.740 | 0.749 | 0.823 | 0.834 | 0.881 | |
| 6. Digital resources | 0.599 | 0.674 | 0.690 | 0.710 | 0.819 | 0.875 |
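
Under the Fornell–Larcker criterion, each diagonal entry is the square root of the construct's AVE, and it should exceed that construct's correlations with every other construct. The sketch below checks both conditions using the AVE values and the matrix reported in this article. The helper names and the rounding tolerance of 0.001 are assumptions made for illustration.

```python
import math

names = [
    "Professional commitment",
    "Empowering students",
    "Facilitating students' digital competence",
    "Assessment and feedback",
    "Digital pedagogy",
    "Digital resources",
]

# AVE values from the reliability table, in the same construct order.
ave = [0.741, 0.726, 0.761, 0.877, 0.776, 0.765]

# Lower triangle of the Fornell-Larcker matrix, diagonal included.
fl = [
    [0.861],
    [0.489, 0.852],
    [0.576, 0.510, 0.872],
    [0.658, 0.755, 0.637, 0.937],
    [0.740, 0.749, 0.823, 0.834, 0.881],
    [0.599, 0.674, 0.690, 0.710, 0.819, 0.875],
]


def diagonal_matches_sqrt_ave(fl, ave, tol=0.001):
    """True if every diagonal entry equals sqrt(AVE) within rounding."""
    return all(abs(fl[i][i] - math.sqrt(a)) <= tol for i, a in enumerate(ave))


def fornell_larcker_violations(fl, names):
    """Pairs whose correlation reaches the smaller of the two diagonals."""
    violations = []
    for i in range(len(fl)):
        for j in range(i):
            r = fl[i][j]
            if r >= min(fl[i][i], fl[j][j]):
                violations.append((names[i], names[j], r))
    return violations


if __name__ == "__main__":
    print("Diagonal equals sqrt(AVE):", diagonal_matches_sqrt_ave(fl, ave))
    print("Violations:", fornell_larcker_violations(fl, names))
```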

| Heterotrait–Monotrait Ratio Matrix (HTMT) | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|
| 1. Professional commitment | | | | | | |
| 2. Empowering students | 0.594 | | | | | |
| 3. Facilitating students’ digital competence | 0.642 | 0.585 | | | | |
| 4. Assessment and feedback | 0.772 | 0.810 | 0.698 | | | |
| 5. Digital pedagogy | 0.848 | 0.873 | 0.899 | 0.840 | | |
| 6. Digital resources | 0.781 | 0.804 | 0.847 | 0.807 | 0.834 | |
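
HTMT values are usually read against a cutoff. The sketch below lists the construct pairs in the matrix above that reach a given threshold. The cutoffs of 0.85 (stricter) and 0.90 (more lenient) are commonly used conventions and are assumptions here, since this section does not state which one the authors applied. The function name is illustrative.

```python
names = [
    "Professional commitment",
    "Empowering students",
    "Facilitating students' digital competence",
    "Assessment and feedback",
    "Digital pedagogy",
    "Digital resources",
]

# Strict lower triangle of the HTMT matrix (no diagonal).
lower = [
    [],
    [0.594],
    [0.642, 0.585],
    [0.772, 0.810, 0.698],
    [0.848, 0.873, 0.899, 0.840],
    [0.781, 0.804, 0.847, 0.807, 0.834],
]


def pairs_at_or_above(lower, names, threshold):
    """Return (row construct, column construct, HTMT) for values >= threshold."""
    return [
        (names[i], names[j], value)
        for i, row in enumerate(lower)
        for j, value in enumerate(row)
        if value >= threshold
    ]


if __name__ == "__main__":
    for cutoff in (0.85, 0.90):  # assumed conventional cutoffs
        flagged = pairs_at_or_above(lower, names, cutoff)
        print(f"HTMT >= {cutoff}: {flagged}")
```

With these values, no pair reaches 0.90, while two pairs involving digital pedagogy exceed the stricter 0.85 cutoff.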

| Path | Path Coefficient (β) | M | SD | t-Statistic | p |
|---|---|---|---|---|---|
| Professional commitment → empowering students | 0.489 | 0.489 | 0.014 | 36.158 | *** |
| Professional commitment → facilitating students’ digital competence | 0.576 | 0.577 | 0.012 | 49.737 | *** |
| Assessment and feedback → professional commitment | 0.134 | 0.133 | 0.021 | 6.299 | *** |
| Digital pedagogy → professional commitment | 0.629 | 0.629 | 0.020 | 31.993 | *** |
| Digital resources → assessment and feedback | 0.710 | 0.710 | 0.009 | 82.807 | *** |
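
The direct paths above chain together, so a specific indirect effect can be approximated as the product of the coefficients along a chain. The sketch below illustrates that arithmetic only. The function name and the chosen chains are assumptions for illustration. The products are point estimates that the article does not report, and judging their significance would require bootstrapped intervals, which are not available in this section.

```python
from math import prod

# Direct path coefficients copied from the path table: (source, target) -> beta.
paths = {
    ("Professional commitment", "Empowering students"): 0.489,
    ("Professional commitment", "Facilitating students' digital competence"): 0.576,
    ("Assessment and feedback", "Professional commitment"): 0.134,
    ("Digital pedagogy", "Professional commitment"): 0.629,
    ("Digital resources", "Assessment and feedback"): 0.710,
}


def indirect_effect(chain, paths):
    """Multiply the direct coefficients along consecutive links of a chain."""
    return prod(paths[(a, b)] for a, b in zip(chain, chain[1:]))


if __name__ == "__main__":
    two_step = ["Digital pedagogy", "Professional commitment", "Empowering students"]
    three_step = [
        "Digital resources",
        "Assessment and feedback",
        "Professional commitment",
        "Empowering students",
    ]
    print(f"{' -> '.join(two_step)}: {indirect_effect(two_step, paths):.3f}")
    print(f"{' -> '.join(three_step)}: {indirect_effect(three_step, paths):.3f}")
```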

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

González-Medina, I.; Gavín-Chocano, Ó.; Pérez-Navío, E.; Maldonado Berea, G.A. Trajectories of Digital Teaching Competence: A Multidimensional PLS-SEM Study in University Contexts. Information 2025, 16, 373. https://doi.org/10.3390/info16050373