The evaluation and discussion phase analyzes both quantitative and qualitative findings. It aims to identify key insights, compare trends, and interpret results to address the RQs. By integrating statistical analysis, the study develops an understanding of user needs and platform requirements. The section is structured into three subsections: first, the survey is evaluated; second, the expert interviews are analyzed; and finally, both sets of findings are combined and interpreted.

5.1. Evaluation of Quantitative Research

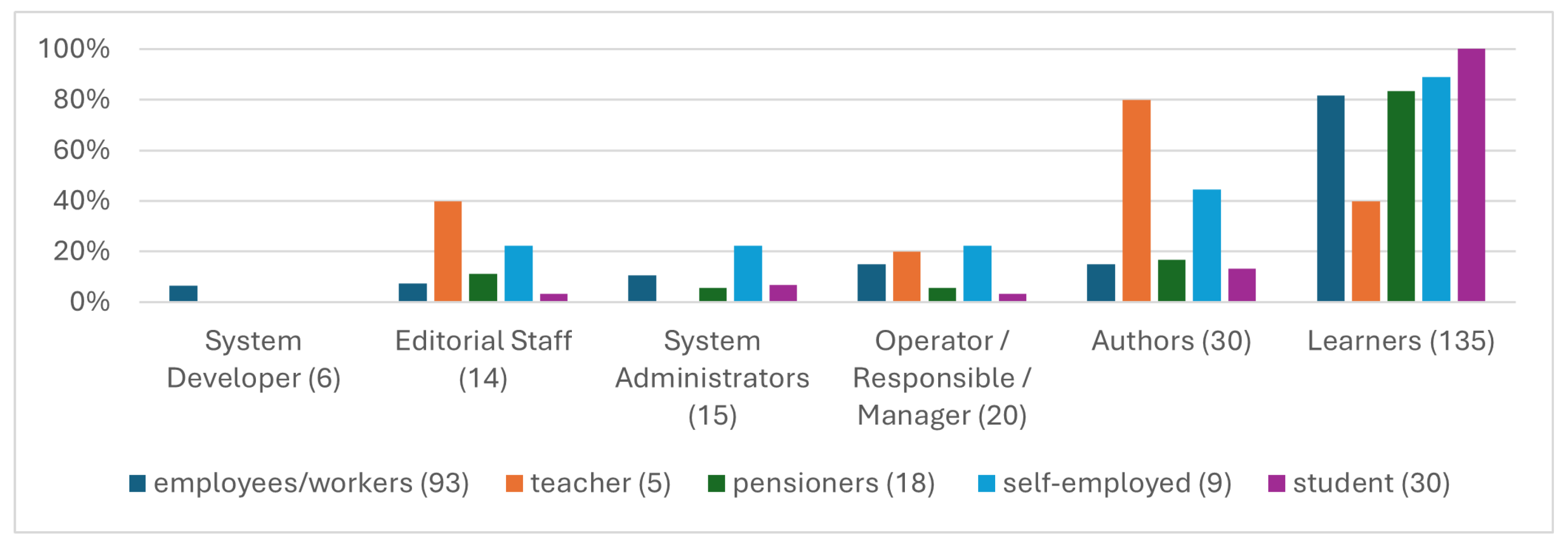

A total of 265 participants started the survey, of whom 160 completed it. Of these, 68 (42.5%) were female, 86 (53.75%) male, and 6 (3.75%) gender-diverse. Regarding educational qualifications, most participants held a master’s degree (29.38%), followed by a bachelor’s degree (25.63%). About 13.13% had a university entrance qualification, 10% had completed vocational training, and 7.50% held a doctorate. Regarding age, 5.63% were 18–24, 16.25% were 25–34, 22.50% each were 35–44 and 45–54, 25.63% were 55–64, and 7.50% were 65 or older. Most respondents were employees/workers (93/58.13%), followed by students (30/18.75%), pensioners (18/11.25%), and the self-employed (9/5.63%, obtained from the “other” option). Five teachers accounted for 3.13%, two unemployed participants for 1.25%, and three participants (1.88%) selected “other.” No participant was classified as a pupil, lecturer/professor, or trainee.
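The reported shares can be reproduced from the raw counts. The following Python sketch assumes only the counts given above and a denominator of 160 completed responses; the helper name `share` is illustrative. It uses the `decimal` module with half-up rounding because Python's built-in `round()` rounds exact ties such as 5.625 to the even neighbor (5.62), whereas the text reports 5.63.

```python
from decimal import Decimal, ROUND_HALF_UP

N_COMPLETED = 160  # participants who completed the survey

def share(count: int, total: int = N_COMPLETED) -> Decimal:
    """Return count/total as a percentage, rounded half-up to two decimals."""
    pct = Decimal(count) * 100 / Decimal(total)
    return pct.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)

# Counts as reported in the text.
gender = {"female": 68, "male": 86, "gender-diverse": 6}
occupation = {
    "employees/workers": 93,
    "students": 30,
    "pensioners": 18,
    "self-employed": 9,
    "teachers": 5,
    "unemployed": 2,
    "other": 3,
}

for groups in (gender, occupation):
    # Single-choice questions: the groups must add up to all respondents.
    assert sum(groups.values()) == N_COMPLETED
    for name, count in groups.items():
        print(f"{name}: {count} ({share(count)}%)")
```

Both the gender and the occupational groups sum to 160, which is consistent with single-choice questions; the multiple-choice user-stereotype question that follows does not have this property.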

Compared to the German population (used as a reference because the study was conducted in Germany), the proportion of male participants is elevated [25], the participants’ educational level is considerably higher than the national average [26], and both the youngest and oldest age groups are under-represented (compared to [27]: 18–24: 9%; 25–34: 15%; 35–44: 16%; 45–54: 15%; 55–64: 19%; 65+: 27%). This demographic distribution is likely due to the high number of participants affiliated with the distance-learning university in Hagen. Although the participants do not fully represent the population, they reflect the assumed core user group, so the study remains relevant to the design of the system. This assumes that the QBLM Platform will primarily be used by adult learners in HEI and enterprise environments. Nevertheless, future studies should include a more demographically diverse sample to further validate the platform’s usability and accessibility for broader audiences.

Participants were asked to classify themselves according to predefined user stereotypes and could choose multiple options. In total, 84% classified themselves as learners, by far the largest group, which underlines the platform’s main purpose as a learning platform. In addition to the given groups, six employees (3.75%) described themselves as “System Developers” in the “other” text field. They were included as a separate category and removed from the “other” group. As a result, the needs of system developers should also be considered in the system’s design and development. Because the “unemployed” (2) and “other” (3) categories each contained very few participants, they were excluded from the final evaluation of user stereotypes.

Figure 2 visualizes the distribution of user stereotypes. The x-axis represents the professions, while the y-axis represents the percentage of participants within each profession who identified with each user stereotype. The legend shows, in brackets, the total number of participants for each profession and user stereotype. All students and most employees, self-employed professionals, and pensioners classified themselves as learners. Only teachers identified as learners markedly less often. Instead, teachers mainly classified themselves as authors and editorial staff, which suggests that they are more involved in creating learning content than in consuming it. Self-employed professionals also showed a higher classification rate as authors and editorial staff. For the other user stereotypes, no clear association with specific professions is evident.

Next, the main functions of the QBLM Platform were identified.

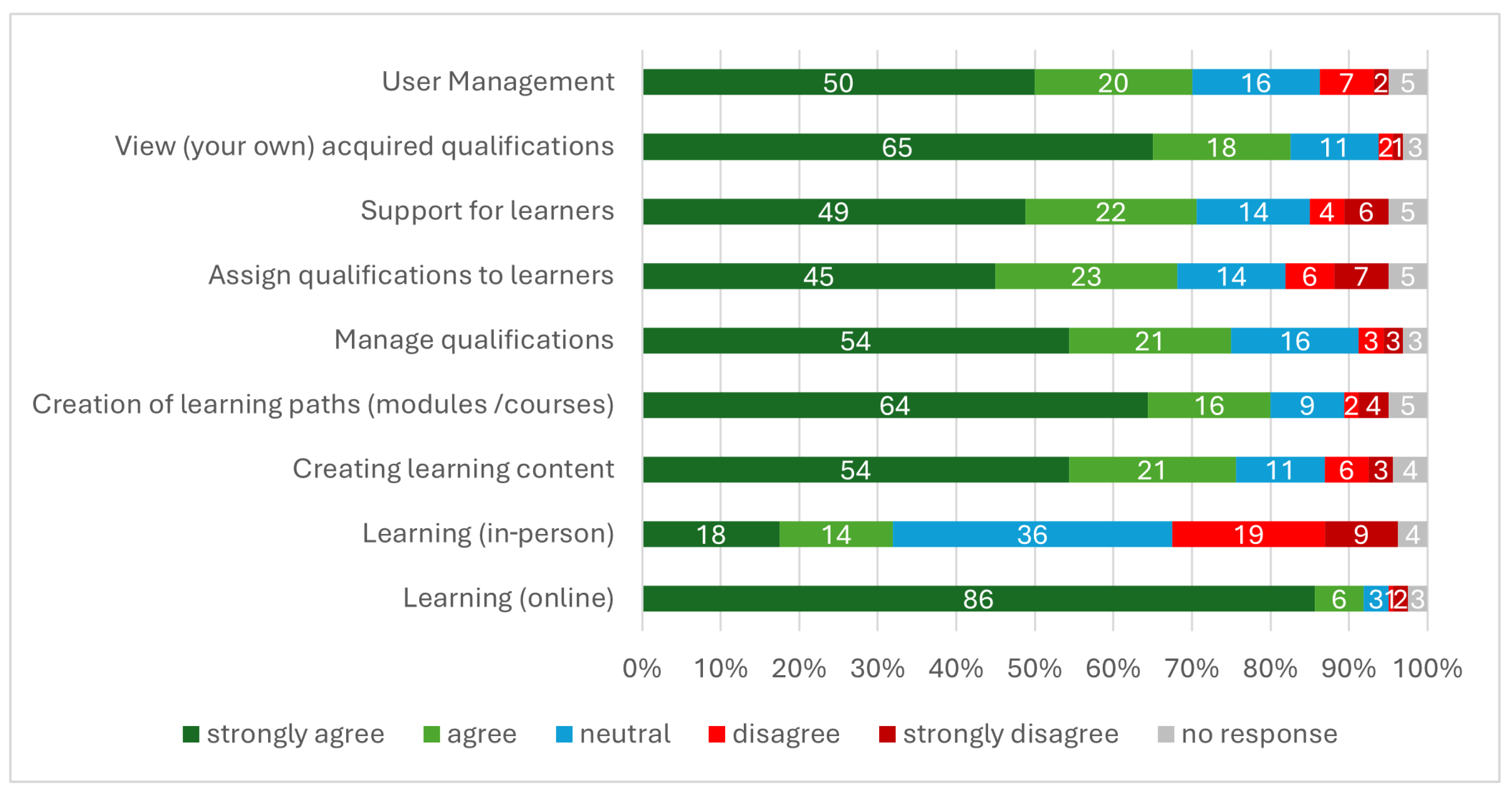

Figure 3 shows the results of the Likert-scale items. Each row shows the participants’ agreement with including the respective functionality in the QBLM Platform. The diagram represents the responses of all 160 participants as percentages. Participants broadly agreed on all functionalities except the ability to use the QBLM Platform for in-person learning.

Therefore, the QBLM Platform has to provide the expected management functionalities, such as maintaining users and CQs. Furthermore, support functionality, such as a help desk, and the creation of learning content should be offered. The relevance of the available QBLM Software (LMS, CAT, CM, and CPM) is confirmed. In addition, the QBLM Platform should be extended with, or interoperate with, user management and support systems. Learning in person was not considered relevant; this likely does not reflect a general rejection of in-person learning but rather its misalignment with the implied digital and systemic nature of CBL and the QBLM Platform. Users perceive the QBLM Platform as a tool to support, manage, and track online learning. Consequently, the applicability of CBL to in-person learning has to be evaluated and outlined.

Additionally, participants were asked to share further ideas for relevant functionalities. One idea was seamless LMS integration through APIs and external interfaces while maintaining system stability. Participants mentioned features such as learning and content delivery, self-assessment support, progress tracking, and personalized recommendations. A further suggestion was customization, allowing learners to adapt the UI to their needs. Motivation and engagement were also highlighted, with features such as learning strategies and interactive elements. Participants further stressed the importance of feedback systems. Analytics was another key aspect, providing insights into learning progress. Some suggested community and collaboration features to facilitate peer exchange, while others proposed time management tools to support structured learning. These suggestions indicate a strong user demand for a more integrated, personalized, and engaging learning experience. Consequently, future development of the QBLM Platform should prioritize interfaces and features that improve feedback and collaboration.

Next, the most relevant use cases were examined. Each respondent could select up to three preferred use cases. The most frequently chosen use case was participation in online learning modules, selected by 105 participants (65.63%). This was followed by monitoring learning progress, chosen by 78 participants (48.75%), and providing learning materials, which 71 participants (44.38%) found relevant. Additionally, 65 participants (40.63%) identified conducting examinations and tests as a key use case. Supporting and supervising learners was selected by 48 participants (30%), while creating and managing courses was considered important by 36 participants (22.5%). Less frequently mentioned use cases included assigning competencies and qualifications (28 participants, 17.50%), participation in in-person events (19 participants, 11.88%), and the integration of external content and tools (13 participants, 8.13%). The least selected use case was generating reports and analyses, which 12 participants (7.5%) considered relevant. The results emphasize a strong focus on participation in online learning and progress tracking, while administrative and analytical functions were of lower priority to the respondents. Consequently, ensuring seamless integration and high usability of the LMS, or of whichever platform component offers learning content, should be prioritized.
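Because respondents could select up to three use cases, the percentages are relative to the 160 respondents rather than to the number of selections, so they sum to more than 100%. The Python sketch below is a hypothetical helper, not the authors’ evaluation script: it recomputes the shares from the reported counts, ranks the use cases, and checks that the total number of selections does not exceed 160 × 3.

```python
from decimal import Decimal, ROUND_HALF_UP

N = 160          # completed responses
MAX_CHOICES = 3  # each respondent could select up to three use cases

# Selection counts as reported in the text.
use_cases = {
    "participation in online learning modules": 105,
    "monitoring learning progress": 78,
    "providing learning materials": 71,
    "conducting examinations and tests": 65,
    "supporting and supervising learners": 48,
    "creating and managing courses": 36,
    "assigning competencies and qualifications": 28,
    "participation in in-person events": 19,
    "integrating external content and tools": 13,
    "generating reports and analyses": 12,
}

def share(count: int, total: int = N) -> Decimal:
    """Share of respondents (not of selections), rounded half-up."""
    return (Decimal(count) * 100 / Decimal(total)).quantize(
        Decimal("0.01"), rounding=ROUND_HALF_UP
    )

# Plausibility check for a multi-select question.
total_selections = sum(use_cases.values())
assert total_selections <= N * MAX_CHOICES

ranking = sorted(use_cases.items(), key=lambda item: item[1], reverse=True)
for rank, (name, count) in enumerate(ranking, start=1):
    print(f"{rank:2d}. {name}: {count} ({share(count)}%)")
```

With the reported counts, the 475 selections stay below the maximum of 480, and 28 of 160 respondents corresponds to 17.50%.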

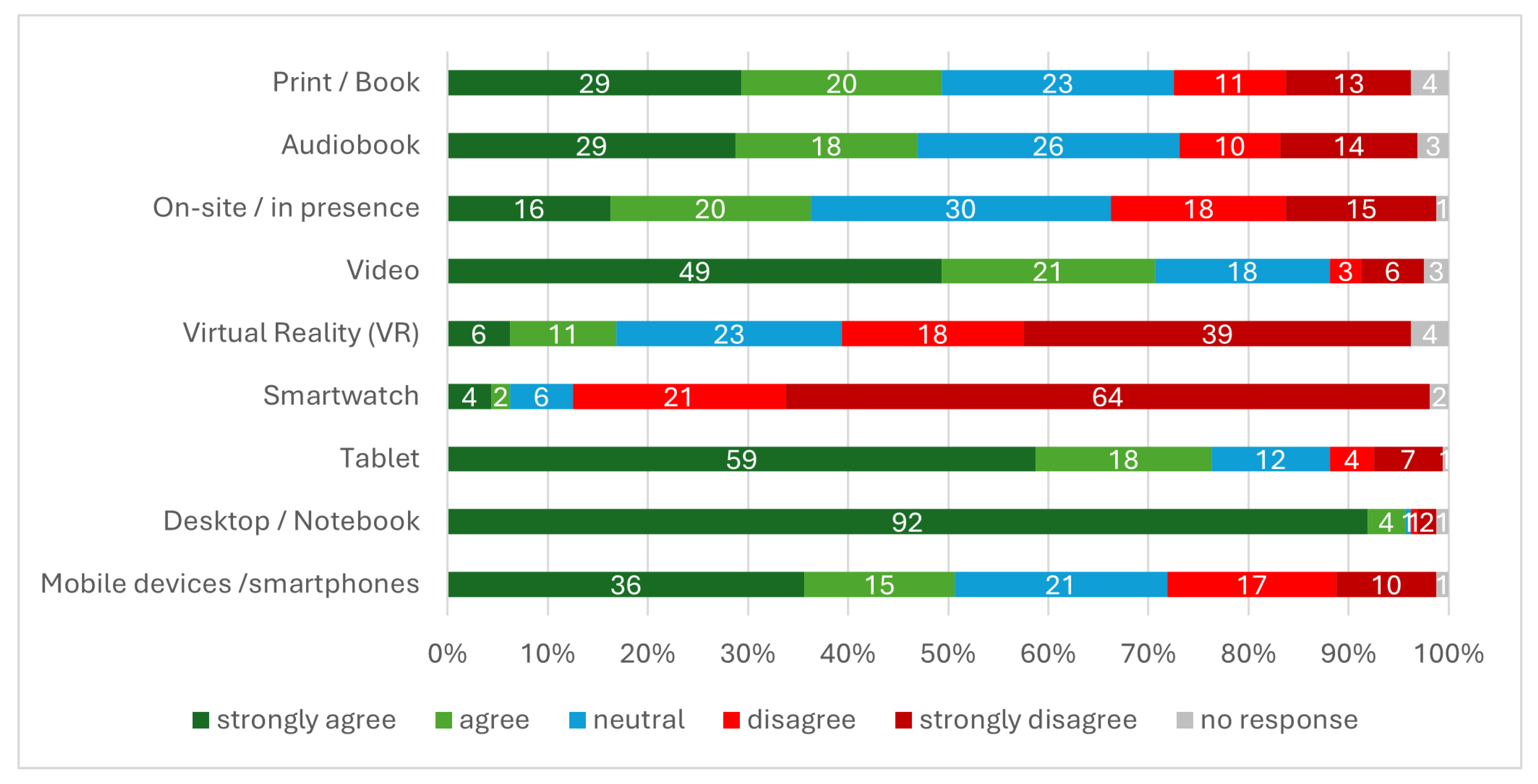

Afterwards, the technical modalities and devices through which users would operate the QBLM Platform were examined. To this end, participants were asked whether they would consider using the QBLM Platform via various technical devices.

Figure 4 visualizes the results of this Likert scale in percentages. Desktop/notebook devices are the most favored option, with 92% of participants strongly agreeing with their use. Tablets also received strong support, with 59% strongly agreeing and 18% agreeing, making them the second most preferred device. Mobile devices/smartphones were accepted by a majority as well, with 36% strongly agreeing and 15% agreeing, although 17% disagreed and 10% strongly disagreed, indicating some hesitation.

Print/books and audiobooks received balanced responses, with 29% strongly agreeing in both cases, while 23% and 26% of participants, respectively, remained neutral. Videos were positively received, with 49% strongly agreeing and 21% agreeing, making them the most favored digital format. On-site/in-presence learning met with mixed perceptions: 16% strongly agreed, 30% remained neutral, and 18% disagreed. In contrast, virtual reality (VR) and smartwatches met with high levels of rejection: 39% strongly disagreed with VR, and as many as 64% strongly disagreed with using smartwatches for the QBLM Platform. Consequently, traditional digital devices (desktops, tablets, and mobile devices) are the preferred modalities, while emerging technologies such as VR and smartwatches face resistance among users. Development can therefore concentrate on these established modalities.

Additionally, participants provided further suggestions regarding access to the QBLM Platform. One idea was offline usage. Another proposal was support for gaming consoles, such as the Nintendo Switch, as learning devices. Accessibility was also highlighted, with a suggestion to ensure usability for blind and deaf users. Health considerations were raised as well, including a recommendation to provide guidelines on ergonomic risks. Further technical enhancements included integrating video conferencing tools and a virtual whiteboard to support collaboration. Artificial intelligence (AI)-based learning was another key theme, with suggestions for adaptive training that adjusts to users’ needs. Participants also proposed e-book reader compatibility for longer texts. Furthermore, compliance with corporate IT requirements, including data security policies, was requested. Therefore, the QBLM Platform should support diverse access options, ensure accessibility and corporate compliance, and incorporate AI-driven personalization and collaborative tools to better meet user needs. These requirements could be addressed through additional software components that extend the QBLM Platform, which confirms the demand for a modular and flexible approach.

Next, participants were asked to select up to three non-functional and up to three functional requirements that they considered essential. The results highlight the key priorities for platform design and implementation.

Among the non-functional requirements, usability (71.88%) emerged as the most important factor, followed by performance (48.13%), availability (43.75%), and data security and privacy (35.63%). Usage costs for learners (32.50%) were rated as more relevant than operating and investment costs (8.13%). Accessibility (15.00%), backup and recovery (13.75%), scalability (10.63%), and regulatory compliance (9.38%) were assigned lower overall importance. Consequently, the focus has to be placed on an intuitive and user-friendly interface, fast performance with minimal downtime, and robust data security measures, as these aspects are the users’ primary concerns.

Regarding functional requirements, the most important feature was the integration of learning resources (54.38%). Creating and managing courses (40.63%), collaborative features (38.13%), and conducting exams and assessments (36.88%) were other key priorities. Analysis and reporting tools (26.25%) and qualification management (23.13%) were recognized as important for tracking learning outcomes and managing competencies. Integration with external systems (18.13%) and user and role management (15.63%) were less essential. Therefore, the QBLM Platform has to prioritize the integration of learning resources, course management, collaboration tools, and exam functionalities, as these are the most critical functional requirements identified by users.

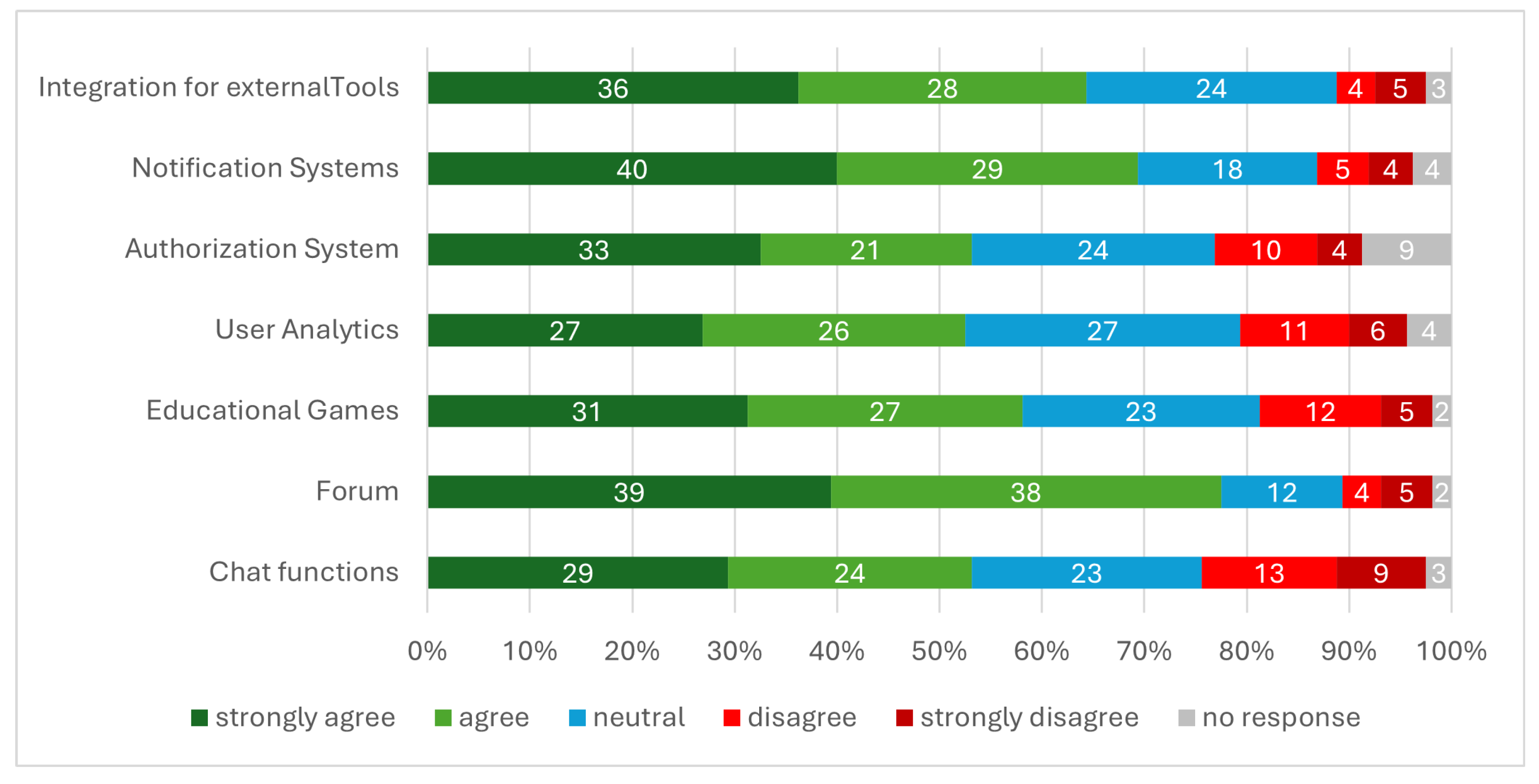

The next question examined the extensibility of the QBLM Platform. Participants were asked to evaluate various extension features, including the integration of external tools, notification systems, authorization systems, user analytics, educational games, forums, and chat functions.

Figure 5 illustrates the results.

The highest level of agreement was observed for forums (77% agree), followed by notification systems (69% agree) and the integration of external tools (64% agree), emphasizing the importance of communication and system integration features. Educational games (58% agree) and user analytics (53% agree) were also well received, and authorization systems reached 54% agreement. Chat functions showed the highest disagreement (22%) despite 53% agreement, reflecting divided opinions on their necessity. Consequently, the results indicate a strong demand for forums, notification systems, and external tool integration. Although educational games, user analytics, and chat functions exceed 50% agreement, they should be prioritized after these higher-demand features in the development process. Moreover, the results show that user demands are heterogeneous. Therefore, the QBLM Platform has to support modular extensibility to allow the selective use of features.
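The requirement that features be selectable can be illustrated with a plugin-style registry, a common way to realize modular extensibility. The Python sketch below is purely hypothetical: `Extension`, `ExtensionRegistry`, and the extension names are illustrative assumptions, not components of the existing QBLM Software. Every extension is registered once, but only those that an institution explicitly enables are activated.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set

@dataclass
class Extension:
    """A hypothetical optional platform feature (e.g., forum, notifications)."""
    name: str
    activate: Callable[[], str]

@dataclass
class ExtensionRegistry:
    """Hypothetical registry: extensions are registered once, enabled selectively."""
    available: Dict[str, Extension] = field(default_factory=dict)
    enabled: Set[str] = field(default_factory=set)

    def register(self, ext: Extension) -> None:
        self.available[ext.name] = ext

    def enable(self, name: str) -> None:
        if name not in self.available:
            raise KeyError(f"unknown extension: {name}")
        self.enabled.add(name)

    def start(self) -> List[str]:
        # Activate only the selected extensions, in a stable (sorted) order.
        return [self.available[n].activate() for n in sorted(self.enabled)]

registry = ExtensionRegistry()
for name in ["forums", "notifications", "external-tools", "educational-games", "chat"]:
    # Default argument binds the current name to each lambda.
    registry.register(Extension(name, lambda n=name: f"{n} active"))

# An institution that wants only the highest-demand features:
for name in ["forums", "notifications", "external-tools"]:
    registry.enable(name)

print(registry.start())
```

In this sketch, chat and educational games remain registered but inactive, so an institution with different priorities could enable them without changes to the platform core.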

As additional input, participants suggested focusing on core QBL topics while integrating established LMSs (e.g., Moodle). Platform independence, the use of modern programming languages, and community-driven development were also emphasized. Improving communication was a key theme, with calls for a unified platform channel, additional electronic communication methods, and supervised learning with qualified feedback and tailored tasks. Other proposals included a calendar with import/export functions, visible learning progress tracking, and documentation of learner activities.

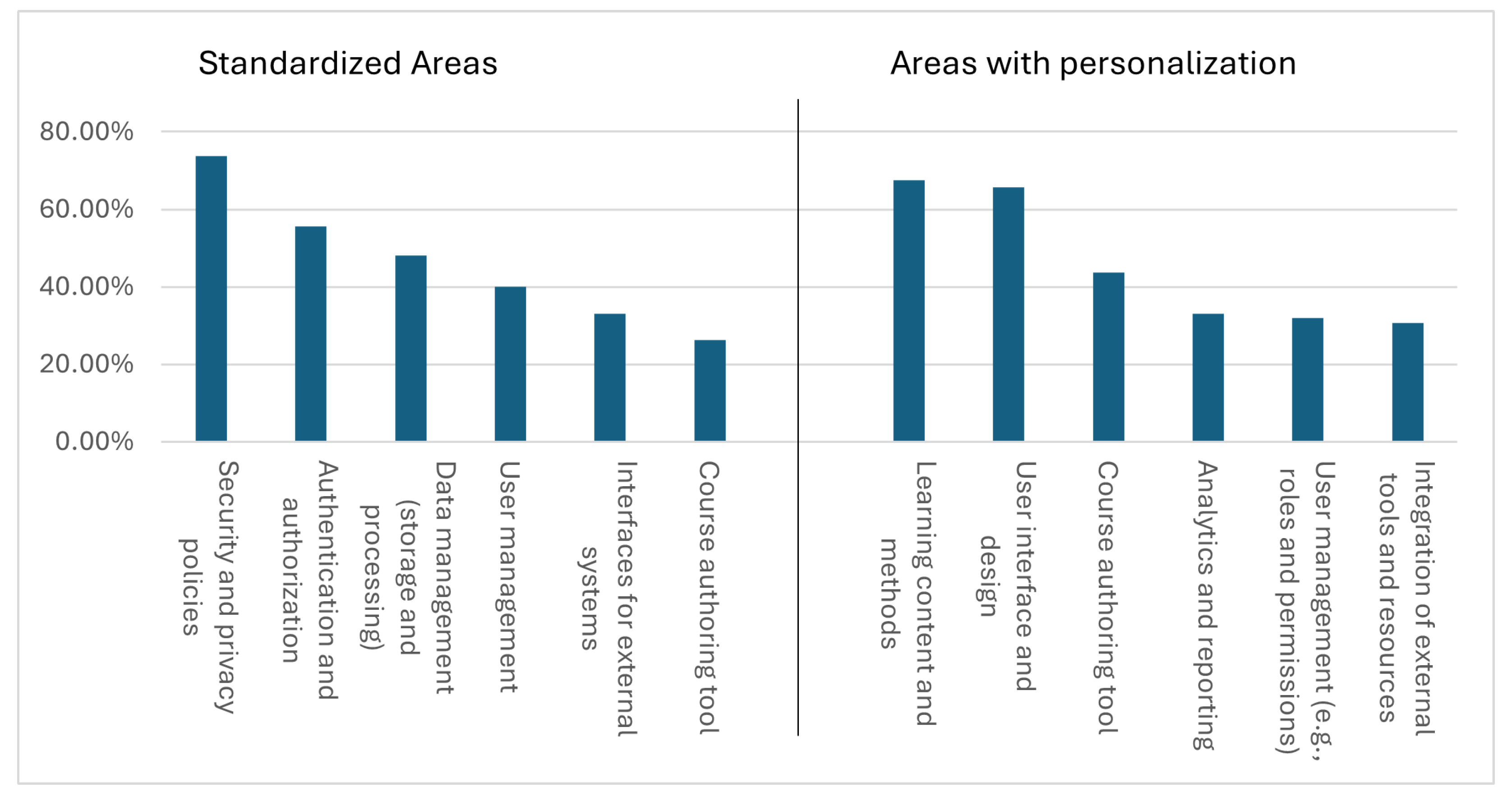

Next, participants were asked to identify which areas of the QBLM Platform should be standardized rather than individualized, and which should allow personalization. Participants could select up to three options.

Regarding standardization, the strongest consensus was observed for security and privacy policies (73.75%), followed by authentication and authorization (55.63%) and data management (storage and processing) (48.13%). User management (40.00%) and interfaces for external systems (33.13%) were also seen as areas requiring standardization. The course authoring tool (26.25%) had the lowest preference for standardization, suggesting that users see value in customizing it.

The highest agreement for personalization was observed in learning content and methods (67.50%) and user interface and design (65.63%). The course authoring tool (43.75%) also received notable support for customization. Other areas, such as analytics and reporting (33.13%), user management (e.g., roles and permissions) (31.88%), and the integration of external tools and resources (30.63%), were seen as moderately important for personalization.

These results suggest that security, authentication, and data management should remain fixed and standardized, while content, interface design, and course authoring should be customizable to meet user demands. Therefore, the QBLM Platform has to enforce standards in core architecture to ensure reliability and compliance while enabling flexible customization in pedagogical and user-facing components to support user preferences.
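One way to express this split between standardized core areas and personalizable user-facing areas is a settings layer that locks some keys and permits overrides for others. The following Python sketch is a hypothetical illustration: the setting names and values (for example `"sso"` or `"encrypted-at-rest"`) are assumptions chosen for demonstration, loosely mirroring the survey categories.

```python
from types import MappingProxyType

# Hypothetical standardized (locked) areas: identical for all users.
LOCKED = MappingProxyType({
    "security_policy": "platform-default",
    "authentication": "sso",
    "data_storage": "encrypted-at-rest",
})

# Hypothetical personalizable areas with their defaults.
PERSONALIZABLE_DEFAULTS = {
    "ui_theme": "light",
    "learning_path": "standard",
    "authoring_template": "basic",
}

def effective_settings(overrides: dict) -> dict:
    """Merge user overrides into defaults, refusing changes to locked areas."""
    forbidden = set(overrides) & set(LOCKED)
    if forbidden:
        raise PermissionError(f"standardized settings cannot be overridden: {sorted(forbidden)}")
    unknown = set(overrides) - set(PERSONALIZABLE_DEFAULTS)
    if unknown:
        raise KeyError(f"unknown settings: {sorted(unknown)}")
    return {**LOCKED, **PERSONALIZABLE_DEFAULTS, **overrides}

print(effective_settings({"ui_theme": "high-contrast"}))
```

Rejecting overrides of locked keys at a single point keeps security, authentication, and data management uniform, while each institution or learner can still adjust the interface, learning path, and authoring template.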

Figure 6 visualizes the results of the questions on standardization and personalization, showing for each area the percentage of participants in favor of standardizing or personalizing it.

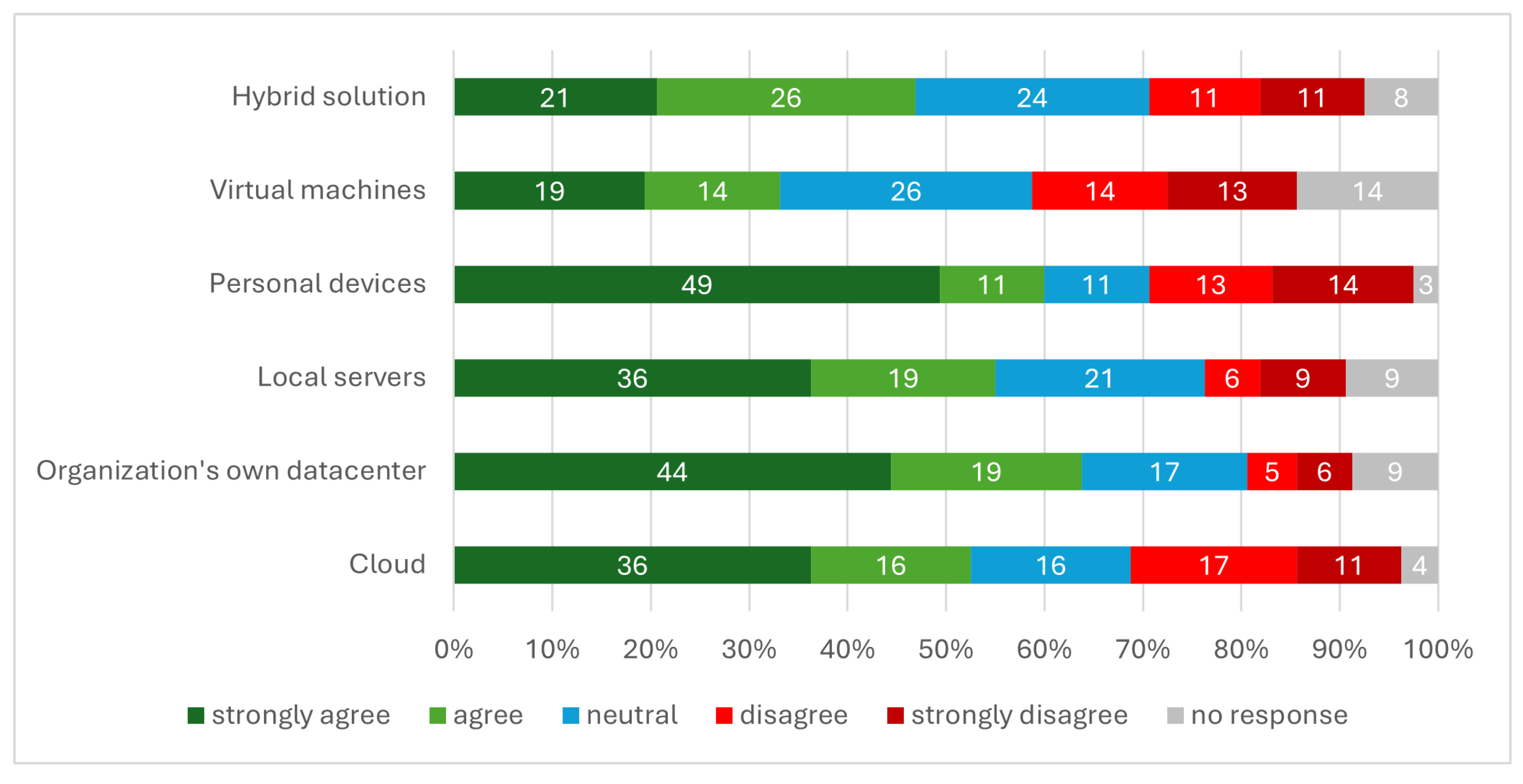

Next, participants were asked to evaluate different IT infrastructures for hosting the QBLM Platform.

Figure 7 illustrates the results. The highest level of agreement was observed for the organization’s own data center, with 44% strongly agreeing and 19% agreeing, indicating a strong preference for in-house infrastructure and data control. Local servers (on-premise) also received 36% strong agreement and 19% agreement, emphasizing the preference for self-managed hosting solutions. Personal devices were widely accepted, with 49% strongly agreeing and 11% agreeing, highlighting the demand for access across PCs, notebooks, and smartphones. Opinions on cloud deployment were more divided: while 36% strongly agreed and 16% agreed, disagreement was also high (17% disagree, 11% strongly disagree), indicating reservations about cloud hosting. Hybrid solutions received 21% strong agreement and 26% agreement, with a notable 24% neutral responses, suggesting uncertainty. Virtual machines (VMs) received 19% strong agreement and 14% agreement but also the highest neutral (26%) and disagreement (27%) responses, indicating mixed opinions on their relevance for the QBLM Platform. These results suggest a strong preference for on-premise and data center-based solutions, although cloud and hybrid approaches should still be considered.
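A common summary measure for Likert items is the top-two-box score, the sum of the “strongly agree” and “agree” shares. The Python sketch below applies it to the percentages reported above; it is an illustrative check, not part of the original analysis, and because the inputs are rounded percentages, the sums may deviate by about one percentage point.

```python
# Reported shares for each hosting option: (strongly agree %, agree %).
hosting = {
    "own data center": (44, 19),
    "local servers (on-premise)": (36, 19),
    "personal devices": (49, 11),
    "cloud": (36, 16),
    "hybrid": (21, 26),
    "virtual machines": (19, 14),
}

def top_two_box(strongly_agree: int, agree: int) -> int:
    """Combined agreement: 'strongly agree' plus 'agree' shares."""
    return strongly_agree + agree

ranking = sorted(hosting, key=lambda option: top_two_box(*hosting[option]), reverse=True)
for option in ranking:
    print(f"{option}: {top_two_box(*hosting[option])}%")
```

Under this measure, the organization’s own data center (63%) ranks first, followed by personal devices (60%) and on-premise servers (55%), while VMs (33%) receive the least combined agreement.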

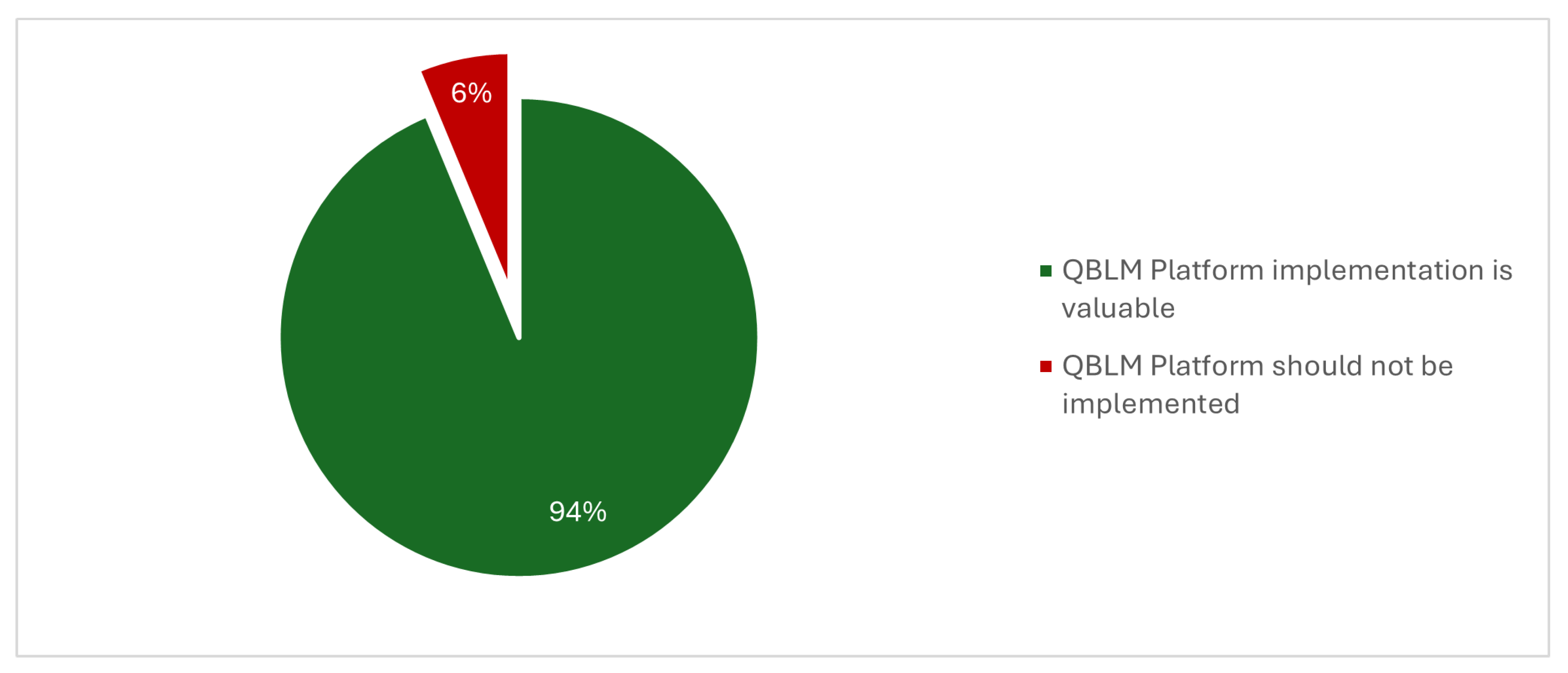

Afterwards, participants were asked whether they considered it valuable to develop a QBLM Platform to make qualification-based learning accessible to a broader audience. The results, shown in Figure 8, indicate support for the implementation of such a platform. However, several concerns were raised regarding its practicality and feasibility. Some participants doubted whether the platform would be realistic and could be implemented effectively, questioning its practical benefits. With regard to future relevance, some respondents questioned the necessity of a new platform, arguing that existing learning platforms already cover many needs. Additionally, some criticized that the platform might overemphasize technical and subject-specific knowledge while neglecting important soft skills. Finally, concerns were expressed regarding implementation and resource allocation: participants warned of high costs, long development times, and potential waste of resources, emphasizing the need for careful evaluation and large-scale testing before full implementation. While overall support for a QBLM Platform is strong (94%), these concerns highlight the need for evaluation, differentiation from existing platforms, and resource planning.

Finally, participants were asked to provide additional comments regarding the survey. They emphasized the need for realistic goals, prioritization, and leveraging existing LMS solutions (e.g., Moodle) for integration. A clear differentiation from other platforms and a unified, user-friendly system were highlighted as important for better navigation and accessibility. A well-defined didactic concept was requested, including personalized learning paths, non-digital learning resources (books, seminars), and real-time progress tracking without additional exams. Participants also suggested simplified access to digital libraries and intelligent writing assistance that detects common errors. Furthermore, expanding accessibility for older users, ensuring motivating and friendly communication, and actively involving users in development were key concerns. Lastly, participants requested clear explanations of the platform’s purpose and the specific problems it aims to solve. Some of these aspects had already been mentioned in other parts of the questionnaire; nevertheless, they are used here to enrich the research.

5.2. Evaluation of Qualitative Research

The expert interviews followed the same general structure as the quantitative research. To ensure readability and consistency, this evaluation follows a structure similar to that of the previous subsection. However, due to the extensive content of the interviews, a full transcription is not provided. Instead, this subsection presents a summary highlighting the key aspects and main insights derived from the discussions. The experts and their vitae are presented in Table 3. For better readability, expert identifiers are used instead of full names in the following text.

The interviews also covered the classification of user stereotypes, and the experts’ own classifications were included in the results. Based on this classification, experts from HEIs matched roles such as author, learner, and system administrator. In contrast, professionals from enterprises, who typically hold operational and strategic responsibilities, mostly identified with stereotypes such as operator/responsible/manager, editorial staff, or system administrator.

Regarding core functionalities, experts proposed various features aligned with their respective user stereotypes. E1 proposed competency profiles, learning paths, and analytics tools to enable data-driven tracking of learning progress. E2 emphasized advanced search functions, an intuitive UI, and resource management to enhance platform usability and efficiency. E3 recommended a modular architecture, event-driven processes, and microservices to enable scalability and adaptability. E4 highlighted automated reports, simple navigation, and structured dashboards to optimize managerial oversight. E5 suggested interactive dashboards and real-time progress visualization to reinforce user engagement. E6 advocated clearly structured competency profiles and self-directed learning tools to support learner autonomy. E7 stressed qualification tracking and fraud prevention mechanisms to ensure data integrity and certification security. Consequently, the QBLM Platform should integrate intelligent competency tracking, advanced search capabilities, a modular system design, and real-time analytics to ensure a seamless user experience across different learning environments.

Regarding use cases, the experts outlined practical applications of the QBLM Platform, aligning them with organizational and educational needs. E1 identified competency profile creation and analysis as key to structuring qualification pathways. E2 focused on employee training and resource planning to enhance workforce development strategies. E3 stressed the importance of integrating courses and exams within university systems. E4 pointed out qualification assignment and tracking to ensure compliance in professional settings. E5 highlighted learning path creation and certification management, which are particularly relevant in regulated industries. E6 advocated course standardization and comparability to improve learning consistency. E7 emphasized fraud detection and adaptive algorithms to ensure fairness and quality control. Therefore, the QBLM Platform should cater to both structured academic pathways and corporate training environments, with features supporting certification, regulatory compliance, and qualification assignment.

Next, expert opinions on technical modalities are presented. E1 recommended focusing on mobile devices (smartphones, tablets) to facilitate on-the-go learning. E2 preferred desktop environments with strict security and high processing capabilities. E3 emphasized LMS integration (e.g., Moodle) and containerized deployment for flexibility and scalability. E4 suggested web-based, cross-device compatibility to maximize accessibility. E5 recommended integration into existing IT infrastructures to reduce implementation costs. E6 stressed the need for platform independence, enabling flexible learning environments. E7 emphasized mobile and browser compatibility for ease of access. Overall, the experts favored a range of technical modalities, including mobile, desktop, and web-based access, to ensure flexibility.

Regarding non-functional requirements, experts highlighted data security, data compliance, system maintainability, and accessibility as essential. E1 stressed the importance of data compliance, accessibility, and data security, while E2 emphasized IT security, backups, and controlled access to maintain platform reliability. E3 focused on sustainable system reliability and the optimization of computational resources. E4 highlighted cost efficiency and minimal maintenance as critical for corporate environments. E5 pointed out the importance of a user-friendly interface and process stability. E6 called for a balance between system complexity and ease of use. Lastly, E7 emphasized the need for qualification accreditation and validation mechanisms to ensure alignment with educational and industry standards.

For functional requirements, experts outlined the features needed to support adaptive learning and structured assessments. E1 proposed automated analyses and testing tools to enhance personalized learning feedback, while E2 emphasized customizable competency profiles with automated progress evaluation. E3 recommended a modular system structure that supports varied learning formats and adaptive workflows. E4 and E5 both highlighted the need for progress tracking, certification automation, and dynamic learning dashboards to ensure a comprehensive learning experience. E6 focused on flexible course structures that accommodate different learning methodologies. E7 advocated question banks and automated reminders to reinforce continuous learning habits.

In terms of system components, experts proposed architectural elements to enhance platform scalability and usability. E1 and E2 recommended competency management modules with performance tracking, while E3 advocated a microservices-based modular architecture to ensure scalability and adaptability. E4 and E5 suggested intuitive administrative interfaces and adaptive learning features, maximizing accessibility for different user groups. E6 emphasized competency analysis and visualization tools, enabling a data-driven approach to tracking learning progress. E7 focused on structured content management to support regular updates and long-term sustainability.

Standardization was another aspect discussed with the experts. E1 stressed the need for standardized data formats and APIs, allowing seamless integration with external systems. E2 and E3 emphasized cross-institutional interoperability, ensuring that universities and companies can share common learning structures. E4 and E5 pointed out the necessity of standardized certification and reporting mechanisms to facilitate qualification recognition across industries. E6 and E7 supported harmonized training structures and competency frameworks.

On the other hand, experts highlighted the need for personalization and customization to match individual learning needs. E1 suggested individualized learning paths based on skill levels and user preferences, while E2 proposed filtering options for targeted content access. E3 and E4 emphasized flexible course configurations and adaptive system components, ensuring an individual learning experience. E5 and E6 stressed customizable dashboards and user progress tracking, making learning more engaging and structured. E7 recommended reminder functions to reinforce continuous learning habits and motivation.

Experts emphasized the need for a cloud infrastructure to ensure scalability and reliability. E1 and E2 recommended cloud solutions with scalability and adaptability to different organizational needs. E3 highlighted the importance of cross-organizational cloud systems. E4 suggested lightweight, scalable solutions with minimal maintenance requirements, reducing technical overhead for administrators. E5 focused on optimized processes for pharmaceutical training and documentation, ensuring compliance with industry regulations. E6 stressed the need for universal accessibility, allowing all users to engage with the platform, regardless of location or device. Lastly, E7 emphasized adaptations for medical education, ensuring that the platform meets specialized training requirements. These findings indicate that the QBLM Platform should adopt a cloud approach with modular adaptability, ensuring scalability, accessibility, and industry-specific flexibility across diverse educational settings.

Finally, the experts were asked whether implementing the QBLM Platform is necessary. All experts supported the idea, recognizing its potential for structured learning, competency tracking, and assessment automation. E1, E3, and E5 strongly advocated the platform’s importance in education and research, while E2 and E6 agreed with the implementation but stressed the need for strategic planning and system integration. E4 and E7 raised concerns about cost efficiency and differentiation from existing learning platforms.

5.3. Evaluation of Combined Results from Quantitative and Qualitative Research

This subsection combines and compares the results of the quantitative survey and the qualitative expert interviews, following the convergent mixed-methods design, to create a holistic perspective on the requirements for the QBLM Platform. While both research streams largely converge on the platform’s core requirements, certain discrepancies emerged. Again, the subsection follows the general structure of the research.

First, functionalities and use cases are considered. The survey results indicate support for functionalities such as online learning modules, progress tracking, and the integration of learning resources. Experts reinforced these findings, stressing the importance of competency profiles, modular course management, and dynamic dashboards that facilitate adaptive learning. This convergence underscores the necessity of a user-friendly interface with robust tracking and management features. Therefore, the QBLM Platform has to provide a consistent, intuitive design and easy navigation across diverse learning resources.

Regarding technical modalities and devices, survey respondents strongly preferred desktop/notebook devices and tablets while expressing some hesitation toward smartphones. In contrast, experts emphasized the necessity of mobile and web-based access, citing accessibility and the ability to learn anywhere. This divergence suggests that users currently prefer familiar modalities, but the QBLM Platform should incorporate a mobile-compatible interface and responsive design to remain future-proof. Consequently, the QBLM Platform must be flexible enough to deliver its functionality across various technical modalities (e.g., using web technologies) while also interoperating with existing systems such as LMSs or other learning platforms (e.g., LinkedIn Learning or Udemy).

Next, functional and non-functional requirements are compared. Survey participants rated usability, performance, and data security as the top non-functional requirements. Experts complemented these findings by emphasizing the need for standardized data formats, secure APIs, and cross-institutional interoperability. This alignment suggests that the QBLM Platform must prioritize an intuitive design and rigorous security protocols to ensure both ease of use and data integrity.

Regarding software components and extensions, both data sets point to a scalable and flexible system architecture. The survey results highlight a demand for integrating additional features on demand, and experts elaborated on this by specifying the need for a modular, microservices-based design. This convergence confirms that modularity is central to accommodating diverse user needs, so the QBLM Platform architecture has to treat it as a foundational design principle.

In the survey, users leaned toward standardizing core elements such as security, authentication, and data management, while preferring personalization of learning content and interface design. Experts likewise valued standardization for interoperability but emphasized individualized learning paths and flexible dashboards as critical for engagement. Together, these findings show the need for a balanced approach that ensures a consistent implementation while still enabling personalized user experiences. Therefore, the QBLM architecture must not only define how functionality is hosted but also establish clear rules and interfaces.

The hosting environment is the next topic. Survey respondents showed a marked preference for on-premise and data center-based solutions, which offer enhanced control and data privacy. Conversely, the experts leaned toward cloud solutions, citing scalability, maintenance efficiency, and the flexibility required to support a growing user base. This discrepancy implies that the final system design might benefit from a hybrid hosting strategy or should at least be compatible with both on-premise and cloud deployments. Consequently, the solution must introduce an abstraction layer between the QBLM Platform and the underlying infrastructure to enable deployment across various scenarios.
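Such an abstraction layer can be sketched as an interface that platform code depends on, with one implementation per hosting scenario. In the Python sketch below, `ObjectStore`, `LocalDiskStore`, `InMemoryCloudStub`, and `save_certificate` are hypothetical names; the cloud variant is an in-memory stand-in, since a real deployment would wrap a provider SDK, which is not shown here.

```python
import tempfile
from pathlib import Path
from typing import Dict, Protocol

class ObjectStore(Protocol):
    """Hypothetical infrastructure interface that platform code depends on."""
    def put(self, key: str, data: bytes) -> None: ...
    def get(self, key: str) -> bytes: ...

class LocalDiskStore:
    """On-premise variant: stores objects as files under a root directory."""
    def __init__(self, root: Path) -> None:
        self.root = root
        self.root.mkdir(parents=True, exist_ok=True)

    def put(self, key: str, data: bytes) -> None:
        (self.root / key).write_bytes(data)

    def get(self, key: str) -> bytes:
        return (self.root / key).read_bytes()

class InMemoryCloudStub:
    """Stand-in for a cloud object store (no real provider SDK is used)."""
    def __init__(self) -> None:
        self._objects: Dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._objects[key] = data

    def get(self, key: str) -> bytes:
        return self._objects[key]

def save_certificate(store: ObjectStore, learner_id: str, pdf: bytes) -> str:
    """Platform logic: unaware of whether it runs on-premise or in the cloud."""
    key = f"certificate-{learner_id}.pdf"
    store.put(key, pdf)
    return key

with tempfile.TemporaryDirectory() as tmp:
    for store in (LocalDiskStore(Path(tmp) / "qblm"), InMemoryCloudStub()):
        key = save_certificate(store, "42", b"%PDF-demo")
        assert store.get(key) == b"%PDF-demo"
        print(type(store).__name__, "ok")
```

Because `save_certificate` depends only on the `ObjectStore` protocol, switching between on-premise and cloud hosting becomes a deployment decision rather than a change to platform logic.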

Participants in both the quantitative and qualitative research largely agreed on the importance of developing a QBLM Platform. However, both experts and survey participants expressed concerns regarding its practical implementation. Some doubted whether the platform would be realistic and could be implemented effectively, while others emphasized the necessity of a clear differentiation from existing learning platforms. Concerns were also raised regarding high costs, long development times, and potential waste of resources, emphasizing the need for careful evaluation before full implementation. Therefore, a prototypical implementation of the QBLM Platform should be developed and evaluated through pilot projects in both higher education and enterprise environments.

Overall, the combination of quantitative and qualitative results reveals strong convergence on the importance of core functionalities, user-centric design, and a modular system architecture for the QBLM Platform. At the same time, discrepancies in technical modality preferences and hosting environments highlight the need for a flexible platform design. Unlike prior research, which focused on either technical modeling or prototypical implementations, this study examines stakeholder needs across diverse aspects. The combination of qualitative and quantitative approaches offers a basis for future platform development, fostering alignment between pedagogical goals and technical implementation.