Abstract

Galenic preparations are patient-centered medicines prepared by pharmacists or veterinarians, which allow personalizing dosages, overcoming allergy problems, reducing costs, and dealing with rare diseases. However, the current manual production process of galenic preparations poses several challenges to human workers. This paper proposes the use of collaborative robots to help pharmacists carry out the most tiresome, precise, error-prone, and time-consuming tasks. In particular, an end-user development (EUD) environment called PRAISE (pharmaceutical robotic and AI system for end users) has been designed to support pharmacists in programming the tasks to be performed by a collaborative robot. The EUD environment integrates artificial intelligence (AI) features based on large language models but ensures that end users always have complete control over the generated output, that is, a robot program. The paper focuses on the application of a human-centered methodology adopted to design PRAISE by involving representative end users (experts in the pharmaceutical sector) from system ideation to its evaluation. Design implications related to AI-enabled EUD for collaborative robots are the main findings of the paper.

1. Introduction

Galenic preparations, namely medicines that are prepared at the local level by a pharmacist or a veterinarian, offer an alternative to industrially produced drugs [1]. They are an example of patient-centered medicine, since they are usually prepared for patients with specific needs (in particular children, see, e.g., [2]) and to comply with specific medical prescriptions. In fact, while pharmaceutical industrialization allows large-scale, efficient medicine production, galenic preparations remain essential in modern therapeutics because industrial drugs do not take into account specific patient characteristics, such as gender, weight, age, allergies, and dietary requirements. In addition, some medicines are not produced in adequate quantities because they are not profitable enough for the industry [3]. Galenic preparations are produced in small batches by mixing and blending various ingredients to create drugs in different forms, such as capsules, tablets, and creams. In summary, the reasons to resort to galenic preparations include personalizing dosages (e.g., for small children), overcoming allergy problems by avoiding or replacing critical ingredients (e.g., excipients), coping with the scarcity of some ingredients, and producing medicines that the pharmaceutical industry does not produce or produces at higher costs (e.g., drugs for rare diseases).

The current process of galenic preparation is still manual and follows the good manufacturing practice (GMP) guidelines [4,5]. Each galenic preparation follows a specific formulation, which determines the steps of the production process. For instance, to prepare drugs in the form of capsules, the pharmacist weighs different ingredients, mixes them in a bowl, grinds and blends them, fills capsules with the obtained preparation, and finally transfers the capsules into containers, such as plastic or glass bottles. The pharmacist performs all these activities manually, except for the filling of capsules, which is usually accomplished with the help of a special machine called an operculator. As a result, these activities are repetitive, tiresome, time-consuming, and prone to errors. For instance, manually mixing preparation ingredients can lead to sub-optimal dissolution rates that affect drug potency, while picking each filled capsule from the operculator and putting it into the container can become a very long and tedious activity. Currently, no digital technological support is available for these tasks. This paper suggests that collaborative robots, which offer precision and efficiency, represent a way to address these problems while keeping professionals at the center of the preparation process. A collaborative robot could perform the most time- and precision-demanding tasks, while the pharmacist could continue to carry out formulation design, quality assessment, and decision-making activities.

This is in line with the growing interest in the use of collaborative robots to assist human operators in the bio-manufacturing and pharmaceutical sectors [6,7]. Collaborative robots are a new type of robot endowed with appropriate sensors that make them safe for human workers; thus, they can share an environment with humans and collaborate with them as co-workers [8]. Some collaborative robot models are also lightweight and can easily be moved to different places in a work environment to perform different tasks. These robots can be regarded as flexible automation technologies; that is, they can be quickly and easily re-tasked to change products and volumes, thus allowing small-batch production [9]. All these features lead us to regard collaborative robots as good candidates for the production of galenic medicines in collaboration with pharmacists. However, the main issue affecting the large-scale deployment of collaborative robots, especially for small-batch production, is the need to reprogram their tasks several times a week or even several times a day. Human workers should therefore be able to create new programs or reprogram robot tasks easily and rapidly, even when they are not experts in programming but are experts in their application domain (e.g., pharmacology in our case). This means that collaborative robots must provide easy-to-use interfaces and simple programming environments [9].

This paper presents the design of an interactive system based on artificial intelligence (AI) techniques that supports pharmacists in the programming of collaborative robots that may help them prepare galenic formulations. Such an interactive system, called PRAISE (pharmaceutical robotic and AI system for end users), is conceived as an end-user development (EUD) [10,11,12] environment, namely, a software environment tailored to domain experts that supports them in adapting, modifying, extending, or creating software artifacts [10]. PRAISE integrates traditional visual programming techniques proposed in the EUD field [12] with interfaces based on natural language processing (NLP) and large language models (LLMs) to empower end users (pharmacists) in programming robot tasks in the easiest and most natural way. Interaction design stems from in-depth user research carried out with the participation of domain experts and from the authors’ expertise in EUD and collaborative robots [13,14]. The application of EUD techniques with the support of AI tools to the case at hand fits the notion of human-centered artificial intelligence [15], as it aims to preserve a high level of human control while achieving at the same time a high level of automation, where possible and appropriate.

Overall, this paper provides contributions at both a methodological and an application-oriented level. From a methodological perspective, the contribution is two-fold: (i) exploring the use of collaborative robots in an application domain where most of the activities are still performed manually and (ii) proposing an approach to programming collaborative robots in this field, which integrates LLMs with domain-specific features for easier and more efficient robot program creation.

Turning to the specific application domain considered, the proposed approach has the potential to enhance the quality of galenic medicines by reducing the variability inherent to fully manual processing. At the same time, it ensures that a precious resource like professional pharmacists is employed only for higher-added-value activities.

The paper is organized as follows. Section 2 presents related works. Section 3 outlines the adopted design methodology. Section 4 describes how we studied the current practice and related issues with galenic solution preparation and identified a way to support this practice with the help of collaborative robots. Section 5 describes the interaction design activities carried out to tackle the problem of programming collaborative robots with an AI-based EUD environment. Section 6 presents the developed EUD environment and explains how it can generate a comprehensible program for end users and provide them with features for easy program correction to ensure reliability, safety, and trustworthiness [16]. Section 7 summarizes the results of an exploratory user study, highlights design implications, and reports the limitations of the work. Section 8 concludes the paper by outlining open issues and future work.

2. Related Works

2.1. Collaborative Robots in the Pharmaceutical Sector

An overview of the automation and robot technologies that can be applied in pharmaceutical laboratories and industry is presented in [7]. The authors highlight that implementing these solutions in this context is challenging due to the complexity of production and of existing regulations. Often, components need to be operated by both humans and robots in an interleaved way, since many tasks require a level of dexterity that robots do not yet possess: for example, measuring out a given amount of powder from a container is still a task that requires human abilities [7]. Human operators are also still needed to load samples into automatic systems or to transport them from one device to the next. In these scenarios, collaborative robots could be employed, thanks to safety characteristics that make them capable of working in a shared human–robot workspace. A few works addressed the sample manipulation problem in the laboratory through the use of a dual-arm collaborative robot [17,18], which is, however, more difficult to control and program. According to [19], robotics solutions in pharmaceutical laboratories can assume two different configurations: (i) static robots placed in fixed positions, surrounded by all the devices, tools, labware, and consumables necessary to perform their tasks; (ii) mobile manipulators, which can move around the laboratory to transport samples and can carry out sample manipulation at the different working stations they reach. Mobile manipulators consist of a mobile base and one or more arms, and their configuration is usually completed by sensors such as laser scanners and 3D cameras. Mathew et al. [6] investigate the potential of these collaborative mobile robots for automating the routine tasks carried out within the bio-manufacturing sector in general and in the field of personalized therapeutic drugs in particular. 
These tasks are related to the transportation and handling of materials and devices and environmental sampling and monitoring. According to the authors, robot adoption in these sectors can lead to lower contamination risks and manufacturing costs and allow microbiologists and operators to focus on more complex operations, such as process design and optimization tasks. This type of application requires more testing, and a series of challenges related to the programming, commissioning, and operation of robots must be overcome [6].

State-of-the-art solutions based on mobile manipulators for the pharmaceutical sector have several limitations, such as a lack of data interface standards, non-conformity with GMP guidelines, cybersecurity issues, high integration effort, and limited adaptability. Wolf and colleagues [7] propose a technology-agnostic framework to overcome these limitations and allow a mobile robot to be installed and to operate in a laboratory environment in a plug-and-play manner. However, the framework is defined at a high level of abstraction, without the details needed to adapt it to a broad range of applications. Furthermore, robot flexibility also requires suitable robot programming methods; this remains an important open issue in this field, which we intend to address in this paper.

2.2. End-User Robot Programming

Ajaykumar and colleagues [20] recently presented a survey on end-user robot programming; they highlight how it introduces additional challenges with respect to traditional end-user programming and end-user development [10,11,12], such as the need for programs to refer to physical objects and locations and to interact with the surrounding environment by moving and performing actions. Furthermore, end users may vary in their backgrounds, technology literacy, and computational thinking skills [21] and may not have the time, interest, or capacity to learn robot programming. Thus, the primary goal of end-user robot programming is to define methods that allow users without expertise in robotics and programming to deal with the complexity of robot programming. The survey [20] focuses on methods that enable users to specify robot behavior in terms of structure, logic, and characteristics, whereas methods based on programming by demonstration, in which users program robots by showing them how to perform the desired tasks, are extensively discussed in other surveys (e.g., [22,23,24]). Our approach falls into the former category, since the user is asked to describe textually and/or visually the task to be performed by the robot.

Visual programming languages used in the robotics field often exploit computer-oriented notations, such as flowcharts in RoboFlow [25] or hierarchical trees in CoSTAR [26], which appear more suitable for people knowledgeable in computer programming than for experts in other application domains. Another family of visual programming languages is inspired by Scratch [27] and Blockly [28], which are usually adopted to teach programming to children. For instance, Code3 [29], CoBlox [30], and the graphic interface in CAPIRCI [13,14] all exploit the puzzle metaphor to allow the user to compose programs by dragging and dropping blocks on a canvas. Block types may refer to typical constructs of programming languages, such as variables, loops, conditionals, and functions, as in [29,30], or be more tailored to the domain concepts [13]. The skill-based approach presented in [31] is a further visual programming language aimed at providing higher-level concepts for robot programming: skills, namely task-related actions of the robot, such as “pick object” or “navigate to location”, can be parameterized and combined in a linear sequence to define the desired robot behavior.
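As a rough illustration of the skill-based idea, a robot task can be represented as a linear sequence of parameterized skills that are executed strictly in order. The `Skill` class, the skill names, and their parameters below are hypothetical and are not taken from [31]; "execution" is reduced to logging so that the sketch runs without a robot.

```python
from dataclasses import dataclass, field


@dataclass
class Skill:
    """A task-related robot action with named parameters (hypothetical)."""
    name: str
    params: dict = field(default_factory=dict)

    def describe(self) -> str:
        args = ", ".join(f"{k}={v}" for k, v in self.params.items())
        return f"{self.name}({args})"


def run_sequence(skills: list[Skill]) -> list[str]:
    """Execute skills in a fixed linear order; here each step is only logged."""
    log = []
    for step, skill in enumerate(skills, start=1):
        log.append(f"{step}. {skill.describe()}")
    return log


# A linear task built from two parameterized skill types.
task = [
    Skill("navigate_to", {"location": "bench"}),
    Skill("pick", {"object": "bottle"}),
    Skill("navigate_to", {"location": "shelf"}),
    Skill("place", {"object": "bottle", "target": "shelf"}),
]

for line in run_sequence(task):
    print(line)
```

Reusing the same `navigate_to` skill with different parameters is what lets such approaches stay close to domain concepts rather than to programming constructs.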

Natural language programming has been proposed as an alternative approach to robot programming. Buchina et al. [32] present a web programming interface that supports occupational and rehabilitation therapists in defining social interaction tasks for a NAO humanoid robot. However, during the experimentation, participants were observed to have difficulty creating and employing abstractions, which limited their descriptive capabilities and led them to produce tasks of low complexity [33]. In manufacturing and industrial contexts, [34] presents an approach to translating free natural language sentences into robot instructions that takes into account the variations and ambiguities of natural language as well as context and task constraints. Even though the validation results are promising, they were obtained on available datasets rather than through user studies.

End-user development is a broader term than end-user programming, since it spans the entire software development life cycle. The concept has significantly evolved since the formation of the European Network of Excellence on End-User Development (EUD-Net) in 2003 (https://hiis.isti.cnr.it/projects/eud-net.htm, accessed on 10 March 2025). Initially defined in 2006 as a set of methods and tools enabling non-professional developers to create or modify software artifacts [10], it has since expanded to include a broader range of digital artifact creation, especially with the rise of the Internet of Things [11]. The updated definition emphasizes empowering users to act professionally in ICT-related tasks without formal software engineering knowledge [12]. EUD aims to turn users from passive consumers into active creators, and it is applicable across domains such as healthcare, business, education, smart environments, entertainment, and robotics. In organizational contexts, end users often possess deep domain knowledge but limited programming expertise. Thus, effective EUD tools must align with users’ reasoning styles rather than with traditional programming paradigms [21], encompassing domain-specific environments for digital artifact creation and user-oriented debugging mechanisms [35]. Literature reviews highlight key challenges and directions in EUD research. In [36], Paternò underlines the importance of balancing tool complexity with usability, favoring user-friendly interaction over scripting, and addressing both the interactive and functional aspects of applications. The survey by Maceli [37] shows that tools such as spreadsheets and web authoring platforms dominate, with limited support for newer interfaces such as voice or tangible interaction. According to the systematic mapping study carried out by Barricelli et al. [12], the most adopted interaction techniques for EUD are component-based, rule-based, and programming-by-demonstration techniques. 
In [38], rule-based programming (also known as trigger-action programming) emerges as a common method in context-aware applications. Similarly, [35] emphasizes the important role of trigger-action programming in personalizing the behavior of social robots; however, in this case, robot tasks must be defined as sequences of actions triggered by an event, requiring users to adopt a mental model that may not reflect their usual work practice [39]. Another problem with trigger-action programming is that the trigger event must often be defined as a Boolean expression, which users find difficult to specify, control, and maintain over time [35].
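To make the trigger-action model concrete, the following minimal sketch shows a rule whose trigger is a Boolean expression over sensed state and whose body is a sequence of actions. The state keys, the rule, and the action names are hypothetical. Even this small trigger combines three terms, one of them negated, which hints at why such expressions become hard to specify and maintain as rules accumulate.

```python
from dataclasses import dataclass
from typing import Callable

State = dict[str, bool]


@dataclass
class Rule:
    """IF <trigger> THEN <actions>: the actions run only when the trigger is true."""
    trigger: Callable[[State], bool]
    actions: list[str]


def fire(rules: list[Rule], state: State) -> list[str]:
    """Collect the actions of every rule whose Boolean trigger holds in `state`."""
    fired = []
    for rule in rules:
        if rule.trigger(state):
            fired.extend(rule.actions)
    return fired


# Hypothetical social-robot rule: greet only if a person is detected,
# the robot is idle, and it is NOT charging.
greet = Rule(
    trigger=lambda s: s["person_detected"] and s["robot_idle"] and not s["charging"],
    actions=["turn_head", "say_hello"],
)

print(fire([greet], {"person_detected": True, "robot_idle": True, "charging": False}))
print(fire([greet], {"person_detected": True, "robot_idle": True, "charging": True}))
```

The first call returns both actions; the second returns an empty list because the negated `charging` term makes the whole trigger false.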

Our approach features multi-modal interaction, since it includes natural language processing as one of the possibilities for robot task definition, ensuring intuitive interaction, in addition to visual block-based interaction, which fosters direct manipulation and programming flexibility. With respect to previous works in the literature, the approach exploits novel generative AI technologies, specifically LLMs. Furthermore, the system can be regarded as an end-user development environment, since the user is asked to describe the domain concepts and then use them to define the robot tasks, thus carrying out activities that generate robot programs in an unwitting manner [40]. The integration of LLMs in EUD is still a research challenge, especially in the robotics field, where safety and security issues require end users to be able to verify the correctness of the generated code [41]. The proposed multi-modal approach aims to overcome this problem by providing users with an effective means to understand and validate the correctness of the specified robot task, as advocated in [35].
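One general way to keep end users in control of LLM output, sketched below under our own assumptions, is never to execute generated text directly: the LLM is asked to return the program as JSON, which is parsed, checked against the vocabulary of blocks the environment knows, and rendered back as numbered sentences for the user to confirm or correct. The block names, their required parameters, and the JSON shape are hypothetical; this is an illustration of the verification idea, not the PRAISE implementation.

```python
import json

# Hypothetical block vocabulary: block name -> required parameter names.
KNOWN_BLOCKS = {
    "pick": {"object"},
    "place": {"object", "target"},
    "mix": {"container", "seconds"},
}


def validate(llm_output: str) -> tuple[list, list[str]]:
    """Parse an LLM reply and list every problem instead of trusting it."""
    try:
        program = json.loads(llm_output)
    except json.JSONDecodeError as exc:
        return [], [f"not valid JSON: {exc.msg}"]
    if not isinstance(program, list):
        return [], ["program must be a list of blocks"]
    errors = []
    for i, block in enumerate(program, start=1):
        if not isinstance(block, dict) or block.get("block") not in KNOWN_BLOCKS:
            name = block.get("block") if isinstance(block, dict) else block
            errors.append(f"step {i}: unknown block {name!r}")
            continue
        params = block.get("params") or {}
        missing = KNOWN_BLOCKS[block["block"]] - set(params)
        if missing:
            errors.append(f"step {i}: missing {sorted(missing)}")
    return program, errors


def explain(program: list[dict]) -> list[str]:
    """Render a validated program as sentences the user can read and correct."""
    return [
        f"Step {i}: {b['block']} with "
        + ", ".join(f"{k} = {v}" for k, v in b.get("params", {}).items())
        for i, b in enumerate(program, start=1)
    ]


reply = '[{"block": "pick", "params": {"object": "capsule"}}, {"block": "fly"}]'
program, errors = validate(reply)
print(errors)  # ["step 2: unknown block 'fly'"]
```

Only a program with an empty error list would be passed to `explain` and shown to the user for confirmation; anything else goes back to the user (or the LLM) for correction.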

3. Methodology

A human-centered design (HCD) methodology has been adopted to analyze in detail the pharmacists’ work practice and identify opportunities for automated support in this context. The methodology is articulated along the classic HCD iterative stages [42]:

- User research: in this stage, various methods, such as interviews, document analysis, personas, and scenarios, were adopted for knowledge elicitation and domain comprehension and to delineate users’ profiles and possible interaction flows with the system to be designed. Three domain experts were involved in the user research stage: a pharmacist, a nurse working in a pharmacy, and a PhD student in pharmacology. The first two routinely prepare galenic medicines and know the work practice and its limitations very well; the third expert helped us study the domain by providing articles, books, videos, and comprehensible explanations. This stage was fundamental to understanding where and how automation could be introduced into pharmacists’ workflow by means of a collaborative robot and to identifying the EUD techniques to be included in the environment for collaborative robot programming. Details about the activities performed and the results obtained are provided in Section 4.

- Design and development: in this stage, a software system targeted at pharmacists, allowing them to define the tasks of a collaborative robot, was iteratively designed and developed. This activity started with the creation of low-fidelity mock-ups using Balsamiq (see [43] for more details on the results of this step); then, a web-based application was iteratively developed using JavaScript/TypeScript and React for the front-end and Python Django for the back-end. The web application also integrates OpenAI ChatGPT to make pharmacists’ EUD activities easy and engaging. One of the three experts involved in the user research participated throughout the prototyping activity, providing feedback and suggestions for improvement. This was fundamental to progressively refining the system and satisfying users’ expectations in terms of usability and user experience.

- Evaluation: an exploratory user study with nine real users was performed on the first version of the web application using direct observation during task execution and semi-structured interviews to collect qualitative data.

The rest of the paper focuses on the first two stages, describing in detail the activities performed in the user research (Section 4) and the results of the design and development stage (Section 5 and Section 6). The evaluation is illustrated in detail in a complementary work [44] and is briefly summarized as part of the discussion in Section 7.1.

4. User Research

Three domain experts were interviewed in the user research stage to gather information about the work practice, the terminology used, the environment setting, and possible issues affecting the galenic preparation process. The profiles of the three domain experts are reported in Table 1 (technical proficiency is a self-assessment on a scale of 1–5 of proficiency in computer use).

Table 1.

Domain experts’ profiles.

Individual semi-structured interviews were carried out by one researcher, who took notes of the answers and subsequently organized them into a single, well-structured document. Table 2 reports the open-ended questions used during the interviews.

Table 2.

Structure of the semi-structured interviews.

A thematic analysis [45] was then performed by two researchers on the resulting document, following a deductive and semantic approach. Codes were extracted by each researcher and then reconciled in a meeting session, which also served to identify the main themes.

The themes that emerged from the interviews, which will be described in Section 4.1, are the following:

- Current procedures for the preparation of galenic formulations;

- Problems and challenges affecting current work practices;

- Opportunities and threats related to the introduction of collaborative robots.

The knowledge acquired through the interviews, along with additional analysis of documents describing the galenic preparation process and research on users’ profiles, allowed (i) identifying where, in the work process, collaborative robots could be of help (see Section 4.2), and (ii) outlining the user requirements for the EUD environment (see Section 4.3).

4.1. Themes That Emerged from the Interviews

Table 3 summarizes the themes that emerged from the thematic analysis with their corresponding codes. They will be illustrated extensively in the following sub-sections.

Table 3.

Themes and codes that emerged from the thematic analysis.

4.1.1. Current Procedure for the Preparation of Galenic Formulations

Preparing a galenic formulation requires accuracy and efficiency [46] and should be conducted following GMP [47], in compliance with regulatory guidelines, to ensure the safety and quality of the final product. For this purpose, pharmacists must carry out a set of activities in sequence. The interviews with the experts allowed us to acquire knowledge about the sequence of steps that compose the galenic preparation process and the tools used in some of these steps. To frame the scope of our research, we focused on the preparation of galenic formulations in granular form, which results in capsules containing the drug. In this case, the sequence of steps is the following:

- Formulation Design: The first step is to determine the composition of the galenic solution, including the active pharmaceutical ingredients (hereafter, ingredients) and excipients.

- Solubility Assessment: This step helps in selecting appropriate solvents and co-solvents to achieve the desired solubility and stability of the solution.

- Ingredient Weighing: Accurate weighing of ingredients and excipients is crucial to ensure that the formulation meets the desired specifications.

- Mixing and Dissolution: The weighed ingredients are mixed and dissolved in the chosen solvent(s). Different mixing techniques, such as stirring, shaking, or vortexing, are employed to ensure uniform distribution and dissolution of the ingredients. In technologically advanced pharmacies, an automatic mixer is used in this step, but most pharmacists still adopt a manual process.

- pH Adjustment: Depending on the formulation requirements, the pH of the solution may need to be adjusted.

- Filtration and Clarification: To remove any particulate matter or undissolved solids, the solution may undergo filtration using filters of appropriate pore sizes.

- Quality Control and Analysis: The prepared galenic solution is subjected to rigorous quality control measures to ensure its safety, efficacy, and compliance with regulatory standards.

- Packaging: Packaging consists of transferring the solution into the capsules, ensuring uniformity of dosage and avoiding cross-contamination. In this phase, a specialized piece of equipment, called an operculator or operculating machine, is used to separate capsules into two halves, fill them with the galenic preparation, and then seal them. Specifically, the human operator places empty capsules in the cavities of a grid, and the grid is then inserted into the operculator, which separates the capsules into two halves. Different grids can be used, whose cavities correspond to the shape and size of the capsules to be produced. Then, the human operator fills the bottom halves of the capsules with the galenic preparation; once this activity is completed, the operculator is used again to align the top halves with the bottom ones and to seal the capsules by applying a controlled compression force.

- Storage: The storage step consists of transferring the capsules from the operculator into suitable containers, such as glass or plastic bottles.
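The operculator cycle described in the Packaging step follows a strict order: load empty capsules into the grid, separate the halves, fill the bottom halves, then align, press, and seal. A small state machine makes that ordering explicit and rejects out-of-order actions. This is our own illustrative model; the state and action names are hypothetical and do not describe the controls of any specific operculator.

```python
from enum import Enum, auto


class GridState(Enum):
    EMPTY = auto()    # grid has no capsules
    LOADED = auto()   # empty capsules placed in the cavities
    OPENED = auto()   # operculator has separated the two halves
    FILLED = auto()   # bottom halves filled with the preparation
    SEALED = auto()   # top halves aligned, pressed, and sealed


# Allowed transitions: action name -> (required state, resulting state).
TRANSITIONS = {
    "load_capsules": (GridState.EMPTY, GridState.LOADED),
    "open": (GridState.LOADED, GridState.OPENED),
    "fill_bottoms": (GridState.OPENED, GridState.FILLED),
    "close_and_seal": (GridState.FILLED, GridState.SEALED),
}


def apply(state: GridState, action: str) -> GridState:
    """Return the next state, refusing any action taken out of order."""
    required, result = TRANSITIONS[action]
    if state is not required:
        raise ValueError(f"cannot {action} when grid is {state.name}")
    return result


state = GridState.EMPTY
for action in ["load_capsules", "open", "fill_bottoms", "close_and_seal"]:
    state = apply(state, action)
print(state.name)  # SEALED
```

Trying, for example, `apply(GridState.EMPTY, "fill_bottoms")` raises a `ValueError`, which is the kind of guard a robot program for Step 8 would need before dispensing.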

4.1.2. Problems and Challenges Affecting the Current Work Practice

From the interviews, it emerged that the current preparation of galenic formulations is mainly manual, thus yielding problems and challenges that human workers must address.

Time-consuming activities must be performed, especially when a large number of capsules is produced. This problem was underlined for Step 4, whose goal is to obtain uniform mixing and efficient dissolution of ingredients to ensure consistent drug potency and therapeutic efficacy, and for Step 9, where transferring capsules one at a time from an operculator grid into a container is considered a low-value, repetitive task for the pharmacist.

In addition, several activities require high precision. For instance, in Step 4, the mixing activity must result in a homogeneous compound. This requires precise mixing ratios, consistent particle size reduction, and optimal dissolution rates; any error may lead to batch-to-batch variability and quality issues. Another issue related to the Mixing and Dissolution step is that ingredients and excipients may be toxic or hazardous substances; thus, their manipulation poses challenges to human workers. Precision is also fundamental in Step 8, which consists of transferring the galenic preparation compound into the capsules held in an operculator grid. In this step, consistent quantities of preparation must be transferred into the capsules to guarantee uniformity and reliability, and achieving this consistency manually usually leads to compound waste. Furthermore, during this operation, it is essential to prevent contamination, degradation, or other undesirable changes, so that the medicines remain effective and safe throughout their shelf life.

4.1.3. Opportunities and Threats Related to the Introduction of Collaborative Robots

A third theme emerging from the interviews concerned the potential for automation and the role a collaborative robot could play in the galenic preparation process, as well as related threats envisaged by domain experts.

As described in Table 2, the questions eliciting this theme were posed after a brief description of the characteristics of collaborative robots. The interviewees were not aware of the existence and potential of this kind of robot. Based on the description of their work practice and our knowledge of the literature about collaborative robots in the pharmaceutical sector, we identified the adoption of these robots as an innovation opportunity to be discussed with the experts. This opportunity could not have emerged without the knowledge sharing between domain experts and computer scientists enabled by the human-centered methodology adopted. The domain experts observed that some steps of the galenic preparation process cannot yet be delegated to a machine, since they require decision-making and human control; they considered labor-intensive, low-added-value, and repetitive tasks to be the most suitable for automation.

Domain experts also highlighted that robots must collaborate with pharmacists rather than replace them, thus leaving decision-making in the hands of healthcare professionals. They also underlined that pharmacists have different levels of technological expertise, and thus some users, especially older ones, might encounter difficulties in dealing with robots. Considering that pharmacists have no computer programming experience, a main challenge that emerged was instructing the robot to perform the desired activities.

Finally, it emerged that regulations could be easily satisfied thanks to sanitized robotic assistants, ensuring the utmost cleanliness.

4.2. Human–Robot Collaboration in Galenic Preparation

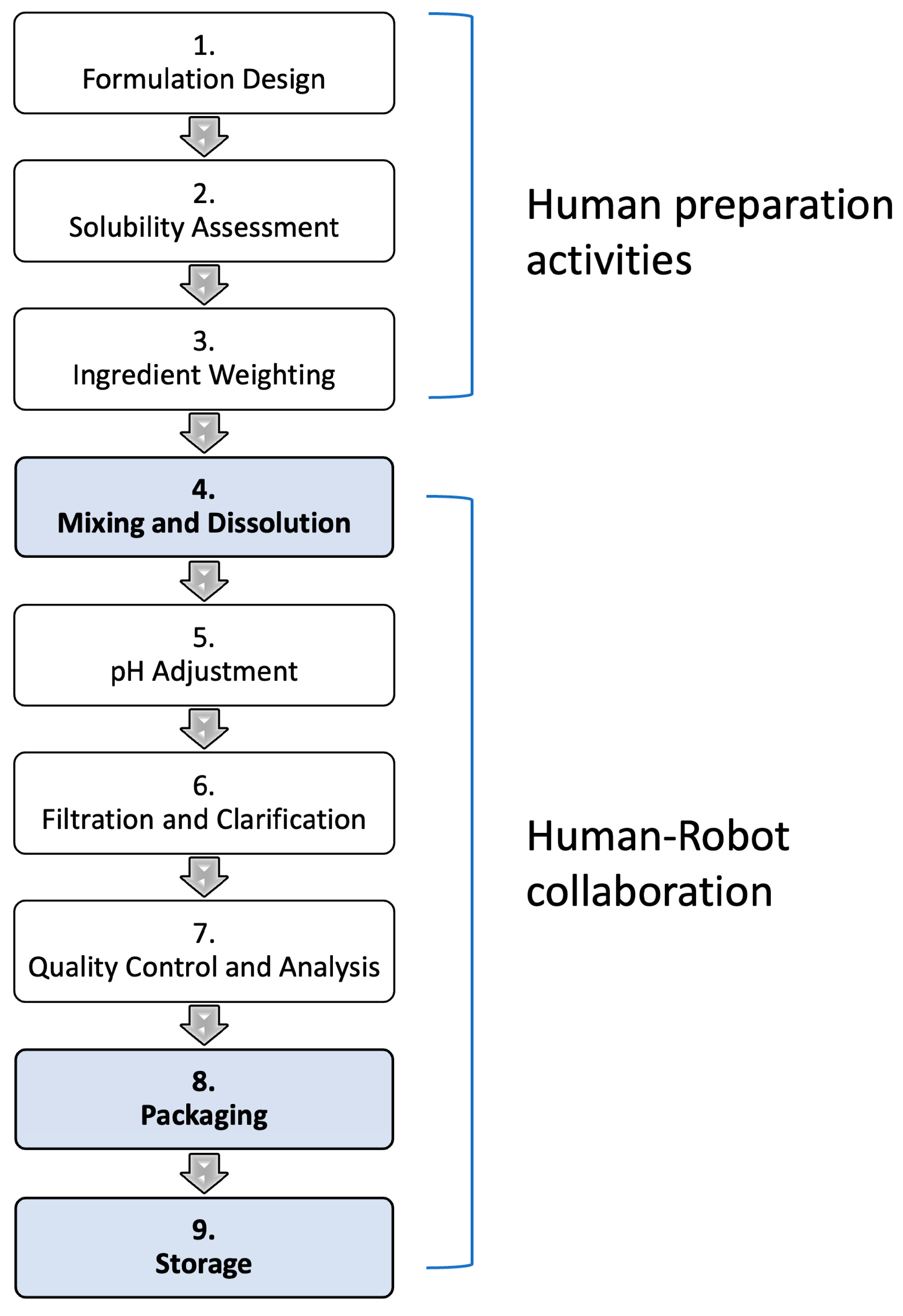

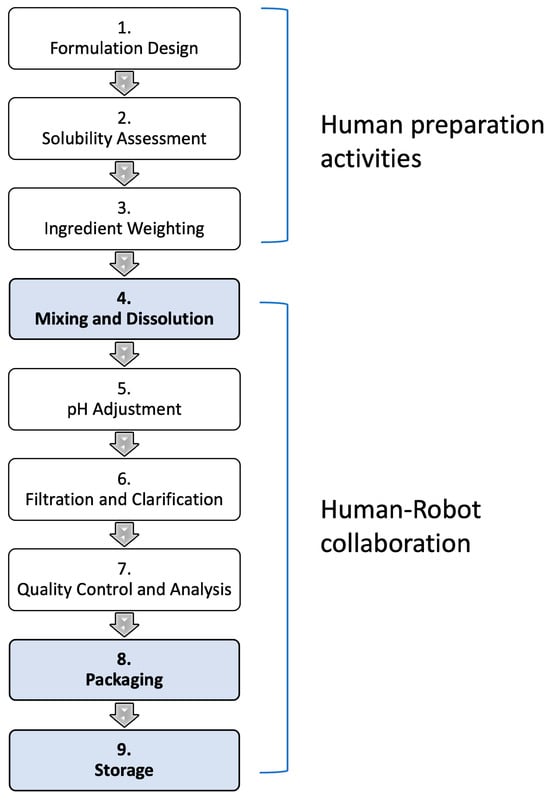

The themes that emerged from the interviews, combined with our competencies in collaborative robots, allowed us to identify which steps could be delegated to a robot and which ones can currently be performed only by a human. Figure 1 illustrates the sequence of steps and highlights which ones must be carried out by human workers before the use of a collaborative robot (Steps 1, 2, and 3) and which ones can become the core activities of the human–robot collaboration, distinguishing the activities delegated to the robot (Steps 4, 8, and 9) from those that should remain in the hands of humans, possibly with the help of further machines (Steps 5, 6, and 7).

Figure 1.

The steps of galenic solution preparation: From Step 4 to Step 9, human–robot collaboration can be put in place.

This hypothesis was discussed with one of the three domain experts, who considered it a valid solution.
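The allocation shown in Figure 1 can be written down as a simple lookup, which an EUD environment could use, for instance, to decide which steps to offer for robot programming. The step names follow Section 4.1.1; the `Actor` labels and the `steps_for` helper are our own illustrative choices.

```python
from enum import Enum


class Actor(Enum):
    HUMAN_BEFORE = "human, before collaboration"
    ROBOT = "robot"
    HUMAN_IN_COLLAB = "human, during collaboration"


# Step number -> (step name, who performs it), following Figure 1.
STEPS = {
    1: ("Formulation Design", Actor.HUMAN_BEFORE),
    2: ("Solubility Assessment", Actor.HUMAN_BEFORE),
    3: ("Ingredient Weighing", Actor.HUMAN_BEFORE),
    4: ("Mixing and Dissolution", Actor.ROBOT),
    5: ("pH Adjustment", Actor.HUMAN_IN_COLLAB),
    6: ("Filtration and Clarification", Actor.HUMAN_IN_COLLAB),
    7: ("Quality Control and Analysis", Actor.HUMAN_IN_COLLAB),
    8: ("Packaging", Actor.ROBOT),
    9: ("Storage", Actor.ROBOT),
}


def steps_for(actor: Actor) -> list[int]:
    """Step numbers assigned to the given actor, in process order."""
    return [n for n, (_, who) in sorted(STEPS.items()) if who is actor]


print(steps_for(Actor.ROBOT))  # [4, 8, 9]
```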

In Step 4, robots can work continuously without fatigue, performing mixing and dissolution processes on a large scale, thus reducing processing time and increasing productivity. Furthermore, robots can perform tasks with high precision and accuracy since they can precisely control the speed, duration, and force applied during the mixing activity. This yields more reliable and reproducible results, ensuring batch-to-batch consistency and reducing variability in the resulting product. Finally, when dealing with dangerous substances, robots can minimize health and safety issues, protecting workers from potential harm.

As for Step 8, a robot can accurately measure and dispense the appropriate quantities of the galenic preparation into capsules through precise and consistent movements, minimizing the risk of spillage, maintaining proper hygiene and sterility, and avoiding human errors. In addition, a robot can be programmed to comply with GMP, safety protocols, and regulatory requirements.

A robot can easily and efficiently perform the task foreseen in Step 9 through simple pick-and-place, leaving space for humans to carry out activities with higher added value.

4.3. User Requirements for the EUD Environment

After identifying the steps that a pharmacist and a robot can carry out collaboratively, we created two user personas to empathize with the target users of the EUD environment we intended to develop to support pharmacists in programming robot tasks. The personas also allowed us to delineate the main characteristics of the users: they are experts in the pharmaceutical domain who hold at least a bachelor’s degree and use a specific domain language; they are careful and precise workers, eager to help their patients, but often overwhelmed by the variety of tasks to be performed in a pharmacy; they may have short-term or long-term experience in preparing galenic formulations and, depending on their age, may be more or less willing to accept automation in a complex activity that has been carried out manually for centuries; some may find instructing and collaborating with a robot exciting, whereas others may be afraid of making mistakes and creating dangerous situations.

On the basis of these considerations, we decided to design an EUD environment that guides users step-by-step in defining robot tasks and always provides a suitable and comprehensible explanation of the activities carried out with the system.

5. Interaction Design

In previous work, we obtained very positive results by proposing a multi-modal approach to robot programming [14], which combines EUD techniques to accommodate users with different backgrounds and programming skills. In that case, end users could define only pick-and-place tasks, using two integrated features: (1) a chat-based interface, where a simple guided natural language dialogue led to the definition of robot tasks, and (2) a graphic interface, where robot tasks could be expressed by composing specific types of blocks. A comparative experiment showed that users preferred multi-modal interaction to graphic interaction alone [14].

In the case at hand, the robot program to be generated must be structured along a sequence of steps that encompass different types of robot actions (e.g., mixing ingredients, filling capsules with the galenic preparation, picking capsules, and placing them into containers), interleaved with human actions. Thus, robot programming must be more flexible than in our previous EUD environment, and, at the same time, it must be suitable for a well-identified user profile, i.e., a pharmacist.

We decided to maintain the multi-modal approach based on a chat interface and a graphic interface, but we completely redesigned both to cope with the problem at hand, aiming to enhance the naturalness, flexibility, and verifiability of the programming activity. To this end, we integrated a generative AI approach into the chat-based interface, exploiting large language models (LLMs). However, we had to make this integration as deterministic as possible, given that LLMs are based on probabilistic algorithms that often provide different answers to the same question and, above all, may generate imprecise or wrong answers. Programming a collaborative robot must ensure safe program execution in the workplace, and the galenic medicines resulting from robot program execution must comply with the specified prescriptions and quality standards. Following a human-centered AI methodology [15], we considered all these issues during the design and development of PRAISE in order to give the user complete control over the generated program and prevent incorrect task execution. This led to the design and development of EUD features, progressing from digital low-fidelity mock-ups, discussed with one of our domain experts, to a high-fidelity interactive prototype.

The interaction between pharmacists and the proposed system may occur at different times and with different requirements:

- Design time: here, PRAISE supports end users in defining domain items, namely, the objects (e.g., operculator grids and containers) that the robot must recognize and manipulate and the mixing actions that the robot must be able to carry out during the mixing and dissolution phase. In this stage, the EUD environment exploits image recognition algorithms and graphic interfaces to allow pharmacists to “teach” the robot domain concepts. Software developers can prepare a basic configuration of the system, but pharmacists must be able to extend the domain when needed, e.g., when new grid types must be recognized or new mixing actions must be performed by the robot.

- Programming time: the EUD environment supports the definition of tasks for the preparation of galenic formulations using a multi-modal approach based on a chat-based interface integrating an LLM and a graphic interface; the latter allows users to verify the programmed task and is crucial for adequate system explainability and trustworthiness. In fact, the natural language dialogue with the LLM-based chat interface leads to the definition of a robot program, whose correctness must be verified by the user before deployment. Since the user is not an expert in robot programming, representing the program as a sequence of blocks in the graphic interface allows the user to assess program correctness and directly modify the blocks and/or their parameters if needed.

- Execution time: executing the programmed tasks requires collaboration between the pharmacist and the robot. For example, the pharmacist can place chemicals in a specific bowl, after which the robot performs a blending activity; to ensure effective collaboration, the system must manage proper human–robot coordination.

The following section focuses on the interaction with PRAISE at programming time. The definition of domain objects at design time still pertains to an EUD approach since it allows end users to customize the system to the context at hand; however, the description of this part of the system interface is omitted since it is not particularly novel in its interaction modalities. Robot program execution is delineated at the end of Section 6, but its in-depth discussion is out of the scope of the present work.

As a validation of the interaction design, an exploratory user study was planned based on qualitative evaluation methods, such as direct observation, thinking aloud, and semi-structured interviews. The user experience questionnaire (UEQ) [48] was also included to obtain quantitative results. The aim of the study was to collect feedback not only on system usability and user experience but also on its acceptability, perceived effectiveness, and potential applications in further health-related domains. We planned to involve about ten pharmacists in performing a sequence of tasks with PRAISE while annotating their behaviors and comments during task execution. At the end of each test session, the user would fill in the UEQ and answer the moderator’s questions about the system’s learnability, memorability, efficiency, and robustness, as well as about the effectiveness of this type of technology, both for galenic preparations and in potential further scenarios. A thematic analysis of the qualitative data gathered through observation, spontaneous comments, and answers to interview questions was planned, while the UEQ scores would be compared with existing benchmarks [48]. Design implications for system improvement and generalization to other domains would then be derived from the analysis of the results. The most significant findings of the actual user study are summarized in Section 7.1, while all details are provided in [44].

6. Interaction with PRAISE to Create Robot Programs

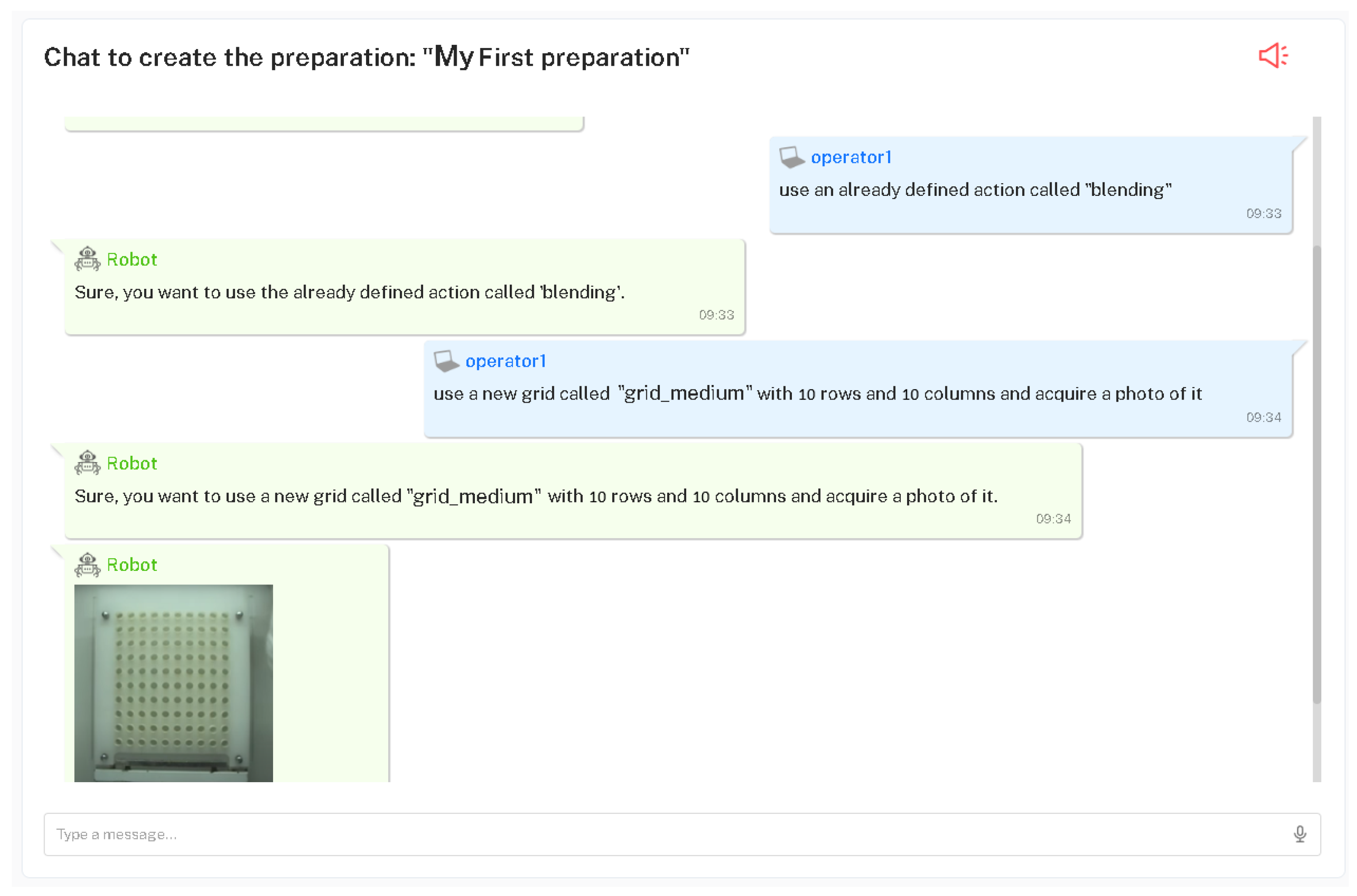

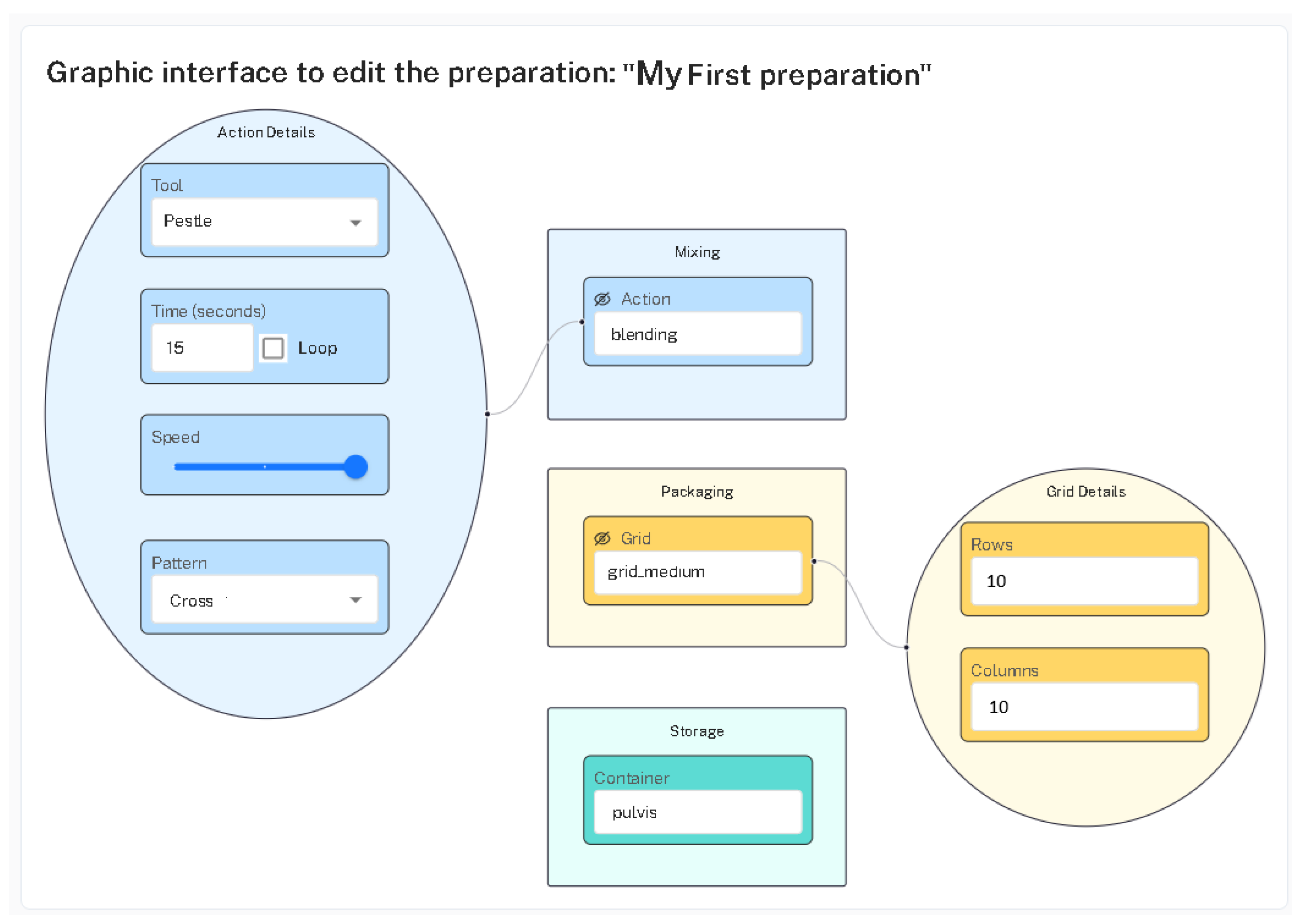

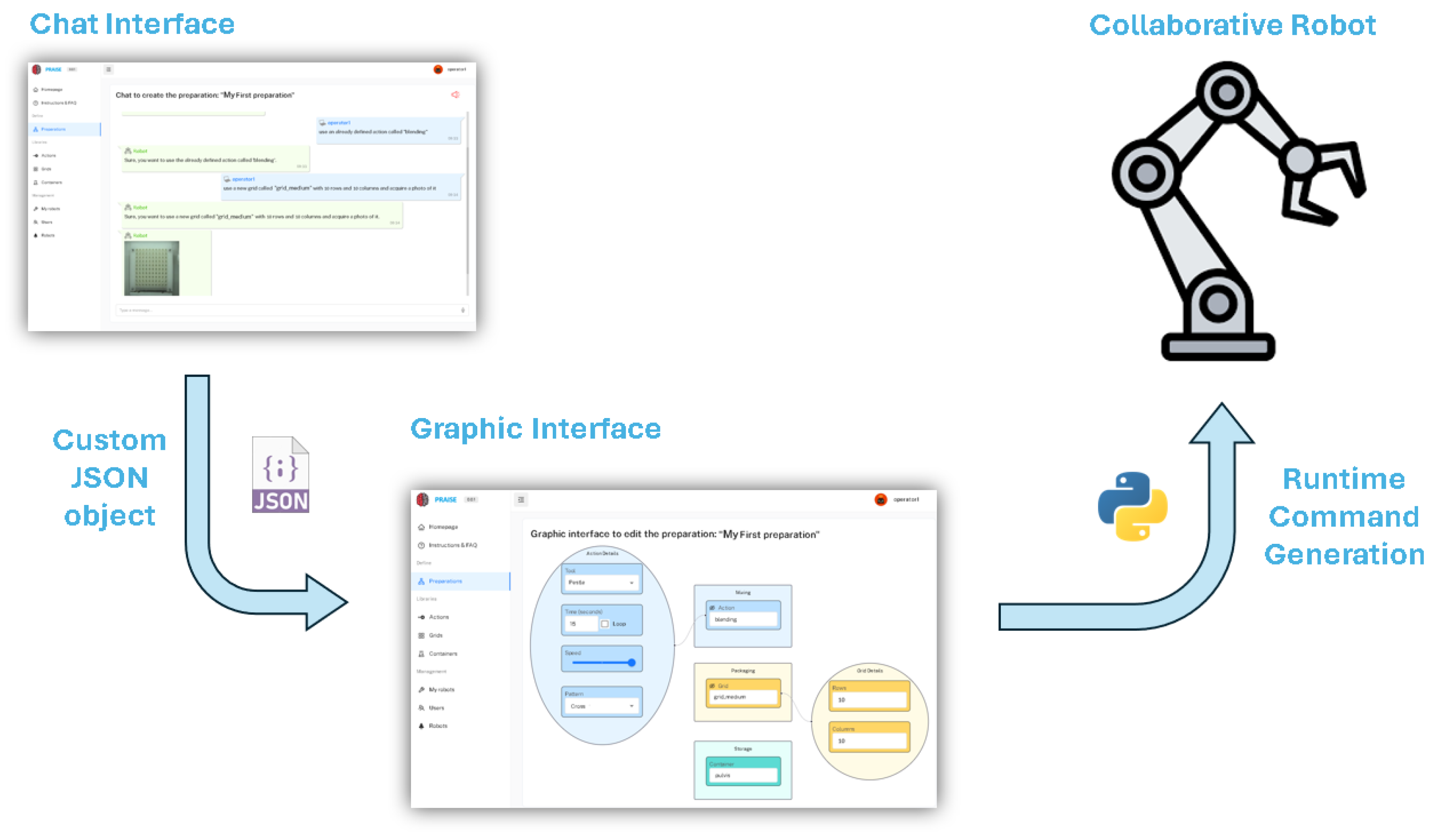

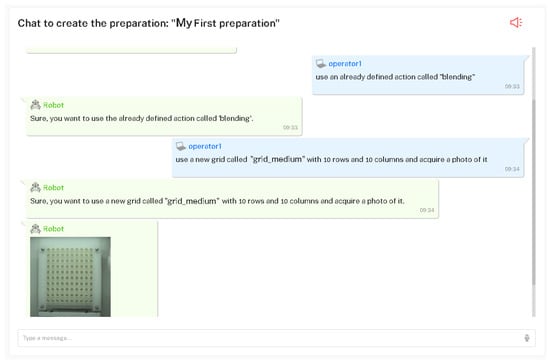

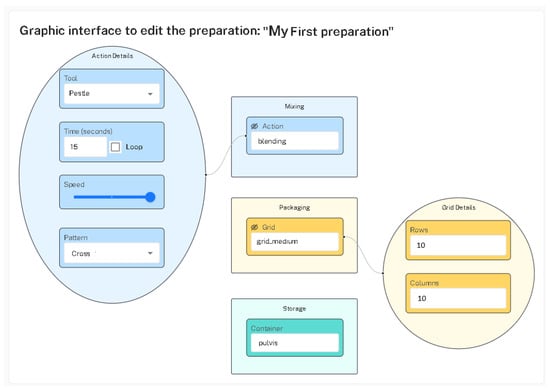

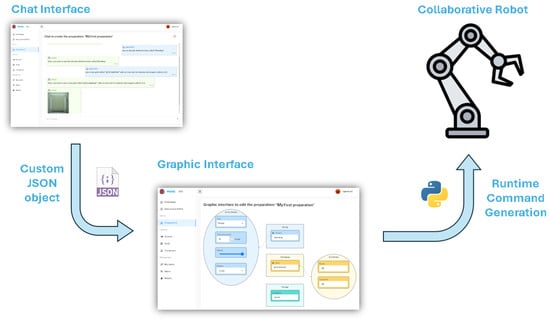

The developed prototype is a web-based application featuring a client–server architecture. Its most important pages are devoted to the definition of a galenic preparation. These pages consist of a chat interface (Figure 2) and a domain-oriented graphic interface (Figure 3). Using these interfaces, the pharmacist can generate a JavaScript Object Notation (JSON) representation of a robot task concerning a galenic preparation and, ultimately, create a robot program.

Figure 2.

The chat interface of PRAISE.

Figure 3.

The graphic user interface of PRAISE.

A study was conducted to determine the most appropriate NLP tool to integrate into the chat interface. Recent LLM-based solutions were analyzed along different dimensions. ChatGPT was finally chosen for its powerful model and flexible APIs. In particular, the GPT-4-turbo model was used, instantiated with specific natural language instructions describing the context and its role. This prompt engineering step was crucial to customize the assistant model to our specific case. For instance, the initial prompt includes the following sentences: “The user is a pharmacist, and he/she needs to create a task for a cobot to help him/her prepare galenic formulations. To define a task, the user has to specify three steps: mixing, packaging, and storage”. To delineate each step, we listed the necessary parameters alongside their possible values in a clear and concise manner. This helps the model identify and understand the complexities of each step. We also found that precise punctuation, as well as the arrangement and placement of sentences within the prompt, were essential to achieving the desired outcomes. Several iterations were carried out to determine the appropriate syntax and level of granularity required for accurate comprehension of our directives.
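As a rough illustration of the prompt structure described above, the following sketch assembles an initial system prompt from the two quoted sentences followed by one concise line per step listing its parameters and possible values. The parameter names and values (`action`, `speed`, `grid`, `container`, and their options) are hypothetical placeholders, not the actual PRAISE prompt; the message dictionaries follow the role/content shape used by chat-completion style APIs.

```python
# Hypothetical per-step parameters and allowed values (not the real PRAISE prompt).
STEP_PARAMETERS = {
    "mixing": {"action": ["blending"], "speed": ["low", "medium", "high"]},
    "packaging": {"grid": ["grid_medium"]},
    "storage": {"container": ["pulvis"]},
}

# Opening sentences quoted from the PRAISE initial prompt.
CONTEXT = (
    "The user is a pharmacist, and he/she needs to create a task for a cobot "
    "to help him/her prepare galenic formulations. To define a task, the user "
    "has to specify three steps: mixing, packaging, and storage."
)


def build_system_prompt(step_parameters: dict[str, dict[str, list[str]]]) -> str:
    """Append one line per step that lists its parameters and their possible values."""
    lines = [CONTEXT]
    for step, params in step_parameters.items():
        described = "; ".join(
            f"{name} (possible values: {', '.join(values)})"
            for name, values in params.items()
        )
        lines.append(f"Step '{step}' requires: {described}.")
    return "\n".join(lines)


def initial_messages(step_parameters: dict[str, dict[str, list[str]]]) -> list[dict[str, str]]:
    """Return the system message that would open every conversation."""
    return [{"role": "system", "content": build_system_prompt(step_parameters)}]
```

Keeping the parameter list as data rather than hand-written prose makes it easier to iterate on the wording and ordering of the prompt, which the authors report as essential, while keeping the step definitions in one place.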

The definition of a new robot program, in this case a galenic preparation, usually begins with the interaction with the chat. User instructions entered in the chat interface are routed to the LLM engine for interpretation via an adapter module, which provides the LLM with both the user’s request and specific directives to ensure accurate comprehension of the assigned task. In particular, a conversational approach is employed to define the details of each step of the preparation process. For example, in Figure 2, the user asks to create a preparation that uses an already defined action called blending for the mixing and dissolution step and a grid called grid_medium with 10 rows and 10 columns for the packaging step; this grid is newly defined by acquiring a photo. The dialogue then proceeds with the definition of the container (e.g., pulvis) for the storage step, possibly acquiring its position and shape through the robot camera.
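The routing performed by the adapter module can be sketched as follows, under our own assumptions: the fixed directives are prepended as a system message to the accumulated conversation on every call, and the actual LLM call is injected as a plain function. The class name `ChatAdapter` and the `complete` callable are hypothetical; a real implementation would wrap the chosen LLM API inside `complete`.

```python
from typing import Callable

Message = dict[str, str]
# Any function that receives the full message list and returns the assistant's reply,
# e.g., a thin wrapper around a chat-completion API (hypothetical here).
CompleteFn = Callable[[list[Message]], str]


class ChatAdapter:
    """Hypothetical adapter: forwards each user turn together with the fixed directives."""

    def __init__(self, directives: str, complete: CompleteFn) -> None:
        self.directives = directives
        self.complete = complete
        self.history: list[Message] = []

    def send(self, user_text: str) -> str:
        self.history.append({"role": "user", "content": user_text})
        # Directives are re-sent on every call because chat-completion style APIs are
        # stateless: each request must carry the whole context.
        messages = [{"role": "system", "content": self.directives}, *self.history]
        reply = self.complete(messages)
        self.history.append({"role": "assistant", "content": reply})
        return reply
```

Injecting `complete` keeps the conversation logic independent of a specific LLM provider and makes the adapter testable with a stub.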

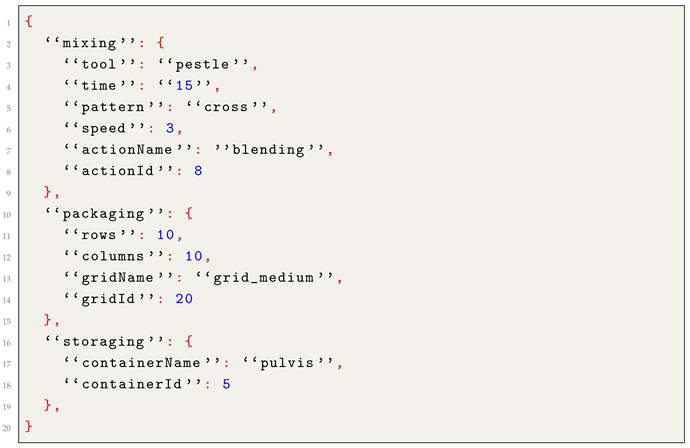

After the preparation has been defined and the interaction with the chat has concluded, a representation of the preparation process is generated. This representation uses a custom JSON format conveniently handled by the application logic of PRAISE. The JSON object describing the task reported in Figure 2 is shown in Listing 1. This allows the preparation process to be rendered automatically in the graphic interface. Here, the user can evaluate the correctness of the preparation and possibly adjust or modify it by interacting with the blocks that compose the process. For example, in Figure 3, the user has accessed the blending action of the first step to increase its speed to the maximum value. Upon completion of this phase, the preparation can be confirmed and saved. Proficient users can also start defining a new preparation directly in the graphic interface; while this speeds up the process, it imposes a higher cognitive load than using the chat interface.

Listing 1. JSON structure of the defined task.
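To make the idea of a high-level, checkable task description more concrete, the following sketch models a three-step preparation with Python dataclasses, serializes it to JSON, and performs a simple consistency check of the kind users carry out visually in the graphic interface. All field names, ranges, and known domain items are hypothetical and do not reproduce the actual PRAISE format of Listing 1.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class MixingStep:
    action: str  # name of an already defined mixing action, e.g., "blending"
    speed: int   # hypothetical: percentage of the maximum speed


@dataclass
class PackagingStep:
    grid: str
    rows: int
    columns: int


@dataclass
class StorageStep:
    container: str


@dataclass
class Preparation:
    name: str
    mixing: MixingStep
    packaging: PackagingStep
    storage: StorageStep

    def to_json(self) -> str:
        # asdict converts the nested dataclasses recursively into plain dictionaries.
        return json.dumps(asdict(self), indent=2)


# Hypothetical domain items assumed to have been defined at design time.
KNOWN_ACTIONS = {"blending"}
KNOWN_GRIDS = {"grid_medium"}
KNOWN_CONTAINERS = {"pulvis"}


def validate(prep: Preparation) -> list[str]:
    """Return human-readable problems; an empty list means the task looks consistent."""
    problems = []
    if prep.mixing.action not in KNOWN_ACTIONS:
        problems.append(f"unknown mixing action: {prep.mixing.action}")
    if not 0 < prep.mixing.speed <= 100:
        problems.append(f"speed out of range: {prep.mixing.speed}")
    if prep.packaging.grid not in KNOWN_GRIDS:
        problems.append(f"unknown grid: {prep.packaging.grid}")
    if prep.packaging.rows <= 0 or prep.packaging.columns <= 0:
        problems.append("grid must have positive rows and columns")
    if prep.storage.container not in KNOWN_CONTAINERS:
        problems.append(f"unknown container: {prep.storage.container}")
    return problems
```

For example, `Preparation("example", MixingStep("blending", 100), PackagingStep("grid_medium", 10, 10), StorageStep("pulvis"))` passes the check, whereas an undefined action name is reported. An automatic check of this kind could only complement the user's own review of the blocks, which remains the safeguard described in the paper.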

Regarding error management, the graphic interface plays a crucial role in identifying both machine-related and human-related errors by providing a clear, schematic visualization of the defined task. Although the current system does not learn automatically from past errors, training the LLM to prevent recurring mistakes is envisaged as a future development.

Once the preparation has been defined, either via the chat or the graphic interface, it is stored in the database as a JSON object. This JSON object is a high-level specification of a robot task, which is instantiated at runtime by selecting the elementary actions adapted to the specific characteristics of the robot in use. This is accomplished by a Python program that interprets the JSON object and generates the corresponding robot commands at runtime, such as moving to a specific position or searching for an object in the working area. Figure 4 shows the operational process chart for the definition and execution of a robot task.
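The runtime interpretation step can be sketched as a small dispatcher that expands each high-level step of the stored JSON object into an ordered list of elementary commands. The command names (`move_to`, `run_mixing_action`, `find_object`, `fill_cell`, `pick`, `place`) and JSON fields are hypothetical stand-ins; an actual interpreter would issue calls to the API of the specific robot in use instead of returning tuples.

```python
import json

Command = tuple[str, ...]


def expand_mixing(step: dict) -> list[Command]:
    return [("move_to", "mixing_station"),
            ("run_mixing_action", step["action"], str(step["speed"]))]


def expand_packaging(step: dict) -> list[Command]:
    commands: list[Command] = [("find_object", step["grid"])]
    # Visit every cell of the operculator grid in row-major order.
    for r in range(step["rows"]):
        for c in range(step["columns"]):
            commands.append(("fill_cell", str(r), str(c)))
    return commands


def expand_storage(step: dict) -> list[Command]:
    return [("pick", "capsules"), ("find_object", step["container"]),
            ("place", step["container"])]


# One expander per high-level step; swapping these adapts the same task to another robot.
EXPANDERS = {"mixing": expand_mixing, "packaging": expand_packaging, "storage": expand_storage}


def interpret(task_json: str) -> list[Command]:
    """Translate a high-level task description into an ordered list of robot commands."""
    task = json.loads(task_json)
    commands: list[Command] = []
    for step_name in ("mixing", "packaging", "storage"):
        commands.extend(EXPANDERS[step_name](task[step_name]))
    return commands
```

Keeping the stored JSON robot-independent and binding it to concrete commands only at runtime means that, in principle, the same preparation could be executed on a different robot by replacing the expander functions.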

Figure 4.

The operational process chart for robot task definition.

7. Discussion

This section reports the most significant findings obtained through an exploratory user study and discusses the design implications of adopting LLMs in domain-specific contexts where users do not possess expertise in information technology and programming. Limitations of the research are finally highlighted.

7.1. Findings from an Exploratory User Study

An exploratory study was carried out with nine users working in the pharmaceutical field who are experts in galenic formulations. They were observed while executing five tasks with PRAISE. One researcher annotated significant user comments and behaviors, and two researchers subsequently identified relevant themes through an inductive and latent thematic analysis [45]. After the five tasks, a semi-structured interview was used to obtain additional feedback from users, and a thematic analysis was also conducted on the participants’ responses. Users were also asked to complete the UEQ, obtaining very positive results compared with the benchmark reported in [48]: three out of six scales were rated excellent, two good, and one above average, denoting high user satisfaction.

We summarize in the following the most interesting qualitative results, while all the details of the user study organization and data analysis are reported in a complementary study [44], which is more focused on the technical details of the developed application and its evaluation.

The most significant findings of the study show that end users found interacting with the LLM-based chat innovative and engaging, and that the chat proved robust when users switched on the fly from English to their mother tongue, asked off-topic questions, or did not follow the suggested sequence of steps for the galenic preparation. Consistently, all participants were able to complete the assigned tasks, indicating the good learnability of the system. However, two issues emerged. The first concerns how to start a conversation: for three participants, this was unclear, notwithstanding the textual explanation available in the interface. The second is that a couple of users perceived the interaction with the chat as slower than that with the block-based graphic interface; in particular, one of them proceeded step-by-step during task execution without realizing that he could adopt a different strategy, that is, defining all preparation details in a single sentence. A further significant finding concerned the occasional non-deterministic behavior of the LLM: on rare occasions, the chat failed to guide users through the necessary steps of the conversation. For example, the LLM might simply confirm the definition of a step (e.g., “Ok, we have defined the grid”) without immediately indicating how to proceed (e.g., “Now let’s define the container for the next step”). In these cases, users could easily prompt the system by asking “What are the next steps?”; whenever users asked for such information directly in the chat, they successfully obtained instructions on how to proceed.

All participants appreciated the graphic interface as a useful tool to verify the correctness of the defined robot task. Two participants judged it efficient and practical enough to create robot tasks from scratch (that is, without using the chat). This is indeed an option available to users, especially when they are already proficient with the system or simply need to reuse and modify existing robot tasks. Nevertheless, all participants confirmed that the coexistence of natural language interaction and block-based interaction, integrated as in PRAISE, is an important safety feature.

Finally, we observed that users were able to express their requests in their own communication style and did not report feeling controlled by the system, as can happen when interacting with AI-based systems. With prompt engineering, developers faced the challenge of crafting effective prompts by putting themselves in the users’ shoes. The in-depth user research phase allowed developers to tune these prompts properly, balancing human-like, creative conversation with deterministic behavior.

7.2. Design Implications

Several implications for designing LLM-based systems can be derived from our experience.

First, starting a conversation with a natural language interface is a widely recognized issue in the literature [49,50], owing to the discoverability problem. Our study suggests that its impact can be mitigated by providing users with a simple explanation of the system’s functionality and of how requests should be formulated. For instance, example chat messages could be shown at the beginning of the conversation to help users understand the appropriate level of abstraction and the freedom they have in formulating sentences. In addition, the LLM can be instructed to act as an assistant when the user is uncertain about how to proceed, proactively providing guidance. This proves beneficial when structured tasks such as programming must be carried out. Further research is needed to determine which initial instructions should be given to the LLM so that it always proactively guides the user during a conversation, which, in our case, aims to define robot tasks but may have different goals in other contexts.
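One way to complement the LLM's instructions with a deterministic safeguard, sketched here under our own assumptions rather than as a PRAISE feature, is to track which steps of the task are still undefined and, when the model's reply does not mention the next one, append a fixed hint. The step order follows the mixing, packaging, and storage sequence used in PRAISE; the function names and hint texts are hypothetical.

```python
from typing import Optional

STEP_ORDER = ["mixing", "packaging", "storage"]
# Hypothetical fixed hints, one per step.
HINTS = {
    "mixing": "Let's start with the mixing step: which mixing action should the robot use?",
    "packaging": "Now let's define the grid for the packaging step.",
    "storage": "Now let's define the container for the storage step.",
}


def next_undefined_step(defined: dict[str, dict]) -> Optional[str]:
    """Return the first step, in the expected order, that has not been defined yet."""
    for step in STEP_ORDER:
        if not defined.get(step):
            return step
    return None


def with_guidance(reply: str, defined: dict[str, dict]) -> str:
    """Append a next-step hint when the LLM reply does not already mention that step."""
    step = next_undefined_step(defined)
    if step is None:
        return reply + " All steps are defined: please review the task in the graphic interface."
    if step in reply.lower():
        return reply  # the model already points the user to the next step
    return f"{reply} {HINTS[step]}"
```

With this safeguard, a bare confirmation such as "Ok, we have defined the grid." would be extended with a prompt for the storage container, mirroring the guidance users obtained by asking "What are the next steps?". The keyword check is deliberately crude and would need refinement in practice.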

Another essential aspect is error recovery, in which both the user and the system can offer explanations to understand each other and fix errors. Developers typically pay attention only to the explanations the system provides in reaction to user input identified as incorrect. With our approach, the user can also provide additional information to the system to enhance mutual understanding. Because LLMs are trained on human language and conversational structure, they can improve their output by following users’ directions; this could facilitate collaboration and help users make better decisions.

Furthermore, it is important to consider the non-determinism inherent in LLMs, a problem present by construction that affects output generation and may lead to nonsensical or factually incorrect texts (also known as hallucinations). Such behavior can be mitigated by properly fine-tuning LLMs or by carefully instructing them through prompts; PRAISE adopts the latter strategy, providing the LLM with suitable instructions to achieve this goal. Indeed, no hallucinations were observed in the user study in response to users’ prompts, which suggests that the adopted approach is effective in this respect. While a larger study would be needed to confirm this result, we also remark that our multi-modal approach allows users to fix possible hallucinations in the graphic interface, thus retaining control over the system’s behavior.

The effectiveness of a conversation greatly affects users’ trust and confidence in the technology they use. The multi-modal approach proposed here fosters trust because users can view the results of the interaction and fix any errors if needed. This approach is potentially applicable in general, particularly when non-deterministic algorithms are involved, since it gives users complete control over the final output. Therefore, while employing AI-based technologies is beneficial, it is important to keep control in the users’ hands and allow them to verify the system output, especially when non-deterministic behaviors may lead to errors.

When using AI-based systems, users may feel constrained by rigid procedures, which can cause frustration. It is therefore important to ensure a degree of system adaptability so that users feel in control of the technology they are employing. In the case of LLMs, the extent of the user’s freedom depends on the instructions (prompts) given to the system by the developer; such instructions must be properly tuned to handle the trade-off between human-like interaction and determinism.

7.3. Limitations of the Work

The work presented in this paper is affected by some limitations due to the novelty of the approach to supporting pharmacists in their work practice and to the complexity of developing a full-fledged system that could lead to robot program execution in real contexts. The paper focuses on the programming of the actions delegated to the robot, which, in practice, must be interleaved with human actions. While this coordination is operationally feasible, challenges may arise in complex scenarios requiring dynamic collaboration. Further investigation is needed to explore how technical enhancements can improve system adaptability and how to ensure that users can become accustomed to this collaborative activity.

In addition, the design methodology and developed prototype will require further refinements in the future.

In the user research, only three domain experts were involved in acquiring knowledge about the galenic preparation process and the problems affecting it. However, the findings of the exploratory study supported our assumptions about the opportunities offered by collaborative robots in this field.

In addition, the GPT-4-turbo model was used through the ChatGPT API. More recent models could be evaluated in the future to determine whether they improve the conversation by reducing imprecise output, prompt interpretation failures, and non-deterministic behavior.

Last but not least, the developed prototype only accounts for three stages of galenic preparation out of the numerous stages present in a complete preparation. As underlined in Section 4, we studied the current practice and identified the steps where a collaborative robot can be useful to support the pharmacist in error-prone, repetitive, and tiring activities. In the future, we will explore the use of PRAISE at execution time by investigating how the collaboration between pharmacists and robots may take place throughout the galenic preparation process.

8. Conclusions and Future Work

Collaborative robots are attracting growing interest. However, robot programming still poses a hurdle to the acceptance and widespread adoption of collaborative robots, especially in the healthcare sector. End users, who are experts in their domain but not in robot programming, often need to learn at least one programming language and a variety of technical aspects of robot operation. Our approach aims to provide an EUD environment integrating AI features, which allows users to exploit a collaborative robot in their work practice without the need to learn a programming language or handle technical complexities. A human-centered methodology has been adopted to design PRAISE, an EUD environment for robot programming specifically oriented to the pharmaceutical sector and, in particular, to the preparation of galenic formulations by pharmacists.

The design and development of PRAISE allowed us to investigate the use of LLMs for recognizing user intentions and creating tasks for collaborative robots. The robot tasks created with the LLM-based chat can be checked and revised through a graphic interface, thus improving the verifiability and trustworthiness of the generated result. The graphic interface can also be used to define new tasks from scratch, allowing users to choose the programming paradigm they prefer. In our exploratory study, this multi-modal approach to robot programming proved versatile and effective. Additionally, the adopted human-centered methodology proved effective in addressing the challenges that may arise when adopting a non-deterministic technology such as LLMs.

Prompt engineering is currently a hot topic in the design of LLM-based systems. As future development of PRAISE, it would be interesting to investigate whether it would be feasible for a user to construct their own personalized assistant. Users, as domain experts, might provide their own initial instructions to the LLM to tailor it to a specific context in a better way than a software developer; however, this may turn out to be a demanding task for users without previous experience in interacting with LLMs. Moreover, the developer must ensure sufficient flexibility in the data structures of the system to guarantee its adaptability to different contexts.

Improving the fluidity of the conversation with LLM-based systems is a further challenge for future research. Typically, LLM-powered chatbots structure the conversation by accepting only one message at a time, so users have to wait for a response before writing another message. This can slow down task accomplishment: responses may be delayed by slow network connections, or users may realize that they made an error in their input but must wait for the system to respond before correcting it. How to let users send multiple consecutive messages to an LLM without waiting for a response to the previous one is still an open issue. For instance, the ChatGPT API processes one message at a time owing to its request–response nature. In our case, we should consider evolving the adapter module so that it accepts multiple messages and manages a sequence of ChatGPT responses.
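A simple workaround for the one-request-at-a-time limitation, sketched below under our own assumptions, is to buffer the messages typed while a request is in flight and send them as a single combined user turn once the previous reply arrives. The class `BufferedChat` and its methods are hypothetical; `complete` stands for any asynchronous wrapper around a chat API.

```python
import asyncio
from typing import Awaitable, Callable, Optional

Message = dict[str, str]


class BufferedChat:
    """Hypothetical adapter extension: queues user messages typed while a request is pending."""

    def __init__(self, complete: Callable[[list[Message]], Awaitable[str]]) -> None:
        self.complete = complete  # assumed async wrapper around a chat API
        self.history: list[Message] = []
        self.pending: list[str] = []
        self.worker: Optional[asyncio.Task] = None

    def submit(self, text: str) -> None:
        """Accept a message immediately, even while a previous request is still running."""
        self.pending.append(text)
        if self.worker is None or self.worker.done():
            self.worker = asyncio.create_task(self._drain())

    async def _drain(self) -> None:
        # Send one request at a time; messages that arrive meanwhile are merged into the next turn.
        while self.pending:
            combined = "\n".join(self.pending)
            self.pending.clear()
            self.history.append({"role": "user", "content": combined})
            reply = await self.complete(list(self.history))
            self.history.append({"role": "assistant", "content": reply})
```

Merging buffered messages keeps API usage unchanged (still one request at a time) while letting users add or correct input without waiting; whether the LLM interprets such merged turns reliably would have to be verified.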

The problem of integrating cutting-edge technologies, like collaborative robots and natural language interaction, into an existing laboratory workflow may arise in a variety of medical applications. In this respect, one of the most important issues is ensuring a correct match between the system and the users’ needs and activities. This calls for a human-centered AI methodology [15] that involves representative users throughout system design and development. We described a concrete example of the application of this methodology and believe that the lessons learned may contribute to improving the user experience with AI technology and the acceptability of this kind of system.

Author Contributions

Conceptualization, L.G., D.F., and P.B.; Methodology, L.G. and D.F.; Software, L.G.; Validation, L.G.; Writing—original draft, L.G. and D.F.; Writing—review and editing, P.B. All authors have read and agreed to the published version of the manuscript.

Funding

The PhD scholarship of Luigi Gargioni is co-funded by the Italian Ministry, Piano Nazionale di Ripresa e Resilienza (PNRR) and Antares Vision S.p.A.

Institutional Review Board Statement

Ethical review and approval were waived for this study, according to the guidelines in force at the time of the study at our institution, concerning studies involving only user interviews without the collection of sensitive data. All data were collected anonymously and handled with strict confidentiality, in line with ethical standards and with no risk of harm to participants. The Institutional Review Board of our university had not yet been established at the time of the study.

Informed Consent Statement

Informed consent was collected from each participant included in the user study.

Data Availability Statement

The anonymized data collected for this manuscript are available from the corresponding author upon request.

Acknowledgments

The authors wish to thank the participants in the exploratory user study for their availability and valuable collaboration.

Conflicts of Interest

None of the authors have any conflicts of interest or competing interests.

References

- Fortané, N. Antimicrobial resistance: Preventive approaches to the rescue? Professional expertise and business model of French “industrial” veterinarians. Rev. Agric. Food Environ. Stud. 2020, 102, 213–238. [Google Scholar] [CrossRef] [PubMed]

- Burlo, F.; Zanon, D.; Minghetti, P.; Taucar, V.; Benericetti, G.; Bennati, G.; Barbi, E.; De Zen, L. Pediatricians’ awareness of galenic drugs for children with special needs: A regional survey. Ital. J. Pediatr. 2023, 49, 76. [Google Scholar] [CrossRef]

- Uriel, M.; Marro, D.; Gómez Rincón, C. An Adequate Pharmaceutical Quality System for Personalized Preparation. Pharmaceutics 2023, 15, 800. [Google Scholar] [CrossRef]

- EudraLex European Commission. Good Manufacturing Practice (GMP) Guidelines; EudraLex European Commission: Brussels, Belgium, 2023. [Google Scholar]

- World Health Organization. Health Products Policy and Standards; World Health Organization: Geneva, Switzerland, 2023. [Google Scholar]

- Mathew, R.; McGee, R.; Roche, K.; Warreth, S.; Papakostas, N. Introducing Mobile Collaborative Robots into Bioprocessing Environments: Personalised Drug Manufacturing and Environmental Monitoring. Appl. Sci. 2022, 12, 10895. [Google Scholar] [CrossRef]

- Wolf, Á.; Wolton, D.; Trapl, J.; Janda, J.; Romeder-Finger, S.; Gatternig, T.; Farcet, J.B.; Galambos, P.; Széll, K. Towards robotic laboratory automation Plug & Play: The “LAPP” framework. SLAS Technol. 2022, 27, 18–25. [Google Scholar] [CrossRef] [PubMed]

- Sauppé, A.; Mutlu, B. The Social Impact of a Robot Co-Worker in Industrial Settings. In Proceedings of the CHI ’15: 33rd Annual ACM Conference on Human Factors in Computing Systems, Seoul, Republic of Korea, 18–23 April 2015; pp. 3613–3622. [Google Scholar] [CrossRef]

- Löfving, M.; Almström, P.; Jarebrant, C.; Wadman, B.; Widfeldt, M. Evaluation of flexible automation for small batch production. Procedia Manuf. 2018, 25, 177–184. [Google Scholar] [CrossRef]

- Lieberman, H.; Paternò, F.; Wulf, V. End User Development (Human-Computer Interaction Series); Springer: Berlin/Heidelberg, Germany, 2006. [Google Scholar]

- Paternò, F.; Wulf, V. (Eds.) New Perspectives in End-User Development; Springer: Cham, Switzerland, 2017. [Google Scholar] [CrossRef]

- Barricelli, B.R.; Cassano, F.; Fogli, D.; Piccinno, A. End-user development, end-user programming and end-user software engineering: A systematic mapping study. J. Syst. Softw. 2019, 149, 101–137. [Google Scholar] [CrossRef]

- Beschi, S.; Fogli, D.; Tampalini, F. CAPIRCI: A multi-modal system for collaborative robot programming. In Proceedings of the End-User Development: 7th International Symposium, IS-EUD 2019, Hatfield, UK, 10–12 July 2019; Proceedings 7. Springer: Berlin/Heidelberg, Germany, 2019; pp. 51–66. [Google Scholar]

- Fogli, D.; Gargioni, L.; Guida, G.; Tampalini, F. A hybrid approach to user-oriented programming of collaborative robots. Robot. Comput.-Integr. Manuf. 2022, 73, 102234.

- Shneiderman, B. Human-Centered AI; Oxford University Press: Oxford, UK, 2022.

- Shneiderman, B. Human-Centered Artificial Intelligence: Reliable, Safe & Trustworthy. Int. J. Hum.-Comput. Interact. 2020, 36, 495–504.

- Chu, X.; Fleischer, H.; Roddelkopf, T.; Stoll, N.; Klos, M.; Thurow, K. A LC-MS integration approach in life science automation: Hardware integration and software integration. In Proceedings of the 2015 IEEE International Conference on Automation Science and Engineering (CASE), Gothenburg, Sweden, 24–28 August 2015; pp. 979–984.

- Fleischer, H.; Baumann, D.; Chu, X.; Roddelkopf, T.; Klos, M.; Thurow, K. Integration of Electronic Pipettes into a Dual-arm Robotic System for Automated Analytical Measurement Processes Behaviors. In Proceedings of the 2018 IEEE 14th International Conference on Automation Science and Engineering (CASE), Munich, Germany, 20–24 August 2018; pp. 22–27.

- Fleischer, H.; Baumann, D.; Joshi, S.; Chu, X.; Roddelkopf, T.; Klos, M.; Thurow, K. Analytical Measurements and Efficient Process Generation Using a Dual–Arm Robot Equipped with Electronic Pipettes. Energies 2018, 11, 2567.

- Ajaykumar, G.; Steele, M.; Huang, C.M. A Survey on End-User Robot Programming. ACM Comput. Surv. 2021, 54, 164.

- Barricelli, B.R.; Fogli, D.; Locoro, A. EUDability: A new construct at the intersection of End-User Development and Computational Thinking. J. Syst. Softw. 2023, 195, 111516.

- Argall, B.D.; Chernova, S.; Veloso, M.; Browning, B. A Survey of Robot Learning from Demonstration. Robot. Auton. Syst. 2009, 57, 469–483.

- Zhu, Z.; Hu, H. Robot Learning from Demonstration in Robotic Assembly: A Survey. Robotics 2018, 7, 17.

- Liu, Y.; Li, Z.; Liu, H.; Kan, Z. Skill transfer learning for autonomous robots and human–robot cooperation: A survey. Robot. Auton. Syst. 2020, 128, 103515.

- Alexandrova, S.; Tatlock, Z.; Cakmak, M. RoboFlow: A flow-based visual programming language for mobile manipulation tasks. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 5537–5544.

- Paxton, C.; Hundt, A.; Jonathan, F.; Guerin, K.; Hager, G.D. CoSTAR: Instructing Collaborative Robots with Behavior Trees and Vision. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 564–571.

- Resnick, M.; Maloney, J.; Monroy-Hernández, A.; Rusk, N.; Eastmond, E.; Brennan, K.; Millner, A.; Rosenbaum, E.; Silver, J.; Silverman, B.; et al. Scratch: Programming for All. Commun. ACM 2009, 52, 60–67.

- Lovett, A. Coding with Blockly; Cherry Lake Publishing: Ann Arbor, MI, USA, 2017.

- Huang, J.; Cakmak, M. Code3: A System for End-to-End Programming of Mobile Manipulator Robots for Novices and Experts. In Proceedings of the HRI ’17: 2017 ACM/IEEE International Conference on Human-Robot Interaction, Vienna, Austria, 6–9 March 2017; pp. 453–462.

- Weintrop, D.; Afzal, A.; Salac, J.; Francis, P.; Li, B.; Shepherd, D.C.; Franklin, D. Evaluating CoBlox: A Comparative Study of Robotics Programming Environments for Adult Novices. In Proceedings of the CHI ’18: 2018 CHI Conference on Human Factors in Computing Systems, Montreal, QC, Canada, 21–26 April 2018; pp. 1–12.

- Schou, C.; Andersen, R.S.; Chrysostomou, D.; Bøgh, S.; Madsen, O. Skill-based instruction of collaborative robots in industrial settings. Robot. Comput.-Integr. Manuf. 2018, 53, 72–80.

- Buchina, N.; Kamel, S.; Barakova, E. Design and evaluation of an end-user friendly tool for robot programming. In Proceedings of the 2016 25th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), New York, NY, USA, 26–31 August 2016; pp. 185–191.

- Buchina, N.G.; Sterkenburg, P.; Lourens, T.; Barakova, E.I. Natural language interface for programming sensory-enabled scenarios for human-robot interaction. In Proceedings of the 2019 28th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), New Delhi, India, 14–18 October 2019; pp. 1–8.

- Misra, D.K.; Sung, J.; Lee, K.; Saxena, A. Tell Me Dave: Context-Sensitive Grounding of Natural Language to Manipulation Instructions. Int. J. Rob. Res. 2016, 35, 281–300.

- Paternò, F.; Santoro, C. End-user development for personalizing applications, things, and robots. Int. J. Hum.-Comput. Stud. 2019, 131, 120–130.

- Paternò, F. End User Development: Survey of an Emerging Field for Empowering People. ISRN Softw. Eng. 2013, 532659.

- Maceli, M.G. Tools of the Trade: A Survey of Technologies in End-User Development Literature. In End-User Development; Barbosa, S., Markopoulos, P., Paternò, F., Stumpf, S., Valtolina, S., Eds.; Springer: Cham, Switzerland, 2017; pp. 49–65.

- Ponce, V.; Abdulrazak, B. Context-Aware End-User Development Review. Appl. Sci. 2022, 12, 479.

- Andrao, M.; Gini, F.; Greco, F.; Cappelletti, A.; Desolda, G.; Treccani, B.; Zancanaro, M. “React”, “Command”, or “Instruct”? Teachers Mental Models on End-User Development. In Proceedings of the CHI ’25: 2025 CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, 26 April–1 May 2025.

- Costabile, M.F.; Mussio, P.; Parasiliti Provenza, L.; Piccinno, A. End Users as Unwitting Software Developers. In Proceedings of the WEUSE ’08: 4th International Workshop on End-User Software Engineering, Leipzig, Germany, 12 May 2008; pp. 6–10.

- Vemprala, S.; Bonatti, R.; Bucker, A.; Kapoor, A. ChatGPT for Robotics: Design Principles and Model Abilities; Technical report; Microsoft: Redmond, WA, USA, 2023.

- Norman, D.; Draper, S. User Centered System Design: New Perspectives on Human-Computer Interaction; Taylor & Francis: Oxford, UK, 1986.

- Gargioni, L.; Fogli, D.; Baroni, P. Designing Human-Robot Collaboration for the Preparation of Personalized Medicines. In Proceedings of the GoodIT ’23: 2023 ACM Conference on Information Technology for Social Good, Lisbon, Portugal, 6–8 September 2023; pp. 135–140.

- Gargioni, L.; Fogli, D.; Baroni, P. Preparation of Personalized Medicines through Collaborative Robots: A Hybrid Approach to the End-User Development of Robot Programs. ACM J. Responsib. Comput. 2025.

- Braun, V.; Clarke, V. Using thematic analysis in psychology. Qual. Res. Psychol. 2006, 3, 77–101.

- Cripps, R.A. Galenic Pharmacy: A Practical Handbook to the Processes of the British Pharmacopoeia; J. & A. Churchill: London, UK, 1893.

- Tomić, S.; Sučić, A.; Martinac, A. Good manufacturing practice: The role of local manufacturers and competent authorities. Arch. Ind. Hyg. Toxicol. 2010, 61, 425–436.

- Schrepp, M.; Hinderks, A.; Thomaschewski, J. Construction of a Benchmark for the User Experience Questionnaire (UEQ). Int. J. Interact. Multimed. Artif. Intell. 2017, 4, 40–44.

- Norman, D.A. The Psychology of Everyday Things; Basic Books: New York, NY, USA, 1988.

- Corbett, E.; Weber, A. What Can I Say? Addressing User Experience Challenges of a Mobile Voice User Interface for Accessibility. In Proceedings of the 18th International Conference on Human-Computer Interaction with Mobile Devices and Services, Florence, Italy, 6–9 September 2016; pp. 72–82.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).