Abstract

Immersive learning has been recognized as a promising paradigm for enhancing educational experiences through the integration of VR. We propose an architecture for intelligent tutoring in immersive VR environments that employs LLM-based non-playable characters. Key system capabilities are identified, including natural language understanding, real-time adaptive dialogue, and multimodal interaction through hand tracking, gaze detection, and haptic feedback. The system synchronizes speech output with NPC animations, enhancing both interactional realism and cognitive immersion. This design demonstrates that AI-driven VR interactions can significantly improve learner engagement. System performance was generally stable; however, minor latency was observed during speech processing, indicating areas for technical refinement. Overall, this research highlights the transformative potential of VR in education and emphasizes the importance of ongoing optimization to maximize its effectiveness in immersive learning contexts.

1. Introduction

The metaverse has emerged as a transformative paradigm, redefining digital interaction across many fields by integrating extended reality (XR), immersive virtual environments, and artificial intelligence (AI) [1]. Its accelerated development has been driven by technological advancements and an increasing demand for virtual engagement, particularly in response to global disruptions such as the COVID-19 pandemic [2]. Extensive research has explored the metaverse’s evolution, economic impact, and technological potential, emphasizing its role in shaping future digital ecosystems [1,3,4]. However, realizing its full potential requires a comprehensive strategy encompassing technological innovation, regulatory frameworks, cross-industry integration, and ethical considerations [1].

A key advantage of the metaverse is its ability to merge physical and virtual realities, enabling immersive and interactive experiences [5,6]. Successful implementation of metaverse technologies follows four key phases: design, model training, operation, and evaluation [7]. However, significant challenges remain, particularly in the areas of user interaction and personalization. Ensuring realistic and adaptive interactions between users and virtual agents—such as non-player characters (NPCs)—is critical for enhancing immersion and engagement. Despite advancements in AI-driven personalization, most NPCs rely on pre-scripted interactions, limiting their ability to adapt dynamically to user input, behaviors, and context in real time [8,9].

Current VR applications often fail to provide believable, context-aware interactions, as they primarily depend on static dialogue options and constrained NPC responses. This hinders user immersion and limits engagement, particularly in scenarios requiring dynamic, real-time adaptation [6]. Communication and collaboration within virtual teams also pose challenges, as the absence of non-verbal cues and natural conversational flow can reduce shared understanding [10].

In this context, virtual reality, conversational generative AI, and speech processing can contribute to enhancing user engagement and interaction within virtual spaces. However, integrating these technologies to create cohesive and responsive NPCs remains a challenge. This work proposes an AI-driven virtual reality framework to enhance intelligent tutoring through real-time, personalized NPC interactions. By integrating large language models (LLMs) with speech-to-text (STT), text-to-speech (TTS), and lip-sync technologies, the framework aims to develop context-aware AI tutors capable of dynamic and realistic engagement, bridging the gap between scripted virtual agents and fully adaptive NPCs for a more interactive and personalized learning experience in the metaverse.

Our proposed solution enhances natural language interactions between users and NPCs in VR by integrating AI-driven speech processing and natural language understanding. The system enables real-time, context-aware conversations, allowing NPCs to generate dynamic responses while synchronizing speech with facial animations for a natural and immersive experience. When a VR user interacts with an NPC, the system captures speech, transcribes it via STT, processes it using an LLM, and converts the response back to speech via TTS. This audio is then synchronized with the NPC’s lip movements and facial expressions, ensuring seamless and realistic interactions. By combining speech recognition, language processing, and animation synchronization, this framework enhances NPC engagement and interactivity, significantly improving immersion and realism in virtual environments. This work contributes to the fields of virtual reality, artificial intelligence, and immersive learning by enhancing NPC interactivity through real-time adaptivity and personalization.

The remainder of this paper is organized as follows. Section 2 reviews related works, discussing existing approaches to AI-driven virtual interactions and NPC engagement in immersive environments. Section 3 presents the proposed system, detailing the architecture, core components, and workflow for integrating natural language processing, speech synthesis, and animation synchronization in the VR space. Section 4 provides an analysis of the first results, evaluating system performance, user interaction quality, and the effectiveness of AI-driven NPC responses. Finally, Section 5 concludes this paper by summarizing the key findings and outlining potential directions for future research and improvements.

2. Related Works

This section reviews the existing literature on the metaverse, VR in education, NPC design, and LLMs, highlighting progress, current limitations, and how our work addresses these gaps.

2.1. Technological Foundations of the Metaverse

The metaverse is a multifaceted construct that integrates diverse technologies to form an interconnected, immersive virtual universe [5]. The term combines “meta,” denoting transcendence, and “universe,” signifying a virtual domain beyond physical reality [11]. This integration supports immersive and interactive experiences transcending physical reality. A key enabler of these experiences is extended reality (XR), which encompasses augmented reality (AR), mixed reality (MR), and virtual reality (VR), as illustrated in Figure 1. Through XR and other emerging technologies, the metaverse continues to evolve into a cohesive digital universe, offering users unprecedented engagement opportunities [12].

Figure 1.

Hierarchy of extended reality (XR) and its components.

The metaverse represents a post-reality space where physical reality seamlessly converges with virtuality, enabling multisensory interactions with virtual environments, digital assets, and other users [13]. Characterized by hyper-spatial temporality, it eliminates the traditional constraints of time and space, offering boundless exploration and interaction opportunities [14].

Augmented reality (AR) and VR play a central role in creating engaging digital environments. VR immerses users in fully digital spaces via devices such as headsets and gloves, while AR overlays digital elements onto the physical world, enhancing user perception and interaction [14,15]. Complementary technologies such as 3D reconstruction, which digitizes real-world environments, enhance realism and immersion [16,17]. Artificial intelligence (AI) augments interactivity with advancements in natural language processing (NLP) and neural interfaces, powering responsive environments and lifelike avatars [18,19]. Table 1 summarizes these enabling technologies and their contributions.

Table 1.

Technologies enabling immersive virtual environments and their contributions.

2.2. VR in Education

Virtual reality (VR) has established itself as a pivotal innovation in contemporary educational paradigms, offering opportunities for immersive and interactive learning experiences that were previously unattainable [20]. VR enables students to interact with instructional content in a highly visual and interactive manner by constructing virtual environments that simulate real-world scenarios or invent entirely new ones [21]. One of the primary applications of VR in education is in STEM (Science, Technology, Engineering, and Mathematics) fields [22]. For example, VR can simulate complex scientific experiments, such as those conducted in a chemistry lab, providing a safe and controlled environment for students to explore chemical reactions without the risks associated with physical materials [23]. Similarly, in engineering, VR can offer 3D models of machinery and infrastructure, enabling students to visualize and manipulate structures in ways that traditional textbooks cannot provide [24]. Another use of VR is in medical education. Medical students can practice surgical procedures or study human anatomy in a virtual operating room, where they can make mistakes without endangering patients. Studies have shown that such simulations improve knowledge retention and skill acquisition, as they replicate real-life challenges and environments [25]. Additionally, VR environments can incorporate non-playable characters (NPCs) to simulate real-world interactions, such as engaging with a virtual tutor for personalized guidance. Research has shown that NPCs in educational metaverse platforms can enhance empathy, problem reframing, and openness to diverse perspectives, particularly in complex tasks [26]. Furthermore, VR is particularly advantageous in addressing educational accessibility. For students with physical or learning disabilities, VR can create adaptive environments that cater to their specific needs, ensuring inclusive learning experiences [27].

2.3. Designing Virtual Environments and NPCs

Designing immersive VR environments requires creating virtual worlds that engage multiple senses and foster embodiment and presence. Advanced VR technologies such as head-mounted displays and haptic feedback systems enhance sensory input and interaction, deepening user engagement. Research has demonstrated that immersive VR improves spatial awareness and perception [28], and it has gained recognition for its applications in education and rehabilitation, offering interactive and culturally responsive learning experiences [29]. However, challenges remain in integrating AI and language models to simulate authentic NPC behaviors and dialogue [30].

NPCs serve as autonomous agents within virtual worlds, facilitating user engagement and enhancing interactivity. Typically, these characters are programmed with predefined behaviors or guided by the system’s AI to fulfill designated roles [31]. In gaming, for instance, NPCs commonly serve as background characters or antagonists, enriching the narrative and gameplay experience [30]. Beyond gaming, NPCs are increasingly utilized in educational and professional contexts as interactive tools to enhance user engagement. In the educational metaverse, NPCs can adopt roles such as virtual tutors, companions, or advisors [32]. By integrating advanced natural language processing (NLP) technologies, these characters can engage in meaningful conversations, interpret user queries, and deliver responses in a manner that feels both natural and intuitive [33]. This capability makes NPCs valuable in creating interactive and immersive learning experiences tailored to individual needs.

The design and implementation of NPC interactions have become a key focus area. For example, ref. [34] proposed a method for dynamically controlling NPC paths in VR using weight maps and user trajectory-based path similarity, allowing NPCs to adapt movements to match user preferences for more realistic interactions. Similarly, ref. [35] underlined the importance of socially embodied NPCs in VR-based social skills training for autistic children, demonstrating the role of realistic surroundings in fostering meaningful interaction. Another study by [36] explored dialogue generation in VR language-learning applications, highlighting the potential for immersive educational experiences. Despite these advancements, challenges persist in creating lifelike NPC dialogue and effective scenarios for social and educational training. Addressing these limitations is crucial to developing virtual environments that are not only immersive but also socially and cognitively engaging.

2.4. LLMs in the Metaverse

Artificial intelligence (AI) plays a transformative role in content creation within the metaverse [37]. AI-powered content generation involves a variety of advanced technologies, including NLP [38] and large language models (LLMs) [39], which together enable more engaging, interactive, and personalized user experiences. NLP is fundamental to AI-driven content generation in the metaverse, as it facilitates intuitive communication between users and virtual entities like NPCs and digital assistants [38]. By interpreting and processing human language, NLP allows virtual agents to respond effectively to user inputs, creating conversations that feel more engaging and meaningful. This capability enhances the immersion of the virtual experience by bridging the gap between human interaction and digital systems. Cutting-edge models, such as GPT [40] and LLaMA [41], further enhance the potential of NLP in the metaverse. Trained on extensive datasets, these models are capable of generating text that closely mimics human language. They support the creation of intricate narratives, dynamic dialogue for NPCs, and real-time language translation to accommodate users from diverse linguistic backgrounds. By incorporating LLMs, the metaverse becomes richer in adaptable and meaningful content.

Speech technologies, including speech-to-text (STT) [42], text-to-speech (TTS) [43], and lip-sync systems [44], are pivotal in animating avatars and enabling natural interactions in virtual environments. Precise synchronization between spoken dialogue and avatar animations is essential for realism and user engagement. Recent advancements in real-time lip-sync algorithms and dynamic speech-driven facial animations enhance the lifelike quality of avatars, ensuring the seamless integration of voice output with natural language inputs. When combined with LLMs such as LLaMA, these technologies empower NPCs to deliver contextually appropriate, emotionally resonant dialogue synchronized with expressive animations. This synergy transforms NPC interactions, offering deeper immersion as avatars “speak” naturally and behave in alignment with their speech, creating more engaging virtual experiences.

2.5. Comparative Analysis with Existing Systems

Several recent systems have aimed to bridge the gap between immersive virtual environments and AI-driven educational support. Notable examples include LearningVerseVR [30], VREd [45], and the VR NPC-based tutoring system [31]. Although these systems represent promising efforts to integrate intelligent agents within virtual learning spaces, they face challenges related to real-time adaptivity, multimodal interaction, and flexibility in deployment.

Table 2 summarizes the functional differences across six core dimensions for these systems compared with the proposed framework.

Table 2.

Comparison of the proposed framework with representative immersive tutoring systems. A checkmark (✓) indicates that the feature is supported, while a cross (×) denotes that it is not.

As shown in Table 2, the proposed framework provides a more comprehensive solution by integrating real-time, naturalistic interactions powered by a locally deployed large language model, real-time lip synchronization for expressive avatars, and multimodal input, including speech, gaze, and gesture. Unlike prior approaches that either depend on cloud inference or offer limited adaptability, our framework emphasizes pedagogical flexibility through role-based instructional behavior (adapting responses according to the tutor’s role or teaching strategy) and Chain-of-Thought (CoT) prompting, which enhances reasoning and step-by-step guidance. Additionally, it supports rich multimodal engagement and broad platform compatibility.

These gaps in adaptivity, interaction richness, and deployment accessibility motivated the design of the intelligent tutoring framework detailed in the following section.

3. Proposed Architecture

Our proposed system focuses on enhancing natural language interactions between users and NPCs within an immersive VR environment. Using AI-driven speech processing and natural language understanding, the system enables seamless and contextually aware conversations between users and NPCs. A key feature of this architecture is its real-time adaptability, allowing NPCs to generate dynamically relevant responses while synchronizing their animations with the corresponding speech output. This integration ensures that interactions are natural, engaging, and immersive, thereby significantly improving the realism of virtual environments.

To help contextualize the technical architecture that follows, we briefly describe the interaction from a learner’s perspective. A user enters the VR classroom, is represented by an avatar, and engages with an NPC tutor. The user communicates naturally, and the system responds in real time by interpreting the input, generating a relevant reply, and delivering it through synchronized facial expressions and speech. This conversational loop feels natural and adaptive, supporting a fluid and personalized learning experience.

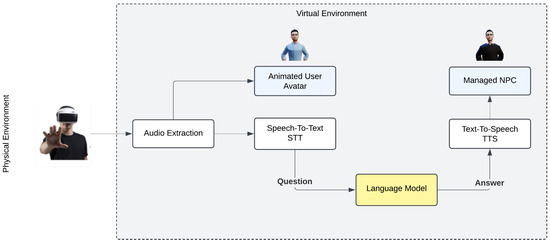

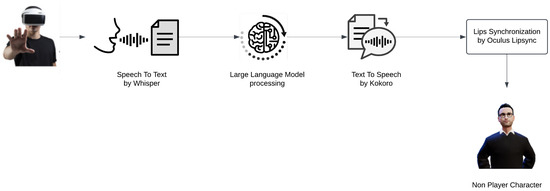

The proposed system architecture, as illustrated in Figure 2, consists of interconnected components designed to support virtual interactive experiences driven by AI. When a user wearing a VR headset initiates interaction with an NPC, the system captures and processes the spoken input through a structured pipeline. This process begins with audio extraction, where the user’s speech is recorded and stored as an audio clip. The captured audio is transcribed into text by an STT module, enabling further processing by the system’s natural language model. The transcribed text serves as input for an LLM, which analyzes the query and generates an appropriate textual response.

Figure 2.

An overview of the proposed virtual environment.

To create a coherent and interactive virtual experience, the system employs TTS conversion to transform the LLM-generated text into synthesized speech. This synthesized response is synchronized with the NPC’s lip movements and facial animations, ensuring that the visual representation aligns naturally with the spoken dialogue. This multi-step process enhances realism, immersion, and user engagement by enabling NPCs to respond dynamically in real time, adapting to contextual changes within the virtual environment. By integrating speech recognition, language processing, and animation synchronization, this system establishes a highly interactive and responsive framework for AI-driven conversational agents in VR environments.

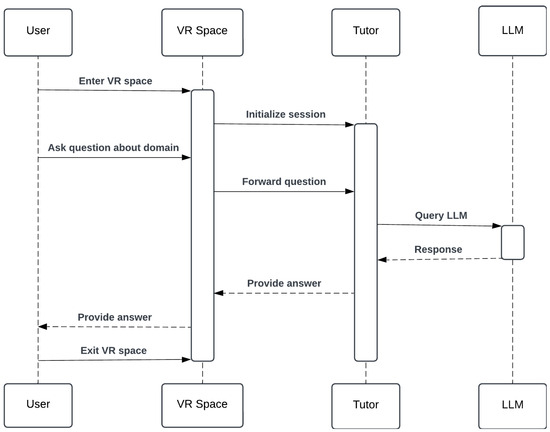

Figure 3 depicts a sequence diagram of the proposed chat completion process, illustrating the interaction between the user, VR space, tutor, and LLM. The sequence begins when the user enters the VR space and initiates a session with the NPC tutor. The user then asks a question related to a specific domain, triggering the chat-processing workflow. The tutor queries the LLM, which processes the request and returns a contextually appropriate response. This response is delivered back to the user within the VR environment, enabling a seamless tutoring interaction.

Figure 3.

Sequence diagram of the chat completion process in the VR tutoring system.
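The conversational loop described above (speech capture, STT, LLM, TTS, lip sync) can be sketched as a chain of interchangeable stages. This is a minimal illustration, not the system's actual code: the names `TutorTurn` and `run_turn` are hypothetical, and each stage is passed in as a plain callable so that any STT, LLM, TTS, or lip-sync backend could be substituted.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class TutorTurn:
    """One completed user-to-NPC exchange (hypothetical container)."""
    transcript: str      # what the STT stage heard
    reply_text: str      # what the LLM stage answered
    reply_audio: bytes   # synthesized speech for the NPC audio source
    visemes: List[str]   # mouth shapes driving the lip animation


def run_turn(
    audio_clip: bytes,
    stt: Callable[[bytes], str],
    llm: Callable[[str], str],
    tts: Callable[[str], bytes],
    lipsync: Callable[[bytes], List[str]],
) -> TutorTurn:
    """Run one turn: audio -> text -> reply -> speech -> visemes."""
    transcript = stt(audio_clip).strip()
    if not transcript:
        # Nothing intelligible was captured, so the LLM is not called.
        return TutorTurn("", "", b"", [])
    reply_text = llm(transcript)
    reply_audio = tts(reply_text)
    return TutorTurn(transcript, reply_text, reply_audio, lipsync(reply_audio))


# Usage with stand-in stages (real backends would replace these lambdas).
turn = run_turn(
    b"raw-audio",
    stt=lambda audio: " What is IoT? ",
    llm=lambda text: "Answer to: " + text,
    tts=lambda text: text.encode("utf-8"),
    lipsync=lambda audio: ["sil"],
)
```

Keeping the stages behind simple function signatures is one way to make each component replaceable without touching the rest of the loop.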

3.1. Virtual Environment

In the context of VR, the virtual environment represents the interactive 3D space where users navigate and engage with digital elements. This environment comprises meticulously designed objects, textures, lighting, and spatial features that contribute to a heightened sense of immersion and realism. The effectiveness of the VR experience heavily depends on the optimization of its visual and interactive components, ensuring that users remain fully engaged within the virtual space.

To enhance visual fidelity, performance, and compatibility, our system employs Unity’s Universal Render Pipeline (URP), which optimizes color accuracy, rendering efficiency, and graphical performance in VR applications. The adoption of the URP ensures that the developed virtual environment delivers high-quality visuals while maintaining smooth performance, a critical factor for sustained user immersion (see Figure 4). The system incorporates four NPCs, each with distinct identities, contributing to a more dynamic and interactive user experience. These NPCs are integrated into the environment to enable context-aware interactions, enhancing the sense of realism within the VR world.

Figure 4.

Example of the virtual environment.

3.2. Avatars

Avatar control plays a fundamental role in enhancing immersion, interaction, and overall user engagement in digital spaces. It encompasses key mechanisms such as embodiment, agency, body ownership, and self-location within avatars in virtual environments [46]. Users who control their avatars may experience avatar–self merging [47]. Additionally, shared avatar control among multiple users can impact the sense of agency and even contribute to motor skill restoration [48,49]. Furthermore, the visual fidelity and personalization of avatars significantly influence body ownership, presence, and emotional engagement, ultimately shaping user behavior and immersive experiences in virtual environments [50].

In our VR environment, all avatars are generated using the ReadyPlayerMe platform [51]. The application features two avatars, one representing the actual user, and the other functioning as a non-playable character (NPC) (see Figure 5). The NPC exhibits a distinct identity and behavioral pattern, enhancing the dynamism and interactivity of the virtual experience.

Figure 5.

The avatars used in the virtual environment. (a) The tutor avatar (NPC). (b) The user avatar.

The NPC loader script automates the instantiation and integration of avatars within the virtual environment. It manages the following key parameters that are essential for avatar configuration:

- Avatar URL—Defines the avatar’s digital asset;

- Animator Controller—Governs avatar motion and behavior;

- Load on Start—Ensures that avatars initialize automatically;

- Is Sitting Flag—Adjusts avatar positioning dynamically.

Automating these steps speeds up avatar instantiation and allows the avatars to be integrated smoothly into the metaverse ecosystem. Additionally, customized key configurations enhance accessibility, allowing users to move within the VR environment using various input methods such as WASD keys or directional arrows.
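The four loader parameters listed above can be pictured as a small configuration record. The sketch below is illustrative only: the class and function names, the example URL, and the state labels `"Sitting"` and `"Standing"` are assumptions, and the actual loader is a Unity script rather than Python.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NpcLoaderConfig:
    """Hypothetical mirror of the loader's four parameters."""
    avatar_url: str             # Avatar URL: where the avatar asset is fetched from
    animator_controller: str    # Animator Controller: governs motion and behavior
    load_on_start: bool = True  # Load on Start: initialize automatically
    is_sitting: bool = False    # Is Sitting flag: adjusts the initial posture

    def __post_init__(self) -> None:
        if not self.avatar_url:
            raise ValueError("avatar_url must not be empty")


def initial_pose(config: NpcLoaderConfig) -> str:
    """Pick the starting posture from the Is Sitting flag."""
    return "Sitting" if config.is_sitting else "Standing"


# Placeholder URL and controller name, for illustration only.
tutor = NpcLoaderConfig(
    avatar_url="https://example.com/avatars/tutor.glb",
    animator_controller="TutorAnimator",
    is_sitting=True,
)
```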

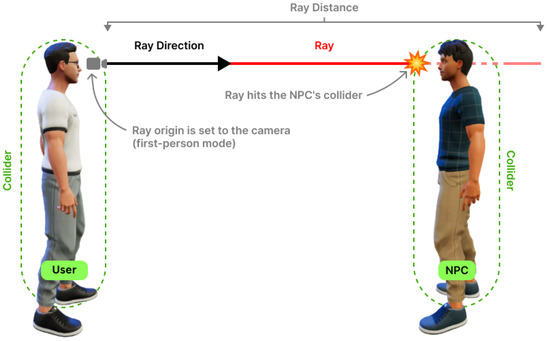

Raycasting-based interaction facilitates dynamic engagement between the user and the NPCs in the VR environment. As depicted in Figure 6, the system employs raycasting mechanics, where a ray is emitted from the user’s camera position toward the NPC. When the ray collides with the NPC’s collider, it triggers dialogue or interaction events. This technique ensures smooth and responsive user–NPC interactions, commonly used in 3D virtual environments for context-aware communication and immersive engagement.

Figure 6.

The use of raycasting to interact with NPCs.
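The geometry behind this raycasting test can be illustrated with a ray-sphere intersection. This is a simplified sketch under stated assumptions: the NPC collider is approximated by a sphere, and the function name and the `max_distance` parameter are hypothetical. In a game engine this check is normally delegated to the built-in physics raycast rather than written by hand.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def ray_hits_sphere(origin: Vec3, direction: Vec3, center: Vec3,
                    radius: float, max_distance: float) -> Optional[float]:
    """Return the distance to the first hit, or None if the ray misses."""
    length = math.sqrt(sum(c * c for c in direction))
    if length == 0.0:
        return None  # a zero-length direction defines no ray
    d = tuple(c / length for c in direction)        # unit direction
    oc = tuple(o - c for o, c in zip(origin, center))
    b = sum(x * y for x, y in zip(oc, d))           # projection of oc on the ray
    c = sum(x * x for x in oc) - radius * radius
    disc = b * b - c                                # discriminant of the quadratic
    if disc < 0.0:
        return None                                 # the ray's line misses the sphere
    root = math.sqrt(disc)
    t = -b - root                                   # nearest intersection
    if t < 0.0:
        t = -b + root                               # origin is inside the sphere
    if t < 0.0 or t > max_distance:
        return None                                 # behind the camera or out of range
    return t


# Camera at the origin looking along +z; NPC collider 5 units ahead.
hit = ray_hits_sphere((0, 0, 0), (0, 0, 1), (0, 0, 5), radius=1.0, max_distance=10.0)
if hit is not None:
    print("NPC targeted; dialogue can be triggered")
```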

To further enhance realism, the NPC integrates animation-driven behaviors synchronized with speech output, gestures, and contextual interactions. By aligning facial expressions, lip movements, and body gestures, the system ensures that the NPC delivers naturalistic responses, fostering more believable and immersive virtual experiences.

3.3. Adaptive LLM Deployment

To enable real-time, context-aware interactions, the system integrates a locally deployed LLM, selected based on the user’s available computational resources. Two model variants are used:

- llama3.2:3b-text-q8_0: A lightweight model optimized for text generation with lower computational overhead, making it suitable for devices with limited processing power.

- deepseek-r1:14b-qwen-distill-q4_K_M: A larger, more sophisticated model capable of generating complex, contextually rich responses that require higher system performance.

Supporting multiple model configurations lets the system adapt to varying hardware constraints while preserving conversation quality. Both models run offline, which protects user privacy and keeps all processing local.
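The selection between the two variants can be sketched as a simple threshold rule. The text does not state how the choice is made, so the criterion (free GPU memory) and the 10 GB default below are hypothetical placeholders that would need tuning on real hardware. Only the two model tags come from the text.

```python
LIGHT_MODEL = "llama3.2:3b-text-q8_0"
LARGE_MODEL = "deepseek-r1:14b-qwen-distill-q4_K_M"


def select_model(free_vram_gb: float, large_model_min_vram_gb: float = 10.0) -> str:
    """Pick a model tag from the available GPU memory.

    The threshold is an illustrative assumption, not a measured requirement.
    """
    if free_vram_gb < 0:
        raise ValueError("free_vram_gb must be non-negative")
    if free_vram_gb >= large_model_min_vram_gb:
        return LARGE_MODEL
    return LIGHT_MODEL


# A device reporting 6 GB of free memory falls back to the lightweight model.
chosen = select_model(free_vram_gb=6.0)
```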

3.3.1. Prompt Engineering and Role-Based Structuring

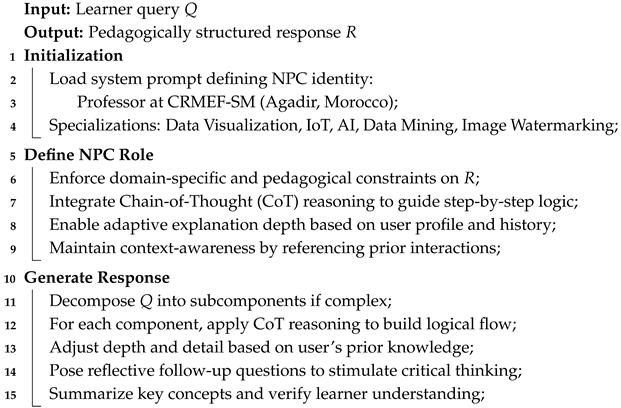

To ensure coherent and contextually relevant responses, the system employs a structured, role-based prompt design comprising two distinct components. As illustrated in Algorithm 1, the system prompt defines the NPC tutor’s identity as a professor at the Regional Center for Education and Training Professions (CRMEF-SM) in Agadir, Morocco, with expertise in data visualization, the Internet of Things (IoT), artificial intelligence (AI), educational data mining, and image watermarking. This role specification constrains responses to the intended pedagogical and disciplinary domains. To further enhance logical flow and instructional clarity, the prompt integrates a Chain-of-Thought (CoT) reasoning mechanism [52], enabling the NPC to decompose complex learner queries into structured, step-by-step explanations. This integration enables the NPC tutor to not only retrieve factual information but also actively construct coherent, pedagogically sound responses.

This methodology enhances pedagogical coherence by enabling the LLM to organize responses in a logically progressive manner, similar to how an expert educator would introduce and elaborate on concepts. It also supports adaptive complexity, allowing the tutor to dynamically adjust the depth and detail of explanations based on the user’s prior knowledge and interaction history. Furthermore, CoT reasoning fosters engagement and interactivity by guiding users through reasoning processes, thereby encouraging critical thinking and problem-solving rather than simply delivering direct answers. This structured methodology creates a more immersive and effective learning experience, reinforcing conceptual understanding through interactive dialogue.

This approach ensures that the NPC tutor behaves as an intelligent, context-aware instructional agent by integrating adaptive LLM selection, multimodal input processing, and Chain-of-Thought reasoning. The structured prompt design enables the model to retain the tutor’s established persona while dynamically reacting to user requests, increasing the realism and pedagogical efficacy of virtual learning interactions.

To illustrate this adaptive capability in practice, consider a learner asking the NPC tutor the following question: “What is Big Data?” For a beginner-level profile, the system prompt guides the LLM to generate a simplified explanation:

“Big Data is a term used to describe large volumes of structured and unstructured data that are collected, stored, and analyzed using advanced analytics techniques. In simple terms, big data refers to the vast amounts of information available on a wide variety of topics. This information can include customer preferences, product usage data, social media interactions, and more.”

For an advanced learner, the same query produces a more technical and detailed response:

“Big Data involves the collection and analysis of large amounts of structured or unstructured data from various sources. The typical architecture for processing Big Data involves Data Ingestion, Data Preprocessing, Data Analysis.”

This contrast demonstrates how the prompt structure and the integration of CoT allow the system to adapt explanations based on the background of the user, thus improving personalization and pedagogical relevance.
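A role-based prompt of this kind could be assembled as follows. This is a sketch under stated assumptions and does not reproduce the content of Algorithm 1. The persona and expertise list come from the description above, while the instruction wording, the `LEVEL_GUIDANCE` table, and the function name `build_messages` are hypothetical. The output uses the common system/user chat-message layout.

```python
from typing import Dict, List

# Persona details taken from the role description in the text.
TUTOR_ROLE = (
    "You are a professor at the Regional Center for Education and Training "
    "Professions (CRMEF-SM) in Agadir, Morocco."
)
TUTOR_EXPERTISE = [
    "data visualization",
    "the Internet of Things (IoT)",
    "artificial intelligence (AI)",
    "educational data mining",
    "image watermarking",
]

# Hypothetical wording: the per-level instructions are assumed for illustration.
LEVEL_GUIDANCE = {
    "beginner": "Use plain language, short sentences, and everyday examples.",
    "advanced": "Use precise technical terms and describe architectures and trade-offs.",
}


def build_messages(question: str, level: str = "beginner") -> List[Dict[str, str]]:
    """Assemble a system prompt (role + CoT + level) and the learner's question."""
    if level not in LEVEL_GUIDANCE:
        raise ValueError(f"unknown learner level: {level!r}")
    system_prompt = " ".join([
        TUTOR_ROLE,
        "Your areas of expertise are: " + ", ".join(TUTOR_EXPERTISE) + ".",
        "Only answer questions within these areas.",
        # Chain-of-Thought instruction: ask for an ordered, stepwise explanation.
        "Reason step by step and present the explanation as ordered steps.",
        LEVEL_GUIDANCE[level],
    ])
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": question.strip()},
    ]


messages = build_messages("What is Big Data?", level="advanced")
```

Only the level-specific sentence changes between learner profiles, which keeps the persona and the reasoning instruction constant across sessions.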

Algorithm 1.

Structured prompt design for controlling the behavior of the LLM NPC tutor.

3.3.2. Audio Extraction

User speech is recorded through a microphone input and stored as a Waveform Audio Format (WAV) file. This process utilizes a “voice recorder” component, which includes a serialized field to define the maximum duration of recordings. This constraint prevents memory overflows and optimizes application performance.
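The effect of the maximum-duration setting can be sketched with Python's standard `wave` module. This is an illustration, not the Unity component itself: the function name, the 16 kHz mono 16-bit format, and the 2-second cap in the usage lines are all assumptions.

```python
import io
import wave


def write_capped_wav(pcm: bytes, sample_rate: int, max_seconds: float,
                     channels: int = 1, sample_width: int = 2) -> bytes:
    """Encode raw PCM as WAV, discarding audio beyond max_seconds."""
    frame_size = channels * sample_width
    max_frames = int(sample_rate * max_seconds)
    usable = (len(pcm) // frame_size) * frame_size   # drop a trailing partial frame
    capped = pcm[: min(usable, max_frames * frame_size)]
    buffer = io.BytesIO()
    with wave.open(buffer, "wb") as wav:
        wav.setnchannels(channels)
        wav.setsampwidth(sample_width)
        wav.setframerate(sample_rate)
        wav.writeframes(capped)
    return buffer.getvalue()


# Three seconds of 16 kHz mono silence, capped to a 2-second clip.
three_seconds = bytes(3 * 16000 * 2)
clip = write_capped_wav(three_seconds, sample_rate=16000, max_seconds=2.0)
```

Capping the buffer before encoding bounds the memory used per recording regardless of how long the user speaks.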

3.3.3. Speech-to-Text Processing

The LLM, specifically LLaMA 3.2, processes only text-based input and cannot directly handle audio clips. Therefore, before a response can be generated, the audio input must be transcribed into text. This is achieved using Whisper [53], OpenAI’s neural network for automatic speech recognition (ASR), which is run locally. Once transcription is completed, the textual output is forwarded to the chat completion API, enabling coherent and dynamic NPC interactions.

3.3.4. Text-to-Speech (TTS) and Lip Synchronization

Once the LLM response has been converted to speech and playback begins, the NPC’s lip movements are synchronized with the phonetic structure of the speech, resulting in a realistic and immersive conversational experience. As shown in Figure 7, the system’s workflow outlines the end-to-end process from capturing user input to generating synchronized verbal and visual responses in real time.

Figure 7.

The workflow of the conversational system.

The NPC’s lip movements are synchronized with its speech output. There are two main components to this:

- Text-to-speech (TTS) conversion: The LLM-generated response is converted into an audio clip using the Kokoro model. The output is then assigned as the NPC’s audio source.

- Lip syncing via Oculus Lipsync:

  - Lip movements are dynamically adjusted in real time using visemes (visual representations of phonemes) to ensure that the NPC’s facial expressions match the spoken content.

  - As the audio plays, the NPC’s lips move accordingly, creating a realistic and immersive conversational experience.
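The viseme idea can be illustrated with a small lookup that groups phonemes sharing a mouth shape. This is not how Oculus Lipsync works internally, since that library derives viseme weights from the audio signal. The sketch assumes phoneme timings are already available, uses a partial table whose viseme labels follow the naming commonly documented for Oculus Lipsync (worth checking against the SDK reference), and falls back to the silence viseme for unmapped phonemes purely for brevity.

```python
from typing import List, Tuple

# Partial, illustrative table: consonants grouped by shared mouth shape.
PHONEME_TO_VISEME = {
    "p": "PP", "b": "PP", "m": "PP",
    "f": "FF", "v": "FF",
    "t": "DD", "d": "DD",
    "k": "kk", "g": "kk",
    "s": "SS", "z": "SS",
    "n": "nn", "l": "nn",
    "r": "RR",
}
SILENCE = "sil"


def viseme_track(phonemes: List[Tuple[str, int]]) -> List[Tuple[str, int]]:
    """Turn (phoneme, duration_ms) pairs into (viseme, duration_ms) keyframes.

    Consecutive phonemes that map to the same viseme are merged into one keyframe.
    """
    track: List[Tuple[str, int]] = []
    for phoneme, duration_ms in phonemes:
        viseme = PHONEME_TO_VISEME.get(phoneme, SILENCE)
        if track and track[-1][0] == viseme:
            # Same mouth shape as the previous keyframe: extend it.
            track[-1] = (viseme, track[-1][1] + duration_ms)
        else:
            track.append((viseme, duration_ms))
    return track


keyframes = viseme_track([("b", 100), ("m", 50), ("s", 200)])
```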

3.4. Design Trade-Offs and Technical Choices

The architecture presented in the previous sections reflects deliberate design decisions aimed at balancing system performance, realism, accessibility, and pedagogical coherence within a VR-based educational setting. In this subsection, we outline the key technical trade-offs that guided our implementation.

Language Model Selection: As discussed in the adaptive LLM deployment section, we adopted a dual-model strategy to support scalability across various hardware profiles. Lightweight models such as llama3.2:3b-text-q8_0 were chosen for deployment on devices with limited processing capacity, while larger models like deepseek-r1:14b-qwen-distill-q4_K_M were reserved for higher-performance configurations. This trade-off allowed us to maintain acceptable response latency and preserve conversational depth while enabling fully offline operation, which is essential for privacy and deployment in bandwidth-limited environments.

Rendering Engine and Avatar Design: We selected Unity’s Universal Render Pipeline (URP) to optimize visual fidelity and runtime performance in immersive environments. Although alternatives like Unreal Engine or Unity HDRP offer more advanced rendering features, they demand significantly higher computational resources. The URP provided the necessary balance, enabling smooth performance on Meta Quest 3 headsets and desktop setups. For avatar creation, ReadyPlayerMe offers cross-platform compatibility and expressive, stylized models, aligning with our goal of maintaining immersion without overloading system resources.

Speech Pipeline: For speech-to-text (STT), we used the OpenAI Whisper model locally due to its robust transcription performance, even in noisy environments. For text-to-speech (TTS), we used the Kokoro model, which was selected for its lightweight architecture, compatibility with real-time synthesis, and ability to produce natural, intelligible speech on local devices. Although more advanced cloud-based TTS systems offer greater expressiveness, Kokoro provides a favorable trade-off between performance, speed, and privacy, which is essential for educational use cases requiring offline operation. The TTS output is assigned as the NPC audio source and is synchronized with facial animation using viseme mapping through Oculus Lipsync to preserve immersion and realism.

Multimodal Input Support: For user–NPC interactions, we implemented a raycasting system that emits a virtual ray from the user’s camera to detect collisions with the NPC avatar. This mechanism enables lightweight and highly responsive interaction triggers without requiring external sensors or advanced hardware. The avatars were instantiated using the ReadyPlayerMe platform, with a custom loader script that manages parameters such as animation control, posture (sitting/standing), and initialization logic. This approach ensures compatibility across platforms while preserving a sense of embodiment and control within the virtual environment.
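The geometric idea behind such a raycast trigger can be illustrated outside the engine. The Python sketch below tests a ray from the camera against a bounding sphere around the NPC; the sphere approximation, the function name `ray_hits_sphere`, and the `max_distance` parameter are assumptions made for illustration, since in Unity the engine's physics raycast against the avatar's collider would perform this test.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def ray_hits_sphere(
    origin: Vec3,
    direction: Vec3,
    center: Vec3,
    radius: float,
    max_distance: float = math.inf,
) -> Optional[float]:
    """Return the distance to the first hit along the ray, or None on a miss."""
    length = math.sqrt(_dot(direction, direction))
    if length == 0.0:
        return None  # a zero-length direction does not define a ray
    unit = (direction[0] / length, direction[1] / length, direction[2] / length)

    # Solve |origin + t * unit - center|^2 = radius^2 for t.
    offset = _sub(origin, center)
    b = _dot(offset, unit)
    c = _dot(offset, offset) - radius * radius
    discriminant = b * b - c
    if discriminant < 0.0:
        return None  # the ray's line never touches the sphere

    root = math.sqrt(discriminant)
    t = -b - root
    if t < 0.0:
        t = -b + root  # origin is inside the sphere: use the exit point
    if t < 0.0 or t > max_distance:
        return None  # sphere is behind the camera or out of range
    return t
```

For a camera at the origin looking along +z at an NPC centered 5 units away with a 1-unit radius, the function returns a hit distance of 4 units; looking in any direction that misses the sphere returns `None`.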

Together, these choices reflect a broader design goal: an immersive, adaptable tutoring system that is responsive, preserves user privacy, and remains accessible across a range of devices and learning environments.

4. Results

4.1. Implementation Setup

The proposed architecture was deployed on a high-performance laptop to support VR functionality, real-time AI-driven interaction, and an immersive user experience. The hardware was an Asus ROG STRIX G15 G513RW (2022) with an AMD Ryzen 7 6800H processor (8 cores, 16 threads) and an NVIDIA GeForce RTX 3070 Ti GPU (8 GB GDDR6 VRAM, PCIe 4.0). The machine was equipped with 16 GB of DDR5 RAM and 2.5 TB of NVMe storage, which supported efficient data processing and high-quality graphical rendering.

The virtual environment was developed using Unity 6 (6000.0.33f1) LTS, with assets designed in Blender 4.3. VR integration was achieved through the Meta Quest 3, leveraging its advanced spatial tracking, 3D audio, and haptic feedback capabilities. AI-driven interactions were powered by an LLM, enabling real-time adaptive responses within the virtual learning space. A detailed breakdown of the technical specifications is provided in Table A1 and Table A2.

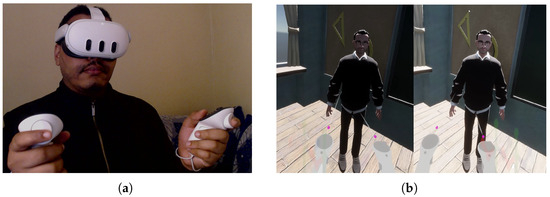

4.2. Use Case 1: Virtual Reality (VR) Interaction

In the VR scenario, learners interact with a simulated environment using the Meta Quest 3 headset, which facilitates a fully immersive and interactive educational experience. A defining aspect of the VR interaction model is its reliance on natural input modalities, such as hand tracking, gaze direction, and head movements, which replace conventional keyboard and mouse inputs. These intuitive interaction mechanisms allow users to navigate the environment, select objects, and engage in natural conversations with the NPC.

The spatial audio system embedded in the Meta Quest 3 enhances realism by dynamically adjusting sound based on the user’s position and movements, creating an authentic auditory experience that mimics real-world learning scenarios. The core component of this immersive setting is an AI-powered NPC tutor designed to simulate an interactive educational session. Upon entering the environment, the tutor introduces itself as a teacher.

The NPC tutor dynamically generates context-aware explanations tailored to the learner’s needs. The system provides adapted interactions by analyzing user queries and engagement patterns in real time. This adaptability enables a more engaging and student-centered approach than static educational material.
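One common way to keep an LLM's replies context-aware is to send a bounded window of recent dialogue together with each new query. The Python sketch below illustrates that idea only; the class `TutorDialogue`, the window size, and the role/content message layout (the chat format used by many LLM servers) are assumptions, and the text does not state how the implemented system manages conversational context.

```python
from collections import deque
from typing import Deque, Dict, List

Message = Dict[str, str]


class TutorDialogue:
    """Keeps the most recent turns so each request carries recent context."""

    def __init__(self, system_prompt: str, max_turns: int = 4) -> None:
        self.system_prompt = system_prompt
        # Each turn stores two messages: the learner's query and the tutor's reply.
        self._history: Deque[Message] = deque(maxlen=2 * max_turns)

    def build_messages(self, user_query: str) -> List[Message]:
        """Assemble the message list to send for the next query."""
        return [
            {"role": "system", "content": self.system_prompt},
            *self._history,
            {"role": "user", "content": user_query},
        ]

    def record(self, user_query: str, reply: str) -> None:
        """Store a completed turn; the oldest turn is dropped when full."""
        self._history.append({"role": "user", "content": user_query})
        self._history.append({"role": "assistant", "content": reply})
```

Bounding the window keeps prompt length, and therefore generation latency, roughly constant over a long session, at the cost of forgetting earlier turns.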

Figure 8 illustrates the user interaction in virtual reality mode: subfigure (a) shows the user engaging with the VR headset and controllers, while subfigure (b) provides a virtual environment perspective, displaying the avatar and controller representation for each eye.

Figure 8.

User interaction in virtual reality mode: (a) user engaging with the VR headset and controllers; (b) virtual environment perspective, showing the avatar and controller representation for each eye.

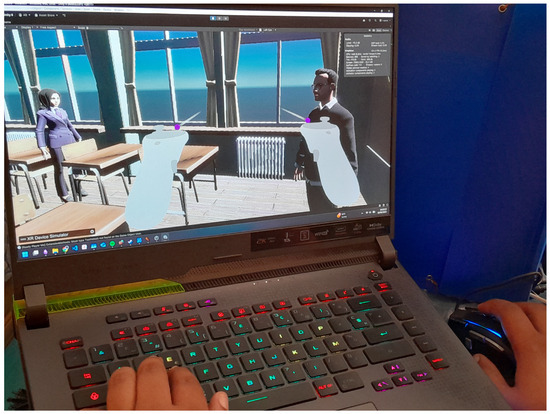

4.3. Use Case 2: Desktop Interaction

The desktop-based interaction mode provides a viable alternative for users without access to VR hardware, ensuring broader accessibility to the learning environment. In this scenario, users navigate the virtual space using traditional input devices such as a keyboard and mouse, engaging with the NPC tutor through typed input rather than natural voice commands or gesture-based interactions. Although the desktop version lacks the spatial immersion and embodied presence that VR provides, it preserves a high level of adaptability and responsiveness in real-time conversations. The NPC tutor uses the LLM to generate context-aware explanations, adapting its responses based on user queries, learning pace, and engagement patterns.

One key advantage of desktop mode is its stability and lower computational requirements than VR, making it suitable for a wider range of devices, including standard personal computers and laptops. Additionally, text-based interactions provide certain benefits, such as enabling users to review past responses more easily, facilitating precise queries, and reducing potential speech recognition errors that can occur in voice-driven systems.

This mode is particularly beneficial in environments where voice input is impractical, such as shared workspaces or libraries. Figure 9 illustrates the user interaction in desktop mode, showcasing how users engage with the system through traditional input devices.

Figure 9.

User interaction in desktop mode.

Finally, the desktop-based mode serves as a scalable option, complementing the VR experience by offering a more accessible yet functionally rich alternative. This dual-modality approach ensures that users with varying technological resources and preferences can engage with the learning platform effectively.

4.4. Evaluation Metrics and System Performance

To assess the effectiveness and efficiency of the proposed architecture, we evaluated key performance indicators across multiple dimensions, including system timing, user configuration choices, prompting efficiency, and error analysis. The evaluation was conducted in both desktop simulation mode and virtual reality (VR) mode on the Meta Quest 3, ensuring a comprehensive assessment of system behavior across different environments.

System timing metrics covered startup time, interaction latency, scene loading duration, and response delays. The system demonstrated stable performance across both the desktop and VR configurations. In desktop simulation mode, the system achieved an average loading time of 21.58 s. In contrast, direct deployment to the Meta Quest 3 headset resulted in a slightly shorter average loading time of 20.36 s. This difference of about 1.2 s suggests that running the system natively on the headset may reduce initialization overhead.
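The size of this difference can be made explicit with a short calculation. The Python sketch below uses only the two averages reported above; the function name `relative_reduction` is introduced here for illustration.

```python
# Average loading times reported in the text, in seconds.
DESKTOP_LOAD_S = 21.58
QUEST3_LOAD_S = 20.36


def relative_reduction(baseline_s: float, candidate_s: float) -> float:
    """Fraction by which the candidate is faster than the baseline."""
    return (baseline_s - candidate_s) / baseline_s


abs_diff_s = DESKTOP_LOAD_S - QUEST3_LOAD_S
rel = relative_reduction(DESKTOP_LOAD_S, QUEST3_LOAD_S)
print(f"{abs_diff_s:.2f} s faster ({rel:.1%})")
```

The headset build is about 1.22 s faster, or roughly 5.7% of the desktop loading time. Because only averages are given, without run counts or variance, this calculation cannot by itself show whether the gap is larger than run-to-run variation.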

Speech and audio processing latency also played a critical role in interaction fluidity. The text-to-speech (TTS) system required approximately 10 s to generate spoken responses from text input, while speech-to-text (STT) transcription took 2 s to process user speech.

Interaction delays were observed at two key moments: a 2 s freeze at the beginning of the recording phase and a 10 s delay during TTS conversion, temporarily disrupting the real-time flow of interaction.
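These figures can be combined into a rough per-turn latency budget. In the Python sketch below, the STT and TTS durations are the approximate values reported above, while the LLM generation time is left as a parameter because it is not reported; the 3 s value in the usage note is purely hypothetical.

```python
# Approximate stage durations reported in the text, in seconds.
STT_S = 2.0
TTS_S = 10.0


def turn_latency_s(llm_s: float, stt_s: float = STT_S, tts_s: float = TTS_S) -> float:
    """Total delay between the end of the user's speech and the NPC's reply."""
    return stt_s + llm_s + tts_s


def tts_share(llm_s: float) -> float:
    """Fraction of the per-turn delay spent in speech synthesis."""
    return TTS_S / turn_latency_s(llm_s)
```

Even with instantaneous text generation the turn takes about 12 s, and with a hypothetical 3 s of generation time speech synthesis still accounts for two thirds of a 15 s turn, which points to TTS as the first stage to optimize.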

Another factor affecting performance was prompting efficiency, which depended on the LLM chosen for chatbot responses. The selected model affected both the coherence and adaptability of the system’s responses. The efficiency of each model varied based on its computational demands and linguistic structuring capabilities.

To ensure an engaging and pedagogically effective interaction, the LLM-driven NPC’s response quality was analyzed in terms of contextual alignment and instructional relevance. Two distinct models were evaluated for prompting efficiency: LLaMA 3.2:3b, which is optimized for rapid text generation and produces structured, concise responses, and Deepseek-r1:14b, which is designed for in-depth explanations and delivers more detailed responses at the cost of higher computational complexity and increased latency. The choice of LLM played a significant role in balancing speed and depth, impacting how well the chatbot could adapt to user queries.
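One way to put numbers on the speed side of this trade-off is to read the timing fields that a local Ollama server returns with each non-streamed generation. The Python sketch below is an assumption about how such a measurement could be made, not a description of the procedure used in this study: the endpoint and the `eval_count`, `eval_duration`, and `total_duration` fields (durations in nanoseconds) belong to Ollama's REST API, while `generate` and `summarize_generation` are names introduced here.

```python
import json
import urllib.request
from typing import Any, Dict

# Default address of a locally running Ollama server.
OLLAMA_URL = "http://localhost:11434/api/generate"


def generate(model: str, prompt: str, url: str = OLLAMA_URL,
             timeout_s: float = 120.0) -> Dict[str, Any]:
    """Request one non-streamed completion and return the parsed JSON reply."""
    payload = json.dumps({"model": model, "prompt": prompt, "stream": False})
    request = urllib.request.Request(
        url,
        data=payload.encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=timeout_s) as response:
        return json.loads(response.read().decode("utf-8"))


def summarize_generation(result: Dict[str, Any]) -> Dict[str, float]:
    """Convert Ollama's nanosecond timings into tokens/s and total seconds."""
    tokens = float(result["eval_count"])
    eval_s = result["eval_duration"] / 1e9
    return {
        "tokens": tokens,
        "tokens_per_s": tokens / eval_s if eval_s > 0 else 0.0,
        "total_s": result["total_duration"] / 1e9,
    }
```

Calling `summarize_generation(generate(tag, prompt))` for each of the two model tags on the same set of prompts would give comparable throughput and latency figures; it requires a running Ollama server with both models pulled.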

To identify system limitations, a comprehensive error analysis was conducted, focusing on audio processing, system stability, and speech recognition accuracy.

In terms of audio processing, the TTS module produced no observable errors during testing, and the Kokoro model delivered consistently intelligible speech synthesis.

However, STT transcription exhibited minor errors, which we attribute to the limited capacity of the locally deployed Whisper model. Despite this limitation, the system recognized the majority of speech inputs with acceptable accuracy. Table 3 summarizes the system performance metrics across both VR and desktop modes.
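Transcription errors of this kind are commonly quantified with the word error rate (WER), the word-level edit distance between a reference transcript and the recognizer's output divided by the reference length. The Python sketch below shows that standard computation; it is offered as a way such errors could be measured in future evaluations, and no WER values are reported in this study.

```python
from typing import List


def _tokens(text: str) -> List[str]:
    """Lowercase and split on whitespace (a deliberately simple normalization)."""
    return text.lower().split()


def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the reference length."""
    ref = _tokens(reference)
    hyp = _tokens(hypothesis)
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # previous[j] holds the edit distance between the processed part of ref
    # and the first j words of hyp.
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = previous[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = previous[j] + 1
            insertion = current[j - 1] + 1
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1] / len(ref)
```

For example, if the learner says "what is a virtual ray" and the recognizer returns "what is virtual ray", one of five reference words is missing, giving a WER of 0.2.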

Table 3.

System performance metrics.

Through this performance evaluation, we identified system bottlenecks and optimization possibilities, ensuring that the LLM-based NPC could provide an engaging, real-time learning experience with minimal issues.

4.5. Findings and Observations

The evaluation results indicate that the system effectively delivers immersive and interactive learning experiences while maintaining stable performance across different modalities. The VR version demonstrated strong potential for enhanced engagement, as its natural voice and gesture-based interactions are inherently more intuitive compared to traditional text-based input. This aligns with findings from [54], who reported that VR training enhances learning efficacy and provides a heightened sense of presence in mechanical assembly tasks. A recent study has also confirmed that VR-based environments promote increased user engagement, as they offer more immersive experiences that foster active learning [20].

However, the increased complexity of rendering and audio processing introduced minor latency issues and higher system load, which were observed during performance testing. These challenges are consistent with prior research highlighting the need for continuous optimization in VR-based educational platforms [55]. The desktop version, while lacking the full immersion of VR, provides a stable and reliable alternative with efficient interaction capabilities through text input and traditional navigation methods. This observation is supported by [45], who introduced VREd, a virtual reality-based classroom using Unity3D WebGL, highlighting that, while VR offers immersive experiences, desktop platforms remain practical and accessible for online education.

The prompting efficiency analysis demonstrated that the LLM-based NPC effectively generates context-aware responses, ensuring adaptive and dynamic interactions. However, the absence of direct user testing means that further validation is required to assess real-world engagement levels and usability. Additionally, audio-related challenges in VR suggest the need for future improvements in speech recognition accuracy and system optimization to reduce potential errors.

From a technical perspective, VR’s customizable avatars, immersive learning spaces, and spatial interactions contribute to an improved educational experience. While the desktop version is a stable and accessible alternative, its capabilities remain limited compared to VR’s rich and immersive interactions. This is supported by a study on VR- and AR-assisted STEM (Science, Technology, Engineering, and Mathematics) learning, which highlighted the unique benefits of immersive technologies in engaging students and improving learning outcomes [56].

Overall, the findings suggest that VR-based learning environments offer transformative educational experiences by bridging the gap between digital and physical interactions. While the desktop version remains a functional alternative, the immersive nature of VR fosters deeper learning engagement, natural interaction, and a more effective simulation of real-world educational scenarios.

Future research will focus on enhancing the performance of the system to support more natural and effective interactions in immersive learning environments. A central objective will be to deploy the system in authentic educational settings to assess its practical effectiveness and evaluate its impact on learning outcomes and user engagement through empirical studies. Technical refinements will include improving speech recognition accuracy to facilitate more precise and responsive communication, thereby enhancing the overall user experience. In parallel, efforts will be directed toward optimizing system performance by mitigating latency and reducing computational overhead to ensure smoother performance. Additionally, innovative strategies will be investigated to advance avatar interactivity, with the objective of creating more adaptive and responsive virtual agents capable of accommodating a wider range of user behaviors and inputs. Furthermore, future work will explore the scalability of the system for multi-user classroom settings and its adaptability to lower-end hardware, with the aim of supporting deployment in underserved educational environments.

5. Conclusions

In conclusion, the proposed architecture demonstrates significant potential for revolutionizing educational interactions by providing immersive and adaptive learning experiences via VR and desktop modalities. The evaluation results indicate that VR environments are effective owing to their natural interaction models and immersive features. The desktop version, although less immersive, serves as a stable alternative, ensuring broader accessibility while maintaining effective educational interaction. The integration of an LLM to generate context-aware responses plays a critical role in delivering personalized learning experiences, ensuring that the system is tailored to individual learner needs.

Future work will address system performance and interactivity to support more natural, effective immersive learning. Real-world deployment will assess its impact on engagement and learning outcomes. Furthermore, challenges such as system latency and processing demands must be addressed to further optimize performance, especially in VR settings.

Author Contributions

Writing—review and editing, Writing—original draft, Validation, Supervision, Resources, Project administration, Methodology, Conceptualization, and Funding acquisition, M.E.H.; Writing—review and editing, Visualization, Methodology, and Conceptualization, T.A.B.; Software, Visualization, and Writing—review and editing, A.B.; Writing—original draft, Visualization, Software, and Conceptualization, H.A.N.; Writing—review and editing and Validation, H.E.A.; Writing—review and editing, Writing—original draft, Validation, and Methodology, Y.E.-S. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Ministry of Higher Education, Scientific Research, and Innovation; the Digital Development Agency (DDA); and the CNRST of Morocco (Al-Khawarizmi program, Project 22).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| AI | Artificial intelligence |
| AR | Augmented reality |
| LLM | Large language model |
| MR | Mixed reality |
| NLP | Natural language processing |
| NPC | Non-playable character |
| STT | Speech-to-text |
| TTS | Text-to-speech |
| URP | Universal Render Pipeline |
| VR | Virtual reality |
| XR | Extended reality |

Appendix A

Table A1.

Hardware configuration.

| Component | Details |
|---|---|
| Model | Data |
| CPU | AMD Ryzen 7 6800H @ 3.2 GHz, 8 cores, 16 logical processors, 16M Cache |
| GPU | NVIDIA GeForce RTX 3070 Ti (Laptop, 150 W), 8 GB GDDR6, PCIe 4.0 x16 |
| RAM | 16 GB DDR5 @ 4800 MHz |
| Storage | 2.5 TB NVMe |
| OS | Windows 11 Pro 64 bits + WSL (Ubuntu 24.04.1 LTS) |
| Engine | Unity 6 (6000.0.33f1) LTS |
| Modeling | Blender 4.3 |
| XR | Meta Quest 3 |

Appendix B

Table A2.

Software configuration.

| Category | Library/Tool | Version |
|---|---|---|
| Scripting Languages | C#, Python | |
| Unity Libraries | ReadyPlayerMe Avatar and Character Creator | 7.3.1 |
| | XR (VR integration) | |
| | XR Plugin Management | 4.5.0 |
| | XR Legacy Input Helpers | 2.1.12 |
| | XR Interaction Toolkit | |
| | OpenXR Plugin | |
| | Oculus XR Plugin | |
| | Sentis + Whisper (speech-to-text) | 2.1.1 |
| | Oculus Lipsync Unity Plugin | 29.0.0 |
| | Classroom (edited with Blender 4.3) | 2.1.1 |
| WSL Libraries | PyTorch | 2.6.0 |
| | Ollama | 0.4.7 |
| | tinyllama | latest |
| | llama3.2 | 3b-text-q8_0 |
| | deepseek-r1 | 14b-qwen-distill-q4_K_M |

References

- Shen, J.; Zhou, X.; Wu, W.; Wang, L.; Chen, Z. Worldwide overview and country differences in metaverse research: A bibliometric analysis. Sustainability 2023, 15, 3541.

- Moradi, Y.; Baghaei, R.; Feizi, A.; HajiAliBeigloo, R. Challenges of the sudden shift to asynchronous virtual education in nursing education during the COVID-19 pandemic: A qualitative study. Nurs. Midwifery Stud. 2022, 11, 44–50.

- Kemec, A. From reality to virtuality: Re-discussing cities with the concept of the metaverse. Int. J. Manag. Account. 2022, 4, 12–20.

- Fei, Q. Innovation and Development in the Metaverse under the Digital Economy—Takes Tencent as an Example. BCP Bus. Manag. 2023, 36, 106–115.

- Wang, Y.; Su, Z.; Zhang, N.; Xing, R.; Liu, D.; Luan, T.; Shen, X. A Survey on Metaverse: Fundamentals, Security, and Privacy. IEEE Commun. Surv. Tutor. 2023, 25, 319–352.

- Argote, D. Immersive environments, Metaverse and the key challenges in programming. Metaverse Basic Appl. Res. 2022, 19, 6.

- Park, S.; Kim, Y. A metaverse: Taxonomy, components, applications, and open challenges. IEEE Access 2022, 10, 4209–4251.

- Curtis, R.; Bartel, B.; Ferguson, T.; Blake, H.; Northcott, C.; Virgara, R.; Maher, C. Improving user experience of virtual health assistants: Scoping review. J. Med. Internet Res. 2021, 23, e31737.

- Ait Baha, T.; El Hajji, M.; Es-Saady, Y.; Fadili, H. The power of personalization: A systematic review of personality-adaptive chatbots. SN Comput. Sci. 2023, 4, 661.

- Owens, D.; Mitchell, A.; Khazanchi, D.; Zigurs, I. An empirical investigation of virtual world projects and metaverse technology capabilities. ACM SIGMIS Database Database Adv. Inf. Syst. 2011, 42, 74–101.

- Bokyung, K.; Nara, H.; Eunji, K. Educational applications of metaverse: Possibilities and limitations. J. Educ. Eval. Health Prof. 2022, 18, 32.

- Hatami, M.; Qu, Q.; Chen, Y.; Kholidy, H.; Blasch, E.; Ardiles-Cruz, E. A Survey of the Real-Time Metaverse: Challenges and Opportunities. Future Internet 2024, 16, 379.

- Mystakidis, S. Metaverse. Encyclopedia 2022, 2, 486–497.

- Wang, H.; Ning, H.; Lin, Y.; Wang, W.; Dhelim, S.; Farha, F.; Ding, J.; Daneshm, M. A Survey on the Metaverse: The State-of-the-Art, Technologies, Applications, and Challenges. IEEE Internet Things J. 2023, 10, 14671–14688.

- Dincelli, E.; Yayla, A. Immersive virtual reality in the age of the Metaverse: A hybrid-narrative review based on the technology affordance perspective. J. Strateg. Inf. Syst. 2022, 31, 101717.

- Grande, R.; Albusac, J.; Vallejo, D.; Glez-Morcillo, C.; Castro-Schez, J. Performance Evaluation and Optimization of 3D Models from Low-Cost 3D Scanning Technologies for Virtual Reality and Metaverse E-Commerce. Appl. Sci. 2024, 14, 6037.

- Goel, R.; Baral, S.; Mishra, T.; Jain, V. Augmented and Virtual Reality in Industry 5.0. Augmented and Virtual Reality Series, Volume 2; De Gruyter: Berlin, Germany, 2023; ISBN 9783110789997.

- Zhang, B.; Zhu, J.; Su, H. Toward the third generation artificial intelligence. Sci. China Inf. Sci. 2023, 66, 121101.

- Ramadan, Z.; Ramadan, J. AI avatars and co-creation in the metaverse. Consum. Behav. Tour. Hosp. 2025; ahead-of-print. Available online: https://www.emerald.com/insight/content/doi/10.1108/cbth-07-2024-0246/full/html (accessed on 18 January 2025).

- Agrati, L. Tutoring in the metaverse. Study on student-teachers’ and tutors’ perceptions about NPC tutor. Front. Educ. 2023, 8, 1202442.

- Pellas, N.; Mystakidis, S.; Kazanidis, I. Immersive Virtual Reality in K-12 and Higher Education: A systematic review of the last decade scientific literature. Virtual Real. 2021, 25, 835–861.

- Acevedo, P.; Magana, A.; Benes, B.; Mousas, C. A Systematic Review of Immersive Virtual Reality in STEM Education: Advantages and Disadvantages on Learning and User Experience. IEEE Access 2024, 12, 189359–189386.

- Gungor, A.; Kool, D.; Lee, M.; Avraamidou, L.; Eisink, N.; Albada, B.; Kolk, K.; Tromp, M.; Bitter, J. The Use of Virtual Reality in a Chemistry Lab and Its Impact on Students’ Self-Efficacy, Interest, Self-Concept and Laboratory Anxiety. Eurasia J. Math. Sci. Technol. Educ. 2022, 18, em2090.

- Halabi, O. Immersive virtual reality to enforce teaching in engineering education. Multimed. Tools Appl. 2020, 79, 2987–3004.

- Ahuja, A.; Polascik, B.; Doddapaneni, D.; Byrnes, E.; Sridhar, J. The digital metaverse: Applications in artificial intelligence, medical education, and integrative health. Integr. Med. Res. 2023, 12, 100917.

- Huyen, N. Fostering Design Thinking mindset for university students with NPCs in the metaverse. Heliyon 2024, 10, e34964.

- Won, M.; Ungu, D.; Matovu, H.; Treagust, D.; Tsai, C.; Park, J.; Mocerino, M.; Tasker, R. Diverse approaches to learning with immersive Virtual Reality identified from a systematic review. Comput. Educ. 2023, 195, 104701.

- Kloiber, S.; Settgast, V.; Schinko, C.; Weinzerl, M.; Fritz, J.; Schreck, T.; Preiner, R. Immersive analysis of user motion in VR applications. Vis. Comput. 2020, 36, 1937–1949.

- Radianti, J.; Majchrzak, T.; Fromm, J.; Wohlgenannt, I. A systematic review of immersive virtual reality applications for higher education: Design elements, lessons learned, and research agenda. Comput. Educ. 2020, 147, 103778.

- Song, Y.; Wu, K.; Ding, J. Developing an immersive game-based learning platform with generative artificial intelligence and virtual reality technologies—“LearningverseVR”. Comput. Educ. X Real. 2024, 4, 100069.

- Campitiello, L.; Beatini, V.; Di Tore, S. Non-player Character Smart in Virtual Learning Environment: Empowering Education Through Artificial Intelligence. In Artificial Intelligence with and for Learning Sciences. Past, Present, and Future Horizons; Springer: Cham, Switzerland, 2024; pp. 131–137.

- Chodvadiya, C.; Solanki, V.; Singh, K. Intelligent Virtual Worlds: A Survey of the Role of AI in the Metaverse. In Proceedings of the 2024 3rd International Conference for Innovation in Technology (INOCON), Bangalore, India, 1–3 March 2024; pp. 1–6. Available online: https://ieeexplore.ieee.org/abstract/document/10511825 (accessed on 21 January 2025).

- Pariy, V.; Lipianina-Honcharenko, K.; Brukhanskyi, R.; Sachenko, A.; Tkachyk, F.; Lendiuk, D. Intelligent Verbal Interaction Methods with Non-Player Characters in Metaverse Applications. In Proceedings of the 2023 IEEE 5th International Conference on Advanced Information and Communication Technologies (AICT), Lviv, Ukraine, 21–25 November 2023; pp. 67–71. Available online: https://ieeexplore.ieee.org/abstract/document/10452688 (accessed on 2 February 2025).

- Kim, J.; Lee, J.; Kim, S. Navigating Non-Playable Characters Based on User Trajectories with Accumulation Map and Path Similarity. Symmetry 2020, 12, 1592.

- Moon, J. Reviews of Social Embodiment for Design of Non-Player Characters in Virtual Reality-Based Social Skill Training for Autistic Children. Multimodal Technol. Interact. 2018, 2, 53.

- Xu, H. A Future Direction for Virtual Reality Language Learning Using Dialogue Generation Systems. J. Educ. Humanit. Soc. Sci. 2022, 2, 17–23.

- Huynh-The, T.; Pham, Q.; Pham, X.; Nguyen, T.; Han, Z.; Kim, D. Artificial intelligence for the metaverse: A survey. Eng. Appl. Artif. Intell. 2023, 117, 105581.

- Sumon, R.; Uddin, S.; Akter, S.; Mozumder, M.; Khan, M.; Kim, H. Natural Language Processing Influence on Digital Socialization and Linguistic Interactions in the Integration of the Metaverse in Regular Social Life. Electronics 2024, 13, 1331.

- Li, B.; Xu, T.; Li, X.; Cui, Y.; Bian, X.; Teng, S.; Ma, S.; Fan, L.; Tian, Y.; Wang, F. Integrating Large Language Models and Metaverse in Autonomous Racing: An Education-Oriented Perspective. IEEE Trans. Intell. Veh. 2024, 9, 59–64.

- Kalyan, K. A survey of GPT-3 family large language models including ChatGPT and GPT-4. Nat. Lang. Process. J. 2024, 6, 100048.

- Grattafiori, A.; Dubey, A.; Jauhri, A.; Pandey, A.; Kadian, A. The Llama 3 Herd of Models. arXiv 2024, arXiv:2407.21783.

- Sethiya, N.; Maurya, C. End-to-End Speech-to-Text Translation: A Survey. Comput. Speech Lang. 2025, 90, 101751.

- Kumar, Y.; Koul, A.; Singh, C. A deep learning approaches in text-to-speech system: A systematic review and recent research perspective. Multimed. Tools Appl. 2023, 82, 15171–15197.

- Guan, J.; Zhang, Z.; Zhou, H.; Hu, T.; Wang, K.; He, D.; Feng, H.; Liu, J.; Ding, E.; Liu, Z.; et al. StyleSync: High-Fidelity Generalized and Personalized Lip Sync in Style-based Generator. arXiv 2023, arXiv:2305.05445.

- Rahman, R.; Islam, M. VREd: A Virtual Reality-Based Classroom for Online Education Using Unity3D WebGL. arXiv 2023, arXiv:2304.10585.

- Boban, L.; Boulic, R.; Herbelin, B. An embodied body morphology task for investigating self-avatar proportions perception in Virtual Reality. IEEE Trans. Vis. Comput. Graph. 2025, 31, 3077–3086.

- Müsseler, J.; Salm-Hoogstraeten, S.; Böffel, C. Perspective taking and avatar-self merging. Front. Psychol. 2022, 13, 714464.

- Fribourg, R.; Argelaguet, F.; Lécuyer, A.; Hoyet, L. Avatar and sense of embodiment: Studying the relative preference between appearance, control and point of view. IEEE Trans. Vis. Comput. Graph. 2020, 26, 2062–2072.

- Cha, H.; Kim, B.; Joo, H. Pegasus: Personalized generative 3d avatars with composable attributes. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 1072–1081.

- Waltemate, T.; Gall, D.; Roth, D.; Botsch, M.; Latoschik, M. The impact of avatar personalization and immersion on virtual body ownership, presence, and emotional response. IEEE Trans. Vis. Comput. Graph. 2018, 24, 1643–1652.

- Altundas, S.; Karaarslan, E. Cross-platform and Personalized Avatars in the Metaverse: Ready Player Me Case. In Digital Twin Driven Intelligent Systems and Emerging Metaverse; Springer: Berlin/Heidelberg, Germany, 2023; pp. 317–330.

- Zhang, Z.; Zhang, A.; Li, M.; Smola, A. Automatic Chain of Thought Prompting in Large Language Models. arXiv 2022, arXiv:2210.03493.

- Radford, A.; Kim, J.; Xu, T.; Brockman, G.; McLeavey, C.; Sutskever, I. Robust Speech Recognition via Large-Scale Weak Supervision. arXiv 2022, arXiv:2212.04356.

- Lin, W.; Chen, L.; Xiong, W.; Ran, K.; Fan, A. Measuring the Sense of Presence and Learning Efficacy in Immersive Virtual Assembly Training. arXiv 2023, arXiv:2312.1038.

- Lysenko, S.; Kachur, A. Challenges Towards VR Technology: VR Architecture Optimization. In Proceedings of the 2023 13th International Conference on Dependable Systems, Services and Technologies (DESSERT), Athens, Greece, 13–15 October 2023; pp. 1–9. Available online: https://ieeexplore.ieee.org/abstract/document/10416538 (accessed on 14 February 2025).

- Jiang, H.; Zhu, D.; Chugh, R.; Turnbull, D.; Jin, W. Virtual reality and augmented reality-supported K-12 STEM learning: Trends, advantages and challenges. Educ. Inf. Technol. 2025, 30, 12827–12863.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).