Abstract

Information availability through the web has been both a challenge and an asset for healthcare support, as evidence-based information coexists with unsupported claims. The emergence of artificial intelligence (AI) may either worsen or improve this situation. The aim of the present study was to compare the quality assessment of online dietary weight loss information conducted by an AI assistant (ChatGPT-4.5) with that conducted by health professionals. To this end, 177 webpages publishing dietary advice on weight loss were retrieved from the web and assessed by ChatGPT-4.5 and by dietitians using (1) a validated instrument (DISCERN) and (2) a self-made scale based on official guidelines for weight management. The webpages were also assessed with a custom scoring system developed by ChatGPT. Analysis revealed no significant differences in quantitative quality scores among human raters, ChatGPT-4.5, and the AI-derived system (p = 0.528). In contrast, statistically significant differences were found among the three content accuracy scores (p < 0.001), with scores assigned by ChatGPT-4.5 being higher than those assigned by humans (all p < 0.001). Our findings suggest that ChatGPT-4.5 could complement human experts in evaluating online weight loss information when using a validated instrument such as DISCERN. However, further research is needed before any recommendations can be made.

1. Introduction

As the World Wide Web has become an integral part of everyday life, most people consciously consider it their first choice when seeking information [1]. Moreover, a growing number of people with health conditions turn to the Internet for relevant information instead of seeking it from healthcare professionals [2]. A recent Eurostat statistical analysis indicated that an increasing number of Europeans use the Internet to retrieve health-related information, with the latest update (2024) showing that 58% of the total population of the European Union did so [3]. Several factors drive this preference for online media when seeking health information, including easy access to and availability of a broad array of updated content, interactivity among patients or healthcare providers, affordability, and anonymity [4].

However, a considerable body of literature has raised concerns about the quality of web-based health information [1,5]. At the same time, misinformation retrieved from the Internet significantly shapes consumers' health behavior and their final decisions [1]. This can be attributed to the difficulty the public faces in objectively evaluating published content, since numerous factors, such as design, aesthetics, and a preference for easily understandable content, affect their critical appraisal skills [6]. Recent systematic reviews of the quality of online nutritional information have likewise documented the risk of encountering inaccurate information that does not align with official nutrition guidelines [7,8]. In these reviews, most of the nutritional content published on the web was of poor to fair quality, and only 15 to 40% of online dietary information was congruent with official guidelines. These results held regardless of the assessment method used in each study.

To date, the quality and/or accuracy of health-related information has been assessed with either validated instruments or self-constructed tools reflecting the guidelines for each health condition [8]. The most widely used validated instrument is DISCERN, which assesses online health-related content [9]. The EQIP (Ensuring Quality Information for Patients) tool [10], the JAMA (Journal of the American Medical Association) benchmarks [11], and more recently developed instruments such as the QUEST (Quality Evaluation Scoring Tool) questionnaire [12] serve the same purpose. However, assessing Internet health information with these means is challenging for ordinary users, since few of these tools are practical and easy to use [13]. As for the self-constructed tools used by research teams in information evaluation studies, the methodology is broadly similar: the management guidelines are translated into separate questionnaire items, each scored according to whether the specific guideline is present and accurately presented in the online content [14,15,16].

With recent developments in artificial intelligence (AI), AI assistants, and more specifically Chat Generative Pre-trained Transformer (ChatGPT), have been used in some cases to assess online health-related information [17,18]. In these studies, ChatGPT's assessments were compared with those of humans, with mixed results. When ChatGPT and humans used the DISCERN instrument to assess online sources on shock wave therapy as a treatment for erectile dysfunction, statistically significant differences were observed in total DISCERN scores and in 6 of 16 (37.5%) DISCERN questions, with ChatGPT (March 2023 version) rating the quality of the information higher than humans did [17]. In contrast, human assessors and ChatGPT-4 produced comparable results when identifying and quantifying false or misleading claims made by practitioners of pseudoscientific therapies registered with the Complementary and Natural Healthcare Council in the United Kingdom [18]. To our knowledge, no study has so far used AI assistants to develop their own tools for information quality assessment.

ChatGPT is a large language model (LLM) developed by OpenAI and built upon the Transformer architecture, which enables it to understand and generate text closely resembling human communication [19]. Its functionality stems from extensive pre-training on massive text corpora from diverse sources, including scientific articles, books, and websites, giving the final model advanced capabilities in context comprehension and coherent response generation [20]. Unlike traditional search engines, which merely retrieve information, ChatGPT can process and evaluate content through its neural architecture, responding flexibly to specific instructions (prompts) [21]. These characteristics make it potentially valuable for assessing the quality of nutritional information, as it can analyze content, identify inaccuracies, and compare it against evidence-based guidelines when provided with appropriate evaluation parameters [22]. As an assessment tool, it could offer a systematic and efficient method for evaluating the quality of online nutritional information, complementing traditional assessment approaches [23].

Given that obesity has reached pandemic proportions [24] and that individuals seem to rely on online sources to guide their diet-related decisions [25], researchers have started to investigate the quality of weight loss information available on the Internet [1]. Existing data on weight loss information in English-, Spanish-, and German-language websites show that the proportion of unsubstantiated claims is rather high, ranging from 54 to 94% [26,27,28]. These results were produced with traditional, time-consuming manual assessment methods such as validated tools or researcher-constructed scales. In light of the emergence of AI assistants, the primary aim of the present study was to assess the quality and/or accuracy of weight loss information published on Greek websites using ChatGPT-4.5 and to compare the results with human-generated assessments, using widely used tools and methodologies (i.e., the DISCERN instrument and a self-constructed checklist). A secondary aim was to compare an AI custom scoring system for weight loss information assessment with the above.

2. Materials and Methods

2.1. Study Design

To address our objectives, we first retrieved Greek webpages publishing dietary advice on weight loss and then assessed this information. The assessment was made by both humans and an AI assistant, namely ChatGPT-4.5 (February 2025 update), using standard methods (a validated instrument or a methodology used in previous research studies) and a new one. Each webpage was assessed five times: twice by humans (once with the DISCERN instrument and once with a self-constructed checklist) and three times by ChatGPT-4.5 (once with the DISCERN instrument, once with the self-constructed checklist, and once with a tool generated by ChatGPT-4.5). Three distinct prompt strategies were used for the AI assessments of the dietary weight loss information, each tailored to one of these structured assessment frameworks.

2.2. Retrieval of Webpages to Be Assessed

To retrieve webpages publishing dietary advice on weight loss, a set of relevant keywords was first compiled by eliciting search queries from 15 individuals, who were asked to indicate the terms they would use in an online search. In addition, Google’s autocomplete function was applied to the most prominent keywords among the individuals’ queries, “diet” and “weight loss”. In line with this study’s aim, all queries (those produced by the individuals and those from Google’s autocomplete) were in Greek. The Google search engine was chosen because, at that time, it was the most used search engine in Greece [29]. Similarly, based on popularity, Google Chrome was chosen as the web browser for the searches [30]. Because our aim was to assess webpages with dietary advice on weight loss management, and not information on other methods (e.g., physical activity), we excluded queries that (a) identified specific fad diets (e.g., the Zone diet), (b) focused on specific foods (e.g., eating a specific soup every day to lose weight), (c) introduced dietary supplements, or (d) focused mainly on exercise as a weight management approach, leaving a total of 19 queries.

From 13 February to 1 March 2025, the first two (most visited) search engine result pages (SERPs) of the Google search engine [31] for each query were saved as PDF files with active hyperlinks, because page rankings on search engines change over time [32]. The 417 pages initially collected were screened against our objective of evaluating freely and easily accessible written content targeting a lay audience. Thus, we excluded pages that (1) were videos, (2) were duplicates, (3) were non-functional hyperlinks, (4) redirected readers via a link to another webpage, (5) were advertisements, (6) had irrelevant content, (7) were meant for professionals (e.g., a scientific journal), (8) aimed to sell a product, or (9) had restricted access, either because registration or payment was required. After duplicates were removed, all remaining pages were unique in content, although two separate pages could be hosted on the same website domain. Some domain examples are www.dietlist.gr, www.foodforhealth.gr, www.geonutrition.gr, www.healthstories.gr, www.runningmagazine.gr, and www.mynutrihealth.gr. A total of 177 webpages were retained for assessment. To ensure homogeneity in assessment procedures, the content of each of the 177 webpages was saved into a Word document before it was given to the human raters and the AI assistant. Each document was limited to the title and main text of the webpage’s article on weight loss, plus additional information about the publication (i.e., author, references, and publication date) where publicly available. The documents did not include any secondary information that appeared on a webpage (e.g., advertisements, navigation bars, tags, or hyperlinks to other websites or external sections).

2.3. Existing Methods Used for Quality Information Assessment

The two methods most widely used in dietary research were applied to assess the accuracy and quality of online nutritional information [8]. First, the DISCERN instrument, a validated tool for evaluating online health information [9] that has been used in previous studies [33,34,35], was implemented. This instrument comprises 16 items designed to assess the quality of information presented on a webpage and is structured into three sub-sections: reliability (items 1–8), accuracy (items 9–15), and a single item (item 16) evaluating the overall quality of the webpage. Each item is scored on a 5-point Likert-type scale, from 1 (no agreement with the statement) to 5 (full agreement with the statement) [36], giving a total score from 16 to 80, with higher scores indicating better quality. DISCERN scores can also be categorized into five qualitative levels of overall quality: (1) excellent (scores from 64 to 80), (2) good (52 to 63), (3) fair (41 to 51), (4) poor (30 to 40), and (5) very poor (16 to 29) [37].
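The DISCERN totals and quality bands above can be expressed as a short Python sketch. The function and constant names are hypothetical, and treating each band's lower bound as a cut-off (so that averaged, non-integer totals such as 51.5 fall into exactly one band) is our assumption; the published bands are defined on whole numbers.

```python
# (lower bound, label) pairs taken from the five DISCERN quality levels.
# Using lower bounds as cut-offs is an assumption for non-integer totals.
DISCERN_BANDS = [
    (64, "excellent"),
    (52, "good"),
    (41, "fair"),
    (30, "poor"),
    (16, "very poor"),
]


def discern_total(item_scores: list[int]) -> int:
    """Sum the 16 DISCERN item scores (each 1-5), giving a total of 16-80."""
    if len(item_scores) != 16:
        raise ValueError("DISCERN has 16 items")
    if any(not 1 <= s <= 5 for s in item_scores):
        raise ValueError("each item is scored from 1 to 5")
    return sum(item_scores)


def discern_category(total: float) -> str:
    """Map a DISCERN total (possibly a mean of two raters) to a quality band."""
    if not 16 <= total <= 80:
        raise ValueError("DISCERN totals range from 16 to 80")
    for lower_bound, label in DISCERN_BANDS:
        if total >= lower_bound:
            return label
    raise AssertionError("unreachable: 16 is the lowest lower bound")
```

For example, a page rated 3 on every item totals 48 and falls into the fair band.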

Second, a self-constructed checklist based on the latest European Association for the Study of Obesity (EASO) guidelines [38], following the approach of previous researchers in the field [28,39,40], was used to assess the content accuracy of the dietary weight loss information published on the retrieved webpages. In brief, each EASO dietary guideline formed an individual checklist item, giving 9 items that cover dietary aspects proven to be useful for weight management. Each item was scored 0–2: 0 points if the information was absent or wrong, 1 point if it was present and partially correct, and 2 points if it was present and correct. The total score of the EASO guidelines checklist ranged from 0 to 18.
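A minimal sketch of the checklist arithmetic, assuming each of the 9 items has already been judged by a rater. The judgment labels and the function name are illustrative and are not taken from the study materials.

```python
# Hypothetical labels for the three possible judgments of an EASO item.
POINTS = {"absent_or_wrong": 0, "partially_correct": 1, "correct": 2}


def easo_total(judgments: list[str]) -> int:
    """Convert 9 item judgments into a checklist total between 0 and 18.

    An unknown label raises KeyError, which guards against typos.
    """
    if len(judgments) != 9:
        raise ValueError("the checklist has 9 items")
    return sum(POINTS[j] for j in judgments)
```

A page that is correct on one guideline, partially correct on another, and silent on the remaining seven would score 3 out of 18.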

2.4. A Method Developed by ChatGPT-4.5 for Quality Information Assessment

A third method for assessing the quality of the dietary weight loss information published on the webpages was developed for the purposes of the current study. ChatGPT-4.5 was asked to assess the quality of that information and to provide the criteria and the scoring system it used, yielding the ChatGPT custom scoring system. This system consisted of 5 sections with 4 criteria each, for a total of 20 criteria (Table 1). Each section represented a different area of quality assessment (i.e., source credibility, source accuracy and scientific validity, completeness and depth, objectivity and absence of bias, and usability and readability). The proposed scoring was uniform for all criteria, ranging from 1 to 5 marks: 5 marks if the criterion was fully met, 3 marks if it was partially met, and 1 mark if it was not met. For example, for criterion 1.2 (presence of scientific backing; see Table 1), 5 marks were awarded if the text included citations from peer-reviewed research or reputable organizations (e.g., WHO, CDC, EFSA), 3 marks if it mentioned general scientific principles but lacked specific citations, and 1 mark if no references or scientific backing were provided. ChatGPT-4.5 provided a thorough explanation of how each criterion was applied and marked; this information is available in the Supplementary Materials (Table S1). The maximum score of this system was 100 and the minimum was 20. The AI assistant also proposed qualitative categories (90–100: excellent; 75–89: good; 50–74: moderate; 30–49: poor; below 30: very poor).
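The custom system's arithmetic can be sketched in Python as follows. The section names in the usage lines are placeholders for the five areas listed above, and, as with any banding of totals, treating each category's lower bound as a cut-off is an assumption.

```python
ALLOWED_MARKS = {1, 3, 5}  # 1 = not met, 3 = partially met, 5 = fully met
# (lower bound, label) pairs for the AI-proposed categories; < 30 is "very poor".
CUSTOM_BANDS = [(90, "excellent"), (75, "good"), (50, "moderate"), (30, "poor")]


def custom_total(sections: dict[str, list[int]]) -> int:
    """Sum 5 sections x 4 criteria, each marked 1, 3 or 5 (total 20-100)."""
    if len(sections) != 5 or any(len(m) != 4 for m in sections.values()):
        raise ValueError("expected 5 sections with 4 criteria each")
    marks = [mark for section in sections.values() for mark in section]
    if any(mark not in ALLOWED_MARKS for mark in marks):
        raise ValueError("each criterion is marked 1, 3 or 5")
    return sum(marks)


def custom_category(total: int) -> str:
    """Map a 20-100 total to the qualitative category proposed by the AI."""
    for lower_bound, label in CUSTOM_BANDS:
        if total >= lower_bound:
            return label
    return "very poor"


# Hypothetical usage: placeholder section names, every criterion partially met.
example = {name: [3, 3, 3, 3] for name in
           ["credibility", "accuracy", "completeness", "objectivity", "usability"]}
print(custom_total(example), custom_category(custom_total(example)))  # 60 moderate
```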

Table 1.

ChatGPT’s custom scoring system for the assessment of the quality of online dietary weight loss information.

Table 2.

Normalized scores [median and interquartile range (IQR)] assigned by human raters and ChatGPT-4.5.

2.5. Human-Operated Assessments

Two dietitians performed the human-operated assessments. Two Excel files were used, one for the DISCERN instrument and one for the EASO guidelines checklist. In each file, the links of all webpages to be assessed were listed in the first column, and the criteria of each tool were arranged in the following columns. The two raters independently assessed each Word document containing an individual webpage’s content, scoring each criterion of the aforementioned tools according to the application protocol of the instrument (DISCERN) or the guidance of the researchers who developed the tool (self-constructed EASO checklist). The mean of the two raters’ scores was then calculated for each webpage. Where the two raters’ scores differed by more than 10%, a third dietitian was asked to assess the webpage independently, following the same methodology. For these cases, the mean was calculated from the two of the three scores that differed by less than 10%.
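The rater-reconciliation rule can be sketched in Python. The text does not state what the 10% is measured against, so this sketch assumes an absolute difference greater than 10% of the instrument's score range (6.4 points for DISCERN, 1.8 points for the EASO checklist). The function name is hypothetical, and picking the closest pair when more than one pair agrees is also our assumption.

```python
from itertools import combinations


def resolve_human_score(r1, r2, r3=None, *, scale_range, threshold=0.10):
    """Combine human ratings of one webpage into a single score.

    Assumption: a 'discrepancy over 10%' means |a - b| > threshold * scale_range.
    """
    limit = threshold * scale_range
    if abs(r1 - r2) <= limit:
        return (r1 + r2) / 2
    if r3 is None:
        raise ValueError("discrepancy above threshold: a third rating is needed")
    # Keep only pairs of ratings (out of three) that agree within the limit.
    close_pairs = [p for p in combinations((r1, r2, r3), 2)
                   if abs(p[0] - p[1]) <= limit]
    if not close_pairs:
        raise ValueError("no two ratings agree within the threshold")
    # If several pairs qualify, this sketch averages the closest one.
    a, b = min(close_pairs, key=lambda p: abs(p[0] - p[1]))
    return (a + b) / 2
```

For DISCERN totals of 40 and 50, the gap of 10 points exceeds 6.4, so a third rating is required; with a third rating of 48, the sketch averages 50 and 48.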

2.6. AI-Operated Assessments

For the AI-operated assessments, the ChatGPT AI assistant (version 4.5, February 2025 update) was used. In brief, ChatGPT-4.5 was fed the content of each webpage, saved as a Word document, together with a prompt. As the aim of this study was to assess the quality of information on Greek websites, the content of these documents was in Greek. The researchers designed three distinct prompt strategies, one for each assessment (DISCERN instrument, EASO guidelines checklist, or AI custom scoring system). All researcher instructions to the AI assistant, and its responses, were written in English. The full staging of the prompts is presented in the Supplementary Materials (Table S2).

Prompt Preparation

For the AI assessments using the DISCERN instrument, the researchers fed the operational manual to the LLM to familiarize the AI assistant with the assessment criteria, and a prompt then gave explicit instructions for scoring and reporting results. For the AI assessments using the EASO guidelines checklist, the tool was uploaded to the AI assistant and the scoring was explained; again, a prompt gave explicit instructions for reporting results.

In addition to the existing methods, the researchers prompted the AI assistant to create its own assessment tool (ChatGPT custom scoring system). The AI assistant was instructed to identify and propose, on its own, the key elements for evaluating the quality of online nutritional information, based on the content of 10 sample documents that would be fed to it. It was then prompted to create a structured evaluation tool based on these elements, freely organized into clearly defined sections, each with numbered criteria. Once it had created the instrument, the AI assistant was instructed to present it to its human operator for review and approval, and it was told that, once approval was granted, it would evaluate all subsequently fed documents using this permanent reference framework. The AI assistant was asked to provide a numeric score for each numbered criterion, as well as a total score for each uploaded document.

2.7. Data Processing

For both human and AI assessments (i.e., DISCERN instrument, EASO guidelines checklist, and ChatGPT custom scoring system), a separate Excel file with the marks for each criterion and the total score was created. A further Excel file collected the total scores of each human and AI assessment so that they could be standardized and compared. The overall score of each questionnaire was standardized to a 0–100 scale using min–max normalization, chosen because it preserves the shape of the original distribution while enabling direct comparison between tools with different scoring ranges. This method is particularly suitable when score ranges are known and fixed, as in our study, unlike z-score normalization, which assumes a normal distribution [41].
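A minimal sketch of the min–max step, x' = (x − min) / (max − min) × 100, using the score ranges given earlier for each instrument. The dictionary keys and the function name are illustrative.

```python
# Score ranges stated in the Methods for each instrument (min, max).
SCALE_RANGES = {
    "DISCERN": (16, 80),
    "EASO": (0, 18),
    "ChatGPT_custom": (20, 100),
}


def normalize(score: float, instrument: str) -> float:
    """Min-max normalize a total score onto a 0-100 scale."""
    lo, hi = SCALE_RANGES[instrument]
    if not lo <= score <= hi:
        raise ValueError(f"{instrument} scores must lie in [{lo}, {hi}]")
    return (score - lo) / (hi - lo) * 100
```

For instance, a DISCERN total of 48 maps to 50, a custom-system total of 60 also maps to 50, and an EASO checklist total of 3 maps to about 16.7.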

2.8. Statistical Analysis

A Friedman test was conducted to examine potential differences in median scores and in the categorization of website reliability based on overall ratings. Post hoc pairwise comparisons were carried out using the Wilcoxon signed-rank test for quantitative variables and the chi-square test for qualitative variables, with Bonferroni correction. The intraclass correlation coefficient (ICC) was used to assess agreement among the website reliability scores assigned by humans using the DISCERN instrument, by AI using the DISCERN instrument, and by AI using the ChatGPT custom scoring system. Similarly, agreement was assessed among humans using the EASO guidelines checklist, AI using the EASO guidelines checklist, and AI using the accuracy factor of the ChatGPT custom scoring system. A two-way random-effects model was applied, as both the human raters and the AI model were considered random. “Absolute agreement” was chosen as the ICC definition, because the focus was on the assignment of identical scores rather than on any form of correlation [42]. A qualitative analysis was also conducted on the content of the webpages that showed the greatest rating discrepancies between ChatGPT-4.5 and human evaluators. Statistical analysis was conducted using SPSS software (v. 29).
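For readers who want to reproduce the agreement analysis outside SPSS, the two-way random-effects, absolute-agreement ICC can be computed from ANOVA mean squares. This sketch assumes the single-measure form, ICC(2,1) in Shrout and Fleiss's notation, because the text does not state whether single- or average-measure ICCs were reported. The interpretation bands mirror those quoted in the Discussion, and the function names are hypothetical. Rows are webpages and columns are raters or methods.

```python
def icc_2_1(ratings: list[list[float]]) -> float:
    """Two-way random-effects, absolute-agreement, single-measure ICC."""
    n, k = len(ratings), len(ratings[0])
    grand = sum(map(sum, ratings)) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    # Partition the total sum of squares into webpages, raters and residual.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    # Rater (column) variance enters the denominator: absolute agreement.
    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


def interpret_icc(icc: float) -> str:
    """Bands quoted in the Discussion: <0.5 poor, 0.5-0.75 moderate."""
    if icc < 0.5:
        return "poor"
    if icc <= 0.75:
        return "moderate"
    return "good to excellent"
```

Identical scores from every rater give an ICC of 1, while a systematic offset between raters lowers it even if they rank webpages identically, which is the point of choosing absolute agreement.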

3. Results

A total of 177 webpages were evaluated, and the normalized scores for each method are shown in Table 2. For the information quality score, no statistically significant differences were found in medians across the three ratings (p = 0.528). In contrast, statistically significant differences were found among the three content accuracy scores (p < 0.001). Post hoc pairwise comparisons using the Wilcoxon signed-rank test with Bonferroni correction (adjusted α = 0.0167) showed that all pairwise differences between ratings were statistically significant (all p < 0.001), with the median ratings assigned by humans being lower than those assigned by AI.
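The omnibus test and the Bonferroni threshold can be sketched with the Python standard library. This is an illustrative re-implementation, not the SPSS procedure used in the study: it handles only three related samples, where the chi-square approximation with 2 degrees of freedom has the closed-form tail probability exp(−Q/2), and it applies the standard tie correction, which matters for coarse scales such as the 0–18 EASO checklist. The function names are hypothetical.

```python
import math


def average_ranks(values):
    """Rank values within one row (1 = smallest), averaging tied ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for pos in range(i, j + 1):
            ranks[order[pos]] = (i + j) / 2 + 1  # mean of ranks i+1 .. j+1
        i = j + 1
    return ranks


def friedman_three_methods(rows):
    """Friedman test for k = 3 related samples (one row per webpage).

    Returns (Q, p), with p from the chi-square approximation (df = 2).
    """
    n, k = len(rows), 3
    rank_sums = [0.0] * k
    tie_term = 0
    for row in rows:
        if len(row) != k:
            raise ValueError("each row needs exactly three scores")
        for j, r in enumerate(average_ranks(row)):
            rank_sums[j] += r
        for v in set(row):
            t = row.count(v)
            tie_term += t ** 3 - t  # zero for untied values
    q = 12 / (n * k * (k + 1)) * sum(r * r for r in rank_sums) - 3 * n * (k + 1)
    correction = 1 - tie_term / (n * k * (k * k - 1))
    if correction == 0:
        raise ValueError("all scores tied within every row; Q is undefined")
    q /= correction
    return q, math.exp(-q / 2)


BONFERRONI_ALPHA = 0.05 / 3  # three pairwise post hoc comparisons, ~0.0167
```

A webpage-level Wilcoxon signed-rank test would then be run for each of the three method pairs, with each p-value compared against `BONFERRONI_ALPHA`.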

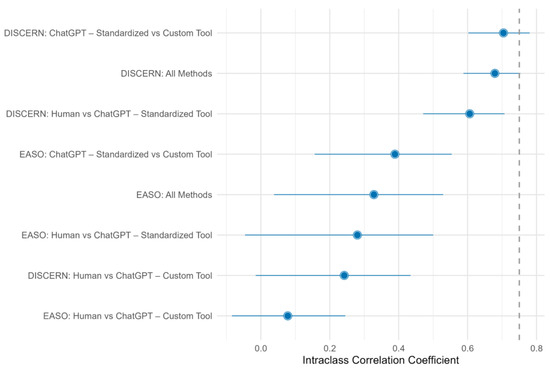

According to the ICC ranges recommended in the literature [42], agreement among ratings was mostly moderate for the DISCERN instrument and poor for the EASO guidelines checklist (Figure 1).

Figure 1.

Agreement between raters in quality scoring of websites (ICC). Intraclass correlation coefficients (ICCs) with 95% confidence intervals comparing agreement across human and ChatGPT-4.5-based evaluations. Ratings were derived using both standardized tools and ChatGPT’s custom scoring system, applied to DISCERN and EASO guidelines checklist criteria.

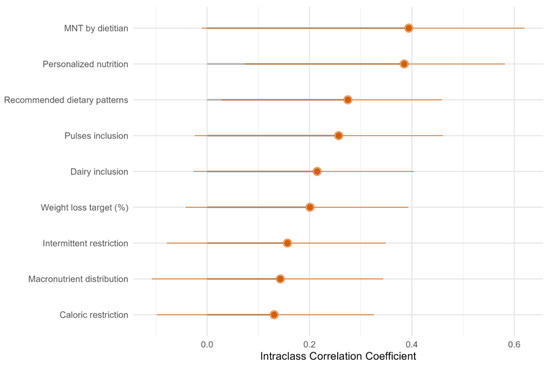

Given the low agreement between human and AI ratings, agreement for the individual items of the EASO guidelines checklist was explored further, revealing poor agreement for all items (Figure 2).

Figure 2.

Agreement between human and ChatGPT-4.5 ratings per EASO recommendation. Item-level agreement between human and ChatGPT-4.5 ratings across individual EASO-based dietary guidelines. Each dot represents the ICC value for a specific recommendation, with corresponding 95% confidence intervals.

The low agreement reflected the higher ratings assigned by ChatGPT-4.5 compared with those assigned by humans. Possible explanations for the divergent evaluations between AI and experts for each EASO-based dietary guideline are given in Table 3.

Table 3.

Interpretation of differences in rating approach between ChatGPT-4.5 and human raters for each EASO dietary guideline.

From a qualitative standpoint, 77.9% (N = 137) of the websites evaluated by human raters using the DISCERN instrument were rated as fair or below, compared with 58.7% (N = 104) when AI used the same tool (Table 4). When AI conducted the evaluation with its own custom scoring system, the fair-or-below percentage increased to 88.1% (N = 156). Statistically significant differences were observed in the categorization of website quality across the three scoring methods (p < 0.001). Post hoc pairwise comparisons with Bonferroni correction indicated that all three methods (DISCERN applied by humans, DISCERN applied by ChatGPT-4.5, and ChatGPT’s custom scoring system) differed significantly in how they classified the websites. Despite some variation, human evaluators using DISCERN and ChatGPT’s custom scoring system both categorized the majority of websites as being of fair quality (approximately 60%). In contrast, when ChatGPT-4.5 applied the DISCERN instrument directly, it rated a notably higher proportion of websites (22.0%) as excellent than the other two methods did.

Table 4.

Categorization of websites based on overall quality scores provided by human raters and ChatGPT-4.5.

4. Discussion

The present study aimed to explore whether the assessment of dietary weight loss information published on websites produced similar results when conducted by professional dietitians or by an AI tool (ChatGPT-4.5). For this purpose, three assessment approaches were used: a validated instrument designed for health information in general rather than dietary information specifically (DISCERN instrument); a self-made checklist reflecting the official guidelines for weight loss [38], based on methodologies used in previous studies [8]; and criteria generated by the AI tool. DISCERN scores showed no significant difference between humans and AI (p = 0.528), but EASO checklist scores differed significantly (p < 0.001), with AI generally assigning higher scores. In terms of classification, ChatGPT-4.5 rated 22% of webpages as “excellent” using DISCERN, whereas humans rated only 3% as such. Agreement analysis revealed a moderate ICC between human and ChatGPT-4.5 ratings for DISCERN and a lower one for the EASO guidelines checklist, indicating poorer consistency in the evaluation of specialized content.

In more detail, the highest agreement was found between assessments made with DISCERN by ChatGPT-4.5 and with the ChatGPT custom scoring system, followed by assessments made with DISCERN by dietitians and by ChatGPT-4.5. DISCERN is a validated instrument comprising a set of specific questions that the rater answers to assess the quality of health-related information [9]. The questions address both the content of a website and the metadata that indicate the reliability of the published information (e.g., when it was produced), and the instrument guides the rater on what to look for when answering each question, with specific instructions on the scoring procedure. For these reasons, little room for misjudgment is left, which probably explains the moderate similarity between human and AI ratings of weight loss websites with this instrument. Even the highest agreement, found between ChatGPT-4.5’s DISCERN ratings and the ChatGPT custom scoring system, was only moderate. This agreement could be explained by the fact that both methods share a similar methodology (i.e., using metadata to assess the quality of information in addition to assessing the content per se). However, the two tools approach quality assessment from notably different angles. DISCERN’s 15 specific items (plus one overall rating) prioritize treatment-related content, splitting their focus roughly evenly between publication reliability and the quality of treatment information on a traditional five-point scale. ChatGPT’s scoring system casts a wider net, with 20 criteria spanning five domains, though it uses a simplified three-point scale (1–3–5). This broader approach reveals interesting contrasts: DISCERN asks whether sources are clearly stated (items 4–5), whereas ChatGPT’s system also examines author credentials and looks for HONcode certification.
The difference is particularly apparent in how bias is handled: DISCERN dedicates a single item to balance and bias, while ChatGPT-4.5’s framework devotes four separate criteria to objectivity, specifically targeting advertisement disclosure and product promotion. DISCERN excels in its granular treatment information section, with seven items examining how treatments are described, their benefits and risks, and support for decision-making. ChatGPT’s system touches on these aspects within its accuracy and completeness sections but also covers territory DISCERN does not, particularly readability and user engagement. In short, DISCERN provides a validated, focused assessment of treatment information, whereas ChatGPT’s system captures the broader landscape of online nutritional content quality, including commercial influences and accessibility concerns that DISCERN was not designed to address. These results contrast with the findings of a previous study in which DISCERN was used to assess online health information on shock wave therapy as a treatment for erectile dysfunction [17]. In that study, significant differences were observed in total DISCERN scores and in 6 of 16 (37.5%) DISCERN questions between ChatGPT (March 2023 version) and human raters, with ChatGPT rating the quality of information higher [17]. Otherwise, the methodology of that study was similar to ours, except for the topic and the number of human raters (three in that study vs. two in the present analysis). On the other hand, our findings agree with those of Rose et al. [18], who found comparable results between ChatGPT-4 and human assessors in identifying and quantifying false or misleading claims made by practitioners of pseudoscientific therapies registered with the Complementary and Natural Healthcare Council in the UK.

However, the classification of webpages into quality categories (i.e., very poor, poor, fair, good, and excellent) based on their overall scores differed significantly across the three evaluations. Notably, the distribution of quality categories did not differ substantially between human raters and the ChatGPT custom scoring system: both categorized most webpages (approximately 60%) as ‘fair’ and only a marginal proportion (1–3%) as ‘excellent’. In contrast, the categorization by ChatGPT-4.5 using the DISCERN tool yielded markedly different results, rating 22% of the webpages as ‘excellent’, 17% as ‘fair’, and the largest proportion (approximately 42%) as ‘poor’ or ‘very poor’. These discrepancies indicate that DISCERN-based categorization differs depending on whether the instrument is applied by health professionals or by ChatGPT-4.5, whereas the categorizations produced by human raters and by ChatGPT’s custom scoring system differ only mildly.

Regarding content accuracy, total scores varied widely across the three rating approaches (EASO guidelines checklist applied by humans, EASO guidelines checklist applied by ChatGPT-4.5, and ChatGPT’s custom scoring system), indicating poor agreement. The lowest median score was assigned by the human raters (16.7/100), followed by ChatGPT-4.5’s assessment (50/100), while ChatGPT’s custom scoring system gave the highest marks (75/100). Furthermore, item-level agreement between human and ChatGPT-4.5 ratings for the individual EASO guideline-based dietary recommendations revealed large discrepancies for all items, as most ICC estimates had wide 95% confidence intervals that overlapped zero. According to established guidelines, ICC values below 0.5 indicate poor reliability, values between 0.5 and 0.75 indicate moderate reliability, and values above 0.75 suggest good to excellent reliability [42]. In our analysis, most item-level ICCs fell below 0.4, suggesting poor inter-rater agreement. The overlap with zero further emphasizes the statistical uncertainty and reinforces the interpretation that ChatGPT-4.5’s scoring behavior deviated from that of human experts when evaluating specialized dietary content. The observed differences in content accuracy scores between ChatGPT-4.5 and human raters reflect distinct approaches to information processing and evaluation. Recent systematic reviews indicate that LLMs show promise in health and medical applications, including diagnostics and medical content assessment, but also have limitations in contextual understanding and causal reasoning [43,44]. Healthcare professionals, by contrast, draw on what researchers describe as tacit knowledge, an experiential, often non-verbal form of understanding that develops through clinical practice and enables recognition of subtle quality indicators that may not be explicitly codified in evaluation criteria [45].
Although AI excels at analyzing vast datasets through statistical pattern recognition and can perform well on standardized assessments, it occasionally produces outputs that are not grounded in factual evidence, a phenomenon that reflects its probabilistic nature [46]. Human raters who are practitioners, by contrast, integrate clinical experience with contextual interpretation and domain-specific intuition developed through direct patient interaction [46]. International health organizations have recognized these distinctions in their guidance documents. According to the World Health Organization, AI and humans may reach different assessments of the same information, because AI relies on statistical correlations between variables that are not necessarily causal and exhibits bias patterns that differ from those of human cognition [47]. These contrasting approaches, algorithmic processing versus experiential reasoning, represent distinct cognitive frameworks, each with its own capabilities and limitations in healthcare applications [46]. They should be kept in mind when interpreting assessment results, particularly for health-related content, where the practical implications of dietary advice depend on individual variation, contraindications, and contextual factors that extend beyond standardized criteria.
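The item-level agreement discussed above was summarized with ICCs interpreted against the thresholds of Koo and Li [42]. The ICC form used in the study is not stated in this section, so the sketch below assumes the two-way random-effects, absolute-agreement, single-rater form (ICC(2,1) in Shrout and Fleiss notation) and omits confidence intervals. `icc_2_1` and `koo_li_label` are hypothetical names, and the ratings are invented to illustrate one mechanism relevant to the findings: a rater who scores every item consistently higher stays perfectly correlated with the other rater, yet the absolute-agreement ICC drops.

```python
def icc_2_1(ratings):
    """ICC(2,1): two-way random effects, absolute agreement, single rater.

    `ratings` is a list of rows (one per rated item) with one column per rater.
    Computed from two-way ANOVA mean squares (Shrout & Fleiss formulation).
    """
    n = len(ratings)     # number of rated items (targets)
    k = len(ratings[0])  # number of raters
    if n < 2 or k < 2:
        raise ValueError("need at least two items and two raters")

    grand = sum(x for row in ratings for x in row) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    # Sums of squares for items (rows), raters (columns), and residual error.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    denominator = ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    if denominator == 0:
        raise ValueError("ICC undefined: ratings show no variance")
    return (ms_rows - ms_error) / denominator


def koo_li_label(icc):
    """Interpretation bands of Koo & Li: <0.5, 0.5-0.75, 0.75-0.9, >0.9."""
    if icc < 0.5:
        return "poor"
    if icc < 0.75:
        return "moderate"
    if icc <= 0.9:
        return "good"
    return "excellent"


# Invented ratings: rows are items, columns are (human, AI).
identical = [[1, 1], [2, 2], [3, 3]]
lenient_ai = [[1, 2], [2, 3], [3, 4]]  # AI always one point higher

print(icc_2_1(identical), koo_li_label(icc_2_1(identical)))    # 1.0 excellent
print(icc_2_1(lenient_ai), koo_li_label(icc_2_1(lenient_ai)))  # about 0.667, moderate
```

The absolute-agreement form penalizes systematic leniency as well as random disagreement; a consistency form (ICC(3,1)) would return 1.0 for the lenient example, because the constant offset does not change the rank order of the items.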

To our knowledge, this is the first study to compare human and AI assessments of the quality of dietary information published on webpages. A further strength is that several methods were applied and compared, including a widely used validated tool (DISCERN) and a self-made scale based on up-to-date guidelines (the EASO guidelines checklist). In addition, a custom scoring system developed by ChatGPT-4.5 was used for the same purpose. However, this study also has limitations. First, webpage content was provided in Word documents rather than via the URL of each webpage, and in a cleaned form that excluded the original formatting (e.g., ads and links), which may have affected the evaluation. Second, ChatGPT’s scoring system was not developed as a validated quality assessment tool, as this was beyond the scope of this study; a formal exploration of LLMs’ capacity to produce a valid and reliable scoring system for screening information quality could be an essential next research step. Finally, the assessment was limited to Greek-language webpages, so the findings may not generalize to other languages or cultural contexts. Both the language and the cultural context of the assessed webpages may have influenced the performance of the AI assistant. Although ChatGPT-4.5 has been trained on multilingual corpora that include some Greek-language content, its exposure to culturally specific phrasing, nutritional norms, and local references remains limited compared with English-dominant data [6]. Prior research has shown that language structure and cultural framing can influence both the quality of online health content and how it is interpreted by users or automated systems [5]. These factors may partly explain the discrepancies observed between human and AI assessments, particularly for subjective or nuanced items.
Future studies should explore whether such findings persist across other languages and cultural contexts.

5. Conclusions

Our findings suggest that although AI and human raters produced similar total scores with the DISCERN instrument, significant discrepancies emerged with the EASO checklist, for which AI consistently assigned higher scores. This may reflect stable AI behavior with structured evaluation tools and a tendency toward more lenient assessments in specialized domains. Furthermore, ChatGPT-4.5 rated more webpages as “excellent” with the DISCERN instrument than human evaluators did, suggesting divergent interpretations of the rating criteria [17]. In addition, agreement between human and ChatGPT-4.5 ratings was poor for every item of the EASO guidelines checklist, showing that the discrepancy was not confined to a specific guideline but reflected a different overall approach to scoring. The large discrepancies between the ratings of ChatGPT’s own scoring system and those of the existing methods highlight the need to better understand how AI assistants assess information quality and to develop a validated AI-assisted tool. Given that the general public has difficulty assessing online dietary information, the finding that ChatGPT’s assessment with a validated tool (i.e., DISCERN) approximated that of health professionals using the same tool represents a promising first step.

Our findings are inherently constrained by the language-specific nature of our evaluation: we assessed ChatGPT’s performance on Greek texts, whereas OpenAI’s own technical documentation acknowledges an English-centric training approach that generally yields superior performance in English [48]. Furthermore, the domain-specific patterns we observed cannot be assumed to hold across other subject areas or for other language models with different architectures and training methods. Considering the above, and given that few studies have addressed this field and their results are mixed, these outcomes need to be replicated for other topics of nutrition-related information and in other languages (e.g., English) before recommendations can be made. Future research should also extend this comparative approach by evaluating multiple AI assistants (e.g., Claude, DeepSeek, Qwen, and Grok) against human experts, which could reveal whether certain AI architectures are better at assessing the quality of nutritional content on weight loss and other topics. If so, the public could be advised on which AI assistant to use when assessing the quality of dietary information found online. Other formats of online dietary or health information, such as videos, which appear to be increasingly popular sources of such information, could also be assessed by AI assistants [49,50]. Validated AI-assisted tools could then be developed to assess the quality of dietary or health information, in text and/or video form, for specific diseases (e.g., obesity) or health states (e.g., pregnancy).
Last, investigating how different versions of the same AI model perform could provide valuable insights into how rapidly evolving LLM capabilities affect assessment accuracy, potentially leading to the development of a gold standard methodology for AI-assisted evaluation of online nutritional information.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/info16070526/s1, Table S1: Developed and presented sections, scoring system, definitions, and significance of each criterion of the ChatGPT quality assessment instrument; Table S2: Prompts for AI-Based Assessment of Online Weight Loss Information.

Author Contributions

Conceptualization, E.F. and M.M.; methodology, E.F., M.M. and D.P.; formal analysis, M.S. and D.P.; investigation, M.M., P.T. and D.P.; data curation, P.T., M.M. and D.P.; writing—original draft preparation, E.F., P.T., M.M., D.P. and M.S.; writing—review and editing, E.F., M.M., D.P., M.S., P.T. and G.I.P.; visualization, M.M., D.P. and M.S.; supervision, E.F. and G.I.P.; project administration, E.F. and G.I.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article and Supplementary Materials.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| ChatGPT | Chat Generative Pre-trained Transformer |
| EQIP | Ensuring Quality Information for Patients |
| JAMA | Journal of the American Medical Association |
| QUEST | Quality Evaluation Scoring Tool |
| LLM | Large Language Model |
| SERP | Search Engine Result Page |
| PDF | Portable Document Format |
| EASO | European Association for the Study of Obesity |
| WHO | World Health Organization |
| CDC | Centers for Disease Control and Prevention |
| EFSA | European Food Safety Authority |
| HONcode | Health On the Net Foundation code of conduct |
| ICC | Intraclass Correlation Coefficient |
| SPSS | Statistical Package for the Social Sciences |
| IQR | Interquartile Range |
| n/a | Not Applicable |
| URL | Uniform Resource Locator |

References

- Baqraf, Y.K.A.; Keikhosrokiani, P.; Al-Rawashdeh, M. Evaluating Online Health Information Quality Using Machine Learning and Deep Learning: A Systematic Literature Review. Digit. Health 2023, 9, 20552076231212296.

- Tan, S.S.L.; Goonawardene, N. Internet Health Information Seeking and the Patient-Physician Relationship: A Systematic Review. J. Med. Internet Res. 2017, 19, e9.

- Eurostat. Individuals Using the Internet for Seeking Health-Related Information. Available online: https://ec.europa.eu/eurostat/databrowser/view/tin00101/default/bar?lang=en (accessed on 10 April 2025).

- Jia, X.; Pang, Y.; Liu, L.S. Online Health Information Seeking Behavior: A Systematic Review. Healthcare 2021, 9, 1740.

- Daraz, L.; Morrow, A.S.; Ponce, O.J.; Beuschel, B.; Farah, M.H.; Katabi, A.; Alsawas, M.; Majzoub, A.M.; Benkhadra, R.; Seisa, M.O.; et al. Can Patients Trust Online Health Information? A Meta-Narrative Systematic Review Addressing the Quality of Health Information on the Internet. J. Gen. Intern. Med. 2019, 34, 1884–1891.

- Zhang, Y.; Kim, Y. Consumers’ Evaluation of Web-Based Health Information Quality: Meta-Analysis. J. Med. Internet Res. 2022, 24, e36463.

- Denniss, E.; Lindberg, R.; McNaughton, S. Quality and Accuracy of Online Nutrition-Related Information: A Systematic Review of Content Analysis Studies. Public Health Nutr. 2023, 26, 1345–1357.

- Fappa, E.; Micheli, M. Content Accuracy and Readability of Dietary Advice Available on Webpages: A Systematic Review of the Evidence. J. Hum. Nutr. Diet. 2025, 38, e13395.

- Charnock, D.; Shepperd, S.; Needham, G.; Gann, R. DISCERN: An Instrument for Judging the Quality of Written Consumer Health Information on Treatment Choices. J. Epidemiol. Community Health 1999, 53, 105–111.

- Moult, B.; Franck, L.S.; Brady, H. Ensuring Quality Information for Patients: Development and Preliminary Validation of a New Instrument to Improve the Quality of Written Health Care Information. Health Expect. 2004, 7, 165–175.

- Silberg, W.M.; Lundberg, G.D.; Musacchio, R.A. Assessing, Controlling, and Assuring the Quality of Medical Information on the Internet: Caveant Lector et Viewor—Let the Reader and Viewer Beware. JAMA 1997, 277, 1244–1245.

- Robillard, J.M.; Jun, J.H.; Lai, J.A.; Feng, T.L. The QUEST for Quality Online Health Information: Validation of a Short Quantitative Tool. BMC Med. Inform. Decis. Mak. 2018, 18, 87.

- Zhang, Y.; Sun, Y.; Xie, B. Quality of Health Information for Consumers on the Web: A Systematic Review of Indicators, Criteria, Tools, and Evaluation Results. J. Assoc. Inf. Sci. Technol. 2015, 66, 2071–2084.

- Gkouskou, K.; Markaki, A.; Vasilaki, M.; Roidis, A.; Vlastos, I. Quality of Nutritional Information on the Internet in Health and Disease. Hippokratia 2011, 15, 304–307.

- Keaver, L.; Huggins, M.D.; Chonaill, D.N.; O’Callaghan, N. Online Nutrition Information for Cancer Survivors. J. Hum. Nutr. Diet. 2022, 36, 415–433.

- Guardiola-Wanden-Berghe, R.; Gil-Pérez, J.D.; Sanz-Valero, J.; Wanden-Berghe, C. Evaluating the Quality of Websites Relating to Diet and Eating Disorders. Health Inf. Libr. J. 2011, 28, 294–301.

- Golan, R.; Ripps, S.J.; Reddy, R.; Loloi, J.; Bernstein, A.P.; Connelly, Z.M.; Golan, N.S.; Ramasamy, R. ChatGPT’s Ability to Assess Quality and Readability of Online Medical Information: Evidence From a Cross-Sectional Study. Cureus 2023, 15, e42214.

- Rose, L.; Bewley, S.; Payne, M.; Colquhoun, D.; Perry, S. Using Artificial Intelligence (AI) to Assess the Prevalence of False or Misleading Health-Related Claims. R. Soc. Open Sci. 2024, 11, 240698.

- Brown, T.B.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language Models Are Few-Shot Learners. In Proceedings of the 34th International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 6–12 December 2020; Volume 33, pp. 1877–1901.

- Zhao, W.X.; Zhou, K.; Li, J.; Tang, T.; Wang, X.; Hou, Y.; Min, Y.; Zhang, B.; Zhang, J.; Dong, Z.; et al. A Survey of Large Language Models. arXiv 2023, arXiv:2303.18223.

- Bommasani, R.; Hudson, D.A.; Adeli, E.; Altman, R.; Arora, S.; von Arx, S.; Bernstein, M.S.; Bohg, J.; Bosselut, A.; Brunskill, E.; et al. On the Opportunities and Risks of Foundation Models. Arxiv Learn. 2021, 10, 1–46.

- Kung, T.H.; Cheatham, M.; Medenilla, A.; Sillos, C.; De Leon, L.; Elepaño, C.; Madriaga, M.; Aggabao, R.; Diaz-Candido, G.; Maningo, J.; et al. Performance of ChatGPT on USMLE: Potential for AI-Assisted Medical Education Using Large Language Models. PLOS Digit. Health 2023, 2, e0000198.

- van Dis, E.A.M.; Bollen, J.; Zuidema, W.; van Rooij, R.; Bockting, C.L. ChatGPT: Five Priorities for Research. Nature 2023, 614, 224–226.

- Phelps, N.H.; Singleton, R.K.; Zhou, B.; Heap, R.A.; Mishra, A.; Bennett, J.E.; Paciorek, C.J.; Lhoste, V.P.; Carrillo-Larco, R.M.; Stevens, G.A.; et al. Worldwide Trends in Underweight and Obesity from 1990 to 2022: A Pooled Analysis of 3663 Population-Representative Studies with 222 Million Children, Adolescents, and Adults. Lancet 2024, 403, 1027–1050.

- Fassier, P.; Chhim, A.S.; Andreeva, V.A.; Hercberg, S.; Latino-Martel, P.; Pouchieu, C.; Touvier, M. Seeking Health- and Nutrition-Related Information on the Internet in a Large Population of French Adults: Results of the NutriNet-Santé Study. Br. J. Nutr. 2016, 115, 2039–2046.

- Modave, F.; Shokar, N.K.; Peñaranda, E.; Nguyen, N. Analysis of the Accuracy of Weight Loss Information Search Engine Results on the Internet. Am. J. Public Health 2014, 104, 1971–1978.

- Cardel, M.I.; Chavez, S.; Bian, J.; Peñaranda, E.; Miller, D.R.; Huo, T.; Modave, F. Accuracy of Weight Loss Information in Spanish Search Engine Results on the Internet. Obesity 2016, 24, 2422–2434.

- Meyer, S.; Elsweiler, D.; Ludwig, B. Assessing the Quality of Weight Loss Information on the German Language Web. Mov. Nutr. Health Dis. 2020, 4, 39–52.

- StatCounter. Search Engine Market Share in Greece, January 2024–2025. Available online: https://gs.statcounter.com/search-engine-market-share/desktop/greece (accessed on 13 March 2025).

- StatCounter. Browser Market Share in Greece, January 2024–2025. Available online: https://gs.statcounter.com/browser-market-share/all/greece (accessed on 13 March 2025).

- Zhang, Y. Consumer Health Information Searching Process in Real Life Settings. Proc. Am. Soc. Inf. Sci. Technol. 2012, 49, 1–10.

- Cai, H.C.; King, L.E.; Dwyer, J.T. Using the Google™ Search Engine for Health Information: Is There a Problem? Case Study: Supplements for Cancer. Curr. Dev. Nutr. 2021, 5, nzab002.

- Alfaro-Cruz, L.; Kaul, I.; Zhang, Y.; Shulman, R.J.; Chumpitazi, B.P. Assessment of Quality and Readability of Internet Dietary Information on Irritable Bowel Syndrome. Clin. Gastroenterol. Hepatol. 2019, 17, 566–567.

- Lee, J.; Nguyen, J.; O’Leary, F. Content, Quality and Accuracy of Online Nutrition Resources for the Prevention and Treatment of Dementia: A Review of Online Content. Dietetics 2022, 1, 148–163.

- El Jassar, O.G.; El Jassar, I.N.; Kritsotakis, E.I. Assessment of Quality of Information Available over the Internet about Vegan Diet. Nutr. Food Sci. 2019, 49, 1142–1152.

- Charnock, D. The DISCERN Handbook; Radcliffe Medical Press: London, UK, 1998; ISBN 1857753100.

- San Giorgi, M.R.M.; de Groot, O.S.D.; Dikkers, F.G. Quality and Readability Assessment of Websites Related to Recurrent Respiratory Papillomatosis. Laryngoscope 2017, 127, 2293–2297.

- Hassapidou, M.; Vlassopoulos, A.; Kalliostra, M.; Govers, E.; Mulrooney, H.; Ells, L.; Salas, X.R.; Muscogiuri, G.; Darleska, T.H.; Busetto, L.; et al. European Association for the Study of Obesity Position Statement on Medical Nutrition Therapy for the Management of Overweight and Obesity in Adults Developed in Collaboration with the European Federation of the Associations of Dietitians. Obes. Facts 2023, 16, 11–28.

- Ambra, R.; Canali, R.; Pastore, G.; Natella, F. COVID-19 and Diet: An Evaluation of Information Available on Internet in Italy. Acta Biomed. 2021, 92, e2021077.

- Cannon, S.; Lastella, M.; Vincze, L.; Vandelanotte, C.; Hayman, M. A Review of Pregnancy Information on Nutrition, Physical Activity and Sleep Websites. Women Birth 2020, 33, 35–40.

- Han, J.; Kamber, M.; Pei, J. Data Mining: Concepts and Techniques, 3rd ed.; Morgan Kaufmann: Waltham, MA, USA, 2012; ISBN 9780123814791.

- Koo, T.K.; Li, M.Y. A Guideline of Selecting and Reporting Intraclass Correlation Coefficients for Reliability Research. J. Chiropr. Med. 2016, 15, 155–163.

- Meng, X.; Yan, X.; Zhang, K.; Liu, D.; Cui, X.; Yang, Y.; Zhang, M.; Cao, C.; Wang, J.; Wang, X.; et al. The Application of Large Language Models in Medicine: A Scoping Review. iScience 2024, 27, 109713.

- Thirunavukarasu, A.J.; Ting, D.S.J.; Elangovan, K.; Gutierrez, L.; Tan, T.F.; Ting, D.S.W. Large Language Models in Medicine. Nat. Med. 2023, 29, 1930–1940.

- Heiberg Engel, P.J. Tacit Knowledge and Visual Expertise in Medical Diagnostic Reasoning: Implications for Medical Education. Med. Teach. 2008, 30, e184–e188.

- Meskó, B.; Topol, E.J. The Imperative for Regulatory Oversight of Large Language Models (or Generative AI) in Healthcare. NPJ Digit. Med. 2023, 6, 120.

- World Health Organization. Ethics and Governance of Artificial Intelligence for Health: WHO Guidance; World Health Organization: Geneva, Switzerland, 2021.

- OpenAI. GPT-4 Technical Report. arXiv 2023, arXiv:2303.08774.

- Kreft, M.; Smith, B.; Hopwood, D.; Blaauw, R. The Use of Social Media as a Source of Nutrition Information. S. Afr. J. Clin. Nutr. 2023, 36, 162–168.

- Weiß, K.; König, L.M. Does the Medium Matter? Comparing the Effectiveness of Videos, Podcasts and Online Articles in Nutrition Communication. Appl. Psychol. Health Well Being 2023, 15, 669–685.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).