Abstract

In the realm of critical infrastructure protection, robust intrusion detection systems (IDSs) are essential for securing vital services. This paper investigates the efficacy of various machine learning algorithms for anomaly detection in critical infrastructure. It uses the Secure Water Treatment (SWaT) dataset, a comprehensive collection of time-series data from a water treatment testbed, as the basis for experimentation and analysis. The study evaluates both supervised and unsupervised learning algorithms. The analysis reveals that supervised learning algorithms achieve high accuracy and reliability, making them well suited to the diverse and complex anomalies found in critical infrastructure, and that they are effective at capturing spatial and temporal relationships among process variables. The unsupervised approaches provide valuable insights into anomaly detection without requiring labeled data, although they suffer from higher rates of false positives and false negatives. By outlining the benefits and drawbacks of these algorithms in the context of critical infrastructure, this research advances the field of cybersecurity. It emphasizes the importance of integrating supervised and unsupervised techniques to enhance the resilience of IDSs and to ensure the timely detection and mitigation of potential threats. The findings offer practical guidance for industry professionals on selecting and deploying effective machine learning algorithms in critical infrastructure environments.

1. Introduction

Modern human societies operate on an underlying structure of critical infrastructure, which is made up of a wide range of resources and systems that are necessary to maintain essential operations. These infrastructures are crucial to maintaining public safety, economic stability, and national security. They include the complex networks of electricity grids, transportation systems, water treatment plants, and financial institutions. But the same digital technologies that have ushered critical infrastructure sectors into the modern era have also made them a tempting target for malicious cyber actors looking for ways to undermine national security, disrupt operations, or steal sensitive data.

Over the past decade, there has been a notable escalation in cyber threats targeting critical infrastructure sectors worldwide. As noted in [1], the 2015 cyberattack on Ukraine's power grid, widely attributed to state-sponsored actors, stands as a stark example of the vulnerabilities inherent in such systems. The attack caused widespread blackouts that affected hundreds of thousands of people and highlighted the potentially catastrophic consequences of cyber disruptions to critical infrastructure. Similarly, the NotPetya ransomware attack in 2017 [2] wreaked havoc on several major ports, logistics companies, and financial institutions globally, causing billions of dollars in damages and disrupting operations for extended periods.

In this environment of heightened cyber risk and an evolving threat landscape, protecting critical infrastructure systems from cyber intrusions has become a top priority for governments, industry stakeholders, and cybersecurity professionals alike. Predicting, identifying, and effectively mitigating cyber risks is crucial for preserving the confidentiality, integrity, and availability of vital infrastructure services and for reducing the possible effects of cyber incidents on public safety and national security.

Moreover, the ongoing digitization of critical infrastructure systems, coupled with the proliferation of connected devices and the Internet of Things, has further increased the difficulties faced by critical infrastructure custodians, as highlighted in [3]. The growing interconnectedness and complexity of these systems have significantly widened the attack surface, giving attackers numerous entry points from which to exploit weaknesses and conduct sophisticated cyberattacks [3]. From remote access points in power grid substations to internet-connected sensors in water treatment plants, the sheer breadth of potential targets presents a formidable challenge for organizations seeking to safeguard critical infrastructure assets from cyber threats.

In response to the escalating cyber threats targeting critical infrastructure, the organizations responsible for its protection have implemented a range of cybersecurity measures to safeguard systems and assets. These measures encompass preventive, detective, and corrective controls aimed at reducing the likelihood and impact of cyber incidents, as described in [4]. Among these controls, IDSs are a crucial element of cybersecurity defenses: they monitor network traffic, detect anomalous behavior, and notify security personnel of possible security breaches.

Traditional IDSs operate on predefined rules, signatures, or heuristics to detect known patterns of malicious behavior, such as known malware signatures or abnormal network traffic patterns. While effective against recognized threats, these IDSs often struggle to detect novel or previously unseen attacks, such as zero-day exploits or polymorphic malware. Additionally, traditional IDSs may generate a high volume of false positives, alerting security personnel to benign activities or legitimate network traffic.

The evolution of cybersecurity threats has prompted the exploration of innovative approaches to strengthen IDSs and improve their effectiveness in identifying and reducing cyber risks. One such strategy is to integrate into traditional IDSs machine learning, which enables systems to learn from data, extract insights, and make predictions. By enabling IDSs to identify previously unknown attacks, reduce false positives, and adapt to emerging threats, machine learning techniques offer a promising way to enhance IDS capabilities.

The integration of machine learning with traditional IDSs offers several potential advantages for cybersecurity defenses. By leveraging machine learning algorithms, IDSs can improve their detection accuracy, adaptability, and responsiveness to emerging threats. Because machine learning algorithms can evaluate massive amounts of network data, extract essential features, and identify abnormalities indicative of malicious activity, they reduce dependency on established rules and signatures. Furthermore, machine learning enables IDSs to continuously learn from new data and refine their models, improving detection accuracy over time and keeping defenses effective against changing cyberthreats.

Several machine learning algorithms have shown promise in the field of cybersecurity and are potential candidates for integration with traditional IDSs. One-dimensional convolutional neural networks (1D-CNNs) and long short-term memory (LSTM) networks have been successfully applied to malware detection, as studied by [5], achieving high detection accuracy and low false-positive rates. Unsupervised learning algorithms, such as one-class SVM and isolation forest, can identify deviations from typical network behavior, which could lead to the detection of unknown or zero-day attacks.

In this paper, we examine the cybersecurity challenges facing critical infrastructure sectors and explore the role of machine learning-enabled IDS models in mitigating cyber threats. We discuss the drawbacks of conventional IDSs and how machine learning approaches might address them while improving the effectiveness and resilience of these systems in protecting vital infrastructure assets. Additionally, we review existing research and case studies on the implementation of machine learning in IDSs for critical infrastructure protection, highlighting key findings and insights.

The present research evaluates the scalability, flexibility, and generalizability of machine learning-based intrusion detection systems by assessing how well machine learning algorithms detect known and unknown cyber threats in publicly available datasets. Furthermore, by addressing challenges such as data quality, model interpretability, and computational complexity in the context of these datasets, this research aims to provide insights and recommendations that can be applied across various critical infrastructure sectors.

Through empirical studies and rigorous experimentation on publicly available datasets, this research aims to advance intrusion detection techniques for critical infrastructure protection. Focusing on publicly available datasets also promotes transparency, reproducibility, and accessibility in cybersecurity research, thereby enabling broader participation and collaboration within the research community.

Research Questions

Through analysis of the existing literature and careful experimentation with critical infrastructure datasets and machine learning algorithms, we seek to answer the following questions:

- How effective are machine learning algorithms in detecting cyberattacks on critical infrastructure systems?

This question examines how effectively various machine learning algorithms detect cyberthreats to vital infrastructure. By examining their accuracy, precision, recall, and overall robustness, the study aims to determine these algorithms' applicability and reliability in real-world circumstances. Machine learning can only strengthen the security posture of vital systems against ever-evolving cyber threats if it detects those threats effectively.

- Which type of learning algorithm, supervised or unsupervised, is best suited for intrusion detection in critical infrastructure systems?

This question explores the comparative advantages of supervised and unsupervised learning algorithms in the context of intrusion detection for critical infrastructure. The question evaluates how each type of algorithm performs in detecting anomalies and cyberattacks, considering factors such as data availability, anomaly characteristics, and operational efficiency. The study aims to provide a clear understanding of which approach offers superior performance, aiding in the development of more effective security strategies for critical infrastructure protection.

2. IDSs and Machine Learning

In the context of cybersecurity, an intrusion is an attacker gaining illicit access to a device, network, or system. Cybercriminals infiltrate organizations covertly using increasingly advanced methods and strategies, alongside long-established evasion techniques such as coordinated attacks, fragmentation, address spoofing, and pattern deception. By monitoring system logs and network traffic, an IDS plays a crucial role in spotting and stopping these infiltration attempts.

An intrusion detection system (IDS) is a vital part of cybersecurity infrastructure, tasked with monitoring network traffic to detect potential threats and unauthorized activities. IDSs scrutinize system logs and network traffic to identify suspicious patterns or behaviors that could indicate security breaches. Upon detecting an anomaly, the system notifies IT and security teams, enabling them to investigate and address potential security threats, as highlighted in the study by [6]. While some IDS solutions merely report unusual activities, others can proactively respond by blocking malicious traffic. According to [7], IDS products are commonly implemented as software applications on corporate hardware or as part of network security measures. With the rise of cloud computing, numerous cloud-based IDS solutions are available to safeguard a company’s systems, data, and resources.

There are various types of IDS solutions, each with their own set of capabilities, as per [8]:

- A Network Intrusion Detection System (NIDS) is positioned at key locations within the network of an organization. It keeps track of all incoming and outgoing traffic, identifying malicious and suspicious activities across all connected devices.

- A Host Intrusion Detection System (HIDS) is deployed on individual devices connected to the internal network and the internet. It identifies both internal and external threats, such as malware infections and unauthorized access attempts.

- A Signature-based Intrusion Detection System (SIDS) gathers information about known attack signatures from a repository and uses this information to compare observed network packets to a list of known threats.

- An Anomaly-based Intrusion Detection System (AIDS) creates a baseline of typical network behavior and notifies administrators of any deviations from this norm, which may signal potential security breaches.

- A Perimeter Intrusion Detection System (PIDS) is deployed at the boundary of critical infrastructures. It detects intrusion attempts aimed at breaching the network perimeter.

- A Virtual Machine-based Intrusion Detection System (VMIDS) monitors virtual machines to identify attacks and malicious activities within these virtualized environments.

- A Stack-based Intrusion Detection System (SBIDS) is integrated into the network layer of an organization's systems to analyze packets before they reach the application layer.

In recent years, incorporating machine learning into IDSs has emerged as a promising way to improve the security of critical infrastructure. As noted in [9], the growing sophistication of cyberattacks against vital industries such as energy, transportation, and water supply makes conventional rule-based IDSs insufficient for identifying and countering new risks. Using machine learning algorithms, IDSs can scan enormous amounts of data more precisely, recognize intricate patterns, and distinguish legitimate activity from malicious activity. Machine learning also allows IDSs to identify and respond to attacks in real time, giving enterprises a proactive defense against intrusions. As a result, interest has grown in using machine learning algorithms to complement IDSs, allowing them to adapt to changing threat environments and mitigate newly emerging security risks.

When it comes to protecting vital infrastructure, IDSs can benefit greatly from machine learning. As described by [10], machine learning algorithms are better than conventional rule-based or signature-based approaches at analyzing large amounts of data, which makes them more accurate at detecting subtle patterns or abnormalities that could signal security concerns. In anomaly detection in particular, ML techniques can identify previously unknown or zero-day attacks by learning the normal behavior of network traffic or system activities, thus enhancing the IDS's ability to detect novel threats. Moreover, ML algorithms are scalable and adaptable: they can efficiently process large datasets and adjust to changing environments and emerging threats without manual intervention, ensuring continuous protection against evolving cyber threats. ML-based IDSs can operate in near real time, continuously monitoring network traffic and system logs to enable rapid detection of and response to security incidents. Furthermore, by examining contextual data and correlations between events, ML algorithms can reduce false positives, lowering alert fatigue and allowing security professionals to concentrate on genuine security issues.

These advantages underscore the effectiveness of ML in enhancing the capabilities of IDSs for critical infrastructure protection, providing a proactive defense against sophisticated cyber threats. Machine learning algorithms offer a wide range of tools and approaches for processing enormous amounts of data and identifying patterns, anomalies, and potential security breaches, as highlighted in the study by [11]. As studied by [12], algorithms can be classified according to their learning methodology and application domain, such as supervised learning, unsupervised learning, and semi-supervised learning approaches.

Supervised Learning: These algorithms are trained on labeled datasets in which each instance is assigned a defined class or outcome. They learn to classify new instances based on the patterns learned from these labeled examples. In IDSs, supervised learning helps distinguish between malicious and benign network traffic.
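As a minimal illustration of supervised classification, the following Python sketch labels a new observation by majority vote among its k nearest labeled neighbors (k-nearest neighbors, one of the models later compared in [25]). The feature names, values, and labels are hypothetical toy data rather than SWaT records, and a practical IDS would normally rely on an optimized library implementation.

```python
from collections import Counter
import math


def knn_predict(train, query, k=3):
    """Label `query` by majority vote among its k nearest labeled samples.

    `train` is a list of (feature_vector, label) pairs; the features here are
    hypothetical sensor readings and the labels "normal" or "attack".
    """
    # Sort labeled samples by Euclidean distance to the query point.
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    # Majority vote over the labels of the k closest samples.
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical labeled readings: (flow rate, tank level) -> class.
train = [
    ((2.5, 0.80), "normal"),
    ((2.6, 0.82), "normal"),
    ((2.4, 0.79), "normal"),
    ((0.1, 0.99), "attack"),
    ((0.2, 0.97), "attack"),
]
print(knn_predict(train, (2.55, 0.81)))  # normal
print(knn_predict(train, (0.15, 0.98)))  # attack
```

The choice of k trades sensitivity to individual mislabeled samples (small k) against blurring of small attack clusters (large k).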

Unsupervised Learning: Unsupervised learning algorithms analyze unlabeled data, attempting to identify hidden structures or patterns [13]. These algorithms are especially helpful for identifying unfamiliar or new threats that may not be represented in the training data. Unsupervised methods such as anomaly detection and clustering can help IDSs detect unusual activity that may signal a security breach.

Semi-supervised Learning: By using both labeled and unlabeled data for training, semi-supervised learning incorporates aspects of supervised and unsupervised learning, as per [10]. This method works well in situations where obtaining labeled data is difficult or costly. By harnessing the benefits of both supervised and unsupervised methods, semi-supervised learning in IDSs can raise detection accuracy.

Deep Learning: Deep learning models can analyze raw network traffic or system logs and extract high-level features that point to potential security risks. As mentioned in the study by [14], convolutional neural networks (CNNs) and recurrent neural networks (RNNs) are frequently employed in IDSs for tasks such as malware classification and intrusion detection.

Each of these approaches learns from existing data to predict outcomes on new data, and each finds its own applications. However, no single approach suits every dataset or environment; the nature and volume of the available data determine which approach is appropriate. Table 1 shows a comparison of machine learning algorithms.

Table 1.

Comparison of algorithms.

3. Literature Review

Through a systematic assessment of the existing literature, we aim to present a thorough overview of current knowledge about the use of machine learning in IDSs for critical infrastructure. By synthesizing findings from many sources, we seek to shed light on the strengths, limitations, and prospective uses of machine learning-based approaches to improving critical infrastructure security.

Our objective in this research is to analyze the existing literature and to place our own work within the larger context of information security and critical infrastructure protection. By critically analyzing the techniques and findings of prior studies, we hope to establish the framework for our own investigation, adding to the ongoing discussion about cybersecurity resilience in an increasingly linked world.

3.1. Background

Critical infrastructure systems, such as supervisory control and data acquisition (SCADA) systems and other cyber-physical systems, rely heavily on traditional intrusion detection systems to guard against cyberattacks, as noted in the study by [15]. These systems are designed to detect and respond to suspicious activities or anomalies within a network or computing environment.

Traditional IDS architectures typically consist of two main components: sensors and analyzers. Sensors monitor network traffic or system activities and generate alerts upon detecting suspicious patterns or behaviors. Analyzers process these alerts, applying predefined rules or signatures to identify potential threats. Typical IDS designs can use signature-based detection, anomaly-based detection, or hybrid approaches that combine the two.
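The division of labor between sensors and analyzers can be sketched in a few lines of Python. In this illustration the event fields, the signature list, and the normal operating range are all hypothetical; the analyzer applies a signature check followed by a simple range check, which is one way of realizing a hybrid design.

```python
from dataclasses import dataclass


@dataclass
class Event:
    """One structured observation emitted by a sensor (fields are hypothetical)."""
    source: str
    command: str
    value: float


# Signature rules: known-bad commands (hypothetical examples).
SIGNATURES = {"FORCE_VALVE_OPEN", "DISABLE_ALARM"}
# Anomaly rule: expected operating range for `value` (hypothetical bounds).
NORMAL_RANGE = (0.0, 100.0)


def sensor(raw_records):
    """Sensor: turn raw monitored records into structured events."""
    for source, command, value in raw_records:
        yield Event(source, command, float(value))


def analyzer(events):
    """Analyzer: signature check first, then an out-of-range check."""
    alerts = []
    low, high = NORMAL_RANGE
    for ev in events:
        if ev.command in SIGNATURES:
            alerts.append((ev.source, "signature", ev.command))
        elif not low <= ev.value <= high:
            alerts.append((ev.source, "anomaly", ev.value))
    return alerts


records = [
    ("plc1", "READ", 42.0),
    ("plc2", "DISABLE_ALARM", 10.0),
    ("plc3", "READ", 250.0),
]
print(analyzer(sensor(records)))
# [('plc2', 'signature', 'DISABLE_ALARM'), ('plc3', 'anomaly', 250.0)]
```

Because of the `elif`, an event matching a signature is reported only once, as a signature hit, even if its value is also out of range.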

The study by [16] provides insight into how both online and offline machine learning techniques can be applied to intrusion detection in cyber-physical systems (CPSs). It analyzes the performance of multiple ML techniques on CPS-specific datasets and highlights the importance of a balanced combination of offline and online techniques for enhancing CPS security.
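To illustrate what online learning means in this setting, the sketch below maintains a running baseline of a single sensor signal with Welford's algorithm and flags readings whose z-score exceeds a threshold. The baseline is updated one reading at a time instead of being retrained on a stored batch. The class name, the z-score rule, the threshold of 3, and the warm-up length are illustrative assumptions, not details taken from [16].

```python
import math


class OnlineZScoreDetector:
    """Running baseline for one numeric signal, learned online (Welford)."""

    def __init__(self, threshold=3.0, warmup=5):
        self.threshold = threshold  # z-score above which a reading is flagged
        self.warmup = warmup        # readings absorbed before any flagging
        self.n = 0                  # readings learned so far
        self.mean = 0.0             # running mean
        self.m2 = 0.0               # running sum of squared deviations

    def update(self, x):
        """Score x against the current baseline, then learn it if normal."""
        if self.n >= self.warmup:
            std = math.sqrt(self.m2 / (self.n - 1))  # sample standard deviation
            if std > 0 and abs(x - self.mean) / std > self.threshold:
                return True  # flagged readings are not folded into the baseline
        # Welford's incremental update of the mean and M2.
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)
        return False


# Hypothetical readings from one sensor; 25.0 is an injected spike.
readings = [10.0, 10.2, 9.9, 10.1, 10.0, 10.1, 9.8, 25.0, 10.0]
detector = OnlineZScoreDetector()
flags = [detector.update(r) for r in readings]
print(flags)  # [False, False, False, False, False, False, False, True, False]
```

Flagged readings are deliberately kept out of the baseline so that an attack cannot immediately shift the learned notion of normal; the trade-off is that a legitimate, persistent change in operating point will keep being flagged until the detector is reset or retrained.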

Similarly, the work by [17] surveys intrusion detection systems based on machine learning techniques for protecting critical infrastructure. The study discusses the advantages and challenges of employing ensemble models for intrusion detection, emphasizing the need for robust and adaptable detection mechanisms in critical infrastructure.

Additionally, Ref. [18] propose an approach based on a dual-isolation-forest algorithm for anomaly detection in industrial control systems (ICSs), demonstrating improved detection capabilities compared to existing approaches. The framework utilizes two isolation forest models trained on normalized raw data and pre-processed data, achieving enhanced performance in detecting anomalies [18].

Traditional IDSs face several challenges in effectively detecting modern cyber threats. These challenges include the inability to adapt to evolving attack techniques, limited scalability, high false-positive rates, and difficulties in handling encrypted traffic. Moreover, the reliance on predefined signatures or rules makes traditional IDSs susceptible to evasion tactics employed by modern threat actors.

In the realm of cybersecurity, machine learning has become a potent tool, providing novel methods for identifying and reducing a variety of cyber threats. As per [16], the increased frequency of complex cyberattacks has underscored the importance of adopting advanced technologies to enhance the security posture of organizations and critical infrastructures.

Machine learning encompasses a wide range of algorithms and approaches that enable systems to learn from data and make predictions or judgments, thereby reducing the programming overhead associated with infrastructure systems, as per the research by [19]. As stated by [10], machine learning techniques are used in cybersecurity for a variety of tasks, such as anomaly detection, malware detection, intrusion detection, and predictive analytics. According to [17], these methods can analyze enormous volumes of data, spot trends, and identify anomalies that might be signs of security breaches.

In the context of cybersecurity, an evaluation by [20] notes that ML techniques are applied across various domains, including network security, endpoint security, cloud security, and critical infrastructure protection. These techniques enable organizations to bolster their defense mechanisms, detect previously unknown threats, and proactively mitigate security risks, as reported by [17]. Traditional IDSs often rely on predefined signatures or rules to identify known threats, which limits their effectiveness against emerging and sophisticated attacks [16]. Machine learning-based IDSs offer a more dynamic and adaptive approach to threat detection, learning from evolving data patterns and adapting to new attack vectors [21].

Network traffic, system logs, and other security-related data sources can all be analyzed by machine learning algorithms to find anomalous activity that might indicate security vulnerabilities, as seen in the paper by [21]. By leveraging advanced analytics and pattern recognition techniques, ML-based IDSs can detect both known and unknown threats, enhancing the overall security posture of organizations.

3.2. Machine Learning Methods for Intrusion Detection

By automatically detecting harmful activity occurring within computer networks, machine learning (ML) techniques are crucial to enhancing the capabilities of intrusion detection systems (IDSs). For classification problems, supervised learning methods like artificial neural networks (ANNs) and support vector machines (SVMs) are frequently used. In these algorithms, the model learns from labeled training data to make predictions on new, unknown data, as mentioned by [22]. For anomaly detection where the model detects deviations from typical behavior in the absence of labeled training data, unsupervised learning techniques, such as clustering and association rule mining, are applied, as demonstrated in the study by [21]. Ref. [18], in their paper, state that semi-supervised and reinforcement learning techniques offer additional capabilities for detecting and responding to evolving cyber threats.

Some of the most commonly used algorithms that can enhance the capabilities of IDSs, according to the existing literature, are listed below:

3.2.1. Supervised Learning Algorithms

Supervised learning algorithms form the bedrock of IDS classification methodologies, leveraging labeled training data to distinguish normal from malicious network traffic. Support vector machines, as discussed in [18], offer robust classification by finding the optimal hyperplane that separates different classes of network traffic. Notably, SVMs handle high-dimensional data well and have demonstrated efficacy in detecting intrusions in critical infrastructure systems. Decision trees, as explored by [17], identify known attacks with high accuracy; however, their efficacy may decrease when they face novel, previously unseen attack vectors.
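Practical SVMs are usually trained with dedicated solvers. To keep the example dependency-free, the sketch below instead trains a linear SVM with the Pegasos stochastic sub-gradient method on the hinge loss. The function names, toy data, labels (+1 for attack, -1 for normal), and hyperparameters are hypothetical.

```python
import random


def train_linear_svm(samples, lam=0.01, epochs=200, seed=0):
    """Linear SVM trained with Pegasos (stochastic sub-gradient on hinge loss).

    samples: list of (feature_tuple, label) with label +1 (attack) or -1 (normal).
    A constant feature 1.0 is appended so the last weight acts as a bias;
    unlike some formulations, this bias is regularized too (a simplification).
    """
    rng = random.Random(seed)
    data = [(list(x) + [1.0], y) for x, y in samples]
    w = [0.0] * len(data[0][0])
    t = 0
    for _ in range(epochs):
        for x, y in rng.sample(data, len(data)):  # shuffled pass over the data
            t += 1
            eta = 1.0 / (lam * t)  # Pegasos step size
            margin = y * sum(wi * xi for wi, xi in zip(w, x))
            # Regularization shrinks w; margin violators also pull w toward y * x.
            w = [(1.0 - eta * lam) * wi for wi in w]
            if margin < 1.0:
                w = [wi + eta * y * xi for wi, xi in zip(w, x)]
    return w


def predict(w, x):
    """Return +1 or -1 depending on which side of the hyperplane x falls."""
    score = sum(wi * xi for wi, xi in zip(w, list(x) + [1.0]))
    return 1 if score >= 0 else -1


# Hypothetical, linearly separable toy data.
samples = [((2, 2), 1), ((3, 3), 1), ((2, 3), 1),
           ((-2, -2), -1), ((-3, -3), -1), ((-2, -3), -1)]
w = train_linear_svm(samples)
print(predict(w, (4, 4)), predict(w, (-4, -4)))  # 1 -1
```

The regularization strength `lam` controls the margin width: larger values favor a wider margin at the cost of more training errors.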

Artificial neural networks, studied by [20], can discern intricate patterns in network traffic data. Deep neural networks perform impressively in identifying both known and unknown cyber threats. However, training them efficiently requires large amounts of labeled data and substantial processing power.

3.2.2. Unsupervised Learning Algorithms

Algorithms for unsupervised learning, such as autoencoders and k-means clustering, eliminate the need for labeled training data and demonstrate proficiency in identifying abnormal patterns in network traffic.

K-means clustering, as mentioned by [21], groups network data based on similarity metrics, thereby facilitating the detection of outliers that diverge from normal behavior. Nevertheless, its efficacy may be limited by the need to specify the number of clusters in advance and by its sensitivity to noise.
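A minimal sketch of clustering-based outlier scoring follows. K-means (Lloyd's algorithm) groups normal training data, and a new point's distance to its nearest centroid serves as its anomaly score. The number of clusters k must still be chosen by hand. For reproducibility, the sketch uses a deterministic farthest-point initialization, a simplification of k-means++. The data, which mimic two hypothetical operating modes of a process, are invented for illustration.

```python
import math


def farthest_point_init(points, k):
    """Deterministic initialization: start at the first point, then repeatedly
    add the point farthest from all centroids chosen so far."""
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(max(points, key=lambda p: min(math.dist(p, c) for c in centroids)))
    return centroids


def kmeans(points, k, iterations=20):
    """Lloyd's k-means: alternate nearest-centroid assignment and mean update."""
    centroids = farthest_point_init(points, k)
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Move each centroid to the mean of its members; keep it if the cluster is empty.
        centroids = [
            tuple(sum(values) / len(members) for values in zip(*members)) if members else centroids[i]
            for i, members in enumerate(clusters)
        ]
    return centroids


def outlier_score(p, centroids):
    """Distance to the nearest centroid; large values suggest anomalies."""
    return min(math.dist(p, c) for c in centroids)


# Hypothetical normal data from two operating modes of a process.
normal = [(1.0, 1.0), (1.1, 0.9), (0.9, 1.1), (5.0, 5.0), (5.1, 4.9), (4.9, 5.1)]
centroids = kmeans(normal, k=2)
print(outlier_score((1.05, 1.0), centroids))  # small: close to a mode
print(outlier_score((3.0, 9.0), centroids))   # large: far from both modes
```

A decision threshold on the score, for example a multiple of the largest score seen on normal training data, would still have to be chosen for a given deployment.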

Autoencoders, as detailed by [23], are neural network architectures trained to reconstruct their input data. Anomalies are flagged when the reconstruction error exceeds a predefined threshold. Autoencoders effectively capture complex nonlinear relationships in data but may suffer from elevated false-positive rates in noisy environments.
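The thresholding step can be sketched independently of the network itself. Below, the threshold is set at a high quantile of reconstruction errors on normal training data, and any input whose error exceeds it is flagged. Because training an autoencoder is out of scope here, a hypothetical stand-in `reconstruct` function projects two redundant sensor readings onto the line where they agree, roughly what a one-unit linear bottleneck would capture for such perfectly correlated data. A real deployment would substitute a trained autoencoder's forward pass.

```python
def mse(x, x_hat):
    """Mean squared reconstruction error between an input and its reconstruction."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)


def fit_threshold(normal_samples, reconstruct, quantile=0.99):
    """Set the threshold at a high quantile of errors on normal training data."""
    errors = sorted(mse(x, reconstruct(x)) for x in normal_samples)
    index = min(len(errors) - 1, int(quantile * len(errors)))
    return errors[index]


def is_anomaly(x, reconstruct, threshold):
    """Flag x when its reconstruction error exceeds the threshold."""
    return mse(x, reconstruct(x)) > threshold


def project_onto_diagonal(x):
    """Hypothetical stand-in for a trained autoencoder: two redundant level
    sensors should agree, so 'reconstruct' both as their average."""
    m = (x[0] + x[1]) / 2
    return (m, m)


normal = [(0.50, 0.51), (0.62, 0.61), (0.70, 0.70), (0.40, 0.41), (0.55, 0.54)]
threshold = fit_threshold(normal, project_onto_diagonal)
print(is_anomaly((0.65, 0.65), project_onto_diagonal, threshold))  # False
print(is_anomaly((0.60, 0.90), project_onto_diagonal, threshold))  # True: sensors disagree
```

The quantile is the main tuning knob: a higher value lowers the false-positive rate on noisy normal data but lets subtler attacks pass.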

3.2.3. Semi-Supervised Learning Algorithms

Semi-supervised learning techniques combine aspects of supervised and unsupervised learning, using both labeled and unlabeled data to improve detection accuracy. The one-class SVM, as discussed in [23], is an example of a semi-supervised algorithm: it builds a model of normal network traffic and flags instances that deviate significantly from it as anomalies. This approach is particularly useful when labeled intrusion data are scarce, but it requires careful hyperparameter tuning to achieve optimal performance.

3.2.4. Strengths and Limitations

Each ML technique for intrusion detection has unique strengths and distinct limitations. Supervised learning algorithms excel at detecting known attacks but may falter against zero-day attacks, for which labeled training examples do not yet exist. Unsupervised learning algorithms can detect abnormalities without prior knowledge of attack patterns, although they may suffer from high false-positive rates, as stated by [12]. Semi-supervised learning algorithms strike a balance between supervised and unsupervised approaches but require careful parameter tuning and may be sensitive to the choice of training data.

3.2.5. Traditional IDS Baselines

To contextualize the performance of machine learning-based intrusion detection systems, it is important to consider how traditional IDS approaches operate. These include signature-based detection and threshold-based rule systems. Signature-based IDSs rely on predefined attack signatures, making them highly accurate for known threats but ineffective against zero-day attacks or evolving tactics. Threshold-based systems flag anomalies when metrics exceed preset limits but often suffer from high false-positive rates.

While these methods are computationally efficient and easily interpretable, their lack of adaptability to new or obfuscated threats limits their applicability in dynamic environments such as critical infrastructure. In contrast, ML-based IDSs offer the ability to learn complex patterns, generalize from data, and detect unknown threats, albeit with higher computational costs and reduced interpretability.

ML-based IDSs, while effective at detecting both known and novel threats, are not immune to adversarial manipulation. Attackers may craft inputs to evade detection (evasion attacks) or manipulate training data to degrade model performance (poisoning attacks). These risks are particularly significant in safety-critical environments such as industrial control systems. While this work does not explicitly evaluate adversarial robustness, we recognize it as a key area for future research.

3.2.6. Integration of Machine Learning with Traditional IDSs

Several approaches and frameworks have been proposed to integrate ML with traditional IDSs, aiming to leverage the strengths of both paradigms. Notably, the research by [22] presents a comparative study of AI-based IDS techniques in critical infrastructures. Their study evaluates the performance of ML-driven IDSs in recognizing intrusive behavior in collected traffic data. Similarly, [23] discuss anomaly detection in SCADA systems by the application of one-class classification algorithms, such as SVDD and KPCA, to effectively detect outliers and intrusions.

The integration of ML with traditional IDSs offers several benefits, including improved detection accuracy, adaptability to evolving threats, and scalability. For instance, Ref. [24] demonstrate in their study on real-time threat detection in critical infrastructure that ML models, particularly Logistic Regression, exhibit superior precision and recall in identifying potential hazards. Furthermore, ML-based IDSs can handle massive data volumes efficiently and spot intricate patterns that traditional rule-based techniques could miss.

The main benefit of ML-based IDSs is their capability to improve detection accuracy and adaptability to evolving threats. The research by [18] demonstrates the effectiveness of multiple isolation forest algorithms for anomaly detection in ICSs. Their study indicates superior performance in identifying anomalies and intrusions, thereby enhancing the security of critical infrastructure. Additionally, ML-based IDSs can scale effectively to handle large and diverse datasets, enabling comprehensive threat detection across various network environments.

However, this integration also presents several difficulties. One is the computational complexity of machine learning algorithms, particularly in real-time detection scenarios. Additionally, interpretability remains a concern, as complex models may lack transparency in their decision-making processes. Moreover, adversarial attacks targeting ML-based IDSs pose a significant threat, as highlighted in the research by [21]: adversaries can manipulate input data to deceive ML models, leading to false alarms or undetected intrusions.

In their research, Ref. [23] discuss the challenges of modeling cyberattacks in SCADA systems using one-class SVM classification algorithms. These challenges include the need for extensive training data to capture diverse attack scenarios and the need to optimize model parameters for robust performance.

3.3. Case Studies and Research Findings

Much research has been undertaken on the efficacy of machine learning-based IDSs in simulated real-world environments, with an emphasis on identifying cyberthreats in critical infrastructure settings. Ref. [25] conducted a study on real-time threat detection using machine learning and ICS datasets. Focusing on the accuracy of threat identification, they assessed three machine learning models: K-Nearest Neighbors, Random Forest, and Logistic Regression. Logistic Regression outperformed the other models, highlighting its value for strengthening safety controls in critical infrastructure; it achieved a precision of 92.5%, a recall of 87.3%, and a false-positive rate of 4.1%.

Ref. [25] also evaluated the models' real-time threat detection in terms of precision, recall, and false-positive rate. Logistic Regression achieved higher precision and recall than the other models, reflecting a well-balanced and accurate approach to predicting potential threats.

Similarly, Ref. [23] investigated anomaly detection in SCADA systems using one-class classification algorithms. Their study focused on the application of Support Vector Data Description (SVDD) and Kernel Principal Component Analysis (KPCA) in detecting anomalies in SCADA systems. The findings showed that these algorithms effectively detected outliers and intrusions, providing a tight description of normal system behavior and enhancing cybersecurity measures in industrial environments. SVDD achieved a precision of 89.6% and a recall of 85.2%, while KPCA achieved a precision of 88.3% and a recall of 82.7%.

In another study, Ref. [18] proposed a dual-isolation-forest-based attack detection framework for industrial control systems (ICSs). Their approach utilized two isolation forest models trained independently using normalized raw data and pre-processed data with Principal Component Analysis (PCA). The framework demonstrated improved performance in detecting attacks, highlighting its significance in ensuring the security of critical infrastructure systems. The proposed framework achieved a detection accuracy of 91.8% on the SWaT dataset and 87.5% on the WADI dataset.

In their comprehensive analysis of anomaly detection algorithms, Ref. [25] compared the performance of sophisticated models, including InterFusion, RANSynCoder, GDN, LSTM-ED, and USAD. The evaluation was based on key metrics such as precision, recall, false-positive rate, F1 score, and accuracy. The findings indicated variations in model performance across different datasets, with Logistic Regression consistently demonstrating superior performance in accurately identifying potential threats.

Overall, the research findings underscore the effectiveness of machine learning-based IDSs in detecting cyber threats in critical infrastructure environments. The studies highlight the importance of precision and accuracy in threat detection, paving the way for improved security protocols and proactive cybersecurity measures in industrial settings.

3.4. Related Work

This section provides an overview of key studies focusing on the detection of cyberattacks in industrial control systems (ICSs), particularly those using the Secure Water Treatment (SWaT) dataset. Each study contributes unique insights and methodologies, collectively advancing the understanding and combating of cyber threats in critical infrastructure.

Ref. [26] delve into the detection of cyberattacks on water treatment processes, utilizing real data from the Secure Water Treatment (SWaT) testbed. Their research presents a meticulous examination of the challenges posed by cyberattacks on industrial processes, particularly emphasizing the nuances of cyber–physical systems (CPSs) and the intricate communication networks involved. The study offers a comprehensive approach to attack detection, encompassing model-based and data-driven methods tailored to the SWaT process’s network architecture and components. By organizing the dataset and analyzing attacks in a structured manner, the study provides valuable insights into designing a monitoring system for SWaT, integrating limit value checks, safety rules, and various monitoring techniques. Moreover, the paper contributes to the field by exploring the complexities of applying model-based monitoring to the SWaT process, addressing challenges such as system complexity and time-varying sensor behavior. Overall, Ref. [26]’s research serves as a significant milestone in understanding and combating cyber threats in critical infrastructure, showcasing the practical application of fault diagnosis techniques and data-driven approaches in industrial settings.

Ref. [27]’s study focuses on the use of convolutional neural networks (CNNs) for detecting attacks on critical infrastructure, with a particular emphasis on the SWaT dataset. Their research highlights the efficacy of CNNs in analyzing complex patterns within industrial control environments, where they outperform previous methods on anomaly detection tasks. Using the SWaT dataset, the study shows that CNNs outperform recurrent networks in detecting abnormal behavior while being more computationally efficient. The research introduces a statistical window-based anomaly detection method, which predicts future values of data features and measures statistical deviations from those predictions to identify anomalies. The paper also compares the effectiveness of various neural network architectures for anomaly detection, underscoring the potential of CNNs to improve ICS security and dependability. Overall, Ref. [27] provide a solid methodology for identifying cyberattacks in critical infrastructure, an important contribution that opens possibilities for further study on anomaly detection in ICSs using sophisticated neural network architectures.

Ref. [28] introduce the Methodology for Anomaly Detection in Industrial Control Systems (MADICS), aiming to address the lack of standardized methodologies for detecting cyberattacks in ICS scenarios. Their work models ICS behavior by combining deep learning methods with semi-supervised anomaly detection. The steps of MADICS (dataset pre-processing, feature filtering, feature extraction, anomaly detection method selection, and validation) are designed specifically to address the difficulties encountered in ICS scenarios. Applied to the SWaT dataset, MADICS achieves state-of-the-art precision, recall, and F1-score values, surpassing those reported in previous works. The research highlights the importance of selecting appropriate hyperparameters and fine-tuning the LSTM model for effective anomaly detection. Moreover, the paper contributes to the discourse on using machine learning, particularly deep learning, for IDSs in critical infrastructure, emphasizing its relevance to the security challenges of ICSs. Overall, the work of [28] offers a thorough approach to identifying cyberattacks in ICSs, setting the stage for further research into improving the security and dependability of industrial operations.

Ref. [29]’s study develops a system-wide anomaly detection approach for industrial control systems (ICSs) using deep learning and correlation analysis. Published in June 2021, the research aims to identify abnormal behavior in the industrial control systems on which many industrial processes depend. By combining deep learning algorithms with correlation analysis, the technique detects anomalies across the system, enabling prompt intervention and risk-reduction measures and thereby improving overall security and reliability. The work is a collaboration between Queensland University of Technology and Griffith University. Overall, [29]’s work provides valuable insights into applying advanced technologies to enhance security and reliability in critical infrastructure, laying a foundation for future research on anomaly detection in industrial control systems.

3.5. Gaps in the Literature

While the presented literature offers valuable insights into the application of machine learning techniques for intrusion detection in critical infrastructure, several gaps remain unaddressed. Primarily, the existing studies largely focus on evaluating specific algorithms or methodologies in isolation, without a comprehensive comparative analysis that considers the combined performance of both supervised and unsupervised learning approaches. Additionally, while some research highlights the importance of adapting machine learning models to evolving cyber threats, there is limited exploration of frameworks that integrate continuous learning and real-time adaptability within IDSs. Furthermore, the challenges associated with high false-positive rates, particularly in unsupervised learning models, are acknowledged but not thoroughly addressed in terms of practical mitigation strategies. The need for scalable and interpretable models that can handle the complexity and diversity of critical infrastructure environments is also insufficiently explored, leaving a significant gap in developing robust and adaptable IDS solutions. Lastly, there is a lack of extensive case studies or real-world deployments of these advanced machine learning-based IDSs, which would provide practical validation and insights into their effectiveness in operational settings. These gaps underscore the necessity for future research to focus on integrated, adaptive, and scalable machine learning solutions tailored specifically to the dynamic landscape of critical infrastructure cybersecurity.

4. Research Methodology

This section describes the research pathway that will be implemented to explore the answers to our research questions and fulfil the research objectives. We will discuss the complete arc of this research, starting from the literature selection and its analysis to the way we select and work with the proposed dataset, and finally the anomaly detection algorithms and methods used for this research.

4.1. Literature Analysis

In conducting the literature review for this paper, a systematic methodology was used to ensure the comprehensive selection of relevant scholarly sources. A search strategy was applied across reputable academic databases and platforms such as ResearchGate, Google Scholar, IEEE Xplore, and the library website of Auckland University of Technology (AUT). It used a diverse set of keywords, including “critical infrastructure cybersecurity”, “machine learning in cybersecurity”, “intrusion detection systems”, “anomaly detection”, and others, to capture various facets of the research topic. To maintain relevance and currency, papers published before 2012 were excluded, and only papers directly related to the protection of critical infrastructure were considered. These stringent inclusion and exclusion criteria ensured that the selected literature aligned closely with the research objectives. The collected papers were organized according to several criteria to facilitate review and analysis. First, papers were categorized by the specific critical infrastructure they addressed, such as energy, transportation, or healthcare. Second, studies using common datasets, such as the SWaT dataset, were grouped separately for focused analysis. Third, papers were separated by the type of machine learning algorithm employed, distinguishing between supervised and unsupervised approaches. This systematic organization enabled a structured and comprehensive examination of the literature. Several challenges arose during the search, including the sheer volume of available literature, which required careful selection and prioritization based on relevance and quality. Maintaining consistent search terms and managing duplicate results also posed logistical challenges. These challenges were mitigated through systematic screening and consultation with the study supervisor to ensure the inclusion of high-quality and pertinent literature.

4.2. Data Collection

In today’s highly digitized and interconnected world, operators of critical infrastructure facilities are extremely reluctant to share any data that could reveal how these facilities operate, their mechanisms, or any vulnerabilities the data might expose. The most widely used datasets for cybersecurity analysis are the DARPA and NSL-KDD datasets, as highlighted by Pinto et al. (2023) [17]. The drawback of these datasets for our research is their age: the DARPA dataset [30] dates from 1999 and the NSL-KDD dataset [31] from 2009. To keep our research abreast of the latest information available in this domain, we decided to use the iTrust SWaT dataset.

The SWaT testbed dataset, sourced from the iTrust [32] website, is a comprehensive data collection that includes network traffic logs and readings from all 51 sensors and actuators in the simulated water treatment environment. It covers both normal operation, spanning 3.5 h, and periods containing six distinct attack scenarios. These attacks were crafted by the iTrust research team using attack models that consider the intent space of cyber–physical systems (CPSs). The SWaT testbed itself, established in 2015 with funding from the Ministry of Defence (MINDEF) of Singapore and guidance from PUB, Singapore’s national water agency, is a high-fidelity emulation of a modern water treatment facility. Its construction and design adhere to industry standards, making it a valuable asset for rigorous cybersecurity research and experimentation. The portion of the SWaT dataset used in this study consists primarily of time-series data generated by the physical-process sensors and actuators of an industrial control system (ICS), including readings from flow indicators, level sensors, motorized valves, and chemical dosing pumps. It does not include raw network traffic or packet-level communication data; therefore, all anomaly detection in this study was performed on controller and sensor/actuator data rather than on network-layer telemetry.

This choice aligns with real-world operational priorities in critical infrastructure, where process integrity is often monitored more directly through physical variables rather than abstract network flow. The rationale for focusing on ICS-level telemetry is to identify attacks that manifest through physical anomalies or unauthorized actuator behavior. Access to the SWaT dataset was facilitated through a formal request process via the iTrust website. Upon submission of the request form, access to the dataset was granted, and a download link was provided via the requester’s academic email address. It was imperative to adhere to the terms of usage outlined by iTrust, which included giving explicit credit to the organization in any resulting publications and refraining from sharing the dataset with others without explicit permission.

4.3. Data Pre-Processing

This section describes how the dataset was prepared for anomaly detection with several machine learning and deep learning models. The data come directly from the chosen critical infrastructure scenario, and the main goals of pre-processing were to search the dataset for erroneous or corrupted values, handle missing values appropriately, encode categorical features, scale continuous features for model training, and divide the dataset into training, validation, and test sets.

The first step is to examine the dataset to find and eliminate erroneous or corrupted entries, which tend to occur during the ICS’s warm-up or transient stages. Plotting each feature against time allows outlier values to be detected visually and removed from the dataset. Missing values are also detected and handled: rather than imputing them with the mean or median, or removing the affected samples, we flag them as invalid so that their possible contribution to our understanding of cyberattacks is preserved.

The second goal is to prepare categorical features for machine learning models using one-hot encoding (OHE), which enhances their learning capabilities [33]. OHE replaces each categorical feature with a set of binary indicator features, one for each distinct categorical value. In addition, features that only report the states of certain sensors are identified, and label encoding is applied to them, mapping each state to an integer code that machine learning algorithms can consume directly. To integrate these features into a neural network design, an embedding layer may be used to convert the set of OHE categorical features into a vector of continuous features.
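The two encodings described above can be sketched in a few lines of NumPy. This is an illustrative sketch only: the helper names are hypothetical, and the example column (an actuator reporting one of three integer states) is an assumption, since the paper does not specify which SWaT tags were encoded or which library was used.

```python
import numpy as np


def one_hot(values):
    """One-hot encode a 1-D sequence of categorical values.

    Returns (categories, matrix), where matrix[i, j] == 1 exactly when
    values[i] == categories[j]; categories are the sorted distinct values.
    """
    values = np.asarray(values)
    categories = np.unique(values)
    # Broadcasting compares every sample against every category at once.
    matrix = (values[:, None] == categories[None, :]).astype(np.int8)
    return categories, matrix


def label_encode(values):
    """Map each distinct value to an integer code 0..k-1 (in sorted order)."""
    categories, codes = np.unique(np.asarray(values), return_inverse=True)
    return categories, codes.ravel()


# Hypothetical actuator-state column, e.g. a valve reporting state 0, 1 or 2.
valve_state = [1, 2, 2, 0, 1]
cats, ohe = one_hot(valve_state)
_, codes = label_encode(["open", "closed", "open"])
print(cats)
print(ohe)
print(codes)
```

One-hot encoding avoids implying an order between states, at the cost of one column per state; label encoding keeps a single column, which suits binary on/off status features.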

Next, continuous features are scaled using either standardization or min–max scaling. Standardization subtracts the mean and divides by the standard deviation, which works well for approximately normally distributed data. Min–max scaling instead maps each feature to the range [0, 1] using the minimum and maximum values in the training dataset, preserving the shape of the original distribution. These pre-processing steps leave the dataset properly formatted and normalized, making it suitable for training machine learning models.
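A minimal sketch of both scalers follows, assuming (as the text states for min–max scaling) that the statistics are computed on the training split only and then reused on later data. The function names are hypothetical; in practice a library scaler would typically be used. Note that test values outside the training range map outside [0, 1].

```python
import numpy as np


def fit_standardizer(train):
    """Return a function that standardizes data with the training mean and std."""
    train = np.asarray(train, dtype=float)
    mean = train.mean(axis=0)
    std = train.std(axis=0)
    std[std == 0] = 1.0  # leave constant features unscaled instead of dividing by 0
    return lambda x: (np.asarray(x, dtype=float) - mean) / std


def fit_minmax(train):
    """Return a function that maps each feature to [0, 1] using training min/max."""
    train = np.asarray(train, dtype=float)
    lo = train.min(axis=0)
    span = train.max(axis=0) - lo
    span[span == 0] = 1.0  # constant features map to 0 instead of dividing by 0
    return lambda x: (np.asarray(x, dtype=float) - lo) / span


train = np.array([[0.0, 10.0], [5.0, 20.0], [10.0, 30.0]])
test = np.array([[20.0, 10.0]])
minmax = fit_minmax(train)
print(minmax(train))  # each column spans exactly [0, 1]
print(minmax(test))   # unseen values can fall outside [0, 1]
```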

The final step is to divide the dataset into training, validation, and test sets such that the training set contains only normal data and the test set contains both normal and anomalous data. Because some features depend on how other features change over time, the partitioning preserves the temporal order of the dataset: the training set contains the initial samples, and the test set contains the remaining samples. The validation set is carved out of the training data, usually following a 70/30 split, although other split sizes were also tested in some cases.
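The order-preserving split can be sketched as follows. The helper name is hypothetical, labels are assumed to be 0 for normal and 1 for attack, and taking the validation set from the *end* of the training segment (rather than sampling it randomly) is an assumption made here to keep time order intact; the paper does not state which portion was used.

```python
import numpy as np


def chronological_split(X, y, n_train, val_fraction=0.3):
    """Split time-ordered samples into train/validation/test without shuffling.

    The first n_train rows form the training pool, which must contain only
    normal operation (label 0). The last val_fraction of that pool becomes the
    validation set, and every row after n_train becomes the test set.
    """
    X, y = np.asarray(X), np.asarray(y)
    if y[:n_train].any():
        raise ValueError("training segment contains attack labels")
    n_fit = int(round(n_train * (1.0 - val_fraction)))
    train = (X[:n_fit], y[:n_fit])
    val = (X[n_fit:n_train], y[n_fit:n_train])
    test = (X[n_train:], y[n_train:])
    return train, val, test


# Ten time-ordered samples: normal operation first, attacks only near the end.
X = np.arange(20).reshape(10, 2)
y = np.array([0, 0, 0, 0, 0, 0, 0, 1, 0, 1])
(train_X, train_y), (val_X, val_y), (test_X, test_y) = chronological_split(X, y, n_train=7)
print(len(train_X), len(val_X), len(test_X))
```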

4.4. Algorithm Selection

The selection of appropriate algorithms for anomaly detection in industrial control systems (ICSs), such as those managing critical infrastructure, is a nuanced process that requires careful consideration of several factors: the nature of the dataset, the types of anomalies to be detected, and the operational constraints of the monitored systems. For our project, which uses the SWaT July 2019 dataset, the criteria for selecting machine learning algorithms were robustness, accuracy, real-time processing capability, and the ability to handle imbalanced data. The SWaT July 2019 dataset consists of sensor and actuator data from a water treatment testbed and includes normal operational data and several documented attacks. Its time-series data exhibit a notable class imbalance, with far more normal operations than anomalies. These characteristics meant that our selection had to concentrate on algorithms that scale to large datasets, handle class imbalance, and perform well on time-series data.

4.4.1. Long Short-Term Memory

Long Short-Term Memory (LSTM) is a recurrent neural network (RNN) architecture designed to capture dependencies over time in sequential input. Conventional RNNs struggle to retain information over long periods because of the vanishing gradient problem; LSTM models address this with gated units called “memory cells” that control the flow of information inside the network. These memory cells make LSTM models very good at modelling sequential data with long-range dependencies, as noted in the study by [34], because they can selectively retain or forget information over long periods of time.

The research by [35] provides great insight into this algorithm. Three “gates” make up these memory cells: input, output, and a forget gate. LSTM networks can selectively retain or forget information over time thanks to these gates, which regulate the flow of information into and out of the cell.

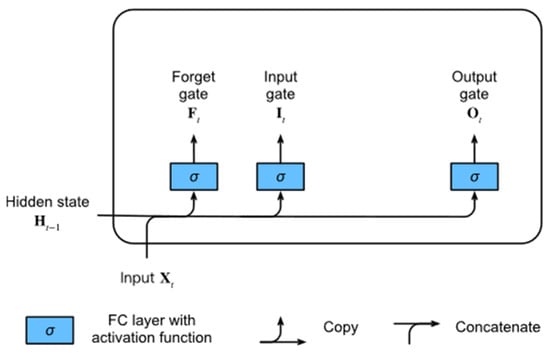

Forget Gate: In an LSTM cell, as shown in Figure 1, the first step in handling a new input is deciding which information to discard from the cell state. This decision is made by the forget gate, which takes the current input and the previous hidden state as inputs and outputs a value between 0 and 1 for each element of the cell state. A 0 means “completely discard this information”, and a 1 means “completely retain this information”.

Figure 1. Long Short-Term Memory cell.

Input Gate: The input gate then decides what new information is added to the cell state. It consists of two layers: a sigmoid layer that determines which values to update, and a tanh layer that generates a vector of new candidate values to add to the cell state. The sigmoid layer outputs values between 0 and 1 that weight the importance of each component of the candidate vector.

Cell State Update: The cell state is updated by combining the information retained from the previous cell state (as decided by the forget gate) with the new candidate values weighted by the input gate. The result is a new cell state that keeps pertinent information while discarding unnecessary information.

Output Gate: Lastly, the output gate decides what the cell outputs. Taking the current input and the previous hidden state as inputs, it produces a filtered version of the cell state (the tanh of the cell state, weighted by the gate's sigmoid output), which becomes the new hidden state passed on to the next time step.
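The four steps above correspond to a single LSTM time step, sketched below in NumPy for illustration. The weight layout (all four gates stacked in one matrix in the order forget, input, candidate, output) is an arbitrary convention chosen for this sketch, and the weights are random rather than trained; deep learning frameworks use their own internal layouts.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """One LSTM time step.

    W: (4H, D) input weights, U: (4H, H) recurrent weights, b: (4H,) biases.
    Rows are stacked as [forget; input; candidate; output] (a convention
    chosen for this sketch).
    """
    H = h_prev.shape[0]
    z = W @ x_t + U @ h_prev + b
    f = sigmoid(z[:H])            # forget gate: fraction of c_prev to keep
    i = sigmoid(z[H:2 * H])       # input gate: weight on each candidate value
    g = np.tanh(z[2 * H:3 * H])   # candidate values (tanh layer)
    o = sigmoid(z[3 * H:])        # output gate
    c_t = f * c_prev + i * g      # cell state update
    h_t = o * np.tanh(c_t)        # filtered cell state -> new hidden state
    return h_t, c_t


# Usage: run a random (untrained) cell over a short multivariate sequence.
rng = np.random.default_rng(0)
D, H, T = 3, 4, 5                 # input features, hidden units, time steps
W = rng.normal(scale=0.1, size=(4 * H, D))
U = rng.normal(scale=0.1, size=(4 * H, H))
b = np.zeros(4 * H)
h, c = np.zeros(H), np.zeros(H)
for x_t in rng.normal(size=(T, D)):
    h, c = lstm_step(x_t, h, c, W, U, b)
print(h.shape)
```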

Because these gates regulate the flow of information, LSTM networks can efficiently capture long-range dependencies in sequential data, which makes them especially helpful for sequence modelling tasks such as time-series prediction [36], natural language processing, and speech recognition.

4.4.2. Random Forest

For classification tasks in machine learning, Random Forest is a widely used ensemble learning technique. It belongs to the family of decision tree-based algorithms and is renowned for being robust, flexible, and efficient when working with large, complicated datasets, as indicated in the study by [5]. The basic underlying idea in Random Forest is that during the training phase, multiple decision trees are generated and their predictions are aggregated to increase overall accuracy and generality.

The first step is to create multiple decision trees, each constructed from a subset of the training data and a random selection of features. The randomness in the training data is introduced through bagging (bootstrap aggregating): each decision tree is trained on a bootstrap sample drawn at random, with replacement, from the training set. According to [37], training each tree on a different data subset reduces overfitting and improves the model’s ability to generalize to new, unseen data.

In addition to using random subsets of the training data, Random Forest further increases randomness by considering only a random subset of features at each split of each decision tree [38]. This feature randomization makes the trees more diverse, so the ensemble is more resilient and less prone to overfitting. The combination of bagging and feature randomness makes each tree in the forest unique, representing a different portion of the data and strengthening the ensemble as a whole.

The prediction stage begins once the forest of decision trees has been built. For a given input sample, each decision tree independently predicts a class label, and the final prediction is made by majority vote: the class that receives the most votes across the trees is selected. By pooling the collective insight of the individual trees, this voting scheme yields more reliable and accurate predictions.
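The three ingredients described above (bootstrap samples, a random feature subset at each split, and a majority vote) can be sketched on top of scikit-learn's `DecisionTreeClassifier`. This is a hypothetical from-scratch illustration, not the configuration used in this study; the data are synthetic. Note that scikit-learn's own `RandomForestClassifier` implements the same ideas but aggregates by averaging the trees' predicted class probabilities rather than by hard voting.

```python
import numpy as np
from sklearn.tree import DecisionTreeClassifier


def fit_forest(X, y, n_trees=25, seed=0):
    """Train n_trees decision trees, each on its own bootstrap sample."""
    X, y = np.asarray(X), np.asarray(y)
    rng = np.random.default_rng(seed)
    n = len(X)
    trees = []
    for _ in range(n_trees):
        idx = rng.integers(0, n, size=n)        # bootstrap: n draws with replacement
        tree = DecisionTreeClassifier(
            max_features="sqrt",                # random feature subset at every split
            random_state=int(rng.integers(2**31 - 1)),
        )
        trees.append(tree.fit(X[idx], y[idx]))
    return trees


def predict_forest(trees, X):
    """Hard majority vote over the trees (labels must be non-negative ints)."""
    votes = np.stack([t.predict(X) for t in trees])   # shape (n_trees, n_samples)
    return np.array([np.bincount(col).argmax() for col in votes.T])


# Hypothetical two-class data: "normal" (0) vs. "attack" (1) feature vectors.
rng = np.random.default_rng(1)
X = np.vstack([rng.normal(0, 1, (100, 4)), rng.normal(4, 1, (100, 4))])
y = np.array([0] * 100 + [1] * 100)
forest = fit_forest(X, y)
print((predict_forest(forest, X) == y).mean())
```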

Random Forest’s ensemble learning approach improves the model’s overall performance. By combining the predictions of individual trees, Random Forest offsets their individual weaknesses and improves generalization. The randomness introduced during training also acts as a form of regularization, reducing overfitting and helping the model perform well on unseen data as well as on training data. Random Forest is therefore an effective and widely used method for classification. Its capacity to handle high-dimensional data, manage missing values, and deliver consistent performance across a range of datasets makes it an appropriate option for ML/DL applications. Its ensemble of multiple decision trees, combined through a voting mechanism, offers a dependable and efficient way to solve classification problems.

4.4.3. One-Dimensional CNNs

One-dimensional CNNs are deep learning models designed to process sequential data, which makes them suitable for tasks such as anomaly detection and time-series analysis in critical infrastructure systems [33]. Whereas standard CNNs operate on 2D data such as images, 1D CNNs handle one-dimensional data, in our case time series of sensor measurements. A 1D CNN’s architecture is made up of convolutional, pooling, and fully connected layers. To identify local patterns in the input data, the convolutional layers slide filters (also known as kernels) across the data. Each filter performs a convolution operation, which computes the dot product between the filter and a window of the input data at each position.

In the context of anomaly detection in critical infrastructure systems, the convolutional layers of a 1D CNN can learn to identify patterns associated with normal operating behavior and distinguish them from patterns indicative of anomalies [33]. For example, a filter could learn to respond to abrupt increases in sensor readings that are indicative of certain kinds of attack. Pooling layers are frequently used after convolutional layers to reduce the dimensionality of the feature maps, lower computational cost, and make the features less sensitive to minor variations in the input data. Standard pooling operations include average pooling, which takes the average value, and max pooling, which takes the highest value from each feature-map segment.
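The convolution and pooling operations described above can be illustrated on a single channel in NumPy. The "rising edge" kernel below is hand-set for illustration (in a trained 1D CNN the kernel values are learned), and the sensor trace is hypothetical. As in most deep learning libraries, the "convolution" is implemented as a sliding dot product without flipping the kernel.

```python
import numpy as np


def conv1d(x, kernel, bias=0.0):
    """'Valid' 1-D convolution as used in CNN layers (i.e. cross-correlation):
    the dot product of the kernel with each window of the input."""
    x = np.asarray(x, dtype=float)
    kernel = np.asarray(kernel, dtype=float)
    k = len(kernel)
    return np.array([x[i:i + k] @ kernel for i in range(len(x) - k + 1)]) + bias


def relu(x):
    return np.maximum(x, 0.0)


def max_pool1d(x, size=2):
    """Non-overlapping max pooling; a trailing partial window is dropped."""
    n = len(x) // size
    return np.asarray(x)[: n * size].reshape(n, size).max(axis=1)


# Hand-set "rising edge" kernel applied to a hypothetical trace with a sudden jump.
readings = [1, 1, 1, 1, 5, 5, 5, 5]
feature_map = relu(conv1d(readings, [-1.0, 1.0]))
pooled = max_pool1d(feature_map, size=2)
print(feature_map)
print(pooled)
```

The jump between the fourth and fifth readings produces the only non-zero response, and max pooling keeps that response while halving the length of the feature map.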

After the convolutional and pooling layers, the feature maps are typically flattened and fed into one or more fully connected layers, which perform high-level reasoning on the extracted features. The output of the network’s last layer is usually produced by a sigmoid activation function, which yields a binary classification indicating the presence or absence of an anomaly, or by a softmax activation function, which produces a probability distribution over several classes. The study by [39] outlines that 1D CNNs are very good at identifying anomalies in time-series data because they can recognize hierarchical patterns and local relationships in sequential data. They do not require manual feature engineering because they automatically extract relevant features from raw data. This capability is particularly beneficial for complex, high-dimensional data such as the SWaT July 2019 dataset, where sensor readings and actuator states are monitored continuously.
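The two output activations mentioned above are sketched below. The logit values and the 0.5 decision threshold are illustrative assumptions; in a deployed detector the threshold would normally be tuned on validation data.

```python
import numpy as np


def sigmoid(z):
    """Binary output: probability that the input window is anomalous."""
    return 1.0 / (1.0 + np.exp(-np.asarray(z, dtype=float)))


def softmax(z):
    """Multi-class output: a probability distribution over classes."""
    z = np.asarray(z, dtype=float)
    e = np.exp(z - z.max(axis=-1, keepdims=True))  # shift for numerical stability
    return e / e.sum(axis=-1, keepdims=True)


logit = 2.0                         # hypothetical final-layer pre-activation
p_anomaly = sigmoid(logit)
is_anomaly = p_anomaly > 0.5        # 0.5 threshold is an illustrative choice
probs = softmax([1.0, 2.0, 0.5])    # e.g. normal vs. two hypothetical attack types
print(p_anomaly, is_anomaly, probs.sum())
```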

4.4.4. Unsupervised Algorithms

We seek to evaluate two well-known unsupervised methods for anomaly detection in intrusion detection systems (IDSs) for critical infrastructure: isolation forests and the one-class support vector machine (SVM). We will compare their performance with that of the supervised algorithms on the SWaT dataset.

The one-class SVM detects outliers by learning a decision function from predominantly normal instances. It constructs a boundary around the training data, typically a hyperplane in a kernel-induced feature space (or, in the related SVDD formulation, a hypersphere). The objective is to separate the data points from the origin with the maximum margin; points falling outside this boundary are classified as anomalies. As seen in [40], this method can discover subtle anomalies in complex datasets because it works well in high-dimensional spaces and can use the kernel trick to model non-linear relationships.
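As a minimal sketch of the train-on-normal-only workflow, the example below uses scikit-learn's `OneClassSVM` with an RBF kernel on synthetic data standing in for scaled sensor vectors. The values of `nu` and `gamma` are illustrative, not the settings used in this study. In scikit-learn, `nu` is an upper bound on the fraction of training points treated as outliers and a lower bound on the fraction of support vectors.

```python
import numpy as np
from sklearn.svm import OneClassSVM

rng = np.random.default_rng(0)
# Hypothetical stand-in for normal-operation feature vectors (already scaled).
X_train = rng.normal(0.0, 1.0, size=(500, 3))
# Five more normal-looking points plus one far-away point at the end.
X_test = np.vstack([rng.normal(0.0, 1.0, size=(5, 3)), np.full((1, 3), 8.0)])

# nu: bounds the fraction of training outliers; gamma: RBF kernel width
# ("scale" derives it from the number of features and the data variance).
ocsvm = OneClassSVM(kernel="rbf", nu=0.05, gamma="scale").fit(X_train)

pred = ocsvm.predict(X_test)              # +1 = inside boundary (normal), -1 = anomaly
scores = ocsvm.decision_function(X_test)  # signed score; negative = outside boundary
print(pred, scores.round(3))
```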

Isolation forests take a different approach, explicitly isolating anomalies using an ensemble of random decision trees. Each tree repeatedly chooses a random feature and a random split value, partitioning the data until every point is isolated or a preset height limit is reached [18]. Because anomalies are few and distinct, they are easier to isolate and therefore have shorter path lengths. The anomaly score is computed from a point's path length averaged over all trees. Isolation forests are efficient and scalable, making them well suited to the large datasets found in critical infrastructure systems. Although both isolation forests and Random Forests consist of many trees, their purposes differ: isolation forests use random splits to isolate anomalies and identify outliers by their path lengths, whereas Random Forests combine the predictions of many trees to improve classification or regression accuracy. Isolation forests are simple and scalable, but compared with one-class SVMs they may overlook more complex anomalies; one-class SVMs require more resources but can model complex data distributions.
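The path-length idea can be tried with scikit-learn's `IsolationForest`, sketched below on synthetic data (not SWaT) with illustrative settings. Its `score_samples` method returns the opposite of the anomaly score from the original isolation forest paper, so lower values correspond to shorter average path lengths and therefore to more anomalous points.

```python
import numpy as np
from sklearn.ensemble import IsolationForest

rng = np.random.default_rng(0)
X_train = rng.normal(0.0, 1.0, size=(1000, 3))   # hypothetical normal data
X_test = np.array([[0.0, 0.0, 0.0],              # typical point
                   [6.0, 6.0, 6.0]])             # easy-to-isolate point

iso = IsolationForest(n_estimators=100, contamination="auto", random_state=0)
iso.fit(X_train)

pred = iso.predict(X_test)          # +1 = normal, -1 = anomaly
scores = iso.score_samples(X_test)  # lower = shorter average path = more anomalous
print(pred, scores.round(3))
```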

4.5. Evaluation Metrics

In any research endeavor, evaluation metrics are essential for assessing how well tools, algorithms, or procedures perform. Our evaluation includes quantitative measures of accuracy, precision, recall, and F1 score, which are essential for understanding the effectiveness of the chosen machine learning algorithms. The most relevant metrics for machine learning algorithms are described below. Which metric best answers our research questions depends on the context of the study and on the datasets used. Not every metric gives a faithful picture of how well a model is suited to anomaly detection.

Accuracy: Accuracy is the percentage of correctly classified instances in the dataset. It is computed as the number of correctly identified instances (true positives plus true negatives) divided by the total number of instances. Although accuracy offers a broad indicator of model performance, it can be misleading on imbalanced datasets in which one class dominates the other.

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

where

- TP = true positives (correctly classified attacks);

- TN = true negatives (correctly classified normal operations);

- FP = false positives (normal operations incorrectly classified as attacks);

- FN = false negatives (actual attacks incorrectly classified as normal operations).

Precision measures the correctness of a model’s positive predictions. It is defined as the ratio of true-positive (TP) results to the total number of positive predictions made by the model, which includes both true positives and false positives (FP). In our context, precision tells us how many of the instances flagged as attacks or anomalies were actually attacks on the system.

Recall (also known as sensitivity or true-positive rate) indicates how well a model finds all relevant instances in a dataset. It is the ratio of true positives to all actual positive instances, that is, true positives plus false negatives (FN):

$$\text{Recall} = \frac{TP}{TP + FN}$$

In our context, recall tells us what fraction of all attacks carried out on the system were successfully detected.

The F1 score combines precision and recall into a single measure of a model's performance. It is especially useful when the classes are imbalanced, which is common in anomaly detection tasks. A high F1 score means that the model detects a large share of the actual positives while producing few false positives, which shows a strong balance between precision and recall. It is computed as the harmonic mean of precision and recall:

$$F_1 = 2 \cdot \frac{\text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$$

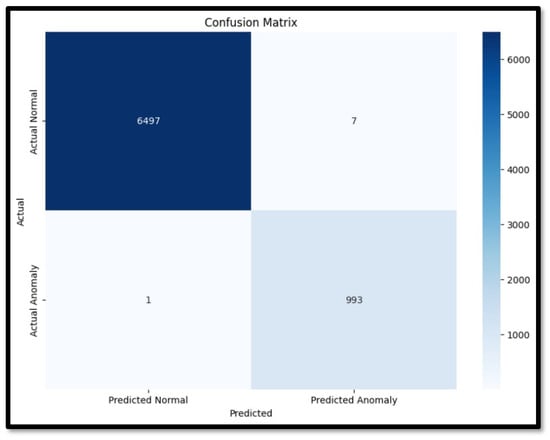

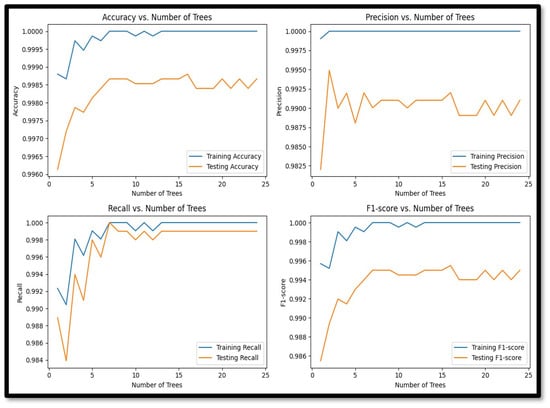

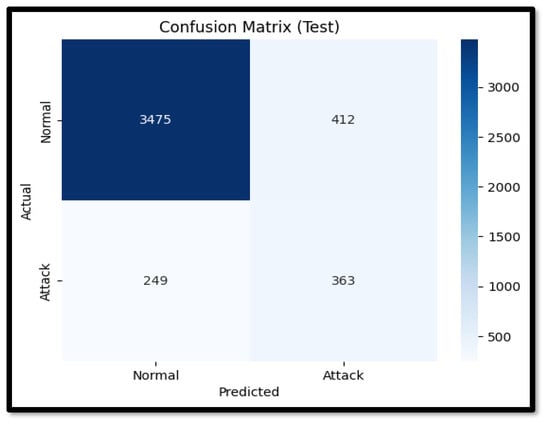

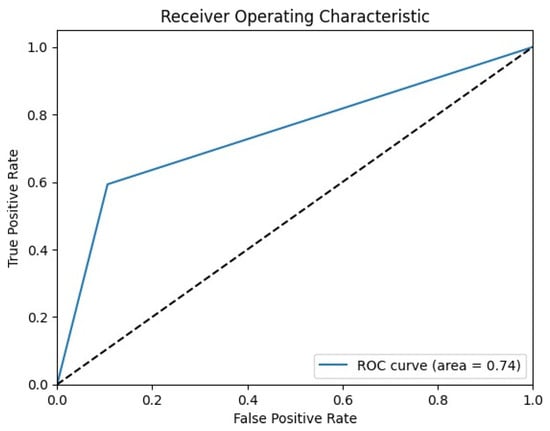

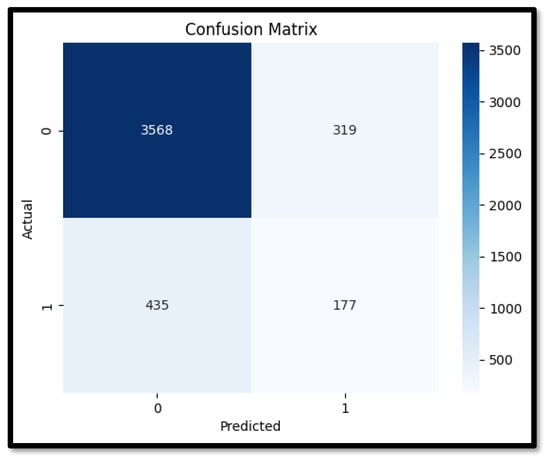

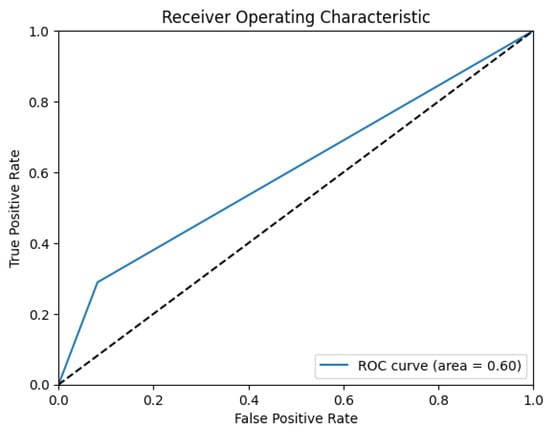

A confusion matrix is a table of the counts of true-positive, true-negative, false-positive, and false-negative predictions. It summarizes the performance of a classification algorithm.
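The short sketch below applies these definitions. It counts the four confusion-matrix cells from lists of labels and computes the metrics from those counts. It assumes binary labels, with 1 for attack and 0 for normal operation. When a denominator is zero it returns 0.0, a convention chosen here rather than taken from the study. The function names are illustrative.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count TP, TN, FP, FN, treating `positive` (attack) as the positive class."""
    tp = tn = fp = fn = 0
    for t, p in zip(y_true, y_pred):
        if p == positive:
            if t == positive:
                tp += 1   # attack correctly flagged
            else:
                fp += 1   # normal operation flagged as attack
        else:
            if t == positive:
                fn += 1   # attack missed
            else:
                tn += 1   # normal operation correctly passed
    return tp, tn, fp, fn

def metrics(tp, tn, fp, fn):
    """Accuracy, precision, recall, and F1 from confusion-matrix counts."""
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}

# Hypothetical labels: 6 normal rows, 4 attack rows
y_true = [0, 0, 0, 0, 0, 0, 1, 1, 1, 1]
y_pred = [0, 0, 0, 0, 0, 1, 1, 1, 1, 0]
counts = confusion_counts(y_true, y_pred)   # (TP, TN, FP, FN)
print(counts, metrics(*counts))
```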

4.6. Preferred Metric

In the context of our research, the F1 score is designated as the preferred metric of evaluation. This choice is justified by several critical factors. First, in anomaly detection, it is essential to not only identify true anomalies (high recall), but also to ensure that the anomalies detected are indeed correct (high precision). The F1 score offers a balanced metric that takes into account both factors because it is the harmonic mean of recall and precision. This balance is crucial in our scenario, where false positives can lead to unnecessary alarms and operational disruptions, while false negatives can allow security breaches to go undetected.
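Consider a hypothetical detector with precision 0.9 and recall 0.1. It is usually right when it raises an alarm, but it misses nine out of ten attacks. Comparing the arithmetic mean with the harmonic mean shows why the harmonic mean matters (the values are illustrative only):

$$
\begin{aligned}
P &= 0.9, \quad R = 0.1 \\
\frac{P + R}{2} &= 0.5 \\
F_1 &= \frac{2PR}{P + R} = \frac{2 \cdot 0.9 \cdot 0.1}{0.9 + 0.1} = 0.18
\end{aligned}
$$

The arithmetic mean rates this detector at 0.5. The F1 score of 0.18 is dominated by the weaker of the two values, so it shows that most attacks go undetected.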

Second, the SWaT dataset, like many real-world datasets, has a severe class imbalance, with far fewer anomalies than normal instances. In such situations, accuracy can be misleading, because a model can reach high accuracy simply by predicting the majority class. The F1 score is more informative because it focuses on performance on the minority class (anomalies). This makes it a better choice for assessing our models.
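The effect described above can be reproduced with a trivial model that always predicts "normal". The minimal standard-library sketch below uses a hypothetical split of 950 normal and 50 attack samples, which is not the actual SWaT class ratio:

```python
# Hypothetical, illustrative class split (not the SWaT ratio)
y_true = [0] * 950 + [1] * 50     # 0 = normal, 1 = attack
y_pred = [0] * len(y_true)        # majority-class model: always "normal"

tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)

accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
precision = tp / (tp + fp) if tp + fp else 0.0   # no positive predictions at all
recall = tp / (tp + fn) if tp + fn else 0.0      # every attack is missed
f1 = (2 * precision * recall / (precision + recall)
      if precision + recall else 0.0)

print(accuracy, f1)  # 0.95 0.0
```

The model scores 95% accuracy without detecting a single attack. The F1 score of zero exposes this failure.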

Third, the F1 score provides a single measure that captures the balance between precision and recall. This makes it particularly useful for comparing different models and hyperparameter settings clearly and concisely. It also simplifies assessment by summarizing a model's ability to detect anomalies in one number.

Finally, in critical infrastructure systems, both false positives and false negatives have significant consequences. False negatives can let security breaches go unnoticed, with serious repercussions. False positives can trigger needless responses and even shutdowns. The F1 score helps us find a model that balances these risks, which supports reliable and accurate anomaly detection. Given these considerations, the F1 score is the preferred evaluation metric for the present study, which examines the impact of machine learning on critical infrastructure systems using the SWaT July 2019 dataset.

By focusing on the balance between precision and recall, the F1 score ensures that our models are both accurate in identifying true anomalies and robust against generating false alarms. This makes it an ideal choice in the assessment of the usability of machine learning algorithms.

5. Experimental Analysis and Discussion

5.1. System Description

The Secure Water Treatment (SWaT) testbed is a physical testbed built for research and experimentation in industrial control system (ICS) security. It emulates a water treatment plant and replicates the processes and infrastructure found in real-world critical infrastructure systems.

The SWaT testbed consists of various components that replicate the functionalities and processes of a typical water treatment plant. These components include the following:

Pumps and Valves:

The testbed comprises pumps and valves responsible for controlling the passage of water through various phases of the treatment process. These components are critical for regulating water pressure and ensuring the smooth operation of the system.

Tanks and Reservoirs:

Tanks and reservoirs store water at various stages of the treatment process. Their function is essential in preserving a steady flow of water and facilitating the different treatment procedures.

Sensors and Actuators:

Sensors are distributed throughout the testbed in order to gather information regarding various parameters like water flow, pressure, temperature, and chemical levels. Actuators are devices that respond to sensor data by controlling the operation of pumps, valves, and other components.

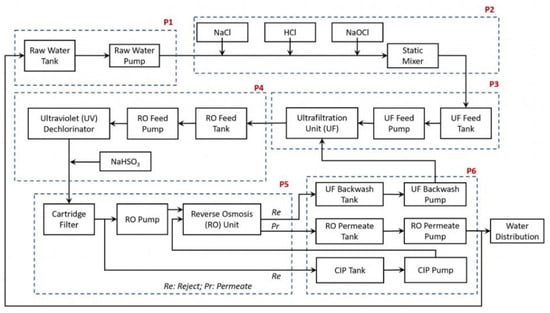

Control Systems:

The control systems in the SWaT testbed oversee the operation of the entire water treatment process. They take data from sensors, process the data using preset algorithms, and then instruct actuators to change the parameters of the system as necessary. The process testbed chart is shown in Figure 2.

Figure 2.

SWaT testbed process chart.

The Secure Water Treatment (SWaT) testbed emulates the operation of a contemporary water treatment plant. It comprises six distinct processes that purify water for potable use. Each stage plays a vital part in the treatment process, removing impurities so that clean, safe water is delivered to users.

Raw Water Intake (P1):

This stage controls the inflow of untreated water into the treatment system. A valve regulates the flow of water from the inlet pipe into the raw water tank, where it waits for further processing.

Chemical Disinfection (P2):

In this stage, the raw water is chemically treated for disinfection. Chlorination takes place at a chemical dosing station, which adds chlorine to the water to eliminate pathogens such as bacteria.

Ultrafiltration (P3):

The water treated in the previous stages is sent to an Ultra-Filtration (UF) feed water storage unit. Here, a UF feed pump propels the water through UF membranes, removing suspended solids, microorganisms, and other impurities.

Dechlorination and Ultraviolet Treatment (P4):

Before passing through the reverse osmosis (RO) unit, the water is dechlorinated to remove any residual chlorine. Ultraviolet (UV) lamps controlled by a PLC are used for this purpose. Sodium bisulphite may also be added to regulate the Oxidation Reduction Potential (ORP) of the water.

Purification by Reverse Osmosis (P5):

After dechlorination, the water is filtered through three stages of reverse osmosis. The RO membranes remove dissolved salts, organic compounds, and other contaminants, producing purified water that is then stored in a tank.

Ultrafiltration Membrane Backwash and Cleaning (P6):

This stage is responsible for maintaining the efficiency of the UF membranes by periodically cleaning them through a backwash process. A UF backwash pump initiates the cleaning cycle, which is triggered automatically based on differential pressure measurements across the UF unit.

Throughout these stages, various sensors and actuators collect data and control the operation of the equipment. PLCs (Programmable Logic Controllers) play a central role in orchestrating the treatment process, monitoring sensor readings, and executing control algorithms to ensure optimal performance and safety. Sensor data is logged and transmitted for real-time monitoring and analysis, facilitating the detection of anomalies or deviations from expected behavior.
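The sense-process-actuate cycle that the PLCs carry out can be illustrated with a simple on/off (hysteresis) level controller for a tank inlet valve. This is a hypothetical sketch. The class name, the setpoints (in arbitrary units), and the readings are invented for illustration and are not SWaT's actual control logic or configuration.

```python
from dataclasses import dataclass

@dataclass
class TankLevelController:
    """Hypothetical on/off (hysteresis) controller for a tank inlet valve."""
    low_setpoint: float    # open the inlet valve at or below this level
    high_setpoint: float   # close the inlet valve at or above this level
    valve_open: bool = False

    def step(self, level: float) -> bool:
        """Read one level-sensor value and return the resulting valve command."""
        if level <= self.low_setpoint:
            self.valve_open = True
        elif level >= self.high_setpoint:
            self.valve_open = False
        # Between the two setpoints the previous command is kept (hysteresis),
        # which avoids rapid toggling of the actuator.
        return self.valve_open

ctrl = TankLevelController(low_setpoint=500.0, high_setpoint=800.0)
readings = [600.0, 450.0, 700.0, 820.0, 650.0]   # illustrative sensor values
commands = [ctrl.step(level) for level in readings]
print(commands)  # [False, True, True, False, False]
```

The controller acts only on the level it is given. A falsified sensor reading would therefore change the valve command directly, and deviations of this kind are what the anomaly detectors in this study aim to flag.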

5.2. Experimental Setup

The experiments for this research were conducted in a computing environment equipped to handle the substantial computational demands of training and evaluating machine learning models on the SWaT dataset. The setup featured an ASUSTek AMD Ryzen 7 5800H (R) CPU @ 2.20 GHz with 16 virtual CPUs, 16 GB of system memory, and an NVIDIA GeForce GTX 1650 GPU with 4 GB of VRAM (manufactured in Mumbai, India). The software environment used Python 3.10.12 as the primary programming language, with TensorFlow 2.13.0 for deep learning models. Traditional machine learning models were implemented and tuned with Scikit-learn. NumPy 1.24.4 and Pandas 2.1.1 were used for data manipulation and preprocessing, and Matplotlib 3.7.2 and Seaborn 0.12.2 were used to visualize results and performance metrics. The code was written and executed in the Microsoft Visual Studio Code IDE 1.88.0. This setup allowed the models to be trained and evaluated effectively, providing a reliable basis for studying machine learning for anomaly detection in critical infrastructure.

5.3. Attack Profile

Table 2 summarizes the attack profile of the SWaT testbed. Its key characteristics are listed below:

Table 2.

SWaT testbed attack profile.

- Plant operation time: 12:35:00 to 16:39:00.

- Plant start time: 12:35:00 (GMT +8).

- Normal run without any attacks: 12:35:00 to 15:06:59.

- Attack period: 15:07:00 to 16:15:07.

- Plant stop time: 16:39:00.
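The lengths of the windows in Table 2 follow from simple time arithmetic. The sketch below computes them with Python's `datetime`, treating each end time as exclusive. The attack window is the overall period during which attacks were launched, not the combined duration of the individual attacks.

```python
from datetime import datetime

FMT = "%H:%M:%S"

def seconds_between(start: str, end: str) -> int:
    """Elapsed seconds between two same-day HH:MM:SS timestamps (end exclusive)."""
    delta = datetime.strptime(end, FMT) - datetime.strptime(start, FMT)
    return int(delta.total_seconds())

normal_run = seconds_between("12:35:00", "15:06:59")     # 9119 s, about 2 h 32 min
attack_window = seconds_between("15:07:00", "16:15:07")  # 4087 s, about 1 h 8 min
operation = seconds_between("12:35:00", "16:39:00")      # 14640 s, 4 h 4 min
print(normal_run, attack_window, operation)
```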

5.4. Data Pre-Processing of the SWaT Dataset

The SWaT dataset, received in XLSX format, went through several preprocessing steps to make it suitable for analysis. First, it was converted to CSV format for ease of handling. The data were then cleaned, which included removing extraneous markings and headers. Quality checks were also run to identify and address missing values (NaN) and outliers.
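A missing-value check of this kind can be sketched with the standard `csv` module. The column names and values below are illustrative stand-ins, not an excerpt of the real file. With pandas, `df.isna().sum()` gives the equivalent per-column counts after loading the file with `pd.read_csv`.

```python
import csv
import io

# Illustrative CSV excerpt; column names and values are hypothetical
raw = """Timestamp,FIT101,LIT101,MV101
12:35:00,2.43,522.8,2
12:35:01,,523.1,2
12:35:02,2.44,NaN,2
"""

def missing_counts(text: str) -> dict:
    """Count empty or 'NaN' cells in each column of a CSV string."""
    reader = csv.DictReader(io.StringIO(text))
    counts = {name: 0 for name in reader.fieldnames}
    for row in reader:
        for name, value in row.items():
            v = (value or "").strip()
            if v == "" or v.lower() == "nan":
                counts[name] += 1
    return counts

print(missing_counts(raw))
```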

Normalization techniques were applied to features exhibiting high variance, ensuring uniformity and comparability across the dataset. Furthermore, features with discrete states were encoded using techniques such as one-hot encoding and label encoding to facilitate compatibility with machine learning algorithms.
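The paragraph above does not name the normalization method. The sketch below therefore assumes min-max scaling to [0, 1] for continuous sensor readings and one-hot encoding for discrete actuator states. The values and state codes are hypothetical.

```python
def min_max_scale(values):
    """Rescale a numeric column to [0, 1] (assumed choice of normalization)."""
    lo, hi = min(values), max(values)
    if hi == lo:                      # constant column: nothing to scale
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]

def one_hot(values):
    """Map each discrete state to a 0/1 indicator vector, one slot per observed state."""
    states = sorted(set(values))
    index = {s: i for i, s in enumerate(states)}
    encoded = [[1 if index[v] == i else 0 for i in range(len(states))]
               for v in values]
    return states, encoded

levels = [500.0, 650.0, 800.0]    # illustrative sensor readings
valve_states = [0, 1, 2, 1]       # illustrative actuator state codes
scaled = min_max_scale(levels)
states, encoded = one_hot(valve_states)
print(scaled, states, encoded)
```

In a train/test setting, the minimum and maximum would normally be computed on the training split only and reused for the test split, so that no information leaks from the evaluation data.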

Sensor and actuator columns whose values remained constant throughout the dataset were considered for removal, to reduce the risk of overfitting the machine learning models. Such unchanging values could bias the models and lead to inaccurate results.
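Finding constant columns amounts to checking whether a column takes more than one distinct value. The minimal sketch below does this for hypothetical column names and readings held in a plain dictionary:

```python
def drop_constant_columns(columns: dict) -> dict:
    """Keep only the columns that take at least two distinct values."""
    return {name: vals for name, vals in columns.items() if len(set(vals)) > 1}

# Hypothetical columns; names and values are illustrative only
columns = {
    "LIT101": [521.0, 523.5, 525.1],  # varies: kept
    "P102": [1, 1, 1],                # constant: dropped
    "MV101": [1, 2, 2],               # varies: kept
}
kept = drop_constant_columns(columns)
print(list(kept))
```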

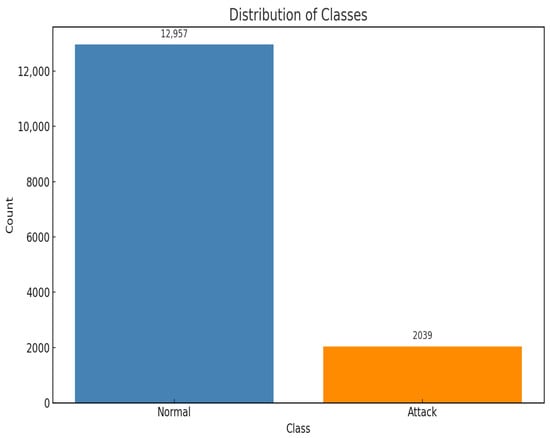

Timestamps were also standardized to a consistent datetime format to make manipulation and analysis easier. To support supervised learning, an "Attack" column was added to the dataset, labelling the rows that fall within periods of simulated attacks. The classification algorithms used for anomaly detection required this column. The labels were derived from the attack start and end times that iTrust provides in the dataset documentation. After the labels were added, the numbers of normal and attack instances were checked, which revealed a severe class imbalance between the two classes.

Class imbalance is a common problem in machine learning [40], particularly when one class ("normal" instances) heavily outnumbers the other ("attack" instances). Addressing it is important to prevent biased models and ensure accurate predictions. According to [41], several methods are commonly used to mitigate class imbalance before machine learning algorithms are applied for classification. Resampling techniques balance the class distribution in one of two ways. Oversampling adds minority-class instances, for example by replicating them. Undersampling removes some majority-class instances. Class imbalance can also be addressed at the algorithm level, for example with ensemble techniques such as Random Forest and Gradient Boosting. Boosting methods in particular give more weight during training to misclassified instances, which often belong to the minority class. Cost-sensitive training assigns a different cost to misclassification errors for each class, which encourages models to focus on correctly predicting minority-class instances, as noted by [42]. Finally, anomaly detection techniques treat the minority class as anomalies or outliers and flag deviations from the majority class. Figure 3 shows the distribution of classes in the dataset.

Figure 3.

Normal and attack instances in the dataset.
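The labelling step and the imbalance check described above can be sketched together. The attack interval and timestamps below are hypothetical placeholders, not the iTrust attack schedule. The `balanced_class_weights` helper follows the inverse-frequency formula behind scikit-learn's `class_weight="balanced"` option, n_samples / (n_classes * count of each class). It illustrates the cost-sensitive option discussed above and is not part of this study's pipeline, which did not rebalance the classes.

```python
from collections import Counter
from datetime import datetime

def parse(ts: str) -> datetime:
    """Parse an HH:MM:SS timestamp (date omitted for brevity)."""
    return datetime.strptime(ts, "%H:%M:%S")

def label_attacks(timestamps, attack_intervals):
    """Return 1 for rows inside any inclusive [start, end] attack interval, else 0."""
    spans = [(parse(s), parse(e)) for s, e in attack_intervals]
    labels = []
    for ts in timestamps:
        t = parse(ts)
        labels.append(1 if any(s <= t <= e for s, e in spans) else 0)
    return labels

def balanced_class_weights(labels):
    """Inverse-frequency weights: n_samples / (n_classes * count_of_class)."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {cls: n / (k * cnt) for cls, cnt in counts.items()}

# Hypothetical rows and a hypothetical attack interval
timestamps = ["15:06:58", "15:06:59", "15:07:00", "15:07:01", "15:08:30", "15:09:00"]
intervals = [("15:07:00", "15:07:01")]
labels = label_attacks(timestamps, intervals)   # [0, 0, 1, 1, 0, 0]
print(Counter(labels))                          # class counts reveal the imbalance
weights = balanced_class_weights(labels)        # minority class gets the larger weight
print(weights)
```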

Ref. [43] states that the methods above can help mitigate class imbalance but may not always suit real-world critical infrastructure datasets. The concerns are data integrity, the impact on performance, and the cost of misclassification. Generating synthetic data or undersampling the majority class can compromise data integrity, while oversampling may lead to overfitting and poor generalization. This view is reinforced by [44], which notes that misclassifying attacks as normal behavior (false negatives) can have severe consequences in critical infrastructure, so the costs of misclassification errors must be weighed carefully. Therefore, when working with real-world critical infrastructure datasets, it is essential to evaluate the implications of addressing class imbalance. The methods chosen should prioritize model robustness, reliability, and interpretability. Expert input and domain knowledge play a crucial role in deciding how to handle class imbalance while limiting the risks of misclassification. For these reasons, we chose not to rebalance the classes, so that the results would stay as close as possible to real-world scenarios.

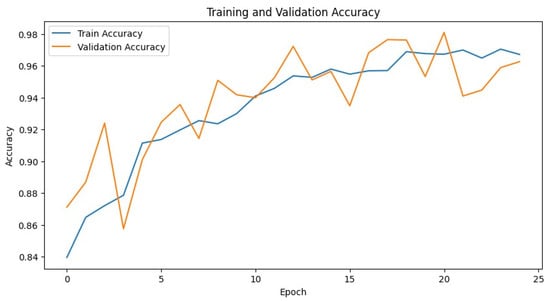

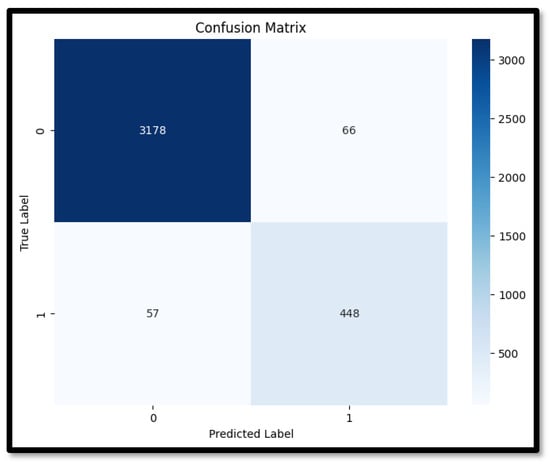

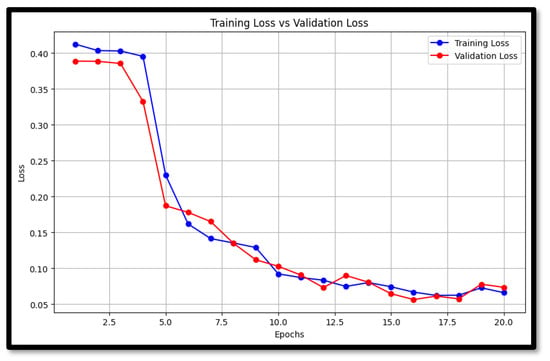

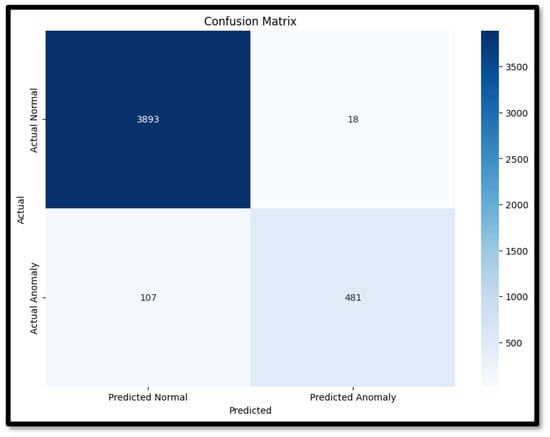

5.5. Feature Selection