Abstract

Unmanned aerial vehicles (UAVs) are a key driver of the low-altitude economy, where precise localization is critical for autonomous flight and complex task execution. However, conventional global positioning system (GPS) methods suffer from signal instability and degraded accuracy in dense urban areas. This paper proposes a lightweight and fine-grained visual UAV localization algorithm (FIM-JFF) suitable for complex electromagnetic environments. FIM-JFF integrates both shallow and global image features to leverage contextual information from satellite and UAV imagery. Specifically, a local feature extraction module (LFE) is designed to capture rotation, scale, and illumination-invariant features. Additionally, an environment-adaptive lightweight network (EnvNet-Lite) is developed to extract global semantic features while adapting to lighting, texture, and contrast variations. Finally, UAV geolocation is determined by matching feature points and their spatial distributions across multi-source images. To validate the proposed method, a real-world dataset UAVs-1100 was constructed in complex urban electromagnetic environments. The experimental results demonstrate that FIM-JFF achieves an average localization error of 4.03 m with a processing time of 2.89 s, outperforming state-of-the-art methods by improving localization accuracy by 14.9% while reducing processing time by 0.76 s.

1. Introduction

With highly flexible and autonomous flight characteristics, UAVs are widely used in fields such as battlefield support, logistics and distribution, and agricultural monitoring, and they show significant application value in complex environments [1,2]. During mission execution, positioning technology affects not only mission efficiency but also the flight safety and reliability of UAVs. Currently, most UAVs rely on GPS for positioning and navigation.

The expansion of UAV application scenarios, particularly their increasingly frequent use in urban environments, plays a significant role in promoting urban infrastructure optimization and intelligent management. When operating at low altitudes, UAVs encounter significant navigation challenges due to dense urban structures and complex electromagnetic interference in built environments. In such environments, GPS signals are prone to interference and multipath effects, which can cause conventional positioning, navigation, and communication systems to fail [3].

To solve this problem, visual positioning technology has gradually developed and become a pivotal technology to support autonomous UAV flight [4,5,6]. Currently, computer-vision-based UAV localization methods usually rely on deep learning algorithms to achieve localization by matching remotely sensed images with images taken by UAVs [7]. Visual localization methods are classified into four categories: localization based on global features, local features, multi-source data fusion, and temporal information [8]. Localization algorithms based on global and local features are matched by extracting overall or local features of the image, respectively, to determine the approximate position of the UAVs. However, such methods often lack sufficient accuracy and produce significant positioning errors, making them challenging for high-precision applications [9]. Multi-source data fusion positioning methods combine visual information with other sensor data (e.g., inertial measurement unit (IMU) and light detection and ranging (LiDAR)). Despite the improved accuracy, the system complexity, hardware cost, and power consumption are high, which limits its promotion in lightweight and low-cost applications [10]. While temporal information-based positioning methods employing advanced deep learning architectures (e.g., Transformer [11] and EfficientNet [12]) demonstrate improved stability, their practical deployment in UAV applications is constrained by substantial computational overhead, excessive model complexity, and limited real-time performance, particularly detrimental for high-frequency operations on resource-constrained platforms [13]. 
In addition, according to statistics, most existing datasets are collected at lower flight altitudes under relatively homogeneous environmental and altitude conditions, and datasets covering complex urban environments are lacking. This makes it challenging to validate the effectiveness of visual positioning techniques in environments with complex electromagnetic interference.

This paper proposes a lightweight, fine-grained UAV positioning algorithm that achieves high-precision positioning in complex urban electromagnetic environments through visual assistance, entirely free of dependence on GPS. The algorithm obtains rich contextual information by deeply fusing the local and global features of satellite and UAV images. Given the limited computing resources of UAVs, a lightweight network, EnvNet-Lite, is designed to extract global semantic features, achieving accurate positioning while keeping computation efficient. To address the limitations of existing datasets, we built the UAVs-1100 dataset, which is long-range, multi-altitude, and multi-environment and therefore better matches actual flight conditions, and we verified our algorithm on it. The main contributions of this paper are as follows.

(1) Design a network structure for joint feature extraction that simultaneously extracts high-level local and global semantic features from images to generate more representative and robust feature descriptors.

(2) Propose a lightweight EnvNet-Lite with environment-aware adaptivity. By dynamically adjusting the attention weights, the system can enhance its adaptability to environmental features such as lighting, texture, and contrast and improve its performance in complex scenes.

(3) A dataset of urban UAV flights in complex electromagnetic environments was produced. To ensure the diversity and representativeness of the data, it included images taken by UAVs in the flight altitude range of 200–500 m and covering a wide range of environments and resolutions.

In the next section, we review related work on the visual localization of UAVs. Section 3 describes the algorithmic model of the proposed method in detail. Section 4 describes the dataset, the experimental setup, the experimental results, and the comparison and ablation experiments.

2. Related Works

2.1. Image Matching Techniques

Deep-learning-based image-matching algorithms are categorized into end-to-end and non-end-to-end algorithms [14,15,16]. End-to-end image-matching algorithms use neural network models to carry out the whole matching process and adapt to diverse image features in complex scenes, but they rely on large-scale, high-quality data, and their matching accuracy degrades when data are insufficient or noisy [17]. Sun et al. [18], building on the strong performance of the Transformer [11] in handling long sequential data and complex dependencies, introduced the Transformer into image matching and established the local feature matching with Transformers (LoFTR) algorithm to better capture detailed image features. Wang et al. [19], inspired by LoFTR, proposed a feature-enhanced Transformer-based image-matching method that builds the keypoint-topology relationship network (KTR-Net) to recognize detailed features. However, in regions that lack prominent visual features, such as sea surfaces or forests, it still adapts poorly. Jiang et al. [20] designed the anchor matching Transformer (AMatFormer) to achieve image matching by exploiting the dependencies between different features. Non-end-to-end approaches combine conventional image-matching algorithms with neural networks, which can ensure matching accuracy while reducing the number of parameters. Tian et al. [21] proposed Describe-to-Detect (D2D), which uses an existing descriptor model and selects salient locations with high information content as key points to extract features accurately. However, point-by-point comparison lacks robustness when features change. Based on this, Pautrat et al. [11] proposed self-supervised occlusion-aware line description and detection (SOLD2), which uses line features to achieve feature matching. Inspired by multistage models, Fan et al. [22] proposed a coarse-to-fine matching method (3MRS): Log-Gabor convolution is first used to produce a coarse-grained description of features, and a neural network model based on Log-Gabor convolution then completes the image matching. However, 3MRS performs poorly under scene changes. To address the difficulty of matching image features under scene changes, Yao et al. [23] proposed co-occurrence filter space matching (CoFSM), which constructs a co-occurrence filter (CoF) scale space to extract feature points and then eliminates abnormal matches using the Euclidean distance function and a consistency sampling algorithm to complete image matching.

Despite their performance in complex scenes, existing deep-learning-based image-matching algorithms still have limitations. End-to-end methods rely on large-scale, high-quality data and tend to perform poorly when data are insufficient or noisy, resulting in low matching accuracy and poor adaptability, especially in areas lacking significant visual features (e.g., sea surfaces or forests). Non-end-to-end methods lack robustness to feature changes caused by scene changes and perform poorly in dynamic scenes [24].

2.2. UAV Localization Techniques

To solve the problem that UAVs cannot be accurately positioned when GPS is unavailable or weak, Choij et al. [25] proposed a UAV localization method based on the building ratio map (BRM). It extracts building areas from UAV images, calculates the building occupancy rate in the corresponding image frame, and matches it with the building information on the numerical map. Hou et al. [26] proposed a UAV visual retrieval and localization method that integrates local and global deep learning features from known satellite orthophotos, using ConvNeXt as the backbone network combined with generalized mean pooling to extract global features of satellite and UAV images for retrieval. Sui et al. [27] proposed a monocular vision multipoint localization algorithm based on Tikhonov regularization and the L-curve, which achieves localization from image content alone, without external assistance.

However, a monocular vision system cannot directly determine the size of objects in the image, which leads to the scale uncertainty problem. In this regard, Zhuang et al. [28] reduced the scale uncertainty by combining visual airborne laser scanning and mapping (ALSM) techniques with known geophysical models or other sensor data to estimate size information. However, illumination variations and bad weather conditions can degrade image quality and localization accuracy. Liu et al. [29] explored the application of multi-source image-matching algorithms in UAV visual localization and proposed new evaluation frameworks, algorithmic improvements, and combination strategies to improve adaptability to illumination and weather variations. Li et al. [30] proposed an adaptive semantic aggregation (ASA) module that segments an image into multiple parts and learns the association between each part and the image block. Dai et al. [31] described a novel UAV autonomous localization method called finding point with image (FPI) that effectively improves the accuracy and robustness of localization.

Although existing UAV localization techniques have progressed, they still have limitations. First, most rely on specific features or existing reference data, so localization accuracy drops significantly when features are scarce or unevenly distributed. Second, some algorithms perform poorly under illumination changes and bad weather and are easily affected by image quality, leading to localization errors. Overall, existing techniques must be optimized to adapt to complex environments, improve real-time performance, and handle diverse features [3].

3. Methods

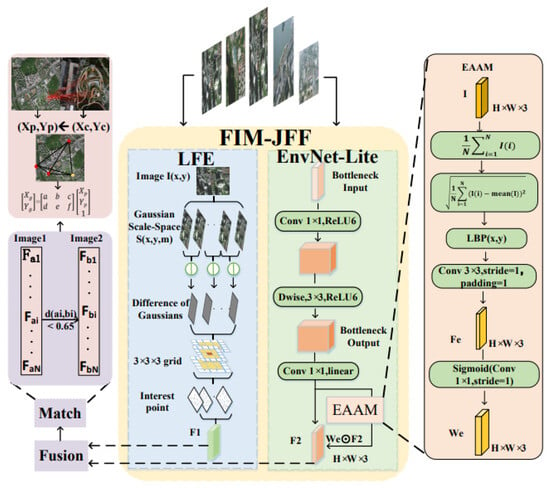

Figure 1 illustrates the proposed FIM-JFF, which deeply fuses local and global features of satellite and UAV images through parallel branches to obtain rich contextual information for accurate localization. Specifically, the local features of the images are extracted using the LFE module, the global features are extracted by the lightweight EnvNet-Lite network, and the two sets of features are subsequently fused. The Fast Library for Approximate Nearest Neighbors (FLANN) algorithm combined with the ratio test then screens the optimal matching points [32]. Finally, based on the geospatial relationship between the satellite image and the UAV image, the latitude and longitude of the UAV are determined by matching the location and distribution of the feature points.

Figure 1.

FIM-JFF architecture diagram.

In Figure 1, H refers to the number of pixels in the height direction of the image, W refers to the number of pixels in the width direction of the image, and 3 represents the number of channels; and represent the pixel coordinates of satellite images, and represent the pixel coordinates of images captured by unmanned aerial vehicles; N is the total number of pixels; represents the convolution operation; and represent feature maps; represents the primary feature map extracted after the convolution operation, We represents the feature map after adding the spatial attention weight.

3.1. LFE Module

In this section, the LFE network module is designed to extract local features of UAV images and satellite images. First, image pyramids of different scales are generated by Gaussian blurring of the input image $I(x, y)$:

$$L(x, y, \sigma) = G(x, y, \sigma) * I(x, y)$$

where $G(x, y, \sigma)$ is a Gaussian kernel function with scale parameter $\sigma$, $*$ denotes convolution, and $L(x, y, \sigma)$ is the output feature after Gaussian weighting at scale $\sigma$. The difference between adjacent scales is then computed:

$$D(x, y, \sigma) = L(x, y, k\sigma) - L(x, y, \sigma)$$

where $D(x, y, \sigma)$ is the scale-space difference result, i.e., the difference in Gaussian filter output between adjacent scales, and $L(x, y, k\sigma)$ is the image feature after Gaussian smoothing at the larger scale $k\sigma$.
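The scale-space step described above can be illustrated with a small pure-Python sketch. It assumes a separable Gaussian truncated at about three standard deviations, edge replication at the borders, and a scale factor of √2 between adjacent levels; none of these settings are stated in this paper, and the function names are illustrative only.

```python
import math

def gaussian_kernel_1d(sigma):
    """Sampled, normalised 1-D Gaussian truncated at about 3 * sigma (assumed)."""
    radius = max(1, int(math.ceil(3 * sigma)))
    weights = [math.exp(-(k * k) / (2 * sigma * sigma))
               for k in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def blur(image, sigma):
    """Separable Gaussian blur of a 2-D list, replicating edge pixels."""
    kernel = gaussian_kernel_1d(sigma)
    radius = len(kernel) // 2
    h, w = len(image), len(image[0])

    def clamp(v, size):
        return min(max(v, 0), size - 1)

    # Horizontal pass, then vertical pass.
    rows = [[sum(kernel[k + radius] * image[y][clamp(x + k, w)]
                 for k in range(-radius, radius + 1))
             for x in range(w)] for y in range(h)]
    return [[sum(kernel[k + radius] * rows[clamp(y + k, h)][x]
                 for k in range(-radius, radius + 1))
             for x in range(w)] for y in range(h)]

def difference_of_gaussians(image, sigma, k=math.sqrt(2)):
    """Blur at k * sigma minus blur at sigma, for one pair of adjacent scales."""
    fine = blur(image, sigma)
    coarse = blur(image, k * sigma)
    return [[c - f for c, f in zip(row_c, row_f)]
            for row_c, row_f in zip(coarse, fine)]

# A 9 x 9 test image with a single bright pixel in the centre.
image = [[0.0] * 9 for _ in range(9)]
image[4][4] = 1.0
dog = difference_of_gaussians(image, sigma=1.0)
```

On this test image the response at the bright pixel is negative, because the coarser blur spreads the peak more than the finer one; a flat image gives a response of zero everywhere.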

Next, the detected key points are located at subpixel accuracy, and low-contrast key points and unstable edge responses are filtered out. One or more orientations are assigned to each key point based on the local image gradient direction to obtain rotation invariance [33]:

$$\theta(x, y) = \arctan\left(\frac{\partial I / \partial y}{\partial I / \partial x}\right)$$

where $\theta(x, y)$ is the gradient direction angle of the image at position $(x, y)$, $\partial I / \partial x$ and $\partial I / \partial y$ are the partial derivatives of the image in the horizontal and vertical directions, and $\arctan(\cdot)$ converts the ratio of the gradient components into an angle.
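As a minimal illustration of the orientation step, the sketch below estimates the gradient at an interior pixel with central differences and converts it to an angle. Using `atan2` rather than a plain arctangent of the ratio is a choice made here so that the full 0–360° range is resolved and a zero horizontal derivative causes no division by zero; the function name is hypothetical.

```python
import math

def gradient_orientation(image, x, y):
    """Return (magnitude, angle in degrees within [0, 360)) at an interior pixel."""
    dx = image[y][x + 1] - image[y][x - 1]  # horizontal central difference
    dy = image[y + 1][x] - image[y - 1][x]  # vertical central difference
    magnitude = math.hypot(dx, dy)
    angle = math.degrees(math.atan2(dy, dx)) % 360.0
    return magnitude, angle

# Intensity grows with x only, so the gradient points along the x axis.
horizontal_ramp = [[float(x) for x in range(5)] for _ in range(5)]
print(gradient_orientation(horizontal_ramp, 2, 2))
```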

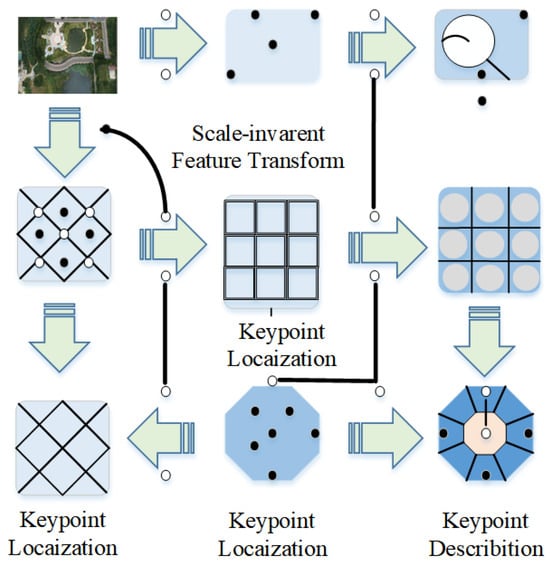

Finally, a 4 × 4 grid is constructed in the local area centered on each key point, and a gradient histogram over 8 directions is computed for each grid cell, yielding a 128-dimensional (4 × 4 × 8) feature vector f. This feature vector describes the gradient information in the neighborhood of the key point, is scale and rotation invariant, and is the basis for describing key points in the feature-matching process. Figure 2 shows the local feature extraction flow of LFE.
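The 4 × 4 × 8 layout of the descriptor can be made concrete with a simplified sketch. It assumes a 16 × 16 pixel neighborhood split into 4 × 4 cells of 4 × 4 pixels, hard assignment to 45° orientation bins, and a final L2 normalization. Gaussian weighting, interpolation between bins, and rotation to the dominant orientation are deliberately omitted, so this is not a full implementation of the descriptor used in the paper.

```python
import math

def sift_like_descriptor(patch):
    """Build a 128-D descriptor from an 18 x 18 grey-level patch.

    The inner 16 x 16 pixels are split into 4 x 4 cells; each cell holds an
    8-bin histogram of gradient orientations weighted by gradient magnitude.
    The one-pixel border is only used for the central differences.
    """
    hist = [0.0] * 128
    for py in range(16):
        for px in range(16):
            y, x = py + 1, px + 1
            dx = patch[y][x + 1] - patch[y][x - 1]
            dy = patch[y + 1][x] - patch[y - 1][x]
            magnitude = math.hypot(dx, dy)
            angle = math.degrees(math.atan2(dy, dx)) % 360.0
            orientation_bin = int(angle // 45) % 8
            cell = (py // 4) * 4 + (px // 4)
            hist[cell * 8 + orientation_bin] += magnitude
    norm = math.sqrt(sum(v * v for v in hist))
    return [v / norm for v in hist] if norm > 0 else hist

# A horizontal intensity ramp puts all gradient energy into bin 0 of each cell.
ramp_patch = [[float(x) for x in range(18)] for _ in range(18)]
descriptor = sift_like_descriptor(ramp_patch)
print(len(descriptor), descriptor[0])  # 128 0.25
```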

Figure 2.

LFE extracts local features.

A circle indicates feature detection, a rectangle indicates key point localization, an arrow indicates orientation assignment, and an octagon indicates feature descriptor generation. Hollow points are the initially detected candidate key points, and solid points are the key points retained in the image.

3.2. EnvNet-Lite Module

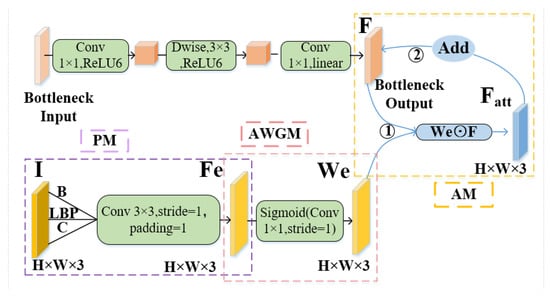

To enhance UAV positioning accuracy under varying conditions, we designed a lightweight network, EnvNet-Lite, with an integrated perceptual environment-adaptive attention module (EAAM) consisting of three parts: the perceptual module (PM), the attention weight generation module (AWGM), and the applying module (AM). Through the EAAM, EnvNet-Lite efficiently extracts global features and dynamically weights image features according to environmental factors such as illumination, texture, and contrast. The network can thus adapt to in-flight variations and improve the matching accuracy between UAV and satellite images, which significantly improves localization performance.

The EnvNet-Lite network employs depthwise separable convolution, which has low computational complexity and high feature extraction performance. It extracts the global features of images to form a compact and representative feature map. The EAAM is designed to adapt feature extraction to different conditions, addressing problems such as illumination variations, viewpoint differences, and background complexity, by sensing the environment of the input image. The EnvNet-Lite structure is shown in Figure 3.

Figure 3.

The structure of EnvNet-Lite.

In the PM, global statistical features of the image are extracted by global average pooling and global maximum pooling. To characterize illumination, the image luminance mean B is computed:

$$B = \frac{1}{N} \sum_{i=1}^{N} I(i)$$

where $I(i)$ is the brightness value of the $i$th pixel in the image and $N$ is the total number of pixels; the sum runs over the luminance values $I(1), I(2), \ldots, I(N)$ of all pixels in the image.

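The image texture is described using local binary pattern (LBP) features:

$$\mathrm{LBP}_{P,R} = \sum_{p=0}^{P-1} \operatorname{sgn}(g_p - g_c)\, 2^p$$

where $P$ is the number of pixels in the neighborhood, $R$ is the neighborhood radius, $g_c$ and $g_p$ are the gray values of the center pixel and its $p$th neighbor, and $\operatorname{sgn}(\cdot)$ is the sign function, which equals 1 for non-negative arguments and 0 otherwise.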

The image contrast C is calculated as

$$C = \sqrt{\frac{1}{N} \sum_{i=1}^{N} \left(I(i) - B\right)^2}$$

where $B$ is the mean brightness of the image.
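The three environment statistics used by the PM (brightness, texture, contrast) can be sketched in a few lines. The sketch assumes a grey-level image stored as a nested list, RMS contrast (standard deviation of brightness), and the basic 8-neighbor, radius-1 LBP variant; the neighbor ordering and function names are choices made here for illustration, not settings taken from the paper.

```python
import math

def brightness_mean(image):
    """Mean brightness B over all pixels."""
    pixels = [v for row in image for v in row]
    return sum(pixels) / len(pixels)

def rms_contrast(image):
    """Contrast C as the standard deviation of brightness around B (assumed form)."""
    pixels = [v for row in image for v in row]
    mean = sum(pixels) / len(pixels)
    return math.sqrt(sum((v - mean) ** 2 for v in pixels) / len(pixels))

def lbp_code(image, x, y):
    """8-neighbour, radius-1 LBP code of an interior pixel.

    Neighbours are visited clockwise from the top-left; a neighbour that is
    at least as bright as the centre sets the corresponding bit.
    """
    centre = image[y][x]
    offsets = [(-1, -1), (0, -1), (1, -1), (1, 0),
               (1, 1), (0, 1), (-1, 1), (-1, 0)]
    code = 0
    for p, (dx, dy) in enumerate(offsets):
        if image[y + dy][x + dx] >= centre:
            code |= 1 << p
    return code

sample = [[10, 20, 30],
          [40, 50, 60],
          [70, 80, 90]]
print(brightness_mean(sample), lbp_code(sample, 1, 1))  # 50.0 120
```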

In the AWGM, a feature vector $v$ is generated by convolution, pooling, and other operations. The attention weights $A$ are calculated as

$$A = \mathrm{sigmoid}(W v + b)$$

where $W$ and $b$ are the weights and biases, respectively, and sigmoid(·) is the activation function.

The AM dynamically recalibrates feature representations by applying the learned attention weights $A$ to the convolutional feature maps $F$:

$$F' = A \odot F$$

where ⊙ denotes element-by-element multiplication.
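The weight generation and application steps can be sketched as follows. The sketch assumes that the attention weights are produced from a short vector of environment statistics by a single linear layer followed by a sigmoid, and that one weight is broadcast over each feature channel; the shapes and names are illustrative assumptions rather than the exact EAAM design.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def attention_weights(stats, weight, bias):
    """One weight in (0, 1) per channel: sigmoid(W @ stats + b)."""
    return [sigmoid(sum(w * s for w, s in zip(row, stats)) + b)
            for row, b in zip(weight, bias)]

def apply_attention(feature_maps, weights):
    """Multiply every value of channel c by weights[c]."""
    return [[[a * v for v in row] for row in channel]
            for channel, a in zip(feature_maps, weights)]

# Two channels, three environment statistics (brightness, texture, contrast).
stats = [0.0, 0.0, 0.0]
weight = [[0.5, -0.2, 0.1],
          [0.3, 0.4, -0.6]]
bias = [0.0, 0.0]
feature_maps = [[[2.0, 4.0]], [[6.0, 8.0]]]

weights = attention_weights(stats, weight, bias)
recalibrated = apply_attention(feature_maps, weights)
print(weights, recalibrated)  # [0.5, 0.5] [[[1.0, 2.0]], [[3.0, 4.0]]]
```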

To train the perceptual EAAM with the Adam optimizer, a loss function is defined to evaluate the model’s predictive performance and guide the optimization process:

where $y_i$ is the true label, $\hat{y}_i$ is the predicted value, and $M$ is the sample size.

Based on the loss function, the optimization algorithm updates the parameters:

where $\theta$ denotes the model parameters, $\eta$ is the learning rate, $g$ is the gradient of the loss function with respect to the parameters, $v$ is a moving average of the squared gradients, and $\epsilon$ is a constant that prevents division by zero.
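For reference, a standard Adam step can be sketched as below. This is the textbook form of the optimizer with commonly used default hyperparameters (`beta1`, `beta2`, `eps`), all of which are assumptions here rather than values reported in this paper; it keeps a moving average of the gradients in addition to the moving average of their squares.

```python
import math

def adam_step(params, grads, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a flat list of parameters; t is the 1-based step index."""
    new_params, new_m, new_v = [], [], []
    for p, g, m_i, v_i in zip(params, grads, m, v):
        m_i = beta1 * m_i + (1 - beta1) * g        # moving average of gradients
        v_i = beta2 * v_i + (1 - beta2) * g * g    # moving average of squared gradients
        m_hat = m_i / (1 - beta1 ** t)             # bias correction
        v_hat = v_i / (1 - beta2 ** t)
        new_params.append(p - lr * m_hat / (math.sqrt(v_hat) + eps))
        new_m.append(m_i)
        new_v.append(v_i)
    return new_params, new_m, new_v

# Toy usage: minimise (x - 3)^2 starting from x = 0.
x, m, v = [0.0], [0.0], [0.0]
for step in range(1, 501):
    grad = [2.0 * (x[0] - 3.0)]
    x, m, v = adam_step(x, grad, m, v, step, lr=0.1)
print(x[0])
```

In the first step the bias-corrected ratio is close to the sign of the gradient, so the parameter moves by roughly one learning rate regardless of the gradient scale.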

3.3. Feature Fusion and Matching Module

Feature-level integration is achieved by concatenating local descriptors with global representations in the joint embedding space. Let the local features be $f_l$ and the global features be $f_g$; the concatenated feature $f_c$ is

$$f_c = f_l \oplus f_g$$

where $\oplus$ denotes feature vector concatenation. The concatenated feature is then L2-normalized:

$$\hat{f}_c = \frac{f_c}{\lVert f_c \rVert_2}$$

where $\lVert f_c \rVert_2$ is the L2 norm of the feature vector.

The L2-normalized feature vectors are then standardized so that their mean is 0 and their variance is 1:

$$\tilde{f}_c = \frac{\hat{f}_c - \mu}{\sigma}$$

where $\mu$ and $\sigma$ are the mean and standard deviation of the feature vector, respectively.
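The fusion pipeline (concatenate, L2-normalize, then standardize) can be sketched as a single function. It assumes that the statistics for standardization are computed over the components of each fused vector and that the population standard deviation is used; both are assumptions made for this illustration.

```python
import math

def fuse(local_feat, global_feat):
    """Concatenate two feature vectors, L2-normalise, then standardise."""
    fused = list(local_feat) + list(global_feat)
    norm = math.sqrt(sum(v * v for v in fused))
    unit = [v / norm for v in fused] if norm > 0 else fused
    mean = sum(unit) / len(unit)
    std = math.sqrt(sum((v - mean) ** 2 for v in unit) / len(unit))
    if std == 0:
        return [0.0] * len(unit)  # constant vector: nothing left after centring
    return [(v - mean) / std for v in unit]

fused = fuse([3.0, 0.0], [4.0, 0.0])
print(len(fused))  # 4
```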

K-Means tree indexes are constructed for the fused feature vectors using the Fast Library for Approximate Nearest Neighbors (FLANN) search algorithm [34]. These index structures are then used to quickly match the corresponding feature points between images. Finally, Lowe’s ratio test compares the distance ratio between the best and second-best matches with a threshold, and only reliable matches are retained.

For each feature vector $f_u$ of an image taken by the UAV, the nearest feature vector $f_s^{*}$ is found among the feature vectors $f_s \in F_s$ of the baseline satellite image:

$$f_s^{*} = \arg\min_{f_s \in F_s} d(f_u, f_s)$$

where $d(\cdot, \cdot)$ denotes the Euclidean distance and $\arg\min$ returns the $f_s$ that minimizes the subsequent expression among all possible values of $f_s$.

Given two feature vectors $p = (p_1, p_2, \ldots, p_n)$ and $q = (q_1, q_2, \ldots, q_n)$, their Euclidean distance is

$$d(p, q) = \sqrt{\sum_{i=1}^{n} (p_i - q_i)^2}$$

Using this distance, we find the best and second-best matches in the satellite image for each feature vector from the UAV image. Let $f_u$ denote the feature vector of interest, $f_{s1}$ its best match, and $f_{s2}$ its second-best match, with Euclidean distances $d_1$ and $d_2$, respectively. The distance ratio is

$$r = \frac{d_1}{d_2}$$

A match is considered reliable only if $r$ is less than a predefined threshold.
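The ratio test can be illustrated with an exhaustive nearest-neighbor search standing in for the FLANN index, which only accelerates the same search approximately. The default threshold of 0.64 is the value reported in Section 4.2; the function name and the toy data are illustrative.

```python
import math

def ratio_test_matches(uav_feats, sat_feats, ratio=0.64):
    """Return (uav_index, sat_index) pairs whose best match passes the ratio test."""
    matches = []
    for i, f in enumerate(uav_feats):
        # Distances to every satellite feature, closest first.
        dists = sorted((math.dist(f, g), j) for j, g in enumerate(sat_feats))
        if len(dists) < 2:
            continue
        (d1, best), (d2, _) = dists[0], dists[1]
        if d2 > 0 and d1 / d2 < ratio:
            matches.append((i, best))
    return matches

uav = [(0.0, 0.0), (5.0, 5.0)]
sat = [(0.1, 0.0), (10.0, 10.0), (5.0, 6.0), (6.0, 5.0)]
print(ratio_test_matches(uav, sat))  # [(0, 0)]
```

The second UAV feature is rejected because its two closest satellite features are equally distant, so the match is ambiguous.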

3.4. Positioning

Using the geospatial relationship between the satellite images and the images taken by the UAVs, we determine the actual geographic location of the UAV by matching the location and distribution of the feature points. Specifically, we extract the successfully matched feature points from the UAV image and select the most centrally located one [32]. Then, based on this feature point, we find the corresponding matching point in the reference satellite image, whose geographic information is known, so that the pixel coordinates of this matching point in the satellite image can be converted to geographic coordinates (latitude and longitude).

The satellite images used for the experiments in this paper have a resolution of 300 m/pixel. Three known geographic points, called control points, are selected in each reference image. A linear transformation is then fitted using the correspondence between these control points’ geographic coordinates (latitude and longitude) and their pixel coordinates in the image.

From the successfully matched feature points $(x_i, y_i)$, $i = 1, \ldots, n$, where $n$ is the number of matched points, the most centrally located point $(x_c, y_c)$ is selected.

Then, the point corresponding to this match is found in the baseline satellite image. Let its pixel coordinates be $(x_s, y_s)$ and let $(X, Y)$ denote the actual geographic coordinates:

$$X = a x_s + b y_s + e, \qquad Y = c x_s + d y_s + f$$

where $a, b, c, d, e, f$ are the affine transformation parameters, which are determined by the least-squares method from the known geographic coordinates and image pixel positions of a sufficiently large number of control points, taking into account the resolution of the satellite image, the shooting angle, and terrain deformation. Parameters $a$ and $d$ control scaling, parameters $b$ and $c$ control rotation, and parameters $e$ and $f$ control translation.
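The positioning step can be sketched for the minimal case of exactly three control points, where the six affine parameters are determined exactly and no least-squares fit is needed. The sketch assumes geographic coordinates are ordered as (longitude, latitude), that the "most central" matched point is the one closest to the centroid of all matches, and that three non-collinear control points are available; the function names and sample coordinates are made up for illustration.

```python
def det3(m):
    """Determinant of a 3 x 3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

def solve3(matrix, rhs):
    """Solve a 3 x 3 linear system with Cramer's rule."""
    d = det3(matrix)
    if abs(d) < 1e-12:
        raise ValueError("control points are collinear")
    solution = []
    for col in range(3):
        m = [row[:] for row in matrix]
        for r in range(3):
            m[r][col] = rhs[r]
        solution.append(det3(m) / d)
    return solution

def fit_affine(pixels, geos):
    """Fit X = a*x + b*y + e and Y = c*x + d*y + f from three control points."""
    matrix = [[float(x), float(y), 1.0] for x, y in pixels]
    a, b, e = solve3(matrix, [g[0] for g in geos])
    c, d, f = solve3(matrix, [g[1] for g in geos])
    return a, b, c, d, e, f

def pixel_to_geo(params, x, y):
    a, b, c, d, e, f = params
    return a * x + b * y + e, c * x + d * y + f

def most_central(points):
    """Matched point closest to the centroid of all matched points (assumed rule)."""
    cx = sum(p[0] for p in points) / len(points)
    cy = sum(p[1] for p in points) / len(points)
    return min(points, key=lambda p: (p[0] - cx) ** 2 + (p[1] - cy) ** 2)

# Hypothetical control points: pixel (x, y) -> (longitude, latitude).
pixels = [(0, 0), (100, 0), (0, 100)]
geos = [(120.0, 36.0), (120.001, 36.0), (120.0, 35.999)]
params = fit_affine(pixels, geos)

x_c, y_c = most_central([(0, 0), (10, 0), (4, 1), (0, 10)])
print((x_c, y_c), pixel_to_geo(params, 50, 50))
```

With more than three control points, the same model would be fitted by least squares instead of an exact solve.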

4. Results

This section describes the experiments in this paper, covering the experimental dataset, the experimental design, and the analysis of the results. To be closer to the practical application scenarios of UAVs, we created our own UAVs-1100 dataset (Section 4.1). To verify the effectiveness of the proposed method, we designed a series of experiments. First, the performance of the proposed FIM-JFF method and other advanced UAV localization methods for GPS-denied environments is analyzed in detail on the test dataset (Section 4.3). To show that our algorithm is scale invariant and rotation invariant, we perform experiments with UAV images of the same location at different heights and different angles. In addition, to evaluate the proposed lightweight network EnvNet-Lite, we compare it with state-of-the-art lightweight networks. Finally, we perform ablation experiments (Section 4.6) to verify the impact of the global and local feature extraction modules on the performance of FIM-JFF.

4.1. Experimental Dataset

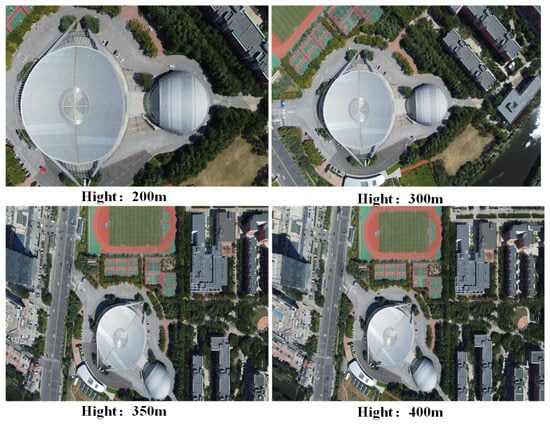

The dataset in this paper consists of two parts: UAV-captured images and satellite images. The UAV images were taken with a DJI Mini 2 around the West Coast New District of Qingdao City, Shandong Province, as shown in Figure 4a. The shooting locations include the Tangdaowan District of China University of Petroleum (East China), Dingjiahe Reservoir, Qingdao University of Technology (Huangdao), and Binhai Leisure Square. A total of 1100 images are included, of which 600 were taken on sunny days, 300 on cloudy days, and 200 on foggy days. Six shooting altitudes are covered: 200 m, 300 m, 350 m, 400 m, 450 m, and 500 m. The satellite images were obtained from Google satellite maps in September 2024 and were captured at a height of 300 m at the same positions as the UAV images, as shown in Figure 4b.

Figure 4.

Example of image dataset.

4.2. Experimental Setup

The experiment was run in an environment equipped with an AMD EPYC 7T83 64-core processor cluster (Advanced Micro Devices, Inc., Santa Clara, CA, USA) and two 24 GB GeForce RTX 4090 GPUs, using the PyTorch [35] 1.10.2 software framework. Table 1 shows the model’s hyperparameter settings.

Table 1.

Hyperparameter Settings of the Model.

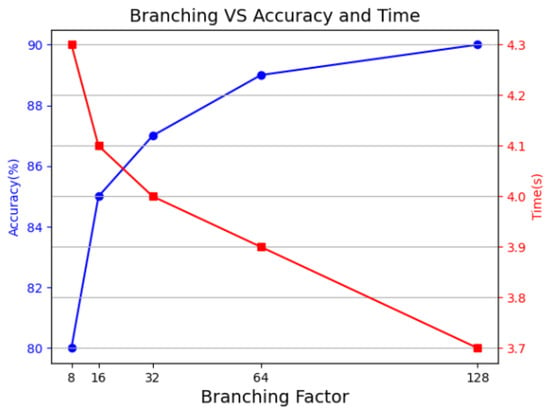

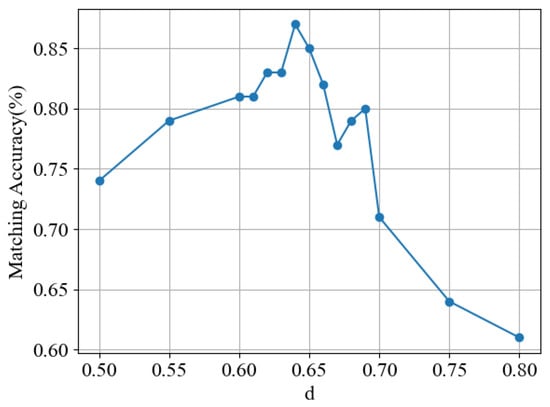

In feature matching, the K-Means tree [37] index of the FLANN algorithm achieves the optimal accuracy–efficiency tradeoff when the branching parameter is set to 32 (as shown in Figure 5, where the blue line indicates the change in accuracy under different branching parameters and the red line indicates the change in running time). Reliable matching pairs are then selected from the FLANN matching results using Lowe’s ratio test [33], with the threshold chosen by comparing the proportion of correct matching points among all matching points. Based on the experiments, the value of r in this paper is set to 0.64; the accuracy rates corresponding to different values of r are shown in Figure 6.

Figure 5.

The performance of the parameter branching with different values.

Figure 6.

The matching accuracy corresponding to different values of r.

4.3. FIM-JFF Performance Evaluation

In this paper, the experimental results are analyzed quantitatively and qualitatively to evaluate the performance of FIM-JFF. First, FIM-JFF and four advanced image-matching-based UAV localization algorithms, namely BRM [25], FPI [31], UAVAP [38], and ASA [30], are evaluated on our UAVs-1100 dataset. Then, some of the experimental results are visualized to present the effect of the algorithms more intuitively. Finally, to verify how FIM-JFF handles scale variation and rotation, we take UAV images at the same location and angle but different flight altitudes, as well as UAV images at the same location and flight altitude but different angles, and compare them with the benchmark satellite images to assess scale invariance and rotation invariance.

4.3.1. Quantitative Analysis

Table 2 shows the positioning results of five images, and for each image, its feature matching results with the reference satellite image, positioning error, attitude estimation error, and other indicators are recorded. The localization error is quantified by computing the horizontal Euclidean distance between image-derived geocoordinates and ground truth positions acquired by differential GPS with centimeter-level accuracy, while disregarding altitude discrepancies. The lighting conditions take values in the range of 0–1; the closer to 1, the better the lighting conditions. When 0.6 is taken, it is equivalent to a foggy day, 0.8 to a cloudy day, and 1 to a sunny day.
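The horizontal error metric can be sketched as follows. The sketch assumes both positions are given as (latitude, longitude) in degrees and projects their difference onto a local tangent plane using a spherical Earth with a mean radius of 6,371,000 m, which is adequate for errors of a few meters; the paper does not state which projection was used, so this is one reasonable reading of the metric.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean spherical radius, an assumption of this sketch

def horizontal_error_m(estimate, truth):
    """Horizontal distance in metres between two (lat, lon) pairs in degrees.

    Altitude is ignored; the difference is projected onto a local tangent plane.
    """
    lat1, lon1 = (math.radians(v) for v in estimate)
    lat2, lon2 = (math.radians(v) for v in truth)
    mean_lat = (lat1 + lat2) / 2
    east = (lon1 - lon2) * math.cos(mean_lat) * EARTH_RADIUS_M
    north = (lat1 - lat2) * EARTH_RADIUS_M
    return math.hypot(east, north)

# Hypothetical estimate and ground truth that differ by 1e-5 degrees of latitude.
print(horizontal_error_m((36.00001, 120.0), (36.0, 120.0)))
```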

Table 2.

Localization results of different algorithms under different light conditions.

As Table 2 shows, the matching accuracy of all algorithms increases as light intensity improves, and their localization errors decrease to different degrees. BRM, FPI, and UAVAP change more, indicating that they are less robust to environmental variation, whereas ASA and our FIM-JFF algorithm are more stable.

The time required for localization during UAV flight is an essential criterion for evaluating an algorithm. As can be seen from Table 2, regardless of the light conditions, our algorithm takes the shortest time, about 3 s, which is about 50% shorter than the slower BRM and UAVAP algorithms. We also observe that the positioning time of every algorithm increases as the light intensity weakens.

For a better overall comparison of the five algorithms, their average results on the entire UAVs-1100 dataset are compared in Table 3.

Table 3.

The performance of different matching and positioning algorithms.

The experimental results show that our algorithm achieves an average localization error of 4.03 m and a robustness of 89.3% to variations in light, flight altitude, etc., both better than those of the other localization algorithms. Compared with the ASA algorithm, the matching accuracy is improved by 3.5% and the matching time is shortened by close to 50%. This shows that our algorithm maintains accuracy while remaining lightweight and is suitable for fine-grained UAV localization in resource-constrained situations.

4.3.2. Qualitative Analysis

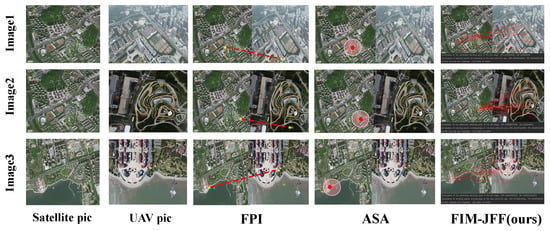

Table 3 shows that the FPI and ASA algorithms offer the best balance of localization error, processing time, and other indicators among the baselines, so they are used for qualitative comparison with our algorithm. The matching results of three selected groups of images under the different localization methods are shown in Figure 7. The red dotted line in the FPI results connects the corresponding points in the UAV image and the satellite image; the red dot in the ASA results marks the final localized position; and the red solid lines in the results of our method are the matching lines between feature points.

Figure 7.

Matching results under different positioning methods.

As can be seen in Figure 7, the FPI algorithm takes the latitude and longitude of the unique matching point between the satellite image and the UAV image as the matching result. The ASA algorithm first determines the region where the attention value of the UAV’s location is closest to 1 (the pink circle) and then considers only the features within that region, from which the most plausible feature area (the red solid point) is selected as the matching and localization result. Our FIM-JFF algorithm selects, among the matching points that pass the threshold, the feature point in the UAV image corresponding to the centermost feature point of the satellite image as the final result. The figures show that our algorithm is more fine-grained and has the smallest positioning error.

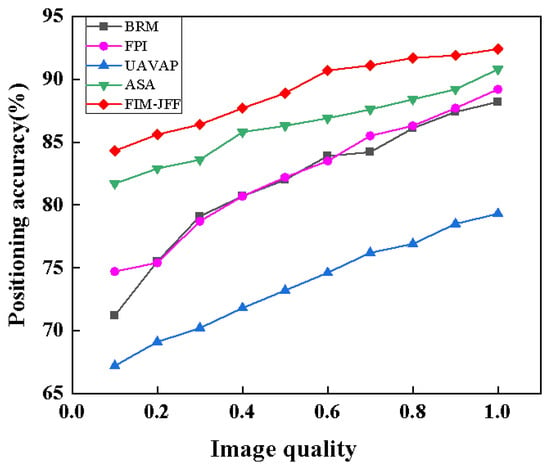

We evaluate image quality using the blind/referenceless image spatial quality evaluator (BRISQUE) [39], a no-reference image quality assessment method that does not require a baseline image. The result is a score between 0 and 100, with smaller values indicating better image quality. To present the quality difference more intuitively, we convert the score to a 0.1–1.0 scale according to the following rule: the image quality is rated 0.1 when the BRISQUE output is between 91 and 100, 0.2 when it is between 81 and 90, 0.3 when it is between 71 and 80, and so forth. The closer the rating is to 1, the better the image quality.
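The conversion rule can be written as a small function. The sketch assumes that fractional scores fall into the bucket whose upper bound they do not exceed (for example, 80.5 is treated like 81–90) and that a score of exactly 0 maps to 1.0; the rule in the text is stated for integer ranges only, so these boundary choices are assumptions.

```python
import math

def brisque_to_quality(score):
    """Map a BRISQUE score in [0, 100] to the 0.1-1.0 quality scale."""
    if not 0 <= score <= 100:
        raise ValueError("BRISQUE score must lie in [0, 100]")
    bucket = max(1, math.ceil(score / 10))  # 1 for (0, 10], ..., 10 for (90, 100]
    return round((11 - bucket) / 10, 1)

for s in (95, 85, 75, 5):
    print(s, brisque_to_quality(s))  # 0.1, 0.2, 0.3, 1.0 respectively
```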

To demonstrate more intuitively that our algorithm has good environmental adaptability, the localization accuracies of the different algorithms are calculated on UAV photos of different quality levels, and the results are shown in Figure 8.

Figure 8.

Matching results under different positioning methods.

Figure 8 shows that our algorithm adapts to the environment considerably better than the other algorithms. As image quality varies from 0.1 to 1, its matching accuracy changes by only 8.1%, whereas the gaps between the highest and lowest matching accuracy for BRM, FPI, UAVAP, and ASA are 17%, 14.5%, 12.1%, and 9.8%, respectively. Even under poor environmental conditions, with an image quality of only about 0.4, the localization accuracy still reaches 87.2%, indicating that the FIM-JFF algorithm can achieve fine-grained UAV localization in a variety of complex environments.

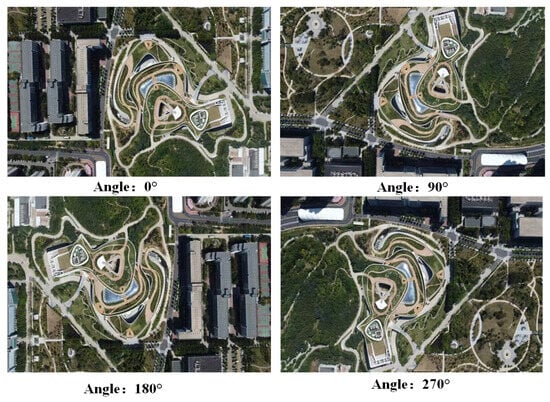

To show that our algorithm is scale invariant and rotation invariant, we match the benchmark satellite images against UAV photographs taken at the same location with the same angle but different heights, and against UAV photographs taken at the same location with the same height but different angles, as shown in Figures 9 and 10.

Figure 9.

Photographs of UAVs at the same location from the same angle and at different heights.

Figure 10.

Photographs of UAVs at the same location at the same altitude from different angles.

As shown in Table 4, when the UAV flight altitude changes, the matching accuracy differs by no more than 2.67%, and the localization error remains between 0 and 1.86 m, demonstrating that our algorithm has strong scale invariance. The matching error at a height of 300 m is slightly higher than that at 350 m, mainly because the robustness of the proposed method to scale and angle variations in feature-dense areas still needs to be improved. At 350 m the field of view is wider, the features are more uniformly distributed, and the matching is more stable. This difference is within the allowable error range and does not affect the overall performance.

Table 4.

Positioning results of different heights from the same angle and location.

The results in Table 5 indicate that with changes in the UAV’s flight angle, the matching accuracy differs by no more than 3.96%, and the localization error remains between 0 and 1.01 m, demonstrating that our algorithm has strong rotation invariance.

Table 5.

Positioning results for different angles at the same height at the same location.
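Localization errors in metres, such as those reported in Tables 4 and 5, can be obtained from an estimated and a ground-truth coordinate pair. The sketch below uses the haversine great-circle distance, which is one common choice; the paper does not state which distance formula was used, so this is an assumption, as are the function name and the spherical Earth radius.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius, spherical approximation


def localization_error_m(
    lat_est: float, lon_est: float, lat_true: float, lon_true: float
) -> float:
    """Great-circle distance in metres between estimated and true positions.

    All inputs are in decimal degrees.
    """
    phi_est, phi_true = math.radians(lat_est), math.radians(lat_true)
    d_phi = phi_true - phi_est
    d_lambda = math.radians(lon_true - lon_est)
    a = (
        math.sin(d_phi / 2.0) ** 2
        + math.cos(phi_est) * math.cos(phi_true) * math.sin(d_lambda / 2.0) ** 2
    )
    return 2.0 * EARTH_RADIUS_M * math.asin(math.sqrt(a))
```

At the metre-level errors discussed here, the spherical approximation is usually adequate; a geodesic on an ellipsoid would be the more precise alternative.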

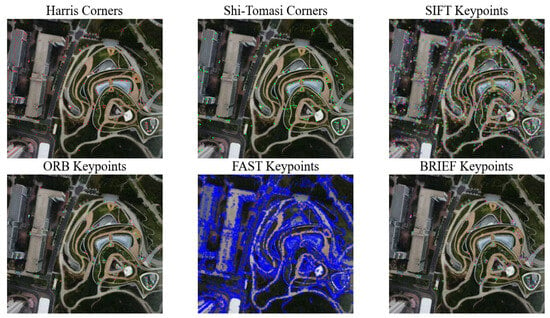

4.4. Comparison of Feature Point Extraction Algorithms

In image-matching-based UAV localization algorithms, feature point extraction is crucial because it directly affects the matching accuracy and reliability of localization, especially in complex environments and diverse scenarios. Stable and accurate feature point extraction can significantly improve the robustness and accuracy of UAV localization. To evaluate our feature extraction algorithm, several classical feature extraction algorithms are selected for comparison, including the Harris [40], Shi-Tomasi [41], SIFT [33], ORB [42], FAST [32], and BRIEF [43] algorithms. The results of each feature extraction algorithm are shown in Figure 11. Within each panel, dots of different colors represent feature points of different degrees.

Figure 11.

Feature point extraction diagram for different extraction algorithms.

Among the above six feature extraction methods, the SIFT and FAST algorithms produce too many feature points, which requires a large amount of computation and makes them unsuitable for UAVs, which are small and have limited computational resources. The Harris and Shi-Tomasi algorithms extract feature points without any importance ordering, so all feature points are treated as equally important, which is not conducive to fine-grained UAV localization. The ORB and BRIEF algorithms do differentiate the importance of feature points, but they extract only shallow features and perform poorly in multi-source image matching.

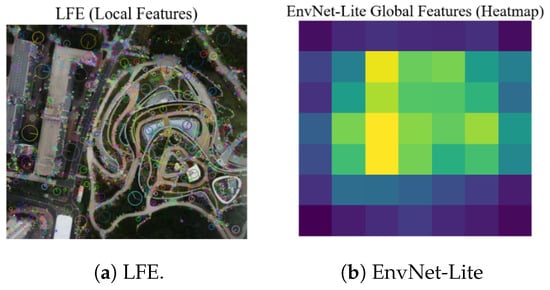

Our feature extraction method is divided into two parts: extracting local feature points with LFE and extracting deep features with the lightweight EnvNet-Lite network. The left side of Figure 12 shows the local features from LFE, where the size of each circle indicates the importance of the point of interest and larger circles are more important. The right side of Figure 12 shows the heat map generated by averaging the feature maps of the global features. The two kinds of features are complementary: by fusing the global and local features, relatively unimportant feature points are excluded and reliable feature points are retained for subsequent UAV localization.
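The idea of averaging feature maps into a heat map and using it to screen local feature points can be sketched as follows. This is an illustrative simplification, not the fusion procedure of FIM-JFF: it assumes the heat map has already been resampled to the image resolution, and the keypoint layout `(row, col, importance)`, the `min_heat` cut-off, and both function names are hypothetical.

```python
from typing import List, Sequence, Tuple

FeatureMap = Sequence[Sequence[float]]   # one channel, indexed [row][col]
Keypoint = Tuple[int, int, float]        # (row, col, importance), hypothetical layout


def mean_heat_map(feature_maps: Sequence[FeatureMap]) -> List[List[float]]:
    """Average a stack of equally sized feature maps channel-wise."""
    n_maps = len(feature_maps)
    height, width = len(feature_maps[0]), len(feature_maps[0][0])
    return [
        [sum(fm[r][c] for fm in feature_maps) / n_maps for c in range(width)]
        for r in range(height)
    ]


def filter_keypoints(
    keypoints: Sequence[Keypoint], heat: Sequence[Sequence[float]], min_heat: float
) -> List[Keypoint]:
    """Keep only keypoints that fall on sufficiently 'hot' heat-map cells."""
    return [kp for kp in keypoints if heat[kp[0]][kp[1]] >= min_heat]
```

A keypoint that LFE rates as important but that lies in a region with a low global response would be discarded by this filter, which mirrors the complementary role of the two feature types described above.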

Figure 12.

LFE and EnvNet-Lite extract local and global features.

Comparative analysis shows that the success rate of feature point matching after combining LFE with EnvNet-Lite is 78%. This means that out of every 100 extracted feature points, 78 can be matched successfully across the multi-source images. As a lightweight network, EnvNet-Lite has an average feature extraction time of 1.77 s, which is a reduction of 1.02 s compared to the other six algorithms. Compared with the other feature extraction algorithms, our algorithm achieves a better balance between the number of feature points extracted, their stability, and the ability to capture global features, making it particularly suitable for UAV localization tasks that require high performance in complex scenarios.

4.5. Lightweight Network Performance Comparison

To verify the performance of the proposed lightweight network, EnvNet-Lite, we compared it with other advanced lightweight networks. Specifically, we replaced EnvNet-Lite with other mainstream lightweight networks and evaluated the performance of FIM-JFF on this basis. This comparative experiment systematically verifies the superiority of EnvNet-Lite in performance and efficiency. Here, FLOPs refers to the number of floating-point operations required by the network (a count, not a per-second rate) and is used to assess computational complexity. Memory usage indicates the amount of main memory or GPU memory occupied by the network during inference. Robustness refers to the stability of feature points against environmental changes. Bold entries indicate the best performance in individual metrics, while those marked with 1* denote the overall optimal performance. The results are shown in Table 6.
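To make the FLOPs metric concrete, the sketch below counts the floating-point operations of a single convolution layer, counting each multiply-accumulate as two operations and ignoring bias terms. The layer sizes in the usage note are illustrative and are not taken from EnvNet-Lite; note also that some papers report multiply-accumulate counts under the name FLOPs, which halves the figure.

```python
def conv2d_flops(
    in_channels: int,
    out_channels: int,
    kernel_size: int,
    out_height: int,
    out_width: int,
    groups: int = 1,
) -> int:
    """Floating-point operations for one forward pass of a 2D convolution.

    Each multiply-accumulate is counted as 2 operations; bias is ignored.
    Setting groups == in_channels gives a depthwise convolution.
    """
    macs_per_output = (in_channels // groups) * kernel_size * kernel_size
    total_macs = macs_per_output * out_channels * out_height * out_width
    return 2 * total_macs
```

For a 3 × 3 convolution from 3 to 16 channels with a 112 × 112 output, this gives 10,838,016 operations. A depthwise 3 × 3 convolution over 16 channels with a 56 × 56 output gives 903,168, which illustrates why lightweight networks favor grouped and depthwise layers.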

Table 6.

Comparison of the performance of different lightweight networks.

It can be observed that the FLOPs of our EnvNet-Lite is 0.17 GB, slightly higher than ShuffleNet V2’s 0.14 GB but significantly lower than GhostNet’s 0.29 GB and BlazeFace’s 0.30 GB, indicating its low computational complexity. Its robustness of 93% significantly surpasses that of the other networks. EnvNet-Lite therefore demonstrates a clear advantage in UAV multi-source image-matching tasks: it maintains low computational complexity and resource usage while offering stronger feature extraction capability, smaller localization errors, shorter processing times, and higher robustness.

4.6. Ablation Experiments

We conducted ablation experiments to evaluate the contribution of each key component to the overall performance of FIM-JFF. We removed or replaced different modules and performed comparative experiments under the same conditions to assess each module’s contribution to matching accuracy and localization effectiveness. The results are shown in Table 7.

Table 7.

Quantitative results of ablation experiments.

The experiments show that the proposed FIM-JFF algorithm significantly outperforms the ablated versions overall. Regarding localization accuracy, the FIM-JFF algorithm achieves 92.73%, while removing the local feature extraction module LFE or the global feature extraction network EnvNet-Lite decreases localization accuracy by 13.6% and 14.96%, respectively. Regarding matching accuracy, FIM-JFF’s 85.33% also considerably exceeds the performance of each module used individually or in simple combinations. Notably, regarding robustness under environmental changes, FIM-JFF achieves 91%, while using LFE or EnvNet-Lite individually yields only 76% and 84%, respectively. Additionally, experiments 2 and 3 indicate that the EAAM module alone can maintain 84% robustness for the algorithm.

4.7. Discussion

In-depth analysis of the mismatches observed during the experiments revealed that they mainly originated from three types of problems: data distribution offset, inconsistent feature dimensions, and model assumption deviation. These can be addressed as follows. For data distribution offset, we recommend adopting exponential moving average normalization (EMAN) in combination with batch training and introducing a maximum mean discrepancy (MMD) regularization term [48]. For differences in feature dimensions, adaptive pooling or 1 × 1 convolution can be used for dimension alignment, with principal component analysis (PCA) for dimension reduction when necessary [49]. For model assumption bias, dynamic learning rate scheduling combined with label smoothing can be adopted [50].
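As an illustration of the MMD term mentioned above, the following sketch computes the biased (V-statistic) estimate of the squared maximum mean discrepancy between two sets of feature vectors using a Gaussian kernel. The kernel choice, the bandwidth `sigma`, and the function names are assumptions made for illustration; the discussion above does not specify how the term would be configured.

```python
import math
from typing import Sequence

Vector = Sequence[float]


def gaussian_kernel(x: Vector, y: Vector, sigma: float = 1.0) -> float:
    """Gaussian (RBF) kernel between two equal-length vectors."""
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-squared_distance / (2.0 * sigma ** 2))


def mmd_squared(xs: Sequence[Vector], ys: Sequence[Vector], sigma: float = 1.0) -> float:
    """Biased estimate of squared MMD between samples xs and ys.

    MMD^2 = E[k(x, x')] + E[k(y, y')] - 2 E[k(x, y)]
    """
    def mean_kernel(a: Sequence[Vector], b: Sequence[Vector]) -> float:
        total = sum(gaussian_kernel(p, q, sigma) for p in a for q in b)
        return total / (len(a) * len(b))

    return mean_kernel(xs, xs) + mean_kernel(ys, ys) - 2.0 * mean_kernel(xs, ys)
```

The value is zero when the two samples coincide and grows as their distributions move apart, so adding it to a training loss penalizes a gap between, for example, UAV-image and satellite-image feature distributions.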

5. Conclusions

This paper presents a fine-grained UAV localization algorithm based on multi-source image matching and feature fusion. The algorithm enhances UAV localization accuracy and robustness in GPS-denied environments by matching feature points from UAV-captured images with satellite images and integrating multi-source feature fusion strategies. Specifically, the algorithm leverages the complementarity of local and global features by first extracting local features using the LFE algorithm. Then, a lightweight network, EnvNet-Lite, integrated with EAAM, extracts global features. Subsequently, precise feature point matching is achieved through deep feature fusion, combined with the FLANN algorithm and ratio testing. Based on this, geographic information from satellite images converts the matched pixel coordinates into accurate geographic coordinates, enabling high-precision UAV localization. The experimental results indicate that the algorithm maintains high matching accuracy under various environmental conditions and exhibits strong robustness against variations in UAV image rotation, scale, and brightness. In addition, the algorithm operates in real time, meeting the stringent requirements for computational resources and response time in UAV localization.

Although the proposed algorithm has significantly improved localization accuracy and robustness, it remains heavily dependent on environmental characteristics during the feature extraction and matching processes. Consequently, localization results can be affected in scenarios with scarce features or complex backgrounds. Furthermore, the algorithm’s reliance on high-quality satellite imagery for geographic coordinate conversion introduces dependency on pre-existing mapping data, which may limit its applicability in regions with outdated or incomplete remote sensing datasets. Additionally, while the lightweight network reduces computational complexity, the real-time performance may degrade in ultra-high-resolution image scenarios due to the increased dimensionality of feature extraction and fusion operations.
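The conversion from a matched pixel coordinate to a geographic coordinate can be sketched with a six-parameter affine geotransform, the convention used by many georeferenced raster formats. This is an assumption about how the satellite image's geographic information might be stored, not a description of the authors' pipeline; the function name and the example values are hypothetical.

```python
from typing import Tuple

# (origin_x, pixel_width, row_rotation, origin_y, column_rotation, pixel_height)
GeoTransform = Tuple[float, float, float, float, float, float]


def pixel_to_geo(col: float, row: float, transform: GeoTransform) -> Tuple[float, float]:
    """Convert a pixel position (col, row) to (longitude, latitude).

    For a north-up image the rotation terms are 0 and pixel_height is negative,
    because row indices increase southward.
    """
    origin_x, pixel_width, row_rotation, origin_y, column_rotation, pixel_height = transform
    longitude = origin_x + col * pixel_width + row * row_rotation
    latitude = origin_y + col * column_rotation + row * pixel_height
    return longitude, latitude
```

With an illustrative transform of `(120.0, 1e-5, 0.0, 36.0, 0.0, -1e-5)`, the pixel at column 100 and row 200 maps to approximately longitude 120.001 and latitude 35.998.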

Future work could focus on incorporating environmental feature information to enhance the algorithm’s adaptability, or on optimizing the feature extraction and matching processes to improve efficiency and accuracy. Extending and validating the algorithm in more complex scenarios and larger geographic environments is also a promising direction for exploration.

Author Contributions

All authors contributed to the study conception and design. F.G.: investigation, methodology, experiments. J.H.: investigation, methodology, conceptualization, writing—review and editing. C.D.: validation, data curation, formal analysis. H.W.: validation, writing—original draft. Y.Z.: validation, data curation. Y.Y.: formal analysis, data curation. X.J.: methodology, experiments, writing—original draft. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Qingdao Science and Technology Demonstration Special Project of the Qingdao Municipal Bureau of Finance (grant 24-1-8-cspz-23-nsh, “Research and Application Demonstration of Urban Flood Monitoring Technology Based on Multimodal Fusion Sensing and Situational Derivation”, 2024-01 to 2025-12, 400,000 RMB) and by the Qingdao Natural Science Foundation (grant 23-2-1-162-zyyd-jch).

Data Availability Statement

Data will be made available on request. If researchers wish to obtain data, please get in touch with us at S23070017@s.upc.edu.cn.

Acknowledgments

Thanks to Qingdao Municipal Government and China University of Petroleum (East China) for their help and guidance on UAV data collection.

Conflicts of Interest

The authors declare no conflicts of interest. The authors have no relevant financial or non-financial interests to disclose.

References

- Banerjee, B.P.; Raval, S.; Cullen, P. UAV-hyperspectral imaging of spectrally complex environments. Int. J. Remote Sens. 2020, 41, 4136–4159.

- Fan, B.; Li, Y.; Zhang, R.; Fu, Q. Review on the technological development and application of UAV systems. Chin. J. Electron. 2020, 29, 199–207.

- Tian, X.; Shao, J.; Ouyang, D.; Shen, H.T. UAV-satellite view synthesis for cross-view geo-localization. IEEE Trans. Circuits Syst. Video Technol. 2021, 32, 4804–4815.

- Cesetti, A.; Frontoni, E.; Mancini, A.; Ascani, A.; Zingaretti, P.; Longhi, S. A visual global positioning system for unmanned aerial vehicles used in photogrammetric applications. J. Intell. Robot. Syst. 2011, 61, 157–168.

- Ding, G.; Liu, J.; Li, D.; Fu, X.; Zhou, Y.; Zhang, M.; Li, W.; Wang, Y.; Li, C.; Geng, X. A Cross-Stage Focused Small Object Detection Network for Unmanned Aerial Vehicle Assisted Maritime Applications. J. Mar. Sci. Eng. 2025, 13, 82.

- Chen, N.; Fan, J.; Yuan, J.; Zheng, E. OBTPN: A Vision-Based Network for UAV Geo-Localization in Multi-Altitude Environments. Drones 2025, 9, 33.

- Couturier, A.; Akhloufi, M.A. A review on absolute visual localization for UAV. Robot. Auton. Syst. 2021, 135, 103666.

- Arafat, M.Y.; Alam, M.M.; Moh, S. Vision-based navigation techniques for unmanned aerial vehicles: Review and challenges. Drones 2023, 7, 89.

- Aiger, D.; Araujo, A.; Lynen, S. Yes, We CANN: Constrained Approximate Nearest Neighbors for Local Feature-Based Visual Localization. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 13339–13349.

- Zhao, X.; Shi, Z.; Wang, Y.; Niu, X.; Luo, T. Object detection model with efficient feature extraction and asymptotic feature fusion for unmanned aerial vehicle image. J. Electron. Imaging 2024, 33, 053044.

- Pautrat, R.; Lin, J.T.; Larsson, V.; Oswald, M.R.; Pollefeys, M. SOLD2: Self-supervised occlusion-aware line description and detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 11368–11378.

- Tan, M.; Le, Q. Efficientnet: Rethinking model scaling for convolutional neural networks. arXiv 2019, arXiv:1905.11946.

- Wang, L.; Tong, Z.; Ji, B.; Wu, G. Tdn: Temporal difference networks for efficient action recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 1895–1904.

- Zhou, K.; Meng, X.; Cheng, B. Review of stereo matching algorithms based on deep learning. Comput. Intell. Neurosci. 2020, 2020, 8562323.

- Li, J.; Li, H.; Tu, J.; Liu, Z.; Yao, J.; Li, L. Pavement crack detection based on local enhancement attention mechanism and deep semantic-guided multi-feature fusion. J. Electron. Imaging 2024, 33, 063027.

- Jiang, X.; Ma, J.; Xiao, G.; Shao, Z.; Guo, X. A review of multimodal image matching: Methods and applications. Inf. Fusion 2021, 73, 22–71.

- Gordo, A.; Almazan, J.; Revaud, J.; Larlus, D. End-to-end learning of deep visual representations for image retrieval. Int. J. Comput. Vis. 2017, 124, 237–254.

- Sun, J.; Shen, Z.; Wang, Y.; Bao, H.; Zhou, X. LoFTR: Detector-free local feature matching with transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 8922–8931.

- Wang, H.; Zhou, F.; Wu, Q. Accurate Vision-Enabled UAV Location Using Feature-Enhanced Transformer-Driven Image Matching. IEEE Trans. Instrum. Meas. 2024, 73, 5502511.

- Jiang, B.; Luo, S.; Wang, X.; Li, C.; Tang, J. Amatformer: Efficient feature matching via anchor matching transformer. IEEE Trans. Multimed. 2023, 26, 1504–1515.

- Tian, Y.; Balntas, V.; Ng, T.; Barroso-Laguna, A.; Demiris, Y.; Mikolajczyk, K. D2D: Keypoint extraction with describe to detect approach. In Proceedings of the Asian Conference on Computer Vision, Kyoto, Japan, 30 November–4 December 2020.

- Fan, Z.; Liu, Y.; Liu, Y.; Zhang, L.; Zhang, J.; Sun, Y.; Ai, H. 3MRS: An effective coarse-to-fine matching method for multimodal remote sensing imagery. Remote Sens. 2022, 14, 478.

- Yao, Y.; Zhang, Y.; Wan, Y.; Liu, X.; Yan, X.; Li, J. Multi-modal remote sensing image matching considering co-occurrence filter. IEEE Trans. Image Process. 2022, 31, 2584–2597.

- Xu, Y.; Xi, H.; Ren, K.; Zhu, Q.; Hu, C. Gait recognition via weighted global-local feature fusion and attention-based multiscale temporal aggregation. J. Electron. Imaging 2025, 34, 013002.

- Choi, J.; Myung, H. BRM localization: UAV localization in GNSS-denied environments based on matching of numerical map and UAV images. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Las Vegas, NV, USA, 24 October 2020–24 January 2021; pp. 4537–4544.

- Hou, H.; Lan, C.; Xu, Q. UAV absolute positioning method based on global and local deep learning feature retrieval from satellite image. J. Geo-Inf. Sci. 2023, 25, 1064–1074.

- Sui, T.; An, S.; Chen, H.; Zhang, M. Research on target location algorithm based on UAV monocular vision. In Proceedings of the 2020 International Conference on Big Data & Artificial Intelligence & Software Engineering (ICBASE), Bangkok, Thailand, 30 October–1 November 2020; pp. 398–402.

- Zhuang, L.; Zhong, X.; Xu, L.; Tian, C.; Yu, W. Visual SLAM for Unmanned Aerial Vehicles: Localization and Perception. Sensors 2024, 24, 2980.

- Liu, J.; Xiao, J.; Ren, Y.; Liu, F.; Yue, H.; Ye, H.; Li, Y. Multi-Source Image Matching Algorithms for UAV Positioning: Benchmarking, Innovation, and Combined Strategies. Remote Sens. 2024, 16, 3025.

- Li, S.; Liu, C.; Qiu, H.; Li, Z. A transformer-based adaptive semantic aggregation method for UAV visual geo-localization. In Proceedings of the Chinese Conference on Pattern Recognition and Computer Vision (PRCV), Xiamen, China, 13–15 October 2023; Springer: Berlin/Heidelberg, Germany, 2023; pp. 465–477.

- Dai, M.; Chen, J.; Lu, Y.; Hao, W.; Zheng, E. Finding point with image: An end-to-end benchmark for vision-based UAV localization. arXiv 2022, arXiv:2208.06561.

- Muja, M.; Lowe, D.G. Fast approximate nearest neighbors with automatic algorithm configuration. VISAPP 2009, 2, 2.

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149.

- Paszke, A. Pytorch: An imperative style, high-performance deep learning library. arXiv 2019, arXiv:1912.01703.

- Nair, V.; Hinton, G.E. Rectified linear units improve restricted boltzmann machines. In Proceedings of the 27th International Conference on Machine Learning (ICML-10), Haifa, Israel, 21–24 June 2010; pp. 807–814.

- Likas, A.; Vlassis, N.; Verbeek, J.J. The global k-means clustering algorithm. Pattern Recognit. 2003, 36, 451–461.

- Qin, J.; Lan, Z.; Cui, Z.; Zhang, Y.; Wang, Y. A satellite reference image retrieval method for absolute positioning of UAVs. Geomat. Inf. Sci. Wuhan Univ. 2023, 48, 368–376.

- Mittal, A.; Moorthy, A.K.; Bovik, A.C. No-reference image quality assessment in the spatial domain. IEEE Trans. Image Process. 2012, 21, 4695–4708.

- Harris, C.; Stephens, M. A combined corner and edge detector. In Proceedings of the Alvey Vision Conference, Manchester, UK, 31 August 1988; Volume 15, pp. 10–5244.

- Shi, J. Good features to track. In Proceedings of the 1994 Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 21–23 June 1994; pp. 593–600.

- Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2564–2571.

- Calonder, M.; Lepetit, V.; Strecha, C.; Fua, P. Brief: Binary robust independent elementary features. In Proceedings of the Computer Vision–ECCV 2010: 11th European Conference on Computer Vision, Heraklion, Crete, Greece, 5–11 September 2010; Proceedings, Part IV 11. Springer: Berlin/Heidelberg, Germany, 2010; pp. 778–792.

- Ma, N.; Zhang, X.; Zheng, H.T.; Sun, J. Shufflenet v2: Practical guidelines for efficient cnn architecture design. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 116–131.

- Howard, A.; Sandler, M.; Chu, G.; Chen, L.C.; Chen, B.; Tan, M.; Wang, W.; Zhu, Y.; Pang, R.; Vasudevan, V.; et al. Searching for mobilenetv3. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1314–1324.

- Bazarevsky, V.; Kartynnik, Y.; Vakunov, A.; Raveendran, K.; Grundmann, M. Blazeface: Sub-millisecond neural face detection on mobile gpus. arXiv 2019, arXiv:1907.05047.

- Han, K.; Wang, Y.; Tian, Q.; Guo, J.; Xu, C.; Xu, C. Ghostnet: More features from cheap operations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 1580–1589.

- Cai, Z.; Ravichandran, A.; Maji, S.; Fowlkes, C.; Tu, Z.; Soatto, S. Exponential moving average normalization for self-supervised and semi-supervised learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 194–203.

- Shafiq, M.; Gu, Z. Deep residual learning for image recognition: A survey. Appl. Sci. 2022, 12, 8972.

- He, T.; Zhang, Z.; Zhang, H.; Zhang, Z.; Xie, J.; Li, M. Bag of tricks for image classification with convolutional neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 558–567.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).