Abstract

Background/Objectives: Lung cancer is a major global health challenge: it carries high morbidity and is the leading cause of cancer-related mortality. Early and accurate diagnosis is crucial for improving patient outcomes. Computed tomography (CT) imaging plays a vital role in detection, and deep learning (DL) has emerged as a transformative tool to enhance diagnostic precision and enable early identification. This systematic review examined the advancements, challenges, and clinical implications of DL in lung cancer diagnosis via CT imaging, focusing on model performance, data variability, generalizability, and clinical integration. Methods: Following the 2020 Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines, we analyzed 1448 articles published between 2015 and 2024. These articles were sourced from major scientific databases, including the Institute of Electrical and Electronics Engineers (IEEE), Scopus, Springer, PubMed, and Multidisciplinary Digital Publishing Institute (MDPI). After applying stringent inclusion and exclusion criteria, we selected 80 articles for review and analysis. Our analysis evaluated DL methodologies for lung nodule detection, segmentation, and classification, identified methodological limitations, and examined challenges to clinical adoption. Results: DL models demonstrated high accuracy, achieving nodule detection rates exceeding 95% (with a maximum false-positive rate of 4 per scan) and a classification accuracy of 99% (sensitivity: 98%). However, challenges persist, including dataset scarcity, annotation variability, and limited population generalizability. Hybrid architectures that combine convolutional neural networks (CNNs) and transformers show promise in improving nodule localization. Nevertheless, fewer than 15% of the studies validated models using multicenter datasets or diverse demographic data.
Conclusions: While DL exhibits significant potential for lung cancer diagnosis, limitations in reproducibility and real-world applicability hinder its clinical translation. Future research should prioritize explainable artificial intelligence (AI) frameworks, multimodal integration, and rigorous external validation across diverse clinical settings and patient populations to bridge the gap between theoretical innovation and practical deployment.

1. Introduction

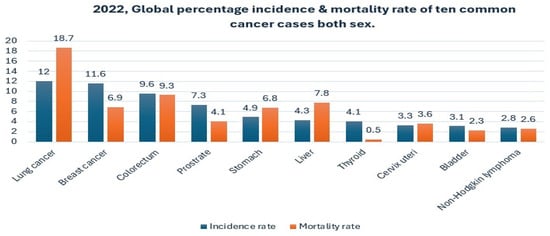

Over the past two decades, cancer has emerged as a significant global health crisis due to its high mortality rates and substantial economic burden [1,2]. In 2022, the International Agency for Research on Cancer (IARC) reported over 20 million new cancer diagnoses worldwide, resulting in approximately 9.7 million deaths. Lung cancer accounts for the highest proportion, with 2.5 million cases (12.4% of all cancers) and 1.8 million deaths (18.7% of global cancer-related mortality) [3]. Figure 1 illustrates the estimated worldwide incidence and mortality rates for the ten most common cancers in 2022 [4]. In the United States, the American Cancer Society (ACS) estimated 238,340 new lung cancer cases and 127,070 deaths in 2023, underscoring its profound public health impact [5]. Similarly, a 2022 study by Peking Medical College and the Chinese Academy of Medical Sciences documented 870,982 cases and 766,898 deaths in China, highlighting the global severity of the disease [6].

Figure 1.

Global percentage incidence and mortality rate of the ten most common cancer cases in 2022 for both sexes [4].

Beyond mortality, lung cancer imposes significant social and financial burdens, including high medical costs and strain on healthcare systems. Prognosis remains poor for advanced-stage diagnosis, with survival rates dropping sharply. Early detection and timely intervention are critical for preventing late-stage progression and improving patient outcomes [7]. The economic burden is staggering: a study published in JAMA Oncology projected that the global cost of 29 cancers will reach $25.2 trillion by 2050, with lung cancer accounting for approximately $3.9 trillion, or 15.4% of the total cost [8]. Its aggressive nature, marked by high recurrence rates and frequent late-stage detection, further compromises treatment efficacy and clinical outcomes, making lung cancer a pressing global health challenge [7,9].

Medical professionals classify lung cancer into two primary categories: small-cell lung cancer (SCLC) and non-small-cell lung cancer (NSCLC). NSCLC, representing approximately 85% of cases, grows and spreads more slowly than SCLC and includes subtypes such as adenocarcinoma, squamous cell carcinoma, and large-cell carcinoma. In contrast, SCLC, strongly linked to smoking, is highly aggressive, metastasizes rapidly, and often disseminates early [10,11]. Effective diagnosis is pivotal for managing this complex disease. Traditional diagnostic methods, such as microscopy, chest X-rays, and clinical biomarkers, are prone to observational errors and limitations in sensitivity and specificity, which can often lead to delayed or incorrect diagnoses.

Radiologists increasingly rely on advanced imaging modalities, such as computed tomography (CT), magnetic resonance imaging (MRI), and positron emission tomography (PET). Among these, low-dose CT scans have emerged as the gold standard for early detection because of their superior ability to capture detailed anatomical structures [12,13]. The National Lung Screening Trial (NLST) demonstrated a 20% reduction in mortality with low-dose CT, attributed to its ability to generate high-resolution 3-dimensional reconstructions [14]. However, challenges such as data overload, overdiagnosis, and false positives persist [15,16]. The manual interpretation of CT scans is also prone to errors, given that a typical scan contains 200–300 slices.

Advancements in artificial intelligence (AI)-based computer-aided diagnosis (CAD) technologies leverage readily accessible data to enhance diagnostic accuracy and overcome the limitations of manual interpretation. Deep learning (DL) plays a crucial role in detecting, segmenting, and classifying complex medical images. Numerous CAD models have been developed to effectively handle the variability in CT scan data.

Motivation and Contribution

Recent developments in deep learning (DL) offer promising solutions to challenges in lung cancer diagnosis. Advanced DL architectures, particularly convolutional neural networks (CNNs), have demonstrated remarkable success in automating the detection, segmentation, and classification of lung nodules from computed tomography (CT) images. These techniques improve diagnostic accuracy and streamline clinical workflows, reducing the burden on healthcare systems.

Motivated by the transformative potential of DL in enhancing lung cancer diagnosis, this systematic literature review (SLR) consolidates fragmented research on DL applications in CT-based lung cancer diagnosis from 2015 to 2024. Existing reviews, such as Rui L. et al. (2022) [17], focus primarily on pulmonary nodule detection and often emphasize nodule-specific tasks over the broader diagnostic pipeline. Similarly, Forte et al. (2022) [18] conducted a systematic review and meta-analysis of DL algorithm performance (e.g., sensitivity and specificity) but treated diagnosis as a single endpoint rather than analyzing individual stages. Hosseini et al. (2024) [19] provided a general overview of DL in lung cancer diagnosis, without disaggregating detection, segmentation, and classification tasks. Dodia et al. (2022) [20], by comparison, focused narrowly on technical comparisons of detection methods.

Unlike these reviews, our study compares performance metrics, including accuracy (Acc), sensitivity (Sen), specificity (Spe), precision–recall (PRC), F1-score, false positive reduction (FP), Dice similarity coefficient (DSC), computational performance (CPM), the area under the curve (AUC), receiver operating characteristics (ROC), and free-response operating characteristics (FROC). Comparing these metrics exposes practical challenges, such as dataset scarcity and annotation variability, while maintaining a comprehensive view of the diagnostic process. The novelty of our research lies in its holistic approach to applying deep learning for lung cancer diagnosis, with the following contributions:

- Granular pipeline analysis: A detailed dissection of the diagnostic pipeline of detection, segmentation, and classification, tailored explicitly for CT imaging;

- Practical challenges: Emphasis on real-world issues, such as data scarcity, data variability, and challenges in clinical integration;

- Forward-looking perspectives: A critical evaluation of performance metrics and a discussion of future directions, including the potential of CNN-transformer hybrid architectures and explainable AI frameworks.

This review assesses current deep learning methodologies, addresses challenges such as data variability and limited generalizability, and proposes future research directions to advance the development of reliable and interpretable diagnostic tools for lung cancer.

The rest of the paper is structured as follows: Section 2 provides an overview of deep learning (DL) techniques for lung nodule detection, segmentation, and classification; Section 3 details the research methodology, which follows the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) 2020 guidelines [21]; Section 4 presents the results and data extraction analysis; Section 5 discusses key findings and limitations; and Section 6 concludes the study.

2. Literature Review

2.1. Evolution of CAD and DL

Computer-aided diagnostic (CAD) systems were first conceptualized in the 1960s for the analysis of radiographic images, with early applications in the detection of lung nodules using chest X-rays. These systems later expanded to CT-based lung cancer detection following the invention of computed tomography in the 1970s, helping clinicians interpret medical images more effectively. Over time, CAD revolutionized radiology by reducing workloads and improving diagnostic precision [22]. Early applications of neural networks in medical imaging date back to the 1990s, including work by Lo et al. in 1995 on lung nodule detection. However, the widespread adoption of deep learning (DL) in radiology began in the early 2010s, driven by breakthroughs in convolutional neural networks (CNNs) and the acceleration of GPU computational power. This advancement enabled large-scale automated detection of pulmonary nodules and other pathologies. A key milestone occurred in 2012 when Hinton’s team won the ImageNet Large-Scale Visual Recognition Challenge (ILSVRC) using the AlexNet model, inspiring extensive research into DL architectures for medical imaging, including CNNs, deep belief networks (DBNs), deep autoencoders (DAEs), restricted Boltzmann machines (RBMs), recurrent neural networks (RNNs), and long short-term memory (LSTM) networks [23,24].

Transfer learning, first formally conceptualized by Pan and Yang (2009), has become transformative in medical imaging through its adaptation of ImageNet-based models [25]. This approach effectively addresses the critical challenge of limited labeled medical data by leveraging pre-trained architectures (Shin et al., 2016) [26]. Widely used models, such as the Visual Geometry Group (VGG) [27], ResNet, and DenseNet, have demonstrated success, significantly improving nodule identification accuracy, enhancing the classification of benign and malignant cases, and optimizing diagnostic workflows [28,29]. The field has advanced considerably, with deep learning (DL) systems now capable of fully automated CT scan analysis at high accuracy levels, thereby eliminating the traditional requirement for manual feature extraction [30]. Contemporary deep learning (DL) applications now extend beyond detection to predictive analytics (e.g., survival rate estimation) and tumour behaviour analysis, although current implementations focus primarily on multi-disease detection [31]. However, significant challenges remain, including (1) the need for large, annotated datasets and (2) substantial computational requirements, in addition to intrinsic limitations such as nodule heterogeneity and imaging noise variability, which may compromise model robustness and generalizability [32].

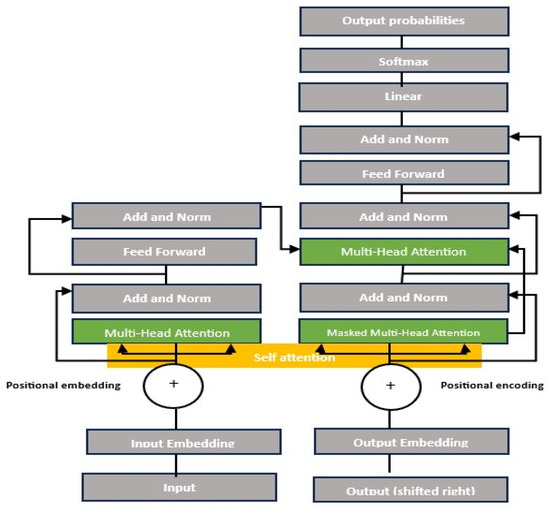

In 2020, Dosovitskiy et al. introduced vision transformers (ViTs) [33], addressing the limitations of CNNs in modelling long-range dependencies through self-attention mechanisms (see Figure 3 and Figure 4 for illustrations of the vision transformer architecture). While ViTs excel at capturing global context, their reliance on larger datasets and computational resources often makes CNNs more practical in clinical settings with limited data, as highlighted in recent comprehensive reviews [34]. Hybrid architectures, such as TransUNet, now aim to balance these trade-offs [35].

2.2. Deep Convolutional Neural Networks (DCNNs)

A deep convolutional neural network (DCNN) is a specialized neural architecture designed to process grid-structured data, such as images, using convolutional layers, pooling operations, and hierarchical feature learning. Unlike traditional fully connected neural networks (FCNNs), DCNNs preserve spatial relationships, making them ideal for medical imaging tasks such as lung nodule detection in CT scans. Their ability to automatically learn hierarchical spatial features has led to their dominance in medical imaging, where they have demonstrated remarkable success in lung cancer diagnosis, particularly in nodule detection, segmentation, and malignancy prediction [36]. DCNNs are feedforward, multilayer neural networks for pattern recognition in spatially correlated data. Unlike traditional networks, they perform convolutional operations directly on input images, automating feature extraction and eliminating the need for manual intervention. As a result, DCNN-based systems have become essential for intelligent lung cancer screening and detection, as they learn the relevant image features themselves and therefore require little manual preprocessing [37].

Furthermore, DCNNs excel in various tasks, including image classification, localisation, detection, segmentation, and registration. Their foundational impact began with the introduction of LeNet by LeCun et al. in 1998 [38], which pioneered a convolutional architecture for digit recognition. The prominence of DCNNs in medical imaging surged after 2012, driven by the success of AlexNet and the advent of GPU-accelerated training. Today, DCNNs underpin most automated pipelines in medical image analysis.

Modern architectures, such as ResNet [28] and U-Net, have further propelled the field by enabling more accurate and efficient analysis of complex medical images. Specifically, U-Net’s encoder–decoder structure supports precise segmentation [39], while ResNet’s residual blocks improve feature learning by facilitating the training of deeper networks without performance degradation [40].

Additionally, recent lightweight architectures, such as InceptionNet [41], MobileNet [42] and Xception [43] have contributed to the development of efficient and accurate deep learning applications. These models are especially valuable in resource-constrained and real-time clinical settings due to their reduced computational requirements.

2.2.1. Overview of Basic DL Techniques

Table 1 provides a structured summary of fundamental deep learning (DL) architectures, helping readers quickly grasp the core characteristics and structural differences among popular models. The table categorizes various DL techniques, including the following:

Table 1.

Overview of basic DL techniques.

- Convolutional neural networks (CNNs);

- Fully connected neural networks (FCNNs/DNNs);

- Deep belief networks (DBNs);

- Recurrent neural networks (RNNs);

- Long short-term memory (LSTM) networks;

- Autoencoders (AEs);

- Vision transformers (ViTs).

This concise reference is particularly valuable for newcomers, as it outlines the unique features, applications, and architectural frameworks of each deep learning (DL) technique, enabling readers to easily identify the strengths and typical use cases of each model type.

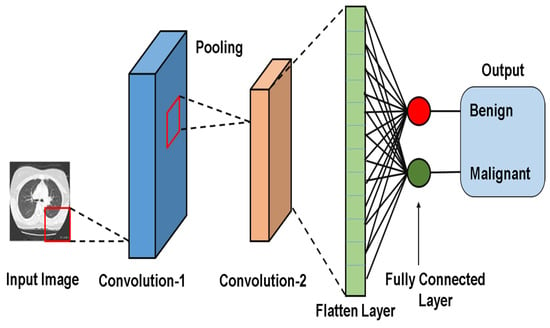

2.2.2. CNN Model Architecture

A standard CNN architecture comprises sequential layers, including convolutional, activation, pooling, and fully connected (FC) layers, culminating in a Softmax layer for classification. These layers process imaging data hierarchically, as expressed by the following:

Figure 2.

CNN model architecture for lung nodule detection and classification.

The key components of a convolutional neural network (CNN) are as follows:

- Convolution (Conv) Layer: Extracts spatial features (e.g., edges, texture) using learnable filters (kernels);

- Activation Function (f_act): Introduces nonlinearity (e.g., ReLU) to enable the network to model complex patterns;

- Pooling Layer: Reduces spatial dimension while retaining critical features (e.g., max pooling preserves the dominant activation);

- Fully Connected (FC) Layer: Combines learned features for tasks like classification, regression, and/or feature learning.

The variables n, m, and k denote how many times the corresponding operations are repeated. The convolutional layers process pixel values to extract high-level features, and their weights are optimized via backpropagation. Pooling layers enhance generalization by down-sampling feature maps, while fully connected (FC) layers map features to output classes. In some architectures, FC layers are replaced with 1 × 1 convolutions to reduce the computational cost. Figure 2 illustrates a CNN model for detecting and classifying lung nodules. A CT image passes through convolutional and pooling layers, followed by FC layers. The Softmax layer assigns class probabilities (benign vs. malignant) [44].
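The layer sequence described above can be illustrated with a minimal, dependency-free sketch of a single forward pass (convolution, ReLU, max pooling, one FC layer, Softmax). It is not the architecture of any reviewed study: the 6 × 6 toy patch, the all-ones kernel, the FC weights, and all function names are hypothetical values chosen only to make the data flow visible, and no training (backpropagation) is shown.

```python
import math

def conv2d(image, kernel):
    """Valid (no padding), stride-1 2D convolution as used in CNNs (cross-correlation)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def relu(feature_map):
    """Element-wise ReLU activation."""
    return [[max(0.0, v) for v in row] for row in feature_map]

def max_pool(feature_map, size=2):
    """Non-overlapping max pooling; rows/columns that do not fill a window are dropped."""
    return [
        [
            max(feature_map[i + a][j + b] for a in range(size) for b in range(size))
            for j in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for i in range(0, len(feature_map) - size + 1, size)
    ]

def fully_connected(features, weights, biases):
    """One dense layer: one weight row and one bias per output class."""
    return [sum(w * x for w, x in zip(row, features)) + b for row, b in zip(weights, biases)]

def softmax(logits):
    """Numerically stable Softmax that turns logits into class probabilities."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def forward(image, kernel, fc_weights, fc_biases):
    """Conv -> ReLU -> Pool -> flatten -> FC -> Softmax."""
    pooled = max_pool(relu(conv2d(image, kernel)))
    flat = [v for row in pooled for v in row]
    return softmax(fully_connected(flat, fc_weights, fc_biases))

# Hypothetical 6 x 6 "CT patch" containing a bright 2 x 2 blob.
patch = [[0.0] * 6 for _ in range(6)]
for r in (2, 3):
    for c in (2, 3):
        patch[r][c] = 1.0

kernel = [[1.0] * 3 for _ in range(3)]            # illustrative 3 x 3 filter
fc_weights = [[0.1] * 4, [-0.1] * 4]              # two output classes, four pooled features
fc_biases = [0.0, 0.0]

probs = forward(patch, kernel, fc_weights, fc_biases)
print(probs)  # two class probabilities that sum to 1
```

In a real system the kernel and FC weights are learned rather than fixed, and many filters are stacked per layer; the sketch only mirrors the order of operations.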

Deeper CNNs improve performance by expanding receptive fields and enhancing feature extraction. Smaller convolution kernels (e.g., 3 × 3) enhance computational efficiency, as evident in the evolution from early frameworks, such as AlexNet with its five convolutional layers [45], to advanced architectures. For example, Pang et al. [46] used VGG-16, a deep convolutional neural network (DCNN) that gains depth by stacking 3 × 3 kernels, together with boosting techniques to identify the pathological type of lung cancer from CT images. In contrast, Xie et al. [47] introduced ResNeXt, a residual architecture that enhances image classification performance by leveraging a new dimension termed cardinality. This approach aggregates multiple transformations within a modularized network structure, offering a more effective alternative to simply increasing network depth and width. These innovations strike a balance between computational efficiency and diagnostic precision in lung cancer imaging tasks.

2.2.3. Deep CNNs vs. ViTs

Convolutional Neural Networks

Convolutional neural networks (CNNs) remain the cornerstone of medical imaging analysis. Their architecture is particularly suited for grid-like data (e.g., images) because the convolutional operation applies shared weights across all pixels, thereby imposing a strong prior on spatial hierarchies. Since AlexNet’s 2012 breakthrough in image classification, CNNs have driven advancement in medical imaging. Subsequent architectures expanded receptive fields, optimized feature extraction, and reduced computational cost by using smaller convolutional kernels.

CNN architectures have evolved significantly from the comparatively simple AlexNet, with its five convolutional layers, to more sophisticated frameworks, such as VGG, ResNet, DenseNet, and GoogLeNet [48]. More recent models, such as InceptionNet, MobileNet, and Xception, have further enhanced computational efficiency and accuracy, making them ideal for resource-constrained environments and real-time applications.

Recent studies have highlighted the efficacy of CNNs in medical imaging tasks. For example, Lakshmana Prabu et al. [49] combined an optimal deep neural network (ODNN) with linear discriminant analysis (LDA) for nodule classification, achieving an accuracy of 94.56%. Teramoto and Fujita [50] prioritized false-positive reduction, attaining 80% detection accuracy on the Lung Image Database Consortium (LIDC) dataset. Sebastian and Dua (2023) [51] proposed a four-stage CNN model with Otsu thresholding and local binary pattern (LBP) features, achieving 92.58% accuracy and a rapid processing time of 134.596 s per scan.

Vision Transformers (ViTs)

Vaswani et al. (2017) [52] introduced the transformer for natural language processing (NLP), whose self-attention mechanism revolutionized deep learning. Vision transformers adapt this architecture for computer vision by treating images as sequences of patches. A ViT excels at capturing global dependencies and consists of four key stages: image patching and embedding, positional encoding, a transformer encoder, and a classification head (MLP head). The breakdown of these stages is as follows:

- Image patching and embedding:

  - Patch splitting: An input image (e.g., of size 224 × 224 pixels) is divided into fixed-size, non-overlapping patches. For instance, splitting the image into 16 × 16-pixel patches yields a grid of (224/16) × (224/16) = 14 × 14 = 196 patches.

  - Patch flattening: Each patch is reshaped into a 1D vector (e.g., a 16 × 16 × 3 patch gives a 768-dimensional vector).

  - Patch embedding: Flattened patches are projected into a higher-dimensional space via a learnable linear projection. This linear transformation enables the model to learn richer feature representations for each patch. The result is a sequence of patch embeddings, each representing a part of the image.

- Positional encoding: Spatial information is retained by adding positional embeddings to the patch vectors. These embeddings enable the model to understand the spatial relationships between the patches.

- Transformer encoder:

  - Multi-head self-attention (MSA): The self-attention mechanism allows each patch to attend to all others, computing attention scores as follows:

    $$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{QK^{\top}}{\sqrt{d_k}}\right)V$$

    where Q represents the queries, K the keys, and V the values, all obtained through learned linear projections, and d_k is the dimension of the keys.

  - Feedforward network (FFN): After self-attention, features pass through two fully connected layers with a nonlinear activation function (typically GELU).

  - Residual connection and layer normalization: These stabilize training by preserving information across layers, ensuring that the deeper layers do not lose critical information from the earlier layers.

- Classification head (MLP head): The classification token (CLS) is extracted and fed into a multilayer perceptron (MLP) for final classification.
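The patch arithmetic and the attention step in the stages above can be checked with a short, dependency-free sketch. It assumes a 224 × 224 × 3 input and 16 × 16 patches as in the example, so each patch flattens to 16 · 16 · 3 = 768 values. The function names are hypothetical, the attention is single-head, and the learned projections for Q, K, and V are replaced by identity mappings purely for illustration; positional embeddings and the MLP head are omitted.

```python
import math

def split_into_patches(image, patch_size):
    """Split an H x W x C nested-list image into flattened, non-overlapping patches."""
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image size must be divisible by the patch size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            flat = [
                value
                for r in range(top, top + patch_size)
                for c in range(left, left + patch_size)
                for value in image[r][c]
            ]
            patches.append(flat)
    return patches

def softmax(row):
    """Numerically stable softmax over one row of attention scores."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(queries, keys, values):
    """Single-head attention: softmax(Q K^T / sqrt(d_k)) V, returned with the weights."""
    d_k = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        w = softmax(scores)
        weights.append(w)
        outputs.append(
            [sum(wj * v[d] for wj, v in zip(w, values)) for d in range(len(values[0]))]
        )
    return outputs, weights

# Patch arithmetic for a blank 224 x 224 RGB image (pixel values are irrelevant here).
image = [[[0.0, 0.0, 0.0] for _ in range(224)] for _ in range(224)]
patches = split_into_patches(image, 16)
print(len(patches), len(patches[0]))  # 196 768

# Toy attention over three 2-dimensional tokens; Q = K = V is an illustrative assumption.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
attended, attn_weights = scaled_dot_product_attention(tokens, tokens, tokens)
```

Each row of `attn_weights` sums to 1, so every output token is a weighted average of the value vectors; this is what lets a patch draw on information from any other patch in the image.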

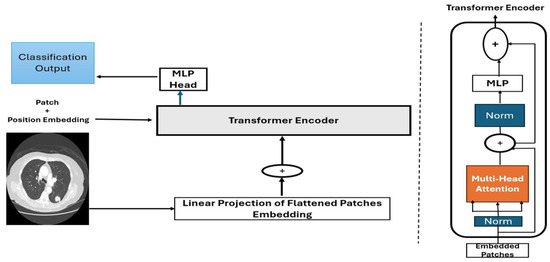

Figure 3 illustrates the Vision Transformer (ViT) architecture, while Figure 4 depicts a modified transformer for image classification.

Figure 3.

Vision Transformer model architecture [33,52].

Figure 4.

Modified transformer architecture for image classification [33].

Practical Limitations and Comparisons

Although CNNs and ViTs are promising, both face practical limitations. While ViTs excel at modelling global context, they require substantial data and computational resources. Hybrid CNN-transformer models often underperform in terms of accuracy when data is scarce [53]. For instance, Gai et al. reported that CNNs achieve 93% recall, outperforming ViTs in low-data scenarios. CNNs remain pragmatic in clinical settings due to their efficiency (e.g., lower computational demands), interpretability (e.g., clear feature visualization), and data efficiency (e.g., robust performance with limited datasets) [54]. Table 2 summarizes key comparisons between convolutional neural networks (CNNs) and vision transformers (ViTs) in medical imaging.

Table 2.

Comparison of deep CNNs versus ViTs in image processing.

The diagram in Figure 4 shows the Vision Transformer (ViT) architecture for processing CT scan images, such as lung scans. The input image first undergoes patch splitting, where it is divided into fixed-size patches that are flattened and linearly projected into embedding vectors, shown by an explicit arrow from the input image to the linear projection step. These patch embeddings are then combined with positional embeddings (indicated by a + operation) to preserve spatial relationships. The resulting enriched embeddings feed into the transformer encoder (marked by another clear arrow), where they undergo multi-head attention, layer normalization, MLP processing, and residual connections. Vertical dotted lines visually separate the initial image processing phase from the subsequent transformer computations. Finally, the processed embeddings pass to the classification head for prediction. The arrows between components (input → embedding → encoder → output) explicitly show the data flow through the ViT pipeline.

2.3. Lung Nodule Detection and Segmentation

Detecting tumours and lesion cells through medical image analysis is crucial in diagnosing lung cancer. The primary objective is to facilitate object segmentation and distinguish between benign and malignant tumours, nodules, or lesions. The detection module identifies and localizes regions of interest (ROIs) in CT images, such as nodules or tumour cells in pathology images. Moreover, the segmentation module delineates lesion boundaries in CT scans. Additionally, the classification module determines whether tumour cells are cancerous or non-cancerous and can classify disease stages.

Recent advances in deep learning (DL) have enabled the early detection of lung cancer. Numerous systems have been developed, such as the approach by Zhu et al. (2018) [55], which employed a 3D dual-path network with Faster R-CNN and gradient boosting machine (GBM), achieving 87.5% accuracy with a 12.5% error rate in lung nodule detection. Similarly, Yu [56] utilized a 3D CNN, achieving 87.94% sensitivity and 92% specificity, with four false positives per scan. However, the limited availability of datasets has restricted the generalizability of these models.
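Detection results such as those above are usually reported as a sensitivity at a given number of false positives per scan. A minimal sketch of how the two figures can be derived from per-scan counts is given below; the function name, the dictionary keys, and the counts are hypothetical and are not taken from any of the cited studies.

```python
def detection_summary(scans):
    """Aggregate per-scan counts into overall sensitivity and mean false positives per scan.

    Each scan is a dict with the number of true-positive detections ("tp"),
    missed nodules ("fn"), and false-positive detections ("fp").
    """
    tp = sum(s["tp"] for s in scans)
    fn = sum(s["fn"] for s in scans)
    fp = sum(s["fp"] for s in scans)
    sensitivity = tp / (tp + fn) if (tp + fn) else float("nan")
    fp_per_scan = fp / len(scans) if scans else float("nan")
    return sensitivity, fp_per_scan

# Illustrative counts for three scans (made-up numbers).
scans = [
    {"tp": 2, "fn": 0, "fp": 5},
    {"tp": 1, "fn": 1, "fp": 3},
    {"tp": 3, "fn": 0, "fp": 4},
]
sensitivity, fp_per_scan = detection_summary(scans)
print(f"sensitivity={sensitivity:.3f}, FP/scan={fp_per_scan:.1f}")
```

Repeating this calculation across a range of detection thresholds gives the points of an FROC curve, which is why sensitivity figures are only comparable between studies when the false-positive rate is stated alongside them.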

Lung nodule segmentation is a critical stage in CT scan processing, as scans often include irrelevant elements such as water, air, bone, and blood, which can hinder accurate nodule identification. Several CNN-based segmentation models have been proposed for the automatic segmentation of lung tumors in computed tomography (CT) images. For example, Shelhamer et al. (2017) [57] proposed a fully convolutional network (FCN) that transforms fully connected layers into convolutional layers and performs semantic segmentation through up-sampling. Liu et al. [58] proposed a patch-level model to differentiate lung and non-lung regions. However, its applicability is limited to local areas.

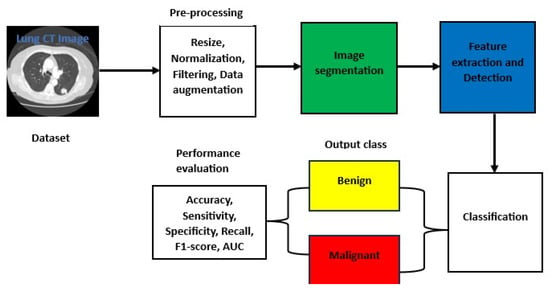

Fu et al. (2024) [59] introduced the multi-scale U-Net, which combines CNN and transformer architectures. This approach addresses the feature learning challenge through a parallel design, multi-scale feature fusion, and cross-attention modules, and reports strong results. Jiang et al. (2018) [60] utilized multigroup patches for segmentation and enhancement to design a four-channel convolutional neural network that processes both original and binary images. Their method was evaluated on the Lung Image Database Consortium and Image Database Resource Initiative (LIDC-IDRI) dataset, achieving a sensitivity of 94% with 15.1 false positives per scan. Similarly, Riaz et al. [61] proposed a hybrid model integrating MobileNetV2 as an encoder within a U-Net framework, achieving a Dice score of 0.8793. This approach outperforms traditional segmentation methods by leveraging transfer learning and efficient feature extraction. Other researchers, such as Lu et al. (2024) [62], developed an improved ParaU-Net-based parallel coding network for lung nodule segmentation, which enhances feature extraction through the Multi-Path Encoding Module (MPEM) and the Cross-Feature Fusion Module (CFFM). This method achieves an Intersection over Union (IoU) of 87.15% and a Dice coefficient of 92.16% on the LIDC dataset. Despite outperforming other advanced techniques, its high computational cost and risk of overfitting may limit its use in resource-constrained settings. Figure 5 illustrates the lung cancer diagnosis process using the DL framework. It begins with CT image acquisition, followed by lung nodule segmentation and then classification, which together form the sequential steps of the pipeline.

Figure 5.

Basic architecture for lung CT image detection, segmentation, and classification.
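The Dice coefficient and IoU quoted for the segmentation models above both measure the overlap between a predicted mask and a reference mask. The following sketch computes them for binary masks stored as nested lists; the masks are toy examples, the function name is hypothetical, and returning 1.0 when both masks are empty is a convention assumed here rather than one stated in the reviewed studies.

```python
def dice_and_iou(pred_mask, true_mask):
    """Return (Dice, IoU) for two binary masks of identical shape."""
    pred = [bool(v) for row in pred_mask for v in row]
    true = [bool(v) for row in true_mask for v in row]
    if len(pred) != len(true):
        raise ValueError("masks must have the same number of pixels")
    intersection = sum(p and t for p, t in zip(pred, true))
    pred_area, true_area = sum(pred), sum(true)
    union = pred_area + true_area - intersection
    # Assumed convention: two empty masks count as a perfect match.
    dice = 2 * intersection / (pred_area + true_area) if (pred_area + true_area) else 1.0
    iou = intersection / union if union else 1.0
    return dice, iou

# Toy 3 x 3 masks: 4 predicted pixels, 4 reference pixels, 3 shared.
predicted = [
    [0, 1, 1],
    [0, 1, 1],
    [0, 0, 0],
]
reference = [
    [0, 0, 1],
    [0, 1, 1],
    [0, 0, 1],
]
dice, iou = dice_and_iou(predicted, reference)
print(dice, iou)  # 0.75 0.6
```

For a single pair of masks, Dice is never lower than IoU, so the two figures should not be compared with each other directly across studies.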

2.4. Lung Nodule Classification

Advancements in deep learning (DL) have enabled the development of models trained to distinguish between different types of lung nodules by analyzing input data. These classifiers categorize nodules as benign or malignant, utilizing state-of-the-art DL methods. Many studies employ base models that are pre-trained on benchmark datasets, such as ImageNet [63]. For instance, Shen et al. [64] proposed a multi-crop CNN (MC-CNN) to extract nodule-specific features by cropping regions from CNN feature maps, achieving a classification accuracy of 87.14%. Nasrullah et al. [65] introduced CMixNet, a CNN-based model for nodule detection and classification, trained on the LIDC-IDRI dataset, which achieved 94% recall and 91% specificity. Chang et al. [66] developed a multiview residual selective kernel network using CT images from three anatomical planes for binary classification, reporting an AUC of 97.11%. Zhang et al. [67] proposed a lung nodule classification framework based on the squeeze-and-excitation network and aggregated residual transformation, combining the advantages of ResNeXt and achieving an accuracy of 91.67%, compared with 87.14% for the models developed by Wei et al. [68].

Other studies focus on enhancing precision. For example, Zhao et al. (2019) [69] developed a hybrid 2D-CNN that combines the LeNet and AlexNet architectures to assess the malignancy of nodules. Despite these advancements, challenges remain in accurately classifying malignant nodules from CT screening. Notably, not all classified nodules are malignant, underscoring the need to improve diagnostic frameworks to reduce false positives and enhance early diagnosis.
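The classification figures reported in this section (accuracy, recall/sensitivity, specificity) all derive from the four cells of a confusion matrix. The sketch below shows these standard definitions, together with precision and F1-score, treating malignant as the positive class; the counts are invented for illustration and the function name is hypothetical.

```python
def classification_metrics(tp, fp, tn, fn):
    """Standard binary-classification metrics from confusion-matrix counts."""
    def ratio(numerator, denominator):
        return numerator / denominator if denominator else float("nan")

    return {
        "accuracy": ratio(tp + tn, tp + fp + tn + fn),
        "sensitivity": ratio(tp, tp + fn),   # recall: malignant nodules correctly flagged
        "specificity": ratio(tn, tn + fp),   # benign nodules correctly cleared
        "precision": ratio(tp, tp + fp),
        "f1": ratio(2 * tp, 2 * tp + fp + fn),
    }

# Illustrative counts for 100 nodules (made-up numbers).
metrics = classification_metrics(tp=45, fp=5, tn=40, fn=10)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Because accuracy depends on the benign-to-malignant ratio of the test set, sensitivity and specificity are generally the more informative pair when comparing studies that use different datasets.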

3. Research Methodology

3.1. Overview

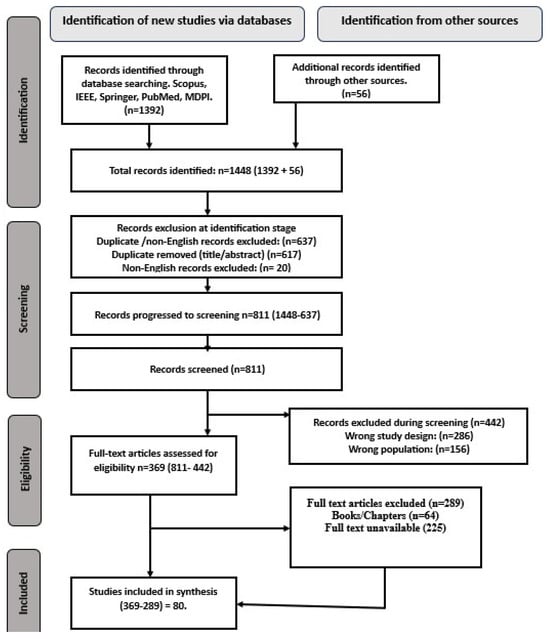

This systematic review adheres to the 2020 PRISMA guidelines [21]. We conducted a structured search across five databases (Scopus, IEEE, Springer, PubMed, and MDPI) using predefined keywords related to deep learning (DL) and lung cancer diagnosis via computed tomography (CT) imaging. After screening titles and abstracts and applying inclusion criteria, we selected 80 studies published between 2015 and 2024 for analysis. Figure 6 displays the PRISMA flow diagram and illustrates the selection process. We evaluated these studies based on their methodologies, performance metrics (e.g., accuracy, sensitivity, specificity, F1-score, false positive reduction, Dice similarity coefficient, and intersection over union (IoU)), and clinical applicability, with a focus on lung nodule detection, segmentation, and classification.

Figure 6.

2020 PRISMA record selection procedure flow diagram [21].

Research Questions:

The study addresses the following research questions (RQs):

RQ1: What are the predominant DL approaches (e.g., CNNs, Transformers, ResNet, DenseNet, and pre-trained models) and methodologies used for lung nodule detection, segmentation, and classification in CT imaging?

RQ2: How do the performance, interpretability, and scalability of DL techniques vary across diagnostic sub-tasks (detection, segmentation, and classification)?

RQ3: What are the characteristics, strengths, and limitations of publicly available CT imaging datasets used in lung cancer research?

RQ4: What validation methods were used to evaluate the DL model’s performance, and how do these methods align with clinical requirements?

RQ5: What are the key technical and practical challenges hindering the deployment of DL for lung cancer diagnosis?

3.2. Literature Search and Selection

We employed a systematic search approach to identify and select relevant journal articles aligned with our research objectives. A comprehensive search from 2015 to 2024 was conducted using the following:

Databases: Scopus, IEEE Xplore, Springer, PubMed, and MDPI.

Grey literature: Preprints (arXiv, Semantic Scholar) and conference proceedings of Medical Image Computing and Computer Assisted Intervention (MICCAI) and the Conference on Computer Vision and Pattern Recognition (CVPR).

Keywords: “Deep Learning”, “Lung cancer”, “Pulmonary nodule”, “CT imaging”, “Computed Tomography”, “Nodule Detection”, “Segmentation”, “Classification”, “CNN”, and “Vision Transformer”.

Boolean operators: We used AND/OR to combine terms such as (“lung cancer” OR “pulmonary nodule”) AND (“deep learning” OR “CNN” OR “Vision Transformer” OR “Hybrid CNN-Transformer”) AND (“CT imaging” OR “Computed tomography”) AND (“Detection” OR “Segmentation” OR “Classification”).
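As an illustration of how such a search string can be assembled reproducibly, the following minimal sketch joins each keyword group with OR and then combines the groups with AND. The helper names `or_group` and `build_query` are hypothetical, and the exact syntax accepted by each database (field tags, quoting rules) may differ from this generic form.

```python
def or_group(terms):
    """Quote each term, join with OR, and wrap the group in parentheses."""
    return "(" + " OR ".join(f'"{t}"' for t in terms) + ")"


def build_query(groups):
    """Combine the OR-groups with AND to form one Boolean search string."""
    return " AND ".join(or_group(g) for g in groups)


# Keyword groups taken from the Boolean expression described in the text.
GROUPS = [
    ["lung cancer", "pulmonary nodule"],
    ["deep learning", "CNN", "Vision Transformer", "Hybrid CNN-Transformer"],
    ["CT imaging", "Computed tomography"],
    ["Detection", "Segmentation", "Classification"],
]

query = build_query(GROUPS)
print(query)
```

Generating the string from lists makes unbalanced parentheses impossible and keeps the same query reusable across databases.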

3.3. Initial Search Results

Table 3 summarizes the initial results identified from the selected databases before applying the inclusion/exclusion criteria for article screening.

Table 3. Initial selection results.

3.4. Inclusion/Exclusion Criteria

We employed a systematic approach to identify and select relevant journal articles that align with our research objectives. Table 4 details the exact inclusion and exclusion criteria.

Table 4. Inclusion and exclusion requirement criteria.

3.5. Search Strategy

Our search strategy included the following:

- Screening: Two independent reviewers screened the titles/abstracts and keywords of the English-language articles, and conflicts were resolved through a third reviewer.

- Full-text review: Articles meeting the inclusion criteria proceeded to the data extraction stage.

- Timeframe: We prioritized studies published between 2015 and 2024.

- Organization: Full texts were exported to Mendeley, and irrelevant studies (e.g., textbooks, non-peer-reviewed reports, and non-CT modalities) were excluded.

- Data Extraction: We extracted information on global cancer incidence and mortality, model architecture, datasets, performance metrics, and validation methods.

- Tools: We used a custom Excel template and the Mendeley reference manager.

3.6. Quality Assessment

We explicitly reported the reasons for exclusion (language, study design, population) in the PRISMA flow diagram (Figure 6) to enhance transparency and mitigate perceived selection bias. To ensure methodological rigour, we evaluated studies using the following criteria:

- Performance metrics: accuracy, sensitivity, specificity, F1-score, false positive reduction, Dice similarity coefficient, competition performance metric (CPM), and AUC-ROC;

- Validation methods: cross-validation and external dataset testing;

- Reproducibility: code availability and transparency in hyperparameter reporting;

- Reporting transparency: adherence to standardized reporting guidelines.

These criteria were systematically applied to assess the quality and reliability of the included studies, ensuring consistency and accountability in our analysis.

3.7. Administrative Information

- Registration Platform: Adopted the International Prospective Register of Systematic Reviews (PROSPERO)

Articles lacking methodological clarity or performance metrics were excluded to prioritize high-quality evidence.

4. Results

4.1. Study Selection Process

An initial search of Scopus, IEEE, Springer, PubMed, and MDPI yielded 1392 articles, with 56 additional records identified through other sources, resulting in 1448 articles. During the initial identification stage, 637 records were excluded due to duplication or language restrictions, of which 617 records (96.86%) were duplicated, according to title/abstract screening, and 20 records (3.14%) were excluded for being non-English.

This left 811 records that progressed to the screening phase. During screening, a further 442 records were excluded for incompatible study designs or an irrelevant population (see the PRISMA flow diagram in Figure 6 for further information).
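The counts reported for the identification and screening stages can be checked with simple arithmetic. The sketch below uses only the figures stated in the text. The value computed for records remaining after screening is derived here rather than quoted from the review, and the later eligibility exclusions that lead to the 80 included studies are not modelled.

```python
# Identification stage (figures as reported in the text).
database_records = 1392
other_sources = 56
identified = database_records + other_sources

# Records removed before screening.
duplicates = 617
non_english = 20
removed_before_screening = duplicates + non_english

# Screening stage.
screened = identified - removed_before_screening
excluded_at_screening = 442
remaining_after_screening = screened - excluded_at_screening  # derived, not quoted

# Shares of the records removed before screening, in percent.
duplicate_share = round(100 * duplicates / removed_before_screening, 2)
non_english_share = round(100 * non_english / removed_before_screening, 2)

print(identified, removed_before_screening, screened, remaining_after_screening)
print(duplicate_share, non_english_share)
```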

4.2. Study Characteristics

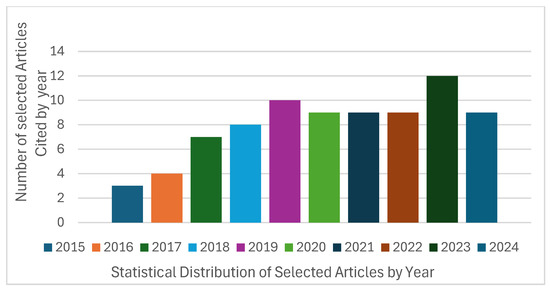

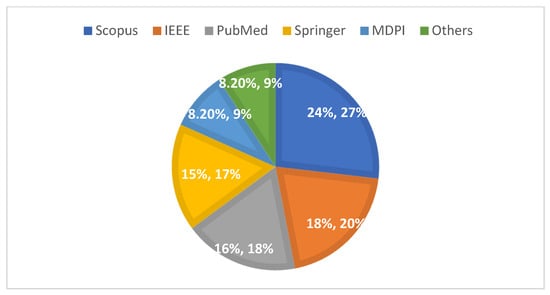

The number of publications on deep learning (DL) methodologies increased significantly starting in 2017 and peaked in 2023 (see Figure 7, which illustrates the distribution of selected articles from 2015 to 2024). Additionally, the percentage distribution across the five selected databases and other sources indicates that 24% of the studies were retrieved from Scopus, while 18% were accessed via IEEE Xplore (see Figure 8). Notably, articles published in IEEE Transactions on Medical Imaging and Scientific Reports together accounted for 10% of the analyzed publications. As detailed in Table 5, Table 6 and Table 7, more than 60% of the studies focused on nodule detection and classification, whereas approximately 25% addressed segmentation tasks.

Figure 7. Articles distribution of selected papers (2015 to 2024).

Figure 8. Percentage distribution of articles from selected databases (2015–2024).

Table 5. Summary of DL techniques for lung cancer detection.

Table 6. Summary of DL techniques for lung nodule segmentation.

Table 7. Relevant studies of DL in lung cancer classification.

4.3. Data Synthesis and Analysis

The studies were categorized into three diagnostic tasks: detection (Table 5), segmentation (Table 6), and classification (Table 7). Each task was evaluated using metrics such as accuracy, sensitivity, specificity, and the area under the curve (AUC). For example, in the detection task, hybrid CNN models achieved a sensitivity range of 80–98% and false positive rates of up to four per scan. Segmentation models, including those based on Mask R-CNN and multi-scale U-Net architectures, achieved Dice scores of up to 92%. Classification models, particularly CNN-Transformer hybrids, demonstrated accuracy ranging from 84% to 99% in differentiating between benign and malignant cases.

4.4. Databases for Lung Cancer CT Imaging

Acquiring appropriate datasets is critical for deep learning architectures, and these should include sufficient training and test sets. However, because these models are data-intensive, the available datasets are often inadequate. To address this challenge, researchers employ techniques such as data augmentation (e.g., geometric transformation, intensity adjustment, generative adversarial networks (GANs)), few-shot learning (FSL), or transfer learning. Our review observed that public datasets were primarily utilised in lung cancer diagnosis through CT imaging. These include LIDC-IDRI, ELCAP, LUNA16, ANODE09, Kaggle–Bowl, and LNDb 2020.
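To make the geometric and intensity augmentations mentioned above concrete, the following is a minimal NumPy sketch for a single 2D CT patch. The function name `augment_patch`, the scaling and offset ranges, and the clipping window of −1000 to 400 HU are illustrative assumptions, not settings reported by the reviewed studies.

```python
import numpy as np


def augment_patch(patch, rng):
    """Return a randomly flipped, rotated, and intensity-adjusted copy of a 2D patch."""
    out = patch.copy()
    # Geometric transformation: random horizontal flip.
    if rng.random() < 0.5:
        out = np.flip(out, axis=1)
    # Geometric transformation: rotation by a random multiple of 90 degrees.
    out = np.rot90(out, k=int(rng.integers(0, 4)))
    # Intensity adjustment: random scaling and offset (assumed ranges).
    scale = rng.uniform(0.9, 1.1)
    shift = rng.uniform(-20.0, 20.0)
    out = out * scale + shift
    # Clip to an assumed Hounsfield-unit window so values stay plausible.
    return np.clip(out, -1000.0, 400.0)


rng = np.random.default_rng(0)
patch = rng.uniform(-1000.0, 400.0, size=(32, 32))  # synthetic stand-in for a CT patch
augmented = augment_patch(patch, rng)
print(augmented.shape)
```

In practice, the same random transformation would also need to be applied to any segmentation mask paired with the patch, with the intensity step skipped for the mask.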

Additionally, some studies utilized private datasets from institutions such as Montgomery County Shenzhen Hospital, Aarthi Scan Hospital in Tirunelveli, Tamil Nadu, India, the COPDGene Clinical Trial (Lung Database), and the Alibaba Tianchi dataset. However, we excluded specific private datasets from our analysis due to data privacy restrictions. Although we contacted the respective researchers to obtain usage rights, permission was not granted before finalizing this review. Consequently, citations for these datasets were omitted. Table 8 provides a detailed overview of the public datasets mentioned above, covering their release years, image modalities, sample sizes, file formats, and available annotations.

Table 8. Summary of various lung CT databases.

Datasets Analysis

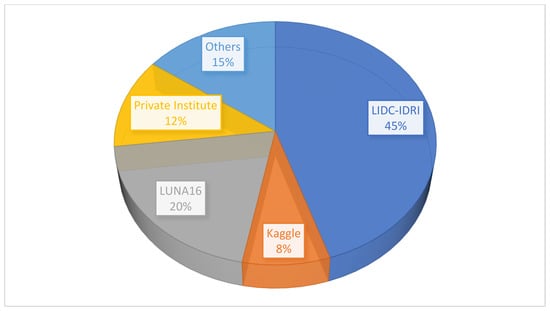

Our systematic literature review (SLR) reveals that most researchers have utilized datasets from The Cancer Imaging Archive (TCIA), a publicly available repository. Among these, the LIDC-IDRI dataset emerged as the most frequently cited, appearing in over 45% of studies, followed by LUNA16, which accounted for approximately 20% of the dataset usage (see Table 8 for a detailed breakdown of the databases referenced across studies). These datasets typically include thoracic computed tomography (CT) scans used for lung cancer screening and diagnosis, along with annotated lesions.

Private institutional datasets showed varied accessibility due to data restrictions and privacy concerns. For instance, permission to access data from Walter Cantidio University Hospital, Federal University of Ceará (UFC), Brazil, could be obtained via an online platform, whereas other private datasets (e.g., those from Shenzhen Hospital) were excluded due to unresolved permission issues, despite outreach efforts. Publicly available datasets, such as those hosted on Kaggle, were referenced and cited in about 8% of the studies. The remaining studies utilized less commonly used datasets such as RIDER, NLST, and ANODE09. Figure 9 illustrates the percentage distribution of the datasets used across the reviewed studies. Additionally, Table 9 presents references to the datasets used by researchers for detection, segmentation, and classification tasks in the selected articles.

Figure 9. Statistical distribution of the databases used in the review.

Table 9. Dataset references.

4.5. Evaluation Metrics

Researchers in medical image analysis use a wide range of performance indicators to evaluate the effectiveness of algorithms and validate their models. To compare studies comprehensively, we considered multiple metrics. These include accuracy (ACC), sensitivity (SEN), specificity (SPE), the precision–recall curve (PRC), F1-score, false positive rate (FPR), area under the receiver operating characteristic (ROC) curve (AUC), the free-response receiver operating characteristic (FROC) curve, and the Dice similarity coefficient (DSC). Below are the descriptions of some of these performance metrics:

- I. Accuracy (Acc):

Accuracy is a commonly used metric in classification tasks. It is defined as the proportion of correctly labelled instances relative to the total number of instances in the dataset. Accuracy assesses the overall correctness of the model’s predictions. The formula is as follows:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

where TP represents true positives, TN denotes true negatives, FP represents false positives, and FN denotes false negatives.

- II. Sensitivity (Recall):

Sensitivity, also known as recall or true positive rate, measures the algorithm’s ability to correctly identify actual positive instances. This metric is especially important in lung nodule detection and segmentation to minimize missed detections, and it is calculated as follows:

$$\text{Sensitivity} = \frac{TP}{TP + FN}$$

A highly sensitive model minimizes false negatives, although it may also increase false positives if it is overly inclusive.

- III. Specificity:

Specificity assesses the model’s ability to correctly identify true negative instances (non-nodules), ensuring that normal regions are not mistakenly labelled as abnormalities. It is calculated as follows:

$$\text{Specificity} = \frac{TN}{TN + FP}$$

However, a model optimized for high specificity may be overly conservative, potentially missing some true positive cases.

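- IV. F1-Score:

The F1-score is the harmonic mean of precision (positive predictive value, PPV) and sensitivity, providing a single measure that balances both false positives and false negatives. It is particularly useful when the class distribution is imbalanced. It is calculated as follows:

$$\text{F1} = \frac{2 \times \text{Precision} \times \text{Sensitivity}}{\text{Precision} + \text{Sensitivity}}, \qquad \text{Precision} = \frac{TP}{TP + FP}$$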

- V. Area Under the Curve (AUC):

The area under the curve (AUC) measures a model’s overall discriminative ability across all classification thresholds. It is often used with other metrics, such as sensitivity, specificity, and the Dice similarity coefficient (DSC), for a more comprehensive evaluation of model performance. A limitation of AUC is that it provides an aggregate measure, which may not fully capture performance at specific operating points. The AUC is calculated as follows:

$$\text{AUC} = \int_{0}^{1} \text{TPR} \; d(\text{FPR})$$

where TPR is the true positive rate (sensitivity) and FPR is the false positive rate (1 − specificity).

- VI. Receiver Operating Characteristic (ROC) Curve:

The receiver operating characteristic (ROC) curve is a visualization tool for assessing the effectiveness of diagnostic methods. It plots the false positive rate on the X-axis against sensitivity on the Y-axis across all possible cutoff values, helping to determine the optimal threshold for diagnosis. However, the ROC curve has limitations: the cutoff values that generate each point are not displayed on the curve itself, and the curve may appear jagged with small sample sizes.

- VII. Dice Similarity Coefficient (DSC):

The Dice similarity coefficient (DSC) quantifies the spatial overlap between the segmented region of interest (ROI) and the ground truth. DSC values range from 0 (no overlap) to 1 (complete overlap). Higher DSC values indicate better alignment between the model’s segmentation and the ground truth. However, DSC does not distinguish between false positives and false negatives. DSC is calculated as follows:

$$\text{DSC} = \frac{2\,|A \cap B|}{|A| + |B|} = \frac{2\,TP}{2\,TP + FP + FN}$$

where A is the predicted segmentation and B is the ground-truth segmentation.
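The metrics described in this section can be computed directly from confusion-matrix counts, binary masks, and prediction scores. The sketch below is illustrative only: it uses the standard textbook definitions, the function names are hypothetical, the AUC uses the rank-based (Mann–Whitney) formulation, which equals the area under the empirical ROC curve, and returning 1.0 for two empty masks is an assumed convention.

```python
import numpy as np


def confusion_metrics(tp, tn, fp, fn):
    """Accuracy, sensitivity, specificity, precision, and F1 from raw counts."""
    precision = tp / (tp + fp)
    sensitivity = tp / (tp + fn)
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "sensitivity": sensitivity,
        "specificity": tn / (tn + fp),
        "precision": precision,
        "f1": 2 * precision * sensitivity / (precision + sensitivity),
    }


def dice(pred_mask, true_mask):
    """Dice similarity coefficient between two binary masks."""
    pred = np.asarray(pred_mask, dtype=bool)
    true = np.asarray(true_mask, dtype=bool)
    denom = pred.sum() + true.sum()
    if denom == 0:
        return 1.0  # assumed convention: two empty masks agree perfectly
    return float(2.0 * np.logical_and(pred, true).sum() / denom)


def roc_auc(scores, labels):
    """AUC as the probability that a positive outscores a negative (ties count half)."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    pos, neg = scores[labels], scores[~labels]
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return float((greater + 0.5 * ties) / (pos.size * neg.size))


# Made-up counts and arrays, used only to exercise the functions.
m = confusion_metrics(tp=90, tn=85, fp=15, fn=10)
d = dice([[1, 1], [0, 0]], [[1, 0], [0, 0]])
auc = roc_auc([0.1, 0.4, 0.35, 0.8], [0, 0, 1, 1])
print(m, d, auc)
```

The example inputs are synthetic and do not correspond to any study in this review.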

The careful selection of these metrics is crucial, as each offers unique insight into different aspects of model performance. For instance, in nodule detection studies, accuracy often exceeds 95%, with detection sensitivity reaching as high as 98%, and the false positive rates are reported as being up to four per scan. In lung region segmentation tasks, the Dice similarity coefficient achieves values of up to 97% in two studies. Meanwhile, in the nodule classification task, the accuracy reaches as high as 99%, with sensitivity peaking at 98%. Notably, 37 studies across both detection and classification tasks employed cross-validation techniques to validate their methods, ensuring robust and reliable performance assessments.
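Because cross-validation is the most commonly reported validation approach, a minimal sketch of k-fold index splitting is given below. The function name `kfold_indices` and the choice of five folds are assumptions for illustration. For CT data, the indices would normally refer to patients rather than individual slices or nodules, so that images from one patient never appear in both the training and test folds.

```python
import numpy as np


def kfold_indices(n_samples, k, seed=0):
    """Yield (train_idx, test_idx) pairs for k-fold cross-validation."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_samples)   # shuffle once, reproducibly
    folds = np.array_split(order, k)     # k nearly equal folds
    for i in range(k):
        test_idx = folds[i]
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train_idx, test_idx


# Example: 10 hypothetical patients split into 5 folds.
splits = list(kfold_indices(n_samples=10, k=5))
for fold, (train_idx, test_idx) in enumerate(splits):
    print(fold, sorted(train_idx.tolist()), sorted(test_idx.tolist()))
```

Each sample appears in exactly one test fold, so the averaged score reflects performance on data unseen during the corresponding training run. It remains an internal estimate and does not replace external validation.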

Key Limitations:

Inconsistent Reporting: approximately 30% of studies omitted details about hyperparameters or validation protocols.

Overfitting Risks: high accuracy (e.g., 99%) was often derived from single-centre data, raising concerns about generalizability.

5. Discussion

Lung cancer has gained considerable attention in the medical field due to its widespread impact, resulting in a high global mortality rate. This disease is particularly challenging to diagnose early because it often remains asymptomatic in its initial stages, and pulmonary nodules can resemble other benign lung conditions. The swift identification of these nodules is crucial for early treatment, which significantly improves patient outcomes. Extensive lung CT screening is essential to this process, providing non-invasive methods for detecting abnormalities.

This review synthesizes advancements in deep learning (DL) for lung cancer diagnosis via CT imaging. Our systematic study examines 80 publications selected from an initial pool of 1448 gathered from reputable journal databases. The selected articles showcase various contributions to the field, with publication output peaking in 2023. This analysis assesses algorithmic performance at the different stages of lung cancer diagnosis: detection, segmentation, and classification. It compares key features of relevant studies, providing valuable insights into the applications of deep learning (DL) in medical image analysis.

Our review of deep learning (DL) techniques, design patterns, and their purposes shows that convolutional neural networks (CNNs) have dominated the field. CNNs excel in image analysis due to their ability to capture spatial hierarchies in data, achieving an accuracy of ≥90% and sensitivity of ≥85% across detection, segmentation, and classification tasks. Hybrid architectures, such as Faster R-CNN, ResNet, and U-Net, along with innovative attention mechanisms, further enhance performance. The top-performing models reach 98.7% accuracy and 98.2% sensitivity. Public datasets such as LIDC-IDRI (used in 45% of studies) and LUNA16 (20%) remain pivotal for model development. However, heavy reliance on these datasets risks overfitting to their inherent biases, potentially limiting generalizability.

Key Findings, Limitations, and Future Directions

Research on lung cancer diagnosis using artificial intelligence (AI) has been ongoing for over a decade, yielding encouraging results. Despite these advancements, several challenges persist that must be addressed to fully realize AI’s potential in this domain.

- Limitations:

Although CNNs have proven successful in medical image processing, studies suggest that unique design and architecture alone cannot overcome all obstacles. Current diagnostic techniques for lung cancer detection rely on visually identifiable abnormalities observed in computed tomography (CT) scans. However, CT scanner capabilities, such as resolution and contrast enhancement, vary significantly worldwide. Some scanners lack these advanced features, leading to inconsistencies in the quality of patients’ CT slices. This variability may critically impede the development of reliable automated systems.

From a clinical perspective, multiple obstacles hinder the development of computer-aided diagnosis (CAD) systems. These challenges include:

1. Data variability: Heterogeneity exists in imaging protocols (e.g., slice thickness, contrast usage), annotation standards (manual vs. automated), and nodule characteristics, which limits generalizability. Only 25% of studies validated models on multicentre cohorts, underscoring this issue.

2. Data diversity: Most datasets lack demographic diversity (e.g., age, ethnicity, sex) and multimodal data (e.g., pathology, genomics), restricting their clinical applicability. Including diverse populations is essential to ensure equitable model performance across groups.

3. Challenges in pulmonary nodule segmentation: Structural similarities in lung tissues, such as those between nodules and blood vessels or other anatomical features, pose significant challenges even to advanced deep learning models, including 3D-Unet and transformer-based architectures. Additionally, computational complexity hinders real-world deployment.

4. Classification: While binary classification (benign vs. malignant) achieved 99% accuracy, staging and severity grading remain understudied, which limits comprehensive diagnosis.

5. Explainability: The “black box” nature of DL models erodes clinician trust. Fewer than 10% of studies have incorporated explainable AI frameworks to address this issue.

6. Validation gaps: Only 37 of the 80 reviewed studies used external validation, raising concerns about reproducibility in diverse settings.

7. Reporting bias assessment: Reporting bias was assessed by evaluating the completeness of reported outcomes across studies. Heterogeneity in dataset sources (e.g., LIDC-IDRI vs. private datasets) and limited validation in multicentre settings suggest potential bias toward optimistic performance metrics.

- Language Exclusion Bias:

The exclusion of 20 non-English studies may have introduced selection bias, as relevant research published in languages other than English (e.g., Chinese, Japanese, Portuguese) was not included due to time constraints and a lack of resources for translation into English. While prioritising English-language databases was feasible, this restriction may limit the generalizability of findings to non-English-speaking populations. Future reviews should incorporate multilingual searches or utilize translation tools to mitigate this bias.

- Future Directions:

To address these limitations, we propose the following:

- Curating multimodal, multicentre datasets: We recommend collaborating globally to build datasets with diverse demographics (e.g., age, ethnicity, sex), imaging protocols (slice thickness: 1–5 mm), and cancer stages (I–IV) for public research use. Additionally, we recommend utilizing federated learning (FL) for privacy-preserving data sharing. For example, Liu et al. (2023) [58] demonstrated the efficacy of federated learning with a 3D ResNet18 model, achieving accuracy comparable to centralized training while preserving patient data privacy across institutions. This approach allows hospitals to train models collaboratively without sharing raw data, a vital feature for complying with regulations such as the European Union (EU)’s General Data Protection Regulation (GDPR), which protects the personal data of individuals in the EU and the European Economic Area (EEA) regardless of where the data are processed, and the US Health Insurance Portability and Accountability Act (HIPAA), which protects healthcare information and patient privacy.

- Develop lightweight, efficient models: To address computational constraints and privacy concerns, developing a lightweight architecture, such as MobileNetV3 or EfficientNet-Lite, for edge deployment is crucial. Lightweight models reduce computational overhead through techniques like pruning (removing redundant neurons), quantization (reducing numerical precision), and knowledge distillation (training compact models to mimic larger ones).

- Expand beyond binary classification: Moving beyond binary classification to multi-class staging (e.g., NSCLC stages I–IV) and histological subtyping (adenocarcinoma vs. squamous cell carcinoma) is crucial for personalized treatment, thereby improving diagnostic granularity. For example, Chang et al. (2024) [66] used a multiview residual network to classify nodule malignancy but did not address staging. We also recommend leveraging federated datasets, such as the Decathlon challenge [90], to pool multi-institutional staging data.

- Ensure real-world validation with explainability: We recommend validating models on real-world datasets with artefacts (e.g., NSCLC-Radiomics). We also recommend embedding explainability tools, such as Gradient-weighted Class Activation Mapping (Grad-CAM) and Local Interpretable Model-agnostic Explanations (LIME), into the clinical workflow to build trust.

- Standardize annotation and reporting: We recommend establishing consensus guidelines for nodule labelling (e.g., spatial overlap thresholds), leveraging semi-automated tools to reduce inter-observer variability, and adopting a reporting standard such as DECIDE-AI for transparency.
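The federated learning recommendation above can be illustrated with a minimal sketch of the aggregation step, in which a server combines model parameters trained locally at each hospital without ever receiving the CT data. The sketch assumes federated averaging (FedAvg), a widely used aggregation scheme. The review does not state which scheme Liu et al. [58] used, and the function name, toy parameters, and client sizes are hypothetical.

```python
import numpy as np


def federated_average(client_weights, client_sizes):
    """Average per-client parameter lists, weighted by local dataset size."""
    total = float(sum(client_sizes))
    n_layers = len(client_weights[0])
    averaged = []
    for layer in range(n_layers):
        acc = np.zeros_like(client_weights[0][layer], dtype=float)
        for weights, size in zip(client_weights, client_sizes):
            # Clients with more local scans contribute proportionally more.
            acc += (size / total) * weights[layer]
        averaged.append(acc)
    return averaged


# Two hypothetical hospitals, each holding one "layer" of toy parameters.
hospital_a = [np.array([1.0, 1.0])]
hospital_b = [np.array([3.0, 3.0])]
global_weights = federated_average([hospital_a, hospital_b], client_sizes=[1, 3])
print(global_weights[0])
```

Only the parameter arrays leave each site in this scheme. A real deployment would add secure communication and repeat local training and aggregation over many rounds.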

6. Conclusions

Due to its growing prevalence as a leading cause of mortality worldwide, lung cancer remains a significant public health issue. Late-stage diagnosis contributes to poor outcomes, making it crucial to develop effective methods for its early detection and treatment. Integrating deep learning (DL) with computed tomography (CT) imaging has shown promise in enhancing diagnostic accuracy and refining the diagnosis of lung cancer.

To comprehensively assess deep learning (DL) techniques in lung cancer detection, segmentation, and classification, this systematic review evaluates 80 studies published between 2015 and 2024. It assesses performance metrics and analyzes the approaches applied in deep learning (DL) applications for lung cancer diagnosis using computed tomography (CT) images. Significant progress has been made, with nodule detection achieving a sensitivity of 95–99%, segmentation yielding a 97% Dice similarity coefficient, and classification attaining an accuracy of 99.6%. These advancements highlight deep learning’s capability to streamline workflows, reduce radiologists’ workload, and enhance early detection. However, despite these successes, significant gaps persist, limiting the translation of theoretical innovations into reliable clinical tools. Below, we elaborate on these challenges and gaps, supported by evidence from the reviewed studies, and propose actionable solutions for future research. These include the following:

- Limited generalizability due to dataset heterogeneity: While public datasets, such as LIDC-IDRI and LUNA16, dominate deep learning research (used in 45% and 20% of the studies, respectively), they lack diversity in terms of demographics, imaging protocols, and scanner specifications. For instance, LUNA16 primarily includes Western populations, with limited representation of Asian or African cohorts, potentially biasing models towards specific ethnic groups. Furthermore, only 15% of studies validated their models on multicenter datasets, which can lead to overfitting. For example, models trained on LIDC-IDRI achieved 98% accuracy, but this dropped to 76% when tested on private datasets, such as those from Walter Cantidio University Hospital (UFC), Brazil, due to differences in slice thickness and contrast protocols. This heterogeneity undermines model robustness in real-world settings, where CT scanners and patient populations exhibit significant variations.

- Lack of standardization in annotation practices: Annotation variability, including inter-radiologist disagreement and differences between manual and automated labeling, introduces systematic bias. For example, in the LIDC-IDRI dataset, nodule boundaries annotated by four radiologists exhibited a 20–30% variance in spatial overlap metrics, with Dice scores ranging from 0.65 to 0.85. Such inconsistencies propagate into model training, as seen in segmentation studies, where U-Net variants achieved a 92% Dice coefficient on the LIDC-IDRI dataset but only 78% on the Decathlon datasets, due to divergent annotation criteria. Additionally, fewer than 10% of studies disclosed annotation guidelines, complicating reproducibility.

- Neglect of cancer staging and subtype classifications: While binary classification (benign vs. malignant) dominates deep learning research (60% of studies), staging and subtype differentiation (e.g., adenocarcinoma vs. squamous cell carcinoma) remain understudied. Only 5% of the reviewed works addressed NSCLC subtyping, despite its clinical relevance for personalized therapy. For instance, Huang et al. (2022) achieved 94.06% AUC for malignancy detection and classification [102] but did not predict stages (I–IV) or metastatic potential. This gap limits clinical utility, as treatment plans rely heavily on stage-specific protocols.

- Lack of transparency and explainability: The black box nature of deep learning models, particularly vision transformers (ViTs), erodes clinician trust. Only 8% of studies incorporated explainability techniques, such as Grad-CAM [113], LIME or SHAP [72]. For example, Gai et al. (2024) [54] reported that ViTs outperform CNNs in capturing global context, but they offered no visual explanations for nodule localization. In contrast, the CNN-based model provided interpretable feature maps but lacked the ViT’s long-range dependency modelling. Bridging this gap is critical for clinical adoption, as radiologists require transparent decision pathways to validate AI outputs.

Deep learning holds immense promise for revolutionizing lung cancer diagnosis, but its clinical translation hinges on addressing challenges related to reproducibility, standardization, and transparency. By fostering interdisciplinary collaboration and prioritizing real-world validation, the research community can bridge the gap between algorithmic innovation and actionable clinical tools, ultimately reducing mortality and healthcare costs.

Author Contributions

Conceptualization, K.A. and K.R.; writing—original draft preparation, K.A.; writing—review and editing, K.A., K.R. and A.B.A.; supervision, K.R. and A.B.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Informed Consent Statement

Not applicable, as the work is carried out on a publicly accessible dataset, while the private dataset from Walter Cantidio Hospital University, Caera Brazil (UFC) can be accessed via online version at https://doi.org/10.1016/j.artmed.2020.101792 (accessed on 23 November 2023).

Data Availability Statement

The data presented in the study findings are publicly accessible via their websites for scholarly use. LUNA 16 dataset: (http://luna.grand-challenge.org/), https://doi.org/10.1007/s00330-015-4030-7; LIDC-IDRI: (https://wiki.cancerimagingarchive.net/display/NBIA/Downloading+TCIA+Images), https://doi.org/10.1118/1.352820 and https://doi.org/10.1148/radiol.2323032035; RIDER Lung CT datasets: (https://www.cancerimagingarchive.net/collection/rider-lung-ct/) https://doi.org/10.7937/k9/tcia.2015.u1x8a5nr; LNDb2020 dataset: (https://lndb.grand-challenge.org/Data/ and https://zenodo.org/records/6613714) https://doi.org/10.1016/j.media.2021.102027; ANODE09 dataset (https://anode09.grand-challenge.org/Details/); Lung CT Segmentation Challenge: (https://www.cancerimagingarchive.net/collection/lctsc/) https://doi.org/10.7937/K9/TCIA.2017.3R3FVZ08; IQ-OTHNCCD dataset: (https://www.kaggle.com/datasets/hamdallak/the-iqothnccd-lung-cancer-dataset); Walter Cantidio Hospital University Caera Brazil (UFC) online version: (https://doi.org/10.1016/j.artmed.2020.101792); SIMBA Public Database repository: access via ELCAP SIMBA (http://www.via.cornell.edu/lungdb.html); Decathlon dataset: obtained from the Decathlon Challenge (http://medicaldecathlon.com).

Acknowledgments

The authors gratefully acknowledge the Research Management Centre (RMC) at Multimedia University Malaysia (MMU) for their generous support in covering the journal publication fees.

Conflicts of Interest

The authors declare no competing interests.

References

- Sung, H.; Ferlay, J.; Siegel, R.L.; Laversanne, M.; Soerjomataram, I.; Jemal, A.; Bray, F. Global Cancer Statistics 2020: GLOBOCAN Estimates of Incidence and Mortality Worldwide for 36 Cancers in 185 Countries. CA Cancer J. Clin. 2021, 71, 209–249.

- Shatnawi, M.Q.; Abuein, Q.; Al-Quraan, R. Deep learning-based approach to diagnose lung cancer using CT-scan images. Intell. Based Med. 2025, 11, 10188.

- Siegel, R.L.; Miller, K.D.; Wagle, N.S.; Jemal, A. Cancer statistics, 2023. CA Cancer J. Clin. 2023, 73, 17–48.

- Bray, F.; Laversanne, M.; Sung, H.; Ferlay, J.; Siegel, R.L.; Soerjomataram, I.; Jemal, A. Global cancer statistics 2022: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA Cancer J. Clin. 2024, 74, 229–263.

- Wolf, A.M.D.; Oeffinger, K.C.; Shih, T.Y.; Walter, L.C.; Church, T.R.; Fontham, E.T.H.; Elkin, E.B.; Etzioni, R.D.; Guerra, C.E.; Perkins, R.B.; et al. Screening for lung cancer: 2023 guideline update from the American Cancer Society. CA Cancer J. Clin. 2024, 74, 50–81.

- Xia, C.; Dong, X.; Li, H.; Cao, M.; Sun, D.; He, S.; Yang, F.; Yan, X.; Zhang, S.; Li, N.; et al. Cancer statistics in China and United States, 2022: Profiles, trends, and determinants. Chin. Med. J. 2022, 135, 584.

- Monkam, P.; Qi, S.; Ma, H.; Gao, W.; Yao, Y.; Qian, W. Detection and Classification of Pulmonary Nodules Using Convolutional Neural Networks: A Survey. IEEE Access 2019, 7, 78075–78091.

- Chen, S.; Cao, Z.; Prettner, K.; Kuhn, M.; Yang, J.; Jiao, L.; Wang, Z.; Li, W.; Geldsetzer, P.; Bärnighausen, T.; et al. Estimates and Projections of the Global Economic Cost of 29 Cancers in 204 Countries and Territories from 2020 to 2050. JAMA Oncol. 2023, 9, 465–472.

- Erefai, O.; Soulaymani, A.; Mokhtari, A.; Obtel, M.; Hami, H. Diagnostic delay in lung cancer in Morocco: A 4-year retrospective study. Clin. Epidemiol. Glob. Health 2022, 16, 101105.

- Ambrosini, V.; Nicolini, S.; Caroli, P.; Nanni, C.; Massaro, A.; Marzola, M.C.; Rubello, D.; Fanti, S. PET/CT imaging in different types of lung cancer: An overview. Eur. J. Radiol. 2012, 81, 988–1001.

- Mahmud, S.H.; Soesanti, I.; Hartanto, R. Deep Learning Techniques for Lung Cancer Detection: A Systematic Literature Review. In Proceedings of the 2023 6th International Conference on Information and Communications Technology, ICOIACT 2023, Yogyakarta, Indonesia, 10 November 2023; pp. 200–205.

- Kvale, P.A.; Johnson, C.C.; Tammemägi, M.; Marcus, P.M.; Zylak, C.J.; Spizarny, D.L.; Hocking, W.; Oken, M.; Commins, J.; Ragard, L.; et al. Interval lung cancers not detected on screening chest X-rays: How are they different? Lung Cancer 2014, 86, 41–46.

- Silva, F.; Pereira, T.; Neves, I.; Morgado, J.; Freitas, C.; Malafaia, M.; Sousa, J.; Fonseca, J.; Negrão, E.; de Lima, B.F.; et al. Towards Machine Learning-Aided Lung Cancer Clinical Routines: Approaches and Open Challenges. J. Pers. Med. 2022, 12, 480.

- Brisbane, W.; Bailey, M.R.; Sorensen, M.D. An overview of kidney stone imaging techniques. Nat. Rev. Urol. 2016, 13, 654–662.

- Journy, N.; Rehel, J.-L.; Le Pointe, H.D.; Lee, C.; Brisse, H.; Chateil, J.-F.; Caer-Lorho, S.; Laurier, D.; Bernier, M.-O. Are the studies on cancer risk from CT scans biased by indication? Elements of answer from a large-scale cohort study in France. Br. J. Cancer 2015, 112, 185–193.

- Yang, W.; Zhang, H.; Yang, J.; Wu, J.; Yin, X.; Chen, Y.; Shu, H.; Luo, L.; Coatrieux, G.; Gui, Z.; et al. Improving Low-Dose CT Image Using Residual Convolutional Network. IEEE Access 2017, 5, 24698–24705.

- Li, R.; Xiao, C.; Huang, Y.; Hassan, H.; Huang, B. Deep Learning Applications in Computed Tomography Images for Pulmonary Nodule Detection and Diagnosis: A Review. Diagnostics 2022, 12, 298.

- Forte, G.C.; Altmayer, S.; Silva, R.F.; Stefani, M.T.; Libermann, L.L.; Cavion, C.C.; Youssef, A.; Forghani, R.; King, J.; Mohamed, T.-L.; et al. Deep Learning Algorithms for Diagnosis of Lung Cancer: A Systematic Review and Meta-Analysis. Cancers 2022, 14, 3856.

- Hosseini, S.H.; Monsefi, R.; Shadroo, S. Deep learning applications for lung cancer diagnosis: A systematic review. Multimed. Tools Appl. 2024, 83, 14305–14335. [Google Scholar] [CrossRef]

- Dodia, S.; Annappa, B.; Mahesh, P.A. Recent advancements in deep learning-based lung cancer detection: A systematic review. Eng. Appl. Artificial. Intell. 2022, 116, 105490. [Google Scholar] [CrossRef]

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, 71. [Google Scholar] [CrossRef]

- Giger, M.L. Machine learning in medical imaging. J. Am. Coll. Radiol. 2018, 15, 512–520. [Google Scholar] [CrossRef] [PubMed]

- Lo, S.-C.; Lou, S.-L.; Lin, J.-S.; Freedman, M.; Chien, M.; Mun, S. Artificial Convolution Neural Network Techniques and Applications for Lung Nodule Detection. IEEE Trans. Med. Imaging 1995, 14, 711–718. [Google Scholar] [CrossRef] [PubMed]

- Litjens, G.; Kooi, T.; Bejnordi, B.E.; Setio, A.A.A.; Ciompi, F.; Ghafoorian, M.; van der Laak, J.A.W.M.; van Ginneken, B.; Sánchez, C.I. A survey on deep learning in medical image analysis. Med. Image Anal. 2017, 42, 60–88. [Google Scholar] [CrossRef] [PubMed]

- SPan, S.J.; Yang, Q. A survey on transfer learning. IEEE Trans. Knowl. Data Eng. 2010, 22, 1345–1359. [Google Scholar] [CrossRef]

- Shin, H.C.; Roth, H.R.; Gao, M.; Lu, L.; Xu, Z.; Nogues, I.; Yao, J.; Mollura, D.; Summers, R.M. Deep convolutional neural networks for computer-aided detection: CNN architectures, dataset characteristics and transfer learning. IEEE Trans. Med. Imaging 2016, 35, 1285–1298. [Google Scholar] [CrossRef]

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Jian, S. Deep residual learning for image recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; Available online: http://openaccess.thecvf.com/content_cvpr_2016/html/He_Deep_Residual_Learning_CVPR_2016_paper.html (accessed on 20 September 2024).

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017, Honolulu, HI, USA, 21–26 July 2017; pp. 2261–2269. [Google Scholar] [CrossRef]

- Bhattacharjee, A.; Rabea, S.; Bhattacharjee, A.; Elkaeed, E.B.; Murugan, R.; Selim, H.M.R.M.; Sahu, R.K.; Shazly, G.A.; Bekhit, M.M.S. A multi-class deep learning model for early lung cancer and chronic kidney disease detection using computed tomography images. Front. Oncol. 2023, 13, 1193746. [Google Scholar] [CrossRef]

- Ching, T.; Himmelstein, D.S.; Beaulieu-Jones, B.K.; Kalinin, A.A.; Do, B.T.; Way, G.P.; Ferrero, E.; Agapow, P.-M.; Zietz, M.; Hoffman, M.M.; et al. Opportunities and obstacles for deep learning in biology and medicine. J. R. Soc. Interface 2018, 15, 1520170387. [Google Scholar] [CrossRef]

- Hosny, A.; Parmar, C.; Quackenbush, J.; Schwartz, L.H.; Aerts, H.J.W.L. Artificial intelligence in radiology. Nat. Rev. Cancer 2018, 18, 500–510. [Google Scholar] [CrossRef]

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16 × 16 Words: Transformers for Image Recognition at Scale. arXiv 2020, arXiv:2010.11929. [Google Scholar]

- Xia, K.; Wang, J. Recent advances of Transformers in medical image analysis: A comprehensive review. MedComm–Future Med. 2023, 2, e38. [Google Scholar] [CrossRef]

- Chen, J.; Lu, Y.; Yu, Q.; Luo, X.; Adeli, E.; Wang, Y.; Lu, L.; Yuille, A.L.; Zhou, Y. Transunet: Transformers make strong encoders for medical image segmentation. arXiv 2021, arXiv:2102.04306. [Google Scholar]

- Gu, Y.; Chi, J.; Liu, J.; Yang, L.; Zhang, B.; Yu, D.; Zhao, Y.; Lu, X. A survey of computer-aided diagnosis of lung nodules from CT scans using deep learning. Comput. Biol. Med. 2021, 137, 104806. [Google Scholar] [CrossRef] [PubMed]

- Ker, J.; Wang, L.; Rao, J.; Lim, T. Special Section on Soft Computing Techniques for Image Analysis in the Medical Industry Current Trends, Challenges and Solutions Deep Learning Applications in Medical Image Analysis. IEEE Access 2017, 6, 9375–9389. [Google Scholar] [CrossRef]

- Lecun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. IEEE 1998, 86, 2278–2324. [Google Scholar] [CrossRef]

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; pp. 234–241. [Google Scholar]

- Xu, W.; Fu, Y.L.; Zhu, D. ResNet and its application to medical image processing: Research progress and challenges. Comput. Methods Programs Biomed. 2023, 240, 107660. [Google Scholar] [CrossRef]

- Durga Bhavani, K.; Ferni Ukrit, M. Design of inception with deep convolutional neural network based fall detection and classification model. Multimed. Tools Appl. 2024, 83, 23799–23817. [Google Scholar] [CrossRef]

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications. arXiv 2017, arXiv:1704.04861. [Google Scholar]

- Chollet, F. Xception: Deep Learning with Depthwise Separable Convolutions. In Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017. [Google Scholar]

- Zhao, X.; Wang, L.; Zhang, Y.; Han, X.; Deveci, M.; Parmar, M. A review of convolutional neural networks in computer vision. Artif. Intell. Rev. 2024, 57, 99. [Google Scholar] [CrossRef]

- Naseer, I.; Akram, S.; Masood, T.; Jaffar, A.; Khan, M.A.; Mosavi, A. Performance Analysis of State-of-the-Art CNN Architectures for LUNA16. Sensors 2022, 22, 4426. [Google Scholar] [CrossRef]

- Pang, S.; Meng, F.; Wang, X.; Wang, J.; Song, T.; Wang, X.; Cheng, X. VGG16-T: A novel deep convolutional neural network with boosting to identify pathological type of lung cancer in early stage by CT images. Int. J. Comput. Intell. Syst. 2020, 13, 771–780. [Google Scholar] [CrossRef]

- Xie, S.; Girshick, R.; Dollár, P.; Tu, Z.; He, K. Aggregated residual transformations for deep neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, NI, USA, 21–26 July 2017; pp. 1492–1500. [Google Scholar]

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1–9. [Google Scholar]

- Lakshmana Prabu, S.K.; Mohanty, S.N.; Shankar, K.; Arunkumar, N.; Ramirez, G. Optimal deep learning model for classification of lung cancer on CT images. Future Gener. Comput. Syst. 2019, 92, 374–382. [Google Scholar] [CrossRef]

- Teramoto, A.; Fujita, H. Fast lung nodule detection in chest CT images using cylindrical nodule-enhancement filter. Int. J. Comput. Assist. Radiol. Surg. 2013, 8, 193–205. [Google Scholar] [CrossRef] [PubMed]

- Sebastian, A.E.; Dua, D. Lung Nodule Detection via Optimized Convolutional Neural Network: Impact of Improved Moth Flame Algorithm. Sens. Imaging 2023, 24, 11. [Google Scholar] [CrossRef]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the 31st Annual Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017. [Google Scholar]

- Chitty-Venkata, K.T.; Mittal, S.; Emani, M.; Vishwanath, V.; Somani, A.K. A survey of techniques for optimizing transformer inference. Med. Image Anal. 2023, 88, 102802. [Google Scholar] [CrossRef]

- Gai, L.; Xing, M.; Chen, W.; Zhang, Y.; Qiao, X. Comparing CNN-based and transformer-based models for identifying lung cancer: Which is more effective? Multimed. Tools Appl. 2024, 83, 59253–59269. [Google Scholar] [CrossRef]

- Zhu, W.; Liu, C.; Fan, W.; Xie, X. Deep Lung: Deep 3D dual path nets for automated pulmonary nodule detection and classification. In Proceedings of the 2018 IEEE Winter Conference on Applications of Computer Vision, WACV 2018, Lake Tahoe, NV, USA, 12–15 March 2018; pp. 673–681. [Google Scholar] [CrossRef]

- Gu, Y.; Lu, X.; Yang, L.; Zhang, B.; Yu, D.; Zhao, Y.; Gao, L.; Wu, L.; Zhou, T. Automatic lung nodule detection using a 3D deep convolutional neural network combined with a multi-scale prediction strategy in chest CTs. Comput. Biol. Med. 2018, 103, 220–231. [Google Scholar] [CrossRef]

- Shelhamer, E.; Long, J.; Darrell, T. Fully convolutional networks for semantic segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 640–651. [Google Scholar] [CrossRef]

- Liu, L.; Fan, K.; Yang, M. Federated learning: A deep learning model based on ResNet18 dual path for lung nodule detection. Multimed. Tools Appl. 2023, 82, 17437–17450. [Google Scholar] [CrossRef]

- Fu, B.; Peng, Y.; He, J.; Tian, C.; Sun, X.; Wang, R. HmsU-Net: A hybrid multi-scale U-net based on a CNN and transformer for medical image segmentation. Comput. Biol. Med. 2024, 170, 108013. [Google Scholar] [CrossRef]

- Jiang, H.; Ma, H.; Qian, W.; Gao, M.; Li, Y. An Automatic Detection System of Lung Nodule Based on Multigroup Patch-Based Deep Learning Network. IEEE J. Biomed. Health Inform. 2018, 22, 1227–1237. [Google Scholar] [CrossRef] [PubMed]

- Riaz, Z.; Khan, B.; Abdullah, S.; Khan, S.; Islam, S. Lung Tumor Image Segmentation from Computer Tomography Images Using MobileNetV2 and Transfer Learning. Bioengineering 2023, 10, 981. [Google Scholar] [CrossRef] [PubMed]

- Lu, Y.; Fan, X.; Wang, J.; Chen, S.; Meng, J. ParaU-Net: An improved UNet parallel coding network for lung nodule segmentation. J. King Saud. Univ.—Comput. Inf. Sci. 2024, 36, 102203. [Google Scholar] [CrossRef]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90. [Google Scholar] [CrossRef]

- Shen, H.; Chen, L.; Liu, K.; Zhao, K.; Li, J.; Yu, L.; Ye, H.; Zhu, W. A subregion-based positron emission tomography/computed tomography (PET/CT) radiomics model for the classification of non-small cell lung cancer histopathological subtypes. Quant. Imaging Med. Surg. 2021, 11, 2918. [Google Scholar] [CrossRef]

- Nasrullah, N.; Sang, J.; Alam, M.S.; Mateen, M.; Cai, B.; Hu, H. Automated lung nodule detection and classification using deep learning combined with multiple strategies. Sensors 2019, 19, 3722. [Google Scholar] [CrossRef]

- Chang, H.-H.; Wu, C.-Z.; Gallogly, A.H. Pulmonary Nodule Classification Using a Multiview Residual Selective Kernel Network. J. Imaging Inform. Med. 2024, 37, 347–362. [Google Scholar] [CrossRef]

- Zhang, G.; Yang, Z.; Gong, L.; Jiang, S.; Wang, L.; Zhang, H. Classification of lung nodules based on CT images using squeeze-and-excitation network and aggregated residual transformations. Radiol. Med. 2020, 125, 374–383. [Google Scholar] [CrossRef]

- Shen, W.; Zhou, M.; Yang, F.; Yu, D.; Dong, D.; Yang, C.; Zang, Y.; Tian, J. Multi-crop convolutional neural networks for lung nodule malignancy suspiciousness classification. Pattern Recognit. 2017, 61, 663–673. [Google Scholar] [CrossRef]

- Zhao, X.; Qi, S.; Zhang, B.; Ma, H.; Qian, W.; Yao, Y.; Sun, J. Deep CNN models for pulmonary nodule classification: Model modification, model integration, and transfer learning. J. X-Ray Sci. Technol. 2019, 27, 615–629. [Google Scholar] [CrossRef]

- Huidrom, R.; Chanu, Y.J.; Singh, K.M. Neuro-evolutional based computer aided detection system on computed tomography for the early detection of lung cancer. Multimed. Tools Appl. 2022, 81, 32661–32673. [Google Scholar] [CrossRef]