Integrating Bayesian Knowledge Tracing and Human Plausible Reasoning in an Adaptive Augmented Reality System for Spatial Skill Development

Abstract

1. Introduction

1.1. Rationale for Using AR to Enhance Spatial Skills

1.2. Role of BKT and HPR

1.3. Research Questions and Objectives

- How accurately can the integrated BKT-HPR model diagnose skill mastery and cognitive strategies in real time during AR-based spatial tasks? (RQ1)

- What impact does the integrated adaptive system have on learners’ overall spatial reasoning and problem-solving approaches compared to a non-adaptive AR environment? (RQ2)

- What are learners’ perceptions regarding the adaptivity, feedback quality, and strategy support offered by the integrated BKT-HPR augmented reality system? (RQ3)

2. Background and Related Work

2.1. Overview of Spatial Skill Development Strategies

2.2. Prior Work on BKT for Adaptive Learning Systems

2.3. Applications of HPR in Educational Contexts

2.4. Existing AR Approaches for Spatial Training

3. Conceptual Framework

3.1. Explanation of the BKT Model for Skill Acquisition

- Initial knowledge (p_init): the probability that a learner already knows the skill before engaging in any tasks;

- Learning rate (p_learn): the probability that a learner will acquire the skill after an instructional or practice opportunity;

- Guess probability (p_guess): the likelihood that a learner responds correctly despite not having mastered the skill;

- Slip probability (p_slip): the likelihood that a learner responds incorrectly despite having mastered the skill.
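
The four parameters above combine in the standard BKT update: a Bayesian posterior over mastery given the observed response, followed by a learning transition. The following is a minimal sketch of that textbook update, not the system's actual code; the names `BKTParams` and `bkt_update` are hypothetical, and the default values mirror the initial settings reported in Section 4.1.

```python
from dataclasses import dataclass


@dataclass
class BKTParams:
    # Defaults follow the initial values reported in Section 4.1.
    p_init: float = 0.25   # initial knowledge
    p_learn: float = 0.15  # learning rate
    p_guess: float = 0.20  # guess probability
    p_slip: float = 0.10   # slip probability


def bkt_update(p_mastery: float, correct: bool, params: BKTParams) -> float:
    """Return the mastery probability after one observed sub-step."""
    if correct:
        # Correct answer: either the skill is known and no slip occurred,
        # or it is unknown and the learner guessed.
        known = p_mastery * (1.0 - params.p_slip)
        unknown = (1.0 - p_mastery) * params.p_guess
    else:
        # Incorrect answer: either a slip, or unknown skill and no lucky guess.
        known = p_mastery * params.p_slip
        unknown = (1.0 - p_mastery) * (1.0 - params.p_guess)
    posterior = known / (known + unknown)
    # Learning transition: an unmastered skill may be acquired on this step.
    return posterior + (1.0 - posterior) * params.p_learn


params = BKTParams()
p = params.p_init
for outcome in [True, False, True]:  # illustrative response sequence
    p = bkt_update(p, outcome, params)
print(round(p, 3))
```

Because the guess and slip terms enter the posterior directly, a correct response raises the estimate less when guessing is likely, and an incorrect response lowers it less when slips are likely.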

3.2. Explanation of HPR for Interpreting Learner Strategies

3.3. Illustration of How These Two Models Complement Each Other

4. System Design and Implementation

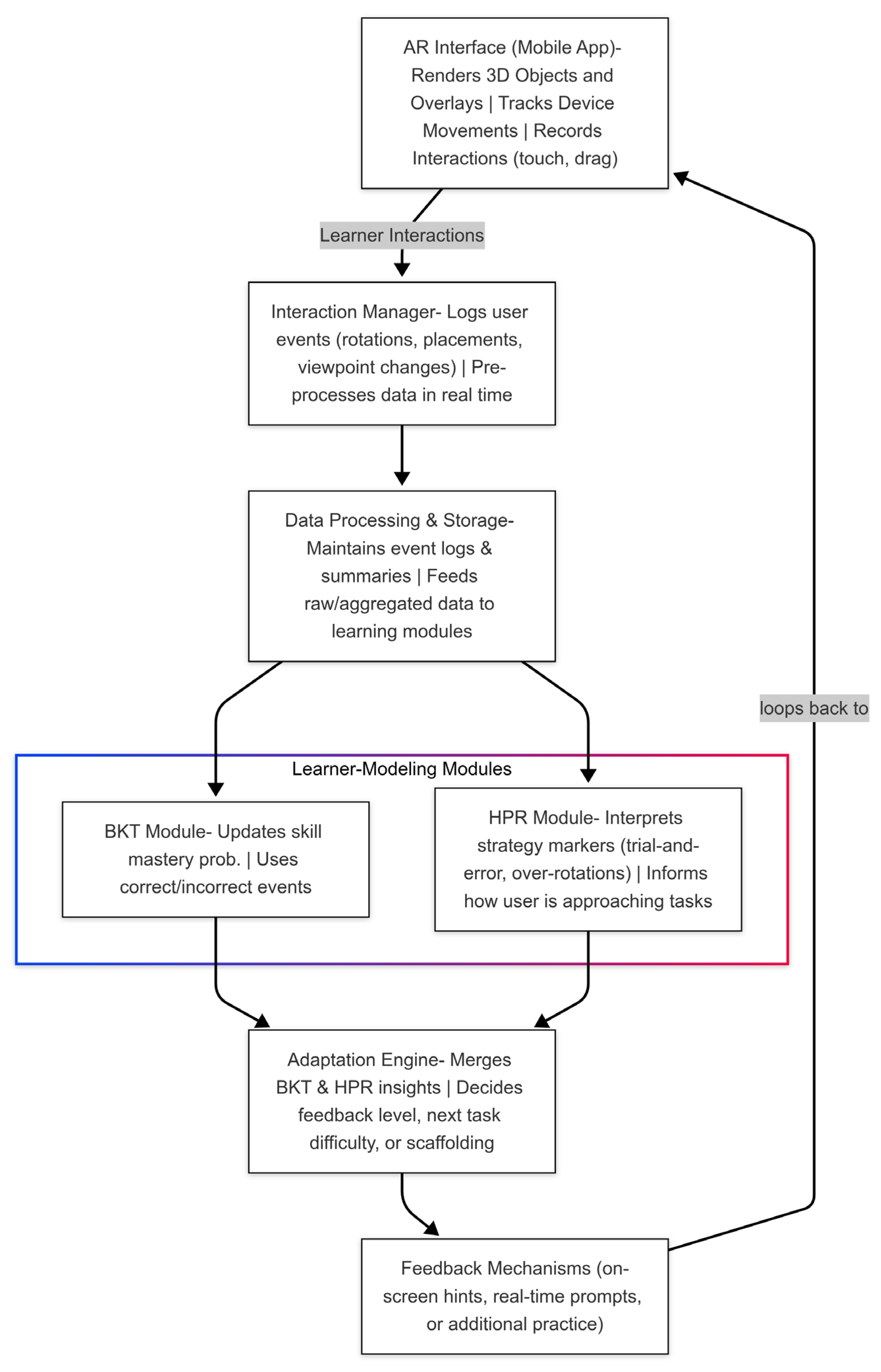

4.1. System Architecture and Workflow

- Client-Side AR Interface: The client application, running on a mobile device or a head-mounted display, captures the learner’s real-world environment via the camera. Virtual 3D elements are rendered over this feed, allowing users to manipulate digital objects in real time. The AR application was developed using the Unity 3D engine in combination with AR Foundation and ARCore (for Android devices), enabling real-time environment tracking, gesture-based manipulation, and dynamic object rendering within the learner’s physical surroundings. The interface supports device orientation sensing and allows seamless overlay of 3D models that respond to user interaction, such as touch-based rotation or drag-and-drop assembly. This technical foundation ensured that the AR environment could support fine-grained interaction monitoring necessary for adaptive reasoning.

- Interaction Manager: On the client side, an interaction manager module logs every user action (object moves, rotations, success/failure messages, and other relevant events). When a user attempts to assemble a 3D object, the manager tracks each manipulation: how the user rotates or repositions the elements, how often they request hints, and the duration of each sub-step. These data points form the raw input for the adaptive engine. The interaction manager typically pre-processes these data (e.g., time-stamping events, compressing repeated actions) before sending them to the central server.

- Server-Side Learning Analytics: While some AR applications can function in an entirely local mode, a server-side analytics component is advantageous when training multiple learners or collecting large-scale data. The server ingests the event streams and applies advanced algorithms, including BKT for estimating skill mastery and HPR for identifying strategy patterns. This centralized approach allows for integrated monitoring of user progress across multiple tasks and sessions.

- BKT Module: The system models learner knowledge with BKT, which estimates in real time the probability that a learner has mastered each spatial skill, based on observed correct and incorrect responses. The module requires precise logs of correct and incorrect attempts, the error and guess probabilities, and the current mastery estimate for each spatial skill. Skills such as “mental rotation”, “assembly of objects”, and “symmetry recognition” are tracked separately; each has its own set of parameters, which is updated after every attempt at a relevant task. The updated mastery probabilities are key inputs to subsequent system decisions, for example, whether to increase task complexity or to provide a gentle nudge. BKT was selected as the learner modeling approach because its real-time, probabilistic mastery estimates are well suited to structured, skill-based learning environments such as spatial reasoning tasks. Compared to fixed-rule systems or heuristic thresholds, BKT offers a more flexible and evidence-driven mechanism for adjusting task complexity, enabling the system to keep learners within their optimal zone of development. Its interpretability and proven success in prior educational applications make it a reliable and pedagogically grounded choice for adaptive learning environments. The parameters were initially set as follows: p_init = 0.25, p_learn = 0.15, p_guess = 0.20, and p_slip = 0.10. These values were based on prior literature and calibrated during pilot testing to reflect the pace of skill acquisition in spatial tasks. Each skill (e.g., mental rotation, 3D assembly) had its own BKT tracker, updated after each task step based on success or error events.

- HPR Module: Alongside the BKT module, the HPR module analyzes learners’ behavioral tendencies. Whereas BKT treats a high number of correct answers as a strong indication of mastery, the HPR module can detect signs of over-reliance on trial-and-error procedures or on external perspectives. The module performs a contextual comparison of the user’s actions, for example, patterns of object manipulation and the frequency of perspective changes. These patterns yield interpretive labels such as “inadequate planning”, “over-trust of external perspectives”, “goal-driven behavior”, and “suboptimal approach”. Based on these labels, the module subtly adjusts the user’s skill state or triggers an intervention that encourages better habits. The HPR module employed a rule-based inference engine with heuristics derived from cognitive strategy patterns. For example, the rule “IF object rotations > 5 AND viewpoint changes > 3 THEN classify as ‘trial-and-error strategy’” carried a confidence weight of 0.7. Other rules were similarly defined for detecting behaviors such as externalization bias, impulsive execution, or metacognitive hesitation. The rules were applied by a logic-based evaluator triggered every 10 s or at the end of each task. The integration of HPR and BKT scores was governed by an adaptive weighting factor β = 0.5, balancing the quantitative mastery probability against the qualitative strategy classification in the adaptation engine’s decisions.

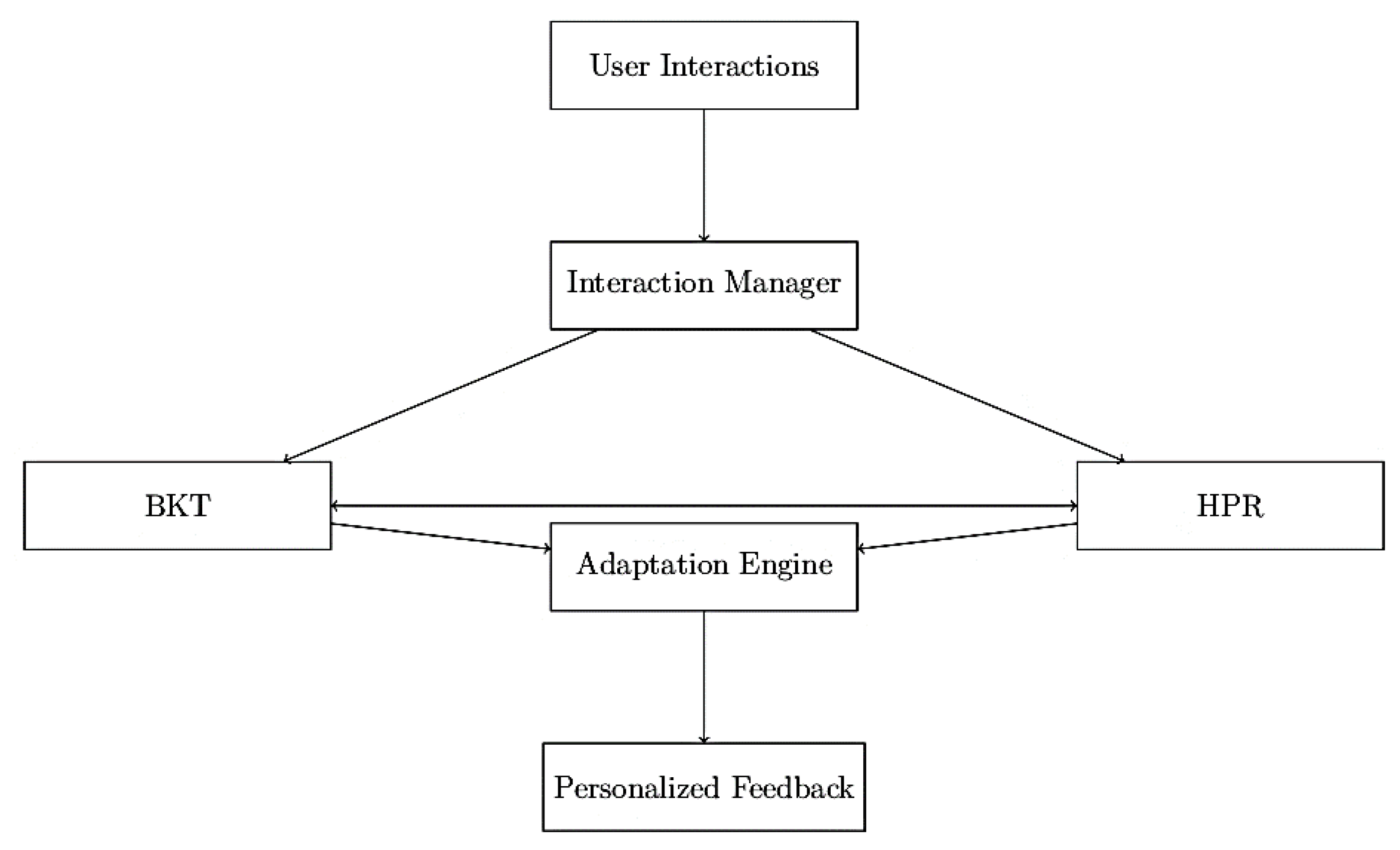

- Adaptation Engine: Central to the system is the adaptation engine, which consolidates the BKT and HPR outputs to decide on interventions in real time. For instance, if BKT shows a high probability of mastery for rotations and HPR indicates consistent reliance on the device, the adaptation engine keeps the current pace of skill-linked tasks (since the user’s accuracy is consistent) and provides metacognitive guidance aimed at stimulating internal spatial reasoning. Conversely, if BKT indicates low confidence in mastery, the engine provides step-by-step instructions or switches to easier exercises. The engine produces instructions or adjusts the AR interface, thus affecting future learner actions. It is important to note that HPR does not replace BKT. Instead, it supplements BKT by adjusting interpretation thresholds, such as confidence levels or mastery thresholds, based on strategic behavior, leading to more precise and personalized interventions (Figure 2).

- Data Storage and Reporting: All relevant interactions and model states are stored in either a relational or a document-oriented data store. Educators and researchers can access dashboards that show learners’ skill development, classify their strategies, and offer detailed analyses of progress. These reports help in optimizing tasks, detecting misconceptions, and enabling research into spatial cognition.

- The learner opens the AR application, which initializes the device’s camera tracking and loads the relevant 3D models.

- The user selects or is assigned a task (e.g., assemble a 3D shape).

- As the user manipulates the virtual objects, the interaction manager logs each event (rotations, placements, errors).

- These events are sent to the server or local analytics module. The BKT and HPR modules process the data to update mastery probabilities and strategy classifications.

- The adaptation engine merges these signals to decide how to respond (e.g., offering a hint, adjusting difficulty, or encouraging strategic reflection).

- Feedback is displayed in real time within the AR interface.

- The learner continues until they complete the task or reach a predefined mastery threshold.

- Data are stored for subsequent analysis, and the cycle repeats for the next task or session.
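
The pre-processing attributed to the interaction manager (time-stamping events and compressing repeated actions) can be illustrated with a short sketch. This is one plausible reading rather than the system's implementation: the `Event` fields, the event kinds, and the `compress` function are all hypothetical names introduced here.

```python
from dataclasses import dataclass
from itertools import groupby


@dataclass(frozen=True)
class Event:
    t: float     # seconds since task start (the time stamp)
    kind: str    # hypothetical kinds: "rotate", "move", "viewpoint_change", ...
    target: str  # id of the manipulated object or "device"


def compress(events):
    """Collapse runs of consecutive identical actions into one record each."""
    ordered = sorted(events, key=lambda e: e.t)
    compressed = []
    for (kind, target), run in groupby(ordered, key=lambda e: (e.kind, e.target)):
        run = list(run)
        compressed.append({
            "kind": kind,
            "target": target,
            "start": run[0].t,
            "end": run[-1].t,
            "count": len(run),
        })
    return compressed


events = [
    Event(0.5, "rotate", "cube"),
    Event(0.9, "rotate", "cube"),
    Event(1.4, "rotate", "cube"),
    Event(2.0, "viewpoint_change", "device"),
    Event(3.1, "move", "cube"),
]
compressed = compress(events)
for record in compressed:
    print(record)
```

Sending the compressed records instead of raw events keeps the counts and durations that the analytics modules need while reducing the volume of data sent to the server.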

4.2. Integration of BKT and HPR Within the AR Environment

- Defining Skills and Strategies: The first step is to identify the specific spatial skills to be tracked by BKT. Common examples include “mental rotation”, “perspective-taking”, “symmetry recognition”, and “3D visualization”. Each skill requires its own BKT parameters. For the HPR component, developers must define the potential strategy markers. These might include “frequent device rotation”, “corner-first assembly”, or “excessive reliance on visual prompts”. By enumerating typical patterns of behavior, the system can systematically scan for them in user logs.

- Tagging Interactions to Skills: Not every user action is relevant to every skill. Therefore, the system architecture must map each type of user interaction to one or more specific skills. If a user is working on a mental rotation challenge, correct rotation sequences count toward updating the mastery probability of “mental rotation” in BKT. Similarly, frequent viewpoint changes might prompt the HPR module to label the user’s strategy as “external-based approach”.

- Event Processing and State Updates: As tasks unfold, the BKT module is updated whenever the learner completes a measurable sub-step that can be classified as correct or incorrect. Each correct sub-step increases the probability that the learner has mastered the associated skill, weighted by the guess and slip parameters. Meanwhile, the HPR engine processes the raw logs for evidence of particular strategy markers. This analysis might run in short cycles (e.g., every 10 s) or once per task completion, depending on system design.

- Integrating the Outputs: The crucial integration point is where HPR’s interpretation modifies BKT’s estimates or triggers distinct feedback, without overwriting the BKT logic entirely. For example, if the HPR engine detects high reliance on random manipulations, it might reduce the “confidence” threshold for a correct sub-step, effectively making the BKT model more cautious about attributing mastery to successful outcomes. Alternatively, the system might place a user in a “strategy improvement” mode, offering targeted prompts before the next BKT update occurs.

- Real-Time Feedback Loop: AR is immersive and typically demands that feedback be immediate or nearly so, in order to guide the user before they develop unproductive habits. Thus, a feedback manager monitors the integrated BKT-HPR output. It decides if immediate guidance is necessary (e.g., an on-screen hint: “Try visualizing the next step before rotating the device”) or if a more gradual intervention (e.g., adjusting the next task’s complexity) is sufficient. The key principle is that feedback should be contextually meaningful: addressing both the skill gap indicated by BKT and the strategy gap signaled by HPR.

- Technical Considerations: (a) Performance: Processing real-time data streams from AR interactions can be computationally intensive. Optimizing for speed involves efficient event buffering, multi-threaded updates, and caching repeated patterns for quick reference. (b) Scalability: If the platform is to be used by multiple learners concurrently, the system must handle parallel BKT-HPR evaluations. Cloud-based architectures or distributed computing solutions often prove beneficial. (c) Usability: The user interface must convey feedback non-disruptively. Overloading the learner with constant pop-ups or metrics may hinder the immersive aspect of AR. Balancing the system’s adaptive interventions with a streamlined interface is a key design priority.

- Evaluation and Iteration: Integration is not a one-time event. After initial deployment, developers typically conduct pilot studies to observe how learners interact with the system. Feedback from these studies informs adjustments to both the BKT parameters and the HPR triggers. If a particular strategy classification appears too sensitive or too lenient, developers can refine the rules. Similarly, if BKT data suggest that the guess parameter is overestimating mastery, the system can be recalibrated to match real learner performance.
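
To make the integration step concrete, the sketch below blends a BKT mastery probability with HPR strategy flags and maps the result to an intervention. The text gives the weighting factor β = 0.5 but not the combining formula, so the convex combination, the `strategy_quality` definition, the two thresholds, and the action labels are all assumptions made for illustration.

```python
def strategy_quality(flags: dict) -> float:
    """Map HPR flags (label -> confidence weight) to a 0..1 quality score.

    Assumption: the strongest flagged unproductive strategy dominates.
    """
    return 1.0 - max(flags.values(), default=0.0)


def combined_score(p_mastery: float, flags: dict, beta: float = 0.5) -> float:
    """Assumed convex blend of BKT mastery and HPR strategy quality."""
    return beta * p_mastery + (1.0 - beta) * strategy_quality(flags)


def decide(p_mastery: float, flags: dict, beta: float = 0.5,
           advance_at: float = 0.8, support_below: float = 0.4) -> str:
    """Pick an intervention; both thresholds are hypothetical placeholders."""
    score = combined_score(p_mastery, flags, beta)
    if score >= advance_at:
        return "increase_difficulty"
    if score < support_below:
        return "step_by_step_guidance"
    if flags:
        # Accuracy is acceptable but the strategy needs attention.
        return "metacognitive_prompt"
    return "maintain_difficulty"


print(decide(0.9, {"external cue reliance": 0.7}))
print(decide(0.2, {"trial-and-error": 0.7}))
print(decide(0.9, {}))
```

Under these assumptions, a learner with high mastery but a flagged strategy stays at the current difficulty and receives a strategy prompt, which matches the behavior described for the adaptation engine in Section 4.1.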

4.3. Data Capture: Recording Learner Actions and Interactions

4.4. Example of Operation

- Rotates the object seven times before final placement;

- Changes the viewing angle of the device five times in succession;

- Completes the task in 28 s;

- Produces repetitive tapping and quick movements that do not necessarily correspond to commands.

- Mental rotation: Probability increases from 0.32 to 0.48;

- 3D assembly: Probability increases from 0.25 to 0.38.

- Rule 1: IF object rotations > 5, THEN flag potential trial-and-error strategy (confidence weight: 0.6);

- Rule 2: IF device viewpoint changes > 3 within a short time span, THEN infer reliance on external spatial cues (confidence weight: 0.7);

- Rule 3: IF task completion time < 30 s with no observable pause, THEN infer impulsive decision making (confidence weight: 0.8).

- From BKT: Moderate learning progress without mastery;

- From HPR: A strategy showing minimal metacognitive regulation.

- Based on this, the system decides to:

- Maintain the current difficulty level;

- Remove some visual aids to promote internal thinking;

- Provide a metacognitive prompt: “Can you imagine how the block looked before it was moved? Try to visualize the result before you touch it.”

- Reduced object rotations (three instead of seven);

- Fewer device movements (two changes);

- A reflective pause of nine seconds before the first action;

- A longer task completion time (34 s).
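
The three rules listed in this example can be checked against the two attempts described above. The sketch below is illustrative only: `TaskSummary` and `evaluate` are hypothetical names, and the "short time span" condition of Rule 2 is simplified to a plain count because the text does not specify the window.

```python
from dataclasses import dataclass


@dataclass
class TaskSummary:
    object_rotations: int
    viewpoint_changes: int
    completion_time_s: float
    paused_before_acting: bool


# (label, confidence weight, condition) for Rules 1-3 of this example.
RULES = [
    ("trial-and-error strategy", 0.6,
     lambda s: s.object_rotations > 5),
    ("reliance on external spatial cues", 0.7,
     lambda s: s.viewpoint_changes > 3),  # time-window check omitted (assumption)
    ("impulsive decision making", 0.8,
     lambda s: s.completion_time_s < 30 and not s.paused_before_acting),
]


def evaluate(summary: TaskSummary) -> dict:
    """Return the inferences whose rule conditions hold, with their weights."""
    return {label: weight for label, weight, cond in RULES if cond(summary)}


# First attempt: 7 rotations, 5 viewpoint changes, 28 s, no observable pause.
first = TaskSummary(7, 5, 28, paused_before_acting=False)
# Follow-up attempt: 3 rotations, 2 viewpoint changes, 34 s, 9 s reflective pause.
followup = TaskSummary(3, 2, 34, paused_before_acting=True)

print(evaluate(first))     # all three rules fire
print(evaluate(followup))  # {}
```

All three rules fire on the first attempt and none fire on the follow-up, which is consistent with the shift toward more deliberate behavior reported in the example.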

5. Evaluation

5.1. Participant Demographics and Experimental Design

5.2. Learning Gains and Knowledge Modeling

5.3. BKT Skill Mastery Progression

5.4. Strategic Behavior and HPR Interventions

- Rule 1: More than five object rotations, indicating a trial-and-error approach, was flagged in 39 students;

- Rule 2: Multiple rapid device rotations suggested externalization bias, where learners depended more on physical interaction than mental visualization, flagged in 27 students;

- Rule 3: Initiating interaction in under 10 s was associated with impulsivity and lack of planning, triggered for 31 students.

- Pre-action planning mean duration increased from 3.4 s to 8.9 s;

- Average object manipulations per task dropped by 45%;

- 34 students switched from impulsive, externally based methods to systematic, visualization-based techniques.

5.5. User Satisfaction and Perception

5.6. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| IF (Behavior) | THEN (Inference) | → (Intervention or Tag) |
|---|---|---|
| Frequent device rotation | Reliance on external cues | Prompt: “Try forming a mental image before rotating” |
| Multiple random attempts without pause | Trial-and-error strategy | Tag as “Low planning”; reduce task complexity |
| Long pauses before each move | Careful analysis | Reinforce with a brief confirmation message |
| Repeated use of same failed method | Rigid strategy | Suggest alternative method through hint |
| No interaction for extended time | Hesitation or confusion | Offer optional walkthrough or quick demo |
| Frequent request for hints | Low confidence | Trigger metacognitive prompt: “What would you try first?” |
| Quick correct answers with no hint use | Possible mastery | Confirm proficiency; suggest next level challenge |

| Metric | Experimental Group (n = 50) | Control Group (n = 50) |
|---|---|---|
| Mean Pre-test Score | 58.4 | 57.9 |
| Mean Post-test Score | 84.5 | 68.3 |
| Mean Score Improvement | +26.1 | +10.4 |
| Standard Deviation (Gain) | 6.3 | 7.2 |
| Significance (t-test) | t(98) = 7.63 | – |
| Effect Size | Cohen’s d = 1.45 | – |
| p-value | p < 0.001 | – |

| Session | Experimental Mastery (avg) | Control Mastery (avg) |
|---|---|---|
| 1 | 0.32 | 0.31 |
| 2 | 0.51 | 0.42 |
| 3 | 0.65 | 0.53 |
| 4 | 0.75 | 0.60 |

| Spatial Skill | Experimental Group Gain (Post − Pre) | Control Group Gain (Post − Pre) | Normalized Gain (Experimental) |
|---|---|---|---|
| Mental Rotation | +14.2 | +5.8 | 0.62 |
| 3D Object Assembly | +9.5 | +3.2 | 0.58 |
| Symmetry Recognition | +7.4 | +2.1 | 0.51 |

| Statement | Agree (%) Experimental | Agree (%) Control |
|---|---|---|
| Helped me understand how I solve problems | 92 | 61 |
| Tasks matched my skill level | 88 | 52 |
| Feedback made me reflect on my strategies | 91 | 47 |
| Enjoyed the AR system | 89 | 85 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Papakostas, C.; Troussas, C.; Krouska, A.; Sgouropoulou, C. Integrating Bayesian Knowledge Tracing and Human Plausible Reasoning in an Adaptive Augmented Reality System for Spatial Skill Development. Information 2025, 16, 429. https://doi.org/10.3390/info16060429