Drunk Driver Detection Using Thermal Facial Images

Abstract

1. Introduction

2. Literature Review

2.1. Sensor-Based Technologies

2.2. Facial Feature Recognition

3. Materials and Methods

3.1. Data Collection

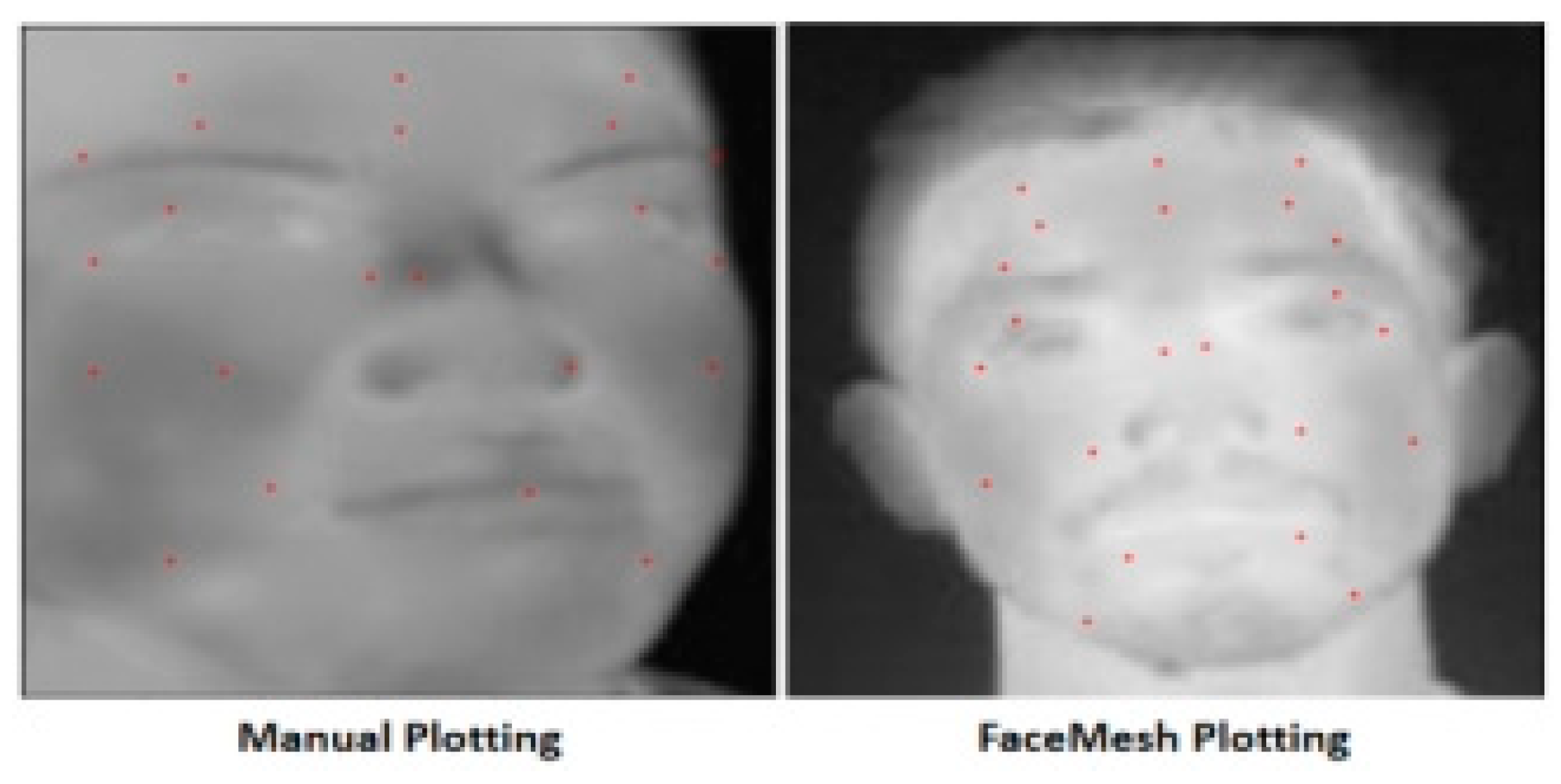

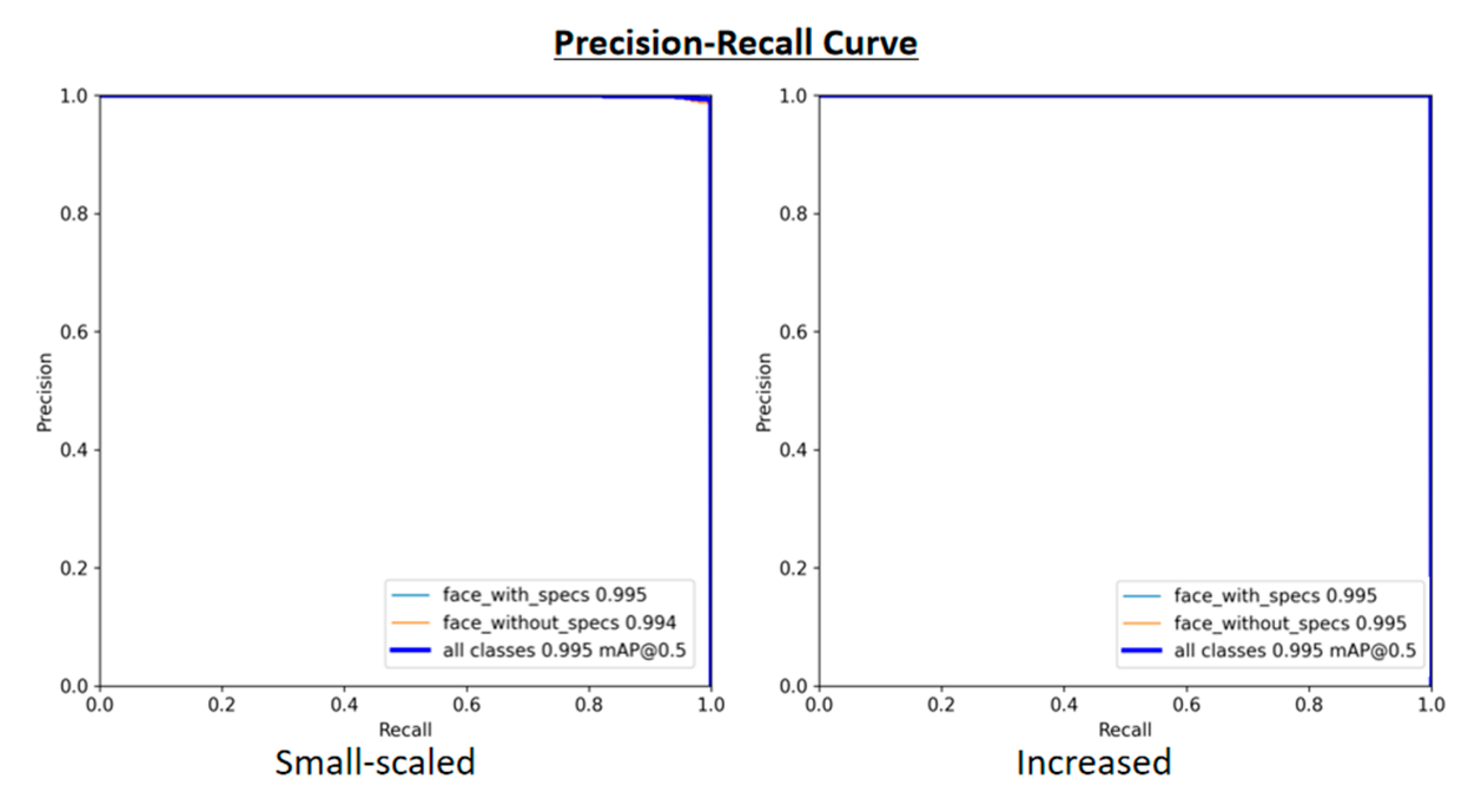

3.2. Face Recognition

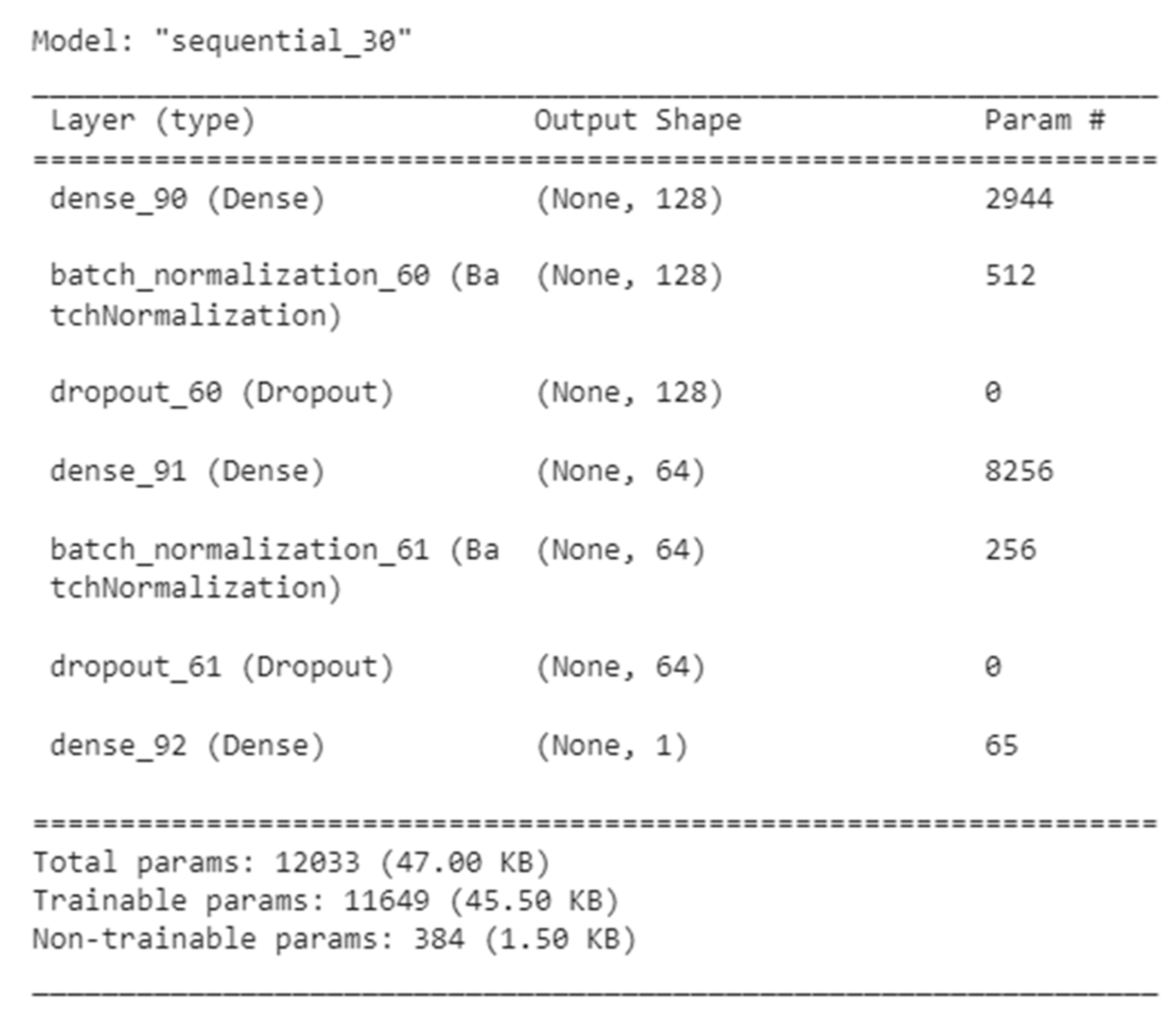

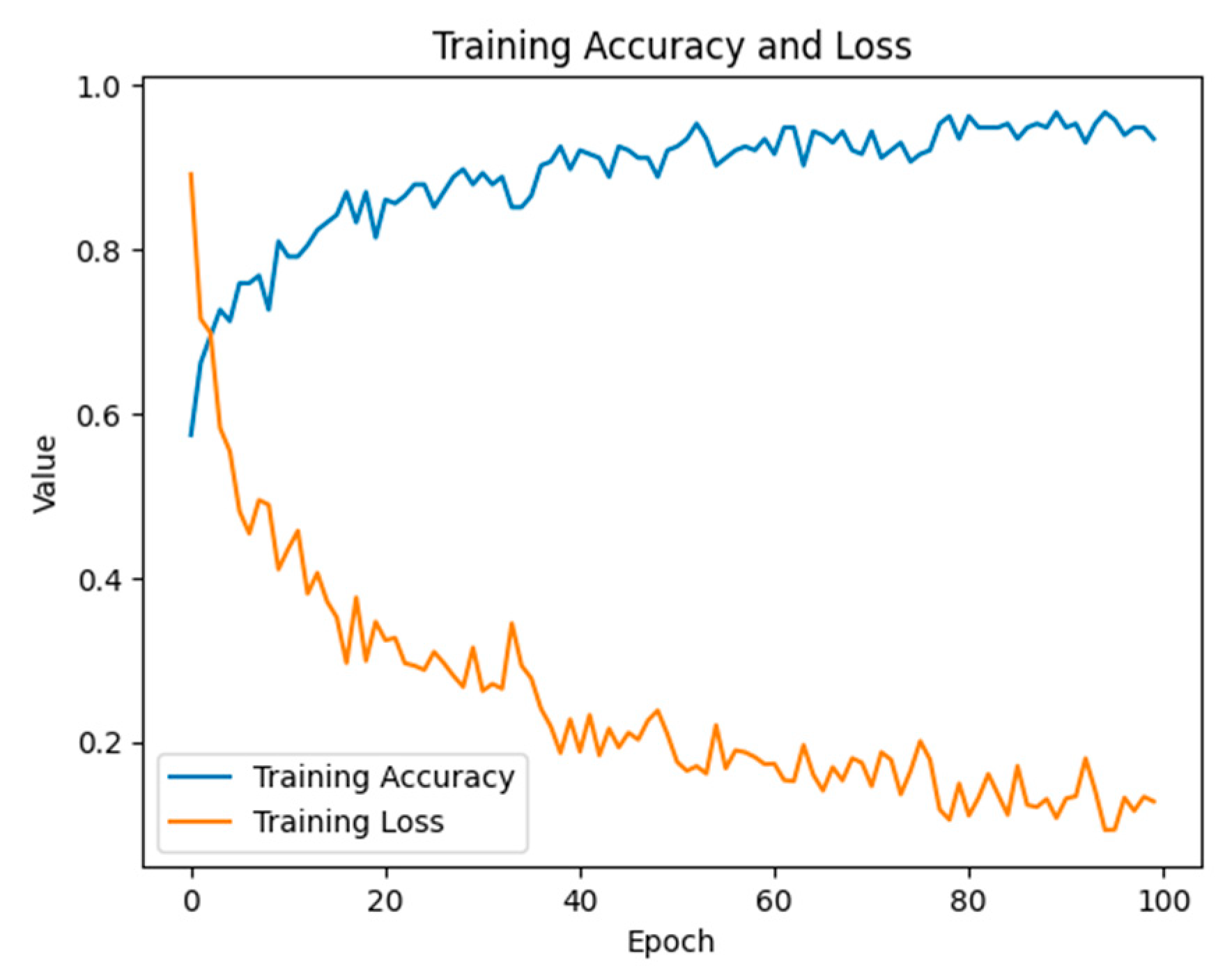

3.3. Drunk Classification

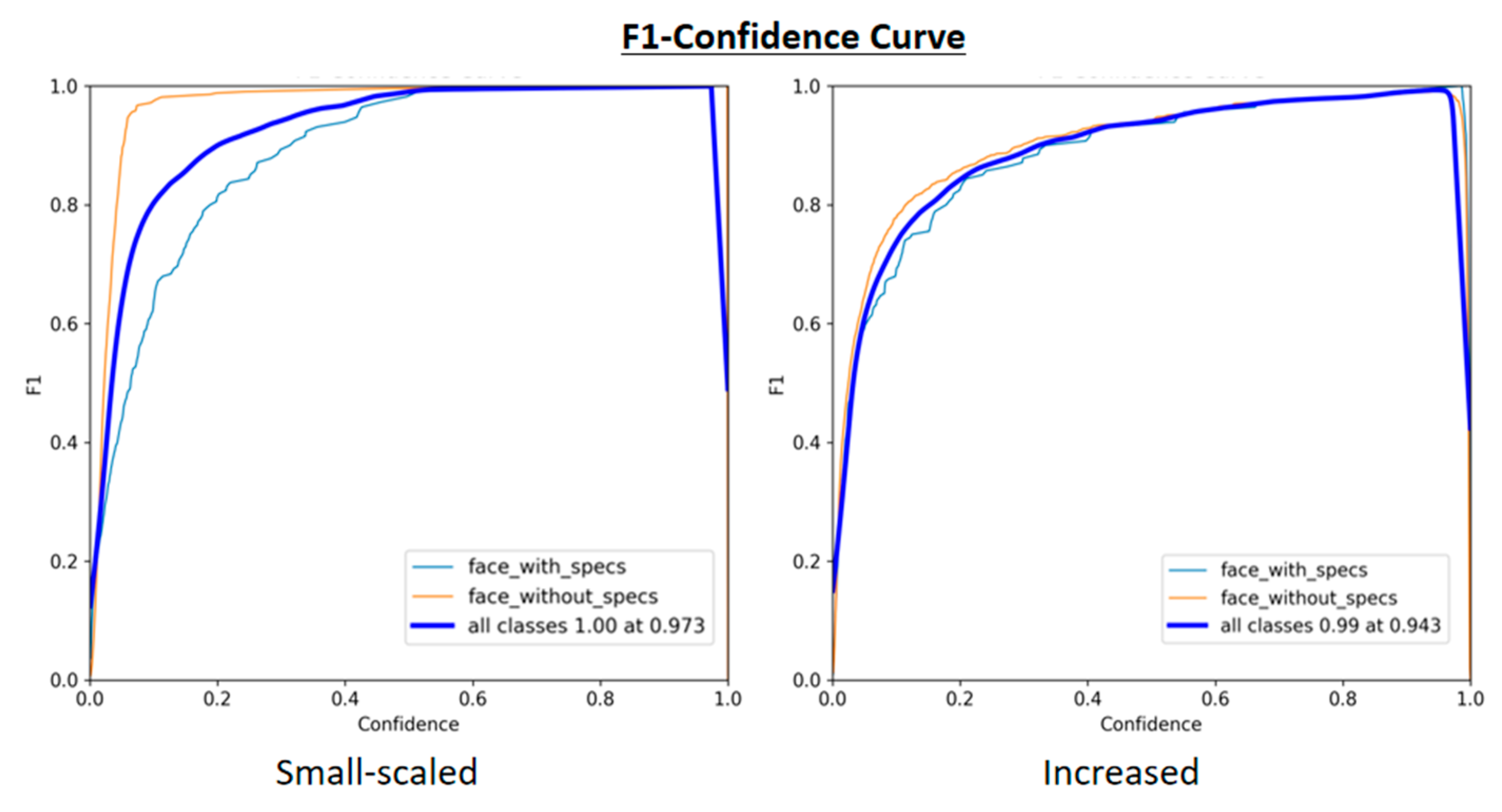

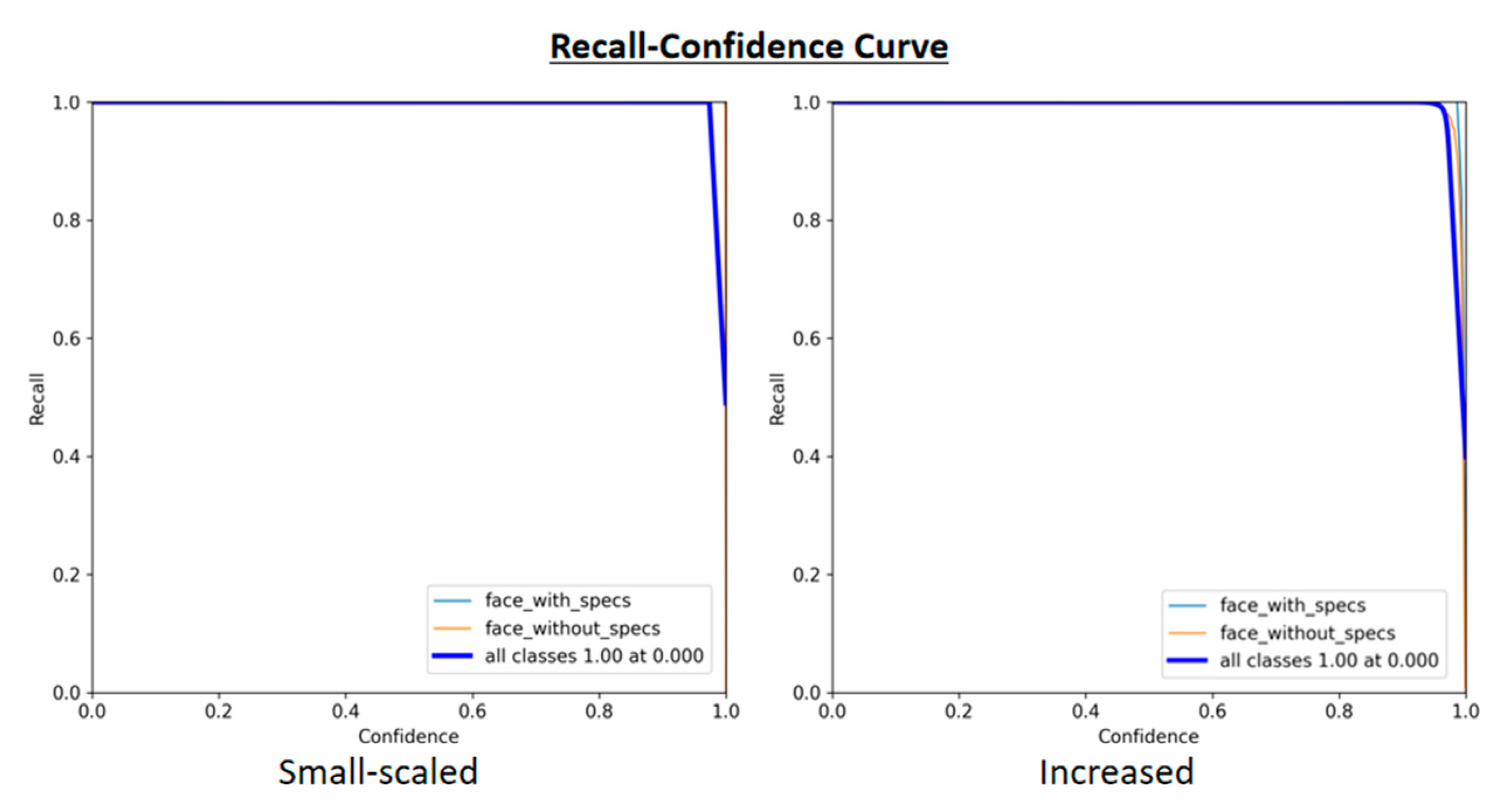

4. Results and Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CNN | Convolutional Neural Network |
| RF | Random Forest |
| SVM | Support Vector Machine |
| MLP | Multi-Layer Perceptron |
| LR | Logistic Regression |
| KNN | K-Nearest Neighbors |
| ReLU | Rectified Linear Unit |
| YOLO | You Only Look Once |
| NETD | Noise Equivalent Temperature Difference |
| SURF | Speeded Up Robust Features |
| NSCT | Non-Sub-sampled Contourlet Transform |
| ROI | Region of Interest |
| O-SNN | Optimizable Shallow Neural Network |
| PUCV-DTF | Pontificia Universidad Católica de Valparaíso Drunk Thermal Face database |
| WLD | Weber Local Descriptor |
| LBP | Local Binary Pattern |

References

1. Sanghvi, K.A. Drunk driving detection. Comput. Sci. Inf. Technol. 2018, 6, 24–30.
2. Omar, R. The Usage of Artificial Intelligence Algorithms in Preventing Drunk Driving. J. Stud. Res. 2023, 12, 1–5.
3. Kumar, A.; Kumar, A.; Singh, M.; Kumar, P.; Bijalwan, A. An Optimized Approach Using Transfer Learning to Detect Drunk Driving. Sci. Program. 2022, 2022, 8775607.
4. Jeoung, J.; Jung, S.; Hong, T.; Lee, M.; Koo, C. Thermal comfort prediction based on automated extraction of skin temperature of face component on thermal image. Energy Build. 2023, 298, 113495.
5. Farooq, H.; Altaf, A.; Iqbal, F.; Galán, J.C.; Aray, D.G.; Ashraf, I. DrunkChain: Blockchain-Based IoT System for Preventing Drunk Driving-Related Traffic Accidents. Sensors 2023, 23, 5388.
6. National Highway Traffic Safety Administration. Advanced Impaired Driving Prevention Technology [Internet]. 2023. Available online: https://www.regulations.gov (accessed on 18 March 2025).
7. Razak, S.F.A.; Yogarayan, S.; Ullah, A. Preventing Impaired Driving Using IoT on Steering Wheels Approach. HighTech Innov. J. 2024, 5, 400–409.
8. Chen, Y.; Xue, M.; Zhang, J.; Ou, R.; Zhang, Q.; Kuang, P. DetectDUI: An in-car detection system for drink driving and BACs. IEEE/ACM Trans. Netw. 2021, 30, 896–910.
9. Abu Al-Haija, Q.; Krichen, M. A Lightweight In-Vehicle Alcohol Detection Using Smart Sensing and Supervised Learning. Computers 2022, 11, 121.
10. Shukla, P.; Srivastava, U.; Singh, S.; Tripathi, R.; Sharma, R.R. Automatic Engine Locking System Through Alcohol Detection. Int. J. Eng. Res. Technol. (IJERT) 2020, 9, 634–637.
11. Rakshith, K.B.; Meghana, K.; Pranav, R.; Gagana, N.A.; Pavithra, G.S. Alcohol Detection System for the Safety of Automobile Users. Int. Res. J. Eng. Technol. 2020, 7, 3583–3586.
12. Liu, J.; Luo, Y.; Ge, L.; Zeng, W.; Rao, Z.; Xiao, X. An Intelligent Online Drunk Driving Detection System Based on Multi-Sensor Fusion Technology. Sensors 2022, 22, 8460.
13. Wang, F.; Bai, D.; Liu, Z.; Yao, Z.; Weng, X.; Xu, C.; Fan, K.; Zhao, Z.; Chang, Z. A Two-Step E-Nose System for Vehicle Drunk Driving Rapid Detection. Appl. Sci. 2023, 13, 3478.
14. Seong, L.J.; Yogarayan, S.; Razak, S.F.A.; Mogan, J.N. Drunk Detection Using Thermal-Based Face Images. In Proceedings of the 2024 International Conference on Intelligent Cybernetics Technology & Applications (ICICyTA), Bali, Indonesia, 17–19 December 2024; pp. 1003–1007.
15. Zhao, X.; Zhu, H.; Qian, X.; Ge, C. Design of intelligent drunk driving detection system based on Internet of Things. J. Internet Things 2019, 1, 55.
16. Hermosilla, G.; Verdugo, J.L.; Farias, G.; Vera, E.; Pizarro, F.; Machuca, M. Face recognition and drunk classification using infrared face images. J. Sens. 2018, 2018, 1–8.
17. Menon, S.; Swathi, J.; Anit, S.K.; Nair, A.P.; Sarath, S. Driver face recognition and sober drunk classification using thermal images. In Proceedings of the 2019 International Conference on Communication and Signal Processing (ICCSP), Chennai, India, 4–6 April 2019; pp. 400–404.
18. Iamudomchai, P.; Seelaso, P.; Pattanasak, S.; Piyawattanametha, W. Deep learning technology for drunks detection with infrared camera. In Proceedings of the 2020 6th International Conference on Engineering, Applied Sciences and Technology (ICEAST), Chiang Mai, Thailand, 1–4 July 2020; pp. 1–4.
19. Soltuz, A.I.; Neagoe, V.E. Facial thermal image analysis with deep convolutional neural network architectures for subject dependent drunkenness diagnosis. In Proceedings of the 2021 13th International Conference on Electronics, Computers and Artificial Intelligence (ECAI), Pitesti, Romania, 1–3 July 2021; pp. 1–4.
20. Bhuyan, M.K.; Bora, K.; Koukiou, G. Detection of Intoxicated Person using Thermal Infrared Images. In Proceedings of the 2019 IEEE 6th Asian Conference on Defence Technology (ACDT), Bali, Indonesia, 13–15 November 2019; pp. 59–64.
21. Bhuyan, M.K.; Dhawle, S.; Sasmal, P.; Koukiou, G. Intoxicated person identification using thermal infrared images and Gait. In Proceedings of the 2018 International Conference on Wireless Communications, Signal Processing and Networking (WiSPNET), Chennai, India, 22–24 March 2018; pp. 1–3.
22. Koukiou, G. Thermal Biometric Features for Drunk Person Identification Using Multi-Frame Imagery. Electronics 2022, 11, 3924.

| Ref. | Camera | Image Resolution (Pixels) | Dataset | Feature Extraction | Classification | Accuracy |
|---|---|---|---|---|---|---|
| Menon et al. [17] | Thermo Vision Micron/A10 (FLIR Systems, Inc., Wilsonville, OR, USA) | 128 × 160 | Self-collected | Fisher’s linear discriminant (FLD) for dimensionality reduction | Gaussian Mixture Model (GMM) | 87% |
| Hermosilla et al. [16] | FLIR TAU 2 (FLIR Systems, Inc., Wilsonville, OR, USA) | 81 × 150 | PUCV-DTF | Fisher’s linear discriminant (FLD) for dimensionality reduction | Gaussian Mixture Model (GMM) | 86.96% |
| Iamudomchai et al. [18] | FLIR ONE (FLIR Systems, Inc., Wilsonville, OR, USA) | 160 × 120 | Self-collected (Thai nationals) | Not mentioned | CNN | 4 levels (normal, 1 glass, 2 glasses, and 3 glasses): 85.10% |
| Soltuz and Neagoe [19] | Thermo Vision Micron/A10 | 128 × 160 | Georgia Koukiou and Vassilis Anastassopoulos (University of Patras, Polytechnic of Crete) | Not mentioned | CNN | DCNN First: 93.17%; Second: 95.17%; GoogLeNet: 98.54% |
| Bhuyan et al. [20] | Thermo Vision Micron/A10 | 128 × 160 | Self-collected | Not mentioned | SVM | Eye: 93%; Face: 94.1%; Hand: 95.1%; Ear: 100% |
| Bhuyan et al. [21] | Thermo Vision Micron/A10 | 128 × 160 | Self-collected | Speeded Up Robust Features (SURF) | RF and SVM | Face: 89.23%; Gait and Ear: 100% |
| Koukiou [22] | Thermo Vision Micron/A10 | 128 × 160 | Georgia Koukiou and Vassilis Anastassopoulos (University of Patras, Polytechnic of Crete) | Morphological feature extraction | SVM | 86% |

| Model | Class | Precision | Recall | F1 Score | Accuracy |
|---|---|---|---|---|---|
| MLP | Sober | 86% | 91% | 89% | 90% |
| | Drunk | 93% | 89% | 91% | |
| KNN | Sober | 90% | 80% | 85% | 88% |
| | Drunk | 86% | 93% | 89% | |
| SVM | Sober | 71% | 71% | 71% | 75% |
| | Drunk | 78% | 78% | 78% | |
| RF | Sober | 88% | 86% | 87% | 89% |
| | Drunk | 89% | 91% | 90% | |
| LR | Sober | 73% | 77% | 75% | 78% |
| | Drunk | 81% | 78% | 80% | |
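The F1 scores in the table above can be related to the precision and recall columns through the standard harmonic-mean definition, F1 = 2PR / (P + R). The following is a minimal sketch of that check, not the authors' evaluation code: the dictionary simply transcribes the table, and the names `REPORTED`, `f1_score`, and `f1_gaps` are hypothetical helpers introduced here for illustration.

```python
# Precision, recall, and reported F1 (all in %) transcribed from the results table.
# REPORTED, f1_score, and f1_gaps are illustrative names, not from the paper.
REPORTED = {
    ("MLP", "Sober"): (86, 91, 89),
    ("MLP", "Drunk"): (93, 89, 91),
    ("KNN", "Sober"): (90, 80, 85),
    ("KNN", "Drunk"): (86, 93, 89),
    ("SVM", "Sober"): (71, 71, 71),
    ("SVM", "Drunk"): (78, 78, 78),
    ("RF", "Sober"): (88, 86, 87),
    ("RF", "Drunk"): (89, 91, 90),
    ("LR", "Sober"): (73, 77, 75),
    ("LR", "Drunk"): (81, 78, 80),
}


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (same units in and out)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def f1_gaps(rows=REPORTED):
    """Map (model, class) to recomputed F1 minus reported F1, in percentage points."""
    return {key: f1_score(p, r) - f1 for key, (p, r, f1) in rows.items()}


if __name__ == "__main__":
    for (model, cls), gap in f1_gaps().items():
        print(f"{model:>3} {cls:<5} recomputed - reported = {gap:+.2f} pp")
```

Because the table reports rounded percentages, a recomputed F1 can differ slightly from the reported one; the sketch only checks that the two stay within about one percentage point of each other.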

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chai, C.-H.; Abdul Razak, S.F.; Yogarayan, S.; Shanmugam, R. Drunk Driver Detection Using Thermal Facial Images. Information 2025, 16, 413. https://doi.org/10.3390/info16050413