Enhancing the Learning Experience with AI

Abstract

1. Introduction

2. Review of New Methods Used in Education

2.1. Computer-Aided Methods in Education

2.2. AI in Education

- Round-the-clock availability of answers from course materials: just as a teacher answers students' questions during class, an AI solution can answer questions from the course materials for an unlimited number of students, 24/7.

- Automated Grading: AI can automate tasks such as grading assignments and responding to student inquiries, freeing faculty time for more in-depth interactions with students.

- Improved Accessibility: AI-powered solutions can be enhanced with text-to-speech and speech-to-text features, providing support to students with disabilities.

2.3. Estimation of the Usage of New Methods in Education

- Paper-Based Materials (Textbooks and Print Handouts): Traditionally, nearly all instructional supports were paper-based. Meta-analyses in education [40] indicate that, in earlier decades, printed textbooks and handouts could represent 70–80% of all learning materials. Over the past two decades, however, this share has steadily decreased (now roughly 40–50%) as digital tools have been integrated into teaching.

- Computer-Based Supports (Websites, PDFs, and Learning Management Systems): Research from the COVID-19 pandemic period [41,42] shows that Learning Management Systems (LMSs) and other computer-based resources (including websites and PDFs) grew from roughly 10% to about 40–50% of educational materials in some settings. This shift reflects both improved digital infrastructure and changes in teaching practices.

- Smartphones and Mobile Apps: Studies from the early 2010s [43] reported very limited in-class smartphone use. As smartphones became ubiquitous, however, more recent research [44] indicates that these devices now account for roughly 20–30% of learning interactions. This growth reflects both increased mobile connectivity and the rising popularity of educational apps.

- Interactive Digital Platforms (Websites, Multimedia, and Collaborative Tools): Alongside the growth in LMS and mobile use, digital platforms with interactive multimedia and collaborative features have also expanded. Meta-analyses [45] indicate that, whereas digital tools accounted for roughly 10–20% of usage in early-2000s classrooms, these platforms now make up roughly 30–40% of the overall learning support environment. This trend underscores the growing importance of online content and real-time collaboration in education.

2.4. The Shift Towards Using AI Tools

2.5. Chatbots in Education

2.5.1. Chatbots: Definition and Classification

2.5.2. Chatbots: Structure and Role in Education

2.5.3. Educational Chatbots Survey

- Insufficient training and digital literacy among educators. For instance, ref. [4] found that many higher education teachers across several countries felt unprepared to fully leverage AI due to inadequate institutional training and support.

- High implementation costs, especially in institutions with lower resources [68].

- Lack of clearly defined and easily applicable policies for the ethical adoption of AI components as well as concerns about data privacy and fears of algorithmic bias.

3. Materials and Methods

3.1. Proposed Solution Description

3.2. High-Level Description

- Add a new course;

- Drag-and-drop the course chapter documents (in PDF, DOC, or OPT format);

- Drag-and-drop a document containing exam questions or opt for automatic exam question generation;

- Optionally, upload Excel files with the previous year’s student results for statistical analysis.

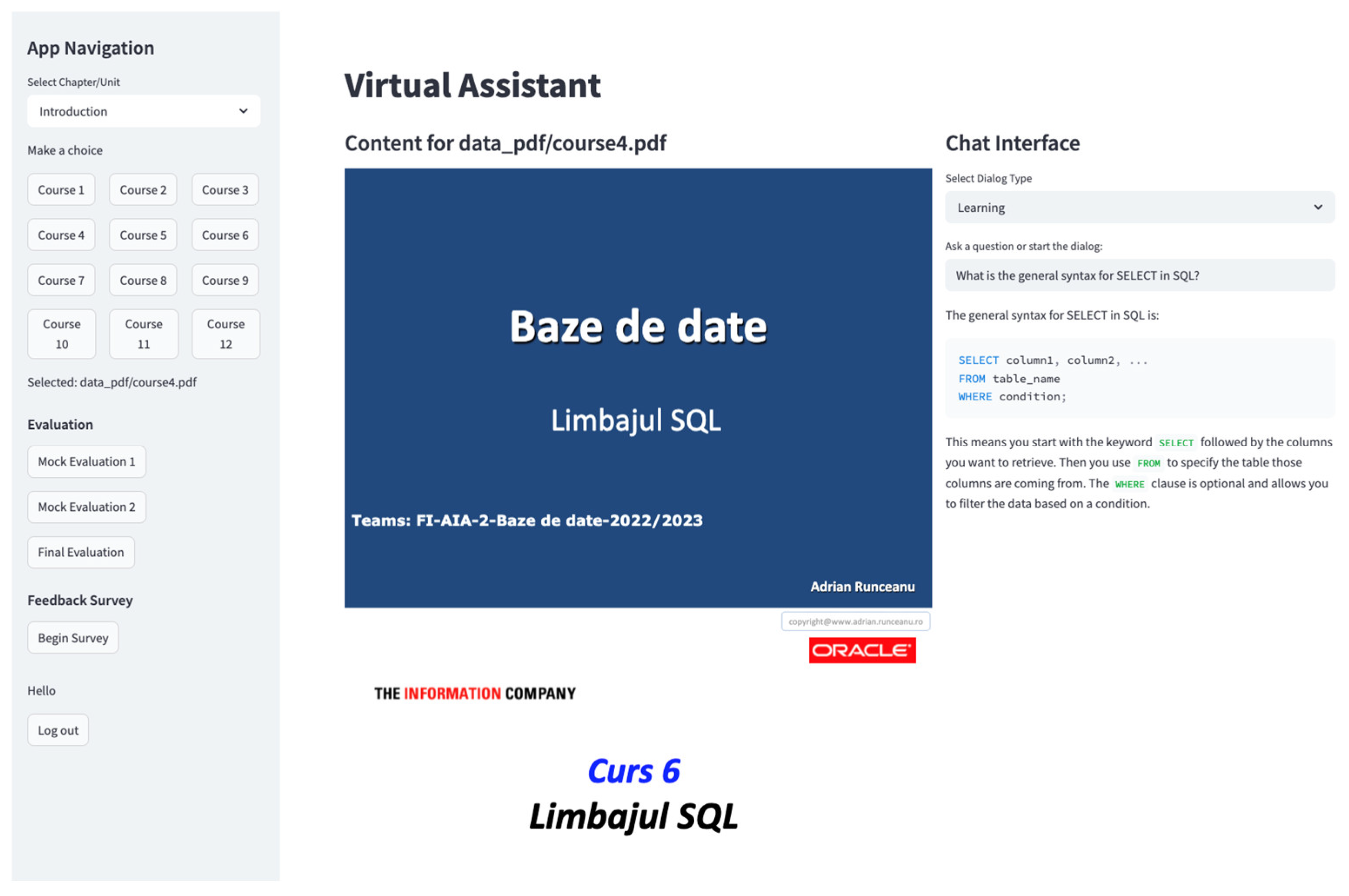

3.3. Description of the User Interface


- Module 1—AI Assistant: In this module, the student selects one of the course chapters in the left frame, and the chapter is displayed in the center frame. The student can then ask the AI assistant questions about the selected chapter in the right frame.


- Modules 2 and 3—Preparation for Evaluation/Final Evaluation: These modules share a similar UI and present students with exercises, questions, and programming tasks in the middle frame. In the right frame, the chatbot provides feedback on student responses or links to Module 1 so the student can review the course documentation.


- Module 4—Feedback Survey: The center frame displays a form for evaluating the application.

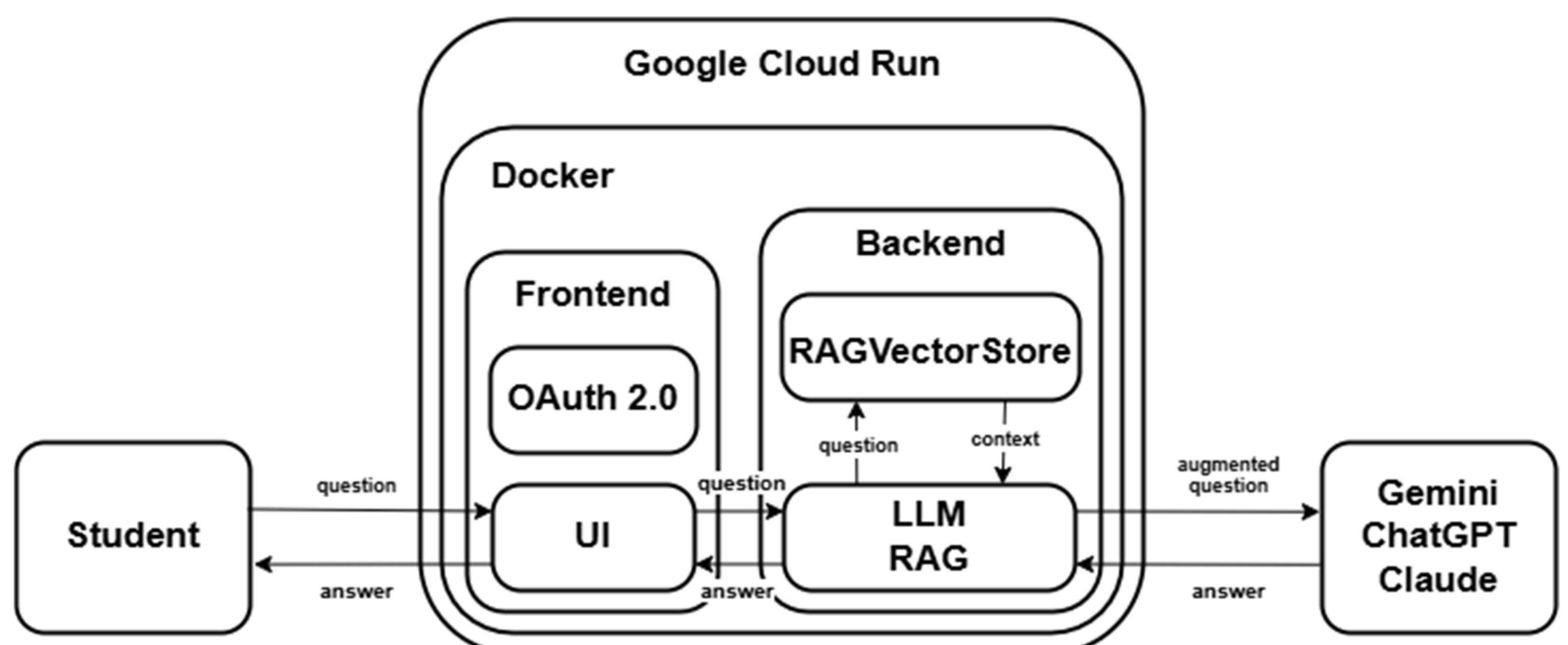

3.4. Architecture Details of the Platform

3.4.1. General Software Application Architecture and Flow

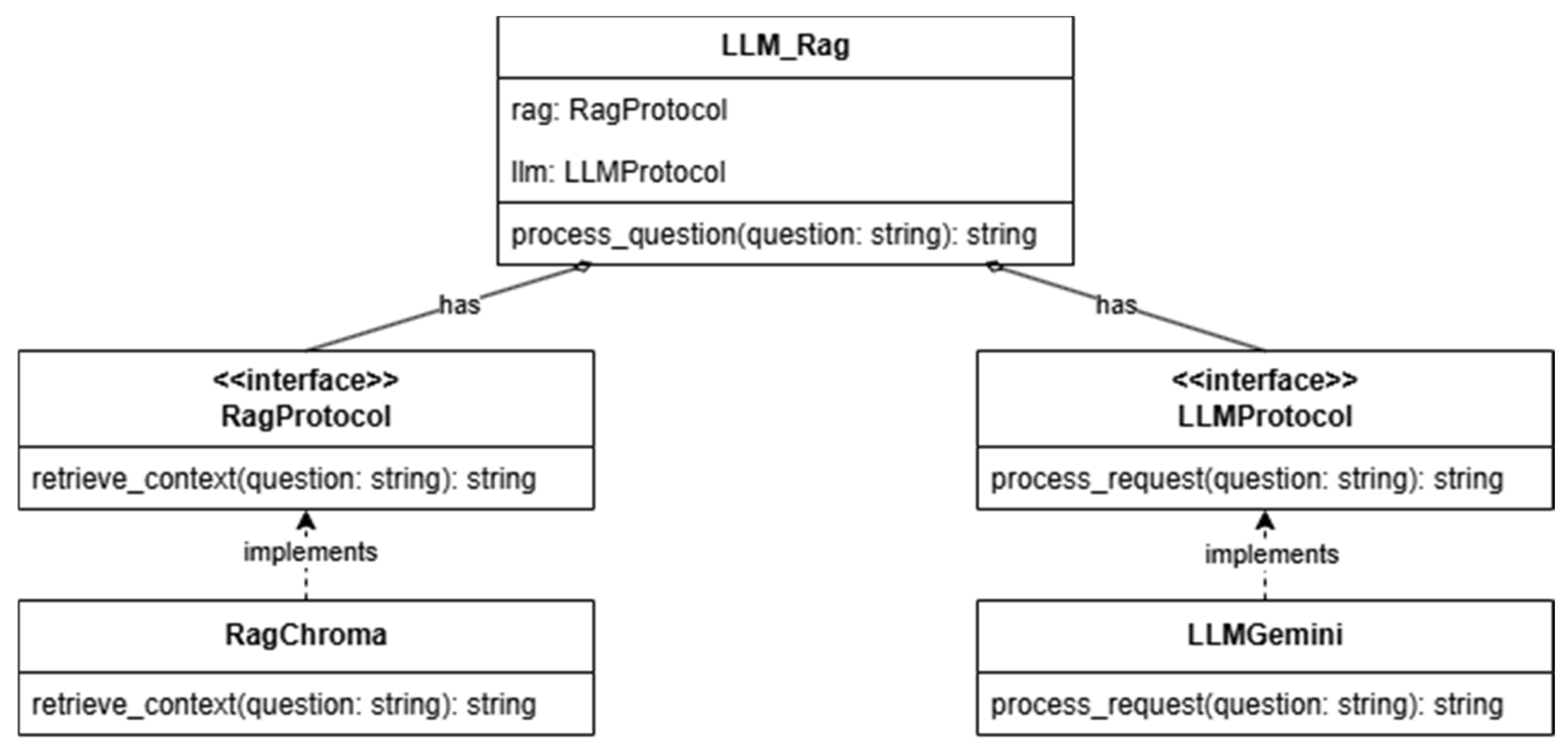

3.4.2. AI Components/Modules’ Architecture

- LLM Protocol: This is an interface that describes the minimal requirements an LLM must implement to be usable by the platform; in this case, it only needs to be able to answer a question.

- RAG Protocol: This is an interface that describes what an implementation of the Retrieval Augmented Generation (RAG) pattern must provide. The main idea [71] is to use a vector database to select candidate passages from the documentation (the PDF course materials), which are then provided as context to an LLM so that it can answer the student’s question. Objects implementing the RAG Protocol provide functions for the following:

  - Context Retrieval: given a question, retrieves the relevant context from the vector DB.

  - Embedding Generation: a helper function that converts (embeds) text into a vector representation.

  - Similarity Search: performs similarity searches within the vector store database to find the most relevant chunks.

- LLM RAG (Figure 4) is a class which contains the following:

  - An object ‘rag’ which implements the RAG Protocol (for example, RAGChroma) to store and retrieve relevant document chunks (content) for the question.

  - A function to augment the question with the context recovered from the ‘rag’ object.

  - An object ‘llm’ which implements the LLM Protocol (for example, LLMGemini) to answer the augmented question.

- Evaluate Answer: Compares the user’s free-text or single-choice answers with the correct answers.

- Evaluate Code Answer: Executes user-provided code snippets and compares them against the correct code.

- Calculate-Score: Calculates the overall score based on a list of responses.
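The protocol-based design described above can be illustrated with a minimal Python sketch built on `typing.Protocol`. Everything concrete here is an assumption for illustration, not the platform's code: the method names (`answer`, `embed`, `similarity_search`, `retrieve_context`, `augment`, `ask`), the bag-of-words vectors that stand in for a real embedding model and vector DB (RAGChroma in the text), and the hypothetical `EchoLLM` placeholder that stands in for a hosted model such as LLMGemini.

```python
import re
from dataclasses import dataclass, field
from math import sqrt
from typing import Protocol


class LLMProtocol(Protocol):
    """Minimal contract: an LLM only has to answer a question."""

    def answer(self, question: str) -> str: ...


class RAGProtocol(Protocol):
    """Retrieval contract: embedding, similarity search and context retrieval."""

    def embed(self, text: str) -> dict[str, float]: ...
    def similarity_search(self, query: str, k: int) -> list[str]: ...
    def retrieve_context(self, question: str, k: int = 2) -> str: ...


@dataclass
class InMemoryRAG:
    """Toy stand-in for a vector store: bag-of-words counts instead of real embeddings."""

    chunks: list[str] = field(default_factory=list)

    def embed(self, text: str) -> dict[str, float]:
        vec: dict[str, float] = {}
        for tok in re.findall(r"\w+", text.lower()):
            vec[tok] = vec.get(tok, 0.0) + 1.0
        return vec

    @staticmethod
    def _cosine(a: dict[str, float], b: dict[str, float]) -> float:
        dot = sum(v * b.get(t, 0.0) for t, v in a.items())
        norm = sqrt(sum(v * v for v in a.values())) * sqrt(sum(v * v for v in b.values()))
        return dot / norm if norm else 0.0

    def similarity_search(self, query: str, k: int) -> list[str]:
        # Rank every stored chunk by cosine similarity to the query; keep the top k.
        q = self.embed(query)
        ranked = sorted(self.chunks, key=lambda c: self._cosine(q, self.embed(c)), reverse=True)
        return ranked[:k]

    def retrieve_context(self, question: str, k: int = 2) -> str:
        return "\n".join(self.similarity_search(question, k))


class EchoLLM:
    """Hypothetical placeholder; a real model class would call a hosted LLM here."""

    def answer(self, question: str) -> str:
        return "[model answer to]\n" + question


@dataclass
class LLMRAG:
    rag: RAGProtocol
    llm: LLMProtocol

    def augment(self, question: str, context: str) -> str:
        # Instruct the model to stay grounded in the retrieved course material.
        return f"Answer only from the context below.\nContext:\n{context}\n\nQuestion: {question}"

    def ask(self, question: str, k: int = 2) -> str:
        context = self.rag.retrieve_context(question, k)
        return self.llm.answer(self.augment(question, context))


rag = InMemoryRAG(chunks=[
    "A primary key uniquely identifies each row in a table.",
    "A foreign key references the primary key of another table.",
    "Normalization reduces data redundancy.",
])
assistant = LLMRAG(rag=rag, llm=EchoLLM())
print(assistant.ask("What is a foreign key?", k=1))
```

Because `LLMRAG` depends only on the two protocols, swapping the vector store or the model means writing another class with the same methods; `typing.Protocol` uses structural typing, so no inheritance is required.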

3.5. Technical Implementation of the Platform

3.5.1. Frontend Implementation

- Session Management: Manages user sessions and state. This includes handling Google OAuth 2.0 authentication.

- User Interface: Provides the user interface for interaction, including chapter selection, course navigation, dialog with the AI, evaluation, and feedback surveys:

  - Navigation: Uses a sidebar for primary navigation, allowing users to select chapters/units and specific course materials.

  - Dialog Interaction: Renders a dialog zone where users interact with the AI assistant. This includes input fields and display of AI responses.

  - Evaluation Display: Presents evaluation results to the user.

  - Styling: Uses Streamlit themes and custom CSS.

3.5.2. Backend Implementation

4. Experiments and Results

4.1. Description of the Experiments

4.1.1. Pilot Courses Evaluated on Our Solution

4.1.2. Sample

4.1.3. Description of the Classical Teaching Process

4.1.4. Description of the AI-Enhanced Teaching Process (Pilot)

4.2. Results: Evaluation of the Platform by Instructors and Students

4.2.1. Perceived Advantages and Disadvantages for Instructors

- The application greatly simplifies migrating their existing course material to an online, AI-enhanced format, an obstacle that they had considered insurmountable before being presented with this framework.

- The ability to deploy the application on a university server or cloud account avoids many of the issues related to student confidentiality.

- They appreciated the reduction in time spent on simple questions and grading, which allows them to focus on more difficult issues.

4.2.2. Perceived Advantages for the Students

- Students consider it a major benefit that they can ask questions they might hesitate to ask in class (so-called “stupid questions”), with the same confidence in the answer as if they were asking a real teacher.

- They appreciate that each answer highlights the relevant sections in the text, which increases their confidence in the AI assistant’s answer.

- They appreciate that the application can be used on mobile phones, for example, during their commutes or short breaks.

4.3. Results: Testing of the AI Components of the Platform

- A total of 16 single-choice questions from previous exams.

- A total of 40 free-answer questions:

  - 16 questions from previous exams (Manual Test 1 and Test 2), identical to the single-choice questions above except that the answer options were removed and the AI was asked to answer in free form;

  - 24 questions generated with o3-mini-high at low, medium, and high difficulty settings.

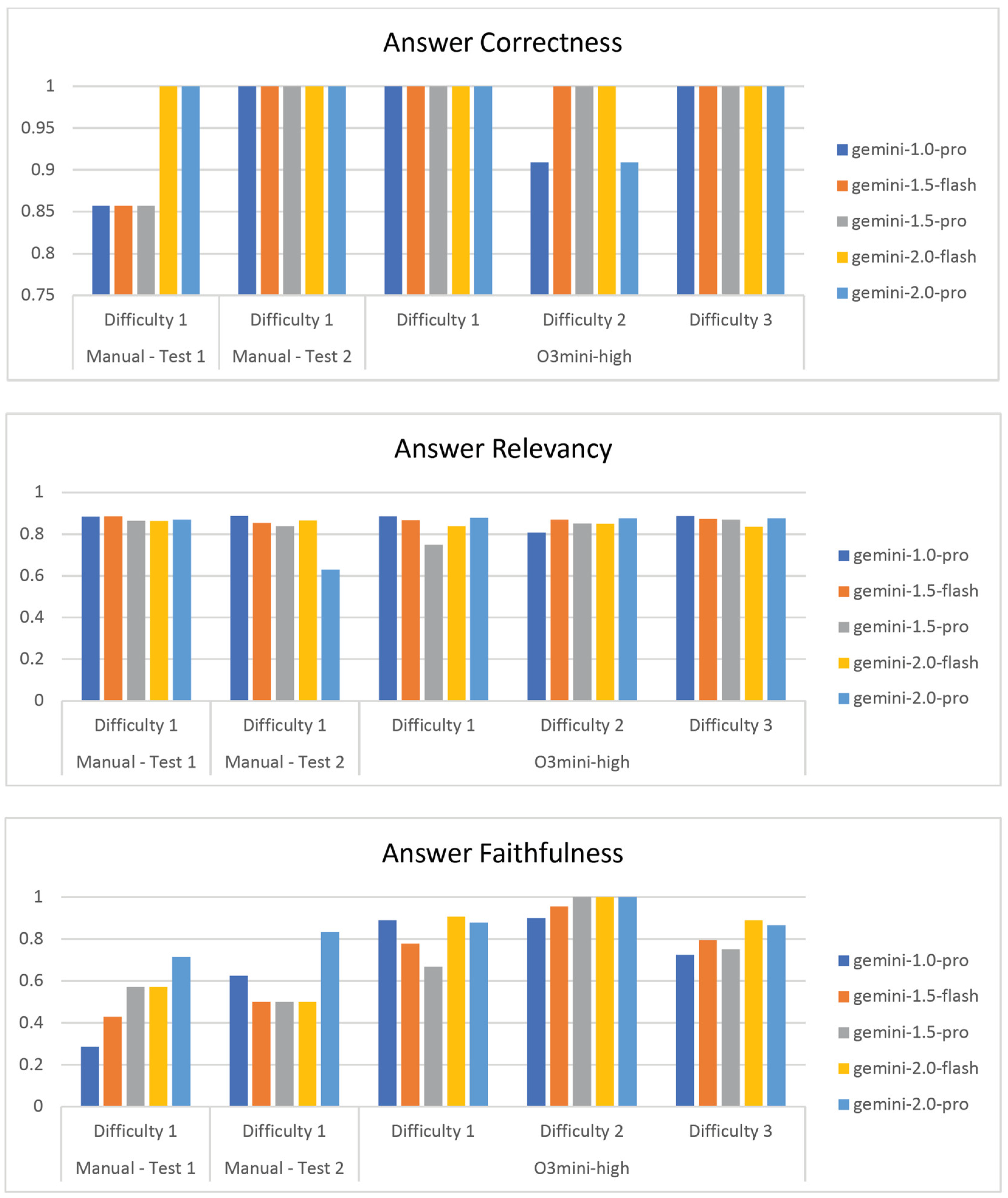

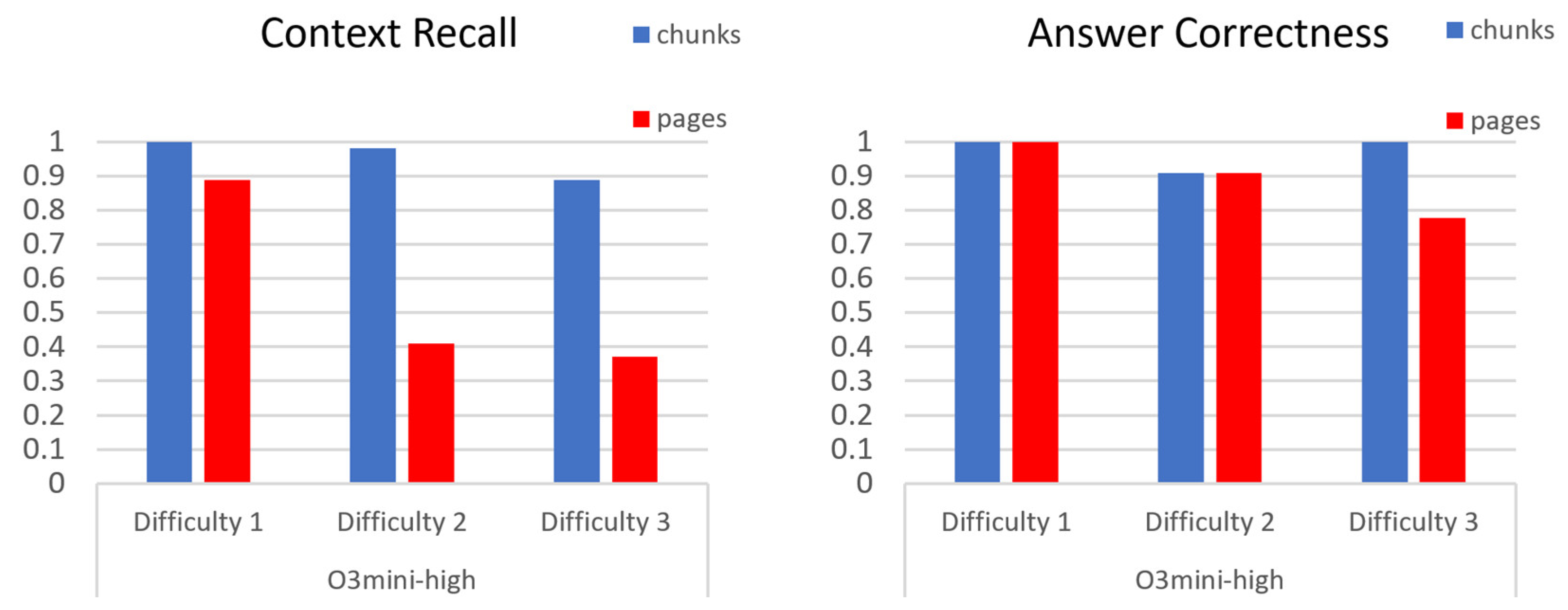

4.3.1. AI Assistant (Module 1) Assessment

- Retrieval (Contextual) Metrics, i.e., whether the system “finds” the right information from an external knowledge base before the LLM generates its answer. The metrics used were as follows:

  - Context Precision: measures whether the most relevant text “chunks” are ranked at the top of the retrieved list.

  - Context Recall: evaluates whether all relevant information is retrieved.

- Generation Metrics, i.e., whether the LLM produces an answer that is not only fluent but also grounded in the retrieved material. The metrics we employed were as follows:

  - Answer Relevancy: how well the generated answer addresses the user’s question and uses the supplied context. It penalizes incomplete answers or unnecessary details.

  - Answer Faithfulness: whether the response is factually based on the retrieved information, minimizing “hallucinations”; estimated either with Ragas or by human evaluation.

  - Answer Text Overlap Scores (conventional text metrics BLEU, ROUGE, F1 [82]): compare generated answers against reference answers.

- Correctness is very high for all LLMs, with results on par with those of a single expert.

- The answer relevancy results are also very promising, with most scores above 80% relevancy, in line with what human raters observed in the HELM study [83].

- Context retrieval is very important: results improve when more context is provided, which is expected [82].

- For faithfulness, we observed two trends: (a) faithfulness is higher for higher-difficulty questions; (b) faithfulness increases with newer LLMs, with Gemini 2.0 Pro performing best. Gemini 1.0 and 1.5 sometimes ignore the instruction to answer only from the context.

- Older metrics are not relevant: NLP (non-LLM) metrics such as ROUGE, BLEU, and factual correctness are no longer suited to evaluating assistant performance (see Appendix A.1 for full results and [83]). The main reason is that two answers can correctly explain the same idea, and both obtain high answer relevancy, while using very different words, which makes the BLEU, ROUGE, and factual correctness scores very low.
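The retrieval and overlap metrics discussed above can be made concrete with a short Python sketch. The retrieval metrics here are simplified, label-based versions: `relevance` and `relevant_ids` are hypothetical ground-truth labels (in Ragas such per-chunk judgments are typically produced by an LLM, which is not reproduced here), and `token_f1` is a SQuAD-style unigram F1 used as a stand-in for the overlap family (BLEU, ROUGE, F1). The example sentences are invented for illustration.

```python
import re
from collections import Counter


def context_precision(relevance: list[bool]) -> float:
    """Rank-aware precision: 1.0 when all relevant chunks are ranked first.

    relevance[i] says whether the chunk at rank i+1 is relevant; precision@k is
    averaged over the ranks k that hold a relevant chunk.
    """
    hits, total = 0, 0.0
    for k, is_relevant in enumerate(relevance, start=1):
        if is_relevant:
            hits += 1
            total += hits / k
    return total / hits if hits else 0.0


def context_recall(retrieved_ids: list[str], relevant_ids: set[str]) -> float:
    """Fraction of the relevant chunks that were actually retrieved."""
    if not relevant_ids:
        raise ValueError("recall is undefined without relevant chunks")
    return len(relevant_ids.intersection(retrieved_ids)) / len(relevant_ids)


def _tokens(text: str) -> list[str]:
    return re.findall(r"\w+", text.lower())


def token_f1(prediction: str, reference: str) -> float:
    """Unigram-overlap F1 between a generated answer and a reference answer."""
    pred, ref = _tokens(prediction), _tokens(reference)
    common = Counter(pred) & Counter(ref)
    overlap = sum(common.values())
    if overlap == 0:
        return 0.0
    precision, recall = overlap / len(pred), overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


print(context_precision([True, False, True]))   # relevant chunks at ranks 1 and 3, ≈ 0.833
print(context_precision([False, True, True]))   # same chunks ranked lower, ≈ 0.583
print(context_recall(["c1", "c3"], {"c1", "c2"}))  # 0.5
reference = "A primary key uniquely identifies each row."
paraphrase = "Every record is distinguished by its primary key."
print(round(token_f1(paraphrase, reference), 3))  # 0.267
```

The paraphrase pair shows the problem noted above: both sentences express the same idea, yet only “primary” and “key” overlap, so the unigram F1 is only about 0.27.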

4.3.2. AI Evaluator Assessment (Module 2 and 3)

- Evaluator grading of free-form questions: correctness 90%.

- Evaluator grading of single-choice questions: correctness 100%.

- Relevancy of evaluator suggestions for incorrectly answered questions: relevance 99%.

- Comprehensiveness (whether the response covers all the key aspects that the reference material would demand): 75%.

- Readability (whether the answer is well-organized and clearly written): 90%.
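The grading components of Section 3.4.2 (Evaluate Answer, Evaluate Code Answer and Calculate-Score) and the correctness figures above can be illustrated with a minimal Python sketch. It covers only the deterministic parts; free-text answers need a semantic comparison (for example by an LLM) that is not reproduced here. All names and signatures, the per-question `points` weight, and the reading of "correctness" as agreement with a reference grading are assumptions for illustration, not the platform's implementation.

```python
from dataclasses import dataclass


@dataclass
class Response:
    """One graded item; `points` is a hypothetical per-question weight."""
    correct: bool
    points: float = 1.0


def evaluate_answer(user_choice: str, correct_choice: str) -> bool:
    """Single-choice grading: exact match after normalizing case and whitespace."""
    return user_choice.strip().upper() == correct_choice.strip().upper()


def evaluate_code_answer(user_code: str, reference_code: str,
                         func_name: str, test_inputs: list[tuple]) -> bool:
    """Run the student's function and the reference function on the same inputs.

    WARNING: exec() runs arbitrary code; a real deployment must sandbox it
    (separate process, time and memory limits, no network or file access).
    """
    user_ns: dict = {}
    ref_ns: dict = {}
    try:
        exec(user_code, user_ns)
        exec(reference_code, ref_ns)
        user_fn, ref_fn = user_ns[func_name], ref_ns[func_name]
        return all(user_fn(*args) == ref_fn(*args) for args in test_inputs)
    except Exception:
        # Syntax errors, a missing function, or runtime errors count as wrong.
        return False


def calculate_score(responses: list[Response]) -> float:
    """Overall score as the percentage of available points earned."""
    total = sum(r.points for r in responses)
    earned = sum(r.points for r in responses if r.correct)
    return 100.0 * earned / total if total else 0.0


def agreement_rate(ai_grades: list[bool], reference_grades: list[bool]) -> float:
    """Share of items where the automatic grade matches a reference (e.g. teacher) grade."""
    if len(ai_grades) != len(reference_grades) or not ai_grades:
        raise ValueError("grade lists must be non-empty and of equal length")
    return sum(a == r for a, r in zip(ai_grades, reference_grades)) / len(ai_grades)


REFERENCE = "def square(x):\n    return x ** 2\n"
print(evaluate_answer(" b", "B"))  # True
print(evaluate_code_answer("def square(x):\n    return x * x\n", REFERENCE, "square", [(0,), (3,), (-4,)]))  # True
print(evaluate_code_answer("def square(x):\n    return x + x\n", REFERENCE, "square", [(0,), (3,), (-4,)]))  # False
print(calculate_score([Response(True), Response(False), Response(True, 2.0)]))  # 75.0
print(agreement_rate([True, True, False, True], [True, False, False, True]))  # 0.75
```

Comparing outputs on fixed inputs accepts any correct implementation (here both `x * x` and `x ** 2`), which a textual comparison of source code would not.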

4.3.3. Common Benchmarks

4.3.4. Summary of LLM Results

5. Discussion

5.1. Technical Adoption Barrier

5.2. Cost Analysis

5.3. Competing Solutions

5.4. Legal and Governance Issues

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial intelligence |
| SQL | Structured Query Language |
| LLM | Large language model |
| CAME | Computer-Assisted Methods in Education |
| CAI | Computer-Assisted Instruction |
| CEI | Computer-Enhanced Instruction |
| VR | Virtual Reality |
| AR | Augmented Reality |
| LMS | Learning Management System |
| NLP | Natural Language Processing |
| ChatGPT | Chat Generative Pre-Trained Transformer developed by OpenAI |
| Gemini | Generative artificial intelligence chatbot developed by Google |
| Claude | A family of large language models developed by Anthropic |
| LLAMA | A family of large language models (LLMs) released by Meta AI |
| LLMProtocol | Large Language Model Protocol |
| RAG | Retrieval Augmented Generation |
| RAGProtocol | Retrieval Augmented Generation Protocol |
| LLMGemini | Large Language Model Gemini |
| Claude Sonnet | Claude 3.5 Sonnet |
| VertexAI | Vertex AI Platform |
| ECTS | European Credit Transfer System |

Appendix A

Appendix A.1

| Model | Correctness (avg.) | Context Recall | Faithfulness | Answer Relevancy | BLEU Score | ROUGE Score | Factual Correctness |
|---|---|---|---|---|---|---|---|
| chunks | | | | | | | |
| gemini-1.0-pro | 95.00% | 81.25% | 71.85% | 86.39% | 8.34% | 22.99% | 56.00% |
| gemini-1.5-flash | 97.50% | 82.50% | 74.13% | 87.09% | 14.85% | 31.00% | 57.11% |
| gemini-1.5-pro | 97.50% | 83.75% | 74.38% | 83.31% | 13.40% | 35.69% | 43.77% |
| gemini-2.0-flash | 100.00% | 77.50% | 82.92% | 84.82% | 15.08% | 35.93% | 46.85% |
| gemini-2.0-pro | 97.50% | 82.50% | 87.61% | 85.12% | 10.01% | 24.76% | 44.40% |
| pages | | | | | | | |
| gemini-1.0-pro | | 47.08% | 74.05% | 45.12% | 3.09% | 32.04% | 21.85% |
| gemini-1.5-flash | | 47.08% | 57.83% | 74.76% | 7.81% | 20.64% | 47.13% |
| gemini-1.5-pro | | 47.08% | 55.26% | 83.79% | 5.70% | 16.87% | 39.73% |
| gemini-2.0-flash | | 47.08% | 88.79% | 69.63% | 4.35% | 17.42% | 33.75% |
| gemini-2.0-pro | | 47.08% | 87.78% | 78.15% | 3.83% | 14.45% | 39.45% |
| Grand Total | 97.50% | 64.29% | 75.47% | 77.82% | 8.65% | 25.18% | 43.28% |

Appendix A.2

References

- Incheon Declaration and Framework for Action, for the Implementation of Sustainable Development Goal 4. Available online: https://uis.unesco.org/sites/default/files/documents/education-2030-incheon-framework-for-action-implementation-of-sdg4-2016-en_2.pdf (accessed on 26 February 2025).

- Survey: 86% of Students Already Use AI in Their Studies. Available online: https://campustechnology.com/articles/2024/08/28/survey-86-of-students-already-use-ai-in-their-studies.aspx (accessed on 26 February 2025).

- Half of High School Students Already Use AI Tools. Available online: https://leadershipblog.act.org/2023/12/students-ai-research.html (accessed on 26 February 2025).

- Ravšelj, D.; Keržič, D.; Tomaževič, N.; Umek, L.; Brezovar, N.; AIahad, N.; Abdulla, A.A.; Akopyan, A.; Aldana Segura, M.W.; AlHumaid, J.; et al. Higher education students’ perceptions of ChatGPT: A global study of early reactions. PLoS ONE 2025, 16, e0245832.

- How to Use the ADDIE Instructional Design Model–SessionLab. Available online: https://www.sessionlab.com/blog/addie-model-instructional-design/ (accessed on 26 February 2025).

- 2023 Learning Trends and Beyond-eLearning Industry. Available online: https://elearningindustry.com/2023-learning-trends-and-beyond (accessed on 26 February 2025).

- Learning Management System Trends to Stay Ahead in 2023-LinkedIn. Available online: https://www.linkedin.com/pulse/learning-management-system-trends-stay-ahead-2023-greenlms (accessed on 26 February 2025).

- Gamification Education Market Overview Source. Available online: https://www.marketresearchfuture.com/reports/gamification-education-market-31655?utm_source=chatgpt.com (accessed on 26 February 2025).

- eLearning Trends And Predictions For 2023 and Beyond-eLearning Industry. Available online: https://elearningindustry.com/future-of-elearning-trends-and-predictions-for-2023-and-beyond (accessed on 26 February 2025).

- AI Impact on Education: Its Effect on Teaching and Student Success. Available online: https://www.netguru.com/blog/ai-in-education (accessed on 26 February 2025).

- Introducing AI-Powered Study Tool. Available online: https://www.pearson.com/en-gb/higher-education/products-services/ai-powered-study-tool.html (accessed on 26 February 2025).

- Crompton, H.; Burke, D. Artificial Intelligence in Higher Education: The State of the Field. Int. J. Educ. Technol. High. Educ. 2023, 20, 22.

- Xu, W.; Ouyang, F. The application of AI technologies in STEM education: A systematic review from 2011 to 2021. Int. J. STEM Educ. 2022, 9, 59.

- Hadzhikoleva, S.; Rachovski, T.; Ivanov, I.; Hadzhikolev, E.; Dimitrov, G. Automated Test Creation Using Large Language Models: A Practical Application. Appl. Sci. 2024, 14, 9125.

- Nurhayati, T.N.; Halimah, L. The Value and Technology: Maintaining Balance in Social Science Education in the Era of Artificial Intelligence. In Proceedings of the International Conference on Applied Social Sciences in Education, Bangkok, Thailand, 14–16 November 2024; Volume 1, pp. 28–36.

- Nunez, J.M.; Lantada, A.D. Artificial intelligence aided engineering education: State of the art, potentials and challenges. Int. J. Eng. Educ. 2020, 36, 1740–1751.

- Darayseh, A.A. Acceptance of artificial intelligence in teaching science: Science teachers’ perspective. Comput. Educ. Artif. Intell. 2023, 4, 100132.

- Briganti, G.; Le Moine, O. Artificial intelligence in medicine: Today and tomorrow. Front. Med. 2020, 7, 27.

- Kandlhofer, M.; Steinbauer, G.; Hirschmugl-Gaisch, S.; Huber, P. Artificial intelligence and computer science in education: From kindergarten to university. In Proceedings of the 2016 IEEE Frontiers in Education Conference (FIE), Erie, PA, USA, 12–15 October 2016.

- Edmett, A.; Ichaporia, N.; Crompton, H.; Crichton, R. Artificial Intelligence and English Language Teaching: Preparing for the Future. British Council 2023.

- Hajkowicz, S.; Sanderson, C.; Karimi, S.; Bratanova, A.; Naughtin, C. Artificial intelligence adoption in the physical sciences, natural sciences, life sciences, social sciences and the arts and humanities: A bibliometric analysis of research publications from 1960–2021. Technol. Soc. 2023, 74, 102260.

- Rahman, M.M.; Watanobe, Y.; Nakamura, K. A bidirectional LSTM language model for code evaluation and repair. Symmetry 2021, 13, 247.

- Wollny, S.; Schneider, J.; Di Mitri, D.; Weidlich, J.; Rittberger, M.; Drachsler, H. Are we there yet? A systematic literature review on chatbots in education. Front. Artif. Intell. 2021, 4, 654924.

- Rahman, M.M.; Watanobe, Y.; Rage, U.K.; Nakamura, K. A novel rule-based online judge recommender system to promote computer programming education. In Proceedings of the Advances and Trends in Artificial Intelligence. From Theory to Practice: 34th International Conference on Industrial, Engineering and Other Applications of Applied Intelligent Systems, IEA/AIE 2021, Kuala Lumpur, Malaysia, 26–29 July 2021; pp. 15–27.

- Rahman, M.M.; Watanobe, Y.; Nakamura, K. Source code assessment and classification based on estimated error probability using attentive LSTM language model and its application in programming education. Appl. Sci. 2020, 10, 2973.

- Rahman, M.M.; Watanobe, Y.; Kiran, R.U.; Kabir, R. A stacked bidirectional LSTM model for classifying source codes built in MPLs. In Proceedings of the Machine Learning and Principles and Practice of Knowledge Discovery in Databases: International Workshops of ECML PKDD 2021, Virtual Event, 13–17 September 2021; pp. 75–89.

- Litman, D. Natural language processing for enhancing teaching and learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016; Volume 30.

- ChatGPT. Available online: https://chatgpt.com (accessed on 26 February 2025).

- Gemini. Available online: https://gemini.google.com/app (accessed on 26 February 2025).

- Claude. Available online: https://claude.ai/ (accessed on 26 February 2025).

- LLAMA. Available online: https://www.llama.com (accessed on 26 February 2025).

- Tian, S.; Jin, Q.; Yeganova, L.; Lai, P.-T.; Zhu, Q.; Chen, X.; Yang, Y.; Chen, Q.; Kim, W.; Comeau, D.C.; et al. Opportunities and challenges for ChatGPT and large language models in biomedicine and health. Brief. Bioinform. 2024, 25, bbad493.

- Gill, S.S.; Xu, M.; Patros, P.; Wu, H.; Kaur, R.; Kaur, K.; Fuller, S.; Singh, M.; Arora, P.; Parlikad, A.K.; et al. Transformative effects of ChatGPT on modern education: Emerging Era of AI Chatbots. Internet Things Cyber-Phys. Syst. 2024, 4, 19–23.

- Achiam, J.; Adler, S.; Agarwal, S.; Ahmad, L.; Akkaya, I.; Aleman, F.L.; Almeida, D.; Altenschmidt, J.; Altman, S.; Anadkat, S.; et al. GPT-4 technical report. arXiv 2023, arXiv:2303.08774.

- Nan, D.; Sun, S.; Zhang, S.; Zhao, X.; Kim, J.H. Analyzing behavioral intentions toward Generative Artificial Intelligence: The case of ChatGPT. Univers. Access Inf. Soc. 2024, 24, 885–895.

- Argyle, L.P.; Busby, E.C.; Fulda, N.; Gubler, J.R.; Rytting, C.; Wingate, D. Out of one, many: Using language models to simulate human samples. Political Anal. 2023, 31, 337–351.

- Rice, S.; Crouse, S.R.; Winter, S.R.; Rice, C. The advantages and limitations of using ChatGPT to enhance technological research. Technol. Soc. 2024, 76, 102426.

- Hämäläinen, P.; Tavast, M.; Kunnari, A. Evaluating Large Language Models in Generating Synthetic HCI Research Data: A Case Study. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, Hamburg, Germany, 23–28 April 2023; pp. 1–19.

- Wang, S.; Xu, T.; Li, H.; Zhang, C.; Liang, J.; Tang, J.; Yu, P.S.; Wen, Q. Large Language Models for Education: A Survey and Outlook. arXiv 2024, arXiv:2403.18105. Available online: https://arxiv.org/html/2403.18105v1 (accessed on 26 February 2025).

- Hattie, J. Visible Learning: A Synthesis of Over 800 Meta-Analyses Relating to Achievement; Routledge: London, UK, 2009.

- Alzahrani, L.; Seth, K.P. Factors influencing students’ satisfaction with continuous use of learning management systems during the COVID-19 pandemic: An empirical study. Educ. Inf. Technol. 2021, 26, 6787–6805.

- Learning Management System, EN.WIKIPEDIA.ORG. Available online: https://en.wikipedia.org/wiki/Learning_management_system (accessed on 26 February 2025).

- Studies on the Impact of Cellphones on Academics, CACSD.ORG. Available online: https://www.cacsd.org/article/1698443 (accessed on 26 February 2025).

- Lepp, A.; Barkley, J.E.; Karpinski, A.C. The relationship between cell phone use and academic performance in a sample of U.S. college students. Comput. Hum. Behav. 2015, 31, 343–350.

- Junco, R. In-class multitasking and academic performance. Comput. Hum. Behav. 2012, 28, 2236–2243.

- Clinton, V. Reading from paper compared to screens: A systematic review and meta-analysis. J. Res. Read. 2019, 42, 288–325.

- Mizrachi, D.; Salaz, A.M.; Kurbanoglu, S.; Boustany, J. Academic reading format preferences and behaviors among university students worldwide: A comparative survey analysis. PLoS ONE 2018, 13, e0197444.

- Mizrachi, D.; Salaz, A.M.; Kurbanoglu, S.; Boustany, J. The Academic Reading Format International Study (ARFIS): Final results of a comparative survey analysis of 21,265 students in 33 countries. Ref. Serv. Rev. 2021, 49, 250–266.

- Mizrachi, D.; Salaz, A.M. Beyond the surveys: Qualitative analysis from the academic reading format international study (ARFIS). Coll. Res. Libr. 2020, 81, 808.

- Welsen, S.; Wanatowski, D.; Zhao, D. Behavior of Science and Engineering Students to Digital Reading: Educational Disruption and Beyond. Educ. Sci. 2023, 13, 484.

- Quizlet’s State of AI in Education Survey Reveals Higher Education is Leading AI Adoption. Available online: https://www.prnewswire.com/news-releases/quizlets-state-of-ai-in-education-survey-reveals-higher-education-is-leading-ai-adoption-302195348.html (accessed on 26 February 2025).

- Sandu, N.; Gide, E. Adoption of AI-Chatbots to Enhance Student Learning Experience in Higher Education in India. In Proceedings of the 2019 18th International Conference on Information Technology Based Higher Education and Training (ITHET), Magdeburg, Germany, 26–27 September 2019; pp. 1–5.

- Bruner, J.; Barlow, M.A. What are Conversational Bots?: An Introduction to and Overview of AI-driven Chatbots; O’Reilly Media: Sebastopol, CA, USA, 2016.

- Shevat, A. Designing Bots: Creating Conversational Experiences; O’Reilly Media, Inc.: Sebastopol, CA, USA, 2017.

- Davenport, T.H. The AI Advantage: How to Put the Artificial Intelligence Revolution to Work; The MIT Press: Cambridge, MA, USA, 2018.

- Verleger, M.; Pembridge, J. A Pilot Study Integrating an AI-driven Chatbot in an Introductory Programming Course. In Proceedings of the Frontiers in Education Conference, San Jose, CA, USA, 3–6 October 2019.

- Duncker, D. Chatting with chatbots: Sign making in text-based human-computer interaction. Sign Syst. Stud. 2020, 48, 79–100.

- Smutny, P.; Schreiberova, P. Chatbots for learning: A review of educational chatbots for the Facebook Messenger. Comput. Educ. 2020, 151, 103862.

- Miklosik, A.; Evans, N.; Qureshi, A.M.A. The Use of Chatbots in Digital Business Transformation: A Systematic Literature Review. IEEE Access 2021, 9, 106530–106539.

- Singh, S.; Thakur, H.K. Survey of Various AI Chatbots Based on Technology Used. In Proceedings of the ICRITO 2020-IEEE 8th International Conference on Reliability, Infocom Technologies and Optimization (Trends and Future Directions), Noida, India, 4–5 June 2020; pp. 1074–1079.

- Okonkwo, C.W.; Ade-Ibijola, A. Python-Bot: A Chatbot for Teaching Python Programming. Int. J. Adv. Comput. Sci. Appl. 2021, 12, 202–208.

- Dan, Y.; Lei, Z.; Gu, Y.; Li, Y.; Yin, J.; Lin, J.; Ye, L.; Tie, Z.; Zhou, Y.; Wang, Y.; et al. EduChat: A Large-Scale Language Model-based Chatbot System for Intelligent Education. arXiv 2023, arXiv:2308.02773.

- GPTeens. 2024. Available online: https://en.wikipedia.org/wiki/GPTeens?utm_source=chatgpt.com (accessed on 26 February 2025).

- Li, Y.; Qu, S.; Shen, J.; Min, S.; Yu, Z. Curriculum-Driven EduBot: A Framework for Developing Language Learning Chatbots Through Synthesizing Conversational Data. arXiv 2023, arXiv:2309.16804.

- BlazeSQL. Available online: https://www.blazesql.com/ (accessed on 26 February 2025).

- OpenSQL-From Questions to SQL. Available online: https://web.archive.org/web/20250115110920/http://www.opensql.ai/ (accessed on 5 May 2025).

- Chat with SQL Databases Using AI. Available online: https://www.askyourdatabase.com/?utm_source=chatgpt.com (accessed on 26 February 2025).

- Mahapatra, S. Impact of ChatGPT on ESL Students’ Academic Writing Skills: A Mixed Methods Intervention Study. Smart Learn. Environ. 2024, 11, 9.

- Pros And Cons Of Traditional Teaching: A Detailed Guide. Available online: https://www.billabonghighschool.com/blogs/pros-and-cons-of-traditional-teaching-a-detailed-guide/ (accessed on 26 February 2025).

- Google Cloud Run. Available online: https://cloud.google.com/run#features (accessed on 26 February 2025).

- Gao, Y.; Xiong, Y.; Gao, X.; Jia, K.; Pan, J.; Bi, Y.; Dai, Y.; Sun, J.; Wang, M.; Wang, H. Retrieval-Augmented Generation for Large Language Models: A Survey. arXiv 2024, arXiv:2312.10997.

- Zheng, L.; Chiang, W.L.; Sheng, Y.; Zhuang, S.; Wu, Z.; Zhuang, Y.; Lin, Z.; Li, Z.; Li, D.; Xing, E.; et al. Judging LLM-as-a-Judge with MT-Bench and Chatbot Arena. Adv. Neural Inf. Process. Syst. 2023, 36, 46595–46623.

- Liang, P.; Bommasani, R.; Lee, T.; Tsipras, D.; Soylu, D.; Yasunaga, M.; Zhang, Y.; Narayanan, D.; Wu, Y.; Kumar, A.; et al. Holistic Evaluation of Language Models. arXiv 2023, arXiv:2211.09110.

- Gemini Team. Gemini: A Family of Highly Capable Multimodal Models. arXiv 2023, arXiv:2312.11805.

- Gemini Team. Gemini 1.5: Unlocking Multimodal Understanding Across Millions of Tokens of Context. arXiv 2024, arXiv:2403.05530.

- Akter, S.N.; Yu, Z.; Muhamed, A.; Ou, T.; Bäuerle, A.; Cabrera, Á.A.; Dholakia, K.; Xiong, C.; Neubig, G. An In-depth Look at Gemini’s Language Abilities. arXiv 2023, arXiv:2312.11444.

- Google Cloud Vertex AI. Available online: https://cloud.google.com/vertex-ai (accessed on 26 February 2025).

- Ouyang, L.; Wu, J.; Jiang, X.; Almeida, D.; Wainwright, C.; Mishkin, P.; Lowe, R. Training language models to follow instructions with human feedback. arXiv 2022, arXiv:2203.02155.

- Bai, Y.; Kadavath, S.; Kundu, S.; Askell, A.; Kernion, J.; Jones, A.; Chen, A.; Goldie, A.; Mirhoseini, A.; McKinnon, C. Constitutional AI: Harmlessness from AI Feedback. arXiv 2022, arXiv:2212.08073.

- Microsoft Search AI. Available online: https://learn.microsoft.com/en-us/rest/api/searchservice/ (accessed on 26 February 2025).

- Yang, Y.; Li, Z.; Dong, Q.; Xia, H.; Sui, Z. Can Large Multimodal Models Uncover Deep Semantics Behind Images? arXiv 2024, arXiv:2402.11281.

- RAG Evaluation. Available online: https://docs.confident-ai.com/guides/guides-rag-evaluation (accessed on 26 February 2025).

- Zhang, Y.; Mai, Y.; Roberts, J.S.R.; Bommasani, R.; Dubois, Y.; Liang, P. HELM Instruct: A Multidimensional Instruction Following Evaluation Framework with Absolute Ratings. 2024. Available online: https://crfm.stanford.edu/2024/02/18/helm-instruct.html (accessed on 26 February 2025).

- Jauhiainen, J.S.; Guerra, A.G. Evaluating Students’ Open-ended Written Responses with LLMs: Using the RAG Framework for GPT-3.5, GPT-4, Claude-3, and Mistral-Large. arXiv 2024.

- Best Practices for LLM Evaluation of RAG Applications-A Case Study on the Databricks Documentation Bot. Available online: https://www.databricks.com/blog/LLM-auto-eval-best-practices-RAG (accessed on 26 February 2025).

- Renze, M.; Guven, E. The Effect of Sampling Temperature on Problem Solving in Large Language Models. 2024. Available online: https://arxiv.org/html/2402.05201v1 (accessed on 26 February 2025).

- Reimers, N.; Gurevych, I. Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks. arXiv 2019, arXiv:1908.10084.

- Reimers, N.; Gurevych, I. Making Monolingual Sentence Embeddings Multilingual using Knowledge Distillation. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP); pp. 4512–4525. Available online: https://arxiv.org/abs/2004.09813 (accessed on 26 February 2025).

| Classification | Type | Description | Example |
|---|---|---|---|
| Knowledge Domain | Domain-Specific | Specialized in a specific field or industry. | Python-Bot, Babylon Health |
| | General-Purpose | Broader functionality across multiple domains. | Siri, Alexa |
| Service Provided | Informational | Provides facts, updates, and general information. | FIT-EBot |
| | Transactional | Handles tasks like booking, purchasing, or transactions. | Erica by BOA |
| | Support/Assistant | Assists with troubleshooting or performing complex tasks. | IT support bots |
| Goals | Task-Oriented | Designed to complete specific user-defined tasks. | BlazeSQL AI |
| | Conversational | Focused on engaging in natural dialogue with users. | ChatGPT |
| | Learning/Training | Educates or trains users in specific domains. | Percy |
| Input/Response | Rule-Based | Operates on pre-written scripts and decision logic. | ELIZA |
| | AI-Powered | Leverages AI and NLP to provide dynamic, context-aware responses. | OpenSQL.ai |
| | Hybrid | Combines rule-based and AI features for versatile interaction. | Many enterprise chatbots |
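The Input/Response rows contrast rule-based, AI-powered, and hybrid chatbots. The following is a minimal Python sketch of the hybrid pattern, assuming a hypothetical regex rule table (`RULES`) and a stand-in `fake_llm` function in place of a real model API: scripted rules answer predictable questions deterministically, and anything unmatched falls through to the model.

```python
import re
from typing import Callable, Optional

# Hypothetical rule table: (compiled regex, scripted answer) pairs.
RULES = [
    (re.compile(r"\b(hi|hello)\b", re.IGNORECASE), "Hello! Ask me about the course."),
    (re.compile(r"\bexam date\b", re.IGNORECASE), "The exam date is posted on the course page."),
]


def rule_based_reply(message: str) -> Optional[str]:
    """Return the first scripted answer whose pattern matches, or None."""
    for pattern, answer in RULES:
        if pattern.search(message):
            return answer
    return None


def hybrid_reply(message: str, llm: Callable[[str], str]) -> str:
    """Try the deterministic rules first; otherwise defer to the AI model."""
    scripted = rule_based_reply(message)
    if scripted is not None:
        return scripted
    return llm(message)


def fake_llm(message: str) -> str:
    """Stand-in for a real LLM client (hypothetical); just echoes the question."""
    return f"[LLM answer to: {message}]"


print(hybrid_reply("Hello there", fake_llm))
print(hybrid_reply("What is a foreign key?", fake_llm))
```

Checking the rules before the model keeps frequent, fixed answers cheap and predictable, while the model covers open-ended questions; a production system would likely also need logging and some check on the quality of the model's output.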

| Chatbot Name | Purpose | Features |
|---|---|---|
| Python-Bot [61] | Python-Bot is a learning assistant chatbot designed to assist novice programmers in understanding the Python programming language. | User-Friendly Interface: Offers an intuitive platform for students to interact with, making the learning process more accessible. |
| | | Educational Focus: Provides detailed explanations of Python concepts, accompanied by practical examples to reinforce learning. |
| | | Interactive Learning: Engages users in a conversational manner, allowing them to ask questions and receive immediate feedback. |
| EduChat [62] | EduChat is an LLM-based chatbot system designed to support personalized, fair, and compassionate intelligent education for teachers, students, and parents. | Educational Functions: Enhances capabilities such as open question answering, essay assessment, Socratic teaching, and emotional support. |
| | | Domain-Specific Knowledge: Pre-trained on educational formats to provide accurate and relevant information. |
| | | Tool Integration: Fine-tuned to utilize various tools, enhancing its educational support capabilities. |
| GPTeens [63] | GPTeens is an AI-based chatbot developed to provide educational materials aligned with school curricula for teenage users. | Interactive Format: Utilizes natural language processing to support conversational interactions with learners. |
| | | Age-Appropriate Design: Delivers responses suitable for teenage users. |
| | | Curriculum Integration: Trained on educational materials aligned with the South Korean national curriculum. |
| EduBot [64] | Curriculum-Driven EduBot is a framework for developing language learning chatbots for assisting students. | Topic Extraction: Extracts pertinent topics from textbooks to generate dialogues related to these topics. |
| | | Conversational Data Synthesis: Uses large language models to generate dialogues, which are then used to fine-tune the chatbot. |
| | | User Adaptation: Adapts its dialogue to match the user’s proficiency level, providing personalized conversation practice. |
| BlazeSQL [65] | BlazeSQL is designed to transform natural language questions into SQL queries, enabling users to extract data insights from their databases without extensive SQL knowledge. | AI-Driven Query Generation: Utilizes advanced AI technology to comprehend database schemas and generate SQL queries based on user input in plain English. |
| | | Broad Database Compatibility: Supports various databases, including MySQL, PostgreSQL, SQLite, Microsoft SQL Server, and Snowflake. |
| | | Privacy-Focused Operation: Operates locally on the user’s desktop, ensuring that sensitive data remain on the user’s machine. |
| | | No-Code Data Visualization: Generates dashboards and visualizations directly from query results, simplifying data presentation. |
| OpenSQL.ai [66] | OpenSQL.ai aims to simplify the process of SQL query generation by allowing users to interact with their databases through conversational language. | Text-to-SQL Conversion: Transforms user questions posed in plain English into precise SQL code, facilitating data retrieval without manual query writing. |
| | | User-Friendly Interface: Designed for both technical and non-technical users, making database interactions more accessible. |
| | | Efficiency Enhancement: Streamlines data tasks by reducing the need for complex SQL coding, thereby increasing productivity. |
| AskYourDatabase [67] | AskYourDatabase is an AI-powered platform that enables users to interact with their SQL and NoSQL databases using natural language inputs, simplifying data querying and analysis. | Natural Language Interaction: Allows users to query, visualize, manage, and analyze data by asking questions in plain language, eliminating the need for SQL expertise. |
| | | Data Visualization: Instantly converts complex data into clear, engaging visuals without requiring coding skills. |
| | | Broad Database Support: Compatible with popular databases such as MySQL, PostgreSQL, MongoDB, and SQL Server. |
| | | Self-Learning Capability: The AI learns from data and user feedback, improving its performance over time. |
| | | Access Control and Embeddability: Offers fine-grained user-level access control and can be embedded as a widget on websites. |
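BlazeSQL, OpenSQL.ai, and AskYourDatabase all describe turning a plain-language question into SQL using knowledge of the database schema. Their internals are not described here, so the following is only a generic Python sketch under stated assumptions: it reads the schema of a SQLite database with the standard `sqlite3` module, assembles a prompt for a model (the model call itself is omitted and the generated SQL is hard-coded), and executes the result only if it looks like a single `SELECT`. The helper names `schema_text`, `build_prompt`, and `run_readonly` are hypothetical.

```python
import sqlite3


def schema_text(conn: sqlite3.Connection) -> str:
    """Return the CREATE TABLE statements so a model can see the schema."""
    rows = conn.execute(
        "SELECT sql FROM sqlite_master "
        "WHERE type = 'table' AND sql IS NOT NULL ORDER BY name"
    ).fetchall()
    return "\n".join(row[0] + ";" for row in rows)


def build_prompt(conn: sqlite3.Connection, question: str) -> str:
    """Combine the schema and the user's question into one text-to-SQL prompt."""
    return (
        "Given this SQLite schema:\n"
        f"{schema_text(conn)}\n"
        f"Write one SQL SELECT query that answers: {question}\n"
        "Return only the SQL."
    )


def run_readonly(conn: sqlite3.Connection, sql: str) -> list:
    """Execute generated SQL only if it is a single SELECT statement (coarse guard)."""
    statement = sql.strip().rstrip(";").strip()
    if ";" in statement or not statement.lower().startswith("select"):
        raise ValueError("only a single SELECT statement is allowed")
    return conn.execute(statement).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE students (id INTEGER PRIMARY KEY, name TEXT, grade REAL)")
conn.executemany(
    "INSERT INTO students (name, grade) VALUES (?, ?)",
    [("Ana", 9.5), ("Ion", 7.0)],
)
print(build_prompt(conn, "Which students have a grade above 8?"))

# In a real tool this string would come back from the model.
generated_sql = "SELECT name FROM students WHERE grade > 8;"
print(run_readonly(conn, generated_sql))
```

The prefix check is deliberately conservative (it also rejects valid `WITH` queries); opening the database read-only or enabling SQLite's `query_only` pragma would be a sturdier safeguard.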

| Course | Semester | ECTS | Hours | Year |
|---|---|---|---|---|
| Databases (DBs) | 4th | 4 | 56 | 2020–2025 |
| Database Programming Techniques (DBPTs) | 5th | 5 | 56 | 2020–2025 |
| Object-Oriented Programming (OOP) | 3rd | 6 | 70 | 2020–2025 |
| Designing Algorithms (DAs) | 2nd | 4 | 56 | 2021–2024 |

| Course | Students | % Male | % Female |
|---|---|---|---|
| Databases (DBs) | 37 | 89% | 11% |
| Database Programming Techniques (DBPTs) | 36 | 91% | 8% |
| Object-Oriented Programming (OOP) | 37 | 89% | 11% |
| Designing Algorithms (DAs) | 49 | 83% | 16% |

| Criteria | Question | Rating |
|---|---|---|
| 1. Ease of Application Setup | How would you rate the ease of setting up the application, including adding courses, creating exam questions, and generating exam questions automatically? | 5/5 |
| 2. Chatbot Answer Quality | How do you rate the accuracy and relevance of the chatbot’s responses to questions in Module 1? | 4.8/5 |
| 3. Evaluator’s Judgment Accuracy | How do you rate the quality and fairness of the evaluator’s assessment of student answers? | 4.1/5 |
| 4. Evaluator Hints for Wrong Answers | How useful are the evaluator’s hints when a student selects multiple answers in a question? | 3.2/5 |

| Criteria | Question | Rating |
|---|---|---|
| 1. Usability | How would you rate the ease of using and accessing Modules 1/2/3? | 4.2/5 |
| 2. Chatbot Answer Clarity | How easy to understand are the chatbot’s answers and suggestions? | 4.9/5 |
| 3. Chatbot Answer Usefulness | How often does the chatbot fully answer your question? | 5/5 |
| 4. Bugs | How often was the application unresponsive, or how often did it crash? | 2.9/5 |
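Both evaluation tables report each criterion as a score out of 5. How the individual answers were aggregated is not stated in this section; one common approach, assumed here, is the arithmetic mean of 1–5 ratings. A minimal Python sketch with the hypothetical helper `likert_score` and made-up responses (not the study's data):

```python
from statistics import mean


def likert_score(responses: list[int], scale_max: int = 5) -> str:
    """Average ratings on a 1..scale_max scale and format them as 'x.x/scale_max'."""
    if not responses:
        raise ValueError("no responses to aggregate")
    if any(r < 1 or r > scale_max for r in responses):
        raise ValueError("rating outside the scale")
    return f"{mean(responses):.1f}/{scale_max}"


# Hypothetical ratings from five respondents for one criterion.
print(likert_score([5, 4, 4, 5, 3]))  # prints 4.2/5
```

With few respondents the mean is sensitive to single answers, so reporting the number of respondents (or the median) next to each score would make such ratings easier to interpret.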

| Source | Difficulty | No. of Questions | Type |
|---|---|---|---|
| Manual Test 1 (2023 Exam) | 1 | 8 | Single-choice |
| Manual Test 2 (2023 Exam) | 1 | 8 | Single-choice |
| o3-mini-high | 1 | 6 | Free-answer |
| o3-mini-high | 2 | 6 | Free-answer |
| o3-mini-high | 3 | 6 | Free-answer |

| LLM | Gemini-1.0-Pro | Gemini-1.5-Flash | Gemini-1.5-Pro | Gemini-2.0-Flash | Gemini-2.0-Pro |
|---|---|---|---|---|---|
| Fraction Correct | 0.95 | 0.975 | 0.975 | 1 | 0.975 |
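The table reports each Gemini model's result as a value between 0 and 1. Assuming it is the share of items on which a model's output matched a reference answer key, it could be computed as in this minimal Python sketch; `fraction_correct` and the sample answers are hypothetical.

```python
def fraction_correct(predicted: list[str], answer_key: list[str]) -> float:
    """Share of items whose predicted answer matches the key (case-insensitive)."""
    if not answer_key or len(predicted) != len(answer_key):
        raise ValueError("need one prediction per key entry")
    hits = sum(
        p.strip().lower() == k.strip().lower()
        for p, k in zip(predicted, answer_key)
    )
    return hits / len(answer_key)


# Hypothetical single-choice answers and key for four questions.
key = ["b", "a", "d", "c"]
model_answers = ["B", "a", "c", "c"]
print(fraction_correct(model_answers, key))  # prints 0.75
```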

| Model Variant | Cost per 1M Input Tokens | Cost per 1M Output Tokens | Cost for 10M Input Tokens | Cost for 45K Output Tokens | Total Estimated Cost |
|---|---|---|---|---|---|
| Gemini 1.5 Flash | USD 0.15 | USD 0.60 | USD 1.50 | USD 0.03 | USD 1.53 |
| Gemini 1.5 Pro | USD 2.50 | USD 10.00 | USD 25.00 | USD 0.45 | USD 25.45 |
| Gemini 2.0 Flash | USD 0.10 | USD 0.40 | USD 1.00 | USD 0.02 | USD 1.02 |
| Claude 3.5 Sonnet | USD 3.00 | USD 15.00 | USD 30.00 | USD 0.68 | USD 30.68 |
| GPT-4o | USD 2.50 | USD 20.00 | USD 25.00 | USD 0.90 | USD 25.90 |
| DeepSeek (V3) | USD 0.14 | USD 0.28 | USD 1.40 | USD 0.01 | USD 1.41 |
| Mistral (NeMo) | USD 0.15 | USD 0.15 | USD 1.50 | USD 0.01 | USD 1.51 |
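Each total above follows a linear model: cost = (input tokens / 10^6) × input price + (output tokens / 10^6) × output price. A minimal Python sketch that recomputes several rows from the listed per-1M prices, assuming flat per-token pricing with no caching discounts, batch rates, or context-length tiers (which real price lists may include); `estimate_cost` is a hypothetical helper.

```python
# Per-1M-token prices in USD (input, output), copied from the table above.
PRICES = {
    "Gemini 1.5 Flash": (0.15, 0.60),
    "Gemini 1.5 Pro": (2.50, 10.00),
    "Gemini 2.0 Flash": (0.10, 0.40),
    "DeepSeek (V3)": (0.14, 0.28),
}


def estimate_cost(input_tokens: int, output_tokens: int,
                  price_in: float, price_out: float) -> float:
    """Linear cost model: tokens / 1M * price per 1M, for input plus output."""
    return (input_tokens / 1_000_000) * price_in + (output_tokens / 1_000_000) * price_out


INPUT_TOKENS = 10_000_000  # 10M input tokens, as in the table
OUTPUT_TOKENS = 45_000     # 45K output tokens, as in the table

for model, (p_in, p_out) in PRICES.items():
    total = estimate_cost(INPUT_TOKENS, OUTPUT_TOKENS, p_in, p_out)
    print(f"{model}: USD {total:.2f}")
```

Because input tokens dominate (10M versus 45K), the input price almost entirely determines the total; for Gemini 1.5 Flash, for example, 10 × 0.15 + 0.045 × 0.60 = 1.527, which rounds to USD 1.53.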

| Criteria | Current App | Coursera [47] | Stanford [46] | Udemy [47] | edX [46] |
|---|---|---|---|---|---|
| Cost for Student | Free | Approx. EUR 40 per course per month | Tuition-based, varies by program | Varies (EUR 10–200 per course) | Free courses, paid certificates |
| Cost for Teacher/University | Free < 1 year LLM tokens | Free for universities; revenue-sharing for instructors | Salary-based | Instructors can set prices or offer courses for free | Free for universities |
| Ease of Use | High | High | High | High | High |
| Video Content | Real classes available | Yes | Yes | Yes | Yes |
| AI Assistant | Yes | Yes | No | No | No |
| AI Evaluator | Yes | Yes | No | No | Yes |
| Possible Reach (Students) | 1 billion | Approx. 148 million registered learners | Enrolled students, 20k/year | Over 57 million learners | Over 110 million learners |
| Possible Reach (Teachers) | 97 million total | Thousands of instructors | Limited to faculty members | Open to anyone interested in teaching | University professors |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Runceanu, A.; Balan, A.; Gavanescu, L.; Neagu, M.-M.; Cojocaru, C.; Borcosi, I.; Balacescu, A. Enhancing the Learning Experience with AI. Information 2025, 16, 410. https://doi.org/10.3390/info16050410

Runceanu A, Balan A, Gavanescu L, Neagu M-M, Cojocaru C, Borcosi I, Balacescu A. Enhancing the Learning Experience with AI. Information. 2025; 16(5):410. https://doi.org/10.3390/info16050410

Chicago/Turabian Style: Runceanu, Adrian, Adrian Balan, Laviniu Gavanescu, Marian-Madalin Neagu, Cosmin Cojocaru, Ilie Borcosi, and Aniela Balacescu. 2025. "Enhancing the Learning Experience with AI" Information 16, no. 5: 410. https://doi.org/10.3390/info16050410

APA Style: Runceanu, A., Balan, A., Gavanescu, L., Neagu, M.-M., Cojocaru, C., Borcosi, I., & Balacescu, A. (2025). Enhancing the Learning Experience with AI. Information, 16(5), 410. https://doi.org/10.3390/info16050410