1. Introduction

The rise of educational software across multiple learning environments has fundamentally modified the manner in which knowledge is delivered, accessed, and measured. Educational software creates automated feedback, self-regulated learning, and interactions that can accommodate a vast array of learners and subjects [1]. As these systems continue to be used more in both formal and informal learning contexts, researchers and software developers are less concerned with whether learners can access these educational technologies and are more concerned with how they can be tailored to suit the changing needs, aims, and behaviors of individual learners [2].

Today’s classrooms, whether in-person or online, comprise increasingly heterogeneous groups of learners [3]. Learners join the classroom with a variety of social and cultural backgrounds, previous knowledge, cognitive styles, motivations, and learning preferences. Thus, a universal approach to instructional design is often insufficient. Personalization has therefore become a priority in educational systems design [4]; it aims to tailor content and learner support strategies to the individual learner’s profile and needs at a specific moment.

Personalization in educational contexts is not solely about content sequencing or recommendation [5]. It is also important to adapt the instructional strategy, that is, the choice of how to respond to a learner’s action online, in real time [6]. For instance, should the system provide a hint, give an explicit code example, prompt the learner to self-reflect, or advance the learner? Determining the most helpful instructional strategy at the right moment may influence the learner’s level of engagement, understanding, and retention of material.

A number of approaches have been proposed for making these types of adaptive instructional decisions [7], including rule-based systems, Bayesian networks, fuzzy logic, reinforcement learning, case-based reasoning, and others. Each methodology has its own advantages and drawbacks. However, one family of methods, Multi-Criteria Decision Making (MCDM), is of particular interest to education because instructors are often attempting to balance many different factors related to the learner [8]. One MCDM method is the Technique for Order Preference by Similarity to the Ideal Solution (TOPSIS).

TOPSIS is a widely used MCDM method that was introduced by Hwang and Yoon in 1981 [9]. TOPSIS finds the best solution from a finite set of alternatives by comparing the distance of each alternative to an ideal solution (the best case) and an anti-ideal solution (the worst case). The options are assessed against various weighted criteria, and the option that is closest to the ideal solution and furthest from the anti-ideal solution is selected. The method is intuitive, computationally simple, and appropriate for real-time usage, which makes it a very good fit for learner-centered instructional systems [10]. We used TOPSIS for this work because it can accommodate multiple, often competing, metrics for learners (performance, effort, engagement, etc.) in a mathematically principled and interpretable manner.

This paper presents a decision support system for learner-centered instructional strategy adaptation in personalized learning. The system collects real-time performance data from students and applies a decision support model based on the TOPSIS algorithm to determine the most pedagogically relevant instructional strategy among a collection of strategies (i.e., contextual hints, examples with annotations, reflection prompts, and scaffolding activities). This research is novel in that we layer a real-time multi-criteria decision-making model into an adaptive instructional engine, allowing interventions based on learner state rather than content order. As a testbed for our research, we applied this framework to a Java programming learning environment, which provided a well-structured and cognitively demanding context to validate adaptive instructional strategies. Java was selected as the instructional domain due to its structured syntax, object-oriented paradigm, and widespread use in programming education, factors that make it ideal for evaluating the effectiveness of real-time adaptive instructional interventions [11,12]. The work describes the architecture of the system, the decision-making model, and the implementation; we then assess the system with respect to cognitive, behavioral, and usability performance. The model is an open, extensible, and empirically based method of personalization founded on MCDM theory that can be applied to many educational contexts. In summary, the contribution of this work is the integration of the TOPSIS multi-criteria decision-making algorithm into a real-time adaptive instructional decision-support system for personalized learning interventions. Unlike traditional systems focusing primarily on content sequencing, this approach dynamically selects instructional strategies tailored specifically to individual learner states.

The remainder of the paper is structured as follows. Section 2 discusses related work. Section 3 outlines the system architecture. Section 4 details the TOPSIS-based decision framework. Section 5 presents the instructional strategy adaptation and implementation. Section 6 covers the system evaluation. Section 7 discusses the findings, and Section 8 provides conclusions and future directions.

2. Related Work

Adaptive learning systems in computer science education have progressed tremendously in programming pedagogy [13,14,15,16,17,18,19]. Adaptive learning systems typically seek to individualize the educational experience by quickly acting upon data from the learners’ direct experience. When the context is Java programming and other technical subjects, customization often relates mostly to content difficulty, recommendations, and pacing [20,21]. Some intelligent tutoring systems, such as [22,23], analyze the learners’ submissions for context-aware feedback. Other systems base activity selection or explanations upon a learner profile based on learning style or prior knowledge. Generally, while these systems may adapt what is presented, addressing how to pedagogically intervene through adaptive instructional strategies in consideration of learner states is less common.

To aid with this kind of instructional decision-making, a number of learner modeling methods have been implemented [24]. The most straightforward learner models are rule-based systems, which are easy to develop and maintain and achieve a high level of transparency; however, they lack scalability and flexibility [25,26,27]. More sophisticated learner models include Bayesian networks, which model the probabilistic relationships between different learner variables [28,29,30], and fuzzy logic systems, which use fuzzy membership functions to model uncertainty in learner variable interpretation [31,32,33]. There are also reinforcement learning methods, which optimize instructional policies through trial and error, and case-based reasoning systems, which handle new learners on the basis of similar previous learner profiles and successful instructional interventions [34,35,36]. There are advantages to all of these models, but they share issues of fairly high complexity, data dependency, or low interpretability, especially when deployed in real-time systems that require immediate action.

In recent years, MCDM approaches, particularly the Technique for Order Preference by Similarity to the Ideal Solution (TOPSIS), have begun to gain more attention and use in educational research [10,37,38,39,40,41,42,43,44,45,46]. TOPSIS has been used in a variety of contexts in education, including, for example, evaluating learning management systems, recommending learning resources, and matching course recommendations with learner objectives and goals. Each of these scenarios demonstrates the use of TOPSIS in situations requiring consideration of several, often competing, criteria. The TOPSIS method lends itself to this problem and provides an approach that has mathematical rigor and is also transparent for educators and learners to understand. However, use of TOPSIS has remained primarily in offline analysis or in generating generalized recommendations, and it has not been integrated into real-time adaptive systems [47].

Several prominent MCDM methods have been proposed in the literature, such as Analytic Hierarchy Process (AHP), ELECTRE, PROMETHEE, and VIKOR [48,49,50,51]. AHP is powerful for criteria weighting but can become computationally intensive in real-time contexts [52]. ELECTRE and PROMETHEE are useful in handling qualitative preferences, yet their complexity makes rapid decision-making challenging [53]. VIKOR is effective in compromise ranking but can be sensitive to criteria weights [54]. We selected the TOPSIS method due to its simplicity, computational efficiency, clear interpretability, and effectiveness in handling conflicting criteria. These attributes make TOPSIS particularly well-suited for the real-time adaptive instructional scenarios central to our study.

In this paper, we demonstrate a new paradigm for using TOPSIS by applying it in a learner-centered pedagogical framework for Java programming. Previous studies have used TOPSIS to make static assessments or recommendations of content, whereas our system assesses interactions in real time and ranks the candidate pedagogical strategies after each learning task. This is a shift from adaptation of content to adaptation of pedagogy and, in doing so, provides an additional dimension of personalization, i.e., tactical adaptation of how support is provided rather than what support is provided. Our work fills an important niche in describing and operationalizing a multi-criteria pedagogical decision-making process that is interpretable and dynamic, within the constraints programming education presents.

3. System Architecture

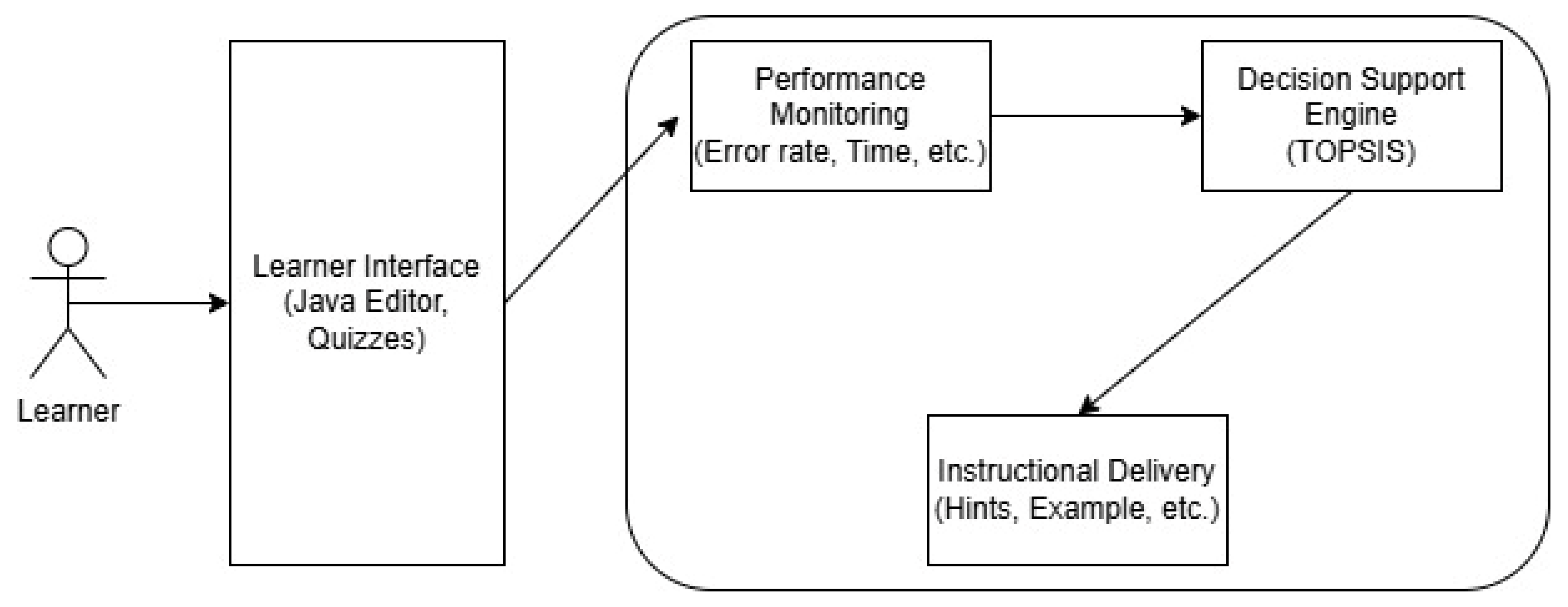

The proposed learning system is a web-based personalized learning ecosystem that facilitates Java programming instruction through real-time, data-driven adaptations to teaching strategies. The learner interface was developed as a web-based environment using standard front-end technologies suitable for interactive educational applications. This design enables real-time feedback, responsiveness, and seamless integration with the adaptive decision engine. The design of the system is based on pedagogy and computational decision support, ensuring that all learners receive timely and effective interventions based on their real-time performance and behavioral traces. All instructional decisions follow the steps of TOPSIS, which aggregates and prioritizes multiple learner-centered criteria to identify the best instructional strategy to offer the learner at any learning step. The system is built around three core functional components: Learner Performance Monitoring, Decision Support Engine, and Instructional Content Delivery. The three components work within a feedback loop that enables real-time personalization.

The Learner Performance Monitoring component is responsible for collecting and keeping up to date a range of data about the learner’s performance while they work on Java programming tasks. The environment provides learners with an integrated code editor, a submission console, and assessment modules. This component collects a range of overt behaviors and performance measures, including the number of syntax and semantic errors, total time spent completing tasks, hint request frequency, the number of compilations before submission, and quiz scores, among others. These raw data points are converted to normalized learner features to be used by the Decision Support Engine. In addition, the system captures a time series of learning events so that future iterations will be able to adapt based on trends in behavior.

Once the learner profile is updated, the Decision Support Engine is activated. It applies the TOPSIS algorithm to evaluate a number of predetermined instructional strategies against the individual characteristics of the learner, using the selected criteria. Each strategy is scored based on its estimated effectiveness given the learner’s recent performance. The criteria for scoring the strategies span a cognitive dimension (e.g., concept acquisition, overall error rate) and a behavioral dimension (e.g., amount of time on task, motivational proxies), and each may carry a different pedagogical weight.

The Instructional Content Delivery component implements the chosen strategy in an efficient, unobtrusive manner. Depending on the circumstances and the level of engagement, the system will provide an interactive hint, draw attention to a part of a past submission, provide a marked-up code example, or prompt for reflection on performance. The platform is designed to deliver instructional strategies with minimal disruption to the learner’s workflow while adapting seamlessly to the learner’s activity. The Instructional Content Delivery component logs all instructional activity for subsequent analysis and use by the system and also for pedagogical research.

The entire system was developed to use a data flow and interaction cycle in real time. Each learner action (e.g., submission of code, request for help, submission of quiz response) becomes a data acquisition point. The performance monitoring module takes in the information, processes it and encodes it for use by the decision support engine. The Decision Support Engine analyzes the learner state in near real-time using a TOPSIS evaluation process and determines the most appropriate response from an instructional context. This creates a continuous cycle that allows the system to be responsive to changes in learner engagement and performance throughout a session.

To further illustrate the process, consider a learner struggling with nested loops in Java. She has made several attempts, spent a long time on the task, and asked for several hints. All are indications of cognitive overload and low task mastery. The Learner Performance Monitoring component captures these metrics, and the Decision Support Engine evaluates various strategies. Among the options (providing another hint, suggesting a simpler exercise, or showing a complete worked example), the Decision Support Engine determines, based on weighted learner criteria, that the most appropriate action is to display an annotated example. The Instructional Content Delivery component presents this example so that her focus is on the structure and logic of nested loops. After reviewing it, she attempts the task again, and the adaptive cycle continues.

The architecture supports responsive instruction while retaining interpretability, accountability, and pedagogically sound practice. Whereas a black-box model would be opaque to the learner, the use of TOPSIS exposes the prompts, hints, and other strategies being considered, and it lets instructors and designers participate in the decision and refine the choice of strategies. This also allows the system to be extended with dynamic weighting, more diverse strategy pools, or links to richer learner models.

A high-level outline of the system architecture is shown in Figure 1, which encapsulates the major processing stages and their relationships in the real-time personalization loop while highlighting the modular and extensible architecture of the platform.

4. Multi-Criteria Decision Framework Using TOPSIS

At the heart of the instructional adaptation system is the Decision Support Engine, which utilizes the Technique for Order Preference by Similarity to the Ideal Solution (TOPSIS). The Decision Support Engine makes real-time instructional personalization possible in our system by choosing the most advantageous pedagogical action given the multi-dimensional representation of the learner’s cognitive and behavioral state. The procedures implemented by TOPSIS for educational decision making are dynamic, as opposed to traditional adaptation systems, which still mostly take the reactive approach of looking only at the learner’s data to select a learning method. TOPSIS allows each instructional strategy to be compared against current data while drawing upon a structured reasoning approach based on educational principles.

After every learner interaction, the system executes a comparison of a predetermined set of six instructional alternatives: (1) provide a contextual hint; (2) show an annotated code example; (3) assign an easier related task; (4) provide a reflection prompt; (5) permit the learner to proceed to the next concept; and (6) elicit a micro-quiz. These instructional alternatives were defined in collaboration with a group of programming educators and instructional designers. The instructional alternatives reflect different pedagogical intents, such as scaffolding, consolidation, remediation, motivation, and progression. This set was refined during iterative design sessions with educators, who identified common instructional moves relevant to Java programming instruction.

The decision-making process is based on six pedagogically relevant criteria: Error Rate, Time on Task, Mastery, Motivation Score, Hint Usage Frequency, and Speed of Progress. These indicators were informed by a literature review on learner analytics and validated through interviews with educators experienced in adaptive learning environments. Each criterion relates to a specific dimension of learner behavior and progress. For example, Error Rate indicates ongoing task performance; Time on Task indicates cognitive load; Mastery is inferred from performance on formative assessments; Motivation Score is related to persistence through tasks, voluntary resource access, and patterns of engagement with the platform; Hint Usage Frequency indicates learner independence or dependency; and Speed of Progress indicates potential pacing and engagement. These six criteria are summarized in Table 1, which outlines their descriptions and pedagogical relevance within the adaptive decision-making process.

To allow for comparison among these heterogeneous dimensions, a min–max normalization was used to normalize all input values to a [0, 1] scale. Once data were normalized, at each decision point a decision matrix was created that has a row for each instructional strategy and a column for the six criteria. Importantly, the entries for the decision matrix are not the learner’s raw values, but effect estimates for each strategy on each criterion given the learner’s current stage of development. These estimates are based on expert-informed heuristics, pilot trial information, and directly observing previous uses of the system. For example, past patterns indicate that an easier task tends to improve perceived success and decrease frustration (lower error rate and time), and that a reflection prompt tends to improve motivation but may increase time on task.
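The min-max step described above can be sketched as follows. This is a minimal illustration, not the system's actual routine: the metric names, raw values, and bounds are hypothetical, and clamping out-of-range values to [0, 1] is an added assumption.

```python
def min_max_normalize(value, lower, upper):
    """Scale a raw value into [0, 1] given its expected bounds."""
    if upper == lower:
        return 0.0  # degenerate range: nothing to scale against
    scaled = (value - lower) / (upper - lower)
    # Assumption: values outside the bounds are clamped rather than rejected.
    return min(1.0, max(0.0, scaled))


# Hypothetical raw metrics for one learner and hypothetical (min, max) bounds.
raw = {"error_rate": 6, "time_on_task": 540, "hint_usage": 3}
bounds = {"error_rate": (0, 12), "time_on_task": (60, 900), "hint_usage": (0, 6)}

features = {name: min_max_normalize(raw[name], *bounds[name]) for name in raw}
```

Putting every criterion on the same [0, 1] scale is what allows counts, seconds, and scores to be combined in a single decision matrix.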

Weight assignment is an integral part of TOPSIS; it defines how much each criterion contributes to the final ranking. The weights used in this developmental study were W = [0.25, 0.15, 0.20, 0.15, 0.10, 0.15], agreed upon using the Delphi method with five experts in computer science education, adaptive learning, and pedagogy. Over three iterative rounds, the experts ranked each criterion according to the extent to which it supports student learning and justified their scores. The agreed weights reflect the identified priorities: Error Rate and Mastery (correctness and understanding) were weighted most heavily, while the criteria relating to effort, motivation, and pace were moderately weighted.

With the matrix fully populated and weighted, the system calculates both the ideal solution vector, made from the best values of each criterion (e.g., error rate, mastery, speed), and the anti-ideal solution vector, made from the worst-case values. For each instructional alternative, the Euclidean distance to the ideal solution and to the anti-ideal solution is computed. The resulting closeness coefficient $C_i$ is then computed for each alternative:

$$C_i = \frac{D_i^{-}}{D_i^{+} + D_i^{-}}$$

where $D_i^{+}$ and $D_i^{-}$ represent the distances to the ideal and anti-ideal solutions, respectively. The strategy with the highest score is selected for delivery.
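The ranking procedure can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than the engine's actual code: the weights are taken from the text and assumed to follow the order in which the criteria are listed, the benefit/cost direction of each criterion is assumed, and the three-row matrix of effect estimates is hypothetical.

```python
import math

# Weights reported in the text, assumed order: Error Rate, Time on Task,
# Mastery, Motivation Score, Hint Usage Frequency, Speed of Progress.
WEIGHTS = [0.25, 0.15, 0.20, 0.15, 0.10, 0.15]
# Assumption: True = higher is better (benefit), False = lower is better (cost).
IS_BENEFIT = [False, False, True, True, False, True]


def topsis_closeness(matrix, weights, is_benefit):
    """Return the closeness coefficient of each row (alternative)."""
    weighted = [[w * x for w, x in zip(weights, row)] for row in matrix]
    columns = list(zip(*weighted))
    ideal = [max(c) if b else min(c) for c, b in zip(columns, is_benefit)]
    anti = [min(c) if b else max(c) for c, b in zip(columns, is_benefit)]
    scores = []
    for row in weighted:
        d_plus = math.dist(row, ideal)   # Euclidean distance to the ideal
        d_minus = math.dist(row, anti)   # Euclidean distance to the anti-ideal
        total = d_plus + d_minus
        scores.append(d_minus / total if total > 0 else 0.0)
    return scores


# Hypothetical effect estimates, already normalized to [0, 1].
strategies = ["hint", "annotated_example", "easier_task"]
matrix = [
    [0.6, 0.5, 0.5, 0.5, 0.7, 0.6],
    [0.3, 0.6, 0.8, 0.6, 0.4, 0.5],
    [0.4, 0.4, 0.6, 0.7, 0.5, 0.4],
]
closeness = topsis_closeness(matrix, WEIGHTS, IS_BENEFIT)
best = strategies[closeness.index(max(closeness))]
```

Because the computation is a handful of arithmetic passes over a small matrix, it is cheap enough to run after every learner interaction.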

To provide a tangible example, suppose we are considering three strategies (Hint, Code Example, and Micro-Quiz) with three simplified criteria. The normalized and weighted scores are shown in Table 2.

Given ideal and anti-ideal vectors defined as [0.3, 0.4, 0.9] and [0.8, 0.6, 0.3], respectively, we calculate the Euclidean distances, which enables us to calculate Ci for each strategy. If, for instance, the Quiz alternative has the highest closeness coefficient (Ci), it is chosen and implemented without delay. If two alternatives have almost identical values, the system employs a secondary rule (e.g., selecting the strategy that has not recently been used with the learner) to break ties and provide variety in pedagogy.
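The tie-breaking rule can be illustrated with a short sketch. The closeness values, the tolerance of 0.01 for "almost identical", and the function name are all hypothetical; the text specifies only that a strategy not recently used is preferred.

```python
def select_strategy(closeness, recent, tolerance=0.01):
    """Pick the strategy with the highest closeness coefficient.

    closeness: dict mapping strategy name -> closeness coefficient.
    recent: list of recently delivered strategies, most recent last.
    tolerance: assumed threshold below which two scores count as tied.
    """
    best = max(closeness.values())
    # Near-tied candidates, highest score first so it wins when recency is equal.
    candidates = sorted(
        (s for s, c in closeness.items() if best - c <= tolerance),
        key=lambda s: -closeness[s],
    )

    def last_used(strategy):
        # Position of the most recent use; -1 means not used recently.
        return max((i for i, r in enumerate(recent) if r == strategy), default=-1)

    return min(candidates, key=last_used)


# Hypothetical closeness coefficients for the three strategies in the example.
scores = {"hint": 0.612, "code_example": 0.548, "micro_quiz": 0.617}
choice = select_strategy(scores, recent=["micro_quiz"])
```

With these illustrative numbers, the hint and the micro-quiz are within the tolerance, so the rule favors the hint because the micro-quiz was delivered most recently.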

The benefit of this decision model lies in its flexibility, but also in its accountability. Instructors may retrace the decision path, examine the weightings, and follow the contributing factors towards a given adaptation. An additional benefit is that not only is the current model flexible, but it is also modular. With some restructuring, we would be able to add additional criteria (i.e., affective states from facial movement or typing patterns), or update mappings of strategies over time as we explore observable learner outcomes.

In conclusion, the TOPSIS-based Decision Support Engine provides an evidence-based, transparent, and scalable way of adapting instructional strategy in real-time. Providing a personalized pedagogical decision-making process built on multiple learner metrics, expert knowledge, and structured evaluation logic, it demonstrates a new level of personalization in pedagogical decision-making in the context of programming education. To visually summarize the full decision-making process, the overall workflow of the Decision Support Engine, which is based on the TOPSIS method, is shown in Figure 2.

This quantitative scoring is then used to trigger the corresponding pedagogical action within the learning platform interface, as explained in Section 5.

5. Instructional Strategy Adaptation and System Implementation

The instructional strategies implemented in the proposed learning system apply cognitive load theory principles relating to learner-centric responsiveness, scaffolding, and formative assessment. The strategies are designed to address various types of learning difficulties, promote reflective thinking, develop motivation, and promote understanding of the relevant concept. Additionally, each strategy serves a clear instructional goal. For instance, hints serve as a targeted form of scaffolding to assist learners who are experiencing temporary misunderstanding, whereas an annotated code example is used as scaffolding to assist with tacit understanding by learners who consistently fail to apply a programming construct. Moreover, the reflective prompts are intended to support learner metacognition, while micro-quizzes serve to support retention and provide evidence of residual misunderstanding.

The selection process is initiated by the system’s perception of how the learner is transitioning through the learning state. The learner’s state is expressed through a multidimensional profile built from the constantly updated record of their patterns of interaction. Indicators such as frequent compilation errors, excessive time spent on tasks, multiple hint requests, and no observable improvement suggest a potential learning bottleneck. The data are represented by six pedagogically meaningful parameters, which structure the TOPSIS-based decisions on instructional options. Our specification of the probable effect of each instructional method on the six pedagogical parameters was obtained through a combination of heuristics informed by expert knowledge and pre-experimental data from previous pilots of the system. For instance, it was noticed that learners who had been provided with annotated code examples in the previous months sporadically returned to the course with lower error rates and improved mastery, while those who received reflection prompts tended to spend more time on assessment items than those who did not, but also displayed elevated engagement scores in post-task surveys. These patterns formed the basis of the impact estimates for each instructional approach in the decision matrix. Importantly, the mappings were not arbitrary: we based them on accepted educational notions of learning behaviors and performance assessment, and on the empirical evidence observed in earlier versions of the platform.

Incorporating the TOPSIS-based Decision Support Engine into the larger learning environment required careful synchronization of the front-end interaction components with the back-end decision logic. The learning environment was designed with a client–server architecture. The front-end interface, built with JavaScript and React, supports the interactive Java programming tasks, provides personalized feedback to the learner, and gathers the learner’s inputs. The back-end, built with Python, includes the TOPSIS engine, the data normalization and scoring classifier routine, and the instructional response controller. The components are loosely coupled and communicate through a RESTful API, allowing data transfers to occur asynchronously and each component to be updated with low latency. When a learner interacts with the learning environment, the front-end interface issues an API call. This call sends interaction data to the server, where the decision engine uses the data to determine the optimal instructional strategy.
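The request and response exchange can be sketched with the standard library alone. The payload fields, the function names, and the stand-in decision rule are hypothetical; the paper does not specify the API schema or the web framework, so this shows only the shape of the interaction.

```python
import json


def handle_interaction(request_body, decide):
    """Turn one JSON interaction event into a JSON strategy response.

    `decide` is any callable mapping a metrics dict to a strategy name,
    for example a TOPSIS-based ranking function on the server.
    """
    event = json.loads(request_body)
    strategy = decide(event["metrics"])
    return json.dumps({
        "learner_id": event["learner_id"],
        "event_type": event["event_type"],
        "strategy": strategy,
    })


def stub_decide(metrics):
    # Stand-in for the decision engine; this is NOT the TOPSIS logic itself.
    return "hint" if metrics["error_rate"] > 0.5 else "proceed"


# Hypothetical event as the front end might send it after a code submission.
request = json.dumps({
    "learner_id": "learner-001",
    "event_type": "code_submission",
    "metrics": {"error_rate": 0.7, "time_on_task": 0.6},
})
response = json.loads(handle_interaction(request, stub_decide))
```

Passing the decision function in as a parameter mirrors the loose coupling described above: the transport layer does not need to know how the strategy is chosen.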

The adaptation mechanism relies on a lightweight session-based learner model that is updated in real time to support the active adaptation process. The learner model does not try to predict long-term success; instead, it tries to respond meaningfully to where the learner currently is in their activity. Each time the learner submits work or requests help, the system retrieves performance metrics and re-evaluates the instructional context so that the chosen strategy is placed into the interface in a coherent manner. Once TOPSIS identifies the optimal instructional strategy, the system instantiates and personalizes the recommended strategy dynamically by drawing assets, tagged with pedagogical metadata, from a library of pedagogical resources. Each asset (hint, code example, reasoning with the author, etc.) is indexed by topic, difficulty, and a set of common misconceptions. For example, if the student is working on a loop structure and the TOPSIS engine recommends ‘hint’ while the system recognizes repeated off-by-one errors, the system retrieves the hint “Consider whether your loop condition includes or excludes the endpoint value” from its database. The hint is inserted contextually within the learner’s code editor so that only the UI is adapted, without requiring a page refresh or manual request. In a different case, if a code example is selected, the system produces an annotated, highlighted example consistent with the learner’s current concept (e.g., array iteration), accompanied by a small explanation box. Each pedagogical strategy is tied to a specific delivery format: hints in-line, examples in expandable panels, reflection prompts in modals, and so on, so that the interventions feel naturally situated in the learner’s workflow. With this immediate contextual embedding, guided by the pedagogical rationale, adaptive support is not only algorithmically instantiated but also meaningfully experienced by the learner.
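A tagged resource library of this kind can be sketched as below. The class, field names, tag values, and all entries other than the off-by-one hint quoted in the text are hypothetical, and the in-memory list stands in for the system's database.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resource:
    strategy: str                 # e.g. "hint", "code_example"
    topic: str                    # e.g. "loops", "arrays"
    difficulty: int
    misconceptions: frozenset = frozenset()
    body: str = ""


# Delivery formats named in the text; the keys are illustrative identifiers.
DELIVERY = {
    "hint": "inline",
    "code_example": "expandable_panel",
    "reflection_prompt": "modal",
}

LIBRARY = [
    Resource("hint", "loops", 1, frozenset(),
             "Trace the loop variable for the first two iterations."),
    Resource("hint", "loops", 1, frozenset({"off_by_one"}),
             "Consider whether your loop condition includes or excludes "
             "the endpoint value"),
    Resource("code_example", "arrays", 1, frozenset(),
             "// annotated array iteration example"),
]


def pick_resource(strategy, topic, misconception=None):
    """Return the best-matching asset and its delivery channel, or None."""
    matches = [r for r in LIBRARY if r.strategy == strategy and r.topic == topic]
    if not matches:
        return None
    # Prefer an asset tagged with the detected misconception, if any.
    tagged = [r for r in matches if misconception in r.misconceptions]
    chosen = (tagged or matches)[0]
    return {"body": chosen.body, "channel": DELIVERY.get(strategy, "inline")}


selected = pick_resource("hint", "loops", misconception="off_by_one")
```

Keeping the delivery channel in a separate mapping means a new strategy type can be added without changing the lookup logic.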

To facilitate experimentation and development, the application was designed with modularity and extensibility in mind. The TOPSIS engine, data-processing pipeline, and instructional strategy modules are encapsulated as discrete services that can be updated or replaced without rewriting the main application. Each decision is logged systematically so that it can be analyzed and used to continuously improve the adaptation logic. The system therefore demonstrates both theoretical rigor in its pedagogical underpinning and technical rigor in its implementation. It joins data-informed decision making with real-world instructional delivery and shows that real-time personalization can be accomplished in programming education.

6. Evaluation

To thoroughly test the pedagogical effectiveness, behavioral incidence, and technical feasibility of the proposed TOPSIS-based adaptation system, we conducted a longitudinal mixed-method study with 100 postgraduate students in a conversion master’s program in computer science at a Greek university. The cohort included students from non-STEM (Science, Technology, Engineering, and Mathematics) fields, such as humanities, law, education, and psychology, representing a diverse range of learner profiles. Most participants had low baseline programming skills but highly variable motivation and prior experience—an ideal context in which to evaluate adaptive instructional technologies. The participants’ ages ranged from 23 to 38 years old (M = 27.4, SD = 3.2), and the sample included 58 females and 42 males. This diversity in academic background, prior exposure to computing, and learner demographics provided a robust and realistic setting for assessing the system’s capacity to personalize instruction effectively.

Participants were randomly assigned to a control group (n = 50), which used a traditional static e-learning platform, or an experimental group (n = 50), which used our adaptive platform with the TOPSIS-based instructional strategy engine. Both groups covered the same four-week curriculum in Java fundamentals delivered by the same instructors. The only difference between the two was the personalization provided to the experimental group.

We assessed system effectiveness through a comprehensive evaluation framework combining quantitative and qualitative methods across four dimensions: (1) Learning outcomes, (2) Behavioral engagement, (3) Instructional strategy effectiveness, and (4) System responsiveness and usability.

7. Discussion

The evaluation results provide strong evidence for the utility of the TOPSIS-based instructional strategy adaptation framework in enhancing both cognitive and behavioral dimensions of learner engagement within a Java programming context. In this section, we interpret these findings through the lens of personalized learning, adaptive system design, pedagogical significance, and the scalability of the proposed approach.

From a pedagogical standpoint, the observed improvements in learning outcomes suggest that the system’s adaptive strategies were well-aligned with learner needs and delivered support at critical points in the learning process. This alignment likely helped reduce cognitive overload during challenging tasks and offered timely scaffolding when learners were ready to engage with more complex material. Such timing is essential in promoting deep learning—particularly for novice programmers facing conceptual and syntactic difficulties.

The behavioral engagement patterns—reflected in increased time-on-task, problem attempts, and voluntary retries—point to the motivational benefits of real-time personalization. Learners did not passively consume instructional content but actively interacted with varied forms of support. This suggests that the system may have encouraged self-regulated learning behaviors, helping students to recognize and act upon their changing needs.

The consistency between expert evaluations, learner satisfaction, and system-selected strategies further reinforces the interpretability and instructional coherence of the TOPSIS framework. The system’s decisions were rated as pedagogically acceptable by instructors in the vast majority of cases, supporting the claim that the selected criteria and weights mirror expert instructional reasoning. Where discrepancies occurred, they reflected differences in teaching style rather than system misjudgment—highlighting the model’s flexibility and contextual sensitivity.

Qualitative feedback from learners further corroborated the value of the adaptive interventions. Students emphasized that hints, prompts, and examples were not only helpful but also well-integrated into their learning experience. This supports the notion of the system functioning as a learning companion rather than a directive tutor—aligning with contemporary theories that prioritize metacognition, scaffolding, and learner autonomy.

The comparative results against a traditional rule-based adaptive engine underscore the added pedagogical value of using a multi-criteria decision-making method. Unlike fixed-threshold systems, the TOPSIS framework enabled nuanced, context-sensitive decisions that better reflected the complexity of individual learner states. This adaptability proved to be a key differentiator in both learning outcomes and perceived usability.

Additionally, the positive correlation between the use of adaptive strategies and learning gains, although it does not establish causation, points to a possible dose–response relationship worth exploring further. This opens future research avenues in longitudinal modeling to understand how adaptive interventions shape learning trajectories over time.
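To make the kind of association discussed here concrete, the following minimal sketch computes a Pearson correlation coefficient between the number of adaptive strategies a learner received and that learner's gain score. The two lists are invented placeholder values for illustration, not data from the study, and the helper name `pearson_r` is likewise an assumption.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sd_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    sd_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    if sd_x == 0 or sd_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / (sd_x * sd_y)


# Placeholder values (NOT study data): strategies received, post-test minus pre-test.
strategies_used = [2, 4, 5, 7, 9, 12]
learning_gain = [5.0, 8.0, 7.5, 11.0, 12.5, 15.0]
print(round(pearson_r(strategies_used, learning_gain), 3))
```

A coefficient of this kind describes co-variation only; learners who struggle more may both trigger more interventions and have more room to improve, which is why the relationship cannot be read causally.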

In comparison to the existing literature on educational adaptive systems, this study contributes a more nuanced and analytically supported model of personalization. Prior work has typically concentrated on content sequencing (e.g., [55]), learner modeling approaches with Bayesian Knowledge Tracing (BKT) [26,27,28,56], or rule-based feedback models [23,24,25,57]. While these earlier studies have made contributions in their particular contexts, the usability of these models is often compromised by low adaptability, black-box content-sequencing decisions, or a lack of instructor visibility. For instance, rule-based systems typically use rigid heuristics that do not adapt to the diversity or subtlety of learner behavior, while probabilistic approaches such as BKT, though powerful in prediction, offer little interpretability to educators aiming to understand or modify adaptation logic.
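The rigidity of fixed-threshold heuristics can be illustrated with a small sketch. The thresholds, the two learner signals, and the strategy names below are hypothetical and do not reproduce any of the cited systems.

```python
def rule_based_strategy(error_rate: float, idle_seconds: float) -> str:
    """Pick an instructional response from fixed, hand-set thresholds.

    All cut-off values are assumptions chosen for illustration.
    """
    if error_rate > 0.6:
        return "worked_example"
    if error_rate > 0.3:
        return "hint"
    if idle_seconds > 120:
        return "reflection_prompt"
    return "advance"


# Two quite different learners receive the same response, because the first
# matching rule wins and the remaining signals are never consulted.
print(rule_based_strategy(error_rate=0.31, idle_seconds=5))
print(rule_based_strategy(error_rate=0.59, idle_seconds=300))
```

In this sketch, a learner just above the 0.3 cut-off and a learner who is nearly at the next cut-off and has been idle for five minutes are treated identically, which is the kind of insensitivity to learner state described above.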

Conversely, the TOPSIS-based system used in this study supports multi-criteria reasoning similar to that of human instructional decision making. Because the trade-offs between performance indicators are defined explicitly and candidate instructional actions are ranked against them, the resulting instructional responses are both contextualized and pedagogically sound. Furthermore, the transparency of the TOPSIS framework permits system administrators and designers to explore decision pathways, adjust weights, and re-assess alternatives without re-training a model. This interpretive quality, often lacking in deep learning or probabilistic frameworks, enhances its applicability in educational settings that require accountability and individualization.
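As a minimal sketch of this kind of ranking, the code below implements the standard TOPSIS steps (vector normalization, weighting, distances to the ideal and anti-ideal solutions, closeness coefficient) over four candidate strategies. The three criteria, the matrix values, and both weight vectors are hypothetical and chosen only for illustration; they are not the criteria or weights used in the study.

```python
import math


def topsis(matrix, weights, benefit):
    """Return one closeness score in [0, 1] per alternative (row); higher is better.

    benefit[j] is True when larger values of criterion j are preferable.
    """
    n_crit = len(weights)
    total = sum(weights)
    w = [x / total for x in weights]  # weights rescaled to sum to 1
    # Vector-normalize each criterion column, then apply the weights.
    norms = [math.sqrt(sum(row[j] ** 2 for row in matrix)) or 1.0
             for j in range(n_crit)]
    v = [[w[j] * row[j] / norms[j] for j in range(n_crit)] for row in matrix]
    cols = list(zip(*v))
    ideal = [max(c) if benefit[j] else min(c) for j, c in enumerate(cols)]
    anti = [min(c) if benefit[j] else max(c) for j, c in enumerate(cols)]
    scores = []
    for row in v:
        d_pos = math.dist(row, ideal)  # distance to the ideal solution
        d_neg = math.dist(row, anti)   # distance to the anti-ideal solution
        denom = d_pos + d_neg
        scores.append(d_neg / denom if denom else 0.5)
    return scores


def rank(names, scores):
    """Order alternative names from best to worst closeness score."""
    pairs = sorted(zip(names, scores), key=lambda p: p[1], reverse=True)
    return [name for name, _ in pairs]


# Hypothetical alternatives and criteria (not taken from the study):
# columns = expected benefit, engagement fit, time cost (a cost criterion).
STRATEGIES = ["hint", "worked_example", "reflection_prompt", "advance"]
MATRIX = [
    [0.7, 0.6, 2.0],
    [0.9, 0.5, 5.0],
    [0.5, 0.8, 3.0],
    [0.2, 0.4, 1.0],
]
BENEFIT = [True, True, False]

print(rank(STRATEGIES, topsis(MATRIX, [0.5, 0.3, 0.2], BENEFIT)))
# Changing the weights re-ranks the same alternatives; nothing is re-trained.
print(rank(STRATEGIES, topsis(MATRIX, [0.2, 0.2, 0.6], BENEFIT)))
```

The second call shows the design property discussed above: an instructor or designer can change the emphasis placed on a criterion and immediately inspect how the ordering of strategies responds.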

In addition, the architecture of the system allows for scalability and extensibility. The modular TOPSIS engine, the decoupled front-end/back-end design, and the transparent decision logs allow the system to be deployed, tracked, and refined across different subject domains and learning contexts. With further adaptation, comparable models could be applied in math, engineering, and even writing-intensive subjects, where learners frequently experience similar cognitive difficulties. The ethical safeguards adopted in the study, namely institutional ethics approval and informed consent, additionally contribute to its methodological integrity and its readiness for wider application. The semi-structured interviews and open feedback also provide a human-centered evaluation of the system that goes beyond quantitative exam scores and sheds light on learner experience and instructional quality.
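The format of the decision logs is not described in the text. One plausible shape, sketched here under that caveat, is a record that stores the weights and per-strategy scores alongside the selected strategy, so that a decision can be traced afterwards. The class name `DecisionLogEntry`, its fields, and the sample values are all hypothetical.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class DecisionLogEntry:
    """One traceable adaptation decision (hypothetical schema)."""
    learner_id: str
    timestamp: str   # ISO 8601 string
    weights: dict    # criterion name -> weight in force at decision time
    scores: dict     # strategy name -> closeness score
    selected: str    # strategy with the highest score


def make_entry(learner_id, timestamp, weights, scores):
    """Build a log entry, selecting the highest-scoring strategy."""
    selected = max(scores, key=scores.get)
    return DecisionLogEntry(learner_id, timestamp, dict(weights), dict(scores), selected)


def to_json_line(entry):
    """Serialize an entry as one JSON line, suitable for an append-only log."""
    return json.dumps(asdict(entry), sort_keys=True)


# Sample values are placeholders, not study data.
entry = make_entry(
    "P042",
    "2024-03-01T10:15:00Z",
    {"expected_benefit": 0.5, "engagement_fit": 0.3, "time_cost": 0.2},
    {"hint": 0.61, "worked_example": 0.48, "advance": 0.22},
)
print(to_json_line(entry))
```

Storing the weights with each decision means that a later change to the weighting scheme does not make earlier decisions impossible to explain.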

While many existing adaptive systems rely on static thresholds or probabilistic models with limited pedagogical transparency, the approach presented in this study bridges the gap between algorithmic precision and instructional interpretability. What distinguishes our system is not only its performance, but also its capacity to support meaningful pedagogical decisions through clear, adjustable criteria. Unlike systems that offer limited feedback on why a particular recommendation is made, our TOPSIS-based framework enables both educators and researchers to trace, understand, and revise the logic behind each adaptive intervention. This capacity positions the system not simply as a technical tool, but as a collaborative partner in instructional design—an important shift in the evolution of adaptive educational technologies. By embedding decision transparency and contextual flexibility into the core of the system, our work demonstrates how personalization can be both data-driven and pedagogically grounded.

The evidence presented and discussed here supports the conclusion that the adaptive instructional system is a pedagogically grounded and technically feasible solution for learner-centered programming education. The system operationalizes multi-criteria decision-making through TOPSIS in real time, an innovative and effective way to provide intelligent support tailored to the individual learner. As adaptive learning continues to advance, the approach used in this study represents a combination of precision, versatility, and transparency, qualities essential for the next generation of educational technologies.