LiteMP-VTON: A Knowledge-Distilled Diffusion Model for Realistic and Efficient Virtual Try-On

Abstract

1. Introduction

2. Related Works

2.1. Image-Based Virtual Try-On

2.2. Knowledge Distillation

- Logit-based Distillation: Logit-based distillation methods [23,25,26] primarily distill information from the output layer, using the teacher model's Softmax outputs as soft targets for training the student model. Temperature scaling [23] softens these outputs to expose inter-class “dark knowledge” (the relative probabilities the teacher assigns to non-target classes). This lets the student learn finer decision boundaries without any structural alignment between the two networks. Because it is simple, effective, and improves the performance of smaller networks, this approach is applicable to a wide range of knowledge distillation tasks.

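- Feature-based Distillation: Feature-based distillation methods aim to replicate the feature representations in the teacher model's intermediate layers. FitNets [28] trains the student with additional hints from one of the teacher's intermediate layers, using a regressor to map the student's guided layer onto the hint. In a later study, FactorTransfer [27] encodes the teacher's features into compact “factors” with a paraphraser and trains the student, through a translator module, to reproduce them. The student therefore imitates a simplified form of the teacher's knowledge rather than its raw activations.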

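- Relation-based Distillation: Relation-based KD exploits pairwise or higher-order relations among samples and layers. For instance, RKD [29] transfers distance-wise (pairwise) and angle-wise (triplet) relations among embeddings, encouraging the student to reconstruct the teacher's metric space rather than its exact activations. Because only relative structure is matched, the teacher and student representations need not share the same dimensionality, which makes the approach well suited to heterogeneous backbones.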
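To make the logit-based formulation concrete, the following is a minimal, framework-free sketch of the classic temperature-scaled loss of Hinton et al. [23] for a single classification sample. The function names and the defaults `temperature=4.0` and `alpha=0.5` are illustrative assumptions, not settings taken from LiteMP-VTON.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; a higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions over the same classes."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def kd_loss(student_logits, teacher_logits, label, temperature=4.0, alpha=0.5):
    """Soft-target KL term (scaled by T^2) blended with hard-label cross-entropy.

    alpha weights the soft-target term; the T**2 factor keeps its gradient
    magnitude comparable to the hard-label term as T changes.
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    soft = kl_divergence(p_teacher, p_student) * temperature ** 2
    hard = -math.log(softmax(student_logits)[label])  # ordinary CE at T = 1
    return alpha * soft + (1.0 - alpha) * hard
```

Raising the temperature flattens the teacher's distribution, so the small probabilities on non-target classes (the “dark knowledge”) carry more weight in the KL term.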
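The hint mechanism of FitNets [28] from the feature-based bullet can be sketched with flattened feature vectors. Here a plain linear `regressor` stands in for the convolutional regressor FitNets attaches to the student, the vector sizes are arbitrary, and, for brevity, only the regressor is updated, whereas FitNets trains it jointly with the student's lower layers. All names are hypothetical.

```python
def project(weights, features):
    """Apply a linear regressor: weights is a (teacher_dim x student_dim) matrix."""
    return [sum(w * f for w, f in zip(row, features)) for row in weights]


def hint_loss(student_feat, teacher_feat, regressor):
    """Half the squared L2 distance between the regressed student feature
    and the teacher's hint feature."""
    projected = project(regressor, student_feat)
    return 0.5 * sum((p - t) ** 2 for p, t in zip(projected, teacher_feat))


def regressor_step(student_feat, teacher_feat, regressor, lr=0.1):
    """One gradient-descent step on the regressor (student features held fixed).

    dL/dW[i][j] = (projected[i] - teacher[i]) * student[j]
    """
    projected = project(regressor, student_feat)
    return [
        [w - lr * (p - t) * s for w, s in zip(row, student_feat)]
        for row, p, t in zip(regressor, projected, teacher_feat)
    ]
```

The regressor is what lets a narrow student (2-dimensional here) be compared with a wider teacher layer (3-dimensional here) at all.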
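For the relation-based bullet, the sketch below implements only the distance-wise term of RKD [29] for a small batch. Pairwise distances are divided by their mean, following RKD's normalization, and compared with a Huber penalty. The angle-wise (triplet) term is omitted, and the Huber threshold `delta=1.0` and averaging over pairs are assumptions of this sketch.

```python
import itertools
import math


def normalized_distances(embeddings):
    """Pairwise Euclidean distances divided by their mean (needs >= 2 embeddings)."""
    pairs = itertools.combinations(range(len(embeddings)), 2)
    dists = [math.dist(embeddings[i], embeddings[j]) for i, j in pairs]
    mu = sum(dists) / len(dists)
    return [d / mu for d in dists]


def huber(x, delta=1.0):
    """Huber (smooth-L1) penalty on a scalar residual."""
    ax = abs(x)
    return 0.5 * x * x if ax <= delta else delta * (ax - 0.5 * delta)


def rkd_distance_loss(student_emb, teacher_emb):
    """Compare normalized pairwise distances of the same batch of samples.

    Student and teacher embeddings may differ in dimension; only the batch
    size must match, because the pairs are enumerated in the same order.
    """
    s = normalized_distances(student_emb)
    t = normalized_distances(teacher_emb)
    return sum(huber(si - ti) for si, ti in zip(s, t)) / len(s)
```

Because of the mean normalization, a 2-D student whose embeddings are a uniformly scaled copy of a 3-D teacher's geometry incurs (numerically) zero loss, which illustrates why exact activations need not match.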

3. Methods

3.1. Primary Knowledge

3.2. Framework

3.3. MP-VTON

3.4. LiteMP-VTON

3.4.1. Teacher Model

3.4.2. Student Model

3.4.3. Cross-Attention Alignment Distillation Module

3.4.4. Loss Function

4. Experiments

4.1. Experimental Setup

4.2. Quantitative Evaluation

4.3. Qualitative Evaluation

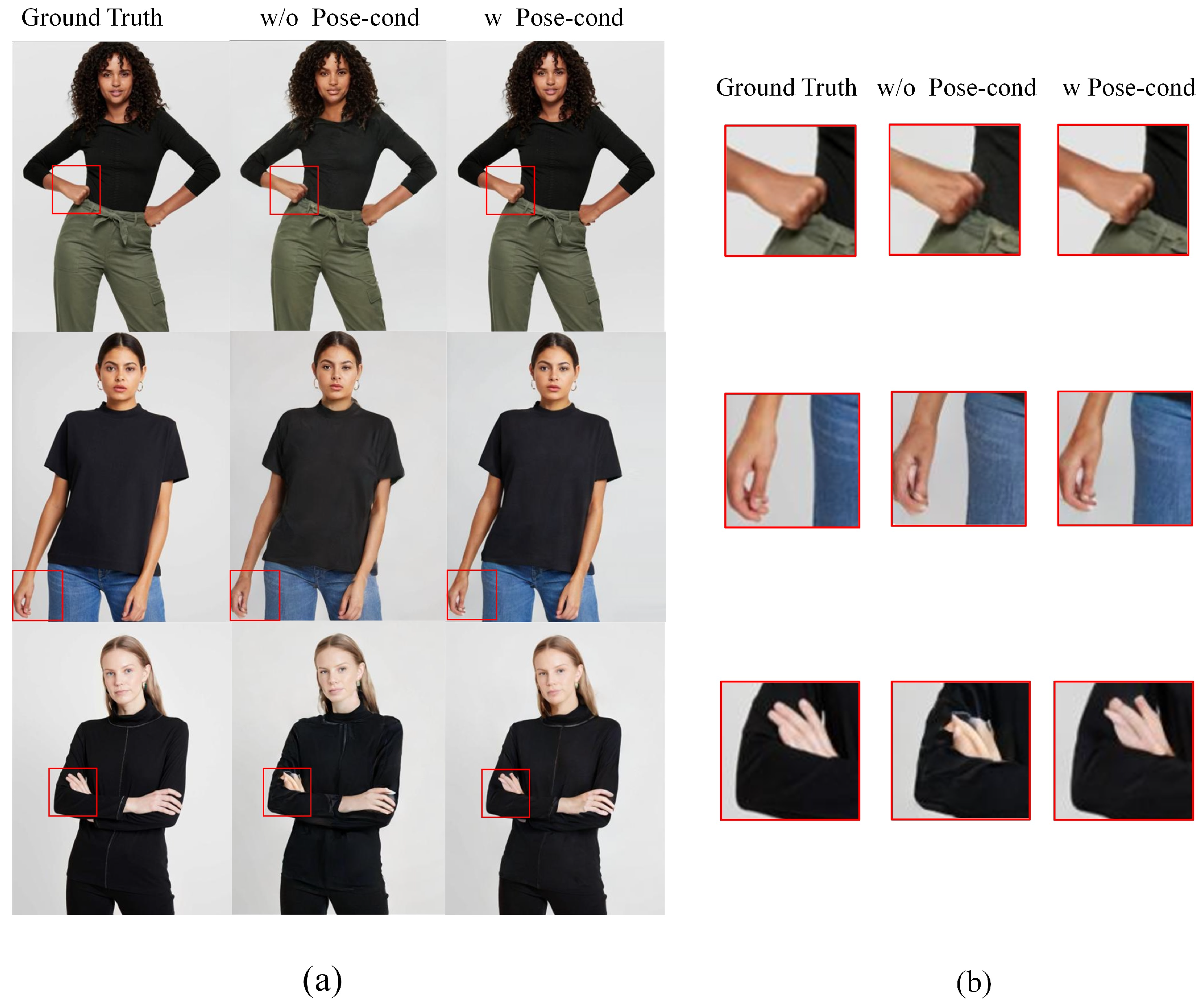

4.4. Ablation Experiments

4.5. Comparative Experiments on the Distillation Process

4.6. Inference Efficiency Analysis

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Ho, J.; Jain, A.; Abbeel, P. Denoising diffusion probabilistic models. Adv. Neural Inf. Process. Syst. 2020, 33, 6840–6851.

2. Song, J.; Meng, C.; Ermon, S. Denoising diffusion implicit models. arXiv 2020, arXiv:2010.02502.

3. Song, Y.; Sohl-Dickstein, J.; Kingma, D.P.; Kumar, A.; Ermon, S.; Poole, B. Score-based generative modeling through stochastic differential equations. arXiv 2020, arXiv:2011.13456.

4. Dhariwal, P.; Nichol, A. Diffusion models beat GANs on image synthesis. Adv. Neural Inf. Process. Syst. 2021, 34, 8780–8794.

5. Ho, J.; Salimans, T. Classifier-free diffusion guidance. arXiv 2022, arXiv:2207.12598.

6. Li, X.; Kampffmeyer, M.; Dong, X.; Xie, Z.; Zhu, F.; Dong, H.; Liang, X. WarpDiffusion: Efficient diffusion model for high-fidelity virtual try-on. arXiv 2023, arXiv:2312.03667.

7. Zhu, L.; Yang, D.; Zhu, T.; Reda, F.; Chan, W.; Saharia, C.; Norouzi, M.; Kemelmacher-Shlizerman, I. TryOnDiffusion: A tale of two UNets. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 4606–4615.

8. Morelli, D.; Baldrati, A.; Cartella, G.; Cornia, M.; Bertini, M.; Cucchiara, R. LaDI-VTON: Latent diffusion textual-inversion enhanced virtual try-on. In Proceedings of the 31st ACM International Conference on Multimedia, Ottawa, ON, Canada, 29 October–3 November 2023; pp. 8580–8589.

9. Gou, J.; Sun, S.; Zhang, J.; Si, J.; Qian, C.; Zhang, L. Taming the power of diffusion models for high-quality virtual try-on with appearance flow. In Proceedings of the 31st ACM International Conference on Multimedia, Ottawa, ON, Canada, 29 October–3 November 2023; pp. 7599–7607.

10. Li, Y.; Wang, H.; Jin, Q.; Hu, J.; Chemerys, P.; Fu, Y.; Wang, Y.; Tulyakov, S.; Ren, J. SnapFusion: Text-to-image diffusion model on mobile devices within two seconds. Adv. Neural Inf. Process. Syst. 2024, 36, 20662–20678.

11. Ondrúška, P.; Kohli, P.; Izadi, S. MobileFusion: Real-time volumetric surface reconstruction and dense tracking on mobile phones. IEEE Trans. Vis. Comput. Graph. 2015, 21, 1251–1258.

12. Keller, W.; Borkowski, A. Thin plate spline interpolation. J. Geod. 2019, 93, 1251–1269.

13. Wang, B.; Zheng, H.; Liang, X.; Chen, Y.; Lin, L.; Yang, M. Toward characteristic-preserving image-based virtual try-on network. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 589–604.

14. Xie, Z.; Huang, Z.; Dong, X.; Zhao, F.; Dong, H.; Zhang, X.; Zhu, F.; Liang, X. GP-VTON: Towards general purpose virtual try-on via collaborative local-flow global-parsing learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 23550–23559.

15. Wan, Y.; Ding, N.; Yao, L. FA-VTON: A feature alignment-based model for virtual try-on. Appl. Sci. 2024, 14, 5255.

16. Chen, C.; Ni, J.; Zhang, P. Virtual try-on systems in fashion consumption: A systematic review. Appl. Sci. 2024, 14, 11839.

17. Xie, Z.; Huang, Z.; Zhao, F.; Dong, H.; Kampffmeyer, M.; Dong, X.; Zhu, F.; Liang, X. PASTA-GAN++: A versatile framework for high-resolution unpaired virtual try-on. arXiv 2022, arXiv:2207.13475.

18. Ge, Y.; Song, Y.; Zhang, R.; Ge, C.; Liu, W.; Luo, P. Parser-free virtual try-on via distilling appearance flows. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 8485–8493.

19. Choi, S.; Park, S.; Lee, M.; Choo, J. VITON-HD: High-resolution virtual try-on via misalignment-aware normalization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 14131–14140.

20. Lee, S.; Gu, G.; Park, S.; Choi, S.; Choo, J. High-resolution virtual try-on with misalignment and occlusion-handled conditions. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Cham, Switzerland, 2022; pp. 204–219.

21. Zhang, S.; Ni, M.; Chen, S.; Wang, L.; Ding, W.; Liu, Y. A two-stage personalized virtual try-on framework with shape control and texture guidance. IEEE Trans. Multimed. 2024, 26, 10225–10236.

22. Yang, Z.; Zeng, A.; Yuan, C.; Li, Y. Effective whole-body pose estimation with two-stages distillation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 4210–4220.

23. Hinton, G.; Vinyals, O.; Dean, J. Distilling the knowledge in a neural network. arXiv 2015, arXiv:1503.02531.

24. Joyce, J.M. Kullback-Leibler Divergence; Springer: Berlin/Heidelberg, Germany, 2011.

25. Müller, R.; Kornblith, S.; Hinton, G.E. When does label smoothing help? In Advances in Neural Information Processing Systems; 2019; pp. 4694–4703. Available online: https://dl.acm.org/doi/10.5555/3454287.3454709 (accessed on 30 March 2025).

26. Zhao, B.; Cui, Q.; Song, R.; Qiu, Y.; Liang, J. Decoupled knowledge distillation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 11953–11962.

27. Kim, J.; Park, S.; Kwak, N. Paraphrasing complex network: Network compression via factor transfer. In Advances in Neural Information Processing Systems; 2018; pp. 2765–2774. Available online: https://dl.acm.org/doi/10.5555/3327144.3327200 (accessed on 30 March 2025).

28. Romero, A.; Ballas, N.; Kahou, S.E.; Chassang, A.; Gatta, C.; Bengio, Y. FitNets: Hints for thin deep nets. arXiv 2014, arXiv:1412.6550.

29. Park, W.; Kim, D.; Lu, Y.; Cho, M. Relational knowledge distillation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3967–3976.

30. Salimans, T.; Ho, J. Progressive distillation for fast sampling of diffusion models. arXiv 2022, arXiv:2202.00512.

31. Sun, W.; Chen, D.; Wang, C.; Ye, D.; Feng, Y.; Chen, C. Accelerating diffusion sampling with classifier-based feature distillation. In Proceedings of the 2023 IEEE International Conference on Multimedia and Expo (ICME), Brisbane, Australia, 10–14 July 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 810–815.

32. Wang, C.; Guo, Z.; Duan, Y.; Li, H.; Chen, N.; Tang, X.; Hu, Y. Target-driven distillation: Consistency distillation with target timestep selection and decoupled guidance. arXiv 2024, arXiv:2409.01347.

33. Yang, D.; Liu, S.; Yu, J.; Wang, H.; Weng, C.; Zou, Y. NoreSpeech: Knowledge distillation based conditional diffusion model for noise-robust expressive TTS. arXiv 2022, arXiv:2211.02448.

34. Kingma, D.P.; Welling, M. Auto-encoding variational Bayes. arXiv 2013, arXiv:1312.6114.

35. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; Proceedings, Part III 18; Springer: Cham, Switzerland, 2015; pp. 234–241.

36. Rombach, R.; Blattmann, A.; Lorenz, D.; Esser, P.; Ommer, B. High-resolution image synthesis with latent diffusion models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 10684–10695.

37. Liao, W.; Jiang, Y.; Liu, R.; Feng, Y.; Zhang, Y.; Hou, J.; Wang, J. Stable diffusion-driven conditional image augmentation for transformer fault detection. Information 2025, 16, 197.

38. Hu, E.J.; Shen, Y.; Wallis, P.; Allen-Zhu, Z.; Li, Y.; Wang, S.; Wang, L.; Chen, W. LoRA: Low-rank adaptation of large language models. arXiv 2021, arXiv:2106.09685.

39. Zhang, L.; Rao, A.; Agrawala, M. Adding conditional control to text-to-image diffusion models. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 3836–3847.

40. Ye, H.; Zhang, J.; Liu, S.; Han, X.; Yang, W. IP-Adapter: Text compatible image prompt adapter for text-to-image diffusion models. arXiv 2023, arXiv:2308.06721.

41. Güler, R.A.; Neverova, N.; Kokkinos, I. DensePose: Dense human pose estimation in the wild. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7297–7306.

42. Cao, Z.; Simon, T.; Wei, S.E.; Sheikh, Y. Realtime multi-person 2D pose estimation using part affinity fields. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7291–7299.

43. Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning transferable visual models from natural language supervision. In Proceedings of the International Conference on Machine Learning, Virtual, 18–24 July 2021; PMLR: New York, NY, USA, 2021; pp. 8748–8763.

44. Kim, G.; Kwon, T.; Ye, J.C. DiffusionCLIP: Text-guided diffusion models for robust image manipulation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 2426–2435.

45. Saharia, C.; Chan, W.; Saxena, S.; Li, L.; Whang, J.; Denton, E.L.; Ghasemipour, K.; Gontijo Lopes, R.; Karagol Ayan, B.; Salimans, T.; et al. Photorealistic text-to-image diffusion models with deep language understanding. Adv. Neural Inf. Process. Syst. 2022, 35, 36479–36494.

46. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

47. Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

48. Zhang, R.; Isola, P.; Efros, A.A.; Shechtman, E.; Wang, O. The unreasonable effectiveness of deep features as a perceptual metric. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 586–595.

49. Heusel, M.; Ramsauer, H.; Unterthiner, T.; Nessler, B.; Hochreiter, S. GANs trained by a two time-scale update rule converge to a local Nash equilibrium. In Advances in Neural Information Processing Systems; 2017; pp. 6629–6640. Available online: https://dl.acm.org/doi/abs/10.5555/3295222.3295408 (accessed on 30 March 2025).

50. Bińkowski, M.; Sutherland, D.J.; Arbel, M.; Gretton, A. Demystifying MMD GANs. arXiv 2018, arXiv:1801.01401.

51. Loshchilov, I.; Hutter, F. Decoupled weight decay regularization. arXiv 2017, arXiv:1711.05101.

| Model | LPIPS ↓ | SSIM ↑ | FID ↓ | KID ↓ | Parameters |
|---|---|---|---|---|---|
| VITON-HD | 0.116 | 0.862 | 12.12 | 0.32 | 100 M |
| HR-VITON | 0.104 | 0.878 | 11.27 | 0.27 | 100 M |
| DCI-VTON | 0.081 | 0.880 | 8.76 | 0.11 | 1027 M |
| MP-VTON (ours) | 0.078 | 0.887 | 8.73 | 0.11 | 1027 M |
| Teacher Model (w/o CLIP) | 0.081 | 0.879 | 8.76 | 0.12 | 898 M |
| Student Model (LiteMP-VTON) | 0.090 | 0.870 | 9.78 | 0.17 | 286 M |

| Methods | Teacher LPIPS ↓ | Teacher SSIM ↑ | Teacher FID ↓ | Teacher KID ↓ | Student LPIPS ↓ | Student SSIM ↑ | Student FID ↓ | Student KID ↓ |
|---|---|---|---|---|---|---|---|---|
| A | 0.075 | 0.853 | 11.04 | 0.25 | 0.183 | 0.764 | 15.6 | 0.51 |
| A + B | 0.090 | 0.873 | 9.12 | 0.21 | 0.121 | 0.857 | 11.7 | 0.31 |
| A + B + C | 0.081 | 0.879 | 8.76 | 0.12 | 0.090 | 0.870 | 9.8 | 0.17 |
| A + B + C + D | 0.078 | 0.887 | 8.73 | 0.11 | - | - | - | - |

| Case | LPIPS ↓ | SSIM ↑ | FID ↓ | KID ↓ |
|---|---|---|---|---|
| Case 1 | 0.135 | 0.576 | 23.5 | 0.54 |
| Case 2 | 0.102 | 0.683 | 16.6 | 0.42 |
| Case 3 | 0.095 | 0.721 | 13.2 | 0.31 |
| Case 4 | 0.090 | 0.870 | 9.8 | 0.17 |

| Model | GFLOPs | Inference Time (s) | Memory Usage (MB) |
|---|---|---|---|
| Teacher Model | 548.34 | 2.39 | 6713 |
| Student Model | 238.24 | 1.08 | 2211 |
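The relative savings implied by the efficiency table can be checked with simple arithmetic. The values below are copied from that table and from the parameter column of the quantitative comparison (teacher without CLIP versus the LiteMP-VTON student); no new measurements are introduced, and the dictionary keys and function name are hypothetical.

```python
# Figures copied from the tables above; "params_M" uses the Teacher Model
# (w/o CLIP) and Student Model rows of the quantitative comparison.
TEACHER = {"params_M": 898, "gflops": 548.34, "time_s": 2.39, "memory_MB": 6713}
STUDENT = {"params_M": 286, "gflops": 238.24, "time_s": 1.08, "memory_MB": 2211}


def efficiency_ratios(teacher, student):
    """Student/teacher ratio for every metric (lower means cheaper)."""
    return {key: student[key] / teacher[key] for key in teacher}


if __name__ == "__main__":
    for key, ratio in efficiency_ratios(TEACHER, STUDENT).items():
        print(f"{key:>10}: student/teacher = {ratio:.3f} ({1 - ratio:.1%} reduction)")
    print(f"inference speed-up: {TEACHER['time_s'] / STUDENT['time_s']:.2f}x")
```

Worked out, the student uses roughly 32% of the teacher's parameters, 43% of its GFLOPs, and 33% of its memory, and runs about 2.2 times faster per image.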

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, S.; Wang, L.; Ding, W. LiteMP-VTON: A Knowledge-Distilled Diffusion Model for Realistic and Efficient Virtual Try-On. Information 2025, 16, 408. https://doi.org/10.3390/info16050408
