1. Introduction

No organization wants to discover weaknesses in its cyber incident response procedures during an actual attack. Once an incident begins, it is typically too late to correct procedural flaws. Effective incident management requires organizations to follow established technical procedures, make rapid decisions, and allocate resources efficiently.

The selection of an exercise format depends on factors such as organizational size, cybersecurity awareness, and overall readiness [1]. Cyber ranges (CRs) are commonly used for technical training because they simulate realistic environments. However, designing custom CR scenarios is time-consuming and costly [2]. Because all actions in a CR occur in real time, CRs are generally less suitable for training managers: managers must often wait for technical tasks to complete before making decisions, and this dependency slows their responses.

In contrast, tabletop exercises (TTXs) focus on managerial-level training, emphasizing soft skills rather than technical execution. They are faster to prepare than CR-based exercises, but their effectiveness depends heavily on the control team’s expertise. These facilitators perform adjudication by interpreting participant actions, assessing their feasibility, and determining outcomes based on scenario logic [3]. However, without experienced adjudicators, TTXs may lack realism, as decisions often have no tangible consequences and scenarios may deviate from plausible attack progressions.

This paper introduces a novel incident response exercise that emulates technical actions without executing them directly. The method models the logical effects of typical CR actions within an abstracted network environment, allowing participants to focus on decision-making while technical implementation is omitted. This approach reduces reliance on highly experienced facilitators, simplifies preparation compared to CRs, and enables rapid execution with minimal staffing. It is also scalable and adaptable to various organizational structures and participant roles. To the best of the authors’ knowledge, this approach has not been systematically studied, and no established best practices currently exist for its implementation.

Rather than replicating real-world environments through direct technical execution, this paper explores an alternative based on abstracting operational contexts by emulating technical activities. Here, emulation refers to representing the logical outcomes of technical actions without performing them in real systems. The method is tailored to organizational management personnel, who must understand but not perform technical activities. To structure decision-making, it integrates military wargaming procedures, originally developed for defense planning, into cyber incident response training. Although tested with a specific simulator, the approach is transferable to similar systems. It does not aim to replace CRs but rather to enhance TTXs by reducing subjectivity and assumptions in scenario execution.

In addition to its technical distinctiveness, the method introduces methodological innovation. It adapts professional wargaming tools, particularly the Data Collection and Management Plan (DCMP) [4], to guide exercise design. The DCMP translates training goals into measurable objectives and participant roles, supporting a transparent and goal-oriented methodology. To the best of the authors’ knowledge, this represents the first application of the DCMP to cyber incident response training. The method was developed and tested in a joint civil–military setting and can be customized for organizations with varying levels of cyber maturity.

Unlike the existing training methods, this approach integrates emulated technical actions into a simulation framework designed to support decision-making by middle and senior management. It avoids real-time technical exploits and reduces dependence on experienced facilitators. By incorporating system wargaming principles that emphasize structured, rule-based scenario development and operational-level decision-making, it offers a viable alternative to traditional CRs and TTXs. The resulting emulation-supported training framework combines structured adjudication, rule-based logic, and the DCMP to enable realistic, time-sensitive incident response exercises for non-technical teams.

This study is guided by the following research questions:

RQ1: Can cyber incident response exercises be conducted using emulated technical actions instead of real-time execution, and, if so, what would such an exercise look like?

RQ2: What resources are needed to prepare and run such an exercise, including time, personnel, and technical complexity, and how do these compare with those required for traditional CR and TTX formats?

RQ3: Can the DCMP, originally used in military wargaming, be adapted to systematically structure and evaluate cyber incident response exercises?

These questions reflect the practical motivation behind the study. The aim is to explore whether an alternative exercise format can provide a realistic and efficient option for training management-level personnel involved in cyber incident response.

The remainder of this paper is structured as follows:

Section 2 presents related studies on cyber defense exercises and their findings.

Section 3 discusses the management levels and the challenges of the current exercise approaches.

Section 4 outlines the development and testing of our exercise method. The developed exercise concept is presented in Section 5 and compared to other approaches in Section 6.

Section 7 discusses this exercise concept. The concluding section summarizes our findings.

2. Related Work

Cyber exercises are essential for preparing organizations to handle cyber incidents. The traditional formats focus either on technical skills or management-level decision-making. Technical training typically uses CRs, which provide hands-on, real-time scenarios, while managers train using TTXs or wargames, structured simulations of competitive decision-making used to explore planning and consequences.

Guides for organizing computer security exercises [5,6,7,8,9,10,11,12], along with supporting studies [13], confirm two dominant types: simulations developing technical skills via CRs and TTXs aimed at management-level training. This dual structure is widely adopted, including in high-level NATO exercises [14]. CR-based formats emphasize technical competencies aligned with frameworks such as NICE [15], and they replicate attacker tactics, techniques, and procedures (TTPs, standardized methods used by threat actors during attacks) [16]. In contrast, TTXs focus on discussion-based interaction and policy-level planning, supporting awareness and non-technical procedure testing.

Chowdhury and Gkioulos [17] identified simulation systems positioned between CRs and TTXs. We grouped these systems into two categories based on their applicability to management training. The first includes tools oriented toward strategic planning or investment decisions, such as Cyber Arena [18], which focuses on long-term goals like hiring or adopting technologies under resource constraints rather than immediate incident response. Although detailed documentation is lacking, the available materials indicate that these exercises are scenario-based but not incident-driven. The second category includes CR or testbed systems adapted for management training. A testbed is a controlled environment used to simulate and observe the behavior of cybersecurity systems or configurations. Access to these platforms is often restricted, limiting insight into execution pacing or participant interaction. Many are commercial products, which reduces transparency. One example is Cyber Gym, described in academic sources [5,14] and promotional content [19,20]. It showcases industrial control systems (ICSs), the control technologies underpinning critical infrastructure, combined with active cyber defenses.

Some recent works apply digital twins, virtual models that replicate the behavior of real-world systems, for cybersecurity training. Sipola et al. [21] simulated a food supply chain to test cyber responses in logistics, while Holik et al. [22] emulated communication in digital substations for operator training. These methods focus on technical realism. In contrast, our approach is designed for management-level participants and focuses on decision-making rather than technical replication, enabling efficient and scalable training without requiring infrastructure models or deep technical knowledge.

Gamification, the use of game-like mechanics in non-game contexts to boost engagement, has been used to build cyber situational awareness [23]. One early tool is CyberCIEGE [24], which teaches basic cybersecurity concepts through interactive scenarios. The demo version introduces key principles and prevents attacks if configurations are correctly applied. Similarly, “Red vs. Blue” portals [2,25] emphasize strategic investment over direct engagement. These tools do not simulate realistic, time-sensitive incident workflows. CyberCIEGE abstracts threats for educational purposes, while Red vs. Blue emphasizes planning rather than operational coordination. Neither enables structured testing under real-time attack conditions. In contrast, the proposed method immerses participants in a simulated organization under active threat, requiring real-time decision-making and providing immediate feedback.

The educational value of TTXs for cybersecurity decision-making was further explored in [26]. In most cyber TTXs, facilitators guide scenario presentation, followed by group discussion of potential actions. National institutions have published templates for such formats [9,27,28], offering ready-to-use guidelines for adaptation.

Wargaming is another relevant concept. NATO defines wargames as structured representations of competition, allowing participants to make decisions and observe consequences [29]. Perla [30] distinguished wargames from TTXs by noting their outcome-driven progression. Our method applies this principle by updating the scenario based on participant decisions. Military wargames often explore cyber operations within a broader context [31,32,33,34] but rarely address organization-level incident management. Hobbyist and military games share mechanics such as role-taking, turn-based actions, and probabilistic resolution [35]. However, a review of hobby games [36,37] found no example that simulates incident response within an organizational setting. NATO’s cyber-wargame-based education programs are discussed in [38], highlighting the importance of experienced facilitators and the presence of a skilled control cell, an adjudication team responsible for enforcing realism and guiding scenario outcomes. Our earlier research provides further background on the use of wargames [39,40].

CRs serve multiple roles, including education, certification, and testing [41]. Švábenský [42] proposed combining CRs with serious games, which use game-based mechanics to enhance engagement and learning outcomes in non-entertainment contexts. Ukwandu et al. [43] developed a taxonomy identifying seven participant team types, highlighting the diversity of roles in cyber exercises. These classifications underscore the complexity of CR exercises. Ostby et al. [44] addressed the importance of support roles, while Granåsen and Andersson [45] proposed performance metrics to assess exercise effectiveness.

We identified no exercises that abstract technical execution while allowing participants to focus exclusively on decision-making. A comparable concept appears in MITRE’s report [5], but no concrete implementation was described. To our knowledge, no current framework emulates technical-level actions while applying structured wargaming methodology to incident response. Our proposed method bridges this gap by integrating wargaming principles with simulation automation, offering a new category of cyber exercises that are cost-effective, repeatable, and accessible for organizations with limited resources.

3. Background

Cyber incident response involves both technical experts and managers, making it a multi-level challenge. To establish the foundation for our approach, we begin by clarifying the levels of management and key terms used throughout this work.

According to [46], the civilian organizational hierarchy is generally structured as follows, from lowest to highest: front-line problem-solving workers, front-line management, middle management, and top management. From a national and military perspective [47], the hierarchy consists of national strategic, military strategic, operational, and tactical levels. The national strategic level defines long-term national goals and provides the overarching context for civilian and military operations. The military strategic level aligns military objectives with national priorities. The operational level develops campaign plans to meet these objectives, while the tactical level focuses on specific tasks and missions. Below the tactical level lies the technical domain, comprising the individuals who execute the tasks and their tools. This framework is generalized: it may vary across countries, and the boundaries between levels are often fluid. Higher management must understand lower-level needs and provide appropriate support.

Turning to wargaming, Johnson [48] identifies four levels: tactical, command–tactical, operational, and strategic. At the tactical and command–tactical levels, wargames involve fine-grained detail and simulate concrete actions. In cyber exercises, this corresponds to tasks typically conducted in CRs or testbeds. As the scope increases toward the strategic level, the focus broadens to include diplomatic, informational, military, and economic (DIME) factors. In organizational cyber terms, this translates into long-term investments in infrastructure, personnel, and procedures. The operational level, positioned between tactical and strategic, reflects day-to-day cyber operations and incident response procedures.

In a CR, actions replicate real production environments, meaning they operate under realistic time constraints. This is similar to military field exercises, where personnel demonstrate live capabilities and performance data are collected. Perla’s military cycle of research [30] integrates three elements: field exercises (which generate empirical data), wargames (which explore decision-making), and structured analysis. Together, these provide a comprehensive feedback loop. CRs focus on how to perform technical tasks, as outlined in frameworks like NICE [15], while TTXs and wargames emphasize what to do, focusing on procedural decisions [49].

In cyber contexts, technical-level exercises are usually conducted in CRs by technical staff or front-line personnel, corresponding to the technical domain. Each hierarchical level presents distinct challenges, regardless of whether the organization is military or civilian. When CRs are used across multiple management levels, a key issue arises: real-time execution of technical actions slows higher-level decision-making, which typically requires faster-paced, less technically detailed responses. As such, TTXs and wargames are better suited for management-level training due to their ability to abstract technical delays.

Developing CR scenarios typically requires introducing vulnerable software or services and creating exploits. Once defenders learn the remediation steps, the scenario loses effectiveness upon repetition, so new scenarios must be developed regularly. Because this work requires specialized skills, CR development is often outsourced to specialized companies with the necessary expertise [50].

The term “TTX” is broadly used in the literature to include various discussion-based formats, such as seminars, matrix games (structured decision-making exercises using predefined options), and wargames, which simulate interactive scenarios with outcome-based consequences [37,51].

Another key concern is the time and resource burden of preparing exercises. In many sectors, such as critical infrastructure and finance, contingency exercises are legally mandated [52,53,54]. As more organizations face such mandates, the central issue becomes how to prepare and deliver these exercises efficiently. Preparation time varies significantly: TTXs typically require 3–6 months, whereas CR-based exercises may take several years to develop [2,5,6,12,55]. Execution, by contrast, usually lasts hours or days [2,56]. Extended preparation requirements make CRs more viable for large organizations and less practical for small or resource-constrained entities.

The exercise concept introduced in this paper addresses these limitations. It is intended for incident response at the operational or management level, emphasizing decision-making during an active incident. Unlike TTXs, it does not rely on expert facilitators to assess actions, and, unlike CRs, it is faster and simpler to prepare. The goal is to enhance training in incident response procedures by combining realism with efficiency.

4. Methodology and Experiments

The proposed methodology was developed step by step based on the literature review, analysis of existing exercises, and repeated testing. This process is shown in Figure 1. While the figure shows the overall development path, the next sections explain how ideas from earlier studies and observed practices were gradually turned into a new exercise format through testing and feedback. This included small trials, early versions of the method, and more complete validation exercises that helped to shape the final version.

The final method was built around four main steps: (1) setting training goals using essential questions (EQs), (2) assigning roles based on those goals, (3) designing scenario events that match the roles and goals, and (4) running exercises using emulated technical actions in a rule-based simulation. These steps follow the DCMP framework, originally used in military wargaming and explained in Section 5.1.1. The method does not depend on any specific tool and can be adapted to different organizations. This overview provides a general structure, which is explained in more detail in the following sections.
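Read together, the four steps form a traceability chain from goals to EQs, roles, and scenario events. The following minimal sketch illustrates this chain under the assumption of a simple in-memory representation. The class and function names (`EssentialQuestion`, `ScenarioEvent`, `check_traceability`) are hypothetical and are not part of the DCMP itself or of any simulator. The sketch checks that every EQ is exercised by at least one event and that every role is tasked.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class EssentialQuestion:
    """An essential question (EQ) derived from a training goal (hypothetical model)."""
    eq_id: str
    goal: str
    text: str


@dataclass
class ScenarioEvent:
    """A scenario event (inject) with the EQs it exercises and the roles it tasks."""
    name: str
    eq_ids: set[str]
    roles: set[str]


def check_traceability(eqs: list[EssentialQuestion], roles: set[str],
                       events: list[ScenarioEvent]) -> list[str]:
    """List gaps in the goal -> EQ -> role -> event chain."""
    problems: list[str] = []
    known_eqs = {eq.eq_id for eq in eqs}
    covered_eqs: set[str] = set()
    tasked_roles: set[str] = set()
    for ev in events:
        # Events may only refer to EQs and roles that were defined in planning.
        for eq_id in sorted(ev.eq_ids - known_eqs):
            problems.append(f"{ev.name}: unknown EQ {eq_id}")
        for role in sorted(ev.roles - roles):
            problems.append(f"{ev.name}: unknown role '{role}'")
        covered_eqs |= ev.eq_ids & known_eqs
        tasked_roles |= ev.roles & roles
    # Every EQ needs at least one event that generates data for it.
    for eq in eqs:
        if eq.eq_id not in covered_eqs:
            problems.append(f"{eq.eq_id}: not exercised by any event")
    # Every role should be tasked by at least one event.
    for role in sorted(roles - tasked_roles):
        problems.append(f"role '{role}': no event assigned")
    return problems


eqs = [
    EssentialQuestion("EQ1", "Timely escalation",
                      "Is the management board informed within the agreed time?"),
    EssentialQuestion("EQ2", "Containment",
                      "Are compromised hosts isolated before lateral movement?"),
]
roles = {"incident manager", "technical team", "management board"}
events = [ScenarioEvent("EDR alert on workstation", {"EQ2"},
                        {"incident manager", "technical team"})]

# Reports that EQ1 has no event and that the management board is never tasked.
problems = check_traceability(eqs, roles, events)
print(problems)
```

Running such a check after each planning meeting would show gaps early, before any scenario content is built.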

To test how the method works in practice, it was used in exercises based on the Cyber Conflict Simulator (CCS) [57], a commercial platform. While one of the co-authors contributed to the early concept of CCS, the authors declare no commercial interests or intellectual property rights related to the simulator. Although this study was conducted using the CCS platform, the described methodology (including role-based tasking, emulated technical actions, scenario design, and the evaluation process) is tool-agnostic. Role-based tasking refers to assigning specific responsibilities to defined participant roles, while emulated technical actions represent the outcomes of technical procedures without real execution. The methodology can be applied to other simulation environments that support task sequencing, time control, and rule-based logic. As with many CR-based studies, the platform used here is proprietary and does not provide public access to its source code or internal logic. However, the methodology remains platform-independent and aligns with previous research based on commercial simulation tools.

The exercise method was developed through an iterative process, shown in Figure 1, that combined a literature review, findings from past exercises, hands-on testing, and initial validation. The literature review focused on identifying key steps in exercise preparation and implementation. Sources included official manuals, public guides, and after-action reports from earlier cyber and wargaming exercises.

In addition to the main exercises described in the next sections, we conducted a series of small-scale exercises and demonstrations involving civilian experts and military representatives, as outlined in Section 6.1.2. These sessions served to gather feedback from a broad spectrum of potential users. Participants included senior managers, military officers with training experience, wargame designers, and others not necessarily specialized in cybersecurity.

The method was refined through three phases: proof-of-concept trials, scaled-up exercises, and final validation tests. Each phase expanded on the previous one, increasing in complexity and revealing new development challenges. The process continued until no further significant improvements were identified.

The following subsections describe the exercises conducted in each phase. Only a portion is presented in this paper due to confidentiality agreements and the lack of explicit consent for academic publication. Nevertheless, anonymized insights and general observations are referenced in Section 6.1.1 and Section 7.

4.2. Simulation System

This subsection briefly describes the simulator’s core capabilities to support methodological transparency and reproducibility. It extends the previously published overview in [58], which focused on modeling the financial consequences of cyber attacks, whereas the present study introduces a novel exercise method. The methodology is tool-agnostic and applicable to any platform offering equivalent features. Accordingly, the paper does not aim to document the simulator itself but to present a structured approach for applying such tools in support of cyber defense exercises at the operational and management levels.

The simulator does not require integration with an organization’s operational infrastructure. It functions independently of live systems and therefore does not interface with tools such as SIEM (Security Information and Event Management) or SOAR (Security Orchestration, Automation, and Response) platforms, which are commonly used to monitor and coordinate security operations in real environments. Instead, it internally emulates the presence of such mechanisms within a self-contained environment.

The simulation system creates custom environments, called landscapes, and emulates technical tasks typically performed in a CR. Within this simulation environment, it is possible to recreate an organization’s network, security controls, and technical roles with an appropriate level of detail for each scenario. Simulated virtual technicians execute technical actions, and each technician can be assigned a specific skill set.
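To make the notion of a landscape more concrete, the following minimal sketch models segments with emulated security controls, hosts placed in segments, and virtual technicians with skill sets. It is an illustrative assumption, not the CCS data model, whose internals are not public. All names (`Landscape`, `qualified`, the skill labels) are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Segment:
    """A network segment; `controls` lists emulated security controls (hypothetical labels)."""
    name: str
    controls: set[str] = field(default_factory=set)


@dataclass
class Host:
    """A simulated object placed in one segment."""
    name: str
    segment: str
    services: set[str] = field(default_factory=set)


@dataclass
class Technician:
    """A virtual technician with an assigned skill set."""
    name: str
    skills: set[str]


@dataclass
class Landscape:
    segments: dict[str, Segment] = field(default_factory=dict)
    hosts: dict[str, Host] = field(default_factory=dict)
    technicians: dict[str, Technician] = field(default_factory=dict)

    def add_segment(self, seg: Segment) -> None:
        self.segments[seg.name] = seg

    def add_host(self, host: Host) -> None:
        # A host must belong to an existing segment.
        if host.segment not in self.segments:
            raise ValueError(f"unknown segment {host.segment!r}")
        self.hosts[host.name] = host

    def add_technician(self, tech: Technician) -> None:
        self.technicians[tech.name] = tech

    def qualified(self, skill: str) -> list[str]:
        """Names of technicians whose skill set includes `skill`."""
        return sorted(t.name for t in self.technicians.values() if skill in t.skills)


land = Landscape()
land.add_segment(Segment("office", {"edr"}))
land.add_segment(Segment("dmz", {"firewall", "ids"}))
land.add_host(Host("web01", "dmz", {"https"}))
land.add_host(Host("ws-017", "office"))
land.add_technician(Technician("tech_a", {"forensics", "windows"}))
land.add_technician(Technician("tech_b", {"network", "firewall"}))
print(land.qualified("firewall"))  # ['tech_b']
```

The level of detail is set by what is added to the model. A landscape for senior management might contain only a few aggregated segments, whereas a landscape for first-level managers could list individual hosts.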

During exercises, first-level managers interact with a web interface to monitor the current situation and assign tasks to virtual technicians. The simulator processes technical actions using predefined rule sets and incorporates probabilistic outcomes based on the scenario configuration and a built-in rule set. It enforces task dependencies such as required network access, credentials, and time constraints, then emulates the assigned actions and calculates their results after a brief processing interval. Users can adjust the simulation speed from real time to near-instantaneous at any point. For conceptual clarity, an illustrative example of how an emulated action is processed by the simulation system is provided in Appendix B.
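The kind of rule-based resolution described above can be sketched in a few lines. The sketch below is a simplified illustration under stated assumptions and does not reproduce the simulator’s internal logic. A hypothetical `ActionRule` names the segment and credential an action depends on, its simulated duration, and a success probability. The `speed` parameter is assumed to mean simulated seconds per wall-clock second.

```python
from __future__ import annotations

import random
from dataclasses import dataclass


@dataclass
class ActionRule:
    """Hypothetical rule describing one emulated technical action."""
    name: str
    needs_segment: str | None      # segment the technician must be able to reach
    needs_credential: str | None   # credential the technician must hold
    duration_min: float            # simulated duration in minutes
    success_probability: float     # chance the action succeeds once started


@dataclass
class Outcome:
    action: str
    status: str            # "blocked", "success" or "failure"
    sim_minutes: float     # simulated time consumed
    wall_seconds: float    # wall-clock time at the chosen speed
    reason: str = ""


def resolve(rule: ActionRule, reachable: set[str], credentials: set[str],
            speed: float, rng: random.Random) -> Outcome:
    """Emulate an action: check dependencies, draw the outcome, scale time.

    `speed` is simulated seconds per wall-clock second (1 = real time).
    """
    if rule.needs_segment and rule.needs_segment not in reachable:
        return Outcome(rule.name, "blocked", 0.0, 0.0,
                       f"no network path to {rule.needs_segment}")
    if rule.needs_credential and rule.needs_credential not in credentials:
        return Outcome(rule.name, "blocked", 0.0, 0.0,
                       f"missing credential {rule.needs_credential}")
    status = "success" if rng.random() < rule.success_probability else "failure"
    wall = rule.duration_min * 60.0 / speed
    return Outcome(rule.name, status, rule.duration_min, wall)


rng = random.Random(42)  # fixed seed so a run can be repeated exactly
isolate = ActionRule("isolate_host", "office", "domain_admin", 30, 0.9)

# The technician cannot reach the office segment, so the action is blocked.
print(resolve(isolate, {"dmz"}, {"domain_admin"}, speed=60, rng=rng).reason)
# With access, 30 simulated minutes pass in 30 wall-clock seconds at speed 60.
done = resolve(isolate, {"office"}, {"domain_admin"}, speed=60, rng=rng)
print(done.status, done.wall_seconds)
```

Seeding the random generator is one way to keep probabilistic outcomes reproducible across re-runs of the same scenario.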

All actions performed within the simulation system are recorded and can be replayed as action sequences, chronological reconstructions of user-triggered activities. Additionally, the entire simulation state, i.e., a snapshot of the system at a given moment, can be saved and reloaded. This enables repeatable scenario execution or continuation from any point. This functionality is highlighted due to its relevance for findings presented in Section 7.1.2.
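The difference between replaying an action sequence and reloading a saved snapshot can be illustrated with a toy state object. The `Simulation` class below is hypothetical and greatly simplified. It assumes the state is just a clock and a status per host, and it uses JSON for snapshots. It only shows the two mechanisms, not how any particular simulator stores them.

```python
from __future__ import annotations

import json
from dataclasses import dataclass, field


@dataclass
class Simulation:
    """Toy state: simulated clock, host statuses and a chronological action log."""
    clock: float = 0.0
    hosts: dict[str, str] = field(default_factory=dict)
    log: list[dict] = field(default_factory=list)

    def apply(self, at: float, host: str, status: str) -> None:
        """Apply a user-triggered action and record it in the log."""
        self.clock = at
        self.hosts[host] = status
        self.log.append({"t": at, "host": host, "status": status})

    def snapshot(self) -> str:
        """Serialize the complete state, including the log, to JSON."""
        return json.dumps({"clock": self.clock, "hosts": self.hosts, "log": self.log})

    @classmethod
    def restore(cls, blob: str) -> Simulation:
        """Reload a saved state so the exercise can continue from that point."""
        data = json.loads(blob)
        return cls(data["clock"], data["hosts"], data["log"])

    def replay(self, initial_hosts: dict[str, str], until: float | None = None) -> Simulation:
        """Rebuild the state by re-applying logged actions in time order."""
        sim = Simulation(hosts=dict(initial_hosts))
        for entry in sorted(self.log, key=lambda e: e["t"]):
            if until is not None and entry["t"] > until:
                break
            sim.apply(entry["t"], entry["host"], entry["status"])
        return sim


initial = {"web01": "ok", "ws-017": "ok"}
sim = Simulation(hosts=dict(initial))
sim.apply(5, "ws-017", "compromised")
sim.apply(12, "ws-017", "isolated")
saved = sim.snapshot()                 # save the state at t = 12
sim.apply(20, "web01", "compromised")

resumed = Simulation.restore(saved)    # continue from t = 12
print(resumed.clock, resumed.hosts)
print(sim.replay(initial, until=10).hosts)  # state as it was at t = 10
```

The snapshot supports continuation from a chosen point, while the log supports after-action review of how that point was reached.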

The simulator can model dependencies between business processes and IT systems. For example, the availability of a specific service can be defined as a function of parameters such as network path availability, accessible data, and the supporting software or hardware. The simulator can also display any parameter or combination of parameters on a dashboard. Dashboards reflect the current state of the simulation.
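As an illustration of defining a service’s availability as a function of its dependencies, the following sketch uses a hypothetical rule format. A process requires every component listed under `all_of` and at least one listed under `any_of`. Component names, including the network-path label, are invented for the example and do not reflect how CCS expresses such dependencies.

```python
from __future__ import annotations

# Hypothetical dependency model: a process needs every component in
# "all_of" and at least one component in "any_of" (if that list is given).
DEPENDENCIES: dict[str, dict[str, list[str]]] = {
    "payroll": {"all_of": ["hr_db", "app_server", "path:office->datacenter"]},
    "web_shop": {"all_of": ["web01"], "any_of": ["db_primary", "db_replica"]},
}


def available(process: str, state: dict[str, bool],
              deps: dict[str, dict[str, list[str]]] = DEPENDENCIES) -> bool:
    """True if the process's dependencies are satisfied in the current state."""
    dep = deps[process]
    all_ok = all(state.get(c, False) for c in dep.get("all_of", []))
    alternatives = dep.get("any_of", [])
    any_ok = not alternatives or any(state.get(c, False) for c in alternatives)
    return all_ok and any_ok


def dashboard(state: dict[str, bool],
              deps: dict[str, dict[str, list[str]]] = DEPENDENCIES) -> dict[str, str]:
    """Map every business process to UP/DOWN for display."""
    return {p: "UP" if available(p, state, deps) else "DOWN" for p in deps}


state = {"hr_db": True, "app_server": True, "path:office->datacenter": True,
         "web01": True, "db_primary": False, "db_replica": True}
print(dashboard(state))  # {'payroll': 'UP', 'web_shop': 'UP'}

state["path:office->datacenter"] = False  # e.g. a segment isolated during response
print(dashboard(state))  # {'payroll': 'DOWN', 'web_shop': 'UP'}
```

A model like this lets managers see the business impact of a containment decision, such as isolating a segment, directly on the dashboard.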

As outlined in Section 1, in traditional TTXs, facilitators assess what actions are possible and determine their outcomes. In exercises assisted by CCS, the simulation system assumes the role of the control cell, autonomously assessing and emulating the outcomes of technical tasks. Whereas CRs execute actions in real time, this system emulates them much faster, often instantly. This design enables decision-makers, from first-level managers to top management, to concentrate on decision-making while the simulator manages technical execution. This represents a novel approach to conducting cyber incident response exercises; however, neither the approach nor the simulator currently has documented use cases or established best practices.

The information provided in this section refers exclusively to externally visible functionalities and does not disclose any proprietary or internal mechanisms of the simulator.

4.5. Final Validation Tests

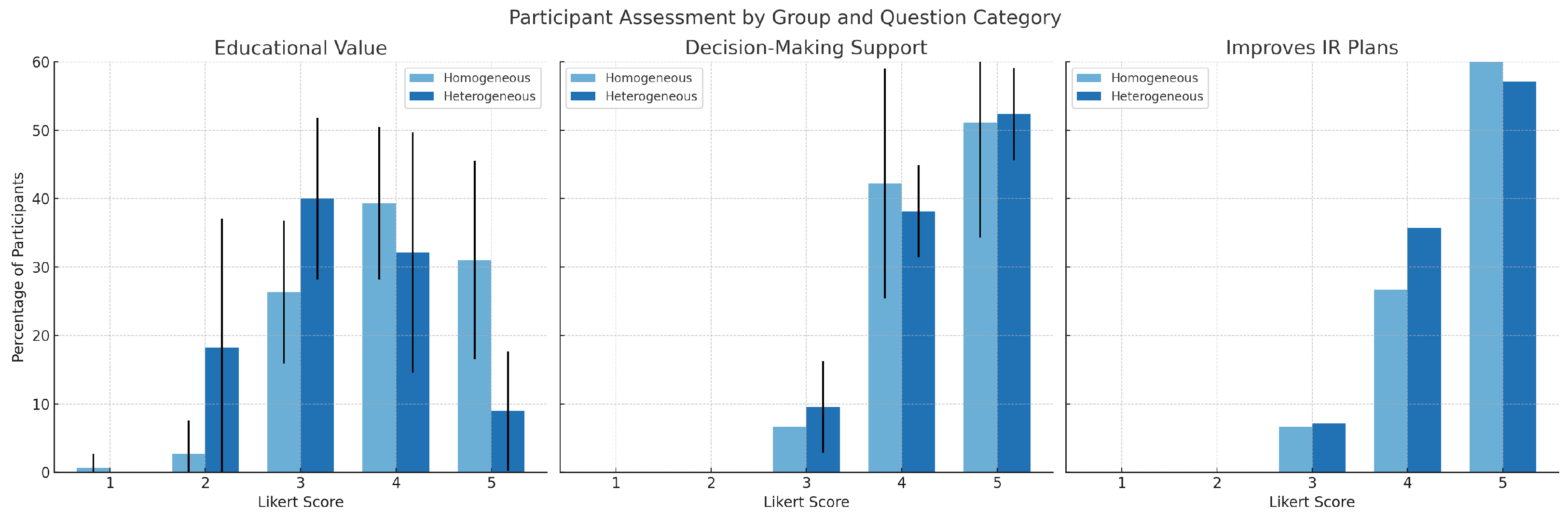

At this stage, we considered the exercise concept ready for testing with actual organizations and their employees. This phase aimed to (i) evaluate preparation procedures and (ii) test the concept using real organizations and systems. Several additional tests were conducted using a similar methodology; however, due to contractual obligations, we are unable to disclose full details of those sessions. Instead, we provide a high-level overview of two exercises conducted with the Croatian Military Academy (CMA) and the University of Zagreb, Faculty of Electrical Engineering and Computing (FER).

The exercise was initially conducted with the support of CMA, which enabled structured testing with their officers and officer candidates. This was followed by a planned re-run in collaboration with FER, allowing us to validate the concept and compare outcomes across professional and academic contexts. The simulated unclassified military network included 500 simulated objects with associated users, distributed across 80 network segments.

A distinctive feature of this phase was that the same scenario was executed twice: first with military participants, then with civilians. In the first test, military trainees defended their own network. For the second test, CMA requested anonymization of the network, after which the exercise was conducted with civilian participants from FER. Except for the anonymization, all other parameters of the environment remained unchanged.
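The paper does not describe how the network was anonymized. One way to anonymize a network while keeping all other parameters unchanged is to replace names with keyed pseudonyms while preserving host-to-segment membership and segment links. The following sketch shows that approach under this assumption. The function names, the `host-`/`seg-` prefixes, and the 8-character digest length are illustrative choices only.

```python
from __future__ import annotations

import hashlib
import hmac


def pseudonym(name: str, key: bytes, prefix: str) -> str:
    """Deterministic keyed pseudonym: the same name and key always give the same label."""
    digest = hmac.new(key, name.encode("utf-8"), hashlib.sha256).hexdigest()[:8]
    return f"{prefix}-{digest}"


def anonymize(hosts: dict[str, str], links: list[tuple[str, str]], key: bytes
              ) -> tuple[dict[str, str], list[tuple[str, str]]]:
    """Rename hosts and segments while keeping host-to-segment membership
    and segment-to-segment links unchanged.

    `hosts` maps host name -> segment name; `links` lists connected segment pairs.
    """
    all_segments = set(hosts.values()) | {s for pair in links for s in pair}
    seg = {s: pseudonym(s, key, "seg") for s in all_segments}
    new_hosts = {pseudonym(h, key, "host"): seg[s] for h, s in hosts.items()}
    # Truncated digests could in principle collide; refuse rather than merge objects.
    if len(new_hosts) != len(hosts) or len(set(seg.values())) != len(seg):
        raise ValueError("pseudonym collision; use a longer digest")
    new_links = [(seg[a], seg[b]) for a, b in links]
    return new_hosts, new_links


hosts = {"hq-fw01": "hq-dmz", "hq-ws12": "hq-office",
         "hq-ws13": "hq-office", "branch-ws03": "branch-office"}
links = [("hq-dmz", "hq-office"), ("hq-office", "branch-office")]
anon_hosts, anon_links = anonymize(hosts, links, key=b"exercise-secret")
print(anon_links)
```

Because the mapping is keyed and deterministic, the exercise controllers can re-identify objects if needed, while participants see only neutral labels.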

In both cases, participants were divided into three teams, as shown in Table 1.

The first exercise involved military cybersecurity officers (NATO OF-3 level) acting as incident managers, with officer candidates in the final phase of their training assigned to technical teams. These candidates were preparing for future roles in military Security Operations Centers (SOCs), which monitor and respond to threats in real time, and Computer Security Incident Response Teams (CSIRTs), which manage the investigation and coordination of cybersecurity incidents. Senior officers formed the management board and also observed the session. The second exercise involved civilian students from FER, who participated on a voluntary basis.

Afterward, participants completed a voluntary questionnaire regarding their experience. The full list of questions is provided in Appendix A, while the results are presented in Section 6.1.2. It is important to emphasize that the results presented in Section 6.1.2 are based solely on these two exercises, for which formal written consent was obtained from all participants. During the development phase, more than ten additional exercises were conducted with various public and private organizations. However, due to confidentiality agreements and the absence of explicit consent for research publication, data from those sessions were excluded from the statistical analysis. Nonetheless, they are referenced in anonymized form in Section 6.1.1. Moreover, the exercise method was significantly refined over those sessions, and only the final iteration was considered mature enough for structured evaluation.

This testing phase allowed us to explore additional considerations introduced in the previous section:

- How learning outcomes vary when:
  - (a) students train using a digital clone of their own organization, compared to
  - (b) a heterogeneous group of students working with an unfamiliar network.
- Whether this exercise format can be used to develop decision-making skills.
- Whether this exercise format can assist in creating or improving existing incident response plans.

5. Proposed Exercise Method

Before presenting the method based on emulating technical actions, it is important to clarify that exercises should not be treated as one-time events. Instead, as per established military training methodologies [65], exercises should be understood as a process comprising four key phases:

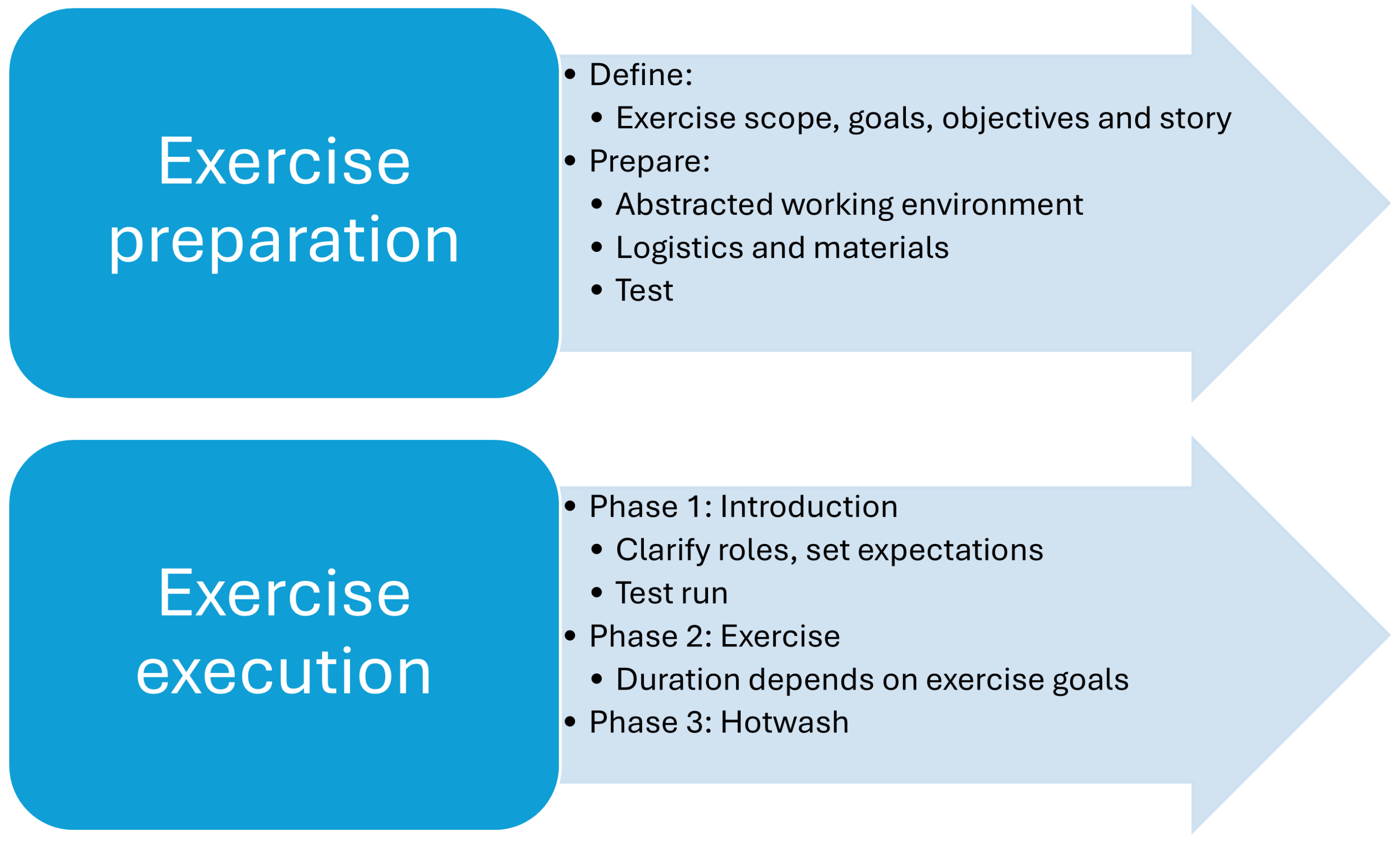

This work focuses on the preparation and execution phases and introduces a structured methodology designed to improve the planning and conduct of cyber defense exercises. Unlike most existing frameworks, which emphasize organizational coordination and general scenario building [10,12], the method presented here offers an operationally focused structure that links exercise goals to specific participant actions through a simulation-supported approach.

The method relies on a simulation system capable of emulating realistic technical conditions while granting participants full control over decision-making. It is particularly suited for training first-line managers and executive-level roles by offering scalable scenarios, reduced preparation overhead, and increased fidelity. While inspired by principles from military wargaming, the approach has been adapted to address the specific requirements of cyber defense training.

According to the literature reviewed during this research [5,6,7,8,9,10,12], exercise preparation often spans several months or even years. The execution phase, visible to participants and observers, typically lasts only a few hours or days and is thus the shortest phase of the process.

The proposed exercise method is based on the emulation of technical actions using a simulation system. This approach specifically employs an open-type simulation system, wherein the term simulation system refers to a tool that performs actions based on user input. In military terminology, open-type simulations actively involve human participants, pausing or slowing down the scenario to await decisions, rather than executing numerous iterations automatically without interaction. Their primary objective is to support human training and decision-making, not to produce statistically optimized outcomes. This contrasts with automated or closed simulations, which operate solely on predefined rules. The method targets first-level managers through to senior leadership and, in military terms, focuses on the operational level of response without extending into strategic-level considerations or technical implementation. It is best described as a wargame supported by simulation.
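The distinction between open-type and closed-type use can be illustrated with a single toy scenario loop in which only the source of decisions changes. In this sketch, which rests on simplifying assumptions, events are scripted tuples and decisions come from a callback. `ask_participant` stands for the open-type case, where the loop blocks until a human answers. `fixed_policy` stands for the closed-type case, where a predefined rule answers and runs can be repeated without interaction. All names are hypothetical.

```python
from __future__ import annotations

from typing import Callable

# (simulated minute, description, whether the event requires a decision)
Event = tuple[int, str, bool]


def run_scenario(events: list[Event], decide: Callable[[str], str]) -> list[tuple[int, str]]:
    """Advance through scripted events in time order.

    At every decision point the loop blocks on `decide`, so the scenario
    does not move to the next event until an answer is returned.
    """
    transcript: list[tuple[int, str]] = []
    for minute, text, needs_decision in sorted(events):
        transcript.append((minute, text))
        if needs_decision:
            transcript.append((minute, f"decision: {decide(text)}"))
    return transcript


def ask_participant(text: str) -> str:
    """Open-type use: a human answers at the console (blocks until input)."""
    return input(f"{text}\nYour decision> ")


def fixed_policy(text: str) -> str:
    """Closed-type use: a predefined rule answers without human involvement."""
    return "isolate host" if "alert" in text else "escalate"


events: list[Event] = [
    (0, "EDR alert on ws-017", True),
    (15, "Outbound traffic to unknown IP", True),
    (5, "Helpdesk ticket: slow workstation", False),
]

# Closed-type batch: repeated runs with no human in the loop.
batch = [run_scenario(events, fixed_policy) for _ in range(3)]
# Open-type session (interactive): run_scenario(events, ask_participant)
```

In the proposed method, every decision point is handled in the open-type manner, since the goal is to train the people making the decisions rather than to optimize a policy.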

These characteristics significantly influence the preparation and conduct of exercises. Existing guides typically serve as high-level frameworks designed to accommodate various exercise types and organizational contexts. Consequently, they emphasize organizational structure and delegate implementation details to the planners.

Our proposed exercise method structures the process into two primary phases, preparation and execution, as illustrated in Figure 2. As outlined in NIST SP 800-84 [7], preparation begins by defining the scope (e.g., involved organizational units) and exercise goals. The scope determines the level of abstraction; for instance, the exercise may replicate the entire organization or focus on a simplified view tailored to senior management. Lower management levels generally require less abstraction, but in every case the chosen level must remain aligned with the exercise’s intended objectives. Similar to the NARUC guide [12], this process includes formulating a main exercise narrative, which serves as the foundation for subsequent planning.

5.1. Planning and Preparation

A key finding is that exercises can be prepared and executed in less time and with fewer resources when technical steps are abstracted.

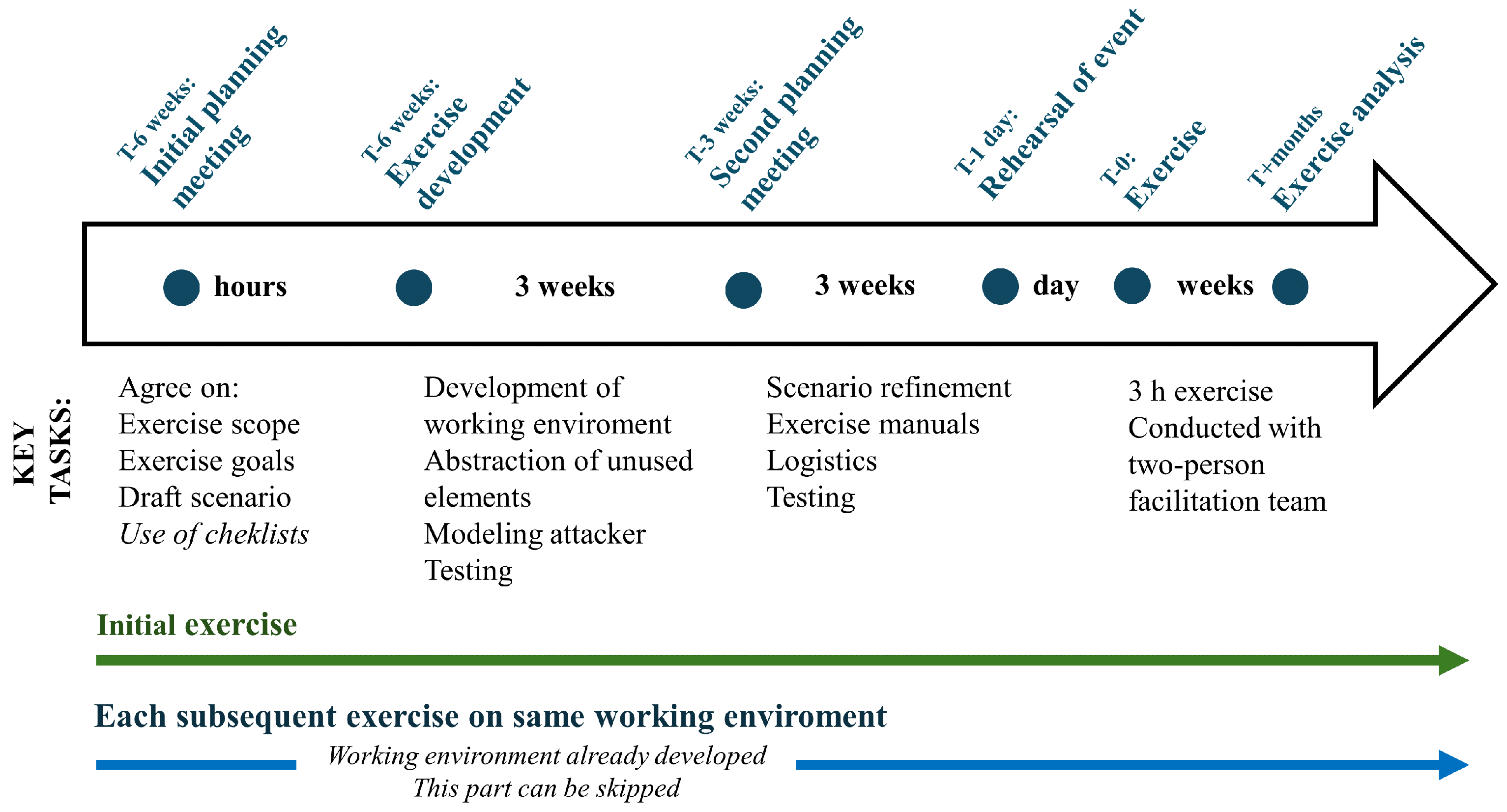

Figure 3 depicts the primary steps involved in exercise preparation and execution. The process begins with an initial planning meeting, during which the following inputs should be clearly defined: scope, goals, and a preliminary scenario or exercise narrative. Following the initial meeting, development can begin almost immediately. A second planning meeting should be held at the midpoint of development to refine existing components and finalize pending decisions. We recommend conducting an internal final test at the exercise location one day prior to the event to confirm readiness. The exercise itself typically lasts only a few hours.

For organizations planning recurring exercises, the “Development of Working Environment” step shown in Figure 3 can often be skipped, because the simulation environment may be reused, either partially or in full, in future exercises. Exercise analysis varies depending on expectations and the required level of detail and is therefore not addressed in this section.

5.1.1. Development Using a DCMP-Based Methodology

This approach emphasizes the importance of establishing agreement on several foundational elements during the initial planning phase of the exercise:

These elements form the basis for defining simulation complexity, designing narrative flow, and identifying key roles. Since the organization requesting the exercise has already acknowledged the need for such an activity, it should provide preliminary input on expected participants and define any overarching constraints. It typically also proposes an initial set of goals. The final element, while not mandatory, is a unifying narrative that ensures internal coherence across all events.

The number of participants and simulated roles are directly related to the simulation environment’s size and the required level of abstraction, both of which influence the preparation timeline. At this stage, all values are treated as provisional. Depending on the scope, network segments may be described in detail or abstracted to include only representative elements. These inputs are refined iteratively throughout planning. Goals may range in scope—from specific tasks to broader themes. Regardless of granularity, the method emphasizes structured decomposition to maintain consistency and ensure participant engagement.

Following this meeting, we propose the use of an adapted Data Collection and Management Plan (DCMP) methodology to systematically decompose exercise goals into fundamental elements and connect them to participants and the scenario. In doing so, we methodologically ensure goal clarity and establish a foundation for scenario design. The DCMP process includes the following steps:

1. Decompose each goal into sub-issues and sub-sub-issues until no further meaningful refinement is possible. These final components are referred to as essential questions (EQs);
2. Assign each EQ to appropriate individuals based on organizational roles, identifying which teams or departments (e.g., management, legal, or incident response) are best positioned to address them;
3. Design scenario events that evoke responses to each EQ;
4. Define the data required for participants to interpret these events and respond appropriately. This includes facts, cues, or situational indicators embedded within the scenario.
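Steps 1 and 2 can be sketched as a tree whose leaves are the EQs. The following minimal Python sketch assumes a simple `Issue` node type (a hypothetical name, not part of the authors' tooling) and an invented ransomware goal; it collects the leaves of the decomposition and lists EQs that still lack a responsible role.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Issue:
    """Hypothetical node of a goal-decomposition tree: a goal, a (sub-)sub-issue, or an EQ (leaf)."""
    text: str
    children: list[Issue] = field(default_factory=list)
    role: Optional[str] = None  # responsible role; only meaningful for leaves (EQs)


def essential_questions(node: Issue) -> list[Issue]:
    """Collect the leaves of the tree, i.e. issues that are not refined further (EQs)."""
    if not node.children:
        return [node]
    leaves: list[Issue] = []
    for child in node.children:
        leaves.extend(essential_questions(child))
    return leaves


def unassigned(eqs: list[Issue]) -> list[str]:
    """Return EQs that still lack a responsible role (step 2)."""
    return [eq.text for eq in eqs if eq.role is None]


# Invented example goal, decomposed into two sub-issues and four EQs.
goal = Issue("Evaluate escalation during a ransomware incident", [
    Issue("Detection and reporting", [
        Issue("When does the SOC lead inform senior management?", role="SOC lead"),
        Issue("Who decides to notify the national CSIRT?", role="CISO"),
    ]),
    Issue("Service continuity", [
        Issue("Which services are shut down first?", role="Operations manager"),
        Issue("Is legal counsel consulted before public disclosure?"),
    ]),
])

eqs = essential_questions(goal)
print(len(eqs))         # 4
print(unassigned(eqs))  # ['Is legal counsel consulted before public disclosure?']
```

An unassigned EQ signals either a missing participant or an EQ the planned exercise format cannot address.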

EQs serve as the fundamental building blocks of the scenario. Each EQ represents a specific decision, judgment, or observation that participants are expected to make. Unlike broad or abstract objectives, EQs are concrete and directly tied to participant roles and expected behaviors. Only after the list of EQs is finalized can injects be created. An inject is a pre-planned scenario element that introduces new information, stimuli, or situational changes into the environment with the intent of prompting participant action or decision-making. Each inject is deliberately crafted to trigger a response to one or more EQs, ensuring that all scenario events are purposeful and aligned with overarching goals. This linkage also enables evaluators to assess whether objectives have been achieved based on participant responses to specific EQ-driven events.
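Because every inject is meant to trigger at least one EQ, the linkage can be checked before the exercise. In this sketch the `Inject` record, the identifiers, and the example injects are hypothetical; it flags EQs that no inject triggers and injects that serve no known EQ.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Inject:
    """Hypothetical pre-planned scenario element; eq_ids names the EQs it should trigger."""
    inject_id: str
    description: str
    eq_ids: tuple[str, ...]


def uncovered_eqs(eq_ids: set[str], injects: list[Inject]) -> set[str]:
    """EQs that no inject is designed to trigger, so the scenario cannot evaluate them."""
    covered = {eq for inj in injects for eq in inj.eq_ids}
    return eq_ids - covered


def orphan_injects(eq_ids: set[str], injects: list[Inject]) -> list[str]:
    """Injects that reference no known EQ and therefore serve no stated goal."""
    return [inj.inject_id for inj in injects if not set(inj.eq_ids) & eq_ids]


eqs = {"EQ1", "EQ2", "EQ3"}
injects = [
    Inject("I1", "SIEM raises an alert on an unusual login", ("EQ1",)),
    Inject("I2", "Ransom note appears on file servers", ("EQ1", "EQ2")),
    Inject("I3", "Unrelated hardware failure", ("EQ9",)),
]
print(uncovered_eqs(eqs, injects))   # {'EQ3'}
print(orphan_injects(eqs, injects))  # ['I3']
```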

This decomposition process clarifies the intent behind each goal and outlines what the scenario must deliver. Initial goals are rarely detailed; actionable elements emerge through decomposition. For guidance on this process, see Chapter 13 of Shilling’s report [66]. Once EQs are defined and linked to roles, the complete list of required participants can be finalized. Adjustments at this stage may reveal that the initial exercise format is suboptimal for achieving all objectives.

At this point, the similarities with traditional wargaming applications of the DCMP end. While military wargames use custom methods, models, and tools (MMTs) [4] to address each EQ individually, this method leverages simulation platforms to standardize components. A key feature is the emulation of technical actions. The number and types of usable MMTs are limited by the simulation platform’s capabilities. The simulator provides predefined actions for attackers and defenders, as described in Section 4.2 and Appendix B. Planners must map each EQ to one or more supported emulated actions, narrative events, or both. This process transforms high-level goals into decision-relevant EQs, assigns them to roles, and builds corresponding events, ensuring that each event directly supports the exercise objectives. A structured event sequence maintains focus and fosters participant involvement. This approach differs from guides such as those of ENISA [10] or the CCA [8], which predefine participants and build scenarios around them.
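Mapping EQs onto what the simulator can actually play can likewise be checked mechanically. The sketch assumes a hypothetical catalogue `SUPPORTED_ACTIONS`; the real set of attacker and defender actions is defined by the simulation platform (Section 4.2 and Appendix B), and the action names below are invented for illustration.

```python
from __future__ import annotations

# Hypothetical action catalogue; the simulator's actual actions are defined
# by the platform itself (Section 4.2 and Appendix B).
SUPPORTED_ACTIONS = {"isolate_host", "block_ip", "restore_backup", "scan_network"}


def validate_mapping(mapping: dict[str, dict[str, list[str]]]) -> dict[str, list[str]]:
    """Report EQs whose mapping cannot be played in the simulator."""
    problems: dict[str, list[str]] = {}
    for eq, entry in mapping.items():
        actions = entry.get("actions", [])
        narrative = entry.get("narrative", [])
        issues = []
        if not actions and not narrative:
            issues.append("no emulated action or narrative event")
        unsupported = [a for a in actions if a not in SUPPORTED_ACTIONS]
        if unsupported:
            issues.append("unsupported action(s): " + ", ".join(unsupported))
        if issues:
            problems[eq] = issues
    return problems


mapping = {
    "EQ1": {"actions": ["isolate_host"]},
    "EQ2": {"narrative": ["Journalist asks about an outage"]},
    "EQ3": {"actions": ["reflash_plc_firmware"]},
    "EQ4": {},
}
print(validate_mapping(mapping))
# {'EQ3': ['unsupported action(s): reflash_plc_firmware'], 'EQ4': ['no emulated action or narrative event']}
```

An EQ flagged here would need either a narrative event or a different exercise format, mirroring the adjustment described above.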

Only after this decomposition can the simulation environment be constructed. Elements relevant to EQ activation must be specified in detail, while peripheral systems may be simplified or mocked, following wargaming best practices. This significantly reduces preparation time. For management-level exercises, excessive technical detail can lead to low-value interactions that detract from decision-making. At this point, organizers must provide data to support scenario development at an appropriate fidelity level. Based on our observations, when inventory data are available, the environment can typically be prepared within a few weeks, as discussed in Section 6.

The outcome of this process is a consolidated table listing all events, the related EQs, and responsible participants. This table serves both as a real-time monitoring tool and a post-exercise evaluation reference. It also supports assessment of overall success since objectives are decomposed into trackable elements. The table indicates which objectives were addressed and to what extent.
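One way to read the consolidated table after the exercise is to compute, per objective, the share of its EQs that participants actually addressed. The row layout, identifiers, and the coverage ratio below are assumptions made for this sketch, not a metric defined by the method.

```python
from collections import defaultdict

# Hypothetical rows of the consolidated table:
# (event, EQ, responsible participant, objective, addressed during the exercise)
rows = [
    ("E1 phishing alert", "EQ1", "SOC lead", "G1", True),
    ("E2 ransom note", "EQ2", "CISO", "G1", True),
    ("E2 ransom note", "EQ3", "Legal advisor", "G2", False),
    ("E3 press inquiry", "EQ4", "PR officer", "G2", True),
]


def objective_coverage(rows):
    """Share of each objective's EQs that were addressed by participants."""
    all_eqs = defaultdict(set)
    addressed_eqs = defaultdict(set)
    for _event, eq, _participant, objective, addressed in rows:
        all_eqs[objective].add(eq)
        if addressed:
            addressed_eqs[objective].add(eq)
    return {obj: len(addressed_eqs[obj]) / len(eqs) for obj, eqs in all_eqs.items()}


print(objective_coverage(rows))  # {'G1': 1.0, 'G2': 0.5}
```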

In practice, not all EQs can be addressed in a single exercise. Some require prerequisites that may not be feasible, and certain roles may be unavailable. Additionally, some EQs function more effectively as performance indicators than interactive prompts. For instance, a goal such as “How does information flow through different hierarchical levels?” depends on specific triggers that allow observers to evaluate communication pathways.

Although the DCMP is well established in military wargaming, its structured application in cyber defense training has, to our knowledge, not yet been systematically implemented. In this work, the DCMP has been adapted to design scenario-driven cyber exercises by linking objectives to specific EQs, assigning responsibilities, and creating corresponding events. This ensures each scenario element is purposeful and aligned with training goals. The integration of the DCMP thus provides a structured, practical, and repeatable method for developing realistic, goal-oriented exercises.

5.2. Exercise Execution

This section describes the practical execution phase of the exercise, focusing on maintaining realism, engaging participants in their assigned roles, and preserving decision-making autonomy across all organizational levels. Following the structured development of scenarios through the DCMP methodology, participants enter a simulated environment that replicates real-world decision pressures and operational complexity.

In our tests, most participants were using the simulation system and this type of exercise for the first time. To address this, each session begins with a 45-min introduction designed to explain participant roles, set expectations, and conduct a short test scenario. This phase is followed by a brief break prior to the start of the main exercise. The scenario typically begins under normal operational conditions and gradually escalates through minor incidents, culminating in a major crisis.

Depending on the desired level of abstraction and the number of simulated components, exercises may involve multiple tiers of management. The core structure involves first-level managers overseeing simulated technicians, who execute technical actions as described in Section 4. The simulator’s time-acceleration capability, as outlined earlier, enables rapid feedback on technical actions, supporting accelerated decision-making.
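The effect of time acceleration on feedback latency is simple arithmetic: an action lasting t simulated minutes under an acceleration factor k takes 60·t/k seconds of real time. The factor of 60 and the action durations used here are hypothetical examples, not parameters of the authors' simulator.

```python
def wall_clock_seconds(simulated_minutes: float, acceleration: float) -> float:
    """Real seconds a participant waits for an action of the given simulated length."""
    if acceleration <= 0:
        raise ValueError("acceleration must be positive")
    return simulated_minutes * 60.0 / acceleration


# Hypothetical action durations (simulated minutes) and a 60x acceleration factor.
durations = {"isolate host": 15, "restore server from backup": 240}
for action, minutes in durations.items():
    print(f"{action}: {wall_clock_seconds(minutes, 60):.0f} s of real time")
# isolate host: 15 s of real time
# restore server from backup: 240 s of real time
```

A four-hour restoration thus returns its result to the first-level manager within four real minutes, which is what makes decision-focused pacing possible.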

Higher-level managers, such as department heads or executive officers, interact with simulation dashboards that provide real-time insights, such as the status of critical services, projected financial losses from service disruptions, and the overall organizational impact. These dashboards are further described in Section 4.2.
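As an illustration of the kind of figure such a dashboard might show, the sketch below separates losses already incurred from a projection that assumes each disrupted service is restored at its estimated time. The `Service` fields, the hourly loss values, and the linear cost model are all assumptions; the simulator's own loss model may differ.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class Service:
    name: str
    hourly_loss: float                   # hypothetical loss per hour of unavailability
    down_since: Optional[float] = None   # simulated hour the outage began; None = running
    eta_restore: Optional[float] = None  # estimated simulated hour of restoration, if known


def loss_summary(services: list[Service], now: float) -> tuple[float, float]:
    """Return (losses incurred so far, projected total losses) at simulated hour `now`.

    Services without a restoration estimate contribute only incurred losses,
    because their future cost cannot be projected under this simple model.
    """
    incurred = projected_extra = 0.0
    for s in services:
        if s.down_since is None:
            continue
        incurred += (now - s.down_since) * s.hourly_loss
        if s.eta_restore is not None:
            projected_extra += max(s.eta_restore - now, 0.0) * s.hourly_loss
    return incurred, incurred + projected_extra


services = [
    Service("billing", 10_000, down_since=2, eta_restore=8),
    Service("customer portal", 2_000, down_since=4),
    Service("email", 500),
]
print(loss_summary(services, now=5))  # (32000.0, 62000.0)
```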

All decisions during the exercise are made solely by participants. The facilitator’s role, as described in Section 7.1.3, is not to influence outcomes but to guide the scenario toward fulfilling the predefined objectives.

To maintain realism, the simulated environment should mirror actual operational conditions. Network architecture, security controls, and decision-making processes should reflect real-world workflows, enabling participants to engage with the scenario in a way that aligns with their actual responsibilities. This layered structure enables each level of management to make role-appropriate decisions, while the simulation system ensures that emulated technical actions are both feasible and consistent. Unlike traditional TTXs, this method minimizes bias and human error in interpreting action outcomes.

Depending on the scenario, participants may interact with additional roles, such as legal advisors, media representatives, or external stakeholders. They may also negotiate with simulated attackers or coordinate with Computer Security Incident Response Teams (CSIRTs), as described in Section 4.5.

Observations from our test exercises suggest that a duration of two to three hours offers an effective balance between operational realism and logistical feasibility, particularly given the difficulty of securing extended availability from key decision-makers. Longer exercises are feasible, but organizations must then ensure that the required personnel remain available for the full duration of the activity.

At the conclusion of the exercise, we recommend conducting a brief hotwash—an informal debrief held immediately after the session to capture initial impressions, clarify ambiguous events, and highlight key lessons learned. The hotwash facilitates early feedback, encourages participant reflection on their decision-making, and prepares the ground for a more detailed after-action review (AAR).

7. Discussion

This section is structured around a set of recurring design and implementation challenges observed during test exercises. The first part examines the management of accelerated simulation time and its effects on team coordination, cognitive load, and decision-making. The subsequent sections explore how attacker behavior was modeled to support realism while minimizing preparation overhead, and how facilitation roles were adapted to maintain participant engagement with minimal staffing.

Building on these operational aspects, the discussion then addresses the broader flexibility of the proposed method, particularly how goal-driven scenario structuring, targeted abstraction, and the reuse of existing components contribute to streamlining exercise design. A separate subsection provides a comparison with related work to highlight the methodological contributions and trade-offs. Finally, the section reflects on participant behavior, discusses the implications for data collection and evaluation, and situates the method within the wider field of cyber wargaming practices. The limitations and areas for future development are also outlined.

7.6. Comparison with Related Work

To contextualize the contribution of the proposed method, this section compares it with the established training formats and tools described in Section 2. The focus is on differences in purpose, structure, and applicability, particularly regarding decision-making under pressure and accessibility for organizations with limited resources. Rather than repeating previously described features, the comparison highlights how the method addresses specific limitations of existing approaches, including TTXs, CRs, gamified tools, and simulation platforms.

Traditional management-level formats, particularly TTXs, rely heavily on facilitator judgment to determine the outcomes of participant decisions. This reliance on subjective interpretation limits consistency and makes exercises harder to replicate at scale. The proposed method addresses this limitation by incorporating a rule-based simulation engine that automates adjudication (see Section 5). This allows facilitators to concentrate on scenario progression rather than interpreting participant intent. The facilitator’s role thus shifts from evaluator to orchestrator of the exercise flow (see Section 7.1.3).
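The difference between facilitator judgment and rule-based adjudication can be shown with a toy rule set: the same action applied to the same system state always yields the same outcome. The `Host` model, action names, and rules below are hypothetical and far simpler than a real simulation engine.

```python
from dataclasses import dataclass


@dataclass
class Host:
    """Hypothetical, heavily simplified state of one simulated system."""
    name: str
    compromised: bool
    isolated: bool = False
    has_backup: bool = True


def adjudicate(action: str, host: Host) -> str:
    """Apply a fixed rule to an action; the same state and action always give the same result."""
    if action == "isolate":
        host.isolated = True
        return "contained" if host.compromised else "isolated (service unavailable)"
    if action == "restore":
        if not host.has_backup:
            return "failed: no backup available"
        if not host.isolated:
            # Restoring while the attacker still has access leaves the host compromised.
            return "reinfected: host was not isolated before restore"
        host.compromised = False
        return "restored"
    return "rejected: action not supported by the simulator"


db = Host("db01", compromised=True)
print(adjudicate("restore", db))  # reinfected: host was not isolated before restore
print(adjudicate("isolate", db))  # contained
print(adjudicate("restore", db))  # restored
```

Because the rules are explicit, two groups making the same sequence of decisions receive identical consequences, which is the repeatability argument made above.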

In contrast to the general guides for cyber exercise planning [5,6,7,8,9,10,11,12], which treat scenario design, inject development, and evaluation as separate activities, our method begins with clearly defined goals. Using the DCMP framework (see Section 5.1.1), these goals are translated into specific questions and tasks, which then drive scenario content, participant roles, and evaluation metrics. This structure ensures alignment between the training objectives and all elements of the exercise lifecycle.

CRs represent the dominant model for technical cybersecurity training [43,74], providing high-fidelity environments supported by specialized teams. However, they are resource-intensive and tightly bound to real-time execution, which limits flexibility. Management-level participants often need to wait for technical teams to complete their actions, reducing opportunities for reflection and scenario adjustment. These constraints make CRs less accessible to smaller organizations and less suitable for decision-focused training. Our method complements rather than replaces CRs, offering an abstracted, time-efficient alternative that is better suited to management-level learning (see Section 4 and Section 6.1.1).

Gamified tools such as CyberCIEGE and Red vs. Blue use simplified or stylized environments to convey cybersecurity principles. They typically emphasize investment strategies, resource prioritization, or system configuration (such as setting access controls or selecting defense upgrades) rather than coordinating responses in real time during evolving incidents. These formats are often used to build awareness or reinforce abstract concepts, but they do not simulate organizational dynamics or operational decision-making under pressure. In contrast, simulation platforms like Cyber Arena offer customized working environments and emphasize long-term planning and resource allocation to support an organization’s strategic objectives. This aligns with the Ends–Ways–Means model [94], where cyber capabilities are not strategic objectives in themselves but part of the “ways” and “means” that enable the achievement of broader organizational goals, such as maintaining production or service continuity. While Cyber Arena provides valuable strategic-level insights, it does not replicate the time-sensitive procedural coordination required during active cyber incidents. Our method addresses this gap by modeling organization-specific threat scenarios and focusing on the operational coordination required for effective incident response at the management level.

At the conceptual level, our method builds on the MITRE framework proposed by Fox et al. [5], which highlights the need for decision-support tools in cyber operations. While the MITRE report remains theoretical, our approach applies its core principles in a structured and actionable format.

A key innovation is the application of the DCMP framework (see Section 5.1.1), which is widely used in military wargaming but has not, to our knowledge, previously been adapted for cyber exercises. In our implementation, the DCMP enables structured alignment between the scenario events and learning objectives. Another original feature is dynamic time control (see Section 7.1), which allows exercise pacing to be adjusted in real time, a capability not addressed in the reviewed literature.
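Dynamic time control can be modeled as a clock whose rate is changed piecewise: when the facilitator changes the rate, the simulated time reached so far is fixed and the clock continues at the new rate, with a rate of 0 pausing the scenario. The `SimulationClock` class is a hypothetical sketch; wall-clock time is passed in explicitly so that its behavior is deterministic (a live system would read a monotonic clock instead).

```python
class SimulationClock:
    """Hypothetical clock mapping wall-clock seconds to simulated seconds under a changeable rate."""

    def __init__(self, rate: float = 1.0) -> None:
        if rate < 0:
            raise ValueError("rate must be non-negative")
        self._rate = rate          # simulated seconds per wall-clock second
        self._wall_anchor = 0.0    # wall time of the last rate change
        self._sim_anchor = 0.0     # simulated time reached at the last rate change

    def sim_time(self, wall: float) -> float:
        """Simulated time at wall-clock time `wall` (must not precede the last change)."""
        return self._sim_anchor + (wall - self._wall_anchor) * self._rate

    def set_rate(self, wall: float, rate: float) -> None:
        """Change the rate at wall-clock time `wall`; a rate of 0 pauses the scenario."""
        if rate < 0:
            raise ValueError("rate must be non-negative")
        self._sim_anchor = self.sim_time(wall)  # freeze the progress made so far
        self._wall_anchor = wall
        self._rate = rate


clock = SimulationClock(rate=60)  # 1 real minute = 1 simulated hour
print(clock.sim_time(600))        # 36000.0 (10 simulated hours after 10 real minutes)
clock.set_rate(600, 0)            # pause for a facilitator briefing
clock.set_rate(900, 10)           # resume more slowly for a critical decision
print(clock.sim_time(960))        # 36600.0
```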

Despite these advantages, several limitations remain. First, the abstraction of technical execution, although beneficial for clarity and accessibility, may reduce perceived realism for technically experienced participants (see Section 6.1.1). Whether high technical fidelity is essential for management-level learning remains an open question; our findings suggest it often is not and may even distract from core training goals.

Second, the method depends heavily on accurate scenario design. Errors made during the planning phase are difficult to correct during execution, unlike in facilitator-driven formats. While the DCMP helps to mitigate this risk through structured preparation, it does not eliminate it entirely.

Finally, while our method draws on wargaming principles, we found no hobbyist or professional wargames that explicitly address organizational-level cyber incident response (see Section 2). The existing games typically focus on strategic planning or kinetic operations but not on procedural coordination under active threat conditions. This gap highlights the need for a new category of simulation that applies wargaming mechanics to real-world incident response training.

7.7. Limitations and Future Work

A key limitation of this approach is its dependence on the simulator. If an action is inaccurately modeled, unsupported, or miscalculated, there is limited opportunity for real-time correction during the exercise. Additionally, the simulator has known constraints. For instance, modeling employee fatigue remains a challenge; in our simulations, personnel are assumed to work extended hours without rest, which may not reflect realistic conditions. Many real-world factors that influence incident response—such as organizational culture, stress, or informal communication—are not captured in the current system.

Despite these limitations, simulator-based adjudication offers several advantages. It helps to reduce human error by relying on consistent rule-based decision-making instead of improvised facilitator judgment. This contributes to more objective outcomes, greater repeatability, and reduced facilitator bias. Moreover, simulated exposure to rare but high-impact incidents adds realism and enhances preparedness for real-world crises.

Another limitation is the lack of longitudinal tracking of individual or organizational progress. While the initial feedback (Section 6.1) indicates gains in confidence and coordination awareness, the long-term training effects remain unverified. Future work should assess whether these gains persist over time and influence actual incident response behavior.

A further limitation concerns the proposed scalability model. While the concept of conducting multiple parallel sessions using a shared simulation setup has been outlined, it was not systematically tested within the scope of this study. In principle, such a model should be feasible given the modularity of the methodology and the simulator architecture, but empirical validation of large-scale parallel execution remains a subject for future research.

Finally, future research could explore integrating artificial intelligence into the simulation environment. AI agents might assume certain roles or support facilitation, which could alleviate some human-in-the-loop challenges. However, this functionality is not currently supported in our system and was thus beyond the scope of this study.

As already mentioned in earlier sections of this paper, in an ideal setting, this approach would be combined with CR environments serving as a real-world backend for emulating actions. Such integration would allow for precise calibration of action durations and expected outcomes. The results from the cyber range could then be fed into the simulation system to enable more accurate emulation, clearer identification of necessary actions, and more realistic timing and effects. Due to limited access to such infrastructure and resource constraints, this remains a direction for future exploration rather than a feature of the current implementation.
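Under the assumption that repeated cyber range runs yield measured durations per action, calibration could be as simple as replacing each default duration with the median of sufficiently many measurements. The action names, default values, sample data, and the `min_runs` threshold are hypothetical; the median is chosen here only because it is robust to occasional outlier runs.

```python
from statistics import median

# Hypothetical default durations (simulated minutes) currently used by the simulator.
defaults = {"isolate_host": 10.0, "restore_backup": 60.0, "scan_network": 30.0, "block_ip": 5.0}

# Hypothetical durations measured in repeated cyber range runs.
cr_runs = {
    "isolate_host": [4.0, 6.0, 5.0],
    "restore_backup": [95.0, 120.0, 110.0, 100.0],
    "block_ip": [2.0],  # too few runs to be trusted, so the default is kept
}


def calibrate(defaults, measurements, min_runs=3):
    """Replace a default duration by the median measured value when enough runs exist."""
    calibrated = dict(defaults)
    for action, samples in measurements.items():
        if action in calibrated and len(samples) >= min_runs:
            calibrated[action] = median(samples)
    return calibrated


print(calibrate(defaults, cr_runs))
# {'isolate_host': 5.0, 'restore_backup': 105.0, 'scan_network': 30.0, 'block_ip': 5.0}
```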

Author Contributions

Conceptualization, S.G. and D.G.; methodology, D.G.; validation, D.G., S.G. and G.G.; formal analysis, S.G. and G.G.; resources, S.G.; data curation, D.G. and S.G.; writing—original draft preparation, D.G.; writing—review and editing, S.G. and G.G.; visualization, D.G.; supervision, S.G. and G.G.; project administration, S.G.; funding acquisition, S.G. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Ethical review and approval were waived for this study because it involved only anonymous voluntary questionnaires focused on participant perceptions of a training method. No personal data were collected, and participants were informed in advance that their responses would be used for research purposes only. The study did not involve medical, psychological, or behavioral experimentation.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

All relevant data are contained within the article, including figures, tables, and appendices. No additional datasets were generated or analyzed during the current study.

Acknowledgments

The authors acknowledge the use of AI-assisted technologies, including ChatGPT-4o, to improve the language, clarity, and presentation of this manuscript.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| C&C | Command and Control |
| CCS | Cyber Conflict Simulator |
| CR | Cyber Range |
| CSIRT | Computer Security Incident Response Team |
| DCMP | Data Collection and Management Plan |
| EQ | Essential Question |
| ICS | Industrial Control System |
| MMTs | Methods, Models, and Tools |
| SCADA | Supervisory Control and Data Acquisition |
| SIEM | Security Information and Event Management |
| SME | Subject Matter Expert |
| SOAR | Security Orchestration, Automation, and Response |
| SOC | Security Operations Center |
| TSO | Transmission System Operator |
| TTPs | Tactics, Techniques, and Procedures |
| TTX | Tabletop Exercise |

References

- National Institute of Standards and Technology. Framework for Improving Critical Infrastructure Cybersecurity, Version 1.1; Technical Report; National Institute of Standards and Technology: Gaithersburg, MD, USA, 2018. [CrossRef]

- Vykopal, J.; Vizvary, M.; Oslejsek, R.; Celeda, P.; Tovarnak, D. Lessons learned from complex hands-on defence exercises in a cyber range. In Proceedings of the 2017 IEEE Frontiers in Education Conference (FIE), Indianapolis, IN, USA, 18–21 October 2017; pp. 1–8. [Google Scholar] [CrossRef]

- Kick, J. Cyber Exercise Playbook; The MITRE Corporation: McLean, VA, USA, 2014. [Google Scholar]

- Appleget, J.; Burks, R.; Cameron, F. The Craft of Wargaming: A Detailed Planning Guide for Defense Planners and Analysts; Naval Institute Press: Annapolis, MD, USA, 2020. [Google Scholar]

- Fox, D.B.; Mccollum, C.D.; Arnoth, E.I.; Mak, D.J. HSSEDI: Cyber Wargaming: Framework for Enhancing Cyber Wargaming with Realistic Business Context; Technical Report 18, The Homeland Security Systems Engineering and Development Institute (HSSEDI); The MITRE Corporation: McLean, VA, USA, 2018. [Google Scholar]

- Wilhelmson, N.; Svensson, T. Handbook for Planning, Running and Evaluating Information Technology and Cyber Security Exercises; The Swedish National Defence College, Center for Asymmetric Threat Studies (CATS): Stockholm, Sweden, 2014. [Google Scholar]

- National Institute of Standards and Technology (NIST). Special Publication 800-84: Guide to Test, Training, and Exercise Programs for IT Plans and Capabilities; NIST Special Publication; NIST: Gaithersburg, MD, USA, 2006; p. 97.

- Club de la Continuité d’Activité (Business Continuity Club CCA). Organising a Cyber Crisis Management Exercise, 1st ed.; Agence Nationale de la Sécurité des Systèmes d’Information: Paris, France, 2021. [Google Scholar]

- Cybersecurity and Infrastructure Security Agency (CISA). Tabletop Exercise Package (CTEP) Hompage. Available online: https://www.cisa.gov/cisa-tabletop-exercises-packages (accessed on 10 February 2020).

- Ouzounis, E.; Trimintzios, P.; Saragiotis, P. Good Practice Guide on National Exercises; ENISA: Athens, Greece, 2009. [Google Scholar]

- Aoyama, T.; Nakano, T.; Koshijima, I.; Hashimoto, Y.; Watanabe, K. On the complexity of cybersecurity exercises proportional to preparedness. J. Disaster Res. 2017, 12, 1081–1090. [Google Scholar] [CrossRef]

- Costantini, L.P.; Raffety, A. Cybersecurity Tabletop Exercise Guide, 1.1 ed.; National Association of Regulatory Utilty Commissioners NARUC: Washington, DC, USA, 2021. [Google Scholar]

- Brajdić, I.; Kovačević, I.; Groš, S. Review of National and International Cybersecurity Exercises Conducted in 2019. In ICCWS 2021 16th International Conference on Cyber Warfare and Security; Academic Conferences International Ltd.: Reading, UK, 2021. [Google Scholar] [CrossRef]

- Kim, H.; Kwon, H.; Kim, K.K. Modified cyber kill chain model for multimedia service environments. Multimed. Tools Appl. 2019, 78, 3153–3170. [Google Scholar] [CrossRef]

- Petersen, R.; Santos, D.; Smith, M.C.; Wetzel, K.A.; Witte, G. SP 800-181 rev1: Workforce Framework for Cybersecurity (NICE Framework); Technical Report; National Institute of Standards and Technology: Gaithersburg, MD, USA, 2020. [CrossRef]

- The MITRE Corporation. ATT&CK—Adversarial Tactics, Techniques, and Common Knowledge Home Page. Available online: https://attack.mitre.org/ (accessed on 1 July 2019).

- Chowdhury, N.; Gkioulos, V. Cyber security training for critical infrastructure protection: A literature review. Comput. Sci. Rev. 2021, 40, 100361. [Google Scholar] [CrossRef]

- PwC Czech Republic. Video: CSAS Workshop EN. 2019. Available online: https://www.youtube.com/watch?v=ueU-tOALeW0 (accessed on 14 December 2023).

- CybergmIEC. edp CyberGym Movie. 2017. Available online: https://www.youtube.com/watch?v=C2s2IBdEloQ (accessed on 15 December 2023).

- CybergmIEC. CyberGymNYC Video. 2019. Available online: https://www.youtube.com/watch?v=gqD-pug_Ib4 (accessed on 16 December 2023).

- Sipola, T.; Kokkonen, T.; Puura, M.; Riuttanen, K.E.; Pitkäniemi, K.; Juutilainen, E.; Kontio, T. Digital Twin of Food Supply Chain for Cyber Exercises. Appl. Sci. 2023, 13, 7138. [Google Scholar] [CrossRef]

- Holik, F.; Yayilgan, S.Y.; Olsborg, G.B. Emulation of Digital Substations Communication for Cyber Security Awareness. Electronics 2024, 13, 2318. [Google Scholar] [CrossRef]

- Ask, T.F.; Knox, B.J.; Lugo, R.G.; Hoffmann, L.; Sütterlin, S. Gamification as a neuroergonomic approach to improving interpersonal situational awareness in cyber defense. Front. Educ. 2023, 8, 988043. [Google Scholar] [CrossRef]

- Cone, B.D.; Irvine, C.E.; Thompson, M.F.; Nguyen, T.D. A video game for cyber security training and awareness. Comput. Secur. 2007, 26, 63–72. [Google Scholar] [CrossRef]

- Ota, Y.; Mizuno, E.; Watarai, K.; Aoyama, T.; Hamaguchi, T.; Hashimoto, Y.; Koshijima, I. Development of a Hybrid Exercise for Organizational Cyber Resilience. Saf. Secur. Eng. IX 2021, 1, 55–65. [Google Scholar] [CrossRef]

- Angafor, G.N.; Yevseyeva, I.; He, Y. Game-based learning: A review of tabletop exercises for cybersecurity incident response training. Secur. Priv. 2020, 3, e126. [Google Scholar] [CrossRef]

- HITRUST Health Information Trust Alliance. CyberRX 2.0 Level I Playbook Participant and Facilitator Guide; HITRUST Health Information Trust Alliance: Frisco, TX, USA, 2015. [Google Scholar]

- UK National Cyber Security Centre. Exercise in a Box. 2022. Available online: https://www.ncsc.gov.uk/information/exercise-in-a-box (accessed on 21 April 2023).

- NATO Allied Command Transformation. NATO Wargaming Handbook; NATO Allied Command Transformation: Norfolk, VA, USA, 2023. [Google Scholar] [CrossRef]

- Perla, P. The Art of Wargaming: A Guide for Professionals and Hobbyists; Naval Institute Press: Annapolis, MD, USA, 2012.

- UK Defence Science and Technology Laboratory. The Dstl Cyber Red Team Game; UK Defence Science and Technology Laboratory: Salisbury, UK, 2021. [Google Scholar]

- Bae, S.J. Littoral Commander: Indo-Pacific Rulebook; The Dietz Foundation: Washington, DC, USA, 2022. [Google Scholar]

- Flack, N.; Lin, A.; Peterson, G.; Reith, M. Battlespace Next(TM). Int. J. Serious Games 2020, 7, 49–70. [Google Scholar] [CrossRef]

- Reed, M. The Operational Wargame Series: The Best Game Not in Stores Now. 2021. Available online: https://nodicenoglory.com/2021/06/23/the-operational-wargame-series-the-best-game-not-in-stores-now (accessed on 7 June 2023).

- Engelstein, G.; Shalev, I. Building Blocks of Tabletop Game Design: An Encyclopedia of Mechanisms, 2nd ed.; CRC Press: Boca Raton, FL, USA, 2022. [Google Scholar]

- Haggman, A. Cyber Wargaming: Finding, Designing, and Playing Wargames for Cyber Security Education. Ph.D. Thesis, University of London, London, UK, 2019. [Google Scholar]

- Smith, F.L.I.; Kollars, N.; Schechter, B. Cyber Wargaming: Research and Education for Security in a Dangerous Digital World; Georgetown University Press: Washington, DC, USA, 2024. [Google Scholar]

- Kodalle, T. Cyber Wargaming on the Strategic/Political Level: Exploring Cyber Warfare in a Matrix Wargame. In ECCWS 2021 20th European Conference on Cyber Warfare and Security; Academic Conferences International Ltd.: Reading, UK, 2021. [Google Scholar] [CrossRef]

- Gernhardt, D.; Ćutić, D.; Štengl, T. Wargaming and decision-making process in defence. In Interdisciplinary Management Research XIX: Conference Proceedings, Proceedings of the 19th Interdisciplinary Management Research (IMR 2023), Osijek, Croatia, 28–30 September 2023; Glavaš, J., Požega, Ž., Eds.; Ekonomski fakultet Sveučilišta Josipa Jurja Strossmayera u Osijeku: Osijek, Croatia, 2023. [Google Scholar]

- Lorusso, L.; Gernhardt, D.; Novak, D. Wargaming adjudication in the air-force and other military areas of education. Transp. Res. Procedia 2023, 73, 203–211. [Google Scholar] [CrossRef]

- Katsantonis, M.N.; Manikas, A.; Mavridis, I.; Gritzalis, D. Cyber range design framework for cyber security education and training. Int. J. Inf. Secur. 2023, 22, 1005–1027. [Google Scholar] [CrossRef]

- Švábenský, V.; Cermak, M.; Vykopal, J.; Laštovička, M. Enhancing cybersecurity skills by creating serious games. In Proceedings of the 23rd Annual ACM Conference on Innovation and Technology in Computer Science Education, ITiCSE, Larnaca, Cyprus, 2–4 July 2018; pp. 194–199. [Google Scholar] [CrossRef]

- Ukwandu, E.; Farah, M.A.B.; Hindy, H.; Brosset, D.; Kavallieros, D.; Atkinson, R.; Tachtatzis, C.; Bures, M.; Andonovic, I.; Bellekens, X. A review of cyber-ranges and test-beds: Current and future trends. Sensors 2020, 20, 7148. [Google Scholar] [CrossRef]

- Ostby, G.; Lovell, K.N.; Katt, B. EXCON Teams in Cyber Security Training. In Proceedings of the 2019 International Conference on Computational Science and Computational Intelligence (CSCI), Las Vegas, NV, USA, 5–7 December 2019; IEEE: New York, NY, USA, 2019; pp. 14–19. [Google Scholar] [CrossRef]

- Granåsen, M.; Andersson, D. Measuring team effectiveness in cyber-defense exercises: A cross-disciplinary case study. Cogn. Technol. Work 2016, 18, 121–143. [Google Scholar] [CrossRef]

- Mihm, J.; Loch, C.H.; Wilkinson, D.; Huberman, B.A. Hierarchical Structure and Search in Complex Organizations. Manag. Sci. 2010, 56, 831–848. [Google Scholar] [CrossRef]

- U.S. Joint Chiefs of Staff. Doctrine for the Armed Forces of the United States; U.S. Joint Chiefs of Staff: Washington, DC, USA, 2013. [Google Scholar]

- Johnson, J. The “Four Levels” of Wargaming: A New Scope on the Hobby. 2014. Available online: https://www.beastsofwar.com/featured/levels-wargames-exploring-scopes-hobby/ (accessed on 30 August 2024).

- Eikmeier, D.C. Waffles or Pancakes? Operational- versus Tactical-Level Wargaming. Jt. Force Q. 2015, 3, 50–53. [Google Scholar]

- Chouliaras, N.; Kittes, G.; Kantzavelou, I.; Maglaras, L.; Pantziou, G.; Ferrag, M.A. Cyber Ranges and TestBeds for Education, Training, and Research. Appl. Sci. 2021, 11, 1809. [Google Scholar] [CrossRef]

- Mouat, T. Practical Advice on Matrix Games Version 15. 2020. Available online: http://www.mapsymbs.com/PracticalAdviceOnMatrixGames.pdf (accessed on 28 August 2024).

- National Institute of Standards and Technology (NIST). Security and Privacy Controls for Information Systems and Organizations; NIST: Gaithersburg, MD, USA, 2020.

- European Commission. Consolidated Text: Directive (EU) 2022/2555 of the European Parliament and of the Council of 14 December 2022 on Measures for a High Common Level of Cybersecurity Across the Union, Amending Regulation (EU) No 910/2014 and Directive (EU) 2018/1972, and rep; European Commission: Brussels, Belgium, 2022. [Google Scholar]

- European Commission. Regulation (EU) 2022/2554 of the European Parliament and of the Council on Digital Operational Resilience for the Financial Sector and Amending Regulations (EC) No 1060/2009, (EU) No 648/2012, (EU) No 600/2014, (EU) No 909/2014 and (EU) 2016/1011; European Commission: Brussels, Belgium, 2022. [Google Scholar]

- Mases, S.; Maennel, K.; Toussaint, M.; Rosa, V. Success Factors for Designing a Cybersecurity Exercise on the Example of Incident Response. In Proceedings of the 2021 IEEE European Symposium on Security and Privacy Workshops (EuroS&PW), Vienna, Austria, 6–10 September 2021; IEEE: New York, NY, USA, 2021; pp. 259–268. [Google Scholar] [CrossRef]

- Lee, D.; Kim, D.; Lee, C.; Ahn, M.K.; Lee, W. ICSTASY: An Integrated Cybersecurity Training System for Military Personnel. IEEE Access 2022, 10, 62232–62246. [Google Scholar] [CrossRef]

- Utilis, d.o.o. Cyber Conflict Simulator Homepage, 2022. Available online: https://ccs.utilis.biz/ (accessed on 20 April 2024).

- Sokol, N.; Kežman, V.; Groš, S. Using Simulations to Determine Economic Cost of a Cyber Attack. In Proceedings of the Workshop on the Economics of Information Security (WEIS), Dallas, TX, USA, 8–10 April 2024. [Google Scholar]

- Whitehead, D.E.; Owens, K.; Gammel, D.; Smith, J. Ukraine cyber-induced power outage: Analysis and practical mitigation strategies. In Proceedings of the 2017 70th Annual Conference for Protective Relay Engineers (CPRE), College Station, TX, USA, 3–6 April 2017. [Google Scholar] [CrossRef]

- Lee, R.M.; Assante, M.; Conway, T. Analysis of the Cyber Attack on the Ukrainian Power Grid; Technical Report; E-ISAC (Electricity Information Sharing and Analysis Center), SANS Industrial Control Systems: Washington, DC, USA, 2016. [Google Scholar]

- Cichonski, P.; Millar, T.; Grance, T.; Scarfone, K. Computer Security Incident Handling Guide: Recommendations of the National Institute of Standards and Technology; Technical Report; National Institute of Standards and Technology: Gaithersburg, MD, USA, 2012. [CrossRef]

- Petek, D. Simpozij Kibernetička Obrana [Cyber Defence Symposium]. 2022. Available online: https://osrh.hr/Data/HTML/HR/GLAVNA/DOGA%C4%90ANJA/20220822_Simpozij_Kiberneti%C4%8Dka_obrana/Simpozij_Kiberneti%C4%8Dka_obrana_HR.htm (accessed on 17 May 2023).

- Deep Conference Committee. Deep 2022 Workshop|Lead. 2022. Available online: https://deep-conference.com/deep-2022/ (accessed on 15 January 2020).

- Military Agency for Standardization. STANAG 2116 MIS (Edition 5): NATO Codes for Grades of Military Personnel; Military Agency for Standardization: Brussels, Belgium, 1996. [Google Scholar]

- North Atlantic Treaty Organisation. BI-SC Collective Training and Exercise Directive (CT&ED) 075-003; North Atlantic Treaty Organisation: Brussels, Belgium, 2013. [Google Scholar]

- Shilling, A. Operations Assessment in Complex Environments: Theory and Practice; Technical Report Final Report of RTG SAS-110; NATO Science and Technology Organization: Brussels, Belgium, 2019. [Google Scholar]

- Department of Defense. The Department of Defense Cyber Table Top Guidebook; Technical Report; U.S. Department of Defense: Washington, DC, USA, 2018.

- Department of Defense. The Department of Defense Cyber Table Top Guidebook Version 2.0; Technical Report; U.S. Department of Defense: Washington, DC, USA, 2021.

- Colbert, E.J.; Sullivan, D.T.; Patrick, J.C.; Schaum, J.W.; Ritchey, R.P.; Reinsfelder, M.J.; Leslie, N.O.; Lewis, R.C. Terra Defender Cyber-Physical Wargame; Technical Report; US Army Research Laboratory: Adelphi, MD, USA, 2017. [Google Scholar]

- Andreolini, M.; Colacino, V.G.; Colajanni, M.; Marchetti, M. A Framework for the Evaluation of Trainee Performance in Cyber Range Exercises. Mob. Netw. Appl. 2020, 25, 236–247. [Google Scholar] [CrossRef]

- National Security Agency; Department of Homeland Security; Applied Physics Laboratory Johns Hopkins. IACD Playbooks and Workflows. Available online: https://www.iacdautomate.org/playbook-and-workflow-examples (accessed on 13 February 2022).

- Scottish Government. Publication—Advice and Guidance, Cyber Resilience: Incident Management Homepage. 2021. Available online: https://www.gov.scot/publications/cyber-resilience-incident-management (accessed on 20 April 2023).

- Perla, P.P.; McGrady, E. Why wargaming works. Nav. War Coll. Rev. 2011, 64, 111–130. [Google Scholar]

- Henshel, D.S.; Deckard, G.M.; Lufkin, B.; Buchler, N.; Hoffman, B.; Rajivan, P.; Collman, S. Predicting proficiency in cyber defense team exercises. In Proceedings of the MILCOM 2016—2016 IEEE Military Communications Conference, Baltimore, MD, USA, 1–3 November 2016; IEEE: New York, NY, USA, 2016; pp. 776–781. [Google Scholar] [CrossRef]

- National Institute of Standards and Technology. NIST Special Publication 800 Series; NIST: Gaithersburg, MD, USA, various years.

- Mandiant Inc. Mandiant Special Report: M-Trends 2023; Technical Report; Mandiant Inc.: Reston, VA, USA, 2023. [Google Scholar]

- Gernhardt, D.; Groš, S. Use of a non-peer reviewed sources in cyber-security scientific research. In Proceedings of the 2022 45th Jubilee International Convention on Information, Communication and Electronic Technology (MIPRO), Opatija, Croatia, 23–27 May 2022; pp. 1057–1062. [Google Scholar] [CrossRef]

- The MITRE Corporation. MITRE ATT&CK: Groups. 2024. Available online: https://attack.mitre.org/groups (accessed on 17 April 2020).

- Bouwman, X.; Griffioen, H.; Egbers, J.; Doerr, C.; Klievink, B.; van Eeten, M. A different cup of TI? The added value of commercial threat intelligence. In Proceedings of the 29th USENIX Security Symposium, Boston, MA, USA, 12–14 August 2020; pp. 433–450. [Google Scholar]

- Lockheed Martin. Applying Cyber Kill Chain® Methodology. 2018. Available online: https://www.lockheedmartin.com/en-us/capabilities/cyber/cyber-kill-chain.html (accessed on 19 January 2019).

- Assante, M.; Lee, R. SANS Whitepaper: The Industrial Control System Cyber Kill Chain; Technical Report; SANS Institute: Bethesda, MD, USA, 2015. [Google Scholar]

- Development, Concepts and Doctrine Centre. Red Teaming Handbook, 3rd ed.; UK Ministry of Defence: London, UK, 2021.

- Department of the Army. Army Techniques Publication (ATP) 2-01.3 Intelligence Preparation of the Battlefield; Department of the Army: Washington, DC, USA, 2019. [Google Scholar]

- Settanni, G.; Skopik, F.; Shovgenya, Y.; Fiedler, R.; Carolan, M.; Conroy, D.; Boettinger, K.; Gall, M.; Brost, G.; Ponchel, C.; et al. A collaborative cyber incident management system for European interconnected critical infrastructures. J. Inf. Secur. Appl. 2017, 34, 166–182. [Google Scholar] [CrossRef]

- Schlette, D.; Caselli, M.; Pernul, G. A Comparative Study on Cyber Threat Intelligence: The Security Incident Response Perspective. IEEE Commun. Surv. Tutor. 2021, 23, 2525–2556. [Google Scholar] [CrossRef]

- Brilingaitė, A.; Bukauskas, L.; Juozapavičius, A.; Kutka, E. Information Sharing in Cyber Defence Exercises. In Proceedings of the 19th European Conference on Cyber Warfare, Online, 25–26 June 2020; ACPI, Academic Conferences and Publishing International Limited: Reading, UK, 2020; pp. 42–49. [Google Scholar] [CrossRef]

- North American Electric Reliability Corporation. GridEx—Grid Security Exercises Overview. Available online: https://www.nerc.com/pa/CI/ESISAC/Pages/GridEx.aspx (accessed on 24 February 2022).

- Downes-Martin, S. Your Boss, Players and Sponsor: The Three Witches of Wargaming. Nav. War Coll. Rev. 2014, 67, 5. [Google Scholar]

- Weuve, C.A.; Perla, P.P.; Markowitz, M.C.; Rubel, R.; Downes-Martin, S.; Martin, M.; Vebber, P.V. Wargame Pathologies; Technical Report; CNA and Naval War College: Alexandria, VA, USA, 2004. [Google Scholar]

- Schlette, D.; Empl, P.; Caselli, M.; Schreck, T.; Pernul, G. Do You Play It by the Books? A Study on Incident Response Playbooks and Influencing Factors. In Proceedings of the 2024 IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 19–23 May 2024; pp. 3625–3643. [Google Scholar] [CrossRef]

- Empl, P.; Schlette, D.; Stöger, L.; Pernul, G. Generating ICS vulnerability playbooks with open standards. Int. J. Inf. Secur. 2024, 23, 1215–1230. [Google Scholar] [CrossRef]

- McHugh, F. Fundamentals of War Gaming, 3rd ed.; US Naval War College: Newport, RI, USA, 1966. [Google Scholar]

- United Kingdom Ministry of Defence. Wargaming Handbook; Development, Concepts and Doctrine Centre: Swindon, UK, 2017.

- Lykke, A.F.J. Defining Military Strategy. Mil. Rev. 1989, 69, 2–8. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).