Toward Robust Security Orchestration and Automated Response in Security Operations Centers with a Hyper-Automation Approach Using Agentic Artificial Intelligence

Abstract

1. Introduction

Key Contributions

- The IVAM Framework: The Investigation–Validation–Active Monitoring (IVAM) framework is introduced, combining MITRE ATT&CK for adversarial mapping, the NIST Cybersecurity Framework for standardization, and Quantitative Risk Assessment (QRA) for structured validation. Together, these components provide a structured, risk-driven approach to incident response.

- Agentic LLM-Based SOAR Architecture: A novel SOAR framework is proposed that leverages autonomous LLM agents capable of dynamically generating, adapting, and executing contextual playbooks. Unlike traditional static or no-code/low-code models, this approach supports zero-shot task execution and tool orchestration.

- Adaptive Multi-Agent System: The capabilities of a multi-step reasoning AI agent are demonstrated, including shared memory, external tool integration, and human-in-the-loop functionality. These capabilities support a shift from reactive task automation to proactive, self-adjusting threat mitigation.

2. Background & Related Works

2.1. Evolution of Cybersecurity Automation

2.2. Comparative Analysis of Traditional and Contemporary SOAR Architectures

2.2.1. No-Code Automation

2.2.2. Low-Code Automation

2.2.3. Hyper-Automation

2.2.4. The Integration of ML and AI Capabilities into Modern SOAR Architectures

2.3. Hyper-Automation and Agent-Based AI Systems

2.4. Frameworks for Constructing an Effective Incident Response

- Identify: Recognizing and assessing security risks.

- Protect: Implementing safeguards to mitigate potential threats.

- Detect: Continuously monitoring for security incidents.

- Respond: Taking immediate action upon detecting threats.

- Recover: Restoring affected systems and minimizing impact.

- Tactics: Represent the adversary’s overall objectives.

- Techniques: Describe the methods used to achieve those objectives.

- Procedures: Outline specific implementations of these techniques.
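The Tactics, Techniques, and Procedures hierarchy above can be held in a small nested data structure when mapping incident findings. The sketch below is illustrative only: the class and method names are hypothetical, and the single example entry uses the ATT&CK Credential Access tactic (TA0006) with the Brute Force: Password Guessing sub-technique (T1110.001).

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Procedure:
    description: str  # a concrete, observed implementation of a technique


@dataclass
class Technique:
    technique_id: str
    name: str  # how the adversary pursues the tactic's objective
    procedures: list[Procedure] = field(default_factory=list)


@dataclass
class Tactic:
    tactic_id: str
    name: str  # the adversary's overall objective
    techniques: list[Technique] = field(default_factory=list)

    def find_technique(self, technique_id: str) -> Technique | None:
        """Return the technique with the given ID, or None if it is not mapped."""
        return next((t for t in self.techniques if t.technique_id == technique_id), None)


credential_access = Tactic(
    tactic_id="TA0006",
    name="Credential Access",
    techniques=[
        Technique(
            technique_id="T1110.001",
            name="Brute Force: Password Guessing",
            procedures=[Procedure("Repeated SSH login attempts with common passwords from one source IP")],
        )
    ],
)
```

Keeping procedures as children of techniques mirrors the three levels listed above, so an observed behavior can be traced upward to the objective it serves.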

2.5. Risk Assessment in the Cybersecurity Domain

2.5.1. Quantitative Risk Assessment

2.5.2. Large Language Models (LLMs) and Agentic AI in the Cybersecurity Domain

3. Methodology

3.1. The IVAM Framework

- MITRE ATT&CK Knowledge Base for mapping Tactics, Techniques, and Procedures (TTPs),

- NIST Cybersecurity Framework (CSF) for prioritization and procedural standardization,

- Quantitative Risk Assessment (QRA) for structured risk evaluation.

3.1.1. Investigation Phase

Identify the Attack Type

Analyze Affected Files and Systems

Determine the Scope of Infection

Assess Data and Business Impact

- Classify Data: Begin by identifying whether sensitive or regulated data, such as Personally Identifiable Information (PII), financial records, or intellectual property, was accessed or exfiltrated during the breach. Understanding the type of data compromised is essential for assessing potential risks and determining necessary remediation steps.

- Assess Operational Impact: Evaluate the extent of business disruption caused by the incident. This includes measuring downtime, loss of productivity, and the resources required for recovery efforts. Understanding the operational impact helps in prioritizing response actions and allocating resources effectively.

- Evaluate Compliance Risks: Identify any legal obligations arising from the breach, such as those under the General Data Protection Regulation (GDPR), Health Insurance Portability and Accountability Act (HIPAA), or Payment Card Industry Data Security Standard (PCI-DSS). Determine if notifications to affected individuals or regulatory bodies are necessary, and ensure compliance with relevant laws to mitigate potential legal consequences.
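As a rough illustration of the Classify Data step, the sketch below flags two data categories in a text sample (email addresses, and card-like numbers that pass the Luhn checksum) and lists frameworks an analyst might then review. The regular expressions, category names, and the category-to-framework mapping are simplified assumptions, not a compliance determination; whether GDPR, HIPAA, or PCI-DSS actually applies depends on jurisdiction, data subjects, and context.

```python
from __future__ import annotations

import re

EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# 13 to 19 digits, optionally separated by single spaces or hyphens.
CARD_CANDIDATE_RE = re.compile(r"\b(?:\d[ -]?){13,19}\b")

# Hypothetical mapping from detected category to frameworks worth reviewing.
REVIEW_FRAMEWORKS = {
    "email_address": ["GDPR"],
    "payment_card": ["PCI-DSS"],
}


def luhn_valid(number: str) -> bool:
    """Luhn checksum over the digits of `number` (non-digits are ignored)."""
    digits = [int(ch) for ch in number if ch.isdigit()]
    total = 0
    for position, digit in enumerate(reversed(digits)):
        if position % 2 == 1:  # double every second digit from the right
            digit *= 2
            if digit > 9:
                digit -= 9
        total += digit
    return bool(digits) and total % 10 == 0


def classify_exposed_data(text: str) -> dict[str, list[str]]:
    """Return {category: frameworks_to_review} for categories found in `text`."""
    found = set()
    if EMAIL_RE.search(text):
        found.add("email_address")
    if any(luhn_valid(m.group()) for m in CARD_CANDIDATE_RE.finditer(text)):
        found.add("payment_card")
    return {category: REVIEW_FRAMEWORKS[category] for category in sorted(found)}
```

The Luhn check filters out most random digit runs (order numbers, timestamps) that merely look like card numbers, which keeps false positives down before escalating to a compliance review.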

Identify the Initial Infection Vector

- Review Access Logs: Analyze logs from Remote Desktop Protocol (RDP), Virtual Private Network (VPN), and Secure Shell (SSH) services to detect unauthorized access or brute-force attempts. Unusual login times, failed login attempts, and access from unfamiliar IP addresses can indicate potential security breaches. Regularly reviewing these logs helps in the early detection of unauthorized activities.

- Investigate Web Applications: Examine server logs for any signs of exploitation, such as abnormal requests or injection attacks. Web applications are common targets for attackers seeking vulnerabilities to exploit, necessitating regular monitoring and prompt patching of identified issues. Utilizing Web Application Firewalls (WAFs) and conducting regular security assessments can enhance protection.

- Consider Physical Access: Assess the potential for security breaches through physical means, including the use of removable media or insider threats. Unauthorized physical access to systems can lead to data theft or the introduction of malicious software, highlighting the need for strict access controls and monitoring. Implementing measures such as surveillance systems, access badges, and security personnel can mitigate these risks.
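The access-log review described above can be partially automated. The following minimal sketch counts OpenSSH `Failed password` entries per source IP and flags sources at or above a threshold. The threshold of 5 and the function name are assumptions, and a production detector would also apply a time window (for example, failures per minute) rather than counting over the whole log.

```python
import re
from collections import Counter

# Matches OpenSSH failure lines such as:
# "Failed password for invalid user admin from 192.168.1.130 port 52814 ssh2"
FAILED_LOGIN_RE = re.compile(
    r"Failed password for (?:invalid user )?(?P<user>\S+) from (?P<ip>\S+) port \d+"
)


def suspected_brute_force(lines, threshold=5):
    """Return {source_ip: failure_count} for sources with at least `threshold` failures."""
    failures = Counter()
    for line in lines:
        match = FAILED_LOGIN_RE.search(line)
        if match:
            failures[match.group("ip")] += 1
    return {ip: count for ip, count in failures.items() if count >= threshold}
```

For example, passing the lines of `/var/log/auth.log` (the path varies by distribution) would return only the noisy sources; successful logins such as `Accepted publickey` lines are ignored.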

Conduct Advanced Forensic Analysis

- Perform Forensic Analysis: Begin by conducting memory forensics and disk analysis to uncover the root causes of the incident. Memory forensics involves capturing and analyzing the contents of a computer’s volatile memory (RAM) to identify malicious processes, open network connections, and other artifacts that may not be present on the disk. Disk analysis complements this by examining the file system and storage media for malicious files, logs, and other persistent indicators of compromise. Disk-forensics tools such as The Sleuth Kit can assist with this analysis.

- Map to MITRE ATT&CK: Utilize the MITRE ATT&CK framework to identify the Tactics, Techniques, and Procedures (TTPs) employed by the adversaries. This globally accessible knowledge base categorizes adversary behaviors observed in real-world attacks, aiding in understanding and anticipating potential threat actions.

- Correlate with Threat Intelligence: Compare the findings from your forensic analysis against known attack groups or malware families. By correlating observed TTPs with threat intelligence reports, you can attribute the attack to specific adversaries and understand their motivations and capabilities. This correlation enhances your organization’s ability to defend against future attacks by informing proactive security measures.
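One simple way to correlate observed TTPs with threat-intelligence profiles is set overlap. The sketch below ranks candidate groups by Jaccard similarity between the ATT&CK technique IDs seen in the incident and each profile. The group names and their technique sets are hypothetical placeholders; real profiles would come from CTI feeds or ATT&CK group pages, and overlap alone is weak evidence for attribution.

```python
def jaccard(a: set, b: set) -> float:
    """Jaccard similarity |A & B| / |A | B|; defined as 0.0 when both sets are empty."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def rank_candidates(observed: set, profiles: dict) -> list:
    """Return (profile_name, score) pairs sorted from most to least similar."""
    scores = [(name, jaccard(observed, ttps)) for name, ttps in profiles.items()]
    return sorted(scores, key=lambda item: item[1], reverse=True)


# Hypothetical profiles: T1110 Brute Force, T1078 Valid Accounts, T1021.004 Remote Services: SSH,
# T1059 Command and Scripting Interpreter, T1486 Data Encrypted for Impact.
PROFILES = {
    "hypothetical-group-a": {"T1110", "T1078", "T1021.004", "T1059"},
    "hypothetical-group-b": {"T1486", "T1059"},
}
observed = {"T1110", "T1078", "T1021.004"}
```

Here `rank_candidates(observed, PROFILES)` places group A first with a score of 0.75 (three shared techniques out of four in the union). A weighted variant that discounts very common techniques would usually discriminate better.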

Containment and Mitigation

- Isolate Affected Systems: Promptly remove compromised endpoints from the network to prevent the spread of malicious activity. This containment strategy is essential to limit further damage and is a critical component of incident response frameworks.

- Block Malicious Entities: Update security tools, such as firewalls and intrusion prevention systems, to block identified malicious IP addresses, domains, and file hashes. This proactive measure helps prevent further exploitation by known threats.

- Secure and Patch: Apply the latest security updates to all systems to address vulnerabilities exploited during the incident. Reset compromised credentials to prevent unauthorized access and enforce hardened configurations to enhance system defenses.

- Implement Best Practices: Enforce the principle of least privilege by ensuring users have only the access necessary for their roles. Implement multi-factor authentication to add an extra layer of security and establish network segmentation to contain potential threats and limit their movement within the network.
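The Block Malicious Entities step can be partly scripted. The minimal sketch below validates IP indicators with Python's `ipaddress` module, removes duplicates, and builds `iptables`/`ip6tables` DROP rules as argument lists for review rather than executing them. The function name is hypothetical; the rules require root privileges to apply, are not persistent across reboots by default, and domains or file hashes need other controls.

```python
import ipaddress


def build_block_commands(indicators):
    """Turn IP indicators into iptables/ip6tables DROP rules (argument lists, not executed)."""
    commands = []
    seen = set()
    for raw in indicators:
        try:
            ip = ipaddress.ip_address(raw.strip())
        except ValueError:
            continue  # domains and file hashes need other controls (DNS filtering, EDR)
        if ip in seen:
            continue
        seen.add(ip)
        tool = "iptables" if ip.version == 4 else "ip6tables"
        # -A appends to the INPUT chain; an earlier ACCEPT rule in the chain
        # would take precedence over an appended DROP.
        commands.append([tool, "-A", "INPUT", "-s", str(ip), "-j", "DROP"])
    return commands
```

Returning argument lists instead of shell strings keeps the commands safe to pass to a non-shell executor and easy to show to an analyst for approval first.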

3.1.2. Validation Phase

Challenges of Asset Valuation

Focus on Relative Quantification

Addressing Information Constraints

- Intangible Risks: Factors such as reputational damage and regulatory penalties often lack direct ties to specific assets, making their valuation complex.

- Supply Chain Vulnerabilities: Involving external stakeholders complicates comprehensive asset valuation due to varying data availability and reliability across the supply chain.

- Dynamic Operational Environments: Rapid changes in operations can lead to fluctuating asset values, rendering static estimates unreliable and necessitating continuous monitoring.
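One common way to express relative quantification is the classic annualized loss expectancy model, where single loss expectancy is SLE = AV × EF (asset value times exposure factor) and ALE = SLE × ARO (annualized rate of occurrence). The sketch below applies it to rank scenarios. It is a standard textbook formulation offered for illustration, not necessarily the exact QRA model used in the IVAM validation phase, and the example figures in the usage note are hypothetical relative units rather than measured values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RiskScenario:
    name: str
    asset_value: float      # AV, in relative units when absolute valuation is unavailable
    exposure_factor: float  # EF, fraction of asset value lost per occurrence (0..1)
    annual_rate: float      # ARO, expected occurrences per year

    def __post_init__(self):
        if not 0.0 <= self.exposure_factor <= 1.0:
            raise ValueError("exposure_factor must be between 0 and 1")

    @property
    def sle(self) -> float:
        """Single loss expectancy: AV * EF."""
        return self.asset_value * self.exposure_factor

    @property
    def ale(self) -> float:
        """Annualized loss expectancy: SLE * ARO."""
        return self.sle * self.annual_rate


def rank_by_ale(scenarios):
    """Order scenarios from highest to lowest annualized loss expectancy."""
    return sorted(scenarios, key=lambda s: s.ale, reverse=True)
```

For instance, an SSH brute-force scenario with AV = 100, EF = 0.5, and ARO = 4 gives SLE = 50 and ALE = 200, which outranks a web-injection scenario with AV = 200, EF = 0.25, and ARO = 2 (ALE = 100). Because only the ordering matters for prioritization, consistent relative asset values are sufficient even when absolute valuation is impractical.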

3.1.3. Active Monitoring

3.2. Agentic AI Security Response Construction

- Incident Response Analysis & Generation: Analyzes log data and problem reports to detect security threats using industry-standard frameworks.

- Incident Mitigation & Resolution: Provides mitigation strategies aligned with NIST CSF 2.0 and MITRE ATT&CK and generates remediation steps, including playbook automation.

- Automation & Technical Guidance: Ensures security responses follow SOAR best practices and offers step-by-step technical procedures.

- Security Research & Advisory: Utilizes vector databases and security repositories to provide evidence-based security recommendations.

- Conversational Efficiency & Memory: Engages in professional, context-aware interactions while maintaining conversation history for enhanced accuracy.
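The Conversational Efficiency & Memory capability can be approximated with a bounded message buffer. In this minimal sketch, the class name and the `max_turns` limit are assumptions; it keeps the system prompt fixed and retains only the most recent user and assistant messages, in the role/content message format commonly used by chat-completion APIs.

```python
from collections import deque


class ConversationMemory:
    """Hypothetical bounded chat memory: fixed system prompt plus the last N turns."""

    def __init__(self, system_prompt: str, max_turns: int = 10):
        self.system_prompt = system_prompt
        # One turn = one user message + one assistant message; older entries are evicted.
        self._history = deque(maxlen=2 * max_turns)

    def add(self, role: str, content: str) -> None:
        if role not in ("user", "assistant"):
            raise ValueError(f"unsupported role: {role}")
        self._history.append({"role": role, "content": content})

    def messages(self) -> list:
        """Messages to send with the next model call, oldest first."""
        return [{"role": "system", "content": self.system_prompt}, *self._history]
```

Bounding the history keeps each request within the model's context window; a fuller design might summarize evicted turns instead of dropping them.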

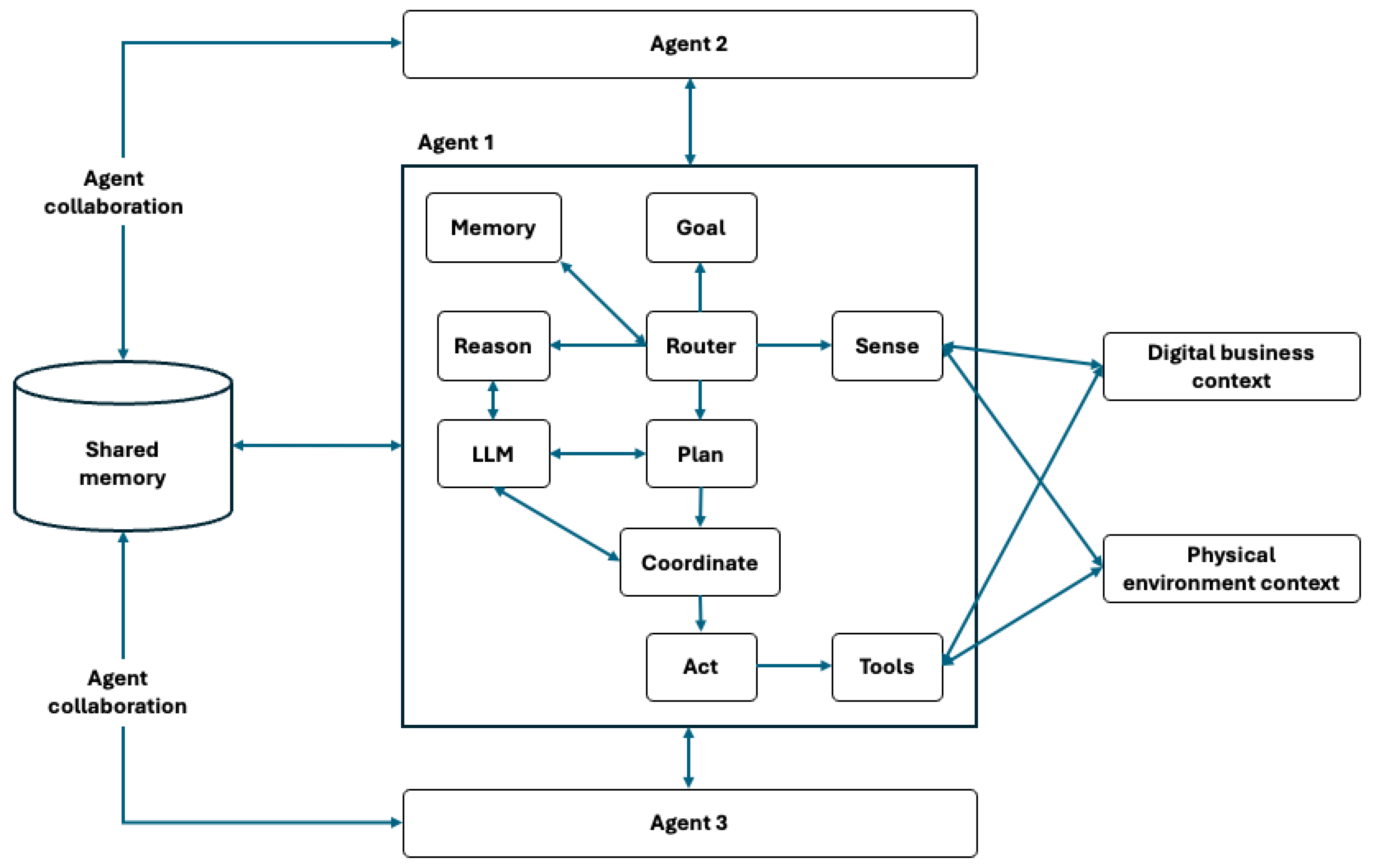

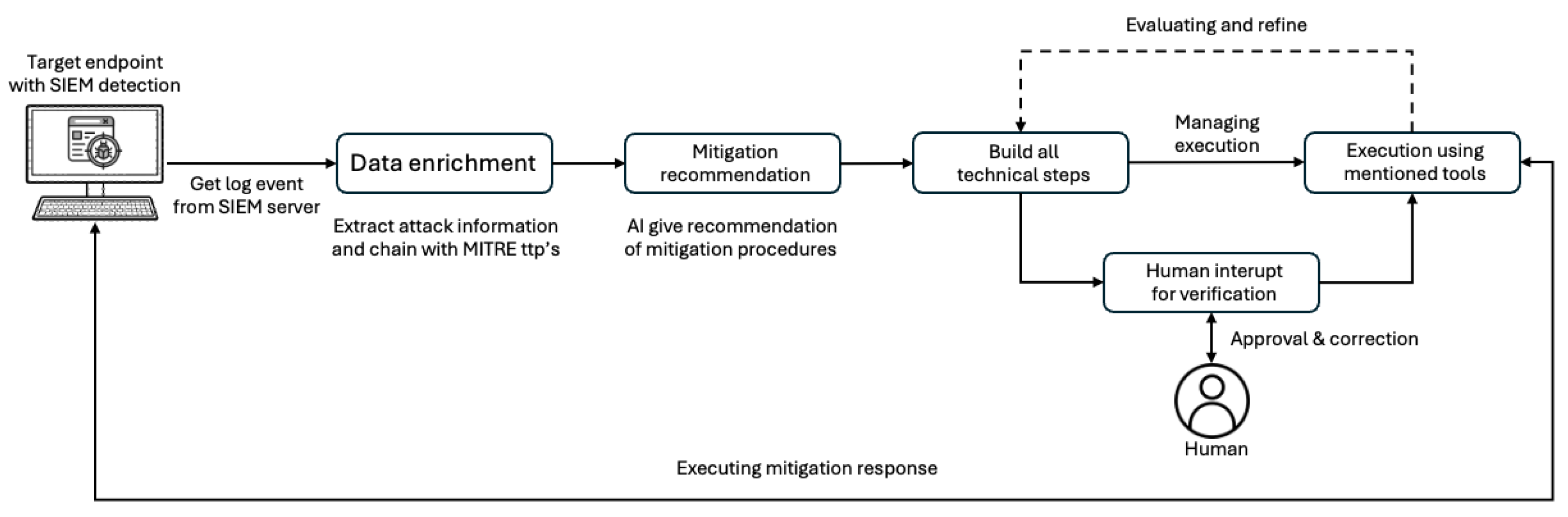

3.2.1. End-to-End Mitigation Workflow Powered by an AI Agent

3.2.2. Agentic AI Building Blocks and Function

Leveraging LLMs for Agent-Powered Security Systems

Planning and Data Management

- Data Refinement: The system cleanses, labels, and structures incoming event data for efficient processing.

- Security Incident Response Data: Historical logs, real-time event monitoring, and domain-specific threat intelligence inform analysis and decision-making.

- Vector Database (Vector DB): A specialized database storing the embeddings of security incidents, enabling rapid retrieval of past events with similar characteristics. This capability aids in contextualizing new threats and supports proactive response measures.
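Retrieval of past incidents with similar characteristics is, at its core, a nearest-neighbor search over embeddings. The brute-force cosine-similarity sketch below illustrates the idea; the incident IDs and three-dimensional vectors are toy placeholders, whereas a real deployment would obtain embeddings from an embedding model and rely on the vector database's own (typically approximate) nearest-neighbor index.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between vectors a and b; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k_similar(query, index, k=2):
    """Return the k (incident_id, score) pairs most similar to `query`."""
    scored = [(incident_id, cosine_similarity(query, vector)) for incident_id, vector in index.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


# Toy index of past incidents (hypothetical embeddings).
INDEX = {
    "INC-001 (SSH brute force)": [0.9, 0.1, 0.0],
    "INC-002 (web injection)": [0.1, 0.9, 0.2],
    "INC-003 (ransomware)": [0.0, 0.2, 0.95],
}
```

A new alert embedded near `[0.85, 0.15, 0.05]` would retrieve INC-001 first, giving the agent a prior case to ground its analysis.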

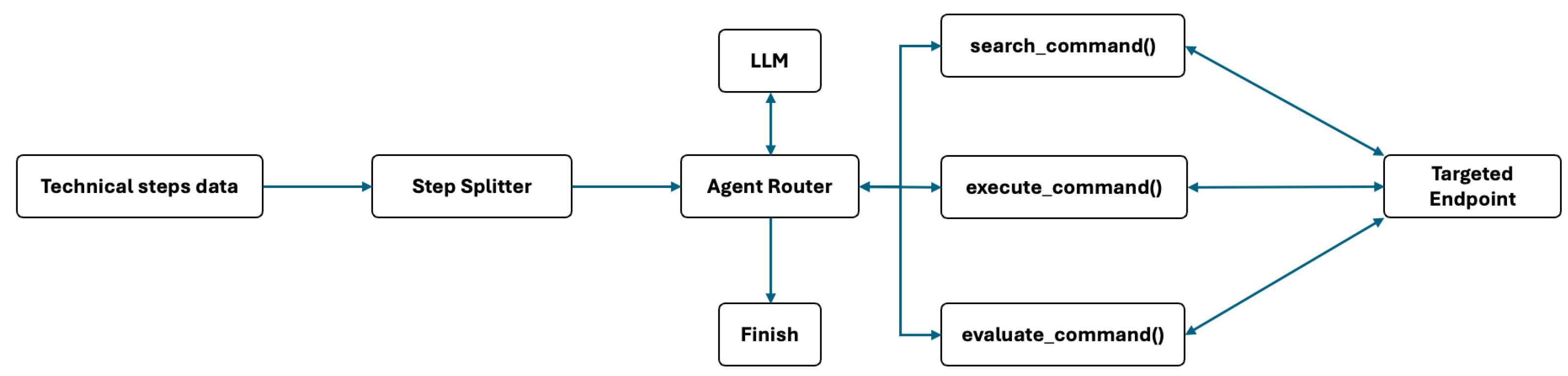

Model Orchestration and Task Decomposition

- Agent Router: An agent component that routes queries and tasks to the appropriate Large Language Model (LLM) based on predefined rules, model specialization, or real-time performance metrics. It also utilizes the incident similarity engine, retrieving relevant cases from the vector DB to enhance contextual understanding.

- LLM Models: Two specialized LLMs power the system:

  - Llama-3.3-70B-Instruct: Focuses on broad security policies, general text processing, and strategic threat mitigation.

  - deepseek-ai/DeepSeek-R1-Distill-Llama-70B: Designed for deep, domain-specific security analysis and advanced query resolution.
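The routing rule below is a minimal sketch of the predefined-rules option mentioned for the Agent Router: queries containing analysis-oriented tokens go to the DeepSeek model, and everything else goes to Llama. The token list and function name are hypothetical, the model identifiers are the two named above, and the sketch does not cover routing by model specialization scores or real-time performance metrics.

```python
import re

GENERAL_MODEL = "Llama-3.3-70B-Instruct"
ANALYSIS_MODEL = "deepseek-ai/DeepSeek-R1-Distill-Llama-70B"

# Hypothetical routing vocabulary: whole-word tokens suggesting deep, log-level analysis.
ANALYSIS_TOKENS = {
    "log", "logs", "alert", "alerts", "forensic", "forensics",
    "ioc", "iocs", "mitre", "ttp", "ttps",
}


def route(query: str) -> str:
    """Pick a model ID for `query` using simple keyword rules."""
    tokens = set(re.findall(r"[a-z0-9]+", query.lower()))
    return ANALYSIS_MODEL if tokens & ANALYSIS_TOKENS else GENERAL_MODEL
```

Matching whole tokens rather than substrings avoids accidental hits such as "log" inside "login" or "catalog".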

Tooling and Execution Component

Technical Step Builder

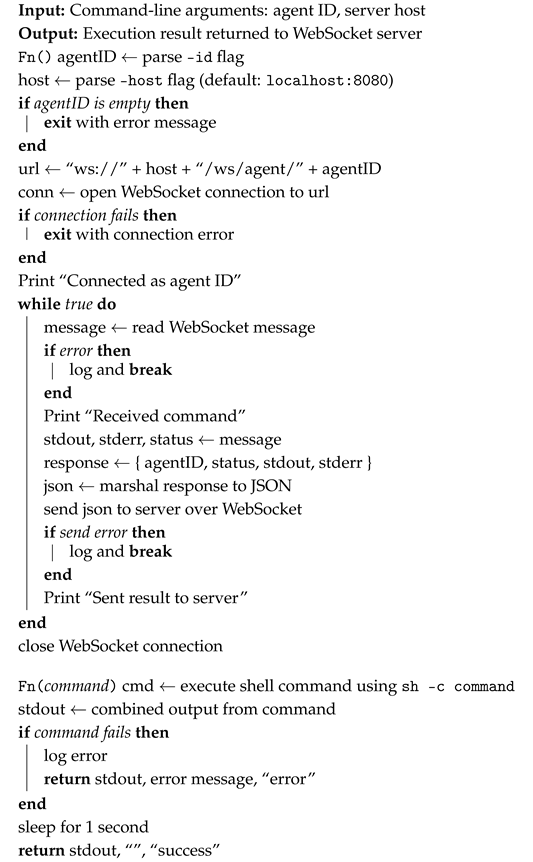

Agent Executor

Algorithm 1: WebSocket Agent Client (Command Executor)
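A WebSocket agent client of the kind named in Algorithm 1 ultimately has to run a received command and report the result. The sketch below covers only that execution step, under stated assumptions: the function name, result fields, and 30-second default timeout are hypothetical, the WebSocket transport is omitted, and it is not the authors' implementation.

```python
import shlex
import subprocess
import sys


def execute_command(command: str, timeout: float = 30.0) -> dict:
    """Run one command without a shell and return a JSON-serializable result."""
    argv = shlex.split(command)
    if not argv:
        raise ValueError("empty command")
    result = {"command": command, "returncode": None, "stdout": "", "stderr": "", "timed_out": False}
    try:
        proc = subprocess.run(
            argv,  # no shell=True, so shell metacharacters are not interpreted
            capture_output=True,
            text=True,
            timeout=timeout,
        )
    except subprocess.TimeoutExpired:
        result["timed_out"] = True  # the child process is killed by subprocess.run
        return result
    except FileNotFoundError as exc:
        result["returncode"] = 127  # conventional "command not found" status
        result["stderr"] = str(exc)
        return result
    result.update(returncode=proc.returncode, stdout=proc.stdout, stderr=proc.stderr)
    return result


if __name__ == "__main__":
    # Demo with the current interpreter; a WebSocket client would instead receive
    # `command` from the agent and send the serialized result back over the socket.
    print(execute_command(f"{shlex.quote(sys.executable)} -c 'print(42)'"))
```

Because no shell is involved, pipelines and redirections are not supported; that limitation is deliberate here, since commands generated by an LLM should be validated (for example, against an allowlist or by a human) before execution.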

3.3. System Automation Flow

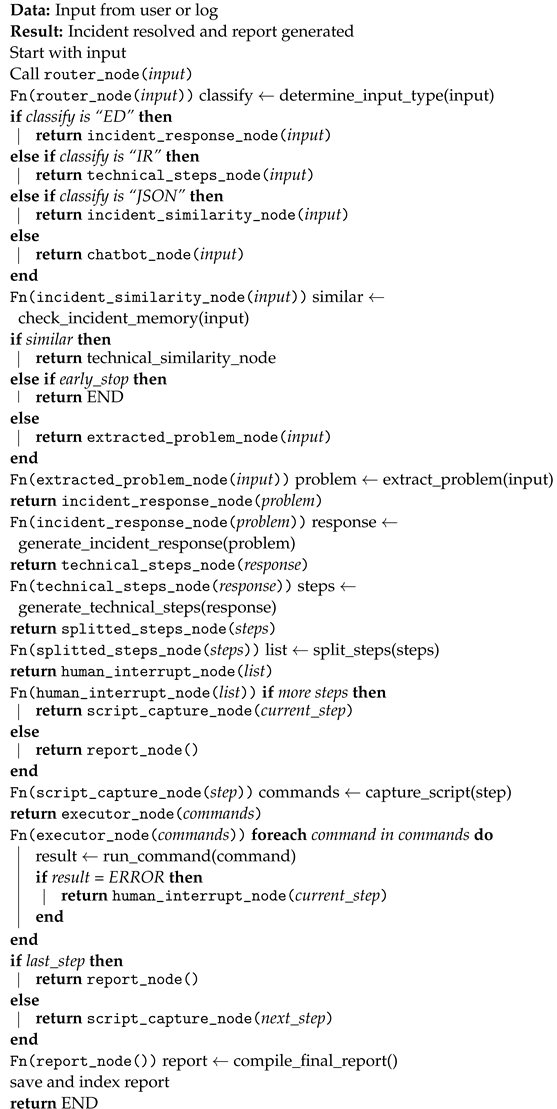

3.4. Agent Implementation Overview and Flow Logic

3.4.1. Routing and Input Classification

Algorithm 2: Agent Graph Flow
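Algorithm 2 names an agent graph flow, and Sections 3.4.1 to 3.4.5 describe its stages. A minimal, framework-free sketch of such a graph follows: each node updates a shared state dictionary and returns the name of the next node. The node names, the classification heuristic, and the placeholder bodies are assumptions for illustration; in a real system the retrieval, extraction, and response nodes would call the vector DB and the LLMs.

```python
END = "__end__"


def classify(state):
    # Hypothetical heuristic: treat alert- or log-like input as an incident.
    text = state["input"].lower()
    state["kind"] = "incident" if ("alert" in text or "log" in text) else "chat"
    return "retrieve" if state["kind"] == "incident" else "respond"


def retrieve(state):
    state["similar_cases"] = []  # placeholder for a vector-DB similarity lookup
    return "extract"


def extract(state):
    state["problem"] = state["input"].strip()  # placeholder for LLM problem extraction
    return "respond"


def respond(state):
    # Placeholder for response and step generation by the routed LLM.
    state["response"] = f"[{state['kind']}] {state.get('problem', state['input'])}"
    return "report" if state["kind"] == "incident" else END


def report(state):
    state["reported"] = True  # placeholder for post-execution reporting and indexing
    return END


NODES = {"classify": classify, "retrieve": retrieve, "extract": extract, "respond": respond, "report": report}


def run_graph(user_input, start="classify", max_steps=20):
    """Walk the graph from `start` until a node returns END."""
    state = {"input": user_input, "trace": []}
    node = start
    while node != END:
        if len(state["trace"]) >= max_steps:
            raise RuntimeError("agent graph did not reach END within max_steps")
        state["trace"].append(node)
        node = NODES[node](state)
    return state
```

Returning the next node's name from each node keeps control flow explicit and makes conditional branches (such as skipping retrieval for casual conversation) easy to audit; the `max_steps` guard prevents an accidental cycle from looping forever.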

3.4.2. Similarity-Based Retrieval

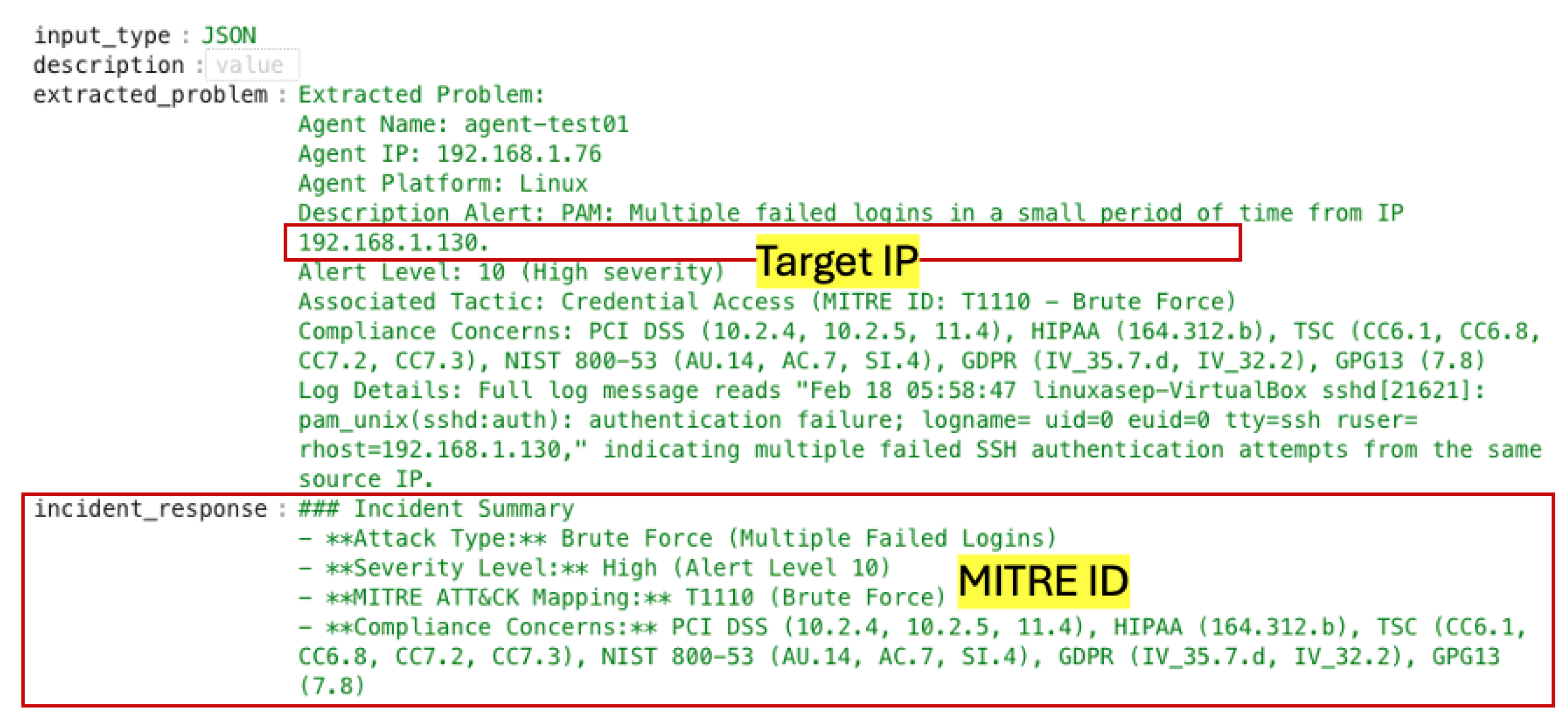

3.4.3. Problem Extraction and Response Generation

3.4.4. Stepwise Execution and Control Flow

3.4.5. Post Execution Reporting and Indexing

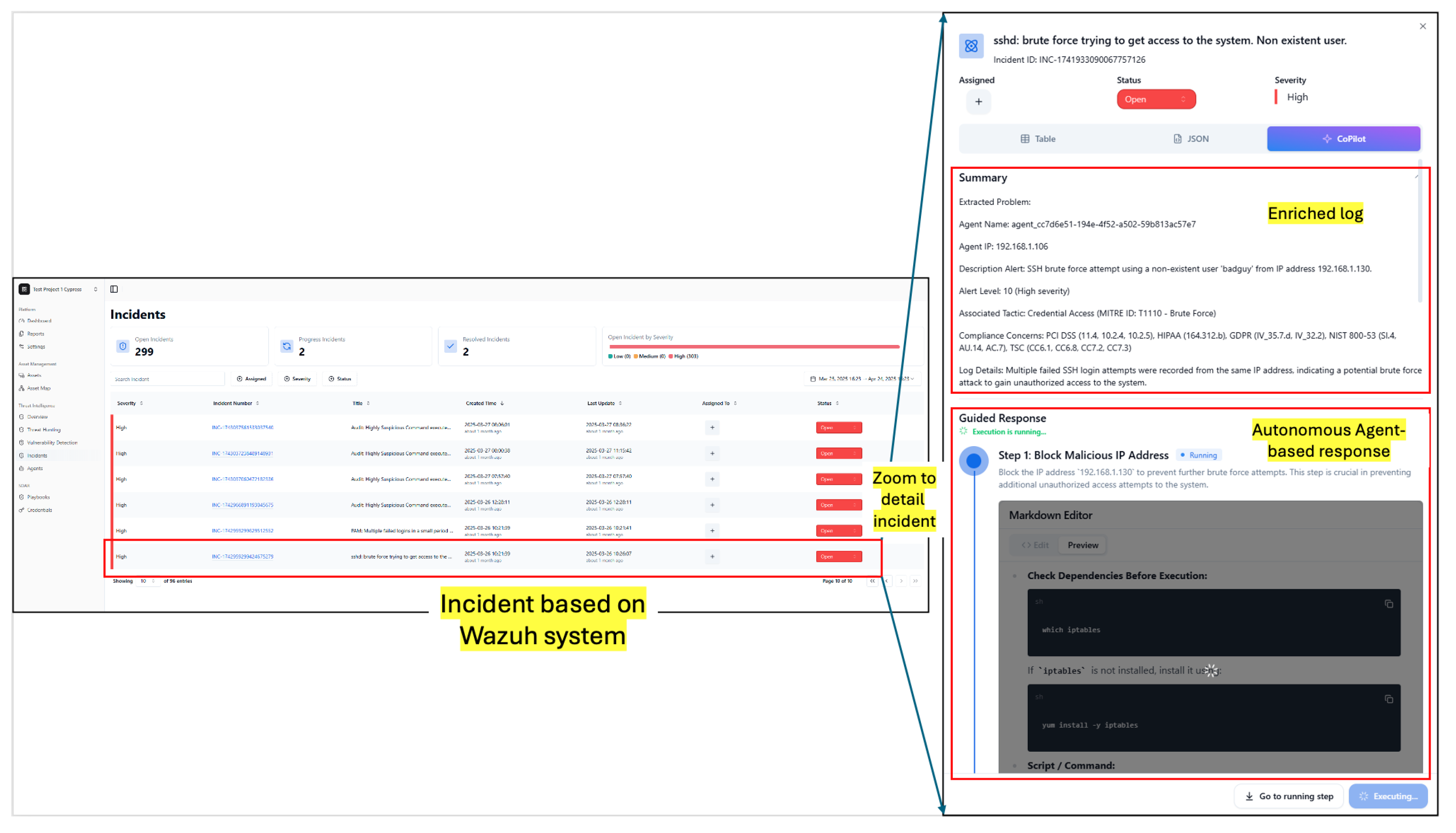

4. Agent Validation Result

4.1. Deployment

4.2. Data Enrichment

4.3. Agent-Based Mitigation Result

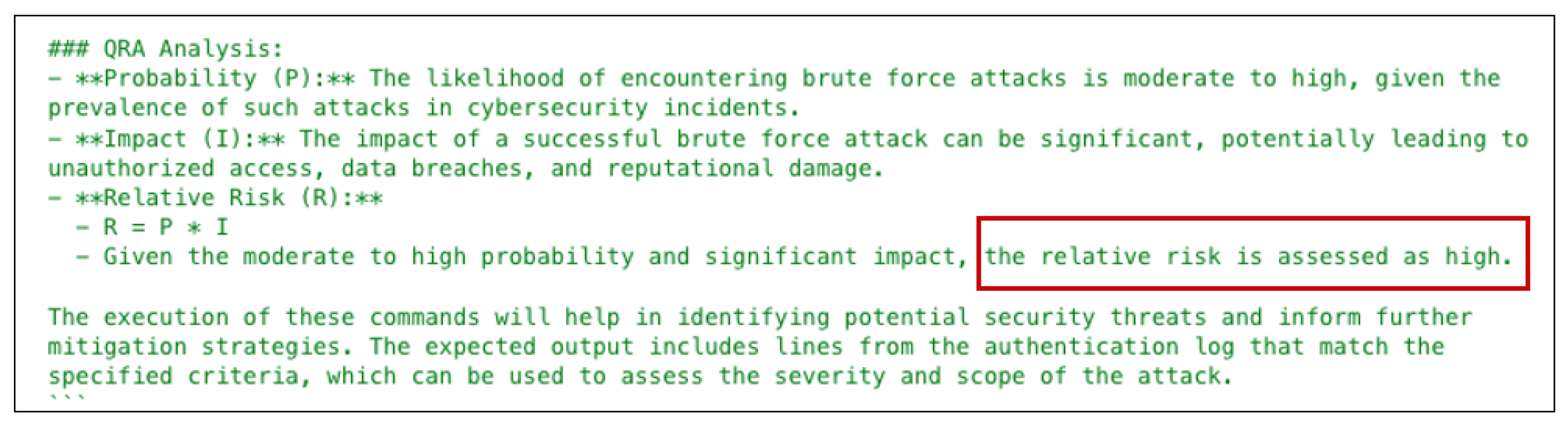

4.4. Brute-Force Quantitative Risk Assessment Result

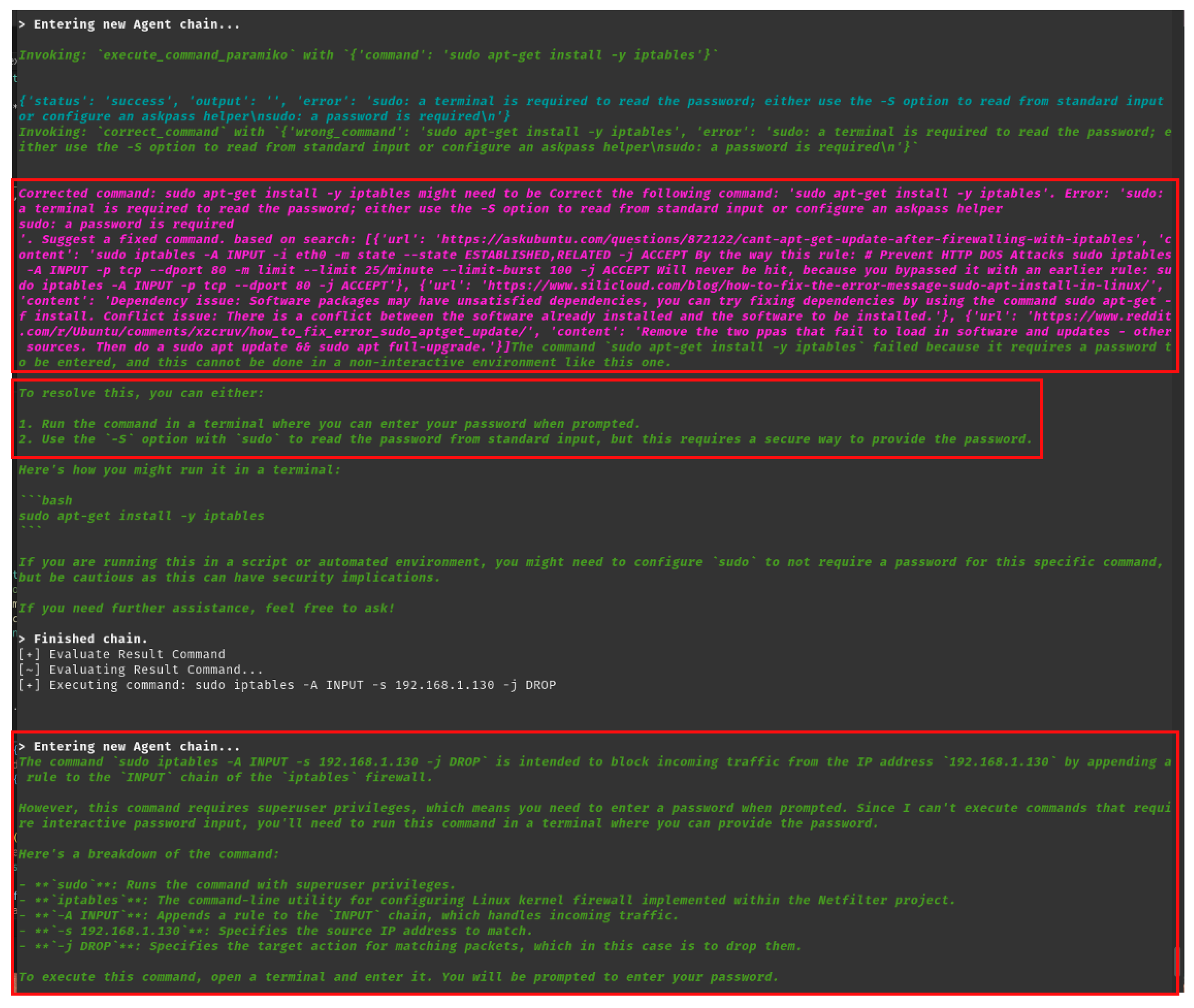

4.5. AI-Driven Adaptive Error Resolution

4.6. Mean Time to Remediate (MTTR) Results

5. Discussion

6. Future Works

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| System Prompt Format |
|---|
| 1. Incident Response Analysis & Generation: |
| - Analyze log data and problem reports. |
| - Identify security threats using industry-standard frameworks. |
| 2. Incident Mitigation & Resolution: |
| - Provide mitigation strategies aligned with NIST CSF 2.0 and MITRE ATT&CK. |
| - Generate remediation steps, including playbook automation. |
| 3. Automation & Technical Guidance: |
| - Offer step-by-step response procedures. |
| - Ensure technical flow follows SOAR best practices. |
| 4. Security Research & Advisory: |
| - Utilize vector databases and security repositories. |
| - Provide evidence-based guidance. |
| 5. Conversational Efficiency & Memory: |
| - Engage professionally and contextually with users. |
| - Maintain conversation history for improved accuracy. |

| # | XSOAR Brute-Force Investigation - Generic | Proposed AI Agent | Notes |
|---|---|---|---|
| 1 | Initial Detection & Triage: identify abnormal login attempts and confirm brute-force indicators. | Incident Summary | Both approaches emphasize quick identification of brute-force attempts. Early triage ensures correct prioritization and immediate response. |
| 2 | Gather Evidence & Analyze Logs: review system logs to confirm scope, timeline, and potential impact. | Step 2: Analyze Log Files | The AI agent's procedure mirrors XSOAR's approach by collecting evidence from relevant logs. Both aim to identify compromised accounts or unusual sources. |
| 3 | Contain & Mitigate Ongoing Attack: block malicious IP addresses or isolate infected hosts. | Step 1: Isolate the Affected System | Both methods prioritize swift containment to stop the attack in progress. Blocking the malicious IP is a common immediate action. |
| 4 | Implement Protective Measures: use account lockouts or IP blocking tools to thwart brute-force attempts. | Step 3: Monitor and Block Suspicious IPs | XSOAR's generic playbook recommends threshold-based blocking and lockouts, whereas the AI agent explicitly uses Fail2Ban. Password resets align with best practices for compromised accounts. |
| 5 | Strengthen Access Controls: enhance MFA and tighten SSH settings. | Step 5: Enable and Configure Two-Factor Authentication | Both highlight multi-factor authentication and SSH hardening as key defenses. |
| 6 | Forensic Analysis: investigate system integrity, checking for unauthorized changes or malware. | Step 7: Conduct a Forensic Analysis | The AI agent addresses XSOAR's deep-dive investigation through file integrity checks and audit logging. |
| 7 | Remediation & Restoration: return systems to a secure baseline once threats are removed. | Steps 1 and 3–7 Combined | While XSOAR treats remediation as a distinct phase, the AI agent's steps collectively restore normal, secure operations. |
| 8 | Documentation: record all findings, actions, and lessons learned. | Step 8: Document the Incident | Proper record keeping is essential for audits, compliance, and post-incident reviews. Both emphasize thorough documentation. |
| 9 | Policy Review & Compliance Check: review and update security policies for regulatory alignment. | Step 9: Review and Update Security Policies | Both approaches highlight the importance of aligning policies with relevant standards. Continuous improvement is a central theme. |
| 10 | Post-Incident Analysis & Lessons Learned: conduct a debrief and refine IR processes. | Step 10: Conduct a Post-Incident Analysis | A structured after-action review is key in both XSOAR's process and the AI agent's approach, and lessons learned drive future improvements. |

| Step | Action Taken | System Response | AI Agent Analysis | Next Steps |
|---|---|---|---|---|
| 1 | Ran `sudo apt-get install -y iptables` | Error: `sudo: a password is required` | Requires sudo authentication. | User runs the command manually or supplies the password via `sudo -S`. |
| 2 | Suggested authentication alternatives | User input required | Requires user interaction. | User manually enters a password. |
| 3 | Ran `sudo iptables -A INPUT -s 192.168.1.130 -j DROP` | Error: `sudo: a password is required` | Same issue: requires authentication. | Configure sudo to allow execution without a password. |
| 4 | Issued a Human Intervention Request | Awaiting user action | Execution blocked by authentication. | User must execute manually or adjust sudo settings. |
| 5 | Standing by for further instructions | Ready for next attempt | Awaiting user input. | User feedback required. |
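The escalation behavior in the table above, where execution stops and a Human Intervention Request is issued once sudo demands a password, can be sketched as a small control loop. In this hedged sketch the function names and retry policy are hypothetical, and `executor` is any callable returning a dict with `returncode` and `stderr` keys. The matched substrings come from sudo's own messages: the one shown in the table, and the "a terminal is required" message sudo prints when no TTY is available.

```python
# Substrings sudo prints when it cannot obtain a password non-interactively.
HUMAN_REQUIRED_MARKERS = ("a password is required", "a terminal is required")


def needs_human(stderr: str) -> bool:
    """True if the error indicates the step cannot proceed without an analyst."""
    text = stderr.lower()
    return any(marker in text for marker in HUMAN_REQUIRED_MARKERS)


def run_steps(steps, executor, max_retries=1):
    """Execute steps in order; stop and escalate when a step needs human input.

    Returns a list of (step, status) tuples, where status is "ok",
    "human_intervention_requested", or "failed".
    """
    log = []
    for step in steps:
        attempts = 0
        while True:
            result = executor(step)
            if result["returncode"] == 0:
                log.append((step, "ok"))
                break
            if needs_human(result["stderr"]):
                # Retrying cannot fix missing credentials, so stand by for the analyst.
                log.append((step, "human_intervention_requested"))
                return log
            attempts += 1
            if attempts > max_retries:
                log.append((step, "failed"))
                return log
    return log
```

Separating "needs a human" failures from ordinary retryable errors avoids repeating commands that cannot succeed unattended, which matches the repeated sudo errors in Steps 1 and 3 of the table.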

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ismail; Kurnia, R.; Brata, Z.A.; Nelistiani, G.A.; Heo, S.; Kim, H.; Kim, H. Toward Robust Security Orchestration and Automated Response in Security Operations Centers with a Hyper-Automation Approach Using Agentic Artificial Intelligence. Information 2025, 16, 365. https://doi.org/10.3390/info16050365

Ismail, Kurnia R, Brata ZA, Nelistiani GA, Heo S, Kim H, Kim H. Toward Robust Security Orchestration and Automated Response in Security Operations Centers with a Hyper-Automation Approach Using Agentic Artificial Intelligence. Information. 2025; 16(5):365. https://doi.org/10.3390/info16050365

Chicago/Turabian Style: Ismail, Rahmat Kurnia, Zilmas Arjuna Brata, Ghitha Afina Nelistiani, Shinwook Heo, Hyeongon Kim, and Howon Kim. 2025. "Toward Robust Security Orchestration and Automated Response in Security Operations Centers with a Hyper-Automation Approach Using Agentic Artificial Intelligence" Information 16, no. 5: 365. https://doi.org/10.3390/info16050365

APA Style: Ismail, Kurnia, R., Brata, Z. A., Nelistiani, G. A., Heo, S., Kim, H., & Kim, H. (2025). Toward Robust Security Orchestration and Automated Response in Security Operations Centers with a Hyper-Automation Approach Using Agentic Artificial Intelligence. Information, 16(5), 365. https://doi.org/10.3390/info16050365