AIMT Agent: An Artificial Intelligence-Based Academic Support System

Abstract

1. Introduction

2. Materials and Methods

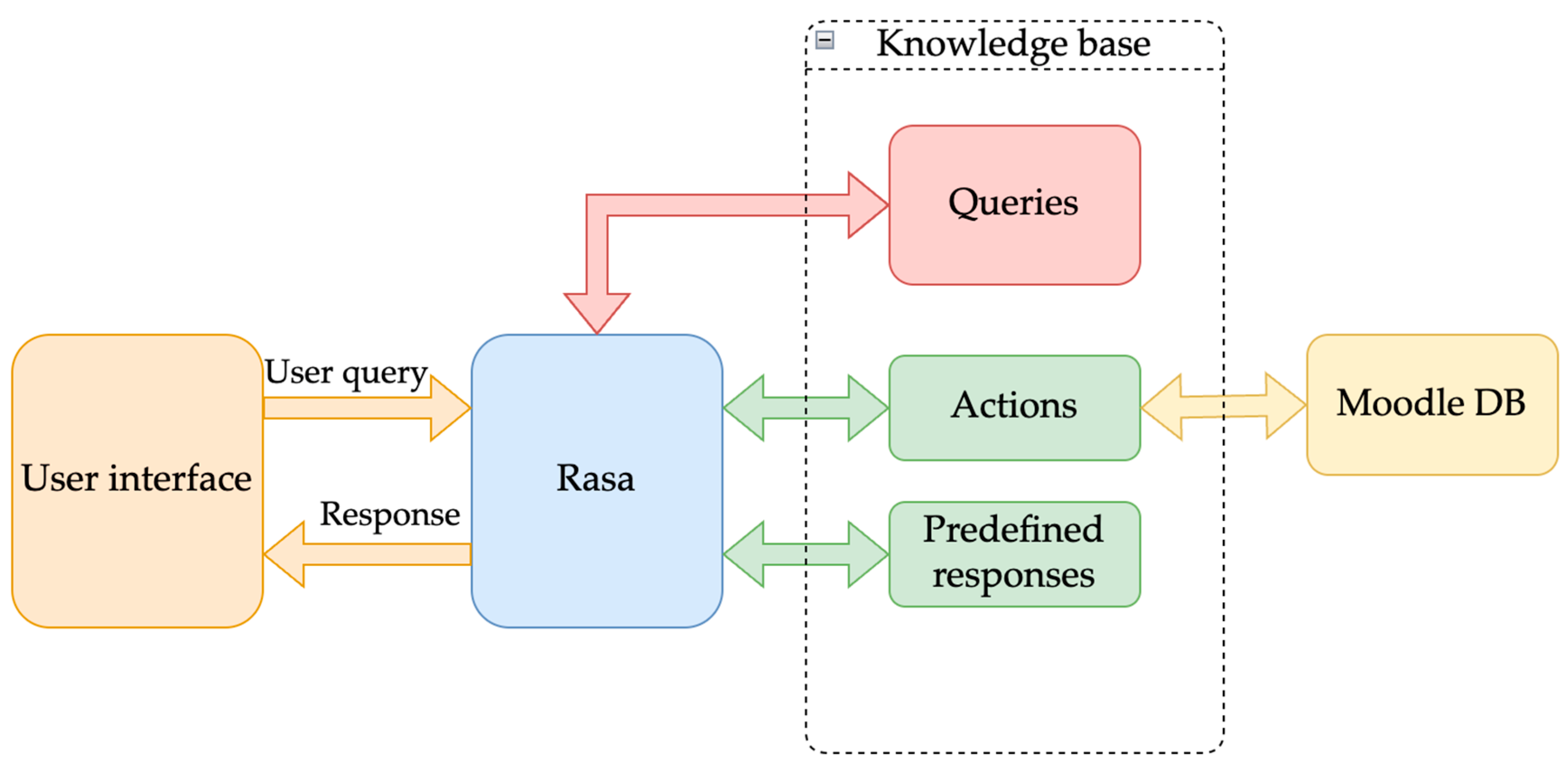

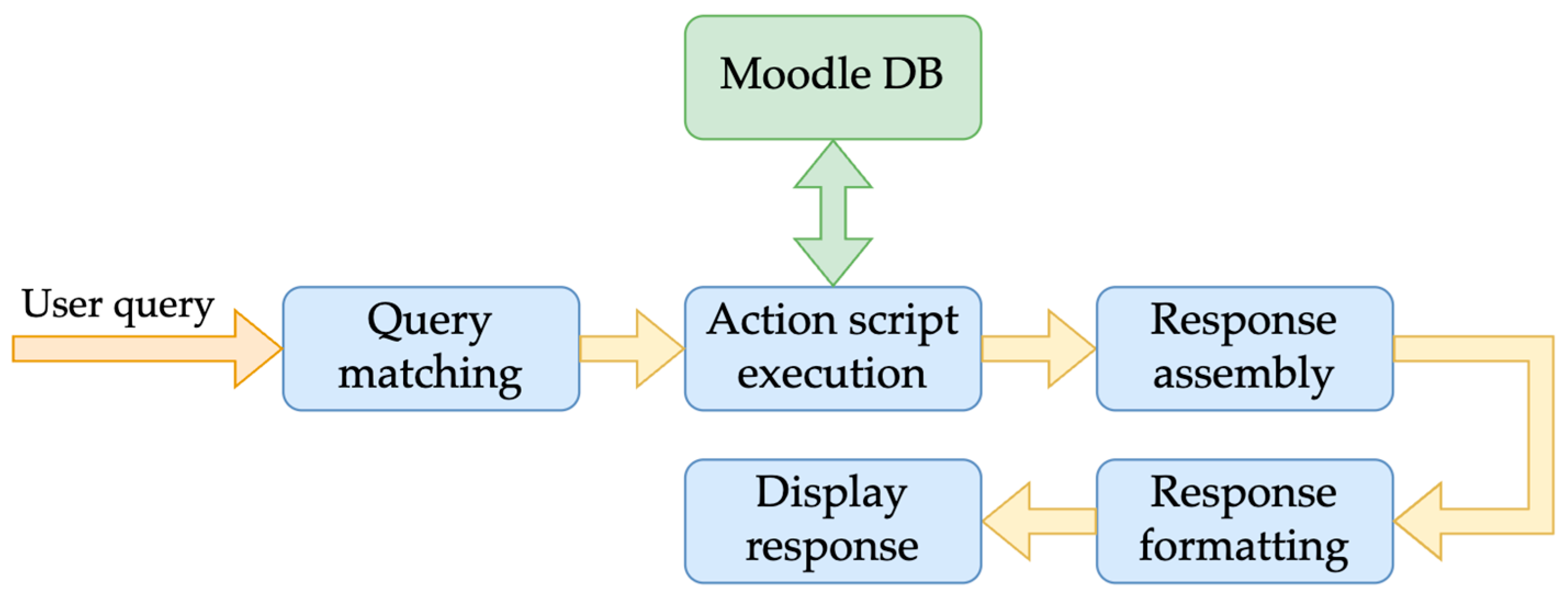

2.1. The Conversational Agent

- Intents: These are the questions that users may ask. An initial list of queries in English was compiled from common questions asked by prospective and current students. For each question, common formulations were entered into the knowledge base as examples. Providing more example formulations for a given query increases the probability that a user's question is understood and matched to the correct intent.

- Answers: These are the predefined answers that the agent returns when it recognizes a known question. The answers were based on information provided on the MSc program’s website.

- Rules: These are the entities that map each recognized question (intent) to its predefined answer.
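The three components above can be illustrated with a minimal sketch. It is not the authors' implementation: the underlying language-understanding engine is not described here, so the sketch swaps in a simple Jaccard word-overlap score with an assumed threshold of 0.5. The intent names, the second example formulations, and the journal answer are hypothetical placeholders; the PhD reply and the fallback message are taken from the sample interactions reported for AIMT Agent.

```python
import re

# Hypothetical miniature knowledge base with the three components above.
# Intents: each intent lists example formulations of the same question.
INTENTS = {
    "phd_progression": ["Can I proceed to a PhD?", "Is a PhD possible after this MSc?"],
    "journal_access": ["How can I access journals?", "Where do I find journal articles?"],
}

# Answers: predefined replies. The PhD reply is quoted from the reported
# sample interactions; the journal text is a placeholder.
ANSWERS = {
    "ans_phd": "PhD programs are available after coordinating with the Program Director first",
    "ans_journals": "[placeholder: instructions for accessing journals]",
}

# Rules: map each intent to the answer it should return.
RULES = {"phd_progression": "ans_phd", "journal_access": "ans_journals"}

FALLBACK = "Sorry, I didn't quite understand that. Could you please rephrase?"


def tokens(text: str) -> set[str]:
    """Lower-case word tokens; punctuation is discarded."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Share of tokens two sets have in common, between 0 and 1."""
    return len(a & b) / len(a | b) if a | b else 0.0


def respond(question: str, threshold: float = 0.5) -> str:
    """Return the answer of the best-matching intent, or the fallback reply.

    An intent scores as well as its closest example, so adding example
    formulations can raise an intent's score but never lower it.
    """
    q = tokens(question)
    best_intent, best_score = None, 0.0
    for intent, examples in INTENTS.items():
        score = max(jaccard(q, tokens(e)) for e in examples)
        if score > best_score:
            best_intent, best_score = intent, score
    if best_intent is None or best_score < threshold:
        return FALLBACK
    return ANSWERS[RULES[best_intent]]
```

For example, `respond("Can i proceed to a phd?")` returns the PhD reply, while an unrelated question such as `respond("What is the weather today?")` falls below the threshold and returns the fallback. Scoring each intent by its best-matching example mirrors the point made under Intents: more formulations give a user's wording more chances to match.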

2.2. Integration with Moodle LMS

2.3. Evaluation

3. Results

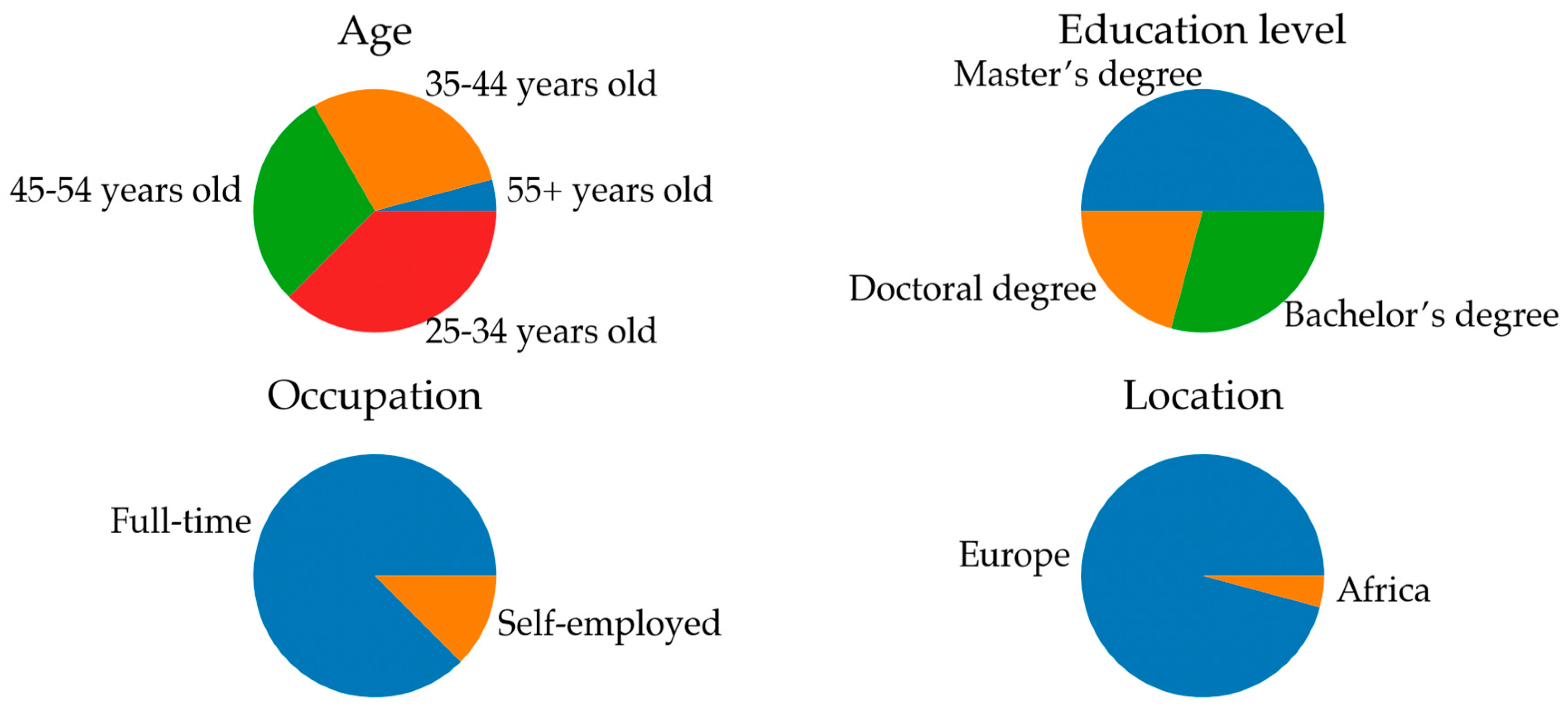

3.1. Demographics

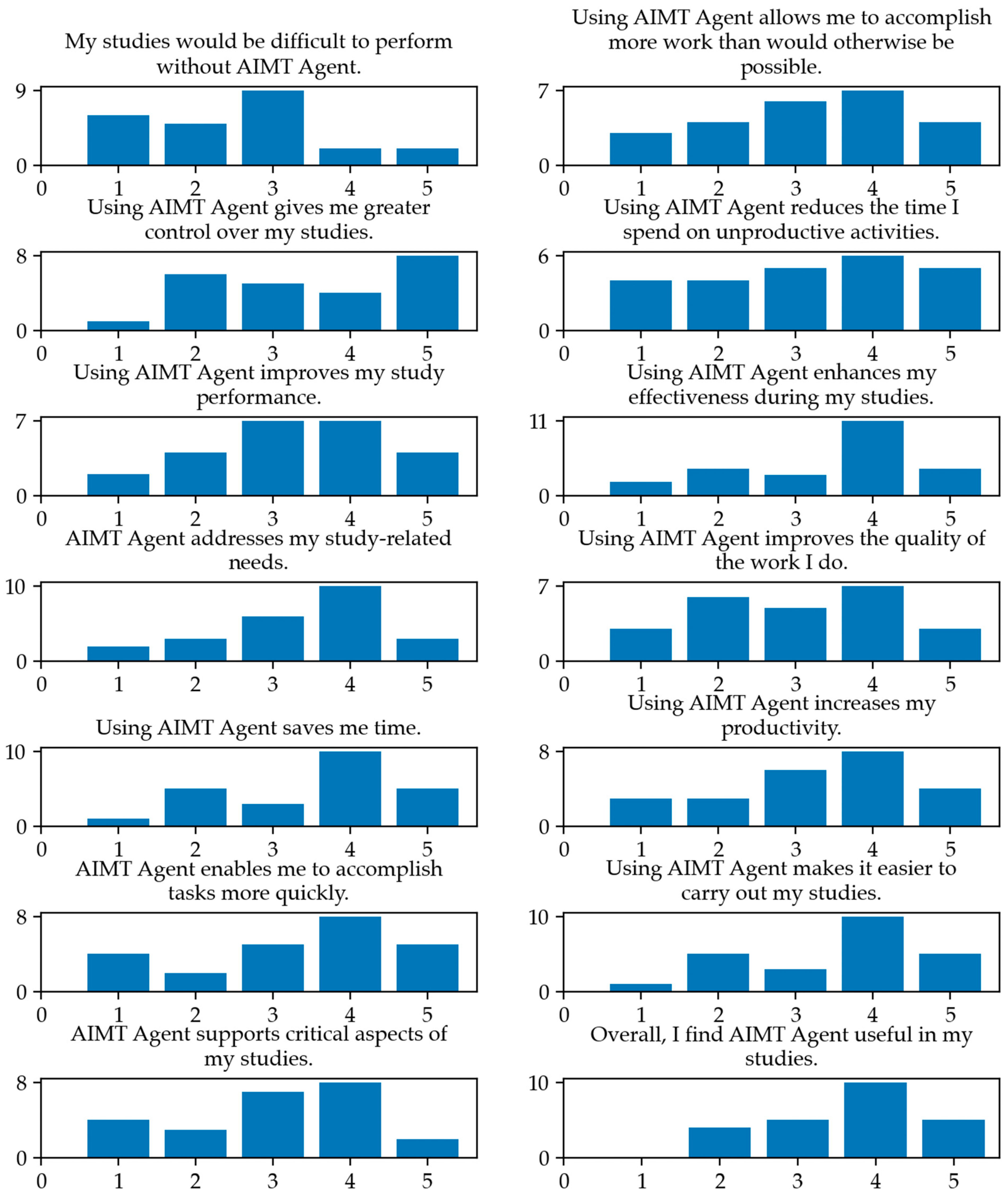

3.2. Perceived Usefulness

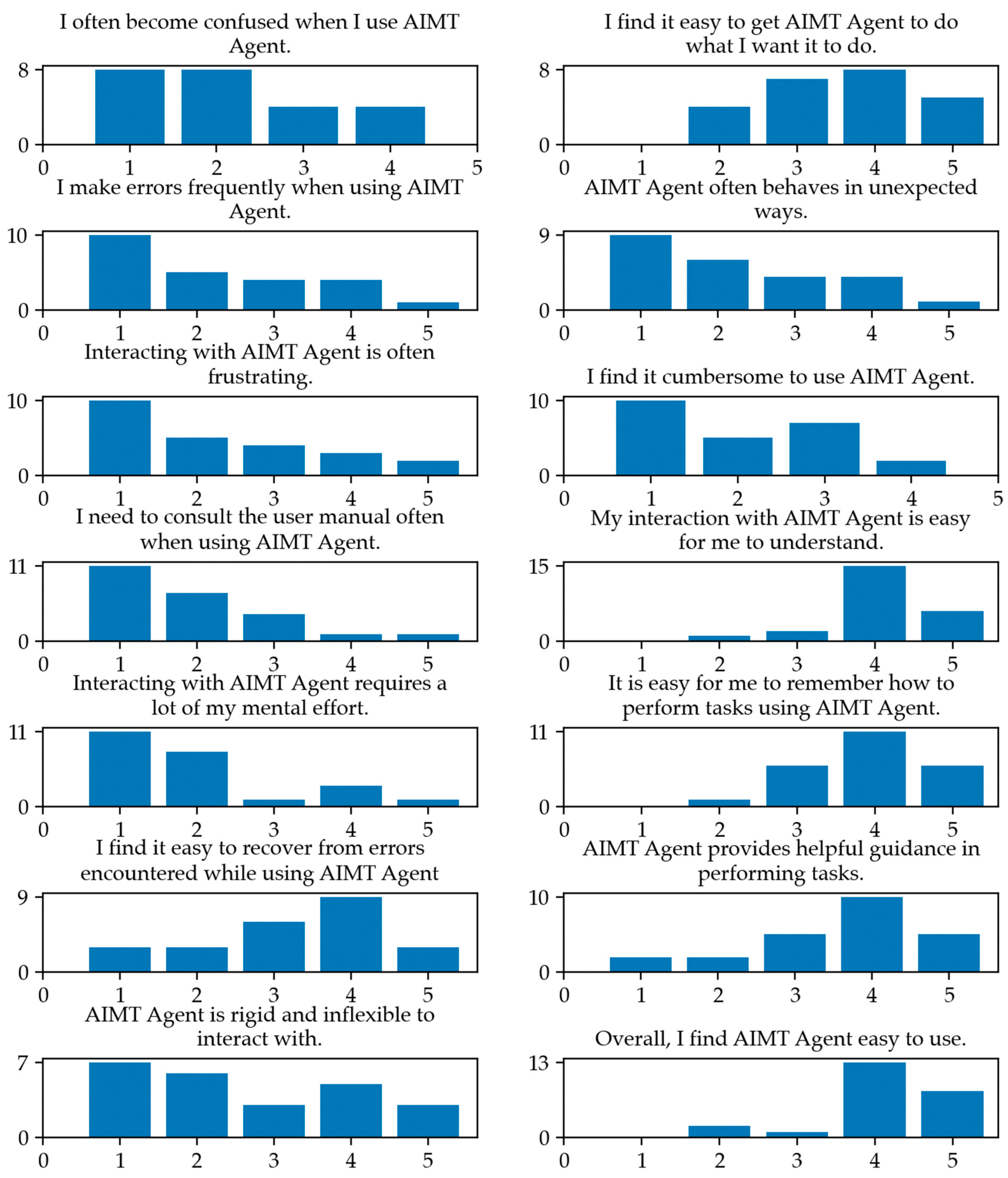

3.3. Perceived Ease of Use

4. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Function | Example Query |
|---|---|
| Ask for the contact information of a teacher | What is Dr Tsinakos email? |
| Ask for pending assignments | When are my assignments due? |
| Ask for the objectives of the MSc program | What is the goal of this Msc? |
| Ask for instructions on accessing journals | How can I access journals? |
| Retrieve information regarding the thesis | How is the thesis completed? |
| Retrieve submission date of the thesis | When is the thesis deadline? |
| Retrieve information regarding the independent study module | What is independent study? |
| Retrieve submission date of the independent study module | When is the deadline for the independent study? |
| Ask for thesis topics | What are the thesis topics? |
| Ask for independent study topics | What are the independent study topics? |

| Question | Sample User Query | System Response | Result |
|---|---|---|---|
| 1 | When is my thesis due? | Thesis is due on 29 September 2024 03:00 AM | Correct response |
| 2 | Can i proceed to a phd? | PhD programs are available after coordinating with the Program Director first | Successful response |
| 3 | In the course IMT8 are two assignments obligatory? | You have no assignments pending | Misunderstanding the question |
| 4 | In IMT8 do I have 2 compulsory assignments? | Sorry, I didn’t quite understand that. Could you please rephrase? | Unknown question |
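The deadline replies above ("Thesis is due on 29 September 2024 03:00 AM", "You have no assignments pending") suggest how assignment data pulled from Moodle might be turned into an answer. The sketch below is a hypothetical illustration only: Moodle's web-service layer does expose assignment data (for instance through the `mod_assign_get_assignments` function), but the sketch does not call it, and the record shape (dicts with `name` and `duedate` in Unix seconds), the use of UTC, and the function names are all assumptions.

```python
from datetime import datetime, timezone


def format_due(timestamp: int) -> str:
    """Render a Unix timestamp in the style of the sample reply.

    UTC is assumed here; a real deployment would use the site's or user's timezone.
    """
    return datetime.fromtimestamp(timestamp, tz=timezone.utc).strftime("%d %B %Y %I:%M %p")


def pending_reply(assignments: list[dict], now: int) -> str:
    """Build a reply listing assignments whose due date is still in the future.

    Each assignment is a dict with hypothetical keys 'name' and 'duedate'
    (Unix seconds); this is a simplified record, not Moodle's exact schema.
    """
    pending = sorted(
        (a for a in assignments if a["duedate"] > now),
        key=lambda a: a["duedate"],
    )
    if not pending:
        return "You have no assignments pending"
    return "; ".join(f"{a['name']} is due on {format_due(a['duedate'])}" for a in pending)
```

Separating data retrieval from reply formatting keeps the wording under the knowledge base's control while the dates stay live. Note that a reply like the one in row 3 is what such a handler produces when a question about a specific course is routed to the generic pending-assignments intent.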

| Questions |
|---|
| 1. My studies would be difficult to perform without AIMT Agent. |
| 2. Using AIMT Agent gives me greater control over my studies. |
| 3. Using AIMT Agent improves my study performance. |
| 4. AIMT Agent addresses my study-related needs. |
| 5. Using AIMT Agent saves me time. |
| 6. AIMT Agent enables me to accomplish tasks more quickly. |
| 7. AIMT Agent supports critical aspects of my studies. |
| 8. Using AIMT Agent allows me to accomplish more work than would otherwise be possible. |
| 9. Using AIMT Agent reduces the time I spend on unproductive activities. |
| 10. Using AIMT Agent enhances my effectiveness during my studies. |
| 11. Using AIMT Agent improves the quality of the work I do. |
| 12. Using AIMT Agent increases my productivity. |
| 13. Using AIMT Agent makes it easier to carry out my studies. |
| 14. Overall, I find AIMT Agent useful in my studies. |

| Questions |
|---|
| 1. I often become confused when I use AIMT Agent. |
| 2. I make errors frequently when using AIMT Agent. |
| 3. Interacting with AIMT Agent is often frustrating. |
| 4. I need to consult the user manual often when using AIMT Agent. |
| 5. Interacting with AIMT Agent requires a lot of my mental effort. |
| 6. I find it easy to recover from errors encountered while using AIMT Agent. |
| 7. AIMT Agent is rigid and inflexible to interact with. |
| 8. I find it easy to get AIMT Agent to do what I want it to do. |
| 9. AIMT Agent often behaves in unexpected ways. |
| 10. I find it cumbersome to use AIMT Agent. |
| 11. My interaction with AIMT Agent is easy for me to understand. |
| 12. It is easy for me to remember how to perform tasks using AIMT Agent. |
| 13. AIMT Agent provides helpful guidance in performing tasks. |
| 14. Overall, I find AIMT Agent easy to use. |

| Question | Perceived Usefulness: Mean | Perceived Usefulness: Standard Deviation | Perceived Ease of Use: Mean | Perceived Ease of Use: Standard Deviation |
|---|---|---|---|---|
| 1 | 2.17 | 1.09 | 2.54 | 1.22 |
| 2 | 2.21 | 1.28 | 3.50 | 1.32 |
| 3 | 2.25 | 1.36 | 3.29 | 1.20 |
| 4 | 1.92 | 1.10 | 3.38 | 1.13 |
| 5 | 1.96 | 1.20 | 3.54 | 1.18 |
| 6 | 3.25 | 1.22 | 3.33 | 1.37 |
| 7 | 2.63 | 1.44 | 3.04 | 1.23 |
| 8 | 3.58 | 1.02 | 3.21 | 1.28 |
| 9 | 2.25 | 1.26 | 3.17 | 1.40 |
| 10 | 2.04 | 1.04 | 3.46 | 1.22 |
| 11 | 4.08 | 0.72 | 3.04 | 1.27 |
| 12 | 3.92 | 0.83 | 3.29 | 1.27 |
| 13 | 3.58 | 1.18 | 3.54 | 1.18 |
| 14 | 4.13 | 0.85 | 3.67 | 1.01 |
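As an illustrative summary of the item means above, the sketch below computes the unweighted mean of the 14 item means for each scale and identifies the highest-rated item. These aggregates are not figures reported by the authors. Whether the negatively worded ease-of-use items (e.g., items 1 to 5, 7, 9 and 10) were reverse-scored before the table was produced is not stated in this section, so an average across all items should be read with that caveat.

```python
from statistics import mean

# Item means transcribed from the table above (items 1 to 14).
PU = [2.17, 2.21, 2.25, 1.92, 1.96, 3.25, 2.63, 3.58, 2.25, 2.04, 4.08, 3.92, 3.58, 4.13]
PEOU = [2.54, 3.50, 3.29, 3.38, 3.54, 3.33, 3.04, 3.21, 3.17, 3.46, 3.04, 3.29, 3.54, 3.67]


def summarize(item_means: list[float]) -> tuple[float, int]:
    """Unweighted mean of the item means and the 1-based number of the top item."""
    top = max(range(len(item_means)), key=item_means.__getitem__)
    return mean(item_means), top + 1


for label, items in (("Perceived Usefulness", PU), ("Perceived Ease of Use", PEOU)):
    avg, top = summarize(items)
    print(f"{label}: mean of item means {avg:.3f}, highest-rated item {top}")
```

With the transcribed values this gives about 2.855 for usefulness and 3.286 for ease of use, with item 14 ("Overall, I find AIMT Agent useful/easy to use") the highest-rated item on both scales. Because the per-item standard deviations differ, a respondent-level analysis would require the raw responses rather than these item means.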

| Category | Description | No. of Responses |
|---|---|---|
| 1 | Correct answer | 131 (66.8%) |
| 2 | Incorrect answer due to insufficient examples | 19 (9.7%) |
| 3 | Incorrect answer due to malformed question | 14 (7.1%) |
| 4 | No answer due to insufficient data in the knowledge base | 22 (11.2%) |
| 5 | No answer due to malformed question | 10 (5.1%) |
| Total | | 196 |
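The percentages in the response-category table follow directly from the counts. The short check below reproduces them and adds an illustrative grouping by cause (our own, not part of the original table): categories 2 and 4 both point to gaps in the knowledge base, while categories 3 and 5 stem from malformed user questions.

```python
# Response counts per category, transcribed from the table above.
COUNTS = {
    1: 131,  # Correct answer
    2: 19,   # Incorrect answer due to insufficient examples
    3: 14,   # Incorrect answer due to malformed question
    4: 22,   # No answer due to insufficient data in the knowledge base
    5: 10,   # No answer due to malformed question
}


def percentages(counts: dict[int, int]) -> tuple[int, dict[int, float]]:
    """Total responses and each category's share, rounded to one decimal place."""
    total = sum(counts.values())
    return total, {cat: round(100 * n / total, 1) for cat, n in counts.items()}


total, shares = percentages(COUNTS)
# Illustrative grouping by cause (not part of the original table).
kb_gaps = COUNTS[2] + COUNTS[4]    # fixable by extending the knowledge base
malformed = COUNTS[3] + COUNTS[5]  # caused by how the question was phrased
print(total, shares)
print(f"knowledge-base gaps: {kb_gaps} ({100 * kb_gaps / total:.1f}%)")
print(f"malformed questions: {malformed} ({100 * malformed / total:.1f}%)")
```

This confirms the total of 196 and the reported shares, and under the grouping above 41 responses (20.9%) relate to knowledge-base gaps versus 24 (12.2%) to malformed questions, which suggests that adding examples and content would address the larger share of failures.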

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lytridis, C.; Tsinakos, A. AIMT Agent: An Artificial Intelligence-Based Academic Support System. Information 2025, 16, 275. https://doi.org/10.3390/info16040275
