Abstract

This paper proposes a solution for guiding visually impaired people to predefined locations marked with preregistered passive ultra-high-frequency (UHF) RFID tags inside public buildings (e.g., secretary’s offices and information desks). Our approach employs an unmanned ground vehicle guidance system that assists users in following predefined routes. The solution also includes a methodology for recording the best routes between all locations that may be visited. When the destination is reached, the system reads the tag, retrieves all the associated information from a database, and converts it into audio played through the user’s headphones. The system includes functionalities such as recording and playback of prerecorded routes, voice commands, and audio instructions. By describing the software and hardware architecture of the proposed guiding system prototype, we show how combining UHF-RFID technology with unmanned ground vehicle guiding systems equipped with ultrasonic, grayscale, and Hall sensors and voice interfaces allows the development of accessible, low-cost guiding systems with increased functionality. Moreover, we compare and analyze two route recording modes, based on line following and on manual recording, and obtain route playback deviations under 10% for several basic scenarios.

1. Introduction

According to the International Agency for the Prevention of Blindness, in 2020 the total number of people with complete blindness reached 43 million, approximately 0.5% of the world’s population [1]. The same study shows that the number of people affected by moderate to severe vision impairment is much greater, reaching 295 million (about 3.7% of the world’s population). All these people need to move around in public spaces like anyone else. Whether we speak about educational institutions, administrative buildings, museums, concert halls, offices, or commercial buildings, these spaces should also be accessible to visually impaired people. Still, in many cases, the need to guide them and to signal key points in public places is not yet fulfilled. Some buildings do integrate tactile signals, vocal guiding systems, or qualified personnel to provide a guiding service for people in need; one such example is the Louvre Museum in Paris, France [2].

Nonetheless, even when buildings have tactile maps, access to and guidance within them still represent a challenge for visually impaired people because they need to estimate distances on their own. Moreover, these solutions do not provide the same level of access to information about the points of interest in the building. Because most of this information is usually presented visually (e.g., printed timetables, announcements, and posters), a navigation system should also provide it in audio format.

In this paper, we propose an infrastructure-based positioning solution for indoor guiding to predefined locations that follows pre-recorded tracks and uses a vocal user interface. The proposed system offers accessibility not only to physical locations but also to all the information associated with these locations. The solution is based on a guiding unmanned ground vehicle (UGV) equipped with several sensors and a microphone and carrying an RFID reading module. The UGV’s position is estimated by combining self-positioning methods with RFID readings from known positions.

The main contributions presented in this paper are as follows:

- The proposal of a novel approach regarding indoor guiding to predefined locations based on a fusion of accessible current technologies that can be easily used by visually impaired people;

- The design and implementation of a low-cost prototype system;

- The design and implementation of route recording and playback algorithms.

This paper is structured into six sections. Section 1 presents the motivation. Section 2 discusses and compares other solutions. Section 3 defines the problem, including a selection of scenarios and requirements. The prototype is presented in Section 4. The paper continues with the experiments and the analysis of results and ends with conclusions and future work.

2. Related Work

While outdoor navigation systems usually rely on the global positioning system (GPS), which is relatively accessible and low-cost, there is no standard solution for indoor positioning. Because GPS was designed for outdoor environments, GPS-based indoor navigation faces several challenges, including signal loss inside buildings and reconfigurable interior spaces. Consequently, the number of indoor guiding systems is comparatively small [3,4,5].

Indoor guiding systems follow different approaches, ranging from simpler solutions, such as tactile maps and vocal guides delivered through headphones, to more complex solutions involving specialized personnel or robotic systems. Nonetheless, a general high-accuracy solution that can be configured according to people’s needs is still missing [6,7].

Regarding indoor positioning systems (IPS), one of the most popular technologies is the video camera [6]. In addition to cameras, other light-based solutions use light detection and ranging (LiDAR) sensors for navigation and for obstacle and object detection [8]. Both technologies have limitations: cameras are influenced by ambient light, while LiDAR has proven inefficient on reflective surfaces and clear glass. According to [9], non-camera-based systems are common in industrial settings and are also integrated into guiding systems for the visually impaired. Tag- and beacon-based technologies such as radio-frequency identification (RFID), near-field communication (NFC), Bluetooth Low Energy (BLE), and ultra-wideband (UWB) are also used in addition to cameras and light-based systems [9,10,11]. In recent years, passive UHF-RFID systems appear to be the most relevant RFID-based solutions for indoor vehicle localization thanks to their low cost, flexibility, scalability, and ease of implementation, making them a valuable competitor to classical IPS [12]. Unlike LiDAR or depth cameras, they do not require line of sight. Moreover, UHF-RFID offers long reading ranges, up to 10 m or more, and greater durability, including resistance to moisture and dust. Recent articles in leading journals show the relevance of UHF-RFID technology for localization in robotic systems [13,14,15]. In particular, [15] proposes a tag array-based localization method with a maximum error of 6 cm.

In general, the main limitations of current approaches relate to accuracy (position error), response time, availability, and scalability. Network-based IPSs can be either range-based or range-free. Range-based methods extract geometric information (distance or angle) from the signals of several wireless nodes and then combine these geometric constraints to determine the user’s position. The most common range-based solutions rely on the signal propagation time between transmitter and receiver (time-based approaches), the angle of arrival, or the received signal strength indicator (RSSI). The accuracy of time-based approaches is limited by the building structure, internal layout, and building location, all of which can affect signal propagation. Time-based solutions are also prone to errors caused by clock inaccuracies, time estimation errors, and synchronization discrepancies between clocks.
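As a minimal illustration of the range-based principle (not part of the Vision Voyager implementation, which relies on tag detection and odometry), the sketch below converts RSSI values into distances with the standard log-distance path-loss model and then intersects three distance circles by solving the resulting linear system. The reference power at 1 m (`rssi_at_1m`), the path-loss exponent `n`, and the anchor coordinates are illustrative assumptions.

```python
import math


def rssi_to_distance(rssi_dbm, rssi_at_1m=-45.0, n=2.0):
    """Invert the log-distance model RSSI = RSSI_1m - 10 * n * log10(d).

    rssi_at_1m and n are illustrative values, not calibrated parameters."""
    return 10 ** ((rssi_at_1m - rssi_dbm) / (10 * n))


def trilaterate(anchors, distances):
    """Estimate (x, y) from three anchors and their distances.

    Subtracting the first circle equation from the other two removes the
    quadratic terms and leaves a 2x2 linear system, solved with Cramer's rule."""
    (x1, y1), (x2, y2), (x3, y3) = anchors
    d1, d2, d3 = distances
    a11, a12 = 2 * (x2 - x1), 2 * (y2 - y1)
    a21, a22 = 2 * (x3 - x1), 2 * (y3 - y1)
    b1 = d1**2 - d2**2 + x2**2 - x1**2 + y2**2 - y1**2
    b2 = d1**2 - d3**2 + x3**2 - x1**2 + y3**2 - y1**2
    det = a11 * a22 - a12 * a21
    if abs(det) < 1e-12:
        raise ValueError("anchors are collinear")
    return ((b1 * a22 - a12 * b2) / det, (a11 * b2 - a21 * b1) / det)
```

Real RSSI readings are noisy, so practical systems use more than three anchors and a least-squares fit; the exact three-anchor solve only shows how the geometric constraints combine.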

Focusing on guiding systems for the visually impaired and considering the requirements regarding the accuracy and delivery time, hybrid approaches that combine different technologies, like computer vision and radio frequency, together with processing and information generation algorithms, are the ones that provide higher reliability compared to single technology-based solutions [6,16,17].

Regarding human–machine interaction, speech recognition has been gaining popularity in recent years [3] compared with keypads, buttons, vibrations, or touch screens. In [18], text-to-speech is also mentioned among the popular interfaces for indoor applications for the visually impaired.

More complex solutions that aim to replace specialized personnel or service dogs to guide visually impaired people are briefly analyzed next.

Efforts to introduce robotic guiding systems that replace service dogs are not new, as shown in [19]. In that work, the authors propose a robotic dog that uses 2D LiDAR for localization and a depth-RGB camera for tracking the guided person. One notable aspect that differentiates this solution from others is the leash attached to the robotic dog, which can vary its tension to signal obstacle avoidance maneuvers to the user. Although important, this form of communication is still limited.

An outdoor solution, a UAV for guiding blind runners, is presented in [20]. This study is worth mentioning because it shows that blind people can track and follow guiding objects even at high speeds. Other solutions that use LiDAR-based localization are presented in [21,22]; the latter also includes a video camera for detecting free seats. A guiding robotic system is proposed in [23]. This solution is general and does not target visually impaired people. Still, it is a complex system combining 3D LiDAR-based localization, voice recognition, obstacle avoidance, and vocal communication, which underlines people’s openness to human–robot interaction.

A comparison between the above-mentioned approaches is presented in Table 1.

Table 1.

Solution comparison.

Comparing the solutions, we can observe that the one integrating the most functionalities is presented in [23], which is not dedicated to blind people.

What differentiates our proposed solution from the others is that it also targets blind people, it does not need Internet connectivity, and it is smaller than the robotic dog. More importantly, it can register and replay predefined routes, and it offers accessibility not only to physical locations but also to the information associated with them, in a user-friendly manner, through a vocal interface.

The analysis of related work also highlights the limitations of current knowledge in the field. On the one hand, these are mainly related to noise, radio signal interference, synchronization, line of sight, processing, network bandwidth overhead, complexity in adding or removing network nodes, insufficient acquisition of visual information during displacement, signal strength affected by room layout, motion estimation errors, and the high complexity of tracking multiple targets. On the other hand, existing systems are relatively complex and expensive, and visually impaired people find them difficult to use. Starting from these gaps, we address the accessibility of accurate guiding systems for ordinary users, the need for a user-friendly and simple interface for visually impaired people, and the need for an adequate design in terms of size that also relieves the user of wearable components.

3. Problem Statement

This research addresses the problem of indoor guiding in public buildings with a limited number of locations of interest.

The chosen environment representation is similar to the one proposed in [24]: a two-dimensional (2D) representation of each floor of the building, consisting of a set of predefined locations:

$$L^j = \{l_1^j, l_2^j, \ldots, l_{n_j}^j\} \qquad (1)$$

To the set in Equation (1), we add an origin location (e.g., the access point for each level). The set of locations for floor level $j$ becomes the following:

$$L^j = \{l_0^j, l_1^j, \ldots, l_{n_j}^j\} \qquad (2)$$

where $l_0^j$ is the origin location, and $l_1^j, \ldots, l_{n_j}^j$ are the $n_j$ predefined locations of that floor.

Unlike in the model in [24], the physical position of each location, given by its coordinates, is known.

In order to ensure safe guidance to a location of interest in a public building, one problem is to determine the best routes between locations $l_i^j$ and $l_k^j$, with $i \neq k$, passing through a set of intermediary points $p_1, \ldots, p_m$, such that the trajectory between $l_i^j$ and $l_k^j$ provides a safe path for visually impaired people. Note that the path from $l_i^j$ to $l_k^j$ generally differs from the path from $l_k^j$ to $l_i^j$, for the following possible reasons: (a) some narrow hallways may be restricted and cannot be traversed in both directions, or (b) while in one direction the normal flow of people uses the left side of a path, in the opposite direction it uses the right side.

The other problem is to follow the predefined routes while avoiding obstacles that appear on the path, which can be split into two subproblems: (a) to determine the existence of a collision-free admissible path [25] between every current position of the guiding system and the next intermediary point of the predefined route and (b) if following the predefined path implies a collision, to determine an approximation of the path which avoids the obstacle while reaching the next intermediary point of the predefined path.

To address these problems, we designed a compact UGV guiding system capable of moving forward and backward and rotating through 360 degrees. We defined a coding system for the routes and a text file format for storing each recorded movement. To reconstruct the UGV’s trajectories, we rely on the recorded movements and on an odometry technique based on movement feedback from Hall sensors.
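A minimal dead-reckoning sketch of Hall-sensor odometry follows. It assumes that each rear-wheel Hall sensor produces a fixed number of pulses per revolution, that the direction of travel is known from the motor command (a single Hall sensor does not report direction), and that the wheels do not slip; `PULSES_PER_REV`, `WHEEL_DIAMETER_M`, and `TRACK_WIDTH_M` are hypothetical values, not measurements of the prototype. Because the rear wheels of a front-steered vehicle do not steer, the difference between the distances they roll gives the heading change.

```python
import math
from dataclasses import dataclass

# Hypothetical parameters; the real values must be measured on the robot.
PULSES_PER_REV = 20        # Hall-sensor pulses per rear-wheel revolution
WHEEL_DIAMETER_M = 0.066   # rear wheel diameter in meters
TRACK_WIDTH_M = 0.12       # distance between the two rear wheels in meters


@dataclass
class Pose:
    x: float = 0.0
    y: float = 0.0
    theta: float = 0.0  # heading in radians, counterclockwise positive


def pulses_to_meters(pulses):
    """Distance rolled by one wheel; pass negative counts when reversing."""
    return pulses / PULSES_PER_REV * math.pi * WHEEL_DIAMETER_M


def update_pose(pose, left_pulses, right_pulses):
    """One dead-reckoning step from the pulses counted since the previous update."""
    d_left = pulses_to_meters(left_pulses)
    d_right = pulses_to_meters(right_pulses)
    d_center = (d_left + d_right) / 2
    d_theta = (d_right - d_left) / TRACK_WIDTH_M
    mid_heading = pose.theta + d_theta / 2  # midpoint integration over the interval
    return Pose(pose.x + d_center * math.cos(mid_heading),
                pose.y + d_center * math.sin(mid_heading),
                pose.theta + d_theta)
```

Errors in dead reckoning accumulate over distance, which is why the position estimate is periodically corrected with RFID readings from known positions.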

The main hypotheses assumed in this paper are as follows:

1. The building is either on one floor or it has elevators for reaching each desired floor;

2. There is one entrance point for people who need guidance or one access point of interest for each floor;

3. The number of locations for which the guidance is needed is limited and predetermined.

3.1. Indoor Movement Scenarios

In the development of our project, we have considered a series of commonly used scenarios regarding the accessibility needs of an average person in a public building:

- One-floor buildings (e.g., pavilions) hosting events like exhibitions, student fairs, etc., in which there is a limited number of fixed locations of interest from which the user can choose one at a time;

- Multiple-floor buildings equipped with elevators, where each floor has a limited number of fixed locations of interest from which the user can choose one at a time;

- One or multiple-floor buildings equipped with an elevator, for which there is a predefined route passing through all locations of interest at a certain floor.

For each scenario, the strategy is to define a set of predefined locations as in Equation (2). In Scenarios 1 and 2, the system must pre-record a route for each pair of locations. Route recording can be carried out in various modes: manual, semi-automated, or fully automated. In this article, we discuss and analyze only the first two modes.

This choice is motivated by the fact that in the context of a guiding system for visually impaired individuals in indoor public buildings, the primary focus is on ensuring simplicity, reliability, and ease of use. We consider the most common and frequently used pathways, such as routes from the entrance to key destinations like specific offices, meeting rooms, or common areas. In high-traffic public buildings, these routes tend to remain consistent over time, with limited variation in the paths taken by users.

For the third scenario, if the locations are represented as nodes in a directed acyclic graph, the route can be found using any topological sorting algorithm on that graph (e.g., the one proposed in [26]). However, the algorithm for determining this route is out of the scope of this paper.
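For illustration, a sketch of Kahn’s topological sorting algorithm (a standard choice, not necessarily the algorithm of [26]) is shown below; the location names and precedence edges are hypothetical. An edge (u, v) means that u must be visited before v.

```python
from collections import deque


def topological_order(nodes, edges):
    """Kahn's algorithm: return an order of all nodes in which every directed
    edge (u, v) has u before v. Raises ValueError if the graph has a cycle."""
    successors = {n: [] for n in nodes}
    in_degree = {n: 0 for n in nodes}
    for u, v in edges:
        successors[u].append(v)
        in_degree[v] += 1
    # Start with locations that have no predecessor (e.g., the origin).
    queue = deque(n for n in nodes if in_degree[n] == 0)
    order = []
    while queue:
        node = queue.popleft()
        order.append(node)
        for nxt in successors[node]:
            in_degree[nxt] -= 1
            if in_degree[nxt] == 0:
                queue.append(nxt)
    if len(order) != len(nodes):
        raise ValueError("graph contains a cycle")
    return order


# Hypothetical floor: origin l0, then l1 and l2 in any order, then l3.
print(topological_order(["l0", "l1", "l2", "l3"],
                        [("l0", "l1"), ("l0", "l2"), ("l1", "l3"), ("l2", "l3")]))
```

A topological order only fixes the visiting sequence; the physical path between consecutive locations would still come from recorded route segments.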

3.2. Project Requirements

Starting from the identified needs presented in the previous sections, we developed a methodology and a prototype for an indoor guiding system called Vision Voyager, which will be described in the following sections. For the research presented in this paper, we followed a waterfall model of the system development cycle as a research methodology, starting with the requirements, continuing with the design and implementation, and ending with testing and analysis.

From the previously defined problem and corresponding scenarios, we derived a list of requirements:

- The system shall be able to record predefined routes and present them as options for the user;

- The system shall be able to detect locations and points of interest from an acceptable distance;

- The system shall be able to provide a vocal interface for presenting the command options and the necessary information to the user;

- The system shall be able to accept basic vocal commands for the selection of the route, pause, and stop;

- The system shall be affordable in terms of cost;

- The system shall be relatively small (i.e., it will not exceed the size of a guide dog).

4. Proposed Solution: Vision Voyager

Starting with the selected scenarios and the requirements defined in the previous section, we propose a modular system composed of an unmanned ground vehicle equipped with sensors for environment perception and route recording, a microphone, a headset for a vocal interface with the user, and a UHF-RFID reading module responsible for identifying predefined points of interest in a range of 15 cm to 5 m.

The UGV is responsible for leading the user along a pre-recorded route, and the vocal interface is responsible for interacting with the user, receiving commands, and providing the necessary information. The RFID reader detects the points of interest within the user’s range, and the system plays the information associated with them in audio format.

4.1. System Design

From the early stages of designing the architecture, modularity was a central factor. The entire system is designed around the separation of each important element. The final product is a system composed of modules that are as independent as possible and can be interconnected in various configurations. The need for such an approach comes primarily from the multitude of sensors and modules through which the Vision Voyager robot interacts with the environment. In addition, the fact that the robot is built to be paired with the UHF-RFID tag reader was another reason for choosing a modular design.

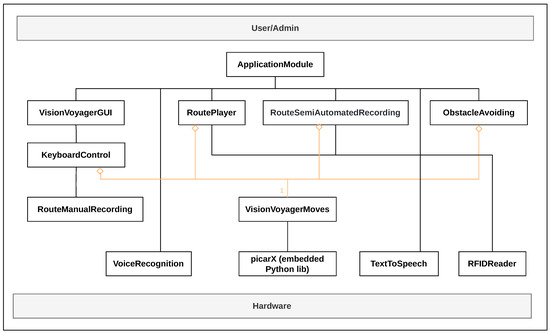

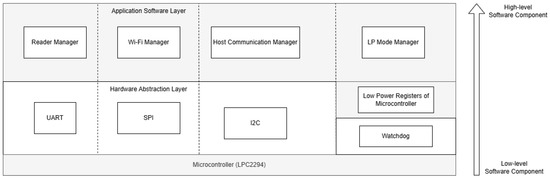

We propose a layered hardware architecture consisting of three main layers: the control layer, the mobility support layer, and the sensing layer. Regarding the software architecture, the design implies that each major functionality is contained in a module, and the modules are, therefore, organized in layers, as can be seen in Figure 1.

Figure 1.

Software architecture of the Vision Voyager robot.

In this layered approach, the lower levels are closer to the hardware side, while the higher levels are the ones that ensure the interaction with the administrator or with the system users. Through this representation, the dependence of the upper modules on the lower ones can be observed. From a top-down perspective, the layers are the following:

- The user interface layer;

- The application services layer;

- The intermediate processing layer;

- The hardware drivers layer.

4.2. Prototype Implementation

The hardware architecture follows the layered approach presented in the previous section. The first layer, which issues commands and processes all the data, is built around a Raspberry Pi 4, the central processing element of the system. The second layer, responsible for mobility support, is the SunFounder Robot HAT (Hardware Attached on Top) mounted on the Raspberry Pi 4. The HAT acts as an intermediary between the first and last layers: it is controlled by the Raspberry Pi and, in turn, controls the motors and sensors. The last layer is the sensing and action layer. It interacts with the environment and is composed of ultrasonic detection sensors, a grayscale sensor, two Hall sensors, and the headset, as well as motors, servomotors, and the most complex piece of this layer, the RFID reader.

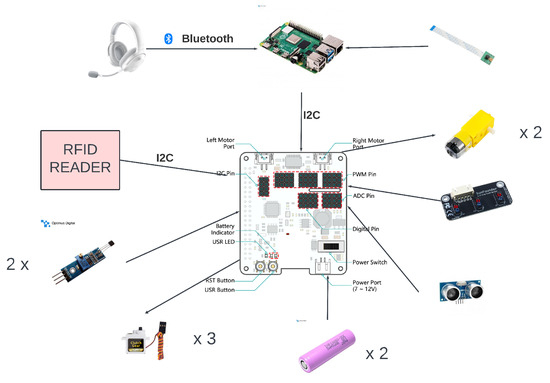

The hardware components of the proposed prototype, depicted in Figure 2, reflect this modular approach.

Figure 2.

Hardware components of the Vision Voyager robot.

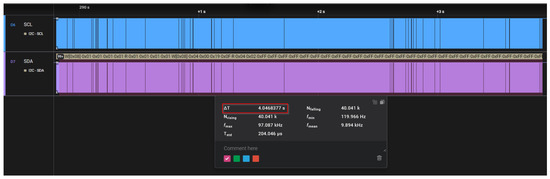

The system has two central control components: the Raspberry Pi and the HAT. They communicate through the I2C protocol, which also connects the RFID reader to the Raspberry Pi. Since I2C serves both the HAT and the RFID reader, the system can be described as one master (the Raspberry Pi) and two slave devices. The master communicates with each slave by addressing it separately.
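The single-master, two-slave arrangement can be illustrated with a small simulation in which the master selects a device purely by its 7-bit address. The addresses `HAT_ADDR` and `RFID_ADDR`, the `PING`/`MOTOR` payloads, and the `SimulatedI2CBus` class are hypothetical; on the Raspberry Pi, a real I2C bus library would take the place of the simulated bus.

```python
# Hypothetical 7-bit addresses; the real values depend on the HAT and RFID board firmware.
HAT_ADDR = 0x14
RFID_ADDR = 0x42


class SimulatedI2CBus:
    """Stand-in for an I2C bus with a single master: every transfer names its target."""

    def __init__(self):
        self._slaves = {}

    def attach(self, address, handler):
        # 0x00-0x07 and 0x78-0x7F are reserved in the 7-bit I2C address space.
        if not 0x08 <= address <= 0x77:
            raise ValueError(f"invalid 7-bit address 0x{address:02x}")
        self._slaves[address] = handler

    def transfer(self, address, payload):
        """The master writes payload to one slave and reads back its response."""
        if address not in self._slaves:
            raise OSError(f"no ACK from address 0x{address:02x}")
        return self._slaves[address](payload)


bus = SimulatedI2CBus()
bus.attach(HAT_ADDR, lambda data: b"OK" if data.startswith(b"MOTOR") else b"ERR")
bus.attach(RFID_ADDR, lambda data: b"PONG" if data == b"PING" else b"ERR")
print(bus.transfer(RFID_ADDR, b"PING"))
```

The two slaves never talk to each other directly; any exchange between the HAT and the RFID reader passes through the master.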

For the robotic hardware controlled by the Raspberry Pi 4 Model B, we chose the PiCar-X kit from SunFounder, as it includes some of the components needed by the proposed system, namely a camera, motors and wheels for movement, servomotors for steering and for the camera’s two-axis movement, an ultrasonic sensor, a grayscale sensor, and a multifunctional expansion board, the Robot HAT. In addition to the components included in the kit, we added two Hall effect sensors to monitor the rotation of the rear wheels.

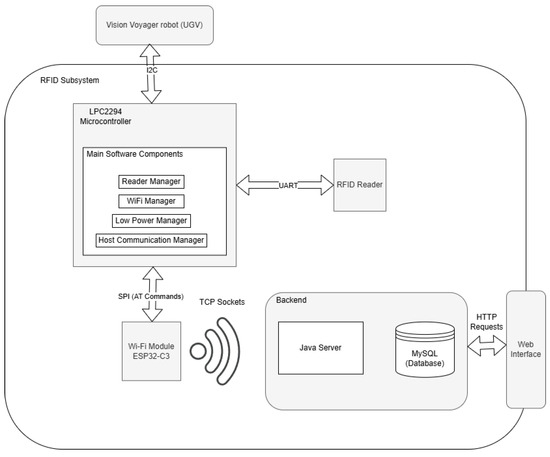

Another important component of the proposed solution is a custom-made RFID subsystem, shown in Figure 3. The subsystem is responsible for detecting the preregistered passive RFID tags that mark locations, identifying them, and retrieving the associated location information from the database. The RFID subsystem is composed of the following main circuits:

Figure 3.

RFID subsystem as a concept.

- Wi-Fi module: ESP32-C3;

- UHF-RFID reader: M6e-Nano;

- External antenna;

- Microcontroller: Philips LPC2294;

- Host system: Raspberry Pi 4.

The UHF-RFID module supports Gen2 UHF passive tags, operates in the 865–927 MHz band, and has a reading range of 30–60 cm with its default antenna. To obtain a reading range of several meters, we configured the reading module and attached an external antenna. Given the small dimensions of the module, we chose a small antenna that operates within the frequency range required by the RFID tags and provides a reading range of 2–3 m, which is in agreement with the project’s specifications.

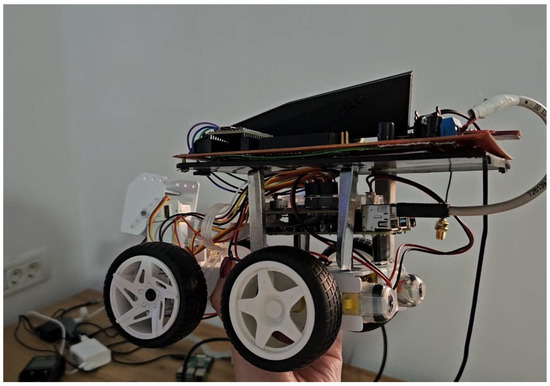

The final prototype is depicted in Figure 4.

Figure 4.

Vision Voyager: robot prototype.

4.3. Methodology

The proposed methodology for Vision Voyager consists of two main steps:

- Route registration mode;

- Route playback and guiding mode.

Route registration takes place offline, before the beneficiaries actually use the system. It is carried out by an administrator when the building is empty (e.g., outside working hours). Routes can be recorded in two different ways, manual recording and a path-following mode, described in more detail in the following paragraphs. We chose this approach instead of a fully automated path search because it covers the indoor movement scenarios presented in Section 3.1 and because we want control over the routes a visitor should follow, in order to avoid possible accidents, crowded paths, and areas that are not of direct interest to visually impaired visitors.

The route playback and guiding mode implies the following scenario. Each user starts from an origin location, as defined in Section 3. At each origin location, there is a Vision Voyager robot with pre-recorded routes from that origin to each predefined location of the floor. Using vocal commands, a user turns on the robot and chooses one destination from the options presented by the vocal menu. The system plays back the route while vocally guiding the user with repetitive beacon-like sounds together with vocal instructions (e.g., turn left, go forward, and stop). During route playback and guiding, the system is also responsible for detecting the points of interest and for playing back the information related to each one.

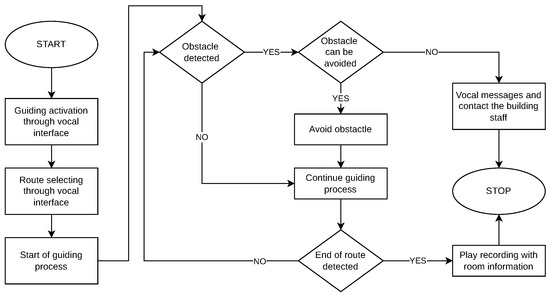

4.3.1. Route Registration and Playback

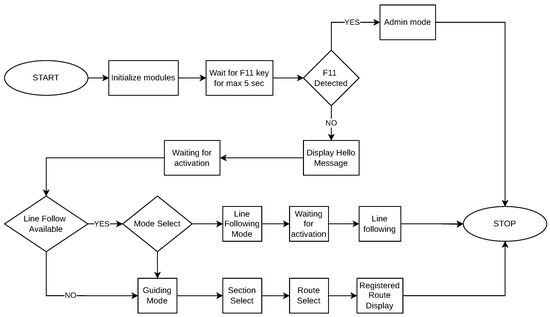

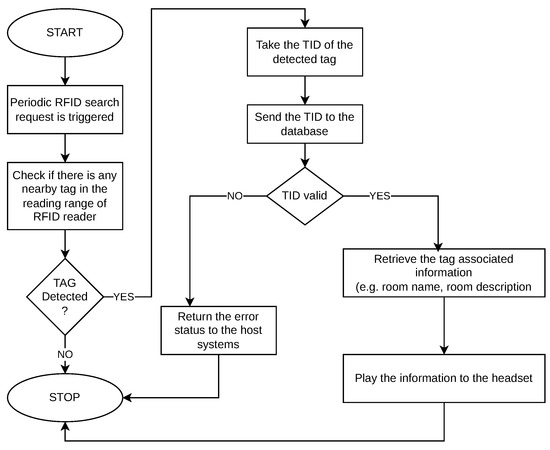

As mentioned in the previous sections, the system relies on a set of predefined routes. Route recording is responsible for capturing and storing the movements required to follow that fixed route. The process of registering a new route into the menu and recording it is depicted in Figure 5.

Figure 5.

System flow chart.

For this process, we implemented two alternatives: manual recording and line-following modes. The first corresponds to the RouteManualRecording software module and uses a keyboard interface through which an administrator guides the UGV along the chosen path from the origin to the destination point. Each maneuver is recorded into the text file corresponding to that route. Each route is stored in its own text file, so adding a new route to the system adds a new file containing the corresponding recording. The second method corresponds to RouteSemiAutomatedRecording; in this case, black tape is stuck to the floor to mark the route between two points of interest. In line-following mode, the UGV follows the tape and records each maneuver into a text file, similarly to the previous case but with some differences described in the next paragraphs.

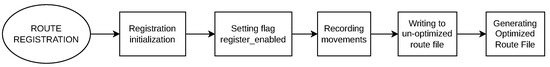

The process for manual route recording is described in Figure 6.

Figure 6.

Route registration process flow chart.

Each action is carried out with the support of the modules in Figure 1, briefly described next. Manual recording handles the administrator’s keyboard input and relies on a single external library, curses.

Recording a followed line differs from the process in Figure 6 because of sensor imprecision: the recording must filter out the swaying movements over the line and retain only the changes of direction.
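One way to filter the swaying movements is sketched below, assuming that the line follower logs a sequence of (steering angle, duration) samples. Small or very short steering corrections are treated as sway and folded into a straight segment, and consecutive samples with the same angle are fused, so that only direction changes remain. The thresholds `threshold_deg` and `min_duration_ms` are hypothetical tuning parameters.

```python
def filter_sway(samples, threshold_deg=10, min_duration_ms=300):
    """Collapse a raw line-following log into direction-change maneuvers.

    samples: list of (steering_angle_deg, duration_ms) in recording order.
    Corrections smaller than threshold_deg or shorter than min_duration_ms
    are treated as sway and recorded as straight (0 degree) motion."""
    maneuvers = []
    for angle, duration in samples:
        if abs(angle) < threshold_deg or duration < min_duration_ms:
            angle = 0
        if maneuvers and maneuvers[-1][0] == angle:
            # Same direction as the previous maneuver: extend it instead of adding a new one.
            maneuvers[-1] = (angle, maneuvers[-1][1] + duration)
        else:
            maneuvers.append((angle, duration))
    return maneuvers


raw = [(0, 500), (5, 100), (-4, 100), (0, 500), (-20, 2000), (0, 1000)]
print(filter_sway(raw))
```

The total duration is preserved, but a genuine turn shorter than `min_duration_ms` would also be absorbed, so the thresholds would have to be tuned on recorded runs.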

The line-following recording is based on the grayscale hardware module, which consists of three phototransistors. These phototransistors detect differences in light intensity to identify the line’s position and are also used to determine whether the robot is near an edge (e.g., in the proximity of descending stairs). By analyzing the readings from the phototransistors, the module can adjust the robot’s path to maintain a consistent trajectory and ensure safety.
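The interpretation of the three phototransistor readings can be sketched as follows. The sketch assumes that a dark line yields lower readings than the floor and that very low readings on all channels indicate a missing floor (e.g., a drop-off); both thresholds and the steering angles are hypothetical. The sign convention, with negative angles steering left, follows the `set_dir_servo_angle(-20)` example in Section 4.3.1.

```python
LINE_THRESHOLD = 500   # hypothetical: readings below this are taken as "on the dark line"
CLIFF_THRESHOLD = 100  # hypothetical: all channels below this suggest a drop-off

# Negative angles steer left, following the set_dir_servo_angle(-20) convention.
STEERING_FOR_STATE = {"centered": 0, "line_left": -20, "line_right": 20}


def classify_grayscale(readings):
    """Classify (left, middle, right) phototransistor readings."""
    if len(readings) != 3:
        raise ValueError("expected three readings: left, middle, right")
    if all(value < CLIFF_THRESHOLD for value in readings):
        return "cliff"  # stop: the floor may end here (e.g., descending stairs)
    left, middle, right = (value < LINE_THRESHOLD for value in readings)
    if left and not right:
        return "line_left"
    if right and not left:
        return "line_right"
    if middle:
        return "centered"
    return "ambiguous" if left else "line_lost"
```

States without a steering entry ("cliff", "line_lost", "ambiguous") would make the robot stop rather than guess a direction.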

Obstacle avoidance is implemented by the obstacle avoidance module and relies on an ultrasonic sensor. It is active both during line following and during route guiding.
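A minimal sketch of the decision taken on each ultrasonic reading is shown below. The distance thresholds and speeds are hypothetical, and negative readings (if the driver uses them to report a failed measurement) are treated conservatively as an obstacle. The sketch covers only detection and speed control; computing a detour that rejoins the next intermediary point, subproblem (b) in Section 3, is not modeled.

```python
STOP_DISTANCE_CM = 20   # hypothetical: closer than this, stop and warn the user
SLOW_DISTANCE_CM = 50   # hypothetical: closer than this, halve the speed


def avoidance_action(distance_cm, cruise_speed=30):
    """Map one ultrasonic reading to (action, speed).

    Negative error values also fall below STOP_DISTANCE_CM and are
    therefore handled the same way as a close obstacle."""
    if distance_cm < STOP_DISTANCE_CM:
        return ("stop", 0)
    if distance_cm < SLOW_DISTANCE_CM:
        return ("slow", cruise_speed // 2)
    return ("go", cruise_speed)


for reading in (120, 35, 12, -1):
    print(reading, avoidance_action(reading))
```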

The robot’s movements are managed by the VisionVoyagerMoves module, which is also responsible for reading its sensors. Its implementation is based primarily on the external library pybind11, a user-friendly and highly flexible C++ library that allows developers to access functionalities and objects defined in Python (https://www.python.org/) from C++ and vice versa.

The purpose of this wrapper module is to abstract and facilitate the use of functionalities already provided by the picarx Python library, offered by SunFounder, which manages the movement and reading of hardware elements. It provides a higher level of abstraction, making interaction with the hardware easier without needing to understand all the internal details of the picarx library.
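The actual VisionVoyagerMoves module is written in C++ and reaches the picarx library through pybind11; the Python sketch below only illustrates the abstraction idea. It assumes a backend object exposing `set_dir_servo_angle`, `forward`, and `stop` (names taken from the SunFounder `Picarx` class as we understand it; verify them against the installed library version), and it includes a recording fake backend so that it runs without hardware. The class names `VisionVoyagerMovesSketch` and `RecordingBackend` and their methods are hypothetical.

```python
import time


class VisionVoyagerMovesSketch:
    """Hypothetical high-level movement API over a picarx-like backend object."""

    def __init__(self, backend, speed=30, sleep=time.sleep):
        self._car = backend
        self._speed = speed
        self._sleep = sleep  # injectable so the sketch can run without real waiting

    def _drive(self, steering_angle, duration_ms):
        self._car.set_dir_servo_angle(steering_angle)
        self._car.forward(self._speed)
        self._sleep(duration_ms / 1000)
        self._car.stop()

    def go_forward(self, duration_ms):
        self._drive(0, duration_ms)

    def turn_left(self, duration_ms, angle=20):
        # Negative angle steers left, as in set_dir_servo_angle(-20).
        self._drive(-angle, duration_ms)

    def turn_right(self, duration_ms, angle=20):
        self._drive(angle, duration_ms)


class RecordingBackend:
    """Fake backend that records calls instead of driving motors."""

    def __init__(self):
        self.calls = []

    def set_dir_servo_angle(self, angle):
        self.calls.append(("steer", angle))

    def forward(self, speed):
        self.calls.append(("forward", speed))

    def stop(self):
        self.calls.append(("stop",))
```

Callers such as the route playback code then deal only with maneuvers like `turn_left(2000)` rather than with servo angles and motor commands.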

Another module involved in route selection is the VoiceRecognition module, which handles voice commands. We chose PocketSphinx as its foundation: a large-vocabulary speech recognition engine from Carnegie Mellon University in Pittsburgh, Pennsylvania, and one of the most popular voice recognition engines compatible with C++, which does not require an Internet connection.
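Once the recognizer returns a text hypothesis, it still has to be mapped to a command. The sketch below does this with simple whole-word matching over the commands listed in the requirements (route selection, pause, and stop); it does not call PocketSphinx itself, and the function name and route names are hypothetical.

```python
def parse_command(hypothesis, route_names):
    """Map a recognized utterance to (command, argument).

    Stop and pause take priority over route selection so that a safety
    command is never misread as a destination."""
    words = hypothesis.lower().split()
    if "stop" in words:
        return ("stop", None)
    if "pause" in words:
        return ("pause", None)
    padded = " " + " ".join(words) + " "
    for name in route_names:
        # Match whole words only, e.g. "desk" must not match inside "desktop".
        if " " + name.lower() + " " in padded:
            return ("select_route", name)
    return ("unknown", None)


routes = ["Secretary office", "Information desk"]
print(parse_command("go to the secretary office", routes))
```

An "unknown" result would typically trigger the vocal menu to repeat the available options.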

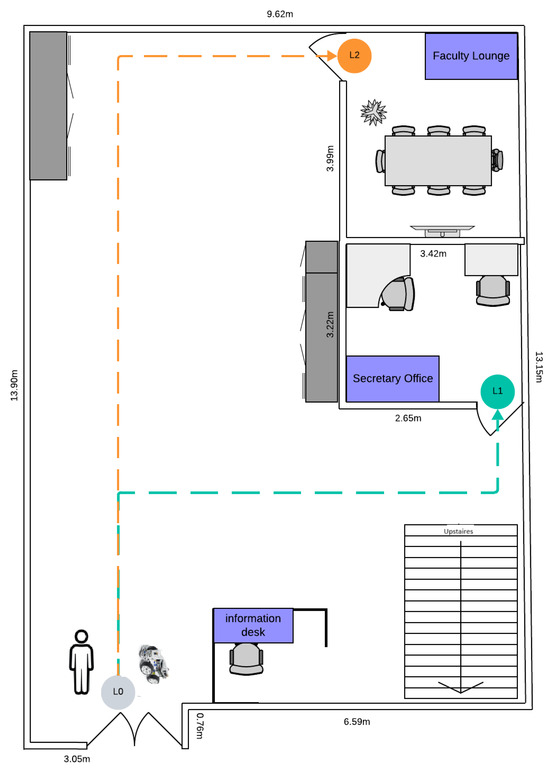

Regarding the recording of a route like the one in Figure 7, the recording will be stored in the database in a text format, as shown in the upper-right corner.

Figure 7.

Example of route registration.

Here, the command “set_dir_servo_angle(-20)” sets the wheel steering direction to the left, while the line following it means that the current command is maintained for 2000 ms.
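The exact file syntax is only partially visible in Figure 7, so the parser below assumes a hypothetical format in which every command line of the form `name(argument)` is followed by a line containing only a duration in milliseconds, as in the `set_dir_servo_angle(-20)` / 2000 ms example. It turns a recorded route into (command, argument, duration) steps that a playback loop could execute; the `forward(30)` and `stop()` lines in the example are also hypothetical.

```python
import re

# name(optional signed integer), e.g. set_dir_servo_angle(-20) or stop()
COMMAND_RE = re.compile(r"^(\w+)\((-?\d+)?\)$")


def parse_route(text):
    """Turn a recorded route into a list of (command, argument, duration_ms)."""
    lines = [line.strip() for line in text.splitlines() if line.strip()]
    if len(lines) % 2:
        raise ValueError("every command must be followed by a duration line")
    steps = []
    for cmd_line, duration_line in zip(lines[0::2], lines[1::2]):
        match = COMMAND_RE.match(cmd_line)
        if not match:
            raise ValueError(f"unrecognized command: {cmd_line!r}")
        name, arg = match.group(1), match.group(2)
        steps.append((name, int(arg) if arg is not None else None, int(duration_line)))
    return steps


recording = "set_dir_servo_angle(-20)\n2000\nforward(30)\n1500\nstop()\n0\n"
print(parse_route(recording))
```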

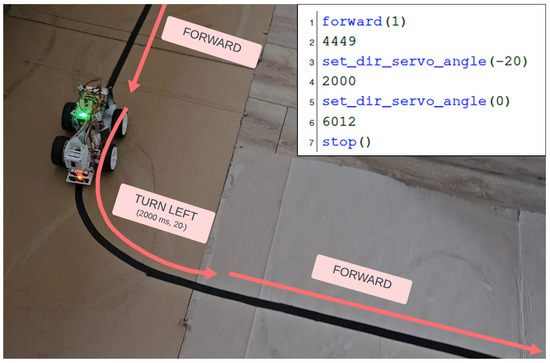

4.3.2. Points of Interest Detection

The detection of predefined locations and points of interest is provided by the UHF-RFID reader module from Figure 1. While the UGV moves along a pre-recorded route, the passive RFID tags of different locations and points of interest enter the reading range of the RFID module. When a pre-registered RFID tag is detected, it is looked up in the local database, and all the information associated with it is retrieved. The text-to-speech module then converts the retrieved text into audio, which is played in the user’s headset. This scenario is presented in the UML activity diagram in Figure 8.

Figure 8.

Point-of-interest detection nominal scenario.

The part responsible for text-to-speech conversion is based on the use of eSpeak software (https://espeak.sourceforge.net/), an open-source, compact software known for its space efficiency, making it suitable for inclusion in resource-limited systems.

The RFID module, running on the microcontroller, implements the “Superloop” concept and is divided into four software components, each of them being responsible for handling a hardware resource (e.g., UART and I2C). The software components depicted in Figure 9 and defined at the subsystem level are as follows:

Figure 9.

Software architecture of the RFID subsystem.

- Reader manager;

- Wi-Fi manager;

- Host communication manager;

- Low power mode manager (depicted as LP Mode Manager in the Figure 9).

The RFID reader detects a nearby passive tag and sends its TID (tag identifier) to the database. Each TID is associated with the following information: room name, room description, and a Boolean indicating whether the room is a terminal node of the route to which it belongs. All this information is returned to the UGV as text, which can then be processed by a text-to-speech mechanism.
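The lookup performed after a detection can be sketched with Python’s built-in `sqlite3` module and an in-memory table. The schema mirrors the three fields listed above (name, description, terminal flag), but the table layout, the example identifiers such as `TID-0001`, and the room data are hypothetical; in the prototype, the database is reached through the Wi-Fi module rather than queried directly.

```python
import sqlite3


def create_demo_db():
    """In-memory stand-in for the location database (hypothetical schema and data)."""
    db = sqlite3.connect(":memory:")
    db.execute(
        "CREATE TABLE rooms (tid TEXT PRIMARY KEY, name TEXT, description TEXT, is_terminal INTEGER)"
    )
    db.executemany(
        "INSERT INTO rooms VALUES (?, ?, ?, ?)",
        [
            ("TID-0001", "Secretary's office", "Open from 9:00 to 13:00.", 1),
            ("TID-0002", "Information desk", "Maps and assistance are available here.", 0),
        ],
    )
    return db


def announcement_for_tag(db, tid):
    """Return (text for the text-to-speech module, is_terminal), or None for unknown tags."""
    row = db.execute(
        "SELECT name, description, is_terminal FROM rooms WHERE tid = ?", (tid,)
    ).fetchone()
    if row is None:
        return None  # tag not pre-registered: ignore it
    name, description, is_terminal = row
    return (f"{name}. {description}", bool(is_terminal))
```

The terminal flag lets the guiding logic decide whether to announce arrival or continue to the next segment of the route.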

On a more detailed level, the implemented commands for the RFID subsystem are as follows:

- Search for new nearby rooms;

- Check if the subsystem is initialized;

- Send a ping signal to the subsystem.

Once the UGV host system makes an external request to detect new nearby rooms, the “Host Communication Manager” software component is woken up. This component handles communication with the external environment. While a request is in progress on the RFID subsystem’s side, “REQUEST PENDING” is sent as a response until the request is finally accepted or declined and this outcome is acknowledged by the host system.
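The pending-reply protocol can be modeled as a small state machine, sketched below. The command strings `SEARCH_ROOMS`, `IS_INITIALIZED`, `PING`, and `ACK`, the two-tick scan duration, and the reply values are hypothetical stand-ins for the three commands listed above and the acknowledgment step; `tick()` plays the role of the reader job advancing in the superloop.

```python
from enum import Enum


class Reply(Enum):
    PENDING = "REQUEST PENDING"
    DONE = "DONE"
    DECLINED = "DECLINED"


class HostCommManagerSketch:
    """Hypothetical model: a room search stays PENDING until the reader job
    finishes; the final reply is repeated until the host sends ACK."""

    def __init__(self, scan, ticks_needed=2, initialized=True):
        self._scan = scan                  # callable returning the detected rooms
        self._ticks_needed = ticks_needed  # simulated duration of a reader job
        self.initialized = initialized
        self._remaining = None             # None: no search in progress
        self._final = None                 # final reply awaiting acknowledgment

    def on_request(self, command):
        if command == "PING":
            return (Reply.DONE, "PONG")
        if command == "IS_INITIALIZED":
            return (Reply.DONE, self.initialized)
        if command == "ACK":
            self._final = None             # host confirmed it received the final reply
            return (Reply.DONE, None)
        if command == "SEARCH_ROOMS":
            if self._final is not None:
                return self._final
            if not self.initialized:
                return (Reply.DECLINED, None)
            if self._remaining is None:
                self._remaining = self._ticks_needed
            return (Reply.PENDING, None)
        return (Reply.DECLINED, None)      # unknown command

    def tick(self):
        """One superloop pass of the simulated reader job."""
        if self._remaining is None:
            return
        self._remaining -= 1
        if self._remaining <= 0:
            self._final = (Reply.DONE, self._scan())
            self._remaining = None
```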

The “Low Power Mode Manager” component is responsible for putting the whole microcontroller-based system into sleep mode to reduce power consumption. As long as at least one software component has a job to process, the whole system stays awake; once all components have finished their jobs, the microcontroller enters sleep mode.
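The superloop and the sleep decision of the Low Power Mode Manager can be simulated as follows. Each hypothetical component counts its pending jobs; one pass of the loop lets every component process at most one job, and the function reports the pass after which all components are idle, i.e., the point where the microcontroller would enter sleep mode. Wake-up on interrupts and the watchdog are not modeled.

```python
class ComponentSketch:
    """Hypothetical superloop component holding a count of pending jobs."""

    def __init__(self, name, pending_jobs=0):
        self.name = name
        self.pending_jobs = pending_jobs

    def has_work(self):
        return self.pending_jobs > 0

    def step(self):
        # Non-blocking: process at most one job per pass, then return to the loop.
        if self.pending_jobs > 0:
            self.pending_jobs -= 1


def run_superloop(components, max_passes=1000):
    """Call every component once per pass; return the pass after which all are idle,
    which is where the low-power manager would put the microcontroller to sleep."""
    for pass_number in range(1, max_passes + 1):
        for component in components:
            component.step()
        if not any(component.has_work() for component in components):
            return pass_number
    return None  # still busy after max_passes


components = [ComponentSketch("reader", 3), ComponentSketch("wifi", 1), ComponentSketch("host", 0)]
print(run_superloop(components))
```

Because no component blocks, a single slow job (here the reader) keeps the system awake only as long as it actually has work.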

“Reader Manager” is responsible for commanding the hardware RFID reader and communicating with the “Wi-Fi Manager”, which acts as a bridge between the microcontroller and the remote database. The communication between the microcontroller and the database is carried out using sockets, and no actual Internet connection is required.

Recovery algorithms are also implemented in case the communication between any of the hardware modules fails. If the communication cannot be recovered, the whole subsystem enters a “safe state”, where new requests will automatically be declined. Watchdog protection is implemented and well-integrated with the sleep mode.
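A recovery policy of this kind might look like the following sketch, assuming a fixed number of attempts per request with a link reset between attempts, after which the subsystem latches into the safe state. The `LinkSupervisor` class, the attempt count, and the use of `OSError` to signal a communication failure are all hypothetical.

```python
from typing import Callable


class LinkSupervisor:
    """Hypothetical recovery wrapper around one inter-module link (e.g., UART to the reader)."""

    def __init__(self, max_attempts: int = 3) -> None:
        self.max_attempts = max_attempts
        self.safe_state = False

    def request(self, operation: Callable[[], str], reset_link: Callable[[], None]) -> str:
        if self.safe_state:
            return "DECLINED"  # in the safe state, every new request is declined
        for _ in range(self.max_attempts):
            try:
                return operation()
            except OSError:
                reset_link()  # recovery step, e.g., re-initialize the peripheral
        self.safe_state = True  # communication could not be recovered
        return "DECLINED"
```

Latching the safe state, rather than retrying indefinitely, keeps the host's view of the subsystem predictable: once recovery fails, every later request is answered with a decline.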

4.3.3. Use Case Example

Figure 10 illustrates how the UGV guidance system is used. The starting point is marked in this figure. At this point, a staff member stationed at the information desk connects the user to the system and activates it through the vocal interface. After activation, the user selects a route, which starts the navigation process.

Figure 10.

Use case for Vision Voyager.

In the example shown in Figure 10, the robot indicates the direction of movement through voice messages while avoiding obstacles along the route. Upon reaching one of the RFID tags marked in the figure, the robot delivers voice messages with information about the corresponding room, such as its schedule or available services. Furthermore, when the robot reaches certain tags marked in the figure, it guides the user to the next destination upon reactivation, restarting the process outlined in Figure 11.

Figure 11.

Use case for Vision Voyager.

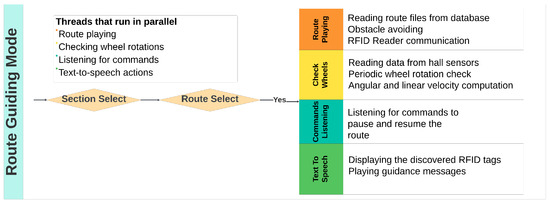

5. Testing Hypotheses and Experiments

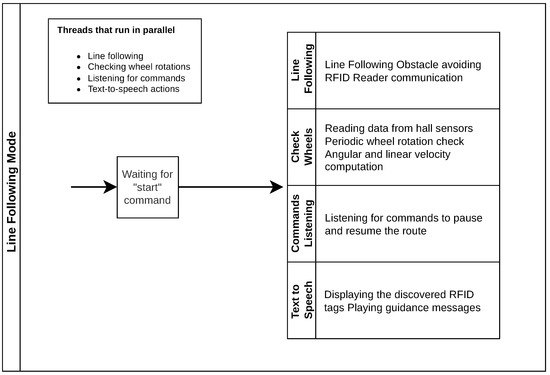

To test, validate, and measure the performance of the proposed system, we used two operating modes: “Route-Guiding Mode” (Figure 12), in which the robot plays back pre-recorded routes, and “Line-Following Mode” (Figure 13), in which the robot follows a line and, based on the line’s direction, guides a visually impaired person along a path. The experiments were designed to measure the system’s performance under different conditions and to evaluate its accuracy. The performance metrics were accuracy (of the guiding instructions, object detection, and voice recognition) and path-following precision, expressed as trajectory deviation.
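The line-following mode can be illustrated with a minimal sketch, assuming three downward-facing grayscale sensors (left, center, right) whose readings drop below a threshold over the dark line. The threshold value, the action names, and the spoken hints are hypothetical; the prototype's actual controller may differ.

```python
from typing import Tuple

LINE_THRESHOLD = 500  # hypothetical: readings below this value are "on the dark line"


def line_step(readings: Tuple[int, int, int]) -> Tuple[str, str]:
    """Map (left, center, right) grayscale readings to a steering action and a spoken hint."""
    left, center, right = (r < LINE_THRESHOLD for r in readings)
    if center and not (left or right):
        return "forward", "Go straight."
    if left and not right:
        return "steer_left", "Turn slightly left."
    if right and not left:
        return "steer_right", "Turn slightly right."
    if not (left or center or right):
        return "stop", "Please wait."  # line not seen by any sensor
    return "forward", "Go straight."  # all sensors dark, e.g., a crossing: keep heading
```

In practice, a guiding robot would voice a hint only when the action changes, not on every control cycle.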

Figure 12.

Route-guiding operating mode.

Figure 13.

Line-following operating mode.

In both operating modes, the robot performs a multitude of actions, many of which run in parallel threads. Numerous tests were conducted on the system, ranging from basic feature tests, such as text-to-speech, voice recognition, or line following, to more complex guidance scenarios in which the robot records and executes a route.

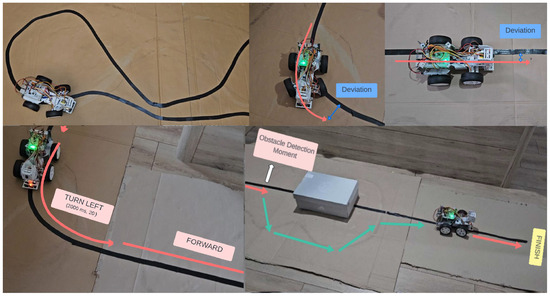

5.1. Line-Follower Experimental Test

The first scenario, depicted in Figure 14, is considerably more complex and harder to follow than a typical real-life scenario, in which the line would mostly be straight, with a few smooth curves. Since the robot successfully follows a line with relatively tight curves, this gives some confidence about its behavior in real-life use cases.

Figure 14.

Experimental tests for the robot.

5.2. Obstacle-Avoiding Experimental Test

The obstacle-avoidance feature was tested in both the stored-route playback mode and the line-following mode. A representative obstacle-avoidance test scenario is shown in the bottom-right of Figure 14, where the robot locates the line again after avoiding the obstacle. This type of scenario provides the most thorough test of this functionality, since the robot must both avoid the obstacle and reacquire the line.

5.3. Route Recording and Playback Testing

Route playback testing is closely tied to route recording, since playback is based on the file produced during recording. To test recording and playback, two scenarios are distinguished: the first records a route using the line-follower module, while the second records keyboard commands entered in admin mode. The first case is illustrated in Figure 14: a line-based recording is replayed from the same starting point used during recording, and the endpoint where the robot stopped is then checked. The observed deviations between the line-following run and its playback were under 10% in most cases.
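The recording and playback flow can be sketched as follows, assuming a route is stored as a list of (command, duration) steps derived from timestamped commands. The JSON layout, the function names, and the `execute` callback that would drive the motors are hypothetical, not the prototype's actual file format.

```python
import json
from typing import Callable, List, Tuple

Step = Tuple[str, float]  # (command, duration in seconds)


def record(events: List[Tuple[float, str]], end_time: float) -> List[Step]:
    """Convert timestamped commands (e.g., admin key presses) into (command, duration) steps."""
    steps: List[Step] = []
    for i, (t, cmd) in enumerate(events):
        t_next = events[i + 1][0] if i + 1 < len(events) else end_time
        steps.append((cmd, round(t_next - t, 3)))
    return steps


def dumps_route(steps: List[Step]) -> str:
    """Serialize a route to JSON (the layout is an assumption)."""
    return json.dumps([{"cmd": c, "duration_s": d} for c, d in steps])


def loads_route(text: str) -> List[Step]:
    """Parse a route serialized by dumps_route."""
    return [(s["cmd"], s["duration_s"]) for s in json.loads(text)]


def play(steps: List[Step], execute: Callable[[str, float], None]) -> None:
    """Replay each command for its recorded duration; execute() would drive the motors."""
    for cmd, duration in steps:
        execute(cmd, duration)
```

Because playback is open-loop in time, surface-dependent wheel speed directly shifts where the robot stops, which is one plausible source of the endpoint deviations reported here.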

5.4. Results

At the system level, the usability test focused on accuracy and voice command clarity. The test scenario consisted of navigating from a predefined origin point (e.g., the building entrance) to a user-selected room by following the audio instructions. We focused on verifying that the order and timing of the guidance commands were properly synchronized so that the user was directed to the chosen destination. The success criterion for this test is the correct synchronization of guidance commands, which can be divided into two smaller, measurable criteria: guidance message validity and guidance message timing. Message validity refers to the correct order of movements, which must be accurate in every case, even when the robot has to deviate from the planned route to avoid an obstacle. The second criterion, the timing of each guidance message, is subject to error, since factors such as the speed at which the robot’s wheels rotate on different surfaces can cause slight disruptions.

To assess overall performance, we consolidated the data from all the tests in Table 2.

Table 2.

Performance evaluation.

The route recording tests covered the two modes proposed in this paper: the manual mode, with a deviation below 2%, and the line-following mode, with a slightly higher deviation. Here, deviation is the distance between the point where the robot stopped during playback and the exact spot where it stopped during recording, expressed as a percentage of the total route length; the robot stopped within 2% (manual mode) and 7% (line-following mode) of the route length from that spot.
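The deviation metric can be made concrete with a short sketch, assuming the stop positions and route waypoints are measured in a common floor coordinate frame (e.g., in meters). The function names are hypothetical.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def route_length(waypoints: Sequence[Point]) -> float:
    """Total length of a polyline route through the given waypoints."""
    return sum(math.dist(a, b) for a, b in zip(waypoints, waypoints[1:]))


def endpoint_deviation_pct(recorded_stop: Point, playback_stop: Point, total_length: float) -> float:
    """Distance between the two stop points as a percentage of the total route length."""
    return 100.0 * math.dist(recorded_stop, playback_stop) / total_length
```

For example, on a 10 m route with a playback stop 0.5 m from the recorded stop, the deviation is 5%.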

The guidance message validity test verified the accuracy of recording and playing back the command sequence; 100% means that all the commands recorded and played back for the user were valid. The guidance message timing test concerns when the navigation commands are given to the user: a 5–10% deviation indicates that commands are delivered 5% to 10% earlier or later than planned, owing to delays or variations in how the processing threads are executed.
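Both guidance-message metrics can be expressed in a small sketch, assuming validity is scored position by position against the recorded command sequence and timing deviation is measured relative to the planned delivery time since the start of the route. The function names and the scoring rule are hypothetical.

```python
from typing import Sequence


def sequence_validity_pct(recorded: Sequence[str], played: Sequence[str]) -> float:
    """Share of positions where the played command matches the recorded one (100% = all valid)."""
    if not recorded and not played:
        return 100.0
    matches = sum(r == p for r, p in zip(recorded, played))
    return 100.0 * matches / max(len(recorded), len(played))


def timing_deviation_pct(planned_s: float, actual_s: float) -> float:
    """Signed timing error relative to the planned time: positive is late, negative is early."""
    return 100.0 * (actual_s - planned_s) / planned_s
```

Dividing by the longer of the two sequences penalizes both missing and extra commands.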

The line-following accuracy tests show that the robot never lost the line in any of the line-following runs.

The wheel rotation problem detection accuracy test shows that the robot always detects when one of the rear wheels stops or rotates slower than planned. Similarly, the edge detection accuracy test shows that the robot detects all instances in which it reaches an edge, such as a staircase or a change in elevation.

The obstacle detection tests show that the robot detects obstacles in most cases, but occasionally (in less than 5% of cases) it detects them too late to react because of how the threads interact. An obstacle-avoidance accuracy > 60% means that in more than three out of five cases, the robot successfully avoids the obstacle. However, it sometimes gets stuck after detecting a new obstacle, for example, when it is too close to a wall while performing the avoidance maneuver. The deviation means that the robot ends up 5% to 10% farther than the point it would have reached without obstacles. Both this deviation and the failures to avoid obstacles are also caused by the robot’s position and angle after the maneuver, which can block its path.

A voice recognition accuracy > 75% means that the robot correctly recognizes the spoken command in more than three out of four cases. A deviation < 10% means that in fewer than 1 out of 10 cases, the robot recognizes a different command from the one spoken. Occasionally, the robot recognizes nothing at all, in which case the recognition process is restarted.
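The restart behavior can be sketched as a bounded retry loop, assuming a `recognize` callable that returns the recognized text or `None`. The command vocabulary, the attempt limit, and the function name are hypothetical; they only reflect the limitation that recognition covers a few basic English words.

```python
from typing import Callable, Optional

COMMANDS = {"start", "stop", "next", "repeat"}  # hypothetical command vocabulary


def listen_for_command(recognize: Callable[[], Optional[str]], max_attempts: int = 5) -> Optional[str]:
    """Restart recognition until a known command is heard or the attempts run out."""
    for _ in range(max_attempts):
        text = recognize()
        if text is None:
            continue  # nothing recognized: restart the recognition process
        word = text.strip().lower()
        if word in COMMANDS:
            return word
    return None
```

This loop only handles the cases where nothing, or an unknown word, is recognized; a misrecognized but valid command would still pass through unchanged.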

RFID Subsystem Testing

Regarding the RFID subsystem, two different parameters were measured and analyzed in order to evaluate its performance: detection time and energy consumption.

By default, a request to the RFID subsystem to start RF emissions results in continuous (“burst”) RF emission. Although this yields faster detection of passive tags, it also leads to over-temperature conditions.

The RFID module supports configuring two RF parameters via UART:

- RF on time: how long the RFID reader emits RF in one emission round;

- RF off time: how long RF emissions pause after one emission round.

The RFID subsystem also supports parametrization of the total search time, which is configured at compile time. Below, we discuss how “RF on time”, “RF off time”, and the requested search time relate to each other.

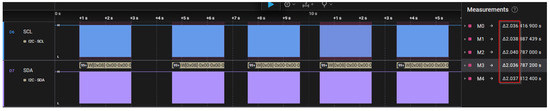

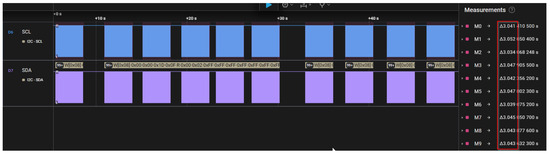

In Figure 15 and Figure 16, the expected search time was set to 2 s and 3 s, respectively, while the “RF on time” and “RF off time” parameters were set to 500 ms in both cases. In these figures, the actual search time closely matches the expected value, in contrast with the scenario depicted in Figure 17, where the expected search time remains 3 s but “RF on time” is increased to 700 ms and “RF off time” to 900 ms. An increased deviation can be observed in this case. The deviation increases even further in Figure 18, where both RF times are set to 1000 ms.

Figure 15.

Maximum search time duration when the expected search time is 2000 ms and “RF on time” = “RF off time” = 500 ms.

Figure 16.

Maximum search time duration when the expected search time is 3000 ms and “RF on time” = “RF off time” = 500 ms.

Figure 17.

Maximum search time duration when the expected search time is 3000 ms, “RF on time” = 700 ms and “RF off time” = 900 ms.

Figure 18.

Maximum search time duration when the expected search time is 3000 ms and “RF on time” = “RF off time” = 1000 ms.
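The relation between the requested search time and the RF on/off times can be explored with a simple model, assuming (our assumption, not documented firmware behavior) that the subsystem checks the search deadline only after a complete on+off round, so a round that has started always runs to completion. Under this model, the four configurations of Figures 15 to 18 would last 2000, 3000, 3200, and 4000 ms, i.e., overshoots of 0, 0, 200, and 1000 ms, which is qualitatively consistent with the growing deviation described above. The function name is hypothetical.

```python
import math


def worst_case_search_ms(expected_ms: int, rf_on_ms: int, rf_off_ms: int) -> int:
    """Search duration if the deadline is checked only after complete on+off rounds."""
    round_ms = rf_on_ms + rf_off_ms
    rounds = math.ceil(expected_ms / round_ms)  # a started round always runs to completion
    return rounds * round_ms
```

Under this model, choosing RF on/off times whose sum divides the requested search time evenly would avoid the overshoot.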

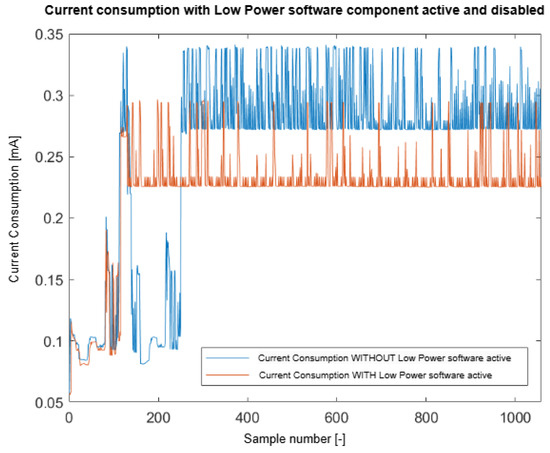

For the RFID subsystem, current consumption was measured in isolation from the other subsystems in two scenarios:

- With Low-Power Mode Manager software component enabled;

- With Low-Power Mode Manager software component disabled.

Figure 19 presents the current consumption results. Enabling the Low-Power Mode Manager component yields a slight reduction in power consumption, even though the low-power mode targets only the microcontroller, which enters sleep mode when there is no external request to process. The RFID and Wi-Fi modules therefore remain awake and keep running their internal algorithms; this behavior is visible in the diagram as periodic current spikes in both measurements.

Figure 19.

Current consumption for the RFID subsystem with and without the Low-Power Manager software component.

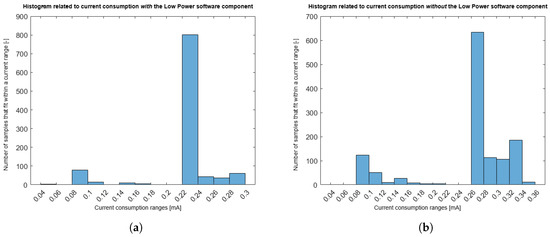

The distribution of the current consumption measurements can also be visualized in Figure 20.

Figure 20.

(a) Current consumption distribution with the software component “Low-Power Manager” enabled. (b) Current consumption distribution with the software component “Low-Power Manager” disabled.

5.5. Limitations and Discussions

The system prototype is a limited proof of concept for an accessible guiding system for visually impaired people. The solution design was restricted to the hypotheses assumed in Section 3 and based on the requirements stated in Section 3.2. Thus, the system is intended for indoor use on flat surfaces and cannot climb stairs or move over rough terrain.

Regarding RFID tag detection, the reader can detect multiple tags simultaneously, but this feature is not used in the current software implementation of the RFID subsystem. The detection area of the RFID antenna is currently tuned to a radius of 5 m.

Regarding the recording methodology, routes are recorded only outside working hours.

We also wish to outline the following technical constraints:

- Mechanical limitations: misalignments of the steering servomotor can cause different deviations when running on different surfaces;

- Hardware limitations: camera performance is limited due to the fixed focus lens and lack of infrared sensors;

- Software limitations: the voice recognition algorithm is limited to some basic words in English.

Adapting the system to environments more challenging than those considered in Section 3.1 would require a more capable mobile platform that can climb stairs, together with a combination of advanced sensors (e.g., LiDAR, infrared, etc.).

Regarding the recording methods, the obtained results show that each method has its own advantages and limitations. The manual recording mode has a smaller deviation, but it requires an administrator to be involved in the recording phase and is time-consuming. The line-following recording mode, while semi-automated, has a higher deviation.

Moving to fully automated path finding would require better real-time environmental awareness and dynamic path recalibration. This could be achieved by integrating various technologies, such as smart feedback mechanisms, advanced mapping, and personalized user preferences.

6. Conclusions and Future Work

In this article, we have presented Vision Voyager as a proof of concept for a UGV system for the indoor guiding of visually impaired people. By integrating different technologies, such as UGV and RFID, we have implemented a modular, low-cost, and simple-to-use indoor guiding system intended for public buildings. Moreover, we have proposed a methodology and an algorithm for recording and playing back pre-recorded routes in indoor environments using Raspberry Pi. The experiments show a deviation under 10% between the recorded and the playback route in the chosen scenarios and an obstacle detection accuracy greater than 95%. To conclude, the fusion of current technologies allows the development of accessible guiding systems with increased functionality, which could improve access to public buildings for visually impaired people.

A further step in the development of the proposed solution is to analyze how the system could be adapted to different buildings, indoor and outdoor spaces, and environments with different layouts. Another important aspect of future work is conducting usability tests with real users. This requires specific conditions that we plan to meet once the prototype is developed at full scale.

Author Contributions

Conceptualization, C.-S.S. and V.S.; methodology, C.-S.S.; software, I.-F.K. and A.-C.K.; validation, V.S., S.N. and D.-I.C.; formal analysis, D.-I.C.; investigation, C.-S.S. and V.S.; resources, C.-S.S. and V.S.; data curation, C.-S.S., V.S., and S.N.; writing—original draft preparation, I.-F.K. and A.-C.K.; writing—review and editing, C.-S.S. and D.-I.C.; visualization, D.-I.C.; supervision, C.-S.S. and V.S.; project administration, C.-S.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| GPS | Global Positioning System |
| HAT | Hardware Attached on Top |
| I2C | Inter-Integrated Circuit |
| LiDAR | Light Detection and Ranging |
| RGB | Red–Green–Blue |
| RFID | Radio Frequency Identification |
| UGV | Unmanned Ground Vehicle |
| UWB | Ultra-Wideband |
| WiFi | Wireless Fidelity |
| UART | Universal Asynchronous Receiver Transmitter |
| SPI | Serial Peripheral Interface |
| TID | Tag Identification |

References

- Bourne, R.; Steinmetz, J.D.; Flaxman, S.; Briant, P.S.; Taylor, H.R.; Resnikoff, S.; Casson, R.J.; Abdoli, A.; Abu-Gharbieh, E.; Afshin, A.; et al. Trends in prevalence of blindness and distance and near vision impairment over 30 years: An analysis for the Global Burden of Disease Study. Lancet Glob. Health 2021, 9, e130–e143.

- Vaz, R.; Freitas, D.; Coelho, A. Blind and visually impaired visitors’ experiences in museums: Increasing accessibility through assistive technologies. Int. J. Incl. Mus. 2020, 13, 57.

- Kim, I.J. Recent advancements in indoor electronic travel aids for the blind or visually impaired: A comprehensive review of technologies and implementations. Univers. Access Inf. Soc. 2024, 1–21.

- Shewail, A.S.; Elsayed, N.A.; Zayed, H.H. Survey of indoor tracking systems using augmented reality. IAES Int. J. Artif. Intell. 2023, 12, 402.

- Jang, B.; Kim, H.; wook Kim, J. Survey of Landmark-based Indoor Positioning Technologies. Inf. Fusion 2023, 89, 166–188.

- Simões, W.C.S.S.; Machado, G.S.; Sales, A.M.A.; de Lucena, M.M.; Jazdi, N.; de Lucena, V.F. A Review of Technologies and Techniques for Indoor Navigation Systems for the Visually Impaired. Sensors 2020, 20, 3935.

- Nimalika Fernando, D.A.M.; Murray, I. Route planning methods in indoor navigation tools for vision impaired persons: A systematic review. Disabil. Rehabil. Assist. Technol. 2023, 18, 763–782.

- Jain, M.; Patel, W. Review on lidar-based navigation systems for the visually impaired. SN Comput. Sci. 2023, 4, 323.

- Plikynas, D.; Zvironas, A.; Budrionis, A.; Gudauskis, M. Indoor navigation systems for visually impaired persons: Mapping the features of existing technologies to user needs. Sensors 2020, 20, 636.

- Plikynas, D.; Zvironas, A.; Gudauskis, M.; Budrionis, A.; Daniusis, P.; Sliesoraityte, I. Research advances of indoor navigation for blind people: A brief review of technological instrumentation. IEEE Instrum. Meas. Mag. 2020, 23, 22–32.

- Madake, J.; Bhatlawande, S.; Solanke, A.; Shilaskar, S. A Qualitative and Quantitative Analysis of Research in Mobility Technologies for Visually Impaired People. IEEE Access 2023, 11, 82496–82520.

- Motroni, A.; Buffi, A.; Nepa, P. A Survey on Indoor Vehicle Localization Through RFID Technology. IEEE Access 2021, 9, 17921–17942.

- Jin, M.; Yao, S.; Li, K.; Tian, X.; Wang, X.; Zhou, C.; Cao, X. Fine-Grained UHF RFID Localization for Robotics. IEEE/ACM Trans. Netw. 2024, 1–16.

- Zhang, J.; Liu, X.; Chen, S.; Tong, X.; Deng, Z.; Gu, T.; Li, K. Toward Robust RFID Localization via Mobile Robot. IEEE/ACM Trans. Netw. 2024, 32, 2904–2919.

- Xie, Y.; Wang, X.; Lin, P.; Zhang, Y.; Zhang, Z.; Wu, J.; Hua, H. Relative Positioning of the Inspection Robot Based on RFID Tag Array in Complex GNSS-Denied Environments. IEEE Trans. Instrum. Meas. 2024, 73, 1–14.

- Theodorou, P.; Tsiligkos, K.; Meliones, A. Multi-Sensor Data Fusion Solutions for Blind and Visually Impaired: Research and Commercial Navigation Applications for Indoor and Outdoor Spaces. Sensors 2023, 23, 5411.

- Okolo, G.I.; Althobaiti, T.; Ramzan, N. Assistive Systems for Visually Impaired Persons: Challenges and Opportunities for Navigation Assistance. Sensors 2024, 24, 3572.

- Kandalan, R.N.; Namuduri, K. Techniques for Constructing Indoor Navigation Systems for the Visually Impaired: A Review. IEEE Trans. Hum.-Mach. Syst. 2020, 50, 492–506.

- Xiao, A.; Tong, W.; Yang, L.; Zeng, J.; Li, Z.; Sreenath, K. Robotic Guide Dog: Leading a Human with Leash-Guided Hybrid Physical Interaction. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 11470–11476.

- Al Zayer, M.; Tregillus, S.; Bhandari, J.; Feil-Seifer, D.; Folmer, E. Exploring the Use of a Drone to Guide Blind Runners. In Proceedings of the 18th International ACM SIGACCESS Conference on Computers and Accessibility, ASSETS ’16, Reno, NV, USA, 23–26 October 2016; pp. 263–264.

- Wachaja, A.; Agarwal, P.; Zink, M.; Adame, M.R.; Möller, K.; Burgard, W. Navigating blind people with a smart walker. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–3 October 2015; pp. 6014–6019.

- Kayukawa, S.; Ishihara, T.; Takagi, H.; Morishima, S.; Asakawa, C. BlindPilot: A Robotic Local Navigation System that Leads Blind People to a Landmark Object. In Proceedings of the Extended Abstracts of the 2020 CHI Conference on Human Factors in Computing Systems, CHI EA ’20, Honolulu, HI, USA, 25–30 April 2020; pp. 1–9.

- Edirisinghe, S.; Satake, S.; Kanda, T. Field Trial of a Shopworker Robot with Friendly Guidance and Appropriate Admonishments. J. Hum.-Robot Interact. 2023, 12, 1–37.

- Nguyen, Q.H.; Vu, H.; Tran, T.H.; Nguyen, Q.H. Developing a way-finding system on mobile robot assisting visually impaired people in an indoor environment. Multimed. Tools Appl. 2017, 76, 2645–2669.

- Minguez, J.; Lamiraux, F.; Laumond, J.P. Motion planning and obstacle avoidance. Springer Handb. Robot. 2008, 1, 827–852.

- Ahammad, T.; Hasan, M.; Zahid Hassan, M. A New Topological Sorting Algorithm with Reduced Time Complexity. In Intelligent Computing and Optimization; Vasant, P., Zelinka, I., Weber, G.W., Eds.; Springer: Cham, Switzerland, 2021; pp. 418–429.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).