HFMM-Net: A Hybrid Fusion Mamba Network for Efficient Multimodal Industrial Defect Detection

Abstract

1. Introduction

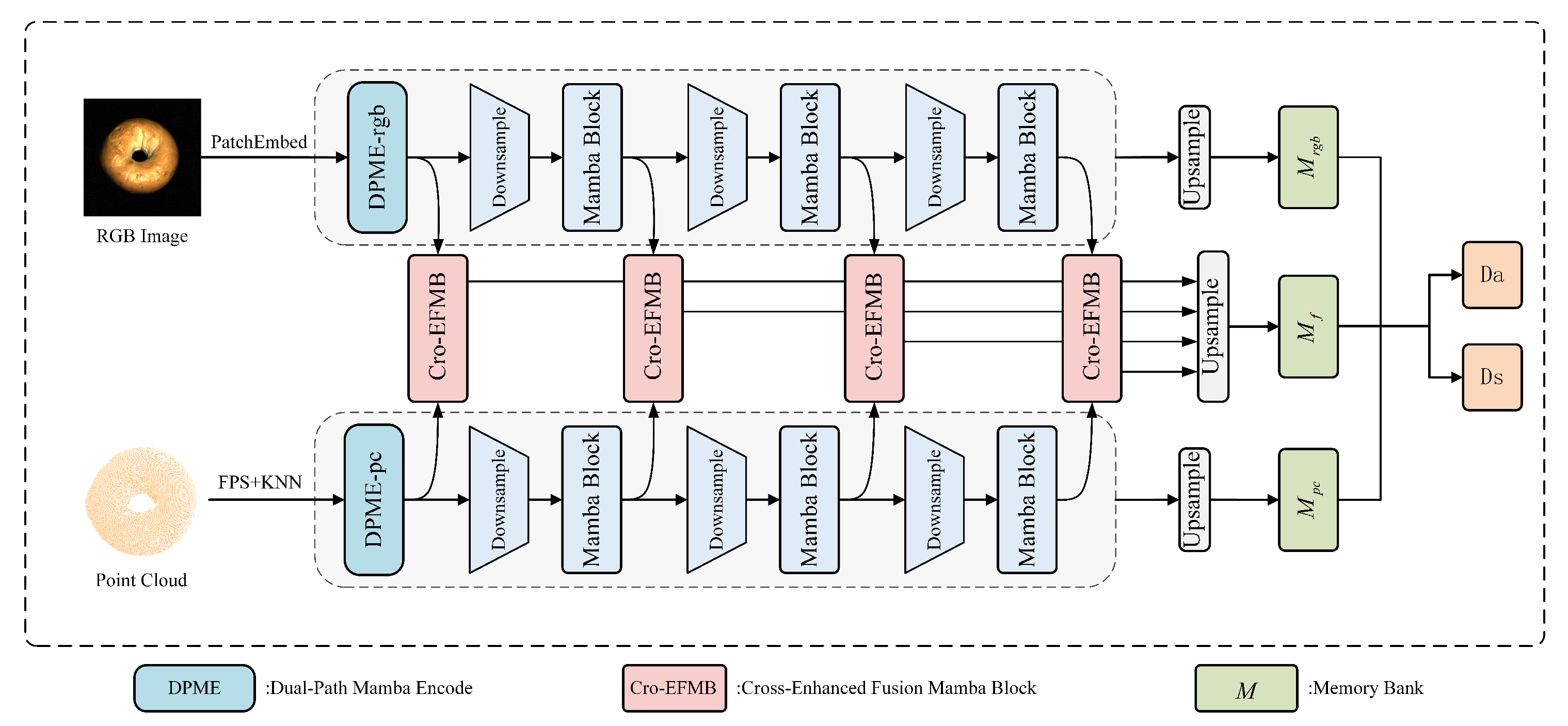

- We propose HFMM-Net, a Mamba-based multimodal anomaly detection framework that achieves the best mean I-AUROC (0.956), P-AUROC (0.993), AUPRO@30% (0.982), and AUPRO@1% (0.457) among the compared methods on MVTec 3D-AD, and the best AUPRO@30% (0.891) and AUPRO@1% (0.341) on Eyecandies.

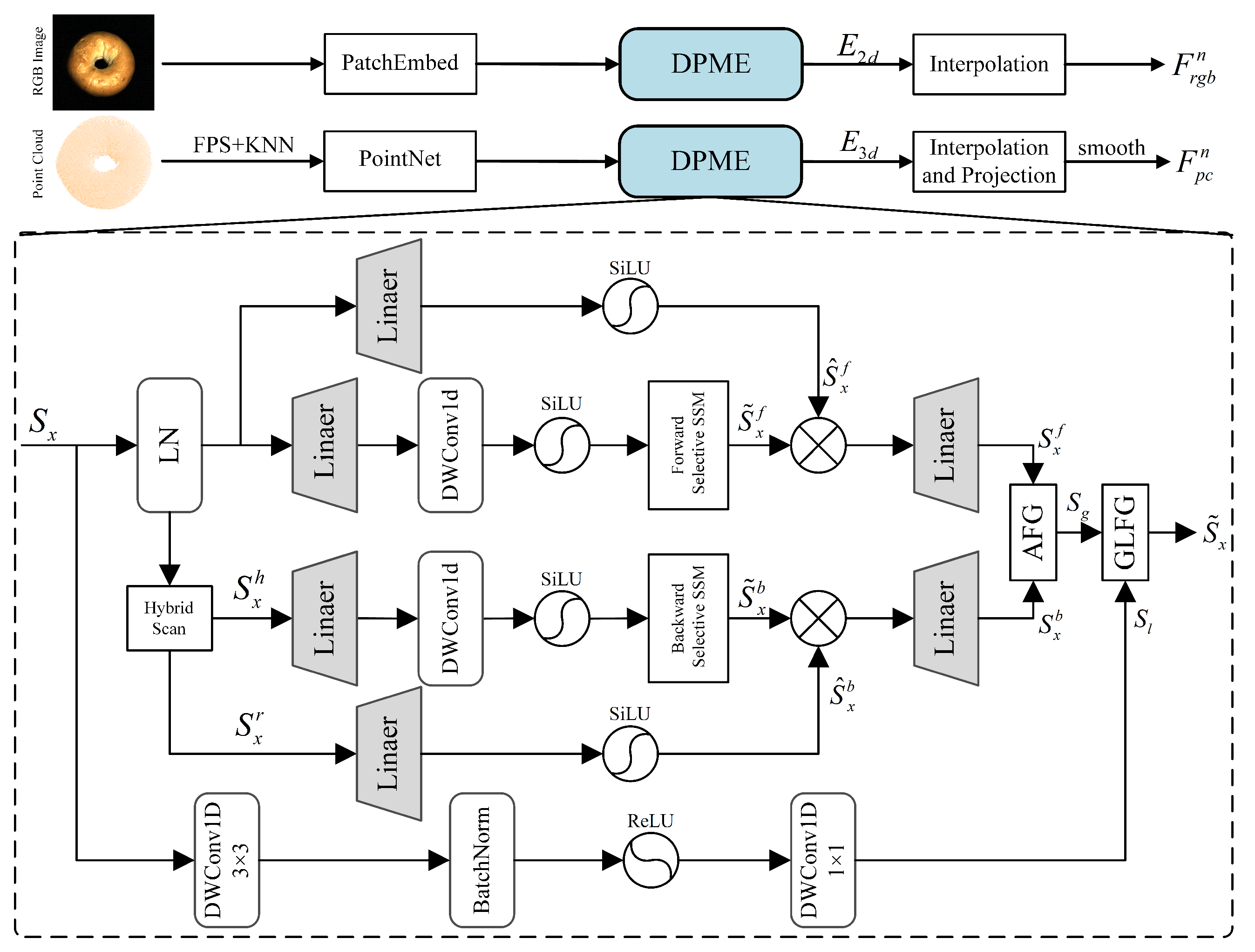

- We introduce a Dual-Path Mamba Encoder (DPME) that enhances multi-scale global feature representation using hybrid directional state space modeling while maintaining linear complexity.

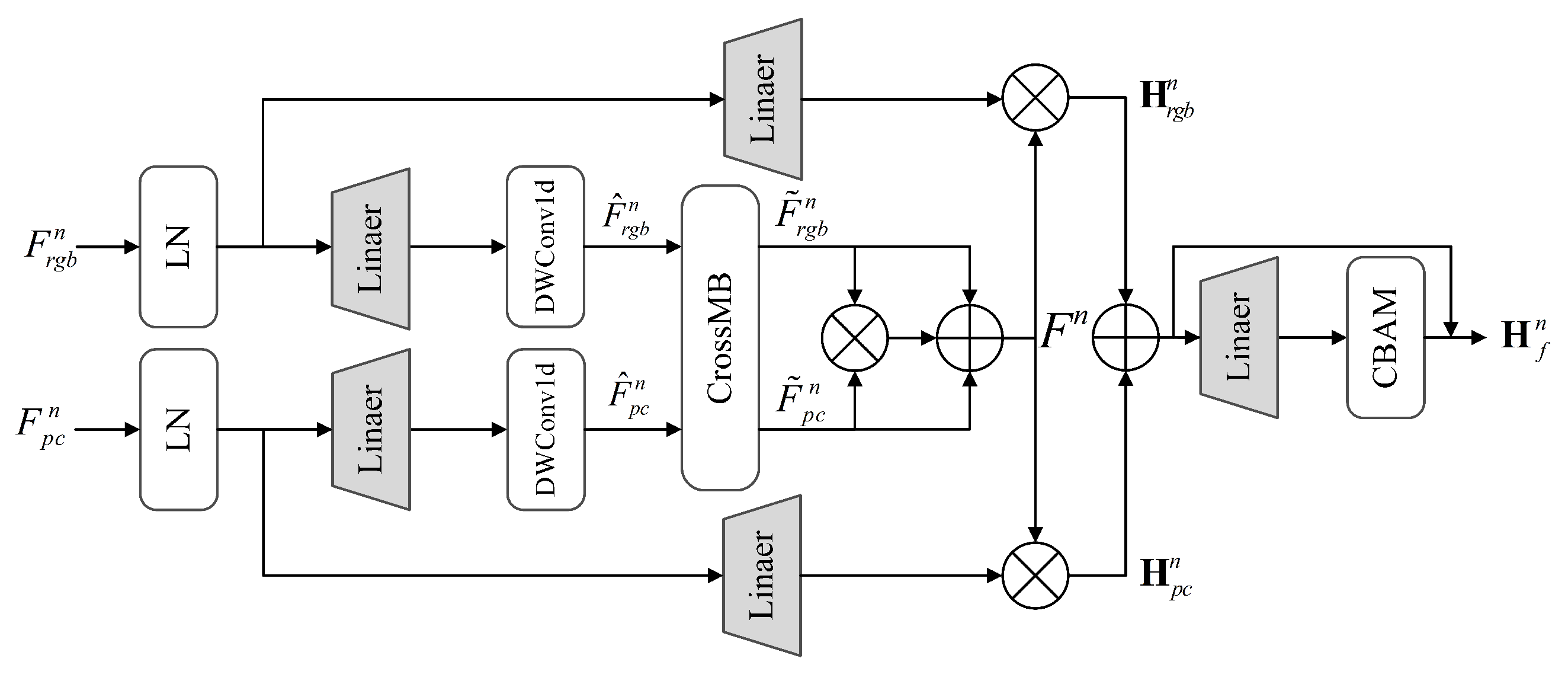

- We design a Cross-Enhanced Fusion Mamba Block (Cro-EFMB), enabling dynamic injection and efficient fusion of image and point cloud features, significantly enhancing modality complementarity and localization performance.

2. Related Work

2.1. Multimodal Industrial Anomaly Detection

2.2. Applications of Mamba in Visual Representation

3. Approach

3.1. Preliminaries

3.2. Overall Architecture

3.3. Feature Extraction Module: Dual-Path Mamba Encoder (DPME)

- (1) Hybrid Scan Strategy

- (2) Token Generation for Each Modality

3.4. Cross-Enhanced Fusion Mamba Block (Cro-EFMB)

3.5. Decision-Level Fusion

4. Experiments

4.1. Experiment Settings

4.2. Anomaly Detection on MVTec 3D-AD

4.3. Anomaly Detection on Eyecandies

4.4. Few-Shot Anomaly Detection

4.5. Ablation Studies

- w/o DPME: The Dual-Path Mamba Encoder is removed, and the RGB and point cloud features are simply concatenated before being fed into the subsequent modules.

- w/o Dual-Path: The bidirectional hybrid scanning is replaced with a standard unidirectional SSM to assess the modeling capability of a single pathway.
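The difference between the two ablated variants can be illustrated with a toy example. The sketch below is not the authors' implementation: it assumes a scalar linear state space recurrence with fixed parameters `a`, `b`, `c` (Mamba instead uses input-dependent, selective parameters), and the function names `ssm_scan`, `unidirectional_scan`, and `bidirectional_scan` are hypothetical. It only shows why a single forward pass cannot propagate information to earlier tokens, whereas combining a forward and a backward pass can.

```python
import numpy as np


def ssm_scan(x, a=0.9, b=1.0, c=1.0):
    """Scalar linear SSM: h_t = a * h_{t-1} + b * x_t, y_t = c * h_t.

    x has shape (sequence_length, channels). The fixed a, b, c are an
    illustrative assumption, not the selective parameters used in Mamba.
    """
    h = np.zeros(x.shape[1:], dtype=float)
    outputs = []
    for x_t in x:
        h = a * h + b * x_t
        outputs.append(c * h)
    return np.stack(outputs)


def unidirectional_scan(x):
    """Single forward pass, as in the 'w/o Dual-Path' style of variant."""
    return ssm_scan(x)


def bidirectional_scan(x):
    """Sum of a forward pass and a backward pass over the same tokens."""
    forward = ssm_scan(x)
    backward = ssm_scan(x[::-1])[::-1]
    return forward + backward


# An impulse at the middle token of a 5-token sequence.
tokens = np.zeros((5, 1))
tokens[2] = 1.0

uni = unidirectional_scan(tokens)
bi = bidirectional_scan(tokens)

# 'w/o DPME' style fusion: plain channel-wise concatenation of two modalities
# (random stand-in features, shapes chosen only for illustration).
rgb_tokens = np.random.rand(5, 4)
pc_tokens = np.random.rand(5, 3)
concatenated = np.concatenate([rgb_tokens, pc_tokens], axis=-1)
```

In the unidirectional output, tokens before the impulse stay at zero, while the bidirectional output is non-zero on both sides of it. This is the kind of context a single scanning direction cannot provide.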

4.6. Inference Efficiency and Memory Footprint

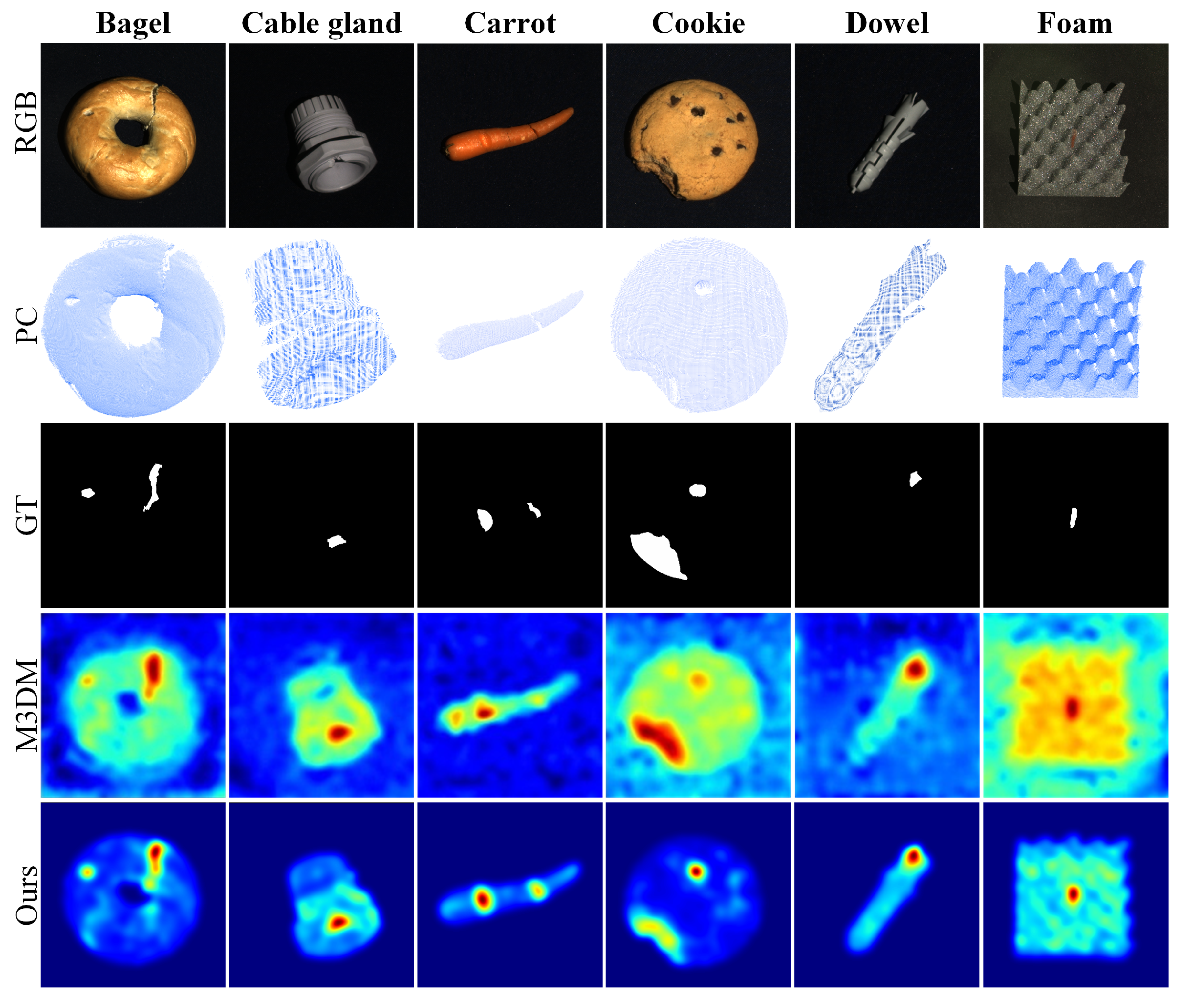

4.7. Features Visualization

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Method | Bagel | CableGland | Carrot | Cookie | Dowel | Foam | Peach | Potato | Rope | Tire | Mean |
|---|---|---|---|---|---|---|---|---|---|---|---|
| BTF | 0.918 | 0.748 | 0.967 | 0.883 | 0.932 | 0.582 | 0.896 | 0.912 | 0.941 | 0.886 | 0.866 |
| PatchCore + FPFH | 0.930 | 0.817 | 0.952 | 0.822 | 0.903 | 0.688 | 0.859 | 0.924 | 0.920 | 0.966 | 0.878 |
| M3DM | 0.986 | 0.891 | 0.988 | 0.973 | 0.957 | 0.809 | 0.981 | 0.958 | 0.967 | 0.911 | 0.942 |
| CFM | 0.984 | 0.905 | 0.974 | 0.967 | 0.960 | 0.941 | 0.973 | 0.937 | 0.972 | 0.869 | 0.948 |
| CPMF | 0.977 | 0.932 | 0.956 | 0.977 | 0.961 | 0.881 | 0.965 | 0.954 | 0.959 | 0.939 | 0.950 |
| TRD | 0.986 | 0.961 | 0.968 | 0.966 | 0.972 | 0.902 | 0.982 | 0.935 | 0.984 | 0.881 | 0.953 |
| Ours | 0.995 | 0.920 | 0.985 | 0.995 | 0.974 | 0.900 | 0.960 | 0.933 | 0.980 | 0.920 | 0.956 |
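As a quick consistency check on the table above, the snippet below recomputes the last column for the "Ours" row. It assumes that the Mean column is the unweighted average of the ten per-category scores; the source does not state the averaging scheme explicitly, and the variable names are illustrative.

```python
from statistics import fmean

# Per-category scores copied from the "Ours" row of the table above.
ours_scores = {
    "Bagel": 0.995, "CableGland": 0.920, "Carrot": 0.985, "Cookie": 0.995,
    "Dowel": 0.974, "Foam": 0.900, "Peach": 0.960, "Potato": 0.933,
    "Rope": 0.980, "Tire": 0.920,
}

# Assumption: the reported mean is a plain (unweighted) average over categories.
mean_score = fmean(ours_scores.values())
print(f"recomputed mean: {mean_score:.3f}")
```

Under this assumption the recomputed value agrees with the reported mean for this row to three decimal places.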

| Method | Bagel | CableGland | Carrot | Cookie | Dowel | Foam | Peach | Potato | Rope | Tire | Mean |
|---|---|---|---|---|---|---|---|---|---|---|---|
| BTF | 0.930 | 0.960 | 0.970 | 0.890 | 0.950 | 0.950 | 0.920 | 0.940 | 0.920 | 0.900 | 0.933 |
| PatchCore + FPFH | 0.950 | 0.860 | 0.990 | 0.900 | 0.930 | 0.820 | 0.960 | 0.980 | 0.960 | 0.960 | 0.931 |
| M3DM | 0.980 | 0.950 | 0.995 | 0.960 | 0.985 | 0.945 | 0.990 | 0.970 | 0.995 | 0.990 | 0.976 |
| CFM | 0.989 | 0.963 | 0.998 | 0.975 | 0.983 | 0.952 | 0.988 | 0.980 | 0.998 | 0.963 | 0.979 |
| CPMF | 0.994 | 0.990 | 0.982 | 0.983 | 0.985 | 0.987 | 0.992 | 0.993 | 0.994 | 0.986 | 0.988 |
| TRD | 0.995 | 0.993 | 0.989 | 0.996 | 0.993 | 0.989 | 0.991 | 0.995 | 0.995 | 0.990 | 0.992 |
| Ours | 0.996 | 0.992 | 0.997 | 0.993 | 0.994 | 0.988 | 0.992 | 0.993 | 0.997 | 0.989 | 0.993 |

| Method | Bagel | CableGland | Carrot | Cookie | Dowel | Foam | Peach | Potato | Rope | Tire | Mean |
|---|---|---|---|---|---|---|---|---|---|---|---|
| BTF | 0.880 | 0.700 | 0.910 | 0.850 | 0.930 | 0.550 | 0.890 | 0.900 | 0.900 | 0.880 | 0.839 |
| PatchCore + FPFH | 0.972 | 0.966 | 0.970 | 0.927 | 0.933 | 0.889 | 0.975 | 0.981 | 0.952 | 0.971 | 0.953 |
| M3DM | 0.967 | 0.970 | 0.973 | 0.949 | 0.941 | 0.932 | 0.978 | 0.966 | 0.968 | 0.971 | 0.961 |
| CFM | 0.980 | 0.972 | 0.995 | 0.950 | 0.970 | 0.971 | 0.986 | 0.992 | 0.971 | 0.980 | 0.976 |
| CPMF | 0.957 | 0.945 | 0.979 | 0.868 | 0.897 | 0.746 | 0.979 | 0.980 | 0.961 | 0.977 | 0.928 |
| TRD | 0.977 | 0.981 | 0.988 | 0.969 | 0.972 | 0.983 | 0.991 | 0.983 | 0.976 | 0.978 | 0.979 |
| Ours | 0.989 | 0.970 | 0.995 | 0.974 | 0.970 | 0.960 | 0.996 | 0.990 | 0.995 | 0.982 | 0.982 |

| Method | Bagel | CableGland | Carrot | Cookie | Dowel | Foam | Peach | Potato | Rope | Tire | Mean |
|---|---|---|---|---|---|---|---|---|---|---|---|
| BTF | 0.26 | 0.20 | 0.31 | 0.28 | 0.24 | 0.18 | 0.30 | 0.26 | 0.29 | 0.28 | 0.26 |
| PatchCore + FPFH | 0.37 | 0.30 | 0.45 | 0.42 | 0.36 | 0.29 | 0.42 | 0.44 | 0.39 | 0.40 | 0.384 |
| M3DM | 0.43 | 0.38 | 0.48 | 0.47 | 0.46 | 0.38 | 0.50 | 0.46 | 0.48 | 0.46 | 0.45 |
| CFM | 0.46 | 0.43 | 0.47 | 0.45 | 0.44 | 0.40 | 0.48 | 0.45 | 0.44 | 0.40 | 0.442 |
| CPMF | 0.47 | 0.41 | 0.48 | 0.44 | 0.42 | 0.41 | 0.46 | 0.47 | 0.43 | 0.42 | 0.441 |
| TRD | 0.46 | 0.43 | 0.49 | 0.43 | 0.41 | 0.40 | 0.46 | 0.48 | 0.44 | 0.46 | 0.446 |
| Ours | 0.48 | 0.42 | 0.50 | 0.47 | 0.39 | 0.42 | 0.47 | 0.49 | 0.46 | 0.47 | 0.457 |

| Method | I-AUROC | P-AUROC | AUPRO@30% | AUPRO@1% |
|---|---|---|---|---|
| M3DM | 0.783 | 0.902 | 0.865 | 0.225 |
| CFM | 0.891 | 0.976 | 0.882 | 0.334 |
| CPMF | 0.882 | 0.962 | 0.875 | 0.335 |
| TRD | 0.877 | 0.963 | 0.887 | 0.338 |
| Ours | 0.887 | 0.966 | 0.891 | 0.341 |

| Method | I-AUROC (5-Shot) | I-AUROC (10-Shot) | I-AUROC (50-Shot) | I-AUROC (Full) | P-AUROC (5-Shot) | P-AUROC (10-Shot) | P-AUROC (50-Shot) | P-AUROC (Full) | AUPRO@30% (5-Shot) | AUPRO@30% (10-Shot) | AUPRO@30% (50-Shot) | AUPRO@30% (Full) | AUPRO@1% (5-Shot) | AUPRO@1% (10-Shot) | AUPRO@1% (50-Shot) | AUPRO@1% (Full) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| M3DM | 0.823 | 0.845 | 0.907 | 0.942 | 0.982 | 0.984 | 0.986 | 0.986 | 0.937 | 0.943 | 0.955 | 0.961 | 0.330 | 0.355 | 0.399 | 0.450 |
| CFM | 0.811 | 0.846 | 0.906 | 0.948 | 0.966 | 0.967 | 0.975 | 0.979 | 0.949 | 0.954 | 0.968 | 0.976 | 0.382 | 0.398 | 0.432 | 0.442 |
| TRD | 0.833 | 0.851 | 0.912 | 0.953 | 0.974 | 0.983 | 0.989 | 0.992 | 0.939 | 0.950 | 0.967 | 0.979 | 0.393 | 0.402 | 0.435 | 0.448 |
| Ours | 0.822 | 0.845 | 0.908 | 0.956 | 0.982 | 0.985 | 0.991 | 0.993 | 0.948 | 0.954 | 0.963 | 0.982 | 0.392 | 0.399 | 0.445 | 0.457 |

| Method | I-AUROC | P-AUROC | AUPRO@30% | AUPRO@1% |
|---|---|---|---|---|
| HFMM-Net | 0.956 | 0.987 | 0.982 | 0.457 |
| w/o DPME | 0.944 | 0.976 | 0.968 | 0.438 |
| w/o Dual-Path | 0.947 | 0.978 | 0.970 | 0.440 |
| w/o smooth | 0.953 | 0.983 | 0.979 | 0.449 |
| HFMM-ViT | 0.946 | 0.977 | 0.969 | 0.439 |
| HFMM-MHA | 0.943 | 0.975 | 0.966 | 0.435 |
| HFMM-ResNet18 | 0.936 | 0.972 | 0.961 | 0.428 |

| Method | I-AUROC | P-AUROC | AUPRO@30% | AUPRO@1% |
|---|---|---|---|---|
| HFMM-Net | 0.956 | 0.993 | 0.982 | 0.457 |
| w/o Cro-EFMB | 0.943 | 0.977 | 0.980 | 0.452 |
| w/o CBAM | 0.940 | 0.972 | 0.977 | 0.446 |

| DPME | Cro-EFMB | I-AUROC | P-AUROC | AUPRO@30% | AUPRO@1% |
|---|---|---|---|---|---|
| × | × | 0.899 | 0.965 | 0.961 | 0.430 |
| × | ✓ | 0.944 | 0.976 | 0.968 | 0.438 |
| ✓ | × | 0.943 | 0.977 | 0.980 | 0.452 |
| ✓ | ✓ | 0.956 | 0.993 | 0.982 | 0.457 |

| Method | I-AUROC | P-AUROC | AUPRO@30% | AUPRO@1% |
|---|---|---|---|---|
| Only RGB | 0.854 | 0.934 | 0.977 | 0.448 |
| Only PC | 0.873 | 0.905 | 0.962 | 0.439 |
| Ours | 0.956 | 0.987 | 0.982 | 0.457 |

| Method | Memory (MB) | Inference Speed (FPS) | Inference Time t (ms) | I-AUROC |
|---|---|---|---|---|
| BTF | 381.06 | 3.91 | 255.8 | 0.866 |
| M3DM | 6528.70 | 0.528 | 1893.9 | 0.942 |
| CFM | 437.91 | 12.00 | 83.3 | 0.948 |
| CPMF | 2195.00 | 0.609 | 1642.0 | 0.950 |
| TRD | 682.50 | 21.70 | 46.1 | 0.953 |
| w/o DPME | 524.3 | 26.1 | 38.5 | 0.944 |
| HFMM-ViT | 780.2 | 5.3 | 188.7 | 0.946 |
| HFMM-Net (Ours) | 594.70 | 24.80 | 40.3 | 0.956 |
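The FPS and millisecond columns of the efficiency table appear to be related by t = 1000 / FPS. The short sketch below checks this reading; it assumes that t is the per-sample latency, which the source does not state explicitly, and the helper name `latency_ms` is hypothetical. The listed rows follow the relation to within rounding, while the w/o DPME row (26.1 FPS, 38.5 ms) deviates by about 0.2 ms and is left out.

```python
def latency_ms(fps: float) -> float:
    """Convert throughput in frames per second to latency in milliseconds."""
    return 1000.0 / fps


# (FPS, reported t in ms) pairs copied from the efficiency table above.
reported = {
    "BTF": (3.91, 255.8),
    "M3DM": (0.528, 1893.9),
    "CFM": (12.00, 83.3),
    "CPMF": (0.609, 1642.0),
    "TRD": (21.70, 46.1),
    "HFMM-Net (Ours)": (24.80, 40.3),
}

# Absolute gap between the computed and the reported latency, per method.
deviations = {
    name: abs(latency_ms(fps) - t_ms) for name, (fps, t_ms) in reported.items()
}

for name, (fps, t_ms) in reported.items():
    print(f"{name}: computed {latency_ms(fps):.1f} ms, reported {t_ms} ms")

# Relative throughput of HFMM-Net over the slowest listed method (M3DM).
speedup_vs_m3dm = reported["HFMM-Net (Ours)"][0] / reported["M3DM"][0]
```

Read this way, the two columns carry the same information, so either one is sufficient when comparing methods.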


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhao, G.; Tan, L.; He, M.; Wu, Q. HFMM-Net: A Hybrid Fusion Mamba Network for Efficient Multimodal Industrial Defect Detection. Information 2025, 16, 1018. https://doi.org/10.3390/info16121018
