DCSC Mamba: A Novel Network for Building Change Detection with Dense Cross-Fusion and Spatial Compensation

Abstract

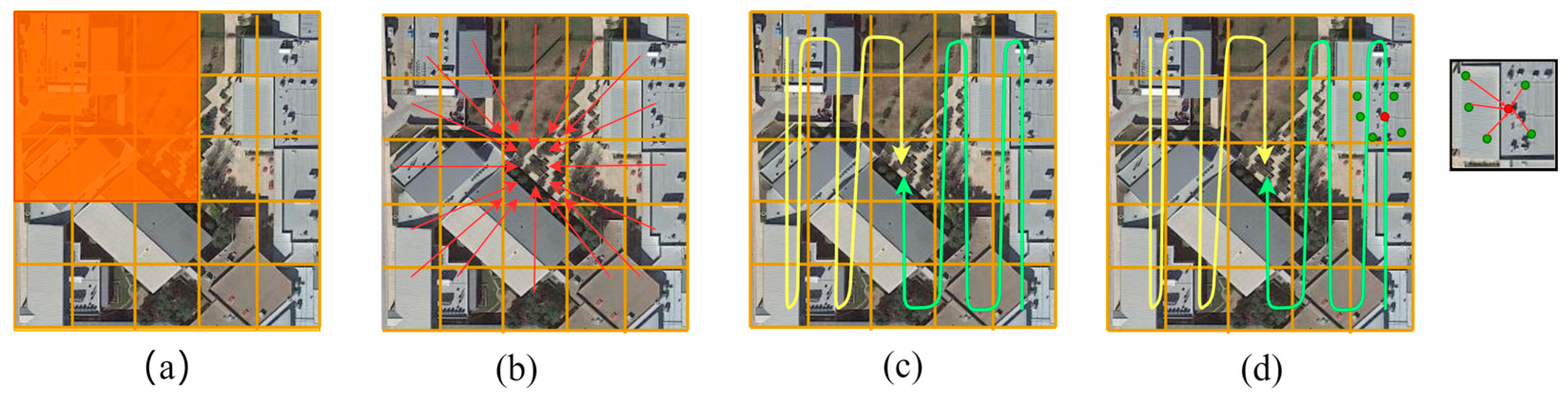

1. Introduction

2. Principles and Methods

2.1. Fundamentals of State Space Models

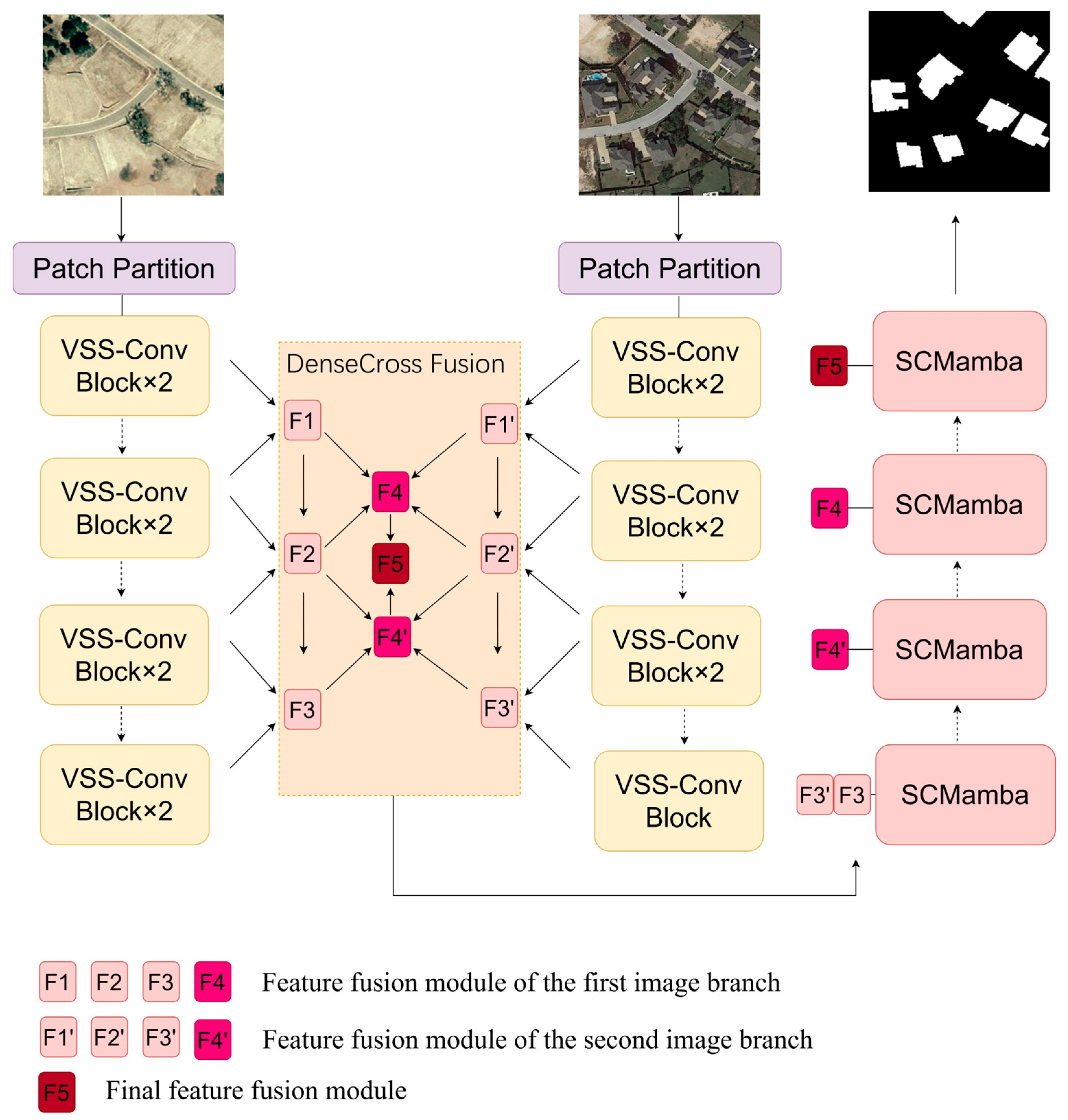
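For orientation, a linear state space model in its standard continuous-time form is h'(t) = A h(t) + B x(t), y(t) = C h(t), and it is usually discretized with a zero-order hold before being applied to a token sequence. The sketch below is a minimal pure-Python illustration of that textbook recurrence for a diagonal A. The function names `discretize_zoh` and `ssm_scan`, the diagonal restriction, and the fixed step size `delta` are assumptions made for illustration and are not taken from the DCSC Mamba implementation. Mamba-style models additionally make the step size and the B and C projections depend on the input, which this sketch omits.

```python
import math


def discretize_zoh(a, b, delta):
    """Zero-order-hold discretization of a diagonal SSM (illustrative helper).

    a, b  : per-state continuous parameters (each a[i] must be non-zero)
    delta : step size
    Returns (a_bar, b_bar) with a_bar = exp(delta * a) and
    b_bar = (exp(delta * a) - 1) / a * b, element-wise.
    """
    a_bar = [math.exp(delta * ai) for ai in a]
    b_bar = [(abar - 1.0) / ai * bi for abar, ai, bi in zip(a_bar, a, b)]
    return a_bar, b_bar


def ssm_scan(x, a, b, c, delta):
    """Run h_k = a_bar * h_{k-1} + b_bar * x_k and y_k = sum(c * h_k) over x."""
    a_bar, b_bar = discretize_zoh(a, b, delta)
    h = [0.0] * len(a)  # hidden state starts at zero
    ys = []
    for xk in x:
        h = [ab * hi + bb * xk for ab, hi, bb in zip(a_bar, h, b_bar)]
        ys.append(sum(ci * hi for ci, hi in zip(c, h)))
    return ys


# Single-state example with a constant input: the output follows
# the exact step response 1 - exp(-delta * k) of dh/dt = -h + x.
outputs = ssm_scan([1.0] * 5, a=[-1.0], b=[1.0], c=[1.0], delta=0.1)
```

The loop costs time linear in the sequence length, which is the property that motivates SSM-based backbones as an alternative to quadratic self-attention.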

2.2. DCSC Mamba Model Architecture

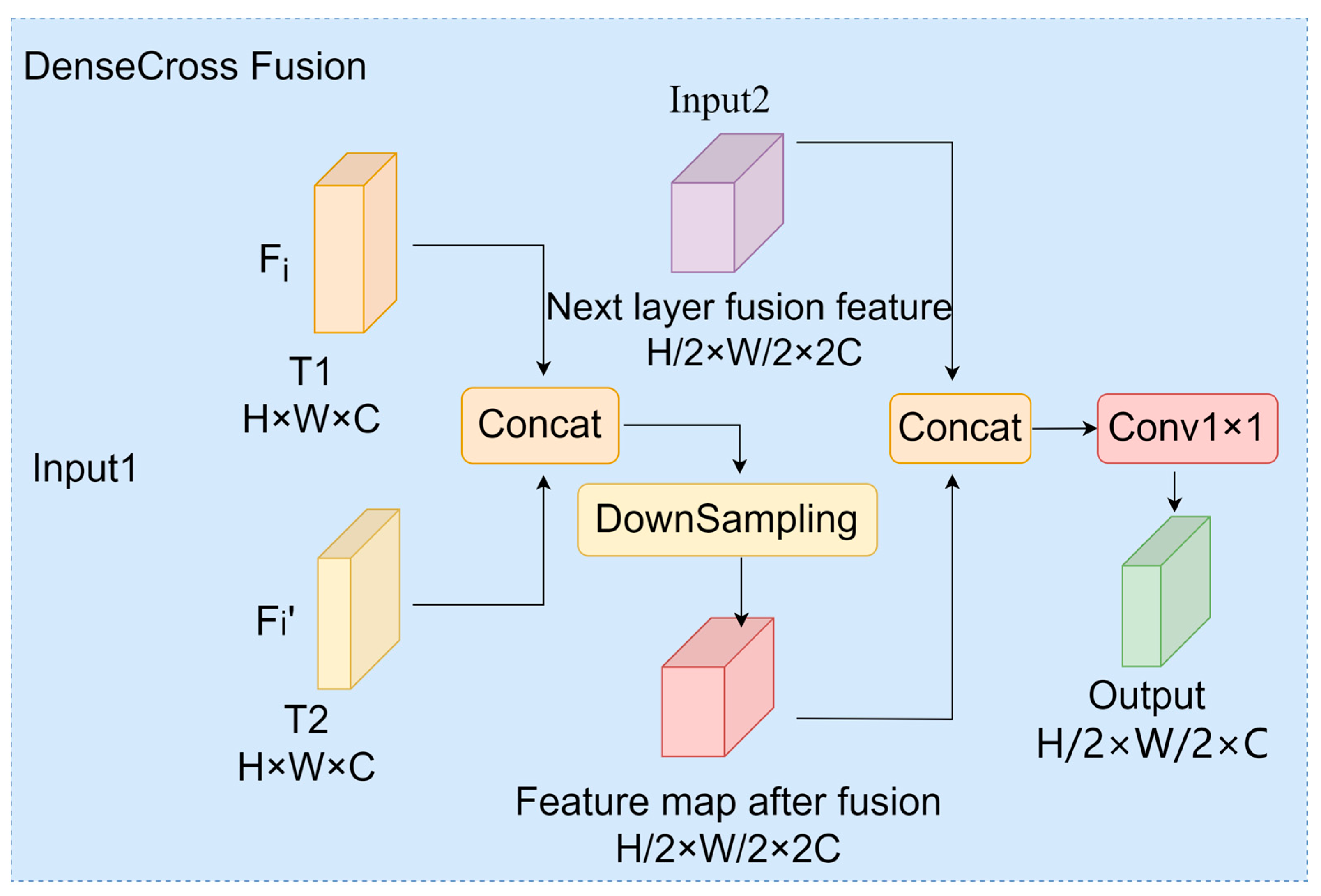

2.3. Dense Cross Fusion

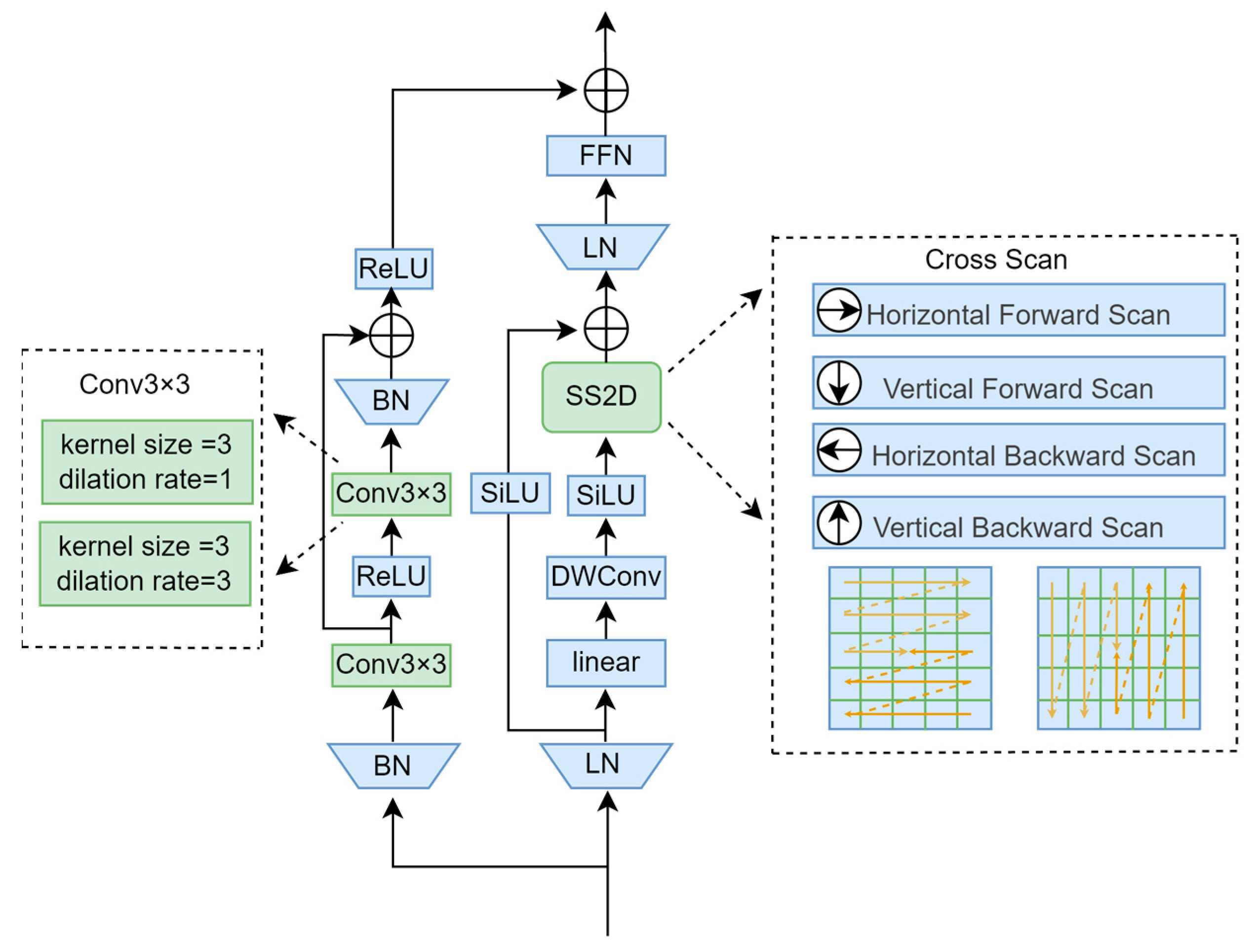

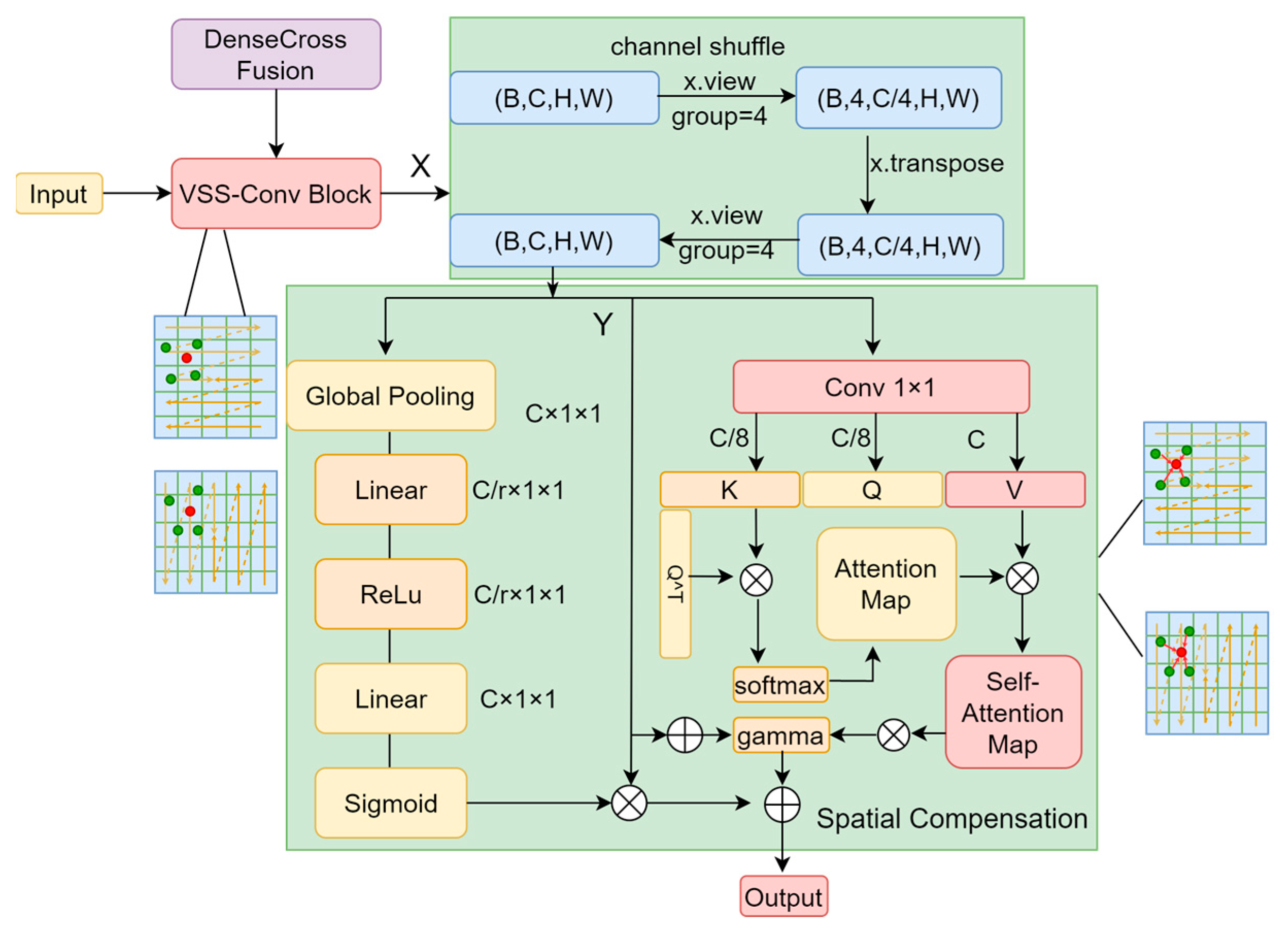

2.4. SCMamba

3. Experiments and Analysis

3.1. Experimental Dataset

3.2. Experimental Environment and Parameters

3.3. Evaluation Indicators
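The result tables in this article report precision, recall, F1, and IoU. Under their conventional definitions for the changed class, all four follow from the counts of true positives, false positives, and false negatives. The sketch below assumes those conventional definitions; the function names are hypothetical and it is not the authors' evaluation code. It also shows that F1 and IoU determine each other, which gives a quick consistency check on a reported row.

```python
def change_detection_metrics(tp, fp, fn):
    """Precision, recall, F1 and IoU (in percent) for the changed class.

    tp, fp, fn are pixel counts of true positives, false positives and
    false negatives. Empty denominators yield 0.0 instead of raising.
    """
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    iou = tp / (tp + fp + fn) if tp + fp + fn else 0.0
    return tuple(100.0 * v for v in (precision, recall, f1, iou))


def f1_from_precision_recall(precision, recall):
    """Harmonic mean of precision and recall (inputs and output in percent)."""
    return 2 * precision * recall / (precision + recall)


def iou_from_f1(f1_percent):
    """IoU implied by F1 via IoU = F1 / (2 - F1), with F1 given in percent."""
    f1 = f1_percent / 100.0
    return 100.0 * f1 / (2.0 - f1)


# Consistency check on the DcscMamba row reported in the tables
# (precision 89.57, recall 91.02, F1 90.29, IoU 82.30).
f1 = f1_from_precision_recall(89.57, 91.02)
iou = iou_from_f1(90.29)
print(round(f1, 2), round(iou, 2))
```

Because published values are rounded to two decimals, a recomputed F1 or IoU can differ from the tabulated one in the last digit.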

3.4. Experimental Results and Analysis

3.4.1. Experimental Results and Analysis of the LEVIR-CD Dataset

3.4.2. Experimental Results and Analysis of the SYSU-CD Dataset

3.5. Ablation Experiments

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Method | Precision (%) | Recall (%) | F1 (%) | IoU (%) |
|---|---|---|---|---|
| CDNet | 82.74 | 89.95 | 86.20 | 75.74 |
| DSIFN | 88.82 | 90.64 | 89.72 | 81.36 |
| BIT | 88.37 | 90.95 | 89.64 | 81.23 |
| ChangeFormer | 84.12 | 89.56 | 86.76 | 76.62 |
| SarasNet | 84.65 | 92.75 | 88.51 | 79.40 |
| ChangeMamba | 86.61 | 90.40 | 88.46 | 79.32 |
| DcscMamba | 89.57 | 91.02 | 90.29 | 82.30 |

| Method | Precision (%) | Recall (%) | F1 (%) | IoU (%) |
|---|---|---|---|---|
| CDNet | 71.78 | 80.38 | 75.84 | 61.08 |
| DSIFN | 75.97 | 81.69 | 78.73 | 64.92 |
| BIT | 77.39 | 76.03 | 76.52 | 61.97 |
| ChangeFormer | 74.16 | 78.78 | 76.54 | 61.99 |
| SarasNet | 80.03 | 77.21 | 79.04 | 65.35 |
| ChangeMamba | 76.24 | 80.18 | 78.16 | 64.16 |
| DcscMamba | 82.56 | 76.88 | 79.62 | 66.13 |

| Method | Precision (%) | Recall (%) | F1 (%) | IoU (%) | Parameters (M) |
|---|---|---|---|---|---|
| Baseline | 80.70 | 83.31 | 81.98 | 69.47 | 70.08 |
| Baseline+Dc | 85.78 | 88.95 | 87.34 | 77.53 | 71.73 |
| Baseline+Sc | 83.88 | 92.23 | 87.86 | 78.34 | 73.08 |
| DcscMamba | 89.57 | 91.02 | 90.29 | 82.30 | 74.73 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xu, R.; Mao, R.; Yang, Y.; Zhang, W.; Lin, Y.; Zhang, Y. DCSC Mamba: A Novel Network for Building Change Detection with Dense Cross-Fusion and Spatial Compensation. Information 2025, 16, 975. https://doi.org/10.3390/info16110975
