A Lightweight Adaptive Attention Fusion Network for Real-Time Electrowetting Defect Detection

Abstract

1. Introduction

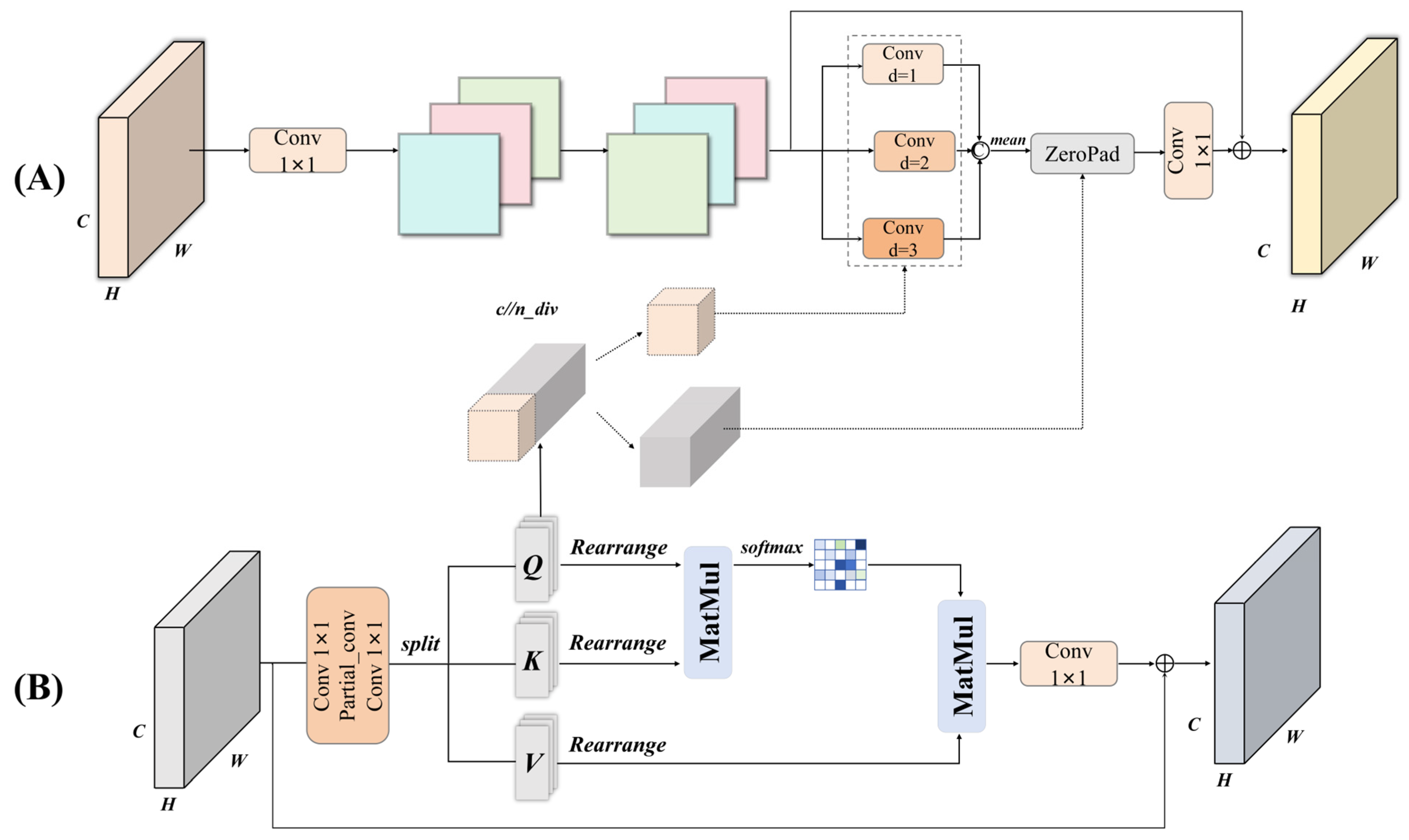

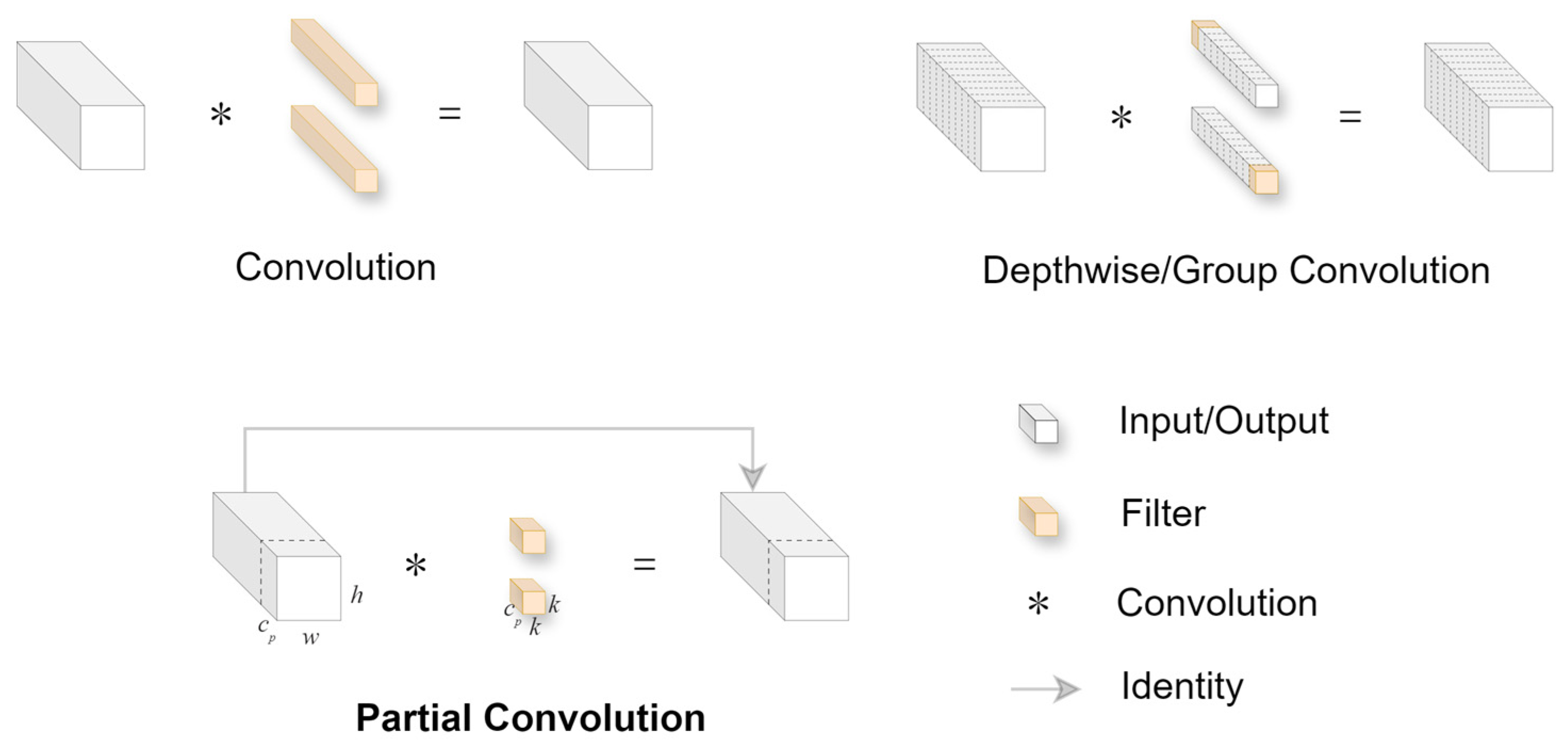

- We propose the Multi-scale Partial convolutional Fusion with Attention mechanism (MPFA). MPFA integrates multi-scale partial convolutions with attention mechanisms to improve electrowetting defect detection across scales and to strengthen the feature representation of micro-defects. By exploiting two properties of partial convolution (base convolution sharing and a channel-splitting strategy), it reduces the number of convolved channels during multi-scale feature-map processing, which lowers both the computational load and the parameter count.

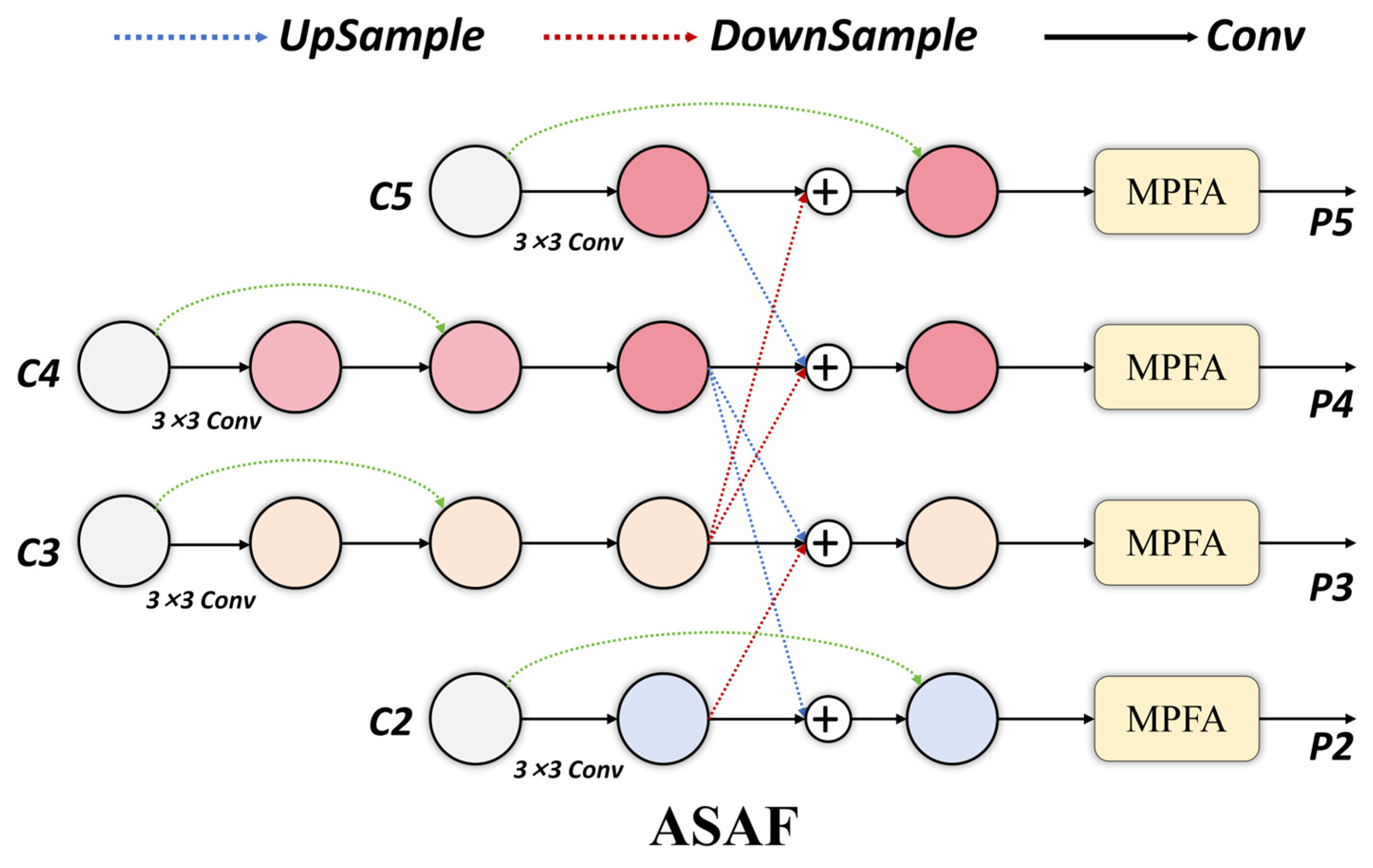

- We propose the Adaptive Scale Attention Fusion Pyramid (ASAF-Pyramid). ASAF-Pyramid adds a finer-scale detection layer (P2) tailored to electrowetting targets and builds a cross-tier adaptive weighting mechanism that fuses features from four distinct scales, improving cross-scale defect localization.

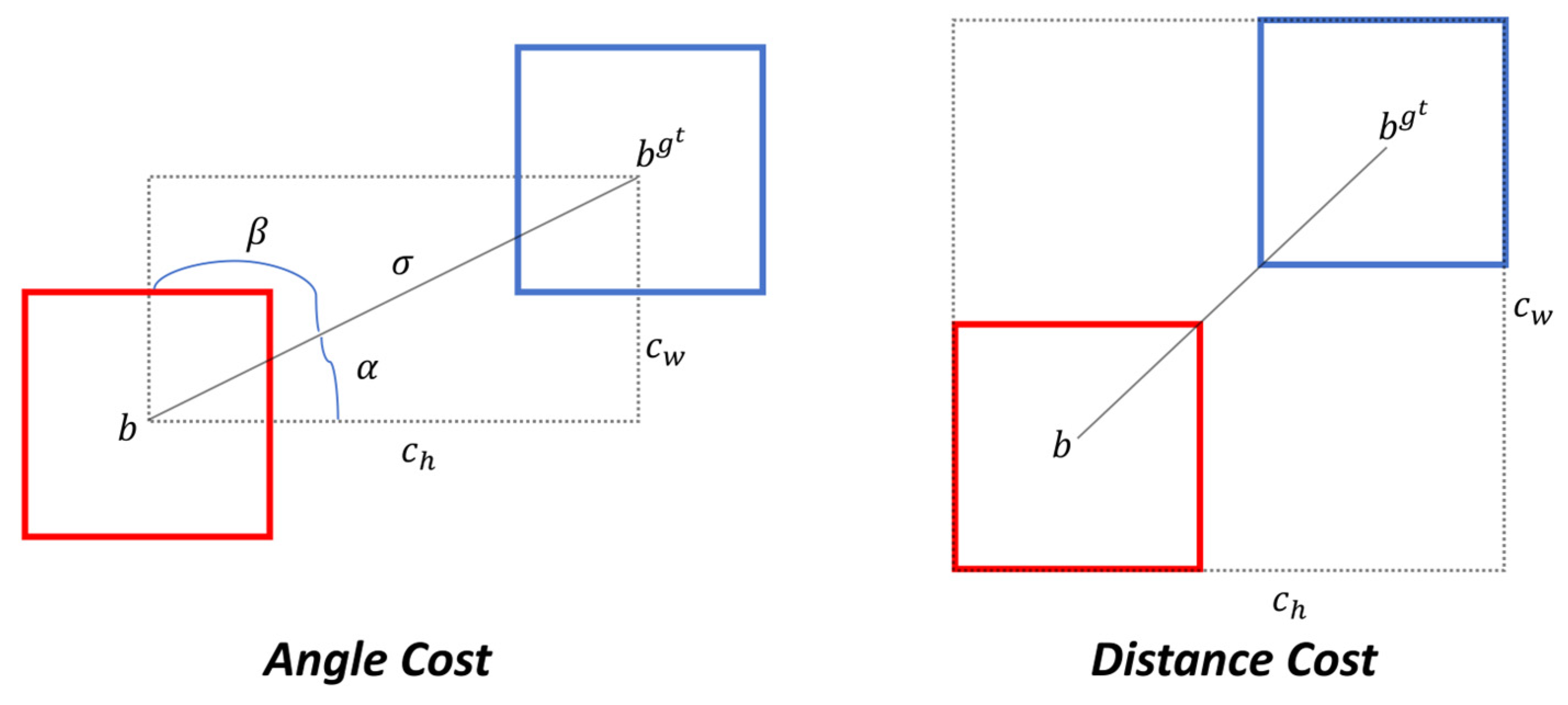

- Building on these two modules, we replace the original CIoU loss function with Shape-IoU bounding box regression [19], improving the model’s ability to detect irregular, small-target electrowetting defects.
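The cross-tier adaptive weighting named in the ASAF-Pyramid contribution can be pictured with a minimal sketch. It assumes one learnable scalar per scale, normalized with a softmax so the weights sum to one, in the spirit of adaptive spatial feature fusion; the actual ASAF-Pyramid weighting is not specified in this text and may differ (for example, by using per-pixel weights). The function names and the toy feature vectors are hypothetical.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_scales(features, logits):
    """Weighted sum of feature vectors, one vector per pyramid level.

    `features` maps a level name to a vector that has already been
    resized to a common shape; `logits` maps the same names to scalars
    that a network would learn. Both are hypothetical stand-ins for
    real feature maps and parameters.
    """
    names = sorted(features)
    weights = softmax([logits[name] for name in names])
    fused = [0.0] * len(features[names[0]])
    for name, weight in zip(names, weights):
        for i, value in enumerate(features[name]):
            fused[i] += weight * value
    return fused, dict(zip(names, weights))


# Toy two-element "feature maps" for the four scales (assumed values).
features = {
    "P2": [1.0, 0.0],
    "P3": [0.0, 1.0],
    "P4": [1.0, 1.0],
    "P5": [0.0, 0.0],
}
# Equal logits give every scale the same weight.
logits = {"P2": 0.0, "P3": 0.0, "P4": 0.0, "P5": 0.0}

fused, weights = fuse_scales(features, logits)
print(weights)
print(fused)
```

Raising the logit of one level (say P2) shifts weight toward that level, which is how such a scheme could emphasize fine-scale features for small defects.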

2. Related Work

2.1. Object Detection Algorithms

2.2. Research on Small Object Detection

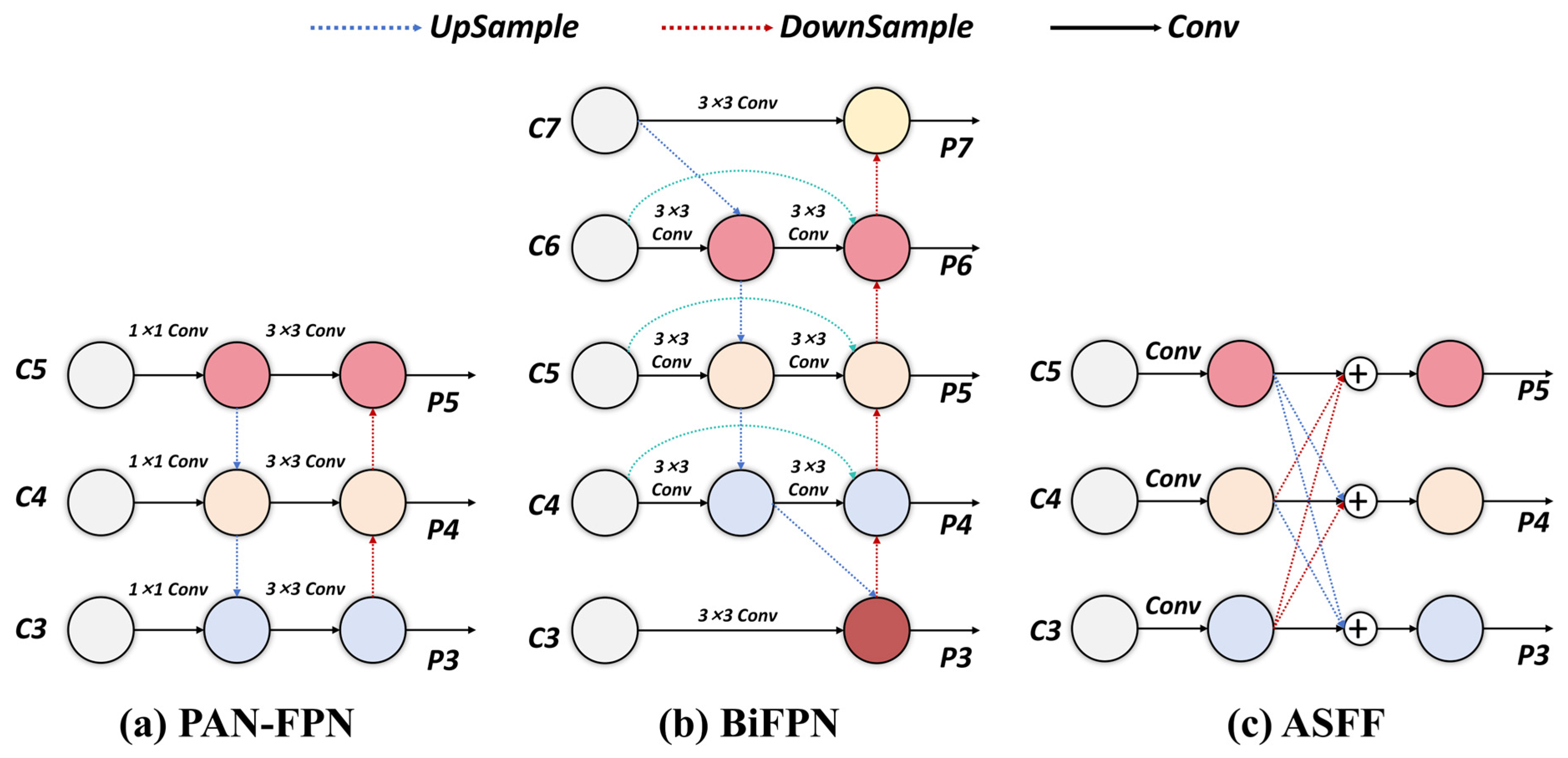

2.3. Multi-Scale Fusion Methods

3. Methods

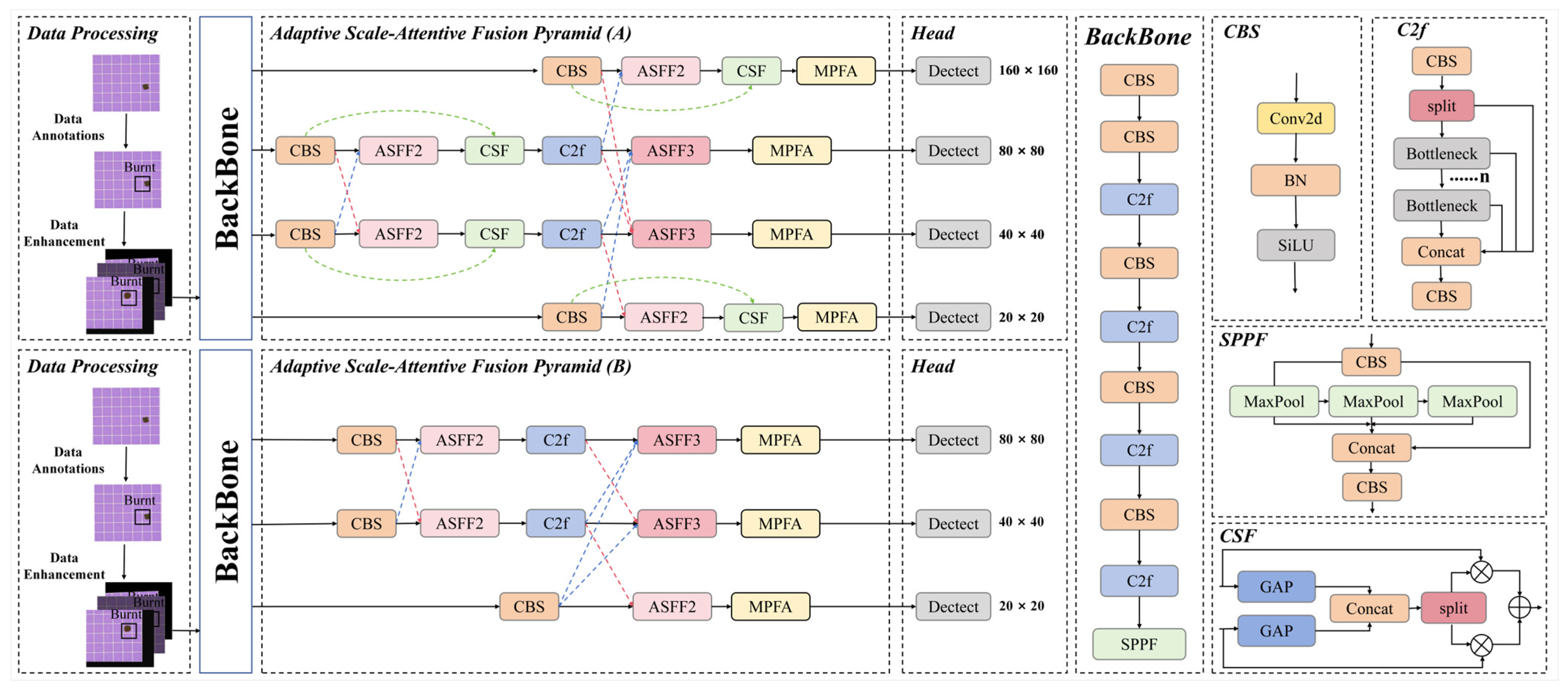

3.1. Network Architecture

- ASAF-Pyramid (A): This is the primary configuration. It adds a dedicated, high-resolution P2 detection layer sourced from earlier, shallower feature maps. The P2 layer preserves the richest spatial detail, which is essential for precisely localizing micron-scale defects (e.g., charge trapping). ASAF-Pyramid (A) therefore uses four detection heads (P2, P3, P4, P5) to cover defects at all scales, from the smallest to the largest.

- ASAF-Pyramid (B): This variant is an ablative counterpart that separates the effect of the core fusion strategy from the effect of adding a new scale. It applies the proposed progressive feature refinement and cross-tier adaptive weighting only to the original three pyramid levels (P3, P4, P5). This allows a direct comparison with the baseline YOLOv8 neck: the fusion method alone improves on the baseline, and the P2 layer in variant (A) provides a further gain, especially for small targets.
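To make the difference between the two variants concrete, the sketch below computes the detection-grid size of each head. It assumes the usual YOLO stride convention (P2 = 4, P3 = 8, P4 = 16, P5 = 32) and a 640 × 640 input; neither the strides nor the input resolution is stated in this text, so both are assumptions, and the helper names are hypothetical.

```python
# Assumed strides, following the common YOLO pyramid convention.
STRIDES = {"P2": 4, "P3": 8, "P4": 16, "P5": 32}


def head_grids(input_size, levels):
    """Return the grid side length of each detection head."""
    grids = {}
    for level in levels:
        stride = STRIDES[level]
        if input_size % stride != 0:
            raise ValueError(f"{input_size} is not divisible by stride {stride}")
        grids[level] = input_size // stride
    return grids


def total_cells(grids):
    """Total number of prediction cells summed over all heads."""
    return sum(side * side for side in grids.values())


INPUT_SIZE = 640  # assumed input resolution

variant_a = head_grids(INPUT_SIZE, ["P2", "P3", "P4", "P5"])
variant_b = head_grids(INPUT_SIZE, ["P3", "P4", "P5"])

print(variant_a, total_cells(variant_a))
print(variant_b, total_cells(variant_b))
```

Under these assumptions the P2 head alone contributes a 160 × 160 grid, more cells than the other three heads combined, which illustrates both why it helps with very small targets and why it adds computation.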

3.2. Multi-Scale Partial Convolution Fusion Attention

3.3. Shape-IoU Loss Function

4. Experiment

4.1. Datasets

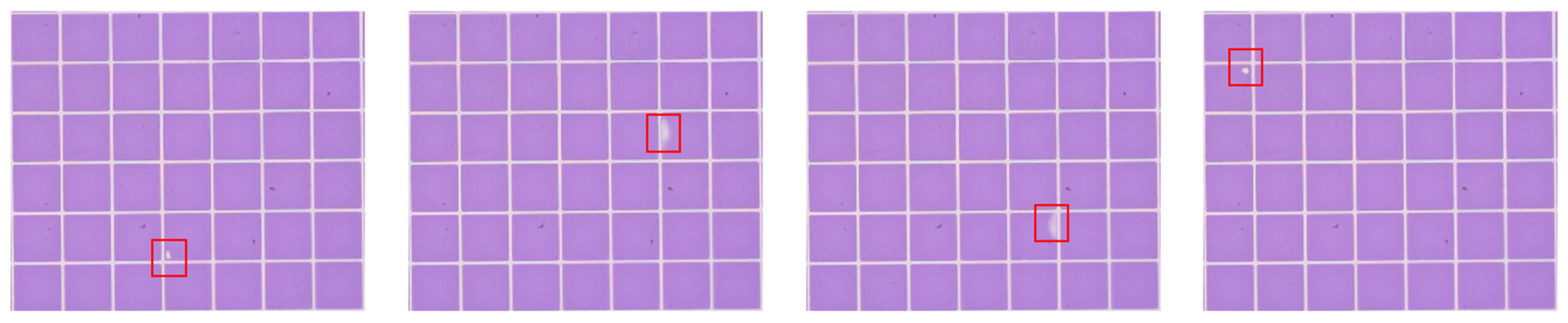

- Burnt: Localized overheating caused by overcurrent, overvoltage, or short-circuiting, which results in permanent physical ablation of the electrowetting device. The dark brown/black areas in Figure 9a indicate the burnt regions.

- Charge Trapping: This is one of the most prevalent and challenging failure modes in electrowetting devices. It occurs when unwanted electric charges become trapped within the dielectric or other sensitive layers. Charges trapped in the dielectric layer (beneath the hydrophobic coating) generate a residual electric field, as illustrated in Figure 9b.

- Deformation: This describes the irregular distortion of pixel walls caused by voltage-induced alterations in droplet morphology, which ultimately compromises display quality, as shown in Figure 9c.

- Degradation: This signifies the progressive deterioration of electrowetting performance over time. Contributing factors may include the chemical degradation of the hydrophobic layer (leading to reduced hydrophobicity), fluid contamination, and material aging. These issues prevent the droplet from achieving an optimal contact angle on the surface, as depicted in Figure 9d.

- Oil Leakage: This failure mode involves the spillage of the oil phase from the fluidic cavity due to insufficient interfacial tension between the oil and aqueous phases. This leakage can cause display malfunctions or damage other components, as seen in Figure 9e.

- Oil Splitting: In the unpowered (non-actuated) state, the oil (purple area) should uniformly cover the entire pixel area. However, due to factors such as hydrophobic layer degradation, the oil fails to split and retreat properly to the corners, thereby exposing the substrate below (appearing white), as shown in Figure 9f.

- Normal: This represents the ideal state of the device, characterized by intact pixel structures, an undamaged dielectric layer, proper sealing, and well-defined, controllable oil-aqueous interfaces, as shown in Figure 9g.

4.2. Experimental Environment

4.3. Evaluation Metrics

4.4. Results and Analysis

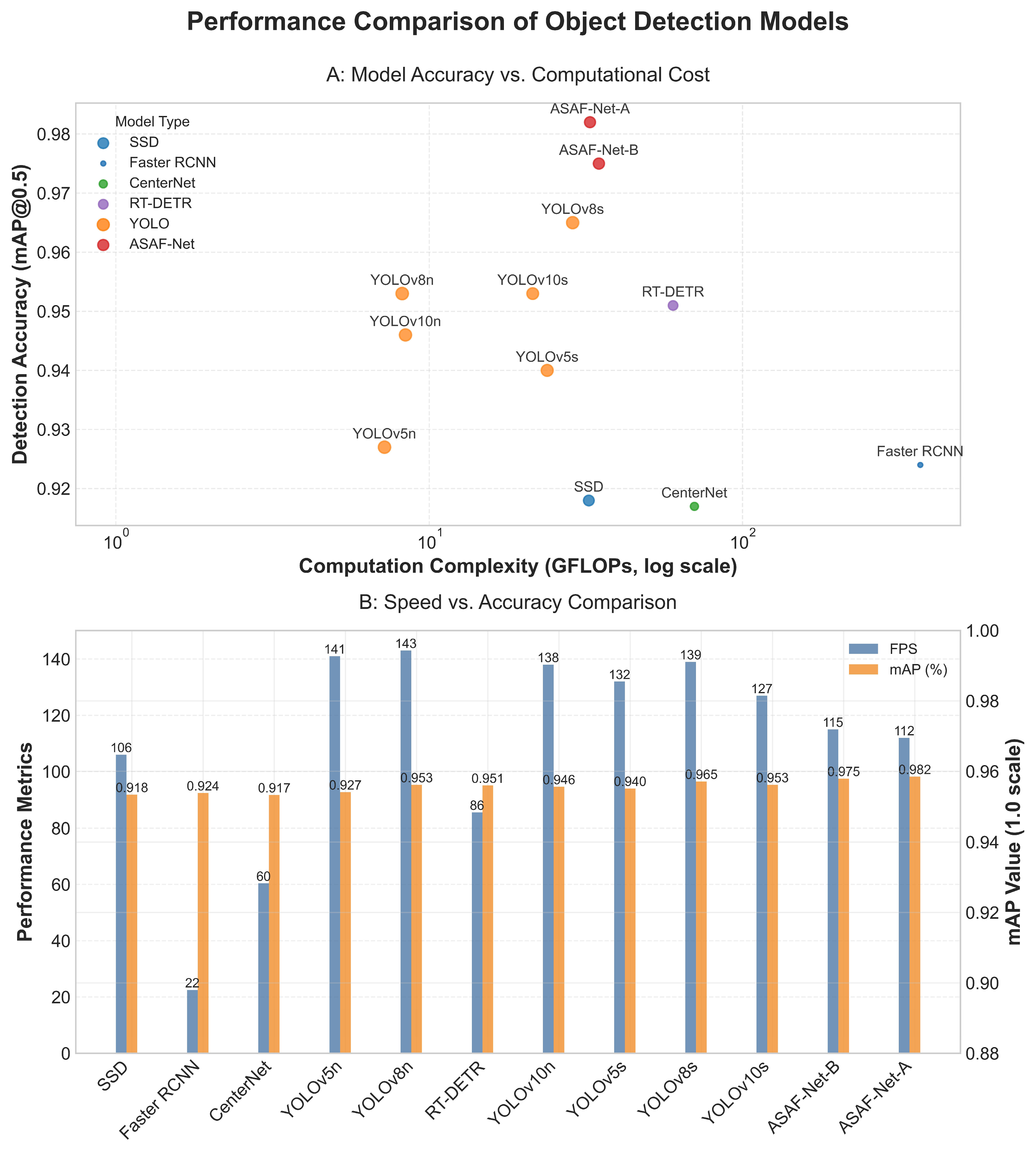

4.4.1. Comparison Experiment

4.4.2. Ablation Experiments

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- [1] Lippmann, G. Relations entre les phénomènes électriques et capillaires. Ann. Chim. Phys. 1875, 5, 494–549.

- [2] Beni, G.; Hackwood, S. Electro-Wetting Displays. Appl. Phys. Lett. 1981, 38, 207–209.

- [3] Berge, B. Electrocapillarite et mouillage de films isolants par l’eau. C. R. Acad. Sci. Paris. Sér II 1993, 317, 157–163.

- [4] Hayes, R.A.; Feenstra, B.J. Video-Speed Electronic Paper Based on Electrowetting. Nature 2003, 425, 383–385.

- [5] Mugele, F.; Baret, J.-C. Electrowetting: From Basics to Applications. J. Phys. Condens. Matter 2005, 17, R705–R774.

- [6] Giraldo, A.; Aubert, J.; Bergeron, N.; Li, F.; Slack, A.; Van De Weijer, M. 34.2: Transmissive Electrowetting-Based Displays for Portable Multi-Media Devices. SID Symp. Dig. Tech. Pap. 2009, 40, 479–482.

- [7] Ku, Y.; Kuo, S.; Huang, Y.; Chen, C.; Lo, K.; Cheng, W.; Shiu, J. Single-layered Multi-color Electrowetting Display by Using Ink-jet-printing Technology and Fluid-motion Prediction with Simulation. J. Soc. Inf. Disp. 2011, 19, 488–495.

- [8] Heikenfeld, J.; Steckl, A.J. Intense Switchable Fluorescence in Light Wave Coupled Electrowetting Devices. Appl. Phys. Lett. 2005, 86, 011105.

- [9] Cuimei, L.; Zhiliang, Q.; Nan, J.; Jianhua, W. Human Face Detection Algorithm via Haar Cascade Classifier Combined with Three Additional Classifiers. In Proceedings of the 2017 13th IEEE International Conference on Electronic Measurement & Instruments (ICEMI), Yangzhou, China, 20–22 October 2017; pp. 483–487.

- [10] Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- [11] Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- [12] Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- [13] Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 13–16 December 2015; pp. 1440–1448.

- [14] Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Computer Vision—ECCV 2016; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2016; Volume 9905, pp. 21–37. ISBN 978-3-319-46447-3.

- [15] Jocher, G. YOLOv5 by Ultralytics. Available online: https://github.com/ultralytics/yolov5 (accessed on 20 June 2025).

- [16] Liu, S.; Huang, D.; Wang, Y. Learning Spatial Fusion for Single-Shot Object Detection. arXiv 2019, arXiv:1911.09516.

- [17] Tan, M.; Le, Q.V. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks. arXiv 2020, arXiv:1905.11946.

- [18] Zheng, Z.; Wang, P.; Liu, W.; Li, J.; Ye, R.; Ren, D. Distance-IoU Loss: Faster and Better Learning for Bounding Box Regression. Proc. AAAI Conf. Artif. Intell. 2020, 34, 12993–13000.

- [19] Gevorgyan, Z. SIoU Loss: More Powerful Learning for Bounding Box Regression. arXiv 2022, arXiv:2205.12740.

- [20] Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. Commun. ACM 2017, 60, 84–90.

- [21] Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. arXiv 2013, arXiv:1311.2524v5.

- [22] Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollar, P. Focal Loss for Dense Object Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 318–327.

- [23] Wang, C.-Y.; Bochkovskiy, A.; Liao, H.-Y.M. YOLOv7: Trainable Bag-of-Freebies Sets New State-of-the-Art for Real-Time Object Detectors. arXiv 2022, arXiv:2207.02696.

- [24] Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-End Object Detection with Transformers. arXiv 2020, arXiv:2005.12872.

- [25] Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. DETRs Beat YOLOs on Real-Time Object Detection. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 17–21 June 2024; pp. 16965–16974.

- [26] Li, J.; Liang, X.; Wei, Y.; Xu, T.; Feng, J.; Yan, S. Perceptual Generative Adversarial Networks for Small Object Detection. arXiv 2017, arXiv:1706.05274.

- [27] Bai, Y.; Zhang, Y.; Ding, M.; Ghanem, B. SOD-MTGAN: Small Object Detection via Multi-Task Generative Adversarial Network. In Computer Vision—ECCV 2018; Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y., Eds.; Lecture Notes in Computer Science; Springer International Publishing: Cham, Switzerland, 2018; Volume 11217, pp. 210–226. ISBN 978-3-030-01260-1.

- [28] Stachoń, M.; Pietroń, M. Chosen Methods of Improving Small Object Recognition with Weak Recognizable Features. In Advances in Information and Communication; Arai, K., Ed.; Lecture Notes in Networks and Systems; Springer Nature: Cham, Switzerland, 2023; Volume 652, pp. 270–285. ISBN 978-3-031-28072-6.

- [29] Wang, J.; Xu, C.; Yang, W.; Yu, L. A Normalized Gaussian Wasserstein Distance for Tiny Object Detection. arXiv 2022, arXiv:2110.13389.

- [30] Wu, Z.; Zhen, H.; Zhang, X.; Bai, X.; Li, X. SEMA-YOLO: Lightweight Small Object Detection in Remote Sensing Image via Shallow-Layer Enhancement and Multi-Scale Adaptation. Remote Sens. 2025, 17, 1917.

- [31] Zhang, Y.; Wu, C.; Fan, Y. MLF-YOLO: A Novel Multiscale Feature Fusion Network for Remote Sensing Small Target Detection. J. Real-Time Image Process. 2025, 22, 138.

- [32] Lin, T.-Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. arXiv 2017, arXiv:1612.03144.

- [33] Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path Aggregation Network for Instance Segmentation. arXiv 2018, arXiv:1803.01534.

- [34] Chen, J.; Kao, S.; He, H.; Zhuo, W.; Wen, S.; Lee, C.-H.; Chan, S.-H.G. Run, Don’t Walk: Chasing Higher FLOPS for Faster Neural Networks. arXiv 2023, arXiv:2303.03667.

- [35] Duan, K.; Bai, S.; Xie, L.; Qi, H.; Huang, Q.; Tian, Q. CenterNet: Keypoint Triplets for Object Detection. arXiv 2019, arXiv:1904.08189.

- [36] Jocher, G.; Chaurasia, A.; Qiu, J. YOLOv8: Ultralytics’ Newest Object Detection Model. Ultralytics. 2023. Available online: https://github.com/ultralytics/ultralytics (accessed on 20 June 2025).

- [37] Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. YOLOv10: Real-Time End-to-End Object Detection. arXiv 2024, arXiv:2405.14458.

| Device | Configuration |
|---|---|
| System | Windows 11 |
| GPU | NVIDIA GeForce RTX 4070 SUPER (12 GB) |
| CPU | 13th Gen Intel(R) Core(TM) i5-13490F, 2.50 GHz |
| NVIDIA CUDA | CUDA 12.4 |
| PyTorch version | 2.6.0 |
| Python version | 3.11 |

| Model | Precision | Recall | mAP@0.5 | Param (M) | GFLOPs | FPS |
|---|---|---|---|---|---|---|
| SSD | 0.892 | 0.905 | 0.918 | 24.4 | 32.3 | 106 |
| Faster RCNN | 0.905 | 0.882 | 0.924 | 137 | 369 | 22.5 |
| CenterNet | 0.893 | 0.904 | 0.917 | 32.3 | 70.2 | 60.4 |
| YOLOv5n | 0.916 | 0.876 | 0.927 | 2.51 | 7.20 | 141 |
| YOLOv8n | 0.913 | 0.929 | 0.953 | 3.01 | 8.20 | 143 |
| RT-DETR | 0.904 | 0.919 | 0.951 | 20.2 | 60.0 | 85.5 |
| YOLOv10n | 0.904 | 0.921 | 0.946 | 2.71 | 8.40 | 138 |
| YOLOv5s | 0.906 | 0.909 | 0.940 | 9.12 | 23.8 | 132 |
| YOLOv8s | 0.915 | 0.941 | 0.965 | 11.1 | 28.7 | 139 |
| YOLOv10s | 0.908 | 0.923 | 0.953 | 7.22 | 21.4 | 127 |
| ASAF-Net-B | 0.909 | 0.952 | 0.975 | 12.3 | 34.8 | 115 |
| ASAF-Net-A | 0.912 | 0.985 | 0.982 | 9.82 | 33.2 | 112 |
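The trade-off between ASAF-Net-A and its YOLOv8s baseline can be read off the comparison table with a few lines of arithmetic. The sketch below copies the two rows from the table; the dictionary keys and the helper name are hypothetical.

```python
# Rows copied from the comparison table above.
yolov8s = {"map50": 0.965, "params_m": 11.1, "gflops": 28.7, "fps": 139}
asaf_net_a = {"map50": 0.982, "params_m": 9.82, "gflops": 33.2, "fps": 112}


def relative_change(new, old):
    """Percentage change of `new` relative to `old`."""
    return (new - old) / old * 100.0


# Absolute mAP@0.5 gain, expressed in percentage points.
map_gain_points = (asaf_net_a["map50"] - yolov8s["map50"]) * 100.0
params_change = relative_change(asaf_net_a["params_m"], yolov8s["params_m"])
gflops_change = relative_change(asaf_net_a["gflops"], yolov8s["gflops"])
fps_change = relative_change(asaf_net_a["fps"], yolov8s["fps"])

print(f"mAP@0.5: {map_gain_points:+.1f} points")
print(f"Params:  {params_change:+.1f}%")
print(f"GFLOPs:  {gflops_change:+.1f}%")
print(f"FPS:     {fps_change:+.1f}%")
```

On these table values, ASAF-Net-A gains 1.7 points of mAP@0.5 with roughly 11.5% fewer parameters than YOLOv8s, at the cost of about 15.7% more GFLOPs and about 19.4% lower FPS.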

| Model | AP (Charge Trapping) | AP (Burnt) | AP (Deformation) | AP (Degradation) |
|---|---|---|---|---|
| YOLOv5n | 0.759 | 0.935 | 0.855 | 0.838 |
| YOLOv8n | 0.796 | 0.942 | 0.871 | 0.857 |
| YOLOv10n | 0.794 | 0.981 | 0.887 | 0.854 |
| YOLOv5s | 0.832 | 0.995 | 0.945 | 0.947 |
| YOLOv8s | 0.850 | 0.995 | 0.995 | 0.949 |
| YOLOv10s | 0.889 | 0.995 | 0.946 | 0.926 |
| ASAF-Net-B | 0.975 | 0.995 | 0.995 | 0.960 |
| ASAF-Net-A | 0.986 | 0.995 | 0.995 | 0.978 |

| Structure | Param (M) | GFLOPs |
|---|---|---|
| ASAF | 9.93 | 33.4 |
| ASFF | 10.1 | 36.8 |

Components added to the YOLOv8s backbone:

| ASAF-Pyramid (A) | ASAF-Pyramid (B) | MPFA | SIoU | mAP@0.5 | Param (M) | GFLOPs |
|---|---|---|---|---|---|---|
| | | | | 0.965 | 11.1 | 28.7 |
| √ | | | | 0.972 | 9.93 | 33.4 |
| | √ | | | 0.968 | 12.8 | 35.6 |
| | | √ | | 0.971 | 10.7 | 27.8 |
| | | | √ | 0.967 | 11.1 | 28.7 |
| √ | | √ | | 0.978 | 9.82 | 33.2 |
| √ | | | √ | 0.974 | 9.93 | 33.4 |
| | √ | √ | | 0.973 | 12.3 | 34.8 |
| | √ | | √ | 0.971 | 12.8 | 35.6 |
| | √ | √ | √ | 0.975 | 12.3 | 34.8 |
| √ | | √ | √ | 0.982 | 9.82 | 33.2 |

| Model | MPFA n_div | mAP@0.5 | Param (M) | GFLOPs |
|---|---|---|---|---|
| ASAF-Net-A | 2 | 0.979 | 9.86 | 33.4 |
| ASAF-Net-A | 4 | 0.982 | 9.82 | 33.2 |
| ASAF-Net-A | 8 | 0.972 | 9.81 | 33.1 |
| ASAF-Net-B | 2 | 0.971 | 12.5 | 35.1 |
| ASAF-Net-B | 4 | 0.975 | 12.3 | 34.8 |
| ASAF-Net-B | 8 | 0.968 | 12.2 | 34.7 |
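The small, steady drop in parameters as n_div grows is what one would expect from partial convolution. The sketch below assumes the partial-convolution design of Chen et al. ("Run, Don't Walk" in the reference list), in which only `channels // n_div` channels pass through the spatial convolution and the rest are forwarded unchanged; whether MPFA uses n_div in exactly this way is an assumption. The channel width of 256 and the 3 × 3 kernel are illustrative values, not taken from the paper.

```python
def conv_params(c_in, c_out, kernel):
    """Weight count of a standard convolution (bias omitted)."""
    return c_in * c_out * kernel * kernel


def partial_conv_params(channels, kernel, n_div):
    """Weight count when only channels // n_div channels are convolved.

    The untouched channels are passed through as-is, so they add no
    weights. This mirrors the assumed partial-convolution design.
    """
    convolved = channels // n_div
    return conv_params(convolved, convolved, kernel)


CHANNELS = 256  # illustrative layer width (assumption)
KERNEL = 3      # illustrative kernel size (assumption)

full = conv_params(CHANNELS, CHANNELS, KERNEL)
print(f"full conv: {full} weights")
for n_div in (2, 4, 8):
    partial = partial_conv_params(CHANNELS, KERNEL, n_div)
    print(f"n_div={n_div}: {partial} weights ({partial / full:.4f} of full)")
```

Under these assumptions the convolved share shrinks with the square of n_div, so each doubling of n_div saves less in absolute terms than the previous one. That is consistent with the table, where moving from n_div = 4 to n_div = 8 changes the parameter count only slightly while mAP@0.5 drops.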

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chen, R.; Zheng, J.; Long, W.; Chen, H.; Luo, Z. A Lightweight Adaptive Attention Fusion Network for Real-Time Electrowetting Defect Detection. Information 2025, 16, 973. https://doi.org/10.3390/info16110973
