BAT-Net: Bidirectional Attention Transformer Network for Joint Single-Image Desnowing and Snow Mask Prediction †

Abstract

1. Introduction

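- We propose BAT-Net, a dual-decoder Transformer framework that jointly disentangles snow and scene content without relying on explicit physical priors.

- We design a Bidirectional Attention Module (BAM) that couples the two decoders in both directions, so that the background branch can attend to the snow branch's current snow estimate and correct for it.

- We introduce the Scale Conversion Module (SCM) and the Feature Aggregation Module (FAM), two lightweight encoder components that handle the large variation in snowflake size.

- We construct FallingSnow, a new real-world benchmark that contains only falling snow and provides pixel-accurate snow masks.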

2. Related Works

2.1. Single-Image Snow Removal

2.2. General Image Restoration Methods

2.3. Vision Transformer in Image Restoration

3. Proposed Method

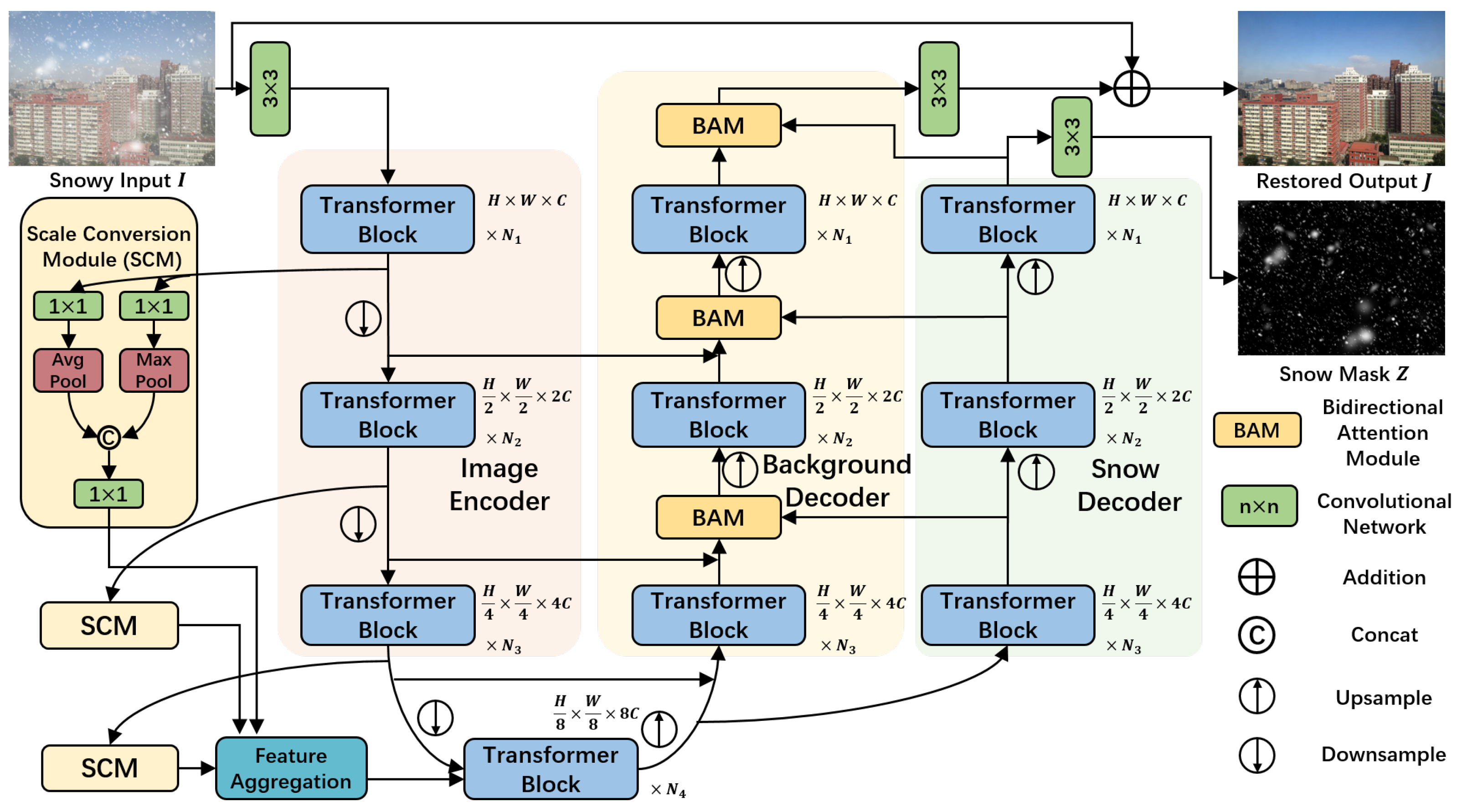

3.1. Overall Pipeline

3.2. Scale Conversion Module and Feature Aggregation Module

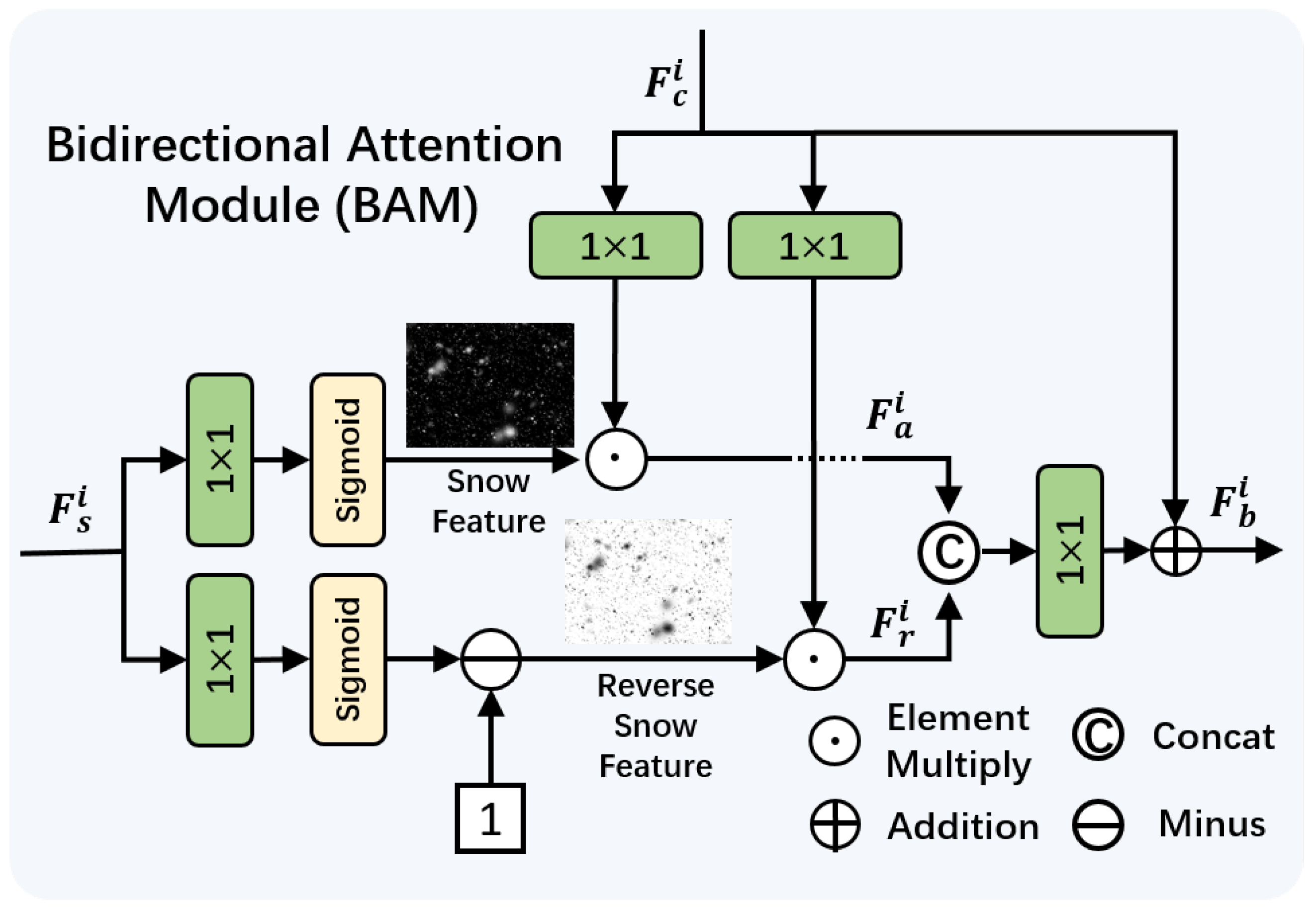
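The ablation study in Section 4.6.1 characterizes the SCM as harmonizing multi-scale features onto a uniform grid and the FAM as cross-scale channel-wise attention. The NumPy sketch below illustrates that idea under stated assumptions; it is not the paper's implementation. Nearest-neighbour resampling, equal channel counts at every scale, and a parameter-free, selective-kernel-style softmax over scales are stand-ins chosen for illustration, and the function names `scale_conversion` and `feature_aggregation` are hypothetical. A trained module would add learnable projections (for example 1×1 convolutions to align channels and to produce the attention logits).

```python
import numpy as np


def resize_nearest(feat: np.ndarray, out_h: int, out_w: int) -> np.ndarray:
    """Nearest-neighbour resampling of a (C, H, W) map to (C, out_h, out_w)."""
    _, h, w = feat.shape
    rows = np.arange(out_h) * h // out_h  # source row for each output row
    cols = np.arange(out_w) * w // out_w  # source column for each output column
    return feat[:, rows[:, None], cols[None, :]]


def scale_conversion(feats, out_h: int, out_w: int) -> np.ndarray:
    """Hypothetical SCM stand-in: put every scale on one (out_h, out_w) grid."""
    return np.stack([resize_nearest(f, out_h, out_w) for f in feats])  # (S, C, H, W)


def feature_aggregation(stacked: np.ndarray) -> np.ndarray:
    """Hypothetical FAM stand-in: per-channel softmax over scales, then a weighted sum."""
    desc = stacked.mean(axis=(2, 3))               # (S, C) global average pooling
    desc = desc - desc.max(axis=0, keepdims=True)  # stabilise the exponent
    weights = np.exp(desc)
    weights = weights / weights.sum(axis=0, keepdims=True)  # per channel, weights sum to 1 over S
    return (weights[:, :, None, None] * stacked).sum(axis=0)  # (C, H, W)


rng = np.random.default_rng(0)
feats = [rng.standard_normal((16, 64, 64)),  # fine scale
         rng.standard_normal((16, 32, 32)),  # middle scale
         rng.standard_normal((16, 16, 16))]  # coarse scale
fused = feature_aggregation(scale_conversion(feats, 32, 32))
print(fused.shape)  # (16, 32, 32)
```

Because the scale weights are computed separately for each channel, a channel whose coarse-scale response dominates (for instance, global illumination) can lean on that scale while another channel leans on the fine scale (for instance, flake edges).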

3.3. Bidirectional Attention Module
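The BAM is described here only at a high level: the background branch attends to the current snow estimate, the ablation distinguishes a forward and a reverse attention direction, and the two decoders correct each other in a closed loop. A minimal NumPy sketch of one plausible reading follows: two single-head cross-attention passes with residual connections, one in each direction. The single head, the random projection matrices, the token layout, and the names `bg_from_snow` and `snow_from_bg` are assumptions for illustration; which direction the paper calls "forward" and which "reverse" is not specified here.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)  # numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def cross_attention(queries, context, wq, wk, wv):
    """Single-head scaled dot-product attention: queries from one branch,
    keys and values from the other branch."""
    q, k, v = queries @ wq, context @ wk, context @ wv
    scores = q @ k.T / np.sqrt(q.shape[-1])  # (N_query, N_context)
    return softmax(scores, axis=-1) @ v       # (N_query, d)


def bidirectional_attention(bg, snow, params):
    """Hypothetical BAM step: each decoder reads the other's current features.
    Both directions use the input features, so neither update sees the other's output."""
    bg_out = bg + cross_attention(bg, snow, *params["bg_from_snow"])      # background reads snow estimate
    snow_out = snow + cross_attention(snow, bg, *params["snow_from_bg"])  # snow reads background estimate
    return bg_out, snow_out


rng = np.random.default_rng(0)
n_tokens, dim = 64, 32  # e.g. an 8x8 feature map flattened to 64 tokens of 32 channels
params = {
    direction: tuple(rng.standard_normal((dim, dim)) / np.sqrt(dim) for _ in range(3))
    for direction in ("bg_from_snow", "snow_from_bg")
}
bg = rng.standard_normal((n_tokens, dim))
snow = rng.standard_normal((n_tokens, dim))
bg_new, snow_new = bidirectional_attention(bg, snow, params)
print(bg_new.shape, snow_new.shape)  # (64, 32) (64, 32)
```

Computing both updates from the same inputs keeps the two directions symmetric; a sequential variant, in which the snow branch reads the already updated background features, is an equally plausible reading of "closed-loop" correction.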

3.4. Computational Complexity Analysis

4. Experiments and Analysis

4.1. Datasets and Implementation Details

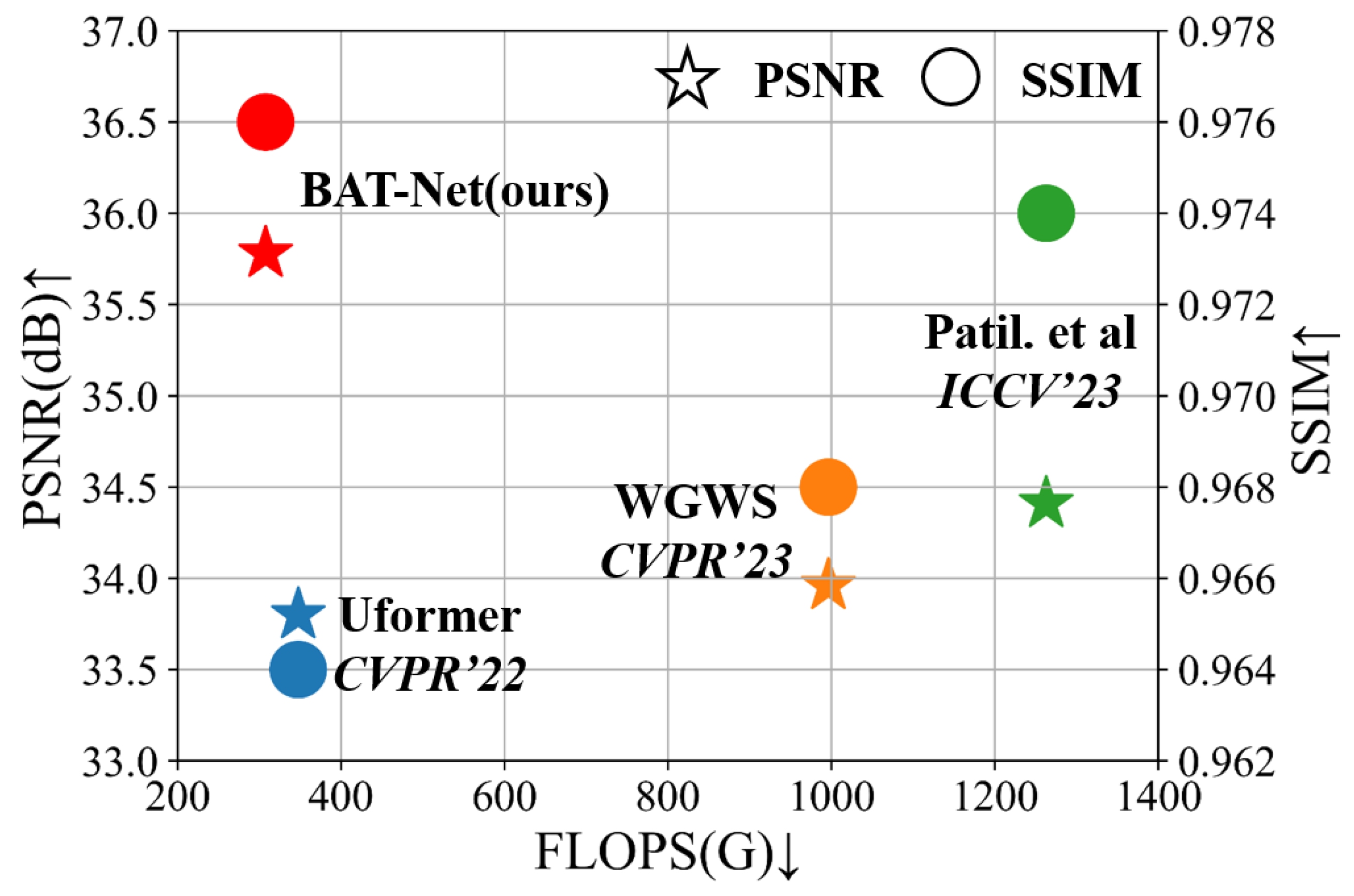
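The quantitative tables report PSNR (in dB) and SSIM. As a reference point, PSNR is defined as 10·log10(MAX² / MSE), where MSE is the mean squared error between the restored image and the ground truth. The sketch below assumes images scaled to [0, 1] (so MAX = 1) and averages over all color channels; the exact evaluation protocol used for the tables (RGB versus luminance, any border cropping) is an assumption here.

```python
import numpy as np


def psnr(restored: np.ndarray, reference: np.ndarray, max_val: float = 1.0) -> float:
    """Peak signal-to-noise ratio (dB) between two equally shaped images."""
    if restored.shape != reference.shape:
        raise ValueError("images must have the same shape")
    diff = restored.astype(np.float64) - reference.astype(np.float64)
    mse = float(np.mean(diff ** 2))
    if mse == 0.0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_val ** 2 / mse))


reference = np.zeros((8, 8, 3))
restored = reference + 0.01  # uniform error of 0.01, so MSE = 1e-4
print(round(psnr(restored, reference), 2))  # 40.0
```

Because PSNR is logarithmic in MSE, a 1 dB gain corresponds to roughly a 21% reduction in mean squared error (10^(-0.1) ≈ 0.79).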

4.2. Comparison with State-of-the-Art Methods

4.2.1. Synthetic Image Desnowing

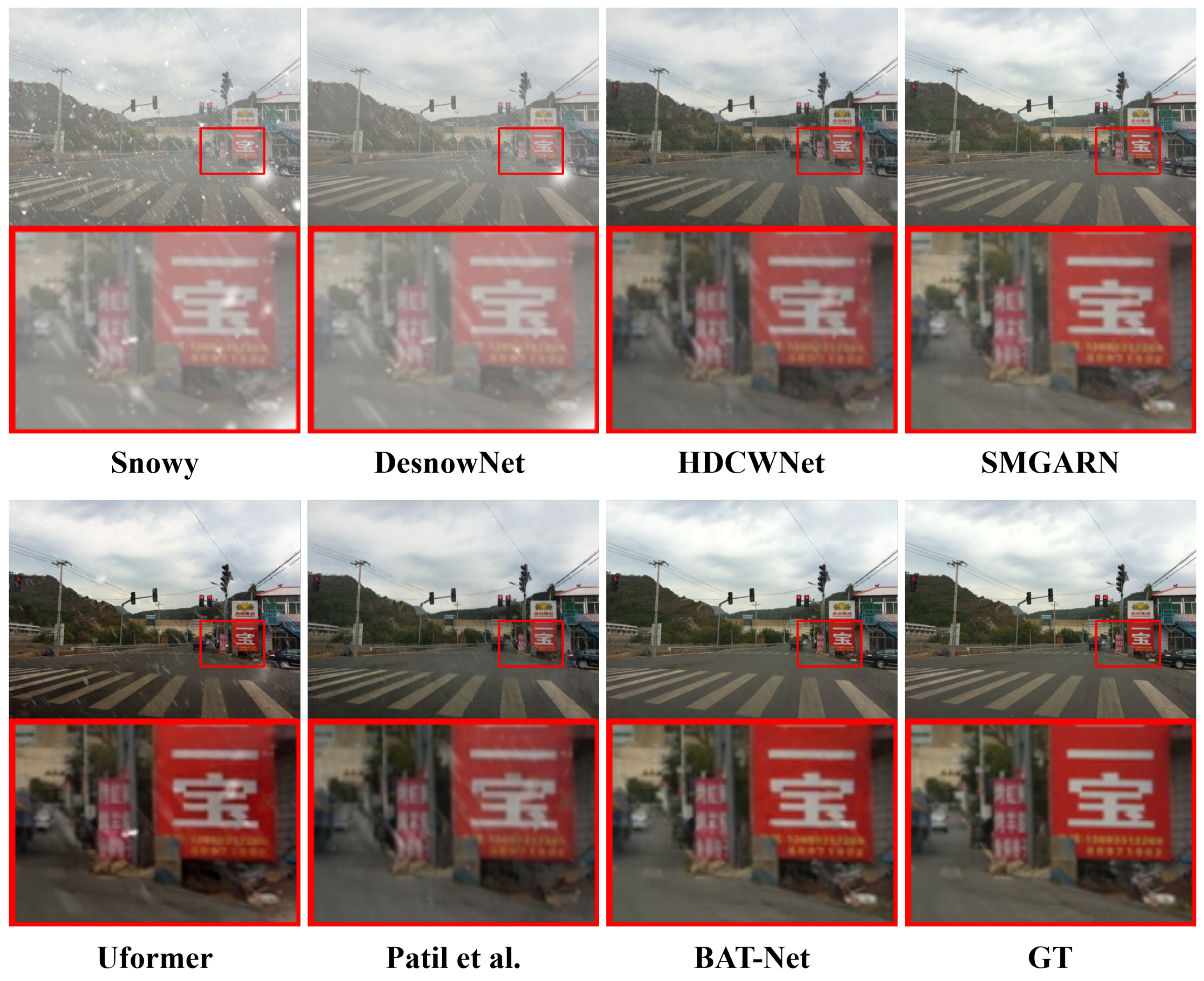

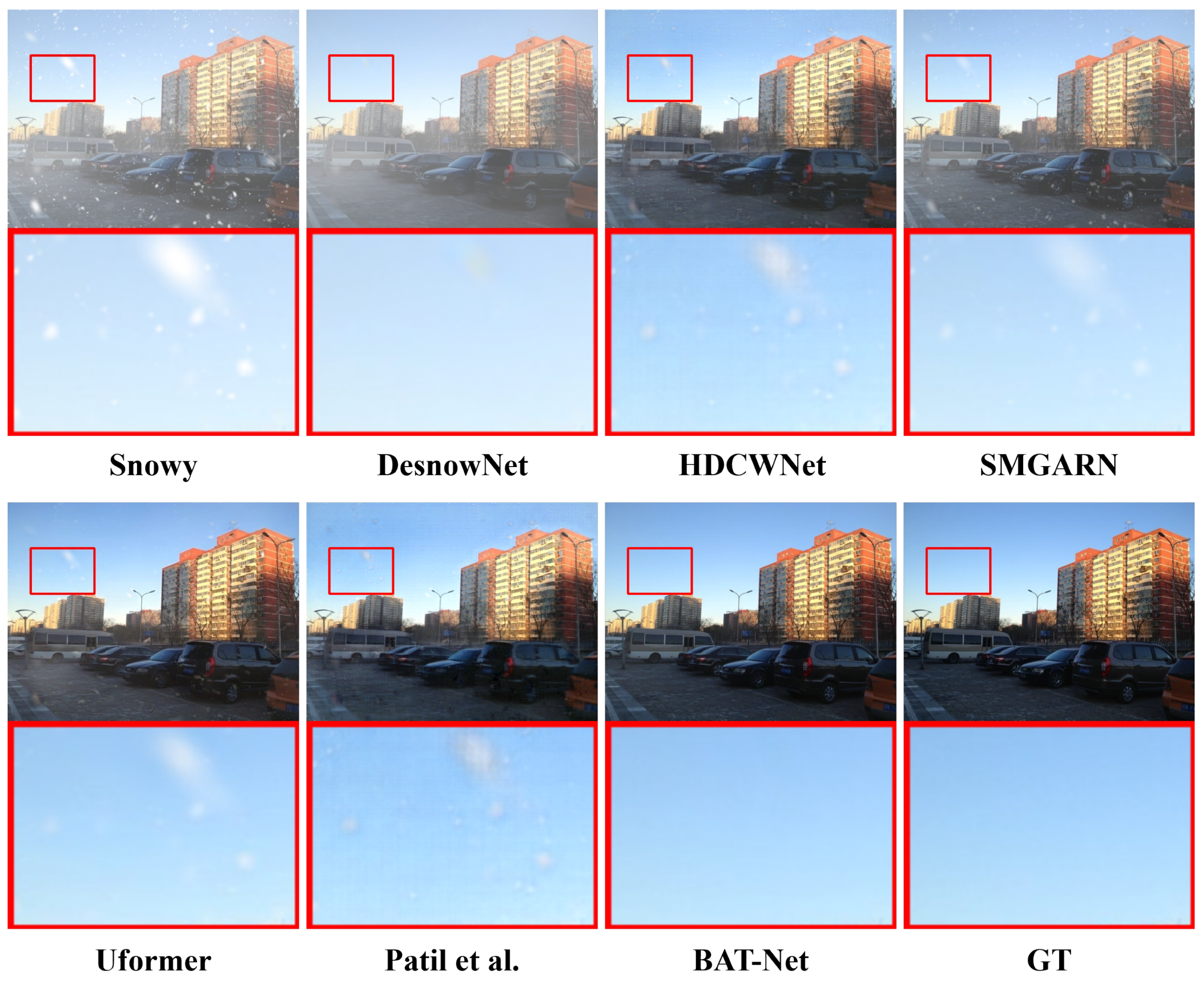

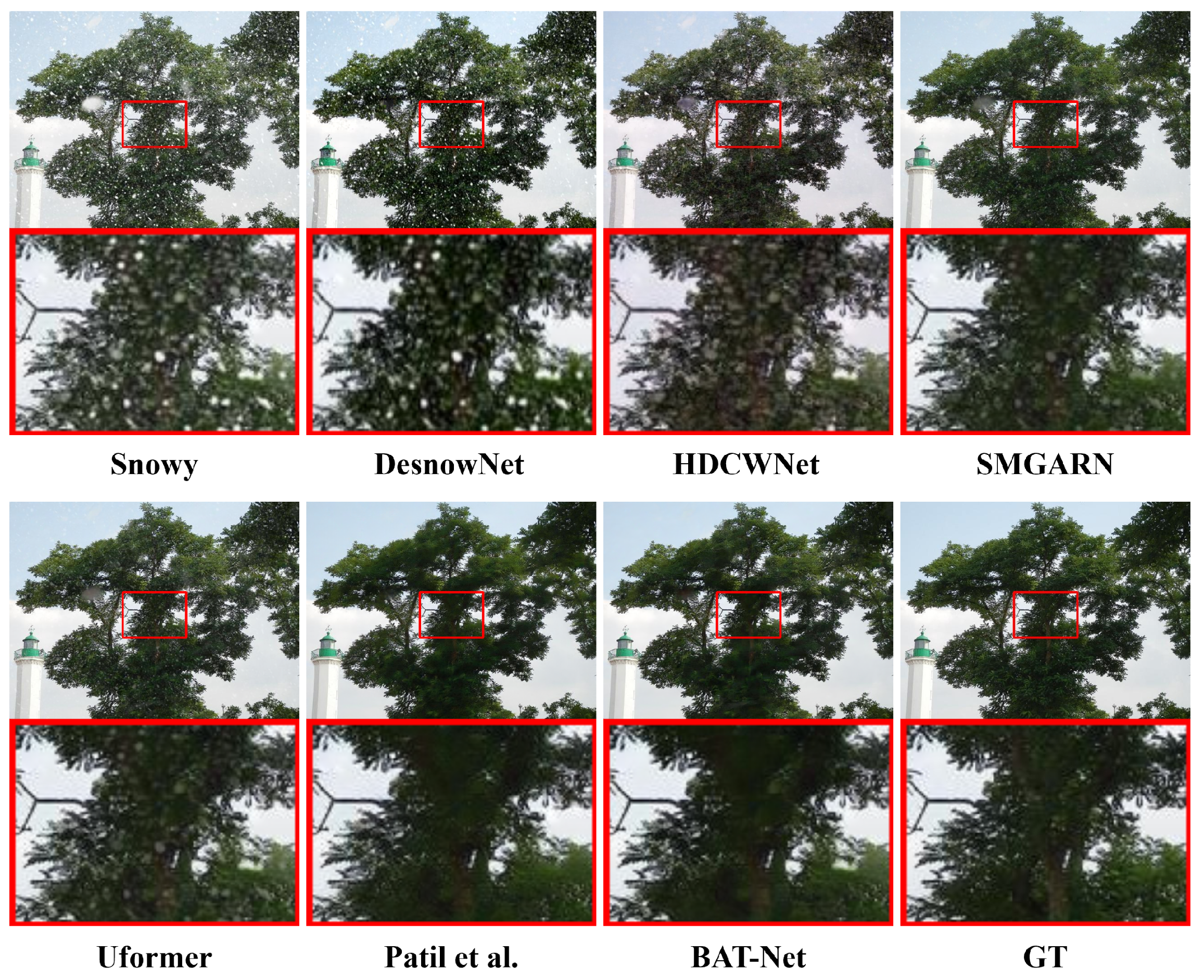

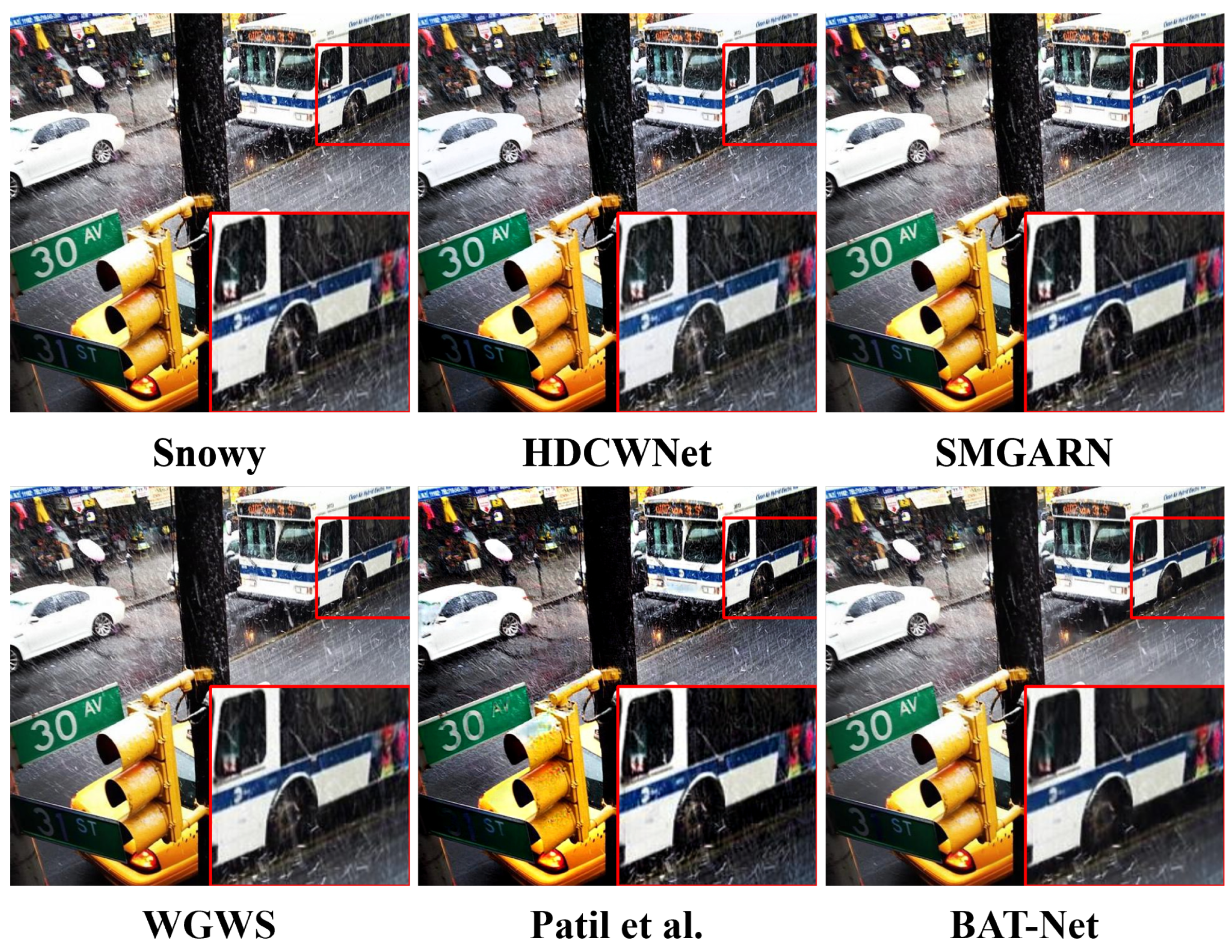

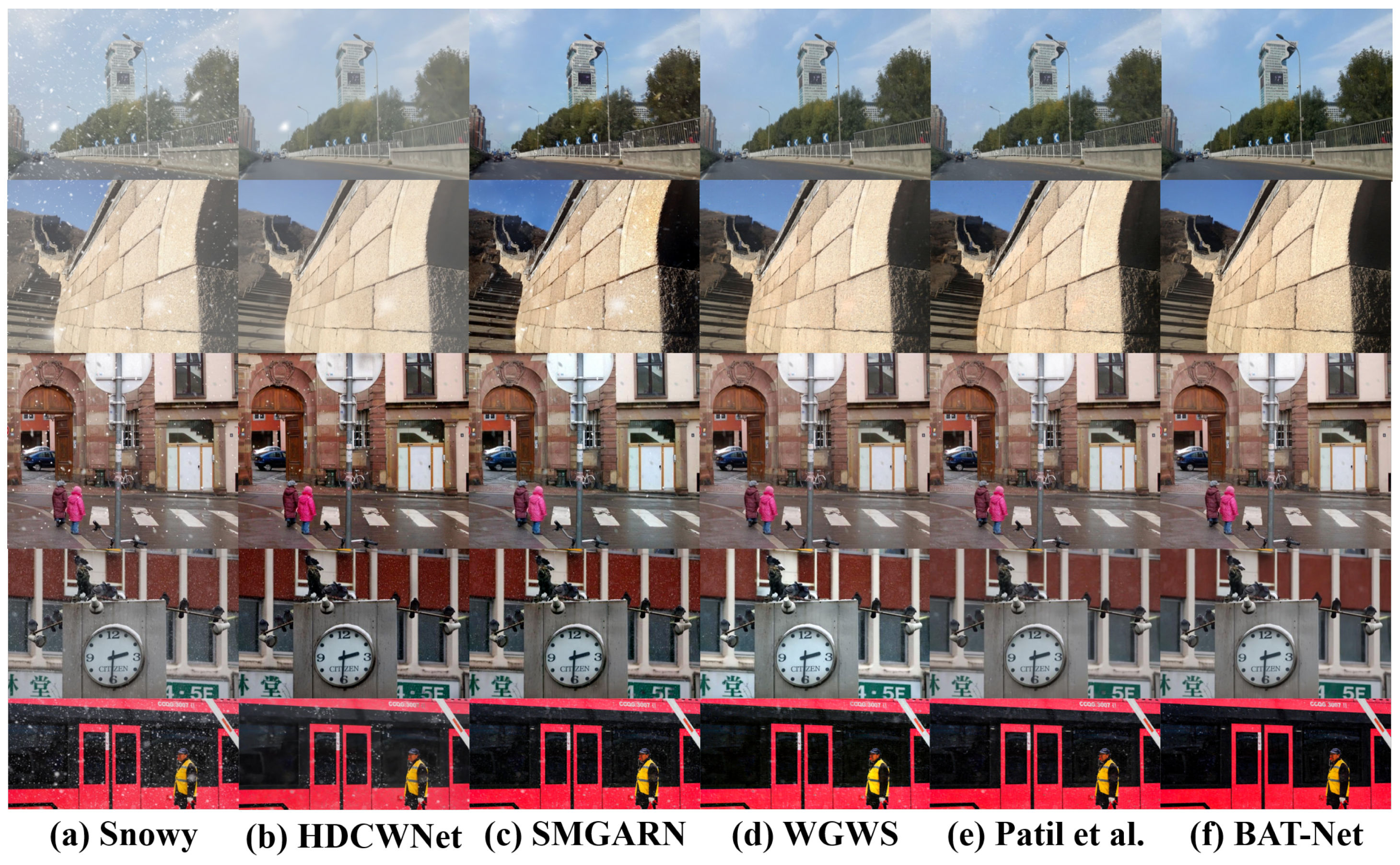

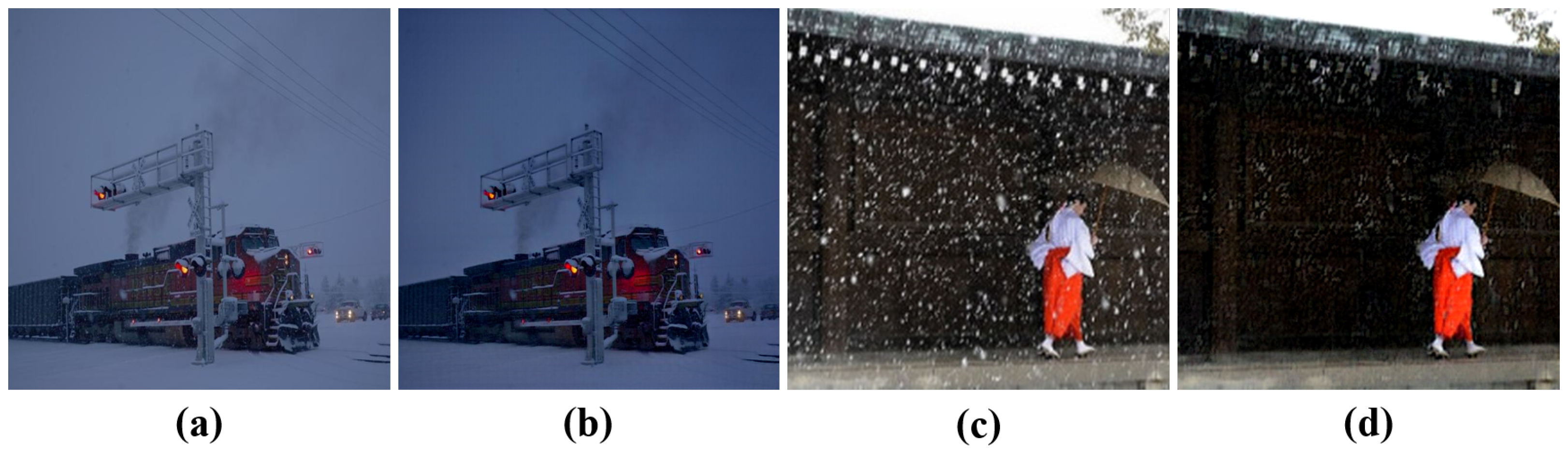

4.2.2. Real-Image Desnowing

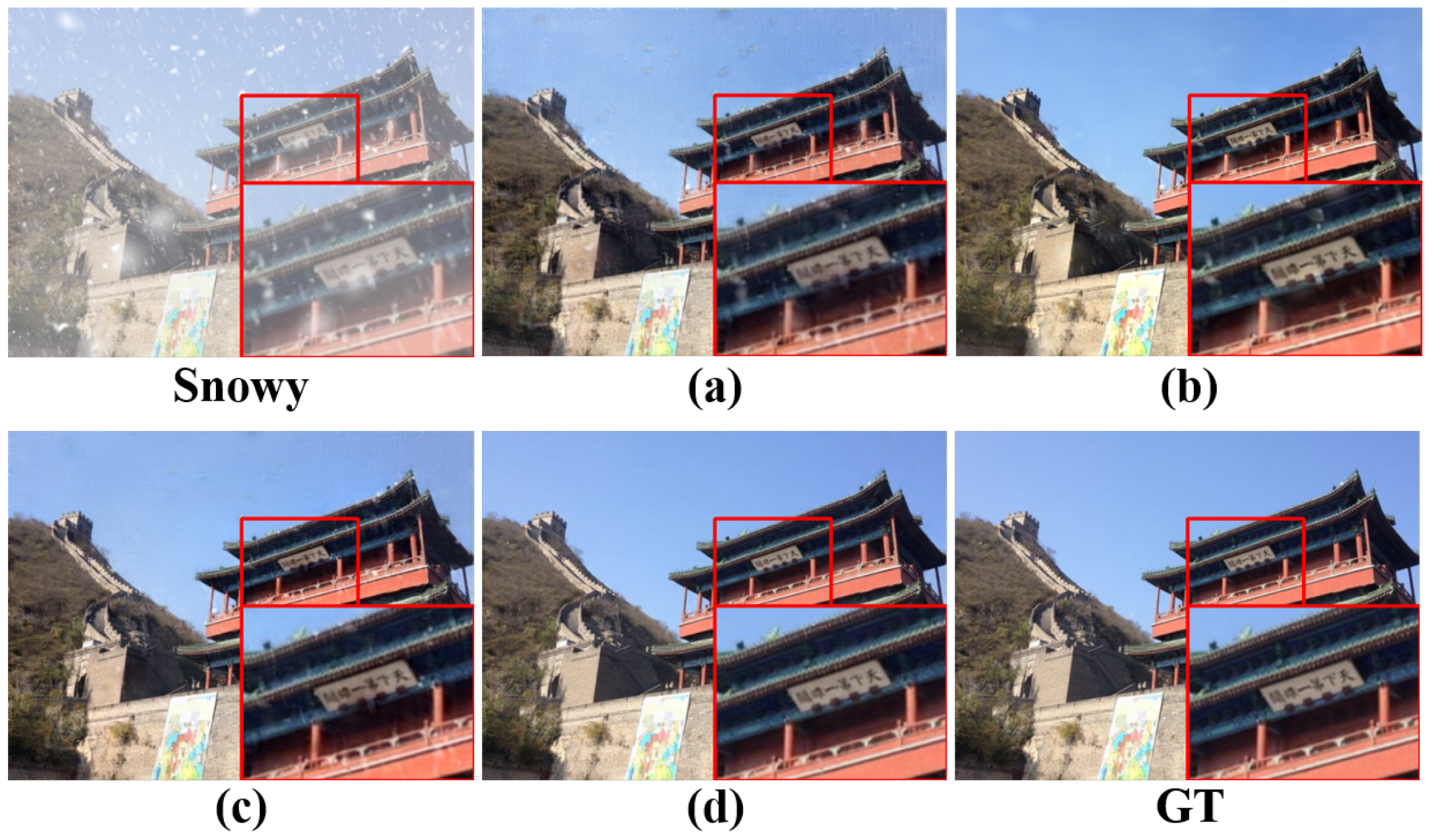

4.3. Qualitative Summary

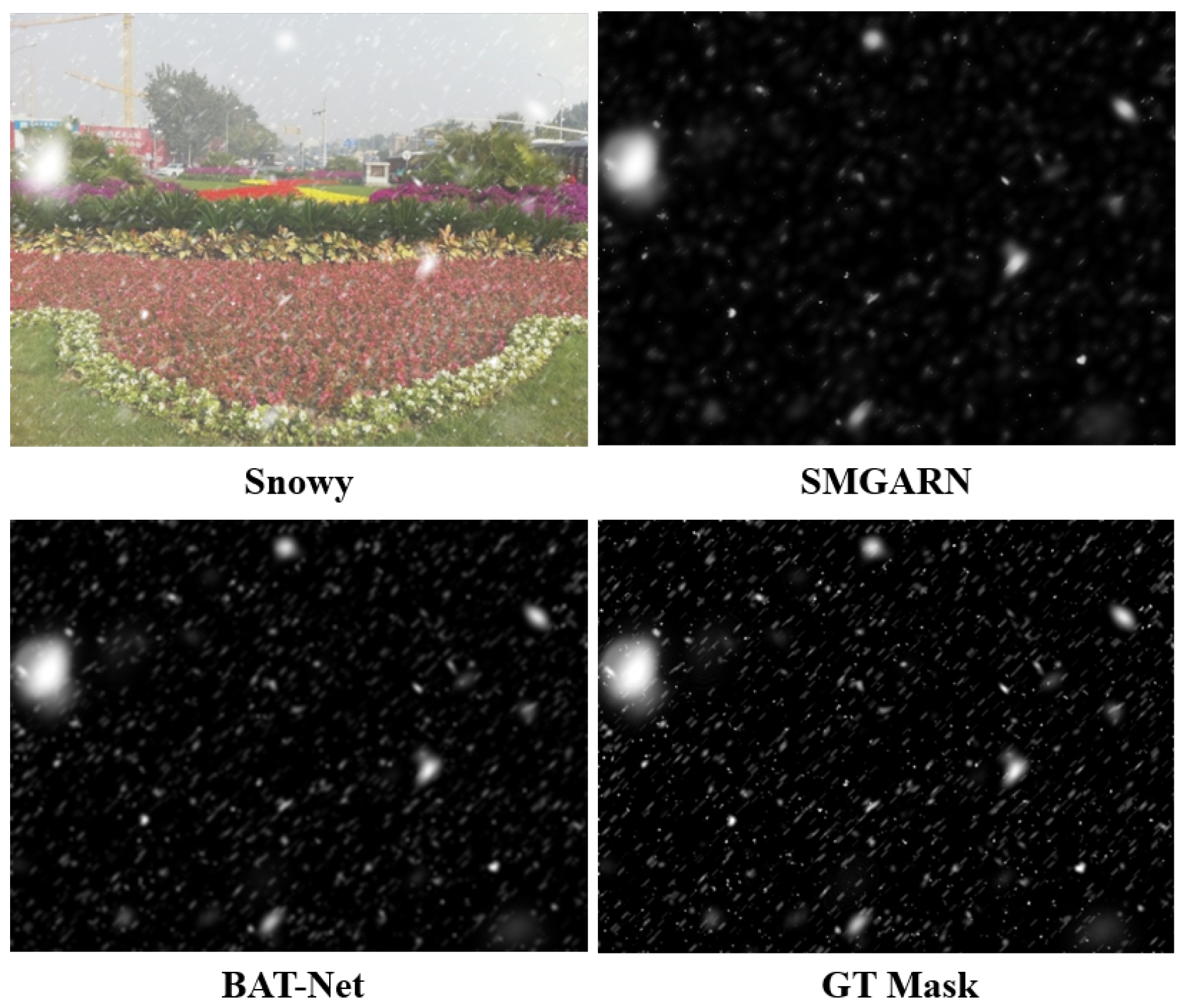

4.4. Snow Mask Prediction

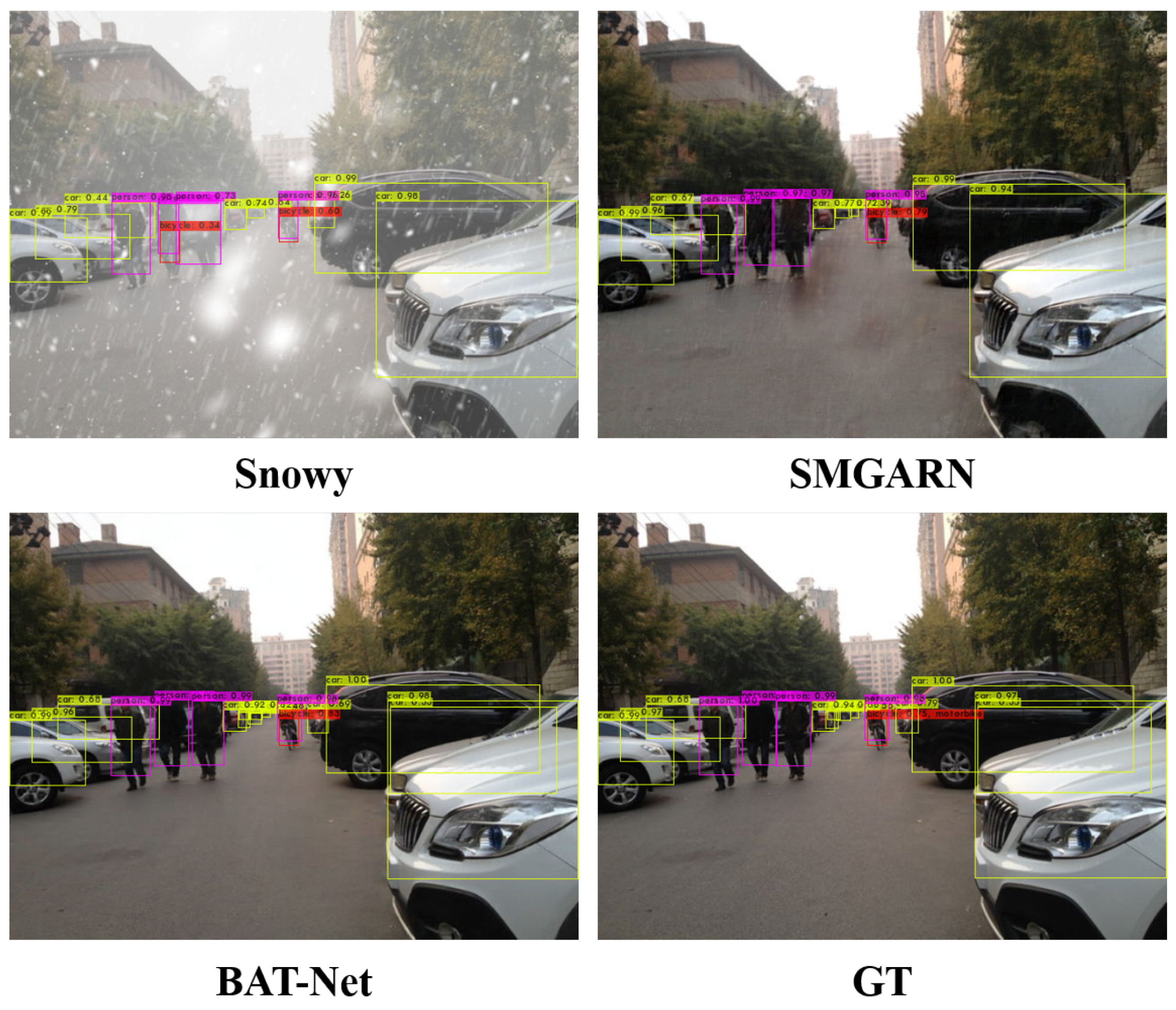

4.5. Object Detection

4.6. Ablation Studies

4.6.1. Contributions of Core Components

- SCM: Removing the Scale Conversion Module results in a significant drop of 0.94 dB in PSNR, confirming that harmonizing multi-scale features onto a uniform grid is crucial for effective subsequent processing.

- FAM: Ablating the Feature Aggregation Module while keeping the SCM leads to a 0.73 dB decrease, demonstrating that cross-scale channel-wise attention is independently vital for integrating fine edges with global illumination contexts.

- Snow Decoder: Disabling the snow decoder, and with it the bidirectional attention, causes the largest performance loss, 2.47 dB in PSNR. This indicates that explicit snow mask prediction and the mutual correction between the two decoders are the most important elements for high-quality background inpainting.
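The drops quoted above can be recomputed directly from the component-ablation table. The short sketch below does this for both PSNR and SSIM; the values are copied from that table (whose full-model score, 35.78/0.976, matches BAT-Net's CSD entry in the comparison table), and the helper name `drops` is illustrative.

```python
# PSNR (dB) / SSIM pairs copied from the component-ablation table.
FULL = (35.78, 0.976)
VARIANTS = {
    "-SCM": (34.84, 0.965),
    "-FAM": (35.05, 0.967),
    "-Snow Decoder": (33.31, 0.961),
}


def drops(full, variants):
    """Return (PSNR drop in dB, SSIM drop) of each ablated variant relative to the full model."""
    return {
        name: (round(full[0] - psnr, 2), round(full[1] - ssim, 3))
        for name, (psnr, ssim) in variants.items()
    }


result = drops(FULL, VARIANTS)
# List variants from the most to the least harmful removal.
for name, (d_psnr, d_ssim) in sorted(result.items(), key=lambda kv: -kv[1][0]):
    print(f"{name:<14} -{d_psnr:.2f} dB  -{d_ssim:.3f} SSIM")
```

The SSIM drops (0.011, 0.009, and 0.015) follow the same ordering as the PSNR drops for the snow decoder, although SCM and FAM are closer in SSIM than in PSNR.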

4.6.2. Study of the Bidirectional Attention Module

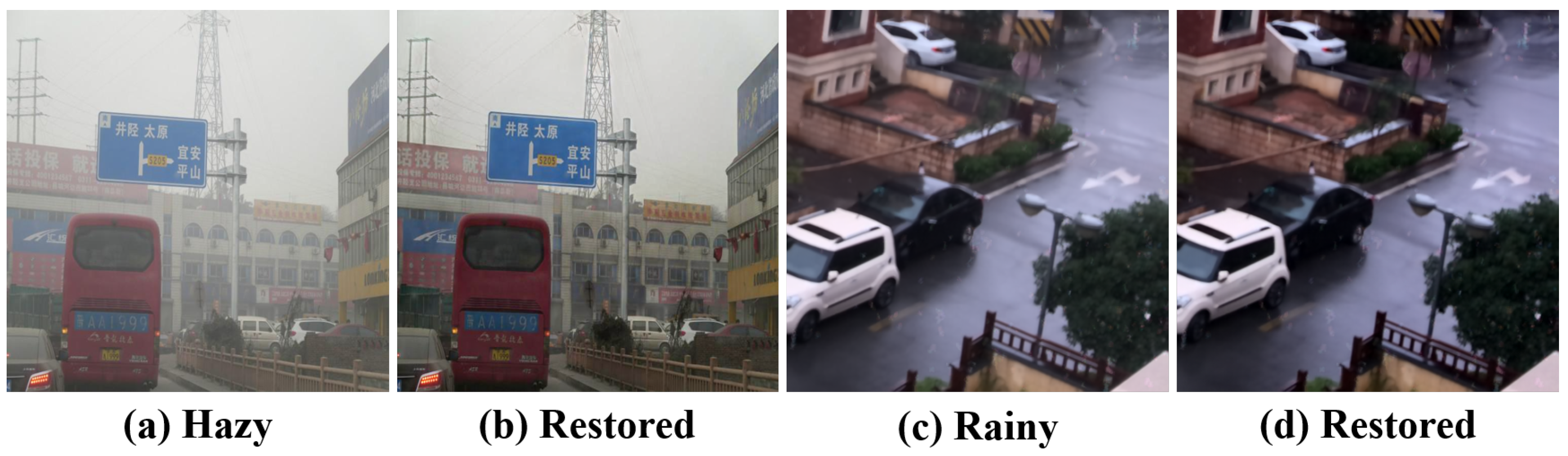

4.7. Cross-Weather Validation

4.8. Failure Case Analysis

4.9. Study of Hyperparameters and Model Complexity

5. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Zhang, Y.; Yan, D. Simultaneous snow mask prediction and single image desnowing with a bidirectional attention transformer network. In Proceedings of the Chinese Conference on Pattern Recognition and Computer Vision (PRCV), Urumqi, China, 13–15 October 2024; Springer: Berlin/Heidelberg, Germany, 2024; pp. 294–308.

2. Xu, J.; Zhao, W.; Liu, P.; Tang, X. An improved guidance image based method to remove rain and snow in a single image. Comput. Inf. Sci. 2012, 5, 49.

3. Zheng, X.; Liao, Y.; Guo, W.; Fu, X.; Ding, X. Single-image-based rain and snow removal using multi-guided filter. In Proceedings of the Neural Information Processing: 20th International Conference, ICONIP 2013, Daegu, Korea, 3–7 November 2013; Springer: Berlin/Heidelberg, Germany, 2013; pp. 258–265, Proceedings, Part III 20.

4. Pei, S.C.; Tsai, Y.T.; Lee, C.Y. Removing rain and snow in a single image using saturation and visibility features. In Proceedings of the 2014 IEEE International Conference on Multimedia and Expo Workshops (ICMEW), Chengdu, China, 14–18 July 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 1–6.

5. Wang, Y.; Liu, S.; Chen, C.; Zeng, B. A hierarchical approach for rain or snow removing in a single color image. IEEE Trans. Image Process. 2017, 26, 3936–3950.

6. Yu, S.; Zhao, Y.; Mou, Y.; Wu, J.; Han, L.; Yang, X.; Zhao, B. Content-adaptive rain and snow removal algorithms for single image. In Proceedings of the Advances in Neural Networks–ISNN 2014: 11th International Symposium on Neural Networks, ISNN 2014, Hong Kong SAR, China; Macao SAR, China, 28 November–1 December 2014; Springer: Berlin/Heidelberg, Germany, 2014; pp. 439–448, Proceedings 11.

7. Zhang, K.; Li, R.; Yu, Y.; Luo, W.; Li, C. Deep dense multi-scale network for snow removal using semantic and depth priors. IEEE Trans. Image Process. 2021, 30, 7419–7431.

8. Li, P.; Yun, M.; Tian, J.; Tang, Y.; Wang, G.; Wu, C. Stacked dense networks for single-image snow removal. Neurocomputing 2019, 367, 152–163.

9. Chen, W.T.; Fang, H.Y.; Hsieh, C.L.; Tsai, C.C.; Chen, I.; Ding, J.J.; Kuo, S.Y. All snow removed: Single image desnowing algorithm using hierarchical dual-tree complex wavelet representation and contradict channel loss. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 4196–4205.

10. Liu, Y.F.; Jaw, D.W.; Huang, S.C.; Hwang, J.N. DesnowNet: Context-aware deep network for snow removal. IEEE Trans. Image Process. 2018, 27, 3064–3073.

11. Chen, W.T.; Fang, H.Y.; Ding, J.J.; Tsai, C.C.; Kuo, S.Y. JSTASR: Joint size and transparency-aware snow removal algorithm based on modified partial convolution and veiling effect removal. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 754–770, Proceedings, Part XXI 16.

12. Cheng, B.; Li, J.; Chen, Y.; Zeng, T. Snow mask guided adaptive residual network for image snow removal. Comput. Vis. Image Underst. 2023, 236, 103819.

13. Wang, Z.; Cun, X.; Bao, J.; Zhou, W.; Liu, J.; Li, H. Uformer: A general U-shaped transformer for image restoration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 17683–17693.

14. Zhu, Y.; Wang, T.; Fu, X.; Yang, X.; Guo, X.; Dai, J.; Qiao, Y.; Hu, X. Learning Weather-General and Weather-Specific Features for Image Restoration Under Multiple Adverse Weather Conditions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 21747–21758.

15. Patil, P.W.; Gupta, S.; Rana, S.; Venkatesh, S.; Murala, S. Multi-weather Image Restoration via Domain Translation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 21696–21705.

16. Wang, C.; Shen, M.; Yao, C. Rain streak removal by multi-frame-based anisotropic filtering. Multimed. Tools Appl. 2017, 76, 2019–2038.

17. Ding, X.; Chen, L.; Zheng, X.; Huang, Y.; Zeng, D. Single image rain and snow removal via guided L0 smoothing filter. Multimed. Tools Appl. 2016, 75, 2697–2712.

18. Fazlali, H.; Shirani, S.; Bradford, M.; Kirubarajan, T. Single image rain/snow removal using distortion type information. Multimed. Tools Appl. 2022, 81, 14105–14131.

19. Jaw, D.W.; Huang, S.C.; Kuo, S.Y. DesnowGAN: An efficient single image snow removal framework using cross-resolution lateral connection and GANs. IEEE Trans. Circuits Syst. Video Technol. 2020, 31, 1342–1350.

20. Li, Z.; Zhang, J.; Fang, Z.; Huang, B.; Jiang, X.; Gao, Y.; Hwang, J.N. Single image snow removal via composition generative adversarial networks. IEEE Access 2019, 7, 25016–25025.

21. Cheng, Y.; Ren, H.; Zhang, R.; Lu, H. Context-aware coarse-to-fine network for single image desnowing. Multimed. Tools Appl. 2023, 83, 55903–55920.

22. Li, Y.; Qian, Q.; Duan, H.; Min, X.; Xu, Y.; Jiang, X. Boosting power line inspection in bad weather: Removing weather noise with channel-spatial attention-based UNet. Multimed. Tools Appl. 2023, 83, 88429–88445.

23. Li, B.; Liu, X.; Hu, P.; Wu, Z.; Lv, J.; Peng, X. All-in-one image restoration for unknown corruption. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 17452–17462.

24. Valanarasu, J.M.J.; Yasarla, R.; Patel, V.M. TransWeather: Transformer-based restoration of images degraded by adverse weather conditions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 2353–2363.

25. Zamir, S.W.; Arora, A.; Khan, S.; Hayat, M.; Khan, F.S.; Yang, M.H.; Shao, L. Multi-stage progressive image restoration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Online, 19–25 June 2021; pp. 14821–14831.

26. Chen, W.T.; Huang, Z.K.; Tsai, C.C.; Yang, H.H.; Ding, J.J.; Kuo, S.Y. Learning multiple adverse weather removal via two-stage knowledge learning and multi-contrastive regularization: Toward a unified model. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 17653–17662.

27. Zamir, S.W.; Arora, A.; Khan, S.; Hayat, M.; Khan, F.S.; Yang, M.H. Restormer: Efficient transformer for high-resolution image restoration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 5728–5739.

28. Liang, J.; Cao, J.; Sun, G.; Zhang, K.; Van Gool, L.; Timofte, R. SwinIR: Image restoration using Swin transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 1833–1844.

29. Lee, H.; Choi, H.; Sohn, K.; Min, D. KNN local attention for image restoration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 2139–2149.

30. Song, Y.; He, Z.; Qian, H.; Du, X. Vision transformers for single image dehazing. IEEE Trans. Image Process. 2023, 32, 1927–1941.

31. Chen, X.; Li, H.; Li, M.; Pan, J. Learning A Sparse Transformer Network for Effective Image Deraining. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–23 June 2023; pp. 5896–5905.

32. Loshchilov, I.; Hutter, F. SGDR: Stochastic gradient descent with warm restarts. arXiv 2016, arXiv:1608.03983.

33. Yang, W.; Tan, R.T.; Feng, J.; Liu, J.; Guo, Z.; Yan, S. Deep joint rain detection and removal from a single image. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 June 2017; pp. 1357–1366.

34. Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

35. Li, B.; Ren, W.; Fu, D.; Tao, D.; Feng, D.; Zeng, W.; Wang, Z. Benchmarking single-image dehazing and beyond. IEEE Trans. Image Process. 2018, 28, 492–505.

36. Wang, T.; Yang, X.; Xu, K.; Chen, S.; Zhang, Q.; Lau, R.W. Spatial attentive single-image deraining with a high quality real rain dataset. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 12270–12279.

| Model | Architecture | Input/Output | Core Mechanism | Limitations/Addressed Gap |
|---|---|---|---|---|
| DesnowNet [10] | Multi-scale CNN | Snowy Image/Clean Image | Multi-scale convolutional network to capture snow of different sizes. | Lacks explicit snow modeling; struggles with complex, semi-transparent snow. |
| SMGARN [12] | Two-stage CNN | Snowy Image/Clean Image + Snow Mask | Predicts a snow mask first, then uses it to guide restoration. | Unidirectional flow: errors in the initial mask are baked into the final output. |
| Uformer [13] | U-shaped Transformer | Degraded Image/Clean Image | Non-overlapping window-based self-attention for local–global modeling. | A general-purpose restorer; does not explicitly model the snow–background physical interaction. |
| WGWS [14] | CNN with gating | Degraded Image/Clean Image | A gating mechanism to fuse features from snowy and pre-denoised images. | Handles snow only implicitly; lacks a dedicated, interactive module for occlusion reasoning. |
| BAT-Net (ours) | Dual-decoder Transformer | Snowy Image/Clean Image + Snow Mask | Bidirectional Attention Module (BAM) for closed-loop, mutual correction between decoders. | Addresses the fragility of unidirectional pipelines by enabling real-time cross-decoder verification and refinement. |

| Dataset | Type | Training Images | Test Images | Characteristics |
|---|---|---|---|---|
| CSD [9] | Synthetic | 8000 | 2000 | Large-scale synthetic dataset with diverse snow degradation. |
| SRRS [11] | Synthetic | 10,000 | 2000 | Features snow of different sizes and transparency levels. |
| Snow100K [10] | Synthetic | 20,000 | 2000 | Contains three levels of snowfall severity (light, medium, heavy). |
| Snow100K-Real [10] | Real | - | 1329 | Real snow scenes, often containing both falling and accumulated snow. |
| FallingSnow (ours) | Real | - | 1394 | Curated real-world dataset featuring only falling snow, eliminating the confounding factor of ground snow accumulation. |

| Category | Methods | Source |
|---|---|---|
| Desnowing Methods | DesnowNet [10] | TIP’2018 |
| | JSTASR [11] | ECCV’2020 |
| | HDCWNet [9] | ICCV’2021 |
| | SMGARN [12] | CVIU’2023 |
| Multiple Degradations Removal | MPRNet [25] | CVPR’2021 |
| | Chen et al. [26] | CVPR’2022 |
| | Uformer [13] | CVPR’2022 |
| | WGWS [14] | CVPR’2023 |
| | Patil et al. [15] | ICCV’2023 |

| Method | CSD [9] PSNR↑/SSIM↑ | SRRS [11] PSNR↑/SSIM↑ | Snow100K [10] PSNR↑/SSIM↑ |
|---|---|---|---|
| Snowy input | 14.26/0.692 | 16.51/0.787 | 22.51/0.759 |
| DesnowNet [10] | 20.13/0.815 | 20.38/0.844 | 30.50/0.941 |
| JSTASR [11] | 27.96/0.883 | 25.82/0.896 | 23.12/0.866 |
| HDCWNet [9] | 29.06/0.914 | 27.78/0.928 | 31.54/0.951 |
| SMGARN [12] | 31.93/0.952 ± 0.10/0.002 | 29.14/0.947 ± 0.11/0.002 | 31.92/0.933 ± 0.07/0.003 |
| MPRNet [25] | 33.98/0.971 | 30.37/0.960 | 33.87/0.952 |
| Chen et al. [26] | 33.62/0.961 | 30.17/0.953 | 32.67/0.939 |
| Uformer [13] | 33.80/0.964 ± 0.08/0.002 | 30.72/0.968 ± 0.11/0.001 | 33.81/0.947 ± 0.07/0.001 |
| WGWS [14] | 33.96/0.968 ± 0.09/0.002 | 30.55/0.965 ± 0.05/0.002 | 34.21/0.953 ± 0.07/0.002 |
| Patil et al. [15] | 34.41/0.974 ± 0.07/0.003 | 31.21/0.967 ± 0.11/0.001 | 34.11/0.950 ± 0.09/0.002 |
| BAT-Net | 35.78/0.976 ± 0.06/0.001 | 32.13/0.971 ± 0.07/0.002 | 34.62/0.957 ± 0.10/0.002 |
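To make the size of BAT-Net's lead in the synthetic-benchmark comparison explicit, the sketch below finds the strongest competing method on each dataset and the PSNR gap to it. Only the five strongest baselines are included (the older methods all score lower); the values are copied from the table, and the helper name `margin_over_runner_up` is illustrative.

```python
# PSNR (dB) of the strongest methods, copied from the comparison table.
PSNR = {
    "CSD": {"MPRNet": 33.98, "Chen et al.": 33.62, "Uformer": 33.80,
            "WGWS": 33.96, "Patil et al.": 34.41, "BAT-Net": 35.78},
    "SRRS": {"MPRNet": 30.37, "Chen et al.": 30.17, "Uformer": 30.72,
             "WGWS": 30.55, "Patil et al.": 31.21, "BAT-Net": 32.13},
    "Snow100K": {"MPRNet": 33.87, "Chen et al.": 32.67, "Uformer": 33.81,
                 "WGWS": 34.21, "Patil et al.": 34.11, "BAT-Net": 34.62},
}


def margin_over_runner_up(scores, ours="BAT-Net"):
    """Return the best competing method and our PSNR lead over it (dB)."""
    others = {name: value for name, value in scores.items() if name != ours}
    runner_up = max(others, key=others.get)
    return runner_up, round(scores[ours] - others[runner_up], 2)


for dataset, scores in PSNR.items():
    runner_up, lead = margin_over_runner_up(scores)
    print(f"{dataset}: +{lead:.2f} dB over {runner_up}")
```

The resulting leads are 1.37 dB (CSD) and 0.92 dB (SRRS) over Patil et al., and 0.41 dB (Snow100K) over WGWS; for comparison, the largest ± spread listed in the table is 0.11 dB.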

| Methods | PSNR↑/SSIM↑ |
|---|---|
| JORDER [33] | 19.95/0.392 ± 0.14/0.004 |
| DesnowNet [10] | 22.01/0.566 ± 0.10/0.002 |
| JSTASR [11] | 23.67/0.621 ± 0.11/0.003 |
| SMGARN [12] | 24.95/0.667 ± 0.09/0.002 |
| BAT-Net | 26.67/0.732 ± 0.08/0.002 |

| Model Variant | SCM | FAM | Snow Decoder | PSNR↑/SSIM↑ |
|---|---|---|---|---|
| BAT-Net (Full) | ✔ | ✔ | ✔ | 35.78/0.976 |
| -SCM | - | ✔ | ✔ | 34.84/0.965 |
| -FAM | ✔ | - | ✔ | 35.05/0.967 |
| -Snow Decoder | ✔ | ✔ | - | 33.31/0.961 |

| Configuration | Forward Attention | Reverse Attention | PSNR↑/SSIM↑ |
|---|---|---|---|
| (a) Baseline (w/o BAM) | - | - | 34.21/0.966 |
| (b) Reverse only | - | ✔ | 35.12/0.971 |
| (c) Forward only | ✔ | - | 35.34/0.973 |
| (d) Full BAM | ✔ | ✔ | 35.78/0.976 |

| Config. | Layer Numbers (4 Stages) | Attention Heads (4 Stages) | PSNR↑/SSIM↑ |
|---|---|---|---|
| (a) | 4,4,4,4 | 2,2,4,4 | 34.66/0.967 |
| (b) | 4,4,4,4 | 1,2,4,8 | 34.92/0.971 |
| (c) | 2,4,4,6 | 2,2,4,4 | 35.07/0.970 |
| (d) | 2,4,4,6 | 1,2,4,8 | 35.78/0.976 |

| Model | FLOPs (G) | Params (M) | Inference Time (s) | FPS |
|---|---|---|---|---|
| Uformer [13] | 347.6 | 50.9 | 0.1737 | 5.76 |
| WGWS [14] | 996.2 | 12.6 | 0.1919 | 5.21 |
| Patil et al. [15] | 1262.9 | 11.1 | 0.1098 | 9.11 |
| BAT-Net (ours) | 307.4 | 43.2 | 0.1073 | 9.32 |
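The FPS column in the complexity table equals the reciprocal of the per-image inference time, which suggests images were timed one at a time; the batch size and hardware are assumptions here. The sketch below reproduces the column from the listed times; `fps` is an illustrative helper name.

```python
# Seconds per image, copied from the complexity table.
INFERENCE_TIME_S = {"Uformer": 0.1737, "WGWS": 0.1919, "Patil et al.": 0.1098, "BAT-Net": 0.1073}


def fps(seconds_per_image: float) -> float:
    """Throughput implied by a per-image latency, assuming images are processed one at a time."""
    return 1.0 / seconds_per_image


for model, seconds in INFERENCE_TIME_S.items():
    print(f"{model:<13} {fps(seconds):.2f} FPS")
```

Note that FLOPs and latency do not track each other across models: Patil et al. requires about four times the FLOPs of BAT-Net yet runs at a similar speed, so measured latency depends on more than arithmetic cost.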


© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, Y. BAT-Net: Bidirectional Attention Transformer Network for Joint Single-Image Desnowing and Snow Mask Prediction. Information 2025, 16, 966. https://doi.org/10.3390/info16110966