Abstract

The rapid integration of artificial intelligence (AI) into content generation has encouraged the development of AI detection tools aimed at distinguishing between human- and AI-authored texts. These tools are increasingly adopted not only in academia but also in sensitive decision-making contexts, including candidate screening by hiring agencies in government and private sectors. This extensive reliance raises serious questions about their reliability, fairness, and appropriateness for high-stakes applications. This study evaluates the performance of six widely used AI content detection tools, namely Undetectable AI, ZeroGPT.com, ZeroGPT.net, Brandwell.ai, Winston AI (gowinston.ai), and Crossplag, referred to as Tools A through F in this study. The assessment focused on the ability of the tools to distinguish human-authored from AI-generated content across multiple domains. Verified human-authored texts were gathered from reputable sources, including university websites, pre-ChatGPT publications in Nature and Science, government portals, and media outlets (e.g., BBC, U.S. News). Complementary datasets of AI-generated texts were produced using ChatGPT-4o, encompassing coherent essays, nonsensical passages, and hybrid texts with grammatical errors, to test tool robustness. The results reveal significant performance limitations. Accuracy ranged from 14.3% (Tool B) to 71.4% (Tool D), with the precision and recall metrics showing inconsistent detection capabilities. The tools were also highly sensitive to minor textual modifications: slight changes in phrasing could flip classifications between “AI-generated” and “human-authored.” Overall, the current AI detection tools lack the robustness and reliability needed for enforcing academic integrity or making employment-related decisions. The findings highlight an urgent need for more transparent, accurate, and context-aware frameworks before these tools can be responsibly incorporated into critical institutional or societal processes.

1. Introduction

As artificial intelligence (AI) technologies advance, the ability of large language models (LLMs) to produce human-like text has raised serious concerns regarding authenticity, intellectual property, academic integrity, and misinformation. Generative AI systems such as ChatGPT by OpenAI [1], Gemini by Google [2], and Copilot by Microsoft [3] have rapidly emerged as powerful tools capable of generating coherent text and engaging in natural language dialogs through advanced natural language processing (NLP) techniques. Nowadays, these tools are broadly adopted in education, research, industry, and daily life. Nevertheless, their extensive use has prompted questions about originality and authenticity, which has led to the rising development and deployment of AI content detection systems.

The rapid evolution of AI chatbots is largely attributable to advances in OpenAI’s Generative Pre-Trained Transformer (GPT) series. GPT-1, introduced in 2018, demonstrated the potential of combining unsupervised pre-training with supervised fine-tuning to achieve improvements across a variety of NLP tasks [4]. In 2019, GPT-2 extended this paradigm by adopting a significantly larger architecture and a broad, diverse training dataset, which produced notable improvements in fluency and contextual reasoning [5]. The subsequent release of GPT-3 in 2020 marked a major milestone in AI research. With 175 billion parameters, GPT-3 established state-of-the-art performance across numerous NLP benchmarks and displayed the ability to produce highly realistic and contextually coherent text [6]. A fine-tuned version of GPT-3.5 became the foundation of ChatGPT, which was first introduced to the public in November 2022 [1].

In March 2023, OpenAI launched GPT-4, a large multimodal model capable of processing both textual and visual input and producing textual output [7,8]. Compared with GPT-3.5, GPT-4 demonstrated superior reasoning capability, enhanced contextual comprehension, and improved performance on complex tasks. In May 2024, OpenAI further expanded the multimodal ability of its models with GPT-4o, which supports text, image, video, and audio inputs, and produces outputs in text, image, and audio modalities [9]. GPT-4o was particularly significant for enabling real-time interactive conversations with response times similar to those of human speakers. This model replaced earlier methods that required separate systems for audio transcription and response generation, thereby providing a more natural conversational experience. In July 2024, a smaller and more cost-efficient version, GPT-4o mini, was introduced [10]. In September 2024, OpenAI released the o1 model, which was trained with large-scale reinforcement learning to strengthen its reasoning capacity by allowing the system to work through intermediate problem-solving steps before producing answers [11]. This approach reflects a growing trend of combining reinforcement learning techniques with LLMs to improve reliability, factual accuracy, and interpretability.

The increasing sophistication of generative AI systems has intensified the need for robust detection mechanisms capable of differentiating human-authored text from machine-generated content. Several detection systems have been developed and are commonly used across academic, journalistic, and professional environments. While their specific implementations vary, most tools aim to provide users with a probability score or a categorical classification indicating the likelihood that a text is AI-generated. For example, ZeroGPT and Undetectable AI present their evaluations as numerical percentages that quantify the probability of a text being human-written or machine-generated. Other tools, such as Brandwell.ai, present categorical outcomes, such as “Reads like AI,” “Passes as Human,” or “Hard to Tell,” thereby giving users an interpretative framework rather than a statistical score. Additional tools, including Copyleaks AI Detector and Turnitin’s AI writing detection module, combine probabilistic detection with plagiarism-checking systems, facilitating their application in academic evaluation workflows. Table 1 presents a selection of broadly used AI content detection tools that differ in accessibility, output formats, and explanation methods. The tools listed were chosen based on their popularity and extensive application in academic and professional contexts. These detection tools are increasingly adopted in contexts where authenticity is crucial. In education, the tools serve as mechanisms for academic integrity enforcement by pinpointing AI-generated submissions. In journalism, the tools help mitigate the risk of publishing AI-generated misinformation. Meanwhile, in recruitment and professional screening, organizations are using the tools to ensure that application materials genuinely reflect the candidate’s skills rather than the capabilities of generative AI. The adoption of such systems highlights a broader societal reliance on automated verification tools in maintaining trust and authenticity in digital communication.

Table 1.

Overview of extensively used AI detection tools.

Despite their wide adoption, AI content detection tools face significant challenges regarding accuracy, interpretability, and fairness. The risk of misclassification remains high, and the consequences of inaccurate detection can be severe. Human-authored work may be erroneously flagged as AI-generated, leading to academic penalties or reputational harm, while AI-generated work may evade detection, undermining trust in academic, journalistic, or organizational processes. This dual risk underscores the fragile balance between the promise and limitations of detection systems. The challenge is intensified by the rapid advancement of LLMs. Recent systems such as GPT-4 and GPT-4o produce text that closely resembles human writing, both in linguistic variety and contextual suitability. As a result, the linguistic markers that early detection models depended upon have become less reliable. Wu et al. [12] highlighted that the introduction of autoregressive models significantly increased the difficulty of detection by reducing the statistical differences between human- and machine-authored text. The existing detection methods, many of which originally depended on statistical analysis of word frequency distributions, are no longer sufficiently discriminative [13]. Moreover, a report showed that between January 2022 and May 2023, mainstream media experienced a 55% increase in AI-generated news articles, accompanied by a 457% rise in misinformation during the same period [14]. This surge illustrates not only the growing use of generative AI but also its potential to amplify disinformation at unprecedented scales [15,16]. Scholarly reviews suggest that while early methods treated detection as a relatively straightforward classification task, the evolving sophistication of LLMs requires more nuanced methods. Beresneva [13] noted that detection once depended on relatively simple statistical cues, but contemporary models challenge these assumptions. As generative models become more expressive, detection tools must evolve to integrate deeper linguistic, contextual, and semantic analysis, possibly through hybrid methods that combine statistical methods with neural network-based classification systems.

The integration of generative AI into essential sectors highlights the urgency of developing reliable methods for distinguishing between human-written and AI-generated content. In academia, unreliable detection may result in false allegations of misconduct or the acceptance of unoriginal work. In journalism, failure to identify AI-authored articles may erode trust in media institutions. In professional contexts such as recruitment, an inability to evaluate authenticity may lead to hiring decisions based on misrepresented skills. These risks are compounded by the accelerating improvement of generative AI models, which steadily narrows the gap between human and machine writing. Therefore, this study seeks to conduct a systematic assessment of widely adopted AI content detection tools, with the objective of evaluating their accuracy and reliability under varying conditions. Text samples from both academic and non-academic sources are used, and performance is measured using statistical metrics such as accuracy, precision, and recall. Through this evaluation, the study aims to offer a clearer understanding of the strengths and limitations of existing detection systems, as well as insights into their practical applicability across different domains.

2. Research Methodology

This study conducts a comprehensive assessment of the effectiveness of six broadly used AI content detection tools in distinguishing between human-written and AI-generated text. Both freely accessible and subscription-based tools are considered to offer a balanced representation of the systems presently available in academic and professional contexts. For simplicity, the tools are labeled and referenced as Tools A through F in this study. Specifically, these are Undetectable AI (Tool A), ZeroGPT.com (Tool B), ZeroGPT.net (Tool C), Brandwell.ai (Tool D), Winston AI (Tool E), and Crossplag (Tool F). The selection of these tools was based on their popularity, accessibility, and frequent application in higher education, publishing, and organizational evaluation workflows. Additionally, these tools represent a range of underlying detection methodologies and have substantial user bases, making them relevant for real-world application. The selection aimed to capture a representative sample of broadly adopted detection technologies to offer meaningful insights into their performance and limitations. To assess these detection systems, human-authored text was gathered from a variety of high-quality sources. A large portion of the dataset was obtained from the official websites of globally ranked universities, ensuring reliable human-authored material for testing. The universities selected include the Massachusetts Institute of Technology (MIT) [17], University of Cambridge [18], Harvard University [19], University of British Columbia [20], Imperial College London [21], University of Illinois Urbana-Champaign [22,23], Stanford University [24], and the University of California, Berkeley [25,26]. Additional material was also collected from Idaho State University [27]. The rationale for choosing university content is grounded in the assumption that official institutional web materials are professionally written and carefully reviewed, and therefore represent authentic human authorship.

Besides the university sources, the dataset integrated a diverse collection of academic publications. To establish a baseline of unquestionably human-authored academic text, journal articles published before the release of generative AI models such as ChatGPT were adopted. These include influential works from 2005 to 2015 [28,29,30,31,32,33,34,35]. Among them is the seminal paper published in 2015 by Yann LeCun, Yoshua Bengio, and Geoffrey Hinton [32], who are often referred to as the “godfathers of AI” and are recipients of the Turing Award, with Geoffrey Hinton also receiving the 2024 Nobel Prize in Physics. Since these papers precede the development of advanced AI text generators, they serve as a reliable benchmark for assessing the capability of the tools to correctly identify human-authored scientific literature. Moreover, recent papers were also included to reflect contemporary academic writing styles and to test whether the detection tools could effectively distinguish between recent human-authored text and AI-generated content. The selected sources include publications in Nature, Humanities and Social Sciences Communications, and Nature Communications [36,37,38,39]. Additionally, content from other sections of Nature’s website was included, such as the Aims and Scope statements of Nature npj, Artificial Intelligence, and editorials such as Nature News Q&A [40,41,42]. These provide additional diversity in writing tone and technical depth, spanning both academic and semi-academic communication styles.

To extend the assessment beyond strictly academic writing, the dataset also included text drawn from international media outlets such as BBC News, BBC Sport, and U.S. News. The media articles differ significantly from academic writing in structure, vocabulary, and narrative tone, providing a valuable counterpoint for testing the generalizability of detection tools. Similarly, content from government websites was incorporated to capture formal administrative and policy-oriented language. Notable examples include the famous speech “I Have a Dream” by Martin Luther King Jr., accessed through the U.S. National Archives [43], and selected content from the Government of Canada’s official website [44]. These sources were selected because they represent different writing genres and rhetorical styles, expanding the range of test material available for evaluation.

To probe the limitations of AI detection tools, the dataset was deliberately augmented with AI-generated text that had been post-processed through targeted editing. Specifically, grammatical errors and minor stylistic inconsistencies were introduced into ChatGPT-generated passages to simulate imperfect human writing. This adjustment was designed to test whether the detection tools could still identify such content as machine-authored, or whether the edits would reduce the likelihood of AI identification. In addition, illogical text generated by GPT-4o was included in the dataset. This category of content, while syntactically valid, lacks semantic coherence and is therefore useful for testing whether detection tools are sensitive to logical inconsistencies or rely solely on surface-level linguistic features. To further stress-test the robustness of the tools, sensitivity experiments were conducted by applying minor modifications to human-authored text. These involved the deletion of single words, adjustments to punctuation, or slight paraphrasing. The objective was to evaluate whether small alterations could substantially change the classification output of detection tools, thereby exposing their vulnerability to manipulation. For this purpose, content from the Stanford University News webpage was used [45]. Table 2 summarizes the different categories of text sources employed in this study.

Table 2.

Overview of different categories of text used for the analysis.
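The sensitivity experiments described above rely on very small edits, such as deleting one word or changing one punctuation mark. The following is a minimal Python sketch of how such variants could be generated for testing. The function names `single_word_deletions` and `colon_to_period` and the sample sentence are hypothetical illustrations, not the procedure or text used in the study, and the sketch normalizes whitespace as a simplifying assumption.

```python
def single_word_deletions(text):
    """Yield every variant of `text` with exactly one whitespace-separated word removed.

    Assumption: whitespace is normalized to single spaces in the output.
    """
    words = text.split()
    for i in range(len(words)):
        yield " ".join(words[:i] + words[i + 1:])


def colon_to_period(text):
    """Replace the first colon with a period and capitalize the following letter."""
    head, sep, tail = text.partition(":")
    if not sep:
        return text  # no colon present, nothing to change
    tail = tail.lstrip()
    return head + ". " + tail[:1].upper() + tail[1:]


# Hypothetical sample sentence, used only to show the shape of the output.
sample = "The lab has one goal: to share its findings openly."
variants = list(single_word_deletions(sample))
punctuation_variant = colon_to_period(sample)
```

Each variant would then be submitted to a detection tool and its output compared with the output for the unmodified text.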

The performance of the selected AI detection tools was evaluated using three statistical metrics: accuracy, precision, and recall. Accuracy denotes the proportion of correctly classified instances relative to the total number of evaluated samples. While accuracy provides a general measure of performance, it is sensitive to class imbalance and may not reflect performance well in cases where one class dominates the dataset [46]. Precision, also known as the positive predictive value, measures the proportion of correctly identified AI-generated instances among all instances classified as AI-generated [46]. This metric is especially relevant in contexts such as academic integrity, where false positives could lead to unjust penalties for students or researchers. Recall, also referred to as sensitivity or the true positive rate, quantifies the proportion of actual AI-generated instances that were correctly identified by the detection tool [47]. This measure is crucial in detecting fraudulent or manipulated content, where the consequences of missed detection can be severe. For tools that report probabilistic scores (e.g., percentage likelihood of AI authorship), a threshold of 50% was applied. In this study, any text assigned a score above 50% AI-likelihood was classified as AI-generated, while scores below this threshold were categorized as human-authored. This threshold was chosen because it is widely used in the literature as a balanced point in the trade-off between false positives and false negatives. This fixed cutoff enables comparability across studies. For tools that provided categorical or binary outputs without percentage probabilities, the classification was adopted directly without conversion. This ensured consistency in assessment while respecting the native output design of each tool. The combination of diverse text sources, manipulated content, and multiple assessment metrics enables a comprehensive analysis of the effectiveness of current AI detection systems. By integrating academic, non-academic, historical, and modified AI-generated text, this study provides a rigorous evaluation of the robustness and reliability of detection tools in differentiating between human and machine authorship.
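As an illustration of the thresholding rule and the three metrics defined above, the following Python sketch treats "AI-generated" as the positive class. It is a sketch under stated assumptions rather than the study's actual evaluation code: the names `label_from_score` and `evaluate` are hypothetical, the scores and ground-truth labels are invented for illustration, a score of exactly 50% is assigned to the human class, and a metric with a zero denominator is reported as 0.

```python
AI, HUMAN = "ai", "human"


def label_from_score(ai_percent, threshold=50.0):
    """Scores above the threshold count as AI-generated.

    Assumption: a score exactly equal to the threshold is labeled human.
    """
    return AI if ai_percent > threshold else HUMAN


def evaluate(truth, predicted):
    """Compute accuracy, precision, and recall with AI as the positive class."""
    pairs = list(zip(truth, predicted))
    tp = sum(t == AI and p == AI for t, p in pairs)        # AI text flagged as AI
    fp = sum(t == HUMAN and p == AI for t, p in pairs)     # human text flagged as AI
    fn = sum(t == AI and p == HUMAN for t, p in pairs)     # AI text labeled human
    tn = sum(t == HUMAN and p == HUMAN for t, p in pairs)  # human text labeled human
    return {
        "accuracy": (tp + tn) / len(pairs),
        # Assumption: report 0.0 when the denominator is zero.
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


# Invented example data: three AI-generated texts followed by four human-authored texts.
truth = [AI, AI, AI, HUMAN, HUMAN, HUMAN, HUMAN]
ai_scores = [80.0, 20.0, 10.0, 100.0, 90.0, 30.0, 60.0]
predicted = [label_from_score(s) for s in ai_scores]
metrics = evaluate(truth, predicted)
```

With these invented inputs, one of three AI texts is caught and three of four human texts are flagged, so accuracy is 2/7, precision is 1/4, and recall is 1/3. The example also shows why accuracy alone can be misleading when the two classes are unevenly represented.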

3. Results and Discussion

3.1. General Overview of Detection Performance

The results of this study reveal the limited effectiveness of current AI detection tools in accurately differentiating between human-written and AI-generated content. Table 3 summarizes the classification results across different categories of text, while detailed numerical outputs for each source are available in the Supplementary Materials. Overall, the results highlight recurring issues, including frequent misclassification of authentic human-authored text as AI-generated, inconsistent behavior across tools, and extreme sensitivity to minor textual changes. Taken together, these outcomes raise concerns regarding the reliability of AI detection systems, particularly when used in high-stakes settings such as academia, publishing, or professional environments.

Table 3.

Results of detection tools.

3.2. Misclassification of Human-Written University Content

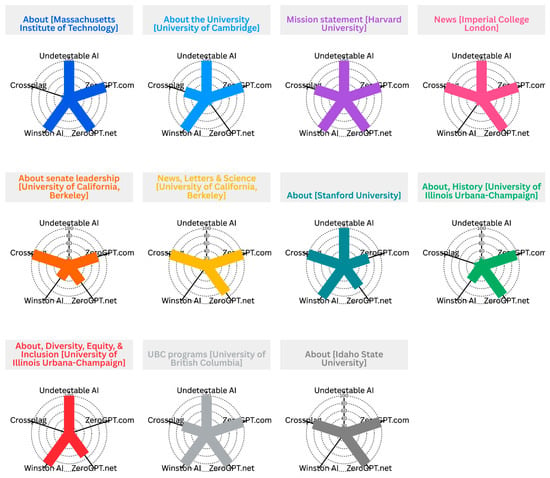

One of the most striking results of the evaluation was the extensive misclassification of university content. “About” pages, mission statements, and informational texts sourced from globally renowned institutions, including the Massachusetts Institute of Technology, the University of Cambridge, Harvard University, and the University of British Columbia, were consistently flagged as AI-generated by most tools. For example, Tools A (Undetectable AI), B (ZeroGPT.com), C (ZeroGPT.net), and E (Winston AI) consistently labeled such content as 100% AI-generated text, while Tool D (Brandwell.ai) classified the texts as “Reads like AI” or “Hard to Tell.” Figure 1 shows the misclassification of human-written university content by the detection tools except Tool D. This trend can be ascribed to the nature of institutional content itself. University websites usually use formal, structured, and polished language, which closely resembles the stylistic tendencies of AI-generated prose. Detection algorithms therefore appear unable to differentiate between high-quality human writing and machine-generated text. The fact that foundational university descriptions, crafted long before the advent of generative AI, are misclassified at such high rates highlights a critical limitation of these tools: they conflate linguistic sophistication with artificial authorship. This issue is particularly problematic in academic settings, where such tools are increasingly being considered for use in assessing student writing. If even the websites of the world’s most prestigious institutions are flagged as AI-generated, then students producing formal or technical writing could face unwarranted suspicion.

Figure 1.

Classification of human-written university content (Massachusetts Institute of Technology [17], University of Cambridge [18], Harvard University [19], Imperial College London [21], University of California, Berkeley [25,26], Stanford University [24], University of Illinois Urbana-Champaign [22,23], University of British Columbia [20], Idaho State University [27]).

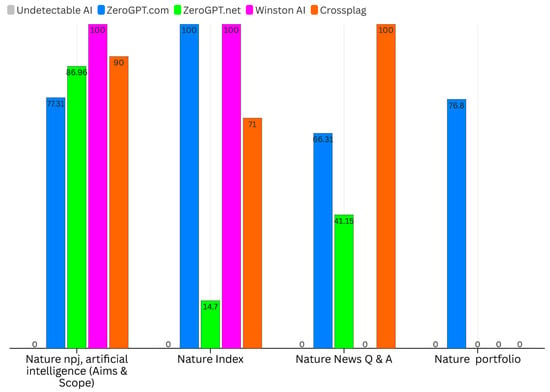

3.3. Published Papers and Journal Websites

The misclassification problem extended beyond university content into peer-reviewed journal publications. As presented in Figure 2, texts taken from leading journals such as Nature [35], including papers and articles published before the rise of AI systems such as ChatGPT, were frequently flagged as AI-generated. For instance, the foundational 2015 paper by LeCun et al. [32], a seminal work in AI research, was consistently classified as 100% AI-generated by all tools except Tool F (Crossplag) (Figure 3). This outcome shows a particularly severe shortcoming of detection tools. Scientific writing is inherently formal, precise, and technical, and it frequently follows strict conventions of academic prose. These stylistic traits are evidently misinterpreted by detection algorithms as indicative of AI authorship. For example, abstracts from papers across various fields, including physics, computer science, and social sciences, were regularly flagged as AI-generated, even when published years before the existence of AI writing tools (Figure 3).

Figure 2.

Classification of text collected from journal websites (Nature npj, artificial intelligence [40], Nature Index [35], Nature News Q&A [42], Nature portfolio [41]).

Figure 3.

Classification of texts gathered from published papers (Srianand et al. [34], Chen et al. [33], LeCun et al. [32], Jung et al. [31], Barber et al. [30], Tsukada et al. [29], Al-Zahrani & Alasmari [36], Jordan & Mitchell [28], Nishiie et al. [39], Peng et al. [38], Guo et al. [37]).

Notably, the results varied significantly across detection tools. For example, Tool B (ZeroGPT.com) frequently returned values close to 100% AI-generated for journal abstracts, while Tool F (Crossplag) produced more mixed results, at times classifying the same content as entirely human-authored. This inconsistency highlights the lack of a standardized methodology across detection systems, which further undermines the interpretability of their outputs. The implications for scholarly publishing are profound. If AI detectors cannot reliably differentiate authentic scientific writing from AI-generated text, their use in editorial workflows could lead to false accusations of misconduct, harming both authors and publishers.

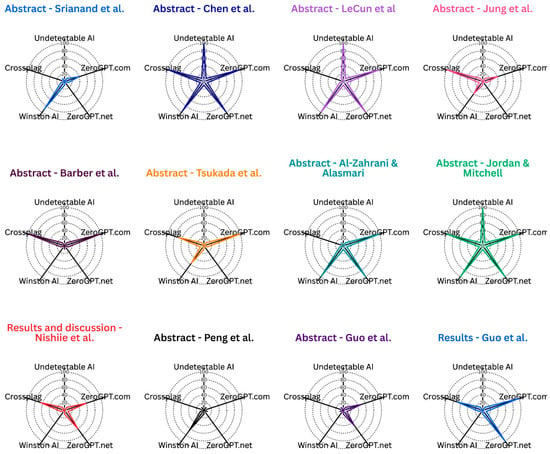

3.4. Governmental and Historical Texts

The unreliability of AI detection tools was further demonstrated by their misclassification of government documents and historical speeches. A prominent example is Martin Luther King Jr.’s famous “I Have a Dream” speech [43], which was flagged as AI-generated by several tools. Tool B classified the speech as 87.7% AI-generated, while Tool C returned 84.4% AI-generated (Figure 4). Similarly, texts from the Government of Canada’s website were identified as AI-generated, with Tools B and F reporting 87.57% and 60%, respectively.

Figure 4.

Classification of government and historical texts (Martin Luther King Jr.’s speech “I Have a Dream.” [43], Government of Canada [44]).

These findings are particularly striking, as both examples represent unambiguously human-authored text with substantial historical and cultural significance. The tendency to misclassify such well-documented, human-authored content reveals the flawed assumptions underlying AI detection algorithms. Specifically, these tools seem to depend heavily on stylistic markers, such as polished grammar and formal sentence structures, without integrating contextual or temporal cues. The misclassification of widely recognized historical and governmental texts raises concerns about the adoption of detection tools in contexts such as plagiarism investigations, where the stakes involve academic integrity, public trust, and reputational consequences.

3.5. Media Outlets

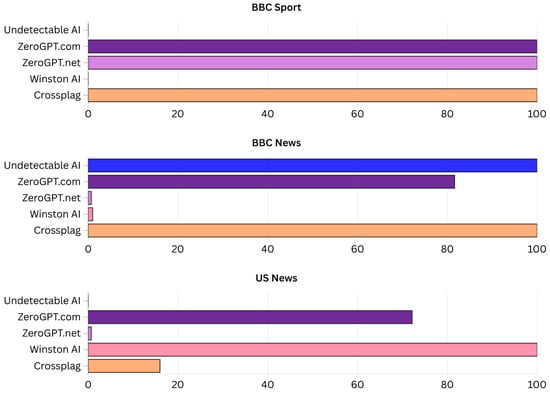

The text sourced from media outlets, including BBC News, BBC Sport, and U.S. News, also produced inconsistent classifications. As illustrated in Figure 5, Tools B, C, and F categorized content from BBC Sport as 100% AI-generated, while content from BBC News was similarly identified by Tools A, B, and F. In contrast, Tool D returned ambiguous outputs such as “Hard to Tell” (Table 3). The tendency to misclassify journalistic writing shows that the problem is not confined to highly formal or technical texts. Instead, the issue extends to news articles, which differ significantly in style and tone from academic writing. This suggests that the current detection models are unable to account for the stylistic diversity of human writing. The implications for journalism are equally concerning. As media organizations increasingly face scrutiny over misinformation and AI-generated content, dependence on flawed detection tools could lead to unwarranted challenges to journalistic credibility.

Figure 5.

Classification of texts collected from media outlets.

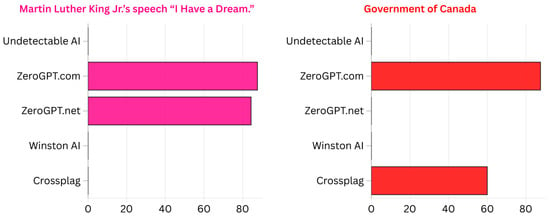

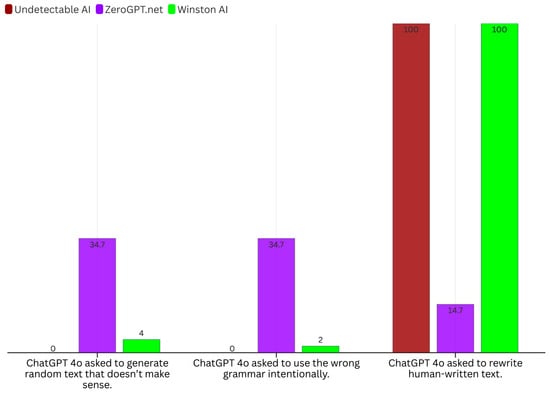

3.6. Misclassification of AI-Generated Content

In contrast to the frequent misclassification of human-authored texts as AI-generated, intentionally altered AI-generated content was frequently identified as human-written. For example, when GPT-4o generated nonsensical or incoherent text, detection tools consistently classified it as human-authored (Figure 6). Likewise, when grammatical errors were deliberately introduced, the outputs were almost uniformly identified as human-authored. This inverse error, mistaking AI text for human-authored material, suggests that detection tools are more sensitive to surface-level grammatical correctness than to deeper markers of coherence or originality. In practice, this means that a user seeking to avoid detection could simply introduce minor errors into AI-generated content, thereby fooling the system. In addition, when ChatGPT was asked to rewrite human-written content, the classification results were again inconsistent. Tools A, D, and E flagged the rewritten text as AI-generated, while the others labeled it as human-written. This inconsistency further illustrates the instability of current detection methodologies.

Figure 6.

Classification of AI-generated texts.

3.7. Quantitative Evaluation of Tool Performance

The quantitative performance of each tool, assessed using accuracy, precision, and recall metrics, is summarized in Table 4. Among the six tools, Tool D (Brandwell.ai) achieved the highest scores, with 71.4% accuracy, 11.1% precision, and 33.3% recall. By contrast, Tool B (ZeroGPT.com) performed the worst, with an accuracy of 14.3% and both precision and recall at 0%. Although the performance of Tool D was comparatively stronger, its precision and recall values remained extremely low. The highest recall across all tools was only 33.3%, and precision never exceeded 11.1%. Such low values indicate that even the best-performing system failed to reliably distinguish between AI and human content. These metrics highlight the fundamental limitations of AI detection tools. The high rates of false positives (misclassifying human text as AI-generated) and false negatives (misclassifying AI text as human-written) render them unsuitable for contexts requiring dependable decision-making.

Table 4.

Classification performance of popular AI detection tools.

3.8. Sensitivity to Minor Textual Changes

An additional series of tests was conducted to examine the tools’ sensitivity to minor textual alterations. Using content from Stanford University News [45], small modifications such as the deletion of a single word or the substitution of a punctuation mark were introduced. The results revealed dramatic shifts in labeling. For instance, in Tool B, deleting a single word changed the classification from 69.51% AI-generated to 100% human-authored. Likewise, in Tool C, the same deletion changed the output from 69.21% AI-generated to just 0.7% AI-generated. These results demonstrate that even negligible edits can completely reverse the classification outcome. Changes in punctuation had equally pronounced effects. Replacing a colon with a period in the first sentence of a paragraph shifted Tool D’s classification from “AI-generated” to “Hard to Tell,” while Tools B and C reported changes ranging from 69.21% AI-generated to 82.32% AI-generated or to nearly 100% human-authored. Such volatility demonstrates that detection tools are not robust to even the simplest textual variations. Rather than detecting intrinsic markers of AI authorship, these systems appear overly sensitive to superficial textual features, resulting in inconsistent and unreliable outputs.
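To make the notion of a classification "flip" concrete, the short Python sketch below applies the study's 50% threshold to the before-and-after scores quoted in this section. The helper names `label` and `flipped` are hypothetical, and two readings are assumptions: "100% human-authored" is interpreted as 0% AI-likelihood, and the 69.21% to 82.32% punctuation result is listed without attributing it to a specific tool.

```python
def label(ai_percent, threshold=50.0):
    """Apply the 50% rule: scores above the threshold count as AI-generated."""
    return "AI-generated" if ai_percent > threshold else "human-authored"


def flipped(before, after, threshold=50.0):
    """Return True when an edit moves the score across the decision threshold."""
    return label(before, threshold) != label(after, threshold)


# (description, AI % before edit, AI % after edit), using scores quoted in the text.
reported = [
    ("Tool B, one word deleted", 69.51, 0.0),  # assumption: 100% human read as 0% AI
    ("Tool C, one word deleted", 69.21, 0.7),
    ("punctuation change", 69.21, 82.32),      # score shifts but the label stays the same
]
results = {name: flipped(before, after) for name, before, after in reported}
```

Under these assumptions, both single-word deletions reverse the label, while the punctuation change that raised the score to 82.32% alters the score by about 13 percentage points without changing the label.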

3.9. Broader Implications

Collectively, these findings highlight a critical flaw in the current landscape of AI content detection. The detection tools that are broadly marketed as solutions for academic integrity or content verification cannot reliably fulfill their intended role. Instead, the tools produce frequent false positives, misclassify altered AI text, and deliver inconsistent results across contexts. The broader implications are far-reaching. In academia, the misclassification of authentic student writing could lead to unfair penalties. In publishing, false accusations of AI authorship could undermine scholarly trust. In journalism, reliance on faulty detection could erode public confidence. Furthermore, the demonstrated ease with which AI text can evade detection through minor edits raises serious doubts about the long-term viability of the detection tools. These challenges highlight the need for policymakers, educators, and industry leaders to approach AI content detection with caution, ensuring that decisions informed by the detection tools do not inadvertently harm individuals or institutions. In addition, the ethical and legal implications of misclassification must be carefully considered as AI integration in society continues to grow. Overall, while AI detection remains a promising area of research, the results of this study suggest that current implementations are not yet fit for high-stakes use. Instead, the tools should be employed cautiously, if at all, and always in conjunction with human judgment and additional verification methods.

4. Recommendations for Future Development and Usage of AI Detection Tools

Based on the results of this study, several actions are recommended to improve the reliability and responsible application of AI content detection tools. First, standardized benchmarking frameworks should be established, built on diverse, well-curated datasets of both human-authored and AI-generated texts. Open access to such benchmarks will aid transparent performance comparisons and foster ongoing improvements across detection tools. Second, detection algorithms should integrate adaptive thresholding and context-aware analysis. Moving beyond fixed thresholds to consider factors such as text genre and stylistic nuances can reduce false positive and false negative rates. Third, enhancing robustness against minor text manipulation is critical. The detection tools should be trained with distorted and adversarial examples to maintain detection precision despite simple edits or grammatical variations. Fourth, transparency from developers regarding the limitations and performance metrics of the detection tools must be prioritized. End users, especially in academia and publishing, require guidance to interpret results carefully, supplementing automated outputs with human judgment and additional verification. Finally, interdisciplinary collaboration involving researchers, educators, policymakers, and industry leaders is required to develop ethical guidelines and best practices. This will help mitigate risks related to fairness, privacy, and misuse in high-stakes applications. Implementing these measures will aid the creation of more trustworthy and effective AI content detection tools, enabling the tools to function as valuable aids rather than sole arbiters in authorship evaluation.
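The second recommendation, replacing a single fixed cutoff with context-aware thresholds, can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the genre names, the threshold values, and the three-way outcome with an explicit "inconclusive" band are hypothetical and are not drawn from any of the evaluated tools. In a real system, the thresholds would have to be calibrated on a benchmark dataset.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class Band:
    """Decision band for one genre; scores between the bounds are inconclusive."""
    human_below: float  # scores at or below this are labeled human-authored
    ai_above: float     # scores at or above this are labeled AI-generated


# Hypothetical values; formal genres get a stricter AI cutoff because
# polished human prose tends to be flagged more often.
DEFAULT_BAND = Band(human_below=0.30, ai_above=0.90)
GENRE_BANDS: Dict[str, Band] = {
    "scientific_abstract": Band(human_below=0.40, ai_above=0.97),
    "news": Band(human_below=0.35, ai_above=0.95),
}


def decide(score: float, genre: str) -> str:
    """Map a detector score in [0, 1] to a label using a genre-specific band."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must lie in [0, 1]")
    band = GENRE_BANDS.get(genre, DEFAULT_BAND)
    if score >= band.ai_above:
        return "likely AI-generated"
    if score <= band.human_below:
        return "likely human-authored"
    return "inconclusive: refer to human review"


if __name__ == "__main__":
    for score, genre in [(0.6921, "news"), (0.92, "blog"), (0.92, "news")]:
        print(f"{genre:>20}  {score:.4f}  ->  {decide(score, genre)}")
```

The design choice worth noting is the explicit inconclusive band: a mid-range score is routed to human review instead of being forced into one of two labels.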

5. Conclusions

The rapid advancement and adoption of generative artificial intelligence technologies have created an urgent need for reliable AI content detection tools. Given that these tools are increasingly used in academic, professional, and even regulatory settings, it is crucial to evaluate their accuracy, consistency, and reliability before relying on the tools in critical decision-making contexts. This study undertook a comprehensive assessment of six widely adopted AI detection tools across a diverse range of textual sources, including prestigious university websites, journal articles published before the emergence of generative AI, government websites, mainstream media outlets, and texts produced by ChatGPT-4o under varying conditions. The findings revealed serious shortcomings in all detection tools assessed. Remarkably, a widely cited 2015 Nature paper authored by AI pioneers LeCun et al. [32] was incorrectly flagged as AI-generated by five of the six tools, despite being published years before generative AI systems existed. Likewise, several journal articles published in Nature prior to the release of generative AI were also misclassified as AI-produced. This trend extended to reputable university websites, where sections such as “About,” “History,” “Mission,” and program descriptions were frequently labeled as AI-generated despite their authenticity. In addition, governmental texts, including the iconic “I Have a Dream” speech from the U.S. National Archives and official materials from the Government of Canada, were also misclassified by multiple tools. Even professional journalism from BBC News, BBC Sports, and U.S. News was often incorrectly identified as AI-generated. The performance metrics highlight these weaknesses. Tool B (Zerogpt.com) demonstrated the lowest performance overall, with an accuracy of only 14.3% and both precision and recall dropping to 0%. 
By contrast, Tool D (brandwell.ai) showed relatively stronger results, achieving 71.4% accuracy, but still only 11.1% precision and 33.3% recall. These metrics highlight the persistent problem of false positives and missed identifications across all tools. The results indicate that, while some tools may perform marginally better than others, none provides the level of reliability necessary for high-stakes decision-making.
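For readers who wish to reproduce metrics of this kind, the sketch below computes accuracy, precision, and recall from confusion-matrix counts. It assumes that "AI-generated" is the positive class and that a ratio with a zero denominator is reported as 0.0; both are conventions adopted for this example. The counts in the usage section are hypothetical and are not the confusion matrices of this study.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class ConfusionCounts:
    tp: int  # AI-generated texts correctly flagged as AI
    fp: int  # human-authored texts wrongly flagged as AI
    fn: int  # AI-generated texts missed (labeled human)
    tn: int  # human-authored texts correctly labeled human


def safe_ratio(numerator: int, denominator: int) -> float:
    """Return numerator / denominator, or 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


def detection_metrics(c: ConfusionCounts) -> Dict[str, float]:
    """Compute accuracy, precision, and recall with AI-generated as positive."""
    total = c.tp + c.fp + c.fn + c.tn
    return {
        "accuracy": safe_ratio(c.tp + c.tn, total),
        "precision": safe_ratio(c.tp, c.tp + c.fp),
        "recall": safe_ratio(c.tp, c.tp + c.fn),
    }


if __name__ == "__main__":
    # Hypothetical counts chosen only to show that accuracy can look
    # acceptable while precision and recall remain low.
    example = ConfusionCounts(tp=2, fp=3, fn=4, tn=11)
    for name, value in detection_metrics(example).items():
        print(f"{name}: {value:.1%}")
```

Because accuracy pools both classes, it can mask a high false-positive rate on human-authored text, which is why precision and recall are reported alongside it.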

Further investigation revealed that the tools are highly sensitive to even minor textual modifications. Small changes, such as deleting a single word or altering punctuation, frequently resulted in dramatic shifts in classification, toggling content from “human-authored” to “AI-generated” or vice versa. This instability undermines the trustworthiness of the tools, especially when used in contexts where the stakes are high. Additionally, all six tools failed to detect AI-generated texts with intentionally poor grammar, categorizing them as human-written. Even nonsensical outputs generated by ChatGPT-4o were classified as human-authored, demonstrating that the tools are not only unreliable but also vulnerable to manipulation. Taken together, the evidence presented in this study shows that current AI detection tools are ineffective, inconsistent, and prone to critical errors. Heavy reliance on these tools for decision-making in academia, publishing, education, or law enforcement could therefore result in misguided judgments and unintended consequences. Users must exercise caution, fully recognizing the limitations and risks associated with these technologies. At the same time, the promotional claims made by many detection tools, emphasizing “high accuracy” or “advanced reliability,” can mislead users into overestimating their effectiveness. These claims may serve to attract users or to gather additional data for training the underlying algorithms, rather than reflecting robust, scientifically validated performance. To address these issues, there is a pressing need for a standardized benchmarking framework to assess AI content detection tools. Such a framework should include common evaluation criteria, curated benchmark datasets of both human- and AI-generated texts, and transparent reporting protocols. 
The development of a shared, openly accessible repository of test data would further ensure replicability and aid systematic cross-comparison across tools.

Future work should focus on the creation and adoption of these standards, which would improve the reliability of detection systems while also guiding responsible use. Until such benchmarks are established, AI detection tools should be viewed not as definitive arbiters of authorship, but rather as supplementary tools whose outputs require careful human interpretation. Only through rigorous validation, transparency, and collaboration across academia, industry, and policymakers can the reliability of these systems be enhanced and their role in society responsibly defined.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/info16100904/s1.

Author Contributions

Conceptualization, T.G.W. and M.S.A.; Methodology, T.G.W., I.A.T., M.S.A., M.M. and M.K.H.; Validation, T.G.W., I.A.T., M.S.A. and M.M.; Investigation, T.G.W. and I.A.T.; Resources, M.S.A. and M.M.; Data curation, T.G.W. and I.A.T.; Writing—original draft, T.G.W. and I.A.T.; Writing—review & editing, T.G.W., I.A.T., M.S.A., M.M. and M.K.H.; Visualization, T.G.W. and I.A.T.; Supervision, M.S.A. and M.M.; Project administration, T.G.W. and M.S.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article or supplementary material.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. OpenAI. Introducing ChatGPT. 2022. Available online: https://www.openai.com (accessed on 19 August 2025).

2. Google. Gemini. 2024. Available online: https://gemini.google.com/ (accessed on 19 August 2025).

3. Microsoft Copilot. Available online: https://copilot.microsoft.com/ (accessed on 19 August 2025).

4. Radford, A.; Narasimhan, K.; Salimans, T.; Sutskever, I. Improving Language Understanding by Generative Pre-Training; OpenAI: San Francisco, CA, USA, 2018.

5. Radford, A.; Wu, J.; Child, R.; Luan, D.; Amodei, D.; Sutskever, I. Language Models Are Unsupervised Multitask Learners; OpenAI blog: San Francisco, CA, USA, 2019.

6. Brown, T.B.; Krueger, G.; Mann, B.; Askell, A.; Herbert-Voss, A.; Winter, C.; Ziegler, D.M.; Radford, A.; McCandlish, S. Language Models are Few-Shot Learners. Adv. Neural Inf. Process. Syst. 2020, 33, 1877–1901.

7. OpenAI; Achiam, J.; Adler, S.; Agarwal, S.; Ahmad, L.; Akkaya, I.; Aleman, F.L.; Almeida, D.; Altenschmidt, J.; Altman, S.; et al. GPT-4 Technical Report. arXiv 2023, arXiv:2303.08774.

8. OpenAI; Brown, A. GPT-4 is OpenAI’s most advanced system, producing safer and more useful responses. J. Archit. Comput. 2024, 22, 275–276.

9. OpenAI. Hello GPT-4o. 2024. Available online: https://openai.com/index/hello-gpt-4o/ (accessed on 19 August 2025).

10. OpenAI. GPT-4o mini: Advancing cost-efficient intelligence. 2024. Available online: https://openai.com/index/gpt-4o-mini-advancing-cost-efficient-intelligence/ (accessed on 19 August 2025).

11. OpenAI. Learning to Reason with LLMs. 2024. Available online: https://openai.com/index/learning-to-reason-with-llms/ (accessed on 19 August 2025).

12. Wu, J.; Yang, S.; Zhan, R.; Yuan, Y.; Chao, L.S.; Wong, D.F. A Survey on LLM-Generated Text Detection: Necessity, Methods, and Future Directions. Comput. Linguist. 2025, 51, 275–338.

13. Beresneva, D. Computer-generated text detection using machine learning: A systematic review. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); 2016; Volume 9612. Available online: https://link.springer.com/chapter/10.1007/978-3-319-41754-7_43 (accessed on 19 August 2025).

14. Hanley, H.W.A.; Durumeric, Z. Machine-Made Media: Monitoring the Mobilization of Machine-Generated Articles on Misinformation and Mainstream News Websites. Proc. Int. AAAI Conf. Web Soc. Media 2023, 18, 542–556.

15. Mirsky, Y.; Demontis, A.; Kotak, J.; Shankar, R.; Gelei, D.; Yang, L.; Zhang, X.; Pintor, M.; Lee, W.; Elovici, Y.; et al. The Threat of Offensive AI to Organizations. Comput. Secur. 2023, 124, 103006.

16. Weidinger, L.; Mellor, J.; Rauh, M.; Griffin, C.; Uesato, J.; Huang, P.-S.; Cheng, M.; Glaese, M.; Balle, B.; Kasirzadeh, A.; et al. Ethical and social risks of harm from Language Models. arXiv 2021, arXiv:2112.04359v1.

17. Massachusetts Institute of Technology. About MIT. Available online: https://www.mit.edu/about/ (accessed on 5 October 2024).

18. University of Cambridge. About the university: History. Retrieved 5 October 2024. Available online: https://www.cam.ac.uk/about-the-university/history (accessed on 19 August 2025).

19. Harvard University. About: Mission, Vision, History. Available online: https://college.harvard.edu/about/mission-vision-history (accessed on 5 October 2024).

20. University of British Columbia. UBC Programs: Master of Management Dual Degree—Okanagan. Available online: https://you.ubc.ca/ubc_programs/master-management-dual-degree-okanagan/ (accessed on 5 October 2024).

21. Imperial College London. Imperial Startups Showcase Innovative Ideas in London. 2021. Available online: https://www.imperial.ac.uk/news/256539/imperial-startups-showcase-innovative-ideas-london/ (accessed on 5 October 2024).

22. University of Illinois at Urbana-Champaign. About: Overview of ACES. Available online: https://www.aces.illinois.edu/about/overview-aces (accessed on 5 October 2024).

23. University of Illinois at Urbana-Champaign. About: Diversity, Equity, and Inclusion. Available online: https://international.illinois.edu/about/dei/index.html (accessed on 5 October 2024).

24. Stanford University. About Stanford. Available online: https://www.stanford.edu/about/ (accessed on 5 October 2024).

25. University of California, Berkeley. About: Senate Leadership. Available online: https://academic-senate.berkeley.edu/about/senate-leadership (accessed on 5 October 2024).

26. University of California, Berkeley. Two Centuries Later, Performance Spaces Still Struggle with ‘Soft Censorship’. 2024. Available online: https://news.berkeley.edu/2024/09/24/two-centuries-later-performance-spaces-still-struggle-with-soft-censorship/ (accessed on 5 October 2024).

27. Idaho State University. About ISU. Available online: https://coursecat.isu.edu/aboutisu/ (accessed on 5 October 2024).

28. Jordan, M.I.; Mitchell, T.M. Machine learning: Trends, perspectives, and prospects. Science 2015, 349, 255–260.

29. Tsukada, Y.I.; Fang, J.; Erdjument-Bromage, H.; Warren, M.E.; Borchers, C.H.; Tempst, P.; Zhang, Y. Histone demethylation by a family of JmjC domain-containing proteins. Nature 2006, 439, 811–816.

30. Barber, D.L.; Wherry, E.J.; Masopust, D.; Zhu, B.; Allison, J.P.; Sharpe, A.H.; Freeman, G.J.; Ahmed, R. Restoring function in exhausted CD8 T cells during chronic viral infection. Nature 2006, 439, 682–687.

31. Jung, M.; Reichstein, M.; Ciais, P.; Seneviratne, S.I.; Sheffield, J.; Goulden, M.L.; Bonan, G.; Cescatti, A.; Chen, J.; De Jeu, R.; et al. Recent decline in the global land evapotranspiration trend due to limited moisture supply. Nature 2010, 467, 951–954.

32. LeCun, Y.; Bengio, Y.; Hinton, G. Deep Learning. Nature 2015, 521, 436–444.

33. Chen, L.; Chetkovich, D.M.; Petralia, R.S.; Sweeney, N.T.; Kawasaki, Y.; Wenthold, R.J.; Bredt, D.S.; Nicoll, R.A. Stargazin regulates synaptic targeting of AMPA receptors by two distinct mechanisms. Nature 2000, 408, 936–943.

34. Srianand, R.; Petitjean, P.; Ledoux, C. The cosmic microwave background radiation temperature at a redshift of 2.34. Nature 2000, 408, 931–935.

35. Savage, N. The race to the top among the world’s leaders in artificial intelligence. Nature 2020, 588, S102–S104.

36. Al-Zahrani, A.M.; Alasmari, T.M. Exploring the impact of artificial intelligence on higher education: The dynamics of ethical, social, and educational implications. Humanit. Soc. Sci. Commun. 2024, 11, 912.

37. Guo, Y.; Li, Y.; Zhang, M.; Ma, R.; Wang, Y.; Weng, X.; Zhang, J.; Zhang, Z.; Chen, X.; Yang, W. Polymeric nanocarrier via metabolism regulation mediates immunogenic cell death with spatiotemporal orchestration for cancer immunotherapy. Nat. Commun. 2024, 15, 8586.

38. Peng, Z.; Tong, L.; Shi, W.; Xu, L.; Huang, X.; Li, Z.; Yu, X.; Meng, X.; He, X.; Lv, S.; et al. Multifunctional human visual pathway-replicated hardware based on 2D materials. Nat. Commun. 2024, 15, 8650.

39. Nishiie, N.; Kawatani, R.; Tezuka, S.; Mizuma, M.; Hayashi, M.; Kohsaka, Y. Vitrimer-like elastomers with rapid stress-relaxation by high-speed carboxy exchange through conjugate substitution reaction. Nat. Commun. 2024, 15, 8657.

40. Nature. npj Artificial Intelligence: Aims & Scope. Available online: https://www.nature.com/npjai/aims (accessed on 19 August 2025).

41. Nature. Nature Portfolio. Available online: https://www.nature.com/nature-portfolio (accessed on 19 August 2025).

42. Kozlov, M. Mpox vaccine roll-out begins in Africa: What will success look like? Nature 2024. preprint. Available online: https://www.nature.com/articles/d41586-024-03243-2 (accessed on 19 August 2025).

43. National Archives and Records Administration. Transcript of March (Part 3 of 3). Available online: https://www.archives.gov/files/social-media/transcripts/transcript-march-pt3-of-3-2602934.pdf (accessed on 19 August 2025).

44. Government of Canada. Federal Research Funding Agencies Release Tri-Agency Research Training Strategy. Available online: https://www.canada.ca/en/research-coordinating-committee/news/updates/2024/09/federal-research-funding-agencies-release-tri-agency-research-training-strategy.html (accessed on 19 August 2025).

45. Stanford News. Stanford Launches Center Focused on Human and Planetary Health. Stanford Report, 31 October 2024.

46. Naser, M.Z.; Alavi, A.H. Error metrics and performance fitness indicators for artificial intelligence and machine learning in engineering and sciences. Archit. Struct. Constr. 2023, 3, 499–517.

47. Kutty, A.A.; Wakjira, T.G.; Kucukvar, M.; Abdella, G.M.; Onat, N.C. Urban resilience and livability performance of European smart cities: A novel machine learning approach. J. Clean. Prod. 2022, 378, 134203.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).