Sustainable Real-Time NLP with Serverless Parallel Processing on AWS

Abstract

1. Introduction

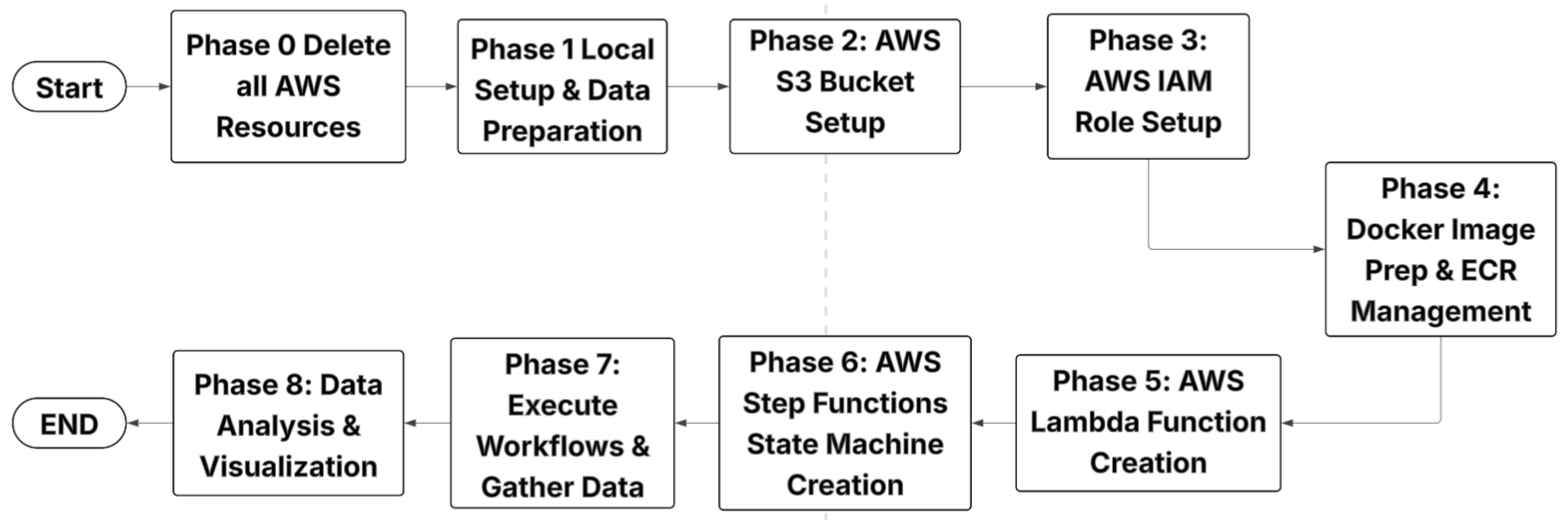

2. Methodology

2.1. Selecting Large Language Model and Dataset

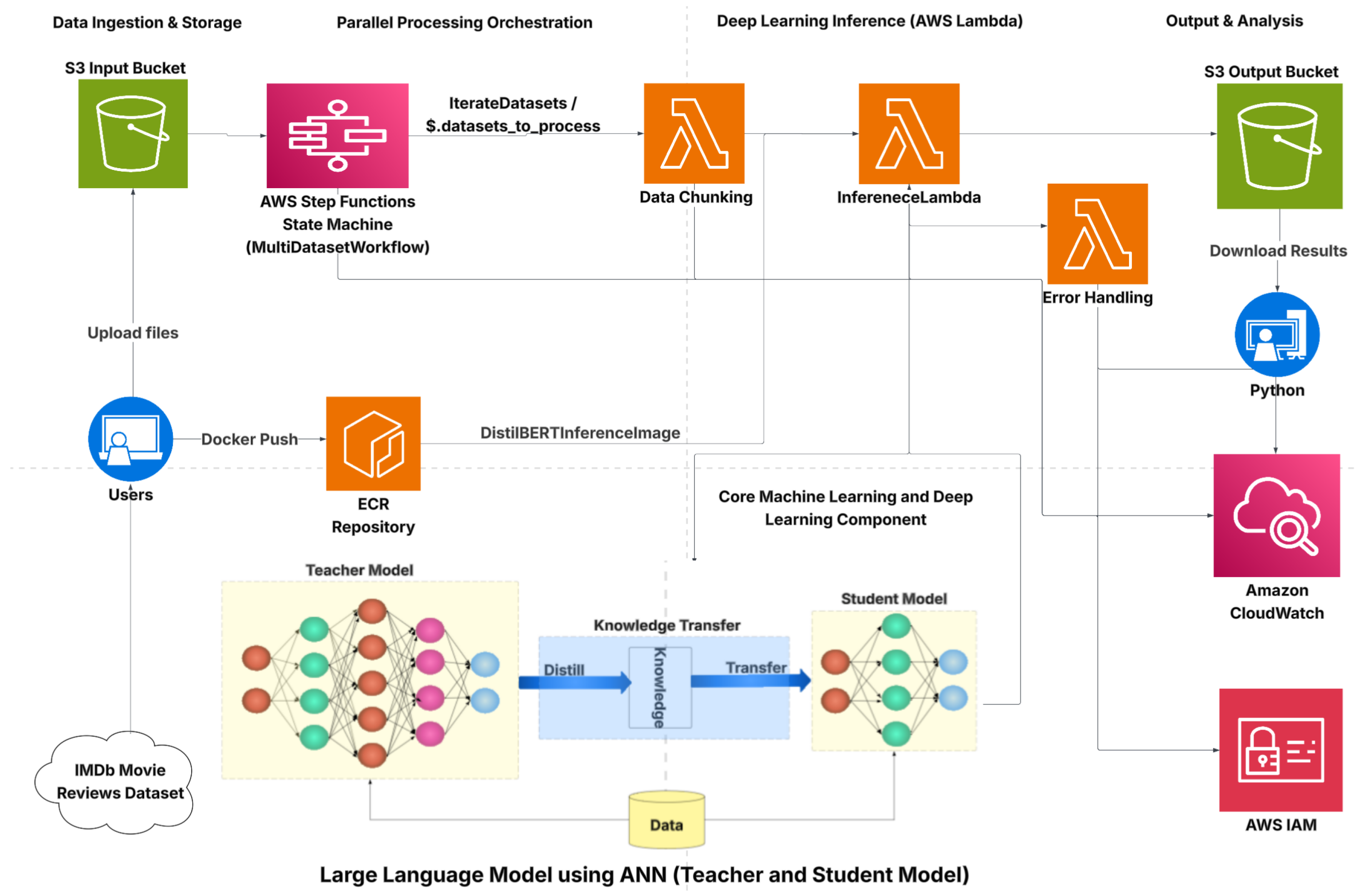

2.2. Serverless Parallel Processing Architecture Framework

- Data Ingestion and Storage: Amazon S3 serves as the data lake for input text (IMDb subsets) and for storing outputs [41]. Datasets are uploaded and organized for batch processing, and results are written back for downstream analysis.

- Parallel Processing Orchestration: AWS Step Functions coordinates nested parallel execution (e.g., Map/Inline Map), controlling task fan-out/fan-in and error handling [42]. This allows thousands of independent document-level inferences to execute concurrently under policy-controlled limits.

- Deep Learning Inference: The model and its inference code are packaged as a container image stored in Amazon ECR and executed by AWS Lambda, ensuring consistent environments and fast cold starts with pre-fetched artifacts.

- Output and Analysis: Amazon CloudWatch provides centralized logging/metrics for workflow runs and billed-duration capture; Lambda’s serverless monitoring guidance supports traceability and performance tuning. AWS IAM enforces least-privilege access across services (buckets, state machines, functions, and logs).
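
The fan-out/fan-in orchestration described above can be sketched as an Amazon States Language (ASL) definition built in Python. This is a minimal illustration, not the authors' state machine: the function ARN, the `$.documents` input path, the state names, and the retry settings are all assumptions introduced here. The concurrency default of 40 reflects the documented ceiling for a single inline Map state; nesting Map states, as the text describes, is one way to raise the effective fan-out.

```python
import json

# Hypothetical ARN for illustration only; the paper does not name its function.
INFERENCE_FUNCTION_ARN = (
    "arn:aws:lambda:us-east-1:123456789012:function:sentiment-inference"
)


def build_state_machine(function_arn: str, max_concurrency: int = 40) -> dict:
    """Return an ASL definition that invokes one Lambda task per input document."""
    classify_task = {
        "Type": "Task",
        "Resource": "arn:aws:states:::lambda:invoke",
        "Parameters": {"FunctionName": function_arn, "Payload.$": "$"},
        # Retry throttling and transient service errors with exponential backoff
        # (assumed policy, not taken from the paper).
        "Retry": [
            {
                "ErrorEquals": [
                    "Lambda.TooManyRequestsException",
                    "Lambda.ServiceException",
                ],
                "IntervalSeconds": 2,
                "MaxAttempts": 3,
                "BackoffRate": 2.0,
            }
        ],
        "End": True,
    }
    return {
        "Comment": "Fan-out/fan-in sentiment inference (illustrative sketch)",
        "StartAt": "ClassifyDocuments",
        "States": {
            "ClassifyDocuments": {
                "Type": "Map",
                "ItemsPath": "$.documents",        # assumed input shape
                "MaxConcurrency": max_concurrency,  # policy-controlled limit
                "ItemProcessor": {
                    "ProcessorConfig": {"Mode": "INLINE"},
                    "StartAt": "ClassifyOne",
                    "States": {"ClassifyOne": classify_task},
                },
                "ResultPath": "$.results",          # fan-in: collected outputs
                "End": True,
            }
        },
    }


definition = build_state_machine(INFERENCE_FUNCTION_ARN)
print(json.dumps(definition, indent=2))
```

The printed JSON could be supplied as the definition when creating a state machine; IAM roles, logging configuration, and the S3 read/write steps are deliberately left out of this sketch.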

Local Environment Setup and Data Preparation

2.3. Custom Dataset Characterization

2.4. Data Analysis and Visualization

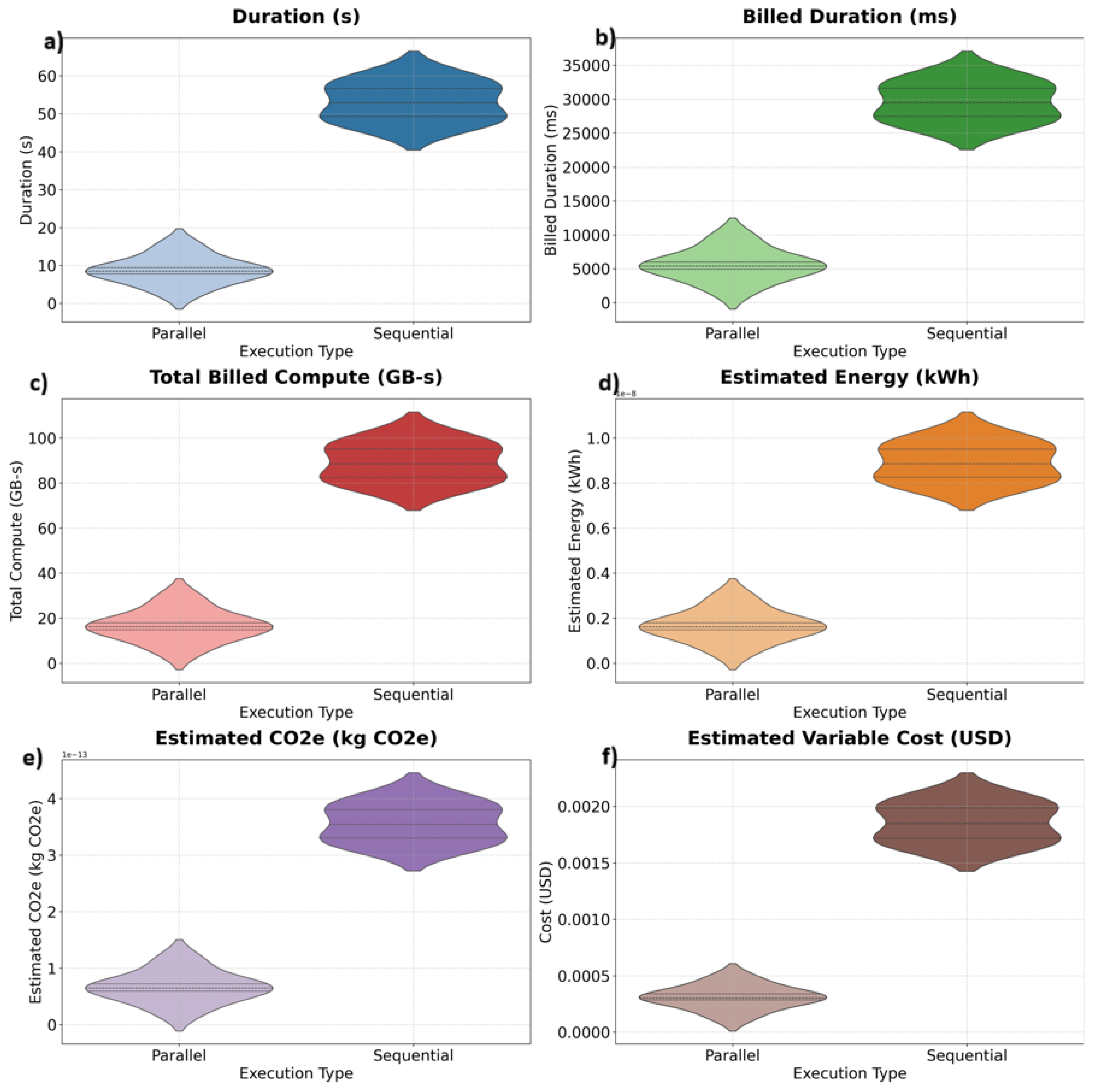

- Total Lambda Compute (GB-s)

- Estimated Energy Consumption (kWh)

- Estimated CO2 Emissions (kg CO2e)

- Estimated Variable Cost (USD)
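
The four metrics listed above can be derived from a run's billed duration, as the following sketch shows. The conversion factors are assumptions: the memory size (3.008 GB), energy intensity (1e-10 kWh per GB-s), and carbon intensity (4e-5 kg CO2e per kWh) are back-calculated from the ratios in the reported results tables rather than taken from a stated formula, and the compute price is the commonly published x86 Lambda rate. The class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Factors:
    memory_gb: float        # configured Lambda memory, in GB (assumed)
    kwh_per_gb_s: float     # energy per GB-second of compute (assumed)
    kg_co2e_per_kwh: float  # carbon intensity of the energy used (assumed)
    usd_per_gb_s: float     # Lambda compute price per GB-second (assumed)


@dataclass(frozen=True)
class RunMetrics:
    gb_s: float
    energy_kwh: float
    co2e_kg: float
    cost_usd: float


def estimate(billed_ms: float, f: Factors) -> RunMetrics:
    """Convert a billed duration in milliseconds into the four reported metrics."""
    gb_s = (billed_ms / 1000.0) * f.memory_gb
    energy_kwh = gb_s * f.kwh_per_gb_s
    co2e_kg = energy_kwh * f.kg_co2e_per_kwh
    cost_usd = gb_s * f.usd_per_gb_s  # compute only; request fees excluded
    return RunMetrics(gb_s, energy_kwh, co2e_kg, cost_usd)


# Factors inferred from the reported tables; treat them as illustrative.
ASSUMED = Factors(
    memory_gb=3.008,
    kwh_per_gb_s=1e-10,
    kg_co2e_per_kwh=4e-5,
    usd_per_gb_s=0.0000166667,
)

m = estimate(9311, ASSUMED)  # billed time of the "100 Neg" parallel run
print(f"{m.gb_s:.2f} GB-s, {m.energy_kwh:.2e} kWh, "
      f"{m.co2e_kg:.2e} kg CO2e, ${m.cost_usd:.6f}")
```

With these assumed factors, the 9311 ms billed time of the 100 Neg parallel run yields about 28.01 GB-s and 0.000467 USD, in line with the reported values. The sequential rows report a higher cost per GB-s, so they presumably include charges this compute-only sketch does not model.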

2.5. Limitations

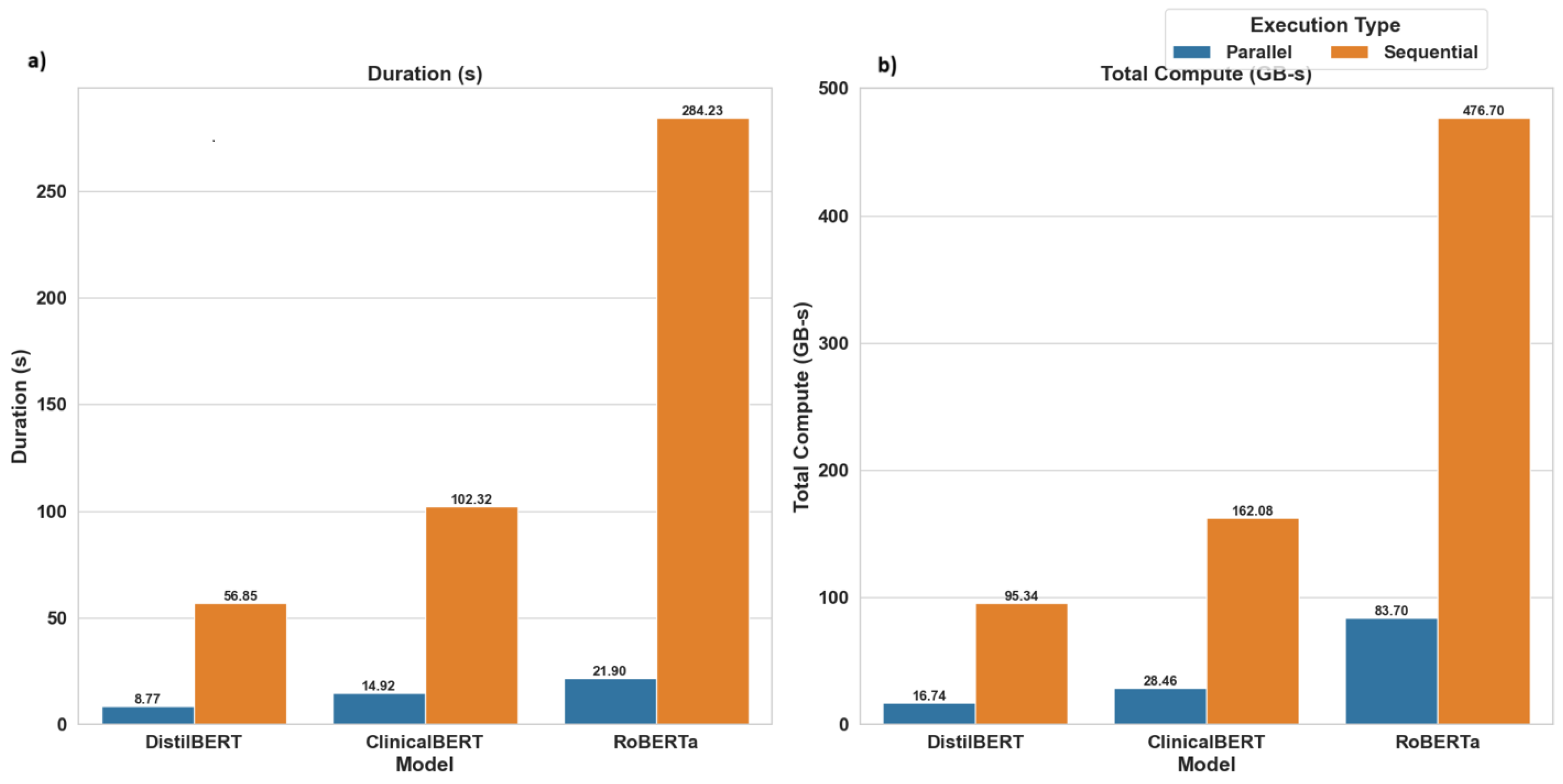

3. Results

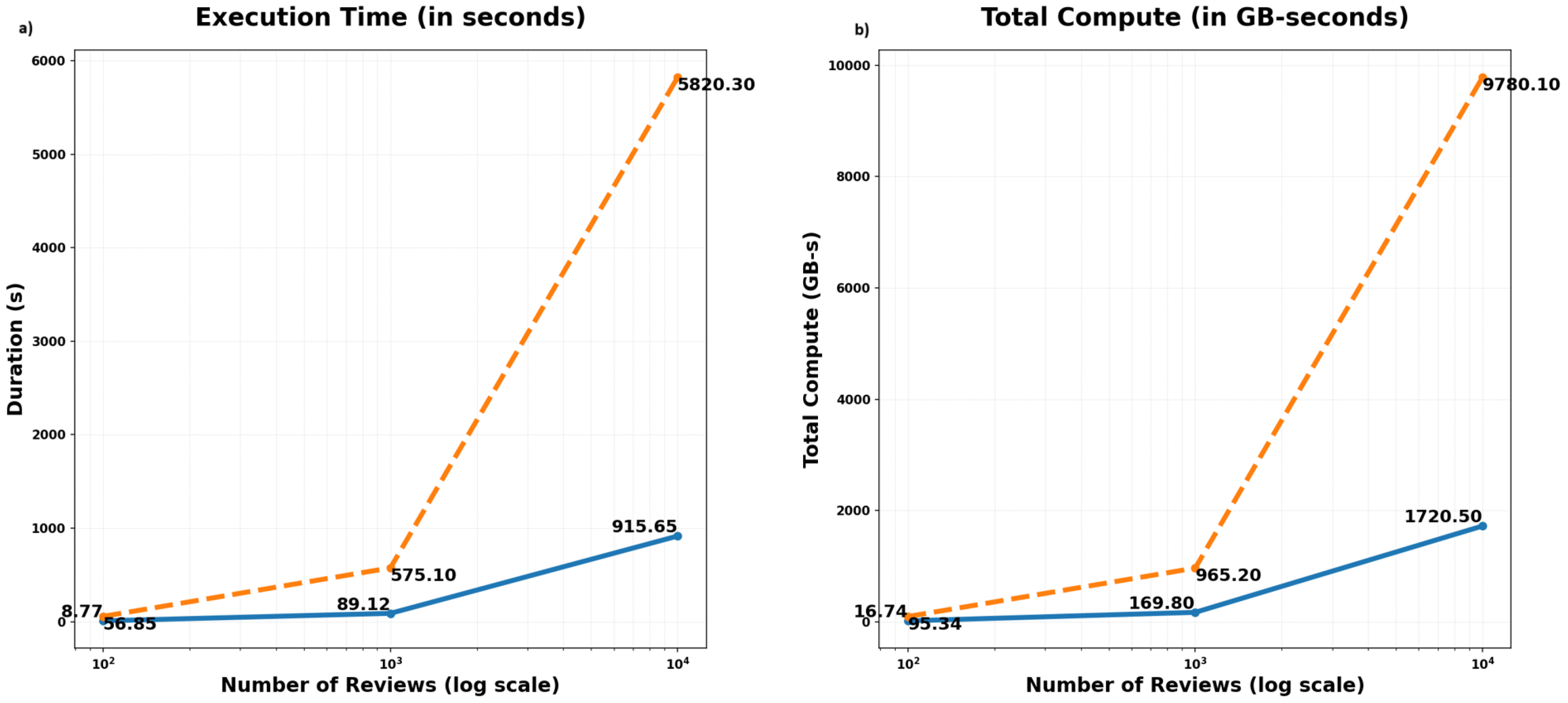

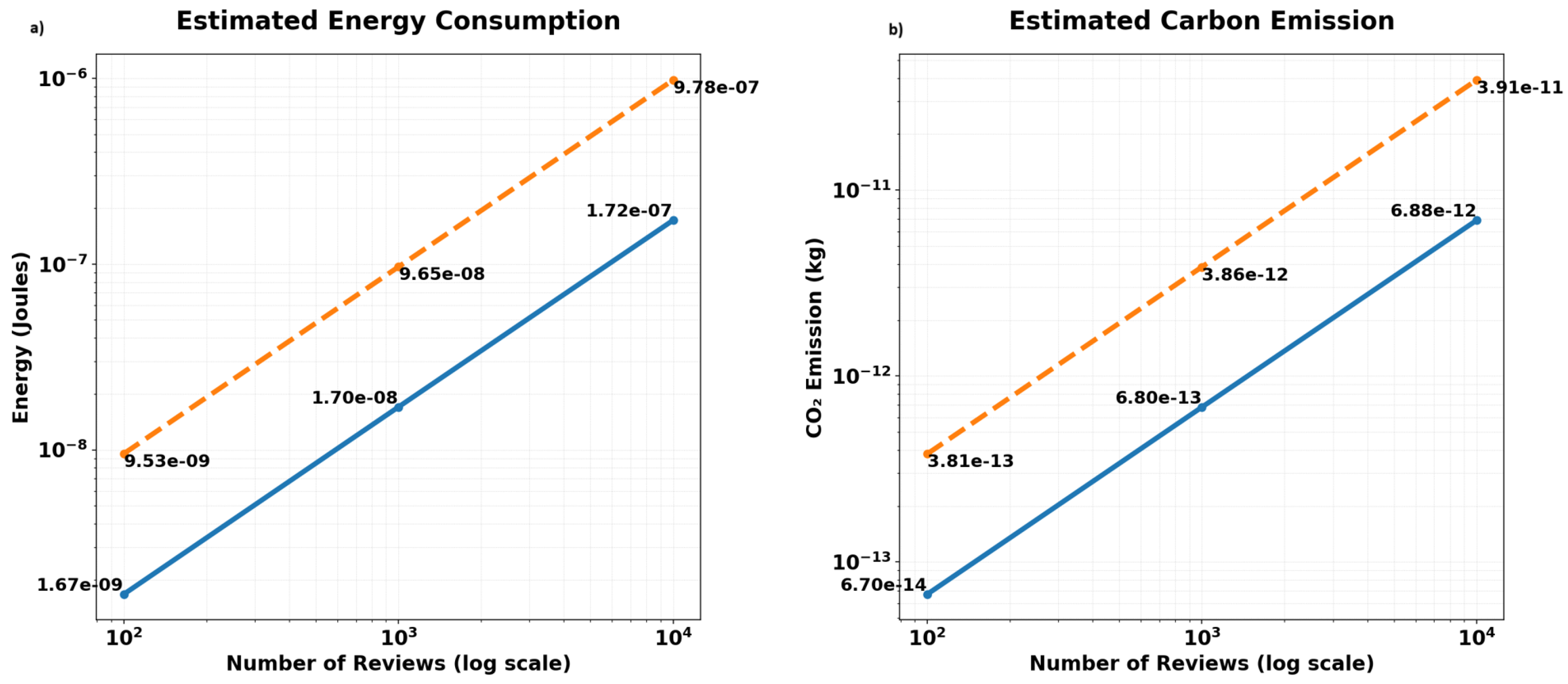

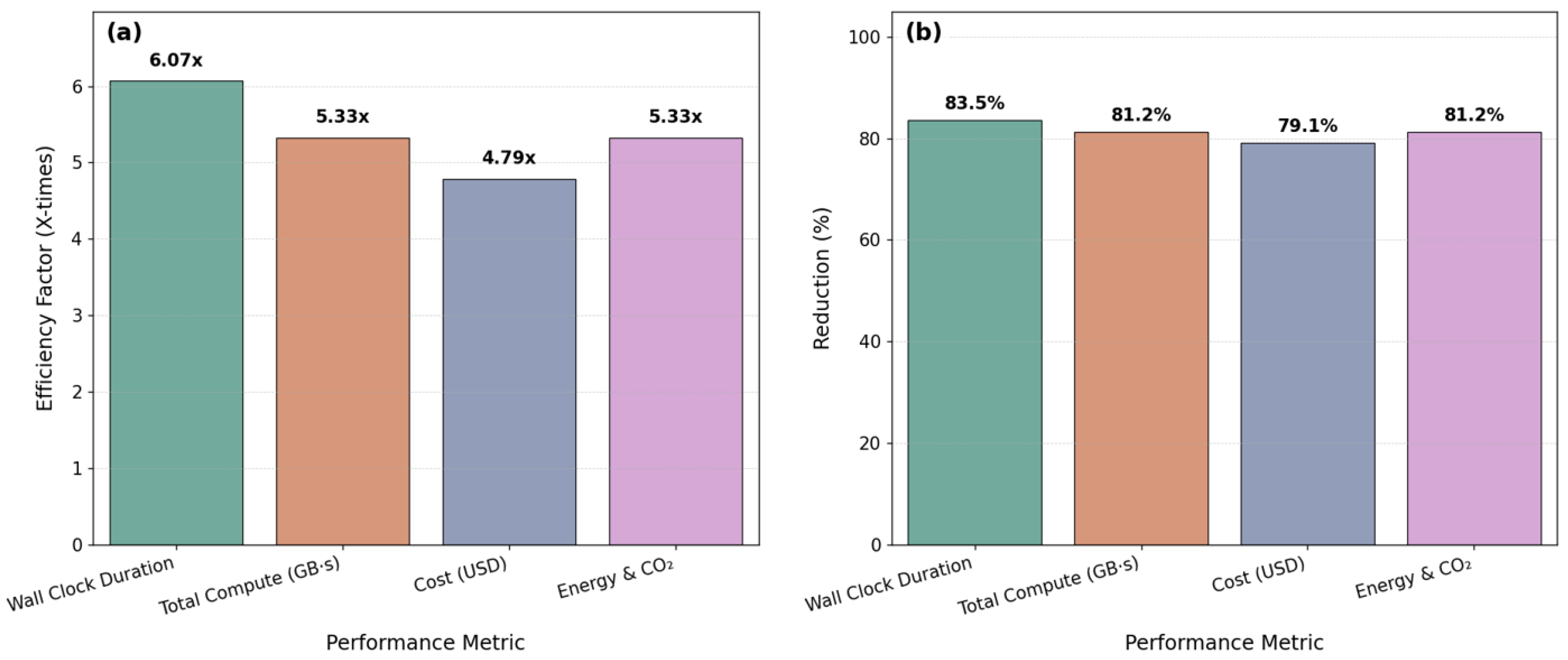

Scalability Extrapolation

4. Discussion

5. Conclusions

6. Limitations and Future Work

- Native scalability using the AWS Step Functions Distributed Map state to process datasets with 50,000+ files.

- Cost optimization through AWS Lambda Power Tuning and more granular total-cost-of-ownership models.

- Enhanced sustainability metrics by integrating AWS-native carbon reporting tools for precise emissions analysis.

- Improved inference diagnostics, including confidence scores and richer evaluation scripts.

- Task-specific model optimization, retraining or fine-tuning models (e.g., DistilBERT) to the target dataset to boost classification accuracy alongside efficiency.

- Robustness testing with large, real-world streaming datasets, exploring integration with AWS Glue, Kinesis, or Data Pipeline.

- Application to new domains, particularly neuroscience, where parallel serverless architectures can accelerate preprocessing, feature extraction, and model inference for detecting early markers of Alzheimer’s or dementia.
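
As one possible shape for the Distributed Map item above, the sketch below builds an ASL definition in which the Map state lists objects directly from S3 and starts one child execution per object. The bucket, prefix, function ARN, concurrency, and failure tolerance are hypothetical values chosen for illustration; none of them come from the paper.

```python
import json


def build_distributed_map(bucket: str, prefix: str, function_arn: str,
                          max_concurrency: int = 1000,
                          tolerated_failure_pct: int = 5) -> dict:
    """Return an ASL definition that runs one child execution per S3 object."""
    return {
        "Comment": "Distributed Map over S3 objects (illustrative sketch)",
        "StartAt": "ClassifyAllObjects",
        "States": {
            "ClassifyAllObjects": {
                "Type": "Map",
                # Items come from an S3 listing instead of the state input.
                "ItemReader": {
                    "Resource": "arn:aws:states:::s3:listObjectsV2",
                    "Parameters": {"Bucket": bucket, "Prefix": prefix},
                },
                "ItemProcessor": {
                    "ProcessorConfig": {
                        "Mode": "DISTRIBUTED",
                        "ExecutionType": "STANDARD",
                    },
                    "StartAt": "ClassifyOne",
                    "States": {
                        "ClassifyOne": {
                            "Type": "Task",
                            "Resource": "arn:aws:states:::lambda:invoke",
                            "Parameters": {
                                "FunctionName": function_arn,
                                "Payload.$": "$",
                            },
                            "End": True,
                        }
                    },
                },
                "MaxConcurrency": max_concurrency,
                # Let the run succeed if only a small share of items fail.
                "ToleratedFailurePercentage": tolerated_failure_pct,
                "End": True,
            }
        },
    }


# Hypothetical resource names for illustration only.
dm = build_distributed_map(
    bucket="example-imdb-input",
    prefix="reviews/",
    function_arn="arn:aws:lambda:us-east-1:123456789012:function:sentiment-inference",
)
print(json.dumps(dm, indent=2))
```

Reading items from S3 keeps the file list out of the state input, which matters once a dataset grows to tens of thousands of files.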

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Jones, N. Measuring AI’s Energy/Environmental Footprint to Access Impacts. FAS. 2025. Available online: https://fas.org/publication/measuring-and-standardizing-ais-energy-footprint/ (accessed on 4 April 2025).

- Jones, N. The Growing Energy Demand of Data Centers: Impacts of AI and Cloud Computing. ResearchGate. 2025. Available online: https://www.researchgate.net/publication/388231241_The_Growing_Energy_Demand_of_Data_Centers_Impacts_of_AI_and_Cloud_Computing (accessed on 5 April 2025).

- Desroches, C.; Chauvin, M.; Ladan, L.; Vateau, C.; Gosset, S.; Cordier, P. Exploring the Sustainable Scaling of AI Dilemma: A Projective Study of Corporations’ AI Environmental Impacts. arXiv 2025, arXiv:2501.14334.

- Wolters Kluwer. Energy Demands Will Be a Growing Concern for AI Technology. 2025. Available online: https://www.wolterskluwer.com/en/expert-insights/energy-demands-will-be-a-growing-concern-for-ai-technology (accessed on 10 April 2025).

- Pritchard, R. PREDICTIONS. Digitalisation World. 2024. Available online: https://cdn.digitalisationworld.com/uploads/pdfs/056bca8e0be4e84789c8a98ffdb2fbb2198a2745208f2fbc.pdf (accessed on 20 April 2025).

- Ariyanti, S.; Suryanegara, M.; Arifin, A.S.; Nurwidya, A.I.; Hayati, N. Trade-Off Between Energy Consumption and Three Configuration Parameters in Artificial Intelligence (AI) Training: Lessons for Environmental Policy. Sustainability 2025, 17, 5359.

- Yu, Z.; Wu, Y.; Deng, Z.; Tang, Y.; Zhang, X.-P. OpenCarbonEval: A Unified Carbon Emission Estimation Framework in Large-Scale AI Models. OpenReview. arXiv 2024.

- Mondal, S.; Faruk, F.B.; Rajbongshi, D.; Efaz, M.M.K.; Islam, M.M. GEECO: Green Data Centers for Energy Optimization and Carbon Footprint Reduction. Sustainability 2023, 15, 15249.

- Li, Y.; Lin, Y.; Wang, Y.; Ye, K.; Xu, C. Serverless Computing: State-of-the-Art, Challenges and Opportunities. IEEE Trans. Serv. Comput. 2022, 16, 1522–1539.

- Zheng, J. A Large-Scale 12-Lead Electrocardiogram Database for Arrhythmia Study (Version 1.0.0). PhysioNet 2022.

- Mankala, C.; Silva, R. Evolutionary Artificial Neuroidal Network Using Serverless Architecture. Available online: https://www.proquest.com/pqdtglobal/docview/3249536286?sourcetype=Dissertations%20&%20Theses/ (accessed on 20 August 2025).

- Codecademy Team. Understanding Convolutional Neural Network (CNN) Architecture. Codecademy. 2024. Available online: https://www.codecademy.com/article/understanding-convolutional-neural-network-cnn-architecture (accessed on 20 May 2025).

- IBM. AI vs. Machine Learning vs. Deep Learning vs. Neural Networks. IBM. 2023. Available online: https://www.ibm.com/think/topics/ai-vs-machine-learning-vs-deep-learning-vs-neural-networks (accessed on 25 May 2025).

- Coursera. Deep Learning vs. Neural Network: What’s the Difference? Coursera. 2025. Available online: https://www.coursera.org/articles/deep-learning-vs-neural-network (accessed on 25 May 2025).

- H2O.ai. The Difference Between Neural Networking and Deep Learning. H2O.ai. 2024. Available online: https://h2o.ai/wiki/neural-networking-deep-learning/ (accessed on 25 May 2025).

- Wikipedia. Deep Learning. Wikipedia. 2025. Available online: https://en.wikipedia.org/wiki/Deep_learning (accessed on 25 May 2025).

- Pure Storage Blog. Deep Learning vs. Neural Networks. Pure Storage Blog. 2022. Available online: https://blog.purestorage.com/purely-educational/deep-learning-vs-neural-networks/ (accessed on 25 May 2025).

- Sanh, V.; Debut, L.; Chaumond, J.; Wolf, T. DistilBERT, a Distilled Version of BERT: Smaller, Faster, Cheaper and Lighter. arXiv 2019.

- Analytics Vidhya. Introduction to DistilBERT in Student Model. 2022. Available online: https://www.analyticsvidhya.com/blog/2022/11/introduction-to-distilbert-in-student-model/ (accessed on 25 May 2025).

- Zilliz Learn. DistilBERT: A Smaller, Faster, and Distilled BERT. 2024. Available online: https://zilliz.com/learn/distilbert-distilled-version-of-bert (accessed on 25 May 2025).

- Number Analytics. DistilBERT for Efficient NLP. 2025. Available online: https://www.numberanalytics.com/blog/distilbert-efficient-nlp-data-science (accessed on 25 May 2025).

- AITechTrend. Realizing the Benefits of HuggingFace DistilBERT for NLP Applications. 2023. Available online: https://aitechtrend.com/realizing-the-benefits-of-huggingface-distilbert-for-nlp-applications/ (accessed on 25 May 2025).

- Analytics Vidhya. A Gentle Introduction to RoBERTa. Analytics Vidhya. 2022. Available online: https://www.analyticsvidhya.com/blog/2022/10/a-gentle-introduction-to-roberta/ (accessed on 25 May 2025).

- Amazon Web Services. What is the AWS Serverless Application Model (AWS SAM)? Available online: https://docs.aws.amazon.com/serverless-application-model/latest/developerguide/what-is-sam.html (accessed on 25 May 2025).

- Singu, S.M. Serverless Data Engineering: Unlocking Efficiency and Scalability in Cloud-Native Architectures. Artif. Intell. Res. Appl. 2023, 3. Available online: https://www.aimlstudies.co.uk/index.php/jaira/article/view/358 (accessed on 25 May 2025).

- Moody, G.B.; Mark, R.G. The Impact of the MIT-BIH Arrhythmia Database. IEEE Eng. Med. Biol. Mag. 2001, 20, 45–50.

- Irid, S.M.H.; Moussaoui, D.; Hadjila, M.; Azzoug, O. Classification of ECG Signals Based on MIT-BIH Dataset Using Bi-LSTM Model for Assisting Cardiologists Diagnosis. Trait. Du Signal 2024, 41, 3245.

- PhysioNet. PTB Diagnostic ECG Database v1.0.0. 2004. Available online: https://www.physionet.org/physiobank/database/ptbdb/ (accessed on 25 May 2025).

- Liu, Y.; Ott, M.; Goyal, N.; Du, J.; Joshi, M.; Chen, D.; Levy, O.; Lewis, M.; Zettlemoyer, L.; Stoyanov, V. RoBERTa: A Robustly Optimized BERT Pretraining Approach. arXiv 2019, arXiv:1907.11692.

- Alsentzer, E.; Murphy, J.; Boag, W.; Weng, W.H.; Jindi, J.; Naumann, T.; McDermott, M. Publicly Available Clinical BERT Embeddings. arXiv 2019, arXiv:1904.03323.

- McAuley, J.; Leskovec, J. Hidden Factors and Hidden Topics: Understanding Consumer Preferences from Reviews. In Proceedings of the 7th ACM Conference on Recommender Systems, Hong Kong, China, 12–16 October 2013; pp. 251–258.

- Socher, R.; Perelygin, A.; Wu, J.; Chuang, J.; Manning, C.D.; Ng, A.Y.; Potts, C. Recursive Deep Models for Semantic Compositionality Over a Sentiment Treebank. In Proceedings of the 2013 Conference on Empirical Methods in Natural Language Processing, Seattle, WA, USA, 18–21 October 2013; pp. 1631–1642. Available online: https://aclanthology.org/D13-1170.pdf (accessed on 1 June 2025).

- Serverless Computing—AWS Lambda Pricing—Amazon Web Services. AWS. Available online: https://aws.amazon.com/lambda/pricing/ (accessed on 1 June 2025).

- What Is Amazon S3?—Amazon Simple Storage Service. AWS. Available online: https://aws.amazon.com/s3/ (accessed on 1 June 2025).

- Use Amazon S3 as a Data Lake—Amazon S3. AWS. Available online: https://docs.aws.amazon.com/AmazonS3/latest/userguide/data-lake-s3.html (accessed on 1 June 2025).

- AWS Step Functions—Workflow Orchestration. AWS. Available online: https://aws.amazon.com/step-functions/ (accessed on 1 June 2025).

- What Is AWS Step Functions? How It Works and Use Cases. Datadog. Available online: https://www.datadoghq.com/knowledge-center/aws-step-functions/ (accessed on 11 June 2025).

- What Is Amazon Elastic Container Registry?—Amazon ECR. AWS. Available online: https://aws.amazon.com/ecr/ (accessed on 11 June 2025).

- Deploying Container Images to AWS Lambda—AWS Lambda. AWS. Available online: https://docs.aws.amazon.com/lambda/latest/dg/images-create.html (accessed on 11 June 2025).

- What Is Amazon CloudWatch?—Amazon CloudWatch. AWS. Available online: https://aws.amazon.com/cloudwatch/ (accessed on 11 June 2025).

- Monitoring and Logging for Serverless Applications—AWS Lambda. AWS. Available online: https://docs.aws.amazon.com/lambda/latest/dg/monitoring-cloudwatch.html (accessed on 11 June 2025).

- What Is IAM?—AWS Identity and Access Management. AWS. Available online: https://aws.amazon.com/iam/ (accessed on 11 June 2025).

- PyTorch. PyTorch.org. Available online: https://pytorch.org/ (accessed on 11 June 2025).

- Boto3 Documentation. Available online: https://boto3.amazonaws.com/v1/documentation/api/latest/index.html (accessed on 11 June 2025).

- Pandas—Python Data Analysis Library. pandas.pydata.org. Available online: https://pandas.pydata.org/ (accessed on 11 June 2025).

- Matplotlib. Matplotlib.org. Available online: https://matplotlib.org/ (accessed on 11 June 2025).

- Seaborn: Statistical Data Visualization. Seaborn.pydata.org. Available online: https://seaborn.pydata.org/ (accessed on 11 June 2025).

- wordcloud · PyPI. PyPI.org. Available online: https://pypi.org/project/wordcloud/ (accessed on 11 June 2025).

- Scikit-Learn: Machine Learning in Python. scikit-learn.org. Available online: https://scikit-learn.org/stable/ (accessed on 11 June 2025).

- AWS Command Line Interface. AWS. Available online: https://aws.amazon.com/cli/ (accessed on 11 June 2025).

- NumPy. NumPy.org. Available online: https://numpy.org/ (accessed on 11 June 2025).

- AWS Customer Carbon Footprint Tool. AWS. Available online: https://aws.amazon.com/aws-cost-management/aws-customer-carbon-footprint-tool/ (accessed on 11 June 2025).

- Greenhouse Gas Protocol—A Corporate Accounting and Reporting Standard. World Resources Institute and WBCSD. Available online: https://ghgprotocol.org/ (accessed on 11 June 2025).

- ISO 14064-1:2018; Greenhouse Gases—Part 1: Specification with Guidance at the Organization Level for Quantification and Reporting of Greenhouse Gas Emissions and Removals. International Organization for Standardization: Geneva, Switzerland, 2018.

- ANOVA—Analysis of Variance. Investopedia. Available online: https://www.investopedia.com/terms/a/anova.asp (accessed on 11 June 2025).

- Extrapolation—Definition and Applications. Investopedia. Financial Analysis. Available online: https://www.investopedia.com/terms/f/financial-analysis.asp/ (accessed on 11 June 2025).

- Microsoft. Microsoft to be Carbon Negative by 2030. Microsoft News. Available online: https://blogs.microsoft.com/blog/2020/01/16/microsoft-will-be-carbon-negative-by-2030/ (accessed on 21 June 2025).

- Hinton, G.; Vinyals, O.; Dean, J. Distilling the Knowledge in a Neural Network. arXiv 2015, arXiv:1503.02531.

- Goldberger, A.; Amaral, L.; Glass, L.; Hausdorff, J.; Ivanov, P.C.; Mark, R.; Mietus, J.E.; Moody, G.B.; Peng, C.K.; Stanley, H.E. PhysioBank, PhysioToolkit, and PhysioNet: Components of a New Research Resource for Complex Physiologic Signals. Circulation 2000, 101, e215–e220. Available online: https://physionet.org/content/incartdb/1.0.0/ (accessed on 21 June 2025).

- Jiang, F. Artificial Intelligence in Healthcare: Past, Present, and Future. Stroke Vasc. Neurol. 2017, 2, 230–243.

- Medina-Avelino, J.; Silva-Bustillos, R.; Holgado-Terriza, J.A. Are Wearable ECG Devices Ready for Hospital at Home Application? Sensors 2025, 25, 2982.

- Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. BERT: Pre-Training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Minneapolis, MN, USA, 2–7 June 2019; pp. 4171–4186. Available online: https://aclanthology.org/N19-1423.pdf (accessed on 21 June 2025).

- imdb_reviews TensorFlow Datasets. 2024. Available online: https://www.tensorflow.org/datasets/catalog/imdb_reviews (accessed on 21 June 2025).

- IMDB Movie Review Sentiment Classification Dataset. Keras. Available online: https://keras.io/api/datasets/imdb/ (accessed on 21 June 2025).

- Brownlee, J. What is a Confusion Matrix in Machine Learning. Machine Learning Mastery. 2020. Available online: https://machinelearningmastery.com/confusion-matrix-machine-learning/ (accessed on 21 June 2025).

- Narkhede, S.K. Understanding Confusion Matrix. Towards Data Science. 2018. Available online: https://towardsdatascience.com/understanding-confusion-matrix-a9ad42dcfd62 (accessed on 21 June 2025).

| Dataset Name | Positive Reviews (%) | Negative Reviews (%) | Total Files |
|---|---|---|---|
| local_imdb_100N_files | 0 | 100 | 100 |
| local_imdb_100P_files | 100 | 0 | 100 |
| local_imdb_55N_45P_files | 45 | 55 | 100 |
| local_imdb_55P_45N_files | 55 | 45 | 100 |
| local_imdb_20P_80N_files | 20 | 80 | 100 |
| local_imdb_80P_20N_files | 80 | 20 | 100 |

| Run Name | Type | Time (s) | Billed Time (ms) | Total Compute (GB-s) |
|---|---|---|---|---|
| 100 Neg | Parallel | 14.68 | 9311 | 28.01 |
| 100 Neg | Sequential | 56.354 | 31,426 | 94.54 |
| 80 Pos/20 Neg | Parallel | 9.68 | 6140 | 18.47 |
| 80 Pos/20 Neg | Sequential | 59.545 | 33,205 | 99.8 |
| 80 Neg/20 Pos | Parallel | 7.646 | 4850 | 14.59 |
| 80 Neg/20 Pos | Sequential | 49.339 | 27,514 | 82.71 |
| 55 Neg/45 Pos | Parallel | 3.525 | 2236 | 6.73 |
| 55 Neg/45 Pos | Sequential | 47.535 | 26,508 | 79.69 |
| 55 Pos/45 Neg | Parallel | 8.248 | 5231 | 15.73 |
| 55 Pos/45 Neg | Sequential | 49.242 | 27,460 | 82.55 |
| 100 Pos | Parallel | 8.774 | 5565 | 16.74 |
| 100 Pos | Sequential | 56.847 | 31,701 | 95.34 |
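
To make the comparison in the table above easier to read, the following sketch computes the wall-clock speedup and the relative compute reduction of each parallel run against its sequential counterpart. The numbers are copied from the table; the variable and function names are illustrative only.

```python
# (time_s, compute_gb_s) for each run, copied from the table above.
runs = {
    "100 Neg":       {"parallel": (14.68, 28.01), "sequential": (56.354, 94.54)},
    "80 Pos/20 Neg": {"parallel": (9.68, 18.47),  "sequential": (59.545, 99.8)},
    "80 Neg/20 Pos": {"parallel": (7.646, 14.59), "sequential": (49.339, 82.71)},
    "55 Neg/45 Pos": {"parallel": (3.525, 6.73),  "sequential": (47.535, 79.69)},
    "55 Pos/45 Neg": {"parallel": (8.248, 15.73), "sequential": (49.242, 82.55)},
    "100 Pos":       {"parallel": (8.774, 16.74), "sequential": (56.847, 95.34)},
}


def compare(run: dict) -> tuple[float, float]:
    """Return (speedup, fractional compute reduction) for one dataset."""
    par_time, par_compute = run["parallel"]
    seq_time, seq_compute = run["sequential"]
    speedup = seq_time / par_time
    compute_reduction = 1.0 - par_compute / seq_compute
    return speedup, compute_reduction


summary = {name: compare(run) for name, run in runs.items()}
for name, (speedup, reduction) in summary.items():
    print(f"{name:<14} speedup {speedup:5.2f}x   compute reduction {reduction:6.1%}")
```

The speedup varies considerably between datasets, from under 4x for the 100 Neg subset to over 13x for the 55 Neg/45 Pos subset, so a single run should not be read as representative.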

| Run Name | Type | Est. Energy (kWh) | Est. CO2e (kg) | Cost (USD) |
|---|---|---|---|---|
| 100 Neg | Parallel | 2.80 × 10^-9 | 1.12 × 10^-13 | 0.000467 |
| 100 Neg | Sequential | 9.45 × 10^-9 | 3.78 × 10^-13 | 0.001976 |
| 80 Pos/20 Neg | Parallel | 1.85 × 10^-9 | 7.39 × 10^-14 | 0.000348 |
| 80 Pos/20 Neg | Sequential | 9.98 × 10^-9 | 3.99 × 10^-13 | 0.002058 |
| 80 Neg/20 Pos | Parallel | 1.46 × 10^-9 | 5.84 × 10^-14 | 0.000283 |
| 80 Neg/20 Pos | Sequential | 8.27 × 10^-9 | 3.31 × 10^-13 | 0.001718 |
| 55 Neg/45 Pos | Parallel | 6.73 × 10^-10 | 2.69 × 10^-14 | 0.000152 |
| 55 Neg/45 Pos | Sequential | 7.97 × 10^-9 | 3.19 × 10^-13 | 0.001666 |
| 55 Pos/45 Neg | Parallel | 1.57 × 10^-9 | 6.29 × 10^-14 | 0.000297 |
| 55 Pos/45 Neg | Sequential | 8.26 × 10^-9 | 3.30 × 10^-13 | 0.001715 |
| 100 Pos | Parallel | 1.67 × 10^-9 | 6.70 × 10^-14 | 0.000314 |
| 100 Pos | Sequential | 9.53 × 10^-9 | 3.81 × 10^-13 | 0.00199 |

| Files | Execution | Time (s) | Compute (GB-s) | Energy (kWh) | CO2 (kg) | Cost (USD) |
|---|---|---|---|---|---|---|
| 100 | Parallel | 8.76 | 16.71 | 1.67 × 10^-9 | 6.68 × 10^-14 | 0.000319 |
| 100 | Sequential | 53.14 | 89.11 | 8.91 × 10^-9 | 3.56 × 10^-13 | 0.001525 |
| 1000 | Parallel | 87.64 | 167.12 | 1.67 × 10^-8 | 6.68 × 10^-13 | 0.003185 |
| 1000 | Sequential | 531.36 | 891.05 | 8.91 × 10^-8 | 3.56 × 10^-12 | 0.015251 |
| 10,000 | Parallel | 876.4 | 1671.17 | 1.67 × 10^-7 | 6.68 × 10^-12 | 0.03185 |
| 10,000 | Sequential | 5313.6 | 8910.50 | 8.91 × 10^-7 | 3.56 × 10^-11 | 0.15251 |
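
The extrapolated figures above are consistent with taking the mean of the six measured 100-file runs and scaling it linearly with the number of files. The sketch below reproduces that calculation for execution time. It is an inferred reconstruction rather than the authors' stated procedure, and it matches the table only approximately (within about 0.1 s at 1000 files), presumably because of rounding in the reported values.

```python
from statistics import mean

BASE_FILES = 100  # each measured run processed 100 files

# Wall-clock times (s) of the six measured runs, from the per-run results table.
parallel_s = [14.68, 9.68, 7.646, 3.525, 8.248, 8.774]
sequential_s = [56.354, 59.545, 49.339, 47.535, 49.242, 56.847]


def extrapolate(per_batch_values: list[float], n_files: int,
                base_files: int = BASE_FILES) -> float:
    """Scale the mean per-batch value linearly to n_files (assumed linearity)."""
    return mean(per_batch_values) * n_files / base_files


for n in (100, 1000, 10_000):
    par = extrapolate(parallel_s, n)
    seq = extrapolate(sequential_s, n)
    print(f"{n:>6} files: parallel {par:8.2f} s, sequential {seq:8.2f} s, "
          f"ratio {seq / par:.2f}x")
```

Linear scaling is a strong assumption: it ignores account concurrency limits, cold starts, and orchestration overhead, all of which could change the parallel figures at larger file counts.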

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Mankala, C.K.; Silva, R.J. Sustainable Real-Time NLP with Serverless Parallel Processing on AWS. Information 2025, 16, 903. https://doi.org/10.3390/info16100903
