Abstract

Digital platforms for intangible cultural heritage (ICH) function as vibrant electronic marketplaces, yet they grapple with content overload, high search costs, and under-leveraged social networks of heritage custodians. To address these electronic-commerce challenges, we present GCHS, a custodian-aware, graph-based deep learning model that enhances ICH recommendation by uniting three critical signals: custodians’ social relationships, user interest profiles, and content metadata. Using an attention mechanism, GCHS dynamically prioritizes influential custodians and resharing behaviors to streamline user discovery and engagement. We first characterize ICH-specific propagation patterns (e.g., custodians’ social influence, heterogeneous user interests, and content co-consumption) and then encode these factors within a collaborative graph framework. Evaluation on a real-world ICH dataset shows that GCHS improves Top-N recommendation accuracy over leading benchmarks and significantly outperforms them on next-N sequence prediction. By integrating social, cultural, and transactional dimensions, our approach not only drives more effective digital commerce interactions around heritage content but also supports sustainable cultural dissemination and stakeholder participation. This work advances electronic-commerce research by illustrating how graph-based deep learning can optimize content discovery, personalize user experience, and reinforce community networks in digital heritage ecosystems.

1. Introduction

Digital dissemination has become a critical pathway for both preserving and valorizing intangible cultural heritage (ICH). Through digital capture, editing, and multi-channel distribution, stakeholders can transform living traditions into cultural assets that generate social recognition and economic opportunities for custodians [1,2]. Major social platforms (e.g., TikTok, Weibo, Kuaishou) and e-commerce-driven livestreams now host demonstrations, tutorials, contextual commentary, and artisan storefronts, activities that extend the reach of custodians and create new incentives for practice transmission. At the same time, the scale and heterogeneity of these streams pose new challenges: discoverability is poor for long-tail traditions, popularity signals produce a Matthew effect that crowds out niche content, and sparse user–item interactions for specialized ICH genres limit the effectiveness of conventional recommenders.

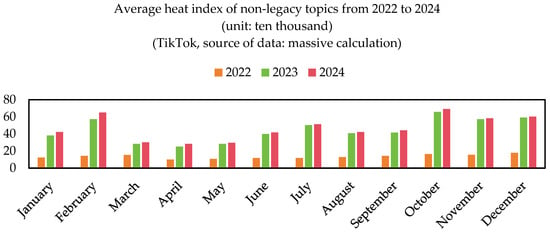

Figure 1 illustrates these dynamics empirically for one representative platform, plotting the monthly average “heat index” of ICH topics on TikTok from 2021 to 2024. Two key observations emerge. First, attention to ICH is uneven and episodic: it spikes around cultural events and promotional campaigns, while baseline interest for many traditions remains low. Second, a small set of custodians and content pieces captures a disproportionate share of attention, highlighting the dual problem of extreme skew and temporal volatility. These patterns motivate a recommender design that (a) raises exposure for authoritative but low-visibility items, (b) adapts to temporal surges, and (c) leverages provenance signals (custodian identity and behavior) to recover cultural relevance beyond simple popularity.

Figure 1.

Average heat index of intangible cultural heritage topics on TikTok.

To make these goals concrete, we summarize our contributions as follows. First, we propose GCHS, a custodian-aware graph recommendation framework that integrates (i) user–content interactions, (ii) custodian embeddings as provenance features, and (iii) attention-enhanced multi-hop graph aggregation to weight heterogeneous neighbor signals. GCHS is specifically designed to surface culturally authoritative items while mitigating popularity bias for ICH content. Second, we compile two real-world ICH datasets derived from social platforms (Weibo and TikTok) and evaluate GCHS against classic and state-of-the-art baselines (including Transformer-based sequential models), complemented by ablation and interpretability studies that explain model behavior. Third, we show how learned attention coefficients identify influential custodians and diffusion paths, and we provide per-custodian ablation and feature-contribution analyses that explain why particular custodians and features alter ranking outcomes. These analyses inform actionable recommendations for platforms and curators.

Taken together, this paper addresses both technical and domain needs: GCHS advances graph-based, provenance-aware recommendation for culturally sensitive content, and our empirical analyses guide platforms that aim to balance accuracy with cultural relevance and diversity.

2. Literature Review

2.1. Digital ICH and Custodians

Digital representations of intangible cultural heritage (ICH) encompass a wide variety of media formats that capture living traditions, e.g., craft techniques, performing arts, rituals, oral histories, and applied knowledge. In practice, digital ICH appears as institutional archives and curated exhibitions, mass-media productions, immersive reconstructions (VR/AR), and, increasingly, user-generated social media artifacts such as short videos, livestreams, and blog posts. Social media channels afford low-cost, decentralized publication and exceptional reach; they have become an important complement to formal preservation efforts by enabling custodians to demonstrate skills, teach techniques, and market cultural products to broad audiences. At the same time, the openness and scale of social platforms generate severe discoverability challenges: high-volume streams privilege popularity signals and crowd out niche, specialist content that is nonetheless culturally significant [3,4,5,6,7,8].

Central to the contemporary digital ecosystem are ICH custodians: formally recognized inheritors, practitioners, and transmitting agents who embody domain knowledge and often act as authoritative hubs of diffusion. Custodians typically maintain authenticated public accounts on social platforms, posting demonstrations, tutorials, and contextual narratives that help audiences interpret artifacts and practices. Their activity produces both explicit social ties (followers, mutual follows) and numerous implicit ties (shared audiences, co-interactions). These custodian-centered social structures have two immediate implications for computational curation. First, custodians supply provenance and endorsement signals that can increase trust and relevance for items that would otherwise languish in long tails. Second, custodian behavior is itself a dynamic cultural input: changes in a custodian’s style, output frequency, or collaborations reshape diffusion patterns and audience formation. Hence, any automated system that aims to support ICH discovery must consider custodians not merely as content producers but as relational and temporal actors that modulate how cultural items spread and accrue meaning [9,10,11].

2.2. Domain-Specific Dissemination Dynamics

Four interlocking domain characteristics make ICH dissemination distinct from standard media recommendation tasks. The first is the living, evolving nature of ICH: traditions adapt across time and practitioners, producing diverse manifestations even within a named category. This intra-tradition heterogeneity implies that simple lexical or tag matching underestimates the semantic richness users seek; system designers must therefore allow for stylistic, performative, and contextual differences in content representations [12,13,14]. The second characteristic is heterogeneous user motivation. Audiences consume ICH content for technical learning, emotional experience, identity affirmation, or commercial interest. These differing intents create diverse behavioral signatures: some users repeatedly seek deep, long-form tutorials, while others prefer brief, affective clips, so a one-size-fits-all ranking objective risks alienating segments and misallocating exposure [15,16,17]. Third, media affordances and regionality matter: some practices are ill-served by short-form video, whereas others, such as visual crafts, map well to brief clips, and regional meanings further affect cross-community reception [18,19]. Finally, social sharing and peer cascades are central to cultural transmission: resharing by committed followers or endorsements by trusted custodians can catalyze rediscovery and revaluation of long-tail items. Together, these dynamics demand recommendation objectives that trade off immediate click-based utility against cultural relevance, diversity, and provenance-aware exposure [5,9].

2.3. Recommendation Approaches Relevant to ICH

The recommendation literature offers a spectrum of techniques that map onto different facets of the ICH challenge. Classical content-based and collaborative-filtering methods remain instructive: content-based filtering leverages item descriptors to match inferred user profiles and is interpretable but prone to overspecialization [11,12], while collaborative filtering (both memory-based nearest neighbors and model-based matrix factorization) exploits interaction patterns but suffers from cold-start and sparsity for newly published or niche ICH items [13,14,15,16,17,18]. Hybrid systems combine content, collaborative signals, and auxiliary metadata (demographics, tags, or knowledge graphs) to improve robustness and diversity [19,20,21,22], providing a practical foundation for ICH-specific adaptations.

Socially aware recommenders explicitly inject peer influence into predictions. Early social recommender systems such as SoRec, TrustMF, and SoReg extended matrix factorization to include explicit or inferred social constraints and demonstrated how social ties can reduce sparsity and bias latent representations toward socially proximate interests [23,24,25]. Graph-embedding methods (e.g., Node2Vec) provided richer structural encodings for nodes in social and interaction graphs, but decoupling embedding and ranking limits end-to-end optimization [26,27,28]. The rise of graph neural networks (GNNs) addresses this limitation by learning node representations through iterative message passing over heterogeneous graphs, enabling multi-hop diffusion signals and end-to-end training that are well suited to custodian-centered diffusion scenarios [29,30,31,32,33,34,35,36,37].

Parallel to graph-based methods, sequential and Transformer-based models (e.g., SASRec, BERT4Rec) have become state-of-the-art for capturing temporal dependencies in user interaction sequences, demonstrating strong gains on recall and ranking metrics where session dynamics are important [38,39,40,41,42]. These sequence models are particularly effective when recent interactions predict near-term behavior, but they generally do not model cross-user diffusion or provenance explicitly. Recent hybrid architectures that fuse graph and sequence perspectives, e.g., time-gated GNNs and graph-sequence hybrids, offer promising avenues for combining temporal sensitivity with relational diffusion [43,44].

2.4. Gaps and Motivation for GCHS

Despite progress across these strands, three gaps are significant for ICH recommendation. First, few systems explicitly treat custodians as structured diffusion agents with authority and resharing behavior that materially alter item attractiveness; most social recommenders either incorporate coarse popularity or infer social regularities without modeling custodian provenance [23,24,25,29]. Second, powerful sequential and Transformer models that capture temporal patterns typically omit cross-user diffusion and custodian authority, yielding high recall for short-term signals but limited capacity to promote culturally authoritative, long-tail items. Third, many GNN-based recommenders assume static relational graphs [38,39,40,41,42] and thus overlook event-driven surges and the temporal evolution of custodian networks that often characterize cultural consumption, particularly around festivals or curated exhibitions [43,44].

These gaps motivate the design of a custodian-aware, attention-augmented graph recommendation framework. Such a model should (1) represent custodian provenance as a first-class feature to surface authoritative items, (2) use attention-weighted, multi-hop graph aggregation to discriminate informative neighbors from noisy co-interactions, and (3) be extensible to temporal modules that capture evolving custodian influence and user preference drift. The GCHS model presented in this work operationalizes these principles by fusing user–content interactions, custodian embeddings, and relevance-aware graph aggregation to better capture the social and cultural dynamics of ICH dissemination.

3. Methodology

3.1. Basic Theory

3.1.1. Collaborative Filtering

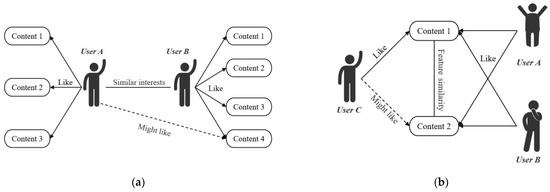

Collaborative filtering (CF) exploits patterns in user–item interactions to infer preferences. By computing similarity either among users (user-based CF, Figure 2a) or among items (item-based CF, Figure 2b), CF recommends items liked by similar users or items similar to those previously consumed. Implementations fall into two categories: memory-based CF, which computes similarities directly over the user–item matrix at request time, giving straightforward but computationally intensive recommendations; and model-based CF, which learns low-dimensional latent embeddings for users and items, commonly via matrix factorization, to predict preferences efficiently and mitigate sparsity. Given our focus on socially enhanced recommendation, we emphasize user-centric CF and detail both memory- and model-based paradigms as foundations for integrating social network signals.

Figure 2.

Types of collaborative filtering (CF): (a) user-based CF and (b) item-based CF.

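Memory-based collaborative filtering relies directly on historical user–item interactions. Let $R \in \{0,1\}^{m \times n}$ denote the interaction matrix for $m$ users and $n$ items, where $r_{ij} = 1$ if user $i$ has engaged with item $j$ and $r_{ij} = 0$ otherwise. Notably, we treat all interactions as implicit feedback. The similarity between users $i$ and $i'$ is measured by the cosine of their interaction vectors $\mathbf{r}_i$ and $\mathbf{r}_{i'}$ (rows of $R$):

$$\operatorname{sim}(i,i') = \frac{\mathbf{r}_i \cdot \mathbf{r}_{i'}}{\lVert \mathbf{r}_i \rVert \, \lVert \mathbf{r}_{i'} \rVert}$$

Because the interaction vectors are non-negative, this yields values in $[0,1]$. After normalization, these similarity scores weight neighbors’ contributions to predict a target user’s affinity for unseen items.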
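To make the memory-based procedure concrete, the following is a minimal Python sketch of user-based CF on binary implicit feedback. It assumes a small dense 0/1 matrix held in nested lists; the function names are hypothetical, and the scoring rule (a similarity-weighted average of neighbors’ interactions, normalized by total similarity) is one common choice rather than necessarily the variant used in GCHS.

```python
import math

def cosine(a, b):
    """Cosine similarity of two binary interaction vectors (0.0 if either is empty)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(a))  # for 0/1 vectors, sum(x * x) == sum(x)
    nb = math.sqrt(sum(b))
    return dot / (na * nb) if na and nb else 0.0

def predict_scores(R, target):
    """Score every item the target user has not interacted with.

    Each other user's row is weighted by its cosine similarity to the target,
    and the weighted sum is divided by the total similarity (normalization).
    """
    sims = [cosine(R[target], R[v]) if v != target else 0.0 for v in range(len(R))]
    total = sum(sims)
    scores = {}
    for j in range(len(R[target])):
        if R[target][j] == 0 and total > 0:
            scores[j] = sum(s * R[v][j] for v, s in enumerate(sims)) / total
    return scores

# Rows = users, columns = ICH items, 1 = implicit interaction (toy data).
R = [
    [1, 1, 0, 0],
    [1, 1, 1, 0],
    [0, 0, 1, 1],
]
print(predict_scores(R, 0))  # {2: 1.0, 3: 0.0}
```

User 0 shares two items with user 1 and none with user 2, so item 2 (consumed by user 1) receives the full normalized score while item 3 receives none.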

Memory-based methods require no model training and naturally improve as data volume grows; however, they exhibit several drawbacks in the ICH domain, namely cold-start for new items, extreme sparsity, popularity bias, and the assumption of homogeneous preferences.

To address sparsity and cold-start issues, model-based CF introduces low-dimensional latent factors. Matrix factorization decomposes $R$ into

$$R \approx U V, \qquad U \in \mathbb{R}^{m \times k}, \; V \in \mathbb{R}^{k \times n},$$

where each row $\mathbf{u}_i$ of $U$ represents a user’s $k$-dimensional preference vector and each column $\mathbf{v}_j$ of $V$ represents an item’s latent feature vector. The inner product $\mathbf{u}_i^{\top}\mathbf{v}_j$ estimates user $i$’s preference for item $j$. Learning $U$ and $V$ via regularized optimization mitigates sparsity, provides smoother predictions for cold-start items, and reduces computational cost at inference time.

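Let $\mathcal{K}$ denote the set of all observed user–item interactions. Under matrix factorization, each user $i$ and item $j$ is embedded into a shared $k$-dimensional latent space as $\mathbf{u}_i, \mathbf{v}_j \in \mathbb{R}^{k}$. The predicted preference of user $i$ for item $j$ is then given by the inner product $\hat{r}_{ij} = \mathbf{u}_i^{\top}\mathbf{v}_j$, and the corresponding prediction error is $e_{ij} = r_{ij} - \hat{r}_{ij}$. Model parameters $\{U, V\}$ are learned by minimizing the regularized squared error over $\mathcal{K}$,

$$\min_{U,V} \sum_{(i,j) \in \mathcal{K}} \left( e_{ij}^{2} + \lambda \left( \lVert \mathbf{u}_i \rVert^{2} + \lVert \mathbf{v}_j \rVert^{2} \right) \right),$$

where $\lambda$ controls the regularization strength, ensuring accurate reconstruction of the observed interactions while controlling model complexity.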
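A minimal sketch of fitting the factorization by stochastic gradient descent on the regularized squared error, in plain Python. The learning rate, regularization weight `lam`, latent size `k`, and toy data are illustrative assumptions, as is the inclusion of two sampled zero entries: with positive-only implicit feedback, predicting 1 everywhere would trivially minimize the objective.

```python
import random

def predict(U, V, i, j):
    """Predicted preference r_hat_ij = u_i . v_j."""
    return sum(a * b for a, b in zip(U[i], V[j]))

def loss(U, V, observed, lam=0.01):
    """Regularized squared error summed over the observed set K."""
    total = 0.0
    for i, j, r in observed:
        total += (r - predict(U, V, i, j)) ** 2
        total += lam * (sum(x * x for x in U[i]) + sum(x * x for x in V[j]))
    return total

def train_mf(observed, n_users, n_items, k=2, lr=0.05, lam=0.01, epochs=1000, seed=0):
    """Fit R ~ U V by SGD over observed (i, j, r_ij) triples.

    V is stored item-wise: V[j] holds the k latent factors of item j (column j of V).
    """
    rng = random.Random(seed)
    U = [[rng.gauss(0.0, 0.1) for _ in range(k)] for _ in range(n_users)]
    V = [[rng.gauss(0.0, 0.1) for _ in range(k)] for _ in range(n_items)]
    data = list(observed)  # copy so shuffling does not mutate the caller's list
    for _ in range(epochs):
        rng.shuffle(data)
        for i, j, r in data:
            e = r - predict(U, V, i, j)  # prediction error e_ij
            for f in range(k):
                u_f, v_f = U[i][f], V[j][f]
                U[i][f] += lr * (e * v_f - lam * u_f)
                V[j][f] += lr * (e * u_f - lam * v_f)
    return U, V

# Positives plus two sampled zeros (an assumption; see the note above).
K = [(0, 0, 1), (0, 1, 1), (1, 0, 1), (1, 2, 0), (2, 2, 1), (2, 0, 0)]
U, V = train_mf(K, n_users=3, n_items=3)
```

After training, the regularized loss falls well below that of an all-zero model (which would be 4 on this toy set), and observed positives score higher than observed zeros.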

3.1.2. Social Network-Based Recommendation

In digital-media platforms, social relations provide indispensable auxiliary signals for modeling user interests. These relations fall into two categories: explicit ties, such as follower–followee links, and implicit affinities, inferred when users interact with the same content or share connections to common creators, following the principle that “birds of a feather flock together.” Explicit linkage does not always imply shared preferences, whereas behavioral similarity can reveal latent affinity, so even ostensibly unconnected users may share strong implicit bonds. By collapsing explicit and implicit relations into a unified measure of social proximity, recommender systems can more faithfully reconstruct users’ preference landscapes.

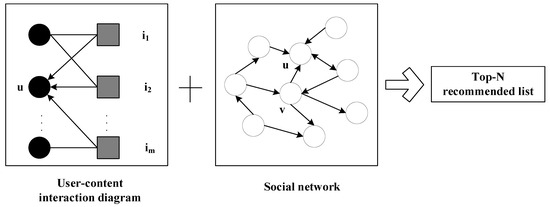

Integrating social proximity into collaborative filtering frameworks yields substantial gains in recommendation accuracy and robustness. Figure 3 illustrates the GCHS pipeline: the left panel shows the bipartite user–content interaction graph from which initial user and item features are derived, while the middle panel depicts the user social network used to enrich user representations via attention-weighted aggregation of neighbors. Item embeddings are further refined by aggregating features of their interacting audiences and custodian (creator) signals. Finally, the right panel shows the fusion and matching stage, where the learned user, item, and custodian representations are combined and ranked to produce a personalized Top-N recommendation list. Social ties enrich sparse interaction histories, thereby mitigating cold-start challenges for both new users and newly published ICH items. Moreover, recommendations backed by users’ own networks enjoy higher perceived credibility, fostering greater trust and engagement. The availability of social evidence, such as “users like you or your friends have also engaged with this item,” further enhances transparency and user acceptance.

Figure 3.

Recommendation integrating social proximity into collaborative filtering frameworks.

Beyond simple linkage, advanced methods weight network topology, such as common-friend counts, Jaccard-weighted shared neighbors, and friend-of-friend centrality, to refine proximity estimates. This rich social context has inspired a spectrum of models, from matrix-factorization approaches with social regularization to graph-based recommenders that leverage node embeddings, and most recently, graph neural networks that jointly learn from user–user and user–item graphs to capture complex relational patterns. As a result, social network-enhanced recommendation has become a pivotal research direction in delivering personalized, trustworthy, and explainable ICH content recommendations.
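As an illustration of how explicit and implicit ties might be collapsed into one proximity score, the sketch below combines a follow link with the Jaccard overlap of two users’ interaction sets. The blend weight `w_explicit` and the function names are hypothetical; the section does not fix a specific combination rule.

```python
def jaccard(a: set, b: set) -> float:
    """Jaccard overlap |a & b| / |a | b| (0.0 for two empty sets)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def social_proximity(u, v, follows, items, w_explicit=0.5):
    """Blend explicit and implicit ties into a single score in [0, 1].

    explicit: 1.0 if either user follows the other, else 0.0.
    implicit: Jaccard overlap of the two users' interacted-item sets.
    w_explicit is a hypothetical blend weight.
    """
    followed = v in follows.get(u, set()) or u in follows.get(v, set())
    explicit = 1.0 if followed else 0.0
    implicit = jaccard(items.get(u, set()), items.get(v, set()))
    return w_explicit * explicit + (1 - w_explicit) * implicit

follows = {"alice": {"bob"}}
items = {"alice": {1, 2, 3}, "bob": {3, 4}, "carol": {1, 2}}
print(social_proximity("alice", "bob", follows, items))    # 0.625 (followed, 1 shared item)
print(social_proximity("alice", "carol", follows, items))  # about 0.333 (no follow, 2 shared items)
```

Here carol has no explicit link to alice yet still obtains a non-zero proximity through co-interaction, which is the implicit bond described above.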

3.1.3. Attention Mechanism

Attention mechanisms have become integral to modern recommender systems by enabling dynamic weighting of input features and thus capturing the complex interactions between users and items. Variants include soft attention, hard attention, key–value attention, and multi-head attention.

Soft attention assigns a normalized probability to each element in an input sequence, such as a user’s historical interaction list, allowing the model to focus selectively on the most relevant past behaviors while down-weighting less informative ones. Hard attention operates as a binary selector that keeps only the most salient elements and discards the rest; for example, it can pick a small subset of significant purchase records from a voluminous history to drive the recommendation. Key–value attention treats each input as a (key, value) pair, facilitating fine-grained modeling of how specific item attributes influence user preferences. Multi-head attention partitions the input into multiple subspaces, each processed by an independent attention head; this parallel structure excels at identifying diverse preference patterns, such as shifts in taste across different temporal or contextual dimensions.
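The contrast between soft and hard attention can be sketched in a few lines: soft attention returns a softmax distribution over a user’s history, while the hard variant below returns a 0/1 mask. The top-k selection is a deterministic simplification (hard attention is often implemented stochastically), and the vectors are hypothetical.

```python
import math

def scores_for(query, keys):
    """Dot-product relevance of each history item to the current query."""
    return [sum(q * k for q, k in zip(query, key)) for key in keys]

def soft_attention(query, keys):
    """Soft attention: softmax over scores, one weight per history item."""
    scores = scores_for(query, keys)
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    return [e / z for e in exps]

def hard_attention(query, keys, top=1):
    """Hard attention (deterministic top-k variant): 0/1 mask over history items."""
    scores = scores_for(query, keys)
    keep = set(sorted(range(len(keys)), key=lambda i: scores[i], reverse=True)[:top])
    return [1 if i in keep else 0 for i in range(len(keys))]

user_query = [1.0, 0.0]                           # hypothetical current-interest vector
history = [[0.9, 0.1], [0.1, 0.9], [0.5, 0.5]]    # hypothetical past interactions
weights = soft_attention(user_query, history)
mask = hard_attention(user_query, history, top=1)
print(mask)  # [1, 0, 0]
```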

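In practice, attention layers are routinely combined with deep-learning and reinforcement-learning architectures to further enhance recommendation performance. In social network-based recommendation, for instance, the strength of ties between a target user and each neighbor varies according to interest alignment; naive aggregation would conflate strong and weak signals. GATs [31,32] address this by learning a distinct attention coefficient $\alpha_{ij}$ for each edge $(i,j)$, which reweights neighbor $j$’s feature vector before aggregation:

$$\mathbf{h}_i' = \sigma\!\left( \sum_{j \in \mathcal{N}(i)} \alpha_{ij} \mathbf{W} \mathbf{h}_j \right), \qquad \alpha_{ij} = \frac{\exp\!\left( \operatorname{LeakyReLU}\!\left( \mathbf{a}^{\top} [\mathbf{W}\mathbf{h}_i \,\Vert\, \mathbf{W}\mathbf{h}_j] \right) \right)}{\sum_{j' \in \mathcal{N}(i)} \exp\!\left( \operatorname{LeakyReLU}\!\left( \mathbf{a}^{\top} [\mathbf{W}\mathbf{h}_i \,\Vert\, \mathbf{W}\mathbf{h}_{j'}] \right) \right)},$$

where $\sigma(\cdot)$ is a non-linear activation, $\mathbf{W}$ is the weight matrix, $\mathbf{h}_j$ is the neighbor’s representation, $\mathcal{N}(i)$ is the neighbor set, $\Vert$ denotes concatenation, and $\mathbf{a}$ is the learned attention parameter vector. By weighting each neighbor’s contribution according to learned affinity, GATs produce node embeddings that more accurately reflect personalized social influence, yielding more precise and interpretable ICH content recommendations.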
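The GAT aggregation can be sketched for a single node and a single attention head using plain lists. The LeakyReLU scoring with slope 0.2 follows the original GAT formulation; the choice of `tanh` as the activation, the identity projection, and the attention vector in the usage lines are illustrative assumptions.

```python
import math

def matvec(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def leaky_relu(x, slope=0.2):
    return x if x > 0 else slope * x

def gat_update(h, i, neighbors, W, a, act=math.tanh):
    """Single-head GAT update for node i; returns (new embedding, {j: alpha_ij}).

    Pass i inside `neighbors` if a self-loop is wanted.
    """
    Wh = {j: matvec(W, h[j]) for j in set(neighbors) | {i}}
    # unnormalized score e_ij = LeakyReLU(a^T [W h_i || W h_j])
    e = {j: leaky_relu(sum(ak * zk for ak, zk in zip(a, Wh[i] + Wh[j]))) for j in neighbors}
    m = max(e.values())
    exp_e = {j: math.exp(e[j] - m) for j in neighbors}
    z = sum(exp_e.values())
    alpha = {j: exp_e[j] / z for j in neighbors}  # softmax over N(i)
    agg = [sum(alpha[j] * Wh[j][d] for j in neighbors) for d in range(len(W))]
    return [act(x) for x in agg], alpha

h = {0: [1.0, 0.0], 1: [0.0, 1.0], 2: [1.0, 1.0]}
W = [[1.0, 0.0], [0.0, 1.0]]  # identity projection, for illustration
a = [0.0, 0.0, 1.0, 0.0]      # hypothetical: scores neighbours by their first feature
h0_new, alpha = gat_update(h, 0, [1, 2], W, a)
```

Neighbor 2 has the larger first feature and therefore receives the larger attention coefficient, so it dominates node 0’s updated representation.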

3.1.4. GraphSAGE Model

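GraphSAGE (Graph SAmple and aggreGatE) is a spatial-domain graph convolutional framework that decomposes convolution into two stages: neighborhood aggregation and feature update [45]. At each layer $l$, a node $v$ first applies an aggregation function $\operatorname{AGGREGATE}(\cdot)$ to the feature vectors of its one-hop neighbors $\mathcal{N}(v)$, producing a summary vector that is concatenated with its own representation $\mathbf{h}_v^{(l-1)}$. The result is transformed by a learnable weight matrix $\mathbf{W}^{(l)}$ and a non-linear activation $\sigma(\cdot)$:

$$\mathbf{h}_{\mathcal{N}(v)}^{(l)} = \operatorname{AGGREGATE}^{(l)}\!\left( \{ \mathbf{h}_u^{(l-1)} : u \in \mathcal{N}(v) \} \right), \qquad \mathbf{h}_v^{(l)} = \sigma\!\left( \mathbf{W}^{(l)} \left[ \mathbf{h}_v^{(l-1)} \,\Vert\, \mathbf{h}_{\mathcal{N}(v)}^{(l)} \right] \right)$$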

Early variants such as NN4G simply sum neighbor vectors before combining them with the central node. GraphSAGE generalizes this idea by supporting multiple aggregator functions, e.g., mean, sum, LSTM-based, or pooling, to capture diverse local structures. To ensure scalability on large graphs, GraphSAGE employs neighborhood sampling, whereby each node randomly selects a fixed number of neighbors at each layer. This sampling bounds computation while preserving representative local context for each update.
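A minimal sketch of one GraphSAGE layer with a mean aggregator and uniform neighbor sampling, in plain Python. The ReLU activation and the final L2 normalization follow the original GraphSAGE algorithm; the weights and toy graph are hypothetical.

```python
import math
import random

def matvec(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def sage_layer(h, adj, W, sample_size, rng):
    """One GraphSAGE layer: sample, mean-aggregate, concat with self, W, ReLU, L2-normalize."""
    out = {}
    for v, feat in h.items():
        nbrs = adj.get(v, [])
        if len(nbrs) > sample_size:
            nbrs = rng.sample(nbrs, sample_size)  # bound the per-node cost
        if nbrs:
            mean = [sum(h[u][d] for u in nbrs) / len(nbrs) for d in range(len(feat))]
        else:
            mean = [0.0] * len(feat)  # isolated node: empty neighbourhood summary
        z = [max(0.0, x) for x in matvec(W, feat + mean)]  # ReLU(W [h_v || h_N(v)])
        norm = math.sqrt(sum(x * x for x in z)) or 1.0
        out[v] = [x / norm for x in z]
    return out

h = {0: [1.0, 0.0], 1: [0.0, 1.0], 2: [1.0, 1.0]}
adj = {0: [1, 2], 1: [0], 2: [0]}
W = [[1.0, 0.0, 1.0, 0.0],   # hypothetical weights: adds self and neighbour mean
     [0.0, 1.0, 0.0, 1.0]]
h1 = sage_layer(h, adj, W, sample_size=25, rng=random.Random(0))
```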

3.1.5. GGNN Model

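The Gated Graph Neural Network (GGNN) extends message passing on graph-structured data by incorporating GRU-style gating mechanisms [46]. At each propagation step $t$, a node $v$ receives incoming messages from both its in- and out-neighbors via separate adjacency matrices $\mathbf{A}^{\text{in}}$ and $\mathbf{A}^{\text{out}}$. These messages are concatenated into an aggregated input vector $\mathbf{a}_v^{(t)}$, which is then processed by gated update equations:

$$\mathbf{z}_v^{(t)} = \sigma\!\left( \mathbf{W}_z \mathbf{a}_v^{(t)} + \mathbf{U}_z \mathbf{h}_v^{(t-1)} \right)$$

$$\mathbf{r}_v^{(t)} = \sigma\!\left( \mathbf{W}_r \mathbf{a}_v^{(t)} + \mathbf{U}_r \mathbf{h}_v^{(t-1)} \right)$$

$$\tilde{\mathbf{h}}_v^{(t)} = \tanh\!\left( \mathbf{W} \mathbf{a}_v^{(t)} + \mathbf{U} \left( \mathbf{r}_v^{(t)} \odot \mathbf{h}_v^{(t-1)} \right) \right)$$

$$\mathbf{h}_v^{(t)} = \left( 1 - \mathbf{z}_v^{(t)} \right) \odot \mathbf{h}_v^{(t-1)} + \mathbf{z}_v^{(t)} \odot \tilde{\mathbf{h}}_v^{(t)}$$

where $\mathbf{h}_v^{(t-1)}$ is the node’s previous hidden state, $\mathbf{z}_v^{(t)}$ and $\mathbf{r}_v^{(t)}$ are the update and reset gates, $\mathbf{W}_z, \mathbf{U}_z, \mathbf{W}_r, \mathbf{U}_r, \mathbf{W}, \mathbf{U}$ are learnable weight matrices, $\odot$ denotes element-wise multiplication, and $\sigma(\cdot)$ is the sigmoid activation. Through these gated updates, GGNN captures both the structure and temporal evolution of information flow, making it well suited to modeling sequential or relational dependencies in ICH content recommendation.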
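The gated update can be sketched for a single node as follows, assuming the aggregated input vector (here `a`) has already been built from the in- and out-adjacency matrices and omitting bias terms. The keys of `P` are hypothetical labels for the six weight matrices of a GRU-style update.

```python
import math

def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    ex = math.exp(x)
    return ex / (1.0 + ex)

def matvec(M, x):
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]

def vadd(u, v):
    return [a + b for a, b in zip(u, v)]

def ggnn_step(a, h_prev, P):
    """One GRU-style GGNN update for a single node.

    a: aggregated input (in- and out-messages concatenated); h_prev: previous state;
    P: weight matrices Wz, Wr, W (d x len(a)) and Uz, Ur, U (d x d).
    """
    z = [sigmoid(x) for x in vadd(matvec(P["Wz"], a), matvec(P["Uz"], h_prev))]  # update gate
    r = [sigmoid(x) for x in vadd(matvec(P["Wr"], a), matvec(P["Ur"], h_prev))]  # reset gate
    reset_h = [ri * hi for ri, hi in zip(r, h_prev)]                              # r ⊙ h_prev
    h_tilde = [math.tanh(x) for x in vadd(matvec(P["W"], a), matvec(P["U"], reset_h))]
    # h_t = (1 - z) ⊙ h_prev + z ⊙ h_tilde
    return [(1 - zi) * hi + zi * ht for zi, hi, ht in zip(z, h_prev, h_tilde)]

# With all-zero weights both gates sit at 0.5 and the candidate state is 0,
# so the hidden state is simply halved.
Z24 = [[0.0] * 4 for _ in range(2)]
Z22 = [[0.0] * 2 for _ in range(2)]
zeros = {"Wz": Z24, "Uz": Z22, "Wr": Z24, "Ur": Z22, "W": Z24, "U": Z22}
print(ggnn_step([1.0, 2.0, 3.0, 4.0], [1.0, -2.0], zeros))  # [0.5, -1.0]
```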

3.2. Attention-Enhanced Static Social Relation Recommendation

We propose an innovative spatial GCN, an Attention-augmented GraphSAGE, tailored to ICH recommendation by jointly extracting three feature types: (1) content audience profiles, (2) user preference embeddings, and (3) custodian social-attraction vectors. These embeddings are aligned via similarity scoring to produce personalized ICH recommendations.

3.2.1. User–Content–Custodian Feature Extraction

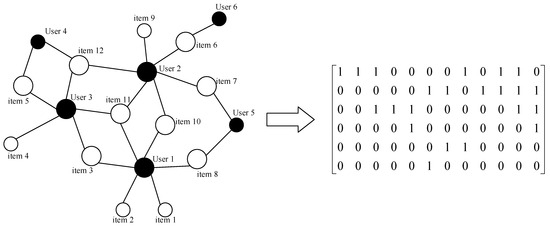

User Preference Embedding. Users with similar tastes tend to engage with the same content. We derive each user’s initial feature vector from the binary (multi-hot) user–item interaction matrix $R$, where $r_{ui} = 1$ indicates an interaction between user $u$ and item $i$ and $r_{ui} = 0$ otherwise. Figure 4 shows how user–item interactions are converted into this binary interaction matrix. Each user $u$ is thus represented by a binary vector reflecting their ICH interactions, and these vectors initialize the user features for downstream embedding and graph-based aggregation.

Figure 4.

User–item interaction to binary interaction matrix.

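We then refine these embeddings via the user’s implicit social ties, which are defined by overlapping interaction sets rather than simple follow links, to capture true interest affinity. Let $\mathcal{N}_s(u)$ denote $u$’s one-hop social neighbors who share at least one common ICH interaction. We apply an attention-weighted GraphSAGE aggregation over these neighbors to update $u$’s embedding:

$$\mathbf{e}_u^{(l+1)} = \sigma\!\left( \mathbf{W}_1^{(l)} \mathbf{e}_u^{(l)} + \sum_{v \in \mathcal{N}_s(u)} \alpha_{uv} \left( \mathbf{W}_1^{(l)} \mathbf{e}_v^{(l)} + \mathbf{W}_2^{(l)} \left( \mathbf{e}_v^{(l)} \odot \mathbf{e}_u^{(l)} \right) \right) \right)$$

where the attention coefficient $\alpha_{uv}$ quantifies the social trust between $u$ and neighbor $v$:

$$\alpha_{uv} = \frac{\exp\!\left( \mathbf{e}_u^{(l)\top} \mathbf{e}_v^{(l)} \right)}{\sum_{v' \in \mathcal{N}_s(u)} \exp\!\left( \mathbf{e}_u^{(l)\top} \mathbf{e}_{v'}^{(l)} \right)}$$

After $L$ layers, the final preference embedding for $u$ is the concatenation of all layer-wise outputs:

$$\mathbf{e}_u^{*} = \mathbf{e}_u^{(1)} \,\Vert\, \mathbf{e}_u^{(2)} \,\Vert\, \cdots \,\Vert\, \mathbf{e}_u^{(L)}$$

Here, $\mathbf{e}_u^{(l)}$ is the embedding of user $u$ after layer $l$; $\odot$ denotes element-wise multiplication, emphasizing features common to $u$ and $v$; $\mathbf{W}_1^{(l)}$ and $\mathbf{W}_2^{(l)}$ are trainable weight matrices; $\sigma(\cdot)$ is a non-linear activation; $\mathcal{N}_s(u)$ is the set of $u$’s implicit social neighbors; and $\alpha_{uv}$ is the normalized attention weight representing social trust between $u$ and $v$.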

This mechanism ensures that each neighbor’s contribution is modulated by both its relevance to u’s current embedding and its shared interests, yielding a robust user preference representation for subsequent matching against content and custodian features.
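A sketch of one attention-weighted user-side layer and the layer-wise concatenation. The exact update form is an assumption: each neighbor v contributes a message `W1·e_v + W2·(e_v ⊙ e_u)` weighted by a softmax over dot products, an NGCF-style choice consistent with the element-wise interaction term described above. ReLU as the activation and the identity weights in the usage lines are illustrative.

```python
import math

def matvec(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def softmax(scores):
    m = max(scores)  # shift for numerical stability
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    return [e / z for e in exps]

def user_layer(e, u, nbrs, W1, W2):
    """One attention-weighted aggregation step for user u (assumed update form)."""
    eu = e[u]
    agg = matvec(W1, eu)  # self term W1 e_u
    if nbrs:
        # alpha_uv: softmax over neighbours of <e_u, e_v> (social trust)
        alpha = softmax([sum(a * b for a, b in zip(eu, e[v])) for v in nbrs])
        for w, v in zip(alpha, nbrs):
            shared = [a * b for a, b in zip(e[v], eu)]  # e_v ⊙ e_u: common features
            msg = vadd_list(matvec(W1, e[v]), matvec(W2, shared))
            agg = [g + w * m for g, m in zip(agg, msg)]
    return [max(0.0, x) for x in agg]  # ReLU as sigma (hypothetical choice)

def vadd_list(x, y):
    return [a + b for a, b in zip(x, y)]

def user_embedding(e0, u, nbrs, layers):
    """Run len(layers) layers over all users; return e_u^(1) || ... || e_u^(L)."""
    e, parts = dict(e0), []
    for W1, W2 in layers:
        e = {x: user_layer(e, x, nbrs.get(x, []), W1, W2) for x in e}
        parts.append(e[u])
    return [x for p in parts for x in p]

I2 = [[1.0, 0.0], [0.0, 1.0]]  # identity weights, for illustration only
e0 = {0: [1.0, 0.0], 1: [1.0, 1.0], 2: [0.0, 1.0]}
nbrs = {0: [1, 2], 1: [0], 2: []}
emb = user_embedding(e0, 0, nbrs, layers=[(I2, I2), (I2, I2)])
```

Neighbor 1 shares user 0’s first feature, so it receives the larger trust weight and its element-wise interaction term reinforces that shared dimension.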

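Content Audience Embedding. The audience profile of each ICH item reflects the diversity of user interests it attracts. We first compute an initial embedding for content $i$ by averaging the embeddings of its interacting users:

$$\mathbf{c}_i^{(0)} = \frac{1}{|\mathcal{U}(i)|} \sum_{u \in \mathcal{U}(i)} \mathbf{e}_u^{*}$$

where $\mathcal{U}(i)$ is the set of users who have interacted with content $i$, and $\mathbf{e}_u^{*}$ denotes user $u$’s final preference embedding.

To refine $\mathbf{c}_i^{(0)}$, we apply an attention-weighted GraphSAGE update that privileges users whose interests most closely align with the content:

$$\mathbf{c}_i^{(l+1)} = \sigma\!\left( \mathbf{W}_1^{(l)} \mathbf{c}_i^{(l)} + \sum_{u \in \mathcal{U}(i)} \alpha_{iu} \left( \mathbf{W}_1^{(l)} \mathbf{e}_u^{(l)} + \mathbf{W}_2^{(l)} \left( \mathbf{e}_u^{(l)} \odot \mathbf{c}_i^{(l)} \right) \right) \right)$$

where the attention coefficients $\alpha_{iu}$ are computed via a softmax over dot-product scores:

$$\alpha_{iu} = \frac{\exp\!\left( \mathbf{c}_i^{(l)\top} \mathbf{e}_u^{(l)} \right)}{\sum_{u' \in \mathcal{U}(i)} \exp\!\left( \mathbf{c}_i^{(l)\top} \mathbf{e}_{u'}^{(l)} \right)}$$

After $L$ layers, we concatenate all layer-wise embeddings to form the final content representation:

$$\mathbf{c}_i^{*} = \mathbf{c}_i^{(1)} \,\Vert\, \mathbf{c}_i^{(2)} \,\Vert\, \cdots \,\Vert\, \mathbf{c}_i^{(L)}$$

Here, $\mathbf{c}_i^{(l)}$ is the embedding of content $i$ after $l$ GraphSAGE iterations; $\mathbf{e}_u^{(l)}$ is user $u$’s embedding at layer $l$; $\odot$ denotes element-wise multiplication, highlighting features shared by content and user; $\mathbf{W}_1^{(l)}$ and $\mathbf{W}_2^{(l)}$ are trainable weight matrices; $\sigma(\cdot)$ is a non-linear activation function; $\alpha_{iu}$ is the normalized attention weight representing the relevance of user $u$ to content $i$; and $\mathcal{U}(i)$ is the set of users who have interacted with content $i$.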
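The initial audience embedding and the dot-product attention weights can be sketched as below; the refinement layer itself mirrors the user-side update and is omitted. The user identifiers and embeddings are hypothetical toy values.

```python
import math

def mean_vec(vectors):
    """Component-wise mean of a non-empty list of equal-length vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]

def content_init(user_emb, audience):
    """Initial content embedding: average of the final embeddings of its audience."""
    return mean_vec([user_emb[u] for u in audience])

def audience_attention(c_i, user_emb, audience):
    """Softmax over <c_i, e_u>: users closest to the content's profile get more weight."""
    scores = [sum(a * b for a, b in zip(c_i, user_emb[u])) for u in audience]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    return {u: x / z for u, x in zip(audience, exps)}

user_emb = {"u1": [1.0, 0.0], "u2": [0.8, 0.2], "u3": [0.0, 1.0]}
audience = ["u1", "u2", "u3"]
c0 = content_init(user_emb, audience)               # approximately [0.6, 0.4]
alpha = audience_attention(c0, user_emb, audience)  # u1 > u2 > u3
```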

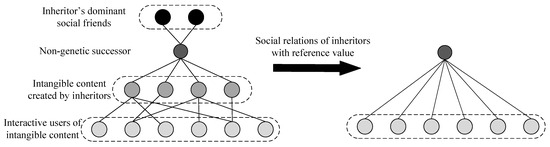

Custodian Embedding via Static Social Relation. Custodians drive the propagation of ICH within digital communities, and each custodian’s personal style and thematic focus attract distinct audiences. We distinguish two types of custodian–user ties: explicit followers (account subscribers) and implicit followers (users who have actively engaged with the custodian’s content). Prior research indicates that mere subscription without interaction conveys minimal preference information; moreover, all explicit followers who interact are already captured as implicit ties (Figure 5). Therefore, we define a custodian’s embedding solely by the features of their created ICH items. Concretely, we average the embeddings of the content items $i$ authored by custodian $h$:

$$\mathbf{s}_h = \frac{1}{|\mathcal{C}(h)|} \sum_{i \in \mathcal{C}(h)} \mathbf{c}_i^{*}$$

where $\mathbf{s}_h$ is the resulting custodian embedding, $\mathcal{C}(h)$ denotes the set of ICH items published by $h$, and $\mathbf{c}_i^{*}$ is the final attention-weighted embedding of content $i$ from Equation (18).
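The custodian embedding is a plain group-by mean over authored items. A minimal sketch, with hypothetical item and custodian identifiers:

```python
def custodian_embeddings(content_emb, author_of):
    """Mean of the final embeddings of all items authored by each custodian.

    content_emb: item id -> embedding list; author_of: item id -> custodian id.
    """
    sums, counts = {}, {}
    for item, vec in content_emb.items():
        h = author_of[item]
        if h not in sums:
            sums[h] = [0.0] * len(vec)
            counts[h] = 0
        sums[h] = [s + x for s, x in zip(sums[h], vec)]
        counts[h] += 1
    return {h: [s / counts[h] for s in sums[h]] for h in sums}

content_emb = {"p1": [1.0, 0.0], "p2": [0.0, 1.0], "p3": [0.2, 0.6]}
author_of = {"p1": "h1", "p2": "h1", "p3": "h2"}
print(custodian_embeddings(content_emb, author_of))  # {'h1': [0.5, 0.5], 'h2': [0.2, 0.6]}
```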

Figure 5.

Inheritor’s social relationship.

3.2.2. Recommendation Model Based on Attention Mechanism and Static Social Relationships of ICH Inheritors

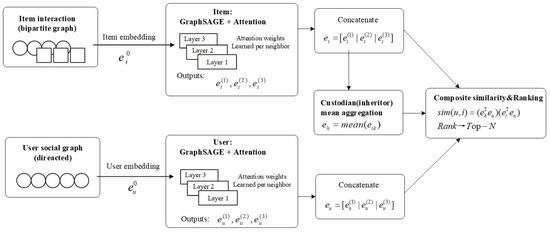

GCHS Model Framework. Building upon the earlier construction of user preference features, ICH content audience features, and inheritor attractiveness features, we propose a recommendation framework that computes user–content similarity and generates a Top-N recommendation list accordingly. The proposed model is denoted as GCHS (Graph-based Collaborative model incorporating Heritage content and Social relationships). The overall architecture is illustrated in Figure 6.

Figure 6.

Schematic of the GCHS architecture.

In Figure 6, the top row shows item-side processing. Raw user–item interactions produce an initial item embedding $\mathbf{c}_i^{(0)}$, which is refined by a multi-layer GraphSAGE with attention-weighted neighbor aggregation; the layer-wise outputs $\mathbf{c}_i^{(l)}$ are concatenated to form the final item vector $\mathbf{c}_i^{*}$. Middle right: custodian (inheritor) features $\mathbf{s}_h$ are obtained by mean-aggregating the embeddings of items authored by that custodian. The bottom row shows user-side processing, which mirrors the item-side pipeline: the social graph provides neighbors whose features are attention-aggregated through a multi-layer GraphSAGE to yield $\mathbf{e}_u^{*}$. Final fusion: the composite similarity combines custodian–user influence and item–user match; candidates are ranked by this score, and the Top-N list is produced.

The GCHS model requires feature representations from three distinct perspectives: user preferences, ICH content characteristics, and inheritor attributes. These are extracted by a three-module structure comprising user interest features, ICH content features, and inheritor features.

First, a user–content interaction graph and a user–user social graph are constructed based on users’ historical behavior and their social relationships. Initial embeddings of users and content are obtained from these graphs. Then, an attention-augmented, multi-layer spatial graph convolutional network (GraphSAGE) iteratively aggregates and refines the feature vectors. Each layer applies attention-based aggregation to capture complex dependencies, and the final user and content embeddings are obtained by concatenating representations across all layers.

For inheritor feature learning, we model implicit social relationships by aggregating the features of users who have interacted with the ICH content published by the inheritor. A mean aggregation strategy is adopted to derive the inheritor’s feature vector. Finally, the user–content similarity score is computed by integrating the inheritor features, leading to the generation of a comprehensive similarity measure used to rank and select the Top-N recommendations.

User–Content Similarity Evaluation. The user–content similarity assessment serves as the core mechanism for matching users with potentially relevant ICH content. Recognizing that inheritors play a significant role in influencing user engagement with ICH content, as evidenced by prior studies, we incorporate inheritor features into the similarity metric.

Among various similarity metrics (e.g., cosine similarity, Euclidean distance, inner product), we adopt the inner product for its efficiency and compatibility with embedding-based methods. The similarity between user $u$ and ICH content $i$ is defined as follows:

$$\operatorname{sim}(u,i) = \mathbf{e}_u^{*\top} \mathbf{c}_i^{*} + \mathbf{e}_u^{*\top} \mathbf{s}_h$$

where $\mathbf{e}_u^{*}$ denotes the embedding vector of user $u$, $\mathbf{c}_i^{*}$ denotes the embedding of the recommended ICH content $i$, and $\mathbf{s}_h$ represents the embedding of the inheritor $h$ who published content $i$.

The similarity value $\operatorname{sim}(u,i)$ reflects both the relevance of the content to the user and the influence of the content publisher (inheritor). The Top-N items with the highest similarity scores are selected as final recommendations.
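A minimal sketch of the composite scoring and Top-N selection, assuming the custodian term enters as an unweighted second inner product with the user embedding and that already-consumed items are excluded from the candidate set; both are assumptions, and the toy embeddings are hypothetical.

```python
import heapq

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def top_n(e_u, content_emb, author_of, custodian_emb, n, exclude=()):
    """Rank candidates by sim(u, i) = <e_u, c_i> + <e_u, s_h(i)> and keep the n best."""
    scores = {
        i: dot(e_u, c_i) + dot(e_u, custodian_emb[author_of[i]])
        for i, c_i in content_emb.items()
        if i not in exclude  # skip items the user has already consumed
    }
    return heapq.nlargest(n, scores, key=scores.get)

e_u = [1.0, 0.0]
content_emb = {"p1": [0.9, 0.1], "p2": [0.1, 0.9], "p3": [0.5, 0.5]}
author_of = {"p1": "h1", "p2": "h1", "p3": "h2"}
custodian_emb = {"h1": [0.5, 0.5], "h2": [0.8, 0.2]}
print(top_n(e_u, content_emb, author_of, custodian_emb, n=2))  # ['p1', 'p3']
```

Item p3 is only a moderate content match, but its custodian’s embedding aligns with the user, which lifts it above p2; this is the provenance effect the composite score is meant to capture.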

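Training Methodology. The model training focuses on learning the parameters of the graph aggregators and associated weight variables. To optimize these parameters, a loss function is defined based on the principle of neighborhood similarity maximization and negative sampling:

$$\mathcal{L}(\mathbf{z}_u) = -\log\!\left( \sigma\!\left( \mathbf{z}_u^{\top} \mathbf{z}_v \right) + \epsilon \right) - Q \cdot \mathbb{E}_{v_n \sim P_n(v)} \log\!\left( \sigma\!\left( -\mathbf{z}_u^{\top} \mathbf{z}_{v_n} \right) + \epsilon \right)$$

where $\mathbf{z}_u$ is the embedding of the target node (user or content), $\mathbf{z}_v$ is the embedding of a neighboring node reached via random walk, $\sigma(\cdot)$ denotes the sigmoid function, $v_n \sim P_n(v)$ indicates that $v_n$ is a negative sample drawn from a noise distribution $P_n$, $Q$ represents the number of negative samples, and $\epsilon$ is a small constant for numerical stability.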

The first term in the loss function encourages the embeddings of neighboring nodes to be close (i.e., high similarity), while the second term penalizes the similarity of distant or randomly sampled nodes. This contrastive learning objective ensures that the learned embeddings preserve both structural and semantic proximity in the graph space.
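The objective can be sketched for one positive pair and a handful of sampled negatives. The finite sum over `negatives` stands in for the expectation term, and the degree^0.75 noise distribution in `sample_negatives` is an assumption borrowed from word2vec-style negative sampling, since the section does not specify the noise distribution.

```python
import math
import random

def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    ex = math.exp(x)
    return ex / (1.0 + ex)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def neg_sampling_loss(z_u, z_v, negatives, eps=1e-10):
    """Positive term pulls z_u toward neighbour z_v; each negative z_n is pushed away.

    Summing over the Q sampled negatives is a Monte Carlo estimate of the
    Q * E_{v_n ~ P_n}[log sigma(-z_u . z_n)] term.
    """
    pos = -math.log(sigmoid(dot(z_u, z_v)) + eps)
    neg = -sum(math.log(sigmoid(-dot(z_u, z_n)) + eps) for z_n in negatives)
    return pos + neg

def sample_negatives(nodes, degree, q, rng):
    """Draw q negatives with probability proportional to degree ** 0.75 (assumed noise distribution)."""
    weights = [degree[n] ** 0.75 for n in nodes]
    return rng.choices(nodes, weights=weights, k=q)

good = neg_sampling_loss([1.0, 0.0], [1.0, 0.0], [[-1.0, 0.0]])  # neighbour aligned
bad = neg_sampling_loss([1.0, 0.0], [-1.0, 0.0], [[1.0, 0.0]])   # neighbour opposed
negs = sample_negatives(["a", "b", "c"], {"a": 1, "b": 4, "c": 9}, q=5, rng=random.Random(0))
```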

4. Data

4.1. Data Description

Weibo, as a major user-generated content platform, hosts a vast community of officially certified ICH custodians and heritage organizations. To promote intangible cultural heritage preservation, Weibo curates and amplifies topics such as “Meet ICH,” “Intangible Cultural Heritage,” “ICH in Everyday Life,” and “Remarkable Chinese ICH,” which collectively garnered over one million discussions and in excess of one billion views. These topic tags provide a rich source of data on custodians, content, users, and interaction records.

To construct our experimental dataset, we employed the WeiboSpider crawler to collect information from 100 certified ICH custodian accounts. From each custodian, we retrieved up to 50 ICH-related post IDs published between 1 September 2023 and 31 March 2024, and for each post, up to 500 user IDs corresponding to users who interacted with the content. Following this procedure, we amassed the raw dataset summarized in Table 1. To streamline downstream analysis, all original Weibo IDs for users, posts, and custodians were remapped to sequential integer indices. The raw samples and their indexed equivalents are provided in the Supplementary Material.
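The sampling caps and the ID remapping can be sketched as follows. This is a hypothetical helper, not part of WeiboSpider: it assumes the crawled records arrive as (custodian, post, user) triples already restricted to the collection window, keeps posts and users in first-seen order up to the caps, and assigns indices in order of first appearance.

```python
from collections import defaultdict


def cap_and_index(records, max_posts=50, max_users=500):
    """Apply per-custodian and per-post caps, then remap raw IDs to sequential integers.

    records: iterable of raw (custodian_id, post_id, user_id) triples, assumed to be
             already restricted to the 1 Sep 2023 to 31 Mar 2024 collection window.
    Returns the indexed triples and the id -> index dictionaries.
    """
    posts_of = defaultdict(list)  # custodian -> distinct posts kept, in first-seen order
    users_of = defaultdict(list)  # post -> distinct users kept, in first-seen order
    kept = []
    for h, p, u in records:
        if p not in posts_of[h]:
            if len(posts_of[h]) >= max_posts:
                continue  # this custodian already has max_posts posts
            posts_of[h].append(p)
        if u in users_of[p] or len(users_of[p]) >= max_users:
            continue  # duplicate interaction, or the post already has max_users users
        users_of[p].append(u)
        kept.append((h, p, u))

    maps = {"custodian": {}, "post": {}, "user": {}}

    def index(kind, raw_id):
        # New IDs receive the next free integer; known IDs keep their index.
        return maps[kind].setdefault(raw_id, len(maps[kind]))

    indexed = [(index("custodian", h), index("post", p), index("user", u)) for h, p, u in kept]
    return indexed, maps


raw = [("c9", "p77", "uA"), ("c9", "p77", "uB"), ("c9", "p80", "uA"), ("c3", "p12", "uC")]
print(cap_and_index(raw)[0])                            # [(0, 0, 0), (0, 0, 1), (0, 1, 0), (1, 2, 2)]
print(cap_and_index(raw, max_posts=1, max_users=1)[0])  # [(0, 0, 0), (1, 1, 1)]
```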

Table 1.

Summary of the overall dataset.

4.2. Evaluation Metrics

To assess the effectiveness of ICH recommendations, we evaluate both classification accuracy (how well the model identifies relevant items) and ranking quality (how effectively relevant items are prioritized).

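Classification Accuracy is measured by Precision, Recall, and their harmonic mean, the F-measure:

$$\text{Precision} = \frac{TP}{N}, \qquad \text{Recall} = \frac{TP}{|R_u|}, \qquad F = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$$

where $|R_u|$ is the number of truly relevant items for the user, N is the number of items predicted as relevant, and TP is the number of correctly predicted relevant items.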
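For a single user, these definitions translate directly into code. In this sketch `relevant` is assumed to be the user's held-out test items; averaging the per-user values over all test users is our assumption about how the reported figures are aggregated.

```python
def precision_recall_f(recommended, relevant):
    """Top-N Precision, Recall and F-measure for one user.

    recommended: list of the N recommended item ids (the Top-N list)
    relevant:    set of truly relevant item ids for the user (e.g., held-out test items)
    """
    n = len(recommended)
    tp = len(set(recommended) & set(relevant))  # correctly predicted relevant items
    precision = tp / n if n else 0.0
    recall = tp / len(relevant) if relevant else 0.0
    f = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f


# Two of the five recommended items are among the user's four relevant items.
print(precision_recall_f([3, 7, 1, 9, 4], {7, 4, 12, 15}))
```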

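Ranking Quality is measured by Normalized Discounted Cumulative Gain (NDCG), which accounts for the positions of relevant items in the recommendation list:

$$\mathrm{DCG}@n = \sum_{i=1}^{n} \frac{rel_i}{\log_2(i+1)}, \qquad \mathrm{NDCG}@n = \frac{\mathrm{DCG}@n}{\mathrm{IDCG}@n}$$

where $rel_i$ is the relevance score (similarity) of the item at rank i, n is the recommendation list length, and IDCG@n is the DCG of the ideal ordering.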
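A small sketch of NDCG, assuming the common log2(i + 1) positional discount and an ideal DCG obtained by sorting the same relevance scores in descending order. Implementations that instead build the ideal list from all of the user's relevant items produce different values when relevant items fall outside the Top-n.

```python
import math


def dcg(rels):
    """DCG of a ranked list of relevance scores; rank i starts at 1."""
    return sum(rel / math.log2(i + 1) for i, rel in enumerate(rels, start=1))


def ndcg(rels):
    """DCG of the produced ranking divided by DCG of the ideal (sorted) ranking."""
    ideal = dcg(sorted(rels, reverse=True))
    return dcg(rels) / ideal if ideal > 0 else 0.0


# Binary relevance for a Top-5 list with hits at ranks 2 and 5.
print(round(ndcg([0, 1, 0, 0, 1]), 4))
```

Graded relevance (for example, the similarity scores mentioned above) can be passed in place of the 0/1 labels without changing the code.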

4.3. Parameter Settings and Experimental Environment

The GCHS model is implemented in PyTorch (Table 2). The data are split 8:1:1 into training, validation, and test sets, and training uses an initial learning rate of 0.01.

Table 2.

Experimental Configuration.

GraphSAGE hyperparameters were chosen based on empirical validation: the number of layers is k = 2, and the neighbor sampling sizes are 25 for layer 1 and 10 for layer 2. Neighbors are sampled with replacement if the available pool is smaller than the target size, and without replacement otherwise.
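The sampling rule can be sketched as below. The function names and the adjacency-dictionary representation are assumptions, and we read "layer 1" as the first hop from the target node, which matches the common (25, 10) fan-out convention in GraphSAGE examples.

```python
import random


def sample_neighbors(neighbors, size, rng=random):
    """Sample a fixed-size neighbor list for one node.

    With replacement when the pool is smaller than `size`, without replacement otherwise.
    """
    if not neighbors:
        return []  # isolated node: nothing to aggregate (this handling is assumed)
    if len(neighbors) < size:
        return rng.choices(neighbors, k=size)  # with replacement
    return rng.sample(neighbors, size)         # without replacement


def two_hop_sample(adj, node, sizes=(25, 10), rng=random):
    """Sample first-hop (25) and second-hop (10 per first-hop node) neighborhoods for k = 2."""
    hop1 = sample_neighbors(adj.get(node, []), sizes[0], rng)
    hop2 = [sample_neighbors(adj.get(v, []), sizes[1], rng) for v in hop1]
    return hop1, hop2


rng = random.Random(42)
adj = {0: [1, 2, 3], 1: list(range(10, 60)), 2: [0], 3: [0, 1]}
hop1, hop2 = two_hop_sample(adj, 0, rng=rng)
print(len(hop1), {len(h) for h in hop2})  # 25 {10}
```

Fixed fan-outs keep the per-node cost bounded by 25 × 10 second-hop samples regardless of how popular a custodian or post is, which is what makes mini-batch training on skewed social graphs tractable.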

Top-N recommendations were evaluated at N ∈ {5, 10, 15, 20, 25, 30}, with both classification and ranking metrics reported.

5. Results and Discussion

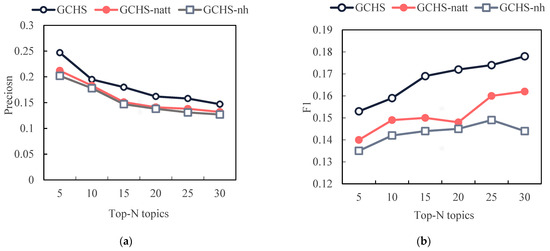

5.1. Ablation Study

To validate the contributions of inheritor features and attention-based aggregation, we compare the full GCHS model against two ablated variants using controlled experiments.

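GCHS-nh removes the inheritor feature term, so the user–content similarity reduces to a simple inner product of the user embedding $\mathbf{z}_u$ and the content embedding $\mathbf{z}_i$:

$$s(u,i) = \mathbf{z}_u^{\top}\mathbf{z}_i$$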

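GCHS-natt replaces all attention-weighted aggregations in user and content feature learning with unweighted summations. The update rules become:

$$\mathbf{e}_u^{(l)} = \sigma\Big(\sum_{v \in \mathcal{N}(u)} \big(\mathbf{W}_1 \mathbf{e}_v^{(l-1)} + \mathbf{W}_2 (\mathbf{e}_v^{(l-1)} \odot \mathbf{e}_u^{(l-1)})\big)\Big)$$

$$\mathbf{e}_i^{(l)} = \sigma\Big(\sum_{v \in \mathcal{N}(i)} \big(\mathbf{W}_1 \mathbf{e}_v^{(l-1)} + \mathbf{W}_2 (\mathbf{e}_v^{(l-1)} \odot \mathbf{e}_i^{(l-1)})\big)\Big)$$

where $\mathbf{e}_u^{(l)}$ and $\mathbf{e}_i^{(l)}$ are the embeddings of user u and content i after layer l; ⊙ denotes element-wise multiplication, which highlights shared features; $\mathbf{W}_1$ and $\mathbf{W}_2$ are trainable weight matrices; σ(⋅) is a non-linear activation; and $\mathcal{N}(u)$ and $\mathcal{N}(i)$ denote the first-hop social neighbors of u and the users who interacted with i, respectively.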
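To make the difference between the two aggregation modes concrete, the sketch below contrasts a softmax-weighted neighbor combination with the unweighted sum used by GCHS-natt. The dot-product scoring rule for attention is an assumption (the section does not specify the scoring function), and the weight matrices and activation are omitted so that only the weighting differs.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def aggregate(center, neighbors, use_attention=True):
    """Combine neighbor embeddings for one node (weight matrices and activation omitted).

    use_attention=True:  softmax over dot-product relevance scores (assumed scoring rule)
    use_attention=False: unweighted sum, as in the GCHS-natt ablation
    """
    if use_attention:
        weights = softmax([dot(center, v) for v in neighbors])
    else:
        weights = [1.0] * len(neighbors)
    return [sum(w * v[k] for w, v in zip(weights, neighbors)) for k in range(len(center))]


center = [1.0, 0.0]
neighbors = [[1.0, 0.0], [-1.0, 0.0]]  # one relevant neighbor, one noisy co-interaction
print(aggregate(center, neighbors, use_attention=False))  # [0.0, 0.0]
print(aggregate(center, neighbors, use_attention=True))   # first entry equals tanh(1), about 0.76
```

With the unweighted sum, the aligned neighbor and the opposing co-interaction cancel out; attention downweights the opposing neighbor and keeps most of the relevant signal.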

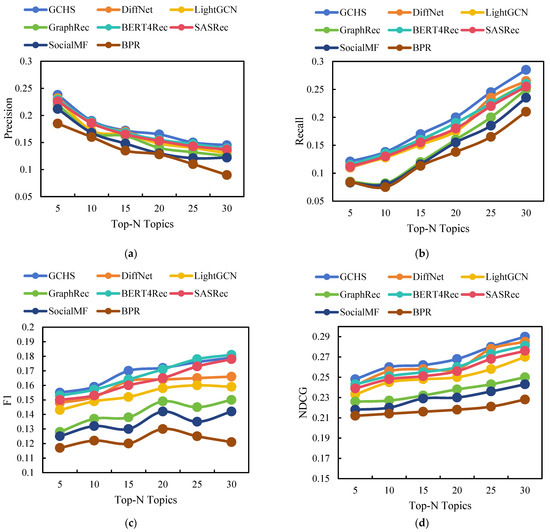

Figure 7 compares Precision, Recall, F-measure, and NDCG across GCHS, GCHS-nh, and GCHS-natt for various Top-N settings. All models exhibit the typical trade-off: Precision decreases and Recall increases with larger N, while F-measure and NDCG rise overall.

Figure 7.

Results on the Weibo dataset: (a) precision, (b) F1 score, (c) NDCG, and (d) Recall varying with N.

Crucially, GCHS outperforms both ablations on all four metrics. Removing inheritor features (GCHS-nh) or disabling attention (GCHS-natt) leads to consistent performance degradation. This demonstrates, first, that inheritor features materially enhance recommendation quality by injecting custodial influence into similarity scoring, and second, that attention-based aggregation yields more accurate user and content embeddings by weighting neighbors according to relevance, thereby improving both classification accuracy and ranking fidelity. These results confirm the positive, complementary contributions of our two novel components to the GCHS framework.

5.2. Comparative Experiments

Building on the ablation study’s validation of each component’s utility, we further evaluate GCHS against eight representative baselines on the same dataset. These baselines encompass both classic and state-of-the-art recommenders (Table 3), including algorithms that leverage implicit feedback (BPR), social relations (SocialMF), graph neural networks (GraphRec, NGCF, LightGCN, DiffNet), and Transformer-style sequential models (SASRec and BERT4Rec).

Table 3.

Description of baseline models.

First, using each method, we compute Precision, Recall, F-measure, and NDCG for Top-N recommendations, where N ∈ {5, 10, 15, 20, 25, 30}.

Figure 8 shows that, on the Weibo dataset, GCHS consistently achieves the best balance among Precision, Recall, F1, and ranking quality. For example, GCHS obtains a Precision at 5 of 0.238, outperforming the closest graph-based competitor, DiffNet (0.232), and the Transformer baselines BERT4Rec (0.229) and SASRec (0.226). GCHS also attains higher ranking fidelity (NDCG at 30 of 0.290) and strong recall growth as N increases (Recall at 30 of 0.285), indicating that the model both surfaces highly relevant items near the top and expands relevant coverage as the recommendation list grows. These patterns reflect two strengths of the approach: (1) the custodian (inheritor) embedding injects authoritative diffusion signals that reduce effective sparsity for long-tail ICH items, and (2) attention-weighted aggregation discriminates informative neighbors from noisy co-interactions. The Transformer sequential models (SASRec, BERT4Rec) perform competitively, especially on Recall and NDCG, likely because they capture short-term temporal patterns; however, they do not uniformly surpass GCHS on Precision or F1, which we attribute to their lack of an explicit custodian-diffusion signal.

Figure 8.

Results on the Weibo dataset: (a) precision, (b) Recall, (c) F1 score, and (d) NDCG varying with N.

Although the percentage-point gains may appear modest in absolute terms, small improvements in ranking and F-measure are often meaningful in recommendation tasks because they translate into materially higher exposure for long-tail items and measurable downstream effects (e.g., click or purchase volume and curator income). In the ICH domain, even modest relative increases can materially improve the discovery of niche cultural content and thus have a practical impact beyond the numeric gain. Because these gains must be weighed against their computational cost, we further evaluate the complexity of GCHS and the baseline models.

Table 4 summarizes the asymptotic computational complexity and costs of GCHS and the baselines considered in this study. The cost expressions are given per training epoch and per user at inference time; they are intended to show relative scaling rather than precise wall-clock durations. Specifically, |U| is the number of users, |I| is the number of items, |H| is the number of custodians, |V| = |U| + |I| is the total number of graph nodes, |E| is the number of user–item edges, |Es| is the number of social edges, d is the embedding dimension, L is the number of GNN layers, S is the number of sampled neighbors per node per layer, Q is the number of negative samples per positive, and m is the per-user sequence length.

GCHS attains its expressive power by integrating multi-hop aggregation, attention weighting, and custodian (inheritor) aggregation; this yields improved recommendation accuracy but incurs higher computational cost than the lightweight baselines (BPR, SocialMF) and LightGCN. Relative to sampling-based GNNs, the primary overheads are (a) attention score computation across sampled neighbors, an extra O(S·d) per node per layer, and (b) dense linear projections, O(d²) per node if full d × d transforms are used. Custodian aggregation is negligible by comparison.

Transformer-based sequence models (SASRec, BERT4Rec) scale differently: their complexity grows quadratically with the per-user sequence length m, which can make them expensive for long histories. They remain highly effective for short-term sequential patterns but are not directly comparable in graph-centric settings without additional graph or social side information.

In practice, the O(|I|·d) inference cost is mitigated by candidate generation and approximate nearest neighbor search, and training costs are reduced by neighbor sampling, mini-batching, mixed precision, and GPU acceleration. Table 4 also reports the runtimes of the models. GCHS yields meaningful accuracy gains for ICH recommendation at a moderate computational cost: it is approximately 32% slower per epoch than DiffNet but improves F-measure, NDCG, and accuracy. LightGCN offers the most efficient GNN trade-off, while the Transformer sequence models (SASRec and BERT4Rec) are far more expensive in both time and memory.

Table 4.

Complexity comparison.

Finally, we apply GCHS to the full test set to generate Top-N lists. Table 5 presents representative Top-7 recommendation lists generated by GCHS for a subset of test users. The lists are clearly individualized: each user’s Top-1 item differs, and each Top-7 list contains a mix of low- and high-index content IDs, indicating that the model produces distinct, personalized candidate sets rather than repeating a single popular item for all users. At the same time, some items (e.g., IDs 1067 and 1412) appear in multiple users’ lists, suggesting that GCHS balances personalization with exposure of broadly relevant content. The widely separated ID values within each list are consistent with the method surfacing long-tail content alongside more central items, a desirable property for promoting niche intangible cultural heritage, although ID values by themselves do not measure popularity. To quantify these qualitative observations fully, precision/recall and NDCG over the test set should be complemented with diversity/novelty metrics and manual inspection confirming that recommended items are both relevant and meaningful to the target users.

Table 5.

Top-N Recommended Content.

5.3. Experiment on TikTok Dataset

To further evaluate the generalizability and robustness of GCHS in a realistic social media environment, we conducted supplementary experiments on a TikTok dataset assembled from certified ICH custodians. The TikTok data were collected and preprocessed with the same pipeline applied to the Weibo dataset, including account certification filtering for custodians, interaction sampling caps, anonymization, and re-indexing. Although TikTok is video-centric and exhibits platform-specific user behavior, the core signals we model (inheritor/custodian influence, user social ties, and attention-weighted neighbor aggregation) remain present and informative. The dataset summary and sample interaction records are reported in Table 6.

Table 6.

Summary of the overall TikTok dataset.

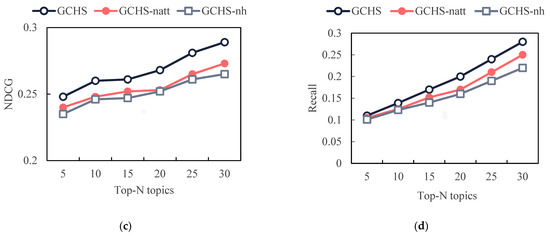

We compared the full GCHS model with the two ablated variants used in the main experiments (GCHS-natt and GCHS-nh) and computed Precision, Recall, F1, and NDCG across Top-N settings; the results are shown in Figure 9. Experimental settings mirrored the main study: the same 8:1:1 train/validation/test split ratio, the same hyperparameter choices where applicable, and Top-N evaluation for N ∈ {5, 10, 15, 20, 25, 30}.

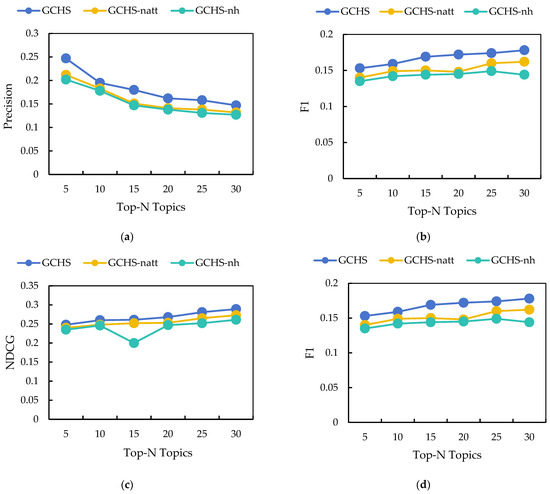

Figure 9 indicates that, across all evaluated Top-N thresholds, GCHS consistently outperformed both ablations on Precision, Recall, F1, and NDCG, demonstrating that (i) injecting custodian features and (ii) using attention-based, relevance-weighted aggregation materially improve recommendation quality in a real social-platform scenario. For example, GCHS attains a Precision at 5 of 0.247, a relative improvement of 16.5% over GCHS-natt (0.212) and 22.3% over GCHS-nh (0.202); its NDCG at 30 is 0.289, representing relative gains of 5.9% and 9.1% over GCHS-natt (0.273) and GCHS-nh (0.265), respectively.
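The percentages quoted for the TikTok ablation are relative gains, (GCHS − ablation) / ablation, rather than percentage-point differences. The arithmetic can be checked directly from the reported values:

```python
def relative_gain(new, old):
    """Relative improvement of `new` over `old`, in percent."""
    return 100.0 * (new - old) / old


# (label, full GCHS value, ablated value) as reported for the TikTok dataset.
for label, full, ablated in [
    ("P@5 vs GCHS-natt", 0.247, 0.212),
    ("P@5 vs GCHS-nh", 0.247, 0.202),
    ("NDCG@30 vs GCHS-natt", 0.289, 0.273),
    ("NDCG@30 vs GCHS-nh", 0.289, 0.265),
]:
    print(f"{label}: {relative_gain(full, ablated):.1f}%")
# P@5 vs GCHS-natt: 16.5%
# P@5 vs GCHS-nh: 22.3%
# NDCG@30 vs GCHS-natt: 5.9%
# NDCG@30 vs GCHS-nh: 9.1%
```

For comparison, the corresponding absolute differences in Precision at 5 are only 0.035 and 0.045, which is why the relative and absolute framings should not be mixed.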

Figure 9.

Results on the TikTok dataset: (a) precision, (b) F1 score, (c) NDCG, and (d) Recall varying with N.

These results suggest two complementary mechanisms driving GCHS’s superior performance. First, the custodian (inheritor) embedding provides authoritative diffusion cues that effectively reduce the sparsity of long-tail ICH items and increase the likelihood that culturally relevant content is surfaced near the top of recommendation lists. Second, attention-based aggregation enables the model to selectively upweight informative neighbors and downweight noisy co-interactions, improving early-rank precision and overall ranking fidelity. Together, these components produce robust improvements in both accuracy and ranking quality on real social-platform data, confirming the value of integrating custodian signals with relevance-aware graph aggregation for ICH recommendation.

We further evaluate GCHS against BPR, SocialMF, GraphRec, NGCF, LightGCN, DiffNet, SASRec, and BERT4Rec on the TikTok dataset.

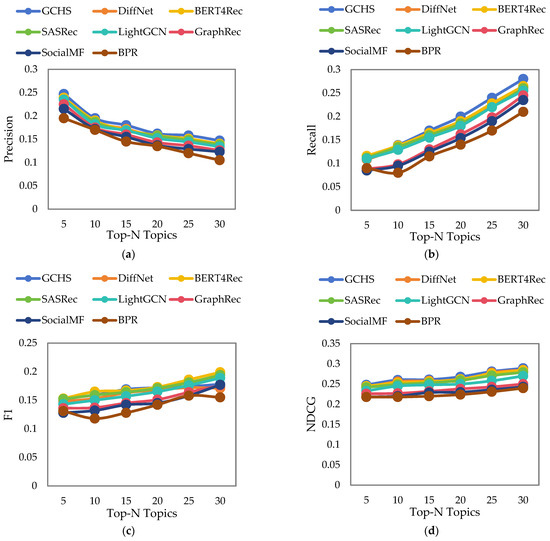

On the TikTok ICH corpus (self-certified public accounts), Figure 10 shows a very similar but slightly amplified pattern: GCHS achieves higher absolute performance across metrics (e.g., Precision at 5 of 0.247, Recall at 30 of 0.280, and NDCG at 30 of 0.289) than the same set of baselines (e.g., DiffNet, LightGCN, GraphRec, SocialMF, BPR, SASRec, and BERT4Rec). Notably, the relative advantage of custodian-aware modeling is more pronounced on this dataset: the gap between GCHS and non-custodian graph models widens slightly (e.g., the Precision at 5 difference vs. DiffNet is 0.009), which is consistent with stronger or more coherent custodian signals in public-account style data, where certified custodians publish and reshare content systematically. Ablation comparisons (GCHS vs. GCHS-nh/GCHS-natt) further confirm that both the custodian component and attention weighting contribute materially to these gains (removing the custodian component produces double-digit relative drops in Precision at 5 in our controlled tests).

Figure 10.

Results on the TikTok dataset: (a) precision, (b) recall, (c) F1, and (d) NDCG varying with N.

Across both the Weibo and TikTok corpora, the results are stable: (a) GCHS delivers the best overall trade-off across Precision, Recall, F1, and NDCG; (b) the Transformer sequential models (SASRec, BERT4Rec) are strong competitors that frequently match or exceed graph baselines on Recall and NDCG, but they do not consistently exceed GCHS on Precision/F1, which we attribute to their lack of explicit cross-user custodian signals; and (c) the two novel ingredients in GCHS explain most of the improvement, especially on the TikTok dataset, where custodian behavior is prominent. Practically, these findings suggest that platforms aiming to promote culturally meaningful, long-tail ICH content should combine sequence-aware personalization with custodian-aware graph signals: sequence models capture short-term user dynamics, while GCHS’s custodian signals increase exposure and trust for authoritative heritage items.

5.4. Qualitative Insights

For each trained GCHS model, we aggregated the attention weights that flow from custodian nodes to users/content and ranked custodians by their total attention mass. A custodian’s aggregate attention mass equals the sum of the learned attention weights originating from that custodian’s node, normalized across all custodians. In Table 7, columns report the custodian identifier, the number of published ICH posts in the corpus, and the custodian’s share of total attention mass. The top 10 custodians account for a disproportionate share of model attention, indicating concentrated influence in the diffusion of ICH content.
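The aggregation can be sketched as follows, assuming the learned attention weights have already been exported as (custodian, target, weight) triples. The function name, the input format, and the per-post column are illustrative assumptions rather than the authors' code.

```python
from collections import defaultdict


def rank_custodians(edges, posts, top_k=10):
    """Rank custodians by their normalized aggregate attention mass.

    edges: iterable of (custodian_id, target_id, attention_weight), one entry per
           learned attention weight on an edge leaving a custodian node
    posts: {custodian_id: number of ICH posts published in the corpus}
    Returns (rows, top_k_share): rows are (custodian, share, posts, share per post),
    sorted by descending share; shares sum to 1 over all custodians.
    """
    mass = defaultdict(float)
    for custodian, _target, weight in edges:
        mass[custodian] += weight  # total outgoing attention of this custodian
    total = sum(mass.values())
    rows = sorted(
        ((c, m / total, posts[c], m / total / posts[c]) for c, m in mass.items()),
        key=lambda row: (-row[1], row[0]),
    )
    top_share = sum(row[1] for row in rows[:top_k])
    return rows, top_share


edges = [("h1", "u1", 0.5), ("h1", "i3", 0.25), ("h2", "u1", 0.25), ("h3", "u2", 0.5)]
rows, share = rank_custodians(edges, posts={"h1": 5, "h2": 1, "h3": 2}, top_k=2)
for row in rows:
    print(row)
print(round(share, 4))  # 0.8333
```

In the toy data, h1 holds the largest share of attention but the lowest attention per post, which is the kind of distinction a per-post column can expose.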

Table 7 ranks custodians by the aggregate attention mass assigned by the trained GCHS model. Across both the Weibo and TikTok corpora, attention is strongly concentrated: the top 10 custodians together account for approximately 40–45% of total custodian attention mass, while the remaining custodians share the residual mass. This concentration indicates that GCHS learns to prioritize a relatively small set of highly influential inheritors when forming user–content similarity scores, a pattern consistent with empirical observations of ICH dissemination in China, where a limited number of nationally or provincially recognized custodians often act as diffusion hubs. The rightmost columns (posts authored; average attention per post) confirm that high attention mass is not merely a function of posting volume but reflects learned authority and diffusion effectiveness.

Table 7.

Top custodians by aggregate attention mass (Weibo & TikTok experiments).

In addition, we measured the change in ranking quality (ΔNDCG at 30 and ΔPrecision at 5) after zeroing the outgoing edges or removing the content authored by each custodian, and ranked custodians by their impact. For each listed custodian, we compute the relative change in NDCG at 30 and Precision at 5 after removing the custodian’s outgoing edges, i.e., simulating the absence of that custodian’s diffusion signals. Values are reported as percentage point changes relative to the intact model, and custodians are ordered by their average impact on NDCG at 30.
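A sketch of the per-custodian ablation loop, assuming the metric is recomputed on the pruned graph without retraining (the text does not state whether the model is retrained). `evaluate` is a hypothetical hook standing in for GCHS scoring followed by NDCG at 30 or Precision at 5; the toy metric exists only to make the example runnable. The sketch reports relative changes in percent.

```python
def custodian_ablation(edges, custodians, evaluate):
    """Rank custodians by the relative metric change caused by dropping their outgoing edges.

    edges:      list of (source, target) pairs in the graph
    custodians: custodian node ids to ablate one at a time
    evaluate:   callable(edges) -> metric value; a hypothetical hook standing in
                for re-running GCHS scoring on the pruned graph
    Returns [(custodian, relative change in %)], most harmful removal first.
    """
    baseline = evaluate(edges)
    if baseline == 0:
        raise ValueError("relative change is undefined for a zero baseline")
    impact = []
    for h in custodians:
        pruned = [(s, t) for s, t in edges if s != h]  # zero out h's outgoing edges
        impact.append((h, 100.0 * (evaluate(pruned) - baseline) / baseline))
    return sorted(impact, key=lambda item: item[1])


# Toy metric: share of four reference edges that still reach relevant items.
relevant = {"i1", "i2", "i3"}


def toy_metric(es):
    return sum(t in relevant for _s, t in es) / 4


edges = [("h1", "i1"), ("h1", "i2"), ("h2", "i3"), ("u1", "i1")]
print(custodian_ablation(edges, ["h1", "h2"], toy_metric))  # [('h1', -50.0), ('h2', -25.0)]
```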

Table 8 quantifies the causal influence of individual custodians on ranking quality via targeted ablation. Removing the edges or content of the most influential custodians yields measurable performance drops: ablating the top one to three custodians reduces NDCG at 30 by 3–6% on average and Precision at 5 by 1.5–4%, demonstrating that a handful of custodians materially shape ranking outcomes. These ablation results corroborate the attention-mass analysis: custodians with high aggregate attention are also those whose removal has the largest detrimental effect on recommendation performance. From a practical perspective, the results identify custodians whose engagement (e.g., through promotion or certification) would most effectively improve platform-level dissemination of curated ICH items.

Table 8.

Per-custodian ablation: impact on ranking quality (ΔNDCG at 30 and ΔPrecision at 5).

For the feature-level contribution analysis, we averaged local explanations computed from the final similarity score, decomposing the score into the contributions of (i) user–content affinity, (ii) the content audience embedding, and (iii) the custodian embedding. Table 9 reports the mean contribution (as a percentage of the absolute score) and its standard deviation across the test set.
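Assuming the local explanation yields one signed, additive contribution per component for each user–content pair, the shares and their dispersion can be computed as below. The component names are hypothetical labels, and we use the population standard deviation, which may differ from the authors' choice.

```python
import statistics


def contribution_shares(components):
    """Share of the absolute score attributed to each additive component of one score.

    components: {component name: signed contribution to one user-content score}
    """
    total = sum(abs(v) for v in components.values())
    return {k: (abs(v) / total if total else 0.0) for k, v in components.items()}


def summarize(per_pair_components):
    """Mean and population standard deviation (in %) of each component's share."""
    shares = [contribution_shares(c) for c in per_pair_components]
    return {
        k: (100 * statistics.mean([s[k] for s in shares]),
            100 * statistics.pstdev([s[k] for s in shares]))
        for k in shares[0]
    }


# Two hypothetical user-content pairs with signed component contributions.
pairs = [
    {"user_content": 0.5, "audience": 0.25, "custodian": 0.25},
    {"user_content": 0.5, "audience": -0.125, "custodian": 0.375},
]
for name, (mean, sd) in summarize(pairs).items():
    print(f"{name}: {mean:.2f}% +/- {sd:.2f}%")
```

Normalizing by the absolute total keeps a negative contribution (such as the audience term in the second pair) from cancelling the others, so the shares always sum to 100%.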

Table 9 shows that all three components contribute meaningfully to the final similarity score. Averaged across users and datasets, user–content affinity accounts for the largest share (45–50%), custodian embeddings contribute 28–32% on average, and content-audience features explain the remaining 18–25%. The non-trivial share attributable to custodian embeddings confirms that custodian signals are not ancillary: they are core determinants of the model’s ranking decisions. The standard deviations indicate heterogeneity across users; for some user segments (e.g., users with long-tail interests), custodian signals play an even larger role, consistent with the model’s function of surfacing authoritative content for niche tastes.

Table 9.

Average feature contributions to the final user–content similarity score.

6. Discussion

The empirical results validate that integrating custodian (inheritor) features and attention-based neighbor aggregation within a graph framework substantially enhances recommendation effectiveness in digital marketplaces. Ablation studies show that omitting custodian social signals diminishes accuracy, demonstrating that modeling custodians’ influence enriches similarity assessments and addresses data sparsity by introducing authoritative relational cues [55]. Likewise, replacing attention-weighted aggregation with unweighted summation degrades embedding quality, confirming that relevance-aware neighbor weighting produces more discriminative user and item representations, which is consistent with findings on graph attention networks for social recommendation [56]. When evaluated against both classical (BPR, SocialMF) and advanced GNN baselines (GraphRec, NGCF, LightGCN, DiffNet), the proposed model achieves the highest Top-N accuracy, F-measure, and NDCG. These improvements illustrate how multi-hop diffusion, combined with custodian-driven attention, more effectively captures the complex interplay of social ties, content metadata, and user interests, thereby reducing search costs and mitigating popularity biases in content discovery [57].

Beyond metric improvements, the framework offers practical advantages for e-commerce platforms seeking agile, socially aware recommendation solutions. By amplifying underexposed heritage items through custodian resharing behaviors, the model fosters long-tail engagement and supports culturally diverse content circulation. Its attention mechanism could be deployed in real time across web and mobile channels, enabling dynamic personalization that adapts to evolving user preferences and social contexts. This coherent integration of social, cultural, and transactional dimensions demonstrates a flexible approach to emerging recommendation challenges, aligning with the broader goal of leveraging advanced machine learning techniques to optimize user experience and extend digital commerce reach [58].

Although the Weibo and TikTok datasets provide valuable real-world benchmarks, they are limited to Chinese platforms and cultural contexts. This raises concerns about cross-platform generalizability and scalability. Future research should extend GCHS to larger, multilingual, and cross-domain datasets (e.g., YouTube or international ICH archives) to validate its robustness across diverse digital ecosystems.

7. Conclusions

In response to the challenges of content overload, high search costs, and under-utilized social networks in digital content platforms, we present GCHS: a custodian-aware graph-based deep learning model that fuses inheritor relationships, user interest profiles, and content metadata through an attention-augmented GraphSAGE network. Ablation and comparative evaluations on real-world ICH datasets from Weibo and TikTok confirm that incorporating custodian influence and relevance-driven neighbor weighting yields consistent improvements in Top-N accuracy, F-measure, and NDCG over state-of-the-art baselines. By enhancing personalized content discovery and amplifying long-tail engagement, this approach advances the design of recommendation systems that are both socially conscious and technologically robust, offering a scalable solution for diverse e-commerce applications.

One promising extension is the integration of temporal dynamics. Custodian influence and user interests are inherently time-varying, shaped by events, festivals, and shifting cultural trends. Embedding temporal signals into the GCHS framework, for instance, via time-aware graph neural networks or sequential Transformers, would enable more realistic modeling of evolving social relations and preference trajectories.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/info16100902/s1, Table S1. Representative Sample of Raw Interaction Records (Weibo); Table S2. Sample of Indexed Interaction Records (Weibo); Table S3. Representative Sample of Raw Interaction Records (TikTok); Table S4. Sample of Indexed Interaction Records (TikTok).

Author Contributions

W.X.: Conceptualization, methodology, resources, discussion, writing—original draft preparation and funding acquisition; B.Y.: Data curation, visualization; H.Z.: data collection and visualization. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by a project grant from Chengdu Academy of Social Sciences (Grant No. 2024CS116), Key Laboratory of Digital Protection and Intelligent Sharing of Traditional Local Opera Resources (Chengdu University of Technology), Sichuan Provincial Department of Culture and Tourism (Grant No. 24XQZD02; Grant No. 24XQYB05), Sichuan Mineral Resources Research Center (Grant No. SCKCZY2025-YB004).

Institutional Review Board Statement

The study was conducted in accordance with the 2013 version of the Declaration of Helsinki and approved by the Institutional Review Board (IRB) of Chengdu University of Technology. The protocol number is No. 24XQYB05 and the approval date is 5 December 2024. Data used in this research were collected from social media platforms Weibo and TikTok. Only publicly available posts were included and all data were processed and reported in aggregated or anonymized form so that individual users cannot be identified. Any direct quotations included in the manuscript have been redacted or paraphrased to prevent identification. The research team complied with the platforms’ terms of service and relevant national or regional data protection laws. If participant contact or additional data collection, e.g., surveys or interviews, was performed, informed consent was obtained from each participant as documented in the informed consent form.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Conflicts of Interest

Author Bowen Yu was employed by the company Chengdu Zero-One Era Technology Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Abbreviations

| Abbreviation | Definition |
|---|---|
| ANN | Approximate Nearest Neighbors |
| ASR | Attentive Social Recommendation |
| BPR | Bayesian Personalized Ranking |
| BST | Behavior Sequence Transformer |
| CNN | Convolutional Neural Network |
| DCG | Discounted Cumulative Gain |
| GAT | Graph Attention Network |
| GCHS | Graph-based Collaborative model incorporating Heritage content and Social relationships |
| GCN | Graph Convolutional Network |
| GGNN | Gated Graph Neural Network |
| GNN | Graph Neural Network |
| GraphSAGE | Graph Sample and Aggregate |
| GRU | Gated Recurrent Unit |
| ICH | Intangible Cultural Heritage |
| LSTM | Long Short-Term Memory |
| LightGCN | Lightweight Graph Convolutional Network |
| NGCF | Neural Graph Collaborative Filtering |
| NDCG | Normalized Discounted Cumulative Gain |
| PMF | Probabilistic Matrix Factorization |
| RNN | Recurrent Neural Network |
| SASRec | Self-Attentive Sequential Recommendation |
| SOR | Stimulus–Organism–Response |
| Top-N | Top-N Recommendation |

References

- Sun, L.; Li, J.N.; Wang, Z.Y.; Liu, W.S.; Zhang, S.; Wu, J.T. Research on the Redesign of China’s Intangible Cultural Heritage Based on Sustainable Livelihood-The Case of Luanzhou Shadow Play Empowering Its Rural Development. Sustainability 2024, 16, 4555. [Google Scholar] [CrossRef]

- Trcek, D. Cultural heritage preservation by using blockchain technologies. Herit. Sci. 2022, 10, 6. [Google Scholar] [CrossRef]

- Zhuang, S. Research on Digital Protection of Intangible Cultural Heritage Based on Modern Information Technology. In Proceedings of the 2021 4th International Conference on Information Systems and Computer Aided Education, Dalian, China, 24–26 September 2021; Association for Computing Machinery: Dalian, China, 2021; pp. 2546–2549. [Google Scholar]

- Ye, X.J.; Ruan, Y.W.; Xia, S.B.; Gu, L.W. Adoption of digital intangible cultural heritage: A configurational study integrating UTAUT2 and immersion theory. Humanit. Soc. Sci. Commun. 2025, 12, 23. [Google Scholar] [CrossRef]

- Zhang, C.; Tian, Y.; Zhang, Y. Analysis of the Digital Dissemination Path of Intangible Cultural Heritage from the Perspective of Grounded Theory. Adv. Soc. Behav. Res. 2025, 15, 12–15. [Google Scholar] [CrossRef]

- Tricarico, L.; Lorenzetti, E.; Morettini, L. Crowdsourcing Intangible Heritage for Territorial Development: A Conceptual Framework Considering Italian Inner Areas. Land 2023, 12, 1843. [Google Scholar] [CrossRef]

- Zhang, Y. The Impact of Short Videos on the Creation and Dissemination of Intangible Cultural Heritage. Commun. Humanit. Res. 2024, 32, 140–146. [Google Scholar] [CrossRef]

- Ma, Z.C.; Guo, Y.Y. Leveraging Intangible Cultural Heritage Resources for Advancing China’s Knowledge-Based Economy. J. Knowl. Econ. 2023, 15, 12946–12978. [Google Scholar] [CrossRef]

- Wang, H. A refined pushing method for financial product marketing data based on user interest mining. Int. J. Bus. Intell. Data Min. 2025, 26, 461–472. [Google Scholar] [CrossRef]

- Dou, J.H.; Qin, J.Y.; Jin, Z.X.; Li, Z. Knowledge graph based on domain ontology and natural language processing technology for Chinese intangible cultural heritage. J. Vis. Lang. Comput. 2018, 48, 19–28. [Google Scholar] [CrossRef]

- Kulkarni, S.; Rodd, S.F. Context Aware Recommendation Systems: A review of the state of the art techniques? Comput. Sci. Rev. 2020, 37, 100255. [Google Scholar] [CrossRef]

- Aljunid, M.F.; Huchaiah, M.D. IntegrateCF: Integrating explicit and implicit feedback based on deep learning collaborative filtering algorithm. Expert Syst. Appl. 2022, 207, 117933. [Google Scholar] [CrossRef]

- Mu, R.; Zeng, X.; Zhang, J. Heterogeneous information fusion based graph collaborative filtering recommendation. Intell. Data Anal. 2023, 27, 1595–1613. [Google Scholar] [CrossRef]

- Torkashvand, A.; Jameii, S.M.; Reza, A. Deep learning-based collaborative filtering recommender systems: A comprehensive and systematic review. Neural Comput. Appl. 2023, 35, 24783–24827. [Google Scholar] [CrossRef]

- Ramathulasi, T.; Babu, M. Assessing User Interest in Web API Recommendation using Deep Learning Probabilistic Matrix Factorization. Int. J. Adv. Comput. Sci. Appl. 2023, 14, 744–752. [Google Scholar] [CrossRef]

- Li, G.; Ou, W. Pairwise probabilistic matrix factorization for implicit feedback collaborative filtering. Neurocomputing 2016, 204, 17–25. [Google Scholar] [CrossRef]

- Strahl, J.; Peltonen, J.; Mamitsuka, H.; Kaski, S. Scalable Probabilistic Matrix Factorization with Graph-Based Priors. Proc. AAAI Conf. Artif. Intell. 2020, 34, 5851–5858. [Google Scholar] [CrossRef]

- Wang, J.; Guo, Y.; Yang, L.; Wang, Y. Binary Graph Convolutional Network with Capacity Exploration. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 3031–3046. [Google Scholar] [CrossRef]

- Afoudi, Y.; Lazaar, M.; Al Achhab, M. Hybrid recommendation system combined content-based filtering and collaborative prediction using artificial neural network. Simul. Model. Pract. Theory 2021, 113, 102375. [Google Scholar] [CrossRef]

- Chicaiza, J.; Valdiviezo-Diaz, P. A Comprehensive Survey of Knowledge Graph-Based Recommender Systems: Technologies, Development, and Contributions. Information 2021, 12, 232. [Google Scholar] [CrossRef]

- Xiao, Y.; Zhu, Y.; He, W.; Huang, M. Influence prediction model for marketing campaigns on e-commerce platforms. Expert Syst. Appl. 2023, 211, 118575. [Google Scholar] [CrossRef]

- Zhang, J.; Peng, Q.; Sun, S.; Liu, C. Collaborative filtering recommendation algorithm based on user preference derived from item domain features. Phys. A Stat. Mech. Its Appl. 2014, 396, 66–76. [Google Scholar] [CrossRef]

- Wu, S.; Sun, F.; Zhang, W.; Xie, X.; Cui, B. Graph Neural Networks in Recommender Systems: A Survey. ACM Comput. Surv. 2022, 55, 97. [Google Scholar] [CrossRef]

- Ahmadian, S.; Ahmadian, M.; Jalili, M. A deep learning based trust- and tag-aware recommender system. Neurocomputing 2022, 488, 557–571. [Google Scholar] [CrossRef]

- Yacobson, E.; Toda, A.M.; Cristea, A.I.; Alexandron, G. Recommender systems for teachers: The relation between social ties and the effectiveness of socially-based features. Comput. Educ. 2024, 210, 104960. [Google Scholar] [CrossRef]

- Cheng, Z.; Dong, J.; Liu, F.; Zhu, L.; Yang, X.; Wang, M. Disentangled Cascaded Graph Convolution Networks for Multi-Behavior Recommendation. ACM Trans. Recomm. Syst. 2024, 2, 31. [Google Scholar] [CrossRef]

- Kumar, A.; Jain, D.K.; Mallik, A.; Kumar, S. Modified node2vec and attention based fusion framework for next POI recommendation. Inf. Fusion 2024, 101, 101998. [Google Scholar] [CrossRef]

- Anwar, T.; Uma, V.; Hussain, M.I.; Pantula, M. Collaborative filtering and kNN based recommendation to overcome cold start and sparsity issues: A comparative analysis. Multimed. Tools Appl. 2022, 81, 35693–35711.

- Fan, W.; Derr, T.; Ma, Y.; Wang, J.; Tang, J.; Li, Q. Deep adversarial social recommendation. In Proceedings of the 28th International Joint Conference on Artificial Intelligence, Macao, China, 10–16 August 2019; AAAI Press: Washington, DC, USA, 2019; pp. 1351–1357.

- Sharma, K.; Lee, Y.-C.; Nambi, S.; Salian, A.; Shah, S.; Kim, S.-W.; Kumar, S. A Survey of Graph Neural Networks for Social Recommender Systems. ACM Comput. Surv. 2024, 56, 265.

- Li, X.; Sun, L.; Ling, M.; Peng, Y. A survey of graph neural network based recommendation in social networks. Neurocomputing 2023, 549, 126441.

- Wu, Z.; Pan, S.; Chen, F.; Long, G.; Zhang, C.; Yu, P.S. A Comprehensive Survey on Graph Neural Networks. IEEE Trans. Neural Netw. Learn. Syst. 2021, 32, 4–24.

- Salamat, A.; Luo, X.; Jafari, A. HeteroGraphRec: A heterogeneous graph-based neural networks for social recommendations. Knowl.-Based Syst. 2021, 217, 106817.

- Wang, H.; Yao, K.; Luo, J.; Lin, Y. An Implicit Preference-Aware Sequential Recommendation Method Based on Knowledge Graph. Wirel. Commun. Mob. Comput. 2021, 2021, 5206228.

- Jiang, Y.; Ma, H.; Liu, Y.; Li, Z.; Chang, L. Enhancing social recommendation via two-level graph attentional networks. Neurocomputing 2021, 449, 71–84.

- Wu, Q.; Zhang, H.; Gao, X.; He, P.; Weng, P.; Gao, H.; Chen, G. Dual Graph Attention Networks for Deep Latent Representation of Multifaceted Social Effects in Recommender Systems. In The World Wide Web Conference; Association for Computing Machinery: San Francisco, CA, USA, 2019; pp. 2091–2102.

- Sang, L.; Xu, M.; Qian, S.; Wu, X. Knowledge Graph enhanced Neural Collaborative Filtering with Residual Recurrent Network. Neurocomputing 2021, 454, 417–429.

- Wu, S.; Tang, Y.; Zhu, Y.; Wang, L.; Xie, X.; Tan, T. Session-based recommendation with graph neural networks. In Proceedings of the Thirty-Third AAAI Conference on Artificial Intelligence and Thirty-First Innovative Applications of Artificial Intelligence Conference and Ninth AAAI Symposium on Educational Advances in Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; AAAI Press: Honolulu, HI, USA, 2019; Volume 33, p. 43.

- Alrashidi, M.; Selamat, A.; Ibrahim, R.; Krejcar, O. Social Recommendation for Social Networks Using Deep Learning Approach: A Systematic Review, Taxonomy, Issues, and Future Directions. IEEE Access 2023, 11, 63874–63894.

- Bach, N.X.; Long, D.H.; Phuong, T.M. Recurrent convolutional networks for session-based recommendations. Neurocomputing 2020, 411, 247–258.

- Zhao, Z.; Zhu, M.; Sheng, Y.; Wang, J. A Top-N-Balanced Sequential Recommendation Based on Recurrent Network. IEICE Trans. Inf. Syst. 2019, E102.D, 737–744.

- Zhang, X.; Guo, F.; Chen, T.; Pan, L.; Beliakov, G.; Wu, J. A Brief Survey of Machine Learning and Deep Learning Techniques for E-Commerce Research. J. Theor. Appl. Electron. Commer. Res. 2023, 18, 2188–2216.

- Islam, M.A.; Mohammad, M.M.; Sarathi Das, S.S.; Ali, M.E. A survey on deep learning based Point-of-Interest (POI) recommendations. Neurocomputing 2022, 472, 306–325.

- Wang, J.; Yang, B.; Liu, H.; Li, D. Global spatio-temporal aware graph neural network for next point-of-interest recommendation. Appl. Intell. 2023, 53, 16762–16775.

- Ding, Y.; Zhao, X.; Zhang, Z.; Cai, W.; Yang, N. Multiscale Graph Sample and Aggregate Network with Context-Aware Learning for Hyperspectral Image Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 4561–4572.

- Buonanno, A.; Di Gennaro, G.; Ospedale, A.; Palmieri, F.A.N. Gated Graph Neural Networks for Classification Task. In Advanced Neural Artificial Intelligence: Theories and Applications; Esposito, A., Faundez-Zanuy, M., Morabito, F.C., Pasero, E., Cordasco, G., Eds.; Springer Nature Singapore: Singapore, 2025; pp. 171–181.

- Wu, L.; Li, J.; Sun, P.; Hong, R.; Ge, Y.; Wang, M. DiffNet++: A Neural Influence and Interest Diffusion Network for Social Recommendation. IEEE Trans. Knowl. Data Eng. 2022, 34, 4753–4766.

- Rendle, S.; Freudenthaler, C.; Gantner, Z.; Schmidt-Thieme, L. BPR: Bayesian personalized ranking from implicit feedback. In Proceedings of the Twenty-Fifth Conference on Uncertainty in Artificial Intelligence, Montreal, QC, Canada, 18–21 June 2009; AUAI Press: Montreal, QC, Canada, 2009; pp. 452–461.

- Jamali, M.; Ester, M. A matrix factorization technique with trust propagation for recommendation in social networks. In Proceedings of the RecSys’10: Fourth ACM Conference on Recommender Systems, Barcelona, Spain, 26–30 September 2010; pp. 135–142.

- Wang, L.; Zhou, W.; Liu, L.; Yang, Z.; Wen, J. Deep adaptive collaborative graph neural network for social recommendation. Expert Syst. Appl. 2023, 229, 120410.

- Wang, X.; He, X.; Wang, M.; Feng, F.; Chua, T.-S. Neural graph collaborative filtering. In Proceedings of the 42nd International ACM SIGIR Conference on Research and Development in Information Retrieval, Paris, France, 21–25 July 2019; pp. 165–174.

- He, X.; Deng, K.; Wang, X.; Li, Y.; Zhang, Y.; Wang, M. LightGCN: Simplifying and Powering Graph Convolution Network for Recommendation. In Proceedings of the 43rd International ACM SIGIR Conference on Research and Development in Information Retrieval, Virtual Event, China, 25–30 July 2020; Association for Computing Machinery: New York, NY, USA, 2020; pp. 639–648.

- Kang, W.-C.; McAuley, J. Self-attentive sequential recommendation. In Proceedings of the 2018 IEEE International Conference on Data Mining (ICDM), Singapore, 17–20 November 2018; pp. 197–206.

- Sun, F.; Liu, J.; Wu, J.; Pei, C.; Lin, X.; Ou, W.; Jiang, P. BERT4Rec: Sequential recommendation with bidirectional encoder representations from transformer. In Proceedings of the 28th ACM International Conference on Information and Knowledge Management, Beijing, China, 3–7 November 2019; pp. 1441–1450.

- He, X.; Liao, L.; Zhang, H.; Nie, L.; Hu, X.; Chua, T.-S. Neural collaborative filtering. In Proceedings of the 26th International World Wide Web Conference, Perth, Australia, 3–7 April 2017; pp. 173–182.

- Liu, Z.; Zhou, J. Graph attention networks. In Introduction to Graph Neural Networks; Springer: Berlin/Heidelberg, Germany, 2020; pp. 39–41.

- Taherkhani, F.; Kazemi, H.; Nasrabadi, N.M. Matrix completion for graph-based deep semi-supervised learning. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January 2019; pp. 5058–5065.

- Ying, R.; He, R.; Chen, K.; Eksombatchai, P.; Hamilton, W.L.; Leskovec, J. Graph convolutional neural networks for web-scale recommender systems. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, London, UK, 19–23 August 2018; pp. 974–983.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).