Large Language Model Agents for Biomedicine: A Comprehensive Review of Methods, Evaluations, Challenges, and Future Directions

Abstract

1. Introduction

- (1) What are the main LLM agent technical paradigms in the biomedical field, and how do they satisfy requirements for subject-matter expertise, interpretability, and regulatory compliance?

- (2) In clinical and research settings, how can we comprehensively assess LLM agent performance, reliability, and safety using both automated metrics and user studies?

- (3) What are the key challenges currently faced by biomedical LLM agents in terms of knowledge updating, reasoning interpretability, resource constraints, data privacy, and ethical compliance?

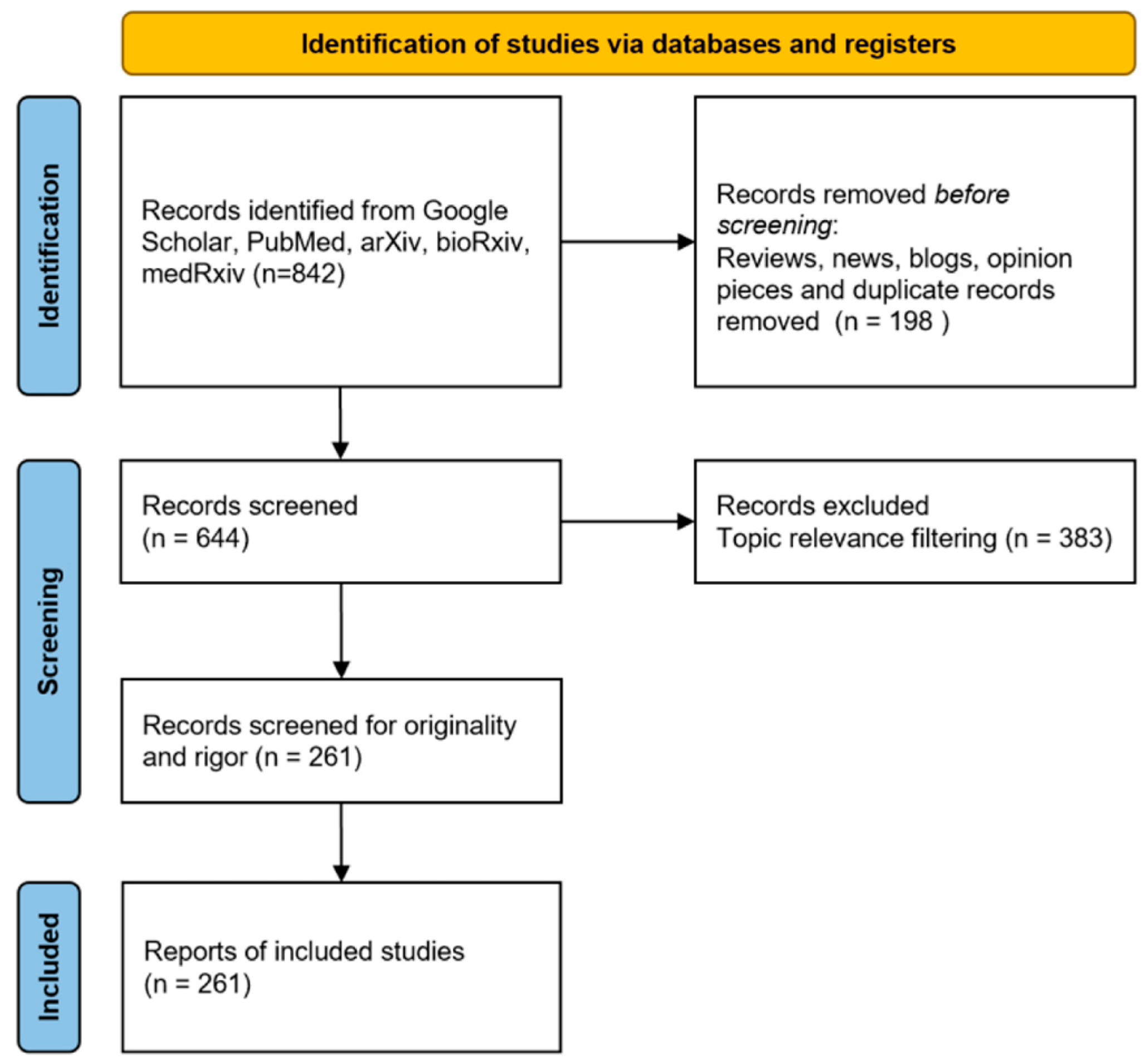

2. Data Preparation

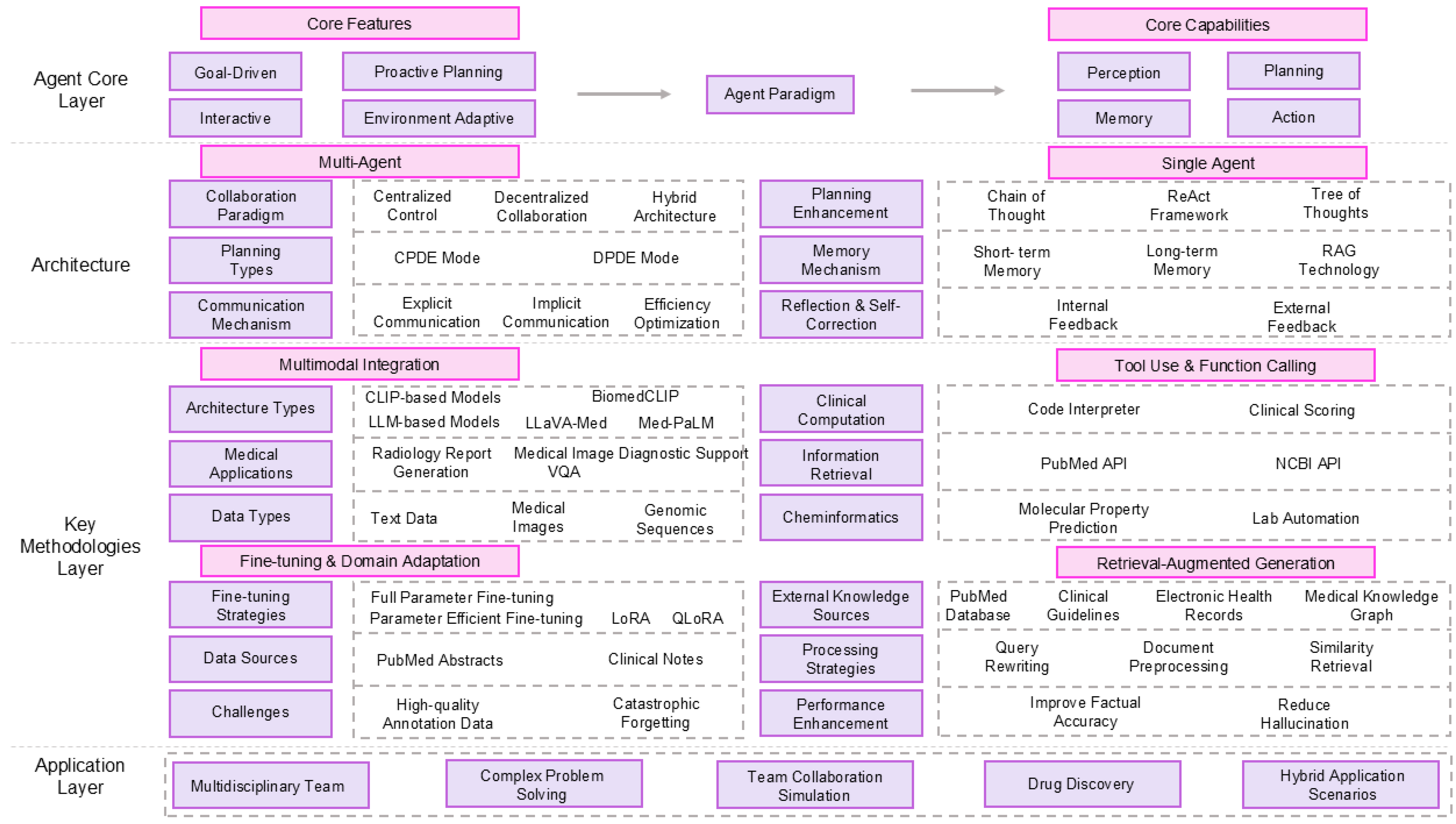

3. Methodology and Architecture of Biomedical LLM Agents

3.1. Fundamental Concepts: From LLMs to Agents

3.2. LLM Agent Architecture

3.2.1. Single-Agent Systems

3.2.2. Multi-Agent Systems (MAS)

3.3. Methodology of Biomedical Agents

3.3.1. Retrieval-Augmented Generation

3.3.2. Fine-Tuning and Domain Adaptation

3.3.3. Tool Use

3.3.4. Multimodal Integration

4. Performance Evaluation and Benchmarking

4.1. The Need for Agent-Specific Evaluation

4.2. Key Benchmarks for Biomedical LLM Agents

4.3. Evaluation Indicators and Methods

4.4. Performance Comparison

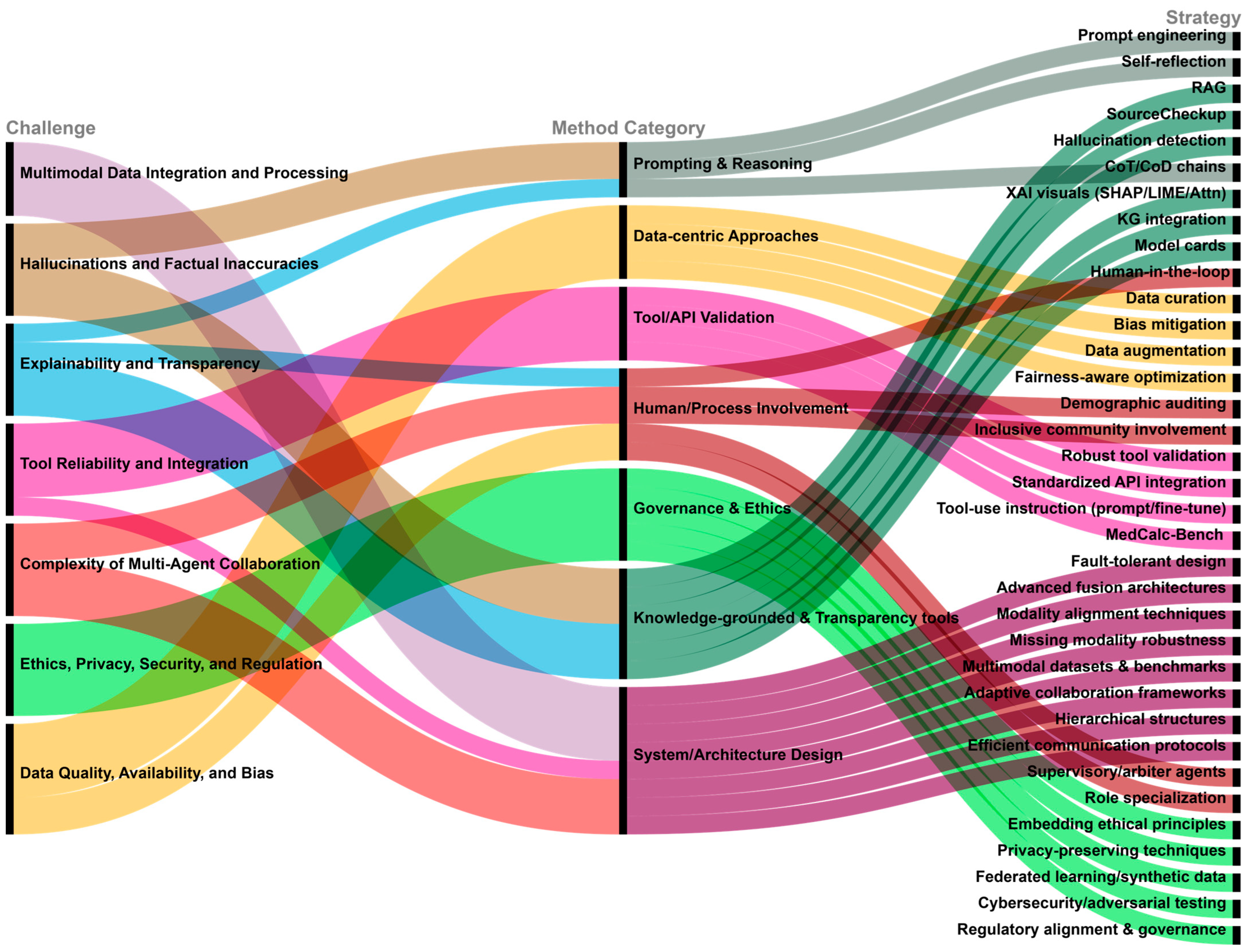

5. Key Challenges and Mitigation Strategies

5.1. Hallucinations and Factual Inaccuracies

- (1) Challenge: LLM agents are prone to generating content that is plausible but factually incorrect or unsupported, a phenomenon known as hallucination. In high-stakes biomedical applications, such errors can lead to harmful outcomes, including misdiagnoses, inappropriate treatment recommendations, and misleading scientific claims, thereby jeopardizing patient safety and research integrity. Manifestations include erroneous clinical calculations [4], irrelevant or unsupported citations [5], fabricated facts, and outputs that contradict the input information [73].

- (2) Mitigation Strategies: RAG [42] mitigates hallucination by grounding model outputs in verified knowledge sources such as PubMed or clinical guidelines, though its reliability still requires careful validation [74]. Delegating computation to external tools also helps reduce errors in quantitative reasoning tasks [4]. Self-correction mechanisms and reflection loops allow agents to evaluate and revise their outputs [13], while systems like SourceCheckup [5] support automated validation and transparent citation. Additionally, hallucination detection tools and purpose-built benchmarks (e.g., MedHal [67]) assist in identifying factual inconsistencies [75]. Prompt engineering [11] also plays a role in reducing hallucinations by guiding the model toward evidence-based responses.
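The grounding and citation-checking ideas above can be sketched in a few lines of Python. The example below is a minimal sketch, not the method of RAG [42] or SourceCheckup [5]: it ranks passages from a tiny in-memory corpus by bag-of-words cosine similarity, builds a prompt that confines the model to the retrieved passages, and flags bracketed citations in a draft answer that do not point to a retrieved passage. The corpus (about a fictional drug), the `[id]` citation format, and all function names are hypothetical. A real pipeline would use a stronger retriever over sources such as PubMed and would also check whether each cited passage actually supports the claim, which this sketch does not attempt.

```python
import math
import re
from collections import Counter

def tokenize(text: str) -> list[str]:
    """Lowercase alphanumeric tokens; deliberately simple for illustration."""
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-frequency vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, corpus: dict[str, str], k: int = 2) -> list[str]:
    """Ids of the k passages most similar to the query (ties keep corpus order)."""
    q = Counter(tokenize(query))
    ranked = sorted(corpus, key=lambda d: cosine(q, Counter(tokenize(corpus[d]))), reverse=True)
    return ranked[:k]

def build_grounded_prompt(query: str, corpus: dict[str, str], doc_ids: list[str]) -> str:
    """Restrict the model to the retrieved passages and ask for [id] citations."""
    context = "\n".join(f"[{d}] {corpus[d]}" for d in doc_ids)
    return (
        "Answer using ONLY the passages below and cite each claim as [id]. "
        "If the passages are insufficient, say so.\n\n"
        f"{context}\n\nQuestion: {query}"
    )

def unsupported_citations(answer: str, retrieved: list[str]) -> list[str]:
    """Citation ids in the answer that do not refer to a retrieved passage."""
    return [c for c in re.findall(r"\[([A-Za-z0-9_-]+)\]", answer) if c not in retrieved]

if __name__ == "__main__":
    # Toy passages about a fictional drug; not clinical guidance.
    corpus = {
        "g1": "DrugX is first-line therapy for condition Y.",
        "g2": "Stop DrugX when eGFR falls below 30.",
        "g3": "Statins lower LDL cholesterol.",
    }
    query = "When should DrugX be stopped?"
    ids = retrieve(query, corpus)
    print(build_grounded_prompt(query, corpus, ids))
    draft = "Stop DrugX below an eGFR of 30 [g2], as shown in [g7]."
    print("unsupported:", unsupported_citations(draft, ids))
```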

5.2. Explainability and Transparency

- (1)

- (2) Mitigation Strategies: Various interpretability techniques, such as SHAP, LIME, and attention visualization, offer insights into model predictions [2]. Reasoning transparency can be improved through structured prompting strategies like Chain-of-Thought (CoT), Chain-of-Diagnosis (CoD) [76], or argumentation frameworks used in ArgMed-Agents [28]. Integrating structured knowledge graphs grounds reasoning in transparent semantic relations [2], while model cards provide standardized documentation on training data, performance, and limitations [77]. Human-in-the-loop designs also enhance transparency by allowing users to query and critique model outputs interactively [78].
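SHAP is based on Shapley values from cooperative game theory. When a model has only a few inputs, those values can be computed exactly by enumerating every feature coalition, which makes the attribution easy to inspect. The sketch below is that brute-force computation, not the `shap` library; it assumes a baseline-replacement value function (features outside a coalition are set to reference values), and the three-feature risk score in the demo is hypothetical.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Sequence

def shapley_values(
    f: Callable[[list[float]], float],
    x: Sequence[float],
    baseline: Sequence[float],
) -> list[float]:
    """Exact Shapley values of f at x, with absent features set to baseline values."""
    n = len(x)

    def value(coalition: frozenset) -> float:
        # Features in the coalition take their observed values; others take the baseline.
        return f([x[j] if j in coalition else baseline[j] for j in range(n)])

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        contribution = 0.0
        for size in range(n):  # coalition sizes 0 .. n-1 drawn from the other features
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = frozenset(subset)
                contribution += weight * (value(s | {i}) - value(s))
        phi.append(contribution)
    return phi

if __name__ == "__main__":
    # Hypothetical risk score with an interaction between features 1 and 2.
    def risk(z: list[float]) -> float:
        return 0.03 * z[0] + 0.8 * z[1] + 0.5 * z[1] * z[2]

    x, base = [65.0, 1.0, 1.0], [50.0, 0.0, 0.0]
    phi = shapley_values(risk, x, base)
    print([round(p, 3) for p in phi])
    # Efficiency property: attributions sum to f(x) - f(baseline).
    print(round(sum(phi), 6), round(risk(x) - risk(base), 6))
```

The number of model evaluations grows exponentially with the number of features, which is why practical SHAP implementations rely on sampling or model-specific shortcuts.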

5.3. Data Quality, Availability, and Bias

- (1) Challenge: LLM agent performance is highly sensitive to data quality and diversity [51]. Biomedical datasets are often limited in size, poorly annotated, or hypothetical in nature [51]. More critically, training data may encode biases related to race, gender, or socioeconomic status, which can lead to inequitable care recommendations [12,79]. For instance, models may misdiagnose skin conditions in patients with darker skin tones or suggest higher-cost treatments based on demographic profiles. These biases may be further amplified by feedback loops and dataset reuse [79].

- (2) Mitigation Strategies: High-quality, diverse, and rigorously curated datasets are foundational to reducing bias [51]. Bias mitigation should be implemented across the entire pipeline, from data collection to training and evaluation, using techniques such as adversarial debiasing, data augmentation, and fairness-aware optimization [13]. Ongoing auditing of model outputs across demographic groups is critical [77], as is stakeholder involvement during dataset development to ensure inclusive representation and equity [77].
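Auditing outputs across demographic groups can start with something as simple as computing the same metrics per group and reporting the largest gap. The minimal sketch below computes per-group sensitivity (true-positive rate) and positive-prediction rate; the `Record` fields and group labels are hypothetical, and a real audit would add confidence intervals, more metrics, and clinically meaningful subgroup definitions.

```python
import math
from collections import defaultdict
from dataclasses import dataclass

@dataclass
class Record:
    group: str  # demographic attribute used for the audit
    label: int  # ground truth: 1 = condition present
    pred: int   # model output: 1 = condition flagged

def audit(records: list[Record]) -> tuple[dict, dict]:
    """Per-group sensitivity and positive-prediction rate, plus max between-group gaps."""
    by_group: dict[str, list[Record]] = defaultdict(list)
    for r in records:
        by_group[r.group].append(r)

    report = {}
    for group, rs in by_group.items():
        positives = [r for r in rs if r.label == 1]
        tpr = sum(r.pred for r in positives) / len(positives) if positives else math.nan
        report[group] = {
            "n": len(rs),
            "tpr": tpr,  # sensitivity: share of true cases the model catches
            "positive_rate": sum(r.pred for r in rs) / len(rs),
        }

    def max_gap(metric: str) -> float:
        values = [m[metric] for m in report.values() if not math.isnan(m[metric])]
        return max(values) - min(values) if values else math.nan

    gaps = {"tpr_gap": max_gap("tpr"), "positive_rate_gap": max_gap("positive_rate")}
    return report, gaps
```

A nonzero gap is a prompt for investigation rather than proof of bias; rates computed on small groups are especially noisy.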

5.4. Tool Reliability and Integration

- (1) Challenge: LLM agents rely heavily on external tools for computation, retrieval, and decision support [20]. Unreliable tools, outdated resources, or unstable interfaces can compromise agent performance. Additionally, agents often struggle to understand tool functions, parameter usage, and invocation contexts, which can lead to tool misuse or overdependence [34].

- (2) Mitigation Strategies: Ensuring tool robustness requires continuous development, validation, and API standardization [20]. Enhancing agent comprehension of tool documentation through fine-tuning or prompt optimization improves tool selection and usage accuracy [6]. Benchmarks such as MedCalc-Bench [45] provide structured assessments of agents’ ability to execute clinical computations via APIs, including drug dosing, renal function estimation, and risk scoring. Finally, incorporating error-handling mechanisms enables agents to respond gracefully to tool failures, improving overall reliability.
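The error-handling idea can be illustrated with a small tool wrapper in which the agent's proposed call is checked against a declared parameter schema before execution, and every failure comes back as a structured error the agent can reason about instead of an exception that ends the episode. The Cockcroft-Gault creatinine-clearance equation, CrCl = (140 - age) x weight_kg / (72 x serum creatinine in mg/dL), multiplied by 0.85 for female patients, serves as the example tool. The registry, the call format, and the plausibility bounds are assumptions for illustration; they are not clinical validation rules or the interface of MedCalc-Bench or any agent framework.

```python
from dataclasses import dataclass
from typing import Any, Callable, Optional

def cockcroft_gault(age: float, weight_kg: float, scr_mg_dl: float, female: bool) -> float:
    """Estimated creatinine clearance (mL/min) by the Cockcroft-Gault equation."""
    crcl = (140 - age) * weight_kg / (72 * scr_mg_dl)
    return crcl * 0.85 if female else crcl

@dataclass
class Tool:
    fn: Callable[..., float]
    # parameter -> (expected type, min, max); bounds are illustrative plausibility checks
    schema: dict[str, tuple[type, Optional[float], Optional[float]]]

TOOLS = {
    "cockcroft_gault": Tool(cockcroft_gault, {
        "age": (float, 18, 120),
        "weight_kg": (float, 20, 300),
        "scr_mg_dl": (float, 0.1, 20),
        "female": (bool, None, None),
    }),
}

def call_tool(call: dict[str, Any]) -> dict[str, Any]:
    """Validate an agent-proposed call and return a structured result or error."""
    tool = TOOLS.get(call.get("name"))
    if tool is None:
        return {"ok": False, "error": f"unknown tool {call.get('name')!r}"}
    args = call.get("arguments", {})
    missing = sorted(set(tool.schema) - set(args))
    unexpected = sorted(set(args) - set(tool.schema))
    if missing or unexpected:
        return {"ok": False, "error": f"missing={missing}, unexpected={unexpected}"}
    for name, (typ, lo, hi) in tool.schema.items():
        value = args[name]
        if typ is bool:
            if not isinstance(value, bool):
                return {"ok": False, "error": f"{name} must be true or false"}
            continue
        # bool is a subclass of int in Python, so reject it explicitly for numeric fields
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            return {"ok": False, "error": f"{name} must be numeric"}
        if (lo is not None and value < lo) or (hi is not None and value > hi):
            return {"ok": False, "error": f"{name}={value} outside plausible range [{lo}, {hi}]"}
    try:
        return {"ok": True, "result": round(tool.fn(**args), 1)}
    except Exception as exc:  # report unexpected failures instead of crashing the agent loop
        return {"ok": False, "error": f"{type(exc).__name__}: {exc}"}
```

Returning errors as data lets the agent re-plan, for example by asking for a missing value, rather than silently guessing a number.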

5.5. Multimodal Data Integration and Processing

- (1) Challenge: Biomedical agents must often integrate heterogeneous data modalities (text, images, genomics, and EHRs) that differ in format, scale, and sparsity [51,80]. Aligning and jointly analyzing these modalities within a unified framework remains technically challenging, especially when data are incomplete or noisy [51].

- (2) Mitigation Strategies: Progress requires more advanced fusion architectures (e.g., gated and tensor fusion, Transformer-based joint encoders) capable of modeling cross-modal relationships [81]. Robust alignment techniques are essential to link data at the semantic level [51]. Agents must also handle missing data gracefully, which calls for models explicitly designed for partial or sparse input [81]. Building comprehensive multimodal datasets and evaluation benchmarks is equally crucial for meaningful progress [51].
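A minimal picture of gated fusion that tolerates missing inputs: assume each available modality has already been encoded into a vector of a shared dimension; a scalar gate score per modality is converted into weights by a softmax taken only over the modalities that are present, and the fused representation is the weighted sum. In the sketch below the gate parameters are fixed numbers standing in for learned ones and the modality names are hypothetical; real systems learn encoders and gates jointly and often use richer schemes such as tensor fusion or cross-attention.

```python
import math
from typing import Optional

Vector = list[float]

def gated_fusion(
    embeddings: dict[str, Optional[Vector]],
    gate_w: dict[str, Vector],
    gate_b: dict[str, float],
) -> tuple[Vector, dict[str, float]]:
    """Fuse per-modality embeddings with softmax gates over the modalities present."""
    present = {m: z for m, z in embeddings.items() if z is not None}
    if not present:
        raise ValueError("at least one modality must be present")
    dim = len(next(iter(present.values())))
    if any(len(z) != dim for z in present.values()):
        raise ValueError("all embeddings must share one dimension")

    # Scalar gate score per available modality: s_m = w_m . z_m + b_m
    scores = {m: sum(w * v for w, v in zip(gate_w[m], z)) + gate_b[m] for m, z in present.items()}
    # Numerically stable softmax restricted to present modalities; missing ones get no weight.
    top = max(scores.values())
    exp_scores = {m: math.exp(s - top) for m, s in scores.items()}
    total = sum(exp_scores.values())
    weights = {m: e / total for m, e in exp_scores.items()}

    fused = [sum(weights[m] * present[m][k] for m in present) for k in range(dim)]
    return fused, weights

if __name__ == "__main__":
    # Hypothetical 3-d embeddings; genomics is missing for this patient.
    emb = {"notes": [0.2, 0.9, 0.1], "imaging": [0.7, 0.1, 0.4], "genomics": None}
    w = {"notes": [1.0, 0.5, 0.0], "imaging": [0.5, 1.0, 0.0], "genomics": [0.3, 0.3, 0.3]}
    b = {"notes": 0.0, "imaging": 0.0, "genomics": 0.0}
    fused, weights = gated_fusion(emb, w, b)
    print(weights, fused)
```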

5.6. Complexity of Multi-Agent Collaboration

- (1) Challenge: Multi-agent systems (MAS) offer enhanced flexibility and specialization but introduce challenges such as task allocation, inter-agent reasoning coordination, and communication overhead [35,82,83]. In biomedical workflows, failures in coordination can lead to suboptimal or erroneous outcomes [39].

- (2) Mitigation Strategies: Optimizing collaboration requires adaptive control schemes (e.g., MDAgents [39]), hierarchical role assignment (e.g., KG4Diagnosis [23]), and efficient communication protocols [41]. Consensus mechanisms, such as supervisory agents, can arbitrate disagreements and improve reliability. Explicit role specialization also reduces redundancy and conflict; for instance, MedCo demonstrates how agents can function as educators, students, and evaluators in a simulated medical training context [84].
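A simple consensus mechanism of this kind can be sketched as confidence-weighted voting: specialist agents each return an answer with a self-reported confidence, and a supervisory step accepts the leading answer only if its share of the total confidence clears a threshold, otherwise escalating (for example, to a supervisor agent or a clinician). The agent roles, the weighting scheme, and the 0.6 threshold are assumptions for illustration; MDAgents, KG4Diagnosis, and MedCo each use their own, more elaborate protocols.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Optional

@dataclass
class Opinion:
    agent: str
    answer: str
    confidence: float  # self-reported, expected in [0, 1]

@dataclass
class Verdict:
    decision: Optional[str]  # None when the panel must escalate
    share: float             # leading answer's share of total confidence
    escalate: bool

def arbitrate(opinions: list[Opinion], threshold: float = 0.6) -> Verdict:
    """Confidence-weighted vote; escalate when no answer clearly dominates."""
    support: dict[str, float] = defaultdict(float)
    for op in opinions:
        support[op.answer] += max(0.0, min(1.0, op.confidence))  # clamp to [0, 1]
    total = sum(support.values())
    if total == 0:
        return Verdict(None, 0.0, True)
    best = max(support, key=support.__getitem__)
    share = support[best] / total
    if share >= threshold:
        return Verdict(best, share, False)
    return Verdict(None, share, True)
```

Self-reported confidences are often poorly calibrated, so in practice the weights would need to be validated against outcome data before being trusted.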

5.7. Ethics, Privacy, Security, and Regulation

- (1) Challenge: Ethical, legal, and social concerns are among the most pressing challenges in deploying biomedical LLM agents. Risks include algorithmic bias that may exacerbate healthcare inequities [12], erosion of traditional patient–clinician relationships [85], unclear accountability in cases of AI-induced medical error [12], overreliance on AI systems [75], and disputes around intellectual property of AI-generated content [35].

- (2) Mitigation Strategies: To address these concerns, ethical principles such as fairness, transparency, accountability, and safety must be embedded throughout the agent lifecycle [88]. Privacy-preserving techniques, including data anonymization, differential privacy, federated learning, secure multi-party computation, and synthetic data generation, help protect sensitive information [35]. Strengthening cybersecurity through adversarial testing and secure interface design is also essential [35].
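Of the privacy-preserving techniques listed, differential privacy is the easiest to show in a few lines. The sketch below applies the Laplace mechanism to a count query (for example, how many patients in a cohort have a given diagnosis): because adding or removing one patient changes a count by at most 1, adding noise drawn from a Laplace distribution with scale 1/ε gives ε-differential privacy for that single query. The function names and example ε values are assumptions; a deployed system would also track the cumulative privacy budget across queries and rely on a vetted library rather than hand-rolled floating-point sampling.

```python
import random

def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two i.i.d. exponentials with mean `scale`."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(true_count: int, epsilon: float, rng: random.Random, sensitivity: float = 1.0) -> float:
    """Laplace mechanism: adding or removing one patient changes a count by at most `sensitivity`."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    return true_count + laplace_noise(sensitivity / epsilon, rng)

if __name__ == "__main__":
    rng = random.Random(42)
    # Smaller epsilon means stronger privacy and noisier answers.
    for eps in (0.1, 1.0, 10.0):
        print(eps, round(private_count(128, eps, rng), 2))
```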

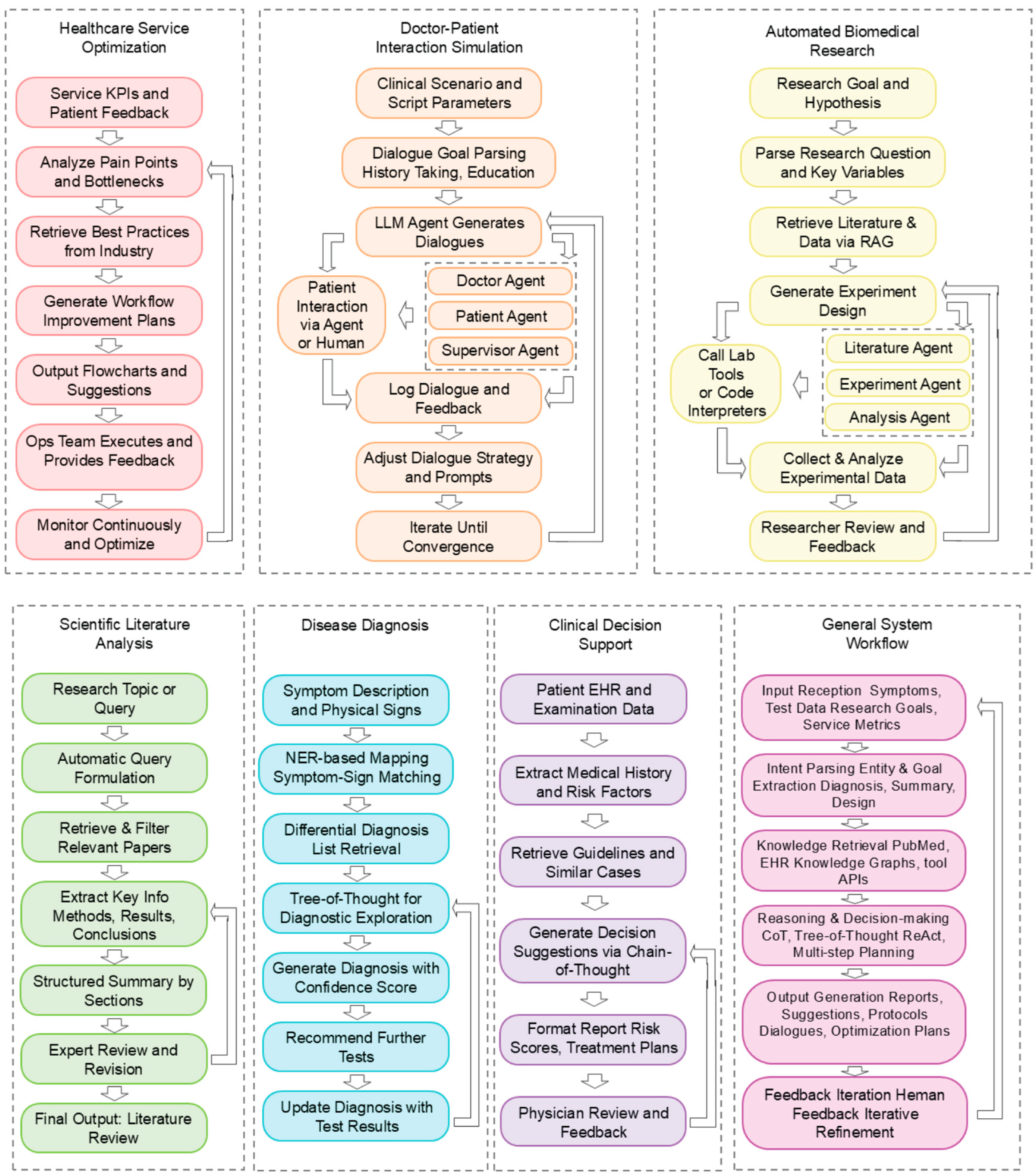

6. Discussion

6.1. Major Insights from the Current Landscape

- (1) The necessity of Human-in-the-Loop: Despite rapid advancements in autonomous LLM agents, the integration of Human-in-the-Loop (HITL) mechanisms remains indispensable for biomedical applications. Given the high-stakes nature of clinical decision-making, full agent autonomy in tasks such as diagnosis, treatment planning, or data interpretation is neither ethically acceptable nor technically safe. HITL frameworks enable real-time expert supervision, enhance system transparency, and act as safeguards against hallucinations, biases, and misuse of external tools. Prior studies, including the Zodiac framework [90] and virtual lab agents [91], underscore how clinician–agent collaboration can not only mitigate risk but also improve performance through iterative feedback and correction. Recent developments have also explored internal supervision via multi-agent architectures. For example, Cui et al. [92] proposed a dual-agent model in which a critical agent dynamically monitors and adjusts the reasoning process of a predictive agent, effectively emulating internalized HITL oversight. These findings collectively support the view that biomedical agents should be explicitly designed with human supervision interfaces, particularly in regulation-sensitive domains such as oncology, radiology, and pharmacovigilance.

- (2) Full-spectrum biomedical applications, from basic research to clinical implementation: LLM agents are rapidly emerging as generalizable tools capable of driving innovation across the entire biomedical pipeline. From early-stage hypothesis generation and drug discovery to downstream tasks like clinical trial matching, diagnostic reasoning, and personalized care planning, these systems are demonstrating substantial potential to accelerate, scale, and automate workflows traditionally limited by human capacity. Notable examples such as MedAgent-Pro [93] and FUAS-Agents [94] illustrate how agents can operate within structured, rule-based clinical environments like surgical decision-making or protocol-driven diagnosis. Figure 4 summarizes representative agent workflows across biomedical domains, including healthcare optimization, dialogue simulation, scientific research, and clinical decision support. Simultaneously, open-ended agents like the “virtual laboratory” platform show promise for creativity and hypothesis exploration in basic science contexts, such as antibody design. Collectively, these developments support the growing consensus that biomedical LLM agents are not confined to isolated use cases but instead represent a versatile computational paradigm capable of supporting diverse biomedical tasks.
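One way to make "explicitly designed with human supervision interfaces" concrete is a routing gate that decides, for each agent output, whether it can be released or must go to a clinician. The minimal sketch below escalates an output when its confidence falls below a threshold, when a critic check raises objections (standing in for a critic such as the critical agent in the dual-agent model of Cui et al. [92]), or when the task belongs to a category that always requires sign-off. The field names, the 0.85 threshold, and the always-review categories are hypothetical policy choices, not recommendations.

```python
from dataclasses import dataclass, field

# Hypothetical policy: task types that always need clinician sign-off.
ALWAYS_REVIEW = {"treatment_plan", "chemotherapy_dosing"}

@dataclass
class AgentOutput:
    task_type: str
    content: str
    confidence: float  # agent's own confidence estimate in [0, 1]
    critic_flags: list[str] = field(default_factory=list)  # objections from a critic agent

def route(output: AgentOutput, threshold: float = 0.85) -> tuple[str, list[str]]:
    """Return ("release" or "human_review", reasons) for one agent output."""
    reasons: list[str] = []
    if output.task_type in ALWAYS_REVIEW:
        reasons.append(f"task type '{output.task_type}' requires sign-off")
    if output.confidence < threshold:
        reasons.append(f"confidence {output.confidence:.2f} < {threshold:.2f}")
    reasons.extend(f"critic: {flag}" for flag in output.critic_flags)
    return ("human_review" if reasons else "release"), reasons
```

Recording the reasons with each escalation gives the reviewing clinician context and leaves an audit trail of why an output was or was not released.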

6.2. Proposed New Metrics for Agent Robustness and Trustworthiness

6.3. Toward Standardized Safety Evaluation

6.4. Strategic Perspectives for Future Development

- (1) Enhanced Medical Reasoning and Planning Capabilities: Despite substantial progress, current biomedical LLM agents still face limitations in handling complex, long-range reasoning and multi-step task planning [28]. Future research is expected to explore multiple complementary directions. First, advances in foundation models such as DeepSeek-R1 [95] offer improved capabilities in long-context comprehension, instruction following, and nuanced medical reasoning. Second, new reasoning paradigms are being introduced beyond conventional Chain-of-Thought (CoT) and ReAct strategies. These include symbolic logic frameworks [96], causal inference-based models [97], and hierarchical planning algorithms that can better support high-stakes clinical decision-making [28]. Third, the ability of agents to self-assess their confidence and recognize knowledge gaps is gaining attention as a mechanism to ensure safety and reliability [98]. Additionally, adaptive architectures that dynamically restructure based on task requirements may offer further gains in generalization and robustness [99].

- (2) Continual Learning and Knowledge Updating: Given the fast pace of biomedical research, agents must maintain mechanisms for continuous learning and timely knowledge integration to prevent the use of outdated or invalid information [12]. This need motivates future efforts in three key directions. First, efficient incorporation of new clinical guidelines, medical literature, and experimental findings, potentially through hybrid strategies combining RAG and fine-tuning, will be essential. Second, lifelong or continual learning frameworks [100] must be designed to allow agents to update incrementally while preserving previously acquired knowledge [101]. Third, while federated learning offers strong privacy protection, its deployment in real medical environments faces challenges such as data heterogeneity, communication latency, and privacy–utility trade-offs. Recent studies have proposed validated mitigation strategies, including adaptive optimization algorithms (e.g., FedProx, FedDyn) to address data imbalance, secure aggregation and homomorphic encryption to prevent information leakage, and federated differential privacy mechanisms for enhanced protection of gradient updates. Preliminary biomedical applications, such as the FLUID framework [102] and MedFL [103], have demonstrated that incorporating these strategies enables robust distributed learning and continual adaptation across hospitals without exposing sensitive patient data. These approaches represent a significant step toward trustworthy and collaborative AI in healthcare.

- (3) Standardization, Governance, and Regulation: To enable trustworthy and equitable deployment, future work must establish clear standards for benchmarking, validation, and risk management [104]. Standardization efforts should aim to define consistent evaluation metrics and community benchmarks [105]. At the governance level, key priorities include ensuring algorithmic transparency, responsible data use, and stakeholder accountability throughout the agent lifecycle [106]. Regulatory frameworks must evolve to address the dynamic nature of AI systems, adopting mechanisms such as continuous monitoring, adaptive certification, and risk-sensitive regulation tailored to specific use cases and deployment environments [89].

- (4) Expanding and Deepening Multimodal Capabilities: As biomedical data becomes increasingly diverse and multimodal, agents must be equipped to interpret and reason over heterogeneous inputs, including clinical notes, imaging, genomics, physiological signals, and patient-generated data [51]. Future progress depends on the development of advanced multimodal fusion architectures that can model intermodal dependencies and contextual relationships more effectively. There is also a pressing need to integrate emerging modalities such as wearable sensor data, proteomics, and behavioral analytics into unified reasoning frameworks. Importantly, improving the interpretability and traceability of multimodal reasoning processes will be key to enabling clinical trust and accountability.

- (5) Human–AI Collaboration and Interaction: Rather than functioning as replacements for human professionals, future biomedical LLM agents should serve as collaborative partners that augment human expertise [13]. This calls for the design of more natural and efficient interaction interfaces, enabling users to guide, correct, and query agent behavior in real time. Collaborative decision-making protocols must be developed to support shared agency and role clarity between humans and machines. Furthermore, cultivating user trust will require increased transparency, reliability, and user control over agent outputs, especially in high-risk or legally sensitive domains.

- (6) Toward Trustworthy AI: Ultimately, the success of biomedical LLM agents will hinge on their alignment with both technical standards and broader societal values [107]. Building truly trustworthy systems requires integration across all of the preceding fronts, from robust model architectures and continual learning protocols to ethical design, regulatory compliance, and meaningful stakeholder engagement. These agents must not only function reliably in technical terms but also earn the confidence of clinicians, patients, and regulatory bodies.
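The federated strategies mentioned under continual learning can be sketched in a few lines. The example below shows the server step of federated averaging (FedAvg), where client parameter vectors are averaged with weights proportional to local sample counts, together with a FedProx-style local update that adds the proximal term (μ/2)‖w − w_global‖² to each client's loss so that sites with heterogeneous data do not drift too far from the global model. Parameters are plain lists of floats and each client's gradient is passed in as a function; the function names, learning rate, μ, and the two-hospital demo are assumptions, and secure aggregation, encryption, and differential privacy are omitted.

```python
from typing import Callable

Params = list[float]

def fedavg(client_params: list[Params], num_samples: list[int]) -> Params:
    """Server step: average client parameters weighted by local sample counts."""
    total = sum(num_samples)
    dim = len(client_params[0])
    return [sum(n * p[k] for p, n in zip(client_params, num_samples)) / total for k in range(dim)]

def fedprox_local_update(
    w_global: Params,
    grad_fn: Callable[[Params], Params],
    mu: float = 0.1,
    lr: float = 0.1,
    steps: int = 50,
) -> Params:
    """Client step: gradient descent on local loss + (mu/2) * ||w - w_global||^2."""
    w = list(w_global)
    for _ in range(steps):
        g = grad_fn(w)
        # Gradient of the proximal term is mu * (w - w_global); mu = 0 recovers plain local training.
        w = [wi - lr * (gi + mu * (wi - wg)) for wi, gi, wg in zip(w, g, w_global)]
    return w

if __name__ == "__main__":
    # Two hypothetical hospitals with local losses 0.5 * (w - c)^2 and different optima c.
    optima, sizes = [1.0, 5.0], [300, 100]
    w_global = [0.0]
    for rnd in range(5):
        local = [
            fedprox_local_update(w_global, lambda w, c=c: [w[0] - c], mu=0.5)
            for c in optima
        ]
        w_global = fedavg(local, sizes)
        print(rnd, round(w_global[0], 3))
```

Only parameters leave each site in this scheme; raw patient records stay local, which is the property the text attributes to federated learning.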

6.5. Future Research Roadmap and Strategic Priorities

7. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Zhang, Y.; Chen, X.; Jin, B.; Wang, S.; Ji, S.; Wang, W.; Han, J. A comprehensive survey of scientific large language models and their applications in scientific discovery. In Proceedings of the 2024 Conference on Empirical Methods in Natural Language Processing, Miami, FL, USA, 12–16 November 2024; pp. 8783–8817.

- Liu, L.; Yang, X.; Lei, J.; Liu, X.; Shen, Y.; Zhang, Z.; Wei, P.; Gu, J.; Chu, Z.; Qin, Z.; et al. A survey on medical large language models: Technology, application, trustworthiness, and future directions. arXiv 2024, arXiv:2406.03712.

- Gao, S.; Fang, A.; Huang, Y.; Giunchiglia, V.; Noori, A.; Schwarz, J.R.; Ektefaie, Y.; Kondic, J.; Zitnik, M. Empowering biomedical discovery with ai agents. Cell 2024, 187, 6125–6151.

- Goodell, A.J.; Chu, S.N.; Rouholiman, D.; Chu, L.F. Large language model agents can use tools to perform clinical calculations. npj Digit. Med. 2025, 8, 163.

- Wu, K.; Wu, E.; Wei, K.; Zhang, A.; Casasola, A.; Nguyen, T.; Riantawan, S.; Shi, P.; Ho, D.; Zou, J. An automated framework for assessing how well llms cite relevant medical references. Nat. Commun. 2025, 16, 3615.

- Zhu, Y.; Wei, S.; Wang, X.; Xue, K.; Zhang, S.; Zhang, X. MeNTi: Bridging medical calculator and LLM agent with nested tool calling. In Proceedings of the 2025 Conference of the Nations of the Americas Chapter of the Association for Computational Linguistics: Human Language Technologies (Volume 1: Long Papers), Albuquerque, NM, USA, 29 April–4 May 2025; pp. 5097–5116.

- Wu, X.; Zhao, Y.; Zhang, Y.; Wu, J.; Zhu, Z.; Zhang, Y.; Ouyang, Y.; Zhang, Z.; Wang, H.; Yang, J.; et al. Medjourney: Benchmark and evaluation of large language models over patient clinical journey. Adv. Neural Inf. Process. Syst. 2024, 37, 87621–87646.

- Schmidgall, S.; Ziaei, R.; Harris, C.; Reis, E.; Jopling, J.; Moor, M. Agentclinic: A multimodal agent benchmark to evaluate ai in simulated clinical environments. arXiv 2024, arXiv:2405.07960.

- Huang, K.; Zhang, S.; Wang, H.; Qu, Y.; Lu, Y.; Roohani, Y.; Li, R.; Qiu, L.; Zhang, J.; Di, Y.; et al. Biomni: A general-purpose biomedical ai agent. bioRxiv 2025.

- Fan, Y.; Xue, K.; Li, Z.; Zhang, X.; Ruan, T. An llm-based framework for biomedical terminology normalization in social media via multi-agent collaboration. In Proceedings of the 31st International Conference on Computational Linguistics, Abu Dhabi, United Arab Emirates, 19–24 January 2025; pp. 10712–10726.

- Luo, Y.; Shi, L.; Li, Y.; Zhuang, A.; Gong, Y.; Liu, L.; Lin, C. From intention to implementation: Automating biomedical research via LLMs. Sci. China Inf. Sci. 2025, 68, 170105.

- Qin, H.; Tong, Y. Opportunities and challenges for large language models in primary health care. J. Prim. Care Community Health 2025, 16, 21501319241312571.

- Wang, W.; Ma, Z.; Wang, Z.; Wu, C.; Chen, W.; Li, X.; Yuan, Y. A survey of llm-based agents in medicine: How far are we from baymax? arXiv 2025, arXiv:2502.11211.

- Lee, J.; Yoon, W.; Kim, S.; Kim, D.; Kim, S.; So, C.H.; Kang, J. Biobert: A pre-trained biomedical language representation model for biomedical text mining. Bioinformatics 2020, 36, 1234–1240.

- Gu, Y.; Tinn, R.; Cheng, H.; Lucas, M.; Usuyama, N.; Liu, X.; Naumann, T.; Gao, J.; Poon, H. Domain-specific language model pretraining for biomedical natural language processing. ACM Trans. Comput. Healthc. (HEALTH) 2021, 3, 1–23.

- Swanson, D.R. Fish oil, raynaud’s syndrome, and undiscovered public knowledge. Perspect. Biol. Med. 1986, 30, 7–18.

- Swanson, D.R. Migraine and magnesium: Eleven neglected connections. Perspect. Biol. Med. 1988, 31, 526–557.

- Gilardi, F.; Alizadeh, M.; Kubli, M. Chatgpt outperforms crowd workers for text-annotation tasks. Proc. Natl. Acad. Sci. USA 2023, 120, e2305016120.

- Abbasian, M.; Azimi, I.; Rahmani, A.M.; Jain, R. Conversational health agents: A personalized llm-powered agent framework. arXiv 2023, arXiv:2310.02374.

- Ramos, M.C.; Collison, C.J.; White, A.D. A review of large language models and autonomous agents in chemistry. Chem. Sci. 2025, 16, 2514–2572.

- Tang, X.; Zou, A.; Zhang, Z.; Li, Z.; Zhao, Y.; Zhang, X.; Cohan, A.; Gerstein, M. MedAgents: Large language models as collaborators for zero-shot medical reasoning. arXiv 2024, arXiv:2311.10537.

- Chen, X.; Yi, H.; You, M.; Liu, W.; Wang, L.; Li, H.; Zhang, X.; Guo, Y.; Fan, L.; Chen, G.; et al. Enhancing diagnostic capability with multi-agents conversational large language models. NPJ Digit. Med. 2025, 8, 159.

- Zuo, K.; Jiang, Y.; Mo, F.; Lio, P. Kg4diagnosis: A hierarchical multi-agent llm framework with knowledge graph enhancement for medical diagnosis. In Proceedings of the AAAI Bridge Program on AI for Medicine and Healthcare. PMLR, Philadelphia, PA, USA, 25 February 2025; pp. 195–204.

- Yue, L.; Xing, S.; Chen, J.; Fu, T. Clinicalagent: Clinical trial multi-agent system with large language model-based reasoning. In Proceedings of the 15th ACM International Conference on Bioinformatics, Computational Biology and Health Informatics, Shenzhen, China, 22–25 November 2024; pp. 1–10.

- Huang, K.; Qu, Y.; Cousins, H.; Johnson, W.A.; Yin, D.; Shah, M.; Zhou, D.; Altman, R.; Wang, M.; Cong, L. Crispr-gpt: An llm agent for automated design of gene-editing experiments. arXiv 2024, arXiv:2404.18021.

- Roohani, Y.H.; Vora, J.; Huang, Q.; Liang, P.; Leskovec, J. BioDiscoveryAgent: An AI agent for designing genetic perturbation experiments. arXiv 2024, arXiv:2405.17631.

- Das, R.; Maheswari, K.; Siddiqui, S.; Arora, N.; Paul, A.; Nanshi, J.; Ud-balkar, V.; Sarvade, A.; Chaturvedi, H.; Shvartsman, T.; et al. Improved precision oncology question-answering using agentic llm. medRxiv 2024.

- Hong, S.; Xiao, L.; Zhang, X.; Chen, J. Argmed-agents: Explainable clinical decision reasoning with llm disscusion via argumentation schemes. In Proceedings of the 2024 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Lisboa, Portugal, 3–6 December 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 5486–5493.

- Chan, T.K.; Dinh, N.-D. Entagents: Ai agents for complex knowledge otolaryngology. medRxiv 2025.

- Luo, L.; Ning, J.; Zhao, Y.; Wang, Z.; Ding, Z.; Chen, P.; Fu, W.; Han, Q.; Xu, G.; Qiu, Y.; et al. Taiyi: A bilingual fine-tuned large language model for diverse biomedical tasks. J. Am. Med. Inform. Assoc. 2024, 31, 1865–1874.

- Kim, S. Medbiolm: Optimizing medical and biological qa with fine-tuned large language models and retrieval-augmented generation. arXiv 2025, arXiv:2502.03004.

- Hsu, E.; Roberts, K. Llm-ie: A python package for biomedical generative information extraction with large language models. JAMIA Open 2025, 8, ooaf012.

- Mondal, D.; Inamdar, A. Seqmate: A novel large language model pipeline for automating rna sequencing. arXiv 2024, arXiv:2407.03381.

- Yang, Z.; Qian, J.; Huang, Z.-A.; Tan, K.C. Qm-tot: A medical tree of thoughts reasoning framework for quantized model. arXiv 2025, arXiv:2504.12334.

- Luo, J.; Zhang, W.; Yuan, Y.; Zhao, Y.; Yang, J.; Gu, Y.; Wu, B.; Chen, B.; Qiao, Z.; Long, Q.; et al. Large language model agent: A survey on methodology, applications and challenges. arXiv 2025, arXiv:2503.21460.

- Lu, Y.; Wang, J. Karma: Leveraging multi-agent llms for automated knowledge graph enrichment. arXiv 2025, arXiv:2502.06472.

- Jia, Z.; Jia, M.; Duan, J.; Wang, J. Ddo: Dual-decision optimization via multi-agent collaboration for llm-based medical consultation. arXiv 2025, arXiv:2505.18630.

- Kim, Y. Healthcare Agents: Large Language Models in Health Prediction and Decision-Making. Ph.D. Dissertation, Massachusetts Institute of Technology, Cambridge, MA, USA, 2025.

- Kim, Y.; Park, C.; Jeong, H.; Chan, Y.S.; Xu, X.; McDuff, D.; Lee, H.; Ghassemi, M.; Breazeal, C.; Park, H.W. Mdagents: An adaptive collaboration of llms for medical decision-making. Adv. Neural Inf. Process. Syst. 2024, 37, 79410–79452.

- Xiao, M.; Cai, X.; Wang, C.; Zhou, Y. m-kailin: Knowledge-driven agentic scientific corpus distillation framework for biomedical large language models training. arXiv 2025, arXiv:2504.19565.

- Cheng, Y.; Zhang, C.; Zhang, Z.; Meng, X.; Hong, S.; Li, W.; Wang, Z.; Wang, Z.; Yin, F.; Zhao, J.; et al. Exploring large language model based intelligent agents: Definitions, methods, and prospects. arXiv 2024, arXiv:2401.03428.

- Liu, S.; McCoy, A.B.; Wright, A. Improving large language model applications in biomedicine with retrieval-augmented generation: A systematic review, meta-analysis, and clinical development guidelines. J. Am. Med. Inform. Assoc. 2025, 32, ocaf008.

- Christophe, C.; Kanithi, P.; Munjal, P.; Raha, T.; Hayat, N.; Rajan, R.; Al-Mahrooqi, A.; Gupta, A.; Salman, M.U.; Pimentel, M.A.F.; et al. Med42: Evaluating fine-tuning strategies for medical LLMs: Full parameter vs. parameter-efficient approaches. arXiv 2024, arXiv:2404.14779.

- Song, S.; Xu, H.; Ma, J.; Li, S.; Peng, L.; Wan, Q.; Liu, X.; Yu, J. How to complete domain tuning while keeping general ability in llm: Adaptive layer-wise and element-wise regularization. arXiv 2025, arXiv:2501.13669.

- Khandekar, N.; Jin, Q.; Xiong, G.; Dunn, S.; Applebaum, S.; Anwar, Z.; Sarfo-Gyamfi, M.; Safranek, C.; Anwar, A.; Zhang, A.; et al. Medcalc-bench: Evaluating large language models for medical calculations. Adv. Neural Inf. Process. Syst. 2024, 37, 84730–84745.

- Liao, Y.; Jiang, S.; Wang, Y.; Wang, Y. Reflectool: Towards reflection-aware tool-augmented clinical agents. arXiv 2024, arXiv:2410.17657.

- He, Y.; Li, A.; Liu, B.; Yao, Z.; He, Y. Medorch: Medical diagnosis with tool-augmented reasoning agents for flexible extensibility. arXiv 2025, arXiv:2506.00235.

- Li, B.; Yan, T.; Pan, Y.; Luo, J.; Ji, R.; Ding, J.; Xu, Z.; Liu, S.; Dong, H.; Lin, Z.; et al. MMedAgent: Learning to use medical tools with multi-modal agent. arXiv 2024, arXiv:2407.02483.

- Shi, W.; Xu, R.; Zhuang, Y.; Yu, Y.; Zhang, J.; Wu, H.; Zhu, Y.; Ho, J.; Yang, C.; Wang, M.D. EHRAgent: Code empowers large language models for few-shot complex tabular reasoning on electronic health records. In Proceedings of the Conference on Empirical Methods in Natural Language Processing, Miami, FL, USA, 12–16 November 2024; p. 22315.

- Choi, J.; Palumbo, N.; Chalasani, P.; Engelhard, M.M.; Jha, S.; Kumar, A.; Page, D. MALADE: Orchestration of LLM-powered agents with retrieval augmented generation for pharmacovigilance. arXiv 2024, arXiv:2408.01869.

- Niu, Q.; Chen, K.; Li, M.; Feng, P.; Bi, Z.; Yan, L.K.; Zhang, Y.; Yin, C.H.; Fei, C.; Liu, J.; et al. From text to multimodality: Exploring the evolution and impact of large language models in medical practice. arXiv 2024, arXiv:2410.01812.

- Li, C.; Wong, C.; Zhang, S.; Usuyama, N.; Liu, H.; Yang, J.; Naumann, T.; Poon, H.; Gao, J. Llava-med: Training a large language-and-vision assistant for biomedicine in one day. Adv. Neural Inf. Process. Syst. 2023, 36, 28541–28564.

- Liu, T.; Xiao, Y.; Luo, X.; Xu, H.; Zheng, W.J.; Zhao, H. Geneverse: A collection of open-source multimodal large language models for genomic and proteomic research. arXiv 2024, arXiv:2406.15534.

- Liu, P.; Bansal, S.; Dinh, J.; Pawar, A.; Satishkumar, R.; Desai, S.; Gupta, N.; Wang, X.; Hu, S. Medchat: A multi-agent framework for multimodal diagnosis with large language models. arXiv 2025, arXiv:2506.07400.

- Ferber, D.; El Nahhas, O.S.; Wo, G.; Wiest, I.C.; Clusmann, J.; Leßmann, M.-E.; Foersch, S.; Lammert, J.; Tschochohei, M.; Jäger, D.; et al. Development and validation of an autonomous artificial intelligence agent for clinical decision-making in oncology. Nat. Cancer 2025, 6, 1337–1349.

- Gao, S.; Zhu, R.; Kong, Z.; Noori, A.; Su, X.; Ginder, C.; Tsiligkaridis, T.; Zitnik, M. Txagent: An ai agent for therapeutic reasoning across a universe of tools. arXiv 2025, arXiv:2503.10970.

- Liu, P.; Ren, Y.; Tao, J.; Ren, Z. Git-mol: A multi-modal large language model for molecular science with graph, image, and text. Comput. Biol. Med. 2024, 171, 108073.

- Liu, S.; Lu, Y.; Chen, S.; Hu, X.; Zhao, J.; Fu, T.; Zhao, Y. DrugAgent: Automating AI-aided drug discovery programming through LLM multi-agent collaboration. In Proceedings of the 2nd AI4Research Workshop: Towards a Knowledge-grounded Scientific Research Lifecycle, Philadelphia, PA, USA, 4 March 2025.

- Xu, R.; Zhuang, Y.; Zhong, Y.; Yu, Y.; Tang, X.; Wu, H.; Wang, M.D.; Ruan, P.; Yang, D.; Wang, T.; et al. Medagentgym: Training llm agents for code-based medical reasoning at scale. arXiv 2025, arXiv:2506.04405.

- Wang, Z.; Zhu, Y.; Zhao, H.; Zheng, X.; Sui, D.; Wang, T.; Tang, W.; Wang, Y.; Harrison, E.; Pan, C.; et al. Colacare: Enhancing electronic health record modeling through large language model-driven multi-agent collaboration. Proc. ACM Web Conf. 2025, 2025, 2250–2261.

- Yi, Z.; Xiao, T.; Albert, M.V. A multimodal multi-agent framework for radiology report generation. arXiv 2025, arXiv:2505.09787.

- Almansoori, M.; Kumar, K.; Cholakkal, H. Self-evolving multi-agent simulations for realistic clinical interactions. arXiv 2025, arXiv:2503.22678.

- Jiang, Y.; Black, K.C.; Geng, G.; Park, D.; Ng, A.Y.; Chen, J.H. Medagentbench: Dataset for benchmarking llms as agents in medical applications. arXiv 2025, arXiv:2501.14654.

- Mehandru, N.; Miao, B.Y.; Almaraz, E.R.; Sushil, M.; Butte, A.J.; Alaa, A. Evaluating large language models as agents in the clinic. NPJ Digit. Med. 2024, 7, 84.

- Johnson, A.; Bulgarelli, L.; Pollard, T.; Horng, S.; Celi, L.A.; Mark, R.; Mimic-iv. PhysioNet. 2020, pp. 49–55. Available online: https://physionet.org/content/mimiciv/1.0/ (accessed on 23 August 2021).

- Song, K.; Trotter, A.; Chen, J.Y. Llm agent swarm for hypothesis- driven drug discovery. arXiv 2025, arXiv:2504.17967. [Google Scholar] [CrossRef]

- Mehenni, G.; Zouaq, A. MedHal: An evaluation dataset for medical hallucination detection. arXiv 2025, arXiv:2504.08596.

- Mitchener, L.; Laurent, J.M.; Tenmann, B.; Narayanan, S.; Wellawatte, G.P.; White, A.; Sani, L.; Rodriques, S.G. BixBench: A comprehensive benchmark for LLM-based agents in computational biology. arXiv 2025, arXiv:2503.00096.

- Fan, Z.; Wei, L.; Tang, J.; Chen, W.; Wang, S.; Wei, Z.; Huang, F. AI Hospital: Benchmarking large language models in a multi-agent medical interaction simulator. arXiv 2025, arXiv:2402.09742.

- Altermatt, F.R.; Neyem, A.; Sumonte, N.; Mendoza, M.; Villagran, I.; Lacassie, H.J. Performance of single-agent and multi-agent language models in Spanish language medical competency exams. BMC Med. Educ. 2025, 25, 666.

- Zhao, Y.; Wang, H.; Zheng, Y.; Wu, X. A layered debating multi-agent system for similar disease diagnosis. In Proceedings of the 2025 Conference of the Nations of the Americas Chapter of the Association for Computational Linguistics: Human Language Technologies (Volume 2: Short Papers), Albuquerque, NM, USA, 29 April–4 May 2025; pp. 539–549.

- Tu, T.; Schaekermann, M.; Palepu, A.; Saab, K.; Freyberg, J.; Tanno, R.; Wang, A.; Li, B.; Amin, M.; Cheng, Y.; et al. Towards conversational diagnostic artificial intelligence. Nature 2025, 642, 442–450.

- Garcia-Fernandez, C.; Felipe, L.; Shotande, M.; Zitu, M.; Tripathi, A.; Rasool, G.; Naqa, I.E.; Rudrapatna, V.; Valdes, G. Trustworthy AI for medicine: Continuous hallucination detection and elimination with CHECK. arXiv 2025, arXiv:2506.11129.

- Bunnell, D.J.; Bondy, M.J.; Fromtling, L.M.; Ludeman, E.; Gourab, K. Bridging AI and healthcare: A scoping review of retrieval-augmented generation—Ethics, bias, transparency, improvements, and applications. medRxiv 2025.

- Rani, M.; Mishra, B.K.; Thakker, D.; Babar, M.; Jones, W.; Din, A. Biases and trustworthiness challenges with mitigation strategies for large language models in healthcare. In Proceedings of the 2024 International Conference on IT and Industrial Technologies (ICIT), Chiniot, Pakistan, 10–12 December 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–6.

- Chen, J.; Gui, C.; Gao, A.; Ji, K.; Wang, X.; Wan, X.; Wang, B. CoD, towards an interpretable medical agent using chain of diagnosis. arXiv 2024, arXiv:2407.13301.

- Goktas, P.; Grzybowski, A. Shaping the future of healthcare: Ethical clinical challenges and pathways to trustworthy AI. J. Clin. Med. 2025, 14, 1605.

- Jin, Q.; Wang, Z.; Yang, Y.; Zhu, Q.; Wright, D.; Huang, T.; Wilbur, W.J.; He, Z.; Taylor, A.; Chen, Q.; et al. AgentMD: Empowering language agents for risk prediction with large-scale clinical tool learning. arXiv 2024, arXiv:2402.13225.

- Comeau, D.S.; Bitterman, D.S.; Celi, L.A. Preventing unrestricted and unmonitored AI experimentation in healthcare through transparency and accountability. npj Digit. Med. 2025, 8, 42.

- Li, Y.C.; Wang, L.; Law, J.N.; Murali, T.; Pandey, G. Integrating multimodal data through interpretable heterogeneous ensembles. Bioinform. Adv. 2022, 2, vbac065.

- AlSaad, R.; Alrazaq, A.A.; Boughorbel, S.; Ahmed, A.; Renault, M.A.; Damseh, R.; Sheikh, J. Multimodal large language models in health care: Applications, challenges, and future outlook. J. Med. Internet Res. 2024, 26, e59505.

- Nweke, I.P.; Ogadah, C.O.; Koshechkin, K.; Oluwasegun, P.M. Multi-agent AI systems in healthcare: A systematic review enhancing clinical decision-making. Asian J. Med. Princ. Clin. Pract. 2025, 8, 273–285.

- Pandey, H.G.; Amod, A.; Kumar, S. Advancing healthcare automation: Multi-agent system for medical necessity justification. arXiv 2024, arXiv:2404.17977.

- Wei, H.; Qiu, J.; Yu, H.; Yuan, W. MEDCO: Medical education copilots based on a multi-agent framework. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2025; pp. 119–135.

- Nov, O.; Aphinyanaphongs, Y.; Lui, Y.W.; Mann, D.; Porfiri, M.; Riedl, M.; Rizzo, J.-R.; Wiesenfeld, B. The transformation of patient-clinician relationships with AI-based medical advice. Commun. ACM 2021, 64, 46–48.

- Chen, K.; Zhen, T.; Wang, H.; Liu, K.; Li, X.; Huo, J.; Yang, T.; Xu, J.; Dong, W.; Gao, Y. MedSentry: Understanding and mitigating safety risks in medical LLM multi-agent systems. arXiv 2025, arXiv:2505.20824.

- Pham, T. Ethical and legal considerations in healthcare AI: Innovation and policy for safe and fair use. R. Soc. Open Sci. 2025, 12, 241873.

- Cheong, B.C. Transparency and accountability in AI systems: Safeguarding wellbeing in the age of algorithmic decision-making. Front. Hum. Dyn. 2024, 6, 1421273.

- Palaniappan, K.; Lin, E.Y.T.; Vogel, S. Global regulatory frameworks for the use of artificial intelligence (AI) in the healthcare services sector. Healthcare 2024, 12, 562.

- Zhou, Y.; Zhang, P.; Song, M.; Zheng, A.; Lu, Y.; Liu, Z.; Chen, Y.; Xi, Z. ZODIAC: A cardiologist-level LLM framework for multi-agent diagnostics. arXiv 2024, arXiv:2410.02026.

- Swanson, K.; Wu, W.; Bulaong, N.L.; Pak, J.E.; Zou, J. The virtual lab: AI agents design new SARS-CoV-2 nanobodies with experimental validation. bioRxiv 2024.

- Cui, H.; Shen, Z.; Zhang, J.; Shao, H.; Qin, L.; Ho, J.C.; Yang, C. LLMs-based few-shot disease predictions using EHR: A novel approach combining predictive agent reasoning and critical agent instruction. AMIA Annu. Symp. Proc. 2024, 2025, 319.

- Wang, Z.; Wu, J.; Cai, L.; Low, C.H.; Yang, X.; Li, Q.; Jin, Y. MedAgent-Pro: Towards evidence-based multi-modal medical diagnosis via reasoning agentic workflow. arXiv 2025, arXiv:2503.18968.

- Zhao, L.; Bai, J.; Bian, Z.; Chen, Q.; Li, Y.; Li, G.; He, M.; Yao, H.; Zhang, Z. Autonomous multi-modal LLM agents for treatment planning in focused ultrasound ablation surgery. arXiv 2025, arXiv:2505.21418.

- Moëll, B.; Aronsson, F.S.; Akbar, S. Medical reasoning in LLMs: An in-depth analysis of DeepSeek R1. Front. Artif. Intell. 2025, 8, 1616145.

- Matsumoto, N.; Choi, H.; Moran, J.; Hernandez, M.E.; Venkatesan, M.; Li, X.; Chang, J.-H.; Wang, P.; Moore, J.H. ESCARGOT: An AI agent leveraging large language models, dynamic graph of thoughts, and biomedical knowledge graphs for enhanced reasoning. Bioinformatics 2025, 41, btaf031.

- Xu, W.; Luo, G.; Meng, W.; Zhai, X.; Zheng, K.; Wu, J.; Li, Y.; Xing, A.; Li, J.; Li, Z.; et al. MRAgent: An LLM-based automated agent for causal knowledge discovery in disease via Mendelian randomization. Brief. Bioinform. 2025, 26, bbaf140.

- Atf, Z.; Safavi-Naini, S.A.A.; Lewis, P.R.; Mahjoubfar, A.; Naderi, N.; Savage, T.R.; Soroush, A. The challenge of uncertainty quantification of large language models in medicine. arXiv 2025, arXiv:2504.05278.

- Zhuang, Y.; Jiang, W.; Zhang, J.; Yang, Z.; Zhou, J.T.; Zhang, C. Learning to be a doctor: Searching for effective medical agent architectures. arXiv 2025, arXiv:2504.11301.

- Zheng, J.; Shi, C.; Cai, X.; Li, Q.; Zhang, D.; Li, C.; Yu, D.; Ma, Q. Lifelong learning of large language model based agents: A roadmap. arXiv 2025, arXiv:2501.07278.

- Li, J.; Lai, Y.; Li, W.; Ren, J.; Zhang, M.; Kang, X.; Wang, S.; Li, P.; Zhang, Y.-Q.; Ma, W.; et al. Agent hospital: A simulacrum of hospital with evolvable medical agents. arXiv 2024, arXiv:2405.02957.

- Casaletto, J.A.; Foley, P.; Fernandez, M.; Sanders, L.M.; Scott, R.T.; Ranjan, S.; Jain, S.; Haynes, N.; Boerma, M.; Costes, S.V.; et al. Foundational architecture enabling federated learning for training space biomedical machine learning models between the International Space Station and Earth. bioRxiv 2025.

- Zhang, L.; Li, Y. Federated learning with layer skipping: Efficient training of large language models for healthcare NLP. arXiv 2025, arXiv:2504.10536.

- Rosenthal, J.T.; Beecy, A.; Sabuncu, M.R. Rethinking clinical trials for medical AI with dynamic deployments of adaptive systems. npj Digit. Med. 2025, 8, 252.

- Bedi, S.; Liu, Y.; Orr-Ewing, L.; Dash, D.; Koyejo, S.; Callahan, A.; Fries, J.A.; Wornow, M.; Swaminathan, A.; Lehmann, L.S.; et al. A systematic review of testing and evaluation of healthcare applications of large language models (LLMs). medRxiv 2024.

- Solaiman, B.; Mekki, Y.M.; Qadir, J.; Ghaly, M.; Abdelkareem, M.; Al-Ansari, A. A “true lifecycle approach” towards governing healthcare AI with the GCC as a global governance model. npj Digit. Med. 2025, 8, 337.

- Sankar, B.S.; Gilliland, D.; Rincon, J.; Hermjakob, H.; Yan, Y.; Adam, I.; Lemaster, G.; Wang, D.; Watson, K.; Bui, A.; et al. Building an ethical and trustworthy biomedical AI ecosystem for the translational and clinical integration of foundation models. Bioengineering 2024, 11, 984.

| Agent/Framework | Core LLM | Key Methods | Application | Ref. |
|---|---|---|---|---|
| MedAgents | GPT-4, GPT-3.5 | MAS, RAG (implicit), CoT | Medical reasoning | [21] |
| MAC Framework | GPT-4, GPT-3.5 | MAS (MDT simulation) | Rare disease diagnosis | [22] |
| KG4Diagnosis | GPT, MedPaLM | MAS, RAG, Tool Use | Diagnosis with KG | [23] |
| BioResearcher | GPT-4o | MAS, Literature | Automated research | [11] |
| CT-Agent | GPT-4 | MAS, ReAct, Tool Use | Clinical trial analysis | [24] |
| CRISPR-GPT | N/A | Tool Use, Planning | Gene editing design | [25] |
| BioDiscoveryAgent | Claude 3.5 | PubMed Tool, Critic Agent | Perturbation planning | [26] |
| GeneSilico Copilot | N/A | RAG, Retrieval Tools, ReAct | Oncology (breast cancer) | [27] |
| ArgMed-Agents | GPT-3.5, GPT-4 | MAS, Symbolic Reasoning | Explainable decision making | [28] |
| ENTAgents | N/A | MAS, RAG, Reflection | ENT QA system | [29] |
| Taiyi | Qwen-7B-base | Bilingual Fine-tuning | Biomed NLP tasks | [30] |
| MedBioLM | N/A | Fine-tuning, RAG | Biomedical QA | [31] |
| LLM-IE Agent | N/A | Prompt Editor Tool Use | Biomedical IE (NER, RE) | [32] |
| SeqMate | gpt-3.5-turbo | BioTools, Planning | RNA-seq analysis | [33] |
| Clinical Calc Agent | LLaMA, GPT-4o | Code Tool, RAG, API | Clinical scoring tasks | [4] |
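Several systems in the table above, such as the clinical calculation agent [4], pair an LLM with a code or calculator tool so that arithmetic is delegated to deterministic functions instead of being generated as free text. The Python sketch below illustrates that tool-use pattern under stated assumptions: the JSON call format, the tool names, and the `execute_tool_call` helper are hypothetical and are not the interface of any cited system. Body mass index (weight in kg divided by the square of height in m) and the common (SBP + 2 × DBP) / 3 approximation of mean arterial pressure serve only as stand-in calculators.

```python
import json
from typing import Any, Callable, Dict


# Deterministic clinical calculators exposed as "tools". The agent delegates
# arithmetic to these functions instead of computing it in generated text.
def bmi(weight_kg: float, height_m: float) -> float:
    """Body mass index: weight (kg) divided by height (m) squared."""
    if height_m <= 0:
        raise ValueError("height_m must be positive")
    return round(weight_kg / height_m ** 2, 1)


def mean_arterial_pressure(sbp: float, dbp: float) -> float:
    """Approximate mean arterial pressure (mmHg) as (SBP + 2 * DBP) / 3."""
    return round((sbp + 2 * dbp) / 3, 1)


# Hypothetical tool registry; names are illustrative, not from any cited system.
TOOLS: Dict[str, Callable[..., float]] = {
    "bmi": bmi,
    "mean_arterial_pressure": mean_arterial_pressure,
}


def execute_tool_call(raw_call: str) -> Dict[str, Any]:
    """Parse a JSON tool call emitted by the model and run the matching tool.

    Failures (malformed JSON, unknown tool, bad arguments) are returned as
    data so the agent loop can show them to the model and let it retry.
    """
    try:
        call = json.loads(raw_call)
        fn = TOOLS[call["tool"]]
        return {"ok": True, "result": fn(**call.get("args", {}))}
    except (json.JSONDecodeError, KeyError, TypeError, ValueError) as exc:
        return {"ok": False, "error": f"{type(exc).__name__}: {exc}"}


if __name__ == "__main__":
    # A well-formed call: 70 / 1.75**2 = 22.857..., rounded to 22.9
    print(execute_tool_call('{"tool": "bmi", "args": {"weight_kg": 70, "height_m": 1.75}}'))
    # An unknown tool name is reported back instead of crashing the agent
    print(execute_tool_call('{"tool": "egfr", "args": {}}'))
```

Returning errors as data rather than raising them lets an agent loop feed the failure back to the model as an observation, which is one simple way to surface the API misuse and invocation errors discussed under tool reliability.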

| Benchmark | Focus | Modalities | Metrics | Ref. |
|---|---|---|---|---|
| AgentClinic | Clinical simulation | Dialogue, Image, EHR | Accuracy, Bias analysis | [8] |
| MedJourney | Patient journey QA | Dialogue, Text | Accuracy, BLEU, Recall | [7] |
| CalcQA | Tool-based execution | Text (cases) | Tool accuracy | [6] |
| MedAgentBench | FHIR interaction | Text + EHR | Task success rate | [63] |
| MedHal | Hallucination detection | Text (clinical, QA) | Accuracy, F1 score | [67] |
| SourceCheckup | Citation verification | Text, URL | Source accuracy | [5] |
| BixBench | Bioinformatics QA | Text + Bio data | Task accuracy | [68] |
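The benchmarks in the table above mostly report standard quantities such as accuracy, F1 score, and task success rate. The following Python sketch shows how these are typically computed, assuming binary labels in which 1 marks a hallucinated statement and one boolean outcome per agent episode. Both conventions and the function names are illustrative assumptions; each benchmark (for example, MedHal [67] or MedAgentBench [63]) defines its own labeling scheme and success criteria, which are not reproduced here.

```python
from typing import Sequence


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Fraction of predictions that exactly match the reference labels."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def f1_score(y_true: Sequence[int], y_pred: Sequence[int], positive: int = 1) -> float:
    """Binary F1 = 2PR / (P + R), computed for the `positive` class."""
    if len(y_true) != len(y_pred):
        raise ValueError("inputs must be of equal length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    if tp == 0:
        return 0.0  # no true positives: F1 is taken as 0 by convention
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def task_success_rate(outcomes: Sequence[bool]) -> float:
    """Share of agent episodes whose final state was judged successful."""
    if len(outcomes) == 0:
        raise ValueError("need at least one episode")
    return sum(outcomes) / len(outcomes)


if __name__ == "__main__":
    # Toy labels: 1 = statement flagged as hallucinated, 0 = faithful.
    gold = [1, 0, 1, 1, 0, 0]
    pred = [1, 0, 0, 1, 1, 0]
    print(f"accuracy={accuracy(gold, pred):.3f}")  # 4 of 6 correct
    print(f"f1={f1_score(gold, pred):.3f}")        # P = R = 2/3
    print(f"success={task_success_rate([True, True, False, True]):.2f}")
```

In practice a library routine such as scikit-learn's `sklearn.metrics.f1_score` would usually be preferred; the hand-written version only makes the formula explicit.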

| Challenge | Impact | Current Mitigation | Limitations | Proposed Metric |
|---|---|---|---|---|
| Hallucinations and Factual Inaccuracies | Misdiagnoses, fabricated references, unverified outputs | RAG, tool-based computation, self-correction, prompt engineering | Residual factual errors, difficulty defining hallucination boundaries | Hallucination Trust Index (HTI) |
| Explainability and Transparency | Limited interpretability, clinician distrust | SHAP, LIME, CoT, CoD, argumentation, model cards, human-in-the-loop | Opaque internal logic, difficult for non-experts to audit outputs | — |
| Data Quality, Availability, and Bias | Biased predictions, reduced generalization | Dataset curation, bias audits, fairness-aware training | Limited real-world demographic coverage | Equity Alignment Score (EAS) |
| Tool Reliability and Integration | Calculation errors, API misuse | Prompt refinement, tool-specific evaluation, error handling | Interface mismatch, invocation confusion | Tool Execution Fidelity (TEF) |
| Multimodal Data Integration and Processing | Sparse or conflicting patient signals | Joint encoders, gated fusion, alignment protocols | Modality gaps, noisy inputs | Multimodal Alignment Consistency (MAC) |
| Multi-Agent Collaboration Complexity | Inter-agent misalignment, redundant roles | Role assignment, consensus mechanisms | Coordination latency, planning inconsistencies | Agent Coordination Latency (ACL) |
| Ethics, Privacy, Security, Regulation | Data misuse, legal ambiguity, safety concerns | Privacy-preserving computation, AI-specific governance | No agent-specific regulatory standard, unclear responsibility | AI Governance Readiness Index (AGRI) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Xu, X.; Sankar, R. Large Language Model Agents for Biomedicine: A Comprehensive Review of Methods, Evaluations, Challenges, and Future Directions. Information 2025, 16, 894. https://doi.org/10.3390/info16100894

Xu X, Sankar R. Large Language Model Agents for Biomedicine: A Comprehensive Review of Methods, Evaluations, Challenges, and Future Directions. Information. 2025; 16(10):894. https://doi.org/10.3390/info16100894

Chicago/Turabian Style: Xu, Xiaoran, and Ravi Sankar. 2025. "Large Language Model Agents for Biomedicine: A Comprehensive Review of Methods, Evaluations, Challenges, and Future Directions" Information 16, no. 10: 894. https://doi.org/10.3390/info16100894

APA Style: Xu, X., & Sankar, R. (2025). Large Language Model Agents for Biomedicine: A Comprehensive Review of Methods, Evaluations, Challenges, and Future Directions. Information, 16(10), 894. https://doi.org/10.3390/info16100894