Abstract

In the era of artificial intelligence, higher education is embracing new opportunities for pedagogical innovation. This study investigates the impact of integrating AI into college English teaching, focusing on its role in enhancing students’ critical thinking and academic engagement. A controlled experiment compared AI-assisted instruction with traditional teaching, revealing that AI-supported learning improved overall English proficiency, especially in writing skills and among lower- and intermediate-level learners. Behavioral analysis showed that the quality of AI interaction—such as meaningful feedback adoption and autonomous revision—was more influential than mere usage frequency. Student feedback further suggested that AI-enhanced teaching stimulated motivation and self-efficacy while also raising concerns about potential overreliance and shallow engagement. These findings highlight both the promise and the limitations of AI in language education, underscoring the importance of teacher facilitation and thoughtful design of human–AI interaction to support deep and sustainable learning.

1. Introduction

In the second decade of the 21st century, artificial intelligence (AI) technologies entered a phase of large-scale practical application. In particular, the rapid breakthroughs of Generative Artificial Intelligence (Generative AI) in natural language processing and human–computer interaction have profoundly disrupted traditional educational models and created opportunities for deep structural transformation [1,2,3]. With the increasingly widespread use of large language models such as ChatGPT and DeepSeek, the educational field is undergoing a paradigm shift from “tool-assisted” instruction to “intelligent symbiosis” [4,5,6]. In the context of college English teaching, Generative AI not only provides language generation and knowledge supplementation but also reshapes learners’ cognitive pathways and linguistic thinking through contextual simulation, interactive guidance, and personalized feedback.

Traditional college English instruction in China [7] is typically teacher-centered, focusing on systematic input of vocabulary, grammar, and reading, often assessed through standardized tests. While this approach ensures efficiency in knowledge transmission, its limitations have become increasingly evident. Students have limited opportunities for language output, which restricts the development of communicative competence. In addition, the highly homogenized nature of instruction fails to meet the diverse needs and cognitive rhythms of learners [8]. In an era where emerging engineering disciplines, intercultural communication, and global competence are gaining prominence, the educational role of college English urgently needs to shift—from knowledge transmission to competency building and from uniform instruction to intelligent adaptation.

The rise of Generative AI [9] offers a timely opportunity for deep reform in college English instruction. First, AI systems possess robust language generation and adaptive response capabilities that can simulate authentic communicative contexts through task-based learning, enhancing the realism and immersion of language practice [10]. Second, AI systems can record and analyze learners’ behavioral data, enabling dynamic learner profiling and real-time intervention and thereby shifting pedagogical decision-making from experience-driven to data-driven processes. Third, AI systems are inherently scalable, connecting individual learners with global resources and thus providing expansive learning spaces and diversified language input [11]. In this context, integrating Generative AI into college English education has become a key entry point for the intelligent transformation of higher education.

International debates and risk landscape: While the affordances of Generative AI are promising, international scholarship has highlighted critical risks that must be integrated into any conceptual framework. Cognitive offloading may lead to the accumulation of “cognitive debt,” reducing opportunities for retrieval practice and executive control [12]. Overconfidence in AI outputs correlates with reduced critical-thinking effort and miscalibrated metacognition [13]. Scholars have also described a “cognitive paradox” in which efficiency gains may paradoxically suppress deep processing if not properly scaffolded [14]. Policy-level guidance (UNESCO, 2023/2025; OECD, 2025) further cautions that current AI systems lack metacognition and self-evaluation, underscoring the importance of human oversight and ethical guardrails [15]. Taken together, these debates indicate that the same affordances (simulation, adaptive feedback, and scalability) must be weighed against corresponding risks (cognitive offloading, shallow engagement, and dependency). Any research design should, therefore, explicitly model these tensions.

From generic “challenges” to testable tensions: To address these debates, we reconceptualize the challenges of AI-supported instruction as a set of testable tensions: (1) quality of AI interaction versus frequency of use; (2) teacher facilitation versus tool autonomy; and (3) feedback adoption versus cognitive passivity. These tensions motivate our central hypotheses: (a) the quality of AI-mediated interactions will be a stronger predictor of learning gains than frequency of use; and (b) learners who maintain calibrated self-monitoring will benefit more than those who rely uncritically on AI assistance. These hypotheses align with international debates while operationalizing the conceptual framework for empirical testing.

Existing studies have primarily focused on the technological functions of AI and the construction of teaching platforms, with limited attention to how AI operates within authentic classrooms and reshapes learner behavior. Empirical comparative studies targeting language courses remain scarce. Therefore, this study seeks to bridge domestic implementation with international debates by explicitly modeling both the affordances and risks of AI in college English instruction. Specifically, we take the integration of a Generative AI platform into authentic classrooms as our starting point, aiming to connect instructional design with learner behavior and outcomes and to provide both empirical evidence and theoretical insight for AI-driven educational innovation.

Globally, AI in education has undergone extensive exploration. From early Intelligent Tutoring Systems [16] to learning analytics platforms grounded in cognitive science, AI has evolved from a teaching assistant to an active participant in learning processes [17]. In recent years, the rise of Generative AI has transformed the nature of linguistic interaction in educational contexts. Hwang et al. [18] found that ChatGPT provides high-quality feedback, language modeling, and text reconstruction in English writing instruction, significantly enhancing learners’ expressive abilities and writing confidence. Lin [19] further emphasized that Generative AI enables “collaborative writing,” helping learners internalize linguistic structures and discourse logic through interaction.

In China, the development of smart education systems has accelerated in recent years. Research has primarily focused on platform construction (e.g., “smart classrooms” [20]), instructional model design, and AI-based assessment systems. For example, Zheng et al. [21] proposed a “teacher-led + AI-supported” collaborative teaching framework for college English, using knowledge graphs and corpora to facilitate precision instruction. However, domestic research still faces several limitations: (1) much of the work remains at the level of technological application, lacking classroom-based behavioral data; (2) most studies rely on theoretical construction or survey interviews, with limited empirical control studies and behavioral modeling; and (3) classroom integration of Generative AI remains exploratory, with little systematic research on instructional models, outcome evaluation, or learner behavior mechanisms.

This study addresses these gaps by centering on the integration of Generative AI into college English instruction. We implement a six-week comparative experiment with three core components:

- (1) Construction of a hybrid human–AI collaborative teaching model.
- (2) Integration of multi-source data for instructional evaluation.
- (3) Development of a replicable intelligent instructional pathway model.

The remainder of this paper is organized as follows. Section 2 elaborates on the research motivation. Section 3 develops the theoretical and conceptual framework for integrating Generative AI into college English instruction, drawing upon constructivist learning theory and international critiques of AI affordances. Section 4 details the experimental design and methodology. Section 5 presents the results across instructional effectiveness, behavioral patterns, and subjective learner feedback. Section 6 explores pedagogical implications and reflects on limitations. Section 7 concludes with recommendations for future work.

2. Motivation

Driven by both theoretical inquiry and practical demands, this study conducts an in-depth investigation into the implementation effects and operational mechanisms of Generative-AI-assisted instruction in college English classrooms. Specifically, the research addresses three core questions:

Is Generative-AI-assisted instruction more effective than traditional teaching in terms of learning outcomes? This question is examined through a quasi-experimental pretest–posttest design comparing students’ scores across Generative-AI-assisted and traditional instruction groups, with the aim of evaluating the relative impact of the two teaching models on language learning effectiveness.

What are the behavioral characteristics of students in a Generative-AI-supported learning environment? Drawing on platform log data (e.g., question frequency, interaction content, and dwell time) and behavioral pathway modeling techniques, the study analyzes the structure and evolution of student learning behaviors within AI-enhanced instructional settings.

What are the instructional advantages and potential challenges of Generative-AI-assisted teaching? By synthesizing data from student questionnaires, teacher interviews, and classroom observations, the study systematically identifies the benefits of Generative AI instruction in areas such as motivation, engagement, and competence development while also highlighting practical challenges such as cognitive dependency and uneven interaction quality.

Based on these research questions, the study aims to construct a task-driven, human–AI collaborative instructional model for college English teaching. A comprehensive evaluation is conducted across three key dimensions: instructional effectiveness, learning behavior, and teaching satisfaction. On this basis, the study proposes optimized instructional strategies and practical recommendations for Generative-AI-supported pedagogy while acknowledging current limitations (e.g., short intervention duration and single-institution sampling) and pointing to directions for future refinement.

3. Constructing a Logical Framework for AI–Education Integration

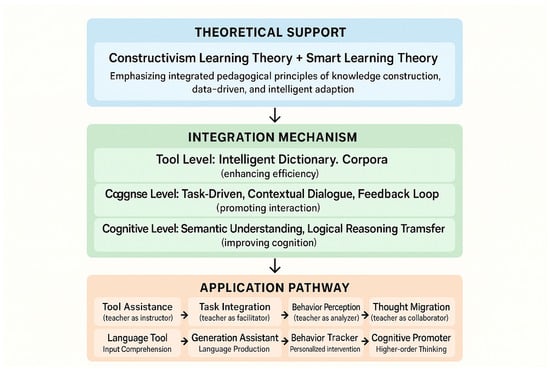

With the accelerated integration of artificial intelligence into education, constructing a scientific and systematic framework for AI–education fusion has become both a theoretical prerequisite and a practical guide for promoting the intelligent transformation of higher education curricula. This study proposes a logical pathway for the deep integration of Generative AI and college English instruction from three interrelated dimensions: educational philosophy, technological mechanisms, and instructional processes. Rather than serving as a purely descriptive taxonomy, the framework is explicitly anchored in established theories of constructivism, distributed cognition, scaffolding, and human–AI collaboration. As shown in Figure 1, the framework provides the theoretical foundation for both the empirical design and the pedagogical transformation of AI-assisted teaching.

Figure 1. The framework for AI–education fusion.

3.1. Theoretical Foundation: From Constructivism to Distributed Cognition

The integration of AI and education can be understood as a process of co-constructing cognition between human learners and artificial intelligence systems. Its theoretical basis can be traced to Constructivist Learning Theory, which emphasizes that knowledge should be actively constructed by learners through interaction with their environment rather than passively received from teachers [22]. From this perspective, Generative AI is not merely a “language generator” but functions as an “interactive learner” with semantic understanding capabilities. It dynamically responds to student input by providing feedback, corrections, and guidance, thereby creating a socially constructed learning environment [23].

Beyond constructivism, the framework also draws on scaffolding theory and distributed cognition. Scaffolding theory emphasizes temporary supports provided by more capable others—teachers, peers, or intelligent systems—that enable learners to accomplish tasks beyond their current ability. Generative AI can serve as a dynamic scaffold, gradually reducing assistance as learners gain independence. Distributed cognition theory [24] highlights that cognitive processes are not confined to the individual mind but are distributed across people, artifacts, and environments. From this perspective, AI platforms become part of the learner’s cognitive ecology, extending working memory, externalizing representations, and enabling collaborative problem solving. These theoretical lenses ensure that the proposed framework is not merely descriptive but also conceptually grounded.

Building on these perspectives, the emerging Smart Learning Theory [25] further emphasizes that data sensing, contextual adaptation, and system intelligence are key elements for achieving a ubiquitous–personalized–optimized learning cycle [26]. The language generation and interactive functions of Generative AI make it a well-suited vehicle for implementing a hybrid of constructivist, scaffolding, and adaptive learning paradigms. As such, it is likely to become a critical component of future cognition-driven instruction.

3.2. Integration Mechanism: A Three-Tiered Human–AI Collaboration Model

This study proposes a Three-Tiered Logical Mechanism Model for integrating Generative AI into college English instruction, explicitly informed by human–AI collaboration frameworks [27]. It comprises three layers:

Tool Layer: At this level, AI functions as an external instructional aid, performing tasks such as language generation, grammar checking, and text expansion. Its role is comparable to that of an enhanced dictionary and corpus-like resource, primarily aimed at improving students’ expression efficiency and assisting teachers in lesson preparation.

Process Layer: AI begins to contribute to classroom task design and interaction management. Through task-driven learning, students engage with AI in contextual dialogues, role simulations, and text continuation, forming a cyclical feedback loop of “practice–revision–repractice.” Meanwhile, teachers use behavioral data to dynamically adjust instructional strategies. In this layer, human–AI collaboration theory positions AI as a “collaborative partner” rather than a passive tool, enabling distributed task management and adaptive co-regulation of learning.

Cognitive Layer: AI progresses from a language supporter to a facilitator of cognitive development. Through interactive revision, semantic comparison, and logical reasoning, students form a cognitive chain of “reflective questioning → language reconstruction → expressive transfer.” Here, AI serves simultaneously as a cognitive scaffold and an externalized representation system, fostering the development of critical thinking, metacognition, and multimodal communicative competence.

3.3. Application Pathway: From Tool Insertion to Paradigm Evolution

Based on the structural characteristics of college English tasks and the functional attributes of Generative AI, this study further proposes a Four-Stage Evolutionary Pathway for AI-integrated instruction, as shown in Table 1. Rather than a simple linear progression, these stages are mapped onto constructivist learning cycles (input → construction → reflection → transfer), thereby clarifying the theoretical grounding of each step.

Table 1. Four-stage evolutionary pathway of AI integration in college English instruction.

By explicitly linking each stage to constructivist and distributed cognition principles, the pathway emphasizes not only practical progression but also theoretical justification, thereby transforming the framework from a descriptive taxonomy into a theory-informed model.

4. Research Design and Methodology

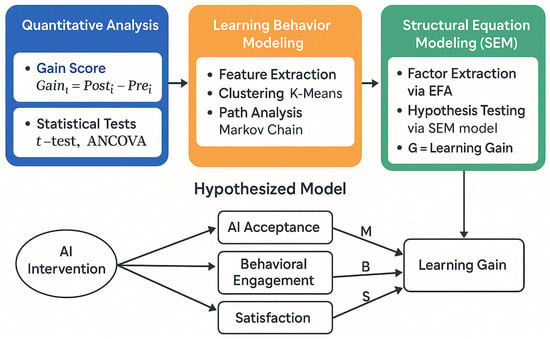

Before examining the impact of Generative AI on college English teaching outcomes and learning behaviors, it is essential to establish a systematic and rigorous research design. This study adopts a mixed-methods design to address three core questions: whether AI-based instruction is effective, why it is effective, and for whom it is most effective. This section outlines the implementation pathway across three components (experimental design, data sources, and analytical methods), covering participant selection, instructional strategies, multi-source data collection, and the construction of multi-level statistical models. By integrating quantitative performance evaluation, behavioral modeling, and subjective pathway analysis, the study not only verifies the effectiveness of AI-assisted instruction but also explores its underlying mechanisms and key influencing factors. The overall research logic and methodological process are illustrated in Figure 2.

Figure 2. Research design and methodological framework of the study.

4.1. Experimental Design and Data Sources

This study systematically evaluates the instructional effectiveness and behavioral impacts of Generative AI in college English classrooms. A non-equivalent pretest–posttest control group design, a type of quasi-experimental method, was employed to approximate causal inference in authentic classroom settings while maintaining control over instructional variables.

Participants were undergraduates of the 2023 cohort (non-English majors) from a key provincial university in China, spanning science and engineering, economics and management, and the humanities. A total of 150 students were randomly assigned to the experimental or control group (n = 75 each) using stratified randomization based on gender and English pretest scores to enhance baseline comparability. Baseline equivalence was checked via independent-samples t-tests and standardized mean differences; no material imbalance was detected, and balance diagnostics are reported in Table 2. The experiment lasted six weeks, with two sessions per week (two class hours per session; 24 class hours in total). Course content, instructional objectives, and assessment structures were held constant across groups to minimize extraneous interference. All procedures were approved by the institutional ethics committee, and written informed consent was obtained from all participants.
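The stratified assignment described above can be sketched as follows. This is a minimal illustration that assumes pretest scores are cut into three equal-sized bands and crossed with gender. The function name `stratified_assign`, the band count, and the seed are hypothetical. The study does not report its exact randomization procedure.

```python
import random
from collections import defaultdict


def stratified_assign(students, n_bands=3, seed=2023):
    """Randomly assign students to 'AI' or 'control' within gender x pretest-band strata.

    students: list of (student_id, gender, pretest_score) tuples.
    n_bands and seed are illustrative assumptions, not values reported by the study.
    """
    rng = random.Random(seed)
    # Rank students by pretest score and cut the ranking into roughly equal bands.
    ranked = sorted(students, key=lambda s: s[2])
    band_of = {sid: rank * n_bands // len(ranked) for rank, (sid, _, _) in enumerate(ranked)}

    strata = defaultdict(list)
    for sid, gender, _ in students:
        strata[(gender, band_of[sid])].append(sid)

    assignment = {}
    counter = 0  # runs across strata so overall group sizes differ by at most one
    for key in sorted(strata):
        members = strata[key]
        rng.shuffle(members)
        for sid in members:
            assignment[sid] = "AI" if counter % 2 == 0 else "control"
            counter += 1
    return assignment
```

With 150 students, this yields exactly 75 per group. Each stratum is split as evenly as its size allows.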

Table 2. Baseline equivalence diagnostics between experimental and control groups.

In terms of instructional strategy, the experimental group utilized a dual-platform Generative AI environment (ChatGPT 4.0 and DeepSeek 3.2, accessed via institutional accounts with official web interfaces; no third-party plug-ins or external model add-ons were used) embedded with task-driven modules (e.g., AI-guided writing and discourse extension), real-time feedback mechanisms, and behavioral tracking systems.

AI implementation details: Students engaged in a structured “draft → feedback → revision” loop: (i) submit a task-specific prompt and generate a first draft; (ii) request and document AI feedback on content, organization, and language form; (iii) revise the draft and append a short reflection note explaining which recommendations were adopted or rejected and why.
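The draft → feedback → revision loop can be logged as a small record per task. The pathway completeness index used in the log analysis then follows directly from these records. The sketch below assumes hypothetical event labels (`draft`, `feedback`, `revision`, `resubmission`) and a hypothetical `TaskCycle` type. It is not the platform's actual log schema.

```python
from dataclasses import dataclass, field

# Hypothetical event labels; the study's actual log schema is not published.
REQUIRED_STEPS = ("draft", "feedback", "revision", "resubmission")


@dataclass
class TaskCycle:
    student_id: str
    events: list = field(default_factory=list)  # ordered event labels from the platform log
    reflection_note: str = ""  # which suggestions were adopted or rejected, and why

    def is_complete(self) -> bool:
        """True if the required steps occur in order; other events may be interleaved."""
        remaining = iter(self.events)
        # `step in remaining` consumes the iterator, so this is an ordered subsequence test.
        return all(step in remaining for step in REQUIRED_STEPS)

    def has_reflection(self) -> bool:
        return bool(self.reflection_note.strip())


def pathway_completeness(cycles):
    """Share of logged task cycles that completed the full loop."""
    if not cycles:
        return 0.0
    return sum(c.is_complete() for c in cycles) / len(cycles)
```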

To support consistent use, a prompt template library was provided (e.g., genre-audience-purpose specification, style constraints, and content boundaries). Instructors received a short instructional facilitation protocol (pre-brief, live monitoring dashboard, and post-task debrief) to standardize coaching across classes. Responsible-use guidelines were communicated prior to the experiment: AI could be used for idea generation, language polishing, and structural suggestions; full outsourcing of assignments to AI was prohibited. Students were required to attach AI interaction summaries (prompt snapshots or paraphrased logs) to submissions, ensuring auditability and accountability.

In contrast, the control group followed a traditional teacher-centered approach combining PPT lectures and paper-based exercises, with feedback provided via manual grading and periodic assessments. All classes were delivered by instructors with comparable qualifications to minimize style bias. To monitor treatment fidelity, periodic classroom observations were conducted using a standardized checklist, with adherence notes summarized in Table 3.

Table 3. Classroom observation checklist and fidelity adherence summary.

To comprehensively capture both the outcomes and the mechanisms of Generative-AI-assisted instruction, a multi-source data collection system was implemented, integrating quantitative and qualitative, process and outcome, and objective and subjective dimensions.

First, cognitive outcome data were obtained from pretest and posttest English proficiency assessments, which covered reading comprehension, language usage, and writing. All items and rubrics were developed by a professional test design team, aligned with CEFR B1–B2 levels and the Chinese Standards of English Language Ability (CSE Levels 4–5). Inter-rater calibration was conducted prior to scoring, and the final scores were averaged from three independent raters under double-blind conditions to ensure reliability and validity.

Second, AI platform log data (experimental group only) recorded the full process of student–AI interaction. Key indicators included weekly interaction frequency, average output length per task, revision count, and feedback adoption rate (defined as the proportion of actionable AI suggestions incorporated into the revised draft). Additional measures included weekly usage time, self-revision rate (operationalized by edit-distance between drafts), pathway completeness index (whether the full cycle “draft–feedback–revision–resubmission” was completed), BLEU score, and a lexico–syntactic features index (type–token ratio and clause complexity). All raw logs were anonymized before export, with personal identifiers such as names, IDs, and IPs removed. Data were stored on secure institutional servers with restricted access and daily backups in accordance with university data protection policies.
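Three of the log indicators above can be computed with a few lines of standard Python. The sketch makes three assumptions that the study does not specify:

- Each AI suggestion carries an identifier.
- The self-revision rate is a word-level edit distance normalized by the longer draft.
- Tokens are separated by whitespace.

Clause complexity, which requires a syntactic parser, is omitted.

```python
def levenshtein(a, b):
    """Edit distance (insertions, deletions, substitutions) between two sequences.

    Works on strings (character level) or on lists of tokens (word level).
    """
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        cur = [i]
        for j, cb in enumerate(b, start=1):
            cur.append(min(prev[j] + 1,               # delete ca
                           cur[j - 1] + 1,            # insert cb
                           prev[j - 1] + (ca != cb)))  # substitute (free if equal)
        prev = cur
    return prev[-1]


def self_revision_rate(draft, revised):
    """Word-level edit distance normalized by the longer draft (assumed normalization)."""
    d, r = draft.split(), revised.split()
    longest = max(len(d), len(r))
    return levenshtein(d, r) / longest if longest else 0.0


def feedback_adoption_rate(actionable, adopted):
    """Proportion of actionable AI suggestions (by ID) incorporated into the revision."""
    actionable = set(actionable)
    if not actionable:
        return 0.0
    return len(actionable & set(adopted)) / len(actionable)


def type_token_ratio(text):
    """Unique word forms divided by total words (naive whitespace tokenization)."""
    tokens = text.lower().split()
    return len(set(tokens)) / len(tokens) if tokens else 0.0
```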

Third, in-class behavioral observations were conducted using structured observation sheets to record behaviors such as question frequency, participation, and engagement. Observers rotated across sessions and cross-reviewed notes. Observation prompts were aligned with the facilitation protocol (e.g., evidence of feedback uptake and quality of revision planning) to ensure consistency between intended pedagogy and enacted practices.

Fourth, motivation and perception surveys were administered in Weeks 3 and 6 using a 5-point Likert-scale instrument titled “Generative AI Learning Experience and Motivation,” measuring five dimensions. Scale items, factor structure, and reliability coefficients are reported in Table 4. Administration procedures were identical across groups to minimize measurement bias.

Table 4. Scale items, factor structure, and reliability coefficients.

Finally, teacher and student interviews were conducted in a semi-structured format with instructors and a purposive subsample of students, selected via maximum-variation sampling across behavioral clusters. The interview protocol covered four domains: (i) usefulness and limitations of AI feedback; (ii) changes in writing process and self-regulation; (iii) experiences with the draft–feedback–revision cycle; and (iv) responsible AI use and academic integrity. Interviews lasted approximately 20–30 min, were audio-recorded with participant consent, and were subsequently transcribed.

Academic integrity and ethical safeguards. Students signed an acceptable-use statement clarifying permitted and prohibited AI uses, and instructors verified consistency across drafts, AI summaries, and final submissions. Any concerns were addressed through formative feedback rather than punitive measures during the study. Informed consent was obtained prior to participation, and privacy safeguards for log data were strictly enforced, in line with COPE/APA ethical guidelines.

This five-source data framework enabled a comprehensive assessment of both cognitive outcomes and behavioral–cognitive mechanisms under AI-supported learning while ensuring transparency regarding platform implementation, task design, interview protocols, and ethical compliance.

4.2. Analysis Methods

To evaluate the effectiveness of Generative-AI-assisted instruction, this study employed a mixed-methods approach that integrated statistical inference, behavioral modeling, and structural modeling.

4.2.1. Quantitative Assessment of Instructional Effectiveness

The English proficiency test was developed and standardized by a professional language testing team. Items were aligned with the Common European Framework of Reference for Languages (CEFR, B1–B2 levels) and the Chinese Standards of English Language Ability (CSE Levels 4–5), ensuring both international and national comparability. Reliability and validity were confirmed through a pilot test (), yielding Cronbach’s for the overall scale and inter-rater reliability of for writing tasks.

Individual learning gains were computed as

$$G_i = Y_i - X_i,$$

where $Y_i$ and $X_i$ are the posttest and pretest scores of student $i$.

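Group differences were first examined with an independent-samples t-test on the gain scores:

$$t = \frac{\bar{G}_E - \bar{G}_C}{\sqrt{s_E^2 / n_E + s_C^2 / n_C}},$$

where $\bar{G}$, $s^2$, and $n$ denote the mean gain, the sample variance of gains, and the sample size in the experimental (E) and control (C) groups.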
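The gain computation and the group comparison can be sketched with Python's `statistics` module. The sketch assumes the Welch (unequal-variance) form of the t statistic and a pooled-SD Cohen’s d; the study does not state which variants it used. p-values are not computed here, because they require the t distribution (available, for example, in SciPy).

```python
import math
import statistics as st


def gains(pre, post):
    """Individual learning gains: posttest minus pretest."""
    return [y - x for x, y in zip(pre, post)]


def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    va, vb = st.variance(a) / len(a), st.variance(b) / len(b)  # squared standard errors
    t = (st.mean(a) - st.mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


def cohens_d(a, b):
    """Standardized mean difference using the pooled standard deviation."""
    na, nb = len(a), len(b)
    pooled = ((na - 1) * st.variance(a) + (nb - 1) * st.variance(b)) / (na + nb - 2)
    return (st.mean(a) - st.mean(b)) / math.sqrt(pooled)
```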

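If normality assumptions were violated, the non-parametric Mann–Whitney U test was applied. To control for baseline bias, ANCOVA was performed:

$$Y_{ij} = \mu + \tau_j + \beta\,(X_{ij} - \bar{X}) + \varepsilon_{ij},$$

where $Y_{ij}$ denotes the posttest score of student $i$ in group $j$, $\tau_j$ the treatment effect (AI vs. control), and $X_{ij}$ the pretest covariate. Here $\mu$ is the grand mean, $\bar{X}$ the mean pretest score, $\beta$ the common within-group regression slope, and $\varepsilon_{ij}$ the residual.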
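The adjusted-means logic behind this ANCOVA can be illustrated without a statistics package. The procedure has two steps:

1. Estimate one common within-group slope for the pretest.
2. Compare the group posttest means after adjusting each to the grand pretest mean.

This is a teaching sketch under the equal-slopes assumption, and the function name is hypothetical. It omits the F test and the homogeneity-of-slopes check that a full analysis would include.

```python
import statistics as st


def ancova_adjusted_means(groups):
    """One-covariate ANCOVA via the pooled within-group slope.

    groups: dict mapping a group label to a list of (pretest X, posttest Y) pairs.
    Returns (beta_w, adjusted): the common slope and each group's posttest mean
    adjusted to the grand pretest mean, as in Y_ij = mu + tau_j + beta (X_ij - X_bar) + e_ij.
    """
    sxy = sxx = 0.0
    for pairs in groups.values():
        mx = st.mean(x for x, _ in pairs)
        my = st.mean(y for _, y in pairs)
        sxy += sum((x - mx) * (y - my) for x, y in pairs)
        sxx += sum((x - mx) ** 2 for x, _ in pairs)
    beta_w = sxy / sxx
    grand_x = st.mean(x for pairs in groups.values() for x, _ in pairs)
    adjusted = {
        g: st.mean(y for _, y in pairs) - beta_w * (st.mean(x for x, _ in pairs) - grand_x)
        for g, pairs in groups.items()
    }
    return beta_w, adjusted
```

The difference between the adjusted means estimates the treatment effect $\tau_{\text{AI}} - \tau_{\text{control}}$.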

4.2.2. Modeling of Learning Behavior Data

The following behavioral indicators were defined, as shown in Table 5.

Table 5. Behavioral feature definitions.

BLEU scores and linguistic feature indices were used because they provide objective measures of revision quality and language complexity. These automated metrics complement human ratings, reducing subjectivity and capturing both surface-level edits and deeper structural improvements in student writing.
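A minimal BLEU score is sketched below. It is unsmoothed and uses a single reference, combining clipped n-gram precisions up to 4-grams (geometric mean) with a brevity penalty. The study does not say which text served as the reference, so the sketch assumes a revised draft is scored against one reference text. Established implementations with smoothing are normally preferred, especially for short sentences.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(candidate, reference, max_n=4):
    """Unsmoothed single-reference BLEU: clipped n-gram precisions + brevity penalty."""
    cand, ref = candidate.split(), reference.split()
    if not cand:
        return 0.0
    log_p = 0.0
    for n in range(1, max_n + 1):
        c_counts, r_counts = ngrams(cand, n), ngrams(ref, n)
        total = sum(c_counts.values())
        # Clip each candidate n-gram count by its count in the reference.
        clipped = sum(min(c, r_counts[g]) for g, c in c_counts.items())
        if total == 0 or clipped == 0:
            return 0.0  # no smoothing: any zero precision gives BLEU = 0
        log_p += math.log(clipped / total) / max_n
    # Penalize candidates shorter than the reference.
    bp = 1.0 if len(cand) > len(ref) else math.exp(1 - len(ref) / len(cand))
    return bp * math.exp(log_p)
```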

To model learning processes, a Markov Chain was employed with the following state set: S: Start Task; Q: Ask Question; F: Receive Feedback; R: Revise and Retry; C: Submit Task.

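The transition probabilities were defined by the matrix

$$P = \left[p_{ij}\right]_{5 \times 5}, \qquad p_{ij} = \Pr\left(s_{t+1} = j \mid s_t = i\right), \qquad i, j \in \{S, Q, F, R, C\},$$

with each row satisfying $\sum_{j} p_{ij} = 1$.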
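Given per-student logs coded into the five states, the transition matrix can be estimated by counting observed transitions and normalizing each row. The sketch makes two illustrative choices that the study does not report. It assumes logs are already coded as sequences of state labels, and it treats states with no outgoing transitions as absorbing.

```python
from collections import defaultdict

# States from the text: Start Task, Ask Question, Receive Feedback, Revise and Retry, Submit Task.
STATES = ("S", "Q", "F", "R", "C")


def estimate_transitions(sequences):
    """Maximum-likelihood estimate p_ij = n_ij / sum_k n_ik from observed state sequences.

    States with no observed outgoing transition (e.g. the terminal C) are treated as
    absorbing (p_ii = 1) so that every row sums to 1; this convention is an assumption.
    """
    counts = {i: defaultdict(int) for i in STATES}
    for seq in sequences:
        for current, nxt in zip(seq, seq[1:]):
            counts[current][nxt] += 1
    matrix = {}
    for i in STATES:
        total = sum(counts[i].values())
        if total == 0:
            matrix[i] = {j: 1.0 if j == i else 0.0 for j in STATES}
        else:
            matrix[i] = {j: counts[i][j] / total for j in STATES}
    return matrix
```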

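Finally, to examine how behaviors predicted learning outcomes, a multiple regression model was constructed:

$$G_i = \beta_0 + \sum_{k=1}^{K} \beta_k B_{ik} + \varepsilon_i,$$

where $G_i$ is the learning gain of student $i$, $B_{ik}$ is the $k$-th behavioral indicator defined in Table 5, and $\varepsilon_i$ is the error term.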

Significance tests of coefficients identified the most influential behavioral predictors of student learning gains.
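The regression coefficients can be obtained from the normal equations. The dependency-free sketch below assumes each row holds one student's behavioral indicators and adds an intercept internally. It returns point estimates only. The coefficient significance tests also need standard errors and the t distribution, which a statistics package such as statsmodels would supply.

```python
def ols(predictor_rows, y):
    """Least-squares coefficients [b0, b1, ..., bK] via the normal equations (X'X) b = X'y.

    predictor_rows: one list of behavioral indicators per student; an intercept column
    is prepended here. Gauss-Jordan elimination with partial pivoting is adequate for a
    handful of predictors but is not a substitute for a statistics package.
    """
    X = [[1.0] + [float(v) for v in row] for row in predictor_rows]
    k = len(X[0])
    # Augmented matrix [X'X | X'y].
    A = [[sum(r[i] * r[j] for r in X) for j in range(k)]
         + [sum(r[i] * yi for r, yi in zip(X, y))] for i in range(k)]
    for col in range(k):
        pivot = max(range(col, k), key=lambda row: abs(A[row][col]))
        if abs(A[pivot][col]) < 1e-12:
            raise ValueError("predictors are perfectly collinear")
        A[col], A[pivot] = A[pivot], A[col]
        for row in range(k):
            if row != col:
                factor = A[row][col] / A[col][col]
                A[row] = [a - factor * b for a, b in zip(A[row], A[col])]
    return [A[i][k] / A[i][i] for i in range(k)]
```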

4.2.3. Subjective Perception and Structural Modeling

The motivation and perception survey was adapted from validated instruments in second-language acquisition research. Items measured five dimensions: AI usefulness, ease of use, engagement, self-efficacy, and satisfaction. Reliability was high, with Cronbach’s at Week 3 and at Week 6. Construct validity was confirmed via confirmatory factor analysis (CFA), with strong fit indices (CFI = 0.95, RMSEA = 0.041).
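Cronbach’s alpha follows from the item variances and the total-score variance:

$$\alpha = \frac{k}{k-1}\left(1 - \frac{\sum_i \sigma_i^2}{\sigma_T^2}\right)$$

A small sketch follows. It assumes complete responses, stored as one list of respondent scores per item.

```python
import statistics as st


def cronbach_alpha(items):
    """items: k lists of item scores, each holding one score per respondent (no missing data)."""
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]  # each respondent's total score
    item_var = sum(st.variance(scores) for scores in items)
    return k / (k - 1) * (1 - item_var / st.variance(totals))
```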

Exploratory Factor Analysis (EFA) was first applied to extract motivational and satisfaction dimensions from questionnaire data. A Structural Equation Model (SEM) was then constructed with the following variables: X, AI instruction (0 = traditional, 1 = AI group); M, AI acceptance (mediator); B, behavioral engagement; S, satisfaction; and G, learning gain.

The SEM equations were specified as

Model estimation was conducted using AMOS or PLS-SEM, and fit indices (e.g., CFI and RMSEA) were evaluated.

This multilayered analysis framework (t-tests, ANCOVA, regression, behavioral simulation, and SEM) allowed the study to answer not only whether AI-assisted instruction is effective but also why it is effective and for whom it is most effective.

4.2.4. Statistical Considerations

Several additional statistical considerations were implemented to strengthen analytic rigor. First, effect sizes (e.g., Cohen’s d) were reported alongside significance tests to convey practical impact. The relatively large observed effect size (Cohen’s ) is interpreted with caution as it may partly reflect alignment between intervention tasks and assessment content. Second, because multiple outcomes (grammar, reading, and writing) were analyzed, both Bonferroni and False Discovery Rate (FDR) corrections were applied; the main findings remained statistically robust. Third, although no a priori power analysis was conducted, a post hoc calculation indicated that with and , the design had power >0.90 to detect medium effects (), supporting adequacy of the sample size. Finally, five participants (3.3%) had missing posttest data; both multiple imputation and complete-case analyses were conducted, and results converged across methods, though residual bias cannot be fully excluded.
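The two multiplicity corrections can be sketched as follows. Bonferroni compares each p-value with α/m, which controls the family-wise error rate. The Benjamini–Hochberg step-up procedure instead controls the false discovery rate. The function names are illustrative.

```python
def bonferroni(pvals, alpha=0.05):
    """Reject H0_i when p_i <= alpha / m (controls the family-wise error rate)."""
    m = len(pvals)
    return [p <= alpha / m for p in pvals]


def benjamini_hochberg(pvals, q=0.05):
    """Benjamini-Hochberg step-up procedure (controls the false discovery rate at q)."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])
    # Find the largest rank k with p_(k) <= k * q / m; reject the k smallest p-values.
    cutoff = 0
    for rank, idx in enumerate(order, start=1):
        if pvals[idx] <= rank * q / m:
            cutoff = rank
    reject = [False] * m
    for idx in order[:cutoff]:
        reject[idx] = True
    return reject
```

For example, consider three outcomes with hypothetical p-values of 0.01, 0.04, and 0.03. Bonferroni rejects only the first, whereas Benjamini–Hochberg rejects all three. This illustrates why FDR control is the less conservative of the two.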

4.2.5. Measurement Validity and Reliability

The primary outcome was a standardized English proficiency test developed by a professional assessment team, covering reading comprehension, language usage, and writing. The test was aligned with both the national College English Teaching Syllabus and CEFR descriptors, ensuring contextual appropriateness for Chinese learners. Internal consistency reliability was strong (Cronbach’s ).

Behavioral indicators were extracted from AI platform logs (e.g., interaction frequency, revision count, and feedback adoption rate). These indicators capture observable engagement patterns in student–AI interaction and serve as proxies for learning processes, though they may not fully reflect underlying cognitive mechanisms.

Although stratified randomization was used, potential confounds such as learner motivation, prior technology familiarity, and novelty effects were not directly measured or statistically adjusted. These limitations are acknowledged and will be addressed in future studies through explicit measurement and inclusion of control variables.

5. Experimental Results and Comparative Analysis

5.1. Quantitative Outcome Analysis

To systematically evaluate the effectiveness of Generative-AI-assisted instruction in college English classrooms, this study conducted a comparative analysis of students’ English proficiency before and after the intervention. Standardized language tests were administered, covering three core modules: language knowledge, reading comprehension, and writing performance. All test scores were normalized to a 100-point scale. Test items were designed by a centralized assessment team, and grading was conducted via a double-blind method by three independent raters, whose scores were averaged to ensure consistency and reliability.

The preliminary results indicate a significant advantage of Generative-AI-assisted instruction over traditional teaching. Students in the AI group improved from a pretest mean of 67.21 to a posttest mean of 82.46, an average gain of 15.25 points. In contrast, the control group improved from 68.02 to 74.60, a gain of only 6.58 points. An independent samples t-test revealed that the difference in gain scores between the two groups was statistically significant (), suggesting that Generative AI can be effective in language instruction. In terms of relative improvement, the AI group achieved a 22.7% average increase, 2.4 times greater than that of the control group.
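The gains and relative improvements can be reproduced from the reported group means alone. The sketch assumes relative improvement is the gain divided by the pretest mean, which is consistent with the 22.7% figure. Computed this way, the control group's relative gain is about 9.7% and the ratio is about 2.35. The reported 2.4 may therefore rest on a different averaging, for example over individual students, which the text does not detail.

```python
# Group means as reported in the text: (pretest mean, posttest mean).
reported = {"AI": (67.21, 82.46), "control": (68.02, 74.60)}

summary = {}
for group, (pre, post) in reported.items():
    gain = post - pre
    relative = 100 * gain / pre  # assumed definition: gain as a percentage of the pretest mean
    summary[group] = (round(gain, 2), round(relative, 1))
    print(f"{group}: gain = {summary[group][0]} points, relative improvement = {summary[group][1]}%")

# Ratio of unrounded relative improvements (AI vs. control).
ratio = ((reported["AI"][1] - reported["AI"][0]) / reported["AI"][0]) / (
    (reported["control"][1] - reported["control"][0]) / reported["control"][0])
print(f"ratio of relative improvements = {ratio:.2f}")
```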

A breakdown by sub-component revealed that the AI group made the most substantial progress in writing. Its average writing score increased by 6.08 points, significantly more than the 2.44-point gain in the control group. The AI system’s functions, such as language generation, grammar suggestions, and sentence expansion, likely supported a real-time “feedback–revision–reconstruction” loop that strengthened productive language skills. The AI group also outperformed the control group in reading and grammar.

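To control for baseline differences in language proficiency, an Analysis of Covariance (ANCOVA) was conducted with the pretest score as a covariate:

$$Y_i = \beta_0 + \beta_1 T_i + \beta_2 X_i + \varepsilon_i$$

where $Y_i$ is the posttest score of student $i$, $T_i$ is the treatment indicator (1 = Generative AI, 0 = traditional) with treatment effect $\beta_1$, $X_i$ is the pretest score, and $\varepsilon_i$ is the error term. The treatment effect remained significant after adjustment, and the partial η² indicated a stable, medium effect size.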
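A minimal sketch of how such an ANCOVA can be estimated: regress the posttest score on a treatment indicator and the pretest score by ordinary least squares, then obtain partial η² for the treatment term by comparing against a reduced model that drops the indicator. The data are synthetic, and the hand-written solver stands in for a statistics package such as statsmodels; this is not the authors' analysis code.

```python
def solve(A, b):
    """Solve the linear system A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [list(row) + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))) / M[i][i]
    return x

def ols(design, y):
    """Ordinary least squares via the normal equations; returns (coefficients, SSE)."""
    k = len(design[0])
    xtx = [[sum(row[i] * row[j] for row in design) for j in range(k)] for i in range(k)]
    xty = [sum(row[i] * yi for row, yi in zip(design, y)) for i in range(k)]
    beta = solve(xtx, xty)
    fitted = [sum(b * v for b, v in zip(beta, row)) for row in design]
    sse = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    return beta, sse

def ancova(post, treated, pre):
    """Adjusted treatment effect and its partial eta squared.

    Full model:    post = b0 + b1 * treated + b2 * pre + e
    Reduced model: post = b0 + b2 * pre + e   (treatment term dropped)
    partial eta^2 = SS_treat / (SS_treat + SS_error) = (SSE_red - SSE_full) / SSE_red
    """
    full = [[1.0, t, x] for t, x in zip(treated, pre)]
    reduced = [[1.0, x] for x in pre]
    beta, sse_full = ols(full, post)
    _, sse_reduced = ols(reduced, post)
    return beta[1], (sse_reduced - sse_full) / sse_reduced

# Synthetic data: true treatment effect 8, pretest slope 0.9, small alternating noise.
pre = [60, 65, 70, 75, 62, 68, 72, 78]
treated = [1, 1, 1, 1, 0, 0, 0, 0]
noise = [0.5, -0.5, 0.5, -0.5, 0.5, -0.5, 0.5, -0.5]
post = [10 + 8 * t + 0.9 * x + e for t, x, e in zip(treated, pre, noise)]
effect, partial_eta_sq = ancova(post, treated, pre)
```

Because the treatment effect is estimated with the pretest score in the model, a group that happened to start higher does not receive credit for its head start.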

Further stratified analysis based on performance levels revealed important patterns. Students were divided into the top quartile, the middle 50%, and the bottom quartile. AI-assisted instruction had a particularly strong compensatory effect on lower-achieving students.
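The stratification can be sketched as follows, assuming (this is not stated in the text) that bands are defined by quartile cut points of the pooled pretest scores and that each band's mean gain is then compared between groups. Student records are hypothetical; `statistics.quantiles` uses its default "exclusive" method.

```python
from statistics import mean, quantiles

def proficiency_band(pre, q1, q3):
    """Assign a band from pretest quartile cut points (assumed banding rule)."""
    if pre <= q1:
        return "bottom quartile"
    if pre >= q3:
        return "top quartile"
    return "middle 50%"

def gains_by_band(students):
    """students: (group, pretest, posttest) tuples; returns mean gain per (group, band)."""
    pretests = [pre for _, pre, _ in students]
    q1, _, q3 = quantiles(pretests, n=4)  # cut points from the pooled sample
    cells = {}
    for group, pre, post in students:
        key = (group, proficiency_band(pre, q1, q3))
        cells.setdefault(key, []).append(post - pre)
    return {key: mean(gains) for key, gains in cells.items()}

# Hypothetical records.
students = [
    ("AI", 55, 75), ("AI", 65, 80), ("AI", 75, 86), ("AI", 85, 92),
    ("control", 60, 66), ("control", 70, 77), ("control", 80, 86), ("control", 90, 94),
]
band_gains = gains_by_band(students)
```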

Detailed descriptive statistics are consolidated in Tables 3–9; the main text reports only key effect sizes and inferential statistics to avoid duplication while preserving reproducibility.

Table 6.

Group performance comparison (pretest–posttest).

Table 7.

Subskill improvement comparison.

Table 8.

Improvement by student proficiency level.

Table 9.

Thematic coding and frequency of student interview feedback (n = 15).

While the AI group showed significantly greater gains, effect sizes should be interpreted with caution. The unusually large Cohen’s d may partly reflect alignment between test items and AI-supported tasks, raising the possibility of task-specific effects rather than fully generalizable proficiency improvements. Stratified analysis also indicates heterogeneous benefits: the strongest compensatory advantage occurred among lower-performing students, whereas gains for high achievers were smaller. This nuance highlights both the promise and the boundary conditions of Generative-AI-assisted instruction.

A posttest score distribution analysis also showed that the AI group's scores shifted toward the upper end of the scale, concentrating in the 80–90 range, whereas the control group's scores were more dispersed and centered around 70–75. Notably, eight students in the AI group scored above 90 (up from one in the pretest), compared with only one student in the control group.
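To make statements about distribution shape concrete, the sketch below computes the Fisher–Pearson skewness coefficient (g1) and the number of scores above 90. By convention, a positive value indicates a longer right tail and a negative value a longer left tail, so scores bunched near the top of a bounded scale typically yield negative skewness. The posttest scores are hypothetical.

```python
from statistics import mean, pstdev

def skewness(scores):
    """Fisher-Pearson coefficient of skewness g1, using population moments."""
    m = mean(scores)
    s = pstdev(scores)
    return sum((x - m) ** 3 for x in scores) / (len(scores) * s ** 3)

def count_above(scores, threshold=90):
    """Number of scores strictly above the threshold."""
    return sum(1 for x in scores if x > threshold)

# Hypothetical posttest scores: most fall in 80-90, with a tail toward lower scores.
ai_post = [62, 74, 81, 83, 84, 85, 86, 87, 88, 91]
g1 = skewness(ai_post)
high_scorers = count_above(ai_post)
```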

In conclusion, the overall gains, subskill improvements, covariate-adjusted analyses, distribution patterns, and proficiency stratification together indicate that Generative AI provides a measurable instructional advantage. In particular, AI-assisted instruction enhanced productive writing skills, narrowed performance disparities, and supported the development of lower-achieving students. These effects are unlikely to stem from the technology alone; they appear to depend critically on task design, teacher facilitation, and responsible integration. Future research should refine human–AI collaborative mechanisms and work toward a triadic ecosystem of technology–teacher–learner synergy.

5.2. Behavioral Analytics and Interaction Modeling

To explore the behavioral mechanisms underlying the Generative AI intervention, multidimensional modeling and pattern analysis were conducted on the usage logs of the 75 students in the experimental group. Six behavioral variables were extracted, including interaction frequency, duration, adoption rate, and self-revision rate; they are summarized in Table 10. The goal was to identify differences in behavioral styles, trace how interaction paths evolved, and estimate the contribution of each behavioral variable to learning outcomes.

Table 10.

Behavioral variable summary (n = 75).

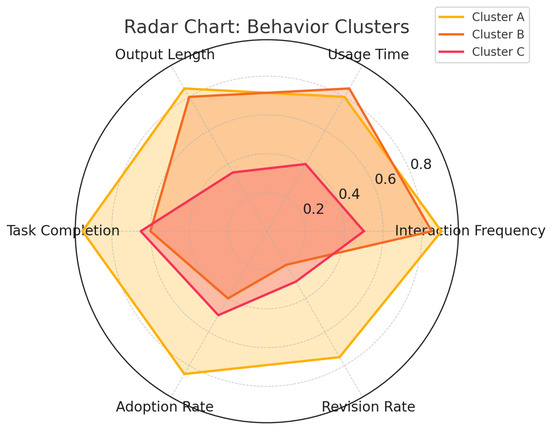

5.2.1. Learner Behavioral Clustering

Using K-means clustering (k = 3), students were categorized into three behavioral groups: High-Frequency High-Efficiency (Cluster A), High-Frequency Low-Efficiency (Cluster B), and Low-Frequency Conservative (Cluster C). As shown in Figure 3, Cluster A scored highest on all behavioral metrics, including interaction frequency (z = 0.90), task completion rate (0.95), AI suggestion adoption rate (0.85), and self-revision rate (0.75). Cluster B showed high usage frequency but low adoption (0.40) and revision (0.20) rates, suggesting surface-level engagement rather than deep learning. Cluster C scored low on all metrics, indicating minimal engagement.

Figure 3.

Behavioral clustering of students based on AI interaction metrics.
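The clustering step can be sketched as z-standardization of each behavioral variable followed by Lloyd's K-means with k = 3. The feature set and values are hypothetical, and the deterministic farthest-first (maximin) seeding is a simplifying assumption chosen so the example is reproducible; the seeding used in the study is not reported, and library implementations typically use k-means++.

```python
from statistics import mean, pstdev

def zscore_columns(rows):
    """Standardize each feature column to mean 0 and (population) SD 1."""
    cols = list(zip(*rows))
    stats = [(mean(c), pstdev(c) or 1.0) for c in cols]  # guard against zero SD
    return [[(v - m) / s for v, (m, s) in zip(row, stats)] for row in rows]

def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def init_farthest_first(points, k):
    """Maximin seeding: start from the first point, then repeatedly add the
    point farthest from every centroid chosen so far."""
    centroids = [list(points[0])]
    while len(centroids) < k:
        nxt = max(points, key=lambda p: min(sq_dist(p, c) for c in centroids))
        centroids.append(list(nxt))
    return centroids

def kmeans(points, k=3, max_iter=100):
    """Lloyd's algorithm: alternate nearest-centroid assignment and centroid update."""
    centroids = init_farthest_first(points, k)
    labels = []
    for _ in range(max_iter):
        labels = [min(range(k), key=lambda j: sq_dist(p, centroids[j])) for p in points]
        updated = []
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            # Keep the previous centroid if a cluster loses all its members.
            updated.append([mean(col) for col in zip(*members)] if members else centroids[j])
        if updated == centroids:
            break
        centroids = updated
    return labels, centroids

# Hypothetical features: interaction frequency, completion, adoption, revision rate.
rows = [
    [30, 0.95, 0.85, 0.75], [28, 0.90, 0.80, 0.70],  # frequent, efficient
    [29, 0.60, 0.40, 0.20], [31, 0.55, 0.35, 0.25],  # frequent, low adoption
    [5, 0.30, 0.20, 0.10], [6, 0.35, 0.25, 0.15],    # infrequent, conservative
]
labels, centroids = kmeans(zscore_columns(rows), k=3)
```

Standardizing first matters here: without it, raw interaction counts (tens) would dominate the rate variables (0 to 1) in the distance calculation.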

Critical interpretation: Although the clustering results are internally consistent (silhouette score = 0.62), they should be interpreted as exploratory typologies rather than definitive categories, as their stability across different cohorts and tasks cannot be guaranteed. Similarly, the Markov chain model of interaction pathways was used as an explanatory tool, but its key assumptions (e.g., stationarity and ergodicity) were not formally tested. Future studies should explicitly validate these assumptions, replicate the analyses across samples, and assess whether transition probabilities generalize across tasks, time, and instructional contexts. The “high-frequency but low-efficiency” pattern observed in Cluster B highlights the risks of surface engagement, but it may also reflect situational factors, such as time pressure or misaligned prompts, rather than intrinsic limitations of Generative AI.

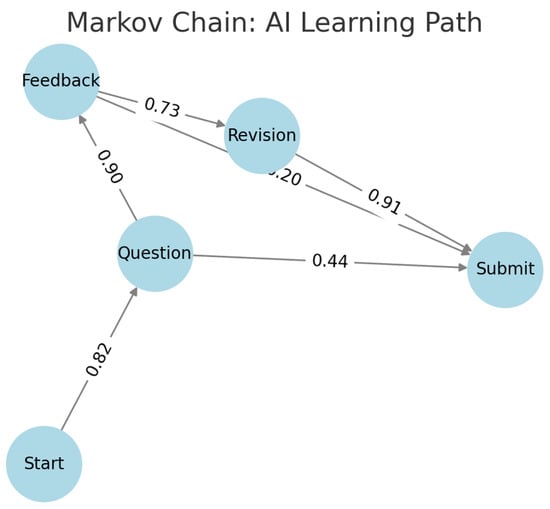
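The silhouette score cited above averages, over all students, how much closer each one is to its own cluster than to the nearest other cluster. A generic sketch with Euclidean distance and toy points (not the study's data or code) is shown below; values near 1 indicate compact, well-separated clusters, and negative values indicate likely misassignment.

```python
from math import dist

def silhouette(points, labels):
    """Mean silhouette coefficient with Euclidean distance (needs >= 2 clusters).

    For each point, a = mean distance to its own cluster, b = lowest mean distance
    to any other cluster, and s = (b - a) / max(a, b). Singletons get s = 0.
    """
    clusters = set(labels)
    scores = []
    for i, (p, own) in enumerate(zip(points, labels)):
        same = [dist(p, q) for j, (q, lab) in enumerate(zip(points, labels))
                if lab == own and j != i]
        if not same:
            scores.append(0.0)
            continue
        a = sum(same) / len(same)
        b = min(
            sum(dist(p, q) for q, lab in zip(points, labels) if lab == other) / labels.count(other)
            for other in clusters if other != own
        )
        scores.append((b - a) / max(a, b))
    return sum(scores) / len(scores)

# Toy 2-D example: two tight, well-separated groups.
points = [(0, 0), (0, 1), (10, 0), (10, 1)]
well_separated = silhouette(points, [0, 0, 1, 1])  # about 0.90
mislabelled = silhouette(points, [0, 1, 0, 1])     # negative
```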

5.2.2. AI Learning Pathway Efficiency Analysis

To further investigate the internal mechanisms of student–AI interaction, this study employed Markov chain modeling to analyze task execution state transitions (see Figure 4). The state set comprised the following: Start Task, Raise Question, Receive Feedback, Revise Content, and Submit Output.

Figure 4.

Markov chain model of student–AI interaction pathways.
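Under a first-order Markov assumption, the transition probabilities can be estimated by counting observed state-to-state moves in each logged sequence and normalizing each row (the maximum-likelihood estimate). The state names follow the text; the four sequences are hypothetical.

```python
from collections import Counter, defaultdict

STATES = ["Start Task", "Raise Question", "Receive Feedback", "Revise Content", "Submit Output"]

def transition_matrix(sequences):
    """Maximum-likelihood estimate of a first-order transition matrix:
    P(next | current) = count(current -> next) / count(current -> anything)."""
    counts = defaultdict(Counter)
    for seq in sequences:
        for current, nxt in zip(seq, seq[1:]):
            counts[current][nxt] += 1
    matrix = {}
    for current in STATES:
        total = sum(counts[current].values())
        # States never left (e.g., the terminal Submit Output) keep an all-zero row.
        matrix[current] = {nxt: counts[current][nxt] / total if total else 0.0 for nxt in STATES}
    return matrix

# Hypothetical logged sequences for four task attempts.
S, Q, F, R, O = STATES
sequences = [
    [S, Q, F, R, O],
    [S, Q, O],  # question followed directly by submission
    [S, Q, F, R, O],
    [S, Q, F, O],
]
P = transition_matrix(sequences)
```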

Cluster A primarily followed the canonical pathway Start → Question → Feedback → Revision → Submit, with a completion rate of 68%. Transition probabilities along this pathway were relatively high, indicating a stable, linear, and logically coherent process of AI-supported learning.

By contrast, Cluster B exhibited substantial path skipping. In particular, the transition probability from Raise Question directly to Submit Output indicates that nearly half of these learners submitted work immediately after posing a question, without receiving feedback or revising. This type of “dialogue bypass” weakens the instructional value of AI interaction by excluding the feedback–revision loop that is central to deep learning. Nevertheless, such behavior may also reflect contextual factors (e.g., time constraints, weak prompting skills, or task fatigue), suggesting that pathway efficiency depends not only on the technology but also on learner motivation and task design.
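Given a transition matrix, the probability of a complete pathway is the product of its step-wise transition probabilities, which makes the cost of a high Question → Submit probability explicit. The sketch uses illustrative probabilities, not the study's estimates, and short state labels chosen for readability. Reading a "completion rate" as the share of sequences that match the canonical pathway exactly is likewise an assumption.

```python
from math import prod

# Illustrative transition probabilities only (not the study's estimates).
P = {
    "Start": {"Question": 0.9, "Submit": 0.1},
    "Question": {"Feedback": 0.5, "Submit": 0.5},
    "Feedback": {"Revision": 0.6, "Submit": 0.4},
    "Revision": {"Submit": 1.0},
}

CANONICAL = ("Start", "Question", "Feedback", "Revision", "Submit")
BYPASS = ("Start", "Question", "Submit")

def path_probability(P, path):
    """Probability of following `path` step by step, given that it starts at path[0]."""
    return prod(P[a].get(b, 0.0) for a, b in zip(path, path[1:]))

def share_following(sequences, path):
    """Fraction of observed sequences that match `path` exactly."""
    return sum(tuple(s) == path for s in sequences) / len(sequences)

canonical_p = path_probability(P, CANONICAL)  # 0.9 * 0.5 * 0.6 * 1.0
bypass_p = path_probability(P, BYPASS)        # 0.9 * 0.5
observed_share = share_following([CANONICAL, BYPASS, CANONICAL, CANONICAL], CANONICAL)
```

With a Question → Submit probability of 0.5, the bypass path becomes more likely than the full feedback–revision loop, even though every step of the canonical path is individually plausible.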

5.2.3. Behavioral Variables as Predictors of Learning Gains

5.2.4. Subjective Feedback Analysis

To comprehensively assess the impact of Generative-AI-assisted instruction on students’ learning experience, we employed Likert-scale surveys and in-depth interviews, focusing on five key dimensions: learning motivation, technological acceptance, system satisfaction, self-efficacy, and task engagement. Teacher observations were also integrated to refine key trends and instructional insights.

As shown in Table 11, students in the experimental group reported significant improvements across multiple dimensions between Week 3 and Week 6. Learning motivation, technology acceptance, self-efficacy, and task engagement all showed statistically significant gains, while system satisfaction remained consistently high. These results align with the quantitative proficiency gains, suggesting that AI-assisted instruction enhanced both students’ confidence and the perceived value of learning.

Table 11.

Changes in student feedback scores across dimensions (n = 75).
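The statistical test behind the Week 3 versus Week 6 comparison is not specified; a paired-samples t-test on per-student scores is one common choice, and a Wilcoxon signed-rank test is a frequent alternative for Likert data. The sketch below computes the paired t statistic and its degrees of freedom for hypothetical scores. A p-value additionally requires the t distribution, for which a library routine such as SciPy's `scipy.stats.ttest_rel` would normally be used.

```python
from math import sqrt
from statistics import mean, stdev

def paired_t(before, after):
    """Paired-samples t statistic and degrees of freedom for (after - before)."""
    diffs = [a - b for a, b in zip(after, before)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / sqrt(n))  # mean difference over its standard error
    return t, n - 1

# Hypothetical per-student 5-point Likert means for one dimension (e.g., motivation).
week3 = [3.2, 3.5, 2.8, 3.0, 3.6, 3.1]
week6 = [3.9, 3.8, 3.4, 3.5, 4.0, 3.9]
t, df = paired_t(week3, week6)
```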

Complementing these survey results, Table 12 summarizes themes from student interviews. Nearly half of the participants described highly positive experiences, emphasizing autonomy and continuous engagement with AI support. Others expressed neutral views, using AI primarily as a supplementary tool while retaining a preference for teacher-led instruction. A minority reported negative experiences, such as skipping feedback or outsourcing tasks, reflecting risks of over-reliance and surface-level interaction.

Table 12.

Categorized summary of student interview feedback (n = 15).

Interview transcripts were analyzed using a two-coder inductive thematic approach. Open, axial, and selective coding were conducted, and the codebook was iteratively refined on a 20% development set. Two trained coders then independently coded the full sample and achieved high inter-rater reliability, assessed with Cohen’s κ for each theme. Discrepancies were resolved through adjudication, and theme frequencies were reported to contextualize exemplar quotations (see Table 9).
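For reference, Cohen's κ compares the coders' observed agreement p_o with the agreement p_e expected by chance from each coder's label frequencies: κ = (p_o − p_e) / (1 − p_e). The sketch below uses hypothetical codes; computing κ per theme, for example by coding each segment as theme present or absent, is an assumption about how per-theme values might be obtained.

```python
from collections import Counter

def cohens_kappa(coder1, coder2):
    """Cohen's kappa for two coders' nominal labels on the same segments.
    Undefined when chance agreement p_e equals 1 (e.g., both use one label)."""
    n = len(coder1)
    p_o = sum(a == b for a, b in zip(coder1, coder2)) / n
    c1, c2 = Counter(coder1), Counter(coder2)
    p_e = sum(c1[label] * c2[label] for label in c1.keys() | c2.keys()) / n ** 2
    return (p_o - p_e) / (1 - p_e)

# Hypothetical codes assigned by two coders to six interview segments.
coder_a = ["positive", "positive", "neutral", "negative", "positive", "neutral"]
coder_b = ["positive", "neutral", "neutral", "negative", "positive", "neutral"]
kappa = cohens_kappa(coder_a, coder_b)  # (5/6 - 13/36) / (1 - 13/36) = 17/23
```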

Although the qualitative data enriched the interpretation, systematic coding and inter-rater reliability checks were applied to mitigate anecdotal bias. Moreover, the improvements in motivation and self-efficacy observed in the surveys were triangulated with interview themes, strengthening validity. Nonetheless, reliance on self-reports entails risks of social desirability bias and subjective interpretation.

6. Discussion

This study empirically examined the application of Generative AI in college English instruction. The results show that AI-assisted teaching significantly enhanced students’ language performance, learning behaviors, and classroom engagement, yet they also revealed risks and limitations. The discussion focuses on three core dimensions: learner behavior, the evolving role of teachers, and boundary conditions for blended instruction.

First, AI reshaped student learning behaviors by fostering a task-driven cycle of questioning, feedback, revision, and output. Learners who actively adopted feedback and engaged in self-revision achieved the largest gains, confirming the value of quality-oriented interaction. However, risks of cognitive offloading and reduced self-regulation suggest that short-term gains may not translate into sustainable autonomy. AI should serve as a scaffold for cognitive construction rather than a substitute.

Second, the teacher’s role is shifting from knowledge transmission to instructional design and behavioral facilitation. Effective task design, boundaries for AI use, and timely pedagogical interventions proved crucial to student outcomes. Teachers must develop digital literacy and data fluency while acting as safeguards against over-automation, ensuring that human judgment and educational values mediate AI adoption.

Third, while AI offers efficiency and feedback advantages, traditional teaching remains essential for tasks requiring cultural interpretation, discourse depth, and emotional connection. A blended model—AI for repetitive or procedural tasks and teachers for critical discourse—appears most effective. Future work should conceptualize blended learning as dynamic co-construction, not merely task division.

Finally, the study’s limitations include its single-institution sample, short duration, and reliance on proxy behavioral indicators. Ethical concerns around informed consent, data privacy, and AI dependency also warrant closer attention. Future research should pursue longer-term and cross-institutional designs, employ multimodal behavioral measures, and develop governance frameworks that align technical gains with equity, autonomy, and critical capacity.

In sum, Generative AI provides measurable instructional benefits but also introduces new pedagogical and ethical challenges. Progress lies not only in technical refinement but in the co-evolution of human and AI roles, guided by theory, ethics, and reflective pedagogy.

7. Conclusions

This study developed a task-driven, human–AI collaborative framework and empirically evaluated Generative AI in college English instruction. The results showed that AI-assisted teaching significantly improved language proficiency, especially writing skills, and that quality-oriented engagement (feedback adoption and revision) outweighed mere frequency of use. Distinct behavioral profiles further revealed that strategic learners benefited most, while shallow interactions yielded limited gains.

Subjective feedback indicated higher motivation, acceptance, and confidence under AI-supported learning, though risks of dependency and superficial use were noted. Teachers emphasized the importance of boundaries and reflective engagement.

Theoretically, this work contributes to debates on human–AI symbiosis by highlighting interaction quality as a key determinant of learning gains. Methodologically, it integrates cognitive outcomes, behavioral logs, and learner perceptions into a unified model. Practically, it offers a replicable AI teaching paradigm with implications for teacher roles, governance, and ethical safeguards.

Future research should expand samples, extend duration, and address long-term and ethical dimensions to ensure that efficiency gains do not compromise autonomy or critical capacities.

Author Contributions

Conceptualization, C.L. and J.L.; methodology, C.L.; software, J.L.; validation, C.L. and J.L.; formal analysis, C.L.; investigation, C.L. and J.L.; resources, C.L.; data curation, J.L.; writing—original draft preparation, C.L.; writing—review and editing, C.L. and J.L.; visualization, J.L.; supervision, C.L.; project administration, C.L.; funding acquisition, C.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Key Research Project on Economic and Social Development of Heilongjiang Province (Foreign Language Special Program) (WY202561) and in part by the Special Research Project of the Heilongjiang Higher Education Society on the Third Plenary Session of the 20th CPC Central Committee and the 2024 National Education Conference (24GJZXC040).

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Institutional Review Board (Ethics Committee) of Harbin University of Science and Technology (approval date: 6 May 2025).

Informed Consent Statement

Written informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Due to privacy and ethical restrictions, the datasets generated and/or analyzed during the current study are not publicly available.

Acknowledgments

We thank the participating students and course instructors for their cooperation and the administrative and IT support teams for assistance with data collection and management.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Cingillioglu, I.; Gal, U.; Prokhorov, A. AI-experiments in education: An AI-driven randomized controlled trial for higher education research. Educ. Inf. Technol. 2024, 29, 19649–19677.

- Bates, T.; Cobo, C.; Mariño, O.; Wheeler, S. Can artificial intelligence transform higher education? Int. J. Educ. Technol. High. Educ. 2020, 17, 42.

- Airaj, M. Ethical artificial intelligence for teaching-learning in higher education. Educ. Inf. Technol. 2024, 29, 17145–17167.

- Wang, Y.; Jiang, X. AI for education: Trends and insights. Innovation 2025, 6, 100872.

- Rawas, S. ChatGPT: Empowering lifelong learning in the digital age of higher education. Educ. Inf. Technol. 2024, 29, 6895–6908.

- Baig, M.I.; Yadegaridehkordi, E. ChatGPT in the higher education: A systematic literature review and research challenges. Int. J. Educ. Res. 2024, 127, 102411.

- Swain, R. On the teaching and evaluation of experiential learning in a conventional university setting. Br. J. Educ. Technol. 1991, 22, 4–11.

- Chernikova, O.; Sommerhoff, D.; Stadler, M.; Holzberger, D.; Nickl, M.; Seidel, T.; Kasneci, E.; Kuechemann, S.; Kuhn, J.; Fischer, F.; et al. Personalization through adaptivity or adaptability? A meta-analysis on simulation-based learning in higher education. Educ. Res. Rev. 2025, 46, 100662.

- Hashmi, N.; Bal, A.S. Generative AI in higher education and beyond. Bus. Horizons 2024, 67, 607–614.

- Essa, A. The future of postsecondary education in the age of AI. Educ. Sci. 2024, 14, 326.

- Fernández, A.; Gómez, B.; Binjaku, K.; Meçe, E.K. Digital transformation initiatives in higher education institutions: A multivocal literature review. Educ. Inf. Technol. 2023, 28, 12351–12382.

- Kosmyna, N.; Hauptmann, E.; Yuan, Y.T.; Situ, J.; Liao, X.H.; Beresnitzky, A.V.; Braunstein, I.; Maes, P. Your Brain on ChatGPT: Accumulation of Cognitive Debt when Using an AI Assistant for Essay Writing Task. arXiv 2025, arXiv:2506.08872.

- Lee, H.P.H.; Rintel, S.; Banks, R.; Wilson, N. The Impact of Generative AI on Critical Thinking. In Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, 26 April–1 May 2025.

- Jose, B.; Cherian, J.; Verghis, A.M.; Varghise, S.M.; Joseph, M.S.S. The cognitive paradox of AI in education: Between enhancement and erosion. Front. Psychol. 2025, 16, 1550621.

- Miao, F.; Holmes, W. Guidance for Generative AI in Education and Research; UNESCO: Paris, France, 2023; ISBN 978-92-3-100612-8.

- Rowe, N.C.; Galvin, T.P. An authoring system for intelligent procedural-skill tutors. IEEE Intell. Syst. Their Appl. 1998, 13, 61–69.

- Tang, Q.; Deng, W.; Huang, Y.; Wang, S.; Zhang, H. Can Generative Artificial Intelligence be a Good Teaching Assistant?—An Empirical Analysis Based on Generative AI-Assisted Teaching. J. Comput. Assist. Learn. 2025, 41, e70027.

- Hwang, M.; Jeens, R.; Lee, H.K. Exploring learner prompting behavior and its effect on ChatGPT-assisted English writing revision. Asia-Pac. Educ. Res. 2025, 34, 1157–1167.

- Lin, Z. Techniques for supercharging academic writing with generative AI. Nat. Biomed. Eng. 2024, 9, 1–6.

- Zhang, X.; Sun, J.; Deng, Y. Design and application of intelligent classroom for English language and literature based on artificial intelligence technology. Appl. Artif. Intell. 2023, 37, 2216051.

- Zheng, H.; Miao, J. Construction of college english teaching model in the era of artificial intelligence. J. Phys. Conf. Ser. 2021, 1852, 032017.

- Thomas, A.; Menon, A.; Boruff, J.; Rodriguez, A.M.; Ahmed, S. Applications of social constructivist learning theories in knowledge translation for healthcare professionals: A scoping review. Implement. Sci. 2014, 9, 54.

- Nipa, T.J.; Kermanshachi, S. Assessment of open educational resources (OER) developed in interactive learning environments. Educ. Inf. Technol. 2020, 25, 2521–2547.

- Salomon, G. Distributed cognition: Psychological and educational considerations. Cogn. Instr. 1993, 10, 1–31.

- Gibson, D.; Kovanovic, V.; Ifenthaler, D.; Dexter, S.; Feng, S. Learning theories for artificial intelligence promoting learning processes. Br. J. Educ. Technol. 2023, 54, 1125–1146.

- Zhang, R.; Meng, Z.; Wang, H.; Liu, T.; Wang, G.; Zheng, L.; Wang, C. Hyperscale data analysis oriented optimization mechanisms for higher education management systems platforms with evolutionary intelligence. Appl. Soft Comput. 2024, 155, 111460.

- Seeber, I.; Bittner, E.; Briggs, R.O.; de Vreede, T.; de Vreede, G.J.; Elkins, A.; Maier, R.; Merz, A.B.; Oeste-Reiß, S.; Randrup, N.; et al. Machines as teammates: A research agenda on AI in team collaboration. Inf. Manag. 2020, 57, 103174.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).