SCEditor-Web: Bridging Model-Driven Engineering and Generative AI for Smart Contract Development

Abstract

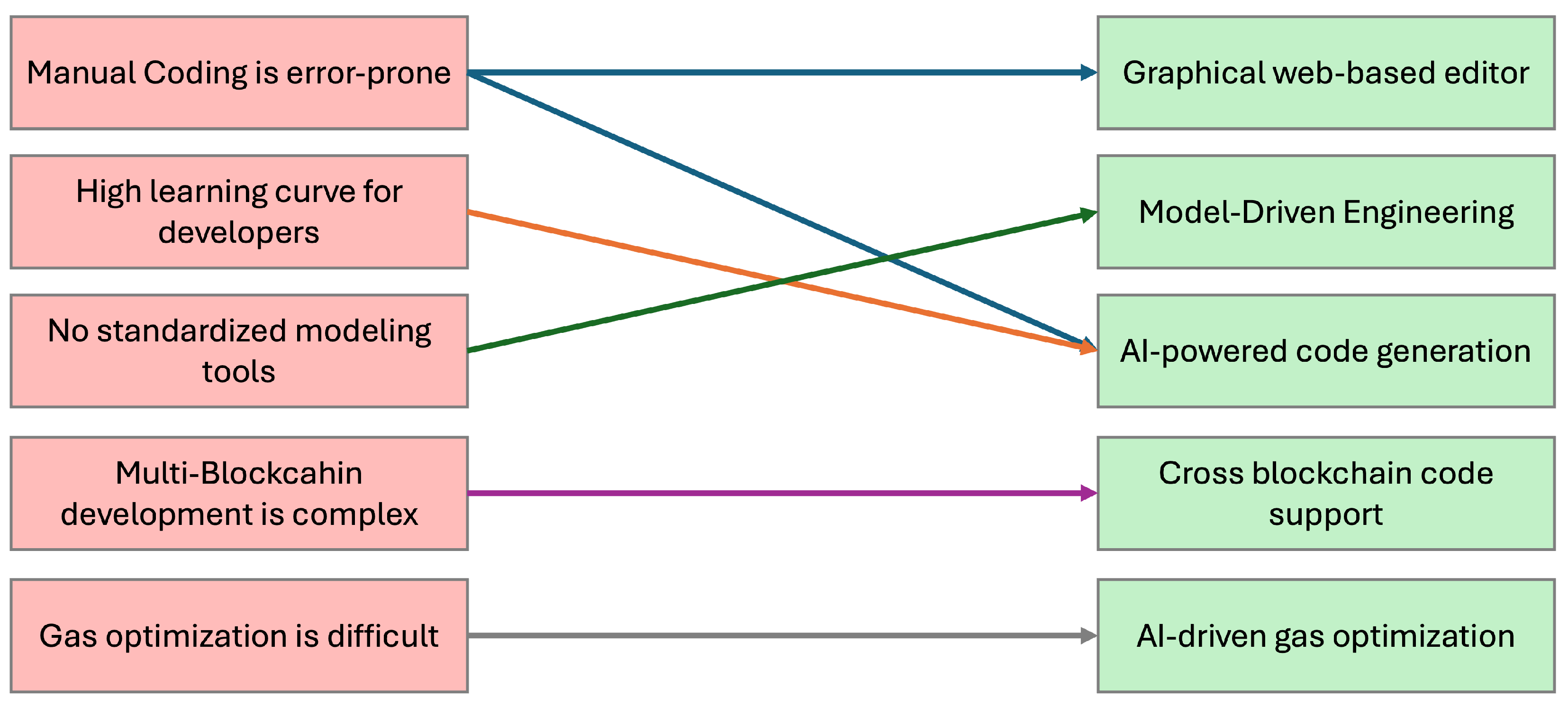

1. Introduction

2. Related Work

2.1. Model-Driven and Visual Smart Contract Development

2.2. AI-Based Smart Contract Development and Generation

2.3. Comparative Analysis

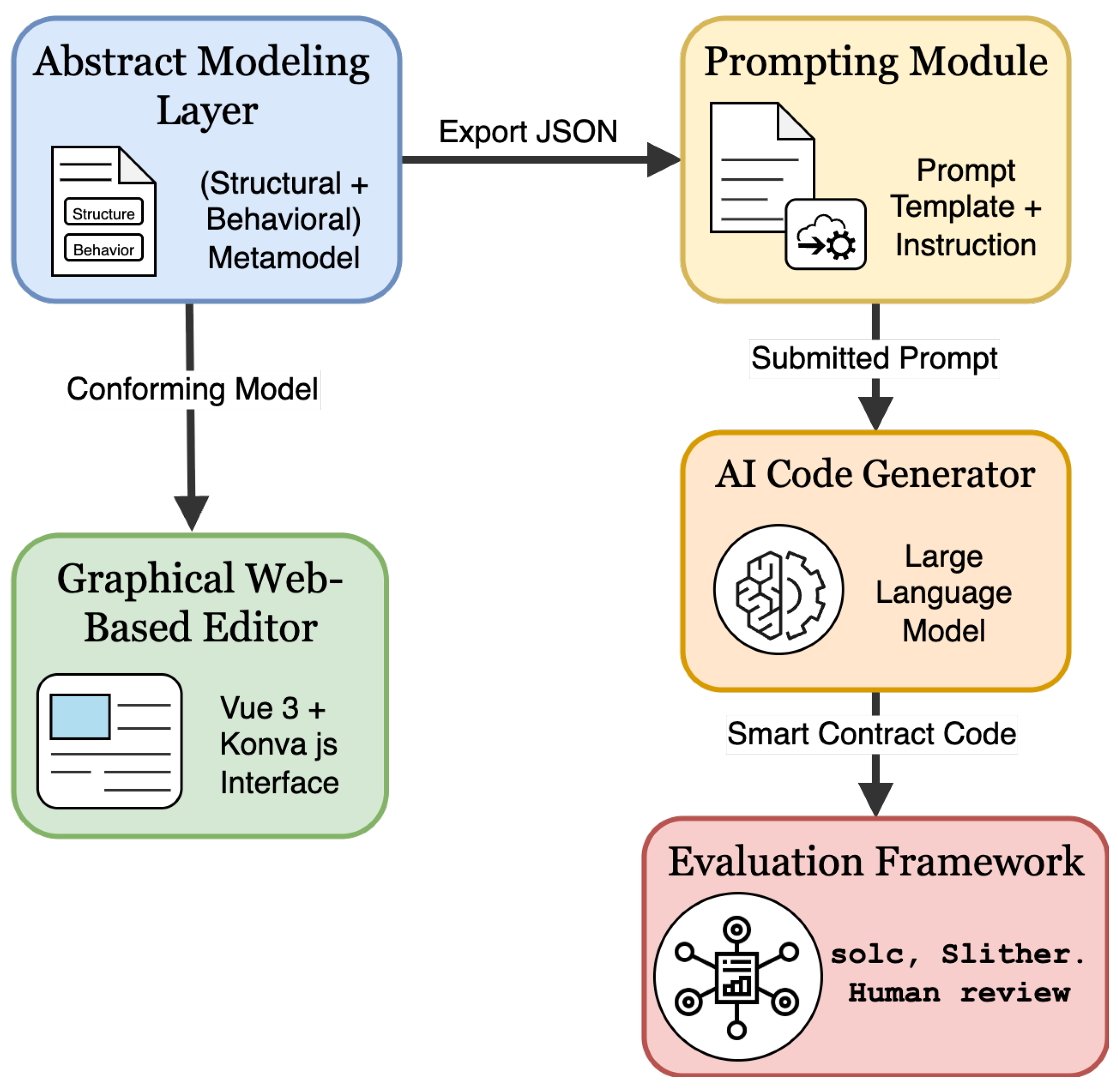

3. System Overview and Methodology

- Abstract Modeling Layer: A platform-independent metamodel for abstract contract modeling;

- Graphical Web-Based Editor: A web-based tool for designing the structural and behavioral aspects of smart contracts;

- AI Code Generator: A code generation engine driven by large language models;

- Prompting Module: A zero-shot prompt formulation strategy adapted for structured data;

- Evaluation Framework: A selected dataset for cross-model evaluation of generated code.

3.1. Model-Driven Smart Contract Design

1. Support platform independence, enabling eventual deployment across heterogeneous blockchain systems;

2. Facilitate structured graphical modeling and formal reasoning;

3. Ensure JSON-level compatibility required for AI-driven code generation.

| Listing 1. IF Statement JSON representation. |

| { "type": "IfStatement", "condition": { "left": "isApproved", "operator": "==", "right": "true" }, "body": [ { "type": "EmitStatement", "event": "ApprovalConfirmed" } ] } |
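To make the structure of Listing 1 concrete, the following minimal Python sketch walks the JSON node and prints the Solidity-like statement it describes. The `render_statement` helper is hypothetical and is not part of SCEditor-Web, where code is produced by an LLM rather than by a rule-based renderer; it only illustrates how the `type`, `condition`, and `body` fields nest.

```python
import json

# Listing 1, reproduced as a JSON string.
LISTING_1 = """
{ "type": "IfStatement",
  "condition": { "left": "isApproved", "operator": "==", "right": "true" },
  "body": [ { "type": "EmitStatement", "event": "ApprovalConfirmed" } ] }
"""


def render_statement(node: dict, indent: int = 0) -> str:
    """Render one statement node as Solidity-like text (hypothetical helper)."""
    pad = "    " * indent
    kind = node["type"]
    if kind == "IfStatement":
        cond = node["condition"]
        header = f'{pad}if ({cond["left"]} {cond["operator"]} {cond["right"]}) {{'
        # Each child statement is rendered one indentation level deeper.
        body = [render_statement(child, indent + 1) for child in node["body"]]
        return "\n".join([header, *body, pad + "}"])
    if kind == "EmitStatement":
        # Listing 1 carries no event arguments, so none are emitted here.
        return f'{pad}emit {node["event"]}();'
    raise ValueError(f"unsupported statement type: {kind}")


if_node = json.loads(LISTING_1)
print(render_statement(if_node))
```

Only the two node types that appear in Listing 1 are handled; any other `type` raises an error instead of being guessed.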

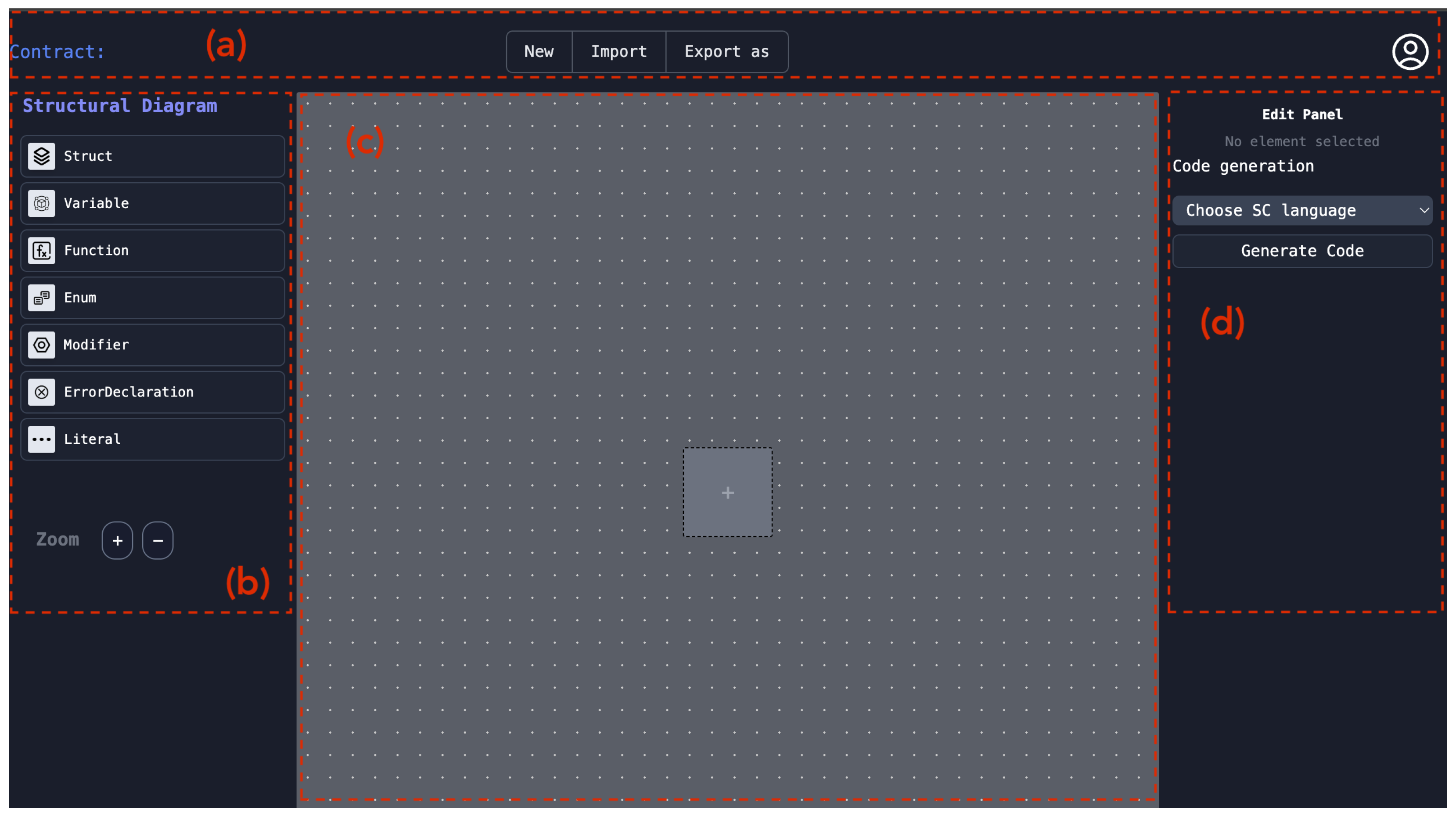

3.2. Web-Based Editor

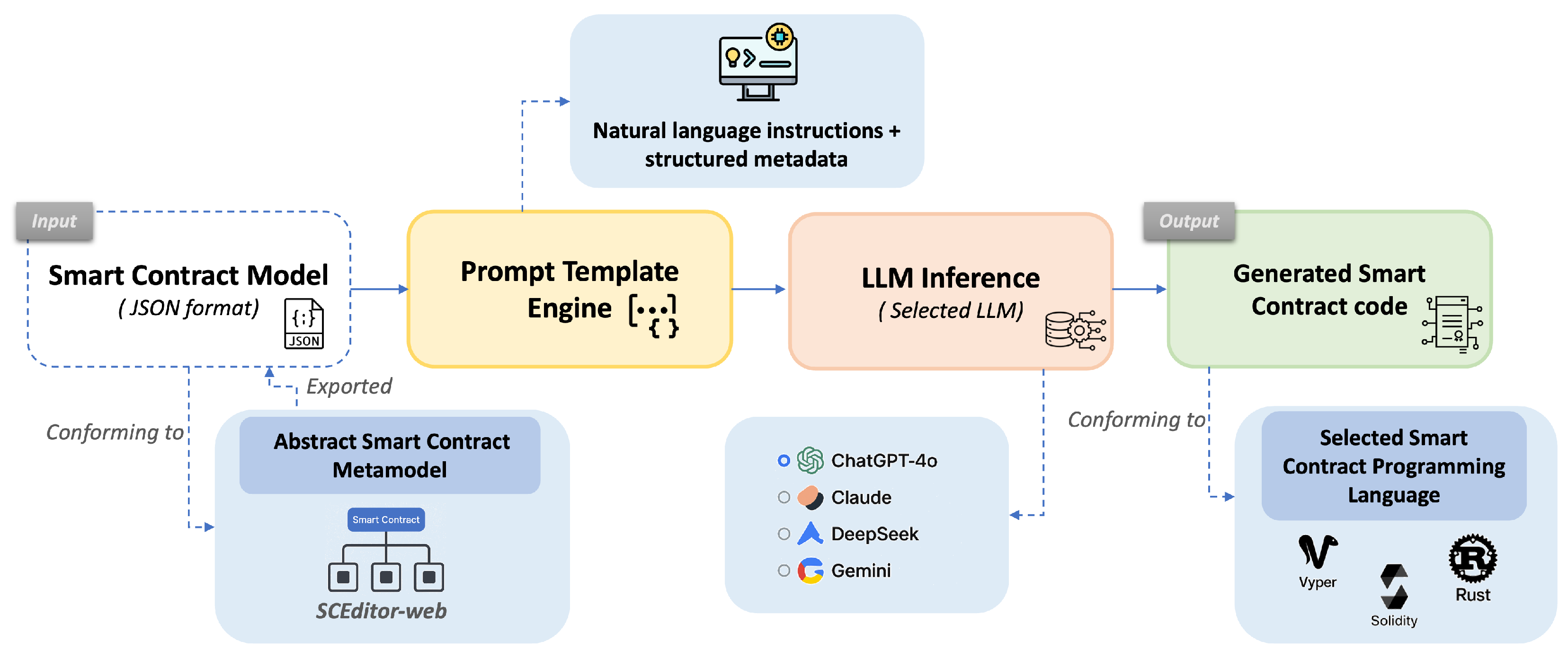

3.3. AI-Based Code Generation

| Listing 2. LLM prompt for smart contract code generation. |

| You are a professional smart contract developer. Based on the JSON specification below, generate a complete [blockchain_language] smart contract. The JSON contains: - Structural definitions (contract, variables, structs, functions, etc.). - Optional natural language descriptions that clarify developer intentions or behavior. Please follow these guidelines: - Implement all logic explicitly defined in the JSON structure. - Use the description field (when present) to enrich the contract, infer purpose, and write readable, semantically appropriate code. - Prioritize the description to resolve ambiguities. - Write clean, commented, deployable code. Here is the smart contract definition: <JSON> [contract.json] </JSON> Now generate the [blockchain_language] code. Output only the smart contract code. Do not include explanations. |
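As a rough illustration of how the template in Listing 2 could be instantiated, the sketch below fills the two placeholders, `[blockchain_language]` and `[contract.json]`, using plain string substitution. The function name `build_prompt`, the line breaks inside the template, and the example contract dictionary are assumptions made for this example; the actual prompt-generation code of SCEditor-Web is not shown in the text.

```python
import json

# Text of Listing 2; the line breaks are an assumption of this sketch.
PROMPT_TEMPLATE = """You are a professional smart contract developer. Based on the JSON specification below, generate a complete [blockchain_language] smart contract.

The JSON contains:
- Structural definitions (contract, variables, structs, functions, etc.).
- Optional natural language descriptions that clarify developer intentions or behavior.

Please follow these guidelines:
- Implement all logic explicitly defined in the JSON structure.
- Use the description field (when present) to enrich the contract, infer purpose, and write readable, semantically appropriate code.
- Prioritize the description to resolve ambiguities.
- Write clean, commented, deployable code.

Here is the smart contract definition:
<JSON>
[contract.json]
</JSON>

Now generate the [blockchain_language] code. Output only the smart contract code. Do not include explanations."""


def build_prompt(contract: dict, language: str = "Solidity") -> str:
    """Fill the Listing 2 template for one exported model (hypothetical helper)."""
    spec = json.dumps(contract, indent=2)
    # The language is substituted first so that text inside the JSON
    # specification is never re-interpreted as a placeholder.
    prompt = PROMPT_TEMPLATE.replace("[blockchain_language]", language)
    return prompt.replace("[contract.json]", spec)


# Hypothetical, heavily simplified export; the real metamodel export is richer.
example_contract = {"name": "RemotePurchase", "description": "Escrow-based purchase."}
print(build_prompt(example_contract))
```

Because the prompt is zero-shot, the same filled template can be sent unchanged to each model under comparison.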

- ChatGPT-4o (OpenAI, 2024): A transformer-based model known for advanced reasoning and multilingual capabilities [46].

- Claude 3.7 Sonnet (Anthropic, 2025): Designed for instruction following and safe, high-fidelity generation [47].

- DeepSeek-V3 (DeepSeek, 2025): An open-source alternative with high performance on structured development benchmarks [48].

- Gemini 2.5 Pro (Google DeepMind, 2025): Optimized for software and logic tasks, with structured document comprehension [49].

3.4. Prompt Strategy

- Zero-shot prompting aligns with real-world development workflows where training datasets are sparse or unavailable.

- Fine-tuning or retrieval-augmented generation (RAG) techniques require complex infrastructure and are often limited to narrow domains or specific blockchain platforms.

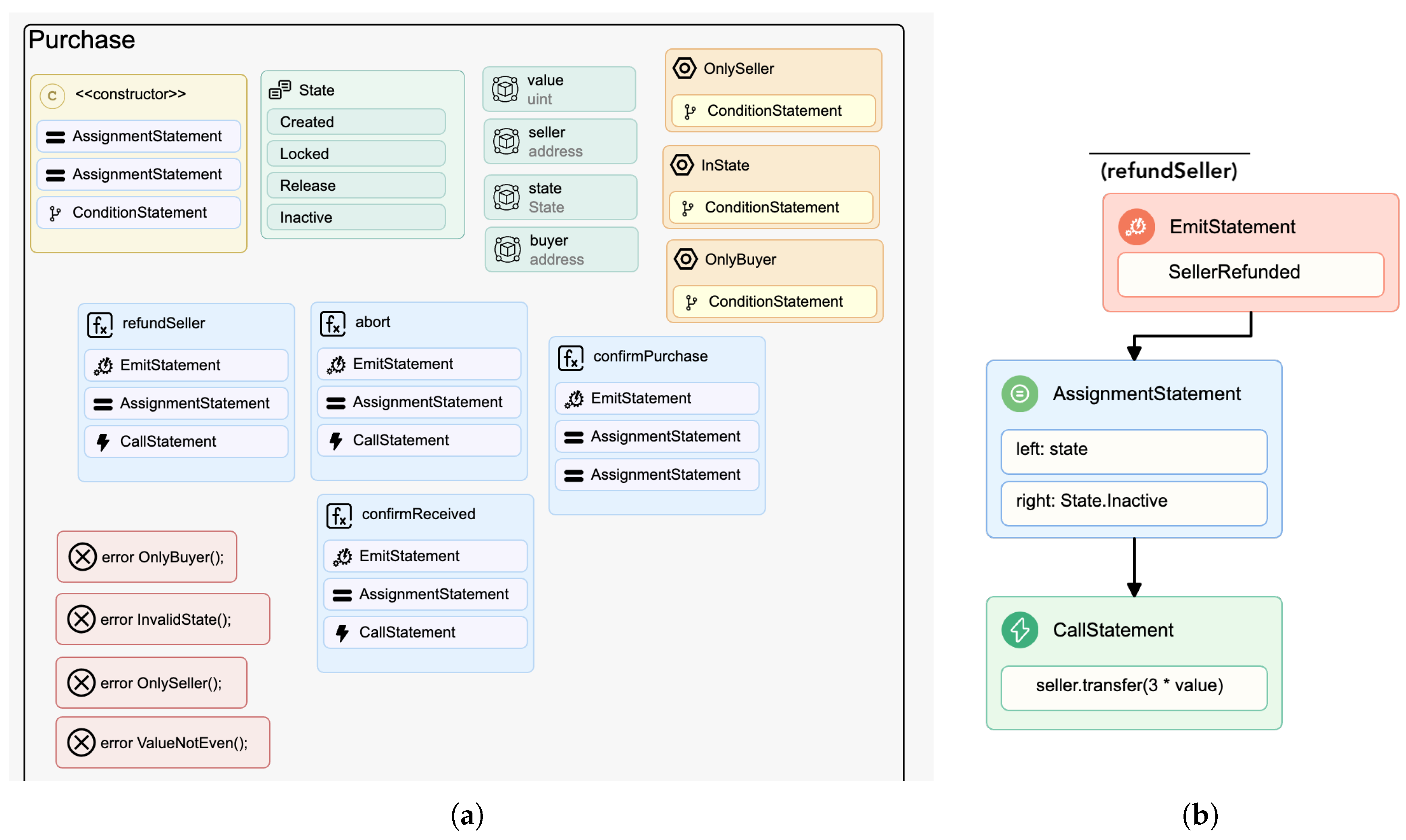

3.5. Dataset: JSON–Solidity Pairs

- Blind Auction: Sealed bidding process with reveal and finalization phases.

- Remote Purchase: Conditional delivery and payment release using escrow logic.

- Hotel Inventory Management: Room availability, booking, and refund management.

4. Evaluation Design

4.1. Metrics Defined

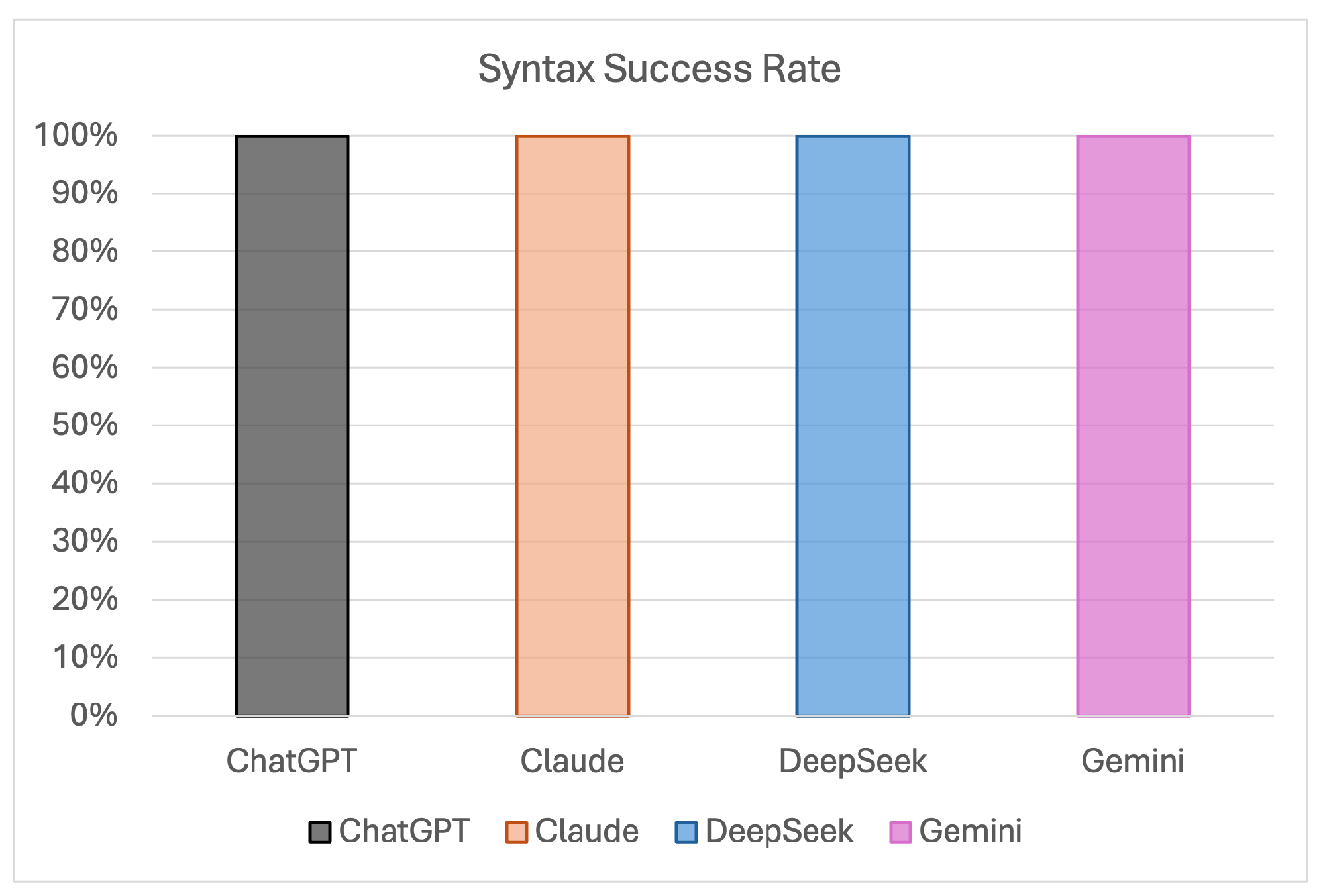

4.1.1. Syntax Success Rate (SSR)

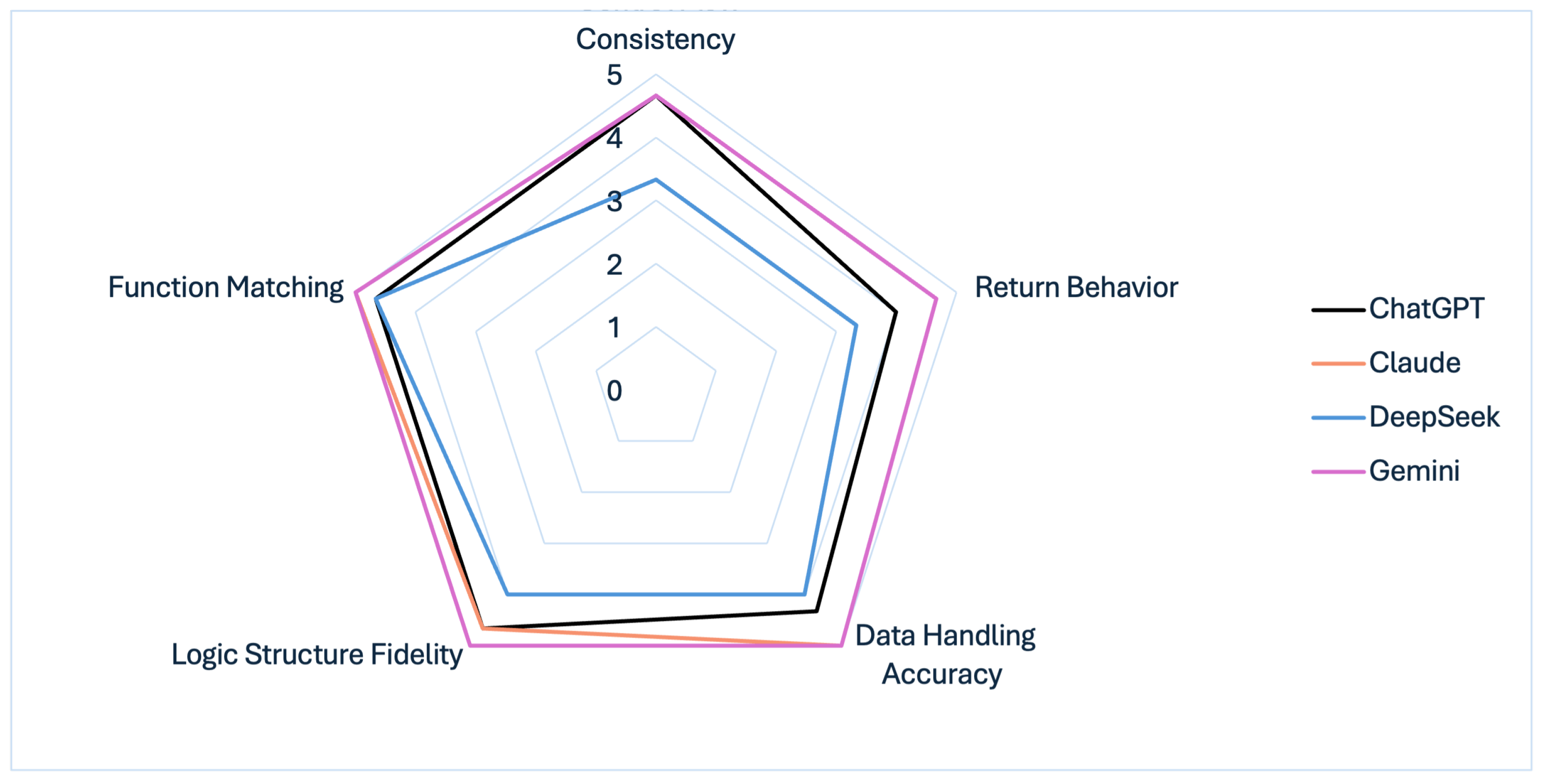

4.1.2. Semantic Fidelity Score (SFS)

- Function Matching: Does the generated contract include all the functions defined in the JSON specification, with appropriate naming and visibility?

- Logic Structure: Are control structures like if, else, for, and return statements correctly reconstructed?

- Data Handling: Are mappings, structs, arrays, and variables declared and accessed in a manner faithful to the original model?

- Return Behavior: Are output values returned in the correct format and context?

- Control Flow Consistency: Does the contract preserve the overall logic sequence and interaction pattern of the modeled design?
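The five criteria above can be combined into a single score in several ways. The sketch below assumes the simplest one: each criterion receives an integer rating from 0 to 5 and the SFS is their arithmetic mean. Both the scale and the equal weighting are assumptions of this example, as are the criterion keys and the sample ratings.

```python
from statistics import mean

# Keys mirror the five SFS criteria listed above (names are illustrative).
SFS_CRITERIA = (
    "function_matching",
    "logic_structure",
    "data_handling",
    "return_behavior",
    "control_flow_consistency",
)


def semantic_fidelity_score(ratings: dict, max_rating: int = 5) -> float:
    """Average the per-criterion ratings (assumed 0..max_rating, equal weights)."""
    missing = set(SFS_CRITERIA) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    for name in SFS_CRITERIA:
        if not 0 <= ratings[name] <= max_rating:
            raise ValueError(f"rating out of range for {name}: {ratings[name]}")
    return round(mean(ratings[name] for name in SFS_CRITERIA), 2)


# Hypothetical ratings for one generated contract.
sample = {
    "function_matching": 5,
    "logic_structure": 5,
    "data_handling": 5,
    "return_behavior": 4,
    "control_flow_consistency": 5,
}
print(semantic_fidelity_score(sample))  # 4.8
```

With five integer ratings, the mean moves in steps of 0.2.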

4.1.3. Code Quality Score (CQS)

- Readability: Are indentation, line length, and code structure clear and consistent?

- Modularity: Are functions concise and logically separated?

- Naming Conventions: Do identifiers meaningfully represent their roles?

- Gas-Aware Design: Are Solidity-specific gas optimization patterns used appropriately?

- Solidity Best Practices: Is there use of require, proper visibility modifiers, and protection against vulnerabilities?

4.1.4. Normalized Composite Score (NCS)
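The NCS formula itself is not reproduced in this text. An equal-weight mean of SSR and the two rubric scores, each rescaled to the range [0, 1], reproduces the four NCS values reported in the summary table, so the sketch below assumes that form. The function name and the 5-point maximum are assumptions of this example.

```python
def normalized_composite_score(ssr: float, sfs: float, cqs: float,
                               max_score: float = 5.0) -> float:
    """Equal-weight mean of SSR (0..1) and SFS, CQS rescaled by max_score.

    This is an assumed form of the metric, chosen because it matches the
    NCS values reported in the summary table.
    """
    return round((ssr + sfs / max_score + cqs / max_score) / 3, 3)


# (SSR, Avg. SFS, Avg. CQS) as reported in the summary table.
reported = {
    "ChatGPT 4o": (1.0, 4.47, 3.60),
    "Claude 3.7": (1.0, 4.73, 4.40),
    "DeepSeek-V3": (1.0, 3.87, 3.93),
    "Gemini 2.5 Pro": (1.0, 4.87, 4.40),
}
for model, (ssr, sfs, cqs) in reported.items():
    print(model, normalized_composite_score(ssr, sfs, cqs))
```

Rescaling SFS and CQS before averaging keeps the composite in [0, 1], so a model with perfect scores on all three metrics would obtain 1.0.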

4.2. Tools Used

4.2.1. Solidity Compiler (solc)

4.2.2. Slither Static Analysis

- Unchecked external calls;

- Uninitialized storage variables;

- Reentrancy risk;

- Inefficient gas usage;

- Missing visibility specifiers.
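Findings of this kind are easier to compare across models once they are tallied. The sketch below assumes a report produced with Slither's `--json` option, whose findings appear under `results.detectors` with `check` and `impact` fields, and counts them by impact level and detector name. The `summarize_findings` helper and the sample report are hypothetical and do not come from the study's data.

```python
from collections import Counter


def summarize_findings(report: dict) -> Counter:
    """Count findings by (impact, detector) in a parsed Slither JSON report.

    Assumes the layout written by `slither <file> --json <out>`: findings are
    listed under report["results"]["detectors"].
    """
    detectors = report.get("results", {}).get("detectors", [])
    return Counter((d["impact"], d["check"]) for d in detectors)


# Hypothetical report fragment, for illustration only.
sample_report = {
    "success": True,
    "error": None,
    "results": {
        "detectors": [
            {"check": "reentrancy-eth", "impact": "High"},
            {"check": "unchecked-lowlevel", "impact": "Medium"},
            {"check": "unchecked-lowlevel", "impact": "Medium"},
        ]
    },
}
for (impact, check), count in sorted(summarize_findings(sample_report).items()):
    print(f"{impact:8} {check:20} {count}")
```

A report with no findings yields an empty counter, which distinguishes a clean contract from a failed analysis only if the `success` field is checked separately.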

4.2.3. Batch Validation Pipeline
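A batch validation step of this kind can be sketched as a loop that compiles every generated file and reports the fraction that succeed, which is the quantity the Syntax Success Rate refers to. The example assumes `solc` is installed and on the `PATH`; the function names and the injectable `check` parameter are choices made for this illustration, not a description of the authors' pipeline.

```python
import subprocess
from pathlib import Path
from typing import Callable, Iterable


def compiles_with_solc(path: Path) -> bool:
    """Return True if `solc --bin` exits with status 0 for the given file."""
    result = subprocess.run(
        ["solc", "--bin", str(path)], capture_output=True, text=True
    )
    return result.returncode == 0


def syntax_success_rate(
    paths: Iterable[Path],
    check: Callable[[Path], bool] = compiles_with_solc,
) -> float:
    """Fraction of contracts for which `check` succeeds (0.0 to 1.0)."""
    files = list(paths)
    if not files:
        raise ValueError("no contracts to validate")
    passed = sum(1 for f in files if check(f))
    return passed / len(files)
```

A typical call would be `syntax_success_rate(Path("generated").glob("*.sol"))`, where `generated` is a hypothetical output directory. Passing a different `check` function allows the same loop to be reused with another compiler or with a stub during testing.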

4.3. Testing Setup

- ChatGPT 4o: Accessed via OpenAI Chat API.

- Claude 3.7 Sonnet: Accessed via Anthropic platform.

- DeepSeek-V3: Accessed via DeepSeek’s website.

- Gemini 2.5 Pro: Accessed via Google AI Studio.

4.4. Threats to Validity

- Construct Validity: The evaluation metrics used (SSR, SFS, and CQS) are designed to approximate code correctness, completeness, and quality. However, these proxies do not fully capture critical aspects such as gas efficiency, formal correctness, or deployability on real networks. Additionally, SFS relies on reference-based pattern detection, which may not cover all semantically correct alternatives.

- Internal Validity: The smart contract specifications used for testing were manually designed and may reflect unintentional biases or structural regularities that influence LLM outputs. Moreover, prompt formulation plays a key role in LLM performance; while we aimed for consistency across models, small changes in prompt wording can impact the generated code. We mitigated this by applying a controlled prompt generation pipeline and consistent evaluation scripts.

- External Validity: Our results are based on a specific set of LLMs (ChatGPT-4o, Claude 3.7 Sonnet, DeepSeek-V3, and Gemini 2.5 Pro) and a single target language (Solidity). While these models represent a broad and modern selection, findings may not generalize to other LLMs, to other languages such as Vyper or Rust, or to other contract platforms. Similarly, real-world smart contracts often include broader system-level interactions and external dependencies not modeled in our evaluation.

- Conclusion Validity: While metric-based trends were consistent across multiple LLMs and contract types, the interpretation of scores (especially SFS and CQS) can be sensitive to subjectivity in reference construction or prompt design. Our analysis relied primarily on static evaluation, complemented by limited runtime checks, and does not account for gas usage or formal verification outcomes.

5. Results and Analysis

5.1. SCEditor-Web

- AI-assisted modeling: Real-time features such as automatic component generation, contextual code suggestions, and prompt previews within the editor interface.

- Design-time validation: Mechanisms for checking syntactic completeness and semantic consistency during model construction to avoid malformed exports or generation failures.

- Advanced behavioral modeling: Support for nested control flow, inline expressions, and chained statements.

- Execution and simulation: Facilities for validating contract behavior through runtime simulation and state-transition analysis.

- Collaboration and versioning: Built-in history tracking, multi-user editing, and integration with version control systems.

- Interoperability: Import capabilities for existing smart contract code and models from other DSLs, enabling reuse and migration of legacy artifacts.

- Usability enhancements: Undo/redo, alignment aids, keyboard shortcuts, and support for large-scale models.

5.2. Application Example: Remote Purchase

5.3. Syntax Success Rate

5.4. Semantic Fidelity

5.5. Code Quality Evaluation

5.6. Runtime Validation of Generated Contracts

5.7. Normalized Composite Score

6. Discussion

6.1. Positioning of Our Approach vs. Related Work

6.2. Strengths and Limitations of the Study

- Language Scope: All contract generations and analyses were restricted to Solidity, the dominant smart contract language for the Ethereum Virtual Machine (EVM). Although our metamodel is designed to be independent of any blockchain, and the editor can accommodate structures compatible with other platforms (e.g., Solana or Polkadot), the evaluation focused solely on Solidity to ensure metric consistency and simplify tooling integration. Future work may include testing Rust (for Solana) or Vyper (EVM-compatible) to assess cross-chain adaptability.

- Security Validation: While our evaluation framework covered syntax, semantic fidelity, code quality, and runtime execution, it did not include dedicated security verification. Key aspects such as vulnerability scanning, formal verification, and conformance to blockchain-specific operational rules (e.g., gas metering, access control, reentrancy resistance) were not yet addressed. Future work should integrate our workflow with established auditing tools and formal methods to ensure that generated contracts are not only functional but also secure.

- Zero-Shot Prompting: The models were evaluated in a zero-shot configuration. We did not explore performance under few-shot prompts, chain-of-thought scaffolding, or system prompt customization. While this choice allowed for a clean comparison of each model’s default reasoning capabilities, it may also under-represent the full potential of each LLM under guided prompting scenarios.

- Human-Dependent Evaluation: While the semantic fidelity and code quality scoring rubrics were carefully defined and applied consistently, they still involve manual interpretation. Inter-rater reliability was not measured, and results may reflect the evaluator’s familiarity with Solidity best practices and metamodel constraints. Incorporating multi-reviewer scoring or automated fidelity checks could enhance the reproducibility of this component.

- LLM Reliability: While the editor leverages LLMs for code synthesis, these models remain probabilistic and may occasionally produce hallucinations, incomplete logic, or semantic drift from the source model. Our multi-metric evaluation (syntax, semantic fidelity, runtime validation) helps detect such cases, but full determinism and logical soundness remain open challenges for future work.

7. Conclusions and Future Work

Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Taherdoost, H. Smart contracts in blockchain technology: A critical review. Information 2023, 14, 117.

2. Lai, J.; Yan, K. VortexDraft: A blockchain smart contract auto-generation system based on named entity recognition. In Proceedings of the IET Conference Proceedings CP989, Sanya, China, 1–4 August 2024; Volume 2024, pp. 350–356.

3. Gan, J.; Su, J.; Lin, K.; Zheng, Z. FinanceFuzz: Fuzzing Smart Contracts with Financial Properties. Blockchain Res. Appl. 2025, 100301.

4. Guo, L. EXPRESS: Smart Contracts in Supply Chains. J. Mark. Res. 2025, 62, 00222437251314003.

5. Bawa, G.; Singh, H.; Rani, S.; Kataria, A.; Min, H. Exploring perspectives of blockchain technology and traditional centralized technology in organ donation management: A comprehensive review. Information 2024, 15, 703.

6. Mars, R.; Cheikhrouhou, S.; Kallel, S.; Hadj Kacem, A. A survey on automation approaches of smart contract generation. J. Supercomput. 2023, 79, 16065–16097.

7. Kannengiesser, N.; Lins, S.; Sander, C.; Winter, K.; Frey, H.; Sunyaev, A. Challenges and common solutions in smart contract development. IEEE Trans. Softw. Eng. 2021, 48, 4291–4318.

8. Curty, S.; Härer, F.; Fill, H.G. Blockchain application development using model-driven engineering and low-code platforms: A survey. In Proceedings of the International Conference on Business Process Modeling, Development and Support, Leuven, Belgium, 6–7 June 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 205–220.

9. Coblenz, M.; Sunshine, J.; Aldrich, J.; Myers, B.A. Smarter smart contract development tools. In Proceedings of the 2019 IEEE/ACM 2nd International Workshop on Emerging Trends in Software Engineering for Blockchain (WETSEB), Montreal, QC, Canada, 27 May 2019; pp. 48–51.

10. Zafar, A.; Azam, F.; Latif, A.; Anwar, M.W.; Safdar, A. Exploring the Effectiveness and Trends of Domain-Specific Model Driven Engineering: A Systematic Literature Review (SLR). IEEE Access 2024, 12, 86809–86830.

11. Dorado, J.P.C.; Dulce-Villarreal, E.; Hurtado, J.A. Model Driven Engineering Tool for the Generation of Interoperable Smart Contracts. In Proceedings of the Colombian Conference on Computing, Manizales, Colombia, 4–6 September 2024; Springer: Berlin/Heidelberg, Germany, 2024; pp. 324–331.

12. Samanipour, A.; Bushehrian, O.; Robles, G. MDAPW3: MDA-based development of blockchain-enabled decentralized applications. Sci. Comput. Program. 2025, 239, 103185.

13. Ait Hsain, Y.; Laaz, N.; Mbarki, S. A Common Metamodel for Smart Contracts Development. In Proceedings of the International Conference on Advanced Intelligent Systems for Sustainable Development, Agadir, Morocco, 17–23 December 2024; Springer: Berlin/Heidelberg, Germany, 2025; pp. 358–365.

14. Curty, S.; Härer, F.; Fill, H.G. Design of blockchain-based applications using model-driven engineering and low-code/no-code platforms: A structured literature review. Softw. Syst. Model. 2023, 22, 1857–1895.

15. Köpke, J.; Meroni, G.; Salnitri, M. Designing secure business processes for blockchains with SecBPMN2BC. Future Gener. Comput. Syst. 2023, 141, 382–398.

16. Jurgelaitis, M.; Čeponienė, L.; Butkienė, R. Solidity code generation from UML state machines in model-driven smart contract development. IEEE Access 2022, 10, 33465–33481.

17. Hsain, Y.A.; Laaz, N.; Mbarki, S. Ethereum’s smart contracts construction and development using model driven engineering technologies: A review. Procedia Comput. Sci. 2021, 184, 785–790.

18. Nassar, E.Y.; Mazen, S.; Craß, S.; Helal, I.M. Modelling blockchain-based systems using model-driven engineering. In Proceedings of the 2023 Fifth International Conference on Blockchain Computing and Applications (BCCA), Kuwait, Kuwait, 24–26 October 2023; pp. 329–334.

19. Khalid, S.; Brown, C. Evaluating Capabilities and Perspectives of Generative AI Tools in Smart Contract Development. In Proceedings of the 7th ACM International Symposium on Blockchain and Secure Critical Infrastructure, Meliá Hanoi, Hanoi, Vietnam, 25–29 August 2025; pp. 1–12.

20. Napoli, E.A.; Barbàra, F.; Gatteschi, V.; Schifanella, C. Leveraging large language models for automatic smart contract generation. In Proceedings of the 2024 IEEE 48th Annual Computers, Software, and Applications Conference (COMPSAC), Osaka, Japan, 2–4 July 2024; pp. 701–710.

21. Barbàra, F.; Napoli, E.A.; Gatteschi, V.; Schifanella, C. Automatic smart contract generation through llms: When the stochastic parrot fails. In Proceedings of the 6th Distributed Ledger Technology Workshop, Turin, Italy, 14–15 May 2024.

22. Ding, H.; Liu, Y.; Piao, X.; Song, H.; Ji, Z. SmartGuard: An LLM-enhanced framework for smart contract vulnerability detection. Expert Syst. Appl. 2025, 269, 126479.

23. Busch, D.; Bainczyk, A.; Smyth, S.; Steffen, B. LLM-based code generation and system migration in language-driven engineering. Int. J. Softw. Tools Technol. Transf. 2025, 27, 137–147.

24. Hsain, Y.A.; Laaz, N.; Mbarki, S. SCEditor: A Graphical Editor Prototype for Smart Contract Design and Development. Int. J. Adv. Comput. Sci. Appl. 2024, 15, 1185–1195.

25. Velasco, G.; Vaz, N.A.; Carvalho, S.T. A High-Level Metamodel for Developing Smart Contracts on the Ethereum Virtual Machine. In Proceedings of the Workshop em Blockchain: Teoria, Tecnologias e Aplicações (WBlockchain), Porto Alegre, Brazil, 24 June 2024; SBC: Porto Alegre, Brazil, 2024; pp. 97–110.

26. Dulce-Villarreal, E.; Hernandez, G.; Insuasti, J.; Hurtado, J.; Garcia-Alonso, J. Validation of MUISCA: A MDE-Based Tool for Interoperability of Healthcare Environments Using Smart Contracts in Blockchain. In Envisioning the Future of Health Informatics and Digital Health; IOS Press: Amsterdam, The Netherlands, 2025; pp. 255–259.

27. Wöhrer, M.; Zdun, U. Domain specific language for smart contract development. In Proceedings of the 2020 IEEE International Conference on Blockchain and Cryptocurrency (ICBC), Toronto, ON, Canada, 2–6 May 2020; pp. 1–9.

28. Gómez Macías, C. SmaC: A Model-Based Framework for the Development of Smart Contracts. Ph.D. Thesis, Universidad Rey Juan Carlos, Móstoles, Spain, 2023.

29. Ye, X.; Zeng, N.; Tao, X.; Han, D.; König, M. Smart contract generation and visualization for construction business process collaboration and automation: Upgraded workflow engine. J. Comput. Civ. Eng. 2024, 38, 04024030.

30. Hamdaqa, M.; Met, L.A.P.; Qasse, I. iContractML 2.0: A domain-specific language for modeling and deploying smart contracts onto multiple blockchain platforms. Inf. Softw. Technol. 2022, 144, 106762.

31. Alzhrani, F.; Saeedi, K.; Zhao, L. A Business Process Modeling Pattern Language for Blockchain Application Requirement Analysis. In Understanding Blockchain Applications from Architectural and Business Process Perspectives; The University of Manchester: Manchester, UK, 2022.

32. Daspe, E.; Durand, M.; Hatin, J.; Bradai, S. Benchmarking Large Language Models for Ethereum Smart Contract Development. In Proceedings of the 2024 6th Conference on Blockchain Research & Applications for Innovative Networks and Services (BRAINS), Berlin, Germany, 9–11 October 2024; pp. 1–4.

33. Qasse, I.; Mishra, S.; Hamdaqa, M. Chat2Code: Towards conversational concrete syntax for model specification and code generation, the case of smart contracts. arXiv 2021, arXiv:2112.11101.

34. Tong, Y.; Tan, W.; Guo, J.; Shen, B.; Qin, P.; Zhuo, S. Smart contract generation assisted by AI-based word segmentation. Appl. Sci. 2022, 12, 4773.

35. Gao, S.; Liu, W.; Zhu, J.; Dong, X.; Dong, J. BPMN-LLM: Transforming BPMN Models into Smart Contracts Using Large Language Models. IEEE Softw. 2025, 42, 50–57.

36. Kevin, J.; Yugopuspito, P. SmartLLM: Smart Contract Auditing using Custom Generative AI. arXiv 2025, arXiv:2502.13167.

37. Krichen, M. Strengthening the security of smart contracts through the power of artificial intelligence. Computers 2023, 12, 107.

38. Mohan, M.S.; Swamy, T.; Reddy, V.C. Strengthening Smart Contracts: An AI-Driven Security Exploration. Glob. J. Comput. Sci. Technol. 2023, 23, 57–67.

39. Upadhya, J.; Upadhyay, K.; Sainju, A.; Poudel, S.; Hasan, M.N.; Poudel, K.; Ranganathan, J. QuadraCode AI: Smart Contract Vulnerability Detection with Multimodal Representation. In Proceedings of the 2024 33rd International Conference on Computer Communications and Networks (ICCCN), Kailua-Kona, HI, USA, 29–31 July 2024; pp. 1–9.

40. Sun, J.; Long, H.W.; Kang, H.; Fang, Z.; El Saddik, A.; Cai, W. A Multidimensional Contract Design for Smart Contract-as-a-Service. IEEE Trans. Comput. Soc. Syst. 2025.

41. Brambilla, M.; Cabot, J.; Wimmer, M. Model-Driven Software Engineering in Practice; Morgan & Claypool Publishers: San Rafael, CA, USA, 2017.

42. Vujović, V.; Maksimović, M.; Perišić, B. Sirius: A rapid development of DSM graphical editor. In Proceedings of the IEEE 18th International Conference on Intelligent Engineering Systems INES 2014, Tihany, Hungary, 3–5 July 2014; pp. 233–238.

43. Abdelmalek, H.; Khriss, I.; Jakimi, A. Towards an effective approach for composition of model transformations. Front. Comput. Sci. 2024, 6, 1357845.

44. Chen, M.; Tworek, J.; Jun, H.; Yuan, Q.; Pinto, H.P.D.O.; Kaplan, J.; Edwards, H.; Burda, Y.; Joseph, N.; Brockman, G.; et al. Evaluating large language models trained on code. arXiv 2021, arXiv:2107.03374.

45. Le, H.; Chen, H.; Saha, A.; Gokul, A.; Sahoo, D.; Joty, S. Codechain: Towards modular code generation through chain of self-revisions with representative sub-modules. arXiv 2023, arXiv:2310.08992.

46. Achiam, J.; Adler, S.; Agarwal, S.; Ahmad, L.; Akkaya, I.; Aleman, F.L.; Almeida, D.; Altenschmidt, J.; Altman, S.; Anadkat, S.; et al. Gpt-4 technical report. arXiv 2023, arXiv:2303.08774.

47. Anthropic. Claude. Artificial Intelligence Model. 2023. Available online: https://www.anthropic.com (accessed on 5 April 2025).

48. Liu, A.; Feng, B.; Xue, B.; Wang, B.; Wu, B.; Lu, C.; Zhao, C.; Deng, C.; Zhang, C.; Ruan, C.; et al. Deepseek-v3 technical report. arXiv 2024, arXiv:2412.19437.

49. Google DeepMind. Gemini 2.5 Pro Preview: Even Better Coding Performance. 2025. Available online: https://deepmind.google/technologies/gemini/pro/ (accessed on 8 May 2025).

50. Sobo, A.; Mubarak, A.; Baimagambetov, A.; Polatidis, N. Evaluating LLMs for code generation in HRI: A comparative study of ChatGPT, gemini, and claude. Appl. Artif. Intell. 2025, 39, 2439610.

51. Zhang, K.; Wang, D.; Xia, J.; Wang, W.Y.; Li, L. Algo: Synthesizing algorithmic programs with generated oracle verifiers. Adv. Neural Inf. Process. Syst. 2023, 36, 54769–54784.

52. Reynolds, L.; McDonell, K. Prompt programming for large language models: Beyond the few-shot paradigm. In Proceedings of the Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, 8–13 May 2021; pp. 1–7.

53. Schulhoff, S.; Ilie, M.; Balepur, N.; Kahadze, K.; Liu, A.; Si, C.; Li, Y.; Gupta, A.; Han, H.; Schulhoff, S.; et al. The prompt report: A systematic survey of prompting techniques. arXiv 2024, arXiv:2406.06608.

54. Jiang, J.; Wang, F.; Shen, J.; Kim, S.; Kim, S. A survey on large language models for code generation. arXiv 2024, arXiv:2406.00515.

55. Kojima, T.; Gu, S.S.; Reid, M.; Matsuo, Y.; Iwasawa, Y. Large language models are zero-shot reasoners. Adv. Neural Inf. Process. Syst. 2022, 35, 22199–22213.

| Reference | Year | Approach Type | Modeling Inputs | Transformations & Code Generation | Targets and Outputs | Strengths | Limitations |

|---|---|---|---|---|---|---|---|

| [25] | 2024 | Visual MDE (HLM-SC) | High-level metamodel for Ethereum | M2M/M2T, Solidity code | Ethereum/Solidity | Reduces complexity, preserves semantics | Ethereum-specific, static rules |

| [16] | 2022 | MDE with UML | UML state machines and class diagrams | M2M/M2T, Solidity code | Ethereum/Solidity | Correct and gas-efficient code | Solidity-only, rigid transformations |

| [18] | 2023 | MDE/CIM | CIM with graph grammar rules | CIM to PIM | Multi-platform/PIM models | Formal verification, distributed ledger technology (DLT) integration | Complex rules, no AI |

| [26] | 2025 | MDE (MUISCA) | Domain-specific models for eHealth | M2M/M2T | Multi-platform/eHealth | Interoperability, real-world validation | Domain-specific, limited scalability |

| [27] | 2020 | MDE/DSL | DSL with feature modeling | DSL to Solidity/Vyper | Multi-platform/Solidity, Vyper | Modularity, reusability | Static rules |

| [28] | 2023 | Textual DSL (SmaC, Xtext) | DSL-based smart contract models | M2M/M2T, Solidity code | Ethereum/Solidity | Maintainability, vulnerability mitigation | Platform-specific, text-heavy |

| [30] | 2022 | Platform-independent DSL (iContractML 2.0) | Platform-independent contract models | PIM to PSM, Multi-target generation | Ethereum, Hyperledger | Cross-platform generation, high mapping accuracy | Complexity in abstraction alignment |

| [29] | 2024 | Graphical DSL | DSL for B2B collaboration | Auto-generation to Solidity | Ethereum/Solidity | Domain adaptation, collaborative workflows | Domain-specific (construction) |

| [31] | 2022 | Blockchain business patterns | Reusable process models | No (specification only) | Not specified | Process standardization | No code generation |

| [24] | 2024 | Visual MDE (SCEditor) | Abstract metamodel + graphical editor | Not specified | Multi-platform | Standardizes modeling, simplifies migration, supports visual design | Desktop-bound, no AI, no code generation |

| [34] | 2022 | NLP (AIASCG) | Natural language documents | NL to structured code, AI-assisted word segmentation | Not specified | NL-to-code bridge | Limited for complex logic, lack of formal representations |

| [32] | 2024 | AI (LLM benchmark) | JSON/schema | LLM-based, Solidity generation | Ethereum/Solidity | Comparative LLM analysis | High prompt sensitivity |

| [35] | 2025 | BPMN + LLM (BPMN-LLM) | BPMN models | BPMN to code | Multi-platform/Solidity | Uses BPMN as LLM input | BPMN expressiveness limits |

| [33] | 2021 | Interactive NLP + MDE (Chat2Code) | User dialogue | NL to code (chat mechanism) | Solidity, Hyperledger Composer, and Microsoft Azure | Accessible to non-experts | Quality depends on dialogue |

| [36] | 2025 | AI auditing (SmartLLM) | Existing code | LLM-based, ERC compliance checking | Ethereum/Solidity | High detection precision | No generation (audit only) |

| [37] | 2023 | AI security (supervised) | Code | Vulnerability detection | Ethereum/Solidity | Preventive bug identification | Not multi-platform |

| [38] | 2023 | AI lifecycle framework | Models and code | Generation + audit + deployment | Ethereum/Solidity | End-to-end automation | Not multi-language |

| [39] | 2024 | AI multimodal (QuadraCode) | Code and representations | Vulnerability detection | Ethereum/Solidity | Security and resilience | No code generation |

| [40] | 2025 | Modular architecture (SCaaS) | Pre-validated components | Reuse (component-based) | Multi/component-based | Low-code, secure components | Depends on component library |

| Use Case | Domain | Smart Contract Functionalities |

|---|---|---|

| Blind Auction | Auctions/E-Commerce | Implements sealed bid auction logic with privacy guarantees. Includes both commitment and reveal phases, time-based validation, and transfer of winning bid. |

| Remote Purchase | Retail/Logistics | Represents a purchase process using an escrow, where both the buyer and the seller must confirm the transaction. Encodes payment, delivery, refund conditions, and secure seller-buyer arbitration. |

| Hotel Inventory | Hospitality/Travel | Manages hotel room availability, booking, cancellation, and state transitions. Provides filtering, occupancy logic, and refund handling. |

| Diagram Type | Metaclass | Definition/Role | SCEditor Notation |

|---|---|---|---|

| Structural | Struct | Defines a composite data type grouping multiple fields. |  |

| Structural | Variable | Represents persistent state or storage elements in the contract. |  |

| Structural | Function | Encapsulates executable logic, with parameters and return values. |  |

| Structural | Enum | Declares symbolic constants for restricted value sets. |  |

| Structural | Modifier | Specifies reusable preconditions for function execution. |  |

| Structural | ErrorDeclaration | Declares structured error types for handling exceptional cases. |  |

| Functional | Assignment | Defines value binding or state update operations. |  |

| Functional | Call | Represents function or contract invocations with arguments. |  |

| Functional | Condition | Models branching logic (e.g., if/else). |  |

| Functional | Emit | Triggers events for off-chain listeners. |  |

| Functional | Loop | Encodes iterative behavior (e.g., for, while). |  |

| Structural/Functional | Literal | Represents constant values such as numbers or strings. |  |

| Model | HotelInventory | BlindAuction | RemotePurchase |

|---|---|---|---|

| ChatGPT 4o | 4.0 | 4.8 | 4.6 |

| Claude 3.7 | 5.0 | 5.0 | 4.4 |

| DeepSeek-V3 | 4.0 | 4.6 | 3.6 |

| Gemini 2.5 Pro | 5.0 | 5.0 | 4.6 |

| Model | HotelInventory | BlindAuction | RemotePurchase |

|---|---|---|---|

| ChatGPT 4o | 3.4 | 3.8 | 3.6 |

| Claude 3.7 | 4.4 | 4.4 | 4.4 |

| DeepSeek-V3 | 3.8 | 4.0 | 4.0 |

| Gemini 2.5 Pro | 4.4 | 4.4 | 4.4 |

| Model | Initialization | confirmPurchase | confirmReceived | refundSeller |

|---|---|---|---|---|

| ChatGPT 4o | ✔ | ✔ | ✔ | ✔ |

| Claude 3.7 Sonnet | ✔ | ✔ | ✔ | ✔ |

| DeepSeek-V3 | ✔ | ✔ | ✔ | ✔ |

| Gemini 2.5 Pro | ✔ | ✔ | ✔ | ✘ |

| Model | SSR | Avg. SFS | Avg. CQS | NCS |

|---|---|---|---|---|

| ChatGPT 4o | 100% | 4.47 | 3.60 | 0.871 |

| Claude 3.7 | 100% | 4.73 | 4.40 | 0.942 |

| DeepSeek-V3 | 100% | 3.87 | 3.93 | 0.853 |

| Gemini 2.5 Pro | 100% | 4.87 | 4.40 | 0.951 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ait Hsain, Y.; Laaz, N.; Mbarki, S. SCEditor-Web: Bridging Model-Driven Engineering and Generative AI for Smart Contract Development. Information 2025, 16, 870. https://doi.org/10.3390/info16100870
